Abstract

In this study, a novel hybrid metaheuristic algorithm, termed BES–GO, is proposed for solving benchmark structural design optimization problems: welded beam design, three-bar truss system optimization, minimization of the vertical deflection of an I-beam, cost optimization of tubular columns, and weight minimization of cantilever beams. The performance of the proposed BES–GO algorithm was compared with ten state-of-the-art metaheuristic algorithms: Bald Eagle Search (BES), Growth Optimizer (GO), Ant Lion Optimizer, Tuna Swarm Optimization, Tunicate Swarm Algorithm, Harris Hawks Optimization, Artificial Gorilla Troops Optimizer, Dingo Optimizer, Particle Swarm Optimization, and Grey Wolf Optimizer. The hybrid algorithm leverages the strengths of both BES and GO to enhance search capability and convergence rate. The evaluation, based on the CEC’20 test suite and the selected structural design problems, shows that BES–GO consistently outperformed the other algorithms in terms of convergence speed and in reaching optimal solutions, making it a robust and effective tool for structural optimization.

Introduction

Many real-world problems can be modelled as optimization problems. Optimization can be described as the process of selecting the best element(s) from a set of available alternatives to obtain the best possible result for a particular problem. Optimization algorithms attempt to reach the optimal objective value (i.e., the minimum or maximum) while satisfying the related constraints. Optimization methods can be classified into two main groups: deterministic1 and non-deterministic methods. Deterministic methods, which are based on mathematical modelling and programming techniques, start the search from an initial design point and typically compute the gradient of the objective function to move through the search space towards the optimal point. Although they are distinguished by a rapid convergence rate and high accuracy, their effectiveness relies heavily on the starting point. They also require the objective function and its gradients to be continuous, or at least partially continuous, which is not the case for many engineering problems. Non-deterministic approaches are an alternative that overcomes these disadvantages because they are based on probabilistic rather than deterministic transition rules2. Non-deterministic approaches can be categorized into heuristics and metaheuristics. The significant difference between the two is that heuristics are more problem-dependent than metaheuristics.

In other words, a heuristic can be applied efficiently to a specific problem but may be inadequate for other problems. A metaheuristic, on the other hand, is a generic algorithmic framework, or black-box optimizer, that can be applied to almost all optimization problems3. The term “metaheuristics” refers to a “higher level of heuristics” and is a combination of the words “meta” and “heuristic,” where “meta” means beyond or higher level and “heuristic” refers to finding or discovering a goal by trial and error.

Metaheuristic algorithms are optimization algorithms used to find the optimal solution to complex problems that cannot be solved using traditional methods4, such as the travelling salesman problem5, the knapsack problem6, and the bin packing problem7, which are NP-hard combinatorial problems. Metaheuristic optimization algorithms can be classified into four main categories: evolution-based, physics-based, swarm-based, and human-based. Each category simulates specific behaviours to produce algorithms with distinct exploration and exploitation characteristics. Evolution-based algorithms are based on the genetic characteristics and evolutionary mechanisms of nature, and representative algorithms include evolution strategies (ES), evolutionary programming (EP), genetic algorithm (GA), and differential evolution (DE). Swarm-based algorithms are based on the collective behaviour of organisms such as birds or ants, and representative algorithms include ant colony optimization (ACO), particle swarm optimization (PSO), cuckoo search, and crow search (CS). Physics-based algorithms are based on physical phenomena, and representative algorithms include simulated annealing (SA), harmony search (HS), gravitational search (GS), black hole (BH), and sine cosine (SC). Finally, human-based algorithms are based on intelligent human behaviour, and representative algorithms include human-inspired (HI), social-emotional optimization (SEO), brainstorm optimization (BSO), teaching learning-based optimization (TLBO), and social-based (SB)8. Metaheuristics employ several randomly generated agents and gradually improve them until the convergence or termination condition is met. As the main techniques belonging to the non-deterministic group, metaheuristic approaches are inspired by natural phenomena. The basic idea behind these methods is to model natural concepts, such as the survival behaviour of animal colonies or physical laws.
Population-based optimization algorithms are a powerful tool for reaching globally optimal solutions to real-world problems and have been commonly used for 30 years to design optimal components9. Metaheuristic optimization algorithms can solve many civil engineering optimization problems. Examples include the optimum detailed design of reinforced concrete frames10, structural steel design optimization11, and the performance-based optimum seismic design of steel dual-braced frames for various performance levels using the bat algorithm (BA). They are also used in asphalt pavement management systems for transport infrastructure planning and in geotechnical engineering optimization problems12. Metaheuristic optimization algorithms efficiently solve many problems in structural engineering, one of the crucial branches of civil engineering. One of the primary objectives for structural engineers is a cost-effective design. Incorporating optimality criteria into the design procedure introduces additional complexities that result in problems that are nonlinear, nonconvex, and have a discontinuous solution space13.

With the growing number of recent metaheuristics, the question arises of which algorithm best solves a given optimization problem. Many papers have compared algorithms in terms of convergence rate and minimum fitness, for example a comparative investigation of eight recent population-based optimisation algorithms for mechanical and structural design problems9, a comparison of recent meta-heuristic optimization algorithms using different benchmark functions14, and a comparative assessment of five metaheuristic methods on distinct problems2. Each paper studies a subset of metaheuristic optimization algorithms and compares their results in terms of the best, mean, and worst values, the standard deviation, and the number of function evaluations.

After such a comparison, the better-performing algorithms can be used in many applications, such as studies of the effect of bar diameter on the bond performance of helically ribbed GFRP bars to UHPC15, the mechanical properties and microstructure of waterborne polyurethane-modified cement composites as concrete repair mortar16, the reaction molecular dynamics of calcium alumino-silicate hydrate gel in the hydration deposition process at the calcium silicate hydrate interface and the influence of Al/Si17, the durability enhancement of cement-based repair mortars through waterborne polyurethane modification18, and the dissolution mechanism of calcium ions from CSH substrates in Na₂SO₄ solution and the effects of the Ca/Si ratio19.

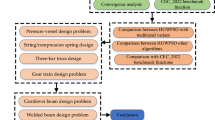

This paper’s main contribution is a novel hybrid algorithm that combines Bald Eagle Search (BES)20 and Growth Optimizer (GO)21. The hybrid is compared with ten well-known metaheuristic algorithms, selected for their noteworthy progress and success between 2009 and 2023. The comparison is conducted using the ten CEC2020 benchmark functions and five challenging real-world structural design problems: the welded beam design, weight optimization of a cantilever beam, I-beam vertical deflection, tubular column design optimization, and the three-bar truss system optimization.

These problems present diverse levels of constraint difficulty and intricate search spaces. The hybrid BES–GO algorithm is evaluated against the other ten algorithms in terms of the best optimal value, minimum standard deviation, best average value, and highest convergence rate over 30 independent runs. These algorithms are Bald Eagle Search (BES), Growth Optimizer (GO), Ant Lion Optimizer (ALO)22, Tuna Swarm Optimization (TSO)23, Tunicate Swarm Algorithm (TSA)24, Harris Hawks Optimization (HHO)25, Artificial Gorilla Troops Optimizer (GTO)26, Dingo Optimizer (DOA)27, Particle Swarm Optimization (PSO)28, and Grey Wolf Optimizer (GWO)29.

The rest of this paper is organized as follows. Section “Related works” offers an extensive review of metaheuristic algorithms published in the last five years, along with their outcomes in solving the five optimization problems. Section “Metaheuristics algorithms” provides a comprehensive review of the selected metaheuristic algorithms and their respective methodologies, including BES and GO as the basis for the hybrid metaheuristic algorithm BES–GO. Section “BES–GO algorithm” explains the detailed structure of BES–GO. Section “Engineering structural design problems” provides a comprehensive overview of the selected optimization problems, including their objective functions and constraints, together with the CEC2020 benchmark functions. Section “Experimental results analysis” presents the results of applying these algorithms. Section “Nonparametric statistical analysis” provides a nonparametric statistical analysis for the BES–GO algorithm. Section “Conclusion and future work” provides the conclusion and future work.

Related works

Numerous population-based optimization methods have been employed to tackle benchmark optimization problems such as the welded beam design problem, the three-bar truss design problem, cost optimization of tubular columns, and vertical deflection minimization of an I-beam. This section highlights significant endeavours in this domain. Various algorithms have been applied to the welded beam design problem, including the Colliding Bodies Optimization Algorithm30, Social Spider Optimization (SSO-C)31, and the New Movement Strategy of Cuckoo Search (NMS-CS)32. Table 1 presents these and other recent metaheuristic algorithms along with their optimal solutions and corresponding fitness values for the welded beam design problem.

The three-bar truss design problem is renowned as a constrained design problem that has garnered significant attention in academic literature. Numerous papers have endeavoured to tackle this challenge, employing methodologies such as the American Zebra Optimization Algorithm37, along with several other algorithms documented in the subsequent Table 2.

Additionally, there exist numerous algorithms that address the cantilever beam optimization problem, as detailed in Table 3.

The vertical deflection on an I-beam problem presents a considerable challenge, motivating numerous researchers to utilize diverse algorithms to showcase the effectiveness of their approaches. These endeavours are summarized in Table 4, highlighting notable discrepancies in the optimal results achieved by the different methodologies employed.

Few researchers have attempted to solve the cost optimization of the tubular column problem, and their endeavours are documented in Table 5.

Metaheuristics algorithms

In this section, a brief description of the algorithms and the parameter settings used in this study is provided. For more details, readers are referred to the cited articles.

Bald eagle search algorithm (BES)

The Bald Eagle Search (BES) algorithm20, introduced in 2020, mimics the intelligent hunting behaviour of bald eagles to tackle challenging optimization problems. Inspired by their broad-scale scouting, prey localization, and precise dives, BES divides its search into three stages: exploring the search space, zeroing in on promising areas, and finally swooping down on the optimal solution. The first stage is the select stage, where bald eagles identify and select the best area (in terms of the amount of food) within the search space in which to hunt for prey. Equation (1) presents this behaviour mathematically.

where \({P}_{new,i}\) is the updated position, \({P}_{best}\) denotes the search space currently selected by the bald eagles based on the best position identified during their previous search, \(\alpha\) is a parameter between 1.5 and 2 that controls the change in position, \(r\) is a random number between 0 and 1, \({P}_{mean}\) indicates that the eagles have used up all the information from the previous points, and \({P}_{i}\) is the current position. The second stage is the search stage, where bald eagles search for prey within the selected search space and move in different directions within a spiral space to accelerate their search. The best position for the swoop is mathematically expressed in the following equation:

where \(a\) is a parameter between 5 and 10 that determines the corner between the search point and the central point, and \(R\) takes a value between 0.5 and 2 and determines the number of search cycles. The third stage is the swoop stage, where bald eagles swing from the best position in the search space towards their target prey. All points also move towards the best point. The following equation mathematically illustrates this behaviour.
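The select stage described above can be illustrated with a short sketch. It assumes the commonly cited form of the update, \({P}_{new,i}={P}_{best}+\alpha \, r\left({P}_{mean}-{P}_{i}\right)\), which matches the symbols defined in the text; the function name `bes_select_stage`, the array layout, and the choice of one random number per eagle are illustrative assumptions rather than the authors' implementation.

```python
import numpy as np

def bes_select_stage(positions, best, alpha=2.0, rng=None):
    """One select-stage move for every eagle (assumed form of Eq. (1)).

    positions: (n_eagles, n_dims) array of current positions P_i
    best:      (n_dims,) array, best position found so far (P_best)
    alpha:     position-change parameter, between 1.5 and 2 in the text
    """
    rng = np.random.default_rng() if rng is None else rng
    mean = positions.mean(axis=0)             # P_mean over the population
    # Assumption: one r in [0, 1) per eagle, shared across dimensions.
    r = rng.random((positions.shape[0], 1))
    return best + alpha * r * (mean - positions)
```

In a full optimizer the new positions would be clipped to the variable bounds and accepted only if they improve the fitness; both steps are omitted here.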

Artificial gorilla troops optimizer (GTO)

The Artificial Gorilla Troops Optimizer (GTO) was introduced in 2021. GTO uses five operators, modelled on gorilla behaviours, for exploration and exploitation. Three mechanisms are used in the exploration phase: migration to an unknown location, migration towards a known location, and moving towards other gorillas. Each of these three mechanisms is selected according to a general procedure. The three mechanisms can be simulated by the following equation:

where \(GX\left(t+1\right)\) is the candidate position vector of the gorilla at iteration \(t+1\). \(UB\) and \(LB\) represent the upper and lower bounds of the variables, respectively. \({r}_{1}\), \({r}_{2}\), \({r}_{3}\), and \(rand\) are random values ranging from 0 to 1 that are updated in each iteration. \(p\) is a parameter in the range 0–1 that must be set before the optimization. \(X\left(t\right)\) is the current position vector of the gorilla. \({X}_{r}\left(t\right)\) is a gorilla selected randomly from the entire population, and \(G{X}_{r}\left(t\right)\) is a randomly selected candidate position vector, which includes the positions updated in each phase. \(C\), \(L\), and \(H\) are calculated using the following equations:

In Eq. (14), \(It\) is the current iteration, \(MaxIt\) is the total number of iterations, \(l\) is a random value in the range −1 to 1, and \({r}_{4}\) is a random value ranging from 0 to 1. In the exploitation phase of GTO, two behaviours are applied: following the silverback and competition for adult females. Following the silverback can be simulated by the following equations:

In Eq. (18), \(X\left(t\right)\) is the gorilla position vector, and \({X}_{silverback}\) is the silverback gorilla position vector (the best solution). In Eq. (19), \(G{X}_{i}\left(t\right)\) is the position vector of each candidate gorilla at iteration \(t\), and \(N\) is the total number of gorillas. \(L\) is calculated using Eq. (16).

Competition for adult females can be simulated using the following equations:

where \({r}_{5}\) is a random value ranging from 0 to 1 and \(\beta\) is a parameter that must be set before the optimization. If \(rand \ge 0.5\), \(E\) is a vector of normally distributed random values with the same dimension as the problem; if \(rand < 0.5\), \(E\) is a single normally distributed random value. \(rand\) is also a random value between 0 and 1.

Particle swarm algorithm (PSO)

PSO is a well-known and effective metaheuristic algorithm that has been widely used in different engineering domains28. It mimics the foraging behaviour of bird flocks. In PSO, the movement of each particle is determined by its own experience and by that of the other individuals in the swarm. Starting from a random population of size \(N\), the vector \({d}_{i}\) of \(ND\) design variables is the \(i\)th solution. The new velocity and position of each particle are evaluated using the following formulas.

In Eq. (24), \({V}_{ij}\left(t+1\right)\) is the updated velocity, \({V}_{ij}\left(t\right)\) is the current velocity, \({\text{P}}_{\text{ji}}\) is the best position, and \({d}_{ij}\left(t\right)\) is the current position. \({r}_{1ij}\) and \({r}_{2ij}\) are two random values in [0, 1]. The parameters \({C}_{1}\) and \({C}_{2}\) are the stochastic weighting factors. \(\omega\) controls the impact of the previous velocity on the current velocity and is typically within the range [0, 1]. In Eq. (25), \({d}_{ij}\left(t+1\right)\) is the updated position.
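As an illustration of the velocity and position updates, the following sketch uses the standard PSO form with an inertia weight, a personal-best term, and a global-best term. The split of the "best position" into `pbest` and `gbest`, the default parameter values, and the function name `pso_step` are assumptions made for this example, not settings taken from this study.

```python
import numpy as np

def pso_step(d, v, pbest, gbest, w=0.7, c1=1.5, c2=1.5, rng=None):
    """One standard PSO update for a single particle or a whole swarm.

    d, v:   current positions and velocities (arrays of the same shape)
    pbest:  best position found by each particle (same shape as d)
    gbest:  best position found by the swarm (broadcastable to d)
    """
    rng = np.random.default_rng() if rng is None else rng
    r1 = rng.random(d.shape)   # fresh random factors per particle and dimension
    r2 = rng.random(d.shape)
    v_new = w * v + c1 * r1 * (pbest - d) + c2 * r2 * (gbest - d)
    d_new = d + v_new          # the position update adds the new velocity
    return d_new, v_new
```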

Ant lion optimizer algorithm (ALO)

ALO is a nature-inspired intelligent technique based on the hunting behaviour of ant lions22. Ant lions dig cone-shaped pits in the sand, and the size of the trap is determined by the hunger level and the shape of the moon. ALO uses an elitism strategy to update the movements: each ant walks randomly in the vicinity of an ant lion selected by the roulette wheel and in the vicinity of the elite.

where \(t\) denotes the iteration number, \(i\) denotes the ant index, \({R}_{A}^{t}\) denotes the random walk around the ant lion selected by the roulette wheel, \({R}_{E}^{t}\) denotes the random walk around the elite, and \(An{t}_{i}^{t}\) denotes the position of the \(i\)th ant.

Grey wolf optimizer (GWO)

Mirjalili et al.29 developed the GWO algorithm, in which hunting information is shared within a grey wolf pack. Following the strict social dominance hierarchy, grey wolves are divided into four types: alpha (leader), beta (the best candidate), delta, and omega (scapegoat). The three best wolves (alpha, beta, and delta) guide the search, and the positions can be calculated by:

In Eq. (27), \(\overrightarrow{{d}_{1}}\), \(\overrightarrow{{d}_{2}}\), and \(\overrightarrow{{d}_{3}}\) are the updated positions. \(\overrightarrow{{d}_{\alpha }}\), \(\overrightarrow{{d}_{\beta }}\), and \(\overrightarrow{{d}_{\delta }}\) are the positions of the alpha, beta, and delta wolves. \(\overrightarrow{{A}_{1}}\), \(\overrightarrow{{A}_{2}}\), and \(\overrightarrow{{A}_{3}}\) are coefficient vectors. \(\overrightarrow{{D}_{\alpha }}\), \(\overrightarrow{{D}_{\beta }}\), and \(\overrightarrow{{D}_{\delta }}\) are the distances between the alpha, beta, and delta wolves and the current position, which are multiplied by the coefficient vectors.
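The position update can be sketched as follows, assuming the standard GWO formulation in which each leader produces one candidate position and the new position is their average. The control parameter `a` (usually decreased linearly from 2 to 0 over the iterations), the second coefficient vector `C`, and the function name `gwo_step` come from that standard formulation and are assumptions here, since the equations themselves are not reproduced in this section.

```python
import numpy as np

def gwo_step(d, d_alpha, d_beta, d_delta, a, rng=None):
    """One standard GWO position update for a single wolf.

    d:                        current position of the wolf
    d_alpha, d_beta, d_delta: positions of the three leading wolves
    a:                        control parameter, typically reduced from 2 to 0
    """
    rng = np.random.default_rng() if rng is None else rng
    candidates = []
    for leader in (d_alpha, d_beta, d_delta):
        A = 2.0 * a * rng.random(d.shape) - a   # coefficient vector in [-a, a)
        C = 2.0 * rng.random(d.shape)           # coefficient vector in [0, 2)
        D = np.abs(C * leader - d)              # distance to this leader
        candidates.append(leader - A * D)       # d_1, d_2, d_3
    return np.mean(candidates, axis=0)          # average of the three candidates
```

With `a = 0` the coefficient vectors vanish and the wolf moves to the centroid of the three leaders, which is a convenient sanity check.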

Harris’s hawk optimizer (HHO)

HHO uses the surprise pounce behaviour of Harris hawks to mimic the exploratory and exploitative phases of optimization25. After waiting patiently, the Harris hawks track the rabbit using their powerful eyes. They perch on tall trees according to the positions of the other family members and the rabbit.

where \(d\left(t+1\right)\) is the position vector of the hawks in the next iteration, \({d}_{rabbit}(t)\) is the position of the rabbit, \(d\left(t\right)\) is the current position vector of the hawks, \({r}_{1}\), \({r}_{2}\), \({r}_{3}\), \({r}_{4}\), and \(q\) are random numbers inside (0,1), which are updated in each iteration, \(LB\) and \(UB\) are the lower and upper bounds of the variables, \({d}_{rand}\left(t\right)\) is a randomly selected hawk from the current population, and \({d}_{m}\left(t\right)\) is the average position of the current population of hawks.
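The exploration step can be sketched as below, assuming the two-branch rule of the original HHO formulation: perching relative to a random hawk when \(q \ge 0.5\), and perching relative to the rabbit and the population mean otherwise. The function name `hho_explore` and the optional `q` argument (which makes the branch reproducible) are illustrative assumptions.

```python
import numpy as np

def hho_explore(d, d_rand, d_rabbit, d_mean, lb, ub, rng=None, q=None):
    """Exploration move of one hawk (assumed standard HHO form).

    d:        current hawk position
    d_rand:   position of a randomly selected hawk
    d_rabbit: position of the rabbit (best solution so far)
    d_mean:   average position of the current population
    lb, ub:   lower and upper bounds of the variables
    """
    rng = np.random.default_rng() if rng is None else rng
    q = rng.random() if q is None else q
    r1, r2, r3, r4 = rng.random(4)
    if q >= 0.5:
        # Perch based on a random member of the population.
        return d_rand - r1 * np.abs(d_rand - 2.0 * r2 * d)
    # Perch based on the rabbit and the mean position of the group.
    return (d_rabbit - d_mean) - r3 * (lb + r4 * (ub - lb))
```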

Tuna swarm optimization (TSO)

TSO is based on the cooperative foraging behaviour of tuna swarms. It mimics two foraging behaviours. In spiral foraging, modelled in Eq. (29), the tuna swim in a spiral formation while feeding to drive their prey into shallow water, where it can be attacked more easily. In parabolic foraging, modelled in Eq. (30), each tuna swims after the previous individual, forming a parabolic shape that encloses the prey. Tuna forage successfully using these two methods. More details about TSO can be found elsewhere23.

where \({x}_{i}^{t+1}\) is the \(i\)th individual at iteration \(t+1\), \({x}_{rand}^{t}\) is a randomly generated reference point in the search space, \({x}_{best}^{t}\) is the current optimal individual (food), \({\alpha }_{1}\) and \({\alpha }_{2}\) are weight coefficients that control the tendency of individuals to move towards the optimal individual and the previous individual, \(\beta\) is a parameter calculated according to Eq. (31), and \(l\) is calculated using Eq. (32). \(t\) denotes the current iteration, \({t}_{\text{max}}\) is the maximum number of iterations, and \(b\) is a random number uniformly distributed between 0 and 1.

Dingo optimizer (DOA)

DOA mimics the social behaviour of the Australian dingo. The algorithm is inspired by the hunting strategies of dingoes, which are attacking by persecution, grouping tactics, and scavenging behaviour27. To increase the overall efficiency and performance of the method, three search strategies associated with four rules were formulated in the DOA.

The first strategy is group attack. Predators often use highly intelligent hunting techniques. Dingoes usually hunt small prey, such as rabbits, individually, but when hunting large prey, such as kangaroos, they gather in groups. Like wolves, dingoes can find the location of the prey and surround it. This behaviour is represented by the following equation:

where \({\overrightarrow{x}}_{i}\left(t+1\right)\) is the new position of a search agent, \(na\) is a random integer generated in the interval [2, SizePop/2], where SizePop is the total population size, \({\varphi }_{k}\left(t\right)\) is a subset of search agents (the dingoes that will attack), \(X\) is the randomly generated dingo population, \({x}_{i}(t)\) is the current search agent, \({\beta }_{1}\) is a random number uniformly generated in the interval [−2, 2], and \({x}_{*}(t)\) is the best search agent found in the previous iteration. The second strategy is persecution. Dingoes usually hunt small prey individually, chasing it until it is caught. The following equation models this behaviour:

where \({\beta }_{2}\) is a random number uniformly generated in the interval [−1, 1], \({r}_{1}\) is a random number generated in the interval from 1 to the maximum number of search agents (dingoes), and \(i \ne {r}_{1}\).

The third strategy is scavenging, defined as the behaviour of dingoes finding carrion to eat while walking randomly in their habitat. The following equation models this behaviour:

where \(\sigma\) is a randomly generated binary number, \(\sigma \in \left\{0, 1\right\}\), and \(i \ne {r}_{1}\).

The fourth strategy concerns the dingoes’ survival rates, since the Australian dingo is at risk of extinction due to illegal hunting. The dingoes’ survival rate is given by the following equation:

Tunicate swarm algorithm (TSA)

TSA is a bio-inspired metaheuristic optimization algorithm based on the swarm behaviour of tunicates during foraging. It was proposed by Kaur et al.24. TSA is constructed mathematically on two main behaviours of tunicates: jet propulsion and swarm intelligence. To model the jet propulsion behaviour mathematically, a tunicate should satisfy three conditions. The first condition, avoiding conflicts among search agents, is represented by the following equations:

\(\overrightarrow{A}\) is employed to calculate the new search agent position, \(\overrightarrow{G}\) is the gravity force, and \(\overrightarrow{F}\) is the water flow advection in the deep ocean. The variables \({c}_{1}\), \({c}_{2}\), and \({c}_{3}\) are random numbers in the range [0, 1]. \(\overrightarrow{M}\) represents the social forces between search agents. \({P}_{min}\) and \({P}_{max}\) represent the initial and subordinate speeds for social interaction.

The second condition, movement towards the best neighbour, is represented by the following equations:

where \(\overrightarrow{PD}\) is the distance between the food source and the search agent, \(\overrightarrow{FS}\) is the position of the food source, and \(\overrightarrow{{p}_{p}\left(x\right)}\) indicates the position of the tunicate. The third condition is convergence towards the best search agent, which can be expressed mathematically as:

\(\overrightarrow{{P}_{p}\left(x\right)}\) is the updated position of the tunicate with respect to the position of the food source \(\overrightarrow{FS}\). The swarm behaviour of tunicates can then be expressed mathematically as:

The final position \(\overrightarrow{{P}_{p} (x+1)}\) lies at a random place within a cylindrical or cone-shaped region defined by the position of the tunicate.

Growth optimizer (GO)

The main design inspiration of GO originates from the learning and reflection mechanisms of individuals as they grow up in society. Learning is the process by which individuals grow by acquiring knowledge from the outside world. Reflection is the process of checking one’s own deficiencies and adjusting one’s learning strategies to support growth. In the learning phase, individuals face the gaps between themselves and others, explore the causes of these gaps, and learn from them, which promotes their growth. This can be expressed mathematically as:

where \(K{\overrightarrow{A}}_{k}\) (\(k = 1, 2, 3, 4\)) is the knowledge acquired by the \(i\)th individual from the \(k\)th group of gaps. In the reflection phase, an individual should check and make up for deficiencies in all aspects while retaining existing knowledge. For weak aspects, the individual should learn from outstanding individuals, while strong aspects should be retained. When a deficiency in a certain aspect cannot be remedied, the previous knowledge should be abandoned and systematic learning should be carried out again. The reflection process of GO is mathematically modelled through Eqs. (45) and (46).

where \(ub\) and \(lb\) are the upper and lower bounds of the search domain, and \({r}_{2}\), \({r}_{3}\), \({r}_{4}\), and \({r}_{5}\) are uniformly distributed random numbers in the range [0,1]. The value of \({P}_{3}\) controls the probability of reflection and is set to 0.3. The attenuation factor (\(AF\)) is determined by the current number of evaluations (\(FEs\)) and the maximum number of evaluations (\(\text{Max}FEs\)). \({R}_{j}\) denotes an individual at a high level, which serves as a reflective learning guide for the current individual \(i\).
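The reflection phase can be sketched as follows. The sketch assumes the form reported for the original GO algorithm: each dimension is reflected with probability \({P}_{3}\); a reflected dimension is either re-initialised within the bounds (with probability \(AF\)) or moved towards the guide \({R}_{j}\); and \(AF = 0.01 + 0.99\left(1 - FEs/\text{Max}FEs\right)\). These expressions, the function names, and the use of a single `guide` vector holding the \({R}_{j}\) values are assumptions for illustration, since Eqs. (45) and (46) are not reproduced here.

```python
import numpy as np

def attenuation_factor(fes, max_fes):
    """Assumed form of AF: decays linearly from 1.0 to 0.01 over the run."""
    return 0.01 + 0.99 * (1.0 - fes / max_fes)

def go_reflect(x, guide, lb, ub, fes, max_fes, p3=0.3, rng=None):
    """Reflection phase for one individual (assumed form of the GO update).

    x:      current individual (1-D float array)
    guide:  per-dimension values R_j taken from high-level individuals
    lb, ub: per-dimension lower and upper bounds
    """
    rng = np.random.default_rng() if rng is None else rng
    af = attenuation_factor(fes, max_fes)
    out = x.copy()
    for j in range(x.size):
        if rng.random() < p3:                 # r2: reflect this dimension?
            if rng.random() < af:             # r3: abandon and relearn
                out[j] = lb[j] + rng.random() * (ub[j] - lb[j])    # r4
            else:                             # learn from the guide
                out[j] = x[j] + rng.random() * (guide[j] - x[j])   # r5
    return out
```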

BES–GO algorithm

In this section, we explain the structure of BES–GO and the steps of its implementation. As mentioned above, BES has three stages (select space, search in space, and swoop), each of which is repeated for the given number of iterations. BES was selected for its strength in balancing exploration and exploitation. It has a cooperative learning strategy that allows individuals in the population to share information and learn from the best solutions found during the search, which improves overall performance. The second algorithm, GO, is a remarkably effective algorithm that consists of two phases: a learning phase and a reflection phase. The reflection phase was found to be time-consuming while improving the final optimal solution only slightly. The proposed hybrid therefore uses the three stages of BES, with the search-in-space and swoop stages applied only during the first half of the iterations, and also applies the GO learning phase during the first half of the iterations, with a slight change in its mechanism to simplify the calculations and reduce the run time. The following is the pseudo-code of the algorithm.

The pseudocode of BES–GO algorithm.

Engineering structural design problems

In this section, we provide a comprehensive overview of the problems under consideration, encompassing the objective function, decision variables, and constraints. For each problem, we formulate a model and delineate the relevant parameters, alongside presenting the mathematical formulations essential for addressing these problems.

Welded beam design problem

This benchmark problem was introduced by Coello58, and many researchers have solved it as a real-world optimization problem. The objective is to minimise the manufacturing cost of the welded beam. It has seven constraints related to deflection, stress, welding, and geometry. The design variables are the weld thickness h (\({x}_{1}\)), length of the weld l (\({x}_{2}\)), depth of the beam t (\({x}_{3}\)), and width of the beam b (\({x}_{4}\)). The design variables and the structure of the welded beam are shown in Fig. 1. The problem can be stated mathematically as:

Subject to

where weld stress τ(x) is calculated using Eq. (55):

The bar displacement is found using the following equation:

The bar bending stress σ(x) is calculated using the following equation:

The bar buckling load is calculated using the following equation:

\(\begin{gathered} \sigma_{max}\ (\text{yield stress}) = 30{,}000\ \text{psi}, \\ E\ (\text{modulus of elasticity}) = 30 \times 10^{6}\ \text{psi}, \\ P\ (\text{load}) = 6000\ \text{lb}, \quad L\ (\text{length of beam}) = 14\ \text{in}, \\ G\ (\text{shearing modulus}) = 12 \times 10^{6}\ \text{psi}, \\ \tau_{max}\ (\text{design stress of the weld}) = 13{,}600\ \text{psi}, \\ \delta_{max}\ (\text{maximum deflection}) = 0.25\ \text{in}, \end{gathered}\)

with \(0.1\le {x}_{1},{x}_{4}\le 2\) and \(0.1\le {x}_{2},{x}_{3}\le 10\).
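For readers who want to evaluate candidate designs, the sketch below implements the welded beam problem under the widely used formulation of this benchmark, with the constants listed above. The cost coefficients (1.10471 and 0.04811), the expressions for the weld stress, bending stress, deflection, and buckling load, and the ordering of the seven constraints are assumptions taken from that common formulation; they may differ in detail from the equations used in this study. A design is feasible when every returned constraint value is at most zero.

```python
import math

# Constants from the problem statement (psi, lb, in).
P, L, E, G = 6000.0, 14.0, 30e6, 12e6
TAU_MAX, SIGMA_MAX, DELTA_MAX = 13600.0, 30000.0, 0.25

def welded_beam_cost(x):
    """Fabrication cost (assumed standard objective)."""
    x1, x2, x3, x4 = x
    return 1.10471 * x1**2 * x2 + 0.04811 * x3 * x4 * (14.0 + x2)

def welded_beam_constraints(x):
    """Seven constraints g_i(x) <= 0 (assumed standard formulation)."""
    x1, x2, x3, x4 = x
    tau_p = P / (math.sqrt(2.0) * x1 * x2)                 # primary shear stress
    M = P * (L + x2 / 2.0)                                 # moment at the weld
    R = math.sqrt(x2**2 / 4.0 + ((x1 + x3) / 2.0) ** 2)
    J = 2.0 * math.sqrt(2.0) * x1 * x2 * (x2**2 / 12.0 + ((x1 + x3) / 2.0) ** 2)
    tau_pp = M * R / J                                     # secondary shear stress
    tau = math.sqrt(tau_p**2 + tau_p * tau_pp * x2 / R + tau_pp**2)
    sigma = 6.0 * P * L / (x4 * x3**2)                     # bending stress
    delta = 4.0 * P * L**3 / (E * x3**3 * x4)              # end deflection
    p_c = (4.013 * E * math.sqrt(x3**2 * x4**6 / 36.0) / L**2) * (
        1.0 - x3 / (2.0 * L) * math.sqrt(E / (4.0 * G)))   # buckling load
    return [
        tau - TAU_MAX,
        sigma - SIGMA_MAX,
        x1 - x4,
        0.10471 * x1**2 + 0.04811 * x3 * x4 * (14.0 + x2) - 5.0,
        0.125 - x1,
        delta - DELTA_MAX,
        P - p_c,
    ]
```

In a metaheuristic, these constraint values are typically folded into the fitness through a penalty term; the penalty scheme is left to the user.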

Weight optimization of cantilever beams

The second example is the weight optimization of the cantilever beam shown in Fig. 2. It has five decision variables, which are the widths and heights of the hollow square cross-sections of the steps, and the thickness is constant at 2/3 for each step. This example was originally given by Fleury and Braibant59. The objective is to minimize the total weight of the beam. The problem can be stated mathematically as:

The variable bounds are

\(0.01\le {x}_{j}\le 100\) for \(j = 1, \dots, 5\).
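A minimal sketch of this problem is given below, assuming the form commonly used in the literature: a weight proportional to the sum of the five variables (coefficient 0.0624) and a single constraint built from the coefficients 61, 37, 19, 7, and 1. These coefficients and the function names are assumptions, since the equations are not reproduced in this section.

```python
def cantilever_weight(x):
    """Beam weight (assumed standard objective): 0.0624 * (x1 + ... + x5)."""
    return 0.0624 * sum(x)

def cantilever_constraint(x):
    """Single constraint g(x) <= 0 (assumed standard form)."""
    x1, x2, x3, x4, x5 = x
    return (61.0 / x1**3 + 37.0 / x2**3 + 19.0 / x3**3
            + 7.0 / x4**3 + 1.0 / x5**3 - 1.0)
```

Under these assumptions, a design close to the values often reported as optimal, x = (6.016, 5.3092, 4.4943, 3.5015, 2.1527), gives a weight of about 1.34 with the constraint essentially active.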

I-beam vertical deflection optimization

This example was originally given by Gold and Krishnamurty60. The objective is to minimize the vertical deflection of an I-beam subjected to a vertical design load \(P\) and a load \(Q\) acting in another direction, as shown in Fig. 3. With the variables x = (\({x}_{1}\), \({x}_{2}\), \({x}_{3}\), \({x}_{4}\)) = (b, h, \({t}_{w}\), \({t}_{f}\)), the mathematical formulation of the problem is as follows:

where 10 ≤ h ≤ 80; 10 ≤ b ≤ 50; 0.9 ≤ \({t}_{w}\) ≤ 5; 0.9 ≤ \({t}_{f}\) ≤ 5.

Tubular column design optimization problem

The tubular column55 is shown in Fig. 4. The problem is to minimize the cost of a tubular column section subjected to an axial load \(P\), where the upper and lower ends of the column are supported by hinged bearings. With the variables x = (\({x}_{1}\), \({x}_{2}\)) = (d, t), the mathematical formulation of the problem is as follows:

Subject to:

where \({\sigma }_{y}\) (yield stress) = 500 kg/cm², E (modulus of elasticity) = 0.85 × 10⁶ kg/cm², ρ (density) = 0.0025 kg/cm³, k = 1, and L (length of column) = 250 cm.

\(2\le d\le 14\), \(0.2\le t\le 0.8\).
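The following sketch evaluates a tubular column design under the formulation commonly used for this benchmark: a cost of \(9.8dt + 2d\), a yield-stress constraint, and a buckling constraint. The value of the axial load, `P = 2500`, is not stated in this section and is an assumption taken from that common formulation, as are the cost coefficients and the function names.

```python
import math

SIGMA_Y = 500.0   # yield stress, kg/cm^2 (from the text)
E = 0.85e6        # modulus of elasticity, kg/cm^2 (from the text)
L = 250.0         # column length, cm (from the text)
P = 2500.0        # axial load: ASSUMED value, not given in this section

def tubular_cost(d, t):
    """Column cost (assumed standard objective)."""
    return 9.8 * d * t + 2.0 * d

def tubular_constraints(d, t):
    """Stress and buckling constraints g_i <= 0 (assumed standard form)."""
    g_stress = P / (math.pi * d * t * SIGMA_Y) - 1.0
    g_buckling = 8.0 * P * L**2 / (math.pi**3 * E * d * t * (d**2 + t**2)) - 1.0
    return [g_stress, g_buckling]
```

The bound constraints on d and t are handled separately by clipping or by the search range of the optimizer.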

A Three-bar truss system optimization problem

A three-bar truss structure is shown in Fig. 5. This problem was first presented by Nowacki21. It requires finding the minimum volume of the truss with respect to the cross-sectional areas x = (\({x}_{1}\), \({x}_{2}\)) = (\({A}_{1}\), \({A}_{2}\)). The problem is described as follows:

Subject to:

where 0 ≤ \({A}_{1}\) ≤ 1 and 0 ≤ \({A}_{2}\) ≤ 1.

l = 100 cm, P = 2 kN, and σ = 2 kN/cm².
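The volume function and stress constraints are not reproduced in the text above. The sketch below codes the three-bar truss problem in its commonly cited form, using the values of l, P, and σ given above. The expressions themselves and the function names are assumptions drawn from the literature, not from this paper.

```python
import math

LENGTH = 100.0  # l, from the text
LOAD = 2.0      # P, from the text
STRESS = 2.0    # sigma, from the text


def truss_volume(x):
    """Objective: truss volume for x = (A1, A2), assumed form."""
    a1, a2 = x
    return (2.0 * math.sqrt(2.0) * a1 + a2) * LENGTH


def truss_constraints(x):
    """Return (g1, g2, g3); a design is feasible when all are <= 0."""
    a1, a2 = x
    root2 = math.sqrt(2.0)
    denom = root2 * a1**2 + 2.0 * a1 * a2
    g1 = (root2 * a1 + a2) / denom * LOAD - STRESS
    g2 = a2 / denom * LOAD - STRESS
    g3 = 1.0 / (a1 + root2 * a2) * LOAD - STRESS
    return g1, g2, g3
```

For example, the upper-bound design (A1, A2) = (1, 1) is feasible under these assumed constraints, with a volume of about 382.84; the optimum usually quoted for this form is about 263.9, near (0.789, 0.408).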

Experimental results analysis

This section presents the numerical investigations used to verify the capability of the proposed BES–GO algorithm on mathematical test functions from a benchmark suite and on several well-known engineering design problems.

Tests of benchmark mathematical functions

The efficiency of BES–GO is assessed on the IEEE Congress on Evolutionary Computation 2020 (CEC’20) test suite61. The simulation results of the proposed BES–GO are compared with those of other metaheuristic algorithms. To ensure a fair comparison, BES–GO and its competitors are each evaluated over 30 runs, and the number of iterations is set to 500 for all considered problems. Table 6 shows the parameter settings for each algorithm.

The results of the algorithms on the CEC’20 functions are compared. The efficiency of each algorithm is measured by the average of the best solutions obtained in each run and the corresponding standard deviation (STD). Table 7 presents the average and STD values of each algorithm for the 10-dimensional functions (Dim = 10). The best results are shown in boldface.
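As a small illustration of how such per-algorithm statistics can be obtained from the best fitness value of each run, consider the sketch below. The function name is hypothetical, and the use of the sample standard deviation is an assumption, since the text does not say whether the sample or population form was used. The best and worst values are included because they are reported later for the design problems.

```python
import statistics


def summarize_runs(best_per_run):
    """Summarize the best fitness of each independent run (minimization)."""
    return {
        "best": min(best_per_run),
        "mean": statistics.fmean(best_per_run),
        "worst": max(best_per_run),
        # Sample standard deviation (n - 1 in the denominator): an assumption
        "std": statistics.stdev(best_per_run),
    }
```

In the experiments described here, `best_per_run` would hold 30 values per algorithm and problem, one for each run.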

As shown in Table 7, BES–GO performs strongly across multiple benchmark functions from the CEC’20 suite, outperforming several well-known metaheuristic algorithms. For instance, on F1, BES–GO achieved the best fitness value of 1.0000E+02, ahead of GO (1.0199E+02), BES (1.0032E+02), and PSO (1.0418E+02), which indicates its ability to reach high precision in complex optimization problems. On F2, it attains a fitness value of 1.2336E+03, close to those of TSO and BES and better than those of ALO and TSA. On more challenging functions such as F5, BES–GO is clearly superior, whereas weaker algorithms such as TSA (6.5187E+04) and DOA (2.9514E+07) show much higher error values.

This consistent performance across benchmarks highlights BES–GO’s effectiveness in balancing exploration and exploitation and in adapting to various problem landscapes. Its stability and precision compare favorably with alternatives such as ALO, GWO, GTO, and HHO, which often display higher variability and less competitive results. Overall, BES–GO is among the top-performing algorithms on the CEC’20 problems, offering enhanced reliability and accuracy in global optimization tasks. The convergence of the algorithms is shown in Fig. 6: BES–GO converges to near-optimal solutions faster than the other algorithms on F1, F3, F4, F5, and F7.

Structural engineering design problems

These problems are the welded beam, tubular column, cantilever beam weight, three-bar truss system, and I-beam vertical deflection. We have described the mathematical details of these problems in a previous section. Since these problems have constraints, we deal with them using an exterior penalty approach.

We convert equality constraints into inequalities and set a tolerance parameter to 0.0001. We run experiments with a population size of 30 and 500 iterations. Table 6 in the previous section shows the algorithm parameter settings.
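The constraint handling described above can be sketched as follows. This is a minimal illustration under stated assumptions: the quadratic penalty form, the penalty coefficient of 1e6, and the function names are hypothetical, as the text specifies only that an exterior penalty is used and that equality constraints are relaxed with a tolerance of 0.0001.

```python
def equality_to_inequality(h, tol=1e-4):
    """Turn h(x) = 0 into the inequality |h(x)| - tol <= 0."""
    return lambda x: abs(h(x)) - tol


def penalized(objective, inequalities, coeff=1e6):
    """Exterior penalty: add coeff * (sum of squared violations) to the objective.

    Each g in `inequalities` is satisfied when g(x) <= 0, so feasible points
    incur no penalty. The quadratic form and coeff are assumptions.
    """
    def fitness(x):
        violation = sum(max(0.0, g(x)) ** 2 for g in inequalities)
        return objective(x) + coeff * violation

    return fitness
```

For instance, `penalized(lambda x: x * x, [lambda x: 1.0 - x])` returns 4.0 at the feasible point x = 2 and a heavily penalized value at the infeasible point x = 0.5, which steers a population-based search back toward the feasible region.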

To compare how quickly and effectively the algorithms converge, we plot convergence curves showing the history of the best fitness values obtained for each problem. Each algorithm is run 30 times independently, and we record the best, mean, worst, and standard deviation of the fitness values. The experiments were run on a computer with a 12th Gen Intel(R) Core(TM) i5-12600KF 3.70 GHz processor and 32 GB of RAM, running 64-bit Windows 11.

The welded beam design problem

As can be seen from Table 8, the BES–GO algorithm is superior to the other algorithms in both accuracy and stability. It achieves the optimal average and best solution (1.72) with an extremely low standard deviation (7.92E−16), indicating high reliability and minimal variation across runs. Its execution time (5.03E−01) is competitive, and its worst-case solution (1.72) matches its best. This balance between computational efficiency and solution quality makes BES–GO well suited to optimization tasks that require both precision and consistency. The zoomed-in section of the convergence graph in Fig. 7 shows that BES–GO reaches the optimal solution after only 15 iterations, faster than the other algorithms.

Additionally, the box plot in Fig. 8 indicates that BES–GO, BES, and GO outperform the other algorithms.

The cantilever beam weight optimization problem

As can be seen from Table 9, the BES–GO algorithm has a short execution time of 0.431 s and an average value of 1.34. Its standard deviation is remarkably low (4.16E−16), indicating minimal variation in performance across runs (Figs. 8, 9, 10).

The best and worst solution values are consistently at 1.34, reflecting the algorithm’s reliability. In comparison, other algorithms, such as the GO and BES, show similar average solutions but have higher standard deviations, suggesting less stability.

TSO and TSA also perform well, with execution times below 0.1 s, but their average solutions (1.34 for TSO and 1.36 for TSA) do not improve on that of BES–GO. Overall, BES–GO offers a strong balance of speed, reliability, and consistent performance.

Additionally, BES–GO has the best convergence curve (Fig. 9), reaching the optimal solution after just 20 iterations. This rapid convergence underscores its efficiency in finding high-quality solutions quickly. Moreover, the boxplot in Fig. 10 further confirms BES–GO’s robust performance, showing minimal variation in results compared to the other algorithms.

I-beam vertical deflection optimization problem

As Table 10 shows, the BES–GO algorithm performs well, with a computation time of 0.413 s, an average solution of 1.31E−02, and a remarkably low standard deviation of 8.82E−18, indicating highly consistent results. Its best and worst solutions are both 1.31E−02, which shows its reliability in producing optimal outcomes. Other algorithms such as GTO and TSO run faster (0.159 s and 0.053 s, respectively) and reach similar average and best solution values. Thus, although some algorithms are quicker, none yields a better solution than BES–GO. Fig. 11 shows that BES–GO converges slightly faster to the optimal solution, and the boxplot in Fig. 12 shows that it performs slightly better than the other algorithms.

Tubular column design optimization problem

In Table 11, all algorithms except GWO, HHO, TSA, and DOA reach the best fitness over 30 independent runs. BES–GO stands out with a computation time of 0.383 s and an average solution of 26.5 with a very low standard deviation of 3.61E−15. Furthermore, Fig. 13 shows that BES–GO converges fastest among the compared algorithms for this problem. Fig. 14 shows that the results of all algorithms except HHO, TSA, and DOA are closely clustered, indicating similar levels of effectiveness.

Three-bar truss system optimization problem

According to Table 12, the BES–GO algorithm has a standard deviation of zero, meaning that its results do not vary across runs, which is a strong indicator of reliability. Fig. 15 shows that BES–GO converges to the optimal solution faster than the other algorithms, after only a few iterations. Moreover, Fig. 16 shows that all algorithms except TSA, HHO, and DOA perform at similar levels.

Nonparametric statistical analysis

In this section, the Friedman test62 is used to assess the performance of the metaheuristic algorithms across the five problems. Furthermore, the Wilcoxon signed-rank test63 is performed to identify significant differences between BES–GO and the other algorithms.

Friedman test

The nonparametric Friedman test is applied at a significance level of 0.05 to determine whether the differences in performance between the compared algorithms on the five problems are statistically significant. In the Friedman test, the smaller the rank, the better the performance of the algorithm. The test also yields a p-value, which indicates whether the algorithms differ significantly: the smaller the p-value, the stronger the evidence of a difference. In the Friedman test statistics in Table 13, SS (sum of squares) measures the variation among the compared algorithms, df is the degrees of freedom, and MS (mean square) is SS divided by df. Chi-square is the test statistic used to assess the significance of the differences between groups (Table 14).

The probability (Prob > Chi-sq.) is the p-value. The obtained p-value of 1.95019e−11 is far below 0.05, which clearly indicates significant differences among the algorithms in terms of precision and computational time.

Table 14 shows that BES–GO ranked first, with an average rank of 3.63. BES and PSO followed in the second and third positions, respectively. GO and TSO are fourth and fifth, while GTO and ALO occupy the sixth and seventh positions. GWO is eighth, followed by DOA. HHO and TSA exhibited the lowest performance, ranking last.
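To make the ranking procedure concrete, the sketch below computes the mean rank of each algorithm and the textbook Friedman chi-square statistic. It is an illustration only: the function names are hypothetical, and the statistic is computed without a correction for ties, which statistical packages may apply, so its value need not match Table 13.

```python
def average_ranks(row):
    """Rank the values in one row (1 = smallest = best); ties share the mean rank."""
    order = sorted(range(len(row)), key=lambda j: row[j])
    ranks = [0.0] * len(row)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with row[order[i]]
        while j + 1 < len(order) and row[order[j + 1]] == row[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def friedman(results):
    """results[i][j] is the score of algorithm j on problem i (lower is better).

    Returns (mean rank per algorithm, chi-square statistic without tie correction).
    """
    n, k = len(results), len(results[0])
    rank_rows = [average_ranks(row) for row in results]
    mean_ranks = [sum(r[j] for r in rank_rows) / n for j in range(k)]
    chi_sq = 12.0 * n / (k * (k + 1)) * sum((m - (k + 1) / 2) ** 2 for m in mean_ranks)
    return mean_ranks, chi_sq
```

The p-value is then obtained by comparing the statistic with a chi-square distribution with k − 1 degrees of freedom, where k is the number of algorithms.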

The Wilcoxon signed-rank test

The Wilcoxon signed-rank test was also performed to detect significant differences between pairs of algorithms. The test yields a p-value: the probability, under the null hypothesis that the two algorithms perform equally, of observing a difference at least as large as the one measured. In Table 15, BES–GO is compared with each of the other algorithms on the five problems.

As shown in Table 15, the Wilcoxon test results indicate that BES–GO performed significantly better than all other algorithms except BES and GO, as the corresponding p-values are smaller than the significance level (α = 5%).
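For readers unfamiliar with the test, the following sketch computes the signed-rank sums and an exact two-sided p-value by enumerating all sign patterns. It is a didactic illustration with a hypothetical function name; it is practical only for small samples, and it is not necessarily the procedure or software used to produce Table 15.

```python
from itertools import product


def signed_rank_test(a, b):
    """Exact two-sided Wilcoxon signed-rank test for paired samples a and b.

    Zero differences are dropped; tied absolute differences share the mean rank.
    Returns (W_plus, W_minus, p_value).
    """
    diffs = [x - y for x, y in zip(a, b) if x != y]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean rank of the tied group
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    total = sum(ranks)
    observed = min(w_plus, w_minus)
    # Under the null hypothesis every sign pattern is equally likely;
    # count the patterns that are at least as extreme as the observed one.
    hits = 0
    for signs in product((0, 1), repeat=len(ranks)):
        plus = sum(r for r, s in zip(ranks, signs) if s)
        if min(plus, total - plus) <= observed:
            hits += 1
    return w_plus, w_minus, hits / 2 ** len(ranks)
```

With six made-up paired values in which the first sample is always larger, the function returns rank sums of 21 and 0 and a p-value of 2/64 = 0.03125, which is below the 5% level.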

Conclusion and future work

This paper proposes a novel hybrid algorithm called BES–GO, which combines the Bald Eagle Search (BES) algorithm with the Growth Optimizer (GO) algorithm. BES–GO is compared against ten other algorithms (BES, GO, ALO, TSO, TSA, HHO, GTO, DOA, PSO, and GWO) on the CEC’20 test functions and five benchmark structural design optimization problems: the vertical deflection of an I-beam, welded beam design, tubular column design, the three-bar truss, and cantilever beam weight optimization. Among all the algorithms, BES–GO consistently achieves the optimal solution for all five problems, with the smallest standard deviation and a reasonable execution time. This highlights the significant potential of the BES–GO algorithm to advance the field of structural design optimization.

In future work, researchers can make modifications to the BES–GO algorithm to enhance its efficiency and applicability in solving various complex applications within a shorter time. Additionally, developing a multi-objective version of the BES–GO algorithm could enable its utilization in addressing diverse multi-objective optimization problems, including those encountered in tower design.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Remacle, J., Lambrechts, J. & Seny, B. Blossom-Quad: A non-uniform quadrilateral mesh generator using a minimum-cost perfect-matching algorithm. Int. J. Numer. Methods Eng. 89(February), 1102–1119. https://doi.org/10.1002/nme.3279 (2012).

Mortazavi, A. Comparative assessment of five metaheuristic methods on distinct problems. DÜMF Mühendislik Dergisi 10(3), 879–898. https://doi.org/10.24012/dumf.585790 (2019).

Abdel-Basset, M., Abdel-Fatah, L. & Sangaiah, A. K. Metaheuristic algorithms: A comprehensive review In (Elsevier, 2018). https://doi.org/10.1016/B978-0-12-813314-9.00010-4.

Almufti, S. M., Ahmad Shaban, A., Arif Ali, Z., Ismael Ali, R. & Dela Fuente, J. A. Overview of metaheuristic algorithms. Polaris Global J. Sch. Res. Trends 2(2), 10–32. https://doi.org/10.58429/pgjsrt.v2n2a144 (2023).

Mzili, T., Mzili, I. & Riffi, M. E. Artificial rat optimization with decision-making: a bio-inspired metaheuristic algorithm for solving the traveling salesman problem. Decis. Mak. Appl. Manag. Eng. 6(2), 150–176. https://doi.org/10.31181/dmame622023644 (2023).

Abdel-Basset, M. et al. A novel binary Kepler optimization algorithm for 0–1 knapsack problems: Methods and applications. Alex. Eng. J. 82(September), 358–376. https://doi.org/10.1016/j.aej.2023.09.072 (2023).

Munien, C. & Ezugwu, A. E. Metaheuristic algorithms for one-dimensional bin-packing problems: A survey of recent advances and applications. J. Intell. Syst. 30(1), 636–663. https://doi.org/10.1515/jisys-2020-0117 (2021).

Tang, J., Liu, G. & Pan, Q. A review on representative swarm intelligence algorithms for solving optimization problems: Applications and trends. IEEE/CAA J. Autom. Sin. 8(10), 1627–1643. https://doi.org/10.1109/JAS.2021.1004129 (2021).

Yildiz, B. S. A comparative investigation of eight recent population-based optimisation algorithms for mechanical and structural design problems. Int. J. Veh. Des. 73(1), 208–218. https://doi.org/10.1504/IJVD.2017.082603 (2017).

Govindaraj, V. & Ramasamy, J. V. Optimum detailed design of reinforced concrete continuous beams. Vol. 39, no. 4, 471–494 (2007).

Yang, X. S., Bekdas, G. & Nigdeli, S. M. Preface, vol. 7. 2016. https://doi.org/10.1007/978-3-319-26245-1.

Yang, X. S., Bekdas, G. & Nigdeli, S. M. Preface. Modeling and Optimization in Science and Technologies 7, v–vi. https://doi.org/10.1007/978-3-319-26245-1 (2016).

Kashani, A. R., Camp, C. V., Rostamian, M., Azizi, K. & Gandomi, A. H. Population-based optimization in structural engineering: A review. Artif. Intell. Rev. 55(1), 345–452. https://doi.org/10.1007/s10462-021-10036-w (2022).

Dirik, M. Comparison of recent meta-heuristic optimization algorithms using different benchmark functions. J. Math. Sci. Modell. 5(3), 113–124. https://doi.org/10.33187/jmsm.1115792 (2022).

Luo, Y., Liao, P., Pan, R., Zou, J. & Zhou, X. Effect of bar diameter on bond performance of helically ribbed GFRP bar to UHPC. J. Build. Eng. 91, 109577. https://doi.org/10.1016/j.jobe.2024.109577 (2024).

Zheng, H. et al. Mechanical properties and microstructure of waterborne polyurethane-modified cement composites as concrete repair mortar. J. Build. Eng. 84, 108394. https://doi.org/10.1016/J.JOBE.2023.108394 (2024).

Zheng, H. et al. Durability enhancement of cement-based repair mortars through waterborne polyurethane modification: Experimental characterization and molecular dynamics simulations. Constr. Build. Mater. 438, 137204. https://doi.org/10.1016/J.CONBUILDMAT.2024.137204 (2024).

Zheng, H. et al. Reaction molecular dynamics study of calcium alumino-silicate hydrate gel in the hydration deposition process at the calcium silicate hydrate interface: The influence of Al/Si. J. Build. Eng. 86, 108823. https://doi.org/10.1016/j.jobe.2024.108823 (2024).

Zheng, H. et al. Unveiling the dissolution mechanism of calcium ions from CSH substrates in Na2SO4 solution: Effects of Ca/Si ratio. Appl. Surf. Sci. 680, 161443. https://doi.org/10.1016/j.apsusc.2024.161443 (2025).

Alsattar, H. A., Zaidan, A. A. & Zaidan, B. B. Novel meta-heuristic bald eagle search optimisation algorithm. Artif. Intell. Rev. 53(3), 2237–2264. https://doi.org/10.1007/s10462-019-09732-5 (2020).

Zhang, Q., Gao, H., Zhan, Z. H., Li, J. & Zhang, H. Growth Optimizer: A powerful metaheuristic algorithm for solving continuous and discrete global optimization problems. Knowl. Based Syst. 261, 110206. https://doi.org/10.1016/J.KNOSYS.2022.110206 (2023).

Mirjalili, S. The ant lion optimizer. Adv. Eng. Softw. 83, 80–98. https://doi.org/10.1016/j.advengsoft.2015.01.010 (2015).

Xie, L. et al. Tuna swarm optimization: A novel swarm-based metaheuristic algorithm for global optimization. Comput. Intell. Neurosci. https://doi.org/10.1155/2021/9210050 (2021).

Kaur, S., Awasthi, L. K., Sangal, A. L. & Dhiman, G. Tunicate swarm algorithm: A new bio-inspired based metaheuristic paradigm for global optimization. Eng. Appl. Artif. Intell. 90(February), 103541. https://doi.org/10.1016/j.engappai.2020.103541 (2020).

Heidari, A. A. et al. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 97, 849–872. https://doi.org/10.1016/j.future.2019.02.028 (2019).

Abdollahzadeh, B. Artificial gorilla troops optimizer: A new nature‐inspired metaheuristic algorithm for global optimization problems. Int. J. Intell. Syst. 36(April), 5887–5958. https://doi.org/10.1002/int.22535 (2021).

Peraza-Vázquez, H. et al. A bio-inspired method for engineering design optimization inspired by dingoes hunting strategies. Math. Probl. Eng. https://doi.org/10.1155/2021/9107547 (2021).

Kennedy, J. & Eberhart, R. Particle swarm optimization. In Proceedings of ICNN’95 - International Conference on Neural Networks, Vol 4, 1942–1948. https://doi.org/10.1109/ICNN.1995.488968 (1995).

Mirjalili, S., Mirjalili, S. M. & Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 69, 46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007 (2014).

Kaveh, A. & Mahdavi, V. R. Colliding bodies optimization: A novel meta-heuristic method. Comput. Struct. 139, 18–27. https://doi.org/10.1016/j.compstruc.2014.04.005 (2014).

Cuevas, E. & Cienfuegos, M. A new algorithm inspired in the behavior of the social-spider for constrained optimization. Expert Syst. Appl. 41(2), 412–425. https://doi.org/10.1016/j.eswa.2013.07.067 (2014).

Cuong-Le, T. et al. A novel version of Cuckoo search algorithm for solving optimization problems. Expert Syst. Appl. 186, 115669. https://doi.org/10.1016/j.eswa.2021.115669 (2021).

Mohapatra, S. & Mohapatra, P. American zebra optimization algorithm for global optimization problems. Sci. Rep. https://doi.org/10.1038/s41598-023-31876-2 (2023).

Akay, B. & Karaboga, D. Artificial bee colony algorithm for large-scale problems and engineering design optimization. J. Intell. Manuf. 23(4), 1001–1014. https://doi.org/10.1007/s10845-010-0393-4 (2012).

Pathak, V. K. & Srivastava, A. K. A novel upgraded bat algorithm based on cuckoo search and Sugeno inertia weight for large scale and constrained engineering design optimization problems. Eng. Comput. 38(2), 1731–1758. https://doi.org/10.1007/s00366-020-01127-3 (2022).

Khalilpourazari, S. & Khalilpourazary, S. An efficient hybrid algorithm based on water cycle and moth-flame optimization algorithms for solving numerical and constrained engineering optimization problems. Soft Comput. 23(5), 1699–1722. https://doi.org/10.1007/s00500-017-2894-y (2019).

Sun, P., Liu, H., Zhang, Y., Tu, L. & Meng, Q. An intensify atom search optimization for engineering design problems. Appl. Math. Model. 89, 837–859. https://doi.org/10.1016/j.apm.2020.07.052 (2021).

Gupta, S. et al. Comparison of metaheuristic optimization algorithms for solving constrained mechanical design optimization problems. Expert Syst. Appl. 183(June), 115351. https://doi.org/10.1016/j.eswa.2021.115351 (2021).

Dalirinia, E., Jalali, M., Yaghoobi, M. & Tabatabaee, H. Lotus effect optimization algorithm (LEA): A lotus nature-inspired algorithm for engineering design optimization. J. Supercomput. https://doi.org/10.1007/s11227-023-05513-8 (2023).

Karami, H., Anaraki, M. V., Farzin, S. & Mirjalili, S. Flow direction algorithm (FDA): A novel optimization approach for solving optimization problems. Comput. Ind. Eng. 156(March), 107224. https://doi.org/10.1016/j.cie.2021.107224 (2021).

Hashim, F. A., Mostafa, R. R., Khurma, R. A., Qaddoura, R. & Castillo, P. A. A new approach for solving global optimization and engineering problems based on modified sea horse optimizer. J. Comput. Des. Eng. 11(1), 73–98. https://doi.org/10.1093/jcde/qwae001 (2024).

Ong, P., Ho, C. S., Daniel, D. & Sheng, V. An improved cuckoo search algorithm for design optimization of structural engineering problems. Communications in Computational and Applied Mathematics (CCAM) 2(1) (2020).

Digehsara, P. A., Chegini, S. N., Bagheri, A. & Roknsaraei, M. P. An improved particle swarm optimization based on the reinforcement of the population initialization phase by scrambled Halton sequence. Cogent Eng. https://doi.org/10.1080/23311916.2020.1737383 (2020).

Shang, C., Zhou, T. T. & Liu, S. Optimization of complex engineering problems using modified sine cosine algorithm. Sci. Rep. 12(1), 1–25. https://doi.org/10.1038/s41598-022-24840-z (2022).

Yu, H., Zhao, N., Wang, P., Chen, H. & Li, C. Chaos-enhanced synchronized bat optimizer. Appl. Math. Model. 77, 1201–1215. https://doi.org/10.1016/j.apm.2019.09.029 (2020).

Abualigah, L. et al. Aquila optimizer: A novel meta-heuristic optimization algorithm. Comput. Ind. Eng. 157(October), 107250. https://doi.org/10.1016/j.cie.2021.107250 (2021).

Abualigah, L., Diabat, A., Mirjalili, S., Abd Elaziz, M. & Gandomi, A. H. The arithmetic optimization algorithm. Comput. Methods Appl. Mech. Eng. 376, 113609. https://doi.org/10.1016/j.cma.2020.113609 (2021).

Fauzi, H. & Batool, U. A three-bar truss design using single-solution simulated Kalman filter optimizer. Mekatronika 1(2), 98–102. https://doi.org/10.15282/mekatronika.v1i2.4991 (2019).

Ahmadianfar, I., Heidari, A. A., Noshadian, S., Chen, H. & Gandomi, A. H. INFO: An efficient optimization algorithm based on weighted mean of vectors. Expert Syst. Appl. 195(June), 116516. https://doi.org/10.1016/j.eswa.2022.116516 (2022).

Ahmadianfar, I., Heidari, A. A., Gandomi, A. H., Chu, X. & Chen, H. RUN beyond the metaphor: An efficient optimization algorithm based on Runge Kutta method. Expert Syst. Appl. 181(April), 115079. https://doi.org/10.1016/j.eswa.2021.115079 (2021).

Bayzidi, H., Talatahari, S., Saraee, M. & Lamarche, C. P. Social network search for solving engineering optimization problems. Comput. Intell. Neurosci. https://doi.org/10.1155/2021/8548639 (2021).

Yildiz, B. S., Pholdee, N., Bureerat, S., Yildiz, A. R. & Sait, S. M. Enhanced grasshopper optimization algorithm using elite opposition-based learning for solving real-world engineering problems. Eng. Comput. 38(5), 4207–4219. https://doi.org/10.1007/s00366-021-01368-w (2022).

Altay, E. V., Altay, O. & Özçevik, Y. A comparative study of metaheuristic optimization algorithms for solving real-world engineering design problems. Comput. Model. Eng. Sci. 139(1), 1039–1094. https://doi.org/10.32604/cmes.2023.029404 (2024).

Siddavaatam, P. & Sedaghat, R. A New Bio-heuristic Hybrid Optimization for Constrained Continuous Problems, Vol. 12620 (LNCS, 2021). https://doi.org/10.1007/978-3-662-63170-6_5.

Yuan, Y., Ren, J., Zu, J. & Mu, X. An adaptive instinctive reaction strategy based on Harris hawks optimization algorithm for numerical optimization problems. AIP Adv. https://doi.org/10.1063/5.0035635 (2021).

Nama, S. & Saha, A. K. A bio-inspired multi-population-based adaptive backtracking search algorithm. Cognit. Comput. 14(2), 900–925. https://doi.org/10.1007/s12559-021-09984-w (2022).

Nama, S., Saha, A. K. & Sharma, S. A novel improved symbiotic organisms search algorithm. Comput. Intell. 38(3), 947–977. https://doi.org/10.1111/coin.12290 (2022).

Coello Coello, C. A. Use of a self-adaptive penalty approach for engineering optimization problems. Comput. Ind. 41(2), 113–127. https://doi.org/10.1016/S0166-3615(99)00046-9 (2000).

Fleury, C. & Braibant, V. Structural optimization: A new dual method using mixed variables. Int. J. Numer. Methods Eng. 23(3), 409–428. https://doi.org/10.1002/nme.1620230307 (1986).

Gold, S. & Krishnamurty, S. Trade-offs in robust engineering design. In ASME 1997 Design Engineering Technical Conferences (1997).

2020 IEEE Congress on Evolutionary Computation (CEC): 2020 conference proceedings. (IEEE, 2020).

Derrac, J., García, S., Molina, D. & Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 1(1), 3–18. https://doi.org/10.1016/j.swevo.2011.02.002 (2011).

García, S., Fernández, A., Luengo, J. & Herrera, F. Advanced nonparametric tests for multiple comparisons in the design of experiments in computational intelligence and data mining: Experimental analysis of power. Inf. Sci. (N. Y.) 180(10), 2044–2064. https://doi.org/10.1016/j.ins.2009.12.010 (2010).

Acknowledgements

The authors are very thankful to the anonymous referees and the editors for their valuable comments and suggestions.

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).

Author information

Contributions

Essam H. Houssein: Supervising, Software, Methodology, Formal analysis, Writing—review & editing. Mohamed Hossam Abdel Gafar: Software, Investigation, Visualization, Resources, Data curation, Formal analysis, Writing—original draft, Writing—review & editing. Naglaa Fawzy: Validation, Conceptualization, Methodology. Ahmed Y. Sayed: Validation, Conceptualization, Methodology, Formal analysis, Writing—review & editing. All authors read and approved the final paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Houssein, E.H., Hossam Abdel Gafar, M., Fawzy, N. et al. Recent metaheuristic algorithms for solving some civil engineering optimization problems. Sci Rep 15, 7929 (2025). https://doi.org/10.1038/s41598-025-90000-8
