Abstract

Recognition is a high-level computer vision task that categorizes objects by identifying and evaluating their key distinguishing characteristics. Categorization is important in botany because it makes the relationships between flower species easier to understand and organize. Because flower species vary widely and some closely resemble one another, classifying flowers is difficult. Categorizing flower species effectively therefore requires an appropriate deep learning classification technique. This leads to the design of the proposed Sobel Restricted Boltzmann VGG19 (SRB-VGG19), which is inspired by the VGG19 model and is highly effective at classifying flower species. This research contributes in three main ways. The first contribution is dataset preparation: features are extracted with the Sobel filter and a Restricted Boltzmann Machine (RBM) neural network trained through unsupervised learning. The second contribution improves the VGG19 and DenseNet models for supervised learning to classify flower species into five groups. The third contribution addresses data poisoning of the input data samples by the Fast Gradient Sign Method (FGSM) attack, which is countered by forming an Adversarial Noise Layer (ANL) in the dense block. The Flowers Recognition KAGGLE dataset was preprocessed to extract only the important features using the Sobel filter, which computes the image intensity gradient at every pixel. The Sobel-filtered images were then applied to the RBM to generate RBM Component Vectorized (RBMCV) flower images, which were divided into 3400 training and 850 testing images. To determine the best CNN, the training images were fitted to existing CNN models.
According to the experimental results, VGG19 and DenseNet classify flower species with an accuracy above 80%, so they were fine-tuned to design the proposed SRB-VGG19 model. The novelty of this research lies in designing two sub-models, the SRB-VGG FCL model and the SRB-VGG Dense model, and in validating the security countermeasure of the model against the FGSM attack. The proposed SRB-VGG19 begins by forming the RBMCV input images, which include only the essential flower edges. The RBMCV flower images were used to train the SRB-VGG FCL and SRB-VGG Dense models, and their performance was analyzed. Compared with current deep learning models, the implementation results show that the proposed SRB-VGG19 Dense model classifies flower species with a high accuracy of 98.65%.

Introduction

Recognition, a high-level computer vision task, is founded on classifying objects by acquiring and examining their primary distinguishing characteristics1. Physical characteristics such as color, form, and texture have been used to differentiate between flowers. Finding appropriate color, form, and feature descriptors is challenging, and enabling the classifier to choose the important features is equally challenging. For a robotic apple pollination system, identifying the king flowers within flower clusters is essential. During the synthesis period, apple flower clusters open successively from the king flower to the lateral flowers, providing a chance for selective pollination2. As a result, monitoring the flower’s blossoming stage is essential to precisely identify the pollination targets and timing3. A flower recognition system was created using ANN in conjunction with computational image analysis methods. Several classification algorithms have been developed to recognize different species of flowers, including genetic algorithms, artificial neural networks, and other machine learning algorithms4. Flowers belong to a broad class of plant life; many species have similar characteristics and appearances, while others differ markedly from one another. Because of these similarities and differences, identifying flowers with a high degree of accuracy is an extremely difficult task. Identifying flowers from their images using standard methods such as visiting websites, searching for keywords on search engines, or consulting floral reference books has proven ineffective5.

Current automated computer vision systems for flower identification rely on manually designed methods that perform inadequately and only function in certain situations. An automated method for identifying flowers may be used with a variety of flower species and is resistant to uncontrolled settings6. To produce apples of a greater quality and quantity, management practices that accommodate the physiological condition of individual trees must be implemented7. A versatile method for segmenting images is employed, incorporating two feature learning methods such as CNN and MLP networks8. In particular, the DL network’s output gives the active contour model a shape restriction9. Given the diversity of the species and the potential for visual similarity between many flower species, flower classification is a difficult undertaking. The process of classifying flowers entails a number of challenges, such as photos with poor quality and noise; images obscured by plant stems and foliage, and occasionally even by insects10. Computerized living plant recognition focuses on extracting stable features from plants. Consequently, evidence found in leaf veins is crucial for recognizing living plants. Neural networks with deep layers are frequently utilized in computer vision algorithms due to their increased capacity for recognizing patterns in images. Deep learning is a potent artificial intelligence approach that is becoming a common method of text and image recognition and categorization in modern times. The deep learning technology can be used to extend its application towards flower species recognition. Deep learning technology is essential for expanding its use in interdisciplinary fields to include diagnostics, forecasting, and decision-making. 
As deep learning technology has advanced to automate digital agriculture, malicious users may attempt to damage the trained deep learning model and breach model confidentiality through security vulnerabilities11,12,13,14,15,16,17. The input dataset has the greatest potential to alter the DL model’s prediction. Therefore, the proposed model, SRB-VGG19, considers and addresses adversarial attempts to alter the training dataset samples in the form of a data poisoning FGSM attack on the DL model.

Paper organization

This article is organized as follows. Section “Motivation and objectives of this research work” presents the contributions of this research work. Section “Background study” summarizes the methodology and findings of the background study articles on flower species recognition. Section “Research methodology of proposed SRB-VGG19” provides the research methodology of the proposed SRB-VGG19. Section “Mathematical modelling of SRB-VGG19” explores the mathematical modeling of the proposed SRB-VGG19. The implementation results of the proposed SRB-VGG19 are discussed in Section “SRB-VGG19 implementation results and discussions”. Finally, Section “Security countermeasure FGSM attack implementation results and discussion” concludes the proposed SRB-VGG19 model with the findings and future enhancements.

Motivation and objectives of this research work

Classifying flowers can be challenging because there is a lot of variation among flower species and some may look similar to one another. Effective flower species classification requires a suitable classification method that makes use of deep learning technologies. This motivates the design of the proposed SRB-VGG19, which is inspired by the VGG19 model and is highly effective at classifying flower species. A dense layered block is incorporated into VGG19 to capture high-level representations of the data, which improves feature extraction and increases the model’s ability to classify accurately. This in turn motivates the design of the proposed SRB-VGG19 Dense ANL model, which can deliver consistent performance on noisy, distorted input data in addition to achieving high accuracy on clean data. The motivation of this research is to close the gap between the latest deep learning architectures and the growing demand for reliable AI systems that function consistently in challenging real-world situations. By using adversarial training, this work balances the model’s robustness against adversarial attacks with high performance on clean data. The proposed SRB-VGG19 Dense ANL model offers an innovative approach for boosting the resilience and versatility of deep convolutional networks, opening the door to more dependable AI systems in real-world settings. The first objective of this work is to adapt the Sobel filter and the Restricted Boltzmann Machine approach, through unsupervised learning, for feature extraction. The second objective is to fine-tune the VGG19 model by integrating it with the dense layered block. The third objective is to address the issue of data poisoning attacks through the Fast Gradient Sign Method applied to the input data samples18,19.

Contribution of this research work

The main contributions of this research are fourfold.

(i) The first contribution is dataset preparation by means of feature extraction, using the Sobel filter and the RBM neural network approach through unsupervised learning to form the RBM Component Vectorized (RBMCV) flower images.

(ii) The second contribution is the design of a four-layered dense block for embedding in the SRB-VGG19 Dense model.

(iii) The third contribution is the fine-tuning of VGG19 and DenseNet to design the proposed SRB-VGG19 with the following sub-models. The RBMCV flower images are processed with five convolutional blocks to form RBMCV flower feature maps.

a. The SRB-VGG19 FCL model retrieves the RBMCV flower feature maps and processes them with two FCL layers along with an additional third FCL layer to generate an optimized RBMCV feature map. The third updated Dense Layer block consists of one Batch Normalization, one ReLU, and one dropout layer. Finally, the SRB-VGG19 FCL model ends with a softmax layer with five neurons to classify the five flower species.

b. The SRB-VGG19 Dense model retrieves the RBMCV flower feature maps and processes them with two dense blocks to generate an optimized RBMCV feature map. The addition of the four-layered dense block in this model, as shown in Fig. 1, overcomes the vanishing gradient problem. Each dense block is composed of four convolution blocks connected in series. A single convolution block in the dense block consists of batch normalization, ReLU, and convolution, and allows cross-layer connections between two non-adjacent convolution blocks, as shown in Fig. 1. The input to each convolution block contains the feature maps from all the previous convolution blocks. Finally, the SRB-VGG19 Dense model ends with a softmax layer with five neurons to classify the five flower species.

(iv) The fourth contribution addresses the data poisoning FGSM attacks to which the proposed SRB-VGG19 is vulnerable by designing the SRB-VGG19 Dense ANL model. Inspired by the use of ANL against the FGSM attack, the ANL layer was created as a hidden layer between the convolutional layers of the four-layered dense block, as shown in Fig. 2.

Background study

Roses are vulnerable to severe temperature swings, drought damage, and insufficient water. Because of the benefits of a controlled environment, greenhouse production has grown in order to meet expanding demand with the best possible supply. ML models were applied to a dataset from rose greenhouse cultivation to categorize the greenhouse conditions that best improve the condition of roses and maximize rose production1. As a result, monitoring the flower’s blossoming stage is essential to precisely identify the pollination targets and timing. A machine vision system was created to gather pictures of two different apple types in an orchard setting. To recognize and locate the most prominent flowers in an apple flower dataset during the blooming stage, from initial king bloom to full bloom, a Mask R-CNN-based recognition system and a king flower segmentation technique were built2. Beginning with image improvement, dataset images are cropped to generate a more suitable dataset. Subsequently, image segmentation using an active contour was employed to distinguish the foreground from the background. Three features, color, texture, and shape, were extracted, with GLCM serving as the texture descriptor and Invariant Moments as the shape descriptor3.

Flowers belong to a broad class of plant life; many species have similar characteristics and appearances, while others differ markedly from one another. Because of these similarities and differences, identifying flowers with a high degree of accuracy is an extremely difficult task. Identifying flowers from their images using standard methods such as visiting websites, searching for keywords on search engines, or consulting floral reference books has proven ineffective4. Atrous convolution makes it possible to regulate the resolution at which Deep CNN feature responses are computed. It also efficiently expands the filter’s field of view to include more context without adding parameters or computation. Objects of different scales are pooled in an atrous spatial pyramid5. Adding a CNN avoids the need for manually constructed feature extractors and strengthens the model to attain optimal accuracy. Finally, the produced models were analyzed with a straightforward visualization technique to reveal the pertinent vein configurations6. Apple blossoming abundance was detected in a high-density apple orchard by analyzing HSL (hue, saturation, and luminance) photographs to determine the number of flower clusters (FC) of individual trees7.

The MLP and CNN networks were expanded by adding contextual information about how the image data was collected, which correlates with some of the observed appearance changes and class distributions in the data. The watershed segmentation (WS) and circular Hough transform (CHT) algorithms are used to process the pixel-wise fruit segmentation output in order to identify and count individual apple fruits8. The apple, peach, and pear flower datasets are used to fine-tune the semantic segmentation network DeepLab-ResNet, which is then used for detection. An active contour model is used to improve the network’s coarse segmentation results, presuming that the network can identify the flower object approximately and that the flower’s color differs from the background9. Neural Architecture Search-Feature Pyramid Network (NAS-FPN) together with Faster-RCNN, deployed with transfer learning, was adopted to perform flower classification20. The minimal Redundancy Maximum Relevance (mRMR) approach was used in the Deep CNN feature selection algorithm to choose the more effective flower features. Using the collected features, an SVM classifier with a Radial Basis Function (RBF) kernel was used to categorize the floral species21. For instance, a set of aesthetic characteristics was taken from a given set of flower images and used to generalize to new images with potentially unknown flowers, rather than training for a single category of flowers based solely on human-developed features like SIFT and HOG. A sparse representation classification system is used to forecast the characteristics of a floral image from any category. To perform better during the flower classification stage, the most discriminative features were identified using a genetic algorithm22.
In the Blockchain Data Lake framework, the original image data was verified for accuracy and uniqueness using the Color Consistency Automatic Network (CCAN) based flower classification method23. Pattern similarities and variances are distinguished using a multilayer NN and a pairwise confusion loss function based on pair similarity. The fine grain size serves the objective function of enabling the various flower patterns24. The Single Shot Detector (SSD) and Faster Region-based CNN (Faster-RCNN), along with a transfer learning model, are used to locate, identify, and categorize floral items25. An image-based identification tool that allows users to query or add information to the framework was made accessible as a mobile and internet-based application that synchronizes with the expanding data. This makes it possible to query the system year-round and to create a plant observation with complementary images26. A multimodal CNN represents each word in a text as a vector and extracts visual information. Then, using a CNN model for text data, the textual features are retrieved27. Owing to the multitude of plant species variations, inaccurate image pre-processing methods such as edge detection and contour extraction, and a lack of suitable models or representation strategies, computer-aided plant authentication remains one of the most difficult tasks in CV28. The flowers were categorized using textural characteristics, such as the Gray level co-occurrence matrix (GLCM). The flower classification was done using multidimensional genetic association rule mining29.

Apple trees need to have some of their blossoms and fruitlets cut early in the period of development in order to maximize fruit production. The amount of blossoms in the orchard, or the bloom intensity, indicates how much needs to be removed30. Few labeled flower data are needed for multi-class fruit blossom detection in the Deep CNN framework, which increases the training process by lowering the sample size and broadens the model’s relevance to a larger variety of flower categories. Location guidance module (LGM) was utilized to emphasize foreground regions in query images that resembled the bloom references given by support images in order to increase the likelihood of flower31. The Ghost module was used to replace the Convolution module in the Neck section of YOLOv5’s network in order to detect apple blossoms. ShuffleNetv2 used Channel Splitting technique to lower the memory access cost. Ghost module kept the same detection performance while lowering the volume layer’s processing cost32. In order to create a consistent environment for accurate luminescence and color assessment even in the presence of fluctuating light enlightenment the color and brightness compensation approach employed a scanning method. Adaptive camera parameter modification was calibrated based on the relationships between color discrepancies and camera attributes in order to produce the optimum color for tomato flower recognition33. Table 1 shows the inferences from the literature review.

A king flower segmentation algorithm and a Mask R-CNN-based detection model were created to identify and locate the king flowers in an apple blossom dataset, from the initial king flower to the complete flowering condition34. Contrast Limited Adaptive Histogram Equalization (CLAHE) and filtering approaches are used for pre-processing in the ensemble deep learning-based flower categorization model. During the optimal pattern extraction step, which operates on the pre-processed images, the best hybrid patterns are extracted from the Local Binary Pattern (LBP) and Local Vector Pattern (LVP)35. The Xception CNN incorporates both channel attention and spatial attention mechanisms. The network was refined by combining Triplet Loss and Softmax Loss in the network loss layer, resulting in a feature embedding space for flower classification36. The limitations and advantages of the literature are shown in Table 2.

Research methodology of proposed SRB-VGG19

The overall research methodology of the proposed SRB-VGG19 is shown in Fig. 3, and the overall framework of the proposed SRB-VGG19 model is depicted in Fig. 4. Stage 1 of the proposed SRB-VGG19 model collects the images from the Flowers recognition dataset. Stage 2 forms the Sobel-filtered RBM Component Vectorized flower images. The flower images are pre-processed by forming the Sobel-filtered image: a spatial gradient measurement is performed on the image, and only the high spatial frequency edges are retained.

The Sobel-filtered image contains only the high-intensity pixels that correspond to the image edges, and its module is shown in Fig. 5. The Sobel-filtered images are fitted to the RBM to create RBM component vectorized images. The RBMCV flower image contains only the essential edges of the image, which help in identifying the flower. Stage 3 focuses on selecting the best CNN for classifying the flower species. Stage 4 proposes the design of the SRB-VGG19 model by replacing the FCL of the existing VGG19 with the FCL and dense block. The Sobel filter output is formed by first retrieving the pixel values of the image. The horizontal and vertical gradients of the image are then found in order to compute the final gradient. The horizontal gradient is obtained from the difference between pixels in adjacent columns of the image. The vertical gradient is obtained from the difference between pixels in adjacent rows of the image.

The Sobel-filtered images are fitted to the RBM, an unsupervised ANN that creates the RBM component vectorized images from the probabilistic values of the pixels in the Sobel-filtered image; its module is shown in Fig. 6. To determine the best CNN model, the training images from the flower recognition dataset are fitted using the current models, including DenseNet, AlexNet, LeNet, VGG19, ResNet, Inception, and Xception. According to the experimental results, VGG19 and DenseNet classify flower species with an accuracy above 80%. VGG19 and DenseNet were therefore selected, and their structures fine-tuned, to design the proposed SRB-VGG19 FCL and SRB-VGG19 Dense models.

SRB-VGG-19 FCL model architecture

The proposed SRB-VGG19 FCL model initially begins by using the Sobel filter and RBM to turn the input image into an RBM Component Vector of Flowers that only includes the essential flower edges. After that, RBMCV flower feature maps are produced by processing the RBM Component Vector of Flower images through five convolutional blocks. In order to create an optimized RBMCV feature map, the acquired important feature maps are then processed using a FCL made up of three dense layers. The third updated Dense Layer block, which consists of one Batch Normalization, one ReLU, and one dropout layer, is then fitted to the optimized feature map. The comparison of the VGG19 in SRB-VGG19 FCL model is shown in Fig. 7.

The SoftMax layer comes after the FCL. After that, the optimized RBMCV feature map is used for batch normalization, which centers the aligned features in the active region and removes the unaligned optimized RBMCV feature map weights from focus. Subsequently, nonlinearity is added to the aligned features using the ReLU activation function, assisting the feature vector in learning its complex form. The erroneous noise in the aligned weights of the optimized RBMCV feature map is then removed using dropout and the SRB-VGG19 FCL model architecture is shown in Fig. 8.

SRB-VGG-19 dense model architecture

The proposed SRB-VGG19 Dense model initially begins by using the Sobel filter and RBM to turn the input image into an RBM Component Vector of Flowers that only includes the essential flower edges. The RBMCV flower images are processed with five convolutional blocks to form RBMCV flower feature maps. The comparison of the VGG19 in SRB-VGG19 Dense model is shown in Fig. 9.

The SRB-VGG19 Dense model retrieves the RBMCV flower feature maps and processes them with two dense blocks to generate an optimized RBMCV feature map. Each dense block is composed of four convolution blocks connected in series. A single convolution block in the dense block consists of batch normalization, ReLU, and convolution, and allows cross-layer connections between two non-adjacent convolution blocks. The input to each convolution block contains the feature maps from all the previous convolution blocks. Finally, the SRB-VGG19 Dense model ends with a softmax layer with five neurons to classify the five flower species. The SRB-VGG19 Dense model architecture is shown in Fig. 10.

SRB-VGG-19 dense ANL model architecture

The ANL layer was added to the four-layered dense block of the SRB-VGG19 Dense model, and the resulting Dense ANL block is shown in Fig. 11.

When a data poisoning attack adds adversarial noise data samples to the input in order to confuse the trained DL model, the performance of the system may degrade. The model then treats the fake noise samples as real samples, training proceeds on the noisy data, and the data poisoning attack succeeds. To overcome this, an Adversarial Noise Layer (ANL), crafted with noise, can be added as an intermediate layer when designing the CNN. The SRB-VGG19 Dense ANL model was designed to address the adversarial data samples generated by the FGSM attack.

Mathematical modelling of SRB-VGG19

The proposed SRB-VGG19 model begins by collecting 4250 flower images, 850 from each of five classes (daisy, dandelion, sunflower, tulip, and rose), from the publicly available Flowers recognition dataset for classifying the five flower species. The images are denoted in Eq. (1).

where \({{FLO}_{00}}_{1}\) denotes single flower image \({{CTLS}_{00}}_{1}\) as in the Eq. (2).

The flower images are applied to data processing module to generate the RBMCV flower images.

Problem formulation of unsupervised network feature extraction

The input data is processed into the Sobel-filtered vector of flower images with the following steps.

Step 1 The input flower image \({FLO}^{IN}\) is denoted as a vector with the pixel property given in Eq. (3).

Step 2 Get the row pixel \(RowPixel\) and column pixel \(ColumnPixel\) values of each image in the Flowers recognition dataset as in (4) and (5). Here \({FLO}_{{00}_{1}}^{IN}\) denotes a single flower image.

Step 3 Find the horizontal gradient \({G}_{h}\) of the flower image. The horizontal gradient is calculated by taking differences in the flower image values between the columns as in (6). Here \(AColumn\) and \(BColumn\) denote the first and second columns of the \(ColumnPixel\) flower image, respectively. The horizontal gradient \({G}_{h}\) should be normalized before processing as in (7).

Step 4 Find the vertical gradient \({G}_{v}\) of the flower image. The vertical gradient is calculated by taking differences in the flower image values between the rows as in (8). Here \(ARow\) and \(BRow\) denote the first and second rows of the \(RowPixel\) flower image, respectively. The vertical gradient \({G}_{v}\) should be normalized before processing as in (9).

Step 5 Compute the final gradient \(Grad\) as in Eq. (10).

Step 6 Predefine the threshold value of the pixel and mark the edge features accordingly as in Eqs. (11) and (12).

Step 7 Connect all the \(Edge\) features to form the Sobel-filtered image \(SobelImage\) as in (13).
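The steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: it assumes the standard 3×3 Sobel kernels, a Euclidean gradient magnitude for Step 5, normalization of the magnitude rather than of each gradient separately, and an illustrative threshold of 0.5. The function name `sobel_edges` is hypothetical.

```python
import math

# Standard 3x3 Sobel kernels (an assumption; Eqs. (6)-(9) are not reproduced in this text).
KX = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # responds to intensity changes across columns
KY = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # responds to intensity changes across rows


def sobel_edges(image, threshold=0.5):
    """Return a binary edge map for a 2-D grayscale image given as a list of rows."""
    rows, cols = len(image), len(image[0])
    grad = [[0.0] * cols for _ in range(rows)]
    # Border pixels are skipped and stay at zero.
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gh = gv = 0.0
            for i in range(3):
                for j in range(3):
                    pixel = image[r + i - 1][c + j - 1]
                    gh += KX[i][j] * pixel   # horizontal gradient G_h
                    gv += KY[i][j] * pixel   # vertical gradient G_v
            grad[r][c] = math.hypot(gh, gv)  # final gradient magnitude
    peak = max(max(row) for row in grad)
    if peak == 0:
        return [[0] * cols for _ in range(rows)]
    # Normalize to [0, 1] and mark pixels at or above the threshold as edges.
    return [[1 if g / peak >= threshold else 0 for g in row] for row in grad]


# A 5x5 image with a vertical step between the second and third columns.
step_image = [[0, 0, 1, 1, 1] for _ in range(5)]
edge_map = sobel_edges(step_image)
```

In practice a library routine would replace the explicit loops; the loops are kept here only to mirror the step-by-step description.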

Problem formulation of RBM component vectorized image

The input Sobel-filtered image is processed to form the RBMCV flower images with the following steps. The RBM is an unsupervised two-layered ANN that extracts the essential components from the image using a probabilistic stochastic maximum likelihood function.

Step 1 Let the input \({SobelImage}^{IN}\) be denoted as a vector with the pixel property as in (14).

Step 2 Identify the visible and invisible pixels of each image from \({SobelImage}^{IN}\). Let \({SIm}_{{00}_{1}}^{IN}\) represent the Sobel-filtered flower image. As the images are two-dimensional, each image has an alpha channel, and each pixel in the image has an alpha value of either ‘0’ or ‘1’. If the alpha value of the pixel is ‘0’, the pixel is an invisible pixel. If the alpha value of the pixel is ‘1’, the pixel is a visible pixel, as denoted in Eqs. (15) to (19).

Step 3 Make the visible pixels \(VPixel\) the input nodes \(In\) and the invisible pixels \(InVPixel\) the hidden nodes \(Hn\) of the RBM network as in (20) and (21).

Step 4 Find the energy \(Energy\left(In,Hn\right)\) of the RBM network as in (22).

Here \({a}_{i}\) denotes the activation function of the node, \({b}_{i}\) denotes the bias function of the node, and \({W}_{ij}\) denotes the weight of the node.

Step 5 Find the RBM component vector \(RBMCV\) of the image using the Boltzmann distribution, Gibbs sampling, and the Contrastive Divergence step as in (23) to (27).
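Steps 4 and 5 can be illustrated with the textbook binary RBM. The sketch below assumes the standard energy function E(v, h) = -Σ a_i v_i - Σ b_j h_j - Σ v_i W_ij h_j, with `a` treated as the visible-unit biases and `b` as the hidden-unit biases, and a single Contrastive Divergence (CD-1) update. These are assumptions about Eqs. (22) to (27), which are not reproduced in this text, and all function names are hypothetical.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def energy(v, h, a, b, W):
    """Standard RBM energy: -(sum_i a_i v_i + sum_j b_j h_j + sum_ij v_i W_ij h_j)."""
    visible_term = sum(ai * vi for ai, vi in zip(a, v))
    hidden_term = sum(bj * hj for bj, hj in zip(b, h))
    interaction = sum(v[i] * W[i][j] * h[j]
                      for i in range(len(v)) for j in range(len(h)))
    return -(visible_term + hidden_term + interaction)


def hidden_probs(v, b, W):
    """P(h_j = 1 | v) for every hidden unit."""
    return [sigmoid(b[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(b))]


def visible_probs(h, a, W):
    """P(v_i = 1 | h) for every visible unit."""
    return [sigmoid(a[i] + sum(W[i][j] * h[j] for j in range(len(h))))
            for i in range(len(a))]


def cd1_step(v0, a, b, W, lr=0.1, rng=random):
    """One CD-1 update, applied in place to a, b, and W; returns hidden probabilities."""
    ph0 = hidden_probs(v0, b, W)
    h0 = [1 if rng.random() < p else 0 for p in ph0]   # Gibbs sample of the hidden units
    v1 = visible_probs(h0, a, W)                        # reconstruction (probabilities)
    ph1 = hidden_probs(v1, b, W)
    for i in range(len(a)):
        for j in range(len(b)):
            W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
        a[i] += lr * (v0[i] - v1[i])
    for j in range(len(b)):
        b[j] += lr * (ph0[j] - ph1[j])
    return ph1
```

After training, the hidden-unit probabilities for an image can serve as its component vector; whether this matches the RBMCV construction exactly is not confirmed by the text.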

Proposed SRB-VGG19 modelling

The RBMCV flower image input data \(RBMCV\) was processed with existing CNN models to choose the best CNN model. According to the experimental results, VGG19 and DenseNet classify flower species with an accuracy above 80%. VGG19 and DenseNet were therefore selected, and their structures fine-tuned, to design the proposed SRB-VGG19 FCL and SRB-VGG19 Dense models. The five convolutional blocks are the same in both the SRB-VGG19 FCL and SRB-VGG19 Dense models. The SRB-VGG19 model begins with two convolutional blocks, each with two kernel filters and a single max pooling, followed by three convolutional blocks, each with four kernel filters and a single max pooling. The convolution block operates with the following steps.

Step 1 Retrieve the \(RBMCV\) input image. Let \(W\) denote the width, \(H\) the height, and \(D\) the depth of the image, with \(K\) the number of filters, \(F\) the spatial extent, \(S\) the stride, and \(P\) the padding. The convolution then forms a new image whose volume is given in (28) to (30).
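The output volume in Step 1 is commonly computed with the standard convolution arithmetic W2 = (W - F + 2P)/S + 1, H2 = (H - F + 2P)/S + 1, and D2 = K. The helper below is a sketch under that assumption, since Eqs. (28) to (30) are not reproduced in this text. The function name and the 224×224×3 input size used in the example are illustrative, not taken from the paper.

```python
def conv_output_volume(w, h, d, k, f, s, p):
    """Output (width, height, depth) for K filters of extent F, stride S, padding P.

    The input depth d sets the depth of each filter but does not affect the output shape.
    """
    if (w - f + 2 * p) % s or (h - f + 2 * p) % s:
        raise ValueError("filter does not tile the padded input with this stride")
    w2 = (w - f + 2 * p) // s + 1
    h2 = (h - f + 2 * p) // s + 1
    return w2, h2, k


# A 3x3 convolution with stride 1 and padding 1 preserves the spatial size.
same_size = conv_output_volume(224, 224, 3, 64, 3, 1, 1)
# The same arithmetic with F = 2, S = 2, P = 0 describes 2x2 pooling, which halves it.
halved = conv_output_volume(224, 224, 64, 64, 2, 2, 0)
```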

Step 2 Take the \(RBMCV\) input image and the kernel \(K\), where \(m\) indexes the rows and \(n\) indexes the columns. The convolutional layer forms a numerical representation of the image that allows the neural network to extract the essential patterns from it. The convolution with a 3 × 3 kernel filter forms the output as in (31).

Step 3 The convolved image \(RBMCVcon\) is then passed through 2 × 2 max pooling. The pooling operation reduces the spatial size of the image in order to reduce the computation time. Let \(Wc\) denote the width, \(Hc\) the height, and \(Dc\) the depth of the \(RBMCVcon\) convolved image, with \(Fc\) the spatial extent and \(Sc\) the stride, as in (32) to (37). The dimension of the pooled RBMCV image is shown in (38).

Steps 2 and 3 are repeated for the five convolutional blocks to form the final pooled image \(RBMCVPol\).
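Steps 2 and 3 can be illustrated on a single-channel image. The sketch below assumes zero padding of 1 and stride 1 for the 3×3 convolution (implemented as cross-correlation, as is usual in CNN libraries) and stride 2 for the 2×2 max pooling. Both function names are hypothetical, and multi-channel filters and learned weights are omitted for brevity.

```python
def conv2d_same(image, kernel):
    """3x3 cross-correlation with zero padding 1 and stride 1 (output size equals input size)."""
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            total = 0.0
            for m in range(3):          # kernel rows
                for n in range(3):      # kernel columns
                    rr, cc = r + m - 1, c + n - 1
                    if 0 <= rr < rows and 0 <= cc < cols:   # zero padding outside the image
                        total += kernel[m][n] * image[rr][cc]
            out[r][c] = total
    return out


def max_pool_2x2(image):
    """2x2 max pooling with stride 2; halves each spatial dimension."""
    return [[max(image[r][c], image[r][c + 1], image[r + 1][c], image[r + 1][c + 1])
             for c in range(0, len(image[0]) - 1, 2)]
            for r in range(0, len(image) - 1, 2)]


grid = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
pooled = max_pool_2x2(conv2d_same(grid, identity))
```

Because each block ends in one such pooling, five blocks shrink each spatial dimension by a factor of 2^5 = 32; a hypothetical 224-pixel side would become 7.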

Proposed SRB-VGG19 FCL problem formulation

In the SRB-VGG19 FCL model, the pooled image \(RBMCVPol\) was passed to two fully connected layers (FCL) that make the prediction from the extracted features. The FCL is a multilayer perceptron (MLP) that maps the activation volume of the \(RBMCVPol\) image, produced by the combination of the previous layers, into a class probability function. The volume of the \(RBMCVPol\) image is described by its width \(Wp\), height \(Hp\) and depth \(Dp\). For an FCL network with \(l\) layers, the depth of the connection is \(l-1\). The FCL maps the activation volume \({m}_{a}^{(l-1)} \times {m}_{b}^{(l-1)}\times {m}_{c}^{\left(l-1\right)}\) from the previous layers into a probability distribution. Here \(i\) denotes the number of layers in the FCL network, and the output of the FCL is given in (36) and (37).
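As a rough illustration of how a fully connected head turns a pooled activation volume into class probabilities, the sketch below flattens a volume, applies two dense layers and ends with a five-way softmax. The pooled shape (7, 7, 512), the hidden width of 64 and the random weights are assumptions made for the example; they are not taken from the paper.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1-D vector."""
    shifted = z - np.max(z)
    exp = np.exp(shifted)
    return exp / exp.sum()

rng = np.random.default_rng(0)

# Assumed pooled volume Wp x Hp x Dp (hypothetical shape).
rbmcv_pol = rng.standard_normal((7, 7, 512))
x = rbmcv_pol.reshape(-1)                 # flatten to a vector

hidden_units = 64                         # hypothetical layer width
w1 = rng.standard_normal((hidden_units, x.size)) * 0.01
b1 = np.zeros(hidden_units)
w2 = rng.standard_normal((5, hidden_units)) * 0.01   # five flower classes
b2 = np.zeros(5)

hidden = np.maximum(0.0, w1 @ x + b1)     # first FCL with ReLU
probs = softmax(w2 @ hidden + b2)         # second FCL with softmax output
predicted_class = int(np.argmax(probs))
```

The output `probs` is a probability distribution over the five flower classes, and the predicted class is the index with the largest probability.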

Proposed SRB-VGG19 dense model problem formulation

In the SRB-VGG19 Dense model, the pooled image \(RBMCVPol\) was passed to two dense blocks, crafted with noise by an ANL in the intermediate layer, that make the prediction from the adversarial FGSM data sample features. Each dense block contains four convolutional layers, where each layer consists of batch normalization, ReLU and a single convolution, followed by concatenation of the feature maps. The four convolutional layers are thus concatenated to form the four-layered dense block. Assume the input to the dense block is \(RBMCVPol\). The four-layered dense block is then formed as in (38) to (42).

Here \(Y_{1}, Y_{2}, Y_{3}, Y_{4}\) represent the outputs of the first, second, third and fourth convolutions, respectively.
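The concatenation pattern of a four-layered dense block can be sketched as follows. This is a simplified NumPy illustration under stated assumptions: each layer is modelled as per-channel normalization (a stand-in for batch normalization), ReLU and a 1×1 convolution with random weights, and the growth rate of 32 and the input shape (7, 7, 512) are hypothetical. The function names are illustrative, not the authors'.

```python
import numpy as np

def conv_layer(x, growth_rate, rng):
    """Normalization -> ReLU -> 1x1 convolution (simplified stand-in)."""
    mean = x.mean(axis=(0, 1), keepdims=True)
    std = x.std(axis=(0, 1), keepdims=True) + 1e-5
    activated = np.maximum(0.0, (x - mean) / std)
    weights = rng.standard_normal((x.shape[-1], growth_rate)) * 0.1
    return activated @ weights            # shape (H, W, growth_rate)

def dense_block(x0, num_layers=4, growth_rate=32, seed=0):
    """Each layer sees the concatenation of the input and all earlier outputs."""
    rng = np.random.default_rng(seed)
    features = x0
    outputs = []                          # Y_1 ... Y_4
    for _ in range(num_layers):
        y = conv_layer(features, growth_rate, rng)
        outputs.append(y)
        features = np.concatenate([features, y], axis=-1)
    return features, outputs

rbmcv_pol = np.random.default_rng(1).standard_normal((7, 7, 512))
block_out, ys = dense_block(rbmcv_pol)
```

Under these assumptions the channel count grows from 512 to 512 + 4 × 32 = 640, because every layer output is appended to the running feature map.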

Problem formulation of FGSM attack ANL module of SRB-VGG19 dense ANL

In the SRB-VGG19 Dense ANL model, the pooled image \(RBMCVPol\) was passed to two dense blocks that make the prediction from the extracted features. Each dense block contains four convolutional layers, where each layer consists of batch normalization, an intermediate ANL that operates in two cycles, ReLU and a single convolution, followed by concatenation of the feature maps. The four convolutional layers with their ANLs are thus concatenated to form the four-layered Dense ANL block. The FGSM attack was addressed by the SRB-VGG19 Dense ANL model, and the adversarial noise samples were created using the FGSM attack. Here \({{FLO}_{00}}_{1}\) is a single original flower image and \({{AdvFLO}_{00}}_{1}\) is the corresponding single adversarial noise flower image. The creation of the adversarial noise data sample by the FGSM attack for a single image is given in (43).

Here \(\epsilon\) denotes the hyperparameter that controls the magnitude of the noise, \(\varphi\) denotes the SRB-VGG19 Dense ANL parameters, \(Label\) denotes the true flower class label of the input dataset and \(Z\) denotes the cost function of the SRB-VGG19 Dense ANL model. The ANL was added inside the dense block. Let \(X_{0}\) be the input of the first convolutional layer of the dense block, which is \(RBMCVPol\), as in (44). The number of layers is \(t\), which is four, and \(x_{t}\) is the output of the \(t^{th}\) layer. \(Y_{t}^{-}\) denotes the sub-network from the \((t+1)^{th}\) layer to the last layer of \(Y_{t}^{+}\) in the ANL, based on equations (45) to (47).
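The FGSM construction can be illustrated on a toy model. The sketch below assumes the standard FGSM form, in which the adversarial image is the original image plus \(\epsilon\) times the sign of the gradient of the cost with respect to the input. To keep the example self-contained, it uses a binary logistic model with cross-entropy cost in place of the SRB-VGG19 Dense ANL network; the weights, inputs and the function name `fgsm` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm(x, label, weights, bias, epsilon):
    """Standard FGSM step: x_adv = x + epsilon * sign(dZ/dx).

    For a logistic model with cross-entropy cost Z, the input gradient
    is (prediction - label) * weights.
    """
    prediction = sigmoid(weights @ x + bias)
    grad_x = (prediction - label) * weights
    x_adv = x + epsilon * np.sign(grad_x)
    return np.clip(x_adv, 0.0, 1.0)       # keep pixel values in [0, 1]

# Toy three-pixel "image" with true label 1 (illustrative values only).
flo = np.array([0.2, 0.5, 0.8])
weights = np.array([1.0, -2.0, 0.5])
adv_flo = fgsm(flo, label=1.0, weights=weights, bias=0.0, epsilon=0.1)
```

Each pixel moves by at most \(\epsilon\), in the direction that increases the cost for the true label, which is why small values of \(\epsilon\) yield perturbations that are hard to see.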

The adversarial noise \(\eta_{t}\) was created by the FGSM method, as given in (48). Here \(r\) denotes the random scalar that controls the magnitude of the noise, \(s(x_{t})\) denotes the standard deviation of the input \(x_{t}\), \(\oint\) denotes the Gaussian distribution hyperparameter and \(\nabla_{x_{t}}\) denotes the gradient at the \(t^{th}\) layer. The ANL simply adds the adversarial noise \(\eta_{t}\) to the input \(x_{t}\) to generate \(\widehat{x_{t}}\), which is passed to the second cycle of the ANL.
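A possible reading of this noise construction is sketched below. The exact form of (48) is not reproduced in this section, so the code assumes, purely for illustration, that the noise is the layer gradient normalized to unit length and scaled by the random scalar \(r\) and the standard deviation \(s(x_{t})\), with \(r\) drawn from a zero-mean Gaussian. The value of `sigma`, the tensor shapes and the function name `anl_noise` are hypothetical, and the random `grad_t` stands in for the gradient obtained in the first cycle.

```python
import numpy as np

def anl_noise(x_t, grad_t, r):
    """Assumed form: eta_t = r * s(x_t) * grad_t / ||grad_t||_2."""
    norm = np.linalg.norm(grad_t)
    if norm == 0.0:
        return np.zeros_like(x_t)         # no gradient, no noise
    return r * np.std(x_t) * grad_t / norm

rng = np.random.default_rng(0)
x_t = rng.standard_normal((7, 7, 32))     # layer input (hypothetical shape)
grad_t = rng.standard_normal((7, 7, 32))  # stand-in for the cycle-one gradient

sigma = 0.1                               # hypothetical Gaussian scale
r = rng.normal(0.0, sigma)                # random magnitude scalar
eta_t = anl_noise(x_t, grad_t, r)
x_hat_t = x_t + eta_t                     # passed on to the second cycle
```

Normalizing the gradient makes the size of the perturbation depend only on \(r\) and \(s(x_{t})\), so the noise scales with the spread of the activations rather than with the raw gradient magnitude.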

SRB-VGG19 implementation results and discussions

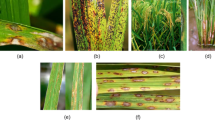

To categorize the five flower species, the proposed SRB-VGG19 starts by gathering 4250 flower images from the openly available Flowers Recognition KAGGLE dataset (https://www.kaggle.com/datasets/alxmamaev/flowers-recognition). The five flower species used in this study are daisy, tulip, rose, sunflower and dandelion, with 850 samples per species. The 4250 samples were divided into 3400 training samples and 850 testing samples. Sample images from the dataset are shown in Fig. 12. TensorFlow-compatible images were formed from the original images and then converted to grayscale; the results are shown in Fig. 13.

From the grayscale image, the Sobel-filtered image was formed by computing the Prewitt vertical and horizontal kernel responses; the results are shown in Fig. 14.

The Sobel-filtered images were formed from the Prewitt vertical and horizontal kernel images. The RBM component vectorized flower images were then formed from the Sobel-filtered images, and the results are shown in Fig. 15.
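The edge-extraction stage can be illustrated with a small NumPy sketch that computes the gradient magnitude of a grayscale image from horizontal and vertical kernel responses. It uses the standard 3×3 Sobel kernels and edge-replicating padding; both are assumptions for the example, and the helper names are hypothetical. The RBM stage is not shown.

```python
import numpy as np

# Standard 3x3 Sobel kernels (horizontal and vertical derivatives).
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T

def filter2d(image, kernel):
    """Slide a 3x3 kernel over the image, replicating border pixels."""
    padded = np.pad(image, 1, mode="edge")
    out = np.zeros(image.shape, dtype=float)
    for i in range(image.shape[0]):
        for j in range(image.shape[1]):
            out[i, j] = np.sum(padded[i:i + 3, j:j + 3] * kernel)
    return out

def sobel_magnitude(gray):
    """Gradient magnitude sqrt(gx^2 + gy^2) at every pixel."""
    gx = filter2d(gray, SOBEL_X)
    gy = filter2d(gray, SOBEL_Y)
    return np.hypot(gx, gy)

# Toy grayscale image: dark left half, bright right half (one vertical edge).
gray = np.zeros((6, 6))
gray[:, 3:] = 1.0
edges = sobel_magnitude(gray)
```

On this toy image the response is non-zero only in the two columns that straddle the step, which is the behaviour that lets the filter retain flower outlines while discarding flat regions.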

The feature maps obtained from the two convolutional blocks and two dense blocks of the SRB-VGG19 Dense model are shown in Figs. 16 to 22.

The RBMCV flower images were fitted to existing CNN models to choose the best CNN, and the resulting performance is shown in Table 2 and Fig. 23. The RBMCV flower images were then fitted to the SRB-VGG19 FCL and SRB-VGG19 Dense models, and the prediction results are shown in Fig. 24.

Security countermeasure FGSM attack implementation results and discussion

The SRB-VGG19 Dense ANL model was validated by creating adversarial noise samples using the FGSM method. The FGSM noise data samples created in the SRB-VGG19 Dense ANL model are shown in Fig. 25 (Table 3).

The performance of the SRB-VGG19 Dense ANL model was analysed by supplying adversarial noise samples with gradients of \(\nabla = 0\), \(\nabla = 2\) and \(\nabla = 4\). The perturbation magnitude of the FGSM noise samples was varied over \(\epsilon = 0.00, 0.01, 0.10, 0.15, 0.20, 0.25\), and the defended accuracy of the SRB-VGG19 Dense ANL model was analysed using 10-fold cross-validation; the mean performance metrics are shown in Table 4. An error analysis of the instances misclassified by the proposed SRB-VGG19 Dense ANL model was carried out with and without the ANL, and is shown in Table 3.

The performance of the proposed SRB-VGG19 Dense ANL model was compared with existing works on flower species classification, as shown in Table 5.
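The 10-fold protocol can be sketched as an index-splitting routine. The example below is illustrative only: it assumes the folds are drawn from all 4250 samples with a fixed shuffle seed, which the text does not specify, and the function name `k_fold_indices` is hypothetical. The list of perturbation magnitudes is taken from the text; model training and evaluation are omitted.

```python
import numpy as np

def k_fold_indices(num_samples, k=10, seed=0):
    """Yield (train_indices, validation_indices) for each of k folds."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(num_samples)
    folds = np.array_split(order, k)
    for i in range(k):
        validation = folds[i]
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, validation

# Perturbation magnitudes reported in the text.
epsilons = [0.00, 0.01, 0.10, 0.15, 0.20, 0.25]

splits = list(k_fold_indices(4250, k=10))
train_idx, val_idx = splits[0]
```

In a full evaluation, each \(\epsilon\) would be paired with every fold, and the accuracy reported for that \(\epsilon\) would be the mean over the ten validation folds.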

Computational complexity of proposed SRB-VGG19 dense ANL model

The computational complexity \(CCom\) of the SRB-VGG19 Dense ANL model depends on the input and output dimensions and is given in (52), where \(N_{In}\) is the number of inputs and \(N_{Out}\) is the number of outputs of the proposed model. The number of inputs depends on the height \(H\) and width \(W\) of the feature map and on the number of input channels \(C_{In}\), as in (53). The number of outputs depends on the kernel size \(K\) of the feature map \(F_{map}\) and on the number of output channels \(C_{Out}\), as in (54).

The proposed model is then refined by adding the ANL to the dense layered block. Its complexity is given in (55), where \(\epsilon\) is the perturbation magnitude that controls the scale of the noise and \(\delta\) is the adversarial noise generated by the proposed model.
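A worked example may help fix the orders of magnitude. Equations (52) to (54) are not reproduced in this section, so the sketch below assumes the common multiply-accumulate count for a convolutional layer: \(N_{In} = H \times W \times C_{In}\), \(N_{Out} = K \times K \times C_{Out}\) and \(CCom = N_{In} \times N_{Out}\). The layer dimensions and the function name are hypothetical.

```python
def conv_complexity(height, width, c_in, kernel_size, c_out):
    """Assumed form: CCom = (H * W * C_in) * (K * K * C_out)."""
    n_in = height * width * c_in
    n_out = kernel_size * kernel_size * c_out
    return n_in, n_out, n_in * n_out

# Hypothetical dense-block layer: 7x7x512 input, 3x3 kernel, 32 output channels.
n_in, n_out, ccom = conv_complexity(7, 7, 512, 3, 32)
```

Under these assumptions a single layer of this size costs roughly 7.2 million multiply-accumulate operations, and the cost grows linearly with each of the five factors.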

Dataset bias should be considered when enhancing the robustness and generalization of the proposed model. An imbalanced dataset or sampling bias may lead to overfitting. However, the ANL in the dense layered block mitigates the effect of sampling bias and imbalanced data, which supports robustness during training. The impact of the adversarial defence on model generalization was assessed by analysing the performance of the proposed model for various values of the perturbation magnitude \(\epsilon\) that controls the scale of the noise, as shown in Table 3. Because the proposed SRB-VGG19 Dense ANL model introduces noise, it could prevent the model from becoming highly sensitive to small data fluctuations, thus improving its ability to generalize to new, unseen inputs. In addition, because the ANL masks gradients during backpropagation, it could make it harder for attackers to manipulate the input data during training; the trade-off is that the model may learn less directly from the data, which could reduce generalization.

The input flower images were converted to RBM Component Vectorized (RBMCV) flower images, which form the input to the SRB-VGG19 Dense ANL model. A four-layered dense block was added as the last layer of the existing VGG19 model to design the proposed SRB-VGG19 Dense ANL model. During fine-tuning, the weights of the dense layers are adjusted to handle the embeddings that have been modified by the Adversarial Noise Layer. Cross-entropy was used as the loss function for flower species classification, where the goal is to minimize the difference between the predicted class probabilities and the true labels while remaining robust to adversarial perturbations. Finally, the fine-tuned model was tested at various noise scales to assess its performance.
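For reference, the cross-entropy loss for a five-class prediction can be computed as in the short sketch below. The probability vectors are made-up values for illustration, and the function name is hypothetical.

```python
import numpy as np

def cross_entropy(probs, label_index, eps=1e-12):
    """Negative log-probability assigned to the true class."""
    return float(-np.log(probs[label_index] + eps))

# Illustrative softmax outputs over (daisy, tulip, rose, sunflower, dandelion).
confident = np.array([0.70, 0.10, 0.10, 0.05, 0.05])
uncertain = np.array([0.20, 0.20, 0.20, 0.20, 0.20])

loss_confident = cross_entropy(confident, label_index=0)
loss_uncertain = cross_entropy(uncertain, label_index=0)
```

The loss shrinks towards zero as the probability assigned to the true class approaches one, so minimizing it pushes the predicted distribution towards the true label.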

Conclusion and future enhancements

This research attempts to categorize flower species by analysing the essential features of the flower image and its edges. Its primary goal is to provide effective data pre-processing through unsupervised learning by applying the Sobel filter and an RBM neural network component to the input image. The major contributions of this research fall into four parts. The first contribution focuses on preparing the dataset to enhance the extraction of essential flower features through unsupervised learning, using the Sobel filter to form the Sobel-filtered vectorized flower image. Because the final outcome is unknown, a spatial intensity image must be prepared in order to extract only the most significant information from the input image. The Sobel-filtered flower image was therefore processed with an RBM neural network that learns the probability distribution of the image pixels, thereby extracting the most essential features to form the RBMCV flower images. The second contribution involves optimizing the dense block by creating a four-layered dense block for the SRB-VGG19 Dense model. The third contribution is the fine-tuning of VGG19 and DenseNet to design the proposed SRB-VGG19 and its sub-models. The final contribution is the security countermeasure against the FGSM data poisoning attack, realized by designing the SRB-VGG19 Dense ANL model.

As an overview of the novelty, the first proposed model, SRB-VGG19 FCL, takes the RBMCV flower feature maps and processes them with two FCL layers together with an additional third FCL layer to generate an optimized RBMCV feature map. The third, updated dense layer block consists of one batch normalization, one ReLU and one dropout layer. The second model, SRB-VGG19 Dense, takes the RBMCV flower feature maps and processes them with two dense blocks to generate an optimized RBMCV feature map. The addition of the four-layered dense block overcomes the vanishing gradient problem. Each dense block is composed of four convolutional blocks connected in series. A single convolutional block in the dense block consists of batch normalization, ReLU and convolution, and allows cross-layer connections between non-adjacent convolutions, so that the input to each convolutional block contains the feature maps from all previous convolutional blocks. Both the SRB-VGG19 FCL and Dense models end with a softmax layer of five neurons to classify the five flower species. The third model, SRB-VGG19 Dense ANL, counters FGSM adversarial data poisoning attacks. Its ANL was created as a hidden layer between the convolutional layers of the four-layered dense block. The proposed SRB-VGG19 model faced challenges in data pre-processing while forming the RBMCV flower images and in choosing the optimal CNN to improve the model accuracy. It also faced challenges in creating the ANL as two cycles so that the correct flower class is predicted even when the model receives adversarial FGSM noise data samples. The Flower Recognition dataset, containing 4250 flower samples with 850 samples each of daisy, sunflower, rose, tulip and dandelion, was used for implementation with 3400 training samples and 850 testing samples. The training data were converted to grayscale flower images, then to Sobel-filtered images and finally to RBMCV flower images.

The RBMCV flower images were fitted to existing CNN models to analyse their performance and select the optimum CNN. According to the experimental results, VGG19 and DenseNet classify the flower species with an accuracy above 80%, so VGG19 and DenseNet were integrated and fine-tuned to design the proposed SRB-VGG19 model. Compared with current deep learning models, the implementation results show that the proposed SRB-VGG19 Dense model classifies the flower species with a high accuracy of 98.65%. To test the security countermeasure against the FGSM data poisoning attack, adversarial FGSM data samples were created and combined with the original images for performance evaluation. Because the SRB-VGG19 Dense ANL model was designed with the ANL as an intermediate layer in the dense block, the performance degradation was negligible, which is acceptable. When the FGSM samples were varied in magnitude and gradient, the accuracy of flower species classification varied from 98.65% to 98.12%, 98.43%, 98.24%, 98.33%, 98.02% and 98.45%.

The high-dimensional feature maps created by the convolutional layers of VGG19 serve as embeddings of the input image: the convolutional layers act as the embedding layer, converting the input image into a high-dimensional feature space in which each convolution produces feature maps that capture different aspects of the image. These embeddings offer a condensed representation of the image for analysing and categorizing the flower species. As the model trains with adversarial noise, the convolutional layers learn to highlight more significant features that are less vulnerable to adversarial manipulation, making the learned embeddings more resilient to perturbations in the input data. The Adversarial Noise Layer plays a key role in modifying the learned embeddings. By introducing adversarial noise into the input, the model is compelled to learn more resilient embeddings that capture invariant features in spite of the noise. Because these representations are resistant to the small, undetectable changes in the input image caused by adversarial attacks, the embeddings learnt from adversarially augmented data help the model to generalize. The proposed approach uses the embeddings to generate the final predictions, so accurate classification depends on their robustness. The model can classify the flower species accurately even in the presence of adversarial noise because the embeddings have been made more resistant to adversarial attacks. The proposed SRB-VGG19 Dense ANL model performs well, but adjusting the ANL for high gradients, error functions and optimizers remains a challenge that may be addressed in future work. As an extension of this study, the system may be validated for performance under other types of data poisoning attacks and with different noise data samples.

Data availability

The data used to support the findings of this study are available at https://www.kaggle.com/datasets/alxmamaev/flowers-recognition.

Author information

Contributions

M.S.: conceptualisation, investigation, data curation, formal analysis and writing of the original draft. S.B.: software and resources. B.S.: project administration, supervision, and writing, review and editing. M.S.: final review.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

M, S.D., Balasubaramanian, S., S, B. et al. Restricted Boltzmann machine with Sobel filter dense adversarial noise secured layer framework for flower species recognition. Sci Rep 15, 12315 (2025). https://doi.org/10.1038/s41598-025-95564-z

DOI: https://doi.org/10.1038/s41598-025-95564-z