Abstract

Climate models exhibit errors in their simulation of historical trends of variables including sea surface temperature, winds, and precipitation, with important implications for regional and global climate projections. Here, we show that the same trend errors are also present in a suite of initialised seasonal re-forecasts for the years 1993–2016. These re-forecasts are produced by operational models that are similar to Coupled Model Intercomparison Project (CMIP)-class models and share their historical external forcings (e.g. CO2/aerosols). The trend errors, which are often well-developed at very short lead times, represent a roughly linear change in the model mean biases over the 1993–2016 re-forecast record. The similarity of trend errors in both the re-forecasts and historical simulations suggests that climate model trend errors likewise result from evolving mean biases, responding to changing external radiative forcings, instead of being an erroneous long-term response to external forcing. Therefore, these trend errors may be investigated by examining their short-lead development in initialised seasonal forecasts/re-forecasts, which we suggest should also be made by all CMIP models.


Introduction

Simulated global and regional trends in historical climate model simulations often show differences from those found in observations for many variables, including sea surface temperature, sea level pressure, wind, and precipitation [e.g. refs. 1,2,3,4,5,6,7,8,9,10]. Arguably the most prominent and extensively studied of these differences is found in the tropical Pacific Ocean. An overall strengthening of the east-to-west SST gradient has been observed due to statistically significant warming of the west Pacific and no significant trends in the central and eastern Pacific11,12,13,14,15, which has coincided with a strengthening of the Walker circulation16,17. By contrast, the majority of historical climate model simulations indicate the opposite, with a weakening of the east-to-west SST gradient across the tropical Pacific4,5, which models also project to continue18,19,20,21,22. Understanding the reasons for this disparity is increasingly important, as tropical SST trends impact climate sensitivity23, projected ENSO teleconnections24,25,26,27, and atmospheric trends worldwide28,29. Moreover, SST trend discrepancies have been noted in other regions including the Atlantic, Indian, and Southern Oceans1,7. Extratropical trend discrepancies have also been found in historical climate simulations, including in storm track-related zonal winds and precipitation2,3,8,10, regional drought6, and surface temperature [e.g. central Asia/southern Europe7].

Many possible reasons have been proposed to explain differences between observed and uninitialised climate model trends. Perhaps the most straightforward argument is to note that an observed trend may fall within the range of plausible model outcomes once internal variability is considered30,31. However, as the trend discrepancies have continued to persist for decades, this possibility appears to be increasingly unlikely. For example, the tropical Pacific trend error has persisted for so long32 that more recent studies have concluded that incorrect model internal variability is most likely not its dominant cause1,5. Other studies have argued that some trend errors may be transient [e.g.33], though this does not reconcile the discrepancy in the historical simulations. Errors from other regions [e.g. the Southern Ocean,34,35,36], missing feedbacks37, and misrepresentation of atmosphere-ocean processes have also been suggested as possible causes14,38.

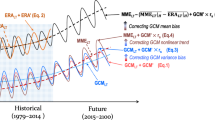

In this study, we will diagnose climate model trend errors by comparing them to trend errors in seasonal re-forecasts, which we find develop at surprisingly short leads. When analysing uninitialised climate model simulations with changing external forcings (i.e., radiative forcings including CO2 and aerosols), it can be difficult to ascertain what fraction of a trend is forced versus what fraction is a residual of internal variability. One approach often used to identify the forced component and its errors is to average across a large ensemble of uninitialised climate realisations39,40. In this study, we instead identify trend errors using initialised seasonal re-forecasts (sometimes termed hindcasts or retrospective forecasts), which use physical models similar to those used in uninitialised climate model simulations, are run over a historical period, and use the same external forcings (see Methods Section Re-forecasts and references therein). Re-forecasts are also initialised with realistic atmospheric and oceanic states, meaning that any trend errors are relative to the initial conditions. In contrast, when analysing a free-running model, the climate model realisations do not have to match observations over the same period of time. Therefore, analysing trend errors in seasonal re-forecasts may help us diagnose the initial trend error development as these trend errors are relative to the observed realisation, thus minimising the uncertainty due to internal variability.

The mean bias of a model represents the systematic error of the model relative to the observed state. For a seasonal prediction model, this is usually calculated by averaging the errors relative to observations across all re-forecast years, for a given lead time and verification period. Note that for seasonal prediction models, mean biases do not represent loss of predictability, since they are not random errors but rather represent systematic model errors that occur across all forecasts. Since initialised re-forecasts and uninitialised model simulations use similar physical models, many model errors are also common to both, including mean biases in the tropical Pacific cold tongue and double Inter-Tropical Convergence Zone (ITCZ)41, the position and intensity of the Gulf Stream42,43, and the extratropical storm tracks44. Notably, some mean biases often develop rapidly as a function of forecast lead in seasonal prediction models [e.g.45,46], perhaps as a consequence of top-of-atmosphere radiative imbalances47. These rapidly-developing mean biases in the atmosphere are distinct from the more slowly-evolving decadal oceanic drift that is common among longer climate simulations [e.g.48].
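As a minimal sketch of the calculation described above, the mean bias for one lead time and verification period can be written as the forecast-minus-observation error averaged over all re-forecast years. The function name, array shapes and synthetic values below are illustrative assumptions, not the data or code used in this study.

```python
import numpy as np

def mean_bias(reforecast, observed):
    """Mean bias for one lead time and verification period.

    reforecast: array (n_years, n_members) of ensemble re-forecasts of an index
    observed:   array (n_years,) of verifying observations of the same index
    """
    ensemble_mean = reforecast.mean(axis=1)  # average over ensemble members
    errors = ensemble_mean - observed        # one error per re-forecast year
    return errors.mean()                     # systematic (mean) error

# Synthetic illustration: 24 years, 10 members, model about 0.5 K too cold.
rng = np.random.default_rng(0)
obs = 300.0 + rng.normal(0.0, 0.3, size=24)
fcst = obs[:, None] - 0.5 + rng.normal(0.0, 0.2, size=(24, 10))
bias = mean_bias(fcst, obs)  # close to -0.5 K
```

Because the error is averaged over every re-forecast year, random forecast errors largely cancel and only the systematic component remains.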

Here we will demonstrate that, even for re-forecast records of a few decades, many mean biases evident in initialised re-forecasts are not fixed but rather change as the external forcings are changed. This leads to global trend errors in seasonal re-forecasts that also develop rapidly, often within months following initialisation. We will then show that these trend errors are very similar to those found in historical, uninitialised climate simulations. That is, we suggest that model mean biases change as the imposed external forcings change, leading to similar trend errors in both re-forecasts and historical climate simulations.

Results

Changing re-forecast model mean biases

First, we show how seasonal prediction model mean biases are not stationary, but rather change roughly monotonically across their common re-forecast record (1993–2016; see Methods for a list of models). This is demonstrated by taking the difference in mean bias between the early (1993–2004) and late (2005–2016) re-forecast years, as shown here for multi-model mean two-season lead re-forecasts verifying in JJA for SST (Fig. 1a) and precipitation (Fig. 1b). This lead is chosen since the model mean biases have largely saturated by this time, but similar results are also seen for shorter leads, as is discussed further in Section Rapid development of re-forecast trend errors. There are statistically significant differences in many areas for both SST and precipitation, which is also the case for other seasons (Supplementary Fig. 1).
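The early-versus-late comparison can be sketched as follows, assuming one model value and one observed value per year for a fixed lead and verification season. The function name and the synthetic numbers are hypothetical, and the significance test used in the study is not reproduced here.

```python
import numpy as np

def mean_bias_difference(model, observed, years, split_year=2005):
    """Late-period minus early-period mean bias for one index.

    model, observed, years: 1-D arrays of equal length (one value per year).
    Years >= split_year form the late period (2005-2016 for a 1993-2016 record).
    """
    errors = np.asarray(model, dtype=float) - np.asarray(observed, dtype=float)
    late = np.asarray(years) >= split_year
    return errors[late].mean() - errors[~late].mean()

# Synthetic illustration: a cold bias that shrinks by 0.02 K per year.
years = np.arange(1993, 2017)
obs = np.zeros(years.size)
model = -1.0 + 0.02 * (years - 1993)
diff = mean_bias_difference(model, obs, years)  # 0.02 K/yr x 12 yr = 0.24 K
```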

Fig. 1. Difference in multi-model mean (MMM) mean bias between the first half (1993–2004) and second half (2005–2016) of the common re-forecast record for March initialisation, JJA verification (two-season lead) for (a) SST and (b) precipitation. (c, d) SST and (e, f) precipitation raw time series for observations (black) and the multi-model mean (red) for the common years (1993–2016) for different indices (shown as boxes on a and b). All model time series are for two-season leads, verifying in JJA. Units are Kelvin per decade for (a) and mm per day per decade for (b). Hatching indicates a mean bias difference that is statistically significant at the 5% level.

Another way of visualising the changing mean biases shown in Fig. 1a and b is shown in the observed and multi-model mean (MMM) time series for four locations (Fig. 1c–f). These time series suggest that the mean bias changes occur fairly continuously with time and in fact represent differences in observed and modelled trends across the re-forecast record. For some indices, such as Equatorial Pacific SST (Fig. 1c) and Southeast Asia precipitation (Fig. 1f), such differences may represent a reduction of the mean bias by the end of the re-forecast record; for example, in the case of the Equatorial Pacific, an apparent reduction of the cold tongue mean bias. For others, the mean bias may change sign (e.g. Southern Ocean SST, Fig. 1d), or may grow over the re-forecast record (e.g. Equatorial West Africa precipitation, Fig. 1e). A similar picture of changing mean biases exists for both U200 and MSLP (not shown). For the remainder of this paper, we will investigate these changing model mean biases by examining trend errors, defined as the differences in the slopes of the observed and modelled time series best fit lines (e.g. Fig. 1c–f; see Methods Section Linear trends and significance tests), in both re-forecasts and CMIP6 historical simulations for the 1993–2016 period.
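The trend error definition above (the difference between the slopes of the modelled and observed best-fit lines) can be sketched as below. This is an illustrative least-squares version with hypothetical function names and synthetic data; the significance tests referred to in the Methods are not included.

```python
import numpy as np

def linear_trend(years, values):
    """Least-squares slope of values against years (units per year)."""
    x = np.asarray(years, dtype=float)
    x = x - x.mean()  # centre the time axis for a well-conditioned fit
    slope, _intercept = np.polyfit(x, np.asarray(values, dtype=float), deg=1)
    return slope

def trend_error(years, model, observed):
    """Model trend minus observed trend.

    Positive values mean the model trend is more positive (or less negative)
    than the observed trend.
    """
    return linear_trend(years, model) - linear_trend(years, observed)

# Synthetic illustration: the model warms 0.02 K per year faster than observed.
years = np.arange(1993, 2017)
obs = 0.01 * (years - 1993)
model = 0.03 * (years - 1993) - 0.4
err = trend_error(years, model, obs)  # 0.02 K per year
```

Note that a constant offset between model and observations (here -0.4 K) affects the mean bias but not the trend error.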

Rapid development of re-forecast trend errors

As discussed in the introduction, many seasonal prediction model mean biases are known to develop rapidly, within the first few weeks following initialisation [e.g. refs. 45,46]. With this in mind, we next demonstrate how rapidly the re-forecast trend errors develop, by comparing the dependence of monthly trend errors upon the seasonal cycle and prediction lead time. Multi-model mean re-forecast trend errors for a selection of different SST and precipitation indices are shown in Fig. 2 for four different initialisation months. For all variables, the trend errors strongly depend upon the seasonal cycle and are mostly independent of the initialisation month and consequently the lead time, with significant trend errors often developing during the first month following initialisation. For example, no matter the initialisation month, the Southern Ocean SST trend error (Fig. 2d) appears to reach a maximum in July and a minimum in January, with roughly monotonic evolution between these extrema. Similarly, for Atlantic Niño (Fig. 2b), both the March and June initialisations show the same spike in August, despite one having been initialised three months earlier. While we have not investigated the development of trend errors for leads shorter than a month, we note that ref. 49 also found SST trend errors in Week 3 re-forecasts made by the European Centre for Medium-Range Weather Forecasts Integrated Forecast System (their Fig. 5) in many regions, including the central tropical Pacific, Atlantic Niño, and Gulf Stream, further demonstrating how rapidly these trend errors begin to develop. Still, for most of the SST trend errors in Fig. 2 there remains some dependence on lead time.

Fig. 2. Area-averaged monthly (a–d) SST and (e–h) precipitation trend errors for a selection of different regions and indices. Four different initialisation months are shown by the different colours, with the x-axis indicating the verification month. Trend errors shown are as a percentage of the observed (ERA5/GPCP) standard deviation for a given verification month. SST and precipitation regions are shown as boxes on Figs. 3 and 7. Statistical significance is indicated by darker symbols with a black outline.

The seasonality of the trend errors is even more striking for precipitation (Fig. 2e–h), where the errors are also almost independent of lead time. In some cases, such as Southeast Asia precipitation for May–January verifications, the trend error has such similar magnitude for different initialisation months that the lines sit almost on top of each other. This also indicates that the errors are well developed at short lead times, in some cases within one month following initialisation. For example, in the Western US, the December verification trend error has almost identical magnitude for both the December initialisation (re-forecast Month 1) and the September initialisation (re-forecast Month 4). Similar levels of seasonal dependence of trend errors are also seen for 200 hPa zonal wind indices (Supplementary Fig. 2). This decoupling of the re-forecast trend errors from the lead time indicates that they are not simply due to a loss of predictability, but that they are systematic errors suggestive of each forecast model going into its own state space. The stronger dependence of both precipitation and U200 trend errors on the seasonal cycle compared to SST suggests that errors may be developing in the atmosphere first and reach something closer to saturation there, whereas the SST errors take longer to develop and so have slightly more dependence on lead time. Errors relating to ENSO in re-forecasts have likewise been suggested to have an atmospheric origin, showing similar levels of seasonal dependence, which is consistent with each model rapidly transitioning to its own attractor50.
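To make the relationship between initialisation month, lead and verification month concrete, the sketch below maps a re-forecast month onto the calendar month it verifies, and expresses a trend error as a percentage of the observed standard deviation. The function names are hypothetical, and the choice of the sample standard deviation of the raw (not detrended) observed series is an assumption.

```python
import numpy as np

def verification_month(init_month, forecast_month):
    """Calendar month (1-12) verified by a given re-forecast month.

    forecast_month = 1 is the initialisation month itself (re-forecast Month 1).
    """
    return (init_month - 1 + forecast_month - 1) % 12 + 1

def trend_error_percent(years, model, observed):
    """Trend error per year as a percentage of the observed standard deviation."""
    x = np.asarray(years, dtype=float)
    x = x - x.mean()
    model_slope = np.polyfit(x, model, 1)[0]
    obs_slope = np.polyfit(x, observed, 1)[0]
    # Assumption: sample standard deviation (ddof=1) of the raw observed series.
    return 100.0 * (model_slope - obs_slope) / np.std(observed, ddof=1)

# December is verified by Month 1 of a December start and Month 4 of a September start.
dec_from_dec = verification_month(12, 1)
dec_from_sep = verification_month(9, 4)

# Synthetic illustration: observed series with no imposed trend, model drifting by 0.05 per year.
years = np.arange(1993, 2017)
obs_dec = np.tile([1.0, -1.0], 12)
model_dec = obs_dec + 0.05 * (years - 1993)
pct = trend_error_percent(years, model_dec, obs_dec)
```

Plotting such percentages against verification month, with one line per initialisation month, gives the kind of comparison described in the text: lines that overlap indicate trend errors that depend on the season rather than on lead time.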

Trend errors in re-forecasts compared to CMIP6 simulations

For the remainder of the paper we will focus on seasonally-averaged trend errors at two-season leads (see Methods). Significant SST trend errors are found year-round and across the globe, including the tropical Pacific, the Indian Ocean, the Southern Ocean, and the Atlantic (Fig. 3). In the tropical Pacific, DJF, JJA, and SON trend errors resemble an El Niño-like pattern, similar to the trend error pattern earlier found in the North American Multi-model Ensemble (NMME) re-forecasts for the years 1982–2020 [refs. 51,52]. In the Northwest Atlantic, the pattern is similar to the mean SST bias resulting from errors in the Gulf Stream separation from the US eastern seaboard [refs. 42,43]. These patterns of trend errors are largely consistent across the different seasonal re-forecasts. Global pattern correlations for individual model trend errors against the multi-model mean range between 0.55 and 0.85 (Supplementary Fig. 3), thus indicating a systematic misrepresentation of observed SST trends in seasonal re-forecasts. The pattern of trend errors in Fig. 3c is also very similar to the difference in the mean bias between the two halves of the re-forecast record shown in Fig. 1a (with the same also true for other seasons, Supplementary Fig. 1), with a global pattern correlation of 0.91.
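A global pattern correlation between two trend error maps could be computed along the following lines. This is a sketch only: the centred form and the cosine-of-latitude area weighting are assumptions rather than details taken from this paper, and the function name and synthetic maps are hypothetical.

```python
import numpy as np

def pattern_correlation(field_a, field_b, lat):
    """Area-weighted, centred pattern correlation of two (n_lat, n_lon) maps.

    lat: latitudes in degrees, shape (n_lat,).
    Grid points that are NaN in either map (e.g. land for SST) are ignored.
    """
    weights = np.cos(np.deg2rad(lat))[:, None] * np.ones_like(field_a)
    valid = np.isfinite(field_a) & np.isfinite(field_b)
    w, a, b = weights[valid], field_a[valid], field_b[valid]
    a_anom = a - np.average(a, weights=w)  # remove area-weighted global mean
    b_anom = b - np.average(b, weights=w)
    cov = np.sum(w * a_anom * b_anom)
    return cov / np.sqrt(np.sum(w * a_anom**2) * np.sum(w * b_anom**2))

# Synthetic illustration on a 5-degree grid with a few masked points.
lat = np.linspace(-87.5, 87.5, 36)
rng = np.random.default_rng(1)
map_a = rng.normal(size=(36, 72))
map_a[0, :5] = np.nan
map_b = 2.0 * map_a + 3.0  # same pattern, different amplitude and offset
r_same = pattern_correlation(map_a, map_b, lat)
r_opposite = pattern_correlation(map_a, -map_a, lat)
```

Because the correlation is insensitive to amplitude and offset, it measures agreement in the spatial pattern of the trend errors only, not in their magnitude.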

Fig. 3. Multi-model mean sea surface temperature trend error relative to ERA5 (1993–2016) for four different initialisations (September, December, March, June) averaged over two-season leads for verification in (a) DJF, (b) MAM, (c) JJA and (d) SON. Positive values indicate that the model trend is more positive (or less negative) than ERA5, and vice versa. Hatching indicates statistical significance at the 5% level.

The SST trend error pattern in the tropical Pacific bears a strong resemblance to the El Niño-like trend error exhibited by CMIP models over the historical period1. To quantify this similarity both in the tropical Pacific and globally, we define several indices based on the patterns of SST trend error in the re-forecast multi-model mean, which are shown as boxes on Fig. 3 for SST. In Fig. 4 we show trend errors for these indices standardised by the observed standard deviation. The trend error in individual seasonal re-forecasts is shown by the coloured scatter symbols, with the range of the CMIP6 ensemble illustrated by the box and whisker plots. We also include the trend error of two additional observationally-based datasets, relative to ERA5, as a measure of observational uncertainty. For comparison, we also reproduced this figure using the Months 1-3 re-forecast trend errors (Supplementary Fig. 4), and found generally similar results but with somewhat weaker amplitudes.
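A box index of the kind described above can be sketched as an area-weighted average over a latitude-longitude box. The box bounds used in the example are hypothetical placeholders, not the regions defined in this study, and the 0–360° longitude convention and cosine-latitude weighting are assumptions.

```python
import numpy as np

def box_index(field, lat, lon, lat_bounds, lon_bounds):
    """Area-weighted mean of a (n_lat, n_lon) field over a lat-lon box.

    lat, lon: 1-D coordinate arrays in degrees (lon assumed to run 0-360).
    NaN grid points (e.g. land for SST) are excluded from the average.
    """
    lat_sel = (lat >= lat_bounds[0]) & (lat <= lat_bounds[1])
    lon_sel = (lon >= lon_bounds[0]) & (lon <= lon_bounds[1])
    sub = field[np.ix_(lat_sel, lon_sel)]
    w = np.cos(np.deg2rad(lat[lat_sel]))[:, None] * np.ones_like(sub)
    w = np.where(np.isfinite(sub), w, 0.0)  # zero weight where data are missing
    return np.sum(w * np.nan_to_num(sub)) / np.sum(w)

# Synthetic illustration on a 1-degree grid; the box below is hypothetical.
lat = np.arange(-89.5, 90.0, 1.0)
lon = np.arange(0.5, 360.0, 1.0)
field = np.broadcast_to(lat[:, None], (lat.size, lon.size)).copy()
idx = box_index(field, lat, lon, lat_bounds=(-5.0, 5.0), lon_bounds=(190.0, 240.0))
```

Applying such a function to each year of the re-forecast and observed fields yields the index time series from which trend errors are then computed.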

Fig. 4. Seasonal trend error for (a) Equatorial Pacific, (b) Atlantic Niño, (c) Gulf Stream, (d) Southern Ocean, (e) Niño Upwelling and (f) North Indian Ocean SST (regions shown on Fig. 3). For each verification season, the first column shows the seasonal re-forecast trend error for each individual model and the re-forecast trend error for the multi-model mean, the second column shows the CMIP6 model trend error distribution and the third column shows two other observations-based datasets, relative to ERA5. As in Fig. 3, these are averaged for two-season leads for the re-forecasts. Units are trend error per year as a percentage of the ERA5 index standard deviation. Statistical significance is indicated by darker symbols with a black outline.

For all SST regions in Fig. 4, the CMIP6 model spread closely matches the range of the seasonal re-forecasts, with the two different model sets tending to follow the same evolution across different seasons. The agreement, at least in sign if not always magnitude, between the different seasonal re-forecasts is also apparent, particularly for those indices and seasons where the multi-model mean is significant. Note also that the global mean SST trend overall is too warm in the seasonal re-forecasts and even more so in the CMIP6 historical simulations (Supplementary Fig. 5).

There are also associated trend errors in mean sea level pressure (MSLP, Supplementary Fig. 6) and 2 m temperature (Supplementary Fig. 7). In the North Pacific for DJF, significant negative MSLP trend errors are indicative of an anomalous deepening and expansion of the Aleutian low (Supplementary Fig. 6a), with associated (though largely not significant in the multi-model mean) 2 m temperature trend errors over North America (Supplementary Fig. 7a). MSLP trend errors in the Arctic correspond to the negative phase of the Arctic Oscillation in MAM (Supplementary Fig. 6b) and the positive phase in JJA (Supplementary Fig. 6c), with similarly-matching 2 m temperature trend errors in the high latitudes that align with these phases53. MSLP trend errors near Europe in DJF and SON also resemble the negative phase of the North Atlantic Oscillation, though these errors are not significant. There is even greater similarity of the pattern of trend error across the different models for MSLP than for SST, with global pattern correlations of individual models against the multi-model mean with a range of roughly 0.70–0.98 (Supplementary Fig. 8).

Relatedly, models also exhibit significant trend errors in upper-level (200 hPa) zonal wind (U200, Fig. 5). Many of these are related to well-known biases in the position and/or strength of the jet streams, such as the North and South Pacific, North Atlantic, and tropical easterly jets. For example, in the North Atlantic in JJA, trend errors are indicative of a greater northward displacement relative to the observed jet with time, a trend error that has previously been found in SEAS5 [ref. 54]. Trend error patterns are similar across all 9 re-forecast models, with pattern correlations between 0.71 and 0.98 for individual models against the MMM (Supplementary Fig. 9).

Fig. 5. Multi-model mean 200 hPa zonal wind trend error relative to ERA5 (1993–2016) for four different initialisations (September, December, March, June) averaged over two-season leads for verification in (a) DJF, (b) MAM, (c) JJA and (d) SON. Positive values indicate that the model trend is more positive (or less negative) than ERA5, and vice versa. Hatching indicates statistical significance at the 5% level.

Trend errors for selected 200 hPa zonal wind indices (three westerly jets and one easterly jet; regions shown in Fig. 5) show close agreement between the re-forecasts and CMIP6 historical simulations (Fig. 6a). These errors are particularly large for the North Atlantic and Equatorial Africa jet regions, with significant trend errors in all but one of the re-forecasts across the two regions.

Fig. 6. (a) Seasonal trend error for 200 hPa zonal jet indices for the North Pacific jet (DJF), South Pacific jet (JJA), North Atlantic jet (JJA), and Equatorial Africa easterly jet (SON) (regions shown on Fig. 5). (b) and (c) show trend errors for two MSLP-based indices: (b) the Arctic Oscillation and (c) the North Pacific Index (see Methods). For each verification season, the first column shows the seasonal re-forecast trend error for each individual model and the re-forecast trend error for the multi-model mean, the second column shows the CMIP6 model trend error distribution and the third column shows two other observations-based datasets, relative to ERA5. These are averaged for two-season leads for the re-forecasts. Units are trend error per year as a percentage of the ERA5 index standard deviation. Statistical significance is indicated by darker symbols with a black outline.

Also included in Fig. 6 are two MSLP-based indices: the Arctic Oscillation (AO) and North Pacific Indices (see Methods). As with the SST and U200 indices, the CMIP6 ensemble closely matches the re-forecast spread. This is particularly noticeable for the AO Index, which switches from a negative trend error in MAM to a positive one in JJA in both the seasonal re-forecasts and historical simulations, in agreement with the MSLP trend error maps (Supplementary Fig. 6). The agreement between the seasonal re-forecast trend errors from individual models is also striking, with all points clustered together for all verification seasons.

Figure 7 shows that there are also significant precipitation trend errors in many regions worldwide, which are largely in agreement with the SST and circulation (MSLP/U200) trend errors shown previously. These include precipitation trend errors that often look like the canonical El Niño signal (e.g. Southeast US in SON) or align with the climatological wet season (e.g. Western US, Western/Eastern Equatorial Africa). Also of note is the north-south dipole extending from the far North Atlantic (~70° N) southwards to Western Europe, which has been related to CMIP6 trend errors in the North Atlantic jet [ref. 8], as seen in Fig. 5. These errors also have implications for seasonal prediction skill, which will be the topic of a future study. The trend error patterns across the different models are again quite similar to each other, with pattern correlations ranging from 0.75 to 0.95 against the multi-model mean (Supplementary Fig. 10). The pattern of precipitation trend errors in Fig. 7c also closely matches the mean bias difference between the first and second halves of the re-forecast record (Fig. 1b), with a global pattern correlation of 0.89.

Fig. 7. Multi-model mean precipitation trend error relative to GPCP (1993–2016) for four different initialisations (September, December, March, June) for two-season leads for verification in (a) DJF, (b) MAM, (c) JJA and (d) SON. Positive values indicate that the model trend is more positive (or less negative) than GPCP, and vice versa. Hatching indicates statistical significance at the 5% level.

The greater similarity of trend error patterns across the different models for precipitation, MSLP and U200 than for SST is suggestive of more rapid atmospheric error development (Supplementary Figs. 3, 8, 9 and 10). As both mean biases and trend errors develop very rapidly (at short leads) within the re-forecasts, the similarity between the trend errors in the initialised seasonal re-forecasts and the uninitialised CMIP6 historical simulations for SST, precipitation, MSLP and U200 is suggestive of all the models rapidly transitioning from their initialised states to their own climates.

Precipitation trend errors for selected indices (Fig. 8; see boxes on Fig. 7) show that both the seasonality of the errors and their similarity between the re-forecasts and CMIP6 historical simulations are even more striking for precipitation than for SST (cf. Fig. 4). For example, in Southeast Asia the switch between positive trend errors in JJA and negative trend errors in SON is highly consistent between the two different model ensembles, with the re-forecast multi-model mean almost exactly matching the median CMIP6 value. Similar results are also seen for Western US, Southeast US and Western Asia. The re-forecast multi-model mean values for individual seasons are significant for all but one of the African regions (Fig. 8e), with similar levels of consistency with the CMIP6 ensemble. We again computed these regional trend errors for Months 1-3 and found very little difference (not shown).

Fig. 8. Seasonal precipitation trend error for (a) Western US, (b) Southeast US, (c) Western Asia, (d) Southeast Asia and (e) Equatorial East Africa (MAM), Southern Africa (MAM), Equatorial West Africa (JJA), Northeast Africa (JJA) and Equatorial Central Africa (SON) (regions shown on Fig. 7). For each verification season, the first column shows the re-forecast trend error for each individual model and the re-forecast trend error for the multi-model mean, the second column shows the CMIP6 model trend error distribution and the third column shows another observations-based dataset, relative to GPCP. As in Fig. 7, these are averaged for two-season leads for the re-forecasts. Units are trend error per year as a percentage of the GPCP index standard deviation. Statistical significance is indicated by darker symbols with a black outline.

On a global scale, both re-forecasts and CMIP6 historical simulations have trends towards becoming increasingly too wet (in agreement with previous studies10), primarily during the extratropical cold seasons (Fig. 9), although observational uncertainty may also be an issue in the Southern Hemisphere. These trend errors are linked to the storm track trend errors shown previously (Fig. 5). For example, Southern Hemisphere precipitation trend errors are largest in SON, coincident with the largest 200 hPa zonal wind errors.

Fig. 9. Seasonal precipitation trend error for (a) the globe, (b) northern hemisphere extratropics (30°–90° N), (c) the tropics (30° S–30° N) and (d) southern hemisphere extratropics (30°–90° S). For each verification season, the first column shows the individual re-forecasts and the multi-model mean, the second column shows the CMIP6 model distribution and the third column shows another observations-based dataset, relative to GPCP. These are averaged over two-season leads for the re-forecasts. Units are trend error per year as a percentage of the GPCP index standard deviation. Statistical significance is indicated by darker symbols with a black outline.

Calculating mean bias differences between the first 12 and last 12 years of the common re-forecast record for each region yields similar results to the trend errors in Figs. 4 and 8, suggesting that the mean bias changes are largely linear for most regions and seasons (Supplementary Figs. 11 and 12). Note also that for models with a longer re-forecast record, including the three with re-forecasts of longer than 30 years (SEAS5, GEOS-S2S, and SPEAR), we obtained similar SST and precipitation trend errors when using all years available for each model instead of only the common years (Supplementary Figs. 13 and 14).
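The link between the two diagnostics can be made explicit with a short worked example, under the idealised assumption that the mean bias changes exactly linearly in time. The symbols $b_0$ (initial bias), $s$ (bias change per year, i.e. the trend error) and $N$ (number of years) are introduced here for illustration only.

```latex
\begin{aligned}
b(t) &= b_0 + s\,t, \qquad t = 0, 1, \dots, N-1, \\
\Delta b &= \frac{2}{N}\sum_{t=N/2}^{N-1} b(t) \;-\; \frac{2}{N}\sum_{t=0}^{N/2-1} b(t)
          = s\,\frac{N}{2}.
\end{aligned}
```

For the common 1993–2016 record, $N = 24$, so a purely linear bias change would give a late-minus-early mean bias difference of $\Delta b = 12\,s$. Regions where the half-record difference departs substantially from twelve times the annual trend error would therefore indicate a non-linear change in the mean bias.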

Discussion

While several studies have examined trends, trend errors, and the stationarity of mean biases in either operational initialised seasonal re-forecasts51,52,55,56 or in historical climate model simulations5,7,57, to our knowledge this is the first study that directly compares them, even though both use generally similar models and are driven by similar CMIP external forcings. We found that, over the 1993–2016 period, many common trend errors in historical climate model simulations are also evident in seasonal re-forecasts, with a striking similarity in the amplitude and seasonal dependence of SST, precipitation, U200 and MSLP-based trend errors for many different regions (Figs. 4, 6 and 8). This similarity is particularly noticeable for trend errors of precipitation, U200 and MSLP-based indices, which develop rapidly and are often fully developed within leads as short as one month in the seasonal re-forecasts, suggesting that the errors may have an atmospheric origin.

Given how rapidly they develop, the re-forecast trend errors likely reflect the sensitivity of the model mean biases to the changing external forcing (e.g. CO2, aerosols). That is, the pattern and magnitude of trend errors for a given model, variable and lead time are very similar to the difference in mean bias between the first and second halves of the re-forecast record (cf. Fig. 1a, b and Figs. 3c and 7c). These mean biases are also known to develop rapidly (within weeks following forecast initialisation) and we find that these re-forecast trend errors develop on similar timescales. Moreover, since seasonal re-forecasts are initialised from observations, their trend errors are relative to a realisation drawn from nature and are unlikely to represent the uncertainty due to internal variability. We therefore conclude that trend errors in the historical climate simulations, because they are so similar to the re-forecast trend errors, also likely represent the relatively rapid adjustment of the local model mean bias to changing external forcing.

Our study cannot rule out the possibility that the imposed external forcings themselves contain errors, which could drive similar trend errors in both re-forecasts and historical simulations. This seems unlikely to be the dominant cause, as model mean biases are known to exist independent of these radiative forcings, but it is entirely possible that it is a contributory factor. This could be explored further using single forcing runs, which is beyond the scope of the current study. Initialisation shock is also unlikely to have a role here, as the seasonal prediction models analysed include both first-of-the-month initialisations and lagged ensembles, as well as different initialisation datasets. These would all have to drive the same shock across much of the globe to result in the trend error patterns that we find are so similar across different models. Initialisation shock also cannot explain the trend errors in the uninitialised models. All of the above points to a cause that is common across different models, and is consistent with models transitioning to their own attractor, which also raises the possibility that there may be spurious trends in model variability as well. Regardless of the cause, our results suggest that reducing or eliminating forecast model mean biases could also help alleviate historical model trend errors.

Model mean biases can have various causes, including poorly represented atmosphere-ocean interactions, ocean processes and cloud radiative feedbacks58,59. Previous studies have argued that the rapid mean error development in forecasts may be used to help identify and correct deficiencies with model parameterisations that are used in both forecasts and climate model simulations60,61,62 or to help correct the mean biases themselves63. Our results likewise suggest that diagnosis of climate model trend errors should be made by examining the early development of the mean bias in seasonal re-forecasts. We therefore suggest that all climate models that are used to produce CMIP7 climate projections should also be run as seasonal prediction models, with monthly start dates to capture the seasonal evolution of the errors. Since one uncertainty in our study is the relatively short common re-forecast record, it would also be beneficial for such re-forecasts to cover a longer period, to enable a more robust detection of trends and trend errors. This would also allow for a direct comparison between trend errors from individual seasonal prediction and climate models, which was not possible here due to the models available.

Given the importance of future trends in variables such as SST and precipitation to regional and global climate projections, diagnosing the causes of model trend errors and working to alleviate them is essential. Since many of the historical trend errors we have identified appear to have evolved roughly linearly over the re-forecast period, it is possible that they will continue into the future and thereby impact projected trends as well. Until we understand more about the potential sensitivity of model mean biases to external forcing and uncertainty in forcing fields, and are able to reduce these model mean biases so that their errors will not confuse the trends we are trying to discern64, we have to examine any model trends in both historical and projected simulations with great care.

Methods

Observations-based datasets

We use three observational estimates for sea surface temperature (SST), 200 hPa zonal wind (U200) and mean sea level pressure (MSLP), and two for precipitation. For SST, U200 and MSLP, we use the European Centre for Medium-Range Weather Forecasts (ECMWF) 5th generation reanalysis [ERA5,65] as our primary reference dataset. To give an indication of observational uncertainty, we also include results based on the ECMWF Interim Reanalysis [ERA-Interim,66] for SST, U200 and MSLP, the Hadley Centre Sea Ice and SST (HadISST) dataset for SST, and the Japanese 55-year Reanalysis [JRA55,67] for U200 and MSLP. For 2 m temperature (only used in the Supplementary Information), we use ERA5. Our primary precipitation reference dataset is the Global Precipitation Climatology Project (GPCP) analysis68. We also compare against the Climate Prediction Centre Merged Analysis of Precipitation (CMAP).

Re-forecasts

We use multi-decade re-forecasts from 11 different operational seasonal prediction models: the European Centre for Medium-Range Weather Forecasts (ECMWF) SEAS5 (ref. 69), Deutscher Wetterdienst (DWD) GCFS2.1 (ref. 70), Environment and Climate Change Canada (ECCC) CanCM4i (ref. 71), ECCC GEM5-NEMO (ref. 71), Euro-Mediterranean Centre on Climate Change (CMCC) SPS3.5 (ref. 72), National Aeronautics and Space Administration (NASA) GEOS-S2S (ref. 73), Geophysical Fluid Dynamics Laboratory (GFDL) SPEAR (ref. 74), United Kingdom Met Office GloSea6-GC3.2 (ref. 75), Meteo-France System 8 (ref. 76), National Centres for Environmental Prediction (NCEP) CFSv2 (ref. 77) and Japan Meteorological Agency (JMA) CPS3 (ref. 78). Unless otherwise stated, analysis is performed for the common period across all 11 models (1993–2016).

All of the seasonal prediction models listed above are initialised every year of their re-forecast period (Table S1) and each initialisation is run out to approximately six months. In this study we only use four different monthly initialisations for each model (March, June, September and December), and we primarily analyse seasonal averages over the fourth, fifth and sixth months of the re-forecasts, which we term “two-season lead”. For example, for a March initialisation, verification would be the June-July-August average. We use SST and precipitation data from all models. MSLP, U200 and 2 m temperature data were not available for GEOS-S2S or SPEAR, so analysis of these variables excludes these models. Further model details can be found in Supplementary Table S1.
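The "two-season lead" convention described above can be illustrated with a short Python sketch. It assumes, consistent with the March example in the text, that the initialisation month counts as forecast month 1; the function name `verification_season` and its parameters are hypothetical and are not taken from the study's code.

```python
MONTH_INITIALS = "JFMAMJJASOND"  # January ... December

def verification_season(init_month: int, first_lead: int = 4, length: int = 3) -> str:
    """Return the initials of the calendar months verified for a given start month.

    init_month is 1-12 and is treated as forecast month 1 (an assumption that
    matches the March -> JJA example). first_lead=4 and length=3 select
    forecast months four to six, i.e. the "two-season lead" average.
    """
    if not 1 <= init_month <= 12:
        raise ValueError("init_month must be in 1..12")
    # Zero-based calendar index of the first verified month
    start = (init_month - 1) + (first_lead - 1)
    return "".join(MONTH_INITIALS[(start + k) % 12] for k in range(length))

# The four initialisation months used in this study
for month in (3, 6, 9, 12):
    print(month, verification_season(month))
```

Under this convention the March, June, September and December initialisations verify against JJA, SON, DJF and MAM, respectively.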

Climate models

We also use model simulations from the Coupled Model Intercomparison Project Phase 6 [CMIP6,79] from 38 different models (listed in Supplementary Table S2). We use the historical simulations for the years 1993–2014, and the Shared Socioeconomic Pathway (SSP)-245, a scenario with medium radiative forcing by the end of the century, for years 2015–2016, to align with the re-forecast record. We use surface temperature, precipitation, MSLP and U200 data from these models.

All data are regridded onto a common 1-degree grid prior to analysis.

Linear trends and significance tests

To calculate what we term “trend error” for each climate model or seasonal prediction model, we take data for the relevant verification period from each climate model simulation or yearly re-forecast initialisation and calculate the error relative to observations. The trend error is then the slope of the least-squares linear fit to this error time series over the relevant period, typically 1993–2016. For example, to determine the seasonal prediction model DJF SST trend error shown in Fig. 4a, we calculate the area-averaged Equatorial Pacific DJF error for each yearly September initialisation from 1993–2016 to create a time series of the error, and then calculate the trend of that error. Note that, because the least-squares slope is linear in the data, this is the same as calculating the trends of the observations and models separately and taking the difference of the slopes. In the case of Fig. 4, the trend error is then normalised by the observed standard deviation of the relevant index. Significance is computed using the Hamed and Rao modification to the Mann-Kendall trend test to account for serial autocorrelation80, and is shown at the 5% level.
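As a minimal sketch of the trend error calculation, the following Python example fits a least-squares line to a model-minus-observation error series and checks that its slope equals the difference of the separately fitted slopes. The function names and the synthetic series are illustrative assumptions, not the study's code or data. The normalisation uses the sample standard deviation of the raw observed series, which is also an assumption, since the text does not specify details such as detrending.

```python
import numpy as np

def linear_slope(years, values):
    """Least-squares slope of values against years (units per year)."""
    x = np.asarray(years, dtype=float)
    x = x - x.mean()  # centring improves conditioning and leaves the slope unchanged
    return np.polyfit(x, np.asarray(values, dtype=float), 1)[0]

def trend_error(years, model, obs, normalise=False):
    """Slope of the (model - obs) error series.

    If normalise is True, divide by the sample standard deviation of obs
    (assumed form of the normalisation; see the lead-in).
    """
    obs = np.asarray(obs, dtype=float)
    slope = linear_slope(years, np.asarray(model, dtype=float) - obs)
    if normalise:
        slope = slope / np.std(obs, ddof=1)
    return slope

# Synthetic illustration only (not data from the study)
years = np.arange(1993, 2017)
rng = np.random.default_rng(0)
obs = 0.01 * (years - 1993) + rng.normal(0.0, 0.2, years.size)
model = 0.03 * (years - 1993) + rng.normal(0.0, 0.2, years.size)

te = trend_error(years, model, obs)
diff_of_slopes = linear_slope(years, model) - linear_slope(years, obs)
print(np.isclose(te, diff_of_slopes))
```

The two quantities agree to numerical precision because the least-squares slope is a linear function of the input series.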

Significance for mean bias differences is calculated by comparing to a distribution of 1000 randomly sampled mean bias differences, sampled with replacement from the original time series. The actual mean bias difference is labelled as significant if it falls above or below the 97.5th or 2.5th percentile of the bootstrapped distribution, respectively. Note that this may give rise to slightly higher levels of significance overall than the Mann-Kendall trend test (cf. Fig. 1a and b), due to limitations of the Mann-Kendall test with shorter time series81.
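One way such a bootstrap test could be set up is sketched below in Python. Several details are assumptions that this section does not specify. The "mean bias difference" is taken to be the mean error over a later sub-period minus that over an earlier sub-period. The null distribution is built by drawing both sub-samples with replacement from the full error series, ignoring time order. The split point, function name and synthetic series are hypothetical.

```python
import numpy as np

def mean_bias_difference_test(error, split, n_boot=1000, seed=0):
    """Bootstrap test of a late-minus-early difference in mean error.

    error : 1-D yearly error series (model minus observations)
    split : index separating the early and late sub-periods (assumed definition)
    Returns (actual difference, (2.5th, 97.5th) percentiles, significant flag).
    """
    error = np.asarray(error, dtype=float)
    early, late = error[:split], error[split:]
    actual = late.mean() - early.mean()

    rng = np.random.default_rng(seed)
    # Resample years with replacement from the whole series for each sub-period
    boot_early = rng.choice(error, size=(n_boot, early.size), replace=True)
    boot_late = rng.choice(error, size=(n_boot, late.size), replace=True)
    null = boot_late.mean(axis=1) - boot_early.mean(axis=1)

    lower, upper = np.percentile(null, [2.5, 97.5])
    significant = bool(actual < lower or actual > upper)
    return actual, (lower, upper), significant

# Synthetic illustration only: a step change in bias halfway through 24 years
error_series = np.concatenate([np.zeros(12), np.ones(12)])
actual, bounds, significant = mean_bias_difference_test(error_series, split=12)
print(actual, bounds, significant)
```

Because the resampling ignores the ordering of years, this form of the test makes no allowance for serial autocorrelation. That is a limitation of the sketch rather than a statement about the method used in the study.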

Indices

The North Pacific Index, as defined by Trenberth and Hurrell (ref. 82), is the MSLP averaged over 30°–65° N, 160° E–140° W. To calculate the Arctic Oscillation Index, the leading EOF of ERA5 1000 hPa geopotential height anomalies poleward of 20° N, with seasonal cycle removed, is computed for the period 1979–2000. 1000 hPa geopotential height anomalies from individual models (computed from MSLP using the approximate relation Z = 8(MSLP − 1000), following Thompson and Wallace (ref. 83)) are then projected onto this loading pattern. All other indices are calculated by averaging over the regions shown as boxes on Figs. 3, 5 and 7.
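The index definitions above can be sketched in Python as follows. This is an illustration under stated assumptions rather than the study's implementation. The box average uses cos(latitude) area weighting (the text says only "averaged"). MSLP is assumed to be in hPa, so that the approximate relation gives height in metres. The projection is a plain weighted inner product with optional user-supplied weights. All function names are hypothetical.

```python
import numpy as np

def box_mean(field, lats, lons, lat_min, lat_max, lon_west, lon_east):
    """Cos(latitude)-weighted mean of field[lat, lon] over a lat-lon box.

    Longitudes are in degrees east; a box crossing the dateline or the prime
    meridian (lon_west > lon_east after wrapping to 0-360) is supported.
    """
    lats = np.asarray(lats, dtype=float)
    lons = np.asarray(lons, dtype=float) % 360.0
    west, east = lon_west % 360.0, lon_east % 360.0
    lat_sel = (lats >= lat_min) & (lats <= lat_max)
    if west <= east:
        lon_sel = (lons >= west) & (lons <= east)
    else:
        lon_sel = (lons >= west) | (lons <= east)
    sub = np.asarray(field, dtype=float)[np.ix_(lat_sel, lon_sel)]
    weights = np.cos(np.deg2rad(lats[lat_sel]))[:, None] * np.ones(lon_sel.sum())
    return np.sum(sub * weights) / np.sum(weights)

def north_pacific_index(mslp_hpa, lats, lons):
    """MSLP averaged over 30-65 N, 160 E-140 W (140 W is passed as -140)."""
    return box_mean(mslp_hpa, lats, lons, 30.0, 65.0, 160.0, -140.0)

def z1000_from_mslp(mslp_hpa):
    """Approximate 1000 hPa height from MSLP (assumed hPa): Z = 8 (MSLP - 1000)."""
    return 8.0 * (np.asarray(mslp_hpa, dtype=float) - 1000.0)

def project_onto_pattern(anomaly, pattern, weights=None):
    """Weighted inner product of an anomaly map with a loading pattern."""
    anomaly = np.asarray(anomaly, dtype=float)
    pattern = np.asarray(pattern, dtype=float)
    w = np.ones_like(pattern) if weights is None else np.asarray(weights, dtype=float)
    return np.sum(anomaly * pattern * w)

# Synthetic 1-degree grid: field equals 1 inside 160 E-140 W and 0 elsewhere
lats = np.arange(-89.5, 90.0, 1.0)
lons = np.arange(0.5, 360.0, 1.0)
field = np.zeros((lats.size, lons.size))
field[:, (lons >= 160.0) & (lons <= 220.0)] = 1.0
npi_value = north_pacific_index(field, lats, lons)
print(npi_value, z1000_from_mslp(1012.5))
```

For the Arctic Oscillation Index, the `pattern` argument would be the ERA5 loading pattern described above and `anomaly` the model height anomalies obtained from `z1000_from_mslp`; any latitude weighting applied in the projection would need to match the weighting used when the EOF was computed.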

Data availability

Monthly Copernicus seasonal forecast model data are available at https://doi.org/10.24381/cds.68dd14c3. NASA GEOS-S2S and GFDL-SPEAR hindcasts can be downloaded at http://iridl.ldeo.columbia.edu/SOURCES/.Models/.NMME/.NASA-GEOSS2S/.HINDCAST/.MONTHLY/ and http://iridl.ldeo.columbia.edu/SOURCES/.Models/.NMME/.GFDL-SPEAR/.HINDCAST/.MONTHLY/, respectively. The CMIP6 data are available for download at https://esgf-node.llnl.gov/search/cmip6/. ERA5 data can be downloaded at https://doi.org/10.24381/cds.f17050d7. ERA-Interim, HadISST, JRA55, GPCP and CMAP data can be downloaded at https://psl.noaa.gov/data/gridded/.

Code availability

The underlying code for this study is not publicly available but may be made available to qualified researchers on reasonable request from the corresponding author.

References

Wills, R. C., Dong, Y., Proistosecu, C., Armour, K. C. & Battisti, D. S. Systematic climate model biases in the large-scale patterns of recent sea-surface temperature and sea-level pressure change. Geophys. Res. Lett. 49, e2022GL100011 (2022).

Vicente-Serrano, S. M. et al. Do CMIP models capture long-term observed annual precipitation trends? Clim. Dyn. 58, 2825–2842 (2022).

Donat, M. G. et al. How credibly do CMIP6 simulations capture historical mean and extreme precipitation changes? Geophys. Res. Lett. 50, e2022GL102466 (2023).

Coats, S. & Karnauskas, K. Are simulated and observed twentieth century tropical Pacific sea surface temperature trends significant relative to internal variability? Geophys. Res. Lett. 44, 9928–9937 (2017).

Seager, R., Henderson, N. & Cane, M. Persistent discrepancies between observed and modeled trends in the tropical Pacific Ocean. J. Clim. 35, 4571–4584 (2022).

Nasrollahi, N. et al. How well do CMIP5 climate simulations replicate historical trends and patterns of meteorological droughts? Water Resour. Res. 51, 2847–2864 (2015).

Bhend, J. & Whetton, P. Consistency of simulated and observed regional changes in temperature, sea level pressure and precipitation. Clim. Change 118, 799–810 (2013).

Blackport, R. & Fyfe, J. C. Climate models fail to capture strengthening wintertime North Atlantic jet and impacts on Europe. Sci. Adv. 8, eabn3112 (2022).

Vautard, R. et al. Heat extremes in Western Europe increasing faster than simulated due to atmospheric circulation trends. Nat. Comm. 14, 6803 (2023).

Gu, G. & Adler, R. F. Observed variability and trends in global precipitation during 1979–2020. Clim. Dyn. 61, 131–150 (2023).

Cane, M. A. et al. Twentieth-century sea surface temperature trends. Science 275, 957–960 (1997).

Karnauskas, K. B., Seager, R., Kaplan, A., Kushnir, Y. & Cane, M. A. Observed strengthening of the zonal sea surface temperature gradient across the equatorial Pacific Ocean. J. Clim. 22, 4316–4321 (2009).

Solomon, A. & Newman, M. Reconciling disparate twentieth-century Indo-Pacific ocean temperature trends in the instrumental record. Nat. Clim. Change 2, 691–699 (2012).

Seager, R. et al. Strengthening tropical Pacific zonal sea surface temperature gradient consistent with rising greenhouse gases. Nat. Clim. Change 9, 517–522 (2019).

Lee, S. et al. On the future zonal contrasts of equatorial Pacific climate: Perspectives from Observations, Simulations, and Theories. npj Clim. Atmos. Sci. 5, 82 (2022).

L’Heureux, M. L., Lee, S. & Lyon, B. Recent multidecadal strengthening of the Walker circulation across the tropical Pacific. Nat. Clim. Change 3, 571–576 (2013).

Sohn, B., Yeh, S.-W., Schmetz, J. & Song, H.-J. Observational evidences of Walker circulation change over the last 30 years contrasting with GCM results. Clim. Dyn. 40, 1721–1732 (2013).

Liu, Z., Vavrus, S., He, F., Wen, N. & Zhong, Y. Rethinking tropical ocean response to global warming: The enhanced equatorial warming. J. Clim. 18, 4684–4700 (2005).

Xie, S.-P. et al. Global warming pattern formation: Sea surface temperature and rainfall. J. Clim. 23, 966–986 (2010).

Yeh, S.-W., Ham, Y.-G. & Lee, J.-Y. Changes in the tropical Pacific SST trend from CMIP3 to CMIP5 and its implication of ENSO. J. Clim. 25, 7764–7771 (2012).

Huang, P. & Ying, J. A multimodel ensemble pattern regression method to correct the tropical Pacific SST change patterns under global warming. J. Clim. 28, 4706–4723 (2015).

Cai, W. et al. Changing El Niño–Southern oscillation in a warming climate. Nat. Rev. Earth Environ. 2, 628–644 (2021).

Colman, R. & Power, S. B. What can decadal variability tell us about climate feedbacks and sensitivity? Clim. Dyn. 51, 3815–3828 (2018).

Cai, W. et al. Increasing frequency of extreme El Niño events due to greenhouse warming. Nat. Clim. Change 4, 111–116 (2014).

Yeh, S.-W. et al. ENSO atmospheric teleconnections and their response to greenhouse gas forcing. Rev. Geophys. 56, 185–206 (2018).

Beverley, J. D., Collins, M., Lambert, F. H. & Chadwick, R. Future changes to El Niño teleconnections over the North Pacific and North America. J. Clim. 34, 6191–6205 (2021).

Beverley, J. D., Collins, M., Lambert, F. H. & Chadwick, R. Drivers of changes to the ENSO–Europe Teleconnection under future warming. Geophys. Res. Lett. 51, e2023GL107957 (2024).

Chung, C. E. & Ramanathan, V. Relationship between trends in land precipitation and tropical SST gradient. Geophys. Res. Lett. 34 (2007).

Deser, C. & Phillips, A. S. Atmospheric circulation trends, 1950–2000: The relative roles of sea surface temperature forcing and direct atmospheric radiative forcing. J. Clim. 22, 396–413 (2009).

Olonscheck, D., Rugenstein, M. & Marotzke, J. Broad consistency between observed and simulated trends in sea surface temperature patterns. Geophys. Res. Lett. 47, e2019GL086773 (2020).

Watanabe, M., Dufresne, J.-L., Kosaka, Y., Mauritsen, T. & Tatebe, H. Enhanced warming constrained by past trends in equatorial Pacific sea surface temperature gradient. Nat. Clim. Change 11, 33–37 (2021).

Kociuba, G. & Power, S. B. Inability of CMIP5 models to simulate recent strengthening of the Walker circulation: Implications for projections. J. Clim. 28, 20–35 (2015).

Heede, U. K. & Fedorov, A. V. Eastern equatorial Pacific warming delayed by aerosols and thermostat response to CO2 increase. Nat. Clim. Change 11, 696–703 (2021).

Hwang, Y.-T., Xie, S.-P., Deser, C. & Kang, S. M. Connecting tropical climate change with Southern Ocean heat uptake. Geophys. Res. Lett. 44, 9449–9457 (2017).

Andrews, T. et al. On the effect of historical SST patterns on radiative feedback. J. Geophys. Res. Atmos. 127, e2022JD036675 (2022).

Dong, Y., Armour, K. C., Battisti, D. S. & Blanchard-Wrigglesworth, E. Two-way teleconnections between the Southern Ocean and the tropical Pacific via a dynamic feedback. J. Clim. 35, 6267–6282 (2022).

Kang, S. M., Ceppi, P., Yu, Y. & Kang, I.-S. Recent global climate feedback controlled by Southern Ocean cooling. Nat. Geosci. 16, 775–780 (2023).

Fu, M. & Fedorov, A. The role of Bjerknes and shortwave feedbacks in the tropical Pacific SST response to global warming. Geophys. Res. Lett. 50, e2023GL105061 (2023).

Deser, C., Phillips, A., Bourdette, V. & Teng, H. Uncertainty in climate change projections: the role of internal variability. Clim. Dyn. 38, 527–546 (2012).

Kay, J. E. et al. The Community Earth System Model (CESM) large ensemble project: a community resource for studying climate change in the presence of internal climate variability. Bull. Am. Meteor. Soc. 96, 1333–1349 (2015).

Li, G. & Xie, S.-P. Tropical biases in CMIP5 multimodel ensemble: The excessive equatorial Pacific cold tongue and double ITCZ problems. J. Clim. 27, 1765–1780 (2014).

Lee, R. W. et al. Impact of Gulf Stream SST biases on the global atmospheric circulation. Clim. Dyn. 51, 3369–3387 (2018).

Chassignet, E. P. et al. Impact of horizontal resolution on global ocean-sea-ice model simulations based on the experimental protocols of the Ocean Model Intercomparison Project phase 2 (OMIP-2). Geosci. Model Dev. Dis. 2020, 1–58 (2020).

Priestley, M. D. K., Ackerley, D., Catto, J. L. & Hodges, K. I. Drivers of biases in the CMIP6 extratropical storm tracks. Part I: Northern Hemisphere. J. Clim. 36, 1451–1467 (2023).

Green, B. W., Sinsky, E., Sun, S., Tallapragada, V. & Grell, G. A. Sensitivities of subseasonal unified forecast system simulations to changes in parameterizations of convection, cloud microphysics, and planetary boundary layer. Mon. Wea. Rev. 151, 2279–2294 (2023).

Song, S. & Mapes, B. Interpretations of systematic errors in the NCEP Climate Forecast System at lead times of 2, 4, 8,..., 256 days. J. Adv. Model. Earth Syst. 4 (2012).

Magnusson, L., Alonso-Balmaseda, M., Corti, S., Molteni, F. & Stockdale, T. Evaluation of forecast strategies for seasonal and decadal forecasts in presence of systematic model errors. Clim. Dyn. 41, 2393–2409 (2013).

Gupta, A. S., Jourdain, N. C., Brown, J. N. & Monselesan, D. Climate drift in the CMIP5 models. J. Clim. 26, 8597–8615 (2013).

Wulff, C. O., Vitart, F. & Domeisen, D. I. Influence of trends on subseasonal temperature prediction skill. Quart. J. Roy. Meteor. Soc. 148, 1280–1299 (2022).

Beverley, J. D., Newman, M. & Hoell, A. Rapid development of systematic ENSO-related seasonal forecast errors. Geophys. Res. Lett. 50, e2022GL102249 (2023).

Shin, C.-S. & Huang, B. A spurious warming trend in the NMME equatorial Pacific SST hindcasts. Clim. Dyn. 53, 7287–7303 (2019).

L’Heureux, M. L., Tippett, M. K. & Wang, W. Prediction challenges from errors in tropical Pacific sea surface temperature trends. Front. Clim. 4, 837483 (2022).

Iles, C. & Hegerl, G. Role of the North Atlantic Oscillation in decadal temperature trends. Environ. Res. Lett. 12, 114010 (2017).

Patterson, M., Befort, D. J., O’Reilly, C. H. & Weisheimer, A. Drivers of the ECMWF SEAS5 seasonal forecast for the hot and dry European summer of 2022. Quart. J. Roy. Meteor. Soc. (2024).

Shao, Y., Wang, Q. J., Schepen, A. & Ryu, D. Embedding trend into seasonal temperature forecasts through statistical calibration of GCM outputs. Int. J. Climatol. 41, E1553–E1565 (2021).

Balmaseda, M. A. et al. Skill assessment of seasonal forecasts of ocean variables. Front. Mar. Sci. 11, 1380545 (2024).

Kerkhoff, C., Künsch, H. R. & Schär, C. Assessment of bias assumptions for climate models. J. Clim. 27, 6799–6818 (2014).

Richter, I. Climate model biases in the eastern tropical oceans: Causes, impacts and ways forward. WIREs Clim. Change 6, 345–358 (2015).

Zhang, Q., Liu, B., Li, S. & Zhou, T. Understanding models’ global sea surface temperature bias in mean state: from CMIP5 to CMIP6. Geophys. Res. Lett. 50, e2022GL100888 (2023).

Phillips, T. J. et al. Evaluating parameterizations in general circulation models: Climate simulation meets weather prediction. Bull. Am. Meteor. Soc. 85, 1903–1916 (2004).

Martin, G. et al. Analysis and reduction of systematic errors through a seamless approach to modeling weather and climate. J. Clim. 23, 5933–5957 (2010).

Randall, D. A. & Emanuel, K. The Weather-Climate Schism. Bull. Am. Meteor. Soc. 105, E300–E305 (2023).

Ma, H.-Y. et al. On the correspondence between seasonal forecast biases and long-term climate biases in sea surface temperature. J. Clim. 34, 427–446 (2021).

Palmer, T. & Stevens, B. The scientific challenge of understanding and estimating climate change. Proc. Natl Acad. Sci. 116, 24390–24395 (2019).

Hersbach, H. et al. The ERA5 global reanalysis. Quart. J. Roy. Meteor. Soc. 146, 1999–2049 (2020).

Dee, D. P. et al. The ERA-Interim reanalysis: Configuration and performance of the data assimilation system. Quart. J. Roy. Meteor. Soc. 137, 553–597 (2011).

Kobayashi, S. et al. The JRA-55 reanalysis: General specifications and basic characteristics. J. Meteor. Soc. Jpn. 93, 5–48 (2015).

Adler, R. F. et al. The Global Precipitation Climatology Project (GPCP) monthly analysis (new version 2.3) and a review of 2017 global precipitation. Atmosphere 9, 138 (2018).

Johnson, S. J. et al. SEAS5: the new ECMWF seasonal forecast system. Geosci. Model Dev. 12, 1087–1117 (2019).

Fröhlich, K. et al. The German climate forecast system: GCFS. J. Adv. Model. Earth Syst. 13, e2020MS002101 (2021).

Lin, H. et al. The Canadian seasonal to interannual prediction system version 2 (CanSIPSv2). Weather. Forecast. 35, 1317–1343 (2020).

Gualdi, S. et al. The new CMCC operational seasonal prediction system. CMCC Tech. Note. TN0288, 1–34 (2020).

Molod, A. et al. GEOS-S2S version 2: The GMAO high-resolution coupled model and assimilation system for seasonal prediction. J. Geophys. Res. Atmos. 125, e2019JD031767 (2020).

Delworth, T. L. et al. SPEAR: The next generation GFDL modeling system for seasonal to multidecadal prediction and projection. J. Adv. Model. Earth Syst. 12, e2019MS001895 (2020).

MacLachlan, C. et al. Global Seasonal forecast system version 5 (GloSea5): A high-resolution seasonal forecast system. Quart. J. Roy. Meteor. Soc. 141, 1072–1084 (2015).

Batté, L., Dorel, L., Ardilouze, C. & Guérémy, J. Documentation of the METEO-FRANCE Seasonal Forecasting System 8. Technical Report (2021).

Saha, S. et al. The NCEP climate forecast system version 2. J. Clim. 27, 2185–2208 (2014).

Hirahara, S. et al. Japan Meteorological Agency/Meteorological Research Institute Coupled Prediction System Version 3 (JMA/MRI-CPS3). J. Meteor. Soc. Jpn. advpub, 2023–009 (2023).

Eyring, V. et al. Overview of the Coupled Model Intercomparison Project Phase 6 (CMIP6) experimental design and organization. Geosci. Model Dev. 9, 1937–1958 (2016).

Hamed, K. H. & Rao, A. R. A modified Mann-Kendall trend test for autocorrelated data. J. Hydrol. 204, 182–196 (1998).

Wang, F. et al. Re-evaluation of the power of the Mann-Kendall test for detecting monotonic trends in hydrometeorological time series. Front. Earth. Sci. 8, 14 (2020).

Trenberth, K. E. & Hurrell, J. W. Decadal atmosphere-ocean variations in the Pacific. Clim. Dyn. 9, 303–319 (1994).

Thompson, D. W. & Wallace, J. M. The Arctic Oscillation signature in the wintertime geopotential height and temperature fields. Geophys. Res. Lett. 25, 1297–1300 (1998).

Acknowledgements

The scientific results and conclusions, as well as any views or opinions expressed herein, are those of the author(s) and do not necessarily reflect the views of NOAA or the Department of Commerce. This work was supported by NOAA cooperative agreement NA22OAR4320151 and the Famine Early Warning Systems Network. The authors wish to thank Maria Gehne and the three reviewers for their helpful feedback.

Author information

Contributions

J.D.B., M.N. and A.H. all contributed to the design and implementation of the research, to the analysis of the results and to the writing of the manuscript. All authors read and approved the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Beverley, J.D., Newman, M. & Hoell, A. Climate model trend errors are evident in seasonal forecasts at short leads. npj Clim Atmos Sci 7, 285 (2024). https://doi.org/10.1038/s41612-024-00832-w

DOI: https://doi.org/10.1038/s41612-024-00832-w