Abstract

This paper provides an updated assessment of the International Research Institute for Climate and Society’s (IRI) El Niño Southern Oscillation (ENSO) Predictions Plume. We evaluate 253 real-time forecasts of the Niño 3.4 index issued from February 2002 to February 2023 and examine multimodel means of dynamical (DYN) and statistical (STAT) models separately. Forecast skill diminishes as lead time increases in both DYN and STAT forecasts, with peak accuracy in the seasons following and preceding the northern hemisphere spring predictability barrier. The DYN forecasts outperform STAT forecasts, with a pronounced advantage in forecasts initiated from late boreal winter through spring. The analysis uncovers an asymmetry in predicting the onset of cold and warm ENSO episodes, with warm episode onsets being better forecasted than cold onsets in both DYN and STAT models. The DYN forecasts are found to be valuable for predicting warm and cold ENSO episode onsets at least several months in advance, while STAT forecasts are less informative about ENSO phase transitions. The results indicate that predicting ENSO onset is challenging and that the ability to do so is both model- and event-dependent.

Introduction

Since February 2002, the International Research Institute for Climate and Society (IRI) has routinely disseminated forecasts of the El Niño Southern Oscillation (ENSO) every month, in both deterministic and probabilistic formats. These forecasts, generated by forecast producers around the world, employ a range of dynamical and statistical models. Referred to as the IRI ENSO predictions plume, this resource provides valuable insights into the expected ENSO conditions over the next nine overlapping 3-month periods, corresponding to a maximum lead time of 9 months. The identification of the ENSO state relies on the Niño3.4 index, which tracks the progression of sea surface temperature (SST) anomalies in the central-eastern equatorial Pacific region spanning from 5°S to 5°N and 170°W to 120°W. The objective of this paper is to reassess the IRI ENSO predictions over the extended period of February 2002 to February 2023, encompassing a total of 253 real-time forecasts. It reevaluates the performance of the multimodel mean of dynamical and statistical real-time forecasts issued during the last two decades, updating earlier analyses that were limited to the 2002–2011 period1,2, with a focus on the ability of dynamical and statistical models to predict the onset of ENSO.

Supplementary Figure S1 illustrates the IRI ENSO predictions plume (henceforth the plume) released in mid-February 2023 (the last issued forecast considered in this study). This example encompasses the forecast period from Feb–Apr 2023 through Oct–Dec 2023, showcasing predictions from various dynamical and statistical models. Additionally, it includes the multimodel mean (thick solid lines), which combines the predictions from both types of models. The plume is distinctive in two key aspects. It provides real-time ENSO forecasts issued monthly from various dynamical and statistical models, while keeping a track record of the models used and forecasts issued, and it includes a forecast discussion released on the 19th of each month. The IRI website (https://iri.columbia.edu/our-expertise/climate/forecasts/enso/current/) hosts archived forecast data and discussions, enabling users to review past forecasts retrospectively. This extensive compilation of real-time forecasts from diverse models, accompanied by pertinent discussions, is a vital asset that strengthens the usefulness of the plume for real-time ENSO prediction.

The real-time prediction data from the plume has been assessed in two previous studies1,2. In their 2012 study, Barnston et al.1 conducted an analysis to assess the real-time prediction capabilities of 20 models individually. The evaluation was performed using a dataset spanning 9 years, from February 2002 to March 2011. They found that the dynamical models exhibited a statistically significant advantage over the statistical models when predicting ENSO events during the months of March to May. This period is particularly challenging due to the presence of the northern hemisphere spring predictability barrier3,4,5,6,7. The superior performance of the dynamical models was attributed to several factors. First, these models largely consisted of coupled ocean–atmosphere prediction systems, which allowed for a comprehensive representation of the complex interactions between the atmosphere and ocean over the tropical Pacific. Additionally, most dynamical models utilized high spatial resolution, advanced physical parameterizations, and data assimilation schemes for model initializations8. In contrast, the statistical models relied on a longer historical data record to establish predictor–predictand relationships. The temporal resolution is coarser in statistical models and many statistical models have monthly or seasonal averaged predictors, meaning they cannot take into account the most recent changes, e.g., westerly wind bursts that occur over the span of a week or two. In addition, the crucial subsurface ocean information from the tropical Pacific Ocean was missing in most statistical models9. Tippett et al.2 undertook a verification analysis using the same dataset, but evaluating the multimodel mean predictions of the 12 dynamical models and 8 statistical models. They found that the multimodel mean forecast from dynamical models demonstrated a slight (but statistically insignificant) edge over the mean forecast derived from statistical models. 
They also found that the mean forecast of dynamical models displayed overall larger anomalies. These two studies provided a thorough evaluation of real-time prediction data from the IRI ENSO predictions plume up to 2011, but their findings are limited by the dataset’s short duration. Furthermore, it has been documented that ENSO prediction skill varies on decadal timescales10,11. El Niño (warm) and La Niña (cold) episodes represent the two phases of ENSO, with an ENSO-neutral condition (neither El Niño nor La Niña) occurring between them. ENSO significantly influences worldwide weather patterns, potentially triggering severe climatic events and hazardous conditions in both tropical12,13,14,15 and extratropical16,17 regions. Considering that users frequently depend on real-time forecasts for decision-making and planning, it is desirable to reassess real-time ENSO forecast performance using an extended data record. This study capitalizes on an expanded dataset of 253 forecasts over the period 2002–2023, more than doubling the 110 forecasts used in the earlier studies1,2. With this much larger sample, the study also examines the plume’s capacity to predict the onset of the seven cold (La Niña) and seven warm (El Niño) ENSO events that occurred, based on the multimodel mean of dynamical and statistical models.

The rest of the manuscript is arranged as follows. The “Results” section reviews the observed characteristics of ENSO during the period May–Jul 2001 to Oct–Dec 2023, followed by the skill and error analysis for the real-time forecast period (Feb 2002 to Feb 2023) and the performance of the multimodel means of the dynamical and statistical models for the onset of ENSO events during the two decades. The “Discussion” section provides a concise overview and analysis of the significant findings and challenges encountered in this study. The “Data and methods” section outlines the observed and model datasets and the methods used in the analysis.

Results

ENSO characteristics during the last two decades and a general comparison of DYN and STAT ENSO forecasts

This section discusses the evolution of ENSO from May–July 2001 to October–December 2023 (Fig. S2). Over the past two decades, La Niña, the cold phase of ENSO, has become more prevalent (~32% of the total). The slightly increasing frequency of La Niña events in the last two decades has been attributed to a persistent negative phase of the Pacific Decadal Oscillation (PDO) since early 200018,19, the anomalous Indo-Pacific Warm Pool warming20,21, and a stronger Walker Circulation22. A total of seven cold events (85 overlapping 3-month seasons) were observed during this period, including a double-dip La Niña in 2010–2011 and 2011–2012, and the triple-dip La Niña, which began in Jul-to-Sep (JAS) 2020 and continued until Dec-to-Feb (DJF) 2022–2023, with a brief break during May-to-Jul and Jun-to-Aug of 2021. During a double- or triple-dip La Niña, the event typically persists for two or three successive boreal winters (Fig. S2, indicated with asterisks). Additionally, the past two decades have been marked by seven warm ENSO episodes (~25% of the total or 73 seasons), including the back-to-back El Niño of 2014–2015 and 2015–2016, and the El Niño of 2023, which ended in Apr–Jun 2024 (Table 2).

Figure 1 shows time series comparing the forecasts derived from the multimodel mean of dynamical (DYN) and statistical (STAT) models to the observed data. This figure serves as an illustrative representation of the observational and forecast datasets analyzed in this study. The trajectory plots demonstrate that the predicted anomalies generally (though there are exceptions) follow the subsequently observed ones, but this agreement weakens as the lead time increases. Both model types demonstrated reasonably accurate predictions for certain ENSO events, particularly the stronger episodes, even when considering longer lead times. Both DYN and STAT models tend to underestimate the amplitudes of the warm events in 2002–2003, 2009–2010, 2015–2016, and 2023–2024 especially at longer leads. This pattern of underprediction is in line with findings from previous studies23. Overall, STAT forecasts exhibit an intrinsic characteristic of relatively diminished amplitude as these models aim to minimize squared errors, resulting in a dampening effect on the intensity of sea surface temperature warming and cooling during El Niño and La Niña episodes (Fig. 1c, d). Additionally, statistical models often employ data with coarser temporal resolutions, typically utilizing monthly or seasonally averaged predictors. This limitation prevents them from incorporating high-frequency variability24,25,26, such as westerly wind bursts27 that occur over periods of one to two weeks and can have a significant influence on ENSO development. In contrast, DYN forecasts initialize with the most current observational data, integrating both oceanic (surface and subsurface) and atmospheric variables. For instance, intraseasonal surface wind variability can significantly influence real-time ENSO forecasts28. Westerly or easterly wind anomalies can trigger oceanic Kelvin waves29,30, leading to warmer or colder subsurface temperature anomalies, respectively. 
These wind-driven responses can either amplify or dampen the ongoing development of an ENSO phase. For example, westerly anomalies may reinforce warming during an El Niño event or hinder cooling during a La Niña event, while easterly anomalies have the opposite effects.

Dynamical (DYN: a, b) and statistical (STAT: c, d) forecasts of the Niño3.4 SST anomaly for overlapping 3-month periods from Feb-2002 to Feb-2023 initializations (253 real-time forecasts) and the corresponding observations. The observations are shown by the black line, while the forecasts are shown by the blue line. The verifying observations are from ERSSTv5.

Skill and error during two decades

Figure 2 offers a detailed illustration of the skill variation as a function of calendar start month and lead time for both DYN (solid lines in Fig. 2a) and STAT (dashed lines in Fig. 2a) forecasts. The forecasts generated at longer lead times generally exhibit a lower anomaly correlation compared to forecasts at shorter leads in both DYN and STAT. Specifically, in the STAT forecasts the anomaly correlation is lower and declines more rapidly for forecasts initiated in the early calendar months (February to May) than in the DYN forecasts. In STAT forecasts, this decrease in skill is noticeable even at the shortest lead time for the forecasts starting in April and May. The initial (Lead-1) correlations for these STAT forecasts are 0.76 and 0.73, respectively (Fig. 2a). This period is particularly challenging due to the presence of the northern hemisphere spring predictability barrier31,32,33. Furthermore, this period coincides with ENSO transitions, which means these diminished skill levels may also limit the information available about the ENSO transition phase34,35,36,37. However, for forecasts initiated in the mid-year calendar months (July, August, and September), the skill remains relatively higher and stable in both DYN and STAT forecasts38,39. Furthermore, this higher skill level persists even as the lead times become longer. On the other hand, for forecasts initiated in the late-year calendar months (October, November, and December), correlation values are notably higher during the shorter lead times (up to lead 5). Nevertheless, the correlations swiftly decline for longer lead forecasts (navigating through the spring predictability barrier), reaching values as low as 0.3 or even lower in STAT forecasts, which indicates that such forecasts have very limited practical utility. Differences in skill between the DYN and STAT forecasts are shown in Fig. 2b.
The z-scores display high values for some target seasons, indicating a statistically significant difference, when the forecasts are initialized in February, March, April, and May, in line with the analysis presented in Fig. 2a. This analysis highlights the rapid degradation of skill in STAT forecasts as the forecast lead time increases for the earlier calendar start months, in contrast to the performance of DYN forecasts. Figure 2b demonstrates the superiority1 of DYN forecasts over STAT forecasts, particularly during the challenging period of ENSO forecasts known as the boreal spring ENSO predictability barrier. It is worth mentioning that the STAT forecasts show a slight advantage over the DYN forecasts for mid-year starts, specifically in July and August. However, the amplitude of these z-scores is relatively small, indicating statistical insignificance. Additionally, forecasts generated during the remaining calendar months exhibit minimal differences and do not suggest a significant benefit of using one type of tool over the other2.

a Correlation coefficient for each start month for DYN (solid) and STAT (dashed) forecasts. The forecast lead time specified in this context refers to the nine consecutive seasons starting from the issue month. It is based on the three-month overlapping seasonal average of the Niño 3.4 index value. A value higher than 0.45 indicates a statistically significant correlation coefficient. b Correlation comparison analysis between the DYN and STAT forecasts, considering different start months and lead times. The z-score values greater than 1.5 indicate statistical significance at the 95% confidence level. Negative values indicate instances where the STAT forecasts outperform the DYN forecasts. Notably, the figure demonstrates that for forecasts initialized in the boreal spring (specifically, February, March, April, and May), the DYN forecasts exhibit an advantage over the STAT forecasts. In a, the notation “D” and “S” appended with the month signifies “DYN” and “STAT,” respectively.
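As an illustration of the kind of comparison summarized in Fig. 2, the following minimal sketch computes an anomaly correlation and a z-score for the difference between two correlations. It assumes a Pearson correlation and a Fisher z-transform test that treats the two correlations as independent; the text above does not state which test was used, so both function names and the test itself are illustrative assumptions rather than the method of this study.

```python
import math

def anomaly_correlation(forecast, observed):
    """Pearson correlation between forecast and observed anomaly series."""
    n = len(forecast)
    mean_f = sum(forecast) / n
    mean_o = sum(observed) / n
    cov = sum((f - mean_f) * (o - mean_o) for f, o in zip(forecast, observed))
    var_f = sum((f - mean_f) ** 2 for f in forecast)
    var_o = sum((o - mean_o) ** 2 for o in observed)
    return cov / math.sqrt(var_f * var_o)

def fisher_z_difference(r_dyn, r_stat, n):
    """z-score of (r_dyn - r_stat) via the Fisher transform.

    Assumption: both correlations come from n samples and are treated
    as independent, which is only an approximation when both forecasts
    are verified against the same observations.
    """
    standard_error = math.sqrt(2.0 / (n - 3))
    return (math.atanh(r_dyn) - math.atanh(r_stat)) / standard_error
```

A positive z-score favors DYN and a negative one favors STAT, matching the sign convention of Fig. 2b; the result could then be compared with the 1.5 threshold quoted in the caption.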

Figure 3a displays a scatter plot of forecast errors from DYN and STAT forecasts across all forecast start months and lead times, spanning February 2002 to February 2023. The errors in DYN and STAT forecasts are strongly correlated, with a linear correlation coefficient of 0.91, consistent with the analysis of Tippett et al.2. The errors are asymmetric for negative values: the STAT forecasts exhibit greater discrepancies than the DYN forecasts, particularly for errors exceeding 1 °C. Figure 3b shows mean absolute error (MAE) as a function of start month and target season in both DYN and STAT forecasts. Even though we analyzed a dataset with more than twice the sample size used in previous studies, this analysis closely echoes the findings of the two preceding studies1,2, which showed that both dynamical and statistical models displayed a similar level of error in predicting ENSO. Nonetheless, DYN exhibits the lower MAE, especially for shorter lead times. Furthermore, the MAE for forecasts commencing in the summer and fall months is comparable in the DYN and STAT forecasts.

a Scatterplot of DYN and STAT multimodel mean forecast errors (Forecast Anomaly – Observation Anomaly). The solid red line is the regression line with a 95% confidence interval. b Mean absolute error (MAE) for each start month and lead time for DYN (solid) and STAT (dashed) forecasts. The forecast lead time specified in this context refers to the nine consecutive seasons starting from the issue month. It is based on the three-month overlapping seasonal average of the Niño 3.4 index value. In b, the notation “D” and “S” appended with the month signifies “DYN” and “STAT,” respectively.
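The error measures in Fig. 3 can be sketched as follows, using the caption's definition of the error as forecast anomaly minus observed anomaly. The function names are hypothetical and the inputs are assumed to be matched lists of forecast and observed Niño 3.4 anomalies for one start month and lead time.

```python
def forecast_errors(forecast, observed):
    """Error per forecast: forecast anomaly minus observed anomaly."""
    return [f - o for f, o in zip(forecast, observed)]

def mean_absolute_error(forecast, observed):
    """Mean of the absolute errors over all matched forecast/observation pairs."""
    errors = forecast_errors(forecast, observed)
    return sum(abs(e) for e in errors) / len(errors)
```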

Furthermore, we calculated the variance from the individual models in the DYN and STAT forecast ensembles, averaged them to obtain the lead-dependent multimodel mean-variance for DYN and STAT, and compared these with observational data (Fig. 4). Overall, the lead and start-time dependent interannual variances from both DYN and STAT are notably lower compared to the observed variance for each target season. Specifically, the variance in STAT (Fig. 4b: blue and green curves: April to September) forecasts for these start months is considerably lower than the observed values at the beginning of the forecast period. DYN forecasts, on the other hand, exhibit values that closely align with the observational variance at initial leads, although they tend to be underestimated and significantly so for longer-lead and late-year targets (e.g., SON, OND, and NDJ). Both DYN and STAT forecasts’ variances demonstrate good alignment with observations for the start months of October, November, and December, extending up to the sixth lead. Similarly, for the start month of January, the initial three leads of STAT and DYN display variance values comparable to the observational values, while for longer leads, they tend to remain relatively constant (between 0.2 and 0.4). For the start months of February, March, and April, the variance of DYN is close to observations in initial leads but exhibits damped values in the later leads. In contrast, STAT forecasts predominantly display damped (between 0 and 0.3) values for early leads. This is important as it could indicate unrealistic (underactive) forecasts, even for target seasons close to the forecast start time. The substantially lower variance during the ENSO transition period could have implications for forecasting the onset of cold and warm episodes.

Seasonal march of variance of the Niño 3.4 index during the study period (February 2002 to February 2023) in a DYN and b STAT forecasts. The black curve shows observed variance, while the predicted variance (members-based) is color-coded based on the start month and calculated as the lead-dependent variance for each start month.
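The member-based variance described above can be sketched as below: compute each model's interannual variance for a fixed start month and lead, then average across models. The use of the sample variance (n − 1 denominator) and the function name are assumptions made for illustration; the text does not specify the estimator.

```python
from statistics import variance

def multimodel_mean_variance(model_series):
    """Average of per-model interannual variances.

    model_series: one list per model holding that model's Niño 3.4
    forecasts across years for a fixed start month and lead.
    Models with fewer than two forecasts are skipped because a
    sample variance cannot be computed for them.
    """
    per_model = [variance(series) for series in model_series if len(series) >= 2]
    return sum(per_model) / len(per_model)
```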

Onset of warm and cold ENSO episodes

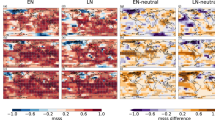

This section evaluates how well DYN and STAT forecast the onset of ENSO episodes during our analysis period. We focus on fourteen events in total: seven warm episodes and seven cold episodes. Figure 5 presents both observed and forecasted Niño-3.4 index values for ENSO episodes, with warm episode onset seasons displayed in Fig. 5a and cold episode onset seasons shown in Fig. 5b. The observed onset values (denoted by both the season and year), indicated by thick red and blue lines respectively, hover around ±0.5 by definition (Fig. 5, and Fig. S2). The DYN and STAT forecasts for the specified target (onset) seasons are denoted by yellow and green lines with varying line thicknesses representing Lead-1, 2, and 3, respectively (Leads 4–9 are shown in Fig. S3). Both DYN and STAT forecasts tend to capture the onsets of warm episodes (Fig. 5a) better than cold episodes (Fig. 5b). Furthermore, the DYN onset forecasts outperform STAT for both warm and cold ENSO episodes, though they still show substantial bias in both cases. For example, during the El Niño event of 2009, the STAT forecast failed to anticipate the event until it had already formed, predicting SST anomalies approximately a quarter degree colder than the average for lead-3 and beyond (Fig. 5a, c). Similarly, in the case of the ongoing El Niño event in 2023, the STAT forecast was only able to predict the observed anomaly for the forecast initialized in April 2023 but was forecasting close to zero anomalies for February and March starts (Fig. 5a, d). When considering longer lead times (Fig. S3), the STAT forecasts remained within the range of −0.25 to 0.2 at the time of observed onset. It is worth emphasizing that the onset of the 2009 and 2023 El Niños happened during summer and spring. As previously discussed, the analysis indicates that STAT forecasts showed reduced skill when initiated during the late winter and spring months.
This observation is particularly significant when considering the 2009 and 2023 El Niño events, as it highlights the potential limitations of STAT forecasts during that specific time period. This conclusion is derived from the analysis of only seven warm ENSO events, which constitutes a limited sample size. Given that forecasting performance can vary significantly across different El Niño episodes, broader conclusions should be drawn with caution. On the other hand, the DYN forecasts indicated anomalies that were quite close to the observed values at shorter leads for both the 2009 and 2023 El Niños (Fig. 5a). For the El Niño of 2023, the DYN forecasts show anomalies within the range of 0.15–0.36 for longer leads (as shown in Supplementary Fig. S3). However, an exception is observed during the back-to-back El Niño of 2014–2015 and 2015–2016, when the DYN forecast was too strong at longer leads, while the STAT forecast correctly suggested a weaker warming signal. It is important to note that not every individual dynamical model overpredicted; however, the majority of the models did. Overall, the onset trajectory analysis highlights that STAT forecasts tend to show subdued warming compared to DYN forecasts. Likewise, in the case of cold episodes, both DYN and STAT forecasts show larger differences between the forecasted anomalies and the observed values. Notable examples include the La Niña events of 2017, 2010, and 2007, which display substantial disparities in both DYN and STAT forecasts compared to observed values (Fig. 5b and Supplementary Fig. S3). Understanding the underlying causes of these large errors would require a separate study that employs a more comprehensive analysis and additional variables.

The onset (season and year) of a warm and b cold episodes in observations (thick red and blue lines) and forecasts (DYN and STAT). The DYN and STAT forecasts are shown by yellow and green lines for Lead-1, 2, and 3. The lines connecting events serve as visual aids, emphasizing and clarifying the Niño-3.4 values represented by markers.

Figure 6 summarizes the mean bias averaged over El Niño and La Niña cases for both DYN and STAT forecasts. Overall, both DYN and STAT forecasts exhibit a larger mean bias during cold events compared to warm events. The error gradually increases with increasing lead time in both DYN and STAT forecasts (Fig. 6: blue curves). During warm events, the DYN forecasts (solid red line) display a smaller mean bias compared to the STAT model (dashed red line), with a difference of approximately a quarter of a degree. For cold events, the DYN model (solid blue line) demonstrates less mean bias compared to the STAT model (dashed blue line) up to four leads; beyond this point, the mean biases of both models are nearly identical.

Figure 7 illustrates the squared error skill score (SESS) of individual forecasts from both DYN and STAT models for seven warm and seven cold events for all lead times (green lines: Lead 1–3, red lines: Lead 4–6, yellow lines: Lead 7–9). This examination unveils several noteworthy observations that complement the prior analysis:

1. In comparison to cold episodes (Fig. 7c, d), both the DYN and STAT forecasts exhibit higher skill levels for warm episodes (Fig. 7a, b).
2. DYN forecasts demonstrate greater value in providing information regarding the onset of ENSO episodes compared to STAT forecasts.
3. When considering warm episodes across all lead times, DYN forecasts show higher SESS values compared to the STAT forecasts.
4. During cold ENSO episodes, the SESS exhibits low values, even turning negative, in both DYN and STAT forecasts.
5. The superiority of DYN over STAT is not consistent, especially notable in the cases of cold episodes in 2007, 2010, and 2017, when the DYN SESS often had more negative values than STAT.

Squared error skill score (SESS) in DYN and STAT forecasts for the onset of warm (a, b) and cold (c, d) episodes from Lead 1 to 9. A SESS value of 1 represents a perfect forecast that matches the observed value exactly. SESS values between 0 and 1 indicate that the forecasts have some, though imperfect, skill. Negative SESS values indicate poorer forecast performance. The plotted data illustrate that the forecast skill tends to be lower for the onset of cold episodes than for the onset of warm episodes. Moreover, the analysis highlights that DYN forecasts consistently demonstrate higher skill levels than STAT forecasts for both warm and cold episode onsets. The lines connecting events serve as visual aids, emphasizing and clarifying the SESS values represented by markers.
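For readers unfamiliar with squared error skill scores, the sketch below shows one common form for a single forecast. It assumes the reference forecast is climatology, that is, a zero anomaly, which is an assumption made here for illustration and not a definition taken from the text above; the function name is likewise hypothetical. Under this assumption the score has the properties described in the caption: 1 for a perfect forecast, 0 for a forecast no better than the reference, and negative values for forecasts worse than the reference.

```python
def squared_error_skill_score(forecast, observed, reference=0.0):
    """SESS = 1 - (forecast error)^2 / (reference error)^2 for one forecast.

    Assumption: the reference is a climatological forecast (zero anomaly).
    """
    reference_error = (reference - observed) ** 2
    if reference_error == 0.0:
        raise ValueError("reference forecast is perfect; score is undefined")
    return 1.0 - (forecast - observed) ** 2 / reference_error
```

For an observed onset anomaly of 0.5, a forecast of 0.5 scores 1, a forecast of 0 scores 0, and a forecast of −0.5 scores −3.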

Figure 8 summarizes the accuracy levels achieved by DYN and STAT forecasts in predicting the onset of ENSO episodes at different lead times. Accuracy is defined here as the ratio of correctly forecasted ENSO onset times to the total episodes. A forecast at a given lead is considered a “hit” if the forecasted anomaly of the observed onset season meets or exceeds the threshold of +0.50 for warm episodes or −0.50 for cold episodes. The DYN forecasts exhibit a remarkable level of accuracy when it comes to predicting the onset of warm episodes, maintaining their accuracy up to a 3-month lead (as seen in Fig. 8a). However, in the case of cold episodes (as depicted in Fig. 8b), the accuracy of DYN is lower than for warm episodes at lead 1 and lead 2, and diminishes to less than 20% beyond lead 3. At subsequent leads, DYN forecasts offer no valuable insights. For the onset of warm ENSO episodes, STAT forecasts demonstrate a moderate level of accuracy, particularly at Lead 1 (Fig. 8a). In stark contrast to DYN, the STAT forecasts fail to furnish significant information regarding the initiation of cold ENSO episodes, even at shorter lead times (Fig. 8b). These findings are derived from real-time ENSO forecast data and shed light on the current capabilities of ENSO prediction models in the context of ENSO phase transitions, emphasizing the need for further research in this area.
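The hit criterion and accuracy ratio defined above can be written as a short sketch. The function names and the "warm"/"cold" labels are hypothetical; the ±0.50 threshold and the definition of accuracy as hits divided by total episodes follow the text.

```python
def is_hit(forecast_anomaly, episode, threshold=0.5):
    """True if the forecast for the observed onset season reaches the onset threshold."""
    if episode == "warm":
        return forecast_anomaly >= threshold
    if episode == "cold":
        return forecast_anomaly <= -threshold
    raise ValueError("episode must be 'warm' or 'cold'")

def onset_accuracy(forecast_anomalies, episode, threshold=0.5):
    """Fraction of episodes whose onset-season forecast at a given lead is a hit."""
    hits = sum(is_hit(f, episode, threshold) for f in forecast_anomalies)
    return hits / len(forecast_anomalies)
```

For example, seven illustrative warm-onset forecasts of 0.6, 0.5, 0.2, 0.7, 0.1, 0.9, and 0.4 at one lead would give four hits, an accuracy of 4/7.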

Discussion

In summary, we conducted an evaluation of 253 real-time forecasts of ENSO conditions, spanning from February 2002 to February 2023, based on multimodel mean of dynamical and statistical models (DYN and STAT), to improve the understanding of the current level of success of these models. The focus is on the ability of the DYN (multimodel mean of all dynamical models) and STAT (multimodel mean of all statistical models) models to predict the onset of ENSO. During this period, seven El Niño events and seven La Niña events occurred. The main findings can be summarized as follows:

- The skill of the DYN and STAT forecasts demonstrates substantial variation based on the forecast start month and the target season.
- The DYN forecasts exhibit superior performance when initiated during the boreal spring months, particularly from February to May.
- The STAT forecasts are comparable to the DYN forecasts in predicting ENSO during the boreal summer and fall.
- Both the DYN and STAT forecasts tend to have larger errors when predicting the onset of cold ENSO episodes compared to warm episodes.
- The analysis demonstrates that DYN forecasts offer valuable insights several months in advance regarding the onset of both warm and cold ENSO episodes, whereas STAT forecasts provide limited information.
- The findings suggest that predicting the onset of ENSO is challenging, with the accuracy of forecasts being contingent on both the model used and the specific event in question.

One plausible explanation for the discrepancy in the skill of DYN and STAT forecasts could be attributed to the limited progress in STAT models over the last two decades, while DYN models have rapidly1 evolved thanks to advancements in computer resources, enhanced observational data, and a better grasp of the underlying physical processes governing the nonlinear dynamics of Earth’s climate. Over the entire duration, a collection of thirty dynamical models and thirteen statistical models contributed their predictions to the IRI ENSO plume. Additional insight is provided in Supplementary Fig. S4, illustrating the count of dynamical and statistical models that have contributed to the plume since its initiation back in February 2002. Particularly noteworthy is the progressive increase in the count of dynamical models since the plume’s inception, marking a significant trend in the use of dynamical models. It is also important to note that the cohort of the models contributing to the plume also changed over time due to the introduction of new models, the replacement of existing ones, and the discontinuation of certain models. Although STAT models demonstrate certain limitations, they perform comparably to DYN models once the predictability barrier is crossed, as shown in Fig. 2b. As a result, these models will continue to be included in the plume. STAT models are highly valuable for diagnosing the predictors that drive forecasts—unlike dynamical models, where the influencing factors are often unclear. Additionally, STAT models serve as benchmarks for the development of more complex models. Furthermore, STAT models are cost-effective, requiring significantly lower initial and ongoing investments for maintenance compared to the resource-intensive DYN models.

Recently, machine learning (ML) techniques have found applications in ENSO predictions, and numerous studies have demonstrated their effectiveness in hindcast settings, extending predictions up to 18 months in advance. Some studies have even achieved successful predictions of ENSO event onsets using these ML40,41,42 methods. The progress made in ML-based ENSO prediction methods is creating a new generation of promising STAT models, and we would like to invite researchers and forecasting centers that utilize such methods to contribute their real-time ENSO forecasts to the IRI ENSO plume. It is noteworthy that recent contributions from statistical and ML model developers43,44 have been incorporated into the plume, leading to an increase in the number of these models within the plume. This suggests that these updated STAT and ML models could add value and potentially offer better real-time forecasts of ENSO conditions.

A potential limitation of this study is the small sample size, particularly the small number of warm and cold ENSO episodes. Despite these limited data, we can conclude that predicting ENSO onset is challenging and that the ability to do so is both model- and event-dependent.

Data and methods

Data

The IRI ENSO plume forecasts are a series of values denoted by \({\left({F}_{\rm{SL}}^{{\prime} }\right)}_{i}\), where “\({F}^{{\prime} }\)” is the 3-month average Niño 3.4 index, “S” is the forecast start time, “L” is the forecast lead time, and the index “i” identifies the individual model in the plume. For a given start and lead time, the multi-model mean forecasts from dynamical and statistical models are denoted as \({\left({F}_{\rm{SL}}^{{\prime} }\right)}_{\rm{DYN}}\) and \({\left({F}_{\rm{SL}}^{{\prime} }\right)}_{\rm{STAT}}\), respectively. These forecasts cover a range of lead times, starting with the 3-month period immediately after the latest observed data and extending up to nine consecutive overlapping 3-month periods, depending on the model. For example, in the forecast issued in February 2023, the February–April (FMA) 2023 period is designated as a 1-month lead forecast, while the March–May (MAM) 2023 period is a 2-month lead forecast. This naming convention continues for subsequent periods until the final target period of October–December (OND) 2023, which is designated as a 9-month lead forecast. Most models (particularly dynamical models) produce ensemble ENSO forecasts; however, the plume displays only the ensemble average provided by the respective institution.
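The lead-time naming convention can be illustrated with a minimal Python sketch. The function name `target_season` and the choice to label each season with the year of its first month are assumptions made here for illustration; they are not part of the plume's own tooling.

```python
# Month initials used to build three-letter season labels such as "FMA".
MONTH_INITIALS = "JFMAMJJASOND"


def target_season(issue_month: int, issue_year: int, lead: int) -> str:
    """Return the 3-month target season for a given issue month and lead.

    Lead 1 is the season that starts in the issue month (a February
    issue gives FMA), following the convention described in the text.
    The year attached to the label is that of the season's first month
    (an assumption made for this sketch).
    """
    if not 1 <= issue_month <= 12:
        raise ValueError("issue_month must be between 1 and 12")
    if not 1 <= lead <= 9:
        raise ValueError("lead must be between 1 and 9")
    # Zero-based index of the first month of the target season.
    start = (issue_month - 1) + (lead - 1)
    label = "".join(MONTH_INITIALS[(start + k) % 12] for k in range(3))
    year = issue_year + start // 12
    return f"{label} {year}"


# The February 2023 issue discussed in the text.
feb_2023_targets = [target_season(2, 2023, lead) for lead in range(1, 10)]
```

For the February 2023 issue, the first and last entries of `feb_2023_targets` are the FMA 2023 and OND 2023 seasons, matching the 1-month and 9-month lead designations above.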

Forecast length varied among models. The statistical models consistently provided forecasts for the upcoming nine 3-month average seasons. The dynamical models, however, differed in forecast length, with some covering the full nine seasons and others stopping earlier. As a result, more models contribute to the multi-model average for the early target seasons than for the later ones. We do not attempt to correct for this decline in the number of models towards the end of the forecast period. Table 1 provides an overview (type of model, length of forecasts, start and end date of forecast contribution to the plume) of the models employed in the IRI ENSO plume since its inception.
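The effect of unequal forecast lengths on the multi-model mean can be sketched as follows. This is an illustrative Python example under stated assumptions, not the code used in the study: the model names and anomaly values are invented, and the mean at each lead is simply taken over whichever models supply a value at that lead.

```python
from statistics import fmean


def multimodel_mean(forecasts):
    """Average per lead over the models that provide a value at that lead.

    `forecasts` maps a model name to a list of Nino 3.4 anomalies, one
    per lead; a shorter list means the model stops before the last lead.
    Returns (means, counts), both indexed by lead - 1.
    """
    max_len = max(len(values) for values in forecasts.values())
    means, counts = [], []
    for lead in range(max_len):
        available = [v[lead] for v in forecasts.values() if len(v) > lead]
        means.append(fmean(available))
        counts.append(len(available))
    return means, counts


# Hypothetical three-model plume with different forecast lengths.
example = {
    "model_a": [0.5, 0.7, 0.9],
    "model_b": [0.3, 0.5],
    "model_c": [0.4],
}
means, counts = multimodel_mean(example)
```

Returning the per-lead model count alongside the mean makes the shrinking sample at longer leads explicit.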

The verifying observations are from the Extended Reconstructed Sea Surface Temperature dataset (ERSSTv5)45. Observations are expressed as anomalies with respect to the 1991–2020 climatology. Models used different base periods for calculating anomalies. For forecasts generated prior to January 2021, the climatological base period was 1971–2000, although some models used 1982–2000. Starting from January 2021, the recent climatological period 1991–2020 was recommended. In this paper, we do not attempt to adjust for such discrepancies; a test using variable climatology values showed almost no impact on the skill (Supplementary Fig. S5).

Methods

Several metrics are used in this research to evaluate the performance of models for ENSO forecasts. For a given start and lead time, the multi-model mean forecasts from dynamical and statistical models are denoted as \({\left({F}_{\rm{SL}}^{{\prime} }\right)}_{\rm{DYN}}\) and \({\left({F}_{\rm{SL}}^{{\prime} }\right)}_{\rm{STAT}}\), and the observed anomalies as \({O}^{{\prime} }\). The start- and lead-dependent forecast error (FE) of the DYN and STAT forecasts is then the difference between the multi-model mean forecast and the observed anomaly, where \({O}^{{\prime} }\) is taken for the target season that matches the start and lead time of the forecast.
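The pairing of forecasts with observations by start and lead time can be sketched in Python. The sketch assumes the conventional forecast-minus-observation sign, \(\mathrm{FE}={F}^{{\prime} }-{O}^{{\prime} }\), and a hypothetical month-index bookkeeping (months counted from an arbitrary origin); neither is confirmed by the text.

```python
def forecast_error(forecast, observed):
    """Forecast minus observation for each (start, lead) pair.

    `forecast` maps (start, lead) -> forecast anomaly, where `start` is
    the issue month counted from an arbitrary origin. `observed` maps
    the index of a season's first month -> observed anomaly. Pairs
    whose target season has not been observed are skipped.
    """
    errors = {}
    for (start, lead), value in forecast.items():
        # Lead 1 verifies against the season that begins in the issue month.
        target = start + lead - 1
        if target in observed:
            errors[(start, lead)] = value - observed[target]
    return errors


# Hypothetical values: two issue months, observations for two seasons.
fcst = {(0, 1): 0.8, (0, 2): 1.0, (1, 1): 0.9, (1, 2): 1.1}
obs = {0: 0.5, 1: 1.2}
fe = forecast_error(fcst, obs)
```

Here the lead-2 forecast from the first issue month and the lead-1 forecast from the second verify against the same observed season, which is the alignment the text describes.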

The anomaly correlation coefficient between the forecast and observed anomalies is used to evaluate the skill of the DYN and STAT forecasts. The correlation coefficients for sample sizes n1 and n2 are denoted as \({r}_{\rm{DYN}}\) and \({r}_{\rm{STAT}}\) for the DYN and STAT forecasts, respectively. The statistical significance of the correlation is assessed using the two-tailed t-test46. At p = 0.05, the calculated correlation coefficient must exceed a critical value of 0.45 to be considered significant.
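A minimal Python sketch of the correlation and its t statistic follows. It assumes the centered (Pearson) form of the anomaly correlation and the standard relation \(t=r\sqrt{(n-2)/(1-{r}^{2})}\) with n − 2 degrees of freedom; the exact form used in the study is not shown in the text, so both are assumptions.

```python
import math


def anomaly_correlation(forecast, observed):
    """Centered (Pearson) correlation between paired anomaly series."""
    n = len(forecast)
    if n != len(observed) or n < 3:
        raise ValueError("need two equal-length series with at least 3 pairs")
    mean_f = sum(forecast) / n
    mean_o = sum(observed) / n
    cov = sum((f - mean_f) * (o - mean_o) for f, o in zip(forecast, observed))
    var_f = sum((f - mean_f) ** 2 for f in forecast)
    var_o = sum((o - mean_o) ** 2 for o in observed)
    return cov / math.sqrt(var_f * var_o)


def t_statistic(r, n):
    """t statistic for testing r against zero (n - 2 degrees of freedom).

    Undefined for |r| = 1, where the denominator vanishes.
    """
    return r * math.sqrt((n - 2) / (1.0 - r * r))
```

The resulting t value would be compared with the two-tailed critical value for the chosen significance level, which depends on the sample size and is left to the caller here.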

We employed z-score47 analysis to compare the correlation coefficients obtained from the two forecast types for each start and lead time. This analysis can provide insights into the superiority (if any) of one forecast over the other. Although the DYN and STAT forecasts are not independent, this approach yielded a clear and informative perspective on the relative performance48 of the two forecast products. In this case, the correlation coefficients were computed using different observational datasets: ERSSTv5 for the DYN forecasts and COBE49 for the STAT forecasts. Fisher’s z-transformation is then applied to \({r}_{\rm{DYN}}\) and \({r}_{\rm{STAT}}\), and the standardized difference between the transformed values is denoted \({z}_{{diff}}\).

The statistical significance of \({z}_{{diff}}\) is determined by comparing it with the critical value. For instance, for April starts at Lead-2, \({z}_{{diff}}=1.81\). At the 0.05 level of significance, the critical value is \(\pm 1.72\); because \({z}_{{diff}}\) exceeds this value, it falls in the rejection region. We can therefore reject the null hypothesis that the two correlations \({r}_{{DYN}}\) and \({r}_{{STAT}}\) are equal, which indicates a statistically significant difference between them.
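The comparison of two correlations through Fisher's z-transformation can be sketched in Python. The sketch uses the textbook form \(z=\tfrac{1}{2}\ln \left[(1+r)/(1-r)\right]\) and the independent-sample standard error \(\sqrt{1/({n}_{1}-3)+1/({n}_{2}-3)}\); whether the study used exactly this standard error is an assumption, and the input values below are invented for illustration.

```python
import math


def fisher_z(r):
    """Fisher's z-transformation, 0.5 * ln((1 + r) / (1 - r))."""
    return math.atanh(r)


def z_difference(r_dyn, n_dyn, r_stat, n_stat):
    """Standardized difference between two Fisher-transformed correlations.

    Uses the independent-sample standard error, which is an assumption
    of this sketch.
    """
    se = math.sqrt(1.0 / (n_dyn - 3) + 1.0 / (n_stat - 3))
    return (fisher_z(r_dyn) - fisher_z(r_stat)) / se


def is_significant(z_diff, critical_value):
    """True when |z_diff| exceeds the supplied critical value."""
    return abs(z_diff) > critical_value


# Hypothetical correlations and sample sizes.
z = z_difference(0.9, 21, 0.6, 21)
```

The critical value is passed in rather than hard-coded, so the same function can be applied with whichever threshold a given start and lead time requires.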

We also used the squared error skill score1 (SESS), which is defined as one minus the mean squared error divided by the observed climatological variance.

The SESS ranges from +1 to \(-\infty\). SESS = 1 indicates a perfect forecast, where the forecast equals the observed value. SESS = 0 implies that the mean squared forecast error equals the observed climatological variance, so the forecast is no better than a climatological forecast, while SESS < 0 means that the squared error exceeds that variance. One advantage of this score is that it does not require averaging, unlike correlation. This allows for a direct comparison of the skill of individual forecasts with that of a climatological forecast. As a result, we utilized this score to evaluate the skill during the onset of ENSO episodes.
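The definition of the SESS translates directly into a short Python sketch. The function and argument names are illustrative, and the climatological variance is supplied by the caller rather than estimated here.

```python
def sess(forecast, observed, clim_variance):
    """Squared error skill score: 1 - MSE / observed climatological variance.

    Works for a single forecast-observation pair as well as for a
    series, since the score itself does not require averaging over
    many cases.
    """
    if len(forecast) != len(observed) or not forecast:
        raise ValueError("need two non-empty series of equal length")
    if clim_variance <= 0:
        raise ValueError("clim_variance must be positive")
    mse = sum((f - o) ** 2 for f, o in zip(forecast, observed)) / len(forecast)
    return 1.0 - mse / clim_variance


# A perfect forecast, a zero-anomaly (climatological) forecast, and a
# single forecast whose squared error exceeds the variance.
perfect = sess([0.8, -0.4], [0.8, -0.4], 1.0)
climatological = sess([0.0, 0.0], [1.0, -1.0], 1.0)
poor = sess([2.0], [1.0], 0.5)
```

The three cases give scores of 1, 0, and a negative value, mirroring the interpretation of the score given above.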

ENSO evolution is quantified by the 3-month running mean of SST anomalies in the Niño-3.4 region (5°N–5°S, 170°–120°W) relative to a recent 30-year (1991–2020) climatology. A threshold of ±0.5 °C is used to define warm and cold ENSO episodes. An ENSO event is considered to have occurred when these conditions persist for five consecutive overlapping 3-month seasons (Supplementary Fig. S2 and Table 2).
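The episode definition can be sketched as a simple run-length search in Python. Two details are assumptions of this sketch rather than statements from the text: values exactly at ±0.5 °C count as meeting the threshold, and five seasons is treated as a minimum run length.

```python
def enso_episodes(index, threshold=0.5, min_seasons=5):
    """Find warm and cold episodes in a series of 3-month mean anomalies.

    Returns a list of (phase, first, last) tuples, where `first` and
    `last` are positions in `index` and `phase` is "warm" or "cold".
    A run qualifies when it lasts at least `min_seasons` consecutive
    seasons at or beyond the threshold (both choices are assumptions).
    """
    episodes = []
    run_phase, run_start = None, 0
    # A trailing neutral value closes any run that reaches the end.
    for i, value in enumerate(list(index) + [0.0]):
        if value >= threshold:
            phase = "warm"
        elif value <= -threshold:
            phase = "cold"
        else:
            phase = None
        if phase != run_phase:
            if run_phase is not None and i - run_start >= min_seasons:
                episodes.append((run_phase, run_start, i - 1))
            run_phase, run_start = phase, i
    return episodes


# Hypothetical series: a five-season warm run, then a short cold run.
series = [0.1, 0.6, 0.7, 0.9, 1.0, 0.8, 0.2, -0.6, -0.7, -0.3]
found = enso_episodes(series)
```

In this series only the warm run qualifies; the two-season cold excursion is too short to count as an episode.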

Data availability

The datasets used in this study are freely available at the following links. Monthly ONI: https://www.cpc.ncep.noaa.gov/data/indices/ersst5.nino.mth.91-20.ascii. Warm/Cold ENSO Episodes: https://origin.cpc.ncep.noaa.gov/products/analysis_monitoring/ensostuff/ONI_v5.php. IRI ENSO Forecast: https://iri.columbia.edu/our-expertise/climate/forecasts/enso/current/. All raw ENSO plume data are available on request via https://forms.gle/7PgLuf7rwET5GBxH7.

Code availability

The analysis and figures were produced with the free software Python; the code is available on request.

References

Barnston, A. G., Tippett, M. K., L’Heureux, M. L., Li, S. & DeWitt, D. G. Skill of real-time seasonal ENSO model predictions during 2002–11: is our capability increasing? Bull. Am. Meteor. Soc. 93, 631–651 (2012).

Tippett, M. K., Barnston, A. G. & Li, S. Performance of recent multi-model ENSO forecasts. J. Appl. Meteor. Climatol. 51, 637–654 (2012).

Webster, P. J. The annual cycle and the predictability of the tropical coupled ocean-atmosphere system. Meteor. Atmos. Phys. 56, 33–55 (1995).

Torrence, C. & Webster, P. J. The annual cycle of persistence in the El Niño-Southern oscillation. Quart. J. R. Meteor. Soc. 124, 1985–2004 (1998).

McPhaden, M. J. Tropical Pacific Ocean heat content variations and ENSO persistence barriers. Geophys. Res. Lett. 30(9), 1480 (2003).

Duan, W. & Wei, C. The ‘spring predictability barrier’ for ENSO predictions and its possible mechanism: results from a fully coupled model. Int. J. Climatol. 33, 1280–1292 (2013).

Tippett, M. K. & L’Heureux, M. L. Low-dimensional representations of Niño 3.4 evolution and the spring persistence barrier. npj Clim. Atmos. Sci. https://doi.org/10.1038/s41612-020-0128-y (2020).

Zhu, J., Wang, W., Kumar, A., Liu, Y. & DeWitt, D. Assessment of a new global ocean reanalysis in ENSO predictions with NOAA UFS. Geophys. Res. Lett. 51, e2023GL106640, https://doi.org/10.1029/2023GL106640 (2024).

Barnston, A. G., Glantz, M. H. & He, Y. Predictive skill of statistical and dynamical climate models in SST forecasts during the 1997–98 El Niño episode and the 1998 La Niña onset. Bull. Am. Meteor. Soc. 80, 217–243 (1999).

Chen, D. et al. Predictability of El Niño over the past 148 years. Nature 428, 733–736 (2004).

Liu, T., Song, X., Tang, Y., Shen, Z. & Tan, X. ENSO predictability over the past 137 years based on a CESM ensemble prediction system. J. Clim. 35(2), 763–777 (2022).

Ropelewski, C. F. & Halpert, M. S. Global and regional scale precipitation patterns associated with the El Niño/Southern Oscillation. Mon. Weather Rev. 115, 1606–1626 (1987).

Hoell, A., Funk, C., Magadzire, T., Zinke, J. & Husak, G. El Niño–Southern Oscillation diversity and southern Africa teleconnections during austral summer. Clim. Dyn. 45, 1583–1599 (2015).

Ehsan, M. A. et al. The ENSO fingerprint on Bangladesh summer monsoon rainfall. Earth Syst. Environ. https://doi.org/10.1007/s41748-023-00347-z (2023).

Attada, R., Ehsan, M. A. & Pillai, P. A. Evaluation of potential predictability of Indian summer monsoon rainfall in ECMWF’s fifth-generation seasonal forecast system (SEAS5). Pure Appl. Geophys. 179, 4639–4655 (2022).

Sutton, M., Larson, S. M. & Becker, E. New insights on ENSO teleconnection asymmetry and ENSO forced atmospheric circulation variability over North America. Clim. Dyn. https://doi.org/10.1007/s00382-023-07058-1 (2024).

Scaife, A. A. et al. Tropical rainfall, Rossby waves and regional winter climate predictions. Q. J. R. Meteorol. Soc. 143, 1–11 (2017).

Hu, Z. Z. et al. The interdecadal shift of ENSO properties in 1999/2000: a review. J. Clim. 33(11), 4441–4462 (2020).

Wang, S. et al. Combined effects of the Pacific Decadal Oscillation and El Niño-Southern Oscillation on global land dry–wet changes. Sci. Rep. 4, 6651 (2014).

Wills, R. C. J., Dong, Y., Proistosecu, C., Armour, K. C. & Battisti, D. S. Systematic climate model biases in the large-scale patterns of recent sea-surface temperature and sea-level pressure change. Geophys. Res. Lett. 49, e2022GL100011, https://doi.org/10.1029/2022GL100011 (2022).

Lee, S. et al. On the future zonal contrasts of equatorial Pacific climate: perspectives from observations, simulations, and theories. npj Clim. Atmos. Sci. 5, 82 (2022).

Heede, U. K. & Fedorov, A. V. Colder eastern equatorial Pacific and stronger Walker circulation in the early 21st century: separating the forced response to global warming from natural variability. Geophys. Res. Lett. 50, e2022GL101020 (2023).

L’Heureux, M. L. et al. Observing and predicting the 2015/16 El Niño. Bull. Am. Meteorol. Soc. 98(7), 1363–1382 (2017).

Moore, A. M. & Kleeman, R. Stochastic forcing of ENSO by the intraseasonal oscillation. J. Clim. 12, 1199–1220 (1999).

Lengaigne, M. et al. Ocean response to the March 1997 Westerly Wind Event. J. Geophys. Res. 107(C12), 8015 (2002).

McPhaden, M. J., Zhang, X., Hendon, H. H. & Wheeler, M. C. Large scale dynamics and MJO forcing of ENSO variability. Geophys. Res. Lett. 33(16), 702 (2006).

Lai, A. W. C., Herzog, M. & Graf, H. F. ENSO forecasts near the spring predictability barrier and possible reasons for the recently reduced predictability. J. Clim. 31, 815–838 (2018).

Wang, W., Chen, M., Kumar, A. & Xue, Y. How important is intraseasonal surface wind variability to real-time ENSO prediction? Geophys. Res. Lett. 38, L13705 (2011).

Hendon, H. H., Liebmann, B. & Glick, J. D. Oceanic Kelvin waves and the Madden–Julian oscillation. J. Atmos. Sci. 55, 88–101 (1998).

Jauregui, Y. R. & Chen, S. S. MJO-induced warm pool eastward extension prior to the onset of El Niño: observations from 1998 to 2019. J. Clim. 37, 855–873 (2024).

Kumar, A., Hu, Z.-Z., Jha, B. & Peng, P. Estimating ENSO predictability based on multi-model hindcasts. Clim. Dyn. 48, 39–51 (2017).

Hu, Z.-Z., Kumar, A., Zhu, J., Peng, P. & Huang, B. On the challenge for ENSO cycle prediction: an example from NCEP climate forecast system, Version 2. J. Clim. 32, 183–194 (2019).

Chen, H.-C., Tseng, Y.-H., Hu, Z.-Z. & Ding, R. Enhancing the ENSO predictability beyond the spring barrier. Sci. Rep. 10, 984 (2020).

Yang, X. et al. Key to ENSO phase-locking simulation: effects of sea surface temperature diurnal amplitude. npj Clim. Atmos. Sci. 6, 159 (2023).

Tziperman, E., Cane, M. A., Zebiak, S. E., Xue, Y. & Blumenthal, B. Locking of El Niño’s peak time to the end of the calendar year in the delayed oscillator picture of ENSO. J. Clim. 11, 2191–2199 (1998).

Li, T. Phase transition of the El Niño–Southern Oscillation: a stationary SST mode. J. Atmos. Sci. 54, 2872–2887 (1997).

Chen, H.-C. & Jin, F.-F. Fundamental behavior of ENSO phase locking. J. Clim. 33, 1953–1968 (2020).

Almazroui, M. et al. Skill of the Saudi-KAU CGCM in forecasting ENSO and its Comparison with NMME and C3S Models. Earth Syst. Environ. 6, 327–341 (2022).

Barnston, A. G. et al. Deterministic skill of ENSO predictions from the North American Multimodel Ensemble. Clim. Dyn. 53, 7215–7234 (2019).

Yan, J. et al. Temporal convolutional networks for the advance prediction of ENSO. Sci. Rep. 10, 8055 (2020).

Ham, Y. G., Kim, J. H. & Luo, J. J. Deep learning for multi-year ENSO forecasts. Nature 573, 568–572 (2019).

Ham, Y.-G., Kim, J.-H., Kim, E.-S. & On, K.-W. Unified deep learning model for El Niño/Southern Oscillation forecasts by incorporating seasonality in climate data. Sci. Bull. 66, 1358–1366 (2021).

Vimont, D. J., Newman, M., Battisti, D. S. & Shin, S. I. K. The role of seasonality and the ENSO mode in Central and East Pacific ENSO growth and evolution. J. Clim. 35, 3195–3209 (2022).

Mu, B., Qin, B. & Yuan, S. ENSO-GTC: ENSO deep learning forecast model with a global spatial-temporal teleconnection coupler. J. Adv. Model. Earth Syst. https://doi.org/10.1029/2022MS003132 (2022).

Huang, B. et al. Extended Reconstructed Sea Surface Temperature version 5 (ERSSTv5), Upgrades, validations, and intercomparisons. J. Clim. 30, 8179–8205 (2017).

Wilks, D. S. Statistical Methods in the Atmospheric Sciences. 2nd edn. (Elsevier Publishers, New York, 2006).

Hinkle, D. E., Wiersma, W. & Jurs, S. G. Applied Statistics for the Behavioral Sciences. 2nd edn. (Houghton Mifflin Company, Boston, 1988).

DelSole, T. & Tippett, M. K. Comparing forecast skill. Mon. Weather Rev. 142, 4658–4678 (2014).

Ishii, M., Shouji, A., Sugimoto, S. & Matsumoto, T. Objective analyses of sea-surface temperature and marine meteorological variables for the 20th century using ICOADS and the Kobe collection. Int. J. Climatol. 25, 865–879 (2005).

Acknowledgements

We acknowledge all the institutions that provide their ENSO forecast data. We are grateful to two anonymous reviewers whose insightful and constructive comments enhanced the final version of the paper. The scientific results and conclusions, as well as any view or opinions expressed herein, are those of the author(s) and do not necessarily reflect the views of NWS, NOAA, or the Department of Commerce.

Author information

Contributions

MAE collected data, analyzed the results, and prepared the initial draft of the paper. Discussions among MAE, MKT, AWR, MH, and JT contributed to the final paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Ehsan, M.A., L’Heureux, M.L., Tippett, M.K. et al. Real-time ENSO forecast skill evaluated over the last two decades, with focus on the onset of ENSO events. npj Clim Atmos Sci 7, 301 (2024). https://doi.org/10.1038/s41612-024-00845-5

DOI: https://doi.org/10.1038/s41612-024-00845-5
