Abstract

This systematic review explores machine learning (ML) applications in surgical motion analysis using non-optical motion tracking systems (NOMTS), alone or with optical methods. It investigates objectives, experimental designs, model effectiveness, and future research directions. From 3632 records, 84 studies were included, with Artificial Neural Networks (38%) and Support Vector Machines (11%) being the most common ML models. Skill assessment was the primary objective (38%). NOMTS used included internal device kinematics (56%), electromagnetic (17%), inertial (15%), mechanical (11%), and electromyography (1%) sensors. Surgical settings were robotic (60%), laparoscopic (18%), open (16%), and others (6%). Procedures focused on bench-top tasks (67%), clinical models (17%), clinical simulations (9%), and non-clinical simulations (7%). Over 90% accuracy was achieved in 36% of studies. Literature shows NOMTS and ML can enhance surgical precision, assessment, and training. Future research should advance ML in surgical environments, ensure model interpretability and reproducibility, and use larger datasets for accurate evaluation.

Similar content being viewed by others

Introduction

Machine learning (ML) models have gained consistent attention within the medical field for their potential to revolutionise healthcare practices. ML algorithms are adept at modelling high dimensional data distributions, improving process efficiency, and reducing burden on healthcare professionals through data-driven insights1,2. They can be trained to identify data patterns and optimise predictive precision3,4,5, making them valuable tools in medical decision-making across various specialties, such as radiology5,6 and oncology7. This successful integration of ML into healthcare workflow demonstrates how technology to complement and enhance the capabilities of medical experts.

An emerging domain for ML application is surgical motion tracking, which offers potential advancements in surgical practice. Capturing and analysing the motion characteristics of surgeons’ hands and surgical instruments during procedures provides valuable data for several purposes. Surgical skill training and evaluation are labour-intensive and time-consuming for both trainers and trainees. Their automation could offer much-needed efficiency8,9, support professional development, and ensure high-quality care. Additionally, motion data could aid the development of assistive surgical tools to improve surgeon precision and patient outcomes. Research has also explored using surgical motion data to predict patient post-surgical outcomes10, offering the potential for real-time adjustments during surgery to reduce post-operative complications.

However, much of the existing surgical motions tracking research relies on visual sensors, such as cameras. While these systems are valuable for their convenience and integration into laparoscopic and robotic surgical devices, they have inherent limitations, such as poor quality and susceptibility to occlusion11. Non-optical motion tracking systems (NOMTS) offer promising solutions by providing robust and versatile data capture capabilities without the constraints of optical systems.

This systematic review aims to provide an overview of ML applications in surgical manoeuvre analysis using NOMTS. Objectives include identifying ML algorithms and models used, comparing their effectiveness, identifying NOMTS applications in surgical settings, and highlighting research trends, gaps, challenges, and future research directions.

Results

Search results

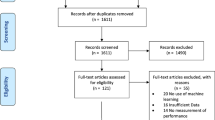

A total of 3632 unique records were identified through the literature search after duplicate removal. An additional 32 records were identified by bibliographic cross-referencing. After undergoing screening based on title and abstract, as well as full-text retrieval, a total of 139 studies were assessed in full text. The inclusion process led to 84 reports meeting the criteria for inclusion (Fig. 1). Table 1 provides full overview of the included studies, categorised by their machine learning aim. Six primary machine learning aims were identified: (1) skill assessment (SA); (2) feature detection (FD); (3) a combination of skill assessment and feature detection; (4) tool segmentation and/or tracking (TT); (5) undesirable motion filtration (UMF); (6) other. These are further detailed in the Results sections ML tasks.

Data collection and sources

The included studies featured one or more experiments, each designed with different set-ups, sensors, and procedures. Twenty studies included more than one experiment12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31. The procedures were categorised by surgical field and task. Robotic procedures were the most common, appearing in 65 experiment types, followed by laparoscopic in 20, and open in 17. Basic bench-top (BB) tasks, such as peg transfer or suturing, composed 72 experiments. Clinical simulations (CS), which mimic real-life surgery, were conducted in 10 experiments. Clinical models (CM) were used in 18 experiments, including animal models19,24,26,28,29,31,32,33,34,35, cadaver models16,29,34, and real-life surgeries like septoplasty12, tumour removal36, or prostatectomy10,22,30,37,38,39. Non-clinical simulations (NCS), which simulate surgical movement without a defined surgical task, were present in eight experiments (Fig. 2).

Among the experiments with human participants, 40 utilised datasets with at least 10 participants, while only 14 included at least 25 participants (Table 1). The largest datasets included 117 participants40, followed by 67 participants41 and 52 participants24.

One frequently used public dataset was the JHU-ISI Gesture and Skill Assessment Working Set (JIGSAWS)42, which appeared in 26 use cases (Table 1). It includes synchronised robotic video and tool motion from eight surgeons performing BB tasks (needle passing, knot tying, suturing) within a robotic surgical context. Multiple studies leveraged this dataset to compare their algorithms with others on the same dataset15,17,21,22,24,25,26,27,28,43,44,45, as well as for transfer learning applications20,26.

Another dataset, used by two studies, is the Johns Hopkins Minimally Invasive Surgical Training and Innovation Center Science of Learning Institute (MISTIC-SL) dataset14,23. It consists of synchronised robotic video and tool motion during BB tasks. The Robotic Intra-Operative Ultrasound (RIOUS) and RIOUS+ datasets are used by Qin et al. containing robotic video and tool motion of drop-in ultrasound scanning in dry-lab, cadaveric, and in-vivo settings28,29. The Basic Laparoscopic Urologic Skills (BLUS) also features synchronised video and tool motion of BB laparoscopic tasks40. The Bowel Repair Simulation (BRS) dataset consists of 255 porcine open enterotomy repair procedures captured with electromagnetic sensors and two camera views24. However, these datasets are not publicly available.

Non-optical motion tracking systems (NOMTS)

The included studies utilised five categories of NOMTS across various experiments, often featuring multiple experiment types within a single study. In total, 107 experiment designs were found across the 84 studies.

-

(1)

Device kinematic (DK) data recordings: in 67 experiments to capture the internal position logging of virtual reality46,47, laparoscopic40,48, endoscopic49, or robotic10,12,14,15,17,20,21,22,23,24,25,26,27,28,29,30,31,32,34,35,37,38,39,43,44,45,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69 surgical devices.

-

(2)

Electromagnetic (EM) systems: in 20 experiments, mostly using active EM systems19,24,41,70,71,72,73,74,75,76,77,78,79,80,81,82, except for a passive magnetic system83 and radio frequency identification (RFID)84.

-

(3)

Inertial (I) sensors: in 18 experiments, including accelerometers12,13,16,18,84,85,86,87,88,89 and inertial measurement units17,57,90,91.

-

(4)

Mechanical (M) sensors: in 13 experiments, including force33,35,36,50,58,80,85,92,93 and flex sensors16,71,90.

-

(5)

Surface electromyography (EMG): in one study13.

Twelve experiments combined multiple NOMTS types13,16,35,50,57,58,71,80,84,85,90, with mechanical16,35,50,58,71,80,85,90 and inertial13,16,57,84,85,90 sensors being the most frequently combined types. All combinations of experimental designs may be found in Fig. 2.

Optical sensor data as an NOMTS supportive tool

Of the 81 experiment designs that did not use optical sensors as input for ML analysis, 47 used video recordings to provide context for NOMTS data processing. The video recording served several purposes, including providing time-stamps, enabling third-party expertise evaluation, contextualising non-visual data, and facilitating manual annotation of manoeuvres and gestures. Twenty-six experiments incorporated additional optical sensors for ML analysis, including red-green-blue (RGB) endoscopic cameras34,37,39,40,44,45,68, RBG cameras aimed the subject18,20,24,35,69,80, and infrared (IR) cameras94,95. Among these, 19 experiments required manual annotation34,35,37,59,66,80. However, five experiments aimed to train their algorithms to automatically segment image frames, using their annotations as ground truth verification31,59,66. Two studies trained their ML models exclusively on optical data before testing on NOMTS data94,95 (Table 2).

NOMTS sensor placement

Sensor placement varied across tasks within the studies, as detailed in Table 3, with studies exploring relevant sensor placement combinations for their tasks. One study highlighted the significance of shoulder joint metrics for laparoscopic skill assessment87, while another identified the most relevant sensors in a tracking glove for gesture and skill identification during tissue dissection tasks16. Additionally, another used an ML model to determine optimal EMG sensor placement for open, laparoscopic, and robotic tasks13.

When examining the influence of surgeon handedness, the dataset showed a predominance of right-handedness: among 106 experiments, only 10 included left-handed surgeons, 48 deliberately excluded them, and 48 provided no information. However, two studies augmented their data by hand inversion to simulate left-handed surgeons and pseudo-balance their dataset24,26. Loukas et al. evaluated task recognition for both left and right hands using a database consisting of right-handed individuals, revealing superior performance on the right hand due to its higher activity level and consequent abundance of data75. Two studies used only right-handed sensor gloves for data collection16,90. Furthermore, 89 of the 106 experiments analysed data from both hands, while 16 focused solely on one hand.

Sensor and data challenges

Several challenges were identified regarding sensor usage. Metallic interference affected data collection for both EM sensors71,76,80,82,83,84 and IMUs using magnetometers17. Increasing the distance between EM sensors and the magnetic source led to increased tracking error83. Some studies used isolation methods to limit EM sensor contact with metal71,80. Nguyen et al. excluded magnetometer data from IMU analysis, favouring accelerometer data over gyroscopic data for skill identification17. However, precise accelerometer, gyroscope, and magnetometer data are needed to compute roll, pitch, and yaw angles. Errors in these three propagate over time, causing a phenomenon known as drift96. Sang et al. experienced drift with their IMU57, and Brown et al. note the inability to estimate the yaw angle using acceleration data alone, suggesting future work with additional magnetometers and gyroscopes85.

Uncorrelated noise was observed in EM sensors82,83 and IMUs57. Sun et al. used an artificial neural network (ANN) to address random measurement errors in EM sensors by directly incorporating the sensors’ intrinsic characteristics83. Acquisition errors were also noted with EM sensors74, robotic kinematics52, and video cameras18.

EM71, flex71,90, and force58,80 sensors required calibration. Oquendo et al. calibrated their EM and flex sensor after every five participants to ensure correct positioning and angle recording71. Sbernini et al. chose to omit calibration of flex sensor voltage to specific angles to save time, instead using raw voltage measurements90. For force sensors, Song et al. used an electrical scale for calibration80, while Su et al. used singular value decomposition58.

Loukas et al. found interpreting waveform non-optical data alone challenging, preferring to have video recordings of the experiments to assist in data interpretation75. Sensor data may lack clarity compared to visual data, such as when identifying tools in use81. However, video data is also limited by visibility, lighting, image background, and camera placement81. An ML model combining video and EM data for tool tracking yielded poorer results on an animal dataset than on a phantom dataset due to blood obstruction of the video input19. Zhao et al. found kinematic data better for clustering in tool trajectory segmentation, as video data has unclear detail and less stability59. However, they found video data more necessary when analysing non-expert demonstrations. Murali et al. reported similar findings for surgical task segmentation66.

Some studies raised concerns about wearability and usability, reporting issues such as sensor detachment18 and wire clutter16,87.

Machine learning methods

Several studies have explored a variety of ML methods and their combinations. Among these, ANNs were the most popular (91 times), followed by support vector machines (SVM) (26 times), and k-nearest neighbours (kNN) (16 times). While SVMs have received consistent attention since 2010, recent research has increasingly focused on ANNs and other emerging methods (Fig. 3), a trend also observed by Buchlak et al4. and Lam et al. 8.

The varied goals and outputs of these ML models have led to a wide range of evaluation metrics being used by researchers. Mean accuracy was reported in 69.0% (58/84) of the studies primarily for skill assessment and/or feature detection with only five exceptions31,59,79,94,95. Researchers also used metrics such as mean error14,29,30,47,51,56,83,90, precision and recall13,17,21,23,26,31,36,44,61,64,67,74,75,84, F-1 score13,17,19,22,24,26,31,34,44,45,61,64,74,81,88,93, root mean square error29,35,57,58,79,83, sensitivity and specificity36,46,77,91, area under the curve26,36,68,70,91,93, and Jaccard index18,19,34. In terms of validation, 82.1% (69/84) of studies detailed their processes, with leave-one-user-out and k-fold splitting being the most common (Table 1).

ML task: Skill assessment

Surgical skill assessment, which evaluates task execution by surgeons, is the focus of most studies (32/84) (Table 1). Notably, 24 of these were published after 2015.

To train ML methods, surgeon skill levels were established using various assessment measures, such as self-reported experience metrics such as hours12,20,32,43,51,67 or years10,13,38,50 of experience, number of surgeries performed39,41,73,87,89,92,93, or status as a student, resident, or surgeon46,70,72,90,91. One study did not specify any criteria for skill48. Allen et al. found that some of their included novices were classified as experts by the ML model70. Similarly, two other studies found that the “misclassified” novices actually possessed the skills to be considered expert46,87.

Eleven studies used objective Global Rating Scale (GRS) systems: the Objective Structured Assessment of Technical Skills (OSATS) system71,86; a modified OSATS12,43,53; the Global Evaluative Assessment of Robotic Skills (GEARS)85; the Global Operative Assessment of Laparoscopic Skills (GOALS)40; the Robotic Anastomosis Competence Evaluation tool (RACE)68; a Cumulative Sum (CUSUM) analysis-based approach52; and custom scoring systems69,88. Wang et al. discovered that ML models matched GRS scores more accurately than self-reported skill levels43. However, Brown et al. found grading each trial time-consuming and maintaining calibration between reviewers challenging85. Kelly et al. only trained their ML model on the top and bottom 15% of graded trials40.

Almost half of the experiments (47.2%) are conducted within a robotic surgical context, ten in laparoscopic, and eight in open scenarios. Watson et al. designed a microsurgical vessel anastomosis task91. BB models were the most common surgical task (68.6%), particularly prevalent in robotic contexts (41.2%).

As shown in Table 1, motion tracking in 18 experiments used internally logged device kinematic data. Inertial sensors were used in nine experiments, with five using accelerometers12,13,85,86,87 and four using inertial measurement units88,89,90,91. Magnetic tracking systems were used in five experiments, and EMG sensors in one. Additionally, six studies used mechanical sensors, with four using them alongside other sensor types. Only one study used video footage as additional training data for ML models. However, 14 studies used video recordings to aid human analysis.

Across the 32 studies, 59 algorithm architectures were evaluated. The most common ML algorithm was ANN, appearing 16 times. SVM was used in eight architectures, while LR, RF, and kNN were each used six times. An ensemble approach, combining multiple methods, was noted in 59.4% of cases. Evaluation methods were detailed in 28 studies, with 25 reporting mean accuracy and two reporting mean error. Twelve studies achieved a maximum accuracy rate exceeding 90% (Table 1).

ML task: Feature detection

Feature detection, which identifies specific surgical tasks or motion components, was the primary focus of 22 studies (Table 1). Except for one, all studies used video, either to contextualise non-optical data or as training input for ML models (Table 2).

RNNs, especially LSTM14,37,45,74,81, were the most commonly used ML techniques in this context. Zheng et al. developed a method combining attention-based LSTM to distinguish normal and stressed trials with a simple LSTM to distinguish normal and stressed surgical movements74. Zia et al. combined a CNN-LSTM for creating video feature matrices with a separate LSTM for extracting kinematic features37. Two studies compared different RNNs for gesture identification14,81. Goldbraikh et al. suggested that an ANN for non-optical data could be smaller and faster than one for video data, facilitating easier real-time analysis81.

Only 14 studies used ML to break down surgical procedures into actionable steps, with all but two15,16 falling into the feature detection category14,37,45,54,66,75,81,84. This process, termed surgical process modelling, involves detecting and segmenting surgical steps97.

Among the 18 papers reporting mean accuracy54,74,81,84, Peng et al. achieved the highest at 97.5%, using a continuous HMM with DTW to segment DK motion data into a labelled sequence of surgical gestures62. Precision and recall were also evaluation metrics in six studies26,61,64,74,75,84. Loukas et al. achieved the best results, with 89% precision and 94% recall, focusing on surgical phase segmentation75.

ML task: Skill assessment and feature detection

This section of the systematic review covers 13 studies (Table 1). While skill assessment remains the primary focus, interest in utilising feature detection for skill evaluation is growing. Most experiments were conducted in a robotic setting, with BB tasks representing 72.2% of experiment designs. The most commonly used data sources were internal DK data and inertial sensors. Video recordings were utilised in 11 studies, but only one used them as ML input data (Table 2).

Zia et al. used only the OSATS scale to determine surgeon skill level18 whereas Nguyen et al. initially categorised participants by the number of procedures performed and then verified eligibility with the OSATS scale17. Two studies use the number of hours/surgeries performed44,49, four used the year of training or surgeon status15,36,77,78, and six did not specify how they determined skill levels15,16,33,55,60,76. However, King et al. found novices were more likely to be misclassified as experienced with each task attempt, indicating a learning curve16.

Twenty-eight distinct ML architectures were employed, with 60.7% (17/28) involving a feature detection algorithm followed by a skill classifier. Eleven studies used different types of ANNs for feature detection, while 13 employed SVM as the skill classifier. King et al. used HMM for surgical process modelling to classify specific surgical gestures in laparoscopy16, and Forestier et al. used SAX-VSM on the JIGSAWS database to classify higher level surgical manoeuvres15.

All studies reported mean accuracy except for two60,78, and only two provided separate accuracy scores for feature detection and skill assessment15,44. The remaining studies focused on identifying the best feature detection ML methods for accurate skill classification. Nguyen et al. achieved the highest overall accuracy of 98.4% when evaluating data from the JIGSAWS database17.

ML task: Tool segmentation and/or tracking

Tool segmentation and/or tracking, which involve accurately identifying and locating surgical instruments within the operative field, are discussed in 11 papers (Table 1). Most studies were conducted in robotic settings, focusing on BB or CM tasks with video input. In laparoscopic settings, Wang et al. conducted BB tasks82, while Lee et al. conducted both BB and CM tasks19. Three NCS used EM or DK sensors for tool localisation. All studies used ML models involving ANNs, while one also used Gaussian mixture and kNN regression methods79.

ML task: Undesirable motion filtration

Undesirable motion filtration algorithms aim to predict and remove detrimental surgical movement, such as tremors. Three studies focused on this task (Table 1), all conducted through NCS of surgical motion. While all utilised inertial sensors, one also included DK57. Two studies gathered training data using infrared technology and validated their tremor estimation and prediction algorithms with real-time accelerometer data94,95.

Sang et al. implemented a zero-phase adaptive fuzzy Kalman filter and experimentally validated its effectiveness57. Tatinati et al. introduced a moving window-based least squares SVM in 201595, later comparing it to a multidimensional robust extreme learning machine in 2017, achieving up to 81% accuracy94.

ML task: Other studies

The “other” category includes three studies with unique objectives not covered by the previous descriptions (Table 1). Su et al. used an ANN to provide robotic surgeons precise force feedback by measuring the force between tools and tissue, compensating for gravity on the robotic end-effector58. Song et al. used a fuzzy NN trained with video, force sensors, and EM tracking inputs to achieve accurate haptic modelling and simulation of surgical tissue cutting80. Sabique et al. used RNN methods with DK, force sensors, and video to investigate dimensionality reduction techniques for force estimation in robotic surgery35.

Quality Assessment

The average MERSQI score was 11.0, with scores ranging from 9.5 to 14. The highest achievable score is 18. Many studies were limited in score by their design as single-group studies conducted at a single institution, with outcomes solely from a test setting. The full table of scores can be found in Supplementary Table 1.

Discussion

This study reviewed the application of ML in analysing surgical motion captured through NOMTS. The findings indicate rapid growth in ML applications for surgical motion analysis and demonstrate the diverse applicability of NOMTS. However, challenges persist in data availability, practical implementation, and model development.

A critical constraint identified is the lack of large, open-source databases. Only 14 experiments used databases with more than 25 participants (Table 1). Most databases remain closed-source, hampering result validation and cross-study comparison. JIGSAWS, a widely-used open-source database, enables comparative analysis. However, its limitation to eight participants restricts the training and testing of ML models, particularly deep learning architectures that require substantial data for effective generalisation98.

The predominant reliance on BB task models, due to their ease of execution and data collection, limits the applicability of ML in real surgical contexts. While foundational, BB tasks fail to capture the complexity and unpredictability of real surgical procedures. Nevertheless, there are promising applications in surgical environments: Brown et al. achieved accuracy rates exceeding 90% in porcine prostatectomy experiments32, and Ahmidi et al. had similar success in septoplasty procedures72. Federated learning could enhance these efforts by enabling the use of decentralised data from multiple institutions while maintaining data privacy99. Future research should prioritise developing larger, standardised, open-source databases applicable to real surgical scenarios. This would enable more robust training, benchmarking, and comparison of ML models across diverse surgical environments.

Machine learning methods have shown potential in processing NOMTS data, particularly in detecting subtle patterns in surgical motion that are imperceptible to human observers. The multidimensional, time-series nature of NOMTS data presents challenges for traditional analysis methods. ML approaches like RNNs and transformers are particularly valuable due to their ability to capture sequential dependencies and handle unstructured information100.

Selecting appropriate ML models for NOMTS requires careful consideration of data characteristics. RNNs are useful for capturing the sequential nature of surgical motions101. CNNs, while traditionally used in image processing, can be adapted to handle spatial aspects of motion data27,98. Recent developments in hybrid architectures, such as combining CNNs for local feature extraction with RNNs for global sequence modelling, have shown promise in addressing both spatial and temporal dependencies37,102. Transformers offer advantages through parallel data processing, mitigating latency issues common in sequential models, and making them suitable for real-time surgical applications29. Additionally, they can capture motion patterns over extended periods100. This is important because predictive accuracy in surgery relies on recognising extended sequences of motion rather than just the most recent ones.

Task-specific considerations also influence model selection. Continuous motion prediction benefits from RNNs or hybrid models, while spatial relationship analysis may favour CNNs, such as in tracking the position of instruments. Hybrid models that integrate CNNs and RNNs provide the flexibility to handle both the spatial and temporal dimensions of surgical motion data. For skill assessment, sliding-scale models that move beyond binary classifications of novice or expert would enable more nuanced assessments of surgical ability. Notable insights for trainee education include observations that expert surgeons use certain motion classes less frequently with greater separability between motions54, and that needle driving tasks were more relevant for skill differentiation51. Furthermore, subjective skill labelling can misrepresent talented beginners and occasional expert errors43,46,70,87, leading to inaccurately labelled data and reduced ML model accuracy.

Preprocessing NOMTS data for use with ML models presents challenges. Sensors such as IMUs and EM sensors generate large volumes of high-frequency data with inherent noise46,54,57,75,84,94,95. Techniques such as Kalman filtering and down-sampling can help reduce noise and make the data more manageable87, but challenges remain for real-time applications.

Surgical procedures generate data from various sources like IMUs, EM sensors, and optical systems, each with different data formats and noise characteristics. Integrating these multimodal data streams into a coherent framework that supports real-time performance is challenging. Recent advancements in ML, especially transformer-based architectures, enable the parallel processing of large volumes of multimodal data without sacrificing accuracy or speed29,100. This capability is necessary for maintaining real-time performance in NOMTS applications, as it preserves the temporal relationships across different data streams and ensures data synchronisation.

Despite advances in ML, the field still faces challenges related to interpretability. Future research should rationalise decisions on ML model architecture and hyperparameter tuning to enhance interpretability among peers, promote collective advancement in the field, and ensure reproducibility. Improved interpretability would increase human trust in the algorithms. The field of Explainable Artificial Intelligence (XAI) is developing methods to increase the transparency of supervised ML techniques103. In the context of non-optical sensor time-series data, explainability techniques predominantly target sequence classification models. However, there is insufficient research addressing explainability in probabilistic regression models104.

ML holds potential for integration into clinical practice. Further development of training algorithms for future surgeons could reduce training time and identify underdeveloped skills. Intelligent surgical systems could also be developed as decision support tools, thereby reducing fatigue and improving outcomes. An underexplored area is the use of ML for surgical process modelling, which could reveal insights and patterns missed by humans, furthering understanding of these processes97. Utilising ML to split tasks into smaller granularity levels is a first step. The JIGSAWS database could be a good starting point as it provides labelled manoeuvres and gestures14,15.

While ML can enhance surgical performance and reduce the required training time, it should be viewed as an augmentation tool rather than a replacement for clinical expertise. Despite rapid advancements in technology and ML models, their utility is limited by the data they are trained on and may struggle in new, unforeseen situations. Given the complexities of medical practice, broader ML applications face challenges in effective implementation.

Over a third of studies (30/84) show accuracy rates exceeding 90%, demonstrating the potential effectiveness of ML in surgical motion analysis. However, this also highlights the early stage of development in this field.

In 79/84 studies, at least one performance metric was reported, and 69/84 provided information on the validation process of ML models. There is notable diversity in assessment and validation techniques due to different applications (Fig. 4). Studies focusing on skill assessment or feature detection typically report accuracy rates, while other categories use a wide range of metrics, posing challenges for cross-model comparisons. Standardising methods is challenging due to variations in database structures and the different approaches required by ML models. A potential solution is standardised benchmark datasets, such as JIGSAWS, enabling researchers to compare and evaluate models effectively.

Cross-validation techniques presented as technique description, (plus sign +) advantages, and (minus sign -) disadvantages. Consists of hold out17,19,28,31,33,43,44,46,51,59,73,82,85, k-fold10,15,18,27,37,42,49,52,53,57,70,79,84,89,91, stratified k-fold29, leave-one-out21,35,37,49,62,64,72,87,88,90,92,93,95, leave one user out18,20,23,31,34,35,39,41,42,48,56,68,74,75,77,89,96, leave one trial out26,75,89, leave one super-trial out26,35,36,38,39,46,47,56,58.

NOMTS offer benefits in surgical motion analysis. Prioritising research to address implementation challenges and find effective solutions is necessary to unlock their potential in surgical practice.

Synchronisation of multiple data sources is necessary for accurate, reliable, and useful data. It allows precise event sequencing, time series analysis, direct comparison between measurements, and facilitates temporal correlation by linking data from multiple sensors to specific events. This can be done by aligning common events observed in multiple data streams, but it may lead to timestamp misalignment. Fixing desynchronisation post-hoc may render data unusable if metadata is not available to synchronise timestamps across multiple sensor streams. A reliable approach is synchronisation upon acquisition105. This may motivate analysing robotic device kinematic data, as the system outputs consistent timestamps.

Manual annotation of events was often required for useful data; however, this was also seen for optical data18,19,37,80. Adding an optical data source may help interpret as non-visual data, which is not easily interpreted75.

Magnetic interference poses a challenge for IMUs and EM sensors, particularly in environments with metal and electronic equipment like operating rooms. Some studies isolated their tracking systems71,80 or avoided using magnetometers to address this issue17,90. While reducing magnetic interference in experimental settings may be feasible, addressing inaccuracies in clinical settings remains difficult. Future research should focus on developing solutions to mitigate these inaccuracies.

Variation in sensor placement is observed across studies and even within the same study18. Only three studies investigated the optimal sensor placement to maximise accuracy and minimize data volume13,16,87. The lack of consistency suggests further research into comparing sensor placement within trials to determine the best positioning. Improper sensor attachment could cause jerking and noise in the data18, highlighting the importance of secure attachment methods for consistent and accurate sensor placement to maintain data quality. Excluding left-handed data undermines non-bias and inclusivity, neglecting many left-handed or ambidextrous surgeons. Incorporating this data or using data augmentation techniques prevents biased outcomes and enhances generalisation to real-life scenarios. It also enables the development of more effective surgical tools and techniques, improving patient outcomes.

Integrating NOMTS into surgical practice faces notable legal and practical constraints. Devices used in operating rooms must undergo rigorous medical certification and not disrupt the surgical process. Incorporating NOMTS directly into surgical instruments, as seen in certain robotic and laparoscopic devices10,37,38, may offer a solution. One study used a force-sensing forceps with regulatory approval36, and EM systems are already used in catheter procedures106 and experimentally in live surgery72, suggesting that the adoption of NOMTS in surgery may be closer than anticipated.

Due to taxonomy variability within the ML field, not all relevant publications may have been identified. To mitigate this, the authors created search terms with an information specialist, utilised multiple databases spanning medical and technical domains, and explored references from included studies. As only English publications were included, potential language bias may exist.

The possibility of publication bias should be noted, as significant and positive work is more likely to be published107,108. Research with poor results often goes unpublished, possibly leading to an absence of failed attempts in this review. Grey literature was excluded to maintain data quality109, potentially omitting some valuable works. The scientific community should publish failed attempts and conference presentations, as these contribute to understanding in the field.

In conclusion, the integration of NOMTS and ML in surgical motion analysis represents a promising frontier for surgical advancement. The challenges outlined by this review serve as a roadmap for future research and highlight the importance of collaborative interdisciplinary efforts to shape the future of surgical training and performance.

Methods

Search strategy

A comprehensive literature search was conducted across several databases: Embase.com, MEDLINE ALL via Ovid, Web of Science Core Collection, CINAHL via EBSCOhost, and Scopus. The search strategy was developed and implemented by an experienced medical information specialist (WMB) at Erasmus Medical Center on August 23 2024. It was based on three primary concepts: (1) machine learning and artificial intelligence; (2) motion tracking; (3) surgery and surgeon. The search query, detailed in Supplementary Note 1, included relevant terms and their synonyms. All retrieved records were imported into EndNote software, where duplicates were removed using an established method110. Additionally, relevant supplementary references identified through backward snowballing bibliographic cross-referencing during the full-text screening stage were considered for further analysis111. The review and research protocol were not registered prior to study commencement.

Study selection

The inclusion criteria required the use of ML techniques to analyse surgical motion data acquired through NOMTS, either independently or in conjunction with optical tracking. In this work, surgical motion is defined as deliberate hand and/or instrument movements performed by surgeons to accomplish surgical tasks. This includes basic tasks like suturing and knot-tying, simulations, and real-life surgeries. Original studies published in peer-reviewed journals, written in English, and available in full-text were assessed for eligibility. Additionally, conference papers from three high-profile medical engineering conferences were included: the International Conference on Intelligent Robots and Systems, the International Conference on Robotics and Automation, and the Conference of the IEEE Engineering in Medicine and Biology Society. Reviews, case-reports, and commentaries were excluded, as well as publications prior to the year 2000 due to their dated relevance. The first reviewer (TZC) screened titles and abstracts to determine eligibility, and full-text versions of selected studies were sought for in-depth review. Any papers lacking an immediate determination of eligibility underwent a secondary review by other reviewers (CT, MG, DV).

Data extraction process

The primary objective of the systematic review was to outline the types and applications of ML models using NOMTS for surgical motion analysis and to pinpoint future directions for the field, addressing any challenges identified. Secondary objectives included identifying the surgical approach, setting, procedure type, and dataset composition. Additionally, the study aimed to identify the roles of optical sensors when used alongside NOMTS, evaluate the effectiveness of ML models in achieving their tasks, and document the performance metrics and cross-validation techniques employed. All study characteristics and outcome measures were extracted by the first reviewer (TZC).

Quality assessment

The Medical Education Research Study Quality Instrument (MERSQI)112 was used for quality and risk of bias assessment. The tool consists of six domains of study quality: (1) study design, (2) sampling, (3) type of data, (4) validity of evaluation instrument, (5) data analysis, (6) outcomes. Each domain has a maximum score of 3, leading to an overall maximum score of 18. The included articles were scored by the first reviewer (TZC).

Data availability

The data extracted during the current study is available from the corresponding author upon reasonable request.

Code availability

No code was used for this study.

References

Li, X. et al. Artificial intelligence-assisted reduction in patients’ waiting time for outpatient process: a retrospective cohort study. BMC Health Serv. Res. 21, 237 (2021).

Li, X. et al. Using artificial intelligence to reduce queuing time and improve satisfaction in pediatric outpatient service: A randomized clinical trial. Front. Pediatr.10, 929834 (2022).

Jordan, M. I. & Mitchell, T. M. Machine learning: trends, perspectives, and prospects. Science 349, 255–260 (2015).

Buchlak, Q. D. et al. Machine learning applications to clinical decision support in neurosurgery: an artificial intelligence augmented systematic review. Neurosurg. Rev. 43, 1235–1253 (2019).

Nichols, J. A., Herbert Chan, H. W. & Baker, M. A. B. Machine learning: applications of artificial intelligence to imaging and diagnosis. Biophys. Rev. 11, 111–118 (2019).

Alhasan, M. & Hasaneen, M. Digital imaging, technologies and artificial intelligence applications during COVID-19 pandemic. Computerized Med. Imaging Graph. 91, 101933 (2021).

Hunter, B., Hindocha, S. & Lee, R. W. The role of artificial intelligence in early cancer diagnosis. Cancers14, 1524 (2022).

Lam, K. et al. Machine learning for technical skill assessment in surgery: a systematic review. npj Digital Med. 5, 24 (2022).

Lee, D. et al. Evaluation of surgical skills during robotic surgery by deep learning-based multiple surgical instrument tracking in training and actual operations. J. Clin. Med. 9, 1964 (2020).

Hung, A. J. et al. A deep-learning model using automated performance metrics and clinical features to predict urinary continence recovery after robot-assisted radical prostatectomy. BJU Int. 124, 487–495 (2019).

Saun, T. J., Zuo, K. J. & Grantcharov, T. P. Video technologies for recording open surgery: a systematic review. Surgical Innov. 26, 599–612 (2019).

Albasri, S., Popescu, M., Ahmad, S. & Keller, J. Procrustes dynamic time wrapping analysis for automated surgical skill evaluation. Adv. Sci., Technol. Eng. Syst. J. 6, 912–921 (2021).

Soangra, R., Sivakumar, R., Anirudh, E. R., Yedavalli, S. V. R. & Emmanuel, B. J. Evaluation of surgical skill using machine learning with optimal wearable sensor locations. PLoS ONE 17, e0267936 (2022).

DiPietro, R. et al. Segmenting and classifying activities in robot-assisted surgery with recurrent neural networks. Int. J. Computer Assist. Radiol. Surg. 14, 2005–2020 (2019).

Forestier, G. et al. Surgical motion analysis using discriminative interpretable patterns. Artif. Intell. Med. 91, 3–11 (2018).

King, R. C., Atallah, L., Lo, B. P. L. & Yang, G.-Z. Development of a wireless sensor glove for surgical skills assessment. IEEE Trans. Inf. Technol. Biomed.13, 673–679 (2009).

Nguyen, X. A., Ljuhar, D., Pacilli, M., Nataraja, R. M. & Chauhan, S. Surgical skill levels: Classification and analysis using deep neural network model and motion signals. Comput. Methods Prog. Biomed.177, 1–8 (2019).

Zia, A., Sharma, Y., Bettadapura, V., Sarin, E. L. & Essa, I. Video and accelerometer-based motion analysis for automated surgical skills assessment. Int. J. Comput. Assist. Radiol. Surg. 13, 443–455 (2018).

Lee, E. J., Plishker, W., Liu, X., Bhattacharyya, S. S. & Shekhar, R. Weakly supervised segmentation for real-time surgical tool tracking. Healthc. Technol. Lett. 6, 231–236 (2019).

Zhang, D. et al. Automatic microsurgical skill assessment based on cross-domain transfer learning. IEEE Robot. Autom. Lett. 5, 4148–4155 (2020).

Ahmidi, N. et al. A dataset and benchmarks for segmentation and recognition of gestures in robotic surgery. IEEE Trans. Biomed. Eng. 64, 2025–2041 (2017).

van Amsterdam, B. et al. Gesture recognition in robotic surgery with multimodal attention. ieee trans. Med. Imaging 41, 1677–1687 (2022).

Gao, Y. et al. Unsupervised surgical data alignment with application to automatic activity annotation. In: 2016 IEEE Int. Conf. on Robotics and Automation (ICRA) 4158–4163. (Curran Associates, Inc., Stockholm, Sweden, 2016).

Goldbraikh, A., Shubi, O., Rubin, O., Pugh, C. M. & Laufer, S. MS-TCRNet: Multi-Stage Temporal Convolutional Recurrent Networks for action segmentation using sensor-augmented kinematics. Pattern Recognit. 156, 110778 (2024).

Itzkovich, D., Sharon, Y., Jarc, A., Refaely, Y. & Nisky, I. Using augmentation to improve the robustness to rotation of deep learning segmentation in robotic-assisted surgical data. In: 2019 Int. Conf. on Robotics and Automation (ICRA) 5068–5075 (Curran Associates, Inc., Montreal, QC, Canada, 2019).

Itzkovich, D., Sharon, Y., Jarc, A., Refaely, Y. & Nisky, I. Generalization of Deep Learning Gesture Classification in Robotic-Assisted Surgical Data: From Dry Lab to Clinical-Like Data. IEEE J. Biomed. Health Inform. 26, 1329–1340 (2022).

Long, Y. et al. Relational graph learning on visual and kinematics embeddings for accurate gesture recognition in robotic surgery. In: 2021 IEEE Int. Conf. on Robotics and Automation (ICRA) 13346–13353 (Institute of Electrical and Electronics Engineers Inc., Xi'an, China, 2021).

Qin, Y. et al. Temporal segmentation of surgical sub-tasks through deep learning with multiple data sources. In: 2020 IEEE Int. Conf. on Conference on Robotics and Automation (ICRA) 371–377 (IEEE Press, Paris, France, 2020).

Qin, Y., Feyzabadi, S., Allan, M., Burdick, J. W. & Azizian, M. daVinciNet: Joint prediction of motion and surgical state in robot-assisted surgery. In: 2020 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS) 2921–2928 (IEEE Press, Las Vegas, NV, USA (Virtual), 2020).

Pachtrachai, K., Vasconcelos, F., Edwards, P. & Stoyanov, D. Learning to calibrate—estimating the hand-eye transformation without calibration objects. IEEE Robot. Autom. Lett. 6, 7309–7316 (2021).

Rocha, C. d. C., Padoy, N. & Rosa, B. Self-supervised surgical tool segmentation using kinematic information. In: 2019 Int. Conf. on Robotics and Automation (ICRA) 8720–8726 (IEEE, Montreal, QC, Canada, 2019).

Brown, K. C., Bhattacharyya, K., Kulason, S., Zia, A. & Jarc, A. How to Bring Surgery to the Next Level: Interpretable Skills Assessment in Robotic-Assisted Surgery. Visc. Med. 36, 463–470 (2020).

Rosen, J., Hannaford, B., Richards, C. G. & Sinanan, M. N. Markov modeling of minimally invasive surgery based on tool/tissue interaction and force/torque signatures for evaluating surgical skills. IEEE Trans. Biomed. Eng. 48, 579–591 (2001).

Liu, J. et al. Visual-kinematics graph learning for procedure-agnostic instrument tip segmentation in robotic surgeries. In: 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2023) (IEEE Press, 2023).

Sabique, P. V., Pasupathy, G., Ramachandran, S. & Shanmugasundar, G. Investigating the influence of dimensionality reduction on force estimation in robotic-assisted surgery using recurrent and convolutional networks. Eng. Appl. Artif. Intell. 126, 107045 (2023).

Baghdadi, A., Lama, S., Singh, R. & Sutherland, G. R. Tool‑tissue force segmentation and pattern recognition for evaluating neurosurgical performance. Sci. Rep. 13, 9591 (2023).

Zia, A., Guo, L., Zhou, L., Essa, I. & Jarc, A. Novel evaluation of surgical activity recognition models using task-based efficiency metrics. Int. J. Comput. Assist. Radiol. Surg. 14, 2155–2163 (2019).

Hung, A. J. et al. Utilizing Machine Learning and Automated Performance Metrics to Evaluate Robot-Assisted Radical Prostatectomy Performance and Predict Outcomes. J. Endourol. 32, 438–444 (2018).

Chen, A. B., Liang, S., Nguyen, J. H., Liu, Y. & Hung, A. J. Machine learning analyses of automated performance metrics during granular sub-stitch phases predict surgeon experience. Surgery 169, 1245–1249 (2021).

Kelly, J. D., Petersen, A., Lendvay, T. S. & Kowalewski, T. M. Bidirectional long short-term memory for surgical skill classification of temporally segmented tasks. Int. J. Comput. Assist. Radiol. Surg. 15, 2079–2088 (2020).

Uemura, M. et al. Feasibility of an AI-based measure of the hand motions of expert and novice surgeons. Comput. Math. Methods Med. 2018, 9873273 (2018).

Gao, Y. et al. JHU-ISI Gesture and Skill Assessment Working Set (JIGSAWS): a surgical activity dataset for human motion modeling. Modeling and Monitoring of Computer Assisted Interventions (M2CAI)—MICCAI Workshop. https://cirl.lcsr.jhu.edu/research/hmm/datasets/jigsaws_release/ (2014).

Wang, Z. & Majewicz Fey, A. Deep learning with convolutional neural network for objective skill evaluation in robot-assisted surgery. Int. J. Comput. Assist. Radiol. Surg. 13, 1959–1970 (2018).

Wang, Z. & Fey, A. M. SATR-DL: Improving surgical skill assessment and task recognition in robot-assisted surgery with deep neural networks. In: 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) 1793–1796 (IEEE, Honolulu, HI, USA, 2018).

van Amsterdam, B., Clarkson, M. J. & Stoyanov, D. Multi-task recurrent neural network for surgical gesture recognition and progress prediction. In: 2020 IEEE International Conference on Robotics and Automation (ICRA) 1380–1386 (IEEE, Paris, France, 2020).

Bissonnette, V. et al. Artificial intelligence distinguishes surgical training levels in a virtual reality spinal task. J. Bone Jt. Surg. 101, e127 (2019).

Korte, C., Schaffner, G. & McGhan, C. L. R. A preliminary investigation into the feasibility of semi-autonomous surgical path planning for a mastoidectomy using LSTM-recurrent neural networks. J. Med. Devices 15, 011001 (2021).

Megali, G., Sinigaglia, S., Tonet, O. & Dario, P. Modelling and evaluation of surgical performance using hidden Markov models. IEEE Trans. Biomed. Eng. 53, 1911–1919 (2006).

Topalli, D. & Cagiltay, N. E. Classification of intermediate and novice surgeons’ skill assessment through performance metrics. Surg. Innov. 26, 621–629 (2019).

Baghdadi, A., Hoshyarmanesh, H., de Lotbiniere-Bassett, M. P., Choi, S. K. & Sutherland, G. R. Data analytics interrogates robotic surgical performance using a microsurgery-specific haptic device. Expert Rev. Med. Devices 17, 721–730 (2020).

Li, K. & Burdick, J. W. Human motion analysis in medical robotics via high-dimensional inverse reinforcement learning. Int. J. Robot. Res. 39, 568–585 (2020).

Lyman, W. B. et al. An objective approach to evaluate novice robotic surgeons using a combination of kinematics and stepwise cumulative sum (CUSUM) analyses. Surg. Endosc. 35, 2765–2772 (2021).

Fard, M. J. et al. Automated robot-assisted surgical skill evaluation: Predictive analytics approach. Int. J. Med. Robot. Comput. Assist. Surg. 14, e1850 (2018).

Lin, H. C., Shafran, I., Yuh, D. & Hager, G. D. Towards automatic skill evaluation: Detection and segmentation of robot-assisted surgical motions. Comput.Aided Surg. 11, 220–230 (2006).

Anh, N. X., Nataraja, R. M. & Chauhan, S. Towards near real-time assessment of surgical skills. Comput. Methods Prog. Biomed.187, 105234 (2020).

Shu, X., Chen, Q. & Xie, L. A novel robotic system for flexible ureteroscopy. Int. J. Med. Robot. Comput. Assist. Surg. 17, e2191 (2021).

Sang, H. et al. A zero phase adaptive fuzzy Kalman filter for physiological tremor suppression in robotically assisted minimally invasive surgery. Int. J. Med. Robot. Comput. Assist. Surg. 12, 658–669 (2016).

Su, H. et al. Neural Network Enhanced Robot Tool Identification and Calibration for Bilateral Teleoperation. IEEE Access 7, 122041–122051 (2019).

Zhao, H. et al. A fast unsupervised approach for multi-modality surgical trajectory segmentation. IEEE Access 6, 56411–56422 (2018).

Reiley, C. E., Plaku, E. & Hager, G. D. Motion generation of robotic surgical tasks: Learning from expert demonstrations. In: Annual Int. Conf. of IEEE Engineering in Medicine and Biology Society 967–970 (IEEE, Buenos Aires, Argentina, 2010).

Despinoy, F. et al. Unsupervised Trajectory Segmentation for Surgical Gesture Recognition in Robotic Training. IEEE Trans. Biomed. Eng. 63, 1280–1291 (2016).

Peng, W., Xing, Y., Liu, R., Li, J. & Zhang, Z. An automatic skill evaluation framework for robotic surgery training. Int. J. Med. Robot. Computer Assist. Surg. 15, e1964 (2019).

van Amsterdam, B., Nakawala, H., Momi, E. D. & Stoyanov, D. Weakly Supervised Recognition of Surgical Gestures. In: 2019 International Conference on Robotics and Automation (ICRA) 9565–9571 (IEEE, Montreal, QC, Canada, 2019).

Fard, M. J., Ameri, S., Chinnam, R. B. & Ellis, R. D. Soft Boundary Approach for Unsupervised Gesture Segmentation in Robotic-Assisted Surgery. Ieee Robot. Autom. Lett. 2, 171–178 (2016).

Lea, C., Vidal, R. & Hager, G. D. Learning Convolutional Action Primitives for Fine-grained Action Recognition. In: 2016 IEEE Int. Conf. on Robotics and Automation (ICRA) 1642–1649 (IEEE, Stockholm, Sweden, 2016).

Murali, A. et al. TSC-DL: Unsupervised Trajectory Segmentation of Multi-Modal Surgical Demonstrations with Deep Learning. In: 2016 IEEE International Conference on Robotics and Automation (ICRA) 4150–4157 (IEEE, Stockholm, Sweden, 2016).

Jog, A. et al. Towards integrating task information in skills assessment for dexterous tasks in surgery and simulation. In: 2011 IEEE International Conference on Robotics and Automation 5273–5278 (IEEE, Shanghai, China, 2011).

Hung, A. J. et al. Road to automating robotic suturing skills assessment: Battling mislabeling of the ground truth. Surgery 171, 915–919 (2022).

Sewell, C. et al. Providing metrics and performance feedback in a surgical simulator. Comput.Aided Surg. 13, 63–81 (2008).

Allen, B. et al. Support vector machines improve the accuracy of evaluation for the performance of laparoscopic training tasks. Surg. Endosc. 24, 170–178 (2010).

Oquendo, Y. A., Riddle, E. W., Hiller, D., Blinman, T. A. & Kuchenbecker, K. J. Automatically rating trainee skill at a pediatric laparoscopic suturing task. Surg. Endosc. 32, 1840–1857 (2018).

Ahmidi, N. et al. Automated objective surgical skill assessment in the operating room from unstructured tool motion in septoplasty. Int. J. Comput. Assist. Radiol. Surg. 10, 981–991 (2015).

Jiang, J., Xing, Y., Wang, S. & Liang, K. Evaluation of robotic surgery skills using dynamic time warping. Comput. Methods Prog. Biomed.152, 71–83 (2017).

Zheng, Y., Leonard, G., Zeh, H. & Fey, A. M. Frame-wise detection of surgeon stress levels during laparoscopic training using kinematic data. Int. J. Comput. Assist. Radiol. Surg. 17, 785–794 (2022).

Loukas, C. & Georgiou, E. Surgical workflow analysis with Gaussian mixture multivariate autoregressive (GMMAR) models: a simulation study. Comput. Aided Surg. 18, 47–62 (2013).

Ershad, M., Rege, R. & Majewicz Fey, A. Automatic and near real-time stylistic behavior assessment in robotic surgery. Int. J. Comput. Assist. Radiol. Surg. 14, 635–643 (2019).

Loukas, C. & Georgiou, E. Multivariate Autoregressive Modeling of Hand Kinematics for Laparoscopic Skills Assessment of Surgical Trainees. IEEE Trans. Biomed. Eng. 58, 3289–3279, (2011).

Loukas, C., Rouseas, C. & Georgiou, E. The role of hand motion connectivity in the performance of laparoscopic procedures on a virtual reality simulator. Med. Biol. Eng. Comput. 51, 911–922 (2013).

Xu, W., Chen, J., Lau, H. Y. K. & Ren, H. Data-driven methods towards learning the highly nonlinear inverse kinematics of tendon-driven surgical manipulators. Int. J. Med. Robot. Comput. Assist. Surg. 13, e1774 (2017).

Song, W. G., Yuan, K. & Fu, Y. J. Haptic Modeling and Rendering Based on Neurofuzzy Rules for Surgical Cutting Simulation. Acta Autom. Sin. 32, 193–199 (2006).

Goldbraikh, A., Volk, T., Pugh, C. & Laufer, S. Using open surgery simulation kinematic data for tool and gesture recognition. Int. J. Comput. Assist. Radiol. Surg. 17, 965–979 (2022).

Wang, Z., Yan, Z., Xing, Y. & Wang, H. Real-time trajectory prediction of laparoscopic instrument tip based on long short-term memory neural network in laparoscopic surgery training. Int. J. Med. Robot. Comput. Assist. Surg. 18, e2441 (2022).

Sun, Z., Maréchal, L. & Foong, S. Passive magnetic-based localization for precise untethered medical instrument tracking. Comput. Methods Prog. Biomed.156, 151–161 (2018).

Meißner, C., Meixensberger, J., Pretschner, A. & Neumuth, T. Sensor-based surgical activity recognition in unconstrained environments. Minim. Invasive Ther. Allied Technol. 23, 198–205 (2014).

Brown, J. D. et al. Using contact forces and robot arm accelerations to automatically rate surgeon skill at peg transfer. IEEE Trans. Biomed. Eng. 64, 2263–2275 (2017).

Khan, A. et al. Generalized and efficient skill assessment from IMU data with applications in gymnastics and medical training. ACM Trans. Comput. Healthc. 2, 1–21 (2020).

Lin, Z. et al. Objective skill evaluation for laparoscopic training based on motion analysis. IEEE Trans. Biomed. Eng. 60, 977–985 (2013).

Laverde, R., Rueda, C., Amado, L., Rojas, D. & Altuve, M. Artificial Neural Network for Laparoscopic Skills Classification Using Motion Signals from Apple Watch. In: 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) 5434–5437 (IEEE, Honolulu, HI, USA, 2018).

Lin, Z. et al. Waseda Bioinstrumentation system WB-3 as a wearable tool for objective laparoscopic skill evaluation. In: 2011 IEEE International Conference on Robotics and Automation 5737–5742 (IEEE, Shanghai, China, 2011).

Sbernini, L. et al. Sensory-glove-based open surgery skill evaluation. IEEE Trans. Hum.-Mach. Syst. 48, 213–218 (2018).

Watson, R. A. Use of a machine learning algorithm to classify expertise: analysis of hand motion patterns during a simulated surgical task. Academic Med.: J. Assoc. Am. Med. Coll. 89, 1163–1167 (2014).

Horeman, T., Rodrigues, S. P., Jansen, F. W., Dankelman, J. & van den Dobbelsteen, J. J. Force parameters for skills assessment in laparoscopy. IEEE Trans. Haptics 5, 312–322, (2012).

Xu, J. et al. A deep learning approach to classify surgical skill in microsurgery using force data from a novel sensorised surgical glove. Sensors 23, 8947 (2023).

Tatinati, S., Nazarpour, K., Ang, W. T. & Veluvolu, K. C. Multi-dimensional modeling of physiological tremor for active compensation in hand-held surgical robotics. IEEE Trans. Ind. Electron. 64, 1645–1655 (2017).

Tatinati, S., Veluvolu, K. C. & Ang, W. T. Multistep prediction of physiological tremor based on machine learning for robotics assisted microsurgery. IEEE Trans. Cybern. 45, 328–339 (2015).

Wittmann, F., Lambercy, O. & Gassert, R. Magnetometer-based drift correction during Rest in IMU Arm Motion Tracking. Sensors 19, 1312 (2019).

Gholinejad, M., Loeve, A. J. & Dankelman, J. Surgical process modelling strategies: which method to choose for determining workflow? Minim. Invasive Ther. Allied Technol. 28, 91–104 (2019).

Esteva, A. et al. A guide to deep learning in healthcare. Nat. Med. 25, 24–29 (2019).

Daly, K. et al. Federated learning in practice: reflections and projections. Preprint at https://arxiv.org/abs/2410.08892 (2024).

Vaswani, A. et al. Attention is all you need. In Advances in Neural Information Processing Systems (NeurIPS) Vol. 30 (eds I. Guyon et al.) (Curran Associates, Inc., 2017).

Lipton, Z. C. A critical review of recurrent neural networks for sequence learning. Preprint at https://arxiv.org/abs/1506.00019 (2015).

Bai, S., Kolter, J. Z. & Koltun, V. An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. Preprint at https://arxiv.org/abs/1803.01271 (2018).

Allgaier, J., Lena, L., Draelos, R. L. & Pryss, R. How does the model make predictions? A systematic literature review on the explainability power of machine learning in healthcare. Artif. Intell. Med. 143, 102616 (2023).

Raman, C., Nonnemaker, A., Villegas-Morcillo, A., Hung, H. & Loog, M. Why did this model forecast this future? Information-theoretic saliency for counterfactual explanations of probabilistic regression models. In Advances in Neural Information Processing Systems Vol. 36 (eds A. Oh et al.) 33222–33240 (Curran Associates, Inc., 2023).

Raman, C., Tan, S. & Hung, H. A modular approach for synchronized wireless multimodal multisensor data acquisition in highly dynamic social settings. In Proceedings of the 28th ACM International Conference on Multimedia 3586–3594 (Association for Computing Machinery, Seattle, WA, USA, 2020).

Ramadani, A. et al. A survey of catheter tracking concepts and methodologies. Med. Image Anal. 82, 102584 (2022).

Chan, A., Hróbjartsson, A., Haahr, M. T., Gøtzsche, P. C. & Altman, D. G. Empirical Evidence for Selective Reporting of Outcomes in Randomized Trials. JAMA 291, 2457–2465 (2004).

Song, F. et al. Extent of publication bias in different categories of research cohorts: a meta-analysis of empirical studies. BMC Med. Res. Methodol. 9, 79 (2009).

Egger, M., Juni, P., Bartlett, C., Holenstein, F. & Sterne, J. How important are comprehensive literature searches and the assessment of trial quality in systematic reviews? Empirical study. Health Technol. Assess. 7, 1–76 (2003).

Bramer, W. M., Giustini, D., Jonge, G. B. D., Holland, L. & Bekhuis, T. De-duplication of database search results for systematic reviews in EndNote. J. Med. Libr. Assoc.104, 240–243 (2016).

Jalali, S. & Wohlin, C. Systematic literature studies: Database searches vs. backward snowballing. In: Proc. ACM-IEEE International Symposium on Empirical Software Engineering and Measurement 29-38 (IEEE, Lund, Sweden, 2012).

Reed, D. A. et al. Association between funding and quality of published medical education research. JAMA 298, 1002–1009 (2007).

Acknowledgements

T.Z.C., C.M.T., M.F., J.D., C.M.F.D., M.G., and D.V. disclose support for this work within DiMiRoS project from Health&Holland-TKI [grant number EMCLSH21018].

Author information

Authors and Affiliations

Contributions

T.Z.C.: conceptualised and designed the work, acquired and interpreted data, prepared figures and tables, drafted and edited the work. C.M.T.: conceptualised and designed the work, acquired and interpreted data, prepared figures and tables, edited the work. M.F.: conceptualised and edited the work. WMB: designed the work and acquired data. J.D., C.R., C.M.F.D.: conceptualised and edited the work. M.G., D.V.: conceptualised and designed the work, prepared figures and tables, edited the work.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Carciumaru, T.Z., Tang, C.M., Farsi, M. et al. Systematic review of machine learning applications using nonoptical motion tracking in surgery. npj Digit. Med. 8, 28 (2025). https://doi.org/10.1038/s41746-024-01412-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41746-024-01412-1