Abstract

Visual monitoring of pre-term infants in intensive care is critical to ensuring proper development and treatment. Camera systems have been explored for this purpose, with human pose estimation having applications in monitoring position, motion, behaviour and vital signs. Validation in the full range of clinical visual scenarios is necessary to prove real-life utility. We conducted a clinical study to collect RGB, depth and infra-red video from 24 participants with no modifications to clinical care. We propose and train image fusion pose estimation algorithms for locating the torso key-points. Our best-performing approach, a late fusion method, achieves an average precision score of 0.811. Chest covering or side lying decrease the object key-point similarity score by 0.15 and 0.1 respectively, while accounting for 50% and 44% of the time. The baby’s positioning and covering supports their development and comfort, and these scenarios should therefore be considered when validating visual monitoring algorithms.

Similar content being viewed by others

Introduction

The neonatal period—the first 28 days of life1—is among the most critical in human development. Pre-term infants experience increased risk of short- and long-term complications2,3,4, while neonatal mortality accounts for almost half of all under-5 mortality5. In the UK, around 100,000 babies—approximately one out of every seven—will spend time in neonatal care every year6. In the neonatal intensive care unit (NICU), the highest dependency care, clinical staff are responsible for multiple babies, supported by patient monitors with continuous vital sign monitoring. However, they are unable to provide continuous visual monitoring—each nurse’s attention is divided between patients, while spending time writing notes, preparing feeds and carrying out their other clinical responsibilities. To address this monitoring gap, cameras, coupled with image processing and intelligent decision tools can be employed to identify critical situations7.

Continuous pose estimation has a range of applications in supporting neonatal care. Low frame-rate analysis, (e.g. once per second) could monitor the baby’s position and ensure that positioning guidelines8,9 are followed. It can also provide a reference point for vital sign monitoring algorithms10,11, especially those that rely on a manually selected region of interest12,13,14, or for motion detection algorithms to distinguish localized and gross body movement15,16,17. High frame-rate analysis can provide more detailed information about a baby’s movements, suitable for automatic General Movements Assessment (GMA)18,19, cerebral dysfunction, or monitoring sedation20.

Existing work in this area is predominantly focussed on controlled environments—well-lit, with babies uncovered and clearly visible, which is ideal for image analysis. In reality, the neonatal incubator can be a visually busy and varied environment, with monitoring equipment, blankets, clothing and nurses’ hands cluttering the scene, making pose estimation difficult. Understanding the performance of computer vision algorithms in this environment is a gap in existing literature. In this paper, we explore several approaches for pose estimation in the clinical environment combining RGB, depth and infra-red imaging. We investigate the covering and position of the babies during the recordings, and evaluate the pose estimation approaches across the different imaging scenarios. Our data includes multiple 24-hour recordings, during which nurses were instructed to continue delivering care as though the camera was not present.

Adult pose estimation is an extensively studied field, summarized by Munea et al. in a review for 2D21 and by Wang et al. for 3D pose estimation22, and further by Gamra et al. in 202123, and Zheng et al. in 202324. The two primary datasets for adult pose estimation are MPII Human Pose25, assessed using the percentage of correct keypoints (PCKh) metric, and MS COCO26, assessed using the average precision (AP) metric. The HRNet27,28 backbone yields promising results for the MPII dataset24, with PCKh@0.5=92.3. In addition, many subsequent state-of-the-art models are based on an HRNet backbone with additional enhancements29,30,31. The vision transformer, specifically ViTPose ViTAE-G,32 achieves state-of-the-art performance on the COCO dataset, albeit with vastly more parameters than comparable models. Smaller ViTPose-based models also achieve promising results. Other transformers, such as Swin33,34 and HRFormer35 are also successful. In our work, we use transfer learning from pose estimation models designed for adults and older infants, which are available in the MMPose model zoo36. The MMPose model zoo provides pre-trained models models and training configurations, with readily comparable scores. We use the HRNet model (up to AP=0.767), HRFormer (up to AP=0.774), and ViTPose (sizes S ‘small’ and B ‘base’, AP=0.739 and 0.757 respectively).

Pre-trained models from adults have been frequently used for infant pose estimation and deliver promising results. Huang et al.37 achieved an AP of 93.6 using the publicly available infant image dataset MINI-RGBD38. This dataset uses synthetic images of infants above term age superimposed on patterned backgrounds. Gross et al.39 used 1424 videos from an infant pose dataset and OpenPose40 pre-trained on MPII, achieving 99.61% PCKh@0.5, including a mixture of hospital and home recordings. These recordings were also of post-term age infants on plain backgrounds. Moccia et al. use a depth camera in clinical conditions, achieving a median root mean square (RMS) distance of limbs (limbs being a group of three joints) - of 10.2 pixels, across all limbs41, and subsequently depth video with temporal processing from 16 preterm infants with a median RMS distance of 9.06 pixels42. Further work43,44,45 continues improve neonatal pose estimation, though it is found that generalizability across neonatal datasets is poor. This behaviour is also found by Jahn et al.46, who show that retraining on one’s own infant dataset improves performance on that particular dataset, it fails to generalize to other infant datasets where it is outperformed by a generic adult model.

These datasets differ in terms of both the ages of the subjects, ranging from pre-term newborns to old babies, and the recording environment. The BabyPose dataset47 contains depth images of babies in incubators, our target demographic, but babies are uncovered and their limbs are clearly visible. The MINI-RGBD dataset38 is a synthetic dataset with generated images imposed over real baby poses. Each has a plain background. No existing dataset includes images of babies in the presence of blanket covering or clinical interventions. Understanding the performance of these algorithms in this environment is needed to demonstrate feasibility for continuous clinical use. Datasets used in the literature are summarized in Table 1. We distinguish between an infant—a baby who is post-term age, and may be able to move and crawl unaided, and a neonate—a new-born baby, who has yet to be discharged from hospital. Only the BabyPose dataset contains neonates in their NICU cots. The work in this paper focusses on pre-term neonates.

To overcome this challenge, the fusion of different camera types may increase reliability48 because each delivers different information which could not be extracted from a single signal49. For example, although depth imaging does not see patterns and similar features, it is unaffected by poor lighting conditions. Although existing work has included either RGB or depth images, to our knowledge no work has attempted to combine multiple image types within the same framework.

We recorded 24 babies using a calibrated RGB, depth and IR camera system during 1-hour and 24-hour sessions in the neonatal intensive care unit at Addenbrooke’s Hospital, Cambridge, UK. These recordings were in the normal clinical settings, and the 24-hour recordings included real interventions, light changes, movement and interactions with parents. This paper investigates the performance of signal fusion approaches for combining the three imaging methods in the unaltered clinical environment. In summary, we make the following contributions:

-

An assessment of the types of scenes found in the normal NICU clinical environment, and the performance of pose estimation algorithms.

-

Proposal and comparison of methods for combining RGB, depth and IR imaging for pose estimation, where earlier stages are separate (image-specific) and later stages are shared.

-

Comparison of neonatal and infant datasets, finding that models trained on our dataset show good generalization to other NICU data, but poor generalization to older infant and adult data.

Results

Dataset

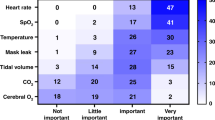

The data collection study consists of our 1-hour recordings, testing the clinical set-up, and 24-hour recordings, which we use to analyse the clinical environment. Each recording contains RGB, depth and infra-red images. The distribution of the baby’s position across the 24-hour recordings is shown in Fig. 1, arranged by position and covering. We find that babies are typically less covered during interventions and that the uncovered/half covered period, when the baby is easily visible, accounts for approximately half of the time. Babies are typically more covered when on their side. The supine, uncovered scenario is around 15% of the dataset (excluding interventions) and around 10% (including interventions). The complete distribution for the 1-hour dataset and the 1-hour plus 24-hour dataset is provided in the Supplementary Information (Supplementary Section 1), Supplementary Figs. 1-3 and Supplementary Tables 1 and 2.

Model evaluation

In addition to Single-Image models, which use only one image type (RGB, depth, or IR), we analyse three fusion methods. Early Image Fusion models (EIF) stack the image along the channel dimension at the input, with up to five color channels (RGB-D-IR), or a combination thereof. Intermediate Image Fusion models (IIF-X) have X separate stages that are specific to each image type, after which the feature maps are summed and input to a single set of shared layers. Late Image Fusion models (LIF-X) also have X separate stages followed by the remaining shared stages, but the heatmaps from each image are only combined after the key-point head. IIF and LIF fusion methods are tested from X = 1 to 4, representing separate/shared division after each of the four blocks of HRNet and HRFormer. All models are trained and tested using five-fold cross-validation. More detailed explanation of the models is provided in Section “Pose Estimation Models”.

Table 2 shows the AP scores for the models using the HRNet backbone. Scores are presented as mean ± standard deviation across the five folds. For 10 of the 15 models, models with input size 384 × 384 outperformed those with input size 256 × 256. The HRNet W48 model outperforms the W32 model in 11 of the 15 models. Table 3 shows the scores for the HRFormer and vision transformer backbones. The HRFormer and HRNet models achieve similar scores, though the ViTPose-based models are substantially worse, so several additional experiments are conducted for the depth-only ViTPose-B model to understand if this is due to the experimental configuration. For the depth-only model (AP=0.741), we find that freezing half of the stages reduces performance (AP=0.721) and freezing only the multi-head self-attention module as suggested by the original authors32 offers only marginal improvement (AP=0.742). This improved score does not exceed other backbones. Training curves over the 50 epochs are shown in Figure 2 for the HRNet-W48 LIF-3 and HRFormer-B IIF-2 model, with no noticeable improvement on the test sets after 30 epochs.

Inference time

The inference cost and number of model parameters are given in Fig. 3. Table 4 shows the inference latency in milliseconds for each model across three hardware specifications, reflecting different possible applications: MacBook Air (Apple M2 processor, CPU), for processing on a cot-side iPad or mini PC; a desktop computer with a modest GPU (NVIDIA RTX 3060Ti, CUDA), acting as a local computer away from the cot; a high-performance server (NVIDIA A100 80GB, CUDA) for off-site analysis. Models suitable for real-time (for continuous motion analysis) and 1 frame-per-second inference (for position summaries or vital sign monitoring) are highlighted, thought it should be noted that an off-site server would likely process data from multiple cameras at once time. Results are given for multiple batch sizes where applicable. The HRNet-W48 LIF-3 model achieves the highest performance, at the cost of the highest number of parameters and alongside the HRFormer-B IIF-2 model, the highest inference complexity. The ViTPose models, whose performance is worse in each case than the other models, are not included in these plots. The HRFormer-S and HRNet-W32 IIF-2 models offer high performance at lower complexity.

Effect of position and covering

Next, we consider variations in the level of covering and presence of intervention in the image for the best performing models. The OKS scores for a selection of the best performing model backbones and architectures are presented in Table 5 divided by position and covering. For every model, including those not presented in this table, increasing the covering decreases the mean OKS score, most notably when the baby is fully covered. The differences in performance between every pair of coverings or positions for each model are significant at p = 1e − 5, except for prone/supine. The prone and supine positions are similar in performance and neither position is better overall. However, performance is lower in all models during interventions, and even lower when the baby is on their side. The scores with and without intervention are presented for the HRNet-W48-384 LIF-3 and HRFormer-B IIF-2 models in Fig. 4. Interventions marginally decrease the score on average, and noticeably reduce the fifth percentile score for the HRFormer-B model. The most substantial difference caused by interventions is for babies that are at least 3/4 covered. This may be expected given that interventions effectively increase the level of covering by occluding parts of the baby, and moving from 3/4 covering to full covering (during non-intervention) drastically reduces performance. All differences between covering/intervention are significant at p = 1e − 5 except uncovered/half-covered with interventions, 3/4-covered/fully covered with interventions, and half-covered with/without interventions. The range of OKS scores is presented, rather than the average precision, as these scores are taken across each fold of cross-validation (and hence with different models). The complete statistical analysis is provided in the Supplementary Information (Supplementary Section 2), comparing covering and interventions in Supplementary Tables 3-5, position in Supplementary Table 6 and covering in Supplementary Table 7.

Table 6 shows the comparative performance of the models with and without pre-training on the COCO adult dataset. The models with pre-training achieve higher scores in each case.

Cross-dataset comparison

Next, we compare results across other datasets, summarised in Table 7. To create models suitable for grayscale inputs, the first convolutional layer is summed along the input channel dimension. Models trained on COCO perform well on the SyRIP dataset, but poorly on our dataset, indicating that are images are out-of-domain for the adult model. When evaluating models trained on COCO on depth images, both BabyPose and our dataset perform poorly; this is expected as the model is expecting grayscale images which would contain different features. We use the HRNet-W48-384 model to compare performance on other datasets. We find that our model performs well on BabyPose, outperforming the original work, while performing poorly on SyRIP; this can be expected due to SyRIP’s images being of older babies, and noting the high score for COCO → SyRIP models. We also see that training on our dataset has greatly degraded performance on the COCO dataset (AP=0.698 to 0.351 for RGB and AP=0.682 to 0.081 for depth).

Finally, we present some examples of images in our dataset, generated using the HRNet-W32-256 IIF-2 model. Figure 5 shows examples of infants in different poses with key-point detections. Figure 6 shows two examples, where only one image type is provided with the others set to zero. In the first example, the image is very dark and the model only works well when the IR image is given. In the second example, the image is well-lit and the model works best when the RGB image is available, although the IR image works well, but providing only the depth image to the fusion model does not allow good detection. This approach is tested across the entire dataset, presented in Table 8. The IIF-2 models lose performance when any images are missing, though the performance loss is lowest for the depth image. For the LIF-3 model, the performance loss is much smaller when images are missing and removing the depth image marginally increases performance. Poor visibility is the most common scenario affecting image quality. Some examples of motion artefacts are provided in Supplementary Fig. 4 and discussed in the Supplementary Information (Supplementary Section 3).

Discussion

The challenges of the neonatal clinical setting necessitate an alternative approach from typical RGB pose estimation. This paper has proposed early, intermediate and late fusion methods for combining RGB, depth and infra-red images for neonatal pose estimation, based on retraining from models originally trained for pose estimation in adults. Four model structures were explored; the use of a single image type only, or combining images at the input stage (EIF), summing features within the model (IIF) or summing output heatmaps (LIF). The possibility of sharing weights for each image type was also considered.

The dataset contained 14 one-hour and ten 24-hour recordings. The 24-hour recordings were analysed to understand the percentage of time that babies spend in different positions and coverings. It was found that babies spent approximately half of their time in the uncovered (no blanket cover) or half-covered (blanket covering the lower half of the body) state. In the remaining half of the data, the majority of the baby’s chest is covered. A subset of frames were annotated for training, with increased frequency where the depth variation was greater. While this approach somewhat biases the dataset towards selecting images with interventions, the number of frames selected in a fifteen-minute period is fixed; babies’ positions and coverings change much less frequently. Excluding interventions, 31% of images contained babies on their back (supine), 25% on their front (prone) and 44% on their side. These results have implications for future neonatal camera-based approaches: existing work includes babies which are predominantly unclothed and supine, accounting for around 15% of the non-intervention data in our dataset. This would inflate performance scores as the baby is (a) more visible and (b) always known to be in the same position, so the model does not have to identify if the baby is prone or supine.

We only annotate the hips and shoulders as these joints are visible in most frames. Including other key-points would either unbalance the dataset (joints such as ankles and knees are often not visible) or not represent the NICU environment, as certain types of images would become oversampled. When comparing to other work, only these four joints are evaluated. Five-fold cross validation was used for training and testing. This method ensures that models are tested on every image and baby in the dataset46. It also ensures that each baby is tested entirely unseen. This should provide greater confidence that the model can perform well on additional data.

We believe that existing methods for scoring detections require some adaptation for neonatal data. The RMS error does not account for the size of the image or subject. The PCKh metric has not been adjusted for the proportionally larger head of neonates, compared to adults. We calculated the median torso-to-head-size ratio for the MPII (adult) dataset (1.28) and for ours (0.83), indicating that the head is larger than the torso for our neonates. The AP metric is chosen as it uses the area of the subject, and contains per-key-point constants to account for the relative difficulty of each joint type. However, the per-key-point constants are based variance of human annotation in images of adults. An appropriate set of constants is needed for neonatal data; for exampe, Jahn et al.46 report greater error between human annotators and pose estimators for the hip joints than shoulders. This would require a larger dataset with all key-points annotated, which is a limitation of our work.

Comparison with other work is difficult due to lack of availability of images and models for privacy reasons. In addition, datasets contain images of infants in different environments and at different ages, and no available dataset contains multiple imaging modalities. We are therefore limited to comparing our single-image models with other work. We found that an adult COCO HRNet model with AP=0.682 scores only 0.201 on the BabyPose dataset, and 0.118 on our dataset, while scoring 0.727 on the SyRIP dataset. Conversely, our HRNet models score highly on our dataset (0.779) and the BabyPose dataset (0.824), but lower on the SyRIP (0.351) and COCO (0.383) datasets. The AP scores for our models evaluated on BabyPose (0.824) and ours (0.765) for the depth-only model also suggest that our depth dataset is similar to BabyPose, albeit with more challenging images due to the lower score and inclusion of multiple poses, interventions and covering. In summary, we find generalizabilty between adult/older infant datasets (COCO and SyRIP), and between neonatal datasets (ours and BabyPose), with retraining required between the two types.

Three backbones are tested - HRNet, HRFormer, and the Vision Transformer (labelled ViTPose). For single images, the best performance was achieved for RGB or depth models. A selection of fusion models (EIF-D-IR, IIF-1,2,3 and LIF-3) also performed well, outperforming the single-image models. The best-performing single-image model used the depth image; however, providing only the depth image to the fusion models greatly reduced performance. As the depth and IR images come from the same sensor, it is likely that either only the RGB image is available, or only depth and IR images are available, and in these cases, an RGB-specific or EIF-D-IR model is superior. Despite attempts to modify the training, the vision transformer models exhibited worse performance than the HRFormer and HRNet models.

For the HRFormer-based models and HRNet-W32-256, we find that IIF-X outperforms LIF-X for all X, and that IIF-2 outperforms all EIF models. This is also true for vision transformer models, but the lower overall performance of these models should be addressed before drawing a conclusion. The HRNet W32/48-384 models show different behaviour; LIF-3 outperforms all other models, and also has a marginally lower standard deviation across folds. This difference cannot be attributed purely to the resolution of the image (possibly requiring more modality-specific stages) as the HRFormer models use the same resolution. The LIF-3 model behaves differently to IIF-2 when one or two images are missing; removing the depth image marginally increases the score (0.811 to 0.813) and removing the IR or RGB image does not cause such drastic performance loss. The IIF-2 model’s deterioration can be explained by the latter stages of the model being trained to assume that all images are available, while removing images from the LIF-3 model would simply reduce the heatmap intensity. For example, when the RGB image is dark, the IIF-2 model should rely on the depth and IR images - but when these are removed, performance is poor. Further analysis is provided in the Supplementary Information (Supplementary Section 4) as a test image is made artificially darker (Supplementary Fig. 5). In all cases, only providing the depth image yields extremely poor performance (AP < 0.1), suggesting that fusion models trained on all three modalities learn to rely on the RGB and IR images and not depth.

These models have considerably different inference times - HRNet-W32-256 IIF-2 can be run at 30fps for 8 babies simultaneously on a desktop-class GPU, while HRNet-W48-384 LIF-3 would require a server-class GPU for just three babies. In scenarios where depth or infra-red imaging is unavailable, the LIF-3 model does not show as much performance degradation as the IIF-2 model, and its inference time could be greatly reduced by only evaluating branches for the available images. Further training with missing images may allow the model to become more robust and be used regardless of the imaging configuration. The IIF-2 and LIF-3 models therefore fill different use cases and we would suggest continuing to investigate both.

Using fusion models increases the model complexity, number of parameters and inference time; the later the fusion stage, the greater the effect. The best performing model - HRNet-W48 LIF-3, achieved an AP score of 0.811 but requires over 140 GFLOPS for inference and contains almost 100M parameters. The need for patient privacy and data security may necessitate smaller models for cot-side inference. The HRFormer-S, IIF-2 model requires 18 GFLOPS and only 9.1M parameters, while achieving AP 0.809, which may present a worthwhile trade-off. The best performing single-image model, HRFormer-B with depth images, achieves an AP of 0.801 with 43.3 GFLOPS and 43.6M parameters. We find that using a smaller backbone in a fusion architecture outperforms or matches a larger backbone in a single-image architecture with faster inference and fewer parameters. The HRFormer-S IIF-2 model is suitable for 1 frame-per-second inference on a small, cot-side PC, or real-time inference on a desktop or server PC. It should be noted that including full-body key-point annotations for 24-hour real data is not yet explored and may require the use of larger backbones. This remains a key limitation of this work.

The detection scores are shown to be marginally lower during interventions, and decrease as the baby is more covered. The best performance, with 95% of OKS scores above 0.9, is achieved for uncovered babies without intervention. For fully covered babies, the median OKS score decreases to around 0.86, though the range of scores, as shown in Fig. 4, increases greatly. We find an overall trend of performance decrease as the baby is more covered, regardless of the backbone or fusion architecture.

No conclusion can be drawn on the difference between prone and supine positions; the difference in performance for a given model is not always statistically significant, and the difference is not uniformly positive or negative across models. However, better performance is always seen for prone/supine compared to intervention, and again for intervention compared to the side position. The side position is the most challenging infant position. Despite the side position being more common than the front/back positions, and hence appearing more often in the dataset, it has not caused the models to perform better for babies in this position.

In our 24-hour dataset, a baby spends 44% (excluding interventions) of their time on the side, over 50% at least three-quarters covered and 24% fully covered. We have shown these images to be a more challenging, but real NICU scenario. We also see that for non-intervention images, the range of OKS scores increases as the baby is more covered, indicating that a completely failed detection becomes more likely. In summary, the side position and covered infants are common clinical environments that should be included in test datasets for future neonatal computer vision methods.

The fusion approaches used here simply sum the features from each separate part of the model. Each part’s weight is effectively the amplitude of the feature, so we rely on the separate branches not having large responses to irrelevant features such as a patterned blanket. Other strategies could use a separate part of the model to weight the branches, either on a per-image or per-feature basis. A model like this would require careful training, as plain gradient calculations would give whichever modality is currently favoured by the weighting scheme an effectively higher learning rate. The current fusion schemes are also symmetric. We could use the depth image, currently the best-performing single-image model, which is unaffected by patterns, within an attention mechanism for the RGB or IR images. Image dropout could also be used to make the LIF-3 model into a single model suitable for scenarios where certain image types are unavailable.

We have focussed on a wide range of model architectures and backbones, rather than deep hyperparameter analysis. This would ultimately require a separate validation fold, reducing the available training data. Further work will include more data (from more recordings), annotated with limbs as well as the torso, carefully divided into folds that match the overall distribution of positions and coverings. Once limb key-points have been added, we can also investigate the possibility of reducing the inference time and number of parameters by pruning the model asymmetrically.

This work has focussed exclusively on pose estimation through camera imaging from outside the incubator. The pose estimation accuracy decreases as the infant is more covered and when the infant is on their side. One possible avenue for enhancement involves incorporating additional sensors. Other research has already been conducted to derive movements based on an accelerometer50 or electromagnetic tracking systems (EMTS)51. It is also possible to use a pressure mat52,53, which can provide additional information when the baby is covered, and can confirm or reject spurious pose estimation results.

Improved pose estimation accuracy has significant potential clinical implications for enhancing neonatal care such as providing critical information about an infant when not directly observed by clinical staff. Accurate pose estimation can contribute to the early detection of developmental abnormalities and ongoing monitoring of infant health. These advancements offer valuable insights that can inform clinical decisions and interventions, ultimately improving the standard of care for neonates. This must be considered in the context of the real-world NICU environment; babies are covered for their own comfort, and the supine position is not always ideal for development54,55.

Methods

Clinical study design

The study was conducted in the Neonatal Intensive Care Unit (NICU) at Addenbrooke’s Hospital, Cambridge, UK, involving 24 preterm neonates in incubators in a single-center study. The study received approval from the North West Preston Research Ethics Committee (reference number 21/NW/0194, IRAS ID 285615) and followed the ethical guidelines of the 1964 Helsinki Declaration. Informed consent was received from each participant’s parent/guardian. Each neonate was recorded for 1 or 24 hours in normal clinical conditions. The incubator environment remained unaltered during the recording. As such, the dataset includes variability in lighting, infant position, and environmental factors. The camera was mounted to the side of the incubator using a flexible arm and placed on top of, and outside, the incubator (Fig. 7). The camera is kept within 1cm of the surface to prevent reflections from the camera’s infra-red emitter, or ceiling lights.

A laptop was used to record and synchronize data from an Azure Kinect camera (Microsoft Corporation, USA) and the neonate’s patient monitor (GE Healthcare Technologies Inc., USA). The Azure Kinect camera captured time-synchronized RGB, depth and infrared (IR) video at 30 frames per second. The RGB resolution was 1280 × 720 pixels, and the depth/IR frames were upsampled to match this resolution from a native 640 × 576 pixels during the RGB/IR alignment. The spatial camera alignment uses the camera’s factory calibration parameters to account for the physical separation between the RGB and IR sensors and their image distortion.

Dataset

The dataset contains 10 24-hour recordings and 14 1-hour recordings. The 1-hour recordings were during daylight hours and there were minimal interventions and disruptions during the recording. The 24-hour recordings contained the normal range of lighting, interventions and activity, as nurses and parents were instructed to behave as they normally would. The demographic information for the two datasets are given in Table 9, including the mean, standard deviation and range for the gestational age (GA) at birth and recording, weight at birth and recording, and sex/ethnicity. The dataset encompasses a wide range of baby sizes, (485-1950g), GA at birth (23+1 to 31+1 wk+d), and GA at recording (24+6 to 34+6 wk+d).

Ground truth

Our pose estimation algorithm is designed to locate the torso key-points (shoulders and hips) only. This is because the torso position is visible, or its position can be inferred, in almost all images. Face key-points are often not visible, and limbs are often covered by blankets. By using key-points which can be localized in all images, we have a larger dataset and we can compare the performance in different clinical conditions, such as the level of covering of the infant.

Due to the similarity of consecutive frames, images were annotated manually in 15-30 second intervals using a self-developed annotation tool. In the 24-hour recordings, the mean absolute difference between depth pixels was taken for each fifteen-minute window, and 30 frames were selected so as to be evenly spaced in cumulative depth difference. As such, frames featuring greater activity were prioritised. Annotators selected the position of the shoulders and hips, where they are visible or where they can be inferred. Inferred positions might indicate that although the hip is covered by a blanket, the video may be skipped to a point where the blanket is removed, provided that there is no motion of the baby in that period. Key-points were labelled as visible if they were either clearly visible or under a blanket, occluded if covered by any other object (such as a nurse’s hand), and not visible otherwise. Annotators included clinicians and staff with clinical experience, and multiple annotators reviewed each annotation.

Frames were also annotated with the infant’s position (prone, supine, or on their side), intervention (yes, no), and level of covering (none, half, three-quarter, full). Half covering indicates that the lower body was covered (or a similar amount, for example across the stomach). Three quarter covering indicates that the lower body and a significant part of the torso was covered, but the shoulders are clearly visible. Full covering indicates that the shoulders are only just visible, if at all.

Pose estimation models

For pose estimation, we use a top-down heatmap approach. As we know each frame contains exactly one baby, we do not need a joint connection method such as associative embedding. The model is built using an HRNet, HRFormer or ViTPose backbone, followed by a key-point head. These models are chosen due their recent state-of-the-art results in adult data, specifically ViTPose and derivatives of HRNet. The models described subsequently are designed to process different image types for the same task. We therefore anticipate that there will be merit to having separate, image type-specific early layers and shared later layers, as we are looking for the same features in each image but the appearance of the features in each image type is different. The HRNet and HRFormer backbones contain input layers followed by four stages. We consider possible separate/shared divisions after each stage; if the division is after the fourth stage, then only the key-point head is shared. The division point is denoted X, which can be between 1 and 4. For the Vision Transformer, we determine each stage to be a quarter of the transformer layers, with the patch embedding included in the first stage. We denote the image-specific early stages as Part 1 of the model and the shared later stages as Part 2. We implement four categories of models to process the different image types.

-

Single-Image models trained and evaluated using a single image type, providing reference performance scores.

-

Early Image Fusion Models (EIF). These models concatentate the input images into a single image with up to five color channels (RGB-D-IR), or a combination thereof. This architecture is illustrated in Fig. 8.

Fig. 8: Illustration of the early image fusion EIF-RGB-D-IR model. Images are concatenated along the channel dimension, producing a 5-channel image in the example shown. The very first layer of the backbone is modified to accommodate the different number of channels, and the model proceeds as normal. For other EIF variants (e.g. RGB-D), the number of channels produced would be different.

-

Intermediate Image Fusion Models (IIF-X). These models have X separate stages, at which point the features are summed before being input to a single set of later stages. If the separate/shared division is after the fourth stage (IIF-4), then the summation is before the key-point head. This architecture is illustrated in Fig. 9.

-

Late Image Fusion Models (LIF-X). These models have X separate stages, followed by 4 − X shared stages to produce a set of heatmaps for each image. The heatmaps are summed (after the key-point head) to produce the overall prediction. These models are the most computationally expensive as they effectively require three models to be evaluated for each frame. This architecture is illustrated in Fig. 10.

The models are evaluated for X from 1 to 4. We use HRNet backbone configurations W32 and W48 with input image sizes 256 × 256 and 384 × 384. The HRFormer backbone is evaluated at input size 384 × 384 in small and base configurations. The Vision Transformer backbone is evaluated using small and base (S, B) configurations with 256 × 192 image size.

Evaluation metrics

The COCO average precision (AP)26, percentage of correct key-points based on head size (PCKh)25,56, and RMS position error are commonly used in the literature for evaluating pose estimation algorithms. We choose to primarily use the AP metric in this work, for the following reasons: we only use four key-points and the AP metric is suitable as it includes a per-key-point constant that accounts for each key-point’s different localisation difficulty; the RMS error is dependent on the image size, which varies across datasets; PCKh is based on the head size of the subject, which does not account for differences in neonatal body proportions, and the difficulty in detecting the different key-points. We also compute the AP metric with/without interventions and different blanket coverage. In the AP metric, each frame k is given an object key-point similarity score (OKSk), defined in Equation (1) where δik is the visibility of key-point i in frame k (1 if visible, 0 otherwise), dik is the distance of detected key-point i in frame k from its ground truth, κ is a per-key-point constant reflecting the detection difficulty of each key-point type, and sk is a scale parameter for frame k.

Note that detection k is equivalent to frame k in this work as each frame contains exactly one person. The scale parameter in the COCO dataset is given by the square root of the person’s area. In the absence of the area parameter, the xtcocotools Python57 package uses 0.53 × the area of the rectangular bounding box around the person - this factor is approximately the median ratio of person area to bounding box in the dataset. As our dataset does not contain a labelled outline of the baby - this would be impossible due to the blanket - we use the bounding box around the visible key-points to determine the scale parameter, then apply multiplication factors based on the median ratio in the COCO dataset, as follows, depending on key-point visibility (each item’s percentage of our dataset in parenthesis):

-

1.

3-4 key-points, or 2 opposing corners: use \({s}_{k}^{2}=3.28\times\) the torso bounding box area (95.4%).

-

2.

Only left, or only right key-points: use \({s}_{k}^{2}=2.0\times\) torso height squared (0.9%).

-

3.

Only shoulders: use \({s}_{k}^{2}=6.16\times\) shoulder width squared (3.0%).

-

4.

Only hips: use \({s}_{k}^{2}=13.64\times\) hip width squared (0.7%).

-

5.

If one or zero key-points are visible, the scale cannot be found and the image is not evaluated.

Training

We employ transfer learning due to the small size of our dataset in comparison with COCO. Pre-trained models are obtained from the MMPose model zoo36. Certain weights are adjusted for this application: only indices 5, 6, 11 and 12, corresponding to the shoulders and hips, are used in the key-point head to produce 4 heatmaps instead of 17. For single channel (depth/IR) images, the weights of the initial convolutional layer are summed along the image channel dimension. For EIF models, these weights are stacked along the image channel dimension so that the number of input channels matches the input image. In models with separate stages for each image type, each is initialized with the same initial weights. Before training on our dataset, each pre-trained model was confirmed to produce correct outputs when provided with an image of an adult, with a grayscale version used as a substitute for depth/IR images.

Models are trained for up to 50 epochs using the Adam optimizer method with weight decay58 and learning rate 0.0005. The dataset is divided into five folds for cross-validation, and each model is tested on each held-out fold. Each baby’s recording is assigned to a particular fold. Our experiments are implemented in Python (version 3.10) using libraries PyTorch (version 2.3), CUDA (version 12.1), and MMPose (forked from version 1.3.1).

Cross-dataset evaluation

We compare our results to existing work, namely the SyRIP37 and BabyPose47 datasets. However, lack of availability of trained models or images, due to participant privacy, makes direct comparison impossible. No other dataset includes all three image types, hence we cannot compare our fusion models. In addition, SyRIP contains images of babies significantly older than those in our dataset, making them not directly comparable. Instead we analyse cross-dataset performance, using models trained on either COCO (adult) or our dataset, and tested on the SyRIP and BabyPose datasets.

Models trained on COCO and their respective evaluation scores are obtained from the MMPose model zoo36. These models are converted to grayscale by summing the first convolution kernel along the channel axis, and retaining the axis in the tensor. This is equivalent to converting the image to three-channel grayscale inference. We convert the SyRIP and BabyPose datasets to the COCO format, with only the torso annotated. Images where the torso is not at all visible are excluded. We then evaluate each model on each other dataset. For comparison with the BabyPose dataset, we also evaluate the root-mean-square error (RMSE). We scale the errors to be that of a 128 × 96 image, as used in the original work.

Data availability

The datasets generated and/or analysed during the current study are not publicly available due to the sensitive and identifiable nature of the data. The data may be made available for academic purposes under a controlled data sharing agreement.

Code availability

The underlying code for this study is not publicly available but may be made available to qualified researchers on reasonable request from the corresponding author.

References

Lee, H. C., Bardach, N. S., Maselli, J. H. & Gonzales, R. Emergency department visits in the neonatal period in the united states. Pediatr. Emerg. Care 30, 315–318 (2014).

Hannan, K. E., Hwang, S. S. & Bourque, S. L. Readmissions among nicu graduates: Who, when and why? Semin. Perinatol. 44, 151245 (2020).

Ray, K. N. & Lorch, S. A. Hospitalization of early preterm, late preterm, and term infants during the first year of life by gestational age. Hospital Pediatrics 3, 194–203 (2013).

Wang, M. L., Dorer, D. J., Fleming, M. P. & Catlin, E. A. Clinical outcomes of near-term infants. Pediatrics 114, 372–376 (2004).

Organization, W. H. Newborn mortality [Internet]. Genève: World Health Organization [accessed 14 May 2024]; Available from: https://www.who.int/news-room/fact-sheets/detail/newborn-mortality.

Newborn mortality [Internet]. London: National Institute for Health and Care Excellence [cited 2024 May 14]; [about screen 1]. Available from: https://www.nice.org.uk/about/what-we-do/into-practice/measuring-the-use-of-nice-guidance/impact-of-our-guidance/niceimpact-maternity-neonatal-care.

Keles, E. & Bagci, U. The past, current, and future of neonatal intensive care units with artificial intelligence: a systematic review. npj Digital Med. 6, 220 (2023).

Parry, S., Ranson, P. & Tandy, S. Positioning and handling guideline https://www.neonatalnetwork.co.uk/nwnodn/wp-content/uploads/2023/11/Positioning-and-Handling-Guideline-2023-Final-1.pdf (2023).

Trust, R. C. H. N. Developmental positioning on the neonatal unit clinical guideline https://doclibrary-rcht.cornwall.nhs.uk/GET/d10366683&ved=2ahUKEwii7pfAmsSOAxUgQUEAHQ7zA-4QFnoECBgQAQ&usg=AOvVaw3mfhvvYSXyrEcv5ghWAXq8 (2024).

Villarroel, M. et al. Non-contact physiological monitoring of preterm infants in the neonatal intensive care unit. NPJ Digital Med icine 2, 128 (2019).

Ruhrberg Estévez, S. et al. Continuous non-contact vital sign monitoring of neonates in intensive care units using rgb-d cameras. Sci. Rep. 15, 16863 (2025).

Cenci, A., Liciotti, D., Frontoni, E. & Mancini, A. Non-contact monitoring of preterm infants using rgb-d camera (2015).

Gibson, K. et al. Non-contact heart and respiratory rate monitoring of preterm infants based on a computer vision system: a method comparison study. Pediatr. Res. 86, 738–741 (2019).

Cobos-Torres, J.-C., Abderrahim, M. & Martínez-Orgado, J. Non-contact, simple neonatal monitoring by photoplethysmography. Sensors 18 https://www.mdpi.com/1424-8220/18/12/4362 (2018).

Cattani, L. et al. Monitoring infants by automatic video processing: A unified approach to motion analysis. Computers Biol. Med. 80, 158–165 (2017).

Olmi, B., Frassineti, L., Lanata, A. & Manfredi, C. Automatic detection of epileptic seizures in neonatal intensive care units through eeg, ecg and video recordings: A survey. IEEE Access 9, 138174–138191 (2021).

Plouin, P. & Kaminska, A. Chapter 51 - neonatal seizures. In Dulac, O., Lassonde, M. & Sarnat, H. B. (eds.) Pediatric Neurology Part I, vol. 111 of Handbook of Clinical Neurology, 467–476 (Elsevier, Amsterdam, 2013).

Schroeder, A. S. et al. General movement assessment from videos of computed 3d infant body models is equally effective compared to conventional rgb video rating. Early Hum. Dev. 144, 104967 (2020).

Marchi, V. et al. Automated pose estimation captures key aspects of general movements at eight to 17 weeks from conventional videos. Acta Paediatrica 108, 1817–1824 (2019).

Gleason, A. et al. Accurate prediction of neurologic changes in critically ill infants using pose ai. medRxiv https://www.medrxiv.org/content/early/2024/06/10/2024.04.17.24305953.full.pdf (2024).

Munea, T. L. et al. The progress of human pose estimation: A survey and taxonomy of models applied in 2d human pose estimation. IEEE Access 8, 133330–133348 (2020).

Wang, J. et al. Deep 3d human pose estimation: A review. Computer Vis. Image Underst. 210, 103225 (2021).

Gamra, M. B. & Akhloufi, M. A. A review of deep learning techniques for 2d and 3d human pose estimation. Image Vis. Comput. 114, 104282 (2021).

Zheng, C. et al. Deep learning-based human pose estimation: A survey. ACM Comput. Surv. 56, 1–37 (2023).

Andriluka, M., Pishchulin, L., Gehler, P. & Schiele, B. 2d human pose estimation: New benchmark and state of the art analysis. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2014).

Lin, T.-Y. et al. Microsoft coco: Common objects in context. In Fleet, D., Pajdla, T., Schiele, B. & Tuytelaars, T. (eds.) Computer Vision – ECCV 2014, 740–755 (Springer International Publishing, Cham, 2014).

Wang, J. et al. Deep High-Resolution Representation Learning for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 43, 3349–3364 (2021).

Sun, K., Xiao, B., Liu, D. & Wang, J. Deep high-resolution representation learning for human pose estimation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 5693–5703 (2019).

Zhang, F., Zhu, X., Dai, H., Ye, M. & Zhu, C. Distribution-aware coordinate representation for human pose estimation. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 7091–7100 (2020).

Liu, H., Liu, F., Fan, X. & Huang, D. Polarized self-attention: Towards high-quality pixel-wise mapping. Neurocomput 506, 158–167 (2022).

Zhang, J., Chen, Z. & Tao, D. Towards high performance human keypoint detection. Int. J. Comput. Vis. 129, 2639–2662 (2021).

Xu, Y., Zhang, J., Zhang, Q. & Tao, D. Vitpose: simple vision transformer baselines for human pose estimation. In Proceedings of the 36th International Conference on Neural Information Processing Systems, NIPS ’22 (Curran Associates Inc., Red Hook, NY, USA, 2024).

Liu, Z. et al. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In 2021 IEEE/CVF International Conference on Computer Vision (ICCV), 9992–10002 (IEEE Computer Society, Los Alamitos, CA, USA, 2021). https://doi.org/10.1109/ICCV48922.2021.00986.

Xiong, Z., Wang, C., Li, Y., Luo, Y. & Cao, Y. Swin-Pose: Swin Transformer Based Human Pose Estimation. In 2022 IEEE 5th International Conference on Multimedia Information Processing and Retrieval (MIPR), 228–233 (IEEE Computer Society, Los Alamitos, CA, USA, 2022). https://doi.org/10.1109/MIPR54900.2022.00048.

Yuan, Y. et al. Hrformer: high-resolution transformer for dense prediction. In Proceedings of the 35th International Conference on Neural Information Processing Systems, NIPS ’21 (Curran Associates Inc., Red Hook, NY, USA, 2021).

Contributors, M. Openmmlab pose estimation toolbox and benchmark. https://github.com/open-mmlab/mmpose (2020).

Huang, X., Fu, N., Liu, S. & Ostadabbas, S. Invariant representation learning for infant pose estimation with small data. In 2021 16th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2021), 1–8 (IEEE, 2021).

Hesse, N. et al. Computer vision for medical infant motion analysis: State of the art and rgb-d data set. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, 0–0 (2018).

Groos, D., Adde, L., Støen, R., Ramampiaro, H. & Ihlen, E. A. Towards human-level performance on automatic pose estimation of infant spontaneous movements. Computerized Med. Imaging Graph. 95, 102012 (2022).

Cao, Z., Simon, T., Wei, S.-E. & Sheikh, Y. Realtime multi-person 2d pose estimation using part affinity fields. In CVPR (2017).

Moccia, S., Migliorelli, L., Pietrini, R. & Frontoni, E. Preterm infants’ limb-pose estimation from depth images using convolutional neural networks. In 2019 IEEE Conference on Computational Intelligence in Bioinformatics and Computational Biology (CIBCB), 1–7 (2019).

Moccia, S., Migliorelli, L., Carnielli, V. & Frontoni, E. Preterm infants’ pose estimation with spatio-temporal features. IEEE Trans. Biomed. Eng. 67, 2370–2380 (2020).

Cao, X. et al. Aggpose: Deep aggregation vision transformer for infant pose estimation. In Raedt, L. D. (ed.) Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, IJCAI-22, 5045–5051 (International Joint Conferences on Artificial Intelligence Organization, Vienna, Austria, 2022). https://doi.org/10.24963/ijcai.2022/700.

Soualmi, A., Ducottet, C., Patural, H., Giraud, A. & Alata, O. A 3D pose estimation framework for preterm infants hospitalized in the Neonatal Unit. Multimed. Tools Appl. 83, 24383–24400 (2023).

Yin, W. et al. A self-supervised spatio-temporal attention network for video-based 3d infant pose estimation. Med. Image Anal. 96, 103208 (2024).

Jahn, L. et al. Comparison of marker-less 2d image-based methods for infant pose estimation https://arxiv.org/abs/2410.04980 (2024).

Migliorelli, L., Moccia, S., Pietrini, R., Carnielli, V. P. & Frontoni, E. The babypose dataset. Data Brief. 33, 106329 (2020).

Assa, A. & Janabi-Sharifi, F. A robust vision-based sensor fusion approach for real-time pose estimation. IEEE Trans. Cybern. 44, 217–227 (2013).

Lyra, S. et al. Camera fusion for real-time temperature monitoring of neonates using deep learning. Med. Biol. Eng. Comput. 60, 1787–1800 (2022).

Gravem, D. et al. Assessment of infant movement with a compact wireless accelerometer system (2012).

Karch, D. et al. Quantitative score for the evaluation of kinematic recordings in neuropediatric diagnostics. Methods Inf. Med. 49, 526–530 (2010).

Kulvicius, T. et al. Infant movement classification through pressure distribution analysis. Communications Medicine3 (2023).

Aziz, S. et al. Detection of neonatal patient motion using a pressure-sensitive mat. In 2020 IEEE International Symposium on Medical Measurements and Applications (MeMeA), 1–6 (2020).

Waitzman, K. A. The importance of positioning the near-term infant for sleep, play, and development. Newborn Infant Nurs. Rev. 7, 76–81 (2007).

Raczyńska, A., Gulczyńska, E. & Talar, T. Advantages of side-lying position. a comparative study of positioning during bottle-feeding in preterm infants (<34 weeks ga). J. Mother Child 25, 269–276 (2021).

Yang, Y. & Ramanan, D. Articulated human detection with flexible mixtures of parts. IEEE Trans. Pattern Anal. Mach. Intell. 35, 2878–2890 (2013).

Extended coco api (xtcocotools).https://github.com/jin-s13/xtcocoapi.

Loshchilov, I. & Hutter, F. Decoupled weight decay regularization. In International Conference on Learning Representations https://api.semanticscholar.org/CorpusID:53592270 (2017).

Acknowledgements

We are grateful to all participants, and their parents, for taking part in the study. We also thank the staff on the neonatal unit for their support in undertaking the study in the busy NICU. This work is funded by the Rosetrees Trust, Stoneygate Trust, and Isaac Newton Trust, with funding support from the Cambridge Centre for Data-Driven Discovery and Accelerate Programme for Scientific Discovery, made possible by a donation from Schmidt Futures, and Trinity College, University of Cambridge. This work was performed using resources provided by the Cambridge Service for Data Driven Discovery (CSD3) operated by the University of Cambridge Research Computing Service (www.csd3.cam.ac.uk), provided by Dell EMC and Intel using Tier-2 funding from the Engineering and Physical Sciences Research Council (capital grant EP/T022159/1), and DiRAC funding from the Science and Technology Facilities Council (www.dirac.ac.uk).

Author information

Authors and Affiliations

Contributions

A.G. developed the software for clinical data collection. A.G. and J.M.W. conceptualised and implemented the fusion models and analysed the clinical data. M.L. and E.H. conducted the testing and training of the models and analysed the results. A.G., J.M.W., M.L., E.H., L.T. and K.B. conducted the data annotation, labelling and review. The clinical study was designed by A.G., L.T., K.B. and J.L. L.T. recruited participants. A.G. and L.T. conducted the data collection. The overall project conceptualisation, design and supervision was by J.L. (Engineering) and K.B. (paediatrics). J.M.W. and A.G. wrote the main manuscript text. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Grafton, A., Warnecke, J.M., Li, M. et al. Neonatal pose estimation in the unaltered clinical environment with fusion of RGB, depth and IR images. npj Digit. Med. 8, 539 (2025). https://doi.org/10.1038/s41746-025-01929-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41746-025-01929-z