Abstract

Proteins play diverse roles in all domains of life and are extensively harnessed as biomolecules in biotechnology, with applications spanning from fundamental research to biomedicine. Therefore, there is considerable interest in computationally designing proteins with specified properties. Protein structure generative models provide a means to design protein structures in a controllable manner and have been successfully applied to address various protein design tasks. Such models are paired with protein sequence and structure predictors to produce and select protein sequences for experimental testing. However, current protein structure generators face important limitations for proteins with more than 400 amino acids and require retraining for protein design tasks unseen during model training. To address the first issue, we introduce salad, a family of sparse all-atom denoising models for protein structure generation. Our models are smaller and faster than the state of the art while matching or improving design quality, successfully generating structures for protein lengths up to 1,000 amino acids. To address the second issue, we combine salad with structure editing, a sampling strategy for expanding the capability of protein denoising models to unseen tasks. We apply our approach to a variety of challenging protein design tasks, from generating protein scaffolds containing functional protein motifs (motif scaffolding) to designing proteins capable of adopting multiple distinct folds under different conditions (multi-state protein design), demonstrating the flexibility of salad and structure editing.

Main

Computational protein design aims to generate protein sequences and three-dimensional structures with specified folds, functions and dynamics. Protein design tasks are varied, from designing proteins with a specified shape1,2 or symmetry3,4,5 and producing scaffolds for known functional motifs2,6,7 to designing potent binders for protein targets1,8,9,10 as well as proteins that can adopt multiple distinct folds under different conditions (multi-state design)11,12,13. Methods capable of solving these design tasks enable powerful applications in basic research and industry, for example, designing or optimizing enzymes14,15,16, antibodies8, vaccine scaffolds17,18 and biosensors12.

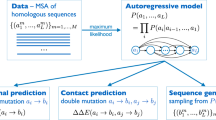

Protein generative models have recently been applied to solve many such protein design tasks1,2,19,20. Protein generation is fundamentally a multimodal generation problem, as proteins represent chains of amino acid residues, where each residue \(i\) carries an amino acid identity \(s_i\) and a set of atom coordinates \(\mathbf{x}_i\). \(\mathbf{s}\) is the protein’s sequence, and \(\mathbf{x}\) is its structure. Designing proteins corresponds to sampling from the joint distribution of sequence and structure \(p(\mathbf{s}, \mathbf{x}|\mathrm{task})\) conditioned on a protein design task21. Many approaches to protein design decompose \(p(\mathbf{s}, \mathbf{x}|\mathrm{task})\) as \(p(\mathbf{s}|\mathbf{x}_{bb}, \mathrm{task})\,p(\mathbf{x}_{bb}|\mathrm{task})\), where \(\mathbf{x}_{bb}\) are the coordinates of the protein backbone atoms that are present in all amino acids1,2,21. This results in a sequential pipeline of backbone generation, followed by sequence design. However, there is no guarantee that proteins generated in this way will express, fold and function as designed in a living cell. Producing experimentally viable protein designs requires the generation and computational screening of many backbone–sequence pairs1,20. This computational design selection is enabled by the combination of sequence design models such as ProteinMPNN20, ChromaDesign21 or Frame2Seq22, as well as protein structure predictors such as AlphaFold 2 (ref. 23) and ESMFold24.

Designed structure–sequence pairs are deemed successful if their structure is predicted with high confidence and matches the initial design1. The prediction confidence is measured in terms of the predicted local distance difference test (pLDDT)25 and the predicted aligned error (pAE)23 of the structure predictor used in the pipeline. Designed and predicted structures are considered to be in agreement if their root mean square deviation (RMSD), which measures the average distance between superimposed atoms, is low1. Because this RMSD measures consistency between design and prediction, it is generally referred to as self-consistent RMSD (scRMSD)1,26. A common choice of success criteria is scRMSD < 2 Å and pLDDT > 70 for ESMFold2 or pLDDT > 80 for AlphaFold 2, which have been shown to produce experimentally viable proteins1,10,27,28. These criteria allow us to compare different protein structure generators in terms of their designability, the fraction of generated structures for which at least one designed sequence meets the criteria for success. In addition to designability, models can be compared in terms of the diversity of their generations, as well as their novelty compared with the training set. Diversity can be measured in terms of the template modelling (TM) score2,29 within a set of generated structures. By contrast, novelty uses the TM-score to measure the dissimilarity between a model’s generated structures and its training set2. Together, these metrics characterize the performance of protein structure generators.
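To make the scRMSD definition concrete, the following is a minimal sketch of an RMSD computed after optimal superposition. It assumes the Kabsch algorithm for the superposition step, which is a standard choice but is not named in the text; the function name `kabsch_rmsd` and the use of NumPy are illustrative assumptions, not part of the described pipeline.

```python
import numpy as np


def kabsch_rmsd(design: np.ndarray, predicted: np.ndarray) -> float:
    """RMSD between two (N, 3) coordinate sets after optimal superposition.

    Assumption: superposition uses the Kabsch algorithm; the text only
    states that atoms are superimposed before measuring distances.
    """
    # Remove translation by centring both structures on their centroids.
    p = design - design.mean(axis=0)
    q = predicted - predicted.mean(axis=0)
    # The optimal rotation follows from the SVD of the covariance matrix.
    u, _, vt = np.linalg.svd(p.T @ q)
    # Flip the last axis if needed so the result is a proper rotation.
    d = np.sign(np.linalg.det(vt.T @ u.T))
    rotation = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    # Rotate the design onto the prediction and measure the deviation.
    p_rotated = p @ rotation.T
    return float(np.sqrt(((p_rotated - q) ** 2).sum(axis=1).mean()))
```

Applied to the backbone atoms of a generated structure and its predicted counterpart, a value below 2 Å would satisfy the scRMSD part of the success criteria quoted above.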

Current approaches to protein design combine multiple different methods for protein backbone generation. Knowledge-based design coupled with Rosetta30 plays an important role, especially for complex protein design tasks, such as enzyme design15,16, multi-state protein design11,12, and protein design with strong geometric and sequence constraints3,4. These methods are supported by machine learning models, which are capable of solving simpler design tasks without relying on Rosetta or manual design. Protein structure hallucination methods10,27,31,32,33,34 invert structure predictors using search or gradient descent to generate sequences with high-confidence predicted structures. These sequences are often adversarial and, therefore, discarded in favour of ProteinMPNN sequence designs10,27,31. Protein denoising diffusion probabilistic models35 iteratively generate proteins from random noise by learning to remove noise from corrupted protein sequences13,36 or structures1,2,21,37,38. Diffusion models have a runtime advantage over hallucination-based methods as they do not require optimization over a structure predictor1,27. Protein diffusion models have recently been applied to solve various protein design tasks from unconstrained de novo protein design1,2,21 to protein binders and complexes1,21.

Although current protein diffusion models have shown impressive performance for small protein generation, their performance deteriorates with protein sequence length \(N\)1,2,21,37, limiting their usefulness for designing large and complex proteins. The majority of protein diffusion models use model architectures derived from protein structure predictors23,39. Notable exceptions include Chroma21, Protpardelle40 and ProteinSGM41. Models based on structure predictors use residue-pair features and pair attention mechanisms, which result in \(O(N^3)\) complexity, with pair features introducing a lower complexity bound of \(O(N^2)\) and pair attention mechanisms increasing this complexity to \(O(N^3)\) (refs. 23,39). Along with decreased runtime performance, these models also experience a drop in designability with increasing \(N\). Although recent work on Proteus37 and Proteina42 has improved designability for proteins up to 800 residues long, no protein structure diffusion model has reached the designability of hallucination-based approaches beyond that length27. However, protein backbone hallucination suffers from long runtimes per design at these lengths27. This greatly reduces throughput and limits the applicability of backbone hallucination to large protein design tasks with lower per-design success rates compared with unconditional monomer design.

Another issue with current protein structure diffusion models is the need for additional training to solve specific protein design tasks. RFdiffusion and Genie are separately trained with protein motif conditioning to scaffold functional protein motifs1,2. Although Chroma’s conditioners allow for training-free adaptation of the model for different tasks, implementing new conditioners is not straightforward and requires the development of custom energy functions21. Thus, there is a substantial barrier to applying existing diffusion models to novel design tasks.

To address these issues, we introduce salad (sparse all-atom denoising), a family of efficient protein generative models with sub-quadratic complexity. We train our models with a denoising diffusion objective1,35 to remove noise from corrupted protein backbones. Starting from a sparse transformer architecture21,43,44, we investigate the impact of different model features and noise schedules on the designability and diversity of generated proteins. We find that our models are capable of generating diverse and designable backbones for proteins up to 1,000 residues long. salad matches or outperforms state-of-the-art diffusion models1,2,42 in terms of designability while drastically reducing runtime and parameter count. We combine salad with structure editing, a modified sampling algorithm for protein structure diffusion models. By editing the input noise and output of the model, we can enforce arbitrary structural constraints without the need for model retraining. This enables rapid prototyping of protein design tasks unseen during training. For example, we can symmetrize both model input and output to generate symmetric proteins, or replace residue coordinates with the coordinates of a protein structural motif to embed that motif in the generated structure. Structure editing allows us to tackle a variety of protein design tasks, generating designable backbones with specified shapes21, scaffolds for functional protein motifs1,2,6, repeat proteins3,4,13 and multi-state proteins that adopt distinct folds when cleaved13. In this way, salad provides an efficient plug-and-play replacement for other backbone generators in existing protein design pipelines, allowing fine-grained control via structure editing and enabling efficient design of large proteins.

Results

Sparse protein model architecture

We base our model architecture on the current best practices for transformer models. We use layer norm pre-normalization to increase the training stability45 and GELU-gated linear unit (GeGLU) feed-forward layers that were found to improve model performance for transformer models46. We replace standard multi-head attention by invariant point attention (IPA) introduced by AlphaFold 2 (ref. 23) as an easy-to-implement SE(3)-equivariant self-attention layer. To improve the runtime complexity of protein structure generation, we limit the attention operation to a sparse set of neighbours for each amino acid residue. A schematic of the basic block of this architecture is shown in Fig. 1a. Each block in our model takes as input a set of amino acid features \(\mathrm{local}_i\) and position features \(\mathbf{x}_i\). These are fed into a sparse version of IPA23. Instead of computing the full attention matrix and pair features, we first construct a set of neighbours for each amino acid (Fig. 1b). Each residue only computes pair features and attention weights for its set of neighbours. This procedure reduces attention complexity from \(O(N^2)\) to \(O(N \cdot K)\), where \(N\) is the number of residues and \(K\) is the number of neighbours. In contrast to other protein generative models1,2,21, our model does not use persistent pair features with pair attention or triangle multiplication23, which would increase complexity to \(O(N^3)\). We also do not use explicit amino acid frame features23 that are updated in each block. Instead, our models directly update atom positions and recompute frame information when required to ensure equivariance. We reuse this basic block architecture (Fig. 1a) across all models in this work.
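The complexity argument can be illustrated with a small sketch in which each residue attends only to a fixed-size neighbour list. This is plain scaled dot-product attention, not invariant point attention, and it omits learned projections, multiple heads and pair features; the function name `sparse_attention` and the array layout are assumptions made for illustration.

```python
import numpy as np


def sparse_attention(features: np.ndarray, neighbours: np.ndarray) -> np.ndarray:
    """Attention restricted to K neighbours per residue.

    features:   (N, C) per-residue features.
    neighbours: (N, K) integer indices of the residues each residue attends to.
    Only N * K attention weights are computed instead of N * N.
    """
    n_channels = features.shape[1]
    # Gather keys/values for each residue's neighbour set: (N, K, C).
    gathered = features[neighbours]
    # One logit per (residue, neighbour) pair: (N, K).
    logits = np.einsum("nc,nkc->nk", features, gathered) / np.sqrt(n_channels)
    # Numerically stable softmax over the K neighbours.
    logits = logits - logits.max(axis=1, keepdims=True)
    weights = np.exp(logits)
    weights = weights / weights.sum(axis=1, keepdims=True)
    # Weighted sum of neighbour features: (N, C).
    return np.einsum("nk,nkc->nc", weights, gathered)
```

With a neighbour list of fixed width K, memory and compute for the attention weights grow linearly in N, which is the property the sparse architecture relies on.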

a, Schematic of the basic block of our architecture. b, Schematic of neighbour selection using residue index, nearest and random neighbours. c, Architecture of our sparse protein autoencoder. d, Sparse model performance on an autoencoding task. Box plots of scRMSD between the ground-truth and decoded structures for multiple sparse architectures: equivariant (EQ) and non-equivariant (NEQ). Optionally, we use predicted distograms for neighbour selection (dgram) and vector quantization (VQ). Measures of reconstruction performance are shown per number of model recycling iterations. The dotted line indicates the threshold of 1 Å for reconstruction at atomic precision. The box centre line indicates the median, the boundaries indicate the 1st and 3rd quartiles, and whiskers show the 1st or 3rd quartile + 1.5 times the interquartile range based on n = 34 CASP14 test structures and n = 45 CASP15 test structures.

As the first step to see if our sparse attention architecture can model protein structures, we trained a family of models as autoencoders on proteins in the Protein Data Bank (PDB)47 (Fig. 1c). We encoded protein structures using a single basic block with 32 nearest neighbours in Euclidean space per residue. We then optionally applied vector quantization (+VQ) to the resulting latent representation48 and decoded it using a six-block decoder with recycling23, reusing the previous iteration’s residue coordinates and \(\mathrm{local}_i\) features. VQ regularizes the latent space of the encoder and quantizes it into a set of discrete tokens for each residue48,49. This enables using the learned representation to train sequence generative models on structure-based data49. We tested both SE(3) equivariant and non-equivariant sparse transformers to check if there are any benefits to equivariance for the autoencoding task. Additionally, we investigated different neighbour-selection schemes. By default, we selected nearest neighbours based on distance along the protein chain and Euclidean distance between residues (Fig. 1b and Supplementary Algorithm 3). As Euclidean distances would be uninformative at the beginning of the decoding process, we optionally selected additional neighbours using average residue-pair distances predicted from \(\mathrm{local}_i\) by learning to predict a distogram23 in each block (+dgram, Fig. 1c (distogram attn.); also see the ‘Structure autoencoder models’ section and Supplementary Algorithms 7 and 8).
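A neighbour-selection scheme of the kind described here (chain-index neighbours, spatial nearest neighbours and random neighbours, as in Fig. 1b) could be sketched as follows. The neighbour counts, the function name `select_neighbours` and the use of dense N × N distance matrices are assumptions made for brevity; the actual procedure is given in Supplementary Algorithm 3, which is not reproduced here.

```python
import numpy as np


def select_neighbours(ca, n_index=4, n_spatial=4, n_random=2, seed=0):
    """Pick neighbours per residue from three sources without duplicates.

    ca: (N, 3) CA coordinates. Returns an (N, n_index + n_spatial + n_random)
    index array. Counts are illustrative, not the values used by salad.
    """
    rng = np.random.default_rng(seed)
    n = ca.shape[0]
    index = np.arange(n)
    # Distance along the chain and Euclidean distance (dense, for brevity).
    chain_dist = np.abs(index[:, None] - index[None, :]).astype(float)
    space_dist = np.linalg.norm(ca[:, None, :] - ca[None, :, :], axis=-1)
    # Random scores turn "take the smallest" into a uniform random choice.
    random_score = rng.random((n, n))

    chosen = np.zeros((n, n), dtype=bool)
    picked = []
    for score, count in ((chain_dist, n_index),
                         (space_dist, n_spatial),
                         (random_score, n_random)):
        # Exclude residues that an earlier stage already selected.
        masked = np.where(chosen, np.inf, score)
        picks = np.argsort(masked, axis=1)[:, :count]
        np.put_along_axis(chosen, picks, True, axis=1)
        picked.append(picks)
    return np.concatenate(picked, axis=1)
```

The returned index array has the shape expected by a neighbour-restricted attention layer, with each residue's own index in the first column because its chain distance to itself is zero.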

Evaluating these models on the CASP14 and CASP15 monomer test sets50,51 resulted in all models reaching <1 Å reconstruction accuracy after fewer than ten recycling iterations, regardless of equivariance and distogram-based neighbours (Fig. 1d). This indicates that our sparse attention architecture is expressive enough to model protein structures. Although there is a large difference in model performance between different architectures at one recycling iteration, this difference decreases with the number of iterations. We decided to keep the simplest version of our architecture using equivariant features without distogram neighbours for the rest of this work.

Edited denoising protein models

After ensuring that our sparse models are suitable for reconstructing protein structures, we modified our architecture for generative modelling. Our models operate on protein structures containing the backbone atoms (N, CA, C and O), an idealized beta-carbon (CB) and additional learned pseudo-atoms (Fig. 2a; see the ‘Model architecture’ section). We trained our models to denoise noisy structures \(\mathbf{x}_t \sim p(\mathbf{x}_t|\mathbf{x}_0)\) and to recover the original structure \(\mathbf{x}_0\), resulting in a denoising diffusion probabilistic model loss \(\mathcal{L}_t = \mathbb{E}_{\mathbf{x}_t \sim p(\mathbf{x}_t|\mathbf{x}_0)}\left[\lVert f_\theta(\mathbf{x}_t) - \mathbf{x}_0 \rVert^2\right]\) (ref. 35). In addition to recovering \(\mathbf{x}_0\), we introduced auxiliary losses to also predict an amino acid sequence and side-chain atom positions (Fig. 2a; also see the ‘Denoising model loss’ section). As our models generate all-atom structures, we refer to them as sparse all-atom denoising (salad) models throughout this work.
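A single-sample estimate of this loss can be sketched as below. The cosine schedule, the noise scale `sigma` and the per-atom averaging are assumptions chosen for illustration; the noise schedules actually used are described in the methods, and the auxiliary sequence and side-chain losses are left out.

```python
import numpy as np


def vp_noise(x0, t, sigma=10.0, rng=None):
    """Variance-preserving corruption of x0 at diffusion time t in [0, 1].

    Assumption: cosine schedule and noise scale `sigma` (in Å) are
    illustrative. t = 0 returns x0 unchanged, t = 1 returns pure noise.
    """
    if rng is None:
        rng = np.random.default_rng()
    alpha = np.cos(0.5 * np.pi * t) ** 2
    eps = rng.normal(size=x0.shape) * sigma
    return np.sqrt(alpha) * x0 + np.sqrt(1.0 - alpha) * eps


def denoising_loss(model, x0, t, rng=None):
    """Monte Carlo estimate of ||f(x_t) - x0||^2, averaged over atoms."""
    xt = vp_noise(x0, t, rng=rng)
    squared_error = np.sum((model(xt) - x0) ** 2, axis=-1)
    return float(np.mean(squared_error))
```

Here `model` stands for any callable mapping noisy coordinates to predicted clean coordinates; in training, the loss would be averaged over sampled structures and diffusion times.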

a, Schematic of the salad architecture. b, Sampling process of a salad model with structure editing, with example applications of input and output editing. c, Average runtimes on a single RTX 3090 GPU by number of amino acid residues of sparse diffusion models (full pair features and minimal pair features) compared with RFdiffusion, Genie 2, Chroma and Proteina. Runtimes are reported per designed structure, using the default number of denoising iterations for each model (salad, 400; Chroma, 500; Genie 2, 1,000; RFdiffusion, 50; Proteina, 400) as well as the time per iteration. Mean time is based on n = 10 generated backbones per size, and the generation time of the first backbone per size is discarded to exclude model compilation time from the average.

At inference, we can use our models to generate protein backbones by progressively denoising a pure noise structure \(\mathbf{x}_1\). Given a noisy structure \(\mathbf{x}_t\), we can use the model to predict an estimate \(f_\theta(\mathbf{x}_t)\) of the denoised structure \(\mathbf{x}_0\). Reapplying noise at a lower diffusion time \(t'\) results in a structure \(\mathbf{x}_{t'} \sim p(\mathbf{x}_{t'}|f_\theta(\mathbf{x}_t))\) (Fig. 2b). Repeating this process eventually results in a generated structure \(\mathbf{x}\). To enforce the structural properties of generated backbones directly in the denoising process, we introduce editing functions edit_input and edit_output, which modify the input and output of the denoising model, respectively (Fig. 2b). This results in a generative process:

\[\mathbf{x}_{t'} \sim p\left(\mathbf{x}_{t'}\,|\,\mathtt{edit\_output}\left(f_\theta\left(\mathtt{edit\_input}(\mathbf{x}_t)\right)\right)\right)\]

Designing suitable editing functions allows us to adapt our models to various tasks, from motif scaffolding to multi-state protein design, without having to re-train our models.
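The sampling procedure with structure editing can be sketched as a short loop. This is a simplified illustration under stated assumptions: variance-expanding re-noising with a linear time grid, a generic `denoise(x, t)` callable standing in for the trained model, and a hypothetical `motif_editor` helper that overwrites coordinates without aligning the motif to the current structure.

```python
import numpy as np


def motif_editor(motif, start):
    """Hypothetical edit function: overwrite residues with motif coordinates."""
    motif = np.asarray(motif, dtype=float)

    def edit(x):
        x = x.copy()
        x[start:start + len(motif)] = motif
        return x

    return edit


def sample(denoise, n_res, steps=50, sigma_max=80.0,
           edit_input=None, edit_output=None, seed=0):
    """Iterative denoising with optional input and output editing."""
    rng = np.random.default_rng(seed)
    if edit_input is None:
        edit_input = lambda x: x
    if edit_output is None:
        edit_output = lambda x: x
    # Start from pure noise x_1.
    x = rng.normal(size=(n_res, 3)) * sigma_max
    times = np.linspace(1.0, 0.0, steps + 1)
    for t, t_next in zip(times[:-1], times[1:]):
        # Predict the clean structure from the edited input, then edit it.
        x0_hat = edit_output(denoise(edit_input(x), t))
        # Re-apply noise at the lower diffusion time t_next.
        x = x0_hat + t_next * sigma_max * rng.normal(size=x.shape)
    return x
```

Because the final step re-noises with zero variance, the returned structure is exactly the last edited prediction, so a constraint imposed by `edit_output` holds in the output.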

As we are using sparse models for the sake of runtime efficiency, we compare the runtime performance of our models to state-of-the-art protein diffusion models (RFdiffusion, Genie 2, Chroma and Proteina). To see how far we can push the model runtime, we tested both full and lightweight versions of our model. For each model, we generated ten protein backbones per length (50–1,000 residues) on a single NVIDIA RTX 3090 GPU (Fig. 2c). salad outperformed all other models in terms of both time per design at the default number of diffusion steps and time per model iteration. Compared with the fastest non-salad model (Proteina), our models reached up to 7× speed-ups, and outperformed RFdiffusion by up to two orders of magnitude on large proteins. Indeed, generating a 1,000-residue protein structure using salad on a single NVIDIA RTX 3090 GPU takes only 19 s on average, whereas RFdiffusion takes over 10 min. In addition, our models use fewer parameters than comparable protein structure generators (Extended Data Table 1). This suggests that we have indeed reached our primary goal of implementing a runtime- and parameter-efficient protein generative model.

Sparse models generate diverse and designable protein structures

Although a flexible sampler and good runtime performance are important properties of our models, we need to assess model performance in terms of the quality of the generated backbones. To compare salad model performance to state-of-the-art diffusion models and hallucination-based approaches, we generated 200 backbones each for proteins with sizes of 50 to 1,000 residues (50, 100–600 in increments of 100, 800 and 1,000). For each backbone, we designed eight sequences using ProteinMPNN and predicted their structures with ESMFold. Following current best practices2,27, we computed designability as the percentage of structures reaching an RMSD between design and predicted structure (scRMSD) < 2 Å and pLDDT > 70 for the best designed sequence (Fig. 3a). We assessed the impact of different noise distributions on protein structure generation by comparing model performance with both variance-preserving (VP) noise and variance-expanding (VE) noise with different standard deviations (80 Å and 100 Å). In addition, we include models trained with protein-length-dependent variance VP noise (VP scaled), as the variance of atom positions in protein backbones increases with the number of residues (Extended Data Fig. 4a). We compare the results of our models (VP, VP scaled and VE) with RFdiffusion1, Genie 2 (ref. 2) and Proteina42, as well as results from relaxed sequence optimization (RSO), the state-of-the-art hallucination-based method for protein design27, using the same evaluation approach for all methods.
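The designability computation described above reduces to a simple check per backbone. The sketch below assumes that the scRMSD and pLDDT values for each designed sequence have already been computed; the function names and the data layout are illustrative.

```python
def is_designable(candidates, max_scrmsd=2.0, min_plddt=70.0):
    """True if at least one designed sequence meets both success criteria.

    candidates: iterable of (scRMSD in Å, pLDDT) pairs, one pair per
    designed sequence of a single backbone.
    """
    return any(scrmsd < max_scrmsd and plddt > min_plddt
               for scrmsd, plddt in candidates)


def designability(backbones, max_scrmsd=2.0, min_plddt=70.0):
    """Percentage of backbones with at least one successful sequence."""
    successes = sum(is_designable(c, max_scrmsd, min_plddt) for c in backbones)
    return 100.0 * successes / len(backbones)
```

Note that both criteria must hold for the same sequence: a backbone with one low-scRMSD, low-pLDDT sequence and one high-pLDDT, high-scRMSD sequence does not count as designable.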

a, Example unconditional generations using salad, ranging from 50 to 1,000 residues (coloured by secondary structure; loop, grey; helix, red; strand, blue) and their ESMFold-predicted structures (light grey). The scRMSD between generation and ESMFold prediction is listed underneath each structure. b, scRMSD values using ESMFold for designed structures from 50 to 1,000 residues for salad, RFdiffusion1, Genie 2 (ref. 2), Proteina42 and RSO27. The region of successful designs with scRMSD < 2.0 Å is marked in light grey. The box centre line indicates the median scRMSD, the boundaries indicate the 1st and 3rd quartiles, and whiskers show the 1st or 3rd quartile + 1.5 times the interquartile range. c, Designability of generated structures by protein length from 50 to 1,000 residues. The shown RSO values are from ref. 27. Data in b and c are based on n = 200 generated backbones per length. d, Generations (coloured by residue index) and ESMFold predictions (light grey) for large protein structures generated using VE denoising starting from letter-shaped noise. Each structure is reported with its scRMSD between generation and ESMFold prediction. The grey letter shape in the top left corresponds to the shape of the noise the proteins were generated from. Scale bar (grey), 5 nm. The black bar corresponds to the same distance in the depicted protein structures. e,f, Model diversity computed as the fraction of designable clusters over designable structures (e) or all the generated structures (f). Clusters are generated using single-linkage clustering by pairwise TM-score with a cut-off of TM-score ≥ 0.6. Mean diversity across n = 10 random samples of 100 structures from 200 generated structures. Error bars indicate the minimum and maximum diversities across n = 10 samples. Area between the maximum and minimum sampled diversity is shaded.

Our models are able to generate designable backbones for a variety of protein lengths from 50 to 1,000 residues (Fig. 3a and Extended Data Fig. 2). The generated structures show low scRMSD, high scTM/pLDDT and diverse secondary structures that include both all-helix and all-strand topologies (Fig. 3a,b and Extended Data Figs. 3 and 5a,b). In the range from 50 to 400 residues, our VP model reaches comparable designability to previous VP models (Genie 2 and RFdiffusion), outperforming RFdiffusion and slightly underperforming compared with Genie 2, which was trained on a much larger dataset2 (Fig. 3c). At 400 residues, all VP models show a sharp increase in scRMSD accompanied by a decrease in designability (Fig. 3b,c). Although neither Genie 2 nor RFdiffusion produces any designable structures with 800 residues, salad VP still produces 4.8% designable structures at that size. We suspect that the decrease in designability with residue count is caused by VP diffusion models generating highly compact backbones (Extended Data Fig. 4a). We find that such backbones require a high fraction of glycine and alanine residues to avoid clashes (Extended Data Fig. 4b,c), which might decrease designability. A likely cause of this is the fixed variance of the VP diffusion process, which requires the model to reduce amino acid distances at small protein sizes, but increase amino acid distances at large protein sizes (Extended Data Fig. 4d). If the model trains predominantly on small proteins, this discrepancy might result in the observed compact backbones for larger proteins at inference. This issue with protein diffusion models is anecdotally known to the protein design community52.

By contrast, VP-scaled and VE models do not experience increases in scRMSD at the 400-residue threshold. VP-scaled models maintain median scRMSD < 2 Å for proteins of up to 600 residues, whereas VE models maintain this value for proteins up to 800 residues in length (Fig. 3b). This is mirrored by designability, where both VP-scaled and VE models outperform all VP models at protein lengths above 300 residues. However, neither VP-scaled nor VE models can maintain high designability for generated backbones of length 1,000, where both types of model drop below 20%. We hypothesized that this decrease in designability is due to the models being unable to properly model the global structure of large proteins. As large proteins generally consist of multiple domains in which residues of a single domain are close in space53, we tested if VE models initialized from noise shaped in a similar way would result in lower scRMSD and greater designability for large proteins. Instead of using normal-distributed noise centred on the coordinate origin, we first sample a set of centres and then add normal-distributed noise (with standard deviation of 80 Å or 100 Å) for 200 residues to each of these centres. At every subsequent denoising step, we use standard VE noise. Using this shaped-noise initialization leads to decreased scRMSD and increased designability for large proteins, reaching a designability of up to 36.7% for 1,000 amino acid proteins (Fig. 3c). This way, shaped noise matches or improves on the designability of RSO27 and Proteina42, the current state-of-the-art hallucination- and diffusion-based approaches to large protein design, respectively (Fig. 3b,c). Strikingly, salad with shaped noise produces designable 1,000-residue proteins with only ~8M parameters, compared with concurrent work introducing Proteina, which uses 200M parameters (Extended Data Table 1) and does not result in any designable structures at 1,000 residues (Fig. 3b,c).
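The shaped-noise initialization can be sketched as follows. The text specifies 200 residues per centre and a noise standard deviation of 80 Å or 100 Å, but not how the centres themselves are drawn; the normal distribution with scale `centre_scale` used below, and the function name `shaped_noise`, are assumptions.

```python
import numpy as np


def shaped_noise(n_res, centres=None, per_centre=200, sigma=80.0,
                 centre_scale=80.0, seed=0):
    """Initial noise in which consecutive blocks of residues share a centre.

    centres: optional (M, 3) array of user-specified centre positions, for
    example points placed along a letter shape. If omitted, centres are
    drawn from a normal distribution (an assumption, see lead-in).
    """
    rng = np.random.default_rng(seed)
    n_centres = -(-n_res // per_centre)  # ceiling division
    if centres is None:
        centres = rng.normal(size=(n_centres, 3)) * centre_scale
    centres = np.asarray(centres, dtype=float)
    if len(centres) < n_centres:
        raise ValueError(f"need at least {n_centres} centres for {n_res} residues")
    # Residues 0..199 use centre 0, residues 200..399 use centre 1, and so on.
    assignment = np.arange(n_res) // per_centre
    return centres[assignment] + rng.normal(size=(n_res, 3)) * sigma
```

Only the starting structure is shaped in this way; every subsequent denoising step would use standard VE noise, as stated above.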

As we can use shaped noise to generate large proteins with VE models, we investigated if we could control the shape of the generated backbones by directly specifying the positions of noise centres used for shaping. By sampling the initial noise centred on letter shapes, we were able to generate designable structures spelling out the name of our framework (Fig. 3d). In contrast to previous work on shape-conditioned protein design using the Chroma model21, our approach does not require an additional shape conditioner and results in designs with low scRMSD and high pLDDT (Fig. 3d). In terms of standard designability criteria using ESMFold (scRMSD < 2 Å, pLDDT > 70), 55% of the letters generated by our models are designable, whereas up to 92.5% of the letters are re-foldable according to the criteria used for Chroma (scTM > 0.7)21 (Extended Data Fig. 1). This indicates that our models can be used to generate designable backbones even on challenging out-of-distribution design tasks.

In addition to designability, we measure the diversity of protein backbones generated by our models. Lin et al.2 previously quantified the diversity of generated backbones by performing hierarchical clustering with single linkage on designable structures, using a TM-score threshold of 0.6 to define distinct clusters. Diversity is then computed as the fraction of designable clusters in all generated backbones: \(\mathtt{diversity}_{all}=\frac{\mathtt{\#clusters}}{\mathtt{\#all}}\) (ref. 2). This diversity measure implicitly includes backbone designability, as a lower number of designable backbones results in a lower number of clusters. A method trading off designability for increased diversity would, therefore, result in a low \(\mathtt{diversity}_{all}\) score. To disentangle diversity and designability, we decompose this diversity score as follows:

\[\mathtt{diversity}_{all}=\frac{\mathtt{\#clusters}}{\mathtt{\#designable}}\cdot\frac{\mathtt{\#designable}}{\mathtt{\#all}}=\mathtt{diversity}_{designable}\cdot\mathtt{designability}\]

When computing \(\mathtt{diversity}_{designable}\), only the diversity of designable structures is taken into account and diversity is not deflated by low designability. We argue that this is a more meaningful measure of diversity as only designable structures are used for protein design in the end.
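Both diversity scores can be computed from a pairwise TM-score matrix over the designable structures. The sketch below assumes that single-linkage clustering at a fixed cut-off is equivalent to finding connected components of the graph linking every pair with TM-score ≥ 0.6, and implements this with a small union-find; function names and the input layout are illustrative.

```python
def count_clusters(tm_scores, cutoff=0.6):
    """Number of single-linkage clusters at a TM-score cut-off.

    tm_scores: square, symmetric list of lists (or array) of pairwise
    TM-scores between designable structures.
    """
    n = len(tm_scores)
    parent = list(range(n))

    def find(i):
        # Follow parent links to the cluster root, compressing the path.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if tm_scores[i][j] >= cutoff:
                parent[find(i)] = find(j)
    return len({find(i) for i in range(n)})


def diversity_scores(tm_scores_designable, n_all, cutoff=0.6):
    """Diversity over all structures, over designable ones, and designability."""
    n_designable = len(tm_scores_designable)
    clusters = count_clusters(tm_scores_designable, cutoff)
    return {
        "diversity_all": clusters / n_all,
        "diversity_designable": clusters / n_designable if n_designable else 0.0,
        "designability": n_designable / n_all,
    }
```

For example, three designable structures out of four generated, two of which share a fold, give two clusters: a diversity over all structures of 0.5, a diversity over designable structures of 2/3 and a designability of 0.75, whose product is again 0.5.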

We compare our models with RFdiffusion1 and Genie 2 (ref. 2). For proteins of length 50–400 residues, we take random samples of 100 generated structures and compute both \(\mathtt{diversity}_{all}\) and \(\mathtt{diversity}_{designable}\) for each sample. To quantify the spread of diversity across samples, we show the median as well as the minimum and maximum diversities over ten samples (Fig. 3e,f). Our VP model achieves similar diversity to RFdiffusion for both \(\mathtt{diversity}_{designable}\) and \(\mathtt{diversity}_{all}\), whereas the VP-scaled model outperforms RFdiffusion on both metrics and approaches the diversity of Genie 2, outperforming it in terms of \(\mathtt{diversity}_{all}\) for 400-residue proteins. Our VE model shows reduced diversity at small protein sizes, but shows comparable \(\mathtt{diversity}_{designable}\) to RFdiffusion on 400-residue proteins and outperforms both Genie 2 and RFdiffusion in terms of \(\mathtt{diversity}_{all}\) for this protein length. This indicates that designable 400-residue structures generated by our non-VP models are comparably diverse to those generated using Genie 2. Their increased \(\mathtt{diversity}_{all}\) can be attributed to their improved designability (Fig. 3c). We, therefore, argue that \(\mathtt{diversity}_{designable}\) is a more meaningful measure of diversity as it is not inflated by changes in designability. Although our models slightly underperform Genie 2 in terms of diversity, we note that Genie 2 was trained on AlphaFold DB54, a larger and more diverse dataset.

Random secondary structure conditioning maximizes diversity

As our models can be conditioned to generate proteins with a given secondary structure, we investigated if conditioning models with random secondary structures could increase the diversity of generated backbones. We sampled random three-state secondary structure strings (helix, strand and loop) by selecting a random percentage of helices and strands, constructing secondary structure elements of random lengths that add up to the selected percentages and randomly arranging them into a secondary structure string (Fig. 4a). We then used our denoising models to produce backbones for each random secondary structure. Computing \(\mathtt{diversity}_{designable}\) for backbones of length 50–400 residues generated this way resulted in our models surpassing RFdiffusion at all sizes and matching or outperforming the diversity of Genie 2 in spite of having been trained on a much smaller dataset2 (Fig. 4b). In particular, random secondary structure conditioning resulted in an increased diversity for small proteins and saturated the diversity metric on proteins of length 200 or larger. However, increasing diversity this way resulted in decreased designability across all protein lengths and models (Extended Data Fig. 5c).
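The random secondary structure sampling can be sketched as below. The element length ranges, the one-letter codes (H, E, L) and the way the two percentages are drawn are assumptions; the text only specifies that random helix and strand percentages are chosen, split into elements of random length and randomly arranged.

```python
import random


def split_into_elements(total, min_len, max_len, rng):
    """Split `total` residues into segments of random length.

    The last segment may be shorter than `min_len` so that the lengths
    add up exactly to `total`.
    """
    lengths = []
    while total > 0:
        length = min(total, rng.randint(min_len, max_len))
        lengths.append(length)
        total -= length
    return lengths


def random_secondary_structure(n_res, seed=None):
    """Random three-state string: H (helix), E (strand), L (loop)."""
    rng = random.Random(seed)
    # Random helix and strand fractions; the remainder is loop.
    helix_fraction = rng.random()
    strand_fraction = rng.random() * (1.0 - helix_fraction)
    n_helix = int(helix_fraction * n_res)
    n_strand = int(strand_fraction * n_res)
    n_loop = n_res - n_helix - n_strand
    # Illustrative length ranges per element type (assumed values).
    elements = (
        ["H" * n for n in split_into_elements(n_helix, 4, 20, rng)]
        + ["E" * n for n in split_into_elements(n_strand, 3, 10, rng)]
        + ["L" * n for n in split_into_elements(n_loop, 2, 6, rng)]
    )
    rng.shuffle(elements)
    return "".join(elements)
```

In this simplified version, adjacent elements of the same type merge into one longer element after shuffling; a more careful sampler might interleave loops between helices and strands.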

a, Schematic of our random secondary structure sampling procedure. b, Diversity of designable structures generated using random secondary structure conditioning for 50–400 residues, compared with the diversity of designable structures generated using RFdiffusion and Genie 2. Mean diversity across n = 10 random samples of 100 backbones from 200 generated backbones. Error bars correspond to minimum and maximum diversities across these n = 10 samples. Area between the maximum and minimum sampled diversity is shaded. c, Secondary structure distribution for our models using no conditioning (left) or random secondary structure conditioning (right). d, Overview of diverse synthetic dataset generation using random secondary structure conditioning. e, Left: scatter plot of ESMFold scRMSD for all 50,000 generated structures. The line indicates the median scRMSD within a length window of 100 residues. Right: designability of the generated structures in the synthetic dataset computed for a length window of 100 amino acids. f, Single-shot performance of diffusion models trained on the synthetic dataset (synthetic 256) compared with the subset of proteins of length of <256 residues in PDB (PDB 256). Left: box plot of RMSD between the generated structures and ESMFold predictions for the argmax sequence prediction for models trained on the synthetic dataset and PDB. The centre line indicates the median scRMSD, box boundaries indicate the 1st and 3rd quartiles, and whiskers show the 1st or 3rd quartile + 1.5 times the interquartile range from the box. Right: designability of argmax sequence predictions for models trained on synthetic data and PDB based on n = 200 generated backbones per condition.

In addition to greatly increasing clustering-based diversity, this approach equalized secondary structure content biases inherent to our models (Fig. 4c and Extended Data Fig. 5a,b,d). Although all of our models showed a preference for alpha-helices for unconditional generation, conditioning resulted in a uniform distribution of secondary structure content. Quantifying the diversity in secondary structure content of the designs showed that conditioning increased the entropy of the secondary structure distribution relative to the non-conditioned baseline (Extended Data Fig. 5e,f). This indicates that conditioned designs are more diverse both in terms of shape and secondary structure content.

To test the limits of random secondary structure conditioning for generating diverse protein structures, we generated a synthetic dataset of 50,000 backbones with size between 50 and 256 residues (Fig. 4d; also see the ‘Synthetic dataset generation’ section). We designed ten sequences per backbone with ProteinMPNN; predicted their structures with ESMFold; and quantified the designability, diversity and novelty with respect to the PDB. Of the 50,000 backbones, 81.4% were designable. Across protein sizes, designs showed low median scRMSD and high overall designability (Fig. 4e). To quantify diversity, we clustered all backbones using Foldseek with TM-align alignment (TM-score threshold of 0.6) and a minimum coverage of 90% of the sequence to only cluster structures of similar sizes55. This yielded 45,713 clusters corresponding to 91.4% of the dataset. Of these cluster representatives, 37,661 (75.3% of all 50,000 backbones) were designable, yielding a dataset of diverse and designable structures. Using Foldseek to search the PDB for matches for all designable structures in the dataset resulted in 11,973 structures without a single match at TM-score > 0.5. In particular, most matches were concentrated in short backbones, and the majority of backbones with 200 or more residues had no matches in the PDB. This indicates that generating structures with a random secondary structure can explore parts of the protein fold space far outside the training set and result in ‘dark matter’ folds outside the PDB.

Synthetic data improve one-shot designability

Previous work on protein generative models49,56 reported that training on synthetic data with ProteinMPNN-designed sequences could improve model performance. To check if a synthetic dataset generated this way could be used to train improved protein generative models, we compared the performance of two salad models trained on proteins of size 50–256 residues. We trained one model on a subset of PDB with chains of length between 50 and 256. The other was trained on designable structures and sequences in our synthetic dataset. Using each model, we generated 200 backbones for protein sizes between 50 and 300 residues. As our models learn to predict a sequence as an auxiliary task during training (see the ‘Denoising model loss’ section), we generated a single sequence per backbone. We predicted the structure of each sequence using ESMFold24 to assess design success. The model trained on PDB resulted in high median scRMSD (>2 Å) and low designability (<20%) across all tested protein sizes (Fig. 4f). By contrast, the model trained on our synthetic dataset showed low median scRMSD and high designability for in-distribution tasks, with performance deteriorating for 300-residue proteins, which the model was not trained on (Fig. 4f). Directly generating successful backbone–sequence pairs circumvents the sequence design step in the protein design pipeline, reducing the number of tested sequences and AlphaFold or ESMFold evaluations for design filtering from 8 to 1. This greatly decreases the runtime of the protein design pipeline.

Structure editing for motif scaffolding

Although unconditional backbone generation can give an indication about the general performance of a protein generative model, it is rather removed from the realistic applications of protein generative models. Motif scaffolding provides a more realistic benchmark task. Models have to generate backbones that accommodate one or more functional motifs from natural proteins1. This has immediate applications in enzyme design (scaffolding theozymes)15,16, synthetic vaccine design7 and design of natural protein mimics57.

We compare the performance of salad models against the state-of-the-art protein diffusion models Genie 2 and RFdiffusion on a standardized motif-scaffolding benchmark. The benchmark, introduced in ref. 1, includes 24 single-motif tasks of varying difficulties and was extended in ref. 2 to contain six additional tasks in which the models have to scaffold more than one motif in a single backbone (multi-motif scaffolding). For a direct comparison with Genie 2 and RFdiffusion (which are both VP models), we only use VP models in this benchmark. As our models are not trained for multi-motif scaffolding by default, we approach this problem in two different ways. First, we use our structure-editing approach to edit the denoised structure by aligning the motif backbone and replacing the output coordinates by the motif’s coordinates (Extended Data Fig. 7a). This ensures that the motif is present in the final generated backbone, even if the model is not conditioned on the motif’s structure. We call this configuration salad+edit. Second, we train a separate multi-motif-conditioned model, which we will refer to as salad+cond (Extended Data Fig. 7b).
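The editing step used for salad+edit (align the motif to the denoised structure, then overwrite the corresponding coordinates) can be illustrated with a short NumPy sketch. It assumes coordinates are stored as one row per atom and that the motif atoms map one-to-one onto the rows given by `motif_index`; the function names `kabsch` and `edit_motif` are hypothetical and not taken from the salad code base.

```python
import numpy as np

def kabsch(mobile, target):
    """Rotation R and translation t minimizing ||mobile @ R.T + t - target||."""
    mob_c, tgt_c = mobile.mean(axis=0), target.mean(axis=0)
    H = (mobile - mob_c).T @ (target - tgt_c)   # 3x3 covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))      # avoid improper rotations
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_c - R @ mob_c
    return R, t

def edit_motif(denoised, motif, motif_index):
    """Superimpose `motif` on denoised[motif_index] and replace those atoms."""
    R, t = kabsch(motif, denoised[motif_index])
    edited = denoised.copy()
    edited[motif_index] = motif @ R.T + t
    return edited
```

Because the motif is moved rigidly, its internal geometry is preserved exactly in the edited structure, whereas the surrounding scaffold is left as predicted by the model.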

In the following, we compare the results for our method with the results for RFdiffusion and Genie 2 reported in ref. 2. To directly compare with these, we closely followed the same evaluation strategy. For each approach, we generated 1,000 backbones per scaffolding problem, designed eight sequences with ProteinMPNN and assessed the designability with ESMFold. We further filtered designable structures by their motif RMSD computed over all backbone atoms (N, CA, C and O). Structures were deemed successful if they reached motif RMSD < 1 Å. All successful structures were then clustered using TM-align at a TM-score cut-off of 0.6 to identify unique scaffolds for each problem. Evaluating success using CA-based motif RMSD instead had little to no impact on the number of either successful or unique designs for salad+cond (Extended Data Fig. 8a,b). By contrast, salad+edit showed large variability in success rates for some motifs (Extended Data Fig. 8a,b). This indicates that the lack of explicit motif conditioning may result in the model changing the motif orientation in the denoising step.

We found that both salad+edit and salad+cond solved 23/24 single-motif as well as 5/6 multi-motif design tasks (Extended Data Fig. 7c,d). Both salad+edit and salad+cond generated diverse backbones with low motif RMSD (Extended Data Fig. 7e). Only the motifs for 4jhw and 3ntn remained non-designable, consistent with Genie 2 (ref. 2). However, compared with Genie 2, we were able to solve one additional multi-motif-scaffolding task with 2b5i. Although RFdiffusion cannot be straightforwardly applied to multi-motif scaffolding, our approaches still outperformed it on single-motif scaffolding, solving one additional problem. Overall, salad+cond generated 1,610 (salad+edit, 1,446) unique scaffolds, slightly outperforming Genie 2 and dwarfing RFdiffusion’s 889 scaffolds (Extended Data Fig. 7c).

Thus, our models outperform RFdiffusion across all criteria, match Genie 2 in terms of the total number of unique scaffolds and solve one additional problem with 2b5i. Although a direct comparison of the number of unique backbones per scaffolding problem (Extended Data Fig. 8c) shows that there is currently no best model across all tasks, our models result in equal or more scaffolds for the majority of design tasks (21/24 versus RFdiffusion and 19/30 versus Genie 2 for structure editing; 20/24 and 20/30 for conditioning; Extended Data Fig. 8c). This indicates that both our approaches are competitive with the state of the art for single- and multi-motif scaffolding.

Structure editing for repeat protein design

As a second application to demonstrate the flexibility of our models combined with structure editing, we set out to generate repeat proteins. Similar to the approach used in previous work1, we can generate point-symmetric repeat proteins by symmetrizing the inputs of our models according to the action of a point group (Fig. 5a). As our model has residues attend to random neighbours, we also symmetrize the output of our models. All repeat subunits are aligned using the action of the point-symmetry group and averaged to produce a representative repeat unit. This can then be placed at a specified radius from the symmetry axis to control the radius of the generated symmetric repeats. Replicating the representative structure then gives us a symmetrized output structure. Applying this procedure resulted in designable backbones with low scRMSD for various cyclic symmetry groups (Fig. 5b).
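A minimal NumPy sketch of the output symmetrization for a cyclic group is given below. It assumes that the symmetry axis is the z axis through the origin, that subunits are stored consecutively with equal length, and that the radius is measured from the axis to the centroid of the representative unit; these conventions and the function names are assumptions made for illustration.

```python
import numpy as np

def rot_z(angle):
    """Rotation matrix for a rotation by `angle` (radians) about the z axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def symmetrize_cyclic(x, n_units, radius=None):
    """x: (n_units * unit_len, 3) coordinates of a C_n repeat protein."""
    units = x.reshape(n_units, -1, 3)
    # Undo the rotation of unit k so that all units overlay on unit 0.
    aligned = [units[k] @ rot_z(-2 * np.pi * k / n_units).T for k in range(n_units)]
    rep = np.mean(aligned, axis=0)              # representative repeat unit
    if radius is not None:
        # Shift the unit in the xy-plane so its centroid sits at `radius`.
        centre = rep.mean(axis=0)
        norm = np.linalg.norm(centre[:2])
        direction = centre[:2] / norm if norm > 1e-8 else np.array([1.0, 0.0])
        rep = rep.copy()
        rep[:, :2] += radius * direction - centre[:2]
    # Replicate the representative unit under the group action.
    return np.concatenate([rep @ rot_z(2 * np.pi * k / n_units).T for k in range(n_units)])
```

Applying `symmetrize_cyclic` to an already symmetric structure (with `radius=None`) leaves it unchanged, so the edit only removes the asymmetric component of the model output.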

a, Schematic of the structure editing procedure for point-symmetric repeat protein design. b, Example generated point-symmetric repeat proteins for different subunit sizes (50 and 100 residues) and cyclic groups (C3, C4, C6 and C7). The idealized symmetric design is shown in grey, and the ESMFold prediction for each design is coloured by the residue index. scRMSD values are reported for each example structure. c, Schematic of the structure editing procedure for screw-symmetric repeat proteins. d, Example screw-symmetric designs (grey) for different angles (30°–180°) and radii (0 Å and 10 Å), with a fixed inter-subunit translation of 12 Å. An ESMFold-predicted structure (turquoise) is superimposed onto the designed repeat (left). Additionally, a structure of the design predicted by AlphaFold 3 (ref. 72) replicated three times (coloured by residue index) is shown together with the scRMSD of the design to that extended repeat protein. e, Design success rates for cyclic and screw-symmetric designs using VP and VE models with different symmetry groups, radii and rotation angles based on n = 20 generated backbones per condition.

Although both hallucination5 and diffusion1 methods have been successful in producing point-symmetric proteins, only sequence-based13 machine learning methods and Rosetta-based protein design3,4 have been successfully applied to design extended repeat proteins that cannot be described by a point group. However, our editing approach is readily extended to arbitrary symmetry groups including screw (helical) symmetries, which cover the class of extended repeats described in refs. 3,4,13 (Fig. 5c). By explicitly setting the radius R, rotation angle α and translation T of a screw-symmetric repeat (Fig. 5c), we can generate designable backbones with the specified geometry (Fig. 5d). Generated backbones are structurally diverse, with topologies ranging from fully alpha-helical to fully beta-sheet. Repeat sequences designed using ProteinMPNN20 are reliably predicted to take on the designed structure with low scRMSD, even when extending the number of repeats by a factor of 3 (Fig. 5).
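To make the role of the three screw parameters concrete, the following sketch replicates a single repeat unit along a screw axis. The axis is assumed to be the z axis, the unit is assumed to be placed by its centroid, and `screw_replicate` is a hypothetical helper name; the sketch only illustrates the geometry and omits the alignment and averaging of subunits.

```python
import numpy as np

def screw_replicate(unit, n_units, radius, angle_deg, translation):
    """Replicate `unit` (atoms, 3) with rotation angle alpha and rise T per repeat."""
    # Centre the unit and place its centroid at distance `radius` from the axis.
    base = unit - unit.mean(axis=0) + np.array([radius, 0.0, 0.0])
    copies = []
    for k in range(n_units):
        a = np.deg2rad(angle_deg) * k
        c, s = np.cos(a), np.sin(a)
        R = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
        # Rotate by k * alpha about z, then translate by k * T along z.
        copies.append(base @ R.T + np.array([0.0, 0.0, translation * k]))
    return np.stack(copies)
```

With `radius=0` and `angle_deg=180`, for example, consecutive units are flipped about the axis and stacked with a fixed rise, which corresponds to one corner of the parameter range shown in Fig. 5d.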

Overall success rates for symmetric protein design vary between models (VP or VE) and design tasks (Fig. 5e). Strikingly, designabilities for both cyclic and screw-symmetric designs show a dependency on the specified radius and rotation angle, for example, C6 symmetry with a radius of 12 Å (r12) and a radius of 14 Å (r14). This is probably because the generated structures become highly compact for low radii, which is associated with a loss in designability (Extended Data Fig. 4).

Structure editing for multi-state design

Although both motif scaffolding and repeat protein generation demonstrate the applicability of structure editing to protein design, these tasks do not showcase the full flexibility of this approach. In both cases, external conditioning information is available in the form of a motif or symmetry group. Additionally, there is sufficient data to train structure generative models conditioned on either of these tasks. By contrast, designing amino acid sequences that can fold into multiple distinct backbone structures (multi-state design) fits none of these criteria: data on natural multi-state proteins are scarce58 and structure-based generative models are believed to be unsuitable for this task13. Therefore, we chose to demonstrate that salad models can solve a recent multi-state benchmark task introduced in ref. 13 by using structure editing to couple the outcome of multiple denoising processes—one for each state.

Following ref. 13, we designed backbones for a protein (the ‘parent’) that takes on a specified secondary structure when intact and a different secondary structure when split into two ‘child’ proteins. The N- and C-terminal parts of the parent share their secondary structure with the children, whereas the central part of the parent should transition from a beta-sheet to an alpha-helix when split (Supplementary Table III). As we cannot directly design the protein on the sequence level, we instead instantiate three separate denoising processes, one for each state (Fig. 6a). Each denoising process is conditioned on the secondary structure string of either parent, child 1 or child 2. At each denoising step, we enforce that the parts with the same secondary structure between parent and child share a similar three-dimensional geometry by aligning and averaging their substructures (Fig. 6a). Essentially, the denoising processes are coupled by conditioning their structures on each other. This approach results in three coupled structures per generation.
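The coupling between two denoising processes can be sketched as follows: the shared segments of parent and child are superimposed, averaged, and written back into both structures. This is an illustrative sketch under the assumption of equal weighting and rigid Kabsch superposition; `kabsch` and `couple_segments` are hypothetical names.

```python
import numpy as np

def kabsch(mobile, target):
    """Rotation R and translation t minimizing ||mobile @ R.T + t - target||."""
    mob_c, tgt_c = mobile.mean(axis=0), target.mean(axis=0)
    H = (mobile - mob_c).T @ (target - tgt_c)
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, tgt_c - R @ mob_c

def couple_segments(parent, child, parent_idx, child_idx):
    """Average the shared segment of parent and child after superposition."""
    p_seg, c_seg = parent[parent_idx], child[child_idx]
    R_cp, t_cp = kabsch(c_seg, p_seg)   # child segment into the parent frame
    R_pc, t_pc = kabsch(p_seg, c_seg)   # parent segment into the child frame
    new_parent, new_child = parent.copy(), child.copy()
    new_parent[parent_idx] = 0.5 * (p_seg + c_seg @ R_cp.T + t_cp)
    new_child[child_idx] = 0.5 * (c_seg + p_seg @ R_pc.T + t_pc)
    return new_parent, new_child
```

After this edit the shared segments of parent and child are congruent, while residues outside the shared region (for example, the central part of the parent) remain free to adopt a different structure in each state.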

a, Schematic of the structure editing procedure for multi-state protein design. b, Example partially successful multi-state design. c, Scatter plots of AlphaFold 2 scRMSD values for parent and child sequences with pLDDT > 75. Failed sequences are marked in grey, partial successes (parent and at least one child successful) are marked in purple and successful designs are marked in blue. d, Examples of successful multi-state designs. Generated structures for parent and child proteins are shown in grey; the predicted structures are coloured by their corresponding child protein. The N-terminal part is coloured purple and the C-terminal part is blue. AlphaFold 2 scRMSD values are reported for each parent and child design based on n = 1,000 backbones per condition.

We generated 1,000 structure triples and designed their sequences with ProteinMPNN, tying the sequence across parent and child structures20. To compare with the benchmark done in ref. 13, we evaluated the design success with the same criteria of AlphaFold RMSD < 3.0 Å and pLDDT > 75. Here 16% of the generated backbones resulted in partially successful designs, where all structures passed the pLDDT threshold, and a parent and at least one child passed the RMSD threshold (Fig. 6b). Although these designs did not meet all the criteria, they still resulted in a sequence predicted to change conformation in the parent state compared with the child state. Using the complete criteria, 2.9% of the backbones generated by our approach were successful, compared with the 0.05% of successes reported for ProteinGenerator (PG) in ref. 13. Note that we used ProteinMPNN for sequence design, whereas PG returns one sequence per backbone. However, even when success is evaluated at the sequence level, salad reached a success rate of 0.32%, outperforming PG by a factor of 6.

To check whether structure editing contributed to the success rate of multi-state design, we generated another 1,000 backbone triples using only secondary structure conditioning. This resulted in a per-backbone success rate of 0.4% and a per-sequence success rate of 0.06%, matching PG (Extended Data Fig. 9). This indicates that structure editing is indeed behind the increased performance observed for this multi-state design task. Overall, our structure editing approach generates varied low-RMSD solutions to this multi-state design problem (Fig. 6d), outperforming previous machine learning approaches. To the best of our knowledge, this is the first demonstration of multi-state design with a protein backbone denoising model.

Discussion

In this work, we present salad, a family of efficient sparse denoising models capable of generating designable and diverse protein structures up to a length of 1,000 amino acid residues. For unconditional protein structure generation, our models outperform RFdiffusion both in terms of designability and diversity for all protein lengths, closely approaching the diversity of Genie 2 (which was trained on a larger and more diverse dataset). We bridge this gap in diversity by applying random secondary structure conditioning at the cost of designability. This allows us to generate a large, highly diverse dataset of designable structures novel to the PDB. For longer proteins with 400–1,000 residues, our models clearly outperform both RFdiffusion and Genie 2, approaching the designability observed for hallucination-based methods27. Although the concurrently developed Proteina42 matches salad’s designability for up to 800-residue proteins, it does so at the cost of increased runtime and a 25-fold increase in the number of parameters, and it does not generalize to 1,000-residue proteins. Therefore, salad pushes the boundaries of designability and diversity across protein lengths up to 1,000 and greatly reduces the time-per-design value due to its efficient sparse architecture. The ability to design large proteins in a high-throughput manner can open up new possibilities for designing increasingly large and complex molecular machines.

We expand the capabilities of salad by combining it with structure editing. By editing the output of a salad model at each denoising step, we can rapidly prototype generators for protein design tasks unseen during training. We show that this combination can generate designable, low-RMSD backbones for a variety of tasks. We design shape-conditioned proteins, scaffold multiple functional motifs, generate repeat proteins and produce protein sequences predicted to adopt distinct folds when cleaved. For motif scaffolding, salad matches or exceeds the performance of both RFdiffusion and Genie 2. For repeat protein design, we generate screw-symmetric repeats, which, to our knowledge, have not been explored using protein structure diffusion models beyond a single mention in ref. 21. Instead, such proteins have so far been designed using Rosetta4 or sequence-based design methods3,13. For multi-state protein design, we reproduced a design task introduced in ref. 13 and achieved a success rate one order of magnitude higher than the original work. This indicates that salad is, in fact, sufficiently flexible to generate designs even for tasks like multi-state design, which are believed to be unfavourable for structure generative models13.

Although salad produces acceptable results on computational benchmarks, this work does not contain additional experimental validation. While our ESMFold- and AlphaFold-based approach to measuring designability has been previously shown to select experimentally viable protein designs1,13,20,28, it is ultimately not the ideal metric. Neither AlphaFold nor ESMFold can perfectly distinguish experimentally viable from non-viable designs28,59: both models are known to be vulnerable to adversarial protein sequences5,27,32 and have limited sensitivity to amino acid masking and mutation59. Nonetheless, prior work has produced extensive experimental validation, showing that a pipeline using a structure generator with ProteinMPNN sequence design and structure predictor filtering can produce experimentally viable designs at a reasonable rate1,13,20. We argue that salad matching or exceeding previous, experimentally validated models in terms of ESMFold/AlphaFold design success alleviates concerns about the lack of experimental validation. In particular, salad is part of the same pipeline of structure generation, ProteinMPNN sequence design and AlphaFold 2/ESMFold selection as RFdiffusion1. As salad was trained independently from ProteinMPNN and AlphaFold 2/ESMFold, it is unlikely that it has learned to generate backbones that are adversarial for both. We also emphasize that the focus of this work is on developing more efficient and versatile backbone generators, not on presenting an all-in-one solution for protein design.

Another limitation of salad is that it is currently restricted to a limited training set. salad is trained on protein structures in the PDB, with all small molecules, ions, waters and nucleic acids removed. Therefore, its uses for enzyme design and small-molecule binder design are limited. In particular, more recent versions of RFdiffusion can design proteins in the presence of small molecules16,19. The salad architecture is likely capable of handling small molecules with minor modifications, which makes this an attractive step to address in the future. In addition, salad struggles to match the diversity of Genie 2 (ref. 2) without using random secondary structure conditioning. Genie 2 was trained on a clustered subset of the AlphaFold database, which greatly exceeds our PDB dataset in both size and structural diversity2,54,60. We believe that this issue can be addressed in future work by training salad models on AlphaFold DB.

In this work, we compare salad to RFdiffusion1 and Genie 2 (ref. 2) as well as the concurrent work of Proteina42. Although many other protein diffusion models exist37,40,61,62,63, we argue that comparing to these ones in particular is sufficient to establish salad to be on par with the state of the art, as prior work has shown that they outperform most other protein structure generative models1,2,42. The designability of salad generations for up to 1,000-residue-long proteins as well as its runtime performance give it an edge over comparable models. This should enable salad to fill the niche it is designed for, providing efficient and versatile backbone generation in the first step of the protein design pipeline.

Methods

Protein structure denoising models

Denoising protein structure models are trained to reconstruct a noise-free structure x from a noisy input structure. To train our models, we sample random time points t ∈ [0, 1], where t = 0 corresponds to a noise-free structure and t = 1 corresponds to pure noise. Depending on the noise schedule, we then convert t into a noise scale σt. We use three different noise schedules: VP noise with a cosine schedule64 and constant-standard-deviation noise (VP), VP noise with a protein-size-dependent standard deviation (VP scaled) and VE noise (VE).

For VP noise, a noisy structure xt is then generated by sampling

where σnoise = 10 Å is the standard deviation of the noise at time t = 1. For VP-scaled noise, we instead set the standard deviation σnoise equal to the standard deviation of CA positions in input structure x:

The fully noised structure \({\bf{x}}_{1}^{VP-scaled}\) will, therefore, have the same standard deviation as the alpha-carbons of the starting structure xCA. Finally, for VE noise, instead of sampling diffusion time t, we directly sample a noise scale from a log-normal distribution, following ref. 65:

and sample noisy structures according to

The models are then trained to reconstruct x from xt by minimizing the squared error \(\Vert {\bf{x}}-{f}_{\theta }({\bf{x}}_{t})\Vert _{2}^{2}\) between x and the model prediction fθ(xt), together with additional auxiliary losses (see the ‘Denoising model loss’ section).
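The three noise types can be sketched in NumPy as below. The display equations are not reproduced in this text, so the functional forms are assumptions based on standard practice: a simplified cosine schedule with ᾱ(t) = cos²(πt/2) for VP noise, the standard deviation of centred CA coordinates for VP-scaled noise, and a log-normal noise scale with log-mean 1.4 and log-standard-deviation 1.6 for VE noise. All function names are hypothetical.

```python
import numpy as np

def vp_noise(x, t, rng, sigma_noise=10.0):
    """Variance-preserving noise; pure noise with std sigma_noise at t = 1."""
    alpha_bar = np.cos(0.5 * np.pi * t) ** 2      # assumed cosine schedule
    eps = rng.normal(size=x.shape)
    return np.sqrt(alpha_bar) * x + np.sqrt(1.0 - alpha_bar) * sigma_noise * eps

def vp_scaled_noise(x, x_ca, t, rng):
    """VP noise whose scale matches the spread of the CA atoms (assumed form)."""
    sigma_noise = (x_ca - x_ca.mean(axis=0)).std()
    return vp_noise(x, t, rng, sigma_noise=sigma_noise)

def ve_noise(x, rng, log_mean=1.4, log_std=1.6):
    """Variance-exploding noise with a log-normally distributed scale."""
    sigma = rng.lognormal(mean=log_mean, sigma=log_std)
    return x + sigma * rng.normal(size=x.shape), sigma
```

Under these assumptions, VP noise interpolates between the clean structure at t = 0 and an isotropic Gaussian of fixed width at t = 1, whereas VE noise leaves the structure in place and only adds noise of increasing magnitude.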

Our models are trained using self-conditioning. At each training step, we sample two noisy structures \({{\bf{x}}}_{t} \, {\rm{and}} \, {{\bf{x}}}_{t}^{{\prime} }\). With probability 50%, we predict x′ from \({\bf{x}}_{t}^{{\prime} }\) without self-conditioning. We then predict x from xt using x′ as an additional input. At inference, the model passes the current noised structure xt as well as its previous prediction xprev to predict x.

In addition to self-conditioning, our models can also be conditioned on amino acid sequence, partial structure information, three-state secondary structure (helix, strand and loop), block contacts between secondary structure elements, inter-chain contact information and hotspot residues interacting with other protein chains1. During training, conditioning information is provided at random for 50% of training examples. Each conditioning modality is further randomly masked for a random fraction between 20% and 100% of the residues. To determine inter-residue and inter-chain contacts for conditioning, we compute pairwise CA distances between residues. Residues are considered in contact if their CA distance is < 8 Å. Two chains or two secondary structure elements are considered in contact if at least one pair of residues between them is in contact. Residues are considered hotspot residues if they are in contact with at least one residue in another chain. Partial structure information is presented to the model as a matrix of inter-residue CB distances together with a mask of amino acid pairs with valid conditioning information. Partial structure information is masked out for between 20% and 80% of residues in any training example.
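The contact and hotspot definitions above translate directly into a few array operations. The sketch below assumes CA coordinates in an array of shape (residues, 3) and an integer chain identifier per residue; the function names are hypothetical.

```python
import numpy as np

def residue_contacts(ca, cutoff=8.0):
    """Boolean (N, N) matrix: True where the CA-CA distance is below cutoff (Å)."""
    dist = np.linalg.norm(ca[:, None] - ca[None], axis=-1)
    return dist < cutoff

def hotspot_residues(ca, chain_ids, cutoff=8.0):
    """True for residues in contact with at least one residue of another chain."""
    contacts = residue_contacts(ca, cutoff)
    other_chain = chain_ids[:, None] != chain_ids[None]
    return (contacts & other_chain).any(axis=1)

def chains_in_contact(ca, chain_ids, chain_a, chain_b, cutoff=8.0):
    """True if at least one residue pair between the two chains is in contact."""
    contacts = residue_contacts(ca, cutoff)
    return bool(contacts[np.ix_(chain_ids == chain_a, chain_ids == chain_b)].any())
```

The same pattern applies to block contacts between secondary structure elements, with element labels taking the place of chain identifiers.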

To sample from a trained denoising model, we initialize a backbone x with all atom positions set to 0. We then partition the interval [0, 1] into N equally spaced time steps (t0…tN) with tN = 1. For each time step t starting with tN, we apply noise with a chosen noise schedule to x, resulting in a noised structure xt. This noisy structure is denoised by the model, resulting in a new structure x. Repeating this process gradually reduces the noise level and results in a denoised protein backbone. Our approach differs from the denoising processes described in the literature: protein structure denoising diffusion models generally sample structures xt according to a distribution q(xt|xt+s, x), which depends on both denoised structure x and an earlier noisy structure xt+s at diffusion time t + s (refs. 1,2,35). Instead, our approach samples from q(xt|x = fθ(xt+s)), removing any direct dependency on the previous noisy structure xt+s, so that xt depends on it only through the model fθ. This approach has been previously reported for categorical text diffusion models66 and more recently for amino acid sequence diffusion models13.
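The sampling procedure can be summarized in a short, model-agnostic loop. In this sketch, `denoise`, `add_noise` and `edit` are placeholder callables standing in for the trained model, the chosen noise schedule and an optional structure-editing function; their signatures are assumptions, and the treatment of the final step at t0 is simplified.

```python
import numpy as np

def sample(denoise, add_noise, num_residues, num_steps, rng, edit=None):
    """Re-noise-and-denoise sampling; x_t depends on the past only through x."""
    x = np.zeros((num_residues, 3))        # start with all positions at 0
    x_prev = x                             # previous prediction for self-conditioning
    times = np.linspace(0.0, 1.0, num_steps + 1)[1:]   # t_1 ... t_N with t_N = 1
    for t in times[::-1]:
        x_t = add_noise(x, t, rng)         # sample x_t from q(x_t | x)
        x = denoise(x_t, t, x_prev)        # model prediction of the clean structure
        if edit is not None:
            x = edit(x)                    # e.g. symmetrize or insert a motif
        x_prev = x
    return x
```

Because each noisy structure is drawn from the current denoised estimate alone, `edit` can translate, rotate or replace parts of `x` freely without having to keep an earlier noisy structure consistent with the edit.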

We chose this approach not because of its success in sequence diffusion models but to enable the arbitrary modification of denoised structures x without having to take into account xt. To use our models for protein generation tasks they were not trained for, we wanted to allow the arbitrary editing of the denoised structure—for symmetrization, to introduce structural motifs for scaffolding and to couple multiple denoising processes for multi-state design. This necessitates translating, rotating and replacing parts of the denoised structure. Changing the denoised structure x this way without also adjusting xt+s in a compatible way could result in failure to generate valid protein structures.

Supplementary Algorithm 1 shows the generative process for a model involving conditioning information c, self-conditioning and structure editing.

Model architecture

Our sparse denoising models consist of three separate modules: an Encoder, which encodes the ground-truth backbone atom positions (N, CA, C, O and idealized CB) xgt and adds 15 pseudoatom positions for each residue to produce the denoising model input x; a DenoisingModule, which receives noised positions xt and is trained to reconstruct x; and an amino acid decoder (AADecoder), which predicts an amino acid sequence and side-chain conformations for each residue. The model is trained with self-conditioning, receiving a previously predicted structure xprev and per-residue representation localprev as additional inputs. All modules are based on a sparse transformer architecture44 with pre-normalization45.

DenoisingModule

The DenoisingModule consists of six denoising blocks based on a pre-norm transformer architecture45. Every block updates the per-residue representation locali of size local_size = 256 and residue atom positions x. We save the trajectory of x values across all blocks to apply losses over the entire denoising trajectory.

Supplementary Algorithm 2 shows an overview of a block in the DenoisingModule. We replace standard self-attention in the transformer block by a sparse version of IPA (SparseIPA)23. Instead of computing the attention matrix and pair features for all amino acid pairs, we compute them for a set of precomputed neighbours. This reduces the complexity of attention from O(N²) to O(N ⋅ K), where K is the number of neighbours per residue. To support conditioning on structure information, we use two SparseIPA layers. The first IPA layer operates on the current set of position features, whereas the second one operates on previous positions from self-conditioning, as well as block contact and distance conditioning information. For multi-motif models, we instead run IPA using motif information first, followed by IPA on the current position features.

Following SparseIPA, the per-residue features locali are updated using a GeGLU-gated feed-forward layer with global pooling of the hidden state (Update; Supplementary Algorithm 5). This combination of sparse attention and global mean pooling of features allows the DenoisingModule to learn global dependencies without having to use full O(N²) attention.

Neighbour selection

To compute sparse attention features, we select a set of neighbours for each residue based on their sequence and CA distances. For each residue, we choose the 16 nearest neighbours by residue index. Then, we select an additional 16 neighbours by CA distance, excluding previously selected neighbours. Finally, we select #random neighbours at random with probability \(1/{d}_{CA}^{3}\) following ref. 21 and #cond neighbours based on pairwise conditioning information, such as block contact conditioning1 or pairwise distances. All default models have #random = 32 when computing neighbours on the current set of positions and #random = #cond = 16 when computing neighbours on self-conditioning information. This results in a total of 64 neighbours per amino acid. Multi-motif-conditioned models have #random = 32 and do not use additional neighbours from the conditioning information. It is important to note that unlike Chroma21, a new set of neighbours is computed for each DenoisingBlock, as each block updates the residue positions x.
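The three-stage neighbour selection (sequence neighbours, spatial neighbours, then random neighbours weighted by 1/d³) can be sketched as follows. The sketch covers only the default configuration on the current positions, omits neighbours derived from conditioning information, and assumes that a residue is not its own neighbour; `take_nearest` and `select_neighbours` are hypothetical names.

```python
import numpy as np

def take_nearest(score, available, k):
    """Indices of the k available entries with the smallest score."""
    candidates = np.flatnonzero(available)
    return candidates[np.argsort(score[candidates], kind="stable")][:k]

def select_neighbours(ca, rng, n_index=16, n_dist=16, n_random=32):
    """Return, for each residue, an index array of its selected neighbours."""
    n = len(ca)
    dist = np.linalg.norm(ca[:, None] - ca[None], axis=-1)
    index_gap = np.abs(np.arange(n)[:, None] - np.arange(n)[None]).astype(float)
    neighbours = []
    for i in range(n):
        available = np.ones(n, dtype=bool)
        available[i] = False                     # assumption: exclude self
        picked = []
        # 1) nearest by residue index, 2) nearest by CA distance among the rest.
        for score, k in ((index_gap[i], n_index), (dist[i], n_dist)):
            idx = take_nearest(score, available, k)
            available[idx] = False
            picked.append(idx)
        # 3) random neighbours sampled with probability proportional to 1/d^3.
        candidates = np.flatnonzero(available)
        k = min(n_random, len(candidates))
        if k > 0:
            w = 1.0 / np.maximum(dist[i, candidates], 1e-3) ** 3
            picked.append(rng.choice(candidates, size=k, replace=False, p=w / w.sum()))
        neighbours.append(np.concatenate(picked))
    return neighbours
```

With the default counts, each residue receives 16 + 16 + 32 = 64 neighbours whenever the protein is long enough, which matches the total stated above.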

Pair features

As part of SparseIPA, we compute amino acid pair features for each amino acid and its selected neighbours. Distances between all pairs of backbone atoms (N, CA, C, O and idealized CB) are computed for each pair and featurized using 16 Gaussian Radial Basis Functions20 uniformly spaced between 0 Å and 22 Å. The bandwidth is set to the distance between Radial Basis Function centres, σ = 1.375 Å. In addition to distance features, we also compute the direction from the CA atom of each residue to each of its neighbours, the relative rotation between residues and the atom positions of a residue and its neighbour in local coordinates. These features are then flattened and linearly projected to pair_size = 64 pair features. For models with minimal pair features, we instead only compute inter-residue distances and relative rotations (Supplementary Algorithm 4).
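The distance featurization can be illustrated with the sketch below. The stated bandwidth corresponds to 22 Å / 16 = 1.375 Å. The Gaussian form exp(−((d − μ)/σ)²) and the placement of centres with both endpoints included are assumptions; with endpoint-inclusive placement the actual centre spacing is 22/15 ≈ 1.47 Å, so the original implementation may place the centres differently.

```python
import numpy as np

def rbf_features(dist, d_min=0.0, d_max=22.0, n_rbf=16):
    """Expand distances (any shape) into n_rbf Gaussian radial basis features."""
    centres = np.linspace(d_min, d_max, n_rbf)   # assumed centre placement
    sigma = (d_max - d_min) / n_rbf              # 22 / 16 = 1.375 Å
    return np.exp(-(((dist[..., None] - centres) / sigma) ** 2))
```

For five backbone atoms per residue, each residue pair has 5 × 5 = 25 inter-atomic distances, so this expansion yields 25 × 16 = 400 distance features per pair before the linear projection.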

Update module

After applying SparseIPA, we update per-residue features locali using a gated feed-forward layer. We first update locali using the atom positions in each residue. Then, we linearly project and pool amino acid features within and across chains. Per-residue, per-chain and per-complex features are then summed and passed through a final linear layer (Supplementary Algorithm 5). Combined with SparseIPA, this allows the model to learn global dependencies within a protein complex without the need for full O(N²) attention.

Equivariant position update

The final component of a DenoisingBlock updates the atom positions xi for each residue in an equivariant manner. Per-residue features locali are linearly projected to a set of position updates, scaled by a unit factor of 10 Å and added to the current positions xi in the local frame of each residue i. The resulting updated positions are then transformed back into global coordinates (Supplementary Algorithm 6).
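The following sketch illustrates why an update applied in local residue frames is equivariant. The construction of frames from N, CA and C by Gram–Schmidt orthogonalization is an assumption (the frame definition is not given in this section), and `delta_local` stands in for the linear projection of locali; function names are hypothetical.

```python
import numpy as np

def backbone_frames(n, ca, c):
    """Per-residue rotation (columns = local axes) and origin from N, CA, C."""
    e1 = c - ca
    e1 /= np.linalg.norm(e1, axis=-1, keepdims=True)
    v2 = n - ca
    e2 = v2 - (v2 * e1).sum(-1, keepdims=True) * e1
    e2 /= np.linalg.norm(e2, axis=-1, keepdims=True)
    e3 = np.cross(e1, e2)
    return np.stack([e1, e2, e3], axis=-1), ca

def update_positions(x, R, origin, delta_local, scale=10.0):
    """x, delta_local: (residues, atoms, 3); update applied in local frames."""
    # Global -> local coordinates: R^T (x - origin).
    local = np.einsum("rji,raj->rai", R, x - origin[:, None])
    local = local + scale * delta_local          # scaled by 10 Å
    # Local -> global coordinates: R local + origin.
    return np.einsum("rij,raj->rai", R, local) + origin[:, None]
```

Since `delta_local` is expressed relative to each residue's own frame, rotating and translating the input structure rotates and translates the updated positions in exactly the same way.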

Structure encoder

The Encoder uses a simplified version of the DenoisingModule with the same feature size as the main DenoisingModule. As the Encoder does not change the protein backbone xgt, we use a precomputed set of neighbours for each residue. Each amino acid is assigned a set of 32 nearest neighbours based on the CA distance. The trunk of the Encoder consists of two blocks of SparseIPA followed by a GeGLU layer46. After the second block of the Encoder, the residue representation is used to generate 15 pseudoatom positions per residue, which are combined with the backbone atom positions. The resulting structure x is used to train the DenoisingModule.

AADecoder

The AADecoder uses three blocks of the same type as the Encoder, together with a set of 32 nearest neighbours per amino acid computed on the denoised CA positions. In addition to denoised positions x and DenoisingModule features locali, the AADecoder also receives a partially masked amino acid sequence during training. A random fraction between 1% and 100% of the amino acids in each training sequence is replaced by a mask token. The AADecoder is then trained to predict the masked amino acids with a cross-entropy loss. This corresponds to the training objective of an autoregressive diffusion model67.
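The sequence masking used to train the AADecoder can be sketched as below. The mask token `"X"`, the uniform distribution of the masked fraction and the rule of masking at least one position are assumptions; the text only states that the fraction lies between 1% and 100%.

```python
import random

MASK = "X"  # hypothetical mask token, not taken from the salad code


def mask_sequence(sequence, rng):
    """Replace a random fraction (1% to 100%) of residues by MASK.

    Returns the masked sequence as a list and the sorted masked positions.
    """
    n = len(sequence)
    fraction = rng.uniform(0.01, 1.0)        # assumption: uniform fraction
    n_masked = max(1, round(fraction * n))   # assumption: at least one mask
    positions = set(rng.sample(range(n), n_masked))
    masked = [MASK if i in positions else aa for i, aa in enumerate(sequence)]
    return masked, sorted(positions)
```

The cross-entropy loss would then be evaluated only at the returned masked positions, with the unmasked residues serving as context.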

Model variants

We trained denoising models for three different noise schedules: VP with σ = 10 Å; VP with σ = σ(xCA) dependent on the standard deviation of CA atoms in the training example; and VE diffusion with σ ~ LogNormal (1.4 Å, 1.6 Å). For each noise schedule, we trained three ablated models: a model with full pair features and Fourier time embedding35; a model without time-embedding features; a model with minimal pair features and no time-embedding features.

Denoising model loss

Our denoising models are trained using a combination of standard denoising and auxiliary losses. A per-block denoising loss is computed on residue (pseudo) atom positions for the output \({f}_{{{b}}}^{\;ar}({{\bf{x}}}_{t})\) of each DenoisingBlock, where r are residues and a are the atoms in each residue:

The norm ||xb − x|| is clipped to 10 Å to stabilize training and the loss is averaged over residues r and (pseudo) atoms a in each residue. The losses for each block are then weighted together to result in a trajectory denoising loss:

where the final prediction is weighted by a factor of 2 to increase its importance in the final loss. This is combined with an auxiliary all-atom denoising loss using the all-atom structure \({f}_{atom}^{ar}({{\bf{x}}}_{t})\) predicted by the AADecoder:

To ensure that the models learn to reproduce the relative orientations between amino acid residues, we also introduce a rotation denoising loss for each block following RFdiffusion1:

where Rr and Rr,gt are the rotation matrices defined by the backbone frame of each residue in the predicted and ground-truth structures, respectively1. This results in a trajectory denoising loss for residue rotations as

In addition to using unaligned denoising losses, we also compute a squared frame-aligned point error (FAPE) loss \({{\mathcal{L}}}_{{\rm{FAPE}}}^{2}\) (ref. 23) over the trajectory of predictions \({f}_{b}^{\;ar}({{\bf{x}}}_{t})\) as well as a local FAPE loss \({{\mathcal{L}}}_{local}\) on the predicted all-atom structure. Rather than computing the FAPE over all amino acid pairs, we compute \({{\mathcal{L}}}_{fape}\) over the 64 nearest neighbours in the ground-truth structure and \({{\mathcal{L}}}_{local}\) over the 16 nearest neighbours. As with the denoising loss, the FAPE losses are clipped to a maximum of 10 Å. Finally, the structural losses also include AlphaFold’s structural violation loss \({{\mathcal{L}}}_{viol}\) (ref. 23) to penalize clashes in denoised structures.

The models are also trained with a number of non-coordinate losses, consisting of a distogram \({{\mathcal{L}}}_{dist}\) (ref. 23) and amino acid prediction \({{\mathcal{L}}}_{aa}\) and secondary structure \({{\mathcal{L}}}_{dssp}\) cross-entropy losses.

The final weighted loss of the model is then as follows.

In this loss, \({{\mathcal{L}}}_{viol}\) and \({{\mathcal{L}}}_{local}\) are set to zero in the high-noise regime (diffusion time t > 0.5 for VP models; noise σt > 5.0 Å for VE models), as the model is unlikely to learn to predict non-clashing structures at high noise levels.
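The clipped per-block denoising loss and its combination over the block trajectory, described earlier in this section, can be sketched as follows. Several details are assumptions rather than statements about salad: the norm is taken unsquared, all non-final blocks receive a weight of 1 and the weighted sum is normalized by the total weight. Only the clipping at 10 Å, the averaging over atoms and the factor of 2 on the final prediction come from the text.

```python
import math


def clipped_denoising_loss(pred, target, clip=10.0):
    """Mean over atoms of min(||pred - target||, clip), in angstroms."""
    errors = [min(math.dist(p, t), clip) for p, t in zip(pred, target)]
    return sum(errors) / len(errors)


def trajectory_loss(block_preds, target, final_weight=2.0):
    """Combine per-block losses; the last block is weighted by final_weight."""
    losses = [clipped_denoising_loss(p, target) for p in block_preds]
    # Assumption: weight 1 for every block except the last.
    weights = [1.0] * (len(losses) - 1) + [final_weight]
    # Assumption: normalize by the sum of the weights.
    return sum(w * l for w, l in zip(weights, losses)) / sum(weights)
```

Clipping bounds the contribution of badly placed atoms, so a few large errors at high noise levels cannot dominate the gradient.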

Structure autoencoder models

Our sparse autoencoders were implemented to have the same graph transformer architecture as the denoising models. Each autoencoder model consists of a single Encoder block with SparseIPA over 32 nearest neighbours for each residue, followed by a GeGLU layer46. The resulting per-residue representation locali is then layer normalized68 and linearly projected to a latent vector \({z}_{i}:{{\mathbb{R}}}^{{\mathtt{latent}}\_{\mathtt{size}}}\) for each residue i. For model variants with VQ48 enabled, zi is then quantized with a codebook of size 4,096.

This latent representation is decoded by a Decoder, which consists of six blocks of SparseIPA followed by the same Update and position_update layers used in our denoising models. The decoder is trained with zero to three recycling steps for each batch, initializing the positions x of each recycling step with the result of the previous step xprev. The first recycling step starts from randomly initialized positions \({\bf{x}} \sim {\mathcal{N}}(0,1)\). We train models with three different decoder variants: an SE(3) equivariant model using the same neighbour selection and features as used in our denoising models; an equivariant model with per-block distogram prediction (EQ+dist; Supplementary Algorithm 7) and an additional SparseIPA layer using distogram nearest neighbours (Supplementary Algorithm 8); and a non-equivariant model directly embedding atom positions without first projecting them to residue local coordinate systems. This results in a total of six models trained (three decoder variants with and without VQ).

All the models are trained with \({{\mathcal{L}}}_{{\rm{FAPE}}}^{2}\) over the entire denoising trajectory and \({{\mathcal{L}}}_{{\rm{local}}}\) for the final all-atom structure prediction. Amino acid cross-entropy \({{\mathcal{L}}}_{{\rm{aa}}}\) is used as an auxiliary loss. In addition, models with per-block distogram loss are trained with distogram cross-entropy for each layer \({{\mathcal{L}}}_{{\rm{dist}}}\). This results in a combined autoencoder loss of

Training dataset

We trained our models on a snapshot of PDB collected in October 2023 excluding any PDB entries submitted after 31 December 2020. PDB entries were then filtered for a resolution of ≤ 4 Å. Entries containing protein chains of length less than 16 were excluded from training and non-amino-acid residues were removed from chains in the dataset. We clustered all protein chains in the resulting dataset using mmseqs2 (git commit 4f046dd)69 with a 30% sequence identity cut-off. To train our model, we generated input batches of 1,024 residues. Batches were constructed by repeatedly sampling structures from the dataset, until the total number of residues reached 1,024. If the total number of residues would exceed 1,024, the batch was zero padded instead, and the sampled structure was included in the next batch. At each epoch, we sampled clusters from the dataset without replacement, selecting a random chain identifier and biological assembly for each cluster. If the selected chain belonged to a complex and the entire complex fit in the current batch, we added the complex to the batch with a probability of 50%. Otherwise, we added only the selected chain.
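The batch construction rule can be illustrated with a short sketch that operates on structure lengths only. The function name and the handling of structures longer than 1,024 residues (skipped here) are assumptions, as the text does not say how such structures are treated.

```python
def build_batches(structure_lengths, batch_size=1024):
    """Greedily pack sampled structures into fixed-size residue batches.

    Returns a list of (structure_indices, n_padding_residues) tuples.
    """
    batches = []
    current, used = [], 0
    for idx, length in enumerate(structure_lengths):
        if length > batch_size:
            continue  # assumption: oversized structures are skipped
        if used + length > batch_size:
            # Close the batch with zero padding and carry the sampled
            # structure over to the next batch.
            batches.append((current, batch_size - used))
            current, used = [], 0
        current.append(idx)
        used += length
    if current:
        batches.append((current, batch_size - used))
    return batches
```

For example, sampled lengths of 600, 300 and 200 residues give a first batch holding the first two structures plus 124 padded positions, with the third structure starting the next batch.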

Model training

All the denoising models were trained for 200,000 iterations on the dataset with a mini-batch size of 1,024 and 32 batches per iteration, resulting in a total of 32,768 residues per iteration. Structure autoencoder models were trained for 200,000 steps with a batch size of 16,384 residues. We used the Adam optimizer70 with β1 = 0.9 and β2 = 0.99. The learning rate was warmed up from 0 for 1,000 steps at the start of training and then reduced to 1 × 10−7 using cosine decay71. On an example machine with eight NVIDIA RTX 3090 GPUs, an average training run took 3.5 days, or 672 GPU hours. Models were trained on different GPU nodes using eight of either NVIDIA RTX 3090, A40 or L40S GPUs.
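The learning-rate schedule can be sketched as a function of the training step. The peak learning rate is not stated in this section, so `peak_lr` is left as a required argument, and the linear shape of the warm-up is an assumption. The final value of 1 × 10−7, the 1,000 warm-up steps and the 200,000 total steps are taken from the text.

```python
import math


def learning_rate(step, peak_lr, total_steps=200_000,
                  warmup_steps=1_000, final_lr=1e-7):
    """Warm-up from 0 to peak_lr, then cosine decay to final_lr."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps  # assumption: linear warm-up
    progress = (step - warmup_steps) / (total_steps - warmup_steps)
    cosine = 0.5 * (1.0 + math.cos(math.pi * min(progress, 1.0)))
    return final_lr + (peak_lr - final_lr) * cosine
```

The quantities quoted above are also mutually consistent: 1,024 × 32 = 32,768 residues per iteration, and 3.5 days × 24 h × 8 GPUs = 672 GPU hours.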

Runtime benchmarking

We compared the runtimes of salad models with RFdiffusion, Genie 2, Chroma and Proteina on a single NVIDIA RTX 3090 GPU. We sampled ten structures from each model using their default settings (Supplementary Table I) and measured the time elapsed for each generated structure. We discarded times measured for the first generated structure to account for library initialization and model compilation and reported the average time for the remaining nine generations.

Model ablation study

We selected a model architecture and sampling hyperparameters by evaluating models with and without time-embedding features as well as with full and minimal pair features on unconditional structure generation. We generated 200 backbones for proteins of size 50–400 residues for each model, using 100, 200 and 500 diffusion steps with early stopping at 80, 180 and 400 steps into the denoising process. Self-conditioning was applied until diffusion time tprev = 0.8 for VP models as this was determined to yield good results in preliminary testing. For VE models, we tested self-conditioning thresholds of 0.8 and 0.99. Ten sequences were designed for each backbone using ProteinMPNN20. Structures were predicted using ESMFold24. Designability was measured as the fraction of backbones with at least one designed sequence with pLDDT > 70 and scRMSD < 2.0 Å (Extended Data Fig. 6a,b). Models with full pair features, time embedding and 500-step sampling were chosen for further benchmarking. tprev = 0.99 was chosen for VE models.

Unconditional generation benchmark

At each evaluated protein length between 50 and 1,000 residues, we generated 200 protein backbones using our models as well as Genie 2, RFdiffusion and Proteina for comparison1,2,42. Backbones were sampled using 500 diffusion time steps with early stopping at 400 time steps and self-conditioning turned off below the threshold diffusion time tprev = 0.8 for VP models and tprev = 0.99 for VE models. For each backbone, we then generated ten amino acid sequences using ProteinMPNN with a temperature of 0.1 (ref. 20). This resulted in a total of 11 sequences for our models (ten ProteinMPNN and one from the model itself) compared with ten sequences for Genie 2 and RFdiffusion. To fairly measure the model performance and remain comparable to previous work, we restricted all the computed performance measures to use the first eight sequences generated by ProteinMPNN.

We predicted the structure of each sequence using ESMFold24 and AlphaFold 2 (ref. 23). For each structure prediction, we measured the RMSD to the generated backbone (scRMSD) and pLDDT. Following ref. 2, we then computed designability as the fraction of the generated backbones with at least one sequence with ESMFold pLDDT > 70 and scRMSD < 2 Å. We evaluated pairwise similarities between the generated backbones using TM-align (v.20220412)29. To compute backbone diversity for direct comparison with Genie 2 and RFdiffusion2, we randomly subsampled the set of generated structures to a size of 100 backbones. Designable structures in this subset were then clustered using single-linkage clustering on the TM-score. Backbones with a pairwise TM-score > 0.6 were included in the same cluster. Diversity for all backbones was then defined as the number of designable clusters divided by the number of generated structures, \({{\mathtt{diversity}}}_{all}=\frac{{\mathtt{\#clusters}}}{{\mathtt{\#generated}}}\) (ref. 2). We also defined a second diversity measure as the fraction of clusters in all designable structures \({{\mathtt{diversity}}}_{designable}=\frac{{\mathtt{\#clusters}}}{{\mathtt{\#designable}}}\) to fully separate diversity from designability. Diversity was measured on ten samples of 100 structures, each sampled from the original 200 generated structures to report median, minimum and maximum diversities for each model.
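The designability criterion and the two diversity measures can be sketched as below. The function names are hypothetical, and `tm_score(i, j)` stands in for a precomputed, symmetric pairwise TM-score lookup; how the two TM-align normalizations are combined into one score is not specified here and is left to the caller.

```python
def is_designable(predictions, plddt_min=70.0, scrmsd_max=2.0):
    """predictions: list of (pLDDT, scRMSD) pairs, one per designed sequence."""
    return any(p > plddt_min and r < scrmsd_max for p, r in predictions)


def count_single_linkage_clusters(n, score, threshold=0.6):
    """Number of single-linkage clusters; items with score > threshold link."""
    parent = list(range(n))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if score(i, j) > threshold:
                parent[find(i)] = find(j)
    return len({find(i) for i in range(n)})


def diversity(designable_flags, tm_score):
    """Return (diversity_all, diversity_designable) for one subsample."""
    designable = [i for i, ok in enumerate(designable_flags) if ok]
    n_clusters = count_single_linkage_clusters(
        len(designable), lambda a, b: tm_score(designable[a], designable[b]))
    div_all = n_clusters / len(designable_flags)
    div_designable = n_clusters / len(designable) if designable else 0.0
    return div_all, div_designable
```

Single-linkage clustering is transitive: two backbones with a TM-score below 0.6 still share a cluster if a chain of intermediate backbones links them, which makes the cluster count a conservative estimate of diversity.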

Shape-initialized structure generation

We prepared letter shapes as paths in SVG format using Inkscape 1.4 (e7c3feb100, 2024-10-09; Inkscape Project) and then extracted the coordinates of the nodes in each path into a CSV file. To sample structures based on these shapes, we used our VE model with default settings and shaped noise initialization. Instead of initializing the denoising process with noise for each residue i as

we centred the noise on the coordinates of nodes of the SVG path corresponding to the desired shape:

where nodei is the position of the node assigned to residue i. To generate the letter shapes described in this work, we assigned 200 consecutive residues to each node, that is, residues 1–200 were assigned to the first node, 201–400 to the second and so on. We then sampled ten structures for each letter shape (S, A, L and D) starting from this noise, designed ten sequences with ProteinMPNN at a temperature of 0.1 and predicted their structures with ESMFold. We then identified designable structures as described in the ‘Unconditional generation benchmark’ section.
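A minimal sketch of shape-initialized noise is given below. The noise scale `sigma` is a placeholder argument, since the value used for the VE model is not stated in this passage, and the nodes are assumed to have been lifted to three dimensions already (for example, by setting z = 0 for the 2D SVG coordinates).

```python
import random


def shape_initialized_noise(nodes, n_residues, sigma,
                            residues_per_node=200, seed=0):
    """Sample one noisy starting position per residue around its node.

    nodes: list of (x, y, z) node coordinates in angstroms.
    Residues 0-199 use the first node, 200-399 the second and so on.
    """
    rng = random.Random(seed)
    positions = []
    for i in range(n_residues):
        # Clamp so that trailing residues reuse the last node.
        node = nodes[min(i // residues_per_node, len(nodes) - 1)]
        positions.append(tuple(c + rng.gauss(0.0, sigma) for c in node))
    return positions
```

Centring the noise on the path nodes biases the denoising trajectory towards the overall outline of the letter while leaving the local structure to the model.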

Shaped noise

To initialize VE models with noise that is better suited to generating large proteins than normal-distributed noise, we adapted shape-initialized noise generation to work with random noise centres. We sampled random starting positions for centres centrei according to

where \({\epsilon }_{i} \sim {\mathcal{N}}(0,{(10\,\text{Å})}^{2})\) is a normal-distributed offset. Essentially, we are sampling centres as Gaussian chains with average segment length of 10 Å (ref. 21). We then enforce globularity of the chain by optimizing inter-chain distances with a harmonic restraint centred on 10 Å. Optimization is done using ten steps of gradient descent with a learning rate of 0.1. We sample shape-initialized noise with 200 residues per centre using these randomly generated centres. We generate fresh centres for each designed backbone.
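The generation of random noise centres can be sketched in two steps: a Gaussian chain followed by a short restraint optimization. The details of the restraint are assumptions: it is applied here to all pairs of centres, the energy is the mean of \({(d-10\,\text{Å})}^{2}\) over pairs and the first centre is placed at the origin. Only the offset distribution, the 10 Å restraint centre, the ten gradient steps and the learning rate of 0.1 are taken from the text.

```python
import math
import random


def gaussian_chain(n_centres, segment_sigma=10.0, seed=0):
    """Sample centres as a random walk with N(0, segment_sigma^2) offsets."""
    rng = random.Random(seed)
    centres = [(0.0, 0.0, 0.0)]  # assumption: the chain starts at the origin
    for _ in range(n_centres - 1):
        prev = centres[-1]
        centres.append(tuple(p + rng.gauss(0.0, segment_sigma) for p in prev))
    return centres


def relax_centres(centres, target=10.0, steps=10, lr=0.1):
    """Gradient descent on the mean over pairs of (distance - target)^2."""
    centres = [list(c) for c in centres]
    n = len(centres)
    n_pairs = max(1, n * (n - 1) // 2)
    for _ in range(steps):
        grads = [[0.0, 0.0, 0.0] for _ in range(n)]
        for i in range(n):
            for j in range(i + 1, n):
                d = math.dist(centres[i], centres[j])
                if d == 0.0:
                    continue  # gradient undefined for coincident centres
                coeff = 2.0 * (d - target) / (d * n_pairs)
                for k in range(3):
                    g = coeff * (centres[i][k] - centres[j][k])
                    grads[i][k] += g
                    grads[j][k] -= g
        for i in range(n):
            for k in range(3):
                centres[i][k] -= lr * grads[i][k]
    return [tuple(c) for c in centres]
```

For a 1,000-residue backbone, `relax_centres(gaussian_chain(5))` would produce five centres, each of which would then seed 200 residues of shape-initialized noise.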

Random secondary structure conditioning