Abstract

Machine learning potentials (MLPs) have been a popular topic in recent years for their capability to replace expensive first-principles calculations in some large systems. Meanwhile, message passing networks have gained significant attention due to their remarkable accuracy, and a wave of message passing networks based on Cartesian coordinates has emerged. However, the information of the node in these models is usually limited to scalars and vectors. In this work, we propose High-order Tensor message Passing interatomic Potential (HotPP), an E(n) equivariant message passing neural network that extends the node embedding and message to tensors of arbitrary order. By performing some basic equivariant operations, high-order tensors can be coupled very simply, and thus the model can directly predict high-order tensors such as dipole moments and polarizabilities without any modifications. Tests on several datasets show that HotPP not only achieves high accuracy in predicting target properties, but also successfully performs tasks such as calculating phonon spectra, infrared spectra, and Raman spectra, demonstrating its potential as a tool for future research.


Introduction

Molecular dynamics (MD) is a powerful computational technique allowing for the exploration of various physical and chemical phenomena at the atomic level and the study of the behavior of molecules and materials over time. It bridges the gap between theoretical predictions and experimental observations, enabling researchers to gain a comprehensive understanding of the behavior, properties, and interactions of molecules and materials. With sufficient computational resources, first-principles calculations based on Density Functional Theory (DFT)1 can simulate systems with hundreds or even thousands of atoms. However, DFT struggles with larger systems. Another approach to computing atomic interactions is empirical force fields, which provide much quicker calculations and can handle significantly larger systems. Nevertheless, many of these force fields rely on empirical observations, which limits their applicability to specific ranges and means they lack universality and transferability. The machine learning potential (MLP)2,3,4,5,6,7,8, which aims to accurately describe the potential energy surface of atomic configurations, combines the advantages of both DFT and empirical force fields. A well-trained machine learning force field can achieve accuracy close to DFT and even beyond DFT9,10, and perform very large-scale, long-time simulations, offering a glimpse into the future of research on complex dynamical problems.

Most existing machine learning potentials are based on the framework proposed by Behler2, which fits the total energy as a sum of atomic energies \(E=\sum {E}_{i}\), and the atomic energies are determined by the atomic environment within a certain cutoff radius. This format ensures the scalability of the potential, allowing the network to be trained on small systems and extrapolated to larger systems. The quality of such a model is highly dependent on the choice of descriptors that describe the atomic environments11. A reasonable descriptor should first possess invariance to rotations, translations, and atom permutation; thus, the same atomic environment yields the same atomic energy. A common approach is to construct a series of symmetric functions based on interatomic distances and angles between atoms since these two quantities are naturally invariant under rotations and translations. Depending on the number of atoms involved, a series of so-called two-body and three-body descriptors can be obtained, such as atom-centered symmetry functions (ACSF)2,12,13, the NEP descriptor7,14,15,16, the smooth overlap of atomic positions (SOAP)17, the DeePMD descriptor5,18. However, these descriptors are not complete19, as different atomic environments can yield the same descriptor. Atomic cluster expansion (ACE)6,20 and Moment Tensor Potential (MTP)4,21 have proposed complete descriptors that can account for interactions of arbitrary order, but the number of descriptors can easily grow to tens of thousands as the order increases. Another issue is that such descriptors are only dependent on the coordinate information within the cutoff radius. When dealing with long-range interactions, simply increasing the cutoff radius would significantly raise computational complexity since the number of atoms is proportional to the cube of cutoff radius.

Message passing networks (MPNs)22 can help address both of these issues. In the context of MLPs, an MPN represents molecular or crystal structures as graphs, where atoms are nodes and bonds are edges. The key idea behind MPNs is the iterative passing of messages between nodes, allowing information to be exchanged and aggregated. On one hand, such message passing processes let multiple atoms enter the final descriptor (thus resulting in n-body symmetric functions). On the other hand, they allow information from atoms beyond the cutoff radius to be transmitted to the current atom. As a result, many machine learning potentials based on message passing networks have achieved high levels of accuracy8,23,24,25,26,27,28,29,30,31. It is worth noting that as long as the energy (or other properties) obtained at the end satisfies the symmetry requirements, the messages used in the network do not necessarily have to be scalars24,32. For example, NequIP8, BotNet30, and MACE31 utilize high-order tensors based on spherical harmonics in the message passing, coupling them through Clebsch-Gordan (CG) coefficients to construct equivariant networks. These methods have shown significant improvements in accuracy compared to approaches that only use scalar messages. Another category of methods, including PaiNN26 and torchMD-Net28, directly utilizes vectors in Cartesian space as messages and obtains equivariant results through a series of designed layers. This approach does not require coupling through CG coefficients, but the vectors are only equivalent to l = 1 tensors in the spherical harmonics method. TeaNet33 can pass matrix information equivalent to l = 2 tensors, but this introduces a multitude of artificially designed duplications, resulting in a highly intricate network structure that is challenging to extend to higher-order tensors. Machine learning potentials that use Cartesian tensors of arbitrary order as messages have not yet been proposed.

In this work, we propose High-order Tensor Passing Potential (HotPP), which can utilize Cartesian tensors of arbitrary order as messages. By combining some basic equivariant operations between tensors, all the high-order tensors used in the network are E(n)-equivariant, so the output is consistent with the rotation of coordinates. In other words, a scalar output remains invariant under rotations, a vector output rotates in accordance with the rotation of the coordinates, and a matrix output transforms as \({M}^{{\prime} }={RM}{R}^{T}\). Therefore, the method can directly predict high-order tensors such as dipole moments and polarizability tensors without any modifications. We validate HotPP in three prediction tasks: the energies and forces of molecular dynamics trajectories of small molecules; the energies, forces, and stresses of carbon with periodic boundary conditions; and the dipole moments and polarizability tensors of small molecules with coupled cluster singles and doubles (CCSD) accuracy. In these tests, our model achieves performance comparable to other high-order models with fewer parameters, providing a framework for equivariant networks based on Cartesian coordinates.

Results

Equivariant functions of Cartesian tensors

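Cartesian tensors are tensors that transform in a simple way under rotations in Euclidean space. In other words, a Cartesian tensor is a tensor whose components transform as a product of vectors and covectors under rotations, without any additional factors that depend on the rotation matrix itself. Specifically, an n-th rank tensor transforms under rotation as:

\[{{{\boldsymbol{T}}}}_{{i}_{1}{i}_{2}\cdots {i}_{n}}{\longrightarrow }^{{{\boldsymbol{R}}}}{{{\boldsymbol{T}}}}_{{i}_{1}{i}_{2}\cdots {i}_{n}}^{{\prime} }={{{\boldsymbol{R}}}}_{{i}_{1}{j}_{1}}{{{\boldsymbol{R}}}}_{{i}_{2}{j}_{2}}\cdots {{{\boldsymbol{R}}}}_{{i}_{n}{j}_{n}}{{{\boldsymbol{T}}}}_{{j}_{1}{j}_{2}\cdots {j}_{n}} \tag{1}\]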

where R is an orthogonal matrix. Under this definition, it is easy to find that since \({{{\bf{v}}}}_{i}\to {{{\bf{v}}}}_{i}^{{\prime} }={{{\boldsymbol{R}}}}_{{ij}}{{{\bf{v}}}}_{j}\), the vectors are first-order tensors, and the dyadic product of two vectors is a second-order tensor since \({({{\bf{u}}}{{\bf{v}}})}_{{i}_{1}{i}_{2}}{\longrightarrow }^{{{\boldsymbol{R}}}}{({{\bf{u}}}{{\bf{v}}})}_{{i}_{1}{i}_{2}}^{{\prime} }=({{{\boldsymbol{R}}}}_{{i}_{1}{j}_{1}})({{{\boldsymbol{R}}}}_{{i}_{2}{j}_{2}}){({{\bf{u}}}{{\bf{v}}})}_{{j}_{1} \, {j}_{2}}\).
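The transformation rule can be checked numerically. The following is a minimal NumPy sketch, not part of the HotPP code base; the helper names `random_rotation` and `rotate_tensor` are hypothetical. It applies R to every index of a tensor and confirms that the dyadic product of two vectors behaves as a second-order tensor.

```python
import numpy as np

def random_rotation(rng):
    """Return a random 3x3 orthogonal matrix with determinant +1."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))   # fix the sign ambiguity of the QR factorization
    if np.linalg.det(q) < 0:      # flip one axis to obtain a proper rotation
        q[:, 0] = -q[:, 0]
    return q

def rotate_tensor(T, R):
    """Apply R to every index of a Cartesian tensor T of any order."""
    for axis in range(T.ndim):
        # Contract R_{ij} with the current axis, then move the new index back in place.
        T = np.moveaxis(np.tensordot(R, T, axes=([1], [axis])), 0, axis)
    return T

rng = np.random.default_rng(0)
R = random_rotation(rng)
u, v = rng.normal(size=3), rng.normal(size=3)

dyad = np.outer(u, v)                                # (u v)_{i1 i2}
dyad_rotated = rotate_tensor(dyad, R)                # R_{i1 j1} R_{i2 j2} (u v)_{j1 j2}
dyad_from_rotated_vectors = np.outer(R @ u, R @ v)   # dyad built from rotated vectors
```

Both routes give the same matrix, which for a second-order tensor is also equal to `R @ dyad @ R.T`.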

Equivariance is a property of functions or transformations between two spaces, where the transformation preserves the relationships between the elements of those spaces. More formally, a function \({{\rm{\phi }}}:{{\bf{X}}}\to {{\bf{Y}}}\) is said to be equivariant with respect to a group G acting on two sets X and Y if for all \(g\in {{\bf{G}}}\) and \(x\in {{\bf{X}}}\), we have:

\[{{\rm{\phi }}}\left(g\cdot x\right)=g\cdot {{\rm{\phi }}}\left(x\right) \tag{2}\]

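This means that applying a function ϕ to an object x and then applying a group element g to the resulting object should give the same result as first applying the group element to the object and then applying the function. The composition of equivariant maps is also equivariant; for a second equivariant map \({{\rm{\psi }}}:{{\bf{Y}}}\to {{\bf{Z}}}\):

\[{{\rm{\psi }}}\left({{\rm{\phi }}}\left(g\cdot x\right)\right)={{\rm{\psi }}}\left(g\cdot {{\rm{\phi }}}\left(x\right)\right)=g\cdot {{\rm{\psi }}}\left({{\rm{\phi }}}\left(x\right)\right) \tag{3}\]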

Therefore, by providing some basic equivariant functions between Cartesian tensors and combining them, we can obtain an equivariant neural network. Here, we use the following three equivariant operations, whose equivariances are proven in the Supplementary Note 1:

1. Linear combinations of tensors with the same order: \(f\left({T}_{1},{T}_{2},\cdots,{T}_{m}\right)=\sum {c}_{i}{T}_{i}\).

2. Contraction of two tensors.

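The contraction of tensors is a mathematical operation that reduces the rank of tensors by summing over one or more pairs of indices. For example, for a third-order tensor A and a second-order tensor B, one possible contraction is \({{{\boldsymbol{C}}}}_{{ijk}}={{{\boldsymbol{A}}}}_{{ijl}}{{{\boldsymbol{B}}}}_{{kl}}\), which reduces the total rank by 2. More generally, if we sum over z pairs of indices between an x-order tensor T1 and a y-order tensor T2 such as:

\[{{{\boldsymbol{T}}}}_{{a}_{1}\cdots {a}_{x-z}{b}_{1}\cdots {b}_{y-z}}=\sum_{{c}_{1},\cdots,{c}_{z}}{{{{\boldsymbol{T}}}}_{1}}_{{a}_{1}\cdots {a}_{x-z}{c}_{1}\cdots {c}_{z}}\cdot {{{{\boldsymbol{T}}}}_{2}}_{{b}_{1}\cdots {b}_{y-z}{c}_{1}\cdots {c}_{z}} \tag{4}\]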

We can get a new tensor of order \(x+y-2z\), where \(0\le z\le \min (x,y)\). When z = 0, none of the indices are contracted and Eq. (4) becomes the tensor product \({{{\boldsymbol{T}}}}_{{a}_{1}\cdots {a}_{x}{b}_{1}\cdots {b}_{y}}={{{{\boldsymbol{T}}}}_{1}}_{{a}_{1}\cdots {a}_{x}}\cdot {{{{\boldsymbol{T}}}}_{2}}_{{b}_{1}\cdots {b}_{y}}\).

3. Partial derivative with respect to another Cartesian tensor: \(\frac{\partial }{\partial {{{\boldsymbol{T}}}}_{{j}_{1}{j}_{2}\cdots {j}_{n}}}\).
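As an illustration of the second operation, the sketch below contracts the last z indices of two tensors and verifies numerically that the result has order x + y − 2z and commutes with an orthogonal transformation. The function names are hypothetical and the choice of contracting the trailing indices is an assumption made for simplicity; any index pairing would work the same way.

```python
import numpy as np

def rotate_tensor(T, R):
    """Apply the orthogonal matrix R to every index of T."""
    for axis in range(T.ndim):
        T = np.moveaxis(np.tensordot(R, T, axes=([1], [axis])), 0, axis)
    return T

def contract(T1, T2, z):
    """Sum over the last z indices of T1 paired with the last z indices of T2."""
    if z == 0:
        return np.tensordot(T1, T2, axes=0)   # plain tensor product
    axes1 = list(range(T1.ndim - z, T1.ndim))
    axes2 = list(range(T2.ndim - z, T2.ndim))
    return np.tensordot(T1, T2, axes=(axes1, axes2))

rng = np.random.default_rng(0)
R, _ = np.linalg.qr(rng.normal(size=(3, 3)))   # random orthogonal matrix
A = rng.normal(size=(3, 3, 3))                 # third-order tensor
B = rng.normal(size=(3, 3))                    # second-order tensor

C = contract(A, B, 1)                          # C_ijk = A_ijl B_kl
orders = [contract(A, B, z).ndim for z in (0, 1, 2)]

# Equivariance: transforming the inputs equals transforming the output.
lhs = contract(rotate_tensor(A, R), rotate_tensor(B, R), 1)
rhs = rotate_tensor(C, R)
```

Here `orders` lists the tensor orders obtained for z = 0, 1, 2, and `lhs` agrees with `rhs` to floating-point precision.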

By the combination of these operations, we can get many equivariant functions. Many common operations frequently used in other equivariant neural networks that operate on vectors, such as scaling of vectors: \({{\rm{s}}}\cdot \vec{{{\rm{v}}}}\), scalar products \(\left\langle \vec{{v}_{1}},\,\vec{{v}_{2}}\right\rangle \), vector products \(\vec{{v}_{1}}\times \vec{{v}_{2}}\) (the upper triangle part of \(\vec{{v}_{1}}\otimes \vec{{v}_{2}}-\vec{{v}_{2}}\otimes \vec{{v}_{1}}\)) can all be viewed as special cases of these three operations. Some other more complex descriptors, such as MTP descriptors4,21, can also be obtained through combinations of these operations as shown in the Supplementary Note 3.
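The vector special cases mentioned above can be written out explicitly. This is a small NumPy sketch with arbitrary test vectors; the component ordering chosen for the cross product is one convention for reading off the independent entries of the antisymmetric matrix.

```python
import numpy as np

rng = np.random.default_rng(1)
v1, v2 = rng.normal(size=3), rng.normal(size=3)

scaled = 2.5 * v1                        # scaling: linear combination with one term
dot = np.tensordot(v1, v2, axes=1)       # scalar product: contraction with z = 1
outer = np.tensordot(v1, v2, axes=0)     # tensor product: contraction with z = 0
antisym = outer - outer.T                # linear combination of two second-order tensors

# The three independent entries of the antisymmetric part are the
# components of the vector product.
cross = np.array([antisym[1, 2], antisym[2, 0], antisym[0, 1]])
```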

Equivariant message passing neural network

To obtain an end-to-end machine learning model for predicting material properties, the input should be the positions \(\{{{{\bf{r}}}}_{i}\}\) and chemical elements \(\{{Z}_{i}\}\) of all atoms, and for periodic crystals, the lattice parameters should also be considered. To apply graph neural networks, we first transform the crystal structure into a graph \(\{{n}_{i},{e}_{{ij}}\}\), where each node \({n}_{i}\) corresponds to an atom i in the unit cell, and all atoms j within a given cutoff distance rcut (the neighbor set \({{{\mathcal{N}}}}(i)\)) are considered connected to \({n}_{i}\), with edges labeled by their relative positions rij. For periodic structures, since atom j and its equivalent atom j’ may both lie within the cutoff distance of atom i, \({n}_{i}\) may have more than one edge connected to \({n}_{j}\). To extract the information of the nodes, we use the MPN scheme. A normal MPN can be described as:

\[{m}_{i}^{t+1}=\bigoplus_{j\in {{{\mathcal{N}}}}(i)}{{{\rm{M}}}}_{t}\left({h}_{i}^{t},{h}_{j}^{t},{e}_{{ij}}\right) \tag{5}\]

\[{h}_{i}^{t+1}={{{\rm{U}}}}_{t}\left({h}_{i}^{t},{m}_{i}^{t+1}\right) \tag{6}\]

where \({h}_{i}^{t}\) is the hidden feature of \({n}_{i}\) at layer t that captures its local information. Messages are passed between nodes along edges, with the message at each edge \({e}_{{ij}}\) being a function \({{{\rm{M}}}}_{t}\) of the features of the nodes connected by that edge. The operator ⨁ is a differentiable and permutation-invariant function such as sum, mean, or max that aggregates the messages at each node to produce an updated message \({m}_{i}^{t+1}\) for that node, which in turn is used to update the hidden feature with the function \({{{\rm{U}}}}_{t}\) for the next iteration.

A concrete example illustrating the principles of MPN is presented in Fig. 1. We first determine the connectivity of a structure based on a given cutoff radius and convert it into a graph (a quotient graph for periodic structures). The messages on the nodes can then be passed along the edges by a two-body interaction. As message passing proceeds, information from atoms beyond the cutoff radius can also be conveyed to the central atom. As illustrated in Fig. 1b, the blue arrows represent the first message passing step, while the yellow arrows denote the second. Note that each layer of message passing is performed simultaneously on all atoms; here, we focus on a specific atom in each layer for ease of analysis. During the first step, information of atom 1 is encoded into the hidden information of atom 2. Subsequently, in the second step, the information of atom 2, including some information of atom 1, is transmitted to atom 3, thereby achieving non-local effects from atom 1 to atom 3. In addition, because the interaction between atom 4 and atom 2 in the second step also contains information from atom 1, the effective interaction is elevated from a two-body interaction to a three-body interaction. These are the two advantages of the message passing architecture.
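The non-local effect can be reproduced with a toy graph. The sketch below is an illustration under simplifying assumptions (three atoms on a line, sum aggregation, a residual update, no learnable weights) and is not the HotPP layer itself. It tracks which atoms have contributed to each node after one and two propagation steps.

```python
import numpy as np

# Atoms 1, 2, 3 on a line; with this cutoff only nearest neighbours are connected.
positions = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [2.0, 0.0, 0.0]])
r_cut = 1.5

# Edges connect distinct atoms closer than the cutoff.
dist = np.linalg.norm(positions[:, None, :] - positions[None, :, :], axis=-1)
adjacency = ((dist < r_cut) & (dist > 0)).astype(float)

def propagate(h, adjacency):
    """One toy layer: sum neighbour features (message), then add them to the node (update)."""
    messages = adjacency @ h
    return h + messages

h0 = np.eye(3)                  # column k marks information originating from atom k + 1
h1 = propagate(h0, adjacency)   # after the first message passing step
h2 = propagate(h1, adjacency)   # after the second message passing step
```

After one step `h1[2, 0]` is still zero because atom 1 lies outside the cutoff of atom 3, while after two steps `h2[2, 0]` is nonzero: information from atom 1 has reached atom 3 through atom 2.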

However, the scalar hidden features, messages, and edge information (usually the relative distance between two atoms) limit the expressive capacity and may cause incompleteness of atomic structure representations. As shown in Fig. 2a and d, if we only use scalar information \({h}_{i}\), \({h}_{j}\), and \({d}_{{ij}}\) to pass the message in Eq. (5) and update the feature in Eq. (6), all nodes will always produce the same embedding information. As a result, the network will be unable to distinguish between these two structures and will give the same total energy. Even if 3-body messages that include the angles \({\alpha }_{{ijk}}\) are taken into consideration, some structures with only 4 atoms cannot be distinguished26, as shown in Fig. 2b and e. Because the atomic environments within the cutoff radius are identical, no matter how many message passing iterations are performed, these two different structures will yield the same result. To alleviate this problem, a series of models that use high-order geometric tensors during message passing have been proposed. For example, allowing vectors in the message passing process can differentiate Fig. 2b and e, but in the case of Fig. 2c and f, which have different α, the summation in Eq. (5) would cause the network to confuse these two structures (a more detailed explanation can be found in Supplementary Note 5). It can be anticipated that increasing the order of tensors in message passing would enhance the expressive power of the network. Previously, the order of high-order tensor networks based on Cartesian space was typically limited to two33,34, while our method can work with Cartesian tensors of any order. In the following, we use \({}^{l}{{\boldsymbol{h}}}_{i}^{t}\) to represent the l-order Cartesian tensor features of node i in the t-th layer, and \({{{\bf{r}}}}^{\otimes n}\) to represent the tensor product of a vector r with itself n times: r⊗r⊗⋯⊗r. In particular, for n = 0 we define this to be a learnable function of \({||}{{\bf{r}}}{||}\): \({{{\bf{r}}}}^{\otimes 0}={{\rm{f}}}({||}{{\bf{r}}}{||})\).

a, d cannot be distinguished by using two-body scalar information; b, e cannot be distinguished by using three-body scalar information26; and c, f cannot be distinguished by using two-body vector information. c, f have different α and β, so they have the same center of mass but different moment of inertia.

Initialization of node features

The scalar features in the first layer \({\scriptstyle0\atop}\!h_{i}^{0}\) should be invariant to rotation, translation, and permutation of the atoms with the same chemical species. This is also the requirement for most descriptors used in machine learning potentials, so these descriptors such as ACSF, SOAP, ACE, MTP, etc., can be used directly to expedite the process of feature extraction. Here, we used the trainable chemical embedding similar to SchNet23 to minimize human-designed elements as much as possible. Specifically, the atomic numbers are first encoded by one-hot and then multiplied by a learnable weight matrix, resulting in a learnable embedding for each element Zi. For high-order features \({\scriptstyle{l}\atop}\!{{\boldsymbol{h}}}_{i}^{0}\) with l > 0, we set them all to 0 at the beginning.
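A minimal sketch of this initialization is given below. The element list, the channel count, and the random matrix standing in for the learnable weights are all assumptions made for illustration; in a trained model the weight matrix would be optimized together with the rest of the network.

```python
import numpy as np

species = [1, 6, 8]          # hypothetical element list: H, C, O
n_channels = 4               # the paper uses 64 channels; 4 keeps the example small
rng = np.random.default_rng(0)
weight = rng.normal(size=(len(species), n_channels))   # stand-in for the learnable matrix

def embed(atomic_numbers):
    """One-hot encode the atomic numbers and multiply by the weight matrix."""
    index = np.array([species.index(z) for z in atomic_numbers])
    one_hot = np.eye(len(species))[index]
    return one_hot @ weight

Z = [8, 1, 1]                                        # a water molecule
h0_scalar = embed(Z)                                 # order-0 features, shape (atoms, channels)
h0_vector = np.zeros((len(Z), n_channels, 3))        # order-1 features start at zero
h0_matrix = np.zeros((len(Z), n_channels, 3, 3))     # order-2 features start at zero
```

Because the one-hot product simply selects a row of the weight matrix, the two hydrogen atoms receive identical scalar features, which makes the embedding invariant to permutations of atoms of the same species.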

Message and aggregate

To combine the information of neighboring nodes, we need to design a message passing function \({{{\rm{M}}}}_{t}\) in Eq. (5). The hidden features \({}^{{l}_{i}}{{\boldsymbol{h}}}_{i}^{t}\) and \({}^{{l}_{j}}{{\boldsymbol{h}}}_{j}^{t}\), the bond information \({e}_{{ij}}\), and the target message \({}^{{l}_{{{\rm{out}}}}}{{\boldsymbol{m}}}_{{ij}}^{t+1}\) can all be tensors of arbitrary order. Therefore, we need an equivariant way to compose two tensors into a new tensor of a different order, and Eq. (4) is such an operation. In our model, we write \({{{\rm{M}}}}_{t}\) in Eq. (5) as:

where \({d}_{{ij}}={||}{{{\bf{r}}}}_{{ij}}{||}\) is the relative distance between atom i and atom j, \({{{\bf{u}}}}_{{ij}}=\frac{{{{\bf{r}}}}_{{ij}}}{{d}_{{ij}}}\) is the direction vector, and \(0\le {l}_{c}\le \min ({l}_{i},{l}_{r})\) is the number of indices summed over during the contraction. \({{{\rm{f}}}}_{{l}_{r}}^{t}\left({d}_{{ij}}\right)\) is the radial function, which is a learnable multi-layer perceptron applied to radial basis functions such as the Bessel basis or the Chebyshev basis. The result is a Cartesian tensor of order \({l}_{o}=|{l}_{i}+{l}_{r}-2{l}_{c}|\), which lies between \(|{l}_{i}-{l}_{r}|\) and \({l}_{i}+{l}_{r}\). Since \({l}_{r}\) can be chosen arbitrarily, we can obtain equivariant tensors of any order starting from 0.
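To make the roles of \({l}_{i}\), \({l}_{r}\), and \({l}_{c}\) concrete, the following NumPy sketch builds the filter tensor \(f({d}_{{ij}})\cdot {{{\bf{u}}}}_{{ij}}^{\otimes {l}_{r}}\), contracts it with a neighbor feature, and sums over neighbors. It is an illustration under stated assumptions rather than the HotPP implementation: the message is assumed to use the neighbor feature \({h}_{j}\), a fixed Gaussian replaces the learnable radial function, a single channel is used, and all function names are hypothetical.

```python
import numpy as np

def tensor_power(u, n):
    """u (x) u (x) ... (x) u with n factors; n = 0 returns the scalar 1."""
    out = np.array(1.0)
    for _ in range(n):
        out = np.multiply.outer(out, u)
    return out

def contract(T1, T2, z):
    """Sum over the last z indices of T1 paired with the last z indices of T2."""
    if z == 0:
        return np.multiply.outer(T1, T2)
    axes1 = list(range(T1.ndim - z, T1.ndim))
    axes2 = list(range(T2.ndim - z, T2.ndim))
    return np.tensordot(T1, T2, axes=(axes1, axes2))

def radial(d):
    """Fixed smooth stand-in for the learnable radial function f(d_ij)."""
    return np.exp(-d ** 2)

def messages(positions, h, l_r, l_c, r_cut=10.0):
    """For each atom i, sum over neighbours j of contract(h_j, f(d_ij) u_ij^{(x) l_r}, l_c)."""
    n = len(positions)
    out = []
    for i in range(n):
        m_i = 0.0
        for j in range(n):
            r_ij = positions[j] - positions[i]
            d_ij = np.linalg.norm(r_ij)
            if i == j or d_ij >= r_cut:
                continue
            filt = radial(d_ij) * tensor_power(r_ij / d_ij, l_r)
            m_i = m_i + contract(h[j], filt, l_c)
        out.append(m_i)
    return np.stack(out)

rng = np.random.default_rng(0)
positions = rng.normal(size=(4, 3))
h = rng.normal(size=(4, 3))                     # one order-1 feature per atom (l_i = 1)
R, _ = np.linalg.qr(rng.normal(size=(3, 3)))    # random orthogonal matrix

m = messages(positions, h, l_r=2, l_c=1)        # output order 1 + 2 - 2*1 = 1
m_rot = messages(positions @ R.T, h @ R.T, l_r=2, l_c=1)
```

Transforming the inputs by R transforms the aggregated vector messages by the same R, so `m_rot` matches `m @ R.T`. Choosing `l_c=0` instead returns the uncontracted tensor product, whose order is \({l}_{i}+{l}_{r}\).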

We use a summation operation as the aggregation function for the messages in Eq. (5), that is, directly adding all the messages obtained from neighboring nodes. For tensors of the same order obtained from different \(({l}_{i},{l}_{r})\), we add them together with different coefficients. Due to the arbitrariness of \({l}_{o}\) and \({l}_{r}\), we need to specify their maximum values. With given \({l}_{{{\rm{omax}}}}^{t}\), \({l}_{{{\rm{rmax}}}}^{{{\rm{t}}}}\), we sum up all possible \(({l}_{i},{l}_{r})\):

Update

For scalar message \({\scriptstyle0\atop}\!m_{i}^{t+1}\), we feed it to a fully connected layer followed by a non-linear activation function to extract the information, and update the hidden feature with residual neural networks:

where σ is the nonlinear activation function and \({}^{0}{{\rm{W}}}^{t}\) is a linear layer with bias in layer t for the scalar message. However, for tensors of order above 0, both the bias and the element-wise activation function break the equivariance (Supplementary Note 2). Therefore, we only apply the bias when l = 0.

For the high-order activation function, as shown in Eq. (3), tensor multiplication by a scalar is equivariant. Hence, we need to find a mapping from an n-order tensor to a scalar. One simple idea is to use the squared norm of the tensor \({{||}{{\boldsymbol{T}}}{||}}^{2}={\sum }_{{i}_{1}\cdots {i}_{l}}{{{\boldsymbol{T}}}}_{{i}_{1}\cdots {i}_{l}}^{2}\) since it is invariant by definition. Therefore, for \(l \, > \, 0\), we write the element-wise non-linear function as:

It should be noted that different notations σ and σ' were used for the activation function in Eqs. (9) and (10), as the choice of activation function may vary for scalar and high-order tensors. Take the SiLU function \({{\rm{\sigma }}}\left(x\right)=x\cdot {{\rm{sigmoid}}}(x)\) for example and suppose \({\scriptstyle{l}\atop}\!{{\rm{W}}}\) is the identity function. For scalar, SiLU maps x to x itself when \(x\gg 0\). However, for higher-order tensors, Eq. (10) will map \({{{\boldsymbol{T}}}}_{{i}_{1}\cdots {i}_{l}}\) to \({{||}{{\boldsymbol{T}}}{||}}^{2}\cdot {{{\boldsymbol{T}}}}_{{i}_{1}\cdots {i}_{l}}\) instead of \({{{\boldsymbol{T}}}}_{{i}_{1}\cdots {i}_{l}}\) when \({{{\boldsymbol{T}}}}_{{i}_{1}\cdots {i}_{l}}\gg 0\). This is because if we apply the formula for higher-order tensors to a scalar, which is \({y}_{i}=\sigma \left({{\rm{W}}}({x}_{i})\right)\cdot {x}_{i}\), an extra \({x}_{i}\) is multiplied. Therefore, if we use SiLU function for \({{\rm{\sigma }}}\), we should use Sigmoid function for \({{\rm{\sigma }}}^{\prime} \). Other activation functions can be handled using a similar approach, and hence the update function for high-order tensors is:

Readout

For a target n-order property, we apply a two-layer nonlinear MLP to the n-order tensor at the last hidden layer. For the same reason as in the update step, bias and element-wise nonlinear functions cannot be applied to high-order tensors.
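The equivariance argument used in the update and readout steps can be checked numerically. The sketch below compares three operations on a vector under a fixed 90° rotation about the z axis: an element-wise SiLU, an additive bias, and a gate that scales the vector by the sigmoid of its squared norm. The linear layer inside the gate is taken to be the identity, which is a simplification of the model described above.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def elementwise_silu(T):
    """SiLU applied to each component separately (not equivariant for l > 0)."""
    return T * sigmoid(T)

def norm_gated(T):
    """Scale T by sigmoid(||T||^2); the gate is an invariant scalar."""
    return sigmoid(np.sum(T ** 2)) * T

R = np.array([[0.0, -1.0, 0.0],
              [1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0]])     # rotation by 90 degrees about z
v = np.array([1.0, 2.0, 3.0])
bias = np.ones(3)

# Deviation between "rotate then apply" and "apply then rotate".
err_gate = np.abs(norm_gated(R @ v) - R @ norm_gated(v)).max()
err_elementwise = np.abs(elementwise_silu(R @ v) - R @ elementwise_silu(v)).max()
err_bias = np.abs((R @ v + bias) - R @ (v + bias)).max()
```

Only the norm-based gate commutes with the rotation; the element-wise activation and the bias give deviations of order one.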

Performance of HotPP

We validate the accuracy of our method on a diverse range of systems, including small organic molecules, periodic structures, and predictions of dipole moments and polarizability tensors. For each system, we trained the HotPP model on a commonly used dataset and compared the results with other models. To demonstrate the robustness of our model, most models were trained using the same network architecture shown in Fig. 3 and similar hyperparameters. More training details can be found in the Methods section.

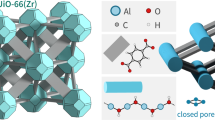

After embedding atomic information into scalars, vectors, and tensors, 4 propagation layers are used to further extract information and output the target properties. For higher-order tensors or additional propagation layers, the framework remains similar. The inputs to the network are the atomic numbers Zi and the relative displacements between atoms rij. After the Embedding layer, the scalar node embeddings \({}^{0}{h}^{0}\) are initialized based on Zi and the higher-order embeddings are set to zero. In each Propagate layer, the inputs are the node embeddings \({}^{{l}_{{in}}}h\) and the filter tensors \({}^{{l}_{r}}{f}_{{ij}}\) obtained by the Filter layer as \({f}_{{l}_{r}}\left({d}_{{ij}}\right)\cdot {{{\bf{u}}}}_{{ij}}^{\otimes {l}_{r}}\), where \({d}_{{ij}}\) is the relative distance and uij is the unit vector of rij. The output messages \({}^{{l}_{{out}}}{m}_{i}\) are transformed through a linear layer and activated by the nonlinear function \(\sigma \) or \(\sigma {\prime} \), depending on the order of the message tensors. In the Readout layer, the node embeddings are transformed and summed up to get the target properties \({}^{l}o\), such as \({}^{0}o\) for energy and \({}^{1}o\) for dipole.

Small organic molecule

We first test our model on molecular dynamics trajectories of small organic molecules. The ANI-1x dataset35,36 contains DFT calculations for approximately five million diverse molecular conformations obtained through an active learning algorithm. To evaluate the extrapolation capability of HotPP, we train our model with 70% of the data from the ANI-1x dataset and test on the COmprehensive Machine-learning Potential (COMP6) benchmark35, which samples the chemical space of molecules larger than those included in the training set. The results are shown in Table 1. Compared to ANI-1x, our model demonstrates superior performance across the majority of prediction tasks.

Periodic systems

After testing HotPP on small molecule datasets without periodicity, we evaluated its performance on periodic systems. We selected the carbon system with various phases as the example37. It is a complicated dataset with a wide range of structures, containing structural snapshots from ab initio MD that were iteratively extended with GAP-driven simulations, as well as randomly distorted unit cells of the crystalline allotropes diamond and graphite. We show the results in Table 2. Our model with about 150k parameters shows advantages in predicting forces and virials compared to most models and can achieve accuracy close to the l = 3 NequIP model with around 2 million parameters. When the number of parameters is expanded to 600k, HotPP achieves the best results on this dataset.

Next, we verify the accuracy of our potential in calculating phonon dispersions of diamond, which was not well-predicted in some of the previous models for carbon38. We can obtain the force constant matrix of the structure directly through automatic differentiation \(\frac{{\partial }^{2}E}{\partial {r}_{i\alpha }\partial {r}_{j\beta }}\). We used Phonopy Python package39,40 to calculate the phonon spectrum of diamond and compared it with the results from DFT in Fig. 4. The results show that HotPP can describe the vibrational behavior well. Although there are relatively large errors in the high-frequency part at the gamma point, this could be attributed to the inaccuracy of the DFT calculations within the training dataset. We retrained the model using a more accurate dataset38, and the newly calculated phonon spectrum almost perfectly matches the results from DFT, which demonstrates the reliability of our model.
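The force constant matrix is simply the Hessian of the energy with respect to atomic coordinates. The sketch below illustrates this on a toy harmonic pair potential and swaps in central finite differences for the automatic differentiation used with the trained model; the potential, the geometry, and the function names are assumptions made for illustration, and Phonopy is not called here.

```python
import numpy as np

def energy(positions, k=1.0, r0=1.0):
    """Toy pair potential: harmonic springs between all atom pairs."""
    e = 0.0
    n = len(positions)
    for i in range(n):
        for j in range(i + 1, n):
            d = np.linalg.norm(positions[i] - positions[j])
            e += 0.5 * k * (d - r0) ** 2
    return e

def force_constants(positions, h=1e-3):
    """Central finite-difference estimate of d2E / (dr_ia dr_jb)."""
    x0 = positions.ravel().astype(float)
    n = x0.size
    hess = np.zeros((n, n))

    def shifted(a, b, sa, sb):
        # Energy with coordinate a displaced by sa*h and coordinate b by sb*h.
        x = x0.copy()
        x[a] += sa * h
        x[b] += sb * h
        return energy(x.reshape(positions.shape))

    for a in range(n):
        for b in range(n):
            hess[a, b] = (shifted(a, b, 1, 1) - shifted(a, b, 1, -1)
                          - shifted(a, b, -1, 1) + shifted(a, b, -1, -1)) / (4 * h * h)
    return hess

positions = np.array([[0.0, 0.0, 0.0], [1.1, 0.0, 0.0], [0.2, 0.9, 0.0]])
phi = force_constants(positions)                 # shape (3N, 3N)
phi_blocks = phi.reshape(3, 3, 3, 3)             # indices (i, alpha, j, beta)
acoustic_sum = phi_blocks.sum(axis=2)            # sum over j; zero for a translation-invariant energy
```

The resulting matrix is symmetric and satisfies the acoustic sum rule up to the finite-difference error, two properties that a phonon code relies on.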

Dipole moment and polarizability tensor

Since our model can directly output vectors and matrices, we attempted to directly predict the dipole moments and polarizability tensors of structures in the final section. We consider water systems including the water monomer, water dimer, Zundel cation, and liquid water bulk41. The dipole and polarizability of the aperiodic systems were calculated with CCSD theory and those of liquid water were calculated with DFT. Each system contains 1000 structures; we use 70% of them as the training set and the rest as the testing set. We calculate the RMSEs relative to the standard deviation of the testing samples to compare with previous results obtained by other models41,42,43, as shown in Table 3. In most cases, HotPP gets the best results, the exception being the polarizability tensor of the water monomer. Compared to T-EANN42 and REANN43, HotPP performs particularly well for the dipole moment of liquid water. This may be because they fit the dipole moment by learning \({q}_{i}\) and calculating \({{\boldsymbol{\mu }}}={\sum }_{i=1}^{N}{q}_{i}{{{\bf{r}}}}_{i}\), which is inappropriate for periodic systems. In contrast, the outputs of our model are all obtained from relative coordinates, so we avoid having to select a reference point.

Since we can now obtain the dipole moment and polarizability with HotPP, we can calculate the infrared (IR) absorption spectrum and Raman spectrum of liquid water. We separately trained a machine learning potential on the energy, forces, and stresses of liquid water to run the dynamic simulations. With this potential, we perform a classical MD simulation under ambient conditions (300 K, 1 bar) for 100 ps and calculate the dipole moment and the polarizability tensor every 1 fs. We then compute the IR and Raman spectra by Fourier transforming the autocorrelation functions (ACFs) of the dipole moment and the polarizability tensor, respectively, and compare the results to the experimental data44,45 as shown in Fig. 5. Both the HotPP model and the DeePMD model46,47 closely approximate the experimental IR spectra. Our results accurately fit the first three peaks, corresponding to the hindered translation, libration, and H-O-H bending respectively, but there is a long tail in comparison to the experimental data for the O-H stretching mode. This discrepancy may arise from not accounting for quantum effects in our classical molecular dynamics simulation. For the Raman spectra, our model also gives results in agreement with the experimental data.
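The post-processing step can be sketched as follows. A synthetic dipole trajectory with a single hypothetical mode stands in for the model predictions, and the frequency-dependent prefactors and quantum correction factors that enter a physical IR intensity are omitted, so the example only shows how a peak position is recovered from the dipole autocorrelation function.

```python
import numpy as np

dt_fs = 1.0                        # sampling interval in fs, as in the text
n_steps = 4096
t = np.arange(n_steps) * dt_fs
freq_true = 0.05                   # cycles per fs; hypothetical test mode

# Synthetic dipole trajectory (three Cartesian components).
dipole = np.stack([np.cos(2 * np.pi * freq_true * t),
                   np.sin(2 * np.pi * freq_true * t),
                   np.zeros_like(t)], axis=1)

def autocorrelation(x):
    """<x(0) . x(t)> averaged over time origins, computed with zero-padded FFTs."""
    n = len(x)
    f = np.fft.rfft(x, n=2 * n, axis=0)
    acf = np.fft.irfft(f * np.conj(f), n=2 * n, axis=0)[:n].sum(axis=1)
    return acf / np.arange(n, 0, -1)    # divide by the number of origins for each lag

acf = autocorrelation(dipole - dipole.mean(axis=0))
window = np.hanning(2 * len(acf))[len(acf):]     # decaying half-window to reduce ringing
spectrum = np.abs(np.fft.rfft(acf * window))
freqs = np.fft.rfftfreq(len(acf), d=dt_fs)       # cycles per fs
peak = freqs[np.argmax(spectrum)]
```

The maximum of `spectrum` falls at the frequency of the input mode to within the frequency resolution. The Raman case would follow the same pattern with the polarizability tensor in place of the dipole.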

a Comparison of the infrared absorption spectra. b Comparison of the reduced anisotropic Raman spectra. The black circles are the experimental data44,45, and the lines are calculated with molecular dynamics trajectories performed by HotPP, MB-pol56, DeePMD46,47, and T-EANN42. Source data are provided as a Source Data file.

Discussion

In this work, we introduce HotPP, an E(n) equivariant high-order tensor message passing network based directly on Cartesian space. Compared to other Cartesian-space based high-order tensor networks, HotPP can utilize tensors of arbitrary order, providing enhanced expressive power. In contrast to high-order tensor networks based on spherical harmonics and coupled with CG coefficients, HotPP employs simple tensor contraction operations, resulting in a reduced number of parameters and better computational efficiency for small l (Supplementary Note 6). Moreover, the network’s output can be any-order tensor, enabling convenient prediction of vector or tensor properties. With its ability to achieve high accuracy while saving substantial computational time compared to first-principles calculations, HotPP holds great promise in scenarios such as molecular dynamics simulations and structure optimizations, where exploration of potential energy landscapes is essential. In future work, we would investigate approaches to eliminate redundancies in high-order Cartesian tensors to further enhance accuracy. Additionally, due to its E(n) equivariance (rather than E(3)), HotPP can be explored for high-dimensional structure optimization48 to expedite potential energy surface exploration. It can also serve as a foundation for generating models to directly generate structures or predict wave functions. Overall, HotPP is a promising neural network that we believe can facilitate further explorations in physical chemistry, biology, and related fields.

Methods

Software

All experiments were run with the HotPP software available at https://gitlab.com/bigd4/hotpp, git commit be36dae6c2b35148ba214d5626f9960a8eaf5a07. In addition, the PyTorch version was 2.0.1+cu117, the PyTorch Lightning version was 2.0.7, and the Python version was 3.9.17.

Datasets

ANI-1x: The ANI-1x dataset35,36 contains approximately five million diverse molecular conformations obtained through an active learning algorithm. We use 70% of the data in the ani1x.h5 dataset downloaded from https://doi.org/10.6084/m9.figshare.c.4712477.v1 to train the model, and then test on the COmprehensive Machine-learning Potential (COMP6) benchmark34. The reference energies are extracted from the “wb97x_dz.energy” label, and the forces from the “wb97x_dz.forces” label.

Carbon: The GAP-17 dataset37 consists of a training set comprising 4080 structures and a test set comprising 450 structures. The reference data were obtained from single-point DFT-LDA computations with dense reciprocal-space meshes. The GAP-20 dataset38 contains 6088 structures, which were calculated with the optB88-vdW dispersion-inclusive exchange–correlation functional. We use 70% of the data as the training set.

Water: This dataset includes the water monomer, the water dimer, the Zundel cation, and bulk liquid water41. Each system contains 1000 configurations, 70% of which are used for training. The water monomer, water dimer, and Zundel cation were calculated at the CCSD/d-aug-cc-pVTZ level, and bulk liquid water at the DFT/PBE-USPP level.

Training details

The maximum tensor order of the output layer, \(l_{\rm omax}^{t}\), and of the tensor product of the relative coordinates, \(l_{\rm rmax}^{t}\), are both set to 2; the effect of these values is discussed in Supplementary Note 4. The number of chemical embedding channels and of node message features is 64. The radial function is a 3-layer MLP of dimensions [64, 64, 64] with SiLU nonlinearity, and the basis consists of 8 trainable Bessel functions, similar to NequIP8. The readout layer is a 2-layer MLP of dimensions [64, 64] with SiLU nonlinearity. The models were trained with the Adam optimizer49 in PyTorch with default parameters. We used an initial learning rate of 0.01, which was reduced by an on-plateau scheduler based on the validation loss with a decay factor of 0.8.
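To make the radial basis concrete, the following is a minimal sketch of a Bessel-type radial basis. It assumes the form commonly used in DimeNet and NequIP, \(b_n(r)=\sqrt{2/r_c}\,\sin(n\pi r/r_c)/r\); the function name `bessel_basis` and this exact form are illustrative assumptions, not taken from the HotPP code. In a trainable version the frequencies \(n\pi\) would be learnable parameters initialized at these values.

```python
import math


def bessel_basis(r, r_cut, n_basis=8):
    """Evaluate n_basis Bessel-type radial functions at distance r.

    Assumed form (DimeNet/NequIP style, not confirmed for HotPP):
        b_n(r) = sqrt(2 / r_cut) * sin(n * pi * r / r_cut) / r
    """
    prefactor = math.sqrt(2.0 / r_cut)
    return [
        prefactor * math.sin(n * math.pi * r / r_cut) / r
        for n in range(1, n_basis + 1)
    ]


# Example: the 8 basis values at r = 1.5 Angstrom for a 4.5 Angstrom cutoff.
values = bessel_basis(r=1.5, r_cut=4.5)
# Every basis function vanishes at the cutoff radius.
values_at_cutoff = bessel_basis(r=4.5, r_cut=4.5)
```

These values would then be fed to the radial MLP; because each function goes to zero at the cutoff, an atom's contribution fades out continuously as it approaches the cutoff sphere (NequIP additionally multiplies by a polynomial envelope to smooth the derivatives).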

ANI-1x: We used 5 propagation layers, a radial cutoff of 4.5 Å, a batch size of 128, and the following loss function with \(\lambda_E = 0.1\) and \(\lambda_f = 1\):

Where \(N\), \(E\), \(\hat{E}\), and \(\mathbf{F}_{i\alpha}\) denote the number of atoms, the target energy, the predicted energy, and the force on atom \(i\) along direction \(\alpha\), respectively.
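As an illustration of how such a weighted energy and force loss can be evaluated, here is a minimal sketch for a single structure. The functional form is an assumption (squared per-atom energy error plus mean squared force-component error, a common choice for interatomic potentials) and is not necessarily the exact expression used in this work; the function name `energy_force_loss` is hypothetical.

```python
def energy_force_loss(e_pred, e_ref, f_pred, f_ref, lambda_e=0.1, lambda_f=1.0):
    """Weighted energy + force loss for one structure.

    Assumed form (illustrative only):
        lambda_e * ((E_pred - E_ref) / N)^2
      + lambda_f / (3N) * sum_{i, alpha} (F_pred[i][alpha] - F_ref[i][alpha])^2
    Forces are given as a list of N [fx, fy, fz] rows.
    """
    n_atoms = len(f_ref)
    energy_term = ((e_pred - e_ref) / n_atoms) ** 2
    force_sq = sum(
        (fp - fr) ** 2
        for row_pred, row_ref in zip(f_pred, f_ref)
        for fp, fr in zip(row_pred, row_ref)
    )
    force_term = force_sq / (3 * n_atoms)
    return lambda_e * energy_term + lambda_f * force_term


# Two-atom toy example: 1.0 energy error and a single force component off by 3.0.
f_ref = [[3.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
f_pred = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
loss = energy_force_loss(1.0, 0.0, f_pred, f_ref)
perfect = energy_force_loss(0.0, 0.0, f_ref, f_ref)
```

With \(\lambda_E = 0.1\) and \(\lambda_f = 1\), the force term dominates in this toy case: the energy contributes 0.1 × 0.25 and the forces contribute 9 / 6.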

Carbon: The cell is multiplied by a unit matrix S before the calculation. We used 5 propagation layers. The models with different numbers of parameters differ in that the model with more parameters updates both node information and edge information in the propagation layers. We used a radial cutoff of 3 Å, a batch size of 16, and the following loss function with \(\lambda_E = 0.5\), \(\lambda_f = 1\), and \(\lambda_v = 0.2\):

Where \(\boldsymbol{V}_{\alpha\beta}\) and \(\boldsymbol{s}_{\alpha\beta}\) denote the \(\alpha, \beta\) components of the virial and of the unit matrix S, respectively.

The NequIP model was trained on the same dataset on an NVIDIA GeForce RTX 4090 (24 GB). We used 4 layers with 64 channels for even and odd parity, for each of l = 1, l = 2, and l = 3. Radial features are generated using 8 trainable Bessel basis functions and a polynomial envelope for the cutoff with p = 6; the numbers of invariant layers and neurons were set to 2 and 64. These hyperparameter settings result in models with 389k, 971k, and 1,970k parameters, respectively. Models were trained with the Adam optimizer with default parameters. We initialized the learning rate to 0.01 and used an on-plateau scheduler based on the validation loss with a patience of 100 and a decay factor of 0.8. We used an exponential moving average with weight 0.99.

Water: We used 4 propagation layers, a radial cutoff of 4 Å, a batch size of 4, and the following loss functions:

Where \(\mathbf{P}\), \(\hat{\mathbf{P}}\), \(\boldsymbol{\alpha}\), and \(\hat{\boldsymbol{\alpha}}\) are the target dipoles, predicted dipoles, target polarizabilities, and predicted polarizabilities, respectively.

Molecular dynamics simulations

We constructed the PES of liquid water using HotPP with the dataset provided by DeePMD50, which includes 1888 structures computed with the strongly constrained and appropriately normed (SCAN) functional51. Our model gives RMSEs of 2 meV/atom for the per-atom energy, 49 meV/Å for the forces, and 11 meV/atom for the per-atom virials.

Next, we used LAMMPS52 to perform MLMD simulations of a system of 512 water molecules in a cubic supercell with a side length of ≈24.8 Å, chosen so that the density is close to 1 \({\rm g\,cm^{-3}}\). The system was equilibrated at ambient conditions using a Nosé-Hoover chain thermostat53,54 for 200 ps. Ten statistically independent initial conditions were then sampled from the last 100 ps of this simulation to initialize NVE trajectories of 200 ps length with a time step of 0.5 fs. The initial and final configurations of these 10 simulations can be found in Supplementary Data 1. The dipole moment and polarizability tensor were calculated every 1 fs.
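The numbers in this setup can be checked with a short calculation. The sketch below verifies that 512 water molecules in a 24.8 Å cubic box give a density close to 1 g cm⁻³, and counts the MD steps and property samples per NVE trajectory. The molar mass of water (18.015 g/mol) and the assumption that both trajectory end points are sampled are ours, not stated in the text.

```python
AVOGADRO = 6.02214076e23      # 1/mol (exact SI value)
WATER_MOLAR_MASS = 18.015     # g/mol, assumed value for H2O


def water_density(n_molecules, box_length_angstrom):
    """Mass density in g/cm^3 of n_molecules of water in a cubic box."""
    mass_g = n_molecules * WATER_MOLAR_MASS / AVOGADRO
    volume_cm3 = (box_length_angstrom * 1e-8) ** 3  # 1 Angstrom = 1e-8 cm
    return mass_g / volume_cm3


density = water_density(512, 24.8)

# One NVE trajectory: 200 ps at a 0.5 fs time step, properties every 1 fs.
trajectory_fs = 200_000.0
time_step_fs = 0.5
sample_interval_fs = 1.0
n_steps = round(trajectory_fs / time_step_fs)
stride = round(sample_interval_fs / time_step_fs)
n_samples = n_steps // stride + 1  # assumes both end points are sampled
```

The density comes out at about 1.004 g cm⁻³. Each trajectory takes 400,000 steps, and evaluating the dipole and polarizability on every second step gives roughly 200,000 samples per trajectory for the autocorrelation functions.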

Calculation of infrared absorption spectrum and Raman spectrum

Different types of vibrational spectra can be obtained by Fourier transforming the time autocorrelation function (ACF) of the appropriate physical property along a trajectory sampled by molecular dynamics. The IR absorption spectrum can be computed from the ACF of the dipole moment as:

Where \(\mu\) is the dipole moment, \(\omega\) is the vibrational frequency, and the angle brackets denote the average over time origins.
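The ACF-based procedure can be sketched in a few lines of plain Python. This is a minimal, unoptimized illustration: the function names `dipole_acf` and `acf_spectrum`, the Hann window, and the omission of any frequency-dependent prefactor or quantum correction are our assumptions, not details taken from this work. A production implementation would normally use FFTs.

```python
import math


def dipole_acf(dipoles, max_lag):
    """Return <mu(0) . mu(t)> for lags 0..max_lag-1, averaged over time origins."""
    n_frames = len(dipoles)
    acf = []
    for lag in range(max_lag):
        total = 0.0
        for t0 in range(n_frames - lag):
            a, b = dipoles[t0], dipoles[t0 + lag]
            total += a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
        acf.append(total / (n_frames - lag))
    return acf


def acf_spectrum(acf, dt, omegas):
    """Cosine transform of a Hann-windowed ACF at the given angular frequencies."""
    n_lags = len(acf)
    spectrum = []
    for omega in omegas:
        total = 0.0
        for k, value in enumerate(acf):
            window = 0.5 * (1.0 + math.cos(math.pi * k / n_lags))
            total += window * value * math.cos(omega * k * dt)
        spectrum.append(total * dt)
    return spectrum


# Synthetic check: a dipole oscillating along x at angular frequency omega0.
dt, omega0 = 1.0, 0.5
dipoles = [(math.cos(omega0 * k * dt), 0.0, 0.0) for k in range(400)]
acf = dipole_acf(dipoles, max_lag=200)
omegas = [0.1 * i for i in range(1, 11)]
spectrum = acf_spectrum(acf, dt, omegas)
peak_omega = omegas[spectrum.index(max(spectrum))]
```

For the synthetic signal the spectrum peaks at the driving frequency, as expected. Because the spectra here are rescaled to the experimental maximum, only the line shape, not the absolute intensity, matters in such a sketch.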

For the Raman spectrum, the isotropic component can be calculated by:

Where \(\alpha_{iso} \equiv {\rm Tr}(\alpha)/3\) is the isotropic component of the polarizability tensor. The anisotropic component is given by:

Where \(\beta\) is the anisotropic traceless tensor \(\beta = \alpha - \alpha_{iso} I\).
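The decomposition of the polarizability into its isotropic and anisotropic parts follows directly from the two definitions above. The sketch below applies them to a single 3×3 tensor; the function name `split_polarizability` and the example values are illustrative.

```python
def split_polarizability(alpha):
    """Split a 3x3 polarizability tensor into alpha_iso and the traceless beta.

    alpha_iso = Tr(alpha) / 3
    beta      = alpha - alpha_iso * I
    """
    alpha_iso = (alpha[0][0] + alpha[1][1] + alpha[2][2]) / 3.0
    beta = [
        [alpha[i][j] - (alpha_iso if i == j else 0.0) for j in range(3)]
        for i in range(3)
    ]
    return alpha_iso, beta


# Example tensor (arbitrary units) with one off-diagonal coupling.
alpha = [
    [1.0, 0.5, 0.0],
    [0.5, 2.0, 0.0],
    [0.0, 0.0, 3.0],
]
alpha_iso, beta = split_polarizability(alpha)
beta_trace = beta[0][0] + beta[1][1] + beta[2][2]
```

Applying this frame by frame along a trajectory yields the time series \(\alpha_{iso}(t)\) and \(\beta(t)\), whose autocorrelation functions give the isotropic and anisotropic Raman spectra.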

The IR spectrum and Raman spectrum are scaled to make the maximum values consistent with the experimental data.

Data availability

The datasets used in this paper (ANI-1x, carbon, and water) are publicly available (see “Methods”). Source data are provided with this paper.

Code availability

The HotPP code used in the current study is available at GitLab (https://gitlab.com/bigd4/hotpp) and Zenodo (https://doi.org/10.5281/zenodo.12952612), ref. 55.

References

Hohenberg, P. & Kohn, W. Inhomogeneous electron gas. Phys. Rev. 136, B864–B871 (1964).

Behler, J. & Parrinello, M. Generalized neural-network representation of high-dimensional potential-energy surfaces. Phys. Rev. Lett. 98, 146401 (2007).

Bartók, A. P., Payne, M. C., Kondor, R. & Csányi, G. Gaussian approximation potentials: the accuracy of quantum mechanics, without the electrons. Phys. Rev. Lett. 104, 136403 (2010).

Shapeev, A. V. Moment tensor potentials: a class of systematically improvable interatomic potentials. Multiscale Model. Simul. 14, 1153–1173 (2016).

Zhang, L., Han, J., Wang, H., Saidi, W. A. & Car, R. End-to-end Symmetry Preserving Inter-atomic Potential Energy Model for Finite and Extended Systems. In Proceedings of the 32nd International Conference on Neural Information Processing Systems. 4441–4451 (Curran Associates Inc., Red Hook, NY, USA, 2018).

Drautz, R. Atomic cluster expansion for accurate and transferable interatomic potentials. Phys. Rev. B 99, 014104 (2019).

Fan, Z. et al. GPUMD: a package for constructing accurate machine-learned potentials and performing highly efficient atomistic simulations. J. Chem. Phys. 157, 114801 (2022).

Batzner, S. et al. E(3)-equivariant graph neural networks for data-efficient and accurate interatomic potentials. Nat. Commun. 13, 2453 (2022).

Smith, J. S. et al. Approaching coupled cluster accuracy with a general-purpose neural network potential through transfer learning. Nat. Commun. 10, 2903 (2019).

Daru, J., Forbert, H., Behler, J. & Marx, D. Coupled cluster molecular dynamics of condensed phase systems enabled by machine learning potentials: liquid water benchmark. Phys. Rev. Lett. 129, 226001 (2022).

Musil, F. et al. Physics-inspired structural representations for molecules and materials. Chem. Rev. 121, 9759–9815 (2021).

Behler, J. Atom-centered symmetry functions for constructing high-dimensional neural network potentials. J. Chem. Phys. 134, 074106 (2011).

Gastegger, M., Schwiedrzik, L., Bittermann, M., Berzsenyi, F. & Marquetand, P. WACSF - weighted atom-centered symmetry functions as descriptors in machine learning potentials. J. Chem. Phys. 148, 241709 (2018).

Fan, Z. et al. Neuroevolution machine learning potentials: combining high accuracy and low cost in atomistic simulations and application to heat transport. Phys. Rev. B 104, 104309 (2021).

Fan, Z. Improving the accuracy of the neuroevolution machine learning potential for multi-component systems. J. Phys.: Condens. Matter 34, 125902 (2022).

Xu, N. et al. Tensorial properties via the neuroevolution potential framework: fast simulation of infrared and Raman spectra. J. Chem. Theory Comput. https://doi.org/10.1021/acs.jctc.3c01343 (2024).

Bartók, A. P., Kondor, R. & Csányi, G. On representing chemical environments. Phys. Rev. B 87, 184115 (2013).

Zhang, L. et al. Deep potential molecular dynamics: a scalable model with the accuracy of quantum mechanics. Phys. Rev. Lett. 120, 143001 (2018).

Pozdnyakov, S. N. et al. Incompleteness of atomic structure representations. Phys. Rev. Lett. 125, 166001 (2020).

Drautz, R. Atomic cluster expansion of scalar, vectorial, and tensorial properties including magnetism and charge transfer. Phys. Rev. B 102, 024104 (2020).

Novikov, I. S., Gubaev, K., Podryabinkin, E. V. & Shapeev, A. V. The MLIP package: moment tensor potentials with MPI and active learning. Mach. Learn.: Sci. Technol. 2, 025002 (2021).

Gilmer, J., Schoenholz, S. S., Riley, P. F., Vinyals, O. & Dahl, G. E. Neural message passing for Quantum chemistry. In Proceedings of the 34th International Conference on Machine Learning. Vol. 70, 1263–1272 (JMLR.org, Sydney, NSW, Australia, 2017).

Schütt, K. T. et al. SchNet: A continuous-filter convolutional neural network for modeling quantum interactions. Adv. Neural Inf. Process. Syst. 2017, 992–1002 (2017).

Thomas, N. et al. Tensor field networks: Rotation- and translation-equivariant neural networks for 3D point clouds. Preprint at http://arxiv.org/abs/1802.08219 (2018).

Unke, O. T. & Meuwly, M. PhysNet: a neural network for predicting energies, forces, dipole moments, and partial charges. J. Chem. Theory Comput. 15, 3678–3693 (2019).

Schütt, K., Unke, O. & Gastegger, M. Equivariant message passing for the prediction of tensorial properties and molecular spectra. In Proceedings of the 38th International Conference on Machine Learning (eds. Meila, M. & Zhang, T.) Vol. 139, 9377–9388 (PMLR, 2021).

Gasteiger, J., Groß, J. & Günnemann, S. Directional Message Passing for Molecular Graphs. In International Conference on Learning Representations (ICLR) (2020).

Thölke, P. & Fabritiis, G. D. Equivariant Transformers for Neural Network based Molecular Potentials. In International Conference on Learning Representations (2022).

Haghighatlari, M. et al. NewtonNet: a Newtonian message passing network for deep learning of interatomic potentials and forces. Digital Discov. 1, 333–343 (2022).

Batatia, I. et al. The Design Space of E(3)-Equivariant Atom-Centered Interatomic Potentials. Preprint at http://arxiv.org/abs/2205.06643 (2022).

Batatia, I., Kovacs, D. P., Simm, G. N. C., Ortner, C. & Csanyi, G. MACE: Higher Order Equivariant Message Passing Neural Networks for Fast and Accurate Force Fields. In Advances in Neural Information Processing Systems (eds. Oh, A. H., Agarwal, A., Belgrave, D. & Cho, K.) (2022).

Finkelshtein, B., Baskin, C., Maron, H. & Dym, N. A Simple and Universal Rotation Equivariant Point-cloud Network. In TAG-ML (2022).

Takamoto, S., Izumi, S. & Li, J. TeaNet: Universal neural network interatomic potential inspired by iterative electronic relaxations. Comput. Mater. Sci. 207, 111280 (2022).

Simeon, G. & Fabritiis, G. D. TensorNet: Cartesian Tensor Representations for Efficient Learning of Molecular Potentials. In Thirty-seventh Conference on Neural Information Processing Systems (2023).

Smith, J. S., Nebgen, B., Lubbers, N., Isayev, O. & Roitberg, A. E. Less is more: sampling chemical space with active learning. J. Chem. Phys. 148, 241733 (2018).

Smith, J. S. et al. The ANI-1ccx and ANI-1x data sets, coupled-cluster and density functional theory properties for molecules. Sci. Data 7, 134 (2020).

Deringer, V. L. & Csányi, G. Machine learning based interatomic potential for amorphous carbon. Phys. Rev. B 95, 094203 (2017).

Rowe, P., Deringer, V. L., Gasparotto, P., Csányi, G. & Michaelides, A. An accurate and transferable machine learning potential for carbon. J. Chem. Phys. 153, 034702 (2020).

Togo, A. First-principles phonon calculations with phonopy and phono3py. J. Phys. Soc. Jpn. 92, 012001 (2023).

Togo, A., Chaput, L., Tadano, T. & Tanaka, I. Implementation strategies in phonopy and phono3py. J. Phys.: Condens. Matter 35, 353001 (2023).

Grisafi, A., Wilkins, D. M., Csányi, G. & Ceriotti, M. Symmetry-adapted machine learning for tensorial properties of atomistic systems. Phys. Rev. Lett. 120, 036002 (2018).

Zhang, Y. et al. Efficient and accurate simulations of vibrational and electronic spectra with symmetry-preserving neural network models for tensorial properties. J. Phys. Chem. B 124, 7284–7290 (2020).

Zhang, Y., Xia, J. & Jiang, B. REANN: a PyTorch-based end-to-end multi-functional deep neural network package for molecular, reactive, and periodic systems. J. Chem. Phys. 156, 114801 (2022).

Bertie, J. E. & Lan, Z. Infrared intensities of liquids XX: the intensity of the OH stretching band of liquid water revisited, and the best current values of the optical constants of H2O(l) at 25 °C between 15,000 and 1 cm−1. Appl. Spectrosc. 50, 1047–1057 (1996).

Brooker, M. H., Hancock, G., Rice, B. C. & Shapter, J. Raman frequency and intensity studies of liquid H2O, H218O and D2O. J. Raman Spectrosc. 20, 683–694 (1989).

Zhang, C. et al. Modeling liquid water by climbing up Jacob’s ladder in density functional theory facilitated by using deep neural network potentials. J. Phys. Chem. B 125, 11444–11456 (2021).

Sommers, G. M., Calegari Andrade, M. F., Zhang, L., Wang, H. & Car, R. Raman spectrum and polarizability of liquid water from deep neural networks. Phys. Chem. Chem. Phys. 22, 10592–10602 (2020).

Pickard, C. J. Hyperspatial optimization of structures. Phys. Rev. B 99, 054102 (2019).

Kingma, D. P. & Ba, J. Adam: A Method for Stochastic Optimization. Preprint at https://doi.org/10.48550/arXiv.1412.6980 (2017).

Zhang, L. et al. Phase diagram of a deep potential water model. Phys. Rev. Lett. 126, 236001 (2021).

Sun, J., Ruzsinszky, A. & Perdew, J. P. Strongly constrained and appropriately normed semilocal density functional. Phys. Rev. Lett. 115, 036402 (2015).

Thompson, A. P. et al. LAMMPS - a flexible simulation tool for particle-based materials modeling at the atomic, meso, and continuum scales. Comput. Phys. Commun. 271, 108171 (2022).

Nosé, S. A molecular dynamics method for simulations in the canonical ensemble. Mol. Phys. 52, 255–268 (1984).

Hoover, W. G. Canonical dynamics: equilibrium phase-space distributions. Phys. Rev. A 31, 1695–1697 (1985).

Wang, J. et al. E(n)-equivariant cartesian tensor passing potential: HotPP. Zenodo https://doi.org/10.5281/zenodo.12952612 (2024).

Medders, G. R. & Paesani, F. Infrared and Raman spectroscopy of liquid water through “first-principles” many-body molecular dynamics. J. Chem. Theory Comput. 11, 1145–1154 (2015).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (grant number 12125404), the Basic Research Program of Jiangsu (Grants BK20233001 and BK20241253), the Jiangsu Funding Program for Excellent Postdoctoral Talent (2024ZB075), the Postdoctoral Fellowship Program of CPSF (Grant GZC20240695), and the Fundamental Research Funds for the Central Universities. Y. W. was partially supported by the Computational Chemical Sciences Center: Chemistry in Solution and at Interfaces (CSI) funded by DOE Award DE-SC0019394, and used resources of the National Energy Research Scientific Computing Center (NERSC) operated under Contract No. DE-AC02-05CH11231 using NERSC award ERCAP0021510. The calculations were carried out using supercomputers at the High-Performance Computing Center of Collaborative Innovation Center of Advanced Microstructures, the high-performance supercomputing center of Nanjing University.

Author information

Contributions

J. Sun conceived and led the project. J.W. and Y.W. derived the formulas and wrote the code. H.Z. helped to optimize the efficiency of the code. Z.Y., Z.L. and J. Shi trained the model on the different datasets. J.W., Y.W., H.-T.W., D.X. and J. Sun wrote the manuscript. All authors discussed the results and commented on the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Wilson Gregory and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, J., Wang, Y., Zhang, H. et al. E(n)-Equivariant cartesian tensor message passing interatomic potential. Nat Commun 15, 7607 (2024). https://doi.org/10.1038/s41467-024-51886-6
