Abstract

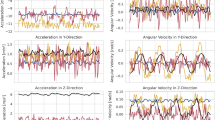

Human activity recognition (HAR) with wearable sensors is widely applied in health monitoring, fitness tracking, and smart environments, but the choice of sensor configuration remains a critical factor for balancing recognition performance with usability and comfort. Existing datasets often lack the full-body coverage required to systematically evaluate sensor placement strategies. We present a comprehensive dataset of 12 daily activities performed by 30 participants, recorded using 17 inertial measurement units (IMUs) distributed across the entire body. Each IMU provides tri-axial acceleration and angular velocity signals at 60 Hz, aligned within a standardized global coordinate system. The dataset further includes detailed anthropometric metadata, structured annotations of activity and effort level, and processing scripts to support feature extraction, segmentation, and baseline model training. Benchmark experiments with both machine learning and deep learning models demonstrate the usability of the dataset across multiple temporal windows and sensor subsets. This resource enables systematic evaluation of sensor layout strategies and supports the development of practical, generalizable HAR systems.
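The two evaluation axes named above, temporal windows and sensor subsets, can be illustrated with a minimal sketch. It uses only the figures stated in the abstract (17 IMUs, six channels each, 60 Hz). The column ordering, the helper names `select_sensors` and `sliding_windows`, the 50% overlap, and the synthetic data are assumptions made for illustration; they are not taken from the released processing scripts.

```python
import numpy as np

FS_HZ = 60        # sampling rate stated in the abstract
N_IMUS = 17       # number of IMUs stated in the abstract
CH_PER_IMU = 6    # 3 acceleration axes + 3 angular-velocity axes


def select_sensors(recording, imu_indices):
    """Keep only the channels of the chosen IMUs.

    Assumes (hypothetically) that columns are ordered IMU by IMU,
    six channels each; the real CSV layout may differ.
    """
    cols = [i * CH_PER_IMU + c for i in imu_indices for c in range(CH_PER_IMU)]
    return recording[:, cols]


def sliding_windows(recording, window_s, overlap=0.5):
    """Cut a (samples, channels) array into fixed-length windows."""
    win = int(round(window_s * FS_HZ))
    step = max(1, int(round(win * (1.0 - overlap))))
    starts = range(0, recording.shape[0] - win + 1, step)
    windows = [recording[s:s + win] for s in starts]
    if not windows:
        # Recording shorter than one window: return an empty batch.
        return np.empty((0, win, recording.shape[1]))
    return np.stack(windows)


# Synthetic 10 s recording standing in for one trial (not real data).
rng = np.random.default_rng(0)
trial = rng.standard_normal((10 * FS_HZ, N_IMUS * CH_PER_IMU))

subset = select_sensors(trial, imu_indices=[0, 5, 12])  # arbitrary example subset
windows = sliding_windows(subset, window_s=2.0, overlap=0.5)
print(windows.shape)  # (9, 120, 18)
```

Varying `window_s` and `imu_indices` in such a loop is one way to compare layouts under identical segmentation settings; the actual window lengths and subsets used in the benchmarks are those defined in the project repository.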

Data availability

The dataset described in this study is available on Figshare [29] (https://doi.org/10.6084/m9.figshare.30234940) under a non-commercial license. The repository contains one CSV file for each participant’s trial and a CSV file providing anthropometric information.

Code availability

All project code, including the data segmentation, feature extraction, and model training scripts, is released under a non-commercial license in the project’s repository at https://github.com/FudanBSRL/Comprehensive-IMU-Dataset.

References

Wang, Y., Cang, S. & Yu, H. A survey on wearable sensor modality centred human activity recognition in health care. Expert Syst. Appl. 137, 167–190, https://doi.org/10.1016/j.eswa.2019.04.057 (2019).

Bisio, I., Garibotto, C., Lavagetto, F. & Sciarrone, A. When eHealth Meets IoT: A Smart Wireless System for Post-Stroke Home Rehabilitation. IEEE Wirel. Commun. 26, 24–29, https://doi.org/10.1109/MWC.001.1900125 (2019).

Alhazmi, A. K. et al. Intelligent Millimeter-Wave System for Human Activity Monitoring for Telemedicine. Sensors 24, 268, https://doi.org/10.3390/s24010268 (2024).

Bock, M., Kuehne, H., Van Laerhoven, K. & Moeller, M. WEAR: An Outdoor Sports Dataset for Wearable and Egocentric Activity Recognition. Proc. ACM Interactive, Mobile, Wearable Ubiquitous Technol. 8, 175, https://doi.org/10.1145/3699776 (2023).

Liang, L. et al. WMS: Wearables-Based Multisensor System for In-Home Fitness Guidance. IEEE Internet Things J. 10, 17424–17435, https://doi.org/10.1109/JIOT.2023.3274831 (2023).

Czekaj, Ł. et al. Real-Time Sensor-Based Human Activity Recognition for eFitness and eHealth Platforms. Sensors 24, 3891, https://doi.org/10.3390/s24123891 (2024).

Hiremath, S. K. & Plötz, T. The Lifespan of Human Activity Recognition Systems for Smart Homes. Sensors 23, 7729, https://doi.org/10.3390/s23187729 (2023).

Li, Y., Yang, G., Su, Z., Li, S. & Wang, Y. Human activity recognition based on multienvironment sensor data. Inf. Fusion 91, 47–63, https://doi.org/10.1016/j.inffus.2022.10.015 (2023).

Qureshi, T. S., Shahid, M. H., Farhan, A. A. & Alamri, S. A systematic literature review on human activity recognition using smart devices: advances, challenges, and future directions. Artif. Intell. Rev. 58, 276, https://doi.org/10.1007/s10462-025-11275-x (2025).

Gomaa, W. & Khamis, M. A. A perspective on human activity recognition from inertial motion data. Neural Comput. Appl. 35, 20463–20568, https://doi.org/10.1007/s00521-023-08863-9 (2023).

Zappi, P. et al. Activity recognition from on-body sensors: Accuracy-power trade-off by dynamic sensor selection. in Verdone, R. (ed.) Wireless Sensor Networks vol. 4913 17–33, https://doi.org/10.1007/978-3-540-77690-1_2 (Springer, Berlin, 2008).

Reiss, A. & Stricker, D. Introducing a new benchmarked dataset for activity monitoring. in 2012 16th international symposium on wearable computers 108–109, https://doi.org/10.1109/ISWC.2012.13 (IEEE, Newcastle, UK, 2012).

Reiss, A. & Stricker, D. Creating and benchmarking a new dataset for physical activity monitoring. in PETRA ’12: Proceedings of the 5th International Conference on PErvasive Technologies Related to Assistive Environments, https://doi.org/10.1145/2413097.2413148 (Association for Computing Machinery, New York, NY, USA, 2012).

Roggen, D. et al. Collecting complex activity datasets in highly rich networked sensor environments. in 2010 Seventh International Conference on Networked Sensing Systems (INSS) 233–240, https://doi.org/10.1109/INSS.2010.5573462 (IEEE, Kassel, Germany, 2010).

Kwapisz, J. R., Weiss, G. M. & Moore, S. A. Activity recognition using cell phone accelerometers. ACM SIGKDD Explor. Newsl. 12, 74–82, https://doi.org/10.1145/1964897.1964918 (2011).

Anguita, D., Ghio, A., Oneto, L., Parra, X. & Reyes-Ortiz, J. L. A public domain dataset for human activity recognition using smartphones. in ESANN 2013 Proceedings, 21st European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning (Bruges, Belgium, 2013).

Chan, S. et al. CAPTURE-24: A large dataset of wrist-worn activity tracker data collected in the wild for human activity recognition. Sci. Data 11, 1135, https://doi.org/10.1038/s41597-024-03960-3 (2024).

Davoudi, A. et al. The effect of sensor placement and number on physical activity recognition and energy expenditure estimation in older adults: Validation study. JMIR mHealth uHealth 9, e23681, https://doi.org/10.2196/23681 (2021).

Dang, X., Li, W., Zou, J., Cong, B. & Guan, Y. Assessing the impact of body location on the accuracy of detecting daily activities with accelerometer data. iScience 27, 108626, https://doi.org/10.1016/j.isci.2023.108626 (2024).

Urukalo, D., Nates, F. M. & Blazevic, P. Sensor placement determination for a wearable device in dual-arm manipulation tasks. Eng. Appl. Artif. Intell. 137, 109217, https://doi.org/10.1016/j.engappai.2024.109217 (2024).

Tsukamoto, A., Yoshida, N., Yonezawa, T., Mase, K. & Enokibori, Y. Where Are the Best Positions of IMU Sensors for HAR? - Approach by a Garment Device with Fine-Grained Grid IMUs -. in UbiComp/ISWC ’23 Adjunct: Adjunct Proceedings of the 2023 ACM International Joint Conference on Pervasive and Ubiquitous Computing & the 2023 ACM International Symposium on Wearable Computing 445–450, https://doi.org/10.1145/3594739.3610736 (Association for Computing Machinery, New York, NY, USA, 2023).

Ainsworth, B. E. et al. Compendium of physical activities: An update of activity codes and MET intensities. Med. Sci. Sports Exerc. 32, https://doi.org/10.1097/00005768-200009001-00009 (2000).

Ainsworth, B. E. et al. 2011 compendium of physical activities: A second update of codes and MET values. Med. Sci. Sports Exerc. 43, 1575–1581, https://doi.org/10.1249/MSS.0b013e31821ece12 (2011).

Pedregosa, F. et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

He, K., Zhang, X., Ren, S. & Sun, J. Deep Residual Learning for Image Recognition. in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 770–778, https://doi.org/10.1109/CVPR.2016.90 (2016).

Chen, Z., Zhang, L., Cao, Z. & Guo, J. Distilling the Knowledge from Handcrafted Features for Human Activity Recognition. IEEE Trans. Ind. Informatics 14, 4334–4342, https://doi.org/10.1109/TII.2018.2789925 (2018).

Saeb, S., Lonini, L., Jayaraman, A., Mohr, D. C. & Kording, K. P. The need to approximate the use-case in clinical machine learning. Gigascience 6, 1–9, https://doi.org/10.1093/gigascience/gix019 (2017).

Paszke, A. et al. PyTorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 32 (2019).

Feng, M., Zhang, Q. & Fang, H. A Comprehensive IMU Dataset for Evaluating Sensor Layouts in Human Activity and Intensity Recognition. Figshare https://doi.org/10.6084/m9.figshare.30234940 (2025).

Acknowledgements

This research was supported by the National Natural Science Foundation of China (Grant No. 12532002) and the Shanghai Pilot Program for Basic Research - Fudan University, China (Grant No. 21TQ1400100-22TQ009).

Author information

Contributions

M.F. and H.F. designed the experimental protocol; M.F. performed data collection, annotation, technical validation, and drafted the manuscript. Q.Z. contributed to discussion of results and helped draft the manuscript; H.F. conceived of the study, supervised the study at all stages, commented on the approach and results, and critically revised the manuscript. All authors gave final approval for publication.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Feng, M., Zhang, Q. & Fang, H. A comprehensive IMU dataset for evaluating sensor layouts in human activity and intensity recognition. Sci Data (2026). https://doi.org/10.1038/s41597-026-06710-9

DOI: https://doi.org/10.1038/s41597-026-06710-9