Abstract

Crop yield production could be enhanced for agricultural growth if various plant nutrition deficiencies, and diseases are identified and detected at early stages. Hence, continuous health monitoring of plant is very crucial for handling plant stress. The deep learning methods have proven its superior performances in the automated detection of plant diseases and nutrition deficiencies from visual symptoms in leaves. This article proposes a new deep learning method for plant nutrition deficiencies and disease classification using a graph convolutional network (GNN), added upon a base convolutional neural network (CNN). Sometimes, a global feature descriptor might fail to capture the vital region of a diseased leaf, which causes inaccurate classification of disease. To address this issue, regional feature learning is crucial for a holistic feature aggregation. In this work, region-based feature summarization at multi-scales is explored using spatial pyramidal pooling for discriminative feature representation. Furthermore, a GCN is developed to capacitate learning of finer details for classifying plant diseases and insufficiency of nutrients. The proposed method, called Plant Nutrition Deficiency and Disease Network (PND-Net), has been evaluated on two public datasets for nutrition deficiency, and two for disease classification using four backbone CNNs. The best classification performances of the proposed PND-Net are as follows: (a) 90.00% Banana and 90.54% Coffee nutrition deficiency; and (b) 96.18% Potato diseases and 84.30% on PlantDoc datasets using Xception backbone. Furthermore, additional experiments have been carried out for generalization, and the proposed method has achieved state-of-the-art performances on two public datasets, namely the Breast Cancer Histopathology Image Classification (BreakHis 40\(\times \): 95.50%, and BreakHis 100\(\times \): 96.79% accuracy) and Single cells in Pap smear images for cervical cancer classification (SIPaKMeD: 99.18% accuracy). Also, the proposed method has been evaluated using five-fold cross validation and achieved improved performances on these datasets. Clearly, the proposed PND-Net effectively boosts the performances of automated health analysis of various plants in real and intricate field environments, implying PND-Net’s aptness for agricultural growth as well as human cancer classification.

Similar content being viewed by others

Introduction

Agricultural production plays a crucial role in the sustainable economic and societal growth of a country. High-quality crop yield production is essential for satisfying global food demands and better health. However, several key factors, such as environmental barriers, pollution, and climate change, adversely affect crop yield and quality. Nevertheless, poor soil-nutrition management causes severe plant stress, leading to different diseases and resulting in a substantial financial loss. Thus, plant nutrition diagnosis and disease detection at an early stage is of utmost importance for overall health monitoring of plants1. Nutrition management in agriculture is a decisive task for maintaining the growth of plants. In recent times, it has been witnessed the success of machine learning (ML) techniques for developing decision support systems over traditional manual supervision of agricultural yield. Moreover, nutrient management is critical for improving production growth, focusing on a robust and low-cost solution. Intelligent automated systems based on ML effectively build more accurate predictive models, which are relevant for improving agricultural production.

Nutrient deficiency in plants exhibits certain visual symptoms and may cause of poor crop yields2. Diagnosis of plant nutrient inadequacy using deep learning and related intelligent methods is an emerging area in precision agriculture and plant pathology3. Automated detection and classification of nutrient deficiencies using computer vision and artificial intelligence have been studied in the recent literature4,5,6,7,8. Diagnosis of nutrient deficiencies in various plants (e.g., rice, banana, guava, palm oil, apple, lettuce, etc.) is vital, because soil ingredients often can not provide the nutrients as required for the growth of plants9,10,11,12. Also, early stage detection of leaf diseases (e.g., potato, rice, cucumber, etc.) and pests are essential to monitor crop yield production13. A few approaches on disease detection and nutrient deficiencies in rice leaves have been developed and studied in recent times14,15,16,16,17,18. Hence, monitoring plant health, disease, and nutrition inadequacy could be a challenging image classification problem in artificial intelligence (AI) and machine learning (ML)19.

This paper proposes a deep learning method for plant health diagnosis by integrating a graph convolutional network (GCN) upon a backbone deep convolutional neural network (CNN). The complementary discriminatory features of different local regions of input leaf images are aggregated into a holistic representation for plant nutrition and disease classification. The GCNs were originally developed for semi-supervised node classification20. Over time, several variations of GCNs have been developed for graph structured data21. Furthermore, GCN is effective for message propagation for image and video data in various applications. In this direction, several works have been developed for image recognition using GCN22,23. However, little research attention has been given to adopting GCN especially for plant disease prediction and nutrition monitoring24. Thus, in this work, we have studied the effectiveness of GCN in solving the current problem of plant health analysis regarding nutrition deficiency and disease classification of several categories of plants.

The proposed method, called Plant Nutrition Deficiency and Disease Network (PND-Net), attempts to establish a correlation between different regions of the leaves for identifying infected and defective regions at multiple granularities. For this intent, region pooling in local contexts and spatial pooling in a pyramidal structure, have been explored for a holistic feature representation of subtle discrimination of plant health conditions. Other existing approaches have built the graph-based correlation directly upon the CNN features, but they have often failed to capture finer descriptions of the input data. In this work, we have integrated two different feature pooling techniques for generating node features of the graph. As a result, this mixing enables an enhanced feature representation which is further improved by graph layer activations in the hidden layers in the GCN. The effectiveness of the proposed strategy has been analysed with rigorous experiments on two plant nutrition deficiency and two plant disease classification datasets. In addition, the method has been tested on two different human cancer classification tasks for the generalization of the method. The key contributions of this work are:

-

A deep learning method, called PND-Net, is devised by integrating a graph convolutional module upon a base CNN to enhance the feature representation for improving the classification performances of unhealthy leaves.

-

A combination of fixed-size region-based pooling with multi-scale spatial pyramid pooling progressively enhances the feature aggregation for building a spatial relation between the regions via the neighborhood nodes of a spatial graph structure.

-

Experimental studies have been carried out for validating the proposed method on four public datasets, which have been tested for plant disease classification, and nutrition deficiency classification. For generalization of the proposed method, a few experiments have been conducted on the cervical cancer cell (SIPaKMeD) and breast cancer histopathology image (BreakHis 40\(\times \) and 100\(\times \)) datasets. The proposed PND-Net has achieved state-of-the-art performances on these six public datasets of different categories.

The rest of this paper is organized as follows: “Related works” summarizes related works. “Proposed method” describes the proposed methodology. The experimental results are showcased in “Results and performance analysis”, followed by the conclusion in “Conclusion”.

Related works

Several works have been contributed to plant disease detection, most of which were tested on controlled datasets, acquired in a laboratory set-up. Only a few works have developed unconstrained datasets considering realistic field conditions, which have been studied in this work. Here, a precise study of recent works has been briefed.

Methods on plant nutrition deficiencies

Bananas are one of the widely consumed staple foods across the world. An image dataset depicting the visual deficiency symptoms of eight essential nutrients, namely, boron, calcium, iron, potassium, manganese, magnesium, sulphur and zinc has been developed25. This dataset has been tested in this proposed work. The CoLeaf dataset contains images of coffee plant leaves and is tested for nutritional deficiencies recognition and classification26. The nutritional status of oil palm leaves, particularly the status of chlorophyll and macro-nutrients (e.g., N, K, Ca, and Mg) in the leaves from proximal multi spectral images, have been evaluated using machine learning techniques27. The identification and categorization of common macro-nutrient (e.g., nitrogen, phosphorus, potassium, etc.) deficiencies in rice plants has been addressed17,28. The percentage of micro nutrients deficiencies in rice plants using CNNs and Random Forest (RF) has been estimated28. Detection of biotic stressed rice leaves and abiotic stressed leaves caused by NPK (Nitrogen, Phosphorus, and Potassium) deficiencies have been experimented with using CNN29.

A supervised monitoring system of tomato leaves for predicting nutrient deficiencies using a CNN for recognizing and to classify the type of nutrient deficiency in tomato plants and achieved 86.57% accuracy30. The nutrient deficiency symptoms have been recognized in RGB images by using CNN-based (e.g., EfficientNet) transfer learning on orange with 98.52% accuracy and sugar beet with 98.65% accuracy31. Nutrient deficiencies in rice plants have reported 97.0% accuracy by combining CNN and reinforcement learning32. The R-CNN object detector has achieved accuracy of 82.61% for identifying nutrient deficiencies in chili leaves33. Feature aggregation schemes by combining the features with HSV and RGB for color, GLCM and LBP for texture, and Hu moments and centroid distance for shapes have been examined for nutrient deficiency identification in chili plants34. However, this method performed the best using a CNN with 97.76% accuracy. An ensemble of CNNs has reported 98.46% accuracy for detecting groundnut plant leaf images35. An intelligent robotic system with a wireless control to monitor the nutrition essentials of spinach plants in the greenhouse has been evaluated with 86% precision36. The nutrient status and health conditions of the Romaine Lettuce plants in a hydroponic setup using a CNN have been tested with 90% accuracy37. The identification and categorization of common macro-nutrient (e.g., nitrogen, phosphorus, potassium, etc.) deficiencies in rice plants using pixel ratio analysis in HSV color space has been evaluated with more than 90% accuracy17. A method for estimating leaf nutrient concentrations of citrus trees using unmanned aerial vehicle (UAV) multi-spectral images has been developed and tested by a gradient-boosting regression tree model with moderate precision38.

Approaches on plant diseases

The classification of healthy and diseased citrus leaf images using a (CNN) on the Platform as a Service (PaaS) cloud has been developed. The method has been tested using pre-trained backbones and proposed CNN, and attained 98.0% accuracy and 99.0% F1-score39. A modified transfer learning (TL) method using three pre-trained CNN has been tested for potato leaf disease detection and the DensNet169 has achieved 99.0% accuracy40. Likewise, a CNN-based transfer learning method has been adapted for detecting powdery mildew disease with 98.0% accuracy in bell pepper leaves41, and woody fruit leaves with 85.90% accuracy42. A two-stage transfer learning method has combined Faster-RCNN for leaf detection and CNN for maize plant disease recognition in a natural environment and obtained 99.70% F1-score43. A hybrid model integrating a CNN and random forest (RF) for multi-classifying rice hispa disease into distinct intensity levels44. A method of multi-classification of rice hispa illness has attained accuracy of 97.46% using CNN and RF44. An improved YOLOv5 network has been developed for cucumber leaf diseases and pest detection and reported 73.8% precision13. A fusion of VGG16 and AlexNet architecture has attained 95.82% testing accuracy for pepper leaf disease classification45. Likewise, the disease classification of black pepper has gained 99.67% accuracy using ResNet-1846. A ConvNeXt with an attention module, namely CBAM-ConvNeXt has improved the performance with 85.42% accuracy for classifying soybean leaf disease47. A channel extension residual structure with an adaptive channel attention mechanism and a bidirectional information fusion block for leaf disease classification48. This technique has brought off 99.82% accuracy on the plantvillage dataset. A smartphone application has been developed for detecting habanero plant disease and obtained 98.79% accuracy49. In addition, an ensemble method for crop monitoring system to identify plant diseases at the early stages using IoT enabled system has been presented with the best precision of 84.6%50. A dataset comprising five types of disorders of apple orchards has been developed, and the best accuracy is 97.3%, which has been tested using CNN51. A lightweight model using ViT structure has been developed for rice leaf disease classification and attained 91.70% F1-score52.

Methods on graph convolutional networks (GCN)

Though several deep learning approaches have been developed for plant health analysis yet, little progress has been achieved using GCN for visual recognition of plant diseases53. The SR-GNN integrates relation-aware feature representation leveraging context-aware attention with the GCN module22. Cervical cell classification methods have been developed by exploring the potential correlations of clusters through GCN54 and feature rank analysis55. On the other side, fusion of multiple CNNs, transfer learning and other deep learning methods have been developed for early detection of breast cancer56. This fusion method has achieved F1 score of 99.0% on ultrasound breast cancer dataset using VGG-16. In this work, a GCN-based method has been developed by capturing the regional importance of local contextual features in solving plant disease recognition and human cancer image classification challenges.

Proposed method

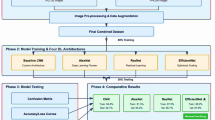

The proposed method, called PND-Net, combines deep features using CNN and GCN in an end-to-end pipeline as shown in Fig. 1. Firstly, a backbone CNN computes high-level deep features from input images. Then, a GCN is included upon the CNN for refining deep features using region-based pooling and pyramid pooling strategies for capturing finer details of contextual regions as multiple scales. Finally, a precise feature map is built for improving the performance.

Background of graph convolutional network (GCN)

GCNs have widely been used for several domains and applications such as node classification, edge attribute modeling, citation networks, knowledge graphs, and several other tasks through graph-based representation. A GCN could be formulated by stacking multiple graph convolutional layers with non-linearity upon traditional convolutional layers, i.e., CNN. In practice, this kind of stacking of GCN layers at a deeper level of a network enhances the model’s learning capacity. Moreover, graph convolutional layers are effective for alleviating overfitting issue and can address the vanishing gradient problem by adopting the normalization trick, which is a foundation of modeling GCN. A widely used multi-layer GCN algorithm was proposed by Kiff and Welling20, which has been adopted here. It explores an efficient and fast layer-wise propagation method relying on the first-order approximation of spectral convolutions on graph structures. It is scalable and apposite for semi-supervised node classification from graph-based data. A linear formulation of a GCN could be simplified which, in turn, is capable of parameter optimization at each layer by convolution with filter \(g_{\theta }\) and \(\theta \) parameters, which can further be optimized with a single parameter. Here, a simplified graph convolution has been concisely defined20.

The graph Laplacian (\(\Psi \)) could further be normalized to mitigate the vanishing gradients within a network.

where the binary adjacency matrix \(\tilde{\textbf{A}}=\textbf{A}+\textbf{I}_{{P}}\) denotes \(\textbf{A}\) with self-connections and \(\textbf{I}_{{P}}\) is the identity matrix, and degree matrix is \(\tilde{\textbf{D}}_{ii}= \sum _{j}^{\hspace{0.2 cm}} \tilde{\textbf{A}}_{ij}\), and X is an input data/signal to the graph. The simplified convoluted signal matrix \(\Omega \) is given as

where input features \(X \in \mathbb {R}^{{P}\times {C}}\), filter parameters \(\Theta \in \mathbb {R}^{{C}\times {F}}\), and \(\Omega \in \mathbb {R}^{{P}\times {F}}\) is the convoluted signal matrix. Here, P is the number of nodes, C is the input channels, F is the filters/feature maps. Now, this form of graph convolution (Eq. 3) is applied to address the current problem and is described in “namerefsec33”.

Convolutional feature representation

A standard backbone CNN is used for deep feature extraction from an input leaf image, denoted with the class label \(I_l\) \(\in \) \(\mathbb {R}^{h\times w\times 3}\) is passed through a base CNN for extracting the feature map, denoted as \(\textbf{F}\) \(\in \) \(\mathbb {R}^{h\times w\times C}\) where h, w, and C imply the height, width, and channels, respectively. However, the squeezed high-level feature map is not suitable for describing local non-overlapping regions. Hence, the output base feature map is spatially up-sampled to \(\textbf{F}\) \(\in \) \(\mathbb {R}^{H\times W\times C}\) and \(\omega \) number of distinct small regions are computed, given as \(\textbf{F}\) \(\in \) \(\mathbb {R}^{\omega \times h\times w\times C}\). These regions represents complementary information at different spatial contexts. However, due to fixed dimensions of regions, the importance of each region is uniformly distributed, which could be tuned further for extracting more distinguishable information. A simple pooling technique could further be applied at multiple scales for enhancing the spatial feature representation. For this intent, the region-pooled feature vectors are reshaped to convert them into an aggregated spatial feature space upon which multi-scale pyramidal pooling is possible. In addition, this kind of feature representation captures overall spatiality to understand the informative features holistically and solve the current problem.

Spatial pyramid pooling (SPP)

The SPP layer was originally introduced to alleviate the fixed-length input constraints of conventional deep networks, which effectively boosted the model’s performance57. Generally, a SPP layer is added upon the last convolutional layer of a backbone CNN. This pooling layer generates a fixed-length feature vector and afterward passes the feature map to a fully connected or classification layer. The SPP enhances feature aggregation capability at a deeper layer of a network. Most importantly, SPP applies multi-level spatial bins for pooling while preserving the spatial relevance of the feature map. It provides a robust solution through performance enhancement of diverse computer vision problems, including plant/leaf image recognition.

A typical region polling technique loses its spatial information while passing though a global average pooling (GAP) layer for making compatible with and plugging in the GCN. As a result, a region pooling with a GAP layer aggressively eliminates informativeness of regions and their correlation, and thus often it fails to build an effective feature vector. Also, the inter-region interactions are ignored with a GAP layer upon only region-based pooling. Therefore, it is essential to correlate the inter-region interactions for selecting essential features, which could further be enriched and propagated through the GCN layer activations.

Our objective is to utilize the advantage of multi-level pooling at different pyramid levels of \(n \times n\) bins on the top of fixed-size regions of the input image. As a result, the spatial relationships between different image regions are preserved, thereby escalating the learning capacity of the proposed PND-Net. The input feature space prior to pyramid pooling is given as \(\textbf{F}^{\omega \times (HW)\times C}\), which has been derived from \(\textbf{F}^{\omega \times H\times W\times C}\). It enables the selection of contextual features of neighboring regions (i.e., inter-regions) through pyramid pooling simultaneously. This little adjustment in the spatial dimension of input features prior to pooling captures the interactions between the local regions of input leaf disease. Experimental results reflect that pyramidal pooling indeed elevates image classification accuracy gain over region pooling only.

where \(\delta _i\) and \(\delta _j\) define the window sizes, which enable to pool a total of \(P=(i\times i) + (j\times j)\) feature maps after SPP, given as \(\textbf{F}^{P\times C}\). These feature maps are further fed into a GCN module, described next. The key components of proposed method are pictorially ideated in Fig. 1.

Graph convolutional network (GCN)

A graph \(G=({P},E)\), with P nodes and E edges, is constructed for feature propagation. A GCN is applied for building a spatial relation between the features through graph G. The nodes are characterized by deep feature maps, and the output \(\textbf{C}\) with the convoluted features per node. The edges E are described by an un-directed adjacency matrix \(\textbf{A} \in \mathbb {R}^{{P}\times {P}}\) for representing node-level interactions. This graph convolution has been applied to \(F_{SPP}\) (i.e., \(\textbf{F}^{P\times C}\)), described above. The layer-wise feature propagation rule is defined as:

\(l=0, 1, \dots , L-1\) is the number of layers, \(\textbf{W}^{(l)}\) is a weight matrix for the l-th layer. A non-linear activation function (e.g., ReLU) is denoted by \(\sigma (.)\). The symmetrically normalized adjacency matrix is \(\hat{\tilde{\textbf{A}}}=Q\tilde{\textbf{A}}Q; \) and \(Q=\tilde{\textbf{D}}^{-1/2}\) is the diagonal node degree matrix of \(\tilde{\textbf{A}}\) (defined in Eq. 3). Next, the reshaped convolutional feature map \({\textbf {F}}\) is fed into two layers of graph convolutions, subsequently which is capable of capturing local neighborhoods via the non-linear activations of rectified linear unit (ReLU) in the graph convolutional layers. The dimension of the output feature maps remains the same input of GCN layers, i.e., \( \textbf{G}^{(L)}\rightarrow {\textbf {F}}_{G}\) \(\in \mathbb {R}^{{P}\times {C}}\). However, the node features could be squeezed to a lower dimension, which may lose essential information pertinent to spatial modeling. Hence, the channel dimension is kept uniform within the network pipeline in our study. Afterward, the graph-based transformed feature maps (\({\textbf {F}}_{G}\)) are pooled using a GAP for selecting the most discriminative channel-wise feature maps of the nodes.

Classification module

Generally, regularization is a standard way to tackle the training-related challenges of any network, such as overfitting. Here, the layer normalization and dropout layers are interposed for handling overfitting issues as a regularization technique. Lastly, \({F}_{final}\) is passes through a softmax layer for computing the output probability of the predicted class-label \(\bar{b}\), corresponding to the actual-label \(b \in Y\) of object classes Y.

The categorical cross-entropy loss function (\(\mathscr {L}_{CE}\)) and the stochastic gradient descent (SGD) optimizer with \(10^{-3}\) learning rate has been chosen for experiments.

where \(Y_i\) is the actual class label and \(log\hat{Y}_i\) is the predicted class label by using softmax activation function \(\sigma (.)\) in the classification layer, and N is the total number of classes.

Results and performance analysis

At first, the implementation description is provided, followed by a summary of datasets. The experiments have been conducted using conventional classification and cross validation methods. The performances are evaluated using the standard well-known metrics: accuracy, precision, recall, and F1-score (Eq. 8).

where TP is the number of true positive, TN is the number of true negative, FP is the number of false positive, and FN is the number of false negative. However, accuracy is not a good assessment metric when the data distributions among the classes are imbalanced. To overcome such misleading evaluation, the precision and recall are useful metrics, based on which F1-score is measured. These three metrics are widely used for evaluating the predictive performance when classes are imbalanced. In addition, we have evaluated the performance using confusion matrix which provides a reliable performance assessment of our model. The performances have been compared with existing methods, discussed below.

Implementation details

A concise description about the model development regarding the hardware resources, software implementation data distribution, evaluation protocols, and related details are furnished below for easier understanding.

Summary of convolutional network architectures

The Inception-V3, Xception, ResNet-50, and MobileNet-V2 backbone CNNs with pre-trained ImageNet weights are used for convolutional feature computation from the input images. The Inception module focuses on increasing network depth using 5\(\times \)5, 3\(\times \)3, and 1\(\times \)1 convolutions58. Again, 5\(\times \)5 convolution has been replaced by factorizing into 3\(\times \)3 filter sizes59. Afterward, the Inception module is further decoupled the channel-wise and spatial correlations by point-wise and depth-wise separable convolutions, which are the building block of Xception architecture60. The separable convolution follows the depth-wise convolution for spatial (3\(\times \)3 filters) and point-wise convolution (1\(\times \)1 filters) for cross-channel aggregation into a single feature map. The Xception is a three-fold architecture developed with depth-wise separable convolution layers with residual connections. Whereas, the residual connection a.k.a. shortcut connection is the central idea of deep residual learning framework, widely known as ResNet architecture61. The residual learning represents an identity mapping through a shortcut connection following simple addition of feature maps of previous layers rendered using 3\(\times \)3 and 1\(\times \)1 convolutions. This identity mapping does not incur additional computational overhead and still able to ease degradation problem. In a similar fashion, the MobileNet-V2 uses bottleneck separable convolutions with kernel size 3\(\times \)3, and inverted residual connection62. It is a memory-efficient framework suitable for mobile devices.

These backbones are widely used in existing works on diverse image classification problems (e.g., human activity recognition, object classification, disease prediction, etc.) due to their superior architectural designs63,64 at reasonable computational cost. Here, these backbones are used for a fair performance comparison with the state-of-the-art methods developed for plant nutrition and disease classification65. We have customized the top-layers of base CNNs for adding the GCN module without alerting their inherent layer-wise building blocks, convolutional design such as the kernel-sizes, skip-connections, output feature dimension, and other design parameters. The basic characteristics of these backbone CNNs are briefed in Table 1. The network depth, model size and parameters have been increased due to the addition of GCN layers upon the base CNN accordingly, evident in Table 1.

Two GCN layers have been used with ReLU activation, and the feature size is the same as the base CNN’s output channel dimension. For example, the size of channel features of ResNet-50, Xception and Inception-V3 is 2048, which is kept the same dimension as GCN’s channel feature map. The adjacency matrix is developed considering overall spatial relation among different neighborhood regions as a complete graph. Therefore, each region is related with all other regions even if they are far apart which is helpful in capturing long-distant feature interactions and building a holistic feature representation via a complete graph structure. Batch normalization and a drop-out rate of 0.3 is applied in the overall network design to reduce overfitting.

Data pre-processing and data splitting techniques

The basic pre-processing technique provided by the Keras applications for each backbone has been applied. It is required to convert the input images from RGB to BGR, and then each color channel is zero-centered with respect to the ImageNet dataset, without any scaling. Data augmentation methods such as random rotation (± 25\(\circ \)), scaling (± 0.25), Gaussian blur, and random cropping with 224 \(\times \) 224 image-size from the input size of 256\(\times \)256 are applied on-the-fly for data diversity in image samples.

We have maintained the same train-test split provided with the datasets e.g., PlantDoc. However, other plant datasets does not provide any specific image distribution. Thus, we have randomly divided the datasets into train and test samples following a 70:30 split ratio which is complied in several works. The details of image distribution is provided in Table 3. For cross-validation, we have randomly divided the training samples into training and validation set with a 4:1 ratio i.e., five-fold cross validation in a disjoint manner, which is a standard techniques adopted in other methods66. The test set remains unaltered for both evaluation schemes for clear performance comparison. Finally, the average test accuracy of five executions on each dataset has been reported here as the overall performance of the PND-Net.

A summary of the implementation specification indicating the hardware and software environments, training hyper-parameters, data augmentations, and estimated time (milliseconds) of training and inference are specified in Table 2. Our model is trained with a mini-batch size of 12 for 150 epochs and divided by 5 after 100 epochs. However, no other criterion such as early stopping has been followed. The proposed method is developed in Tensorflow 2.x using Python.

Dataset description

The summary of four plant datasets used in this work are summarized in Table 3. These datasets are collected from public repositories such as the Mendeley Data and Kaggle.

-

The Banana nutrition deficiency dataset represents healthy samples and the visual symptoms of deficiency of the: Boron, Calcium, Iron, Magnesium, Potassium, Sulphur, and Zinc. The samples of this dataset are shown in Fig. 2. More details are provided in Ref25.

-

The Coffee nutrition deficiency dataset (CoLeaf-DB)26 represents healthy samples and the deficiency classes are: Boron, Calcium, Iron, Manganese, Magnesium, Nitrogen, Potassium, Phosphorus, and more deficiencies. The samples of dataset are illustrated in Fig. 3.

-

The Potato disease classes are: Virus, Phytopthora, Pest, Nematode, Fungi, Bacteria, and healthy. The samples of this dataset are shown in Fig. 4. The dataset is collected from the Mendeley67 repository.

-

The PlantDoc is a realistic plant disease dataset65, comprising with different disease classes of Apple, Tomato, Potato, Strawberry, Soybean, Raspberry, Grapes, Corn, Bell-pepper, and others. Examples are shown in Fig. 5.

-

The Breast Cancer Histopathology Image Classification (BreakHis)68 dataset with 40\(\times \) and 100\(\times \) magnifications contain 8-classes: adenosis, fibroadenoma, phyllodes tumor, and tubular adenoma; ductal carcinoma, lobular carcinoma, mucinous carcinoma, and papillary carcinoma. The samples of this dataset are exemplified in Fig. 6.

-

The SIPaKMeD69, containing 4050 single-cell images, which is useful for classifying cervical cells in Pap smear images, shown in Fig. 7. This dataset is categorized into five classes based on cytomorphological features.

Result analysis and performance comparison

A summary of the datasets with data distribution, and the baseline accuracy (%) achieved by aforesaid base CNNs are briefed in Table 3. The baseline model is developed using the pre-trained CNN backbones with ImageNet weights. A backbone CNN extracts the base output feature map which is pooled by a global average pooling layer and classified with a softmax layer. Four backbone CNNs with different design characteristics have used for generalizing our proposed method. The baseline accuracies are reasonable and consistent across various datasets, evident in Table 3.

Two different evaluation strategies i.e., general classification and k-fold cross validation (\(k=5\)) have been experimented. An average performance has been estimated from multiple executions on each dataset and reported here. The top-1 accuracies (%) of the proposed PND-Net comprising two-GCN layers with the feature dimension 2048, included on the top of different backbone CNNs, are given in Table 4. The overall performance of the PND-Net on all datasets significantly improved over the baselines. Clearly, it shows the efficiency of the proposed method. In addition, the PND-Net model has been tested with five-fold cross validation for a robust performance analysis (“Fivefold cross validation experiments”). These cross-validation results (Tables 5, 6 and 7) on each dataset could be considered as the benchmark performances using several metrics. Our method has driven the state-of-the-art performances on these datasets for plant disease and nutrition deficiency recognition.

An experimental study has been carried out on two more public datasets for human medical image analysis. The BreakHis with 40\(\times \) and 100\(\times \) magnifications68 and SIPaKMeD69 datasets have been evaluated for generalization. The SIPaKMeD dataset69 is useful for classifying cervical cells in pap smear images, illustrated in Fig. 7. This dataset is categorized into five classes based on cytomorphological features using the proposed PND-Net. The conventional classification results are given in Table 4, and the performances of cross validations are provided in Tables 6 and 7.

Fivefold cross validation experiments

The fivefold cross-validation experiments on various datasets have been conducted for evaluating the performance of PND-Net using the ResNet-50 and Xception backbones, and the results are given in Table 5, 6, and 7. The actual train set is divided into five disjoint subsets of images for each dataset. In each experiment, four out of five subsets are used for training and the remaining one is validated independently. Finally, the average validation result of five folds is reported.

The results of five-fold cross validation on potato leaf disease dataset are provided in Table 5. The numbers of potato leaf images in each fold for training, validation, and testing are 1608, 402, and 869, respectively. The results using different metrics are computed and the last row implies an average performance of cross validation on this dataset.

Likewise, the performance five-fold validation on the BreakHis-40\(\times \) dataset has been presented in Table 6. In this experiment, the number of training samples in each fold is 1280 images, and validation set containing remaining 320 images. The test set contains 400 images which remains the same as used in aforesaid other experiments. Each of the five-fold experiment has been validated and tested on the test set. Lastly, an average result of five-fold cross validation has been computed, and given in the last row of Table 6.

A similar experimental set-up of five-fold cross validation has been followed for other datasets. The average performances of PND-Net on these datasets are provided in Table 7. The average cross-validation results are better than the conventional classification approach on the potato disease (ResNet-50: 94.48%) and BreakHis-40\(\times \) (ResNet-50: 97.10%) datasets. The reason could be the variations in the validation set in each fold enhances the learning capacity of model due to training data diversity. As a result, improved performances have been achieved on diverse datasets. The results are consistent with the results of conventional classification method on other datasets as described above. The overall performances on different datasets validates the generalization capability of the proposed PND-Net.

Model complexity and visualization

The model parameters are computed in millions, as provided in Table 8. The model parameters have been estimated for three cases: (a) baseline i.e., the backbone CNN only; and the output feature dimension of GCN layers is (b) 1024 and (c) 2028. An average computational time of PND-Net using ResNet-50 has been estimated. The training time is 15.4 ms per image, and inference time is 5.8 ms per image, and model size is 122MB (given in Table 2). The confusion matrices on these four plant datasets are shown in Fig. 8, indicating an overall performance using ResNet-50. Also, the feature map distributions are clearly shown in different clusters in the t-SNE diagrams70 represented with two backbone models on the potato leaf dataset, shown in Fig. 10. The gradient-weighted class activation mapping (Grad-CAM)71 has been illustrated in Fig. 11 for visual explanations which clearly show the discriminative regions of different images.

Performance comparison

The highest accuracy on Banana nutrition classification was 78.76% and 87.89% using the raw dataset and an augmented version of the original dataset72. In contrast, our method has attained 84.0% using lightweight MobileNet-V2 and the best 90.0% using ResNet-50 on the raw dataset, implying a significant improvement in accuracy on this dataset.

The performances of PND-Net on the Coleaf-DB (Coffee dataset) are very similar, and the best accuracy (90.54%) is attained by the Xception. The differences of performances with other base CNNs are very small, implying a consistent performance. The elementary result using ResNet-50 reported on this recent public dataset is 87.75%26. Thus, our method has set new benchmark results on Coleaf-DB for further enhancement in the future. Likewise, the Potato Leaf Disease dataset is a new one67, collected from Mendeley data source. We are the first to provide in-depth results on this realistic dataset acquired in an uncontrolled environment.

A deep learning method has attained 81.53% accuracy using Xception and 78.34% accuracy using Inception-V3 backbone on the PlantDoc dataset73. In contrast, our PND-Net has attained 84.30% accuracy using Xception and 81.0% using Inception-V3, respectively. It evinces that PND-Net is more effective in discriminating plant diseases compared to the best reported existing methods. Clearly, the proposed graph-based network (PND-Net) is capable of distinguishing different types of nutrition deficiencies and plant diseases with a higher success rate in real-world public datasets.

The BreakHis dataset has been studied for categorizing into 4-classes and binary classification in several existing works. However, we have compared it with the works of classifying into 8 categories at the image-level for a fair comparison. The top-1 accuracy attained using Xception is 94.83%, whereas the state-of-the-art accuracy on this dataset is 93.40±1.8% achieved using a hybrid harmonization technique74. The accuracy reported is 92.8 ±2.1% using a class structure-based deep CNN75. The cross-validation results (ResNet-50: 97.10%) are improved over existing methods.

Several deep learning methods have been experimented with the SIPaKMeD dataset. A CNN-based method achieved 95.35 ± 0.42% accuracy69, a PCA-based technique obtained 97.87% accuracy for 5-class classification76, 98.30% using Xception77, and 98.26% using DarkNet-based exemplar pyramid deep model78. A GCN-based method has reported 98.37± 0.57% accuracy54. A few more comparative results have been studied in Ref79. In contrast, our method has achieved 98.98 ± 0.20% accuracy and 99.10% test accuracy with cross validation using Xception backbone on this dataset. The confusion matrices on both human disease datasets are shown in Fig. 9. Overall rigorous experimental results imply that the proposed method has achieved state-of-the-art performances on different types of datasets representing plant nutrition deficiency, plant disease, and human disease classification.

Ablation study

An in-depth ablation study has been carried out to observe the efficacy of key components of the PND-Net. Firstly, the significance of computing different local regions is studied. These fixed-size regional descriptors are combined to create for a holistic representation of feature maps over the baseline features. Notably, the region pooling technique has improved overall performances on all the datasets, e.g., the gain is more than 12% on the Banana nutrition deficiency dataset using ResNet-50 backbone. The results of this study are provided in Table 9.

Afterward, a component-level study has been evaluated by removing a module from the proposed PND-Net to observe the influence of the key component in performance. An ablation study depicting the significance of spatial pyramid pooling (SPP) layer has been conducted, and the results are shown in Table 10. As the selection of discriminatory information at multiple pyramidal structures has been avoided, the model might overlook finer details which could have been captured at multiple scales by the SPP layer. It causes an obvious degradation of the capacity of network architecture, which is evident from the performances. Thus, capturing multi-scale features is useful to select relevant features for effective learning of plant health conditions.

Next, the efficacious GCN modules are excluded from the network architecture, and then, experiments have been conducted with regional features selected by our composite pooling modules (i.e., regions + SPP) from upsampled high-level deep features of a base CNN. The results are provided in Table 11.

It is evident that the GCN module indeed improves performance remarkably. In the case of the Banana dataset using Xception backbone, the accuracy of PND-Net is 89.25%. Whereas, averting GCN layers, the degraded accuracy is 81.46%, implying 7.79% drop in accuracy. Even though, one GCN layer (Banana: 86.0%) does not suffice to render the state-of-the-art performance on these plant datasets. The results of considering one layer GCN on all datasets are demonstrated in Table 12. Indeed, two layers in GCN are beneficial in enhancing the performance over one GCN layer, which is evident in the literature22. Hence, two GCN layers are included in the proposed PND-Net model architecture.

A comparative study on different number of regions and the number of pyramid pooled feature vectors using ResNet-50 is shown in Fig. 12a, which clearly implies a gradual improvement in accuracy on the PlantDoc and Banana datasets. Lastly, the influences of different feature vector sizes in GCN layer activations have been studied. In this study, the channel dimensions of feature vectors 1024 and 2048 have been chosen for building the graph structures using ResNet-50 backbone, implying the same channel dimensions have been considered in the PND-Net architecture. The results (Fig. 12b of such variations provide insightful implications about the performance of GCN layers.

The performances of PND-Net with GCN output feature vector size of 1024 have been summarized in Table 13. The results are very competitive with GCN’s size of 2048. Thus, the model with 1024 GCN feature size could be preferred considering a trade off between the model parametric capacity with the performance. The detailed experimental studies imply overall performance boost on all datasets, and the proposed PND-Net achieves state-of-the-art results. In addition, new public datasets have been benchmarked for further enhancement.

However, other categories of images such as high resolution, hyperspectral, etc. have not been evaluated. One reason is unavailability of such plant datasets for public research. Also, data modalities such as soil-sensor information could be utilized for developing fusion based approaches. Several existing ensemble methods have used multiple backbones, which suffer from a higher computational complexity. Though, our method performs better than several existing works, yet, the computational complexity regarding model parameters and size of PND-Net could be improved. The reason is plugging the GCN module upon the backbone CNN, which incurs more parameters. To address this challenge, the graph convolutional layer could be simplified for reducing the model complexity. In addition, more realistic agricultural datasets representing field conditions such as occlusion, cluttered backgrounds, lighting variations, and others could be developed. These limitations of the proposed PND-Net will be explored in the near future.

Conclusion

In this paper, a deep network called PND-Net has been proposed for plant nutrition deficiency recognition using a GCN module, which is added on the top a CNN backbone. The performances have been evaluated on four image datasets representing the plant nutrition deficiencies and leaf diseases. These datasets have recently been introduced publicly for assessment. The network has been generalized by building the deep network using four standard backbone CNNs, and the network architecture has been improved by incorporating pyramid pooling over region-pooled feature maps and feature propagation via a GCN. We are the first to evaluate these nutrition inadequacy datasets for monitoring plant health and growth. Our method has attained the state-of-the-art performance on the PlantDoc dataset for plant disease recognition. We encourage the researcher for further enhancement on these public datasets for early stage detection of plant abnormalities, essential for sustainable agricultural growth. Furthermore, experiments have been conducted on the BreakHis (40\(\times \) and 100\(\times \) magnifications) and SIPaKMeD datasets, which are suitable for human health diagnosis. The proposed PND-Net have attained enhanced performances on these datasets too. In the future, new deep learning methods would be developed for early stage disease detection of plants and health monitoring with balanced nutrition using other data modalities and imaging techniques.

Data availability

The six datasets that support the findings which were used in this work are available using the given links. The Nutrient Deficient of Banana Plant dataset25 is collected from https://data.mendeley.com/datasets/7vpdrbdkd4/1. The CoLeaf-DB dataset26 for coffee leaf nutrition deficiency classification is available at https://data.mendeley.com/datasets/brfgw46wzb/1. The Potato Leaf Disease Dataset67 is available at https://data.mendeley.com/datasets/ptz377bwb8/1. The PlantDoc dataset65 is available at https://github.com/pratikkayal/PlantDoc-Dataset. The BreakHis dataset68 is available at https://web.inf.ufpr.br/vri/databases/breast-cancer-histopathological-database-breakhis/, and can also be downloaded from https://data.mendeley.com/datasets/jxwvdwhpc2/1. The original SIPaKMeD dataset69 can be found at https://www.cs.uoi.gr/marina/sipakmed.html, and Kaggle https://www.kaggle.com/datasets/mohaliy2016/papsinglecell.

Change history

05 August 2024

A Correction to this paper has been published: https://doi.org/10.1038/s41598-024-68260-7

References

Jung, M. et al. Construction of deep learning-based disease detection model in plants. Sci. Rep. 13, 7331 (2023).

Aiswarya, J., Mariammal, K. & Veerappan, K. Plant nutrient deficiency detection and classification-a review. In 2023 5th International Conference Inventive Research in Computing Applications (ICIRCA). 796–802 (IEEE, 2023).

Yan, Q., Lin, X., Gong, W., Wu, C. & Chen, Y. Nutrient deficiency diagnosis of plants based on transfer learning and lightweight convolutional neural networks Mobilenetv3-large. In Proceedings of the 2022 11th International Conference on Computing and Pattern Recognition. 26–33 (2022).

Sudhakar, M. & Priya, R. Computer vision based machine learning and deep learning approaches for identification of nutrient deficiency in crops: A survey. Nat. Environ. Pollut. Technol. 22 (2023).

Noon, S. K., Amjad, M., Qureshi, M. A. & Mannan, A. Use of deep learning techniques for identification of plant leaf stresses: A review. Sustain. Comput. Inform. Syst. 28, 100443 (2020).

Waheed, H. et al. Deep learning based disease, pest pattern and nutritional deficiency detection system for “Zingiberaceae’’ crop. Agriculture 12, 742 (2022).

Barbedo, J. G. A. Detection of nutrition deficiencies in plants using proximal images and machine learning: A review. Comput. Electron. Agric. 162, 482–492 (2019).

Shadrach, F. D., Kandasamy, G., Neelakandan, S. & Lingaiah, T. B. Optimal transfer learning based nutrient deficiency classification model in ridge gourd (Luffa acutangula). Sci. Rep. 13, 14108 (2023).

Sathyavani, R., JaganMohan, K. & Kalaavathi, B. Classification of nutrient deficiencies in rice crop using DenseNet-BC. Mater. Today Proc. 56, 1783–1789 (2022).

Haris, S., Sai, K. S., Rani, N. S. et al. Nutrient deficiency detection in mobile captured guava plants using light weight deep convolutional neural networks. In 2023 2nd International Conference on Applied Artificial Intelligence and Computing (ICAAIC). 1190–1193 (IEEE, 2023).

Munir, S., Seminar, K. B., Sukoco, H. et al. The application of smart and precision agriculture (SPA) for measuring leaf nitrogen content of oil palm in peat soil areas. In 2023 International Conference on Computer Science, Information Technology and Engineering (ICCoSITE). 650–655 (IEEE, 2023).

Lu, J., Peng, K., Wang, Q. & Sun, C. Lettuce plant trace-element-deficiency symptom identification via machine vision methods. Agriculture 13, 1614 (2023).

Omer, S. M., Ghafoor, K. Z. & Askar, S. K. Lightweight improved YOLOv5 model for cucumber leaf disease and pest detection based on deep learning. In Signal, Image and Video Processing. 1–14 (2023).

Kumar, A. & Bhowmik, B. Automated rice leaf disease diagnosis using CNNs. In 2023 IEEE Region 10 Symposium (TENSYMP). 1–6 (IEEE, 2023).

Senjaliya, H. et al. A comparative study on the modern deep learning architectures for predicting nutritional deficiency in rice plants. In 2023 IEEE IAS Global Conference on Emerging Technologies (GlobConET). 1–6 (IEEE, 2023).

Ennaji, O., Vergutz, L. & El Allali, A. Machine learning in nutrient management: A review. Artif. Intell. Agric. (2023).

Rathnayake, D., Kumarasinghe, K., Rajapaksha, R. & Katuwawala, N. Green insight: A novel approach to detecting and classifying macro nutrient deficiencies in paddy leaves. In 2023 8th International Conference Information Technology Research (ICITR). 1–6 (IEEE, 2023).

Asaari, M. S. M., Shamsudin, S. & Wen, L. J. Detection of plant stress condition with deep learning based detection models. In 2023 International Conference on Energy, Power, Environment, Control, and Computing (ICEPECC). 1–5 (IEEE, 2023).

Tavanapong, W. et al. Artificial intelligence for colonoscopy: Past, present, and future. IEEE J. Biomed. Health Inform. 26, 3950–3965 (2022).

Kipf, T. N. & Welling, M. Semi-supervised classification with graph convolutional networks. In International Conference on Learning Representations (2017).

Zhang, S., Tong, H., Xu, J. & Maciejewski, R. Graph convolutional networks: A comprehensive review. Comput. Soc. Netw. 6, 1–23 (2019).

Bera, A., Wharton, Z., Liu, Y., Bessis, N. & Behera, A. SR-GNN: Spatial relation-aware graph neural network for fine-grained image categorization. IEEE Trans. Image Process. 31, 6017–6031 (2022).

Qu, Z., Yao, T., Liu, X. & Wang, G. A graph convolutional network based on univariate neurodegeneration biomarker for Alzheimer’s disease diagnosis. IEEE J. Transl. Eng. Health Med. (2023).

Khlifi, M. K., Boulila, W. & Farah, I. R. Graph-based deep learning techniques for remote sensing applications: Techniques, taxonomy, and applications—A comprehensive review. Comput. Sci. Rev. 50, 100596 (2023).

Sunitha, P., Uma, B., Channakeshava, S. & Babu, S. A fully labelled image dataset of banana leaves deficient in nutrients. Data Brief 48, 109155 (2023).

Tuesta-Monteza, V. A., Mejia-Cabrera, H. I. & Arcila-Diaz, J. CoLeaf-DB: Peruvian coffee leaf images dataset for coffee leaf nutritional deficiencies detection and classification. Data Brief 48, 109226 (2023).

Chungcharoen, T. et al. Machine learning-based prediction of nutritional status in oil palm leaves using proximal multispectral images. Comput. Electron. Agric. 198, 107019 (2022).

Bhavya, T., Seggam, R. & Jatoth, R. K. Fertilizer recommendation for rice crop based on NPK nutrient deficiency using deep neural networks and random forest algorithm. In 2023 3rd International Conference on Artificial Intelligence and Signal Processing (AISP). 1–5 (IEEE, 2023).

Dey, B., Haque, M. M. U., Khatun, R. & Ahmed, R. Comparative performance of four CNN-based deep learning variants in detecting Hispa pest, two fungal diseases, and npk deficiency symptoms of rice (Oryza sativa). Comput. Electron. Agric. 202, 107340 (2022).

Cevallos, C., Ponce, H., Moya-Albor, E. & Brieva, J. Vision-based analysis on leaves of tomato crops for classifying nutrient deficiency using convolutional neural networks. In 2020 International Joint Conference on Neural Networks (IJCNN). 1–7 (IEEE, 2020).

Espejo-Garcia, B., Malounas, I., Mylonas, N., Kasimati, A. & Fountas, S. Using Efficientnet and transfer learning for image-based diagnosis of nutrient deficiencies. Comput. Electron. Agric. 196, 106868 (2022).

Wang, C., Ye, Y., Tian, Y. & Yu, Z. Classification of nutrient deficiency in rice based on cnn model with reinforcement learning augmentation. In 2021 International Symposium on Artificial Intelligence and its Application on Media (ISAIAM). 107–111 (IEEE, 2021).

Bahtiar, A. R., Santoso, A. J., Juhariah, J. et al. Deep learning detected nutrient deficiency in chili plant. In 2020 8th International Conference on Information and Communication Technology (ICoICT). 1–4 (IEEE, 2020).

Rahadiyan, D., Hartati, S., Nugroho, A. P. et al. Feature aggregation for nutrient deficiency identification in chili based on machine learning. Artif. Intell. Agric. (2023).

Aishwarya, M. & Reddy, P. Ensemble of CNN models for classification of groundnut plant leaf disease detection. Smart Agric. Technol. 100362 (2023).

Nadafzadeh, M. et al. Design, fabrication and evaluation of a robot for plant nutrient monitoring in greenhouse (case study: iron nutrient in spinach). Comput. Electron. Agric. 217, 108579 (2024).

Desiderio, J. M. H., Tenorio, A. J. F. & Manlises, C. O. Health classification system of romaine lettuce plants in hydroponic setup using convolutional neural networks (CNN). In 2022 IEEE International Conference on Artificial Intelligence in Engineering and Technology (IICAIET). 1–6 (IEEE, 2022).

Costa, L., Kunwar, S., Ampatzidis, Y. & Albrecht, U. Determining leaf nutrient concentrations in citrus trees using UAV imagery and machine learning. Precis. Agric. 1–22 (2022).

Lanjewar, M. G. & Parab, J. S. CNN and transfer learning methods with augmentation for citrus leaf diseases detection using PaaS cloud on mobile. Multimed. Tools Appl. 1–26 (2023).

Lanjewar, M. G., Morajkar, P. P. Modified transfer learning frameworks to identify potato leaf diseases. Multimed. Tools Appl. 1–23 (2023).

Dissanayake, A. et al. Detection of diseases and nutrition in bell pepper. In 2023 5th International Conference on Advancements in Computing (ICAC). 286–291 (IEEE, 2023).

Wu, Z., Jiang, F. & Cao, R. Research on recognition method of leaf diseases of woody fruit plants based on transfer learning. Sci. Rep. 12, 15385 (2022).

Liu, H., Lv, H., Li, J., Liu, Y. & Deng, L. Research on maize disease identification methods in complex environments based on cascade networks and two-stage transfer learning. Sci. Rep. 12, 18914 (2022).

Kukreja, V., Sharma, R., Vats, S. & Manwal, M. DeepLeaf: Revolutionizing rice disease detection and classification using convolutional neural networks and random forest hybrid model. In 2023 14th International Conference on Computing Communication and Networking Technologies (ICCCNT). 1–6 (IEEE, 2023).

Bezabih, Y. A., Salau, A. O., Abuhayi, B. M., Mussa, A. A. & Ayalew, A. M. CPD-CCNN: Classification of pepper disease using a concatenation of convolutional neural network models. Sci. Rep. 13, 15581 (2023).

Kini, A. S., Prema, K. & Pai, S. N. Early stage black pepper leaf disease prediction based on transfer learning using convnets. Sci. Rep. 14, 1404 (2024).

Wu, Q. et al. A classification method for soybean leaf diseases based on an improved convnext model. Sci. Rep. 13, 19141 (2023).

Ma, X., Chen, W. & Xu, Y. ERCP-Net: A channel extension residual structure and adaptive channel attention mechanism for plant leaf disease classification network. Sci. Rep. 14, 4221 (2024).

Babatunde, R. S. et al. A novel smartphone application for early detection of habanero disease. Sci. Rep. 14, 1423 (2024).

Nagasubramanian, G. et al. Ensemble classification and IoT-based pattern recognition for crop disease monitoring system. IEEE Internet Things J. 8, 12847–12854 (2021).

Nachtigall, L. G., Araujo, R. M. & Nachtigall, G. R. Classification of apple tree disorders using convolutional neural networks. In 2016 IEEE 28th International Conference on Tools with Artificial Intelligence (ICTAI). 472–476 (IEEE, 2016).

Borhani, Y., Khoramdel, J. & Najafi, E. A deep learning based approach for automated plant disease classification using vision transformer. Sci. Rep. 12, 11554 (2022).

Aishwarya, M. & Reddy, A. P. Dataset of groundnut plant leaf images for classification and detection. Data Brief 48, 109185 (2023).

Shi, J. et al. Cervical cell classification with graph convolutional network. Comput. Methods Prog. Biomed. 198, 105807 (2021).

Fahad, N. M., Azam, S., Montaha, S. & Mukta, M. S. H. Enhancing cervical cancer diagnosis with graph convolution network: AI-powered segmentation, feature analysis, and classification for early detection. Multimed. Tools Appl. 1–25 (2024).

Lanjewar, M. G., Panchbhai, K. G. & Patle, L. B. Fusion of transfer learning models with LSTM for detection of breast cancer using ultrasound images. Comput. Biol. Med. 169, 107914 (2024).

He, K., Zhang, X., Ren, S. & Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 37, 1904–1916 (2015).

Szegedy, C. et al. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 1–9 (2015).

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J. & Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2818–2826 (2016).

Chollet, F. Xception: Deep learning with depthwise separable convolutions. In IEEE Conference on Computer Vision Pattern Recognition. 1251–1258 (2017).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceeding of the IEEE Conference on Computer Vision and Pattern Recognition. 770–778 (2016).

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A. & Chen, L.-C. MobileNetv2: Inverted residuals and linear bottlenecks. In Proceeding of the IEEE Conference on Computer Vision and Pattern Recognition. 4510–4520 (2018).

Bera, A., Nasipuri, M., Krejcar, O. & Bhattacharjee, D. Fine-grained sports, yoga, and dance postures recognition: A benchmark analysis. IEEE Trans. Instrum. Meas. 72, 1–13 (2023).

Bera, A., Wharton, Z., Liu, Y., Bessis, N. & Behera, A. Attend and guide (AG-Net): A keypoints-driven attention-based deep network for image recognition. IEEE Trans. Image Process. 30, 3691–3704 (2021).

Singh, D. et al. PlantDoc: A dataset for visual plant disease detection. In Proceedings of the 7th ACM IKDD CoDS and 25th COMAD. 249–253 (ACM, 2020).

Hameed, Z., Garcia-Zapirain, B., Aguirre, J. J. & Isaza-Ruget, M. A. Multiclass classification of breast cancer histopathology images using multilevel features of deep convolutional neural network. Sci. Rep. 12, 15600 (2022).

Shabrina, N. H. et al. A novel dataset of potato leaf disease in uncontrolled environment. Data Brief 52, 109955 (2024).

Spanhol, F. A., Oliveira, L. S., Petitjean, C. & Heutte, L. A dataset for breast cancer histopathological image classification. IEEE Trans. Biomed. Eng. 63, 1455–1462 (2015).

Plissiti, M. E. et al. SIPAKMED: A new dataset for feature and image based classification of normal and pathological cervical cells in Pap smear images. In 2018 25th IEEE International Conf. Image Processing (ICIP). 3144–3148 (IEEE, 2018).

Van Der Maaten, L. Accelerating t-SNE using tree-based algorithms. J. Mach. Learn. Res. 15, 3221–3245 (2014).

Selvaraju, R. R. et al. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In 2017 IEEE International Conference on Computer Vision (ICCV). 618–626 (2017).

Han, K. A. M., Maneerat, N., Sepsirisuk, K. & Hamamoto, K. Banana plant nutrient deficiencies identification using deep learning. In 2023 9th International Conference on Engineering, Applied Sciences, and Technology (ICEAST). 5–9 (IEEE, 2023).

Ahmad, A., El Gamal, A. & Saraswat, D. Toward generalization of deep learning-based plant disease identification under controlled and field conditions. IEEE Access 11, 9042–9057 (2023).

Abdallah, N. et al. Enhancing histopathological image classification of invasive ductal carcinoma using hybrid harmonization techniques. Sci. Rep. 13, 20014 (2023).

Han, Z. et al. Breast cancer multi-classification from histopathological images with structured deep learning model. Sci. Rep. 7, 4172 (2017).

Basak, H., Kundu, R., Chakraborty, S. & Das, N. Cervical cytology classification using PCA and GWO enhanced deep features selection. SN Comput. Sci. 2, 369 (2021).

Mohammed, M. A., Abdurahman, F. & Ayalew, Y. A. Single-cell conventional pap smear image classification using pre-trained deep neural network architectures. BMC Biomed. Eng. 3, 11 (2021).

Yaman, O. & Tuncer, T. Exemplar pyramid deep feature extraction based cervical cancer image classification model using pap-smear images. Biomed. Signal Process. Control 73, 103428 (2022).

Jiang, H. et al. Deep learning for computational cytology: A survey. Med. Image Anal. 84, 102691 (2023).

Acknowledgements

This work is supported by the New Faculty Seed Grant (NFSG) and Cross-Disciplinary Research Framework (CDRF: C1/23/168) projects, Open Access facilities, and necessary computational infrastructure at the Birla Institute of Technology and Science (BITS) Pilani, Pilani Campus, Rajasthan, 333031, India. This work has been also supported in part by the project (2024/2204), Grant Agency of Excellence, University of Hradec Kralove, Faculty of Informatics and Management, Czech Republic.

Author information

Authors and Affiliations

Contributions

A. B. has played a pivotal role in this research. He has contributed in the model development, coding, generating results, and initial manuscript preparation. D.B. and O. K. both have contributed by reviewing the work meticulously, validated the model, and corrected the manuscript to enhance the clarity of the text description, and overall organization of the article. D.B. has carefully read the manuscript, and provided valuable inputs for improving the overall quality of the article.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this Article was revised: The Acknowledgements section in the original version of this Article was incomplete. It now reads: “This work is supported by the New Faculty Seed Grant (NFSG) and Cross-Disciplinary Research Framework (CDRF: C1/23/168) projects, Open Access facilities, and necessary computational infrastructure at the Birla Institute of Technology and Science (BITS) Pilani, Pilani Campus, Rajasthan, 333031, India. This work has been also supported in part by the project (2024/2204), Grant Agency of Excellence, University of Hradec Kralove, Faculty of Informatics and Management, Czech Republic."

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bera, A., Bhattacharjee, D. & Krejcar, O. PND-Net: plant nutrition deficiency and disease classification using graph convolutional network. Sci Rep 14, 15537 (2024). https://doi.org/10.1038/s41598-024-66543-7

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-024-66543-7

Keywords

This article is cited by

-

Towards smart farming: a real-time diagnosis system for strawberry foliar diseases using deep learning

BMC Plant Biology (2025)

-

Efficient deep learning-based tomato leaf disease detection through global and local feature fusion

BMC Plant Biology (2025)

-

Hybrid vision GNNs based early detection and protection against pest diseases in coffee plants

Scientific Reports (2025)

-

A neural architecture search optimized lightweight attention ensemble model for nutrient deficiency and severity assessment in diverse crop leaves

Scientific Reports (2025)

-

A high-throughput ResNet CNN approach for automated grapevine leaf hair quantification

Scientific Reports (2025)