Abstract

Because the whale optimization algorithm (WOA) easily becomes trapped in local optima and converges slowly, this paper proposes a diverse strategies whale optimization algorithm (DSWOA) and uses it to optimize the hyperparameters of a gated recurrent unit (GRU) network for better regression prediction. First, a novel adaptive t-distribution perturbation is applied to the optimal whale to expand its search space. Second, in the random search stage, a Cauchy walk is applied to the whale’s position, followed by reverse learning, which helps the algorithm escape local optima. Finally, a horizontal learning strategy is applied to all whales, using two randomly selected whales to update the current whale’s position. Experimental results suggest that DSWOA is highly effective in global optimization. DSWOA is then used to tune the hyperparameters of the GRU, and the experiments show that the resulting DSWOA-GRU produces promising results on multiple datasets, making it an effective tool for regression prediction tasks.


Introduction

Meta-heuristic algorithms are optimization algorithms that simulate intelligent phenomena such as biological evolution and collective behavior1. They exhibit strong global search capability and fast convergence2. On complex optimization problems, swarm intelligence optimization algorithms have shown unique advantages and are widely used in fields such as machine learning3, data mining4, image processing5, and engineering optimization6,7.

The Whale Optimization Algorithm (WOA)8 is an intelligent optimization algorithm that mimics the predatory behavior of whales to perform global search. However, the standard WOA still has weaknesses, including difficulty with high-dimensional problems and in balancing global and local search9. To address these shortcomings, researchers have proposed several improvement strategies. For example, uniformly distributed population initialization10, adaptive inertia weights11, and update rules borrowed from other algorithms12 have been used to improve WOA. Although these improvements have achieved considerable results, they also have limitations, such as high computational cost, complex parameter tuning, and entrapment in local optima.

Recognizing these shortcomings, this paper proposes an improved whale optimization algorithm. While retaining the advantages of WOA, it applies diverse strategies in three aspects, namely perturbation, walk, and learning strategies, to improve the algorithm’s convergence speed and global search efficiency.

First, the t-distribution perturbation strategy lets the algorithm adjust its search step size more flexibly during the search, avoiding premature convergence to local optima. At the same time, the Cauchy walk strategy enables the algorithm to explore new regions of the search space, improving its global search ability.

Second, the reverse learning strategy provides the algorithm with a new search direction, which helps it break free of the current search region and discover better solutions. The horizontal crossover strategy further improves search effectiveness by combining position information from several whales.

To improve the performance of the Gated Recurrent Unit (GRU) network, this paper uses the improved whale optimization algorithm to optimize its hyperparameters. GRU, a popular recurrent neural network structure, is highly effective in forecasting sequence data. However, tuning its hyperparameters is a complex nonlinear optimization problem. Using the improved algorithm, we find better GRU hyperparameter settings and thereby improve the accuracy of the prediction results.

Related work

Gated Recurrent Unit (GRU) neural networks have attracted significant attention for their ability to handle sequence data efficiently, making them a popular choice for tasks such as time series forecasting and pattern recognition. However, GRU models can still suffer from vanishing gradients and limited long-term memory, which can degrade their performance on complex tasks. To address these limitations, various studies have proposed hybrid approaches that combine GRU with other models or techniques. For instance, Sajjad et al. extracted features with a convolutional neural network (CNN) and fed them into a GRU to enhance its sequence learning ability13. Similarly, Pan et al. developed a water level prediction model that integrates GRU with a CNN to better capture spatial features14. In structural response analysis, Zhang et al. proposed a time-varying uncertain structural response analysis method that combines a GRU network with ensemble learning and uses an active learning strategy to improve computational efficiency while maintaining accuracy15. Furthermore, Yu et al. used a quantile-regression-based GRU model to predict reservoir parameters, achieving more accurate and reliable reservoir evaluations16. Despite these advances, optimizing GRU performance for different applications remains challenging. To this end, meta-heuristic algorithms have been explored to enhance GRU-based models by optimizing their parameters and improving their convergence.

Meta-heuristic algorithms search for the best solutions in a given search space by imitating principles found in nature. Because they are intelligent and flexible, they are widely used to solve complex optimization problems. Meta-heuristic algorithms can be broadly divided into three groups: physics-based, evolution-based, and swarm-based methods. Figure 1 illustrates this classification.

Physics-based meta-heuristic algorithms use physical principles for optimization. Typical representatives include simulated annealing (SA)17, charged system search (CSS)18, the gravitational search algorithm (GSA)19, central force optimization (CFO)20, and water evaporation optimization (WEO)21. Evolution-based meta-heuristic algorithms simulate biological evolution to solve optimization problems. Representative algorithms include the genetic algorithm (GA)22, differential evolution (DE)23, evolution strategies (ES)24, and genetic programming (GP)25. Swarm-based meta-heuristic algorithms are inspired by animal behavior in nature and solve challenging tasks through interaction among individuals. Typical algorithms include particle swarm optimization (PSO)26, the moth-flame optimization algorithm (MFO)27, the sine cosine algorithm (SCA)28, the dwarf mongoose optimization algorithm (DMOA)29, the sparrow search algorithm (SSA)30, and the whale optimization algorithm (WOA)8.

As a swarm intelligence algorithm, WOA stands out for its few control parameters, ease of implementation, and high optimization effectiveness. It has been applied in many areas, including path planning, image segmentation, and data classification. Although WOA has proven effective across a wide range of fields, it also suffers from slow convergence and insufficient accuracy. Hence, many researchers have proposed improvements, mainly by integrating other algorithms, adopting random walk or flight strategies, and using chaotic initialization31.

Combining WOA with other algorithms can compensate for its shortcomings. Korashy et al. used the leadership hierarchy of the grey wolf optimizer (GWO)32 to adjust the positions of search agents in WOA33. Similarly, Vu Hong et al. combined GWO with WOA into the hWOA model to solve the capacitated vehicle routing problem34. Abdel-Basset et al. combined the slime mold algorithm (SMA) with WOA to better handle the segmentation of COVID-19 chest X-ray images35. Strumberger et al. proposed a hybrid algorithm (WOA-AEFS) that combines WOA with the artificial bee colony (ABC)37 and firefly (FA)38 algorithms, which speeds up convergence and preserves population diversity in the early stage of the search36. Saxena et al. integrated the lion optimization algorithm (LOA)39 into WOA for route selection in wireless sensor networks40. Random walk or flight strategies are another important way to improve WOA. For example, several studies41,42,43,44 use Lévy flight or Gaussian random walks in WOA’s position update, which helps the algorithm quickly escape local optima, increases population diversity, and improves its global search capability. Chaotic maps exhibit complex dynamic behavior in nonlinear systems, which can keep WOA from falling into local optima and improve the precision of the search for the global optimum. Kaur et al. integrated chaos theory into the WOA optimization process to speed up global convergence and improve performance10. Si et al. introduced an improved logistic chaotic map to improve the initial whale population and boost the algorithm’s global search effectiveness45. Elmogy et al. proposed ANWOA, which uses two discrete chaotic maps whose periodic states are highly sensitive to initial conditions while remaining random and stable; this enables a good choice of initial population and improves global optimization46. Other WOA variants draw on physical phenomena. For instance, Tang et al. proposed a WOA variant that incorporates atom-like differential evolution and models whale behavior as quantum-mechanical behavior47.

Although these variants can substantially improve accuracy, they also have limitations. WOA hybridized with other algorithms often has a long running time, especially on multimodal functions. Flight and walk strategies are sensitive to step size: a step that is too large may skip over good local solutions. For chaotic-map strategies, selecting an appropriate map is itself a challenge. To clarify the research gaps, we evaluated the algorithms discussed above and summarize the findings in Table 1.

To combine the advantages of these improvements while avoiding their limitations, this paper proposes a diverse strategies WOA. The design aims, on the one hand, to expand the search space as much as possible and, on the other, to let each whale learn more from other whales; its performance is verified on benchmark test functions. The improved algorithm is then applied to data prediction, where it tunes the hyperparameters of a Gated Recurrent Unit (GRU) to improve predictive performance. GRU has many advantages in prediction: its gating mechanism can effectively capture long-term dependencies in sequences. However, its performance is sensitive to hyperparameters. The advantage of this optimization is therefore that it automatically finds a good combination of hyperparameters, avoiding tedious and subjective manual tuning.

Methods

This section introduces the principles of the models and algorithms used in this paper. Section "Gated Recurrent Unit" gives an overview of GRU, Section "Whale optimization algorithm" introduces WOA, and Section "Overview of the diverse strategies Whale Optimization Algorithm" discusses the strategies used to enhance WOA’s performance.

Gated Recurrent Unit

The Gated Recurrent Unit is a variant of the recurrent neural network (RNN) that, like the LSTM, primarily aims to address the vanishing gradient problem. A GRU has two gates: the reset gate and the update gate. The reset gate determines how new input information is combined with past memory, while the update gate controls how much of the previous memory is retained at the current time step.

The network architecture of the GRU is depicted in Fig. 2. In this figure, \({\text{x}}_{t}\) denotes the input at the current time step; \({\text{h}}_{t - 1}\) is the hidden state from the previous time step, which carries information from earlier in the sequence; \({\text{h}}_{t}\) is the hidden state of the current node; \({\text{r}}_{t}\) is the reset gate; and \({\text{z}}_{t}\) is the update gate. \(\sigma\) is the sigmoid function, which maps its input to the range (0, 1), and the tanh activation function maps its input to the range (−1, 1). The GRU is defined by Eqs. 1–4.
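To make the gating concrete, here is a minimal sketch of one GRU step for a scalar input and a scalar hidden state. It assumes the widely used formulation in which the update gate z weights the previous state and 1 − z weights the candidate state (the convention of PyTorch’s torch.nn.GRU); some papers swap z and 1 − z, so Eqs. 1–4 may differ in such details. The weight names are hypothetical.

```python
import math


def sigmoid(v: float) -> float:
    """Logistic function; maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))


def gru_step(x_t: float, h_prev: float, w: dict) -> float:
    """One GRU time step for a scalar input x_t and scalar hidden state h_prev.

    `w` holds hypothetical input weights (W_*), recurrent weights (U_*) and
    biases (b_*) for the update gate z, the reset gate r and the candidate n.
    """
    z = sigmoid(w["W_z"] * x_t + w["U_z"] * h_prev + w["b_z"])  # update gate
    r = sigmoid(w["W_r"] * x_t + w["U_r"] * h_prev + w["b_r"])  # reset gate
    # Candidate state: the reset gate scales how much past memory is mixed in.
    n = math.tanh(w["W_n"] * x_t + w["U_n"] * (r * h_prev) + w["b_n"])
    # The update gate decides how much of the previous state is retained.
    return (1.0 - z) * n + z * h_prev


if __name__ == "__main__":
    names = ("W_z", "U_z", "b_z", "W_r", "U_r", "b_r", "W_n", "U_n", "b_n")
    zero = {k: 0.0 for k in names}
    # All-zero weights give z = 0.5 and n = 0, hence h_t = 0.5 * h_prev.
    print(gru_step(1.0, 0.8, zero))  # 0.4
```

Because h_t is a convex combination of the previous state and a tanh output, it stays within (−1, 1) whenever h_prev does.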

Whale optimization algorithm

The whale optimization algorithm performs optimization in the search space by imitating the hunting behavior of humpback whales8. Like whales hunting in the ocean, it has three stages: encircling prey, the exploitation phase, and searching for prey. Suppose a whale group P contains k whales, so that \({\text{P}} = \{ {\text{P}}_{1} ,{\text{P}}_{2} ,...,{\text{P}}_{k} \}\). If the search space has N dimensions, the position of the i-th whale can be written as \({\text{P}}_{{\text{i}}} = \{ {\text{P}}_{(i,1)} ,{\text{P}}_{(i,2)} ,...,{\text{P}}_{(i,N)} \}\). WOA decides which of the three stages to execute using a random probability p and the control parameter A. The three stages of WOA are introduced below.

Encircling prey

When encircling prey, the whale group treats the whale with the best fitness value \({\text{P}}_{{{\text{best}}}}\) as the prey and surrounds it. This movement toward the current best solution is described by Eq. 5.

\({\text{D}}\) denotes the randomly weighted distance between the i-th whale and the optimal whale, given by Eqs. 6 and 7. \({\text{A}}\) is a dynamic control factor that changes with the iteration count; it contains a convergence factor \(a\) that decreases linearly from 2 to 0. \({\text{A}}\) is defined by Eqs. 8 and 9, where r2 is a random number in [0, 1], like r1 in Eq. 7, and t and T are the current and maximum iteration numbers, respectively.
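For reference, the standard encircling equations of WOA8 are written out below in this section’s notation. We assume Eqs. 5–9 take this form, with r1 in C and r2 in A as stated above; the original equations are not reproduced in this text.

```latex
\begin{aligned}
\mathbf{D} &= \left| C \cdot \mathbf{P}_{\mathrm{best}}(t) - \mathbf{P}_i(t) \right|, \qquad C = 2 r_1, \\
\mathbf{P}_i(t+1) &= \mathbf{P}_{\mathrm{best}}(t) - A \cdot \mathbf{D}, \\
A &= 2 a \, r_2 - a, \qquad a = 2 - \frac{2t}{T}.
\end{aligned}
```

Because A is drawn from [−a, a] and a shrinks linearly, |A| < 1 holds for every draw once t > T/2, so in the second half of the run the encircling update, rather than the random prey search, is used whenever p < 0.5.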

Exploitation phase

Like most meta-heuristic algorithms, WOA depends on two key stages: global exploration and local exploitation. Balancing these two stages is key to improving optimization accuracy48. Stronger exploration during the search reduces the risk of low solution accuracy and slow convergence. To further improve its search capability, WOA also uses a spiral search.

As in the encircling stage, all whales in this stage swim toward the optimal whale to find better positions. The difference is that they move along a spiral path, as expressed by Eq. 10.
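Written out in the same notation, the standard WOA spiral update8 reads as follows. We assume Eq. 10 has this form, where b is a constant that defines the shape of the logarithmic spiral and l is a random number in [−1, 1].

```latex
\mathbf{P}_i(t+1) = \mathbf{D}' \cdot e^{b l} \cos(2\pi l) + \mathbf{P}_{\mathrm{best}}(t),
\qquad
\mathbf{D}' = \left| \mathbf{P}_{\mathrm{best}}(t) - \mathbf{P}_i(t) \right|
```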

Search for prey (exploration phase)

Many meta-heuristic algorithms use random selection to explore for the optimal solution49. In the prey search phase, the whales change their movement pattern: instead of swimming toward the optimal whale, they swim toward a randomly chosen member of the group. This increases the likelihood that WOA discovers the global optimum and improves its efficiency in finding suitable prey. This process is given by Eqs. 11 and 12.
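In the standard WOA8, the search-for-prey update mirrors the encircling equations, with a randomly chosen whale P_rand in place of the best whale. We assume Eqs. 11 and 12 follow this form.

```latex
\mathbf{D} = \left| C \cdot \mathbf{P}_{\mathrm{rand}}(t) - \mathbf{P}_i(t) \right|,
\qquad
\mathbf{P}_i(t+1) = \mathbf{P}_{\mathrm{rand}}(t) - A \cdot \mathbf{D}
```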

In summary, WOA uses the probability p and the control parameter A to decide which update is executed. When p ≥ 0.5, it executes the exploitation (spiral) phase; when p < 0.5 and |A| < 1, it encircles prey; otherwise, it searches for prey.
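The stage-selection rule can be sketched as a single position-update function. This is a minimal illustration of the standard WOA8, not the authors’ code; drawing A, C, p, and l once per whale (rather than per dimension) and the default spiral constant b = 1 are our assumptions.

```python
import math
import random


def woa_update(pos, best, population, t, T, b=1.0, rng=random):
    """Return a new position for one whale using the three standard WOA stages.

    pos, best: lists of floats (current whale, best whale so far).
    population: list of positions from which a random whale is drawn.
    t, T: current and maximum iteration; b: spiral shape constant (assumed 1).
    """
    a = 2.0 - 2.0 * t / T              # decreases linearly from 2 to 0
    A = 2.0 * a * rng.random() - a     # A lies in [-a, a]
    C = 2.0 * rng.random()
    p = rng.random()

    if p >= 0.5:
        # Exploitation: spiral movement around the best whale.
        l = rng.uniform(-1.0, 1.0)
        return [abs(bj - xj) * math.exp(b * l) * math.cos(2.0 * math.pi * l) + bj
                for xj, bj in zip(pos, best)]
    if abs(A) < 1.0:
        # Encircling prey: move toward the best whale.
        return [bj - A * abs(C * bj - xj) for xj, bj in zip(pos, best)]
    # Search for prey: move relative to a randomly chosen whale instead.
    rand_whale = rng.choice(population)
    return [rj - A * abs(C * rj - xj) for xj, rj in zip(pos, rand_whale)]


if __name__ == "__main__":
    rng = random.Random(0)
    pop = [[rng.uniform(-5, 5) for _ in range(3)] for _ in range(5)]
    print(woa_update(pop[0], pop[1], pop, t=1, T=100, rng=rng))
```

At t = T the factor a is zero, so A = 0 and a whale sitting on the best position stays there in both the spiral and encircling branches.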

Overview of the diverse strategies Whale Optimization Algorithm

Although WOA performs well in many application areas, it has some limitations. First, the optimal whale’s position strongly influences the position updates of the whole population, but in later iterations it tends to become trapped in a local optimum50, so it needs to be perturbed to escape the local optimum region. Second, selecting random whales during the prey search phase cannot expand the search area in later iterations; in this case, a random walk followed by a reverse search of the whale’s position is an effective strategy44,51. Finally, WOA usually updates a whale’s position using only one other whale, which makes it difficult to learn from the rest of the population. Therefore, a horizontal crossover strategy that lets whales learn from other whales helps them move toward better positions.

The main innovations of DSWOA for global optimization are as follows:

- An adaptive t-distribution perturbation helps the optimal whale avoid getting stuck in local optima;
- In the prey search stage, each whale first performs a Cauchy walk to shift its position and then applies reverse learning to broaden its search area;
- With a horizontal crossover learning strategy, each whale updates its position using the positions of two other randomly chosen whales.

Advanced Whale Optimization Algorithm

This section details the improvement techniques of DSWOA and how DSWOA is used to improve GRU. Section "Adaptive t-distribution perturbation" introduces a novel t-distribution perturbation that actively perturbs the position of the optimal whale. Section "Cauchy random walk and reverse learning" describes how the positions of randomly selected whales are updated using Cauchy walk and reverse learning strategies. Section "Randomly weighted horizontal crossover strategy" introduces the randomly weighted horizontal crossover strategy, applies it to all whales, and uses a greedy strategy to ensure that updates do not worsen fitness. Section "Optimizing GRU using DSWOA" presents the overall framework for using DSWOA to improve GRU.

Adaptive t-distribution perturbation

The t-distribution is a probability distribution similar to the normal distribution but with heavier tails52. In meta-heuristics it is often used to increase search randomness, much like adding noise, so that the algorithm explores the solution space more extensively. The probability density function of the t-distribution is given by Eq. 13, where \(\nu\) is the number of degrees of freedom and Γ is the gamma function. With \(\nu = 1\) the t-distribution reduces to the Cauchy distribution, and as \(\nu\) grows it approaches the standard normal distribution, so the degrees of freedom control how heavy-tailed the perturbation is.

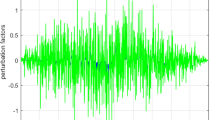
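As a sketch, the function below evaluates the standard Student’s t density (which we assume Eq. 13 matches) and draws t-distributed random numbers, the role played by trnd(t). The sampler is our own construction from standard-library routines, using the identity T = Z / sqrt(V / ν) with Z standard normal and V chi-square with ν degrees of freedom.

```python
import math
import random


def t_pdf(x: float, nu: float) -> float:
    """Student's t density with nu degrees of freedom.

    lgamma is used instead of gamma so that large nu (e.g. late iterations)
    does not overflow.
    """
    log_coef = math.lgamma((nu + 1.0) / 2.0) - math.lgamma(nu / 2.0)
    coef = math.exp(log_coef) / math.sqrt(nu * math.pi)
    return coef * (1.0 + x * x / nu) ** (-(nu + 1.0) / 2.0)


def t_random(nu: float, rng=random) -> float:
    """Draw one t-distributed number as Z / sqrt(V / nu), where Z is standard
    normal and V is chi-square(nu), i.e. Gamma(shape=nu/2, scale=2)."""
    z = rng.gauss(0.0, 1.0)
    v = rng.gammavariate(nu / 2.0, 2.0)
    return z / math.sqrt(v / nu)


if __name__ == "__main__":
    print(t_pdf(0.0, 1.0), 1.0 / math.pi)  # nu = 1: the Cauchy density at 0
    print(t_pdf(0.0, 1000.0))             # close to 1/sqrt(2*pi), the normal density at 0
```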

This paper proposes a novel adaptive t-distribution perturbation of the optimal whale that increases the probability of mutation in later iterations. Eqs. 14 and 15 define this perturbation, with \(m_{1} = 0.64\) and \(m_{2} = 0.04\) in this paper. \({\text{trnd}}(t)\) is a t-distributed random number whose degrees-of-freedom parameter equals the current iteration number t.
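A minimal sketch of how such a perturbation might be applied, under clearly stated assumptions: Eqs. 14 and 15 are not reproduced in this text, so the code uses the common form x' = x + x · trnd(t) found in other t-distribution mutation variants, draws one t-distributed number per dimension, and takes the mutation probability as an input `prob` instead of computing it from m1 and m2. The function names are hypothetical.

```python
import math
import random


def t_random(nu, rng=random):
    """t-distributed draw: standard normal over sqrt(chi-square(nu) / nu)."""
    return rng.gauss(0.0, 1.0) / math.sqrt(rng.gammavariate(nu / 2.0, 2.0) / nu)


def perturb_best(best, t, prob, rng=random):
    """Hypothetical t-distribution perturbation of the best whale.

    Degrees of freedom = iteration number t (t >= 1): heavy, Cauchy-like
    tails when t is small, near-Gaussian steps when t is large.
    `prob` stands in for the adaptive mutation probability of Eqs. 14-15,
    which is not reproduced here.
    """
    if rng.random() >= prob:
        return list(best)  # no mutation in this iteration
    # Assumed form x' = x + x * trnd(t), one draw per dimension.
    return [x + x * t_random(t, rng) for x in best]


if __name__ == "__main__":
    rng = random.Random(1)
    print(perturb_best([1.0, -2.0, 0.5], t=3, prob=0.5, rng=rng))
```

Note that this multiplicative form cannot move a coordinate that is exactly zero; whether Eqs. 14 and 15 share that property is not stated here.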

Cauchy random walk and reverse learning

As iterations proceed, the randomly selected whale in the prey search phase is increasingly likely to lie in a local optimum region. The Cauchy walk and reverse learning strategies allow the algorithm to explore a wider search space. A Cauchy walk is a random walk whose steps follow a heavy-tailed Cauchy distribution, which introduces greater randomness into the search53. The Cauchy walk used in this paper is given by Eq. 16, where c = 1, m = 0.5, and n = 0, and r is a random number in [0, 1] that provides randomness and variability.
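Eq. 16 is not shown in this text. A natural reading of its parameters, assumed in the sketch below, is inverse-CDF sampling of a Cauchy distribution, e = n + c · tan(π(r − m)); with c = 1, m = 0.5, and n = 0 this produces a standard Cauchy step. Adding an independent step to every coordinate is a further assumption for illustration.

```python
import math
import random


def cauchy_step(r, c=1.0, m=0.5, n=0.0):
    """Inverse-CDF Cauchy sample with location n and scale c; r is uniform in (0, 1)."""
    return n + c * math.tan(math.pi * (r - m))


def cauchy_walk(pos, rng=random):
    """Hypothetical walk: add an independent Cauchy step to every coordinate."""
    return [x + cauchy_step(rng.random()) for x in pos]


if __name__ == "__main__":
    rng = random.Random(7)
    print(cauchy_walk([0.0, 1.0, 2.0], rng))
```

About 6% of standard Cauchy steps exceed 10 in magnitude (the probability is (2/π)·arctan(1/10) ≈ 0.063), whereas a standard normal step almost never does; these rare long jumps are what let a walker leave a local optimum.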

The Cauchy walk is followed by reverse learning, which strengthens the search of optimization algorithms. Its main idea is to generate a mirrored (opposite) individual of each whale within the search bounds. This process is given by Eqs. 17 and 18, where T is the maximum number of iterations, u and l are the upper and lower bounds of the search space, respectively, and e is the Cauchy walk of Eq. 16.
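The core of reverse learning is the opposite point l + u − x of a position x inside the bounds [l, u]. The sketch below shows only this standard opposition-based step, plus keeping the better of the two points (a common but assumed selection rule); the T- and e-dependent terms of Eqs. 17 and 18 are not reproduced. Function names are hypothetical.

```python
def opposite(pos, lower, upper):
    """Opposite point of pos within the box [lower, upper]: l + u - x per dimension."""
    return [l + u - x for x, l, u in zip(pos, lower, upper)]


def clamp(pos, lower, upper):
    """Clip every coordinate into its bounds."""
    return [min(max(x, l), u) for x, l, u in zip(pos, lower, upper)]


def reverse_learning(pos, lower, upper, fitness):
    """Keep whichever of pos and its opposite has the lower fitness (minimization)."""
    cand = clamp(pos, lower, upper)
    opp = opposite(cand, lower, upper)
    return min(cand, opp, key=fitness)


if __name__ == "__main__":
    sphere = lambda p: sum(x * x for x in p)
    print(reverse_learning([4.0, -3.0], [-1.0, -5.0], [5.0, 5.0], sphere))
```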

Randomly weighted horizontal crossover strategy

In the original WOA, each whale learns only from the best-performing whale or a single random whale, so information from the rest of the population is underused. Therefore, after each iteration, this paper applies a learning strategy that lets each whale learn from other whales. Specifically, we propose a randomly weighted horizontal crossover strategy54 in which each whale updates its own position using information from two randomly chosen whales, as expressed by Eq. 19.

Here, \({\text{P}}_{rand1}\) and \({\text{P}}_{rand2}\) are two whales randomly selected from the whale group, and \({\text{r}}_{{1}}\) is a random number in the range 0 to 1. A greedy selection prevents the learning strategy from moving a whale in a worse direction: if the updated position has a smaller fitness value than the original, it replaces the original; otherwise, the original position is kept. The greedy strategy is given by Eq. 20.
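Eq. 19 is not reproduced in this text, so the sketch below assumes the simplest form consistent with the description: a convex combination r1 · P_rand1 + (1 − r1) · P_rand2 of two randomly selected whales, followed by the greedy acceptance of Eq. 20 for a minimization problem. The actual Eq. 19 may also involve the current whale’s own position; the function names are hypothetical.

```python
import random


def horizontal_crossover(population, rng=random):
    """Hypothetical form of Eq. 19: convex combination of two random whales."""
    p1, p2 = rng.sample(population, 2)
    r1 = rng.random()
    return [r1 * a + (1.0 - r1) * b for a, b in zip(p1, p2)]


def greedy_update(population, fitness, rng=random):
    """Build one candidate per whale and keep it only if it is strictly better.

    Candidates are drawn from the population as it was at the start of the sweep.
    """
    new_pop = []
    for whale in population:
        cand = horizontal_crossover(population, rng)
        new_pop.append(cand if fitness(cand) < fitness(whale) else whale)
    return new_pop


if __name__ == "__main__":
    rng = random.Random(5)
    pop = [[rng.uniform(-10, 10) for _ in range(3)] for _ in range(6)]
    sphere = lambda p: sum(x * x for x in p)
    print([round(sphere(w), 2) for w in greedy_update(pop, sphere, rng)])
```

The greedy rule guarantees that no whale’s fitness gets worse in a sweep, at the cost of one extra fitness evaluation per whale.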

The pseudo code of the DSWOA algorithm is presented in Algorithm 1.

Optimizing GRU using DSWOA

GRU’s advantages include a simple structure and fast training. However, the GRU model may still have problems when processing sequential data55. The most obvious is its sensitivity to hyperparameters: manual tuning rarely achieves ideal results and takes a lot of time. Optimization algorithms can therefore be used to find suitable hyperparameters.

To enhance the predictive ability of GRU, the DSWOA proposed in this paper is used to optimize two GRU hyperparameters: the learning rate and the number of hidden-layer neurons. The overall process is shown in Fig. 3. The data are divided into training and test sets before GRU training begins. The two hyperparameters of the GRU are mapped to the whale positions of DSWOA, and the loss function is mapped to the fitness value of DSWOA, so the whale with the smallest fitness value corresponds to the hyperparameters that minimize the GRU’s loss. After GRU training is complete, the test set is fed into the GRU to obtain its predictions.
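The position-to-hyperparameter mapping can be sketched as follows, using the search ranges given in the experimental settings (learning rate in [0.0001, 0.01], hidden neurons in [1, 200]). Clipping to the bounds and rounding the neuron count to an integer are assumptions, and `train_and_eval` is a hypothetical placeholder for training the GRU and returning its loss; a toy surrogate stands in for it in the usage lines.

```python
LR_BOUNDS = (1e-4, 1e-2)   # learning-rate search range from the experiments
HIDDEN_BOUNDS = (1, 200)   # hidden-neuron search range from the experiments


def decode(position):
    """Map a 2-D whale position to (learning_rate, hidden_units), clipping to bounds."""
    lr = min(max(position[0], LR_BOUNDS[0]), LR_BOUNDS[1])
    hidden = int(round(min(max(position[1], HIDDEN_BOUNDS[0]), HIDDEN_BOUNDS[1])))
    return lr, hidden


def make_fitness(train_and_eval):
    """Wrap a loss-returning training routine as a DSWOA fitness function.

    `train_and_eval(lr, hidden)` is a hypothetical callable that trains a GRU
    with these hyperparameters and returns its loss; lower is better.
    """
    def fitness(position):
        lr, hidden = decode(position)
        return train_and_eval(lr, hidden)
    return fitness


if __name__ == "__main__":
    # Toy surrogate loss standing in for real GRU training.
    toy = lambda lr, h: (lr - 0.003) ** 2 + ((h - 64) / 200) ** 2
    fitness = make_fitness(toy)
    print(decode([0.5, 500.0]), fitness([0.003, 64.2]))
```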

Experiment and result analysis

CEC 2017 benchmark functions

The performance of the improved whale optimization algorithm is evaluated on the classic CEC 2017 test suite, which offers benchmark functions of different types for assessing diverse optimization algorithms. It contains 30 single-objective test functions: unimodal functions (F1–F3), simple multimodal functions (F4–F10), hybrid functions (F11–F20), and composition functions (F21–F30). Note that F2 has been excluded from CEC 2017. The CEC 2017 problems become extremely difficult to solve as the dimension increases. Table 2 describes the 30 test functions of CEC 2017, and Fig. 4 shows plots of some of them.

Comparison of DSWOA with other meta-heuristic algorithms

This section compares the proposed DSWOA with classic optimization algorithms, using the mean and standard deviation as quality indicators. DSWOA is compared with six classic algorithms: GA22, MFO27, SCA28, DMOA29, SSA30, and WOA8. The experimental setup is as follows. The original settings of the CEC 2017 test functions are used: the dimension of F4 is 100, the dimension of F8, F9, and F30 is 10, and the dimension of the remaining functions is 30. The population size of WOA is set to 100 and the number of iterations to 1000; under this setting, each algorithm is run 30 times, and the mean and standard deviation of the best results are reported.

Table 3 reports the results. DSWOA outperforms WOA on all 29 CEC 2017 test functions, in several cases (e.g., F1, F7, F9, and F12) by orders of magnitude, which demonstrates the effectiveness of the improvements. It also compares favorably with the other classic algorithms, outperforming GA, MFO, SCA, DMOA, and SSA on 29, 23, 29, 20, and 17 of the 29 test functions, respectively. In addition to counting wins, the Friedman test is used to evaluate the compared algorithms and compute their average ranks, so that the differences between them can be compared directly; a smaller average rank indicates better performance. The Friedman test is performed on the mean value of each algorithm on functions F1–F30, and the results are shown in the last row of Table 3. DSWOA ranks first among the compared algorithms, indicating that it has the best overall performance.
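The average-rank computation behind the Friedman test can be sketched as below: algorithms are ranked on each function by mean error (rank 1 is best, ties share the average rank), ranks are averaged over functions, and the Friedman statistic is computed from the average ranks without tie correction. This is an illustrative implementation, not the authors’ script; SciPy’s scipy.stats.friedmanchisquare offers a library version of the test.

```python
def rank_row(values):
    """Rank values ascending (1 = best); tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0  # average of 1-based positions i..j
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def friedman(results):
    """results[f][a] = mean error of algorithm a on function f.

    Returns (average rank per algorithm, Friedman chi-square statistic).
    """
    n, k = len(results), len(results[0])
    rows = [rank_row(r) for r in results]
    avg = [sum(row[a] for row in rows) / n for a in range(k)]
    chi2 = 12.0 * n / (k * (k + 1)) * (sum(r * r for r in avg) - k * (k + 1) ** 2 / 4.0)
    return avg, chi2


if __name__ == "__main__":
    # Three functions, three algorithms; algorithm 0 wins everywhere.
    print(friedman([[1.0, 2.0, 3.0], [1.0, 3.0, 2.0], [1.0, 2.0, 3.0]]))
```

With these toy data the average ranks are 1, 7/3, and 8/3, and the statistic is 14/3.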

Comparison of DSWOA with other advanced WOA algorithms

To further illustrate the strengths of DSWOA, we compare it with several improved WOA algorithms. Each algorithm is again run 30 times to obtain the mean and standard deviation; Table 4 contains the results. DSWOA achieves better results than eWOA56, MWOA57, MSWOA58, and WOA-LFDE59 on 26, 28, 26, and 19 test functions, respectively. This indicates that the diverse strategies proposed in this paper effectively expand the search space and improve global optimization, and that DSWOA remains highly competitive with other improved WOA algorithms.

Table 5 presents the results of the Wilcoxon rank-sum test and the Friedman test on the data in Table 4. The difference indicator (Y/N) shows whether the performance of two algorithms differs significantly, while the Friedman mean rank provides an overall comparison of the algorithms. The results in Table 5 show that DSWOA differs significantly from the other improved WOA variants and ranks first on the Friedman index. Together with the comparison against the classic algorithms above, these results indicate that DSWOA performs strongly on optimization problems.
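For reference, a two-sided Wilcoxon rank-sum test with the normal approximation (no tie correction) can be sketched with the standard library, for example to compare the 30 final results of two algorithms on one function. A Y/N decision would then compare the p-value with a chosen significance level (0.05 is a common choice; the level used for Table 5 is not stated here). SciPy’s scipy.stats.ranksums implements the same normal-approximation test.

```python
import math


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test, normal approximation, no tie correction.

    Returns (z, p_value). Adequate for moderately large samples such as 30 runs.
    """
    pooled = sorted((v, g) for g, sample in ((0, x), (1, y)) for v in sample)
    ranks = []
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        ranks.extend([(i + j) / 2.0 + 1.0] * (j - i + 1))  # average rank for ties
        i = j + 1
    w = sum(r for r, (_, g) in zip(ranks, pooled) if g == 0)  # rank sum of x
    n1, n2 = len(x), len(y)
    mean = n1 * (n1 + n2 + 1) / 2.0
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))
    return z, p


if __name__ == "__main__":
    a = [float(v) for v in range(1, 31)]
    b = [float(v) for v in range(31, 61)]
    print(rank_sum_test(a, b))
```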

Besides optimization accuracy, convergence speed and stability are also important indicators of an optimization algorithm’s quality. To assess them, we examined the convergence behavior of DSWOA and the outliers in its results. Figure 5 shows the convergence curves of DSWOA and several improved algorithms on the CEC 2017 test functions. DSWOA converges clearly faster on most test functions, requiring fewer iterations than the other algorithms and consistently producing the best results.

Figure 6 shows box plots of several improved WOA algorithms on selected CEC 2017 test functions. DSWOA not only achieves better results on many test functions but also produces fewer outliers. Together with Table 4 and Fig. 5, this highlights DSWOA’s competitive strengths in accuracy, convergence speed, and stability.

Case study of industrial design problems

Description of the industrial design problems

To further demonstrate the advantages and practical applicability of DSWOA, this section selects several classic industrial design problems and compares DSWOA with DBO, PSO, GWO, SSA, WOA, and eWOA on them. Table 6 lists the names and details of the six industrial design problems60 selected in this paper. In Table 6, D is the problem dimension, g is the number of inequality constraints, h is the number of equality constraints, and \({\text{f}}_{\min }\) is the theoretical optimum.

Industrial optimization results and analysis

In the industrial design experiments, the population size of each algorithm is set to 30 and the number of iterations to 500. The best, mean, and worst values and the standard deviation of the results over 30 independent runs are reported in Table 7.

As shown in Table 7, DSWOA performs well on these engineering problems. Compared with classic or improved algorithms such as PSO and eWOA, DSWOA attains smaller best values on F1, F2, and F6, close to or equal to the theoretical optimum. On F3, F4, and F5, DSWOA ties with some algorithms on the best value but achieves the best mean value of all algorithms. This suggests that DSWOA is applicable to complex real-world design problems.

Experiments of DSWOA-GRU for prediction task

Datasets

Three datasets are used in the prediction experiments: the Concrete Compressive Strength data set (CCSD)61, the Real Estate Valuation data set (REVD)62, and the Airfoil Self-Noise data set (ASND)63. CCSD is used to predict the strength of concrete; it contains 1030 samples with 8 features, such as blast furnace slag and fly ash, and the target is the continuous-valued concrete compressive strength. REVD contains 414 samples from the Taipei housing market with 6 features, such as longitude, latitude, and distance to the nearest subway station; the target is the house price per unit area. ASND was collected by NASA from aerodynamic and acoustic tests of two- and three-dimensional airfoil blade sections in a wind tunnel. It contains 1503 samples, each with 5 features, such as frequency and angle of attack, and one target, the scaled sound pressure.

Statistic results of prediction

The prediction task is configured as follows. The number of GRU training epochs is 20 for all three networks: GRU, WOA-GRU, and DSWOA-GRU. The learning rate and the number of hidden-layer neurons of the GRU are encoded as whale positions in both WOA and DSWOA. The learning rate is searched in the range 0.0001 to 0.01, and the number of hidden-layer neurons in the range 1 to 200. Both WOA and DSWOA run for 10 iterations with 10 search agents. Each dataset is split into a 70% training set and a 30% test set, and performance is evaluated with the RMSE and MAE metrics. Model performance also depends on non-numerical hyperparameters, such as the choice of optimizer, which are difficult to tune with intelligent optimization algorithms. Therefore, this paper also evaluates the prediction performance of GRU under three optimizers: Adam, SGD, and RMSprop.
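The evaluation protocol (70/30 split, RMSE, MAE) can be sketched as follows. Whether the original split was shuffled is not stated, so shuffling with a fixed seed is an assumption here; pass shuffle=False for an order-preserving split.

```python
import math
import random


def train_test_split(rows, train_frac=0.7, shuffle=True, seed=0):
    """Split rows into train/test parts; shuffling a copy is optional."""
    data = list(rows)
    if shuffle:
        random.Random(seed).shuffle(data)
    cut = int(round(train_frac * len(data)))
    return data[:cut], data[cut:]


def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


if __name__ == "__main__":
    train, test = train_test_split(range(1030))  # CCSD size
    print(len(train), len(test))
    print(rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]), mae([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))
```

RMSE penalizes large errors more heavily than MAE, which is why the two metrics can rank models differently.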

Table 8 shows the prediction results. All three models obtain smaller RMSE and MAE values under Adam than under the other optimizers; in other words, Adam is the most effective of the three. Under the Adam optimizer, the GRU model achieved RMSE values of 8.3962, 8.767 and 6.9125, and MAE values of 6.6455, 6.4805 and 5.7969, on the CCSD, REVD and ASND datasets, respectively. The WOA-GRU model performs noticeably better, with RMSE values of 7.9345, 8.2594 and 6.6236 and MAE values of 6.1813, 6.1858 and 5.5044, respectively, indicating higher prediction accuracy than the base GRU model. The DSWOA-GRU model delivers the best overall results of the three models: on the CCSD, REVD and ASND datasets, it achieved RMSE values of 7.4919, 7.9265 and 6.6037, and MAE values of 5.9121, 5.7261 and 5.5402. It therefore attains the lowest RMSE on all three datasets and the lowest MAE on CCSD and REVD, while its MAE on ASND is slightly higher than that of WOA-GRU (5.5402 vs. 5.5044). Under the SGD optimizer, DSWOA-GRU achieved the best results on all three datasets, and under RMSprop it also held an advantage over GRU and WOA-GRU.
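The size of these gains can be checked directly from the Adam figures quoted above. The short calculation below, a Python sketch whose dictionary layout is only for illustration, computes the relative RMSE reduction of DSWOA-GRU over the base GRU and identifies the best model per dataset and metric.

```python
# Adam-optimizer results quoted in the text (order: CCSD, REVD, ASND).
RESULTS = {
    "GRU":       {"rmse": [8.3962, 8.767, 6.9125],  "mae": [6.6455, 6.4805, 5.7969]},
    "WOA-GRU":   {"rmse": [7.9345, 8.2594, 6.6236], "mae": [6.1813, 6.1858, 5.5044]},
    "DSWOA-GRU": {"rmse": [7.4919, 7.9265, 6.6037], "mae": [5.9121, 5.7261, 5.5402]},
}
DATASETS = ["CCSD", "REVD", "ASND"]


def relative_reduction(baseline, improved):
    """Percentage reduction of each `improved` value relative to `baseline`."""
    return [100.0 * (b - i) / b for b, i in zip(baseline, improved)]


def best_model(metric, k):
    """Name of the model with the smallest `metric` value on dataset index k."""
    return min(RESULTS, key=lambda name: RESULTS[name][metric][k])


gains = relative_reduction(RESULTS["GRU"]["rmse"], RESULTS["DSWOA-GRU"]["rmse"])
for name, g in zip(DATASETS, gains):
    print(f"{name}: DSWOA-GRU RMSE is {g:.2f}% lower than GRU")
# CCSD: 10.77%, REVD: 9.59%, ASND: 4.47%

for k, name in enumerate(DATASETS):
    print(name, "best RMSE:", best_model("rmse", k), "| best MAE:", best_model("mae", k))
```

The RMSE gain is largest on CCSD (about 10.8%) and smallest on ASND (about 4.5%), and ASND is also the only case where WOA-GRU has the lower MAE.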

These results indicate that integrating metaheuristic optimizers, such as WOA and its diverse strategies variant DSWOA, can effectively improve the performance of GRU-based models in regression prediction tasks. The DSWOA-GRU model, in particular, offers a promising approach to achieving higher prediction accuracy.

Conclusions

To tackle the slow convergence of the whale optimization algorithm and its tendency to become trapped in local optima, this study introduces a diverse strategies whale optimization algorithm (DSWOA). The improved algorithm combines adaptive t-distribution perturbation, Cauchy walk, reverse learning and an innovative horizontal crossover strategy to overcome the limitations of the traditional WOA. Tests on 29 CEC 2017 benchmark functions show its superiority in optimization accuracy, convergence speed and stability. Furthermore, applying the DSWOA-optimized GRU model to prediction tasks yields promising results: the improved WOA enhances the predictive ability of the GRU model, underscoring the potential of this combined method to boost the accuracy of regression prediction. Nevertheless, combining several strategies extends the model's runtime. Future work will therefore focus on reducing the model's runtime and investigating its potential applications in other fields.
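For readers who want to experiment, the sketch below shows commonly used forms of the four operators named above. It is not necessarily the exact DSWOA update rules: tying the t-distribution's degrees of freedom to the iteration count, scaling the Cauchy step to 0.1 of the search range, using the crisscross-style horizontal crossover, and keeping a candidate only when it improves the fitness (greedy selection) are all assumptions drawn from the wider WOA-variant literature. The function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def t_perturb(best, iteration, lb, ub):
    """Perturb the best whale with a t-distributed step.

    Degrees of freedom = current iteration (>= 1): heavy tails early
    (wide exploration), close to Gaussian later (fine local search).
    """
    step = rng.standard_t(df=iteration, size=best.shape)
    return np.clip(best + best * step, lb, ub)


def cauchy_walk(x, lb, ub, scale=0.1):
    """Cauchy random walk scaled to the search range (scale is an assumption)."""
    step = scale * (ub - lb) * rng.standard_cauchy(size=x.shape)
    return np.clip(x + step, lb, ub)


def opposite(x, lb, ub):
    """Opposition-based (reverse) learning: mirror x inside [lb, ub]."""
    return lb + ub - x


def horizontal_crossover(x_i, x_j, lb, ub):
    """Crisscross-style horizontal crossover of two randomly chosen whales."""
    r = rng.random(x_i.shape)                   # r in [0, 1)
    c = rng.uniform(-1.0, 1.0, size=x_i.shape)  # c in [-1, 1)
    child = r * x_i + (1.0 - r) * x_j + c * (x_i - x_j)
    return np.clip(child, lb, ub)


def greedy(fitness, current, candidate):
    """Keep the candidate only if it lowers the fitness (minimization)."""
    return candidate if fitness(candidate) < fitness(current) else current


def sphere(v):
    """Simple test objective: sum of squares."""
    return float(np.sum(v ** 2))


if __name__ == "__main__":
    lb, ub = np.full(3, -5.0), np.full(3, 5.0)
    x = np.array([2.0, -1.0, 3.0])
    partner = np.array([-1.0, 0.5, 1.0])
    for it in range(1, 6):
        x = greedy(sphere, x, t_perturb(x, it, lb, ub))
        x = greedy(sphere, x, cauchy_walk(x, lb, ub))
        x = greedy(sphere, x, opposite(x, lb, ub))
        x = greedy(sphere, x, horizontal_crossover(x, partner, lb, ub))
    print("final position:", x, "fitness:", sphere(x))
```

Greedy selection guarantees that the fitness of `x` never increases, which is one simple way to let aggressive jumps (Cauchy, opposition) explore without losing the current best.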

Data availability

The data used in this study are available from the corresponding author upon reasonable request.

References

Hu, J. et al. Swarm intelligence-based optimisation algorithms: an overview and future research issues. Int. J. Autom. Control. 14(5–6), 656–693 (2020).

Mashwani, W. K. et al. Large-scale global optimization based on hybrid swarm intelligence algorithm. J. Intell. Fuzzy Syst. 39(1), 1257–1275 (2020).

Cai, Y. & Sharma, A. Swarm intelligence optimization: an exploration and application of machine learning technology. J. Intell. Syst. 30(1), 460–469 (2021).

Khennak, I. et al. I/F-Race tuned firefly algorithm and particle swarm optimization for K-medoids-based clustering. Evol. Intell. 16(1), 351–373 (2023).

Xu, M. et al. Application of swarm intelligence optimization algorithms in image processing: A comprehensive review of analysis, synthesis, and optimization. Biomimetics. 8(2), 235 (2023).

Wei, D. et al. Preaching-inspired swarm intelligence algorithm and its applications. Knowl.-Based Syst. 211, 106552 (2021).

Liang, Z., Shu, T. & Ding, Z. A novel improved whale optimization algorithm for global optimization and engineering applications. Mathematics. 12(5), 636 (2024).

Mirjalili, S. & Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 95, 51–67 (2016).

Wang, J. & Wang, Y. An efficient improved whale optimization algorithm for optimization tasks. Eng. Lett. 32(2) (2024).

Kaur, G. & Arora, S. Chaotic whale optimization algorithm. J. Comput. Des. Eng. 5(3), 275–284 (2018).

Li, M. et al. Whale optimization algorithm based on dynamic pinhole imaging and adaptive strategy. J. Supercomput. 1–31 (2022).

Fan, Q. et al. ESSAWOA: enhanced whale optimization algorithm integrated with salp swarm algorithm for global optimization. Eng. Comput. 38(Suppl 1), 797–814 (2022).

Sajjad, M. et al. A novel CNN-GRU-based hybrid approach for short-term residential load forecasting. IEEE Access. 8, 143759–143768 (2020).

Pan, M. et al. Water level prediction model based on GRU and CNN. IEEE Access. 8, 60090–60100 (2020).

Zhang, K. et al. A GRU-based ensemble learning method for time-variant uncertain structural response analysis. Comput. Methods Appl. Mech. Eng. 391, 114516 (2022).

Yu, Z. et al. Gated recurrent unit neural network (GRU) based on quantile regression (QR) predicts reservoir parameters through well logging data. Front. Earth Sci. 11, 1087385 (2023).

Kirkpatrick, S., Gelatt, C. D. & Vecchi, M. P. Optimization by simulated annealing. Science. 220, 671–680 (1983).

Kaveh, A. & Talatahari, S. A novel heuristic optimization method: Charged system search. Acta Mech. 213, 267–289 (2010).

Rashedi, E., Nezamabadi-Pour, H. & Saryazdi, S. GSA: a gravitational search algorithm. Inf. Sci. 179(13), 2232–2248 (2009).

Formato, R. Central force optimization: a new metaheuristic with applications in applied electromagnetics. Prog. Electromagn. Res. 77, 425–491 (2007).

Kaveh, A. & Bakhshpoori, T. Water evaporation optimization: A novel physically inspired optimization algorithm. Comput. Struct. 167, 69–85 (2016).

Holland, J. H. Genetic algorithms. Sci. Am. 267(1), 66–73 (1992).

Storn, R. & Price, K. Differential evolution—A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 11, 341–359 (1997).

Rechenberg, I. Evolutionsstrategien Vol. 8, 83–114 (Springer, 1978).

Koza, J. R. Genetic programming as a means for programming computers by natural selection. Stat. Comput. 4(2), 87–112 (1994).

Eberhart, R. & Kennedy, J. A new optimizer using particle swarm theory. In MHS’95. Proceedings of the Sixth International Symposium on Micro Machine and Human Science. 39–43 (IEEE, 1995).

Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl.-Based Syst. 89, 228–249 (2015).

Mirjalili, S. SCA: a sine cosine algorithm for solving optimization problems. Knowl.-Based Syst. 96, 120–133 (2016).

Agushaka, J. O., Ezugwu, A. E. & Abualigah, L. Dwarf mongoose optimization algorithm. Comput. Methods Appl. Mech. Eng. 391, 114570 (2022).

Xue, J. & Shen, B. A novel swarm intelligence optimization approach: sparrow search algorithm. Syst. Sci. Control Eng. 8(1), 22–34 (2020).

Xu, D. G., Wang, Z. Q., Guo, Y. X. & Xing, K. J. Review of whale optimization algorithm. Appl. Res. Comput. 40(02), 328–336. https://doi.org/10.19734/j.issn.1001-3695.2022.06.034 (2023).

Mirjalili, S., Mirjalili, S. M. & Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 69, 46–61 (2014).

Korashy, A. et al. Hybrid whale optimization algorithm and grey wolf optimizer algorithm for optimal coordination of direction overcurrent relays. Electr. Power Compon. Syst. 47(6–7), 644–658 (2019).

Pham, V. H. S., Nguyen, V. N. & Nguyen Dang, N. T. Hybrid whale optimization algorithm for enhanced routing of limited capacity vehicles in supply chain management. Sci. Rep. 14(1), 793 (2024).

Abdel-Basset, M., Chang, V. & Mohamed, R. HSMA_WOA: A hybrid novel Slime mould algorithm with whale optimization algorithm for tackling the image segmentation problem of chest X-ray images. Appl. Soft Comput. 95, 106642 (2020).

Strumberger, I. et al. Resource scheduling in cloud computing based on a hybridized whale optimization algorithm. Appl. Sci. 9(22), 4893 (2019).

Karaboga, D. Artificial bee colony algorithm. Scholarpedia. 5(3), 6915 (2010).

Yang, X. S. & He, X. Firefly algorithm: recent advances and applications. Int. J. Swarm Intell. 1(1), 36–50 (2013).

Yazdani, M. & Jolai, F. Lion optimization algorithm (LOA): a nature-inspired metaheuristic algorithm. J. Comput. Des. Eng. 3(1), 24–36 (2016).

Saxena, M., Dutta, S. & Singh, B. K. Optimal routing using whale optimization and lion optimization algorithm in WSN. Wirel. Netw. 1–18 (2023).

Zong, X. et al. Whale optimization algorithm based on Levy flight and memory for static smooth path planning. Int. J. Mod. Phys. C. 33(10), 2250138 (2022).

Chen, Z., Yu, Y. & Wang, Y. Parameter identification of Jiles-Atherton model based on Levy Whale Optimization Algorithm. IEEE Access. 10, 66711–66721 (2022).

Li, M. et al. Hybrid whale optimization algorithm based on symbiosis strategy for global optimization. Appl. Intell. 53(13), 16663–16705 (2023).

Hussien, A. G. et al. Boosting whale optimization with evolution strategy and Gaussian random walks: An image segmentation method. Eng. Comput. 39(3), 1935–1979 (2023).

Si, Q. & Li, C. Indoor robot path planning using an improved whale optimization algorithm. Sensors. 23(8), 3988 (2023).

Elmogy, A. et al. ANWOA: an adaptive nonlinear whale optimization algorithm for high-dimensional optimization problems. Neural Comput. Appl. 35(30), 22671–22686 (2023).

Tang, J. & Wang, L. A whale optimization algorithm based on atom-like structure differential evolution for solving engineering design problems. Sci. Rep. 14(1), 795 (2024).

Chakraborty, S. et al. SHADE–WOA: A metaheuristic algorithm for global optimization. Appl. Soft Comput. 113, 107866 (2021).

Nasiri, J. & Khiyabani, F. M. A whale optimization algorithm (WOA) approach for clustering. Cogent Math. Stat. 5(1), 1483565 (2018).

Al-Quraan, A. et al. Optimal prediction of wind energy resources based on WOA—A case study in Jordan. Sustainability. 15(5), 3927 (2023).

Xu, Z. et al. A whale optimization algorithm with distributed collaboration and reverse learning ability. Comput. Mater. Continua. 75(3) (2023).

Lange, K. L., Little, R. J. A. & Taylor, J. M. G. Robust statistical modeling using the t distribution. J. Am. Stat. Assoc. 84(408), 881–896 (1989).

Bartumeus, F. et al. Animal search strategies: a quantitative random-walk analysis. Ecology. 86(11), 3078–3087 (2005).

Chakraborty, S. et al. Horizontal crossover and co-operative hunting-based Whale Optimization Algorithm for feature selection. Knowl.-Based Syst. 282, 111108 (2023).

Rana, R. Gated recurrent unit (GRU) for emotion classification from noisy speech. arXiv preprint arXiv:1612.07778 (2016).

Chakraborty, S. et al. An enhanced whale optimization algorithm for large scale optimization problems. Knowl.-Based Syst. 233, 107543 (2021).

Anitha, J., Pandian, S. I. A. & Agnes, S. A. An efficient multilevel color image thresholding based on modified whale optimization algorithm. Expert Syst. Appl. 178, 115003 (2021).

Yang, W. et al. A multi-strategy Whale optimization algorithm and its application. Eng. Appl. Artif. Intell. 108, 104558 (2022).

Liu, M., Yao, X. & Li, Y. Hybrid whale optimization algorithm enhanced with Lévy flight and differential evolution for job shop scheduling problems. Appl. Soft Comput. 105954. https://doi.org/10.1016/j.asoc.2019.105954 (2019).

Kumar, A. et al. A test-suite of non-convex constrained optimization problems from the real-world and some baseline results. Swarm Evol. Comput. 56, 100693 (2020).

Yeh, I.-C. Concrete compressive strength. UCI Mach. Learn. Repos. https://doi.org/10.24432/C5PK67 (2007).

Yeh, I.-C. Real estate valuation. UCI Mach. Learn. Repos. https://doi.org/10.24432/C5J30W (2018).

Brooks, T., Pope, D. & Marcolini, M. Airfoil self-noise. UCI Mach. Learn. Repos. https://doi.org/10.24432/C5VW2C (2014).

Funding

This work was supported by the Guangdong Distance Open Education Research Fund Project (YJ2021) and the Guangdong Open University System’s Education Reform Project (2024TXJG005).

Author information

Contributions

The author has solely contributed to the entire research work presented in this article, including the design of the study, data collection and analysis, interpretation of results, and writing of the manuscript. No other individuals have been involved in any aspect of this work.

Ethics declarations

Competing interests

The author declares no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Lin, Z. Enhanced GRU-based regression analysis via a diverse strategies whale optimization algorithm. Sci Rep 14, 25629 (2024). https://doi.org/10.1038/s41598-024-77517-0

DOI: https://doi.org/10.1038/s41598-024-77517-0
