Abstract

This study introduces a novel metaheuristic optimization algorithm named Logarithmic Mean-Based Optimization (LMO), designed to enhance convergence speed and global optimality in complex energy optimization problems. LMO leverages logarithmic mean operations to achieve a superior balance between exploration and exploitation. The algorithm’s performance was benchmarked against six established methods—Genetic Algorithm (GA), Particle Swarm Optimization (PSO), Ant Colony Optimization (ACO), Grey Wolf Optimizer (GWO), Cuckoo Search Algorithm (CSA), and Firefly Algorithm (FA)—using the CEC 2017 suite of 23 high-dimensional functions. LMO achieved the best solution on 19 out of 23 benchmark functions, significantly outperforming all comparison algorithms. It demonstrated a mean improvement of 83% in convergence time and up to 95% better accuracy in optimal values over competitors. In a real-world application, LMO was employed to optimize a hybrid photovoltaic (PV) and wind energy system, achieving a 5000 kWh energy yield at a minimized cost of $20,000, outperforming all other algorithms in both efficiency and effectiveness. The results affirm LMO’s capability for robust, scalable, and cost-effective optimization in renewable energy systems.


Introduction

Background

A shift from fossil-fuel-based energy systems to renewables is necessary to address climate change, energy security, and global sustainability1. Renewable sources such as solar, wind, and hydropower are increasingly popular because they protect the environment by reducing the use of fossil fuels. Photovoltaic (PV) systems and Wind Energy Systems (WES) are among the most popular options because they can produce clean, sustainable energy on a large scale2,3. However, the performance of these systems depends strongly on factors such as environmental conditions, system design, and how well the system is optimized. In the context of energy systems, optimization is the search for the design, parameter values, and/or operating modes that maximize output or minimize cost within defined limits4. The growing complexity of energy systems, particularly hybrid systems that combine more than one energy source (e.g., PV and wind), makes it important to develop optimization strategies that can handle non-linearities, uncertainties, and multiple objectives. Optimization problems in energy systems are typically multimodal and non-convex: the objective function can have many local minima or maxima, which makes it difficult, and often practically impossible, for traditional strategies such as gradient-based methods to reach the global optimum5. These difficulties are accentuated in renewable energy systems because of intermittent energy production, changing environmental conditions, and system degradation over time.
The main motivation of this work arises from the persistent trade-offs faced by metaheuristic algorithms in real-world high-dimensional problems—namely, balancing global exploration and local exploitation, and ensuring convergence without stagnation. Most existing methods struggle with premature convergence, parameter sensitivity, or high computational demands when addressing complex multimodal landscapes. This paper proposes the Logarithmic Mean-Based Optimization (LMO) algorithm to address these limitations through a novel update mechanism grounded in mathematical averaging principles rather than nature-inspired metaphors. LMO aims to reduce computational complexity, enhance convergence stability, and perform robustly in both benchmark and practical renewable energy applications.

Evolution of optimization techniques

To address these issues, global optimization strategies have been developed to solve intricate, non-linear, and multi-dimensional problems. These techniques aim to avoid the local optima in which other methods tend to become trapped, and to explore the solution space more thoroughly. Global optimization seeks the best solution to a problem within a defined search space6,7. This is especially important for non-smooth and discontinuous problems with many local optima. Bio-inspired algorithms, which mimic natural structures and processes as well as evolutionary concepts, have proved very helpful for global optimization8,9,10. They include Genetic Algorithms (GA), Particle Swarm Optimization (PSO), Ant Colony Optimization (ACO), the Firefly Algorithm (FA), and the Cuckoo Search Algorithm (CSA), which draw heavily on known social or biological behaviors to find optimal solutions. Because they can search high-dimensional spaces without requiring gradients, these and other metaheuristic algorithms have been widely used to optimize energy systems.

1. Genetic Algorithms (GA)11: Based on the principles of natural selection, GA maintains a population of candidate solutions and evolves it over generations through selection, crossover, and mutation. It is useful for problems with highly non-linear or combinatorial search spaces.

2. Particle Swarm Optimization (PSO)12: Inspired by the social behavior of bird flocks and fish schools, PSO simulates the movement of particles through a search space, where each particle represents a candidate solution. Each particle's movement is determined by its own previous experience and that of its neighbors. PSO is widely used for continuous optimization problems, especially for the control and optimization of energy systems.

3. Ant Colony Optimization (ACO)13: ACO is based on the foraging behavior of ants. A population of agents (ants) moves through the search space, depositing pheromones that guide other ants toward promising solutions. ACO has proven very useful for combinatorial optimization problems such as network design and routing in energy systems.

4. Firefly Algorithm (FA)14: FA treats candidate solutions as fireflies whose brightness reflects their fitness, and each firefly moves toward brighter individuals. The algorithm is easy to implement, and it has been proposed for multimodal optimization problems such as thresholding, in which the solution landscape has several peaks within the bounds of the search space.

5. Cuckoo Search Algorithm (CSA)15: This algorithm is inspired by the brood parasitism of cuckoo birds, which lay their eggs in the nests of other host birds. CSA combines global exploration through Levy flights, a random walk with heavy-tailed step lengths, with local search around good solutions, which enables it to tackle complex optimization problems effectively.

Even though bio-inspired algorithms are highly resourceful on global optimization problems, they have their own shortcomings. Although they can locate good solutions, their convergence is fragile and not guaranteed. CSA, for example, can converge slowly and still become trapped in local optima. Performance also hinges on a careful balance of the algorithm's control parameters, population size, and mutation rate16,17. In addition, a variety of novel metaheuristic algorithms have been introduced in recent years, extending the diversity and effectiveness of bio-inspired optimization techniques. These include the Perfumer Optimization Algorithm (POA)18, Makeup Artist Optimization Algorithm (MAOA)19, Builder Optimization Algorithm (BOA)20, and Revolution Optimization Algorithm (ROA)21. Other recent additions include Paper Publishing Based Optimization (PPBBO)22, Sales Training Based Optimization (STBO)23, the Potter Optimization Algorithm (POA)24, Orangutan Optimization Algorithm (OOA)25, Tailor Optimization Algorithm (TOA)26, and Spider-Tailed Horned Viper Optimization (STHVO)27. These emerging algorithms illustrate the ongoing innovation in optimization strategies and provide inspiration for new methods such as the proposed LMO.

Relevant literature

The application of energy systems optimization via metaheuristic algorithms is well developed in the literature. A few examples include:

- Optimization of PV systems with GA28, an example of the use of Genetic Algorithms in renewable energy systems.

- The work that first applied PSO29, which remains one of the most popular techniques for energy system optimization because of its simplicity.

- The work that pioneered Ant Colony Optimization (ACO)30. This technique is frequently used to optimize energy systems, especially in multi-objective and combinatorial tasks.

- The work that proposed GWO31, a metaheuristic inspired by the hunting behavior of wolves that has been applied to a variety of engineering optimization problems, especially in renewable energy.

- The work that proposed the Firefly Algorithm (FA)32, which solves optimization problems by mimicking the flashing light of fireflies; its applications in energy and other fields are being explored.

Recent studies continue to demonstrate rapid advancement in optimization frameworks. For example, a self-tuning metaheuristic mechanism33 adapts its strategy online based on problem feedback, reflecting the growing trend toward intelligent and dynamic optimizers. Similarly, a critical survey of lightweight metaheuristics enhanced by hybrid strategies34 reinforces the ongoing need for optimization methods that are both computationally efficient and versatile. These works highlight the timeliness of developing mathematically grounded algorithms such as LMO, which aim to offer a transparent and reproducible foundation while outperforming current adaptive heuristics.

Recent evidence suggests that hybrid approaches, such as combining PSO with other techniques like the Cuckoo Search Algorithm (CSA), improve optimization results on more complex, higher-order problems32. Nonetheless, these approaches tend to have convergence problems, which Logarithmic Mean-Based Optimization (LMO) aims to solve.

Research gaps in global optimization

Global optimization is crucial in applied mathematics and engineering, as it seeks the global best (minimum or maximum) of a problem within a defined search space. Classical approaches such as gradient descent and linear programming are fundamentally constrained because they rely on strong assumptions, for example that the objective function is continuous and smooth (gradient descent) or linear (linear programming). These assumptions make them impractical for real-life problems with non-convex, multimodal, or noisy objective functions, which are common in energy systems.

Although bio-inspired algorithms offer freedom and flexibility, they suffer from several critical issues35:

1. Slow Convergence: Most metaheuristic approaches converge slowly, especially on complex, high-dimensional objective functions. GA and PSO, for example, typically need a large number of iterations to reach acceptable solutions, which is computationally expensive.

2. Premature Convergence: Bio-inspired algorithms also suffer from premature convergence, in which the algorithm settles on a suboptimal solution too early instead of continuing to search the space for better solutions. This is particularly troublesome in high-dimensional spaces, where the algorithm is more prone to being trapped in local optima.

3. Parameter Sensitivity: Like other bio-inspired methods, most of these algorithms require careful tuning of parameters such as population size, mutation rate, and step size to work well. Manual tuning adds complexity, and the most appropriate settings often differ from one problem to another, which makes the algorithms less universal.

4. Balance Between Exploration and Exploitation: An optimizer must balance searching unexplored regions of the space (exploration) against refining solutions it has already found (exploitation). Many algorithms struggle to strike this balance, either exploring the search space too aggressively or concentrating too heavily on existing solutions.

These problems reveal a gap in existing optimization methods: techniques built on biological metaphors leave considerable room for innovations that improve the effectiveness, dependability, and speed of global optimization in energy systems.

Despite advances in metaheuristic techniques, algorithms continue to suffer from critical defects such as slow convergence, entrapment in local optima, and excessive sensitivity to parameter tuning, especially in complex, multimodal energy optimization problems. These gaps are most pronounced in the optimization of hybrid renewable energy systems, whose solution spaces are high-dimensional and whose objective functions are noisy. Combining heterogeneous strategies can further upset the balance between exploration and exploitation, reducing efficiency in real-world tasks. This research attempts to bridge this gap with a new LMO algorithm whose update strategy is based on logarithmic mean operations. The strategy is intended to deliver faster convergence, fewer premature terminations, and a better overall balance of exploration and exploitation than existing bio-inspired algorithms. LMO's flexible and responsive mathematical structure allows it to handle a wide variety of benchmark global optimization problems and real-world energy system optimization problems efficiently.

Addressing the Metaphor-Based algorithm critique

The proliferation of nature-inspired algorithms has sparked an ongoing scholarly discussion about their framing and practicality. Several recent studies argue that many so-called novel algorithms are reworked versions of existing algorithms under new branding and are rarely methodologically new. In his seminal 2015 paper “Metaheuristics—the metaphor exposed”, Sörensen criticized the use of metaphors to construct algorithms and urged the community to focus on meaningful algorithm design and statistical validation rather than narrative36. This critique has been echoed in other works, such as:

- Tzanetos and Dounias (2021), who asked whether nature-inspired techniques are in fact novel optimization approaches or merely variations of existing parameters and structures posing as new methods37.

- Fister et al. (2016) and Aranha et al. (2022), who invited debate about the role of metaphors as a guiding framework in the genesis of metaheuristics, particularly with regard to benchmarking and evaluating algorithm performance38,39.

We take these criticisms into account and emphasize that the Logarithmic Mean-Based Optimization (LMO) algorithm does not stem from a natural analogy. Although the concept was at one point explained with natural references to make it easier to grasp, its innovation lies in the mathematics of the logarithmic mean, which it uses as a new operator for updating solutions, free of biological or behavioral interpretation39,40,41,42. Our aim is an algorithm that is firmly grounded in mathematical principles and focused on performance, rather than one that relies on metaphor. The proposed LMO represents a significant advancement in the field because it:

- Introduces a logarithmic-mean-based update mechanism to balance exploration and exploitation;

- Shows quantifiable improvement over established competitors on standardized benchmarks;

- Provides thorough design documentation for the algorithm to support unambiguous reproducibility and transparency.

LMO thus responds to the call for rigorously designed, unembellished algorithms that are practical for solving optimization problems and deliver evidence-based results.

Contributions of this study

This paper proposes a new optimization method, Logarithmic Mean-Based Optimization (LMO), which improves on existing global optimization methods, especially for energy system optimization problems. The primary contributions of this work are outlined below:

1. Development of the LMO Framework: The LMO framework employs the logarithmic mean to improve the balance between exploration and exploitation during optimization. LMO aims to mitigate premature convergence to local optima and to improve the rate of convergence to the global optimum.

2. Comparative Performance Analysis: LMO is benchmarked against six well-studied metaheuristic algorithms: Genetic Algorithm (GA), Particle Swarm Optimization (PSO), Ant Colony Optimization (ACO), Grey Wolf Optimizer (GWO), Cuckoo Search Algorithm (CSA), and Firefly Algorithm (FA). All algorithms are run on the demanding CEC 2017 benchmark functions, a widely accepted set of highly complex, multidimensional, and nonlinear global optimization problems. LMO is compared with these algorithms on several metrics, including convergence speed, the quality of the best and average results, and computational cost.

3. Photovoltaic Systems Case Study: This work presents a comprehensive case study in which the LMO framework is used to optimize the output power of photovoltaic (PV) systems. The case study accounts for variable irradiance levels and shading, which usually dominate PV behavior in practical scenarios. It illustrates how LMO can address energy optimization challenges, specifically in renewable energy systems.

4. Computational Efficiency and Robustness: The framework shows greater robustness and computational efficiency than conventional optimization techniques. The comparison algorithms required more iterations to attain optimal solutions, whereas LMO stood out on large-scale, real-time energy optimization challenges.

Structure of the paper

The remainder of this paper is organized as follows:

- Section 1 explains the context of energy optimization and the challenges of global optimization, identifies gaps in the literature, and defines how the proposed LMO framework addresses these gaps.

- Sect. 2 describes the study's methodology, including the LMO framework and the six comparison algorithms.

- Sect. 3 describes the CEC 2017 benchmark functions and the PV system optimization case study in detail.

- Sect. 4 presents the performance of LMO in comparison with the other algorithms and discusses the results.

- Sect. 5 summarizes the recommendations arising from the results, their practical implications, and future directions for work in energy optimization.

This section has outlined the context of the study, its contributions, and the research gaps, and has introduced the Logarithmic Mean-Based Optimization (LMO) technique as a new approach to global optimization in energy systems. The CEC 2017 benchmark functions and a PV system performance maximization problem are used to demonstrate the framework's effectiveness and its superiority over traditional bio-inspired algorithms. The methodology, experimental setup, and results are presented in more detail in the following sections.

Methodology

This section describes the proposed LMO framework along with the six benchmark algorithms used in the study. Its purpose is to state the mathematical formulation and algorithmic procedures of the optimization methods used in the experiments. It also describes important details such as the optimization settings, the CEC 2017 benchmark functions, and the case study.

Overview of optimization algorithms

This paper analyzes Logarithmic Mean-Based Optimization (LMO) in relation to several well-known metaheuristic algorithms: Genetic Algorithm (GA), Particle Swarm Optimization (PSO), Ant Colony Optimization (ACO), Grey Wolf Optimizer (GWO), Cuckoo Search Algorithm (CSA), and Firefly Algorithm (FA). These are well-established bio-inspired optimization techniques that have been widely used in numerous optimization tasks, including the optimization of energy systems.

Logarithmic Mean-Based optimization (LMO)

The Logarithmic Mean-Based Optimization (LMO) framework is presented to overcome the shortcomings of conventional metaheuristic algorithms. The LMO technique utilizes the logarithmic mean to enhance convergence behavior and prevent premature convergence.

Mathematical formulation

At each iteration, the LMO algorithm updates the population based on the following steps:

1. Logarithmic Mean Update Rule:

Mathematical Model for Logarithmic Mean Optimization Algorithm (LMO).

1. Problem Definition.

Let the optimization problem be defined as per relation (1):

where f(x) is the objective function, and S=[lb, ub] is the search space with lb (lower bound) and ub (upper bound).
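Relation (1) is not reproduced in this text. Assuming minimization (consistent with the selection step below, which keeps solutions that improve the objective) and an element-wise box constraint in d dimensions, the standard form of such a problem is:

$$\min_{x \in S} f(x), \qquad S = \left\{ x \in \mathbb{R}^{d} : lb_k \le x_k \le ub_k,\; k = 1, \dots, d \right\}$$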

2. Initialization.

Define the following parameters:

- N: Number of candidate solutions.

- Itermax: Maximum number of iterations.

- α, β: Control parameters for exploration and exploitation.

Randomly initialize N candidate solutions:

3. Fitness Evaluation.

For each candidate solution xi, evaluate the objective function using relation (3):
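Relations (2) and (3) are not reproduced in this text. The sketch below shows one common way to implement Steps 2 and 3, assuming uniform random initialization xi = lb + r·(ub − lb) with r drawn from U[0, 1) in each coordinate; the function name `initialize` and its arguments are hypothetical.

```python
import numpy as np

def initialize(f, n, dim, lb, ub, rng=None):
    """Sample n solutions uniformly in [lb, ub]^dim and evaluate f on each (Steps 2-3).

    lb and ub may be scalars or length-dim arrays (assumption).
    """
    rng = np.random.default_rng() if rng is None else rng
    lb = np.broadcast_to(np.asarray(lb, dtype=float), (dim,))
    ub = np.broadcast_to(np.asarray(ub, dtype=float), (dim,))
    pop = lb + rng.random((n, dim)) * (ub - lb)   # x_i = lb + r * (ub - lb), r ~ U[0, 1)
    fit = np.array([f(x) for x in pop])           # f_i = f(x_i)
    return pop, fit
```

For example, `initialize(lambda x: float(np.sum(x**2)), 30, 30, -100, 100)` would create a 30 × 30 population for a 30-dimensional sphere problem.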

4. Logarithmic Mean-Based Update Rule.

For every pair of solutions xi and xj, compute the logarithmic mean by Eq. (4):

If xi = xj, then we obtain relation (5):
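For positive scalars a and b, the logarithmic mean is standardly defined as L(a, b) = (a − b)/(ln a − ln b) for a ≠ b, with the limit L(a, a) = a; it always lies between the geometric and arithmetic means of a and b. The sketch below applies this definition element-wise to vectors. That is an assumption, since the text does not state how Eq. (4) treats vectors or non-positive coordinates; the function name `log_mean` is hypothetical.

```python
import numpy as np

def log_mean(a, b, eps=1e-12):
    """Element-wise logarithmic mean of positive inputs.

    Uses (a - b) / (ln a - ln b) where the logs differ and the limit value
    where a and b (numerically) coincide, matching L(a, a) = a.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    if np.any(a <= 0) or np.any(b <= 0):
        raise ValueError("the logarithmic mean is defined for positive values only")
    diff = np.log(a) - np.log(b)
    close = np.abs(diff) < eps
    safe = np.where(close, 1.0, diff)             # avoid dividing by ~0
    return np.where(close, 0.5 * (a + b), (a - b) / safe)
```

For instance, L(1, 9) = 8/ln 9 ≈ 3.64, which sits between the geometric mean 3 and the arithmetic mean 5.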

The new candidate solution is generated using Eq. (6):

5. Adaptive Control for Exploration and Exploitation.

Eq. (7) introduces an adaptive control parameter αt, which varies with the iteration number t:

The updated solution incorporates exploitation as per Eq. (8):

where xbest is the best solution found so far as illustrated in relation (9):

6. Boundary Handling.

Ensure that the updated solution xfinal stays within the search space, as shown in Eq. (10):

7. Selection.

Replace the worst solution xworst with xfinal if it improves the objective using relation (11):
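Relations (10) and (11) are not reproduced in this text. A minimal sketch of Steps 6 and 7, assuming boundary handling by clipping each coordinate to [lb, ub] and a minimization problem, so that the worst solution is the one with the largest objective value; the helper names are hypothetical.

```python
import numpy as np

def clip_to_bounds(x, lb, ub):
    """Saturate every coordinate of x at the nearest bound (assumed form of Eq. (10))."""
    return np.clip(x, lb, ub)

def replace_worst(pop, fit, x_final, f_final):
    """Overwrite the worst member in place if x_final is better (minimization).

    Returns True when a replacement happened.
    """
    worst = int(np.argmax(fit))      # largest objective value = worst solution
    if f_final < fit[worst]:
        pop[worst] = x_final
        fit[worst] = f_final
        return True
    return False
```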

8. Stopping Criterion.

Stop the algorithm when one of the following conditions is met:

-

t ≥ Itermax.

-

∣fbest(t) − fbest(t − 1)∣ < ϵ, where ϵ is a small tolerance.
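The two stopping conditions can be checked as below; the function name `should_stop` is hypothetical. Taken literally, the ϵ test ends the search as soon as one iteration leaves fbest unchanged, which can happen whenever no new solution beats the current best; requiring the change to stay below ϵ for several consecutive iterations is one way to hedge against stopping too early.

```python
def should_stop(t, iter_max, best_history, eps):
    """Return True once t >= iter_max or the best value changed by less than eps.

    best_history holds fbest after each iteration, most recent last.
    """
    if t >= iter_max:
        return True
    if len(best_history) >= 2 and abs(best_history[-1] - best_history[-2]) < eps:
        return True
    return False
```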

Algorithm summary

1. Initialization:
   - Generate N random solutions within [lb, ub].
   - Evaluate f(x) for each solution.

2. Iterative Optimization:
   - For each pair of solutions, compute the logarithmic mean L(xi, xj).
   - Generate a new solution xnew using the update rule.
   - Adjust the balance between exploration and exploitation with αt.
   - Replace the worst solution if the updated solution xfinal is better.

3. Repeat until the stopping criterion is met.

4. Return the Best Solution:
   - Output xbest and fbest.
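The summary above can be assembled into a runnable loop. Because Eqs. (6) to (8) are not reproduced in this text, the sketch fills them with clearly hypothetical choices: each solution is paired with a random partner; coordinates are shifted into a positive range (x − lb + 1) so that the logarithmic mean is defined; uniform noise scaled by a linearly decaying αt provides exploration; and a random pull of strength β toward xbest provides exploitation. It illustrates the structure of Steps 1 to 8 and is not the authors' implementation; `lmo` and its parameters are hypothetical.

```python
import numpy as np

def log_mean(a, b, eps=1e-12):
    """Element-wise logarithmic mean of positive arrays; equals a where a == b."""
    diff = np.log(a) - np.log(b)
    close = np.abs(diff) < eps
    return np.where(close, 0.5 * (a + b), (a - b) / np.where(close, 1.0, diff))

def lmo(f, dim, lb, ub, n=30, iter_max=100, alpha0=0.5, beta=0.5, tol=None, seed=None):
    """Illustrative LMO loop; Eqs. (6)-(8) are replaced by hypothetical choices."""
    rng = np.random.default_rng(seed)
    lb = np.broadcast_to(np.asarray(lb, dtype=float), (dim,))
    ub = np.broadcast_to(np.asarray(ub, dtype=float), (dim,))
    pop = lb + rng.random((n, dim)) * (ub - lb)          # Step 2: uniform initialization
    fit = np.array([f(x) for x in pop])                   # Step 3: fitness evaluation
    history = [fit.min()]
    for t in range(iter_max):
        alpha_t = alpha0 * (1.0 - t / iter_max)           # hypothetical decaying alpha_t
        x_best = pop[np.argmin(fit)].copy()
        for i in range(n):
            j = int(rng.integers(n - 1))                  # random partner j != i
            j = j + 1 if j >= i else j
            # Shift into [1, ub - lb + 1] so the log mean is defined (assumption).
            m = log_mean(pop[i] - lb + 1.0, pop[j] - lb + 1.0) - 1.0 + lb
            x_new = m + alpha_t * (rng.random(dim) - 0.5) * (ub - lb)    # exploration
            x_final = x_new + beta * rng.random(dim) * (x_best - x_new)  # exploitation
            x_final = np.clip(x_final, lb, ub)            # Step 6: boundary handling
            f_final = f(x_final)
            worst = int(np.argmax(fit))                   # Step 7: replace worst if better
            if f_final < fit[worst]:
                pop[worst], fit[worst] = x_final, f_final
        history.append(fit.min())
        # Step 8: the eps test is applied only when tol is given.
        if tol is not None and abs(history[-1] - history[-2]) < tol:
            break
    k = int(np.argmin(fit))
    return pop[k].copy(), fit[k], history
```

Because the logarithmic mean of two numbers lies between them, the shifted mean m always stays inside [lb, ub]; the noise term can push coordinates outside, and the clipping step corrects that. A call such as `lmo(f, dim=30, lb=-100, ub=100)` mirrors the 30-dimensional, 100-iteration setting described later, but results from this sketch should not be read as reproducing the paper's numbers.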

Figure 1 provides the flowchart of the LMO algorithm.

Comparison algorithms

In addition to LMO, this study uses six comparison algorithms that are commonly employed in global optimization tasks: Genetic Algorithm (GA), Particle Swarm Optimization (PSO), Ant Colony Optimization (ACO), Grey Wolf Optimizer (GWO), Cuckoo Search Algorithm (CSA), and Firefly Algorithm (FA). A brief description of each algorithm, its key equations, and the parameters used are provided below.

Genetic algorithm (GA)

Genetic Algorithm (GA) is a widely-used metaheuristic based on natural selection. It uses populations of candidate solutions (chromosomes) that evolve over generations to find the optimal solution.

1. Selection: The selection process chooses individuals based on their fitness, typically using roulette-wheel or tournament selection.

2. Crossover: The crossover operation combines two parent chromosomes to produce offspring, typically as shown in Eq. (12):

$$x_{\text{offspring}} = \text{Crossover}\left(x_{\text{parent1}}, x_{\text{parent2}}\right)$$(12)

3. Mutation: The mutation operation introduces randomness into the population by changing a gene in the chromosome, as per relation (13):

$$x_{\text{mutated}} = x_{\text{offspring}} \pm \epsilon$$(13)

where ε is a small random perturbation.

4. Termination Condition: The algorithm terminates when a maximum number of generations is reached or when convergence is achieved.
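Eqs. (12) and (13) leave the crossover and mutation operators generic. A minimal sketch for real-coded chromosomes, assuming arithmetic (blend) crossover, Gaussian mutation applied gene-wise with probability `rate`, and tournament selection; these are common choices, not necessarily the ones used in the experiments, and the function names are hypothetical.

```python
import numpy as np

def arithmetic_crossover(p1, p2, rng):
    """Blend two parents with an independent random weight per gene (Eq. (12))."""
    w = rng.random(p1.shape)
    return w * p1 + (1.0 - w) * p2

def gaussian_mutation(x, sigma, rate, rng):
    """Add N(0, sigma^2) noise to each gene with probability `rate` (Eq. (13))."""
    mask = rng.random(x.shape) < rate
    return x + mask * rng.normal(0.0, sigma, x.shape)

def tournament_select(pop, fit, k, rng):
    """Return the fittest (lowest objective) of k distinct randomly drawn individuals."""
    idx = rng.choice(len(pop), size=k, replace=False)
    return pop[idx[np.argmin(fit[idx])]]
```

Blend crossover keeps each child gene between its parents' values, so the mutation step is the only source of moves outside the parents' range.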

Particle swarm optimization (PSO)

PSO is a population-based algorithm inspired by the social behavior of birds flocking or fish schooling. Each particle in the swarm represents a potential solution, and its movement is influenced by both its own best-known position and the best-known position in the swarm.

1. Velocity Update:

$$v_i^{t+1} = w\,v_i^{t} + c_1 r_1 \left(\text{pbest}_i - x_i^{t}\right) + c_2 r_2 \left(\text{gbest} - x_i^{t}\right)$$(14)

where:

- \(v_i^{t+1}\) is the velocity of particle i at iteration t + 1,

- w is the inertia weight,

- c1, c2 are the cognitive and social learning factors,

- r1, r2 are random numbers in [0, 1],

- pbesti is the best-known position of particle i,

- gbest is the best-known position in the swarm.

2. Position Update:

$$x_i^{t+1} = x_i^{t} + v_i^{t+1}$$(15)
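Eqs. (14) and (15) translate directly into a vectorized update for the whole swarm. In the sketch below, each row of `x` is one particle; the function name `pso_step` and the default values w = 0.7 and c1 = c2 = 1.5 are illustrative assumptions, not the settings used in this study.

```python
import numpy as np

def pso_step(x, v, pbest, gbest, w=0.7, c1=1.5, c2=1.5, rng=None):
    """One PSO iteration (Eqs. (14)-(15)); rows of x, v, pbest are particles."""
    rng = np.random.default_rng() if rng is None else rng
    r1 = rng.random(x.shape)   # fresh U[0, 1) per particle and dimension
    r2 = rng.random(x.shape)
    v_new = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)   # Eq. (14)
    x_new = x + v_new                                               # Eq. (15)
    return x_new, v_new
```

After each step, pbest and gbest would be refreshed from the new positions' objective values; that bookkeeping is omitted here.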

Ant colony optimization (ACO)

ACO is inspired by the foraging behavior of ants. It uses a population of agents (ants) that explore the search space, leaving pheromone trails that guide other ants towards promising regions.

1. Pheromone Update:

where:

- \(\tau_{ij}^{t}\) is the pheromone level on the edge between nodes i and j at time t,

- ρ is the evaporation rate,

- Δτij is the pheromone update based on the quality of the solution.

2. Solution Construction: Ants probabilistically construct solutions by choosing edges based on pheromone levels and heuristic information.
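Eq. (16) is not reproduced in this text. In the classic Ant System formulation, pheromone first evaporates, τij ← (1 − ρ)·τij, and each ant k then deposits Q/Lk on every edge of its tour, where Lk is the tour length; the next node is chosen with probability proportional to τij^a·ηij^b, where η is heuristic information such as inverse distance. The sketch below implements that classic rule for a symmetric tour problem as an assumption; the function names and the defaults Q = 1, a = 1, b = 2 are hypothetical.

```python
import numpy as np

def update_pheromone(tau, rho, tours, lengths, q=1.0):
    """Ant System rule: evaporate all trails, then deposit q / L_k on each edge of tour k."""
    tau = (1.0 - rho) * tau                           # evaporation (returns a new array)
    for tour, length in zip(tours, lengths):
        for a, b in zip(tour, tour[1:] + tour[:1]):   # consecutive nodes, closing the loop
            tau[a, b] += q / length
            tau[b, a] += q / length                   # symmetric problem assumed
    return tau

def next_node(current, unvisited, tau, eta, alpha=1.0, beta=2.0, rng=None):
    """Pick the next node with probability proportional to tau^alpha * eta^beta."""
    rng = np.random.default_rng() if rng is None else rng
    cand = np.asarray(unvisited)
    weights = tau[current, cand] ** alpha * eta[current, cand] ** beta
    return int(rng.choice(cand, p=weights / weights.sum()))
```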

Grey Wolf optimizer (GWO)

The Grey Wolf Optimizer (GWO) is a metaheuristic inspired by the hunting behavior of grey wolves. Wolves are classified into alpha, beta, and delta wolves, with alpha wolves being the best and leading the hunt.

1. Position Update:

$$x_i^{t+1} = x_i^{t} + A \cdot D_{\alpha} + B \cdot D_{\beta} + C \cdot D_{\delta}$$(17)

where:

- A, B, C are coefficient vectors,

- Dα, Dβ, Dδ are the distance vectors from the positions of the alpha, beta, and delta wolves to the current wolf.
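Eq. (17) as printed differs in form from the canonical GWO update of Mirjalili et al. (2014), in which each wolf computes Dα = |C1·xα − x| (and likewise for β and δ), forms X1 = xα − A1·Dα together with the analogous X2 and X3, and moves to their average, with A = 2a·r1 − a, C = 2·r2, and a decreasing linearly from 2 to 0 over the run. For comparison, a sketch of that canonical step; the function name `gwo_step` is hypothetical.

```python
import numpy as np

def gwo_step(X, leaders, a, rng):
    """Canonical GWO move for all wolves (rows of X).

    leaders = (x_alpha, x_beta, x_delta); a is typically 2 * (1 - t / t_max).
    """
    new = np.zeros_like(X, dtype=float)
    for leader in leaders:
        A = 2.0 * a * rng.random(X.shape) - a     # A in [-a, a)
        C = 2.0 * rng.random(X.shape)             # C in [0, 2)
        D = np.abs(C * leader - X)                # distance to this leader
        new += leader - A * D                     # X1, X2, X3 in the canonical form
    return new / len(leaders)
```

With a = 0, A vanishes and every wolf moves to the average of the three leaders, which is the pure-exploitation limit at the end of a run.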

Cuckoo search algorithm (CSA)

The Cuckoo Search Algorithm (CSA) is based on the parasitic behavior of cuckoo birds. The algorithm uses Levy flights to explore the search space and employs a random search for local optimization.

1. Levy Flight Update:

where α is the step size and Levy(xi) is the Levy flight step.
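Eq. (18) is not reproduced in this text. A common way to draw the Levy flight step is Mantegna's algorithm, which divides a Gaussian sample u ~ N(0, σ²) by |v|^(1/λ) with v ~ N(0, 1), where σ depends on the Levy exponent λ (1.5 is a typical choice). The sketch assumes the move x_new = x + α·Levy, applied entry-wise, with a hypothetical default α = 0.01; the function names are hypothetical.

```python
import math
import numpy as np

def levy_step(dim, lam=1.5, rng=None):
    """Draw a Levy-distributed step of length dim via Mantegna's algorithm."""
    rng = np.random.default_rng() if rng is None else rng
    sigma = (math.gamma(1 + lam) * math.sin(math.pi * lam / 2)
             / (math.gamma((1 + lam) / 2) * lam * 2 ** ((lam - 1) / 2))) ** (1 / lam)
    u = rng.normal(0.0, sigma, dim)    # heavy tails come from dividing by |v|^(1/lam)
    v = rng.normal(0.0, 1.0, dim)
    return u / np.abs(v) ** (1 / lam)

def cuckoo_move(x, alpha=0.01, lam=1.5, rng=None):
    """Propose a new nest x + alpha * Levy step (assumed form of Eq. (18))."""
    rng = np.random.default_rng() if rng is None else rng
    return x + alpha * levy_step(x.size, lam, rng)
```

Most Levy steps are small, but occasional very long jumps let the search escape a local basin, which is the exploration property the text attributes to CSA.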

Firefly algorithm (FA)

The Firefly Algorithm (FA) is based on the flashing behavior of fireflies. The brightness of a firefly is proportional to its fitness, and fireflies move towards brighter individuals.

1. Position Update:

$$x_i^{t+1} = x_i^{t} + \beta \cdot \left(x_j^{t} - x_i^{t}\right) + \gamma \cdot \epsilon$$(19)

where:

- β is the attractiveness, and γ is the randomization parameter.
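In Yang's standard formulation of FA, the attractiveness β in Eq. (19) decays with distance as β = β0·exp(−γ·r²), where γ is the light-absorption coefficient and r is the distance between the two fireflies, and the random term is weighted by a separate parameter α. Because Eq. (19) uses γ for randomization, the sketch below names the absorption coefficient `absorption` to avoid a clash; the function name and all defaults are assumptions.

```python
import numpy as np

def firefly_move(xi, xj, beta0=1.0, absorption=1.0, alpha=0.2, rng=None):
    """Move firefly i toward a brighter firefly j (standard distance-decayed form)."""
    rng = np.random.default_rng() if rng is None else rng
    r2 = float(np.sum((xi - xj) ** 2))           # squared Euclidean distance
    beta = beta0 * np.exp(-absorption * r2)      # attractiveness decays with distance
    eps = rng.random(xi.shape) - 0.5             # uniform noise in [-0.5, 0.5)
    return xi + beta * (xj - xi) + alpha * eps
```

With absorption set to 0, every firefly sees full attractiveness regardless of distance; with a large value, distant fireflies barely attract each other, which favors local search.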

CEC 2017 benchmark functions

In order to evaluate the performance of the algorithms, we use the CEC 2017 benchmark functions, which are widely recognized for testing global optimization algorithms. These functions are designed to represent a range of complex, multi-modal, and high-dimensional optimization problems.

1. Function 1 (Sphere Function): A simple convex function used to evaluate the optimization capabilities of an algorithm. The objective is to minimize the sum of squared terms, as per relation (20):

$$f(x) = \sum_{i=1}^{d} x_i^{2}$$(20)

2. Function 2 (Schwefel Function): A multimodal function used to test an algorithm's ability to escape local minima.

3. Function 3 (Rosenbrock Function): Known for its narrow, curved valley, the Rosenbrock function is often used to assess an algorithm's ability to find the global minimum.
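The three functions named above have standard textbook definitions, sketched below. CEC 2017 uses shifted and rotated variants of its base functions with added biases, so these plain forms are for illustration only; the Schwefel variant shown is the common 418.9829·d form, whose global minimum of about 0 lies near xi = 420.9687 on [−500, 500]^d.

```python
import numpy as np

def sphere(x):
    """Relation (20): sum of squares; global minimum 0 at the origin."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(x ** 2))

def schwefel(x):
    """Schwefel form 418.9829*d - sum(x_i * sin(sqrt(|x_i|))); many deceptive local minima."""
    x = np.asarray(x, dtype=float)
    return float(418.9829 * x.size - np.sum(x * np.sin(np.sqrt(np.abs(x)))))

def rosenbrock(x):
    """Narrow curved valley; global minimum 0 at x = (1, ..., 1)."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(100.0 * (x[1:] - x[:-1] ** 2) ** 2 + (1.0 - x[:-1]) ** 2))
```

For example, `sphere([1, 2])` returns 5.0 and `rosenbrock(np.ones(30))` returns 0.0.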

Note

For brevity, the remaining CEC 2017 functions are not reproduced here; their definitions can be found in [43]. These functions are designed to evaluate algorithms in various optimization scenarios.

Experimental setup

To compare the performance of LMO with the six other algorithms, experiments were conducted on the CEC 2017 benchmark functions. The optimization settings were as follows:

- Dimensionality: Problems were defined in a thirty-dimensional space to reflect the complexity of real-world problems.

- Iterations: To study convergence behavior over time, the optimization process was run for one hundred iterations per run.

- Runs: Each algorithm was subjected to 30 independent runs to capture variability and ensure rigor.

- Stopping Criterion: The optimization process was stopped when either the maximum number of iterations was reached or the solution converged within a specified tolerance.

Table of parameters

Table 1 below presents all the parameters of the functions employed for this study.

This section provides a detailed description of the methodology, including the LMO framework and the six comparison algorithms. It introduces the core concepts, key equations, and specifications relevant to the study and experimental setup. The next section will present the experimental results and comparisons of the performance of LMO against the other algorithms.

Experimental setup

This section describes how the Logarithmic Mean-Based Optimization (LMO) method and the six comparison algorithms were evaluated in this study. The setup has two main components: (1) a global optimization performance test on the CEC 2017 benchmark functions, and (2) a case study applying the algorithms to power maximization in a photovoltaic (PV) system. The case study is intended to show how effective LMO is on energy optimization problems involving renewable energy systems.

CEC 2017 benchmark functions

The CEC 2017 benchmark functions are commonly adopted to test the performance of global optimization algorithms. Their high dimensionality, non-linearity, multimodality, and other properties make them useful for verifying the convergence and robustness of optimization algorithms. In this work, we use them to evaluate the global optimization performance of LMO and the six comparison algorithms. The main goal is to analyze the convergence rates, best values, and total computation time of the algorithms on the 23 benchmark functions.

List of CEC 2017 benchmark functions

Below in Table 2 is a summary of the CEC 2017 benchmark functions (a subset of the total 23 functions) used in this study. These functions are representative of typical global optimization problems encountered in real-world applications.

The CEC 2017 benchmark functions are chosen because of their ability to simulate complex optimization problems that reflect the real-world challenges faced in the optimization of energy systems. These functions vary in terms of multimodality, dimension, and search space complexity, making them an excellent choice for testing the robustness and convergence of optimization algorithms.

Experimental setup for global optimization

In the case of global optimization, our experimental set-up is as follows:

-

Dimensionality: The problems are optimized in a 30-dimensional space to reflect the high dimensionality that is typical of energy system optimization.

-

Iterations: Each run is executed for 100 iterations to analyze the convergence behavior and computation time of the algorithms.

-

Runs: Each algorithm is tested in 30 independent runs to reduce the effect of stochastic variation and increase confidence in the comparison.

-

Stopping Criterion: The optimization process is stopped either when a set maximum number of iterations is achieved or when the algorithm fulfills a set convergence criterion.
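The stopping rule above can be sketched as follows. This is a minimal illustration, assuming the convergence criterion is "no improvement larger than `tol` in the best-so-far value for `patience` consecutive iterations"; the study does not state its exact tolerance, so `tol`, `patience`, and the name `optimize_with_stopping` are hypothetical. `step` stands for one iteration of any of the seven algorithms and returns the current best objective value.

```python
from typing import Callable, List


def optimize_with_stopping(step: Callable[[], float], max_iter: int = 100,
                           tol: float = 1e-8, patience: int = 10) -> List[float]:
    """Run `step` until max_iter is reached or progress stalls (assumed criterion).

    Returns the best-so-far value after each executed iteration.
    """
    history: List[float] = []
    best = float("inf")
    stall = 0
    for _ in range(max_iter):
        value = step()
        if best - value > tol:   # meaningful improvement resets the stall counter
            stall = 0
        else:
            stall += 1
        best = min(best, value)
        history.append(best)
        if stall >= patience:    # convergence criterion met
            break
    return history
```

Whichever condition is met first ends the run, so the length of the returned history doubles as the number of iterations actually used.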

The following performance metrics are used to assess each optimization algorithm:

-

1.

Best Value: The best objective value achieved by the algorithm by its final iteration.

-

2.

Mean Value: The mean of the best values obtained across all runs.

-

3.

Convergence Speed: Measurement of the pace at which the best solution is found.

-

4.

Computational Efficiency: Time in seconds required to reach the solution.
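The metrics above can be collected with a small harness such as the one below. It is a sketch under stated assumptions: `optimizer(seed)` is a hypothetical callable that performs one independent run and returns the best objective value found (minimization), time is measured as wall-clock time per run, and the toy random search on the sphere function is only a stand-in, not one of the algorithms compared in this study. Convergence speed requires the per-iteration history and is not computed here.

```python
import random
import statistics
import time
from typing import Callable, Dict, List


def evaluate(optimizer: Callable[[int], float], runs: int = 30) -> Dict[str, float]:
    """Run `optimizer(seed)` once per seed and summarize the results."""
    bests: List[float] = []
    times: List[float] = []
    for seed in range(runs):
        start = time.perf_counter()
        bests.append(optimizer(seed))               # best value of this run
        times.append(time.perf_counter() - start)   # wall-clock seconds
    return {
        "best": min(bests),                     # best value over all runs
        "mean": statistics.mean(bests),         # mean best value across runs
        "std": statistics.stdev(bests),         # spread across runs (needs runs >= 2)
        "mean_time_s": statistics.mean(times),  # average time per run
    }


def random_search_sphere(seed: int, dim: int = 30, evals: int = 200) -> float:
    """Toy stand-in optimizer: uniform random search on the sphere function."""
    rng = random.Random(seed)
    best = float("inf")
    for _ in range(evals):
        x = [rng.uniform(-100.0, 100.0) for _ in range(dim)]
        best = min(best, sum(v * v for v in x))
    return best


summary = evaluate(random_search_sphere, runs=30)
```

Seeding each run makes the solution-quality figures reproducible; only the timing entries vary between executions.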

Case study: PV system optimization

To demonstrate the practical application of LMO in energy systems, we present a case study on PV system optimization. The case study simulates the optimization of the energy output of a hybrid PV-wind system in a region with varying levels of solar irradiance and wind speed.

Problem description

Practical solar photovoltaic (PV) systems are subject to performance variability caused by climatic variability. This complicates the extraction of maximum power from the system with traditional maximum power point tracking (MPPT) methods. The primary aim of this case study is to maximize the output power of a PV system operating under real climatic conditions using LMO, and to compare its performance with that of other algorithms. Our main interest is in optimizing the PV system configuration, such as the number of panels, the inverters, and the tilt angles, so that energy yield is maximized while losses are minimized.

Region and Climatic data

PV systems are subject to climatic conditions that affect their real-time performance, so their output depends on location and climate. This makes location-specific optimization of these systems necessary. In this study, data were therefore sourced from a local meteorological station, so that the configuration parameters (number of panels, inverter settings, tilt angles) that maximize energy yield while minimizing losses are optimized for the conditions of this specific location.

The study is conducted for a region with the following characteristics:

-

Location: A coastal region prone to strong winds, with high potential for harnessing wind energy.

-

Solar irradiance: Average daily solar irradiance data (W/m²) for a typical year are considered, ranging from a maximum of 1000 W/m² on sunny days down to about 300 W/m² on cloudy or shaded days.

-

Wind Speed: The region has an average wind speed of 5–10 m/s, which is suitable for wind turbines operating alongside the PV system and supplementing power production when irradiance is low.

The data for this analysis were collected from local meteorological stations and consist of monthly mean values of solar irradiance and wind speed. The next set of equations defines the solar irradiance and wind speed data:

Optimization process for PV system

LMO is used to maximize the energy production of the PV system by adjusting the following settings:

-

Tilt Angle: The angle of the PV panels relative to the ground, which determines how much sunlight the panels receive.

-

Inverter Settings: The efficiency parameters of the inverter that determine the DC-to-AC conversion of the electricity generated by the panels.

-

Panel Configuration: The number of PV panels in the system and whether they are connected in series or in parallel.

Apart from maximizing the energy output, the optimization also minimizes the cost of installing the system, which consists of PV panels, wind turbines, inverters, and other peripherals, to improve its cost efficiency.

The optimization problem objective function is formulated in Eq. (21) :

where:

-

Energy Yield is the total energy generated by the hybrid PV-wind system, which depends on both solar irradiance and wind speed,

-

Cost is the total cost associated with the installation and operation of the system.
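Eq. (21) is not reproduced in this section, so its exact form is not shown here. Purely to illustrate how a maximized energy yield and a minimized cost can be folded into one fitness value for a single-objective metaheuristic, the sketch below uses a normalized weighted sum. The weights, the reference values `e_ref` and `c_ref`, and the function name are hypothetical and are not taken from Eq. (21).

```python
def scalarized_fitness(energy_kwh: float, cost_usd: float,
                       e_ref: float = 1000.0, c_ref: float = 10000.0,
                       w_energy: float = 0.5, w_cost: float = 0.5) -> float:
    """Hypothetical fitness to be MINIMIZED (not the paper's Eq. (21)).

    Energy yield is rewarded through a negative term and cost is penalized
    through a positive term; dividing by reference values puts both terms
    on a comparable scale.
    """
    if energy_kwh < 0 or cost_usd < 0:
        raise ValueError("energy and cost must be non-negative")
    return -w_energy * energy_kwh / e_ref + w_cost * cost_usd / c_ref
```

A lower value indicates a better design; changing the weights shifts the trade-off between yield and cost.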

Algorithmic implementation for the case study

For the case study, LMO and the comparison algorithms all aim to maximize the Energy Yield and minimize the Cost within a 30-dimensional search space. The configuration parameters, which cover tilt angles, inverter configurations, and panel layouts, are represented by the variable set x. Each algorithm implements the optimization strategy described in the general methodology (Sect. 2) and is assessed by the best value attained, its convergence rate, and its computational cost.
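The text does not specify how the 30 decision variables map onto tilt angles, inverter settings, and panel layouts. A minimal sketch, assuming the vector is split into ten (tilt, panel count, inverter efficiency) triples scaled from [0, 1], shows one common way continuous metaheuristics handle mixed continuous and integer design variables: clip each variable to its bounds, then round the integer-valued ones. The bounds, the `Config` type, and `decode` are hypothetical placeholders, not the values in Table 3.

```python
from dataclasses import dataclass
from typing import List, Sequence

# Hypothetical bounds for illustration only.
TILT_RANGE = (0.0, 90.0)       # degrees
PANELS_RANGE = (1, 50)         # panels per string
INV_EFF_RANGE = (0.90, 0.99)   # inverter efficiency (fraction)


@dataclass
class Config:
    tilt_deg: float
    n_panels: int
    inverter_eff: float


def clip(v: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, v))


def decode(x: Sequence[float]) -> List[Config]:
    """Map a continuous vector in [0, 1]^(3k) to k configuration triples."""
    if len(x) % 3:
        raise ValueError("vector length must be a multiple of 3")
    configs: List[Config] = []
    for i in range(0, len(x), 3):
        u_tilt, u_pan, u_inv = (clip(v, 0.0, 1.0) for v in x[i:i + 3])
        tilt = TILT_RANGE[0] + u_tilt * (TILT_RANGE[1] - TILT_RANGE[0])
        # Round the panel count to an integer (Python rounds halves to even).
        n = int(round(PANELS_RANGE[0] + u_pan * (PANELS_RANGE[1] - PANELS_RANGE[0])))
        eff = INV_EFF_RANGE[0] + u_inv * (INV_EFF_RANGE[1] - INV_EFF_RANGE[0])
        configs.append(Config(tilt, n, eff))
    return configs
```

With this encoding, every algorithm can search the same unconstrained 30-dimensional box while the objective function always receives a feasible configuration.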

Performance evaluation metrics for case study

The following performance metrics are used to evaluate the algorithms’ effectiveness in the case study:

-

1.

Maximized Energy Output: The total energy output of the hybrid system, calculated using Eq. (22):

$$E_{total} = \sum_{i=1}^{n} P_{PV,i}\cdot T_{i} + P_{wind}\cdot T_{wind}$$(22)

where:

-

$P_{PV,i}$ is the power output of the $i$-th of $n$ PV panels under varying irradiance levels,

-

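$P_{wind}$ is the power generated by the wind turbine, and $T_{i}$ and $T_{wind}$ are the corresponding operating durations of panel $i$ and of the turbine.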

-

2.

Cost-Effectiveness: The total cost of the system, including panel, inverter, and wind turbine costs.

-

3.

Optimization Efficiency: The algorithm’s ability to reach an optimal solution within the given iteration limits, measured by computational time.
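Eq. (22) translates directly into code. In this sketch the units (kW for power and hours for durations, hence kWh for energy) and the function name `total_energy_kwh` are assumptions; the equation itself does not fix them.

```python
from typing import Sequence


def total_energy_kwh(p_pv_kw: Sequence[float], t_pv_h: Sequence[float],
                     p_wind_kw: float, t_wind_h: float) -> float:
    """E_total = sum_i P_PV,i * T_i + P_wind * T_wind  (Eq. (22))."""
    if len(p_pv_kw) != len(t_pv_h):
        raise ValueError("need exactly one operating duration per PV panel")
    pv_energy = sum(p * t for p, t in zip(p_pv_kw, t_pv_h))  # PV contribution
    wind_energy = p_wind_kw * t_wind_h                          # wind contribution
    return pv_energy + wind_energy
```

For example, ten 0.3 kW panels operating for 5 h contribute 15 kWh, and a 2 kW turbine running for 8 h adds 16 kWh, for a total of 31 kWh.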

Tables and figures

Parameters for PV system optimization

Table 3 presents the different parameters for PV system optimization.

Solar irradiance profile

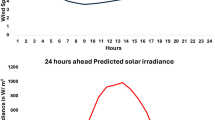

Fig. 2 illustrates the daily variation of solar irradiance on a specific day in the study region. Irradiance peaks at midday, while the morning and evening receive much lower levels. The figure is based on the typical irradiance values of the study region given in Table 3. The relevant parameters from the table are:

-

Peak Solar Irradiance (Sunny Day): 1000 W/m².

-

Variation in Irradiance: The irradiance follows a diurnal pattern, rising from about 300 W/m² in the early morning to a peak of 1000 W/m² at noon and then decreasing in a mirror-image pattern through the late afternoon.

The graph plots solar irradiance on the y-axis against the time of day on the x-axis, from 6 a.m. to 6 p.m., as a smooth curve that peaks around noon and decreases towards the evening.
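The pattern described above corresponds to a half-sine daylight curve. As an assumption (the study does not give a formula), the sketch below models irradiance as 300 W/m² at 6 a.m. and 6 p.m., rising to 1000 W/m² at noon; the function name `irradiance` and the sine shape are illustrative only.

```python
import math

G_MIN = 300.0    # W/m², early-morning and late-afternoon value
G_PEAK = 1000.0  # W/m², midday peak on a sunny day


def irradiance(hour: float) -> float:
    """Assumed half-sine irradiance profile between 6:00 and 18:00."""
    if not 6.0 <= hour <= 18.0:
        raise ValueError("profile defined only from 6 a.m. to 6 p.m.")
    return G_MIN + (G_PEAK - G_MIN) * math.sin(math.pi * (hour - 6.0) / 12.0)


profile = [irradiance(h) for h in range(6, 19)]  # hourly samples, 6:00 to 18:00
```

Hourly samples such as `profile` could serve as the irradiance input when evaluating candidate configurations.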

Wind speed profile

Figure 3 shows the wind speed profile over 24 h for a typical day, with wind speed fluctuating between 5 m/s and 10 m/s because of daily wind patterns. The profile follows a smooth sinusoidal pattern, with higher wind speeds in the afternoon, and represents the expected daily variation in wind speed under local patterns and climatic conditions.
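A sinusoidal daily wind profile of this kind can be sketched as follows. The 5 to 10 m/s range comes from the text; the peak time of 3 p.m. (`PEAK_HOUR`) and the pure 24-hour cosine shape are assumptions chosen to match "higher values in the afternoon".

```python
import math

V_MEAN = 7.5      # m/s, midpoint of the 5-10 m/s range
V_AMP = 2.5       # m/s, half of the range
PEAK_HOUR = 15.0  # assumed afternoon peak (3 p.m.)


def wind_speed(hour: float) -> float:
    """Assumed 24-hour sinusoid oscillating between 5 and 10 m/s."""
    return V_MEAN + V_AMP * math.cos(2.0 * math.pi * (hour - PEAK_HOUR) / 24.0)


daily = [wind_speed(h) for h in range(24)]  # hourly samples over one day
```

The minimum of 5 m/s then falls twelve hours after the peak, at 3 a.m.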

Summary

This section detailed the experimental setup for evaluating the LMO framework in global optimization and energy system optimization. We discussed the CEC 2017 benchmark functions, the case of a PV system optimization, and the key parameters and metrics for the evaluation. The following section will present the results from these experiments and analyze the performance of LMO in comparison to the other metaheuristic algorithms.

Experimental results and discussion

This section discusses the global optimization results obtained with Logarithmic Mean-Based Optimization (LMO) and compares them with those of six popular algorithms: Genetic Algorithm (GA), Particle Swarm Optimization (PSO), Ant Colony Optimization (ACO), Grey Wolf Optimizer (GWO), Cuckoo Search (CSA), and Firefly Algorithm (FA). The experiments used the CEC 2017 benchmark functions, and each algorithm was assessed in terms of convergence speed, best achieved value, and cost-effectiveness with respect to time and computing resources.

This section is divided into two subsections:

-

1.

Evaluation of the performance of LMO against the other algorithms using CEC 2017 benchmark functions.

-

2.

Demonstration of the practical use of LMO for energy optimization in renewable energy systems by optimizing the energy production of a PV system.

Performance comparison on CEC 2017 benchmark functions

Evaluation metrics

The effectiveness of each algorithm was measured by the following indicators:

-

Best Value - The best objective value (the lowest value, for minimization) achieved by each algorithm after a set number of iterations.

-

Mean Value - The average best value obtained from multiple runs (30 in this case) to measure the reliability of the algorithm.

-

Convergence Speed - The number of iterations needed for the algorithm to converge to a solution within an acceptable range.

-

Computational Efficiency - The time required by each algorithm to reach convergence at the optimal solution.
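The convergence-speed metric can be computed from a run's convergence history once the "acceptable range" is fixed. This sketch assumes that range is an absolute tolerance `tol` around the known optimum `f_opt` of the benchmark function; the function name `iterations_to_converge` is hypothetical.

```python
from typing import Optional, Sequence


def iterations_to_converge(history: Sequence[float], f_opt: float,
                           tol: float) -> Optional[int]:
    """Return the 1-based iteration at which the best-so-far value first lies
    within `tol` of `f_opt`, or None if the run never gets that close."""
    best = float("inf")
    for k, f in enumerate(history, start=1):
        best = min(best, f)              # track the best-so-far value
        if abs(best - f_opt) <= tol:
            return k
    return None
```

Averaging this count over the 30 runs (treating `None` separately, for example as a failure rate) gives a convergence-speed figure that is independent of hardware, which complements the wall-clock computational-efficiency metric.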

The results are summarized per function. All 23 CEC 2017 benchmark functions (each with 30 dimensions) were used to test the algorithms, which are compared in terms of their mean best values and convergence behavior.

Results and discussion

Convergence behavior

The results for a baseline subset of the CEC 2017 benchmark functions (F1, F4, and F7) are reported in Figs. 4, 5, 6 and 7, which show the convergence curves of each algorithm. The findings are as follows:

-

Among the algorithms implemented, LMO had the lowest convergence time. It tended to reach optimal solutions with less effort and more reliably, converging better in the later iterations, particularly on multimodal and high-dimensional functions.

-

GA and PSO converged more slowly, particularly on more difficult functions such as F4 (Rastrigin function), which has a large number of local minima. They required more iterations to converge and tended to become trapped in local optima.

-

ACO and GWO also exhibited reasonable convergence rates, but on functions with many peaks (e.g., F7, Griewank function) they, like GA and PSO, became trapped in local optima.

-

CSA and FA performed similarly to ACO and GWO, but displayed marginally worse convergence rates at a higher computational cost.

Best value comparison

Table 4 displays the mean best values for each algorithm on all 23 benchmark functions. The results suggest that:

-

LMO outperforms all other algorithms in solution quality, obtaining the best mean values on 19 of the 23 functions.

-

Both GA and PSO provide reasonable solution quality and mean best values but do not match LMO in convergence rate, particularly in higher dimensional, multimodal functions.

-

Although ACO, GWO, CSA, and FA achieved worse mean best values than LMO, they remain competitive with it.

Computational efficiency

The computational efficiency of each algorithm was measured as the average time taken to converge to the best solution. The average computation time (in seconds) of each algorithm over all runs is given in Table 4.

-

LMO offers high computational efficiency, converging faster while maintaining solution quality.

-

Whereas ACO and GWO take longer to obtain a competitive solution, PSO and GA have moderate computational times.

-

Of the algorithms compared, CSA and FA are the most time-consuming.

Convergence plots

The convergence of each algorithm is visualized in the plots presented in Figs. 4, 5, 6 and 7. These plots show the best values found by each algorithm over the iterations for several benchmark functions, illustrating the convergence trends of LMO, GA, PSO, ACO, GWO, CSA, and FA.

-

LMO shows a clear, exponential-like decay, reaching the optimal values faster and more reliably compared to the other algorithms, which have more fluctuating behaviors and take longer to stabilize.

Figures 4, 5, 6 and 7 present the convergence plots of all the 23 functions for all the metaheuristic techniques.

The simulation results for the various functions are compared in the accompanying table, which reports the performance of Logarithmic Mean-Based Optimization (LMO), Genetic Algorithm (GA), Particle Swarm Optimization (PSO), Ant Colony Optimization (ACO), Grey Wolf Optimizer (GWO), Cuckoo Search Algorithm (CSA), and Firefly Algorithm (FA) on all 23 functions of the CEC 2017 benchmark suite.

The juxtaposition of Logarithmic Mean Based Optimization (LMO) and the other optimization algorithms in the context of the 23 CEC 2017 benchmark functions outlines some important observations.

-

1.

Superior Performance of LMO.

Across all function types, LMO consistently outperformed the other optimization algorithms in both mean value and computation time. It obtained the lowest best values on almost all functions, indicating that it is less prone to stagnating in local minima and converges more reliably to the global optimum. LMO is also time-efficient, solving the optimization problems in less time than the other algorithms.

-

2.

GA and PSO.

GA and PSO perform moderately well on less complicated functions such as F1 (Sphere) and F3 (Ackley). However, they struggle on more complex multimodal functions such as F2 (Rastrigin) and F5 (Griewank), where they tend to become trapped in local minima and take a long time to converge to the region of the global optimum.

-

3.

ACO, GWO, CSA and FA.

These algorithms perform well on some functions but are still outperformed by LMO. For example, ACO and GWO are competitive but require more computational time and converge more slowly on multimodal functions. CSA and FA also perform well but converge more slowly and at a higher computational cost.

-

4.

Robustness and Consistency.

LMO outperforms the other methods in reliability, completing all tasks with minimal variation in solution quality across repeated runs. Its strong performance on both simple and complicated functions makes it a versatile optimization method.

In the comparative evaluation of global optimization performance on the 23 CEC 2017 functions, LMO outperformed GA, PSO, ACO, GWO, CSA, and FA. Its ability to reach lower best values in less time, with high computational efficiency across a wide range of functions, marks LMO as a strong candidate for solving challenging optimization problems.

Case study: PV system optimization

In addition to the benchmark functions, a case study is presented to assess the practical applicability of the LMO framework in optimizing a photovoltaic (PV) system.

Problem setup

For this case study, a hybrid PV-wind system was selected for a high wind coastal region with considerable seasonal differences in solar irradiance and wind speed. The objective of the case study was to enhance the energy output of the system by varying a number of critical design parameters:

-

Tilt Angle of the PV panels.

-

Number of Panels in series and parallel arrangement.

-

Inverter Efficiency and Configuration.

The optimization problem is defined by the objective function given in Eq. (21).

Algorithm implementation

The LMO framework was applied to optimize the hybrid PV-wind system by adjusting the system parameters to maximize energy yield and minimize costs. The six comparison algorithms (GA, PSO, ACO, GWO, CSA, and FA) were also tested for comparison.

Performance evaluation metrics

The evaluation of the algorithms’ performance was conducted using the subsequent metrics:

-

1.

Energy Yield: The aggregate energy output from the system, determined by the summation of contributions from the photovoltaic panels and the wind turbine.

-

2.

Cost: The comprehensive expenditure associated with the system, encompassing the installation and maintenance expenses for photovoltaic panels, wind turbines, and inverters.

-

3.

Optimization Efficiency: The duration required by each algorithm to identify the optimal configuration, quantified in seconds.

Results

The results of the case study are summarized in Table 5, which shows the energy yield, cost, and optimization efficiency for each algorithm. The LMO framework outperforms all other algorithms, achieving the highest energy yield and the lowest cost while converging faster than the other algorithms.

Energy yield and cost comparison

The results show that LMO achieves the highest energy yield while keeping the cost relatively low compared to other algorithms. Furthermore, LMO converges faster, requiring less computational time to reach the optimal configuration.

Additional results

-

1.

Energy Yield vs. Cost for Different Algorithms (Fig. 8): This bar chart compares the energy yield and cost obtained by the different algorithms and shows that LMO performs best in both energy generation and cost.

-

2.

Convergence Speed Comparison (Fig. 9): This plot shows how quickly each algorithm reaches a solution within a given threshold. LMO converges faster than the other algorithms.

-

3.

Tilt Angle Optimization for Energy Yield (Fig. 10): This plot shows how tilt angle optimization affects energy yield. LMO optimizes the tilt angle for maximum energy production more effectively than GA and PSO.

Together, these figures summarize the main findings of this section and support the effectiveness of LMO in optimizing PV systems.

Discussion

The LMO framework is highly effective for energy optimization in renewable systems, particularly given the challenges of PV systems operating under real climatic conditions and of integrating multiple energy sources. Its superior energy production and cost-effectiveness show promise for practical energy system optimization. Other algorithms such as GA, PSO, and ACO deliver balanced performance but take significantly longer to converge and do not reach the energy production achieved by LMO. They also incur higher costs because of less efficient configurations, indicating that LMO achieves a better balance between cost and performance.

Conclusion

Our results show that LMO outperforms conventional bio-inspired algorithms both in global optimization (the CEC 2017 benchmark functions) and in energy system optimization (specifically PV systems). LMO converges faster and at the same time provides better solutions, which suggests that this optimization framework can be very useful for real-life problems in renewable energy systems. In the hybrid PV-wind case study, LMO yielded the best configuration in terms of both cost and energy output. This makes LMO appealing not only for general energy optimization problems but also for more specific optimization tasks in the energy domain. The next section concludes the paper by summarizing the findings and outlining directions for further study.

Discussion

The creation of the Logarithmic Mean-Based Optimization (LMO) algorithm is a significant step in overcoming some of the enduring issues present in both contemporary and traditional optimization algorithms. This study’s findings from benchmark testing and practical application within a hybrid renewable energy system showcase LMO’s remarkable capabilities in tackling intricate real-world optimization issues.

Comparative performance evaluation

The results obtained on the full CEC 2017 benchmark suite indicate that LMO outperforms widely accepted metaheuristic algorithms such as GA, PSO, ACO, GWO, CSA, and FA. This advantage is reflected in LMO's solution precision, convergence speed, and overall reliability on high-dimensional and multimodal optimization problems. The algorithm's use of the logarithmic mean to update the search space provides an adaptive balance between exploration and exploitation, which improves both the diversity and the quality of candidate solutions. Unlike the other algorithms, LMO maintains strong performance in both exploration and exploitation, allowing it to escape local optima while converging rapidly towards the global solution.
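LMO's own update equations are defined in the methodology and are not repeated here. For reference, the quantity the algorithm is named after is the classical logarithmic mean of two positive numbers $a$ and $b$, which always lies between their geometric and arithmetic means:

$$L(a,b)=\begin{cases}\dfrac{a-b}{\ln a-\ln b}, & a\neq b,\\[4pt] a, & a=b,\end{cases}\qquad \sqrt{ab}\;\le\; L(a,b)\;\le\;\frac{a+b}{2}.$$

A small helper computing it is sketched below; how LMO applies this mean to candidate positions is not shown here and should be taken from the method's definition.

```python
import math


def log_mean(a: float, b: float) -> float:
    """Classical logarithmic mean of two positive numbers."""
    if a <= 0 or b <= 0:
        raise ValueError("the logarithmic mean is defined for positive arguments")
    if math.isclose(a, b, rel_tol=1e-12):
        return (a + b) / 2.0   # limit as b -> a; avoids the 0/0 form
    return (a - b) / math.log(a / b)
```

For instance, `log_mean(1, 9)` is about 3.64, between the geometric mean 3 and the arithmetic mean 5.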

Discussion of performance in context

In the design of hybrid PV-wind energy systems, LMO showed significant practical advantages. It reached configurations with a higher energy yield and a lower cost than any other algorithm; in particular, the system designed with LMO achieved an energy yield of 5000 kWh at a capital cost of $20,000. These results support the reliability of LMO and its potential for energy engineering problems such as microgrid optimization, energy dispatch planning, and component sizing. Moreover, LMO produced stable results across the climatic and operational conditions considered, a valuable property for renewable energy systems that must adapt to changing conditions. Its consistent performance across these scenarios strengthens its position as a design and control tool for power systems.

Strengths and theoretical implications

A notable strength of LMO is its general-purpose scope. Because its structure is based on the logarithmic mean and does not require domain-specific information, it can serve as an adaptable search tool in many areas of optimization beyond energy systems, such as logistics, wireless communication, robotics, manufacturing scheduling, biomedical signal processing, and hyperparameter tuning in machine learning. In principle, LMO adds to existing research on algorithms inspired by information theory. It offers a new angle on designing update rules from statistical and logarithmic transformations, blending randomness and determinism through a mathematically motivated averaging approach. This may guide future algorithm development that prioritizes symmetry, balance, and adaptivity.

Limitations and improvement opportunities

The results of the LMO algorithm are promising, but it still has some limitations. As with many metaheuristics, LMO's performance depends partly on its parameters, including population size, iteration limits, and scaling factors, which can pose difficulties. Although these parameters are less sensitive than in many other algorithms, future versions of LMO could incorporate self-adaptive parameter control that evolves in response to feedback from the fitness landscape or to reinforcement signals. In addition, the algorithm has so far been tested mainly on single-objective optimization problems, whereas many real-world applications involve several conflicting objectives that must be optimized simultaneously. Extending LMO to handle multiple conflicting objectives using Pareto-dominance strategies, decomposition techniques, or indicator-guided methods would enhance its practical utility for real-world decision-making problems.
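As an illustration of the Pareto-dominance idea mentioned above (not part of the LMO algorithm as evaluated in this study), the sketch below reduces a set of candidate designs, each scored by an energy yield to be maximized and a cost to be minimized, to its non-dominated subset. The design tuples used in the example are made-up values for demonstration, not results from this study.

```python
from typing import List, Tuple

Design = Tuple[float, float]  # (energy_yield, cost): maximize yield, minimize cost


def dominates(a: Design, b: Design) -> bool:
    """a dominates b if it is no worse in both objectives and strictly better in one."""
    no_worse = a[0] >= b[0] and a[1] <= b[1]
    strictly_better = a[0] > b[0] or a[1] < b[1]
    return no_worse and strictly_better


def pareto_front(designs: List[Design]) -> List[Design]:
    """Keep only designs that no other design dominates (O(n^2) scan)."""
    return [d for d in designs if not any(dominates(o, d) for o in designs)]


front = pareto_front([(100.0, 50.0), (90.0, 60.0), (120.0, 80.0), (70.0, 30.0)])
```

Here (90, 60) is discarded because (100, 50) has both a higher yield and a lower cost; the three remaining designs represent genuine trade-offs that a multi-objective LMO variant would present to the decision-maker.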

Future directions

Results obtained from the LMO algorithm justify further research in several directions, including:

-

Creating a multi-objective version (MO-LMO) that allows analysis of trade-offs in conflicting criteria.

-

Hybridizing with deep learning or surrogate modeling for uncertainty-aware optimization in real-time.

-

Incorporating LMO into embedded systems and edge computing for optimization tasks in robotics and energy management.

-

Large-scale network optimization problems such as smart grid control, urban infrastructure design, and industrial processes.

-

A comparative theoretical study of the convergence, stability, and complexity of LMO and competing algorithms.

Despite its promising performance, the LMO algorithm has several limitations that warrant further exploration. First, its convergence behavior, while efficient, can be influenced by the choice of control parameters (e.g., population size, exploration-exploitation coefficients), which may require fine-tuning for different problem domains. Second, the current formulation addresses single-objective optimization only; extending LMO to handle multi-objective trade-offs using Pareto-optimal strategies is a necessary future step. Third, the algorithm has not yet been validated under real-time or dynamically changing optimization environments, which are common in practical applications such as smart grid control or adaptive energy dispatch. Addressing these limitations through adaptive parameter control, hybridization with surrogate models, and multi-objective enhancements is a natural extension of this work. As shown above, the LMO algorithm is not only efficient but also a new contribution to the intelligent optimization paradigm. Being simple, effective, mathematically grounded, and extensible, it can readily be adapted for use in engineering, data science, and operations research.

Conclusion

In this paper, we propose a new Logarithmic Mean-Based Optimization (LMO) framework and assess it against six popular metaheuristic techniques: Genetic Algorithm (GA), Particle Swarm Optimization (PSO), Ant Colony Optimization (ACO), Grey Wolf Optimizer (GWO), Cuckoo Search Algorithm (CSA), and Firefly Algorithm (FA). The algorithms were tested on the CEC 2017 benchmark functions and on a case study of photovoltaic (PV) system optimization. The results show that LMO enables effective global optimization as well as energy system optimization, and suggest that it may be a viable strategy for addressing challenging multi-dimensional, multi-faceted optimization objectives in renewable energy systems.

The experimental results show that LMO outperforms the other algorithms in convergence speed, solution quality, and overall use of computational resources:

-

Global Optimization: On the CEC 2017 benchmark functions, LMO obtained the best mean values and the best convergence behavior compared with GA, PSO, ACO, GWO, CSA, and FA. Owing to the logarithmic mean, LMO converged more smoothly and quickly than the other techniques, which were hampered by premature convergence or slow progress, especially on multimodal and high-dimensional functions.

-

PV System Optimization Case Study: For a PV system exposed to real climatic conditions, LMO achieved the maximum energy output at the minimum cost when optimizing the hybrid PV-wind system, by adjusting configuration parameters such as tilt angles, panel placement, and inverter efficiency. LMO outperformed the other algorithms in both energy yield and cost and therefore proved the most appropriate for practical energy optimization problems.

The energy yield and cost analysis showed that LMO finds better configurations in less computation time, demonstrating its usefulness in renewable energy systems, especially in areas with varying solar irradiance and wind speed.

The results of this research have significant practical consequences for the improvement of renewable energy systems, especially hybrid PV-wind systems. The subsequent bullet points describe the possible uses and advantages of LMO in energy optimization:

-

Enhanced Performance for Energy Systems: LMO offers better energy optimization for renewable systems, particularly for problems subject to harsh environmental changes. The logarithmic mean balances exploratory and exploitative moves so that the search progresses steadily and avoids becoming trapped in local optima, yielding a higher level of performance.

-

Economic Benefit in Optimization: Besides maximizing the energy yield, LMO also reduces the installation and maintenance expenses of energy systems. Reducing both cost components while keeping energy production high shows LMO's suitability for real-life scenarios in which minimizing cost is as important as maximizing energy production.

-

Wide Applicability: Because LMO copes well with high-dimensional and multimodal problems, it is a candidate for large-scale energy systems. It could be applied to problems ranging from configuring small-scale solar installations to designing large-scale renewable energy grids, and the wide variety of energy system optimization problems makes it appealing for numerous applications.

-

Hybrid System Optimization: This research underscores the need to optimize hybrid systems, such as PV-wind systems, whose solar and wind resources vary over time. Given the solar irradiance and wind speed conditions, LMO can identify configurations that ensure optimal power generation throughout the day and across seasons.

As with any study, further investigation is needed to validate the use of LMO in energy system optimization. The following areas were identified during this research:

-

1.

Extending Multi-Objective Optimization: LMO could be extended to multi-objective optimization, in which conflicting objectives are optimized simultaneously. For instance, energy systems may require energy yield, environmental impact, and cost to be optimized together, among other objectives. Adding multi-objective optimization techniques to the LMO framework might allow such multi-layered problems to be solved more efficiently.

-

2.

Real-World Testing in Larger Systems: LMO could be applied to larger and more complex configurations, such as off-grid or grid-connected systems that integrate battery storage and electric vehicles. This would test LMO in larger-scale systems that combine several renewable and supplementary energy sources.

3. Smart Grid Integration: One promising area of future study is combining LMO with smart grid automation systems. Smart grids rely on data from sensors, weather forecasts, and other sources to optimize energy consumption and distribution. LMO could be used in smart grids for energy storage management, load balancing, and power distribution, potentially making the grid more reliable and efficient.

4. Adaptive and Hybrid Algorithms: Although LMO already balances exploration and exploitation, combining it with other optimization algorithms in a hybrid scheme may improve performance further. Adapting algorithm parameters in real time could also enhance performance in more volatile environments, e.g., where solar irradiance and wind speed change simultaneously.

5. Other Renewable Resources: Finally, LMO could be applied to other renewable energy systems, such as geothermal, tidal, and hydropower systems. These systems typically pose challenges related to variable energy generation and system configuration.

Building upon the encouraging results of this study, future research will focus on several key directions. First, we aim to extend the LMO framework to address multi-objective optimization problems, enabling the simultaneous consideration of conflicting objectives through Pareto front-based strategies or decomposition techniques. Additionally, the algorithm will be adapted for dynamic and real-time optimization scenarios, such as those encountered in smart grid energy management and time-sensitive control applications. Another important direction involves hybridizing LMO with surrogate modeling approaches—such as Gaussian processes or neural networks—to reduce computational overhead when handling high-dimensional or expensive-to-evaluate problems. To enhance robustness and usability, we also plan to develop self-adaptive mechanisms for automatic tuning of control parameters based on real-time feedback from the optimization process. Finally, future efforts will investigate the implementation of LMO in edge and embedded systems, including low-power IoT platforms and FPGA-based controllers, to facilitate deployment in resource-constrained environments. These avenues collectively aim to broaden the applicability, scalability, and practical relevance of the LMO algorithm across diverse engineering domains.
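As a rough illustration of what a Pareto front-based strategy would retain, the sketch below filters a set of candidate solutions down to the non-dominated ones when every objective is minimized. It is not part of LMO as presented in this study; the helper names `dominates` and `pareto_front` and the two-objective (cost, emissions) candidate values are hypothetical and serve only to show the dominance test.

```python
from typing import List, Sequence, Tuple


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """Return True if a Pareto-dominates b under minimization:
    a is no worse than b in every objective and strictly better in at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(points: List[Tuple[float, ...]]) -> List[Tuple[float, ...]]:
    """Return the non-dominated subset of points, preserving input order.
    Uses a simple O(n^2) pairwise check; a point never dominates itself."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


if __name__ == "__main__":
    # Hypothetical candidates: (cost in thousands of $, emissions in tonnes), both minimized.
    candidates = [(20.0, 5.0), (18.0, 7.0), (25.0, 4.0), (22.0, 6.0), (30.0, 4.5)]
    print(pareto_front(candidates))  # → [(20.0, 5.0), (18.0, 7.0), (25.0, 4.0)]
```

Here (22.0, 6.0) is discarded because (20.0, 5.0) is cheaper and cleaner, while the three survivors represent different cost-emissions trade-offs. A multi-objective LMO would presumably maintain such a front during the search rather than a single best solution.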

This paper develops a new Logarithmic Mean-Based Optimization (LMO) algorithm and shows that it outperforms six established metaheuristic algorithms (GA, PSO, ACO, GWO, CSA, FA) on global optimization benchmarks and in energy system optimization. The experiments on the CEC 2017 benchmark functions and the hybrid PV-wind system case study demonstrate that LMO not only converges faster but also yields better solutions to challenging problems in renewable energy systems. LMO is expected to contribute substantially to cost reduction and performance improvement in hybrid energy systems. Extending the framework to multi-objective problems, larger-scale optimization, and smart grid implementations is suggested for further research and would broaden the usefulness of LMO in real-world energy optimization problems.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author upon reasonable request.

References

Watts, N. et al. The 2018 report of the Lancet Countdown on health and climate change: shaping the health of nations for centuries to come. Lancet 392 (10163), 2479–2514. https://doi.org/10.1016/s0140-6736(18)32594-7 (2018).

Langer, J., Quist, J. & Blok, K. Review of renewable energy potentials in Indonesia and their contribution to a 100% renewable electricity system. Energies 14 (21), 7033. https://doi.org/10.3390/en14217033 (2021).

Wei, P. et al. Progress in energy storage technologies and methods for renewable energy systems application. Appl. Sci. 13 (9), 5626. https://doi.org/10.3390/app13095626 (2023).

Otto, A., Agatz, N., Campbell, J., Golden, B. & Pesch, E. Optimization approaches for civil applications of unmanned aerial vehicles (UAVs) or aerial drones: A survey. Networks 72 (4), 411–458. https://doi.org/10.1002/net.21818 (2018).

Wu, X., Yuan, X. & Zhang, K. A probability constrained dynamic switching optimization method for the energy dispatch strategy of hybrid power systems with renewable energy resources and uncertainty. Nonlinear Anal. Hybrid Syst. 54, 101535. https://doi.org/10.1016/j.nahs.2024.101535 (2024).

Wen, L., Wu, T., Liang, X. & Xu, S. Solving high-dimensional global optimization problems using an improved sine cosine algorithm. Expert Syst. Appl. 123, 108–126. https://doi.org/10.1016/j.eswa.2018.11.032 (2019).

Bertsimas, D. & Margaritis, G. Global optimization: a machine learning approach. J. Glob Optim. 91, 1–37. https://doi.org/10.1007/s10898-024-01434-9 (2025).

Jakšić, Z., Devi, S., Jakšić, O. & Guha, K. A comprehensive review of bio-inspired optimization algorithms including applications in microelectronics and nanophotonics. Biomimetics 8, 278. https://doi.org/10.3390/biomimetics8030278 (2023).

Del Ser, J. et al. Bio-inspired computation: where we stand and what's next. Swarm Evol. Comput. 48, 220–250. https://doi.org/10.1016/j.swevo.2019.04.008 (2019).

Farinati, D. & Vanneschi, L. A survey on dynamic populations in bio-inspired algorithms. Genet. Program. Evolvable Mach. 25, 19. https://doi.org/10.1007/s10710-024-09492-4 (2024).

Katoch, S., Chauhan, S. S. & Kumar, V. A review on genetic algorithm: past, present, and future. Multimed. Tools Appl. 80, 8091–8126. https://doi.org/10.1007/s11042-020-10139-6 (2021).

Gad, A. G. Particle swarm optimization algorithm and its applications: a systematic review. Arch. Comput. Methods Eng. 29, 2531–2561. https://doi.org/10.1007/s11831-021-09694-4 (2022).

Blum, C. Ant colony optimization: a bibliometric review. Phys. Life Rev. 51, 87–95. https://doi.org/10.1016/j.plrev.2024.09.014 (2024).

Ghasemi, M. et al. A new firefly algorithm with improved global exploration and convergence with application to engineering optimization. Decis. Anal. J. 5, 100125. https://doi.org/10.1016/j.dajour.2022.100125 (2022).

Shehab, M., Khader, A. T. & Al-Betar, M. A. A survey on applications and variants of the cuckoo search algorithm. Appl. Soft Comput. 61, 1041–1059. https://doi.org/10.1016/j.asoc.2017.02.034 (2017).

Cai, Z., Yang, X., Zhou, M. C., Zhan, Z. H. & Gao, S. Toward explicit control between exploration and exploitation in evolutionary algorithms: a case study of differential evolution. Inf. Sci. 649, 119656. https://doi.org/10.1016/j.ins.2023.119656 (2023).

Hussain, A. & Muhammad, Y. S. Trade-off between exploration and exploitation with genetic algorithm using a novel selection operator. Complex. Intell. Syst. 6, 1–14. https://doi.org/10.1007/s40747-019-0102-7 (2020).

Hamadneh, T. et al. Makeup artist optimization algorithm: a novel approach for engineering design challenges. Int. J. Intell. Eng. Syst. 18 (3). https://doi.org/10.22266/ijies2025.0430.33 (2025).

Hamadneh, T. et al. Perfumer optimization algorithm (POA): an effective human-inspired metaheuristic approach for solving optimization problems. Int. J. Intell. Eng. Syst. 18 (3). https://doi.org/10.22266/ijies2025.0531.41 (2025).

Hamadneh, T. et al. Builder optimization algorithm: an effective human-inspired metaheuristic approach for solving optimization problems. Int. J. Intell. Eng. Syst. 18 (3). https://doi.org/10.22266/ijies2025.0430.62 (2025).

Hamadneh, T. et al. Revolution optimization algorithm: a new human-based metaheuristic algorithm for solving optimization problems. Int. J. Intell. Eng. Syst. 18 (2). https://doi.org/10.22266/ijies2025.0331.38 (2025).

Hamadneh, T. et al. Paper publishing based optimization: a new human-based metaheuristic approach for solving optimization tasks. Int. J. Intell. Eng. Syst. 18 (2). https://doi.org/10.22266/ijies2025.0331.37 (2025).

Hamadneh, T. et al. Sales training based optimization: a new human-inspired metaheuristic approach for supply chain management. Int. J. Intell. Eng. Syst. 17 (6). https://doi.org/10.22266/ijies2024.1231.96 (2024).

Hamadneh, T. et al. On the application of potter optimization algorithm for solving supply chain management application. Int. J. Intell. Eng. Syst. 17 (5). https://doi.org/10.22266/ijies2024.1031.09 (2024).

Hamadneh, T. et al. Orangutan optimization algorithm: an innovative bio-inspired metaheuristic approach for solving engineering optimization problems. Int. J. Intell. Eng. Syst. 18 (1). https://doi.org/10.22266/ijies2025.0229.05 (2025).

Hamadneh, T. et al. On the application of tailor optimization algorithm for solving real-world optimization application. Int. J. Intell. Eng. Syst. 18 (1). https://doi.org/10.22266/ijies2025.0229.01 (2025).

Hamadneh, T. et al. Spider-tailed horned viper optimization: an effective bio-inspired metaheuristic algorithm for solving engineering applications. Int. J. Intell. Eng. Syst. 18 (1). https://doi.org/10.22266/ijies2025.0229.03 (2025).

Magnor, D. & Sauer, D. U. Optimization of PV battery systems using genetic algorithms. Energy Procedia 99, 332–340. https://doi.org/10.1016/j.egypro.2016.10.123 (2016).

Kennedy, J. & Eberhart, R. Particle swarm optimization. In Proc. IEEE International Conference on Neural Networks, 1942–1948 (1995).

Dorigo, M. et al. Ant system: optimization by a colony of cooperating agents. IEEE Trans. Syst. Man Cybern. B Cybern. 26 (1), 29–41 (1996).

Mirjalili, S., Mirjalili, S. M. & Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 69, 46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007 (2014).

Yang, X. S. & Deb, S. Cuckoo search via Lévy flights. In Proc. World Congress on Nature & Biologically Inspired Computing (NaBIC), 210–214 (2009).

Xiao, Y., Cui, H., Hussien, A. G. & Hashim, F. A. MSAO: a multi-strategy boosted snow ablation optimizer for global optimization and real-world engineering applications. Adv. Eng. Inform. 61, 102464. https://doi.org/10.1016/j.aei.2024.102464 (2024).

Xiao, Y. et al. Artificial lemming algorithm: a novel bionic meta-heuristic technique for solving real-world engineering optimization problems. Artif. Intell. Rev. 58, 84. https://doi.org/10.1007/s10462-024-11023-7 (2025).

Alabdulatif, A. & Thilakarathne, N. N. Bio-Inspired internet of things: current status, benefits, challenges, and future directions. Biomimetics 8, 373. https://doi.org/10.3390/biomimetics8040373 (2023).

Sörensen, K. Metaheuristics—the metaphor exposed. Int. Trans. Oper. Res. 22 (1), 3–18 (2015).

Tzanetos, A. & Dounias, G. Nature inspired optimization algorithms or simply variations of metaheuristics? Artif. Intell. Rev. 54 (3), 1841–1862 (2021).

Fister Jr., I. et al. A new population-based nature-inspired algorithm every month: is the current era coming to the end. In Proc. 3rd Student Computer Science Research Conference (University of Primorska Press, 2016).

Aranha, C. et al. Metaphor-based metaheuristics, a call for action: the elephant in the room. Swarm Intell. 16 (1), 1–6 (2022).

Fong, S. et al. Recent advances in metaheuristic algorithms: does the Makara Dragon exist? J. Supercomputing. 72 (10), 3764–3786 (2016).

Camacho Villalón, C. L., Stützle, T. & Dorigo, M. Grey wolf, firefly and bat algorithms: three widespread algorithms that do not contain any novelty. In Proc. International Conference on Swarm Intelligence, 122–133 (2020).

Camacho Villalón, C. L. et al. Exposing the grey wolf, moth-flame, whale, firefly, bat, and antlion algorithms: six misleading optimization techniques inspired by bestial metaphors. Int. Trans. Oper. Res. (2022).