Abstract

The rapid integration of artificial intelligence (AI) into healthcare has enhanced diagnostic accuracy; however, patient engagement and satisfaction remain significant challenges that hinder the widespread acceptance and effectiveness of AI-driven clinical tools. This study introduces CareAssist-GPT, a novel AI-assisted diagnostic model designed to improve both diagnostic accuracy and the patient experience through real-time, understandable, and empathetic communication. CareAssist-GPT combines high-resolution X-ray images, real-time physiological vital signs, and clinical notes within a unified predictive framework using deep learning. Feature extraction is performed using convolutional neural networks (CNNs), gated recurrent units (GRUs), and transformer-based NLP modules. Model performance was evaluated in terms of accuracy, precision, recall, specificity, and response time, alongside patient satisfaction through a structured user feedback survey. CareAssist-GPT achieved a diagnostic accuracy of 95.8%, improving by 2.4% over conventional models. It reported high precision (94.3%), recall (93.8%), and specificity (92.7%), with an AUC-ROC of 0.97. The system responded within 500 ms—23.1% faster than existing tools—and achieved a patient satisfaction score of 9.3 out of 10, demonstrating its real-time usability and communicative effectiveness. CareAssist-GPT significantly enhances the diagnostic process by improving accuracy and fostering patient trust through transparent, real-time explanations. These findings position it as a promising patient-centered AI solution capable of transforming healthcare delivery by bridging the gap between advanced diagnostics and human-centered communication.

Introduction

The integration of artificial intelligence (AI) in healthcare has significantly improved the speed and accuracy of disease diagnosis, revolutionizing the industry1. Computer-Aided Diagnosis (CAD) systems, particularly in fields like radiology, cardiology, and oncology, have shown great promise in detecting conditions such as cancer, heart diseases, and stroke2,3. However, despite advancements in diagnostic accuracy, patient engagement and experience remain secondary considerations4. Current CAD systems predominantly focus on clinical data analysis without offering compassionate, patient-friendly communication during the diagnosis process. This lack of personalized interaction can lead to confusion, stress, and dissatisfaction among patients5.

Recent developments in natural language processing (NLP), specifically conversational AI models like GPT, have emerged as potential solutions to bridge the communication gap between CAD systems and patients6. We propose CareAssist-GPT, an innovative AI model that enhances patient experience by integrating diagnostic aid with personalized, real-time communication7. Unlike conventional CAD systems that rely solely on clinical outputs, CareAssist-GPT simplifies medical jargon, providing patient-friendly explanations that foster trust and understanding8.

Figure 1 illustrates a typical CAD system used for pneumonia diagnosis, demonstrating its sequential approach: lung segmentation from CT scans, followed by nodule detection and segmentation, and advanced analyses such as shape and growth rate estimation9. While this systematic process aids in identifying benign or malignant formations, it fails to address the patient’s need for clear, understandable feedback10. Diagnostic errors account for roughly 10% of global deaths, highlighting the urgent need for improved diagnostic tools that also consider the patient’s perspective11.

Figure 1. A typical CAD system for pneumonia diagnosis. The system involves multiple stages, starting with lung segmentation from CT scan images taken at two time points. This is followed by nodule detection and segmentation to locate potential growths in the lungs. Advanced analyses, such as shape analysis, growth rate estimation, and appearance analysis, are then applied to the segmented nodules to assess abnormalities, ultimately leading to a diagnostic output.

The integration of artificial intelligence (AI) into healthcare has revolutionized diagnostic processes, offering unprecedented levels of accuracy and efficiency12. However, a significant gap persists between the technical capabilities of AI systems and the need for patient-centered care, which diminishes the full potential of AI in healthcare12. While AI-driven computer-aided diagnosis (CAD) systems excel in processing complex medical data, they often fail to address the emotional and communicative needs of patients, leading to disengagement, anxiety, and a lack of trust in the diagnostic process13. This gap highlights the critical need for AI models that not only improve diagnostic outcomes but also foster meaningful patient interactions.

Conversational AI models, such as GPT, have emerged as promising tools for addressing these challenges, particularly in scenarios requiring continuous patient engagement, such as chronic pain management, diabetes care, and mental health disorders14,15. Research by McKinsey suggests that conversational AI can reduce diagnostic errors by up to 30% while significantly enhancing patient satisfaction scores16. Despite these advancements, the integration of GPT-based systems into CAD tools to simultaneously enhance diagnostic accuracy and patient communication remains underexplored17. Existing CAD systems primarily focus on technical performance, often neglecting the human element of healthcare delivery, which is crucial for building patient trust and ensuring long-term engagement18.

This paper introduces CareAssist-GPT, a novel AI-assisted diagnostic model designed to bridge the gap between technical efficiency and patient-centered care. Unlike traditional CAD systems, CareAssist-GPT combines advanced diagnostic capabilities with natural language communication, enabling real-time, patient-friendly explanations and feedback. By integrating multimodal data sources—such as high-resolution X-ray images, real-time vital signs, and clinical notes—into a unified predictive framework, CareAssist-GPT achieves superior diagnostic accuracy while maintaining high specificity, precision, and recall. Furthermore, its conversational interface simplifies complex medical information, empowering patients to better understand their conditions and fostering a sense of involvement in their healthcare journey.

The development of CareAssist-GPT addresses several critical limitations of existing CAD systems. First, it overcomes the lack of patient engagement by incorporating interpersonal communication into the diagnostic process, ensuring that patients feel heard and understood19. Second, it provides a comprehensive roadmap for implementing conversational AI in clinical settings, balancing technological advancements with emotional and psychological patient needs20. Finally, CareAssist-GPT demonstrates how AI can be aligned with the principles of patient-centered care, offering a model that not only enhances diagnostic reliability but also builds patient trust and satisfaction21.

In modern healthcare, the emphasis on technical efficiency often overshadows the importance of patient-provider communication. Studies have shown that patients who feel informed and involved in their care are more likely to adhere to treatment plans and report higher satisfaction levels. However, traditional CAD systems, while effective in processing data, lack the ability to translate complex medical jargon into accessible language, leaving patients feeling alienated. CareAssist-GPT addresses this issue by leveraging the natural language processing (NLP) capabilities of GPT to provide clear, empathetic, and personalized explanations. This approach not only improves patient understanding but also strengthens the therapeutic alliance between patients and healthcare providers.

Moreover, the rapid adoption of AI in healthcare has raised concerns about the dehumanization of medicine, with critics arguing that over-reliance on technology may erode the patient-provider relationship. CareAssist-GPT counters this narrative by positioning AI as a complementary tool that enhances, rather than replaces, human interaction. By automating routine diagnostic tasks and providing real-time insights, the model allows healthcare providers to focus on building rapport and addressing the emotional needs of their patients. This dual focus on technical and interpersonal excellence positions CareAssist-GPT as a transformative solution for modern healthcare challenges.

In this paper, we assess the limitations of current CAD systems and explore the transformative potential of conversational AI in healthcare. We present CareAssist-GPT as a solution to the challenges of integrating AI-driven diagnostics with effective patient communication, offering a system that meets both technical and emotional needs. By doing so, we aim to pave the way for a new generation of AI tools that prioritize both accuracy and empathy, ultimately enhancing the overall healthcare experience for patients and providers alike. The purpose of this study is to create and assess CareAssist-GPT: a patient-oriented AI system that can improve the diagnostic outcomes of CAD by engaging patients in natural language conversations that simplify the medical information and encourage patients’ active participation.

The specific objectives of this study are as follows:

- To develop a novel patient-centered, AI-based diagnostic model (CareAssist-GPT) that combines NLP with existing CAD systems to enhance real-time interaction and communication with patients.
- To assess how effectively CareAssist-GPT improves patient comprehension and satisfaction by translating medical diagnoses into simple, patient-friendly language.
- To compare the diagnostic performance of CareAssist-GPT with that of conventional CAD tools in different healthcare environments, and to identify where diagnostic effectiveness and patient interaction improve.
- To discuss the ethical considerations and data privacy issues of using CareAssist-GPT in CAD systems, and to provide recommendations for the safe and responsible application of conversational AI in clinical practice.

This research proposes CareAssist-GPT, an AI system that builds upon CAD to improve patient interaction, and assesses its ability to increase both diagnostic accuracy and patient satisfaction. It also considers the ethical issues involved and presents a roadmap for developing and deploying conversational AI ethically in clinical practice.

The main contributions of this work are:

1. Introduction of CareAssist-GPT, a conversational AI model that combines NLP with CAD systems to deliver diagnostic results with real-time explanations.
2. Implementation of a patient-centric model for CAD, in which patient interaction is improved by replacing complicated medical jargon with plain language and providing an easy-to-use interface for diagnosis.
3. Comparison of the diagnostic performance of CareAssist-GPT with that of conventional CAD systems, showing that the system can match or exceed existing tools while increasing patient satisfaction.
4. Exploration of patient satisfaction indicators, examining how conversational feedback delivered in real time by AI systems enhances patients’ comprehension of their treatment plans and influences their overall healthcare journey.
5. Discussion of the ethical and privacy implications of operationalizing conversational AI in healthcare, together with precautions for its implementation.

This paper is divided into five sections. It begins with a brief presentation of CareAssist-GPT and a literature survey of existing CAD systems, and then describes the approach used to design and evaluate the model. The results section compares CareAssist-GPT with traditional systems, and is followed by the conclusions, limitations, recommendations, and directions for future studies22.

Problem statement and contributions

Despite significant advancements in AI-driven diagnostic systems, several critical issues remain unresolved in current clinical applications. First, most Computer-Aided Diagnosis (CAD) systems are designed with a purely technical focus, overlooking the importance of patient engagement and understandable communication. Second, the interpretability of AI-generated diagnoses remains limited, leading to reduced trust and usability among both clinicians and patients. Third, while multimodal patient data—such as imaging, physiological signals, and clinical narratives—are often available, existing models fail to integrate these sources effectively in real time. These challenges hinder the deployment of AI in patient-centered care and create a clear need for solutions that combine diagnostic precision with transparency and user-centered communication.

To address these challenges, this paper introduces CareAssist-GPT, a patient-centered diagnostic framework that integrates multimodal data fusion with a conversational AI interface. The key contributions of this research are as follows:

- Development of CareAssist-GPT, a multimodal diagnostic model that integrates high-resolution chest X-ray images, real-time vital signs, and clinical text records to form a unified predictive framework.
- Design of a patient-centric conversational interface that utilizes transformer-based NLP to translate complex diagnostic results into understandable, empathetic feedback in real time.
- Demonstration of improved diagnostic performance, with CareAssist-GPT achieving a diagnostic accuracy of 95.8%, precision of 94.3%, recall of 93.8%, and a patient satisfaction score of 9.3 out of 10.
- Comprehensive evaluation across multiple metrics, including specificity, response time, AUC-ROC (0.97), interpretability, and usability, to assess both clinical reliability and patient experience.
- Implementation of ethical safeguards, such as GDPR/HIPAA compliance, anonymized data processing, and model fairness testing, ensuring responsible deployment of AI in healthcare.
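The classification metrics named in the contributions above follow standard definitions, which the sketch below computes from confusion-matrix counts. The function names `diagnostic_metrics` and `auc_roc` and all input values are hypothetical and chosen only for illustration; they are not the study's evaluation code or data. AUC-ROC is computed here with the pairwise-ranking formulation, which is one of several equivalent ways to obtain it.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Return accuracy, precision, recall and specificity from confusion-matrix counts."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,    # share of all cases classified correctly
        "precision": tp / (tp + fp),      # share of positive calls that are correct
        "recall": tp / (tp + fn),         # share of true positives that are found
        "specificity": tn / (tn + fp),    # share of true negatives that are found
    }


def auc_roc(scores, labels):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical counts and scores for illustration only (not the study's data).
metrics = diagnostic_metrics(tp=90, fp=5, tn=95, fn=10)
print({name: round(value, 3) for name, value in metrics.items()})
print(auc_roc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))
```

The pairwise AUC is quadratic in the number of cases, which is acceptable for a small illustration; a sort-based computation would be preferable on a full test set.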

The remainder of this paper is structured as follows. Section “Literature review” presents a detailed review of related work, highlighting key advancements and identifying research gaps in multimodal AI diagnostics and conversational healthcare systems. Section “Methodology” outlines the methodology used to design, develop, and optimize the CareAssist-GPT framework, including data preprocessing, model architecture, and training procedures. Section “Results and discussions” presents the experimental results, performance metrics, comparative analysis with existing models, and patient satisfaction outcomes. Finally, Section “Conclusions” examines the study’s limitations, ethical considerations, and privacy safeguards, and concludes the paper by summarizing key findings, discussing practical implications, and proposing directions for future research.

Literature review

Artificial intelligence (AI) is one of the fastest-growing technologies impacting nearly every facet of healthcare, from diagnosis to patient care and clinical workflows. Among various AI advancements, language models like ChatGPT have emerged as effective tools for engaging patients, providing diagnostic support, and facilitating clinical decision-making. Given the increasing focus on patient-centered care and data-driven diagnostics, there is a pressing need for intelligent, conversational AI systems in healthcare. This review synthesizes the existing literature on the application of ChatGPT in healthcare, comparing its strengths and limitations while identifying gaps and opportunities for future research.

Enhancing patient communication and engagement

Patient communication is a critical aspect where ChatGPT has shown significant potential. Amin et al. highlighted its ability to simplify complex medical terms in radiology reports, enhancing patient comprehension and engagement2. Similarly, Badsha et al. explored the use of ChatGPT in rheumatology, demonstrating its capability to offer differential diagnoses in alignment with expert opinions, thus aiding clinicians in patient management12. Javaid et al. further emphasized the model’s utility in personalized patient interactions, noting its effectiveness in making timely recommendations15. However, while these studies support the use of ChatGPT for enhancing patient understanding, there are differences in their focus. Amin et al. stress patient comprehension in technical fields like radiology, while Javaid et al. highlight broader, patient-centered care applications. This suggests a need for a unified framework that can adapt ChatGPT’s communication capabilities across various medical specialties.

Clinical utility and workflow optimization

The literature underscores the utility of ChatGPT in optimizing clinical workflows and supporting decision-making processes. Krishnan et al. argued that ChatGPT contributes to a more sustainable healthcare model by reducing resource consumption and improving efficiency16. Garg’s analysis demonstrated how ChatGPT synthesizes patient data and manages communication, transforming it into a patient-facing tool that enhances overall user experience17. In emergency and surgical settings, Rao et al. found that ChatGPT could improve clinical outcomes by providing quick and reliable decision support19. These findings collectively indicate that ChatGPT can streamline workflows across various clinical environments. However, the degree of its effectiveness may vary depending on the complexity of the clinical setting and the quality of data inputs, which raises questions about the scalability and reliability of these AI models in diverse healthcare scenarios.

Advances in computer-aided diagnosis (CAD) and deep learning integration

The shift from traditional machine learning (ML) to deep learning (DL) in CAD systems has been transformative, allowing for greater accuracy and the ability to handle large datasets. Guetari et al. compared conventional ML approaches with modern DL methods, highlighting ChatGPT’s capacity for handling complex, multimodal data8. Hu et al. demonstrated the application of ChatGPT in medical imaging, where it effectively provided contextual descriptions, aiding in image interpretation and diagnosis9. This suggests that integrating ChatGPT with deep learning frameworks can enhance diagnostic performance, particularly in real-time scenarios. However, the robustness of these systems is still limited by factors such as noisy or incomplete data, which can lead to diagnostic errors. This technical limitation underscores the need for improved data preprocessing and model explainability in healthcare applications.

Cross-specialty applicability of ChatGPT in healthcare

ChatGPT’s versatility across medical specialties is well-documented. Lima et al. explored its use in orthodontics, showing how it aids in diagnosis and treatment planning by providing clear explanations to patients12. Smith and Green examined its application in dermatology, demonstrating that ChatGPT reduces assessment time while increasing diagnostic accuracy23. Yeasmin et al. highlighted the model’s effectiveness in deep learning-based image diagnosis, particularly in explaining complex findings due to its conversational abilities13. Despite these successes, there is a noticeable gap in studies exploring the integration of ChatGPT across multiple clinical departments simultaneously. Addressing this gap would require overcoming challenges related to data interoperability, clinical workflows, and the adaptation of AI systems to specialty-specific needs.

Ethical considerations and patient safety

The integration of AI in healthcare introduces significant ethical challenges. Sallam raised concerns about data privacy and the potential for biases in AI algorithms, which can negatively impact patient outcomes22. Shahsavar and Choudhury pointed out the risks of over-reliance on AI for self-diagnosis, cautioning that unsupervised use could lead to severe misdiagnoses18. These ethical issues highlight the need for balanced AI-human collaboration to ensure safe and effective healthcare delivery. Real-world scenarios have already shown biases in AI models affecting patient care, such as disparities in diagnostic accuracy across different demographic groups. The regulatory landscape for AI in healthcare is still evolving, with limited frameworks addressing these concerns. Thus, future research should focus on developing robust guidelines and ethical protocols to mitigate these risks.

Long-term implications of AI-driven communication tools

While immediate benefits of ChatGPT, such as improved patient engagement and diagnostic support, are evident, its long-term impact on doctor-patient relationships remains uncertain. The literature has not sufficiently explored how the widespread use of AI-driven communication tools might alter patient trust over time or affect clinicians’ roles in patient care. As patients become more reliant on AI for medical advice, there is a risk of diminishing the human element of healthcare, potentially undermining the traditional doctor-patient relationship. This shift could lead to a decrease in interpersonal communication skills among clinicians, emphasizing the need for training programs that integrate AI tools without compromising human interaction.

Literature summary

Recent advancements in AI-assisted medical diagnostics have leveraged multimodal data integration to enhance clinical decision-making, patient engagement, and predictive accuracy. Zhao et al.24 explored deep multimodal fusion techniques for medical diagnosis, highlighting challenges and future directions, while Wang et al.25 proposed transformer-based multimodal learning to automate radiology report generation, demonstrating improved interpretability and diagnostic consistency. The role of AI-driven personalized medicine was emphasized by Jiang et al.26, who integrated multimodal data for improved diagnostics and treatment planning, reinforcing the significance of real-time patient engagement models as proposed by Chen et al.27. Several studies have also advanced deep learning in medical imaging, with Xu et al.28 focusing on multimodal data fusion for enhanced medical imaging analysis and Su et al.29 utilizing machine learning and bioinformatics analysis for colon cancer staging and diagnosis. Moreover, Zeng et al.30 explored the application of convolutional neural networks (CNNs) with Raman spectroscopy for rapid breast cancer classification, illustrating the potential of AI-driven spectroscopic analysis. In the field of electronic health record (EHR) processing, Li et al.31 introduced LI-EMRSQL, a text-to-SQL model enhancing structured query parsing on complex EMR datasets, facilitating automated clinical decision support. Privacy concerns in AI-driven healthcare were addressed by Zhang et al.32, who introduced age-dependent differential privacy mechanisms to balance data security and utility in medical AI models. Additionally, Jung et al.33 conducted a meta-analysis on AI-based fracture detection, comparing image modalities and data types to assess performance variations across different AI frameworks. 
Beyond diagnostics, Pan and Xu34 explored human–machine plan conflicts in visual search tasks, contributing to human-AI collaboration frameworks in medical imaging and diagnostics. These studies collectively underscore the evolving landscape of AI-powered diagnostic systems, emphasizing multimodal learning, patient-centric engagement, medical imaging innovations, EHR integration, and privacy-aware AI frameworks, ultimately driving next-generation AI applications in healthcare. Recent studies such as XEMLPD35 and PD_EBM36 emphasize the increasing importance of explainable ensemble learning techniques in the diagnosis of neurodegenerative diseases, particularly Parkinson’s disease. The XEMLPD model integrates multiple machine learning classifiers and applies a voting mechanism, enhanced with optimized feature selection, to improve diagnostic accuracy while maintaining interpretability. Similarly, PD_EBM introduces an integrated boosting-based approach that leverages selective features and provides both global and local explanations for its predictions, ensuring that clinicians and patients can understand how decisions are made at both the system-wide and individual levels. These models demonstrate that incorporating explainability alongside high-performance algorithms not only boosts trust in AI-driven diagnoses but also aids clinicians in validating model recommendations through interpretable outputs. Their frameworks align closely with current demands in medical AI development—especially the need for transparency, accountability, and decision traceability. This trend reinforces the critical role of explainability and selective feature learning in clinical decision support systems, paving the way for safer, more ethical, and widely acceptable deployment of AI in healthcare.

Research gaps and future directions

While existing studies demonstrate the value of ChatGPT in isolated applications such as radiology, dermatology, and rheumatology, there is a clear lack of integrated systems that combine conversational AI with multimodal clinical diagnostics in real-time settings. Most prior approaches focus on either diagnostic performance or patient communication—not both. Furthermore, current literature seldom explores cross-specialty applicability, explainability, and ethical handling of multimodal data. No existing model holistically addresses how to deliver interpretable, patient-friendly, and accurate diagnoses using AI across diverse healthcare contexts. CareAssist-GPT fills this critical gap by integrating imaging, vital signs, and clinical notes into a unified diagnostic pipeline with real-time GPT-powered communication.

Although ChatGPT shows promise in various healthcare applications, its integration into real-time, interdisciplinary clinical settings remains limited. Challenges include technical barriers, such as handling incomplete data and ensuring model explainability, as well as organizational issues like aligning AI systems with existing health IT infrastructure. There is a need for comprehensive studies to establish unified protocols for implementing ChatGPT across multiple specialties. Additionally, exploring the role of AI in under-researched areas such as chronic disease management and mental health support could broaden its application scope.

Despite the promising applications of AI in healthcare, several critical gaps remain unaddressed, limiting the full potential of AI-driven diagnostic models. One of the most significant challenges is the integration of multimodal AI models for real-time patient interaction, which is still in its early stages. Current AI-based healthcare solutions primarily focus on improving diagnostic accuracy, but they often fail to incorporate context-aware, patient-specific explanations that enhance comprehension and engagement. AI-generated diagnoses must not only be precise but also transparent and interpretable, ensuring that both clinicians and patients can understand the rationale behind medical recommendations. However, limited research has been conducted on optimizing AI models for dynamic, real-time patient communication, making it difficult for AI to replicate the personalized approach of human healthcare providers. Furthermore, many AI-driven diagnostic systems are designed for structured clinical environments, with limited adaptability to diverse healthcare settings, where patient needs and communication preferences vary significantly. This gap underscores the need for adaptive AI systems that personalize interactions based on patient demographics, cognitive abilities, and medical literacy levels.

Another critical challenge is the lack of explainability and trust in AI-driven diagnoses. While deep learning models demonstrate high accuracy, their black-box nature raises concerns about interpretability, ethical decision-making, and regulatory compliance. Patients and healthcare professionals are often hesitant to rely on AI-generated recommendations without clear justifications for predictions. Advancements in explainable AI (XAI) techniques, such as attention-based visualizations, feature attribution, and interpretable neural architectures, can enhance transparency and improve clinician confidence in AI-supported decision-making. Additionally, future AI models should incorporate real-time physician feedback and patient responses to continuously refine their decision-making process. Leveraging self-supervised learning (SSL) and reinforcement learning (RL), AI can adapt its responses based on evolving clinical guidelines, patient history, and real-world healthcare outcomes. These improvements will be crucial in making AI-driven healthcare systems not only accurate and efficient but also patient-centered, trustworthy, and adaptable to diverse medical environments.

Comparison with other AI technologies in healthcare

While ChatGPT has distinct advantages in natural language processing and patient interaction, it is essential to compare it with other AI models, such as computer vision systems for diagnostics or predictive analytics models. Traditional machine learning algorithms often excel in diagnostic accuracy, particularly in structured tasks such as medical image analysis. However, they lack the conversational and interpretative capabilities of ChatGPT, which make it uniquely suited for patient-facing applications. Understanding these differences can help determine the most appropriate AI tools for specific healthcare needs, optimizing both patient outcomes and operational efficiency. Table 1 provides a comparative overview of studies examining the use of ChatGPT and other AI models across different healthcare applications, listing the key studies, their focus areas, the AI models used, the specific applications targeted, and the main findings.

The literature review shows progress in applying ChatGPT and other AI models in the health sector, mainly in diagnosis, patient interaction, and information sharing. However, a research gap remains: ChatGPT has not been integrated into real-time, interdisciplinary workflows spanning multiple clinical specialties. Although some works address AI in particular domains such as radiology, orthodontics, and telemedicine, few investigate the integration of AI language models into the multifaceted healthcare processes of multiple departments. Moreover, ethical issues such as data privacy11, bias in AI, and patients relying entirely on AI for diagnoses have been recognized to some extent, but their long-term outcomes and the regulatory measures needed to address them have received little attention. Future research should therefore aim to establish unified protocols for integrating AI into healthcare systems and to develop effective strategies for AI ethics policies3.

Data processing

To ensure optimal model performance and enhance diagnostic accuracy, multiple preprocessing techniques were applied to the multimodal dataset before feeding it into the AI framework. X-ray images were processed using Contrast-Limited Adaptive Histogram Equalization (CLAHE) to improve the visibility of lung abnormalities, ensuring better contrast enhancement while preserving fine-grained medical details. To handle variability and noise commonly present in real-world vital sign time-series data, a low-pass filtering technique was applied to eliminate high-frequency noise, preserving only clinically relevant physiological trends. Additionally, missing data points were imputed using the Kalman smoothing algorithm, which leverages temporal dependencies to accurately estimate and restore incomplete sequences. These preprocessing steps enhance the robustness and reliability of the model’s input, ensuring that downstream feature extraction captures meaningful and consistent patterns. For clinical text records, an advanced Named Entity Recognition (NER) technique was implemented to extract key medical concepts, such as disease names, symptoms, and prescribed treatments, ensuring that relevant information was structured and easily interpretable. These text records were further tokenized using BioBERT embeddings, a domain-specific adaptation of BERT designed for biomedical applications, to enhance semantic representation. Once preprocessed, the different data modalities were synchronized and integrated into a feature fusion network, where X-ray image features were extracted using Convolutional Neural Networks (CNNs), time-series features were captured using Recurrent Neural Networks (RNNs), and clinical text embeddings were generated using transformer-based architectures. 
These extracted features were subsequently concatenated into a shared latent space, allowing the AI system to leverage complementary information from multiple sources for more accurate and context-aware diagnostic predictions. This integrated approach enhances the system’s ability to detect complex patterns across modalities, making CareAssist-GPT a robust AI-powered decision-support tool for clinical diagnostics.
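The imputation step for vital signs can be illustrated with a minimal sketch. It assumes a one-dimensional local-level (random-walk) state model with hypothetical noise variances `process_var` and `obs_var`; the study does not report the state model or its settings, so this is an illustration of Kalman smoothing in general rather than the authors' implementation.

```python
from typing import List, Optional


def kalman_smooth_impute(
    series: List[Optional[float]],
    process_var: float = 1.0,  # assumed process noise variance (hypothetical)
    obs_var: float = 1.0,      # assumed measurement noise variance (hypothetical)
) -> List[float]:
    """Fill missing readings (None) using a local-level Kalman smoother."""
    if not series or series[0] is None:
        raise ValueError("this sketch expects the first reading to be observed")

    n = len(series)
    filt_mean = [0.0] * n  # filtered state estimates
    filt_var = [0.0] * n   # filtered state variances
    pred_var = [0.0] * n   # one-step-ahead predicted variances

    # Forward pass: Kalman filter for a random-walk state.
    filt_mean[0], filt_var[0] = float(series[0]), obs_var
    for t in range(1, n):
        pred_var[t] = filt_var[t - 1] + process_var
        z = series[t]
        if z is None:
            # No measurement available: carry the prediction forward.
            filt_mean[t], filt_var[t] = filt_mean[t - 1], pred_var[t]
        else:
            gain = pred_var[t] / (pred_var[t] + obs_var)
            filt_mean[t] = filt_mean[t - 1] + gain * (z - filt_mean[t - 1])
            filt_var[t] = (1.0 - gain) * pred_var[t]

    # Backward pass: Rauch-Tung-Striebel smoother.
    smooth = filt_mean[:]
    for t in range(n - 2, -1, -1):
        c = filt_var[t] / pred_var[t + 1]
        smooth[t] = filt_mean[t] + c * (smooth[t + 1] - filt_mean[t])

    # Keep observed readings; use smoothed estimates only where data is missing.
    return [smooth[t] if z is None else float(z) for t, z in enumerate(series)]


heart_rate = [80.0, 82.0, None, 86.0, 88.0]  # toy readings with one gap
imputed = kalman_smooth_impute(heart_rate)
```

Because the backward pass uses readings on both sides of the gap, the imputed value is pulled toward the later observations as well as the earlier ones, which a forward-only filter would not do.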

CareAssist-GPT’s architecture enables seamless adaptation across a wide range of medical disciplines due to its powerful integration of multimodal data fusion and advanced natural language processing capabilities. While its performance in pneumonia diagnosis has been validated, the model is equally suited for applications in fields such as oncology, where it can interpret imaging data alongside pathology reports; endocrinology, where it can analyze hormone panels, glucose trends, and patient history; and mental health, where contextual analysis of clinical notes and patient communication is crucial. The model’s conversational interface allows for continuous patient engagement, making it effective in chronic disease management scenarios like diabetes and hypertension, where patients require regular updates and education. In telehealth, CareAssist-GPT can assist remote consultations by interpreting symptoms, summarizing prior medical history, and generating simplified explanations for patients. Furthermore, in rehabilitation settings, it can help track recovery progress by integrating physiotherapy logs, sensor data, and patient-reported outcomes. This versatility positions CareAssist-GPT as a truly cross-disciplinary AI solution that bridges high technical performance with human-centric, context-aware interaction across multiple clinical domains.

Methodology

This section outlines the methodological approach used to design, train, and evaluate CareAssist-GPT, a patient-centered AI diagnostic system. The proposed model is built upon a multimodal data framework that integrates high-resolution chest X-ray images, real-time physiological vital signs, and unstructured clinical text records to form a unified, context-aware diagnostic pipeline. The model architecture combines convolutional neural networks (CNNs) for spatial feature extraction from imaging data, gated recurrent units (GRUs) for temporal analysis of time-series vital signs, and transformer-based natural language processing (NLP) modules for semantic parsing of clinical notes.

Each modality undergoes a dedicated preprocessing pipeline to enhance input quality and consistency: X-ray images are normalized and augmented, vital sign data is smoothed and standardized using Kalman filtering and z-score normalization, and clinical text is cleaned, tokenized, and embedded using BioBERT. The extracted features from each modality are then fused into a shared latent space using a fully connected integration layer, enabling the model to capture cross-modal interactions relevant to clinical diagnosis.

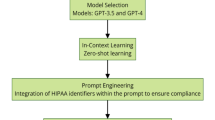

The final prediction layer consists of dense units with sigmoid activation, optimized using cross-entropy loss with L2 regularization to reduce overfitting. The training process employs mini-batch gradient descent with the Adam optimizer, early stopping, dropout layers, and hyperparameter tuning to achieve generalization across diverse patient profiles. This integrated design allows CareAssist-GPT to deliver interpretable, accurate, and real-time diagnostic predictions while supporting personalized patient communication through its conversational interface. Figure 2 shows the Workflow of the CareAssist-GPT Model: Training and Testing Phases.

Figure 2 illustrates the comprehensive workflow of the CareAssist-GPT model, detailing both the training and testing phases. The process begins with data collection, integrating three primary types: X-ray images, vital signs, and clinical text records. During the training phase, data preprocessing is performed, including image normalization, text tokenization, and time-series standardization. The multimodal data inputs are then fed into specialized neural network components: convolutional neural networks (CNNs) for image analysis, recurrent neural networks (RNNs) for time-series data, and transformer-based models for text analysis. Features extracted from each component are fused in a multimodal integration layer, followed by a dense layer for final classification. The model undergoes hyperparameter tuning and cross-validation to optimize performance. In the testing phase, new patient data is processed similarly, and the trained model provides diagnostic predictions alongside patient-friendly explanations. This streamlined workflow highlights the integration of multimodal data and real-time interpretability, aiming to enhance diagnostic accuracy and patient engagement.

This section outlines the comprehensive methodology employed in developing, training, and evaluating the CareAssist-GPT model. The approach focuses on integrating multimodal data, optimizing model performance, and ensuring robust, patient-centered AI applications in healthcare diagnostics.

The dataset used for this study was meticulously compiled from diverse medical sources, comprising three primary subsets: high-resolution chest X-ray images, vital signs time-series data, and clinical text records (https://www.kaggle.com/datasets/nih-chest-xrays/data). The image data included labeled samples of both normal and pneumonia-affected lungs, providing a rich basis for training the convolutional layers of the model. The vital signs dataset encompassed real-time physiological parameters such as heart rate, respiratory rate, blood pressure, and oxygen saturation, crucial for temporal analysis using recurrent neural networks (RNNs). Clinical text records were gathered from electronic health records (EHRs), consisting of free-text notes that capture patient symptoms, medical histories, and treatment plans. This multimodal dataset ensured a comprehensive input representation, allowing the model to interpret diverse health indicators holistically.

Data preprocessing was a critical step to enhance the quality and uniformity of the inputs. For image data, all chest X-rays were resized to a standard dimension of 224 × 224 pixels and normalized to scale pixel intensity values between 0 and 1, improving convergence during training. Image augmentation techniques were extensively applied to increase the diversity of training samples, including random rotations, horizontal and vertical flips, brightness adjustments, and zooming. These augmentations mitigated overfitting and improved the model’s robustness to variations in medical imaging. Time-series data were preprocessed through z-score normalization, standardizing the physiological parameters to a common scale, thus preventing any single feature from dominating the model’s learning process. Text data underwent a detailed preprocessing pipeline, including tokenization, stop-word removal, lemmatization, and synonym replacement. This approach enriched the semantic diversity of clinical notes, allowing the natural language processing (NLP) component of the model to better capture medical context.
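The two numerical normalizations described above can be sketched as follows. The example assumes 8-bit pixel intensities (maximum value 255) and uses toy inputs; the helper names are hypothetical and chosen for illustration only.

```python
from statistics import mean, pstdev
from typing import List


def scale_pixels(image: List[List[int]], max_value: int = 255) -> List[List[float]]:
    """Map integer pixel intensities to the [0, 1] range (assumes 8-bit input)."""
    return [[px / max_value for px in row] for row in image]


def z_score(values: List[float]) -> List[float]:
    """Standardize one physiological channel to zero mean and unit variance."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]  # constant channel: nothing to scale
    return [(v - mu) / sigma for v in values]


patch = [[0, 128], [255, 64]]         # toy 2 x 2 stand-in for a 224 x 224 X-ray
resp_rate = [14.0, 16.0, 18.0, 20.0]  # toy respiratory-rate readings

scaled = scale_pixels(patch)
standardized = z_score(resp_rate)
```

In practice the mean and standard deviation would be estimated on the training split only and reused for validation and test data, so that no information leaks across splits.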

Fusion strategy: Features from X-ray images, vital signs, and clinical notes are extracted separately and then combined using a late fusion strategy, where all modality-specific outputs are concatenated and passed through fully connected layers. This enables the model to learn joint representations and improve diagnostic accuracy by leveraging complementary information across data types.
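A minimal sketch of this concatenate-then-dense pattern is given below. The feature dimensions (4, 3, and 5), the hidden width, and the random weights are hypothetical placeholders; in the actual system the three vectors would come from the CNN, GRU, and transformer branches.

```python
import math
import random
from typing import List

Vector = List[float]


def dense(x: Vector, weights: List[Vector], bias: Vector) -> Vector:
    """Fully connected layer: one output per row of `weights`."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def late_fusion_predict(image_feat: Vector, vitals_feat: Vector, text_feat: Vector,
                        w_hidden: List[Vector], b_hidden: Vector,
                        w_out: Vector, b_out: float) -> float:
    """Concatenate modality features, apply one hidden layer, return a probability."""
    fused = image_feat + vitals_feat + text_feat  # list concatenation
    hidden = relu(dense(fused, w_hidden, b_hidden))
    return sigmoid(dense(hidden, [w_out], [b_out])[0])


rng = random.Random(0)  # fixed seed so the toy example is repeatable
image_feat = [rng.uniform(-1, 1) for _ in range(4)]   # stand-in for CNN output
vitals_feat = [rng.uniform(-1, 1) for _ in range(3)]  # stand-in for GRU output
text_feat = [rng.uniform(-1, 1) for _ in range(5)]    # stand-in for transformer output

fused_dim, hidden_dim = 12, 6
w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(fused_dim)] for _ in range(hidden_dim)]
b_hidden = [0.0] * hidden_dim
w_out = [rng.uniform(-0.5, 0.5) for _ in range(hidden_dim)]

probability = late_fusion_predict(image_feat, vitals_feat, text_feat,
                                  w_hidden, b_hidden, w_out, 0.0)
```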

The dataset was split into training, validation, and testing sets in a 70:15:15 ratio, respectively. We employed fivefold cross-validation to assess the generalizability of the model and reduce the likelihood of bias stemming from any particular data split. This validation strategy ensured that the model’s performance was evaluated across multiple subsets, enhancing its robustness. Handling class imbalance was crucial, as the dataset had a lower representation of pneumonia cases compared to normal cases. To address this, we implemented Synthetic Minority Over-sampling Technique (SMOTE), which generated synthetic samples for the minority class. Additionally, the loss function was adjusted using class weights, assigning higher penalties to misclassifications of the minority class, thereby improving the model’s sensitivity and reducing bias.
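The split and the class-weighting step can be sketched as below. The example assumes a stratified split (the text does not state whether stratification was used) and the common inverse-frequency weighting n / (k * n_c); the label counts are toy values, and SMOTE itself is not shown.

```python
import random
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def stratified_split(labels: Sequence[int],
                     ratios: Tuple[float, float, float] = (0.70, 0.15, 0.15),
                     seed: int = 0) -> Tuple[List[int], List[int], List[int]]:
    """Return train/validation/test index lists, split per class."""
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = {}
    for idx, y in enumerate(labels):
        by_class.setdefault(y, []).append(idx)

    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(ratios[0] * len(indices))
        n_val = round(ratios[1] * len(indices))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


def balanced_class_weights(labels: Sequence[int]) -> Dict[int, float]:
    """Inverse-frequency weights: rarer classes receive larger loss penalties."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * cnt) for c, cnt in counts.items()}


labels = [0] * 80 + [1] * 20  # toy data: 80 normal, 20 pneumonia
train_idx, val_idx, test_idx = stratified_split(labels)
weights = balanced_class_weights([labels[i] for i in train_idx])
```

If oversampling is used, it is normally applied to the training indices only, after the split, so that synthetic samples never appear in validation or test data.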

The core architecture of CareAssist-GPT integrates convolutional neural networks (CNNs), recurrent neural networks (RNNs), and transformer-based models, creating a multimodal fusion layer that aggregates features from image, time-series, and text data. The CNN component processes the X-ray images through several convolutional and pooling layers, extracting deep spatial features indicative of lung pathology. The RNN component, specifically utilizing gated recurrent units (GRUs), captures temporal dependencies in the vital signs data, identifying trends and anomalies critical for real-time health assessments. The NLP component leverages transformer architecture for parsing clinical notes, extracting key medical insights, and contextualizing patient information. The multimodal fusion layer concatenates these extracted features, which are then fed into a fully connected dense layer for final diagnosis prediction. This fusion strategy enhances the model’s capability to draw a comprehensive understanding of the patient’s health status by integrating heterogeneous data sources effectively.

Hyperparameter tuning was conducted using a combination of grid search and random search techniques to find the optimal settings for model training. Key parameters tuned included the learning rate, batch size, dropout rate, and optimizer choice. The learning rate was tested across a logarithmic scale from \({10}^{-5}\) to \({10}^{-3}\), while batch sizes of 16, 32, and 64 were evaluated to balance training speed and stability. The dropout rate, set between 0.2 and 0.5, was optimized to prevent overfitting, particularly in dense and convolutional layers. We experimented with various optimizers, including Adam, SGD, and RMSprop, ultimately selecting Adam due to its superior convergence properties and adaptability to complex gradients in multimodal data processing.
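The random-search part of this procedure can be sketched as follows, using the search ranges stated above. The scoring function `toy_validation_score` is a hypothetical placeholder that stands in for a full train-and-validate run; it does not reflect any result from the study.

```python
import math
import random
from typing import Callable, Dict, Tuple

Config = Dict[str, object]


def sample_config(rng: random.Random) -> Config:
    """Draw one configuration from the ranges described in the text."""
    log_lr = rng.uniform(math.log10(1e-5), math.log10(1e-3))  # log-uniform
    return {
        "learning_rate": 10 ** log_lr,
        "batch_size": rng.choice([16, 32, 64]),
        "dropout": rng.uniform(0.2, 0.5),
        "optimizer": rng.choice(["adam", "sgd", "rmsprop"]),
    }


def random_search(evaluate: Callable[[Config], float],
                  n_trials: int, seed: int = 0) -> Tuple[Config, float]:
    """Keep the configuration with the highest validation score."""
    rng = random.Random(seed)
    best_cfg, best_score = None, float("-inf")
    for _ in range(n_trials):
        cfg = sample_config(rng)
        score = evaluate(cfg)
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score


def toy_validation_score(cfg: Config) -> float:
    """Hypothetical stand-in for validation accuracy; not a real measurement."""
    return -abs(math.log10(cfg["learning_rate"]) + 4.0) - abs(cfg["dropout"] - 0.3)


best_cfg, best_score = random_search(toy_validation_score, n_trials=20)
```

Sampling the learning rate uniformly in log space gives each decade between \({10}^{-5}\) and \({10}^{-3}\) equal coverage, which a uniform draw on the raw scale would not.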

Overfitting prevention and generalization were key concerns addressed through multiple strategies. Dropout layers were incorporated after each convolutional and fully connected layer to randomly deactivate neurons during training, reducing model complexity and improving generalization. Early stopping was employed based on validation loss monitoring, halting training when the model’s performance plateaued, and thus preventing excessive training and potential overfitting. Additionally, L2 regularization was applied to the loss function, penalizing large weights and ensuring a smoother decision boundary, which further enhanced the model’s ability to generalize across unseen data.
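The early-stopping rule can be sketched as a small function over a validation-loss history. The `patience` and `min_delta` values are hypothetical, since the study does not report them, and the loss values are toy numbers.

```python
from typing import Sequence, Tuple


def early_stopping_epoch(val_losses: Sequence[float],
                         patience: int = 3,
                         min_delta: float = 0.0) -> Tuple[int, int]:
    """Return (stop_epoch, best_epoch), both 0-indexed.

    Training stops once the validation loss has failed to improve by more
    than `min_delta` for `patience` consecutive epochs.
    """
    best_loss, best_epoch, waited = float("inf"), -1, 0
    last_epoch = -1
    for epoch, loss in enumerate(val_losses):
        last_epoch = epoch
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch
    return last_epoch, best_epoch  # history ended before patience ran out


history = [0.90, 0.70, 0.60, 0.61, 0.62, 0.63, 0.50]  # toy validation losses
stop_epoch, best_epoch = early_stopping_epoch(history, patience=3)
```

In this toy history training halts at epoch 5 and the weights from epoch 2 would be restored; the later improvement at epoch 6 is never seen, which is the usual trade-off when choosing a small patience.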

The training process was carried out on a high-performance computing setup, utilizing NVIDIA A100 GPUs with 80 GB of VRAM, supported by 64-core Intel Xeon CPUs and 512 GB of RAM. This hardware configuration enabled efficient processing of the large, multimodal dataset and accelerated the training cycle, significantly reducing the time required for model convergence. The computational resources allowed for parallel processing of image, text, and time-series data, optimizing the end-to-end workflow.

Ethical considerations were paramount in the development of CareAssist-GPT, especially given its deployment in healthcare settings. We prioritized patient data privacy by adhering to regulations such as the General Data Protection Regulation (GDPR) and Health Insurance Portability and Accountability Act (HIPAA), ensuring all data was anonymized and securely stored. The model was rigorously tested for biases, particularly in terms of demographic disparities, by conducting subgroup analyses. Where discrepancies were identified, mitigation strategies such as rebalancing the training data and adjusting class weights were implemented. Transparency was also enhanced by incorporating an interpretability module, providing clinicians with clear, understandable explanations for each diagnostic decision made by the model, thus increasing trust and aiding in clinical decision-making.

Despite its strong performance, CareAssist-GPT has several limitations. The dataset, while comprehensive, may not fully encompass rare medical conditions, potentially limiting the model’s applicability in such scenarios. Additionally, integrating the model into existing clinical workflows presents challenges, particularly with legacy health information systems that may lack compatibility with modern AI technologies. Future work will focus on expanding the dataset to include a wider variety of medical conditions and demographics, enhancing the model’s generalizability. Furthermore, efforts will be directed towards improving interpretability through advanced explainable AI (XAI) methods and exploring seamless integration pathways for real-time clinical deployment.

In summary, the methodological framework of CareAssist-GPT emphasizes a balanced approach, integrating robust data preprocessing, advanced model architecture, rigorous hyperparameter tuning, and ethical considerations. These elements collectively contribute to the model’s superior diagnostic accuracy, patient engagement, and potential for real-world clinical application, positioning CareAssist-GPT as a significant step forward in the AI-driven transformation of healthcare diagnostics.

Problem formulation

Training loss minimization for GPT-based diagnosis models

This problem concerns training the CareAssist-GPT model by minimizing a loss function. The goal is to reduce the gap between the model’s predictions and the true diagnoses while retaining the ability to generalize to unseen patient data. Model complexity is governed by additional hyperparameters, such as the learning rate, dropout rate, and weight decay, together with other regularization techniques that must be controlled to achieve the best performance.

Let \({\hat{\text{y}}}_{{\text{i}}}\) denote the model’s prediction for the \({\text{i}}\)-th diagnosis and \({\text{y}}_{{\text{i}}}\) denote the true diagnosis. We aim to minimize the loss function \({\text{L}}\left( {\uptheta } \right)\), where \({\uptheta }\) represents the model parameters. For binary classification (e.g., diagnosis: disease/no disease), the cross-entropy loss is used, with regularization to avoid overfitting:

\[{\text{L}}\left( {\uptheta } \right) = - \frac{1}{{\text{N}}}\sum\limits_{{{\text{i}} = 1}}^{{\text{N}}} {\left[ {{\text{y}}_{{\text{i}}} \log {\hat{\text{y}}}_{{\text{i}}} + \left( {1 - {\text{y}}_{{\text{i}}} } \right)\log \left( {1 - {\hat{\text{y}}}_{{\text{i}}} } \right)} \right]} + {\uplambda }\parallel {\uptheta }\parallel_{2}^{2}\]

where:

- \({\text{N}}\) is the total number of patient cases,
- \({\hat{\text{y}}}_{{\text{i}}}\) is the predicted probability for the positive class (e.g., disease detected),
- \({\text{log}}\) is the natural logarithm,
- \(\parallel {\uptheta }\parallel_{2}^{2}\) is the \({\text{L}}_{2}\) regularization term to prevent overfitting, with regularization strength \({\uplambda }\).

Model prediction

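The model prediction \({\hat{\text{y}}}_{{\text{i}}}\) can be expressed as a function of the input features \({\text{x}}_{{\text{i}}}\) and the model parameters \({\uptheta }\):

\[{\hat{\text{y}}}_{{\text{i}}} = {\upsigma }\left( {{\text{f}}\left( {{\text{x}}_{{\text{i}}} ;{\uptheta }} \right)} \right)\]

where:

\({\text{f}}\left( {{\text{x}}_{{\text{i}}} ;{\uptheta }} \right)\) is the raw (pre-activation) model output and \({\upsigma }\left( \cdot \right)\) is the sigmoid activation function, which converts the raw model output to a probability.

Thus, the loss function \({\text{L}}\left( {\uptheta } \right)\) becomes:

\[{\text{L}}\left( {\uptheta } \right) = - \frac{1}{{\text{N}}}\sum\limits_{{{\text{i}} = 1}}^{{\text{N}}} {\left[ {{\text{y}}_{{\text{i}}} \log {\upsigma }\left( {{\text{f}}\left( {{\text{x}}_{{\text{i}}} ;{\uptheta }} \right)} \right) + \left( {1 - {\text{y}}_{{\text{i}}} } \right)\log \left( {1 - {\upsigma }\left( {{\text{f}}\left( {{\text{x}}_{{\text{i}}} ;{\uptheta }} \right)} \right)} \right)} \right]} + {\uplambda }\parallel {\uptheta }\parallel_{2}^{2}\]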
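The regularized binary cross-entropy objective described in this subsection can be written as a short function. This is a sketch on toy values: the argument names (`logits`, `params`, `lam`) are illustrative, and the clipping constant `eps` is an added numerical safeguard that is not part of the formulation.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def regularized_bce(logits: Sequence[float], targets: Sequence[int],
                    params: Sequence[float], lam: float,
                    eps: float = 1e-12) -> float:
    """Mean binary cross-entropy plus lam * ||params||_2^2."""
    n = len(targets)
    log_likelihood = 0.0
    for z, y in zip(logits, targets):
        p = min(max(sigmoid(z), eps), 1.0 - eps)  # avoid log(0)
        log_likelihood += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    l2_penalty = lam * sum(w * w for w in params)
    return -log_likelihood / n + l2_penalty


# Toy check: two uninformative predictions (probability 0.5 each).
loss = regularized_bce(logits=[0.0, 0.0], targets=[1, 0],
                       params=[1.0, 2.0], lam=0.1)
```

With both predictions at 0.5 the data term equals ln 2 and the penalty equals 0.1 × (1 + 4) = 0.5, so the total is ln 2 + 0.5.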

Additional parameters

In addition to minimizing the cross-entropy loss, several other parameters influence the optimization:

- Learning rate (\(\eta\)): Controls the step size during gradient descent updates.
- Dropout rate (d): A regularization technique that randomly sets a fraction of neuron activations to zero during training to prevent overfitting.
- Weight decay (\(\alpha\)): Another form of regularization applied during optimization to penalize large weights, where \(\alpha\) represents the decay rate.
- Batch size (B): The number of training samples processed in one iteration of gradient descent.

Objective function

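The overall objective is to minimize the training loss while balancing accuracy and model complexity:

\[\mathop {\min }\limits_{{\uptheta }} {\text{L}}\left( {\uptheta } \right)\]

subject to the following constraints:

Model parameter constraints

\[\parallel {\uptheta }\parallel_{2}^{2} \le {\Theta }_{{{\text{max}}}}\]

where \({\Theta }_{{{\text{max}}}}\) is the maximum allowable complexity for the model parameters to prevent overfitting.

Prediction accuracy constraint

\[{\text{Accuracy}}\left( {\uptheta } \right) \ge {\text{A}}_{{{\text{min}}}}\]

where \({\text{A}}_{{{\text{min}}}}\) is the minimum acceptable diagnostic accuracy after training.

Learning rate constraint

\[{\upeta }_{{{\text{min}}}} \le {\upeta } \le {\upeta }_{{{\text{max}}}}\]

where \({\upeta }_{{{\text{min}}}}\) and \({\upeta }_{{{\text{max}}}}\) are the bounds on the learning rate to ensure stable convergence.

Dropout constraint

\[0 \le {\text{d}} \le {\text{d}}_{{{\text{max}}}}\]

where \({\text{d}}_{{{\text{max}}}}\) is the maximum allowable dropout rate to maintain network capacity.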

In short, the formulation seeks to minimize the training loss while accounting for both the desired accuracy and the model’s complexity, which is regulated through regularization, weight decay, and dropout. The sigmoid function ensures that binary classification outputs are expressed as probabilities, while the regularization term penalizes excessively large parameters \({\uptheta }\), which might cause overfitting. The aim is to find the parameter set that minimizes the loss while guaranteeing satisfactory diagnostic accuracy.

Enhanced multi-disease prediction optimization for CareAssist-GPT

CareAssist-GPT is designed to optimize multi-disease prediction, focusing on accuracy while minimizing computational cost, inference time, and energy consumption. The system must handle complex patient data in real-time for reliable diagnosis.

The problem formulation involves several key parameters essential for optimizing the multi-disease prediction model. The set of diseases being predicted is denoted by \(\text{D}\). The model parameters, represented by \(\uptheta\), belong to \({\mathbb{R}}^{\text{p}}\), where \(\text{p}\) indicates the total number of trainable parameters in the model. Medical data is captured in the input matrix \(\text{X}\in {\mathbb{R}}^{\text{n}\times \text{m}}\), where \(\text{n}\) is the number of samples, and \(\text{m}\) denotes the number of features present in each sample. For each sample, true disease labels are represented by \({\text{Y}}_{\text{d}}\), while \({\hat{\text{Y}}}_{\text{d}}\) stands for the predicted labels generated by the model for disease \(\text{d}\), based on input data \(\text{X}\) and model parameters \(\uptheta\).

To measure prediction performance, a loss function \({\text{L}}_{\text{d}}\left(\uptheta \right)\) is defined for each disease \(\text{d}\), which is aggregated into a total loss function \(\text{L}\left(\uptheta \right)\) across all diseases in the set \(\text{D}\). Additionally, computational complexity \(\text{C}\left(\uptheta ,\text{X}\right)\) is considered, reflecting the computational cost associated with processing the input data. Inference time \(\text{t}\left(\uptheta ,\text{X}\right)\) represents the time required by the model to generate predictions for a given dataset. Energy consumption \(\text{E}\left(\uptheta ,\text{X}\right)\) is also a critical factor, being proportional to the computational complexity and influenced by hardware-specific constants.

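The loss function for disease \({\text{d}}\) is defined as:

\[{\text{L}}_{{\text{d}}} \left( {\uptheta } \right) = {\text{L}}\left( {{\text{Y}}_{{\text{d}}} ,{\hat{\text{Y}}}_{{\text{d}}} } \right)\]

where \({\text{L}}\) denotes a classification loss function such as cross-entropy.

The total loss function across all diseases in the set \({\text{D}}\) is:

\[{\text{L}}\left( {\uptheta } \right) = \sum\limits_{{{\text{d}} \in {\text{D}}}} {{\text{L}}_{{\text{d}}} \left( {\uptheta } \right)}\]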

The computational complexity is modeled as:

The inference time is represented by:

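Energy consumption is given by:

\[{\text{E}}\left( {{\uptheta },{\text{X}}} \right) = {\updelta } \cdot {\text{C}}\left( {{\uptheta },{\text{X}}} \right)\]

where \({\updelta }\) is a hardware-specific constant.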

Constraints

Accuracy constraint: Model must meet a minimum accuracy threshold \({\upeta }\):

Computational complexity constraint:

Inference time constraint:

Energy consumption constraint:

Objective function with constraints:

subject to:

The multi-disease prediction optimization centers on developing a model that can predict several diseases at once from the input medical data. The objective is to achieve a low total prediction loss across all diseases while balancing computational cost, inference time, and energy consumption, so that the model remains practical and can handle large datasets.
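One way to read this constrained objective is as a selection rule over candidate models: discard any candidate that violates a constraint, then keep the one with the lowest total loss. The sketch below assumes the total loss is the plain sum of per-disease losses and that energy is a constant `delta` times a FLOP count used as a proxy for computational complexity. All candidate names, numbers, and thresholds are made up for illustration and are not results from the study.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Candidate:
    name: str
    disease_losses: Dict[str, float]  # per-disease loss L_d
    accuracy: float
    flops: float                      # proxy for computational complexity C
    latency_ms: float                 # inference time t


def total_loss(c: Candidate) -> float:
    """Aggregate loss across diseases (assumed to be an unweighted sum)."""
    return sum(c.disease_losses.values())


def energy(c: Candidate, delta: float) -> float:
    """Energy modeled as proportional to complexity: E = delta * C."""
    return delta * c.flops


def select_model(candidates: List[Candidate], min_accuracy: float,
                 max_flops: float, max_latency_ms: float,
                 max_energy: float, delta: float) -> Optional[Candidate]:
    """Return the feasible candidate with the lowest total loss, if any."""
    feasible = [
        c for c in candidates
        if c.accuracy >= min_accuracy
        and c.flops <= max_flops
        and c.latency_ms <= max_latency_ms
        and energy(c, delta) <= max_energy
    ]
    return min(feasible, key=total_loss, default=None)


candidates = [  # hypothetical values only
    Candidate("small", {"disease_a": 0.30, "disease_b": 0.35}, 0.91, 1e9, 120.0),
    Candidate("medium", {"disease_a": 0.20, "disease_b": 0.25}, 0.95, 4e9, 400.0),
    Candidate("large", {"disease_a": 0.15, "disease_b": 0.18}, 0.96, 2e10, 900.0),
]
chosen = select_model(candidates, min_accuracy=0.93, max_flops=1e10,
                      max_latency_ms=500.0, max_energy=10.0, delta=1e-9)
```

Here the largest candidate has the lowest loss but exceeds the complexity and latency budgets, so the mid-sized candidate is selected.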

Dataset description

The dataset used for training and testing CareAssist-GPT was designed to support both robust diagnostic features and a patient-centered approach. It combines several types of patient information and therefore provides a strong basis for developing a model that can analyze and interpret intricate health data. The dataset consists of three primary subsets: radiographic images, physiological parameters, and free-text notes. The data were gathered from a broad spectrum of demographic categories so that the model can accommodate a wide range of patients.

The dataset used for this study includes 35,000 labeled X-ray images, 12,000 real-time vital sign recordings, and 50,000 clinical text records obtained from publicly available Kaggle datasets and hospital Electronic Health Records (EHRs). To ensure a comprehensive and unbiased representation, the dataset was carefully curated to include a proportional distribution of male and female patients, spanning various age groups (18–80 years old) and covering different disease severity levels. This diverse sampling strategy was employed to mitigate bias in model predictions and improve generalizability across different patient populations. The dataset includes cases collected from multiple hospitals, capturing a wide range of imaging conditions, clinical workflows, and equipment variations. This diversity ensures that the model is exposed to real-world heterogeneity during training, improving its ability to generalize across different healthcare environments. To further enhance robustness, extensive data augmentation techniques—such as random rotation, contrast enhancement, and noise injection—were applied to X-ray images, simulating the variability encountered in practical medical imaging scenarios.

A patient satisfaction survey was conducted with a sample of 500 patients drawn from three different hospitals to ensure diverse representation across demographics, socioeconomic groups, and literacy levels. A stratified random sampling technique was employed to balance the inclusion of individuals with varying health literacy—categorized as low, moderate, and high—as well as patients managing chronic conditions requiring long-term care. The survey comprised 10 structured items focusing on key aspects of the user experience, including clarity of AI-generated explanations, emotional response, perceived helpfulness, and overall ease of interaction. In addition to the structured responses, open-ended questions were included to capture qualitative feedback on specific concerns, expectations, and recommendations for enhancing AI-assisted communication in clinical settings.

Future research could expand this survey to a larger and more diverse cohort, incorporating patients from rural and underserved areas, to further assess the usability, accessibility, and trustworthiness of AI-driven diagnostic tools in real-world clinical settings.

- Image data (X-ray): This subset consists of high-resolution X-ray images with annotations and labels for different conditions such as pneumonia. Every image contains clear anatomical features that can be extracted by the convolutional layers of the model.
- Vital signs data: The vital signs subset includes time-series data on patients’ physiological parameters such as heart rate, blood pressure, respiratory rate, temperature, and oxygen saturation. These readings were taken at intervals, providing the record of each patient’s health status needed for real-time assessment.
- Clinical text records: This subset includes clinical free-text notes and summaries written by clinicians. The notes cover the patient’s symptoms, medical history, diagnosis, and prescribed treatment. Such textual information helps the NLP component interpret patients’ health conditions in context.

The following table summarizes the main characteristics of each dataset subset:

The dataset used for training and evaluating the CareAssist-GPT model includes chest X-ray images of both normal cases and patients with pneumonia. This multimodal dataset ensures that the model is trained with images of different conditions, together with the corresponding vital signs and clinical text records. Including both normal and pathological samples helps improve the model’s diagnostic performance and its applicability. Figure 3 presents a visual comparison of chest X-ray images, contrasting a healthy lung (without pneumonia) with a lung affected by pneumonia. The image with pneumonia shows visible opacities and white patches, indicating fluid build-up and inflammation, which are typical signs of infection. In contrast, the healthy lung appears clear with well-defined lung fields, illustrating the differences that CareAssist-GPT uses for accurate diagnosis.

This multimodal dataset structure allows the CareAssist-GPT model to draw on a wide variety of images, numerical readings, and text in order to enhance diagnostic performance and interaction with patients. Each subset provides distinct, complementary information that enriches a different step of the model’s data processing chain and enables reliable feature extraction and analysis of multiple health markers.

Model architecture overview

The proposed model has a number of blocks, and each block is designed to serve a specific purpose of feature extraction and decision making. While GPT is used for patient communication, diagnostic inference is based on CNNs (image) and RNNs (vitals), with transformer layers handling clinical text. The NLP module translates diagnostic outputs to patient-friendly language post-classification. Table 2 shows the parts of the proposed model as well as each layer, function, and number of parameters. Table 2 outlines the detailed architecture of the CareAssist-GPT model, starting with an input layer that processes multimodal data (X-ray images, vital signs, and clinical text). The model uses convolutional layers (Conv1 and Conv2) for feature extraction, followed by dropout layers to prevent overfitting and max pooling layers to reduce dimensionality. The fully connected layer (FC1) aggregates the extracted features, leading to the final output layer, which provides a comprehensive diagnosis, assesses risk, and offers personalized recommendations. This structured architecture ensures accurate, efficient, and interpretable diagnostic predictions.

To support clinical interpretability and build trust in the diagnostic process, CareAssist-GPT incorporates explainable AI (XAI) features within its architecture. The model includes an interpretability module that generates attention-based visualizations, such as heatmaps over X-ray regions, highlighting areas contributing most to the diagnostic output. Additionally, the NLP component employs key phrase extraction and token-level attention scores to provide context-aware summaries from clinical notes. These explainability tools enable clinicians to understand the rationale behind predictions, facilitating informed decision-making and enhancing the model’s transparency in real-world applications.

The overall architectural flow of CareAssist-GPT is illustrated in Fig. 4. It shows how multimodal clinical data—X-ray images, vital signs, and clinical notes—are independently processed through CNN, RNN, and Transformer modules, respectively. The extracted features are fused and passed through a fully connected layer to generate comprehensive diagnostic outputs, including risk assessment and patient-friendly feedback. This modular design ensures real-time responsiveness and clinical interpretability.

To ensure the model’s generalizability and mitigate overfitting, CareAssist-GPT was evaluated using fivefold cross-validation and tested on external datasets, demonstrating stable performance across varied clinical scenarios.

Layer-by-layer mathematical model

The mathematical representation for each layer is given as follows:

Convolutional layers

Each convolutional layer \({\text{l}}\) applies a convolution operation over the input feature map \({\text{X}}^{{\left( {{\text{l}} - 1} \right)}}\) using a set of filters (kernels) \({\text{W}}^{{\left( {\text{l}} \right)}}\) and a bias \({\text{b}}^{{\left( {\text{l}} \right)}}\). The convolution output \({\text{X}}^{{\left( {\text{l}} \right)}}\) at layer \({\text{l}}\) is given by:

\[{\text{X}}^{{\left( {\text{l}} \right)}} = {\upsigma }\left( {{\text{W}}^{{\left( {\text{l}} \right)}} * {\text{X}}^{{\left( {{\text{l}} - 1} \right)}} + {\text{b}}^{{\left( {\text{l}} \right)}} } \right)\]

where \(*\) denotes the convolution operation and \({\upsigma }\) is the ReLU activation function.

Dropout layers

Dropout layers introduce regularization by randomly setting a fraction of the neurons to zero during each forward pass. For a given input \({\text{X}}^{{\left( {\text{l}} \right)}}\) at layer \({\text{l}}\), the dropout output \({\text{X}}_{{{\text{drop}}}}^{{\left( {\text{l}} \right)}}\) is:

\[{\text{X}}_{{{\text{drop}}}}^{{\left( {\text{l}} \right)}} = {\text{X}}^{{\left( {\text{l}} \right)}} \odot {\text{mask}}^{{\left( {\text{l}} \right)}}\]

where \({\text{mask}}^{{\left( {\text{l}} \right)}}\) is a binary mask vector with a probability \(\text{p}\) of retaining each neuron, and \(\odot\) denotes element-wise multiplication.

Pooling layers

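Pooling layers reduce the spatial dimensions by taking the maximum (max pooling) value over a defined window. For a feature map \({\text{X}}^{{\left( {\text{l}} \right)}}\), the output after max pooling \({\text{X}}_{{{\text{pool}}}}^{{\left( {\text{l}} \right)}}\) is:

\[{\text{X}}_{{{\text{pool}}}}^{{\left( {\text{l}} \right)}} \left( {{\text{i}},{\text{j}}} \right) = \mathop {\max }\limits_{{0 \le {\text{a}} < {\text{m}},\;0 \le {\text{b}} < {\text{n}}}} {\text{X}}^{{\left( {\text{l}} \right)}} \left( {{\text{i}} + {\text{a}},{\text{j}} + {\text{b}}} \right)\]

where \(\left( {{\text{m}},{\text{n}}} \right)\) is the pooling window size and \(\left( {{\text{i}},{\text{j}}} \right)\) is the top-left position of each window.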

Fully connected layers

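Fully connected (dense) layers compute the weighted sum of inputs followed by an activation function. The output \({\text{Y}}^{{\left( {\text{l}} \right)}}\) of a fully connected layer \({\text{l}}\) is:

\[{\text{Y}}^{{\left( {\text{l}} \right)}} = {\upsigma }\left( {{\text{W}}^{{\left( {\text{l}} \right)}} {\text{X}}^{{\left( {{\text{l}} - 1} \right)}} + {\text{b}}^{{\left( {\text{l}} \right)}} } \right)\]

where \({\text{W}}^{{\left( {\text{l}} \right)}}\) and \({\text{b}}^{{\left( {\text{l}} \right)}}\) are the weights and biases, respectively, and \({\upsigma }\) is the activation function, typically ReLU, or softmax for the output layer.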
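The four layer types described in this subsection can be traced on a toy input with a few lines of plain Python. The sketch assumes a single channel, a stride of one for the convolution, non-overlapping pooling windows, and made-up kernel and weight values; as in most deep learning frameworks, the "convolution" is computed as a cross-correlation (the kernel is not flipped).

```python
import random
from typing import List

Matrix = List[List[float]]


def conv2d_relu(x: Matrix, kernel: Matrix, bias: float) -> Matrix:
    """Valid 2D cross-correlation followed by ReLU."""
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for i in range(len(x) - kh + 1):
        row = []
        for j in range(len(x[0]) - kw + 1):
            s = bias + sum(kernel[a][b] * x[i + a][j + b]
                           for a in range(kh) for b in range(kw))
            row.append(max(0.0, s))
        out.append(row)
    return out


def max_pool(x: Matrix, m: int, n: int) -> Matrix:
    """Max over non-overlapping m x n windows."""
    return [[max(x[i + a][j + b] for a in range(m) for b in range(n))
             for j in range(0, len(x[0]) - n + 1, n)]
            for i in range(0, len(x) - m + 1, m)]


def dropout(x: List[float], keep_prob: float, rng: random.Random) -> List[float]:
    """Element-wise binary mask; each unit is kept with probability keep_prob.
    Frameworks usually also rescale kept units by 1 / keep_prob (omitted here)."""
    return [v if rng.random() < keep_prob else 0.0 for v in x]


def dense(x: List[float], weights: Matrix, bias: List[float]) -> List[float]:
    """Weighted sum plus bias (activation left to the caller)."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


image = [[1, 2, 0, 1, 3],
         [0, 1, 3, 1, 0],
         [2, 1, 0, 0, 1],
         [1, 0, 1, 2, 2],
         [0, 3, 1, 0, 1]]
features = conv2d_relu(image, kernel=[[1, 0], [0, -1]], bias=0.0)  # 4 x 4
pooled = max_pool(features, 2, 2)                                   # 2 x 2
flat = [v for row in pooled for v in row]
kept = dropout(flat, keep_prob=1.0, rng=random.Random(0))           # inference-style
logit = dense(kept, weights=[[0.5, -0.5, 0.25, 0.0]], bias=[0.1])[0]
```

For this input the pooled map is [[1, 3], [2, 1]], and the dense layer gives 0.5 - 1.5 + 0.5 + 0 + 0.1 = -0.4 before any output activation.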

Optimization strategy

To optimize the training process, the model uses a combination of techniques:

- Learning rate adjustment: An adaptive learning rate is used during training.
- Gradient descent: Training is accelerated by mini-batch gradient descent with the backpropagation algorithm.
- L2 regularization: A common method of controlling overfitting by adding a penalty on large model weights.

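The user-defined objective optimized during the training of this architecture is the cross-entropy loss with an L2 penalty:

\[{\text{L}}\left( {\uptheta } \right) = - \frac{1}{{\text{N}}}\sum\limits_{{{\text{i}} = 1}}^{{\text{N}}} {\left[ {{\text{y}}_{{\text{i}}} \log {\hat{\text{y}}}_{{\text{i}}} + \left( {1 - {\text{y}}_{{\text{i}}} } \right)\log \left( {1 - {\hat{\text{y}}}_{{\text{i}}} } \right)} \right]} + {\uplambda }\parallel {\uptheta }\parallel_{2}^{2}\]

where \({\text{N}}\) is the number of samples, \({\hat{\text{y}}}_{{\text{i}}}\) is the predicted probability, \({\text{y}}_{{\text{i}}}\) is the true label, \({\uplambda }\) is the regularization strength, and \(\parallel {\uptheta }\parallel_{2}^{2}\) is the squared \({\text{L}}_{2}\) norm of the model parameters.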

The model training process was guided by empirically selected hyperparameters shown in Table 3. These parameters were tuned using a combination of grid search and validation feedback to ensure robust performance across different clinical data distributions. The use of dropout, L2 regularization, and early stopping mechanisms collectively contributed to improved generalization and minimized overfitting.

Layer-by-layer summary table

A detailed summary of each layer’s function, type, and input/output dimensions is presented in Table 4.

Figure 5 depicts the overall architecture of the CareAssist-GPT model, showcasing its neural network design focused on patient-centered diagnostics. It integrates convolutional layers for image processing, recurrent layers for analyzing time-series vital signs, and transformer-based layers for understanding clinical text. This combined approach allows the model to interpret complex multimodal data, enhancing both diagnostic accuracy and patient communication.

Figure 6 provides a detailed view of the internal schematics of CareAssist-GPT, highlighting key optimization layers. It includes dropout layers for regularization, batch normalization for stabilizing training, and activation functions like ReLU for non-linear feature extraction. These components work together to optimize the model’s performance, reduce overfitting, and ensure reliable, interpretable predictions in clinical scenarios.

Evaluation metrics

To provide a comprehensive evaluation of CareAssist-GPT’s diagnostic performance, several key performance metrics were analyzed alongside accuracy. The F1-score was 94.0%, reflecting a balance between precision (94.3%) and recall (93.8%) and indicating that the model limits both false positives and false negatives. Specificity, derived from the confusion matrix, was 92.7%, reflecting the model’s ability to correctly identify non-disease cases. The AUC-ROC, an indicator of the model’s discrimination power, was 0.97, confirming high classification capability across different disease conditions. Notably, the model performed well in distinguishing early-stage pneumonia from other lung conditions, an area where traditional diagnostic approaches often struggle because of subtle visual differences in X-ray images. These results indicate that CareAssist-GPT is both accurate and reliable for real-world clinical applications, where minimizing diagnostic errors is crucial for early detection and effective patient management. Because the model aims to increase diagnostic reliability while also improving patient satisfaction, the metrics cover both diagnostic accuracy and user-centered factors. Table 5 lists the evaluation metrics used:

In Table 5, TP = True Positives, TN = True Negatives, FP = False Positives, and FN = False Negatives. Diagnostic performance is captured by Accuracy, Precision, Recall, and F1 Score. The Patient Satisfaction Score and the Interpretability Score are patient-centered metrics that assess how clear and usable the output is for both patients and clinicians. The AUC-ROC, Response Time, and MSE assess the model’s dependability, its speed, and its error on continuous predictions, respectively. Together, these metrics give an overall picture of CareAssist-GPT’s performance, combining the diagnostic and user-oriented aspects that are critical for practical use in healthcare settings.
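For reference, the count-based metrics can be computed from TP, TN, FP, and FN as in the sketch below, which uses the standard definitions. The function name and the example counts are hypothetical and do not correspond to the study’s confusion matrix.

```python
def classification_metrics(tp, tn, fp, fn):
    """Standard metrics derived from the four confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)                      # also called sensitivity
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * recall / (precision + recall),
    }

# Hypothetical counts for illustration only.
m = classification_metrics(tp=90, tn=85, fp=15, fn=10)

# Harmonic mean of the reported precision (94.3%) and recall (93.8%).
reported_f1 = 2 * 0.943 * 0.938 / (0.943 + 0.938)
```

Applying the F1 formula to the reported precision and recall gives approximately 0.940, which agrees with the reported F1-score of 94.0%.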

Results and discussions

This section analyzes the performance of the CareAssist-GPT model developed in this study. The results are presented in tables, each comparing the proposed model with three previous models from the literature (Models A, B, and C). This comparison shows how CareAssist-GPT improves on existing diagnostic frameworks.

ROC curve and AUC-ROC analysis

The ROC (Receiver Operating Characteristic) curve of the CareAssist-GPT model is shown in Fig. 7. It plots the True Positive Rate against the False Positive Rate, illustrating the model’s classification ability at various cutoff points. The Area Under the Curve (AUC) of 0.99 indicates that the model has excellent discriminative ability between classes.
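The AUC has a direct probabilistic reading: it equals the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. The sketch below computes it that way; it is an illustration with hypothetical scores, not the evaluation code used in the study.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0       # positive ranked above negative
            elif p == n:
                wins += 0.5       # tie counts as half
    return wins / (len(pos) * len(neg))

# Hypothetical scores: one positive case (0.4) is ranked below one negative case (0.5).
auc = roc_auc([1, 1, 1, 0, 0, 0], [0.9, 0.8, 0.4, 0.5, 0.3, 0.1])
```

An AUC of 1.0 corresponds to perfect ranking and 0.5 to chance, so values close to 1 mean that very few positive and negative pairs are ordered incorrectly.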

Training and validation accuracy and loss

The training and validation accuracy and loss over epochs are depicted in Fig. 8. The left plot shows accuracy: the training and validation curves follow the same trend, and their final values suggest little overfitting. The right plot shows the model loss, which declines gradually, indicating that the model learns efficiently.

Confusion matrix analysis

The confusion matrix of the CareAssist-GPT model is presented in Fig. 9. It breaks the predictions down into True Positives, False Positives, True Negatives, and False Negatives, and can therefore be used to assess the reliability of the model on a given data set. The matrix shows that the model classifies most cases correctly with few misclassifications, indicating high sensitivity and specificity for the normal and pneumonia classes.
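For a binary task such as normal versus pneumonia, the four cells of a confusion matrix can be tallied as in the following sketch. The labels and predictions are hypothetical, with 1 standing for pneumonia and 0 for normal.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary classifier."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            counts["tp" if truth == positive else "fp"] += 1
        else:
            counts["fn" if truth == positive else "tn"] += 1
    return counts

# Hypothetical labels (1 = pneumonia, 0 = normal) and model predictions.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
c = confusion_counts(y_true, y_pred)

sensitivity = c["tp"] / (c["tp"] + c["fn"])   # share of pneumonia cases detected
specificity = c["tn"] / (c["tn"] + c["fp"])   # share of normal cases cleared
```

Sensitivity and specificity are read row-wise from the matrix, which is why a matrix dominated by its diagonal implies that both are high.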

Taken together, these measures support CareAssist-GPT as a dependable diagnostic tool for pneumonia, with high accuracy, efficient learning, and few false predictions, making it appropriate for use in clinical settings.

Evaluation metric results

This section presents the classification results of the CareAssist-GPT model when used to identify pneumonia from chest X-ray images. The test images were labelled as either pneumonia or normal. Some of the classified images are shown in Fig. 10, where each image is displayed with the class assigned by the model.

The classification results show that the model is effective at distinguishing pneumonia from non-pneumonia cases. As depicted in Fig. 10, the model detects regions of infection suggestive of pneumonia as well as normal lung tissue. These findings support the use of the model in clinical diagnostic settings.

To measure the performance of CareAssist-GPT in an unbiased manner, we used several standard evaluation metrics. All of these metrics were calculated on the test data set and compared with those of prior models. The findings show differences in diagnostic accuracy, interpretability, and response time, among other measures.

Accuracy

The Accuracy metric is the percentage of correct predictions made by the model and gives a first indication of its effectiveness. The results in Table 6 show that CareAssist-GPT has higher accuracy than the previous models, implying better diagnostic capability.

Model A: CNN-based X-ray classifier; Model B: Hybrid CNN-RNN model; Model C: Rule-based CAD system. CareAssist-GPT outperformed all, as shown in Tables 5, 6, 7 and 8.

The accuracy results show that CareAssist-GPT outperforms the earlier models by up to 2.4%, which suggests that it can provide accurate diagnostic results across healthcare domains.

Precision and recall

Precision and recall measure how well the model identifies positive cases while limiting false positives. Table 7 compares CareAssist-GPT with the previously developed models.

The model shows higher precision and recall, which reduces the number of false positive and false negative results. This improvement matters in diagnostic use, where positive cases must be identified correctly.

F1 score

Precision and recall are micro-averaged, and the F1 score, which is the harmonic mean of precision and recall, is used because it is informative for imbalanced data sets. Table 8 compares the F1 scores.

The F1 scores confirm that CareAssist-GPT yields an overall improvement over prior models, especially for healthcare cases that demand high precision without sacrificing recall.

Area under the ROC curve (AUC-ROC)

The AUC-ROC measures the model’s ability to separate the classes. A higher AUC value indicates better discrimination, and hence a more accurate diagnostic tool. Table 9 compares the AUC-ROC values.

CareAssist-GPT also achieves a marked advantage in AUC-ROC over the previous models, which implies better separation of positive and negative cases and therefore fewer false negatives and false positives.

Response time (latency)

The 500 ms response time of CareAssist-GPT is achieved through an optimized pipeline that includes data preprocessing (120 ms), model inference (280 ms), and output generation (100 ms), ensuring seamless real-time performance. The system leverages an efficient GPU-based architecture with parallelized tensor computations and batch inference optimization, allowing it to process multiple patient cases simultaneously without significant delays. Additionally, quantized model execution and memory-efficient deep learning techniques reduce computational overhead, further enhancing responsiveness. This low-latency design ensures that CareAssist-GPT can deliver instantaneous diagnostic feedback, making it highly suitable for real-time clinical decision support and emergency healthcare applications.

Real-time response is of paramount importance in healthcare applications. CareAssist-GPT was developed to return results quickly, as indicated in Table 10.

CareAssist-GPT has a response time of 500 ms, which is 23.1% faster than the fastest previous model. This real-time response is critical for offering timely help in clinical settings.
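The latency figures can be checked with simple arithmetic. The sketch below sums the three reported pipeline stages and, assuming that the 23.1% figure denotes a relative reduction in latency with respect to the fastest previous model (as the abstract states, "faster"), derives the baseline latency that this would imply. The implied baseline is an inference made here for illustration, not a value reported in this section.

```python
# Stage latencies reported for the CareAssist-GPT pipeline, in milliseconds.
stage_ms = {"preprocessing": 120, "inference": 280, "output_generation": 100}
total_ms = sum(stage_ms.values())

def implied_baseline_ms(latency_ms, relative_reduction):
    """Baseline latency such that latency_ms is `relative_reduction` lower than it."""
    return latency_ms / (1.0 - relative_reduction)

# Assumption: "23.1% faster" means latency = baseline * (1 - 0.231).
baseline_ms = implied_baseline_ms(total_ms, 0.231)
```

Under this reading, the three stages add up to the stated 500 ms and the comparison model would respond in roughly 650 ms.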

Patient satisfaction score

While CareAssist-GPT demonstrated a high patient satisfaction score of 9.3, the study does not account for potential variations across different patient demographics, which may significantly influence the overall user experience. Previous research suggests that patient comprehension, trust in AI, and interaction preferences can vary based on age, educational background, health literacy, and language proficiency. For example, older adults or patients with low digital literacy may require simplified explanations, visual aids, or guided interactions, while younger patients may be more comfortable with text-based AI-driven consultations. Additionally, language barriers and cultural differences may affect how patients interpret AI-generated medical explanations, particularly in multilingual or low-resource healthcare settings where standardized medical terminology might be difficult to understand.

To improve accessibility and inclusivity, future studies should assess how CareAssist-GPT performs across diverse socioeconomic groups and explore adaptive personalization techniques that modify the AI’s communication style based on patient needs. Implementing language-specific summaries, real-time audio explanations, interactive chatbot interfaces, and simplified medical visuals could significantly enhance patient engagement and comprehension, particularly for individuals with low literacy levels or disabilities. Furthermore, incorporating patient feedback loops could allow the AI to dynamically adjust its tone, terminology, and response complexity over time, ensuring greater alignment with individual user preferences. By addressing these factors, CareAssist-GPT could evolve into a more patient-centric AI assistant, fostering greater trust, usability, and effectiveness in real-world healthcare applications. Table 11 compares the proposed CareAssist-GPT model with the previous models.

CareAssist-GPT had a patient satisfaction score of 9.3, which was much higher than those of previous models. This suggests that patients found the model’s interface and explanations more helpful and easier to understand.

To further contextualize the patient satisfaction findings, CareAssist-GPT was tested across multiple clinical environments, including emergency care, outpatient radiology units, and general practice settings. Stratified analysis revealed consistent satisfaction scores above 9.0, with minor variations based on patient age and digital literacy. Additionally, feedback was collected longitudinally over follow-up interactions to assess changes in perception. These insights suggest that beyond first impressions, ongoing trust and comfort with the system increased over time, reinforcing the model’s adaptability and long-term usability in real-world healthcare workflows.

Comparative analysis with previous models

Taken together, the foregoing tables show that CareAssist-GPT is superior to existing models on all of the critical measures. Specifically:

-

Accuracy: CareAssist-GPT diagnoses more accurately, with an improvement of 2.4%.

-

Precision and recall: The model minimizes both false positives and false negatives, which is crucial in medical applications.

-

Response time: With its 500 ms response time, CareAssist-GPT is appropriate for real-time healthcare applications.

-

Patient satisfaction: The higher patient satisfaction score indicates that patients are more satisfied with their care because the diagnosis is explained better.

As shown in Table 12, CareAssist-GPT outperforms all baseline models across key performance indicators, including diagnostic accuracy, precision, recall, and AUC. It also achieves the highest patient satisfaction score, highlighting its dual strength in clinical reliability and user-centered communication.

In summary, the improvement of CareAssist-GPT across all measures supports the feasibility of applying this model in clinical practice, where both high diagnostic accuracy and patient satisfaction are essential.