Abstract

For object detection in complex dark environments, existing methods generally suffer from low detection accuracy, false detections and missed detections. These problems are associated with missing key information and incomplete contextual information, which seriously degrade detection performance. This paper therefore proposes an object detection method for complex dark environments based on YOLO-AS. First, a Zero-DCES image enhancement module is designed to improve the quality of dark-environment images through adaptive contrast enhancement. Second, a YOLO-AS detection model is constructed that integrates ECA_ASPP and the SK attention mechanism. Dilated convolution expands the receptive field, and combining it with channel attention enables dynamic feature detection, which strengthens multiscale feature representation. Finally, the model is evaluated on the ExDark and LOL datasets and compared with current mainstream models such as YOLOv5s, YOLOv8n and YOLOv11. Experiments show that the proposed method achieves 78.39% mAP@50 on the ExDark dataset, 5.78% higher than the baseline model, with essentially unchanged detection speed, and that it significantly improves detection accuracy in complex dark environments.


Introduction

As one of the most active research fields in machine vision, object detection is widely used in robot inspection, facial recognition, road safety monitoring, intelligent navigation and other fields, and it has very broad application prospects1. Object detection algorithms have made significant progress. However, their potential for effective operation in complex dark environments remains to be fully explored. Images collected in complex dark environments usually suffer from low quality, detail loss and low contrast. These problems can lead to low detection accuracy, missed detections and false detections.

To solve these problems, researchers have proposed image enhancement methods. Xu2 alleviated information loss in dark images by embedding a physical illumination model into a deep network combined with a nonlocal feature extraction module. Hashmi3 designed the FeatEnHancer module, which uses a multihead attention mechanism to enhance multiscale feature representation and significantly improves object detection and semantic segmentation. LightingNet, proposed by Yang4, fuses a vision transformer (ViT) with a complementary learning network and achieves precise control of brightness, color and noise through collaborative global and local feature optimization. Kong5 combined the Zero-DCE image enhancement algorithm with an improved AFF-YOLO network, increasing vehicle detection accuracy by 4.9%, to 94.7%. Wang6 developed a UAV image enhancement algorithm based on Retinex theory, in which two collaborating modules address insufficient brightness and blurred details. Zhu7 proposed Ghillie, a multi-illumination estimation network that uses a light modulation network (LMN) and gradient-guided denoising (GDN) to correct uneven exposure under complex illumination. It also adds an AM-SSIM loss to make color restoration more robust.

Other researchers have offered insights from image denoising, domain transfer, supervised contrastive learning and adversarial methods. Lin8 proposed SCDet, a supervised contrastive detection framework that separates dark interference features through decoupled pretraining tasks and joint optimization. Schutera9 used domain transfer to translate nighttime images online into the daytime domain, extending the adaptability of the detector. Tian10 integrated the wavelet transform into a multistage dynamic convolutional network (MWDCNN) to balance denoising efficiency and accuracy. Wang11 improved the YOLOv8 architecture by introducing atrous spatial pyramid pooling and a global attention mechanism, increasing mAP on the ExDark dataset by 3.6%. Chen12 improved instance segmentation in the dark through adaptive downsampling and interference suppression. Sun13 used adversarial attacks to generate pseudo-labels for fine-tuning, which increased detection robustness.

Although object detection has advanced significantly, its performance in complex dark environments has not improved correspondingly. In such environments, detection accuracy remains low because the collected images are of poor quality and objects are difficult to identify. To address this problem, this paper proposes an object detection method based on YOLO-AS that aims to achieve high-precision detection under complex dark conditions. The rest of the article is organized as follows. Section “Related work” briefly reviews image enhancement methods, attention mechanisms and YOLO algorithms. Section “Methods” analyzes the structure of the YOLO-AS model and describes the composition and improvements of each module. Section “Experiment” tests the model on two datasets and uses ablation and comparative experiments to verify its effectiveness. Finally, Section “Conclusion” summarizes the article.

Related work

Image enhancement methods

At present, low-light image enhancement methods can be divided into two main types: traditional low-light enhancement methods and deep learning-based low-light enhancement methods. Traditional low-light enhancement methods enhance images by adjusting physical parameters such as brightness, grayscale, contrast, color, and noise. Classic approaches include AE14, CLAHE15, AHE16, DCP17, SSR18 and MSR19. Deep learning-based low-light enhancement methods integrate deep learning models for preprocessing, combining object detection frameworks and adaptive loss functions to optimize image parameters. Representative techniques include Zero-DCE20, KinD21, CPA-Enhancer22, Retina-Net23, and Spatial-Aware GAN24.

AHE and MSR are typical traditional low-light enhancement methods. AHE requires no training data, has a low computational cost and is widely applicable. It clearly enhances low-contrast areas, but it tends to amplify noise in dark areas and produce edge artifacts. MSR processes the image after separating it in HSV color space, which gives high color fidelity and clear detail. However, its parameter tuning is complex and relies on manual experience. Its generalization ability is also weak, and it is prone to overexposure under strong illumination. Retina-Net and Zero-DCE are typical deep learning-based low-light enhancement methods. Retina-Net can perform denoising, contrast enhancement and color correction simultaneously, with excellent overall results. However, it is computationally expensive, relies on GPU acceleration, is strongly data dependent and requires large amounts of labeled training data. Zero-DCE enables lightweight real-time processing and learns without paired or reference data, which gives it strong generalization ability. However, its detail recovery is prone to distortion.

Attention mechanism

An attention mechanism assigns dynamic weights so that the model focuses on key information and suppresses irrelevant parts, which improves performance. Global attention attends to all regions of the input and captures global context. Its advantage is access to global information. Its disadvantage is high computational complexity, which increases training and inference time. SE, ECA, CBAM and CA are typical global attention mechanisms. Local attention focuses only on subregions of the input. It reduces computation and can flexibly attend to different regions, but it captures global information poorly. Examples include Swin Transformer25, ELA26, MLCA and ECLA27. Other mechanisms include multihead attention and hierarchical attention. Multihead attention, as in Transformer23 and ViT28, uses multiple attention heads to learn different semantic aspects. Hierarchical attention, as in HRNet29 and AF-FPN30, applies attention at different scales to integrate multigranularity information.

Typical global attention mechanisms include ECA and CBAM. ECA uses a one-dimensional convolution to generate channel weights. It has very few parameters and is plug-and-play, but it lacks spatial information, which limits detection accuracy. CBAM applies attention along both the channel and spatial dimensions and focuses strongly on targets, but it uses many convolution layers and has many parameters. Typical local attention mechanisms include Swin Transformer and ELA. Swin Transformer is well suited to multiscale feature extraction, and its computational cost grows only linearly with image size. However, it is strongly data dependent and requires large amounts of data for pretraining. ELA has strong positional awareness and is more stable when trained with small batches, but it pays insufficient attention to global context.

YOLO algorithm

YOLO (You Only Look Once) is a leading single-stage object detection algorithm known for its speed and end-to-end design. The first three versions (YOLOv1–YOLOv3)31 cast detection as a regression problem and introduced anchor boxes and a multiscale architecture, which increased detection capability. YOLOv432 improved feature extraction with attention mechanisms and cross-stage partial connections. YOLOv533 optimized engineering with adaptive anchors and Focus slicing to balance speed and accuracy. YOLOv7 added an Extended Efficient Layer Aggregation Network and a dynamic label assignment strategy for better accuracy. YOLOv8 improved robustness in complex scenes with distribution focal loss and adjustable gradients. Most recently, YOLOv11 improved multitask detection accuracy with a new feature extraction module and a lightweight head while maintaining real-time performance.

The main YOLO versions include YOLOv5, YOLOv7, YOLOv8 and YOLOv11. YOLOv5 offers the best real-time performance and fast inference, but its accuracy on small targets and in occluded scenes is low. YOLOv7 has strong multiscale feature fusion and achieved state-of-the-art accuracy, but its computational complexity is high. YOLOv8 is highly integrated and uses dynamic label assignment, but its CPU inference is slow and its computational cost is high. YOLOv11 has the fastest CPU inference and adapts to a variety of platforms. However, its computational load is large, and as a newer release it has fewer supporting tools.

Methods

The overall framework of YOLO-AS

To address low target detection accuracy in complex dark environments, this paper proposes a deep learning-based method for detecting targets in the dark. The framework, shown in Fig. 1, consists of two parts: image enhancement and target detection. The image enhancement part uses the improved Zero-DCES algorithm. It introduces ConvNeXt34 and a spatial attention mechanism to improve image contrast and brightness, especially in target regions. The target detection part uses YOLO-AS, which combines the improved ECA_ASPP (Efficient Channel Attention and atrous spatial pyramid pooling35) with SKAttention36 (Selective Kernel Attention). This combination expands the receptive field and improves multiscale information acquisition, strengthening feature extraction without increasing complexity.

Image enhancement module composition

Zero-reference deep curve estimation (Zero-DCE) formulates image enhancement as a deep-learning curve estimation task. DCE-Net37 predicts pixel-wise high-order curves that adjust the dynamic range of the image. Specifically, a dark-environment image is input into the Zero-DCE network, which outputs high-order tone curves. These curves adjust the dynamic range of the input image at the pixel level to produce the enhanced image. The curve estimation process maintains the dynamic range of the enhanced image and preserves the contrast between adjacent pixels.

Zero-DCE is slow when processing many images. To address this, a lightweight and efficient ConvNeXt network, well suited to tasks such as multiscale fusion and semantic segmentation, is adopted to improve speed and accuracy. In addition, a spatial attention mechanism (SAM) is introduced to enhance the contrast and brightness of targets in the image.

Figure 2 shows the improved Zero-DCES network, which has 8 layers. Each layer consists of several 3 × 3 convolution kernels with a stride of 1 and a padding of 1. The ReLU38 activation function introduces nonlinearity at low computational cost and keeps propagation efficient and stable. The first 6 layers use ReLU, and the last layer uses Tanh activation to constrain the output values to the expected range. For an input dark-environment image, the convolution layers output curve parameters for each pixel, with a size of W × H × 24. The 24 parameter maps are divided into three groups corresponding to the R, G and B channels of the image. Each channel has 8 maps, one for each of the 8 iterative adjustment stages.
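The shape bookkeeping in this paragraph can be checked with a short NumPy sketch. It assumes, as the text states, 3 × 3 kernels with stride 1 and padding 1 and a tanh-bounded W × H × 24 output. The ordering of the 24 maps (all 8 R maps, then G, then B) and the helper names `conv_out_size` and `split_curve_params` are hypothetical and not taken from the authors' code.

```python
import numpy as np


def conv_out_size(size: int, kernel: int = 3, stride: int = 1, padding: int = 1) -> int:
    """Output length of a 2-D convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def split_curve_params(raw: np.ndarray, n_iter: int = 8) -> np.ndarray:
    """Turn a raw (H, W, 3 * n_iter) network output into curve parameters.

    tanh bounds every value to [-1, 1]. The reshape assumes (hypothetically)
    that the 24 maps are ordered channel by channel:
    [R_1..R_8, G_1..G_8, B_1..B_8], so the result is indexed [h, w, c, n].
    """
    h, w, c = raw.shape
    if c != 3 * n_iter:
        raise ValueError(f"expected {3 * n_iter} channels, got {c}")
    return np.tanh(raw).reshape(h, w, 3, n_iter)


# Stride 1 and padding 1 with a 3 x 3 kernel keep the spatial size unchanged,
# so the parameter map has the same W x H as the input image.
print(conv_out_size(600))  # 600
params = split_curve_params(np.random.default_rng(0).normal(size=(4, 6, 24)))
print(params.shape)  # (4, 6, 3, 8)
```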

The estimated curve parameters then adjust the pixel values of the dark image over multiple iterations. The curve applied at each iteration is:

$$LE(I(\mathbf{x});\alpha) = I(\mathbf{x}) + \alpha I(\mathbf{x})\left(1 - I(\mathbf{x})\right) \tag{1}$$

Here, \(\mathbf{x}\) denotes the pixel coordinates, \(I(\mathbf{x})\) is the pixel value at that position, \(\alpha \in [-1, 1]\) is the curve adjustment parameter, and \(LE(I(\mathbf{x});\alpha )\) is the enhanced value of \(I(\mathbf{x})\). Each iteration applies the curve to the output of the previous iteration, and the result after the final iteration is the enhanced image.

Applying Formula (1) to the dark-environment image 8 times in succession yields the enhanced image. Figure 3 illustrates the enhancement principle.
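The iteration can be sketched minimally in NumPy. The sketch assumes the quadratic Zero-DCE form LE(I; α) = I + αI(1 − I), applied with one per-pixel, per-channel parameter map per iteration. The function names and the synthetic input are illustrative assumptions; in the model, the parameter maps would come from the Zero-DCES network.

```python
import numpy as np


def le_curve(img: np.ndarray, alpha: np.ndarray) -> np.ndarray:
    """Quadratic light-enhancement curve LE(I; alpha) = I + alpha * I * (1 - I)."""
    return img + alpha * img * (1.0 - img)


def enhance(img: np.ndarray, params: np.ndarray) -> np.ndarray:
    """Apply the curve iteratively with one per-pixel parameter map per iteration.

    img:    (H, W, 3) array with values in [0, 1]
    params: (H, W, 3, N) array with values in [-1, 1]; N = 8 in the text
    For I in [0, 1] and alpha in [-1, 1] every step stays inside [0, 1].
    """
    out = img.astype(np.float64)
    for n in range(params.shape[-1]):
        out = le_curve(out, params[..., n])
    return out


rng = np.random.default_rng(0)
dark = rng.uniform(0.0, 0.2, size=(4, 4, 3))  # a synthetic dark patch
params = np.full((4, 4, 3, 8), 0.8)           # hypothetical constant curve parameters
bright = enhance(dark, params)
print(dark.mean() < bright.mean() <= 1.0)     # True
```

For α in [−1, 1], each step maps [0, 1] onto itself: α = 1 gives 1 − (1 − I)² and α = −1 gives I². The 8 iterations can therefore raise dark pixels substantially without clipping.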

The Zero-DCES loss functions follow those of the Zero-DCE image enhancement algorithm. All four are no-reference losses, so no paired reference images are required: spatial consistency loss, exposure control loss, color constancy loss and illumination smoothness loss39. Together they constrain the enhanced image in terms of spatial consistency, exposure, color and illumination smoothness. The four losses are efficient and lightweight and are defined as follows:

Spatial consistency loss

Enhancement can alter image content by changing the difference between each local region and its neighbors. To limit this change, the spatial consistency loss penalizes differences between the neighbor contrasts of the input image and those of the enhanced image:

$$L_{spa} = \frac{1}{K}\sum_{i=1}^{K}\sum_{j\in\Omega(i)}\left(\left|Y_i - Y_j\right| - \left|I_i - I_j\right|\right)^2 \tag{2}$$

where K is the number of local regions of 4 × 4 pixels in the image, and i indexes these regions. \(\Omega (i)\) denotes the four neighboring regions (top, bottom, left and right) of region i. I and Y denote the average intensity of a local region in the input image and in the enhanced output image, respectively.
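The spatial consistency loss can be sketched in NumPy as follows, under two stated assumptions. First, regions are non-overlapping 4 × 4 blocks of the channel-averaged image. Second, border regions simply have fewer neighbors; other implementations may treat borders differently. The helper names are hypothetical.

```python
import numpy as np


def region_means(img: np.ndarray, size: int = 4) -> np.ndarray:
    """Mean intensity of non-overlapping size x size regions of an (H, W, 3) image.

    Channels are averaged first; H and W are cropped to multiples of `size`.
    """
    gray = img.mean(axis=2)
    h = (gray.shape[0] // size) * size
    w = (gray.shape[1] // size) * size
    gray = gray[:h, :w]
    return gray.reshape(h // size, size, w // size, size).mean(axis=(1, 3))


def spatial_consistency_loss(inp: np.ndarray, out: np.ndarray, size: int = 4) -> float:
    """L_spa: neighbour contrasts of region means should survive enhancement."""
    I = region_means(inp, size)
    Y = region_means(out, size)
    total = 0.0
    # Vertical (axis 0) and horizontal (axis 1) neighbour pairs. np.diff visits
    # each adjacent pair once, but the double sum over i and j in Omega(i)
    # visits it twice, hence the factor 2. Border regions just have fewer
    # neighbours in this sketch.
    for axis in (0, 1):
        dI = np.abs(np.diff(I, axis=axis))
        dY = np.abs(np.diff(Y, axis=axis))
        total += 2.0 * float(np.sum((dY - dI) ** 2))
    return total / I.size


rng = np.random.default_rng(0)
x = rng.uniform(0.0, 0.3, size=(16, 16, 3))
print(spatial_consistency_loss(x, x + 0.3) < 1e-12)           # True: uniform brightening keeps contrast
print(spatial_consistency_loss(x, np.full_like(x, 0.5)) > 0)  # True: flattening destroys contrast
```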

Exposure control loss

After enhancement, some regions may become too bright or too dark. To control these extreme brightness values, the exposure control loss pushes the average brightness of each local region toward an intermediate, well-exposed level:

$$L_{exp} = \frac{1}{M}\sum_{k=1}^{M}\left|Y_k - E\right| \tag{3}$$

where E is a constant representing a well-exposed brightness level, that is, the target exposure value of the image, and is usually set to 0.6. \({Y}_{k}\) is the average brightness of the k-th local region of 16 × 16 pixels. M is the number of non-overlapping 16 × 16 regions in the image.
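The following NumPy sketch computes the exposure control loss. It assumes the absolute-difference form with E = 0.6 and non-overlapping 16 × 16 regions of the channel-averaged image. The function name is hypothetical.

```python
import numpy as np


def exposure_control_loss(out: np.ndarray, E: float = 0.6, size: int = 16) -> float:
    """L_exp = (1/M) * sum_k |Y_k - E| over non-overlapping size x size regions."""
    gray = out.mean(axis=2)                        # average the R, G, B channels
    h = (gray.shape[0] // size) * size             # crop to whole regions
    w = (gray.shape[1] // size) * size
    Y = gray[:h, :w].reshape(h // size, size, w // size, size).mean(axis=(1, 3))
    return float(np.mean(np.abs(Y - E)))           # M = Y.size regions


print(round(exposure_control_loss(np.full((32, 32, 3), 0.6)), 6))  # 0.0
print(round(exposure_control_loss(np.full((32, 32, 3), 0.1)), 6))  # 0.5 (under-exposed)
```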

Color constancy loss

Enhancement can introduce color distortion. Following the gray-world assumption, the color constancy loss keeps the mean intensities of the three color channels close to one another so that enhancement does not shift the colors of the image:

$$L_{col} = \sum_{\forall (p,q)\in\varepsilon}\left(J_p - J_q\right)^2,\quad \varepsilon = \{(R,G),(R,B),(G,B)\} \tag{4}$$

Here, (p, q) is a pair of color channels taken from the three pairs (R, G), (R, B) and (G, B). \({J}_{p}\) and \({J}_{q}\) are the average intensities of channels p and q of the enhanced image.
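The color constancy loss reduces to a few lines of NumPy. This sketch assumes an (H, W, 3) RGB array and uses per-channel means over the whole image as \(J_p\). The function name is hypothetical.

```python
from itertools import combinations

import numpy as np


def color_constancy_loss(out: np.ndarray) -> float:
    """L_col = sum over (p, q) in {(R,G), (R,B), (G,B)} of (J_p - J_q)^2.

    J_p is the mean intensity of channel p of the enhanced (H, W, 3) image.
    """
    J = out.reshape(-1, 3).mean(axis=0)           # per-channel means [J_R, J_G, J_B]
    return float(sum((J[p] - J[q]) ** 2 for p, q in combinations(range(3), 2)))


gray = np.full((8, 8, 3), 0.5)
reddish = np.concatenate([np.full((8, 8, 1), 0.6), np.full((8, 8, 2), 0.4)], axis=2)
print(color_constancy_loss(gray))               # 0.0
print(round(color_constancy_loss(reddish), 6))  # 0.08
```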

Illumination smoothness loss

The illumination smoothness loss strengthens the coherence between adjacent pixels after enhancement. It prevents abrupt brightness differences between neighboring areas and keeps brightness changes smooth by penalizing large gradients in the curve parameter maps:

$$L_{tv_A} = \frac{1}{N}\sum_{n=1}^{N}\sum_{c\in\xi}\left(\left|\nabla_x A_n^c\right| + \left|\nabla_y A_n^c\right|\right)^2,\quad \xi = \{R,G,B\} \tag{5}$$

where N is the number of iterations, and \({\nabla }_{x}\) and \({\nabla }_{y}\) are the horizontal and vertical gradient operators. c ranges over the R, G and B channels, and \({A}_{n}^{c}\) is the curve parameter map of channel c at iteration n.
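One possible discretization of the illumination smoothness loss is sketched below in NumPy. The forward differences, the cropping to a common grid and the per-pixel averaging are assumptions, because the text does not specify how the gradients are discretized or reduced over pixels. The function name is hypothetical.

```python
import numpy as np


def illumination_smoothness_loss(A: np.ndarray) -> float:
    """L_tvA for curve-parameter maps A of shape (H, W, 3, N).

    Discretisation (an assumption): forward differences cropped to a common
    (H-1, W-1) grid, squared per pixel and averaged over pixels.
    """
    gx = np.abs(np.diff(A, axis=1))[:-1]       # horizontal gradient, (H-1, W-1, 3, N)
    gy = np.abs(np.diff(A, axis=0))[:, :-1]    # vertical gradient,   (H-1, W-1, 3, N)
    per_map = ((gx + gy) ** 2).mean(axis=(0, 1))  # (3, N): one value per channel and iteration
    return float(per_map.sum(axis=0).mean())      # sum over c, average over the N iterations


flat = np.zeros((4, 5, 3, 8))
ramp = np.tile(np.arange(5.0)[None, :, None, None], (4, 1, 3, 8))  # slope 1 along x
print(illumination_smoothness_loss(flat))  # 0.0
print(illumination_smoothness_loss(ramp))  # 3.0
```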

The total loss is the weighted sum of the four losses above:

$$L_{total} = w_1 L_{spa} + w_2 L_{exp} + w_3 L_{col} + w_4 L_{tv_A} \tag{6}$$

where \({w}_{1}, {w}_{2}, {w}_{3}\) and \({w}_{4}\) are the weights of the four losses and are set to 1, 10, 5 and 200, respectively.
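The weighted combination is simple, but a short sketch makes the mapping between weights and loss terms explicit. The function name and the example loss values are hypothetical; only the weights 1, 10, 5 and 200 come from the text.

```python
def total_loss(l_spa: float, l_exp: float, l_col: float, l_tva: float,
               weights: tuple = (1.0, 10.0, 5.0, 200.0)) -> float:
    """L_total = w1*L_spa + w2*L_exp + w3*L_col + w4*L_tvA."""
    w1, w2, w3, w4 = weights
    return w1 * l_spa + w2 * l_exp + w3 * l_col + w4 * l_tva


# Hypothetical per-term values, only to show how the weights rescale them.
print(round(total_loss(0.01, 0.05, 0.002, 0.0001), 6))  # 0.54
```

The large weight on the smoothness term compensates for its typically small raw magnitude. The actual balance depends on the values each term takes during training.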

Object detection module composition

YOLOv7, a representative member of the YOLO series, is known for its high speed and accuracy. However, it performs poorly in complex dark environments, where missed detections, false detections and large deviations easily occur. To address this, this paper proposes YOLO-AS (You Only Look Once with atrous spatial pyramid pooling and Selective Kernel Attention), which introduces the improved ECA_ASPP and SKAttention. The network mainly consists of the input, backbone and detection head. The backbone performs multiscale feature extraction, and the detection head further processes the features to localize targets and predict their categories. Figure 4 shows the YOLO-AS network structure.

ECA_ASPP

After the dark-environment image is enhanced, a traditional convolutional neural network40 used for target detection first extracts features via convolution and then reduces their spatial size by pooling. However, as the network deepens and features are upsampled, information loss and spatial hierarchy problems can occur. To solve this problem, ECA_ASPP and SKAttention are combined. Different sampling (dilation) rates expand the receptive field so that input features are captured at multiple scales, and the features are then fused to improve extraction. Figure 5 shows the ASPP structure.
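The standard effective-kernel formula k + (k − 1)(d − 1), for a k × k kernel with dilation rate d, shows why dilation enlarges the receptive field without adding weights. The rates 1, 6, 12 and 18 below are the ones popularized by DeepLabv3's ASPP and are only an assumption here, because the paper does not list the rates used in ECA_ASPP. The function name is hypothetical.

```python
def effective_kernel(k: int, dilation: int) -> int:
    """Span covered by a k x k kernel with the given dilation rate: k + (k - 1)(d - 1)."""
    return k + (k - 1) * (dilation - 1)


# Hypothetical ASPP rates; the paper does not state the ones YOLO-AS uses.
for rate in (1, 6, 12, 18):
    print(rate, effective_kernel(3, rate))
# 1 3
# 6 13
# 12 25
# 18 37
```

Every branch still has 3 × 3 = 9 weights per input-output channel pair, so the wider context adds no parameters.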

ECA is a channel attention mechanism that can improve the representation and generalization ability of the model. It dynamically learns a weight for each channel, enhancing important features and suppressing unimportant ones. The ECA module takes a convolutional feature map with height H, width W and C channels. It applies global average pooling to obtain a descriptor for each channel. A fast one-dimensional convolution across neighboring channels, followed by a sigmoid, then generates the channel weights. Finally, the module multiplies these weights with the original feature map to output the enhanced features. Figure 6 shows the structure.
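The ECA forward pass on a single (C, H, W) feature map can be sketched minimally in NumPy. The adaptive kernel-size rule (γ = 2, b = 1) comes from the original ECA-Net paper, and its use in this model is an assumption. The 1-D kernel weights are learned in practice but are passed in explicitly here. The function names are hypothetical.

```python
import math

import numpy as np


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive 1-D kernel size from the ECA-Net paper (odd, grows with log2 C)."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca(x: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """ECA forward pass on a (C, H, W) feature map.

    weights: 1-D kernel of odd length k shared across channels (learned in
    practice; passed in here for illustration).
    """
    c = x.shape[0]
    k = weights.shape[0]
    y = x.mean(axis=(1, 2))                       # global average pooling -> (C,)
    y_pad = np.pad(y, k // 2)                     # zero-pad so the output keeps length C
    z = np.array([np.dot(y_pad[i:i + k], weights) for i in range(c)])  # local cross-channel 1-D conv
    w = 1.0 / (1.0 + np.exp(-z))                  # sigmoid -> channel weights in (0, 1)
    return x * w[:, None, None]                   # rescale each channel


print([eca_kernel_size(c) for c in (64, 256, 512)])  # [3, 5, 5]
x = np.random.default_rng(0).normal(size=(64, 8, 8))
y = eca(x, np.random.default_rng(1).normal(size=eca_kernel_size(64)))
print(y.shape)  # (64, 8, 8)
```

Because the convolution only mixes k neighboring channels, the module adds just k weights, in line with the low parameter count noted for ECA in Related work.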

SK attention mechanism

ECA_ASPP is introduced to capture more context through multiscale feature extraction, but it may not bring out some key features clearly. The SK attention mechanism is therefore added after the spatial pyramid pooling stage to highlight important features and compensate for this weakness. SK attention consists of three steps. Split: convolution kernels of different sizes are applied to the feature map in parallel branches to extract diverse features. Fuse: the branch outputs are combined, and global average pooling produces a compact descriptor that guides adaptive fusion. Select: an adaptive mechanism weights the branches to select the most representative features for the final output. Figure 7 shows the structure.
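The Fuse and Select steps can be sketched in NumPy, taking the Split outputs as given. The sketch makes three assumptions. The branch outputs are precomputed, for example by convolutions with different kernel sizes. The fully connected weights are passed in rather than learned. Batch normalization in the reduction layer is omitted. The function names are hypothetical.

```python
import numpy as np


def softmax(a: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable softmax along one axis."""
    e = np.exp(a - a.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def sk_fuse_select(branches: np.ndarray, W_reduce: np.ndarray, W_select: np.ndarray) -> np.ndarray:
    """Fuse and Select steps of Selective Kernel attention.

    branches: (M, C, H, W) outputs of the Split step, one per kernel size.
    W_reduce: (d, C) fully connected layer producing the compact descriptor z.
    W_select: (M, C, d) one projection per branch producing attention logits.
    """
    U = branches.sum(axis=0)                      # Fuse: element-wise sum, (C, H, W)
    s = U.mean(axis=(1, 2))                       # global average pooling, (C,)
    z = np.maximum(W_reduce @ s, 0.0)             # FC + ReLU, (d,); BatchNorm omitted
    logits = W_select @ z                         # (M, C)
    a = softmax(logits, axis=0)                   # Select: per-channel softmax across branches
    return (a[:, :, None, None] * branches).sum(axis=0)  # weighted sum, (C, H, W)


rng = np.random.default_rng(0)
M, C, d = 2, 16, 4
branches = rng.normal(size=(M, C, 8, 8))          # stand-ins for two branch outputs
out = sk_fuse_select(branches, rng.normal(size=(d, C)), rng.normal(size=(M, C, d)))
print(out.shape)  # (16, 8, 8)
```

The per-channel softmax makes the branch weights sum to 1 for every channel. Each channel can therefore favor a different receptive field, which is how SK selects features dynamically.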

Experiment

Experimental platform and experimental indicators

Tables 1 and 2 list the experimental platform and parameters. The experiments use the ExDark41 and LOL42 datasets, each divided into a training set and a test set. They are run in PyCharm 2.0 with Python 3.11, and performance is quantified with mAP@50 and mAP@50:95.
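The two metrics can be illustrated with a simplified single-class, single-image sketch. Under mAP@50, a detection is correct when its IoU with an unmatched ground-truth box is at least 0.5. mAP@50:95 averages AP over the IoU thresholds 0.50, 0.55, ..., 0.95. The sketch uses VOC-style all-point interpolation. Full evaluators, such as COCO's with 101-point interpolation, aggregate over all images and average over classes, so numbers from this sketch are not directly comparable to the tables. The function names are hypothetical.

```python
import numpy as np


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets, gts, thr):
    """AP for one class: dets is a list of (score, box), gts a list of boxes.

    Detections are matched greedily, highest score first, to the unmatched
    ground-truth box with the largest IoU >= thr (all-point interpolation).
    """
    matched, tp = set(), []
    for _, box in sorted(dets, key=lambda d: -d[0]):
        best_j, best_iou = -1, thr
        for j, g in enumerate(gts):
            o = iou(box, g)
            if j not in matched and o >= best_iou:
                best_j, best_iou = j, o
        if best_j >= 0:
            matched.add(best_j)
        tp.append(1.0 if best_j >= 0 else 0.0)
    ctp = np.cumsum(tp)
    recall = ctp / max(len(gts), 1)
    precision = ctp / np.arange(1, len(tp) + 1)
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([0.0], precision, [0.0]))
    for i in range(len(p) - 2, -1, -1):           # monotone precision envelope
        p[i] = max(p[i], p[i + 1])
    steps = np.where(r[1:] != r[:-1])[0]          # points where recall increases
    return float(np.sum((r[steps + 1] - r[steps]) * p[steps + 1]))


def map_50_95(dets, gts):
    """Mean of AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    return float(np.mean([average_precision(dets, gts, t) for t in np.linspace(0.5, 0.95, 10)]))


gt = [(0, 0, 10, 10)]
det = [(0.9, (2, 0, 12, 10))]            # IoU with the ground truth = 80 / 120 (about 0.67)
print(average_precision(det, gt, 0.5))  # 1.0
print(round(map_50_95(det, gt), 2))     # 0.4
```

The shifted box counts as correct at the four thresholds up to 0.65 and as a false positive above them. This is why mAP@50:95 penalizes imprecise localization far more than mAP@50.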

Experimental dataset

Two different dark-environment datasets are used to verify the feasibility, effectiveness and generalizability of the YOLO-AS algorithm. Each dataset is randomly divided into a training set and a test set at a ratio of 8:2.
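A reproducible 8:2 random split can be sketched with the Python standard library. The seed and the function name are assumptions. For LOL, splitting a list of pair identifiers rather than individual files keeps each low-light/normal-light pair on the same side of the split.

```python
import random


def split_dataset(items, train_ratio=0.8, seed=0):
    """Shuffle a copy of `items` with a fixed seed and split it into (train, test)."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = int(len(items) * train_ratio)
    return items[:n_train], items[n_train:]


train, test = split_dataset(range(7363))      # ExDark image indices
print(len(train), len(test))                  # 5890 1473
lol_train, lol_test = split_dataset(range(500))  # LOL pair indices
print(len(lol_train), len(lol_test))          # 400 100
```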

ExDark is an image dataset for object detection in dark environments, used mainly in scenarios such as autonomous driving and security. It contains 7363 images covering 10 lighting conditions, from very low light to twilight. The categories are bicycles, boats, bottles, buses, cars, cats, chairs, cups, dogs, motorcycles, pedestrians and tables (Fig. 8). All images are manually annotated with object bounding boxes and lighting conditions, with the aim of improving detection capability in dark environments.

LOL is designed for low-light image enhancement, and includes 500 pairs of images (Fig. 9). Each pair includes a low-light image and a normal-light image, which are adjusted to a resolution of 400 × 600, covering different intensities from extremely low light to dark environments. The goal is to improve the image enhancement and target detection capabilities of the model in a dark environment.

Ablation experiment

The YOLO-AS algorithm improves the network structure with the Zero-DCES module, the ECA_ASPP module and the SK mechanism. Ablation experiments are carried out to verify that each of these modules improves detection and is necessary. Starting from the YOLOv7 baseline, the Zero-DCES image enhancement module is added, and the ECA_ASPP module and the SK mechanism are added to the feature extraction component. The ExDark and LOL datasets are used for testing under identical conditions. Tables 3 and 4 show the results.

Tables 3 and 4 show the gains from adding each module alone to YOLOv7. On the ExDark dataset, Zero-DCES alone increases mAP@50 by 3.23% and mAP@50:95 by 1.64%. ECA_ASPP alone increases them by 1.02% and 1.25%, and the SK mechanism alone by 1.54% and 2.12%. On the LOL dataset, Zero-DCES alone increases mAP@50 by 3.51% and mAP@50:95 by 1.77%. ECA_ASPP increases them by 1.68% and 0.98%, and the SK mechanism by 2.15% and 1.2%. Each of the Zero-DCES, ECA_ASPP and SK modules thus improves detection accuracy significantly while the parameter count and detection speed remain essentially unchanged. Zero-DCES yields the largest single-module gain in mAP@50, which verifies its ability to enhance feature expression in dark environments.

Combining modules yields larger gains. On the ExDark dataset, each combination increases mAP@50 and mAP@50:95 as follows: Zero-DCES + ECA_ASPP by 4.82% and 2.73%, Zero-DCES + SK by 4.66% and 2.85%, ECA_ASPP + SK by 3.23% and 3.32%, and Zero-DCES + ECA_ASPP + SK by 5.78% and 4.06%. The LOL dataset shows a similar trend. This indicates that the modules complement each other and that combinations outperform any single module.

Comparative test

Image enhancement contrast test

The ExDark and LOL test images are fed into the Zero-DCES network for enhancement. Two no-reference image quality indices evaluate the enhancement: MUSIQ43 (Multi-scale Image Quality Transformer) and PIQE44 (Perception-based Image Quality Evaluator). Both are computed for the original dark images and for the enhanced images. A higher MUSIQ score indicates better image quality, whereas a lower PIQE score indicates better image quality.

Tables 5 and 6 compare Zero-DCES with four other image enhancement networks on the ExDark and LOL datasets. Zero-DCES and Retinexformer produce the highest image quality. Retinexformer performs better than Zero-DCES, but it has many more parameters and requires far more computing resources. Zero-DCES therefore offers a favorable balance between enhancement quality and computational cost, and its results on both datasets indicate good generalization ability.

Comparative experiment of the attention mechanism

Seven attention mechanisms are systematically compared on the ExDark and LOL datasets to verify the effectiveness and generalizability of the proposed attention mechanism: SE, ECA, CBAM, CA, MLCA, ECA_ASPP and SK. Fig. 10 uses heatmaps to visualize the behavior of each attention mechanism.

Tables 7 and 8 show that most models with an attention mechanism detect more accurately than the baseline model, some by a clear margin. The ECA_ASPP + SK combination performs best. It achieves the largest mAP improvement on both the ExDark and LOL datasets while detection speed remains essentially unchanged. This design combines ECA channel attention, ASPP multiscale context and SK feature selection, which enhances both the local details and the global context awareness of dark targets. These results confirm that attention helps the model capture the details of targets in dark environments and support the effectiveness and generalizability of the proposed attention mechanism.

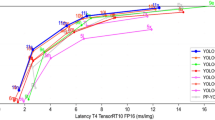

SOTA model comparison test

On the ExDark and LOL object detection datasets, YOLO-AS is compared with other state-of-the-art (SOTA) algorithms. The comparison covers parameters (Params), computational cost (FLOPs), mAP@50, mAP@50:95 and speed. Tables 9 and 10 show the results.
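The Params and FLOPs columns follow from layer shapes. The sketch below counts them for a single 2-D convolution, and the layer sizes are hypothetical. Papers differ on whether one multiply-accumulate (MAC) counts as one FLOP or two, and this paper does not say which convention it uses, so both are shown. The function name is hypothetical.

```python
def conv2d_cost(c_in, c_out, k, h_out, w_out, bias=True):
    """Parameters and multiply-accumulate operations (MACs) of one 2-D convolution."""
    params = c_in * c_out * k * k + (c_out if bias else 0)
    macs = c_in * c_out * k * k * h_out * w_out   # one MAC per weight per output pixel
    return params, macs


params, macs = conv2d_cost(c_in=64, c_out=128, k=3, h_out=80, w_out=80)
print(params)               # 73856
print(macs)                 # 471859200
print(2 * macs / 1e9)       # about 0.94 GFLOPs if one MAC is counted as two FLOPs
```

Parameters depend only on kernel and channel sizes, whereas FLOPs also scale with the output resolution. A model can therefore have fewer parameters than another yet still cost more computation at a given input size.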

YOLO-AS has fewer parameters and a lower computational cost than YOLOv3, YOLOv4, YOLOv11, Faster R-CNN and Swin Transformer. Its detection accuracy is slightly lower than that of Swin Transformer and YOLOv11. However, it is more accurate than the other algorithms while requiring fewer parameters and less computation, giving the best overall trade-off. Compared with the lightweight YOLOv5s and YOLOv8n, YOLO-AS has more parameters and a higher computational cost. In return, its detection accuracy is significantly better at a similar detection speed.

In general, YOLO-AS requires fewer parameters and less computation than several mainstream algorithms. Its detection speed and accuracy match or exceed those of some mainstream models, which supports its effectiveness and generalization ability.

Visualization of results

Figure 11 shows the detection results of YOLO-AS on the ExDark and LOL datasets. The model first enhances the dark image with the Zero-DCES module and then uses the ECA_ASPP module and the SK mechanism for feature extraction and detection. This pipeline adapts well to challenging conditions such as dark and low-light scenes.

Conclusion

To address low object detection accuracy, missed detections and false detections in complex dark environments, this paper proposes an improved method that combines image enhancement with deep learning-based detection. First, a Zero-DCES image enhancement module is designed and compared with other enhancement methods on the ExDark and LOL datasets. The MUSIQ and PIQE evaluations show that it outperforms the compared methods except Retinexformer, which achieves higher quality at a much greater computational cost. It also provides a solid basis for subsequent detection. Second, a YOLO-AS detection model that combines ECA_ASPP and the SK attention mechanism is constructed. It strengthens multiscale feature expression and dynamic detection and effectively improves the representation of objects in dark environments. Experiments show that YOLO-AS reaches an mAP@50 of 78.39% on ExDark and 67.81% on LOL, which is 5.78% and 6.39% higher, respectively, than the baseline YOLOv7, while the parameter count and detection speed remain essentially unchanged. Comparative experiments also show that the method is more accurate than lightweight mainstream models such as YOLOv5s and YOLOv8n. It also requires fewer parameters and less computation than YOLOv11. These results demonstrate its robustness and generalizability in complex dark environments.

This research provides an effective solution for object detection in dark environments and technical support for practical applications such as autonomous driving and surveillance. However, the accuracy of small-object detection in complex dark environments is still limited. Small objects occupy only a small fraction of the image, the dark environment causes severe loss of detail, and missed and false detections remain common. Future work will further optimize the model structure, improve its robustness and real-time performance, and extend it to a wider range of application scenarios.

Data availability

The datasets used and analysed during the current study are available from the corresponding author on reasonable request.

References

Wang, Z. et al. Deep learning-based object detection techniques for remote sensing images: A survey. Remote Sens. 14, 2385 (2022).

Xu, X. et al. Exploring image enhancement for salient object detection in low light images. ACM Trans. Multimed. Comput. Commun. Appl. 17, 1–19 (2021).

Hashmi, K. A. et al. FeatEnHancer: Enhancing hierarchical features for object detection and beyond under low-light vision. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 6725–6735 (2023).

Yang, S. et al. LightingNet: An integrated learning method for low-light image enhancement. IEEE Trans. Comput. Imaging 9, 29–42 (2023).

Kong, X. et al. Vehicle object detection method for dark environments. J. Hunan Univ. Nat. Sci. 1, 1–9 (2024).

Wang, D. W. et al. Dark instance-based image enhancement algorithm for UAV aerial dark images. J. Beijing Univ. Aeronaut. Astronaut. 1, 1–14 (2024).

Zhu, Z. et al. Ghost imaging in the dark: A multi-illumination estimation network for low-light image enhancement. IEEE Trans. Circuits Syst. Video Technol. 35, 1576 (2024).

Lin, T. et al. SCDet: Decoupling discriminative representation for dark object detection via supervised contrastive learning. Vis. Comput. 40, 3357–3369 (2024).

Schutera, M. et al. Night-to-day: Online image-to-image translation for object detection within autonomous driving by night. IEEE Trans. Intell. Veh. 6, 480–489 (2020).

Tian, C. et al. Multi-stage image denoising with the wavelet transform. Pattern Recognit. 134, 109050 (2023).

Wang, M. L., Zhang, H. & Zhang, C. S. Deep learning-based dark object detection algorithm. J. Beijing Univ. Posts Telecommun. 1, 1–7 (2024).

Chen, L. et al. Instance segmentation in the dark. Int. J. Comput. Vis. 131, 2198–2218 (2023).

Sun, S. et al. Rethinking image restoration for object detection. Adv. Neural Inf. Process. Syst. 35, 4461–4474 (2022).

Lü, X. R. Research on Infrared and Visible Image Fusion Methods Based on Deep Learning (Qilu Univ, 2024).

Chang, Y., Jung, C. & Ke, P. Automatic contrast limited adaptive histogram equalization with dual gamma correction. IEEE Access 6, 1–1 (2018).

Pizer, S. M. et al. Adaptive histogram equalization and its variations. Comput. Vis. Graph. Image Process. 39, 355–368 (1987).

Liu, X. & Hou, C. L. Research status and prospects of nighttime image dehazing algorithms. Laser Optoelectron. Prog. 60, 26–33 (2023).

Li, C. L., Zhu, J. J. & Liu, J. H. An adaptive SSR method for foggy low-light image enhancement. Comput. Appl. Softw. 39, 233–239 (2022).

Rao, W., Bi, Z. B. & Tan, Y. K. Low-light ship image enhancement by combining homomorphic filtering and MSR algorithm. Ship Sci. Technol. 46, 152–157 (2024).

Li, C., Guo, C. & Loy, C. C. Learning to enhance low-light image via zero-reference deep curve estimation. IEEE Trans. Pattern Anal. Mach. Intell. 44, 4225–4238 (2021).

Zhang, Y., Zhang, J. & Guo, X. Kindling the darkness: A practical low-light image enhancer. In Proceedings of the ACM International Conference on Multimedia, 3931–3939 (2019).

Zhang, Y., Wu, Y., Liu, Y. et al. CPA-Enhancer: Chain-of-thought prompted adaptive enhancer for object detection under unknown degradations. arXiv preprint arXiv:2403 (2024).

Li, Y. & Ren, F. Light-weight RetinaNet for object detection. arXiv preprint arXiv:1905.10011 (2019).

Jiang, W., Liu, S., Gao, C. et al. PSGAN: Pose and expression robust spatial-aware GAN for customizable makeup transfer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 524–533 (2020).

Liu, Z., Lin, Y., Cao, Y. et al. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 9992–10002 (2021).

Xu, W. & Wan, Y. ELA: Efficient local attention for deep convolutional neural networks. Proc. AAAI Conf. Artif. Intell. 38, 1–9 (2024).

Zhang, Y., Wu, Y., Liu, Y. et al. CPA-Enhancer: Chain-of-thought prompted adaptive enhancer for object detection under unknown degradations. arXiv preprint arXiv:2403 (2024).

Jiang, W., Liu, S., Gao, C. et al. PSGAN: Pose and expression robust spatial-aware GAN for customizable makeup transfer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 524–533 (2020).

Hu, J. et al. Squeeze-and-excitation networks. IEEE Trans. Pattern Anal. Mach. Intell. 42, 2011–2023 (2020).

Wang, Q., Wu, B., Zhu, P. et al. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 11531–11539 (2020).

Woo, S., Park, J., Lee, J. Y. et al. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision, 3–19 (2018).

Hou, Q., Zhou, D. & Feng, J. Coordinate attention for efficient mobile network design. arXiv preprint arXiv:2103.02907 (2021).

Liu, Z., Lin, Y., Cao, Y. et al. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 9992–10002 (2021).

Zeng, W. et al. A masked-face detection algorithm based on M-EIOU loss and improved ConvNeXt. Expert Syst. Appl. 225, 120037 (2023).

Lian, X. et al. Cascaded hierarchical atrous spatial pyramid pooling module for semantic segmentation. Pattern Recognit. 110, 107622 (2021).

Li, X., Wang, W., Hu, X. et al. Selective kernel networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 510–519 (2019).

Liu, J., Shao, L., Zhou, C. et al. DCE-Net: A dynamic context encoder network for liver tumor segmentation. arXiv preprint arXiv:230 (2023).

Agarap, A. F. M. Deep learning using rectified linear units (ReLU). arXiv preprint arXiv:1803.08375 (2018).

Ahmadi, C., Chen, J. L. & Hsiao, Y. Y. Zero-DCE xT: A computational approach to addressing low-light image enhancement challenges. IEEE Trans. Circuits Syst. II 71, 1–5 (2024).

Xu, B. B. et al. A survey of graph convolutional neural networks. J. Comput. Sci. Tech. 43, 755–780 (2020).

Loh, Y. P. & Chan, C. S. Getting to know low-light images with the Exclusively Dark dataset. arXiv preprint (2025).

Wei, C., Wang, W., Yang, W. et al. Deep Retinex decomposition for low-light enhancement. arXiv preprint arXiv:1808.04560 (2018).

Zhang, S. & Liu, Y. Multi-scale transformer with decoder for image quality assessment. In Proceedings of CAAI International Conference on Artificial Intelligence, 220–231 (2023).

Venkatanath, N., Praneeth, D., Bh, M. C. et al. Blind image quality evaluation using perception based features. In Proceedings of National Conference on Communications, 1–6 (2015).

Wan, D. et al. Random interpolation resize: A free image data augmentation method for object detection in industry. Expert Syst. Appl. 228, 120355 (2023).

Woo, S., Park, J., Lee, J. Y. et al. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision, 3–19 (2018).

Zhang, Y., Zhang, J. & Guo, X. Kindling the darkness: A practical low-light image enhancer. In Proceedings of the ACM International Conference on Multimedia, 3931–3939 (2019).

Cai, Y., Bian, H., Lin, J. et al. Retinexformer: One-stage retinex-based transformer for low-light image enhancement. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 12504–12513 (2023).

Wan, D. et al. YOLO-MIF: Improved YOLOv8 with multi-information fusion for object detection in gray-scale images. Adv. Eng. Inform. 62, 102709 (2024).

Khanam, R. & Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv preprint arXiv:240 (2024).

Carion, N., Massa, F., Synnaeve, G. et al. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, 213–229 (2020).

Ren, S., He, K., Girshick, R. & Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Advances in Neural Information Processing Systems, 91–99 (2015).

Zhou, B., Jiang, P. F. & Duan, C. Improved RetinaNet-based object detection method for single-background scenarios. Comput. Sci. 50, 137–142 (2023).

Tan, M., Pang, R. & Le, Q. V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 10781–10790 (2020).

Acknowledgements

This research was supported by the key project of the National Natural Science Foundation of China (12032017) and the general project of the National Natural Science Foundation of China (11872256).

Author information


Contributions

Methodology, Bin Ren and Zhaohui Xu; Algorithm design, Bin Ren, Zhaohui Xu and Junwu Zhao; Experiments, Bin Ren, Rujiang Hao and Zhaohui Xu; Original draft, Bin Ren and Zhaohui Xu; Review and editing, Zhaohui Xu, Rujiang Hao, Junwu Zhao and Jianchao Zhang; Visualization, Zhaohui Xu, Rujiang Hao and Jianchao Zhang.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Ren, B., Xu, Z., Zhao, J. et al. Complex dark environment-oriented object detection method based on YOLO-AS. Sci Rep 15, 21873 (2025). https://doi.org/10.1038/s41598-025-07348-0


DOI: https://doi.org/10.1038/s41598-025-07348-0