Abstract

Recent developments in human activity recognition (HAR) have enabled numerous applications in healthcare, smart homes, and manufacturing. Activity recognition plays a crucial part in improving human well-being: by capturing behavioural data, it enables computing systems to analyze, monitor, and assist the daily lives of individuals with disabilities. HAR helps interpret complex human actions and behaviours by observing body movements and the surrounding environment, and it is therefore widely used in smart homes, athletic competitions, medical care, and other applications. This paper proposes a binary grey wolf optimization-driven ensemble deep learning model for human activity recognition (BGWO-EDLMHAR). The BGWO-EDLMHAR technique aims to provide a robust HAR method for disability assistance using advanced optimization models. First, z-score normalization transforms the raw data into a clean, structured format suitable for analysis and modelling. Next, the binary grey wolf optimization (BGWO) method performs feature selection. An ensemble of deep learning (DL) models, namely bidirectional long short-term memory, variational autoencoder, and temporal convolutional network, is then employed for classification. Finally, the cetacean optimization algorithm tunes the hyperparameters of the ensemble models to improve classification performance. The BGWO-EDLMHAR approach is evaluated on the WISDM dataset. In the comparison study, the BGWO-EDLMHAR approach achieved a superior accuracy of 98.51% over existing models.

Introduction

HAR is commonly defined as the detection and recognition of activities from sensory observations [1]. Human activity refers to the movement of one or more parts of a person’s body. An activity may be atomic or composed of multiple primitive activities performed in sequential order [2]. Consequently, HAR should assign the same label to similar activities, even when they are performed by different individuals in varying styles or conditions. A HAR method aims to recognize and analyze such human activity automatically, using data acquired from multiple sensors [3]. Furthermore, HAR outputs may be used to drive subsequent decision-support methods. Several researchers have proposed HAR methods that assist an instructor in controlling a multi-screen, multi-touch teaching device, for example swiping left or right to access the preceding or succeeding slide, or calling the eraser tool to remove incorrect content [4]. An activity recognition task usually precedes the HAR methods. It involves the temporal recognition and localization of actions in the scene to improve understanding of the ongoing event. Thus, the activity recognition task can be divided into two categories: detection and classification. HAR is an effective tool for monitoring the activities of elderly and disabled people, and it has become a central research area in the computer vision (CV) community. It underpins significant applications such as human–computer interaction (HCI), security, virtual reality, home monitoring, and video surveillance [5].

HAR aims to detect an individual’s physical activities from video and/or sensor data [6]. It provides information about user behaviour that may help prevent hazardous situations or predict actions that could arise [7]. Devices such as video cameras, radio frequency identification (RFID), sensors, and Wi-Fi are not new, but their use in HAR is in its infancy. HAR has progressed because of the rapid evolution of techniques such as artificial intelligence (AI), which allow these devices to be used in several application fields [8]. Previously, these techniques depended on a single image or a short sequence of images, but developments in AI have opened up greater opportunities [9]. Based on these observations, the development of HAR is closely tied to the progress of AI, which broadens the scope of HAR across multiple application fields. The growing demand for assistive technologies in healthcare, specifically for individuals with disabilities, underscores the significance of accurate HAR systems. These systems can significantly improve quality of life by enabling seamless interaction with devices through gesture recognition. By using advanced optimization and DL methods, HAR models can detect diverse activities in real time more accurately and efficiently. Such innovations can empower healthcare professionals and caregivers by providing insightful data, supporting better patient care management, and improving the autonomy of individuals with disabilities. This motivates the need for optimized, robust, and scalable HAR solutions [10].

This paper proposes a binary grey wolf optimization-driven ensemble deep learning model for human activity recognition (BGWO-EDLMHAR). The BGWO-EDLMHAR technique aims to provide a robust HAR method for disability assistance using advanced optimization models. First, z-score normalization transforms the raw data into a clean, structured format suitable for analysis and modelling. Next, the binary grey wolf optimization (BGWO) method performs feature selection (FS). An ensemble of DL models, namely bidirectional long short-term memory (BiLSTM), variational autoencoder (VAE), and temporal convolutional network (TCN), is then employed for classification. Finally, the cetacean optimization algorithm (COA) tunes the hyperparameters of the ensemble models to improve classification performance. The BGWO-EDLMHAR approach is evaluated on the WISDM dataset. The major contributions of the BGWO-EDLMHAR approach are listed below.

- The BGWO-EDLMHAR method applies z-score normalization to pre-process the data, ensuring that features are properly scaled. This step improves the accuracy of the subsequent DL technique. Standardizing the data also improves stability and convergence during training.

- The BGWO-EDLMHAR model employs the BGWO technique to perform effective FS, reducing dimensionality while preserving crucial features. This results in a more accurate and computationally efficient model. The BGWO method helps the technique concentrate on the most relevant features, improving overall classification performance.

- The BGWO-EDLMHAR methodology integrates BiLSTM, VAE, and TCN models, using their complementary strengths to improve classification accuracy. This integration allows the model to capture temporal dependencies and complex feature representations effectively. Employing these diverse models improves overall performance and robustness in classification tasks.

- The BGWO-EDLMHAR approach implements the COA model to fine-tune the technique’s hyperparameters. By adjusting key parameters, this tuning process helps the model operate at its highest potential and improves its efficiency and classification capability.

- The novelty of the BGWO-EDLMHAR method stems from the hybrid integration of BGWO-based FS, an ensemble of DL models, and COA-based hyperparameter tuning. This integration creates a robust and optimized framework that improves classification accuracy for HAR in disability assistance. By drawing on the strengths of each component, the model handles complex tasks in real-time applications and adapts to diverse scenarios.

Review of literature

Yazici et al. [11] developed an e-health framework that uses real-world data from ECG, inertial, and video sensors to monitor people’s activities and health. The framework uses edge computing for efficient querying and generates notifications based on data analysis, while prioritizing privacy by activating only the required multimedia sensors. Raj and Kos [12] offer a comprehensive survey of CNN applications in HAR classification tasks. The authors trace the development of CNNs from their predecessors to advanced DL methods. They present the functional principles of CNNs for HAR and propose a CNN-based technique for human activity classification. The developed method interprets sensor input sequences with a multi-layer CNN that gathers spatial and temporal information related to human activity. In [13], DL techniques with an automated hyperparameter generator are developed and applied to predict human activities such as jogging, walking downstairs, walking upstairs, standing, and sitting more robustly and precisely. Traditional HAR methods cannot handle real-world variations in the surrounding environment. Advanced HAR methods overcome these constraints by incorporating multiple sensing modalities, which yield more precise data and better activity detection. The proposed method uses sensor-level fusion to combine accelerometer and gyroscope data. The authors of [14] developed a multi-level feature fusion model for multimodal HAR that uses a multi-head CNN with CBAM to process visual information and ConvLSTM to handle time-sensitive multi-source sensor data. These structures extract channel and spatial features from visual data using three CNN branches with CBAM.
Snoun et al. [15] proposed an assistive method to help patients with Alzheimer’s disease carry out their daily routines independently. The proposed method consists of two parts. The first is a HAR module that observes patient behaviour, for which the authors proposed two HAR methods: one based on two-dimensional skeleton data with a CNN, and one based on three-dimensional skeleton data with transformers. The second part is a support component that identifies the patient’s behavioural irregularities and issues appropriate warnings. Hu et al. [16] introduce an unsupervised domain adaptation technique with sample weight learning (SWL-Adapt) for cross-user wearable HAR (WHAR). SWL-Adapt determines sample weights from the classification loss and domain discrimination loss of every sample using a parameterized network. The authors developed a meta-optimization-based update rule to learn this network end-to-end, guided by the meta-classification loss on selected pseudo-labelled target samples.

In [17], an online HAR framework for streaming sensor data is proposed. Dynamic segmentation decides whether two consecutive actions belong to the same activity class. Because a 2D CNN requires multi-dimensional input, stigmergic track encoding is adopted to produce encoded features in a multi-dimensional format. Alhussen, Ansari, and Mohammadi [18] develop a selfish herd optimization-tuned long short-term memory (SHO-LSTM) methodology for vocal emotion recognition. The technique uses Mel-frequency cepstral coefficients (MFCC) for feature extraction and employs various optimization techniques. The method is compared with the cumulative attribute-weighted graph neural network (CA-WGNN), voting classifier with logistic regression and stochastic gradient descent (VC-LR-SGD), support vector machine (SVM), and gradient boosting model (GBM) methods. Pan et al. [19] propose a fatigue state recognition system for miners based on a multimodal extraction and fusion framework that integrates physiological data and facial features. The system uses advanced techniques such as ResNeXt-50, GRU, and Transformer+ for feature extraction and fusion. Gonçalves et al. [20] employ a DL-based methodology for recognizing daily motor activities from inertial data to assist in the continuous and objective assessment of motor disabilities in Parkinson’s disease (PD). Liang et al. [21] investigate optimal modelling methods and FS techniques for gait synergy, using neural networks such as Seq2Seq, LSTM, recurrent neural network (RNN), and gated recurrent unit (GRU) to improve human–machine interaction in lower limb assistive devices. Alsaadi et al. [22] propose a lightweight behaviour detection technology based on multisource sensing and ontology reasoning to address high resource consumption, data gathering, and scalability in health monitoring and assistance behaviour recognition.
Inoue et al. [23] developed a new myoelectric prosthetic hand and control system that improves wrist joint functionality. This allows users to exploit their remaining wrist motion and enhances performance in pick-and-place operations. Al Farid et al. [24] compare single-view and multi-view HAR for ambient assisted living (AAL), analyzing key advancements in DL models, feature extraction, and classification approaches. Gao et al. [25] developed a dual-hand detection and 3D hand pose estimation methodology for accurate monocular motion capture, enabling effective bionic bimanual robot teleoperation.

Hamad et al. [26] present a self-supervised learning network that improves HAR by using balanced unlabelled data, addressing class imbalance with an enhanced iSMOTE technique, and constructing generic semantic representations through random masking. Uesugi, Mayama, and Morishima [27] measure the rowing force of the middle leg of water striders using a bio-appropriating probe (BAP) and compare it with the force estimated indirectly through image analysis. Paul et al. [28] present HAR_Net, a two-stage pipeline that encodes 3D time-series sensor data into 2D images using a Gramian angular field (GAF) and classifies them with a customized convolutional neural network (CNN) for HAR. Zhang et al. [29] propose a versatile continuum grasping robot (CGR) with a concealable gripper, together with a global kinematic model and motion planning approach for cooperative operation. Rajpopat et al. [30] improve cerebral palsy detection in infants by combining body part-based analysis with a pose-based feature fusion framework, enhancing accuracy and robustness by integrating time and frequency domain data. Yu et al. [31] developed a lower limb rehabilitation robot with adaptive gait training capabilities, using human–robot interaction force measurement to improve patient participation, safety, and personalized rehabilitation. Liu et al. [32] propose a hybrid model incorporating YOLO and spatio-temporal graph convolutional network (ST-GCN) methods for multi-person fall detection. Almalki et al. [33] developed a bat optimization algorithm with an ensemble voting classifier (BOA-EVCHAR) technique that assists disabled individuals, using LSTM and deep belief network (DBN) models for classification and BOA for hyperparameter optimization. Pellano et al. [34] evaluate the reliability and applicability of explainable AI (XAI) methods, specifically CAM and Grad-CAM, in predicting cerebral palsy (CP) from skeletal data of infant movements.
Prakash, Jeyasudha, and Priya [35] improve activity recognition in lower limb prosthetics with an optimized DL technique that integrates pre-processing, feature extraction, and classification using an LSTM model tuned by the black widow optimization (BWO) approach. Duc and Toan [36] developed a smart wearable device for stroke warning based on fall detection, incorporating IoT technology and DL methods to monitor movement data, improve data labelling accuracy, and provide real-time alerts for timely intervention. Zaher et al. [37] use DL techniques, comprising Bi-LSTM, LSTM, CNN, and CNN-LSTM, to improve exercise evaluation and patient care in physical rehabilitation.

Although substantial progress has been made in HAR, various limitations persist. Many studies concentrate on specific sensor modalities, neglecting the benefits of multimodal sensor fusion for more accurate activity recognition. Furthermore, data sparsity, class imbalance, and dependency on large labelled datasets remain common issues that affect the scalability and robustness of models. Moreover, while XAI methods show promise in medical applications such as CP detection, their practical applicability in real-time systems remains underexplored. Many existing techniques fail to address the dynamic and unpredictable nature of real-world environments adequately, which restricts their effectiveness in diverse and constrained settings. Finally, optimization techniques such as BOA and BWO show potential but lack generalizability across diverse HAR tasks.

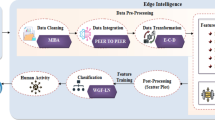

Proposed methodology

This paper proposes a BGWO-EDLMHAR technique. The proposed technique aims to develop a robust HAR method for disability assistance using advanced optimization models. Figure 1 depicts the entire flow of the BGWO-EDLMHAR method.

Z-score normalization

First, the data normalization stage applies z-score normalization to transform raw data into a clean and structured format [38]. This technique is chosen because it standardizes features, making them comparable regardless of their original scales. Unlike min–max normalization, which can be sensitive to outliers, z-score normalization centres every feature on a mean of zero with a standard deviation of one, which enhances model stability. It helps algorithms that are sensitive to feature magnitude, such as DL models, converge faster and perform better. It is particularly effective for data whose features have varying scales, as it standardizes them without distorting their relationships. Furthermore, it gives a clear basis for comparing feature importance and helps avoid biases caused by dominant features with large ranges. Overall, it improves the model’s efficiency, accuracy, and robustness.

Z-score normalization standardizes data by converting it into a distribution with a mean of 0 and a standard deviation of 1. For HAR, it is particularly beneficial because it removes the effect of differing scales in sensor data, such as gyroscope or accelerometer readings, making it simpler for ML methods to recognize patterns. By normalizing the features, this method ensures that each input feature contributes comparably to the classification method and prevents features with larger ranges from dominating. This is especially important when handling heterogeneous data sources, where different sensors may have different scales or units. Therefore, this step enhances the efficiency and accuracy of HAR models.
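The standardization described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' pre-processing code: the function name `zscore_columns`, the sample readings, and the choice of the population standard deviation are assumptions made for the example.

```python
import statistics

def zscore_columns(rows):
    """Standardize each column of a row-major table to mean 0 and std 1."""
    columns = list(zip(*rows))
    means = [statistics.fmean(col) for col in columns]
    # Population standard deviation (an assumption); constant columns
    # fall back to 1.0 so the division below never divides by zero.
    stds = [statistics.pstdev(col) or 1.0 for col in columns]
    return [
        [(value - m) / s for value, m, s in zip(row, means, stds)]
        for row in rows
    ]

# Hypothetical accelerometer and gyroscope readings on very different scales.
samples = [[9.8, 0.01], [10.4, 0.03], [9.1, 0.02], [11.0, 0.05]]
normalized = zscore_columns(samples)
```

After the transform, both columns have zero mean and unit standard deviation, so neither sensor dominates purely because of its units.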

Binary GWO technique

Following this, the proposed BGWO-EDLMHAR technique uses the BGWO approach for the FS process [39]. This method is selected because it reduces dimensionality while retaining the most informative features. The BGWO method mimics the hunting behaviour of grey wolves, which enables it to explore the feature space efficiently and select a subset of relevant features. BGWO can handle complex, high-dimensional data more effectively than conventional filter or wrapper-based approaches. It is less prone to overfitting because it balances exploration and exploitation when optimizing the feature subset. Furthermore, BGWO works directly with binary decision variables, which makes it well suited to feature selection and yields a more efficient and accurate feature set for the model. This results in better computational efficiency and generalization in the learning process. Figure 2 specifies the steps involved in the BGWO method.

GWO was originally proposed to search for a global optimum. The hierarchical mechanism of GWO covers searching for and hunting prey, with every wolf acting as a candidate solution. The three best solutions in the population are called \(\alpha ,\beta\), and \(\delta\), whereas the lower‐ranked candidates are referred to as \(\omega\). The \(\omega\) wolves update their locations according to the locations of the \(\alpha ,\beta\), and \(\delta\) leader wolves, which are computed from Eq. (1):

where \(a\) is the convergence factor; \({\vec{\text{A}}}\) and \({\vec{\text{C}}}\) are two coefficient vectors built from random vectors in \(\left[ {0,1} \right];\;{\vec{\text{D}}}_{\alpha } ,\) \({\vec{\text{D}}}_{\beta }\) and \({\vec{\text{D}}}_{\delta }\) are the distance vectors from the \(\omega\) wolves to the \(\alpha ,\beta\), and \(\delta\) leader wolves, respectively; and \({\vec{\text{X}}}\left( t \right),{\vec{\text{X}}}_{\alpha } ,{\vec{\text{X}}}_{\beta }\), and \({\vec{\text{X}}}_{\delta }\) denote the current location vectors of the \(\omega ,\alpha ,\beta\), and \(\delta\) wolves.
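For reference, the widely used GWO formulation from the literature can be written with the symbols defined above. The block below is that standard form, offered as a reading aid and not as a verbatim copy of the paper's numbered equations; the subscripted helper symbols \(\vec{A}_{1..3}\), \(\vec{C}_{1..3}\), \(\vec{X}_{1..3}\), \(\vec{r}_{1}\), and \(\vec{r}_{2}\) are assumptions of this sketch.

```latex
\begin{aligned}
\vec{D}_{\alpha} &= \left| \vec{C}_{1} \cdot \vec{X}_{\alpha} - \vec{X}(t) \right|, \quad
\vec{D}_{\beta} = \left| \vec{C}_{2} \cdot \vec{X}_{\beta} - \vec{X}(t) \right|, \quad
\vec{D}_{\delta} = \left| \vec{C}_{3} \cdot \vec{X}_{\delta} - \vec{X}(t) \right| \\
\vec{X}_{1} &= \vec{X}_{\alpha} - \vec{A}_{1} \cdot \vec{D}_{\alpha}, \quad
\vec{X}_{2} = \vec{X}_{\beta} - \vec{A}_{2} \cdot \vec{D}_{\beta}, \quad
\vec{X}_{3} = \vec{X}_{\delta} - \vec{A}_{3} \cdot \vec{D}_{\delta} \\
\vec{X}(t+1) &= \frac{\vec{X}_{1} + \vec{X}_{2} + \vec{X}_{3}}{3} \\
\vec{A} &= 2a \cdot \vec{r}_{1} - a, \qquad \vec{C} = 2 \cdot \vec{r}_{2}
\end{aligned}
```

In this standard form, \(\vec{r}_{1}\) and \(\vec{r}_{2}\) are random vectors in \([0,1]\), and \(a\) typically decreases linearly from 2 to 0 over the iterations, which shifts the search from exploration to exploitation.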

In the feature selection problem, every wolf’s position is a binary vector whose elements are either 0 or 1. To update these positions effectively, a transfer function (TL) is applied. Equation (7) defines the sigmoid TL used to map continuous values to probabilities.

Equation (8) presents the binary update rule, where the wolf’s position \(X_{i}^{d} \left( t \right)\) in the dth dimension at iteration \(t\) is updated based on the sigmoid output compared against a random threshold.

Here, Eq. (7) refers explicitly to the sigmoid function, while Eq. (8) describes how this function governs the binary position update.
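The sigmoid mapping and threshold rule described above can be illustrated with a short Python sketch. It assumes the plain logistic function and a uniform random threshold; the paper's exact transfer function may include additional scaling, and the names `sigmoid` and `binary_update` are hypothetical.

```python
import math
import random

def sigmoid(x):
    """Plain logistic function mapping a real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def binary_update(continuous_position, rng):
    """Turn each continuous coordinate into a bit.

    A bit is set to 1 when the sigmoid of the coordinate is at least
    as large as a fresh uniform random threshold, otherwise 0.
    """
    return [1 if sigmoid(x) >= rng.random() else 0 for x in continuous_position]

rng = random.Random(0)
# Hypothetical continuous wolf position over five candidate features.
mask = binary_update([-6.0, -0.5, 0.0, 0.5, 6.0], rng)
```

Each bit of `mask` marks whether the corresponding feature is kept, so strongly positive coordinates are selected almost always and strongly negative ones almost never.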

In recent years, a growing number of GWO‐based FS models have been proposed for classification problems. The TL is a significant component of BGWO; earlier work examined different TLs and provided an updated equation for parameter \(a\), and the reported results confirm the effectiveness of the enhanced BGWO model. A two‐stage mutation has also been combined with the BGWO model to reduce the number of selected features without degrading classification performance, and experiments on 35 datasets showed that it outperformed competing methods. Earlier studies have thus made significant progress on FS problems. Nevertheless, few GWO‐based works have concentrated on higher‐dimensional classification tasks.

The fitness function (FF) used in the BGWO model balances the number of selected features in each solution (to be minimized) against the classification accuracy obtained with those features (to be maximized). Equation (9) defines the FF used to assess solutions.

where \(\gamma_{R} \left( D \right)\) is the classification error rate of a given classifier, \(\left| R \right|\) denotes the cardinality of the chosen subset, and \(\left| C \right|\) is the total number of features in the dataset. The parameters \(\alpha\) and \(\beta\) determine the relative importance of classification accuracy and feature reduction, with \(\beta = 1 - \alpha\). These values are chosen to maintain high classification performance while reducing dimensionality.
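A fitness of this kind is commonly written as a weighted sum of the error rate and the selected-feature ratio. The sketch below follows that common form, \(\alpha \gamma_{R}(D) + \beta |R| / |C|\), which is assumed here to match the description above; the default \(\alpha = 0.99\) and the example error rate are illustrative values, not settings reported in the paper.

```python
def bgwo_fitness(error_rate, mask, alpha=0.99):
    """Weighted sum of classification error and selected-feature ratio.

    error_rate: classification error of the classifier on the subset (0..1)
    mask:       binary list, 1 where a feature is selected
    alpha:      weight on the error term; beta is fixed to 1 - alpha
    """
    beta = 1.0 - alpha
    selected = sum(mask)      # |R|, size of the chosen subset
    total = len(mask)         # |C|, total number of features
    return alpha * error_rate + beta * selected / total

# Example with 32 of 45 features selected and a hypothetical 5% error rate.
mask = [1] * 32 + [0] * 13
score = bgwo_fitness(0.05, mask)
```

Lower values are better: a solution improves either by reducing the error or by dropping features without hurting the error.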

Ensemble DL classifiers

In addition, an ensemble of DL models, namely BiLSTM, VAE, and TCN, is used for the classification process. These models are chosen for their complementary strengths in handling sequential and time-series data. BiLSTM captures past and future context in sequence data, which improves the modelling of temporal dependencies. VAE, with its generative capabilities, learns complex latent representations, improving the model’s ability to reconstruct input data and capture underlying patterns. TCN, in turn, is effective at modelling long-range dependencies in sequences, particularly for tasks that involve irregular temporal patterns. Combining these models draws on the strengths of each and enables more accurate and robust classification than any single model. Additionally, the ensemble approach mitigates the risk of overfitting and enhances generalization, improving performance on diverse datasets.

BiLSTM model

The LSTM stores information from the input sequence in a hidden layer (HL), which makes it suitable for time-series modelling [40]. The Bi-LSTM is an enhanced version of the traditional LSTM that uses both previous and future states to improve prediction. The Bi-LSTM processes the data with two networks, a forward and a backward LSTM, and the outputs of these networks are combined at each time step. In the forward layer, the computation runs over time \(\left[ {1,t} \right]\), and its output is obtained and stored at each time step. In the backward layer, the computation runs backward over time [t,1], and its output is likewise obtained and stored. Finally, the result at each time step is obtained by combining the outputs of the forward and backward layers for that step. The mathematical formulation of Bi-LSTM is stated in (10)–(12).

Here, \(\vec{h}_{t}\) and \({\overleftarrow{h}} _{t}\) represent the forward and backward HL outputs, indicated by the superscripts \(\left( {\vec{.}} \right)\) and \(\left( {\overleftarrow{\cdot }} \right)\), respectively. \(W_{{\vec{h}\vec{h}}} ,\) \(W_{{{\overleftarrow{h}} y}}\), and \(W_{{x\vec{h}}}\) represent the weight matrices of the HL, output, and input layers. \(b_{{\vec{h}}} ,\) \(b_{{{\overleftarrow{h}} }}\) and \(b_{y}\) are the bias vectors of the forward HL, the backward HL, and the output layer, respectively. The Bi-LSTM captures spatial features and bidirectional temporal dependencies from historical information, which improves the performance of the method. Figure 3 illustrates the architecture of BiLSTM.
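For orientation, the standard bidirectional recurrent formulation from the literature uses exactly these symbols. The block below gives that textbook form as a sketch; it is not a verbatim reproduction of the paper's Eqs. (10)–(12), and \(\mathcal{H}\), which stands for the LSTM cell update, together with the backward-direction weights \(W_{x\overleftarrow{h}}\) and \(W_{\overleftarrow{h}\overleftarrow{h}}\), is introduced here as an assumption.

```latex
\begin{aligned}
\vec{h}_{t} &= \mathcal{H}\left( W_{x\vec{h}}\, x_{t} + W_{\vec{h}\vec{h}}\, \vec{h}_{t-1} + b_{\vec{h}} \right) \\
\overleftarrow{h}_{t} &= \mathcal{H}\left( W_{x\overleftarrow{h}}\, x_{t} + W_{\overleftarrow{h}\overleftarrow{h}}\, \overleftarrow{h}_{t+1} + b_{\overleftarrow{h}} \right) \\
y_{t} &= W_{\vec{h}y}\, \vec{h}_{t} + W_{\overleftarrow{h}y}\, \overleftarrow{h}_{t} + b_{y}
\end{aligned}
```

The forward state depends on \(\vec{h}_{t-1}\) and the backward state on \(\overleftarrow{h}_{t+1}\), so the output \(y_{t}\) sees context from both directions.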

VAE model

To evade this restriction, VAE provides a widely accepted solution [41]. VAE usually uses a latent space of substantially lower dimension than the data space, that is, \(q \ll n\); VAE also offers better control over the latent space dimensions, as discussed below. Since the generator \(g_{\theta }\) is not invertible, computing the negative log‐probability loss directly is infeasible. Recall that the likelihood of a sample \(x\) drawn from \(\ddot{X}\) under the generator is denoted \(P_{\theta } \left( x \right)\). Bayes’ rule allows this likelihood to be re‐expressed as follows:

The generator is trained for art generation using the VAE method, with a two-dimensional latent space. Since the image intensities lie in \(\left[ {0,1} \right]\), the reconstruction quality is measured using the Bernoulli likelihood of \(x\). Although the generator differs, the same neural network structure is used to compute the mean and covariance of the posterior in the VAE. For a given art image \(x\), two convolutional layers are used as the feature extractor.

Here, \(C_{VAE}^{\left( 1 \right)}\) and \(C_{VAE}^{\left( 2 \right)}\) are convolutional operators with 4 × 4 stencils and strides of two, which reduce the number of pixels by a factor of two along every axis. The first layer has thirty-two latent channels, and the second layer has sixty-four latent channels. The bias vectors \(c_{VAE}^{\left( 1 \right)}\) and \(c_{VAE}^{\left( 2 \right)}\) apply a constant shift to every channel. Given the feature \(h^{\left( 2 \right)}\), the mean and the diagonal covariance of the approximate posterior, \(e_{\psi } (z|x)\), are computed.
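Two ingredients of this encoder can be sketched in plain Python: sampling from a diagonal Gaussian posterior with the reparameterization trick, and the usual closed-form KL term against a standard normal prior. The sketch also checks how a 4 × 4 stencil with stride two halves each axis. The function names, the log-variance parameterization, and the padding of 1 are assumptions of this example, not details given in the paper.

```python
import math
import random

def reparameterize(mean, log_var, rng):
    """Sample z = mean + std * eps, with eps ~ N(0, 1) per dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mean, log_var)]

def kl_to_standard_normal(mean, log_var):
    """Closed-form KL( N(mean, diag(exp(log_var))) || N(0, I) )."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                     for m, lv in zip(mean, log_var))

def conv_output_size(size, kernel=4, stride=2, padding=1):
    """Spatial size after one convolution (padding of 1 is assumed)."""
    return (size + 2 * padding - kernel) // stride + 1

rng = random.Random(0)
z = reparameterize([0.2, -0.1], [0.0, 0.0], rng)   # 2D latent sample
kl = kl_to_standard_normal([0.2, -0.1], [0.0, 0.0])
after_two_layers = conv_output_size(conv_output_size(28))
```

With these assumed settings, a 28-pixel axis shrinks to 14 and then to 7 after the two stride-two layers.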

TCN model

Compared with GRU and LSTM, the TCN method retains longer memory in sequential modelling tasks and supports large‐scale parallel processing [42]. It improves on them in the following ways:

- TCN applies causal convolution, which is better suited to processing sequential data.

- To retain extensive historical information, TCN uses dilated causal convolution and residual mapping.

Causal convolution. The output at a given time step depends only on the current and earlier elements of the previous layer, not on future inputs. The output of a standard convolution, by contrast, may also depend on future inputs, which is unsuitable for sequential modelling tasks. The causal convolution depends entirely on the historical time series data.

TCN uses a 1D fully convolutional network to ensure that the output is the same length as the input.

Dilated convolution. A simple causal convolution looks at the history directly, so examining a long history for a sequential task requires a very deep network, which makes training difficult. To resolve this problem, TCN applies dilated convolution.

A dilated convolution is a causal convolution in which the filter is applied over a span larger than its length by skipping input values with a fixed step, which gives the network a large receptive field with only a few layers. An example is the dilated causal convolution with dilation factor 4. The convolution operation on sequence \(a\) at element \(b\) is given as,

Correspondingly, the dilated convolution process for the component \(b\) is characterized as,

where \(f\left( n \right)\) denotes the nth filter coefficient of the corresponding layer, \(d\) is the dilation factor, \(k\) is the filter size, and \(b - d \cdot n\) indexes the past elements. If \(d\) equals one, the dilated causal convolution reduces to a regular causal convolution.
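The dilated causal convolution can be sketched directly from the index rule \(b - d \cdot n\). The example below assumes the usual form \(F(b) = \sum_{n=0}^{k-1} f(n)\, a_{b - d \cdot n}\) with implicit zero padding on the left; the function name and the toy signal are illustrative only.

```python
def dilated_causal_conv(sequence, filt, dilation=1):
    """1D dilated causal convolution with implicit left zero-padding.

    out[b] = sum over n of filt[n] * sequence[b - dilation * n],
    skipping any index that falls before the start of the sequence.
    """
    out = []
    for b in range(len(sequence)):
        total = 0.0
        for n, weight in enumerate(filt):
            idx = b - dilation * n
            if idx >= 0:          # never look at future or missing samples
                total += weight * sequence[idx]
        out.append(total)
    return out

signal = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
taps = [1.0, 1.0]                 # filter size k = 2
regular = dilated_causal_conv(signal, taps, dilation=1)
dilated = dilated_causal_conv(signal, taps, dilation=2)
```

With `dilation=1` each output adds the current and previous sample; with `dilation=2` it adds the current sample and the one two steps back, so the same two-tap filter covers a wider history. The output always has the same length as the input.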

Residual mapping. It is essential to attain the best prediction precision with appropriate filter dimensions. TCN therefore adopts residual mapping, a skip connection around the convolutional layers. The residual block contains a shortcut connection that adds the input sequence \(y\) to its transformation \(f\left( y \right)\). The residual mapping is described as,

where \(\varphi\) denotes a non-linear activation function.
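A residual mapping of this kind is usually written as \(\varphi\left( y + f(y) \right)\). The sketch below assumes that form with ReLU as \(\varphi\); the helper names and the toy transformations are hypothetical and stand in for the convolutional layers of a real block.

```python
def relu(values):
    """Element-wise ReLU, used here as the activation phi (an assumption)."""
    return [max(0.0, v) for v in values]

def residual_block(y, transform, activation=relu):
    """Return activation(y + transform(y)) element-wise."""
    fy = transform(y)
    if len(fy) != len(y):
        raise ValueError("transform must preserve the sequence length")
    return activation([a + b for a, b in zip(y, fy)])

# With a zero transformation the block passes the input through phi unchanged.
identity_like = residual_block([1.0, -2.0, 3.0], lambda y: [0.0] * len(y))
# A hypothetical transformation that halves every element.
halved = residual_block([2.0, 4.0], lambda y: [0.5 * v for v in y])
```

Because the input is added back to the transformed signal, the layers inside the block only need to learn a correction to the identity, which is what makes deep stacks easier to train.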

Parameter optimizer using COA

Finally, the COA tunes the hyperparameter values of the ensemble models to improve classification performance [43]. This method is chosen because it can explore the search space efficiently and find good solutions. COA is inspired by the social behaviour of cetaceans, which makes it effective for complex, high-dimensional optimization problems. Unlike conventional methods such as grid or random search, COA adapts dynamically as the search progresses, giving a better balance between exploration and exploitation. Its robustness in avoiding local optima, combined with fast convergence, makes it suitable for fine-tuning hyperparameters in DL models. The global search capability of the COA model allows it to discover strong configurations without exhaustive parameter sweeps. Moreover, it offers computational efficiency and scalability, which suits large-scale optimization tasks. Figure 4 demonstrates the steps involved in the COA model.

The COA is a nature-inspired, population‐based stochastic optimizer that uses a group of search agents to solve optimization problems. Modelled on humpback cetaceans, the COA’s bubble‐net search has three main features: encircling prey, bubble‐net attacking, and searching for prey. In bubble‐net foraging, a cetacean locates prey, creates a spiral bubble net around it, and moves upward to seize it. Just as cetaceans surround fish when hunting, the COA agents adjust their positions to find the optimal solution. Equation (19) states the essential mathematical form of the COA;

Here, \(t\) is the iteration or time index, \({\mathfrak{x}}\) is the vector of cetacean positions, and \({\mathfrak{x}}^{*}\) is the best solution found so far. The coefficient \(a\) declines linearly from 2 to \(0\) over the iterations, with \({\mathcalligra{u}} = 2a \cdot r - a\) and \({\mathfrak{C}} = 2 \cdot r\), where \(r\) is a random vector with values in (0,1). The constant \({\mathcalligra{b}}\) defines the shape of the logarithmic spiral; this paper sets \({\mathcalligra{b}}\) to 1. In Eq. (4), the probability \({\wp}\) assigns 50% to each of the two update options, so the cetaceans select either option at random with equal probability during optimization. \(\ell\) is a random number between −1 and 1 that is used in the position update. In the bubble‐net stage, the random value of \({\mathcalligra{u}}\) lies in \(\left( { - 1,1} \right)\), whereas in the prey-searching stage its magnitude may be either greater or less than 1.

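Equation (20) describes the search procedure:

$${\mathfrak{x}}\left( {t + 1} \right) = {\mathfrak{x}}_{rand} - {\mathcalligra{u}} \cdot \left| {{\mathfrak{C}} \cdot {\mathfrak{x}}_{rand} - {\mathfrak{x}}\left( t \right)} \right| \tag{20}$$

where \({\mathfrak{x}}_{rand}\) is the position of a cetacean chosen at random from the current population. Random search, which applies when \(\left| {\mathcalligra{u}} \right|\) exceeds 1, emphasizes exploration and drives the COA to perform a wide-ranging global search. At the start of the COA search, random solutions are generated. These solutions are then updated iteratively. The search continues until the maximum number of iterations is reached.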
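The three steps (encircling prey, bubble-net attack, and search for prey) can be sketched as a short optimizer loop. The sketch below assumes that the COA follows the widely used whale-optimization-style update rules. The function name `coa_minimize`, the population size, the bounds, and the toy sphere objective are hypothetical and stand in for the paper's hyperparameter search. Testing the encircling condition on every component of the coefficient vector is a simplification.

```python
import numpy as np


def coa_minimize(objective, lower, upper, n_agents=20, n_iters=100, b=1.0, seed=0):
    """Sketch of a whale-optimization-style search (assumed form of the COA)."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    dim = lower.size

    # Random initial solutions inside the search bounds.
    pos = rng.uniform(lower, upper, size=(n_agents, dim))
    fit = np.array([objective(p) for p in pos])
    best_idx = int(np.argmin(fit))
    best_pos, best_fit = pos[best_idx].copy(), float(fit[best_idx])

    for t in range(n_iters):
        a = 2.0 * (1.0 - t / n_iters)  # declines linearly from 2 towards 0
        for i in range(n_agents):
            r1, r2 = rng.random(dim), rng.random(dim)
            A = 2.0 * a * r1 - a  # coefficient vector (u in the text)
            C = 2.0 * r2
            if rng.random() < 0.5:
                if np.all(np.abs(A) < 1.0):
                    # Encircling prey: move towards the best solution so far.
                    new = best_pos - A * np.abs(C * best_pos - pos[i])
                else:
                    # Search for prey: move relative to a randomly chosen agent.
                    rand_pos = pos[rng.integers(n_agents)]
                    new = rand_pos - A * np.abs(C * rand_pos - pos[i])
            else:
                # Bubble-net attack: logarithmic spiral around the best solution.
                l = rng.uniform(-1.0, 1.0)
                new = (np.abs(best_pos - pos[i]) * np.exp(b * l)
                       * np.cos(2.0 * np.pi * l) + best_pos)
            pos[i] = np.clip(new, lower, upper)  # keep agents inside the bounds
            f = objective(pos[i])
            if f < best_fit:
                best_pos, best_fit = pos[i].copy(), float(f)
    return best_pos, best_fit


# Toy usage: minimize a sphere function in place of a real validation loss.
best, value = coa_minimize(lambda x: float(np.sum(x ** 2)), [-5, -5], [5, 5])
print(best, value)
```

For hyperparameter tuning, each position vector would encode one candidate configuration (for example learning rate and dropout), and the objective would train and score the ensemble. Since this sketch minimizes, a score such as precision would be negated.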

Fitness selection is a significant factor influencing the performance of the COA. The hyperparameter selection procedure uses a solution encoding to assess the quality of the candidate solutions. In this paper, the COA uses precision as the key criterion to design the fitness function (FF), which is stated as

$$Fitness = \max \left( P \right), \quad P = \frac{TP}{TP + FP}$$

where TP denotes the true positive values and FP denotes the false positive values.
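A precision-based fitness can be sketched in a few lines. The example assumes the usual definition of precision, TP / (TP + FP), computed for one positive class. The helper name `precision_fitness`, the candidate labels, and the toy predictions are hypothetical.

```python
def precision_fitness(y_true, y_pred, positive_label):
    """Precision = TP / (TP + FP) for one positive class (assumed fitness)."""
    tp = sum(1 for t, p in zip(y_true, y_pred)
             if p == positive_label and t == positive_label)
    fp = sum(1 for t, p in zip(y_true, y_pred)
             if p == positive_label and t != positive_label)
    if tp + fp == 0:
        return 0.0  # nothing predicted as positive: treat fitness as 0
    return tp / (tp + fp)


# Hypothetical predictions produced by two candidate hyperparameter settings.
y_true = ["walk", "jog", "walk", "sit", "walk"]
candidates = {
    "candidate_a": ["walk", "walk", "walk", "sit", "jog"],
    "candidate_b": ["walk", "jog", "walk", "sit", "jog"],
}

# The optimizer keeps the candidate with the highest fitness.
scores = {name: precision_fitness(y_true, pred, "walk")
          for name, pred in candidates.items()}
best_name = max(scores, key=scores.get)
print(scores, best_name)
```

Here `candidate_a` predicts "walk" three times with one false positive (precision 2/3), while `candidate_b` predicts it twice with none (precision 1.0), so `candidate_b` is retained.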

Performance validation

The performance of the BGWO-EDLMHAR methodology is evaluated on the WISDM dataset [44]. The suggested technique is simulated using Python 3.6.5 on a PC with an i5-8600K CPU, 250 GB SSD, GeForce 1050Ti 4 GB GPU, 16 GB RAM, and 1 TB HDD. The parameter settings are as follows: learning rate: 0.01, activation: ReLU, epoch count: 50, dropout: 0.5, and batch size: 5. The dataset contains 5424 instances across six classes, as shown in Table 1. The total number of attributes is 45, of which 32 features are selected.

Figure 5 presents the confusion matrices produced by the BGWO-EDLMHAR approach at different epoch counts. The outcomes indicate that the BGWO-EDLMHAR method detects and identifies each class label accurately.

The HAR results of the BGWO-EDLMHAR technique at distinct epoch counts are reported in Table 2. The outcomes indicate that the BGWO-EDLMHAR technique recognizes samples from all classes. At 500 epochs, the BGWO-EDLMHAR technique provides an average \(accu_{y}\) of 94.42%, \(prec_{n}\) of 87.08%, \(reca_{l}\) of 67.05%, \(F1_{score}\) of 70.90%, and MCC of 70.24%. At 1000 epochs, it provides an average \(accu_{y}\) of 96.40%, \(prec_{n}\) of 89.66%, \(reca_{l}\) of 79.71%, \(F1_{score}\) of 83.25%, and MCC of 81.63%. At 2000 epochs, it provides an average \(accu_{y}\) of 97.09%, \(prec_{n}\) of 90.68%, \(reca_{l}\) of 83.60%, \(F1_{score}\) of 86.42%, and MCC of 84.90%. At 2500 epochs, it provides an average \(accu_{y}\) of 97.75%, \(prec_{n}\) of 92.31%, \(reca_{l}\) of 87.76%, \(F1_{score}\) of 89.71%, and MCC of 88.41%. At 3000 epochs, it provides an average \(accu_{y}\) of 97.75%, \(prec_{n}\) of 92.31%, \(reca_{l}\) of 87.76%, \(F1_{score}\) of 89.71%, and MCC of 88.41%.
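Average values of this kind can be derived from a confusion matrix, as the following sketch shows. It assumes that each metric is computed per class in a one-vs-rest fashion and then averaged over the classes. The paper does not state its averaging scheme, so this is an assumption. The function name and the 3-class matrix are made up for illustration.

```python
import numpy as np


def one_vs_rest_averages(cm):
    """Average per-class metrics from a confusion matrix (rows: true, cols: predicted)."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp
    fn = cm.sum(axis=1) - tp
    tn = total - tp - fp - fn

    def safe_div(num, den):
        # Return 0 where the denominator is 0 instead of dividing by zero.
        return np.divide(num, den, out=np.zeros_like(num), where=den > 0)

    accuracy = (tp + tn) / total
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    f1 = safe_div(2 * precision * recall, precision + recall)
    mcc = safe_div(tp * tn - fp * fn,
                   np.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)))
    return {name: float(values.mean()) for name, values in
            [("accuracy", accuracy), ("precision", precision),
             ("recall", recall), ("f1", f1), ("mcc", mcc)]}


# Made-up 3-class confusion matrix, for illustration only.
cm = [[8, 1, 1],
      [2, 6, 2],
      [0, 1, 9]]
print(one_vs_rest_averages(cm))
```

In this toy case the averaged one-vs-rest accuracy is about 0.84 while the averaged recall is about 0.77, because every class counts the true negatives of the other classes towards its accuracy. Under this averaging scheme, accuracy can sit well above recall, as it does in the reported results.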

Figure 6 illustrates the training (TRA) \(accu_{y}\) and validation (VAL) \(accu_{y}\) of the BGWO-EDLMHAR technique over 3000 epochs. The \(accu_{y}\) is computed across the range of 0–3000 epochs. The figure shows that both the TRA and VAL \(accu_{y}\) exhibit an increasing trend, which indicates that the BGWO-EDLMHAR model keeps learning and improving across iterations. In addition, the TRA and VAL \(accu_{y}\) remain close to each other across the epochs, indicating minimal overfitting and suggesting reliable prediction on unseen samples.

Figure 7 shows the TRA loss (TRALOS) and VAL loss (VALLOS) of the BGWO-EDLMHAR methodology over 3000 epochs. The loss values are computed throughout 0–3000 epochs. Both TRALOS and VALLOS follow a decreasing trend, indicating that the BGWO-EDLMHAR technique balances the trade-off between data fitting and generalization. The continuous reduction in loss values also shows that the prediction results of the BGWO-EDLMHAR technique improve over time.

The comparative examination of the BGWO-EDLMHAR method with other techniques is presented in Table 3 and Fig. 8 [18,45,46]. The simulation results show that the BGWO-EDLMHAR method outperforms the compared methods. Based on \(accu_{y}\), the BGWO-EDLMHAR approach has a higher \(accu_{y}\) of 98.51%. In contrast, the SHO-LSTM, MFCC, CA-WGNN, RecurrentHAR, DeepConvLG, ResNet-BiGRU-SE, Generic, CNN-BiLSTM, TAHAR-student-LSTM, and HMM methods have lower \(accu_{y}\) of 97.82%, 98.01%, 96.68%, 96.26%, 98.01%, 98.11%, 97.24%, 97.40%, 96.04%, and 94.36%, respectively. Likewise, based on \(reca_{l}\), the BGWO-EDLMHAR approach has a better \(reca_{l}\) of 91.76%, whereas the SHO-LSTM, MFCC, CA-WGNN, RecurrentHAR, DeepConvLG, ResNet-BiGRU-SE, Generic, CNN-BiLSTM, TAHAR-student-LSTM, and HMM methods have lower \(reca_{l}\) of 89.03%, 87.75%, 88.37%, 89.43%, 88.19%, 88.63%, 88.46%, 86.96%, 87.71%, and 88.96%, respectively. Moreover, concerning \(F1_{score}\), the BGWO-EDLMHAR approach has a greater \(F1_{score}\) of 93.11%. In contrast, the SHO-LSTM, MFCC, CA-WGNN, RecurrentHAR, DeepConvLG, ResNet-BiGRU-SE, Generic, CNN-BiLSTM, TAHAR-student-LSTM, and HMM methods have lower \(F1_{score}\) of 91.56%, 91.26%, 92.48%, 87.72%, 87.27%, 91.54%, 90.86%, 90.53%, 92.86%, and 90.02%, respectively.

Table 4 and Fig. 9 show the computation time (CT) outcomes of the BGWO-EDLMHAR technique. The results indicate that the BGWO-EDLMHAR technique is the most efficient of the compared methods. It records the lowest CT of 7.72 s. In contrast, the SHO-LSTM, MFCC, CA-WGNN, RecurrentHAR, DeepConvLG, ResNet-BiGRU-SE, Generic, CNN-BiLSTM, TAHAR-student-LSTM, and HMM methods require higher CT values of 15.01 s, 13.20 s, 14.99 s, 19.94 s, 10.63 s, 20.32 s, 11.02 s, 15.18 s, 11.33 s, and 20.22 s, respectively. The lower CT of the BGWO-EDLMHAR technique indicates better efficiency, which supports its use for processing and classifying diverse movement patterns in real-time scenarios.

Conclusion

In this paper, a BGWO-EDLMHAR technique is proposed. The technique aims to provide a robust HAR method for disability assistance using advanced optimization models. The data normalization stage first applies z-score normalization to transform raw data into a clean and structured format. The BGWO method is then used for the FS process. Next, an ensemble of DL models, namely BiLSTM, VAE, and TCN, is employed for the classification process. Finally, the COA optimally adjusts the hyperparameter values of the ensemble models, improving classification performance. The BGWO-EDLMHAR method is examined using the WISDM dataset. The comparison study shows that the BGWO-EDLMHAR method attains a superior accuracy of 98.51% over existing models. The BGWO-EDLMHAR method has several limitations that affect its generalizability and effectiveness. First, the dependence on a limited set of sensors may not capture the full range of human activity, reducing accuracy in real-world settings. Furthermore, the technique may have difficulty handling complex or overlapping activities, resulting in misclassifications. The dataset used for training may also contain biases, affecting the model's performance across diverse populations or environments. Another limitation is the computational complexity of the models, which could hinder real-time application on resource-constrained devices. Moreover, the lack of cross-domain validation still limits confidence in the efficiency of the proposed method. To address these issues, further work is required to explore multimodal sensor fusion, develop more robust data pre-processing techniques, and improve model efficiency for real-time deployment.

Data availability

The data supporting this study's findings are openly available at https://www.cis.fordham.edu/wisdm/dataset.php, reference number 44.

References

Dhiman, C. & Vishwakarma, D. K. A review of state-of-the-art techniques for abnormal human activity recognition. Eng. Appl. Artif. Intell. 77, 21–45 (2019).

Mekruksavanich, S. & Jitpattanakul, A. Lstm networks using smartphone data for sensor-based human activity recognition in smart homes. Sensors 21(5), 1636 (2021).

Qiu, S. et al. Multi-sensor information fusion based on machine learning for real applications in human activity recognition: State-of-the-art and research challenges. Inf. Fusion 80, 241–265 (2022).

Deepaletchumi, N. & Mala, R. Leveraging variational autoencoder with hippopotamus optimizer-based dimensionality reduction model for attention deficit hyperactivity disorder diagnosis data. J. Intell. Syst. Internet Things 16(1), 75 (2025).

Yadav, S. K., Tiwari, K., Pandey, H. M. & Akbar, S. A. A review of multimodal human activity recognition with special emphasis on classification, applications, challenges and future directions. Knowl. Based Syst. 223, 106970 (2021).

Singh, A. D., Sandha, S. S., Garcia, L. & Srivastava, M. Radhar: Human activity recognition from point clouds generated through a millimeter-wave radar. In Proceedings of the 3rd ACM Workshop on Millimeter-wave Networks and Sensing Systems, 51–56 (2019).

Hussain, Z., Sheng, M. & Zhang, W. E. Different Approaches for Human Activity Recognition: A Survey. arXiv preprint https://arxiv.org/abs/1906.05074 (2019).

Wang, Y., Cang, S. & Yu, H. A survey on wearable sensor modality centred human activity recognition in health care. Expert Syst. Appl. 137, 167–190 (2019).

Xia, K., Huang, J. & Wang, H. LSTM-CNN architecture for human activity recognition. IEEE Access 8, 56855–56866 (2020).

Jalon Arias, E., Aguirre Paz, L. M. & Molina Chalacan, L. Multi-sensor data fusion for accurate human activity recognition with deep learning. Fusion Pract. Appl. 13(2), 62 (2023).

Yazici, A. et al. A smart e-health framework for monitoring the health of the elderly and disabled. Internet Things 24, 100971 (2023).

Raj, R. & Kos, A. An improved human activity recognition technique based on convolutional neural network. Sci. Rep. 13(1), 22581 (2023).

Patil, B. U. & Ashoka, D. V. Data integration based human activity recognition using deep learning models. Karbala Int. J. Mod. Sci. 9(1), 11 (2023).

Islam, M. M., Nooruddin, S., Karray, F. & Muhammad, G. Multi-level feature fusion for multimodal human activity recognition in Internet of Healthcare Things. Inf. Fusion 94, 17–31 (2023).

Snoun, A., Bouchrika, T. & Jemai, O. Deep-learning-based human activity recognition for Alzheimer’s patients’ daily life activities assistance. Neural Comput. Appl. 35(2), 1777–1802 (2023).

Hu, R., Chen, L., Miao, S. & Tang, X. SWL-Adapt: An unsupervised domain adaptation model with sample weight learning for cross-user wearable human activity recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, vol. 37, no. 5, 6012–6020 (2023).

Najeh, H., Lohr, C. & Leduc, B. Convolutional neural network bootstrapped by dynamic segmentation and stigmergy-based encoding for real-time human activity recognition in smart homes. Sensors 23(4), 1969 (2023).

Alhussen, A., Ansari, A. S. & Mohammadi, M. S. Enhancing user experience in AI-powered human–computer communication with vocal emotions identification using a novel deep learning method. Comput. Mater. Contin. 82(2), 2909–2929 (2025).

Pan, H., Tong, S., Wei, X. & Teng, B. Fatigue state recognition system for miners based on a multimodal feature extraction and fusion framework. IEEE Trans. Cogn. Dev. Syst. 17, 410–420 (2024).

Gonçalves, H. R. et al. A Convolutional neural network based model for daily motor activities recognition: A contribution for Parkinson’s disease. Eng. Appl. Artif. Intell. 151, 110706 (2025).

Liang, F. et al. Interlimb and Intralimb synergy modeling for lower limb assistive devices: Modeling methods and feature selection. Cyborg Bionic Syst. 5, 0122 (2024).

Alsaadi, M. et al. Logical reasoning for human activity recognition based on multisource data from wearable device. Sci. Rep. 15(1), 380 (2025).

Inoue, Y., Kuroda, Y., Yamanoi, Y., Yabuki, Y. & Yokoi, H. Development of wrist separated exoskeleton socket of myoelectric prosthesis hand for symbrachydactyly. Cyborg Bionic Syst. 5, 0141 (2024).

Al Farid, F. et al. A structured and methodological review on multi-view human activity recognition for ambient assisted living. J. Imaging 11, 182 (2025).

Gao, Q., Deng, Z., Ju, Z. & Zhang, T. Dual-hand motion capture by using biological inspiration for bionic bimanual robot teleoperation. Cyborg Bionic Syst. 4, 0052 (2023).

Hamad, R. A., Woo, W. L., Wei, B. & Yang, L. Self-supervised learning for activity recognition based on datasets with imbalanced classes. IEEE Trans. Emerg. Top. Comput. Intell. https://doi.org/10.1109/TETCI.2025.3526504 (2025).

Uesugi, K., Mayama, H. & Morishima, K. Analysis of rowing force of the water strider middle leg by direct measurement using a bio-appropriating probe and by indirect measurement using image analysis. Cyborg Bionic Syst. 4, 0061 (2023).

Paul, A., Khan, S., Mondal, D. & Singh, P. K. Recognizing human activities in ambient assisted environment from wearable sensor data using Gramian angular field and deep CNN. In Enabling Person-Centric Healthcare Using Ambient Assistive Technology, Volume 2: Personalized and Patient-Centric Healthcare Services in AAT, 199–226 (Springer, Cham, 2025).

Zhang, S. et al. A versatile continuum gripping robot with a concealable gripper. Cyborg Bionic Syst. 4, 0003 (2023).

Rajpopat, S., Kumar, S. & Punn, N. S. Cerebral palsy detection from infant using movements of their salient body parts and a feature fusion model. J. Supercomput. 81(1), 106 (2025).

Yu, F., Liu, Y., Wu, Z., Tan, M. & Yu, J. Adaptive gait training of a lower limb rehabilitation robot based on human–robot interaction force measurement. Cyborg Bionic Syst. 5, 0115 (2024).

Liu, L., Sun, Y. & Ge, X. A hybrid multi-person fall detection scheme based on optimized YOLO and ST-GCN. Int. J. Interact. Multimed. Artif. Intell. 9(2), 26 (2025).

Almalki, N. S. et al. IoT-assisted human activity recognition using bat optimization algorithm with ensemble voting classifier for disabled persons. J. Disabil. Res. 3(2), 20240006 (2024).

Pellano, K. N., Strümke, I., Groos, D., Adde, L. & Ihlen, E. A. F. Evaluating explainable ai methods in deep learning models for early detection of cerebral palsy. IEEE Access. 13, 10126–10138 (2025).

Prakash, S., Jeyasudha, M. & Priya, S. Lower limb prosthetics using optimized deep learning model—A pathway towards SDG good health and well being. J. Appl. Data Sci. 5(3), 1147–1161 (2024).

Duc, P. T. & Toan, V. D. Wearable fall detection device for stroke warning based on IoT technology and convolutional neural network. Meas. Interdiscip. Res. Perspect. https://doi.org/10.1080/15366367.2025.2464970 (2025).

Zaher, M., Ghoneim, A. S., Abdelhamid, L. & Atia, A. Unlocking the potential of RNN and CNN models for accurate rehabilitation exercise classification on multi-datasets. Multimed. Tools Appl. 84, 1–41 (2024).

Cochran, J. M. et al. Breast cancer differential diagnosis using diffuse optical spectroscopic imaging and regression with z-score normalized data. J. Biomed. Opt. 26(2), 026004–026004 (2021).

Yifan, Y., Dazhi, W., Yanhua, C., Hongfeng, W. & Min, H. A novel evolutionary multitasking feature selection approach for genomic data classification. In The 16th Asian Conference on Machine Learning (Conference Track) (2025).

Jayanna, N. S. & Lingaraju, R. M. Seasonal auto-regressive integrated moving average with bidirectional long short-term memory for coconut yield prediction. Int. J. Electr. Comput. Eng. 15(1), 2088–8708 (2025).

Jlidi, A. & Kovács, L. Applying deep generative models to portrait art generation: A comparative study of GANs and VAEs. Prod. Syst. Inf. Eng. 12(1), 58–67 (2024).

Samal, K. K. R. Auto imputation enabled deep temporal convolutional network (TCN) model for PM2.5 forecasting. EAI Endorsed Trans. Scalable Inf. Syst. 12(1), 1–15 (2025).

Radhika, R. & Mahajan, R. A novel framework of hybrid optimization techniques for contrast-enhancement in cardiac MRI medical images. J. Angiother. 8(3), 1–11 (2024).

Ankalaki, S. Simple to complex, single to concurrent sensor based human activity recognition: Perception and open challenges. IEEE Access 12, 93450–93486 (2024).

Miah, A. S. M., Hwang, Y. S. & Shin, J. Sensor-based human activity recognition based on multi-stream time-varying features with ECA-net dimensionality reduction. IEEE Access 12, 151649–151668 (2024).

Acknowledgements

The author extends his appreciation to the King Salman Center for Disability Research for funding this work through Research Group no KSRG-2024-136.

Author information

Contributions

All contributions were made by Dr. Hamed Alqahtani.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Alqahtani, H. Implementing ensemble of deep learning model with optimization techniques for human activity recognition to assist individuals with disabilities. Sci Rep 15, 36519 (2025). https://doi.org/10.1038/s41598-025-09970-4

DOI: https://doi.org/10.1038/s41598-025-09970-4