Abstract

The structural integrity and longevity of aluminum alloy components in lightweight engineering require accurate and efficient damage detection and prognosis methods. Traditional supervised machine learning (ML) techniques often face limitations due to dependency on large datasets, risk of overfitting, and high computational costs. To overcome these challenges, this study proposes an unsupervised learning framework that combines k-means clustering with a multi-phase gamma process to detect and model damage in aluminum plates. Scanning Acoustic Microscopy (SAM) images serve as the data source, from which comprehensive features are extracted in time, frequency, and time-frequency domains using Short-Time Fourier Transform (STFT). The K-means algorithm enables precise localization and sizing of surface defects without prior labels, while the gamma process captures the stochastic progression of damage over time. The method demonstrates high accuracy in estimating defect geometry and prognostic trajectories while maintaining low computational complexity. This unified, interpretable approach is generalizable to a range of materials and holds strong potential for real-time structural health monitoring (SHM) in safety-critical domains.

Similar content being viewed by others

Introduction

Damage detection has gained substantial attention in recent years due to its critical role in fields such as Structural Health Monitoring (SHM), non-destructive testing, and prognostic health management of engineering systems1. SHM is often integrated with damage prognosis, where the future state of a system is predicted through simulations based on prior knowledge and real-time data. The ability to predict damage progression is crucial, especially in mechanical and civil structures, to ensure safety and minimize economic losses associated with structural defects2. Lightweight structures are extensively used in industries such as aerospace, civil engineering, and automotive manufacturing. Lightweight design strategies aim to reduce the structural weight while preserving durability, thereby increasing productivity and optimizing resource utilization3,4. These structures provide significant environmental and structural benefits5. Among the materials used, aluminium and its alloys are particularly favored due to their high strength-to-weight ratio, ease of fabrication, workability, ductility, thermal conductivity, corrosion resistance, and aesthetically pleasing appearance6.

Despite these advantages, engineering structures are susceptible to damage from various adverse conditions. Early detection of such defects is essential for maintaining functionality and preventing failures7. Structural failures can occur due to poor design, faulty construction, foundation issues, excessive loads, and other factors. For instance, foundation failures may cause uneven stress distributions, leading to collapse. At the same time, extraordinary loads, such as those from heavy snowfalls, floods, earthquakes, and hurricanes, can severely compromise structural integrity8. Several techniques have been developed to detect and monitor damage in aluminum structures. Model-based methods use statistical processing and guided waves to locate defects. PL-SLDV phased arrays provide high-resolution, non-contact visualization using laser-induced vibrations9. Optical correlation techniques track surface strain to identify fatigue-related damage10. Acoustic emission methods detect real-time damage in hybrid aluminum/glass laminates11. Laser ultrasonic techniques combined with deep learning and energy mapping enhance detection performance. Elastic wave modulation spectroscopy detects microcracks through nonlinear wave interactions12. Together, these approaches form a comprehensive toolkit for structural health monitoring of aluminum components. To address similar challenges in metals, composites, and layered structures, additional methods have been developed. These include traditional techniques13,14,15, machine learning approaches16,17,18,19,20,21,22, and statistical signal analysis methods23,24,25,26,27. The core principle behind all these techniques is that structural damage alters physical properties such as stiffness, crack width, damping, or mass1,27. For instance, vibration-based SHM systems extract damage-related features from sensor-acquired signals to assess structural integrity28,29,30,31,32,33. In such systems, the identification of the structural properties is achieved by analyzing the measured vibrations34. Although natural frequency-based methods have been widely used, they often fall short in precisely locating and quantifying the damage35,36,37,38.

Damage detection methods compare structural responses between damaged and undamaged states. Various characteristics are measured and subsequently analyzed using signal processing techniques39,40 or machine learning algorithms41,42. Recently, Artificial Intelligence (AI)-driven approaches have gained prominence, offering solutions to complex problems when sufficiently high-quality datasets are available. However, the performance of these AI models heavily depends on the quality of the training data34. Signal processing remains a pivotal element in damage detection frameworks, as it enables the extraction of subtle changes in structural responses. Feature extraction techniques across time, frequency, and time-frequency domains have been extensively utilized. While time-domain analysis provides direct qualitative insights, it lacks clear frequency information. Frequency-domain analysis, enabled by the Fast Fourier Transform (FFT), reveals spectral content but cannot capture how these characteristics evolve over time. Consequently, time-frequency analysis methods, such as the Short-Time Fourier Transform (STFT), are employed to analyze signals in both domains simultaneously. The STFT partitions a signal into smaller time windows, computing the Fourier Transform of each segment to observe temporal variations in frequency content. However, the method’s accuracy is influenced by the size of the time window, with the uncertainty principle stating that achieving both high time and frequency resolutions simultaneously is not possible43.

Further, damage prognosis aims to forecast the future condition of a system based on its current damaged state, historical data, and anticipated operational environments. This capability is particularly vital for civil infrastructures vulnerable to degradation from events such as earthquakes, where accurate forecasting can save lives, accelerate post-event recovery, and reduce economic losses2. Numerous data-driven methods, including deep learning, recurrent neural networks (RNNs), and meta-learning approaches, have been explored for Remaining Useful Life (RUL) prediction44. Long Short-Term Memory (LSTM) networks have been employed to estimate RUL and model performance degradation in engines45,46. More recently, architectures like the Multiple Convolutional Long Short-Term Memory (MCLSTM) have improved RUL prediction accuracy by capturing temporal-spatial correlations in degradation signals47. Nevertheless, data-driven approaches are often constrained by low interpretability and the necessity of extensive training datasets. Particle filter-based prognostics have also been proposed48, although they can be computationally intensive and less accurate in high-dimensional spaces. Existing research predominantly addresses damage identification, with relatively fewer studies focusing on integrated damage prognosis. Many approaches treat damage identification and prognosis separately, highlighting the need for unified frameworks that integrate both tasks seamlessly.

This study proposes an approach that extracts features from the time, frequency, and time-frequency domains to enhance defect estimation. STFT is specifically utilized for time-frequency analysis to capture important transient signal characteristics. Incorporating all three domains ensures comprehensive feature representation, minimizing the risk of overlooking critical information. K-Means clustering is employed to estimate defect sizes by distinguishing anomalous from normal signal patterns. By partitioning signals into two clusters, the method efficiently identifies anomalies associated with structural defects. Defect diameters serve as a damage index, which is then modeled using a gamma process for prognosis. Unlike supervised deep learning models, this unsupervised technique does not require labeled training data, making it highly suitable for scenarios characterized by sparse or low-fidelity datasets. The approach offers low computational complexity, prevents overfitting, and provides greater interpretability, especially considering that damage in civil structures typically follows a gamma distribution. To validate the proposed methodology, damaged aluminum plates are examined using Scanning Acoustic Microscopy (SAM). SAM is a powerful label-free imaging technique widely employed in biomedical imaging, non-destructive testing, and material research49,50,51. It enables precise visualization of surface and subsurface features, offering both qualitative inspection and quantitative analysis capabilities crucial for structural diagnostics.

Experimental procedure

Sample preparation

An aluminum sheet of grade 7075 was selected for the experiments due to its high strength and favorable mechanical properties. Zinc is the primary alloying element in 7075 aluminum, which contributes to its excellent fatigue strength and superior corrosion resistance, particularly when compared to 2000-series aluminum alloys. Its strength is comparable to many steel grades, making it ideal for applications that require a combination of low weight and high strength. Common uses include aerospace structures, automotive components, medical devices, bicycle parts, and rock-climbing equipment. Although it is more expensive than other aluminum grades, its unique properties justify its use in demanding applications where performance is critical. In less critical scenarios, more cost-effective alloys may be suitable alternatives. For the purpose of high-resolution acoustic imaging, the aluminum sheet was sectioned into smaller samples to facilitate localized inspection using SAM. To simulate damage conditions, a high-speed Dremel drill was employed, using various drill bits to introduce controlled defects into the samples. The alloy’s medium machinability in the annealed condition allowed for the creation of precise and repeatable damage patterns, suitable for evaluation under SAM.

Scanning acoustic microscopic imaging

Figure 1 provides a detailed representation of the configured SAM setup, illustrating the system used for imaging the aluminum samples. The figure includes detailed annotations. It highlights key components and operational configurations. These serve as a visual reference for understanding the SAM imaging process. SAM operates in both reflection and transmission modes, each providing unique insights into the material’s internal properties. These imaging modes also allow visualization of subsurface structures, enabling more detailed analysis of the sample. For further details on the underlying principles and imaging methodologies of SAM, the numerical and imaging aspects are thoroughly explored in52,53,54.

This work relies on the use of Scanning Acoustic Microscopy (SAM) in reflection mode for imaging. The system uses a concave sapphire lens rod. It concentrates the acoustic energy onto the sample. Water is used as the coupling medium. This offers effective transmission of acoustic waves. Ultrasound signals are generated by a signal generator and directed toward the sample. The reflected waves, resulting from interactions with the sample’s surface, are captured and transformed into digital signals, referred to as A-scans or amplitude scans. To generate a C-scan image, the process is repeated across various positions within the XY plane by moving the sample using an X–Y stage. This repeated scanning process collects information from many locations. The collected information is compiled into an accurate 3D image in the direction of X, Y, and Z. This provides valuable information on the internal structure and properties of the sample.

Representation of the SAM setup, which includes a signal generator, RF amplifier (\(T_x\)), and signal receiver amplifier (\(R_x\)) to excite and detect ultrasonic signals. The system is integrated with an XY stage and Z-axis motor controller for precise spatial positioning of the transducer. Automated scanning and data acquisition are managed by a computer-based control unit linked to the trigger and stage controllers. The sample is positioned in a dedicated container to allow controlled ultrasonic wave propagation55,56,57.

The imaging system employed a 40 MHz PVDF-focused Olympus transducer with specific features, including a 6.35 mm aperture and a 12.5 mm focal length. A custom-designed SAM, depicted in Fig. 1, was controlled using a LabVIEW program. This SAM was integrated with a Standa high-precision motorized XY scanning stage (8MTF-200-Motorized XY Microscope Stage) for data collection. Acoustic imaging was facilitated by National Instruments’ PXIe FPGA modules and FlexRIO hardware housed within a PXIe-1082 chassis. The setup employed an arbitrary waveform generator (AT-1212-NI) to excite the transducer using predefined Mexican hat wavelets, chosen for their sharp time localization and smooth frequency profile, enabling precise detection of material changes52,53,58. Once the transducer generated the excitation signal, the reflected acoustic waves from the material were collected and amplified using a high-frequency RF amplifier (AMP018032-T) to enhance signal strength and clarity. These amplified reflections were then digitized using a 12-bit high-speed digitizer (NI-5772) operating at a sampling rate of 1.6 gigasamples per second (GS/s). This high sampling rate ensured fine temporal resolution, allowing accurate capture of the ultrasonic waveform details needed for subsequent signal analysis.

Development of the damage index

For an effective prognosis, a damage index that reflects the system’s condition is essential. This can be a direct or indirect measure of damage. Time-domain features, such as mean, RMS, and peak value, provide statistical insights into signal amplitude and energy distribution but may be insufficient for detecting complex structural changes. Frequency-domain features are obtained using the FFT. These features reveal important spectral characteristics. The fundamental frequency gives information about the stiffness of the material. Spectral entropy helps detect subtle or hidden changes in the signal. Power Spectral Density (PSD) shows which frequency ranges contain the most energy. Time-frequency features, derived from the Wavelet Transform, capture transient and non-stationary effects by analyzing signals at multiple scales. Integrating all three domains enhances damage detection accuracy, offering a robust framework for structural health monitoring. In this paper, defect size is evaluated using K-means clustering for prognosis, based on extracted time, frequency, and time-frequency domain features.

K-means clustering

Most of the available data is arbitrary and is not labeled. Unsupervised learning algorithms like K-means clustering can be useful in such cases59. Clustering algorithms are used to partition the unlabeled data into clusters such that data objects within the same cluster have similar features but differ from those in other clusters. The K-means clustering algorithm works well if we can pre-identify the number of clusters. In this work, only two clusters will be used for defect estimation: one representing normal signals and the other representing defects. The K-means clustering algorithm is a partitional clustering algorithm that divides the data into clusters. The distance is calculated, and a data point is assigned to a cluster if the distance between the data point and the mean of the cluster is the closest. The standard K-means algorithm uses Euclidean distance as a metric59. Mathematically, given a dataset \(X = \{x_1, x_2, \ldots , x_n\}\) of \(d\)-dimensional data points of size \(n\),

is partitioned into \(k\) clusters \(C = \{c_1, c_2, \ldots , c_k\}\) such that

The K-means algorithm aims to minimize the sum of squared errors for each \(k\) cluster. That is,

where \(\mu _i\) is the mean of the points in cluster \(c_i\). The steps of the K-means algorithm are as follows:

-

1.

Initialization: Select \(k\) random centroids (centers of clusters).

-

2.

Assignment: Assign each data point to the nearest centroid.

-

3.

Recomputation: Recompute the centroids as the mean of all data points assigned to each centroid.

-

4.

Reassignment: Reassign each data point to the new centroids based on the updated mean values.

-

5.

Iteration: Repeat steps 3 and 4 until the cluster assignments no longer change.

-

6.

Completion: Finish the process if there are no reassignments of data points to different centroids.

The data may require some preprocessing. In this work, the features are first normalized so that the algorithm works effectively. Standardizing all the features gives them equal weight, ensuring that redundant or noisy objects do not impact the performance of our model and that we have reliable data to work with. The K-means algorithm uses Euclidean distance as a distance metric, which can cause the algorithm to give inaccurate results if there are irregularities in the size of different features. Otherwise, some features will be given more importance than others. The K-means algorithm is applied with only two clusters. With such a small number, it is not significantly affected by the curse of dimensionality. to perform effectively in estimating defect locations and characteristics. This algorithm is scalable on large datasets and requires lower computational costs than various deep neural network architectures59.

Stochastic process modelling

Gamma process modelling

After estimating the defect diameters, the next step is to develop a surrogate model for structural damage. In this model, the defect diameter is used as a key indicator of damage, denoted as \(\eta _{jt}\), where jth represents the specific location and t represents the time instant. This indicator provides a quantitative measure of damage severity at a specific location over time. To capture how the damage evolves, a stochastic process is used. This approach accounts for the inherent uncertainty and variability in the degradation behavior. Among the various stochastic processes available, the gamma process is particularly well-suited for this application. The gamma process is ideal for modeling monotonic, non-decreasing degradation processes, which are characteristic of many structural damage phenomena. It provides a robust framework for describing how damage accumulates over time in a way that reflects the natural progression of wear, stress, and environmental influences on the structure60. The gamma process is a continuous-time Markov process where the increments are independent and gamma-distributed61. For \(0 \le s < t\), the distribution of the random variable \(X(t) - X(s)\) is a gamma distribution \(\Gamma (a(t-s), b)\)62:

Here, a is the shape parameter and b is the scale parameter. By the additivity property of the gamma distribution, X(t) also follows the distribution, \(\Gamma (at,b)\)63. Since the gamma process is monotonically increasing, it becomes a suitable choice for modeling damage that increases over time. In this study, we will assume that the damage indicator follows a gamma process. Let the shape and scale parameters in the undamaged state be \(a_0\) and \(b_0\), respectively. Suppose a shock at time instant \(\tau + 1\) causes the scale parameter to change from \(b_0\) to \(b_1\), but the shape parameter \(a_0\) remains constant. The increments in damage indicator can then be modeled as a two-phase gamma process62.

Here, \(\Delta \eta _{jt}\) is an increment in \(\eta _{jt}\) from time t to \(t + t_0\) where \(t_0\) is the time difference between two consecutive measurements. The likelihood is given by the following equation62

Log-likelihood can be obtained by taking the logarithm of this function.

Now, to maximize the likelihood:

In this model, the parameter \(b_1\) is a function of the change point \(\tau\). To determine the optimal change point \(\hat{\tau }\), the value of \(\tau\) that maximizes the likelihood function must be identified. This corresponds to the time at which a change in the system is most likely to have occurred. The estimation of \(\hat{\tau }\) and the corresponding parameter \(b_1\) is carried out under the assumption that the parameters \(a_0\) and \(b_0\) are known. These parameters characterize the system’s behavior before the change and can be estimated from measurements taken when the system is in a healthy state, as described in62.

Parameter update and prognosis

Let the changes in damage indicator (\(\Delta \eta _ {jt}\)) follow a gamma distribution with a and b as the shape and scale parameters, respectively. Suppose the conjugate prior of b follows a gamma distribution with shape and scale parameters as \(\alpha _0\) and \(\beta _0\) respectively. Then the prior, likelihood, and posterior will be given by the following equations:

Prior :

Likelihood:

Posterior:

Since b has a conjugate prior, its posterior also follows a gamma distribution but with parameters - \(\alpha _ 0 '\) and \(\beta _0'\) given by62:

The posterior distribution \(p(b|\mathcal {D})\) gets continuously updated as new data becomes available; hence, the estimate of b also gets updated.

Results and discussion

Extracting signal features and developing the damage index

Several samples with different defect sizes were imaged using Scanning Acoustic Microscopy (SAM). Each sample measured 25 mm \(\times\) 25 mm, and consisted of an aluminum plate containing a predefined defect. When acoustic waves interacted with the sample, they were reflected back and subsequently captured and converted into electrical signals for analysis. The analog signals are converted to discrete-time signals using a digitizer with a sampling frequency of 200 MHz. The transducer’s raster scanning motion was used on the sample surface. In the raster mode, the echo signals are collected at each plate point, and the signal information is recorded. The step size 0.05 mm was used in horizontal and vertical directions, thus acquiring \(100 \times 100\) pixels. Thus, the acquired signals include those resulting from damage (defect) to the plate, which are classified as anomalous, while others represent normal conditions.

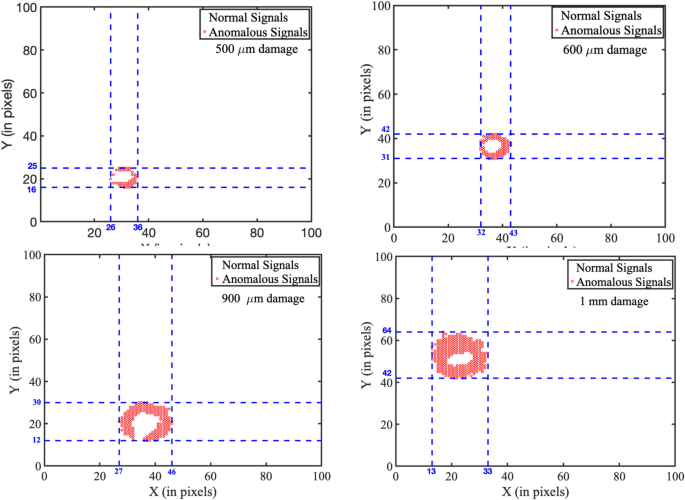

Clustering of anomalous and normal signals using K-means clustering, shown in four subfigures (a–d), representing different defect diameters as indicated in each subfigure. Subfigure (a) corresponds to a defect diameter of (\(500 \upmu m\)), showing a localized anomaly cluster (red circles) near the lower-left region. Subfigure (b) represents a defect diameter of (\(600 \upmu m\)), with a noticeable shift in the distribution of anomalous signals. Subfigure (c) depicts a defect diameter of (\(900 \upmu m\)), showing increased density and spread of anomalous signals across a broader region. Subfigure (d) corresponds to the largest defect diameter of (1mm), where anomalies consolidate into a distinct central cluster. The red circular regions represent cluster boundaries and centroids, illustrating the relationship between defect diameter and the spatial distribution of anomalous signals. Each coordinate in the graph corresponds to a signal at a specific spatial location. Anomalous signals (marked in red) indicate defect regions, while white areas denote normal signals from undamaged positions.

From these signals, features are extracted across the time domain (mean value, RMS value, peak value), the frequency domain (mean frequency, occupied bandwidth, power bandwidth), and the time-frequency domain (spectral skewness, time-frequency ridges). These extracted features are then normalized to acquire our training dataset. The K-means clustering algorithm was then employed to identify anomalous and normal signals based on these features. The experiment was conducted on \(5.05 mm \times 5.05 mm\) aluminum plates with defect diameters of \(500 \upmu m\), \(600 \upmu m\), \(900 \upmu m\), and \(1000 \upmu m\). The results for these 4 samples are shown in Fig. 2. The cluster points are mapped onto their spatial locations, and the maximum pairwise distance among the anomalous cluster points is obtained to find the diameter of the defect. If any outliers were observed, they were ignored in this analysis. The estimated defect diameters are shown in Table 1. The results obtained are very close to the actual diameters.

The estimation error for the largest defect (1 mm) reaches 19%, which is notably higher than for smaller defects. This can be attributed to several technical factors. In SAM, although high-resolution imaging is achievable, the lateral resolution is fundamentally limited by the acoustic wavelength and transducer properties. As the defect size increases, the acoustic signal tends to exhibit more diffuse boundary characteristics, making precise delineation difficult. This results in increased uncertainty during feature extraction and classification. In addition, K-means clustering introduces errors of approximation due to the assumption of spherical clusters with the same variance, which cannot hold for higher-order defects characterized by their irregular and spatially dispersed nature. In this case, signal variability and overlapping decision boundaries reduce the accuracy of estimation. As damage spreads over larger regions, the features may lose sensitivity to small spatial changes. This leads to a decline in classification performance.

In order to address these issues, several improvements are proposed. Applying advanced clustering algorithms such as Gaussian Mixture Models or Density-Based Spatial Clustering of Applications with Noise (DBSCAN) can better capture more complex spatial patterns63,64. Increasing the transducer frequency or incorporating signal deconvolution algorithms can provide higher spatial resolution and tighter borders. Additionally, incorporating spatial correlation features into the feature set offers a promising approach to improving discrimination between larger undamaged regions and long-damaged regions. Together, these advancements can significantly reduce estimation error, particularly for large or irregular defect morphologies.

Surrogate modeling using gamma process

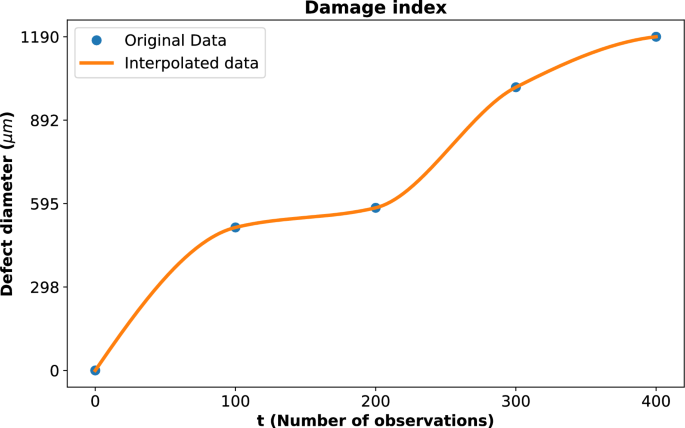

Due to the limited number of data points in the damage index, interpolation was employed to estimate intermediate values. The Piecewise Cubic Hermite Interpolating Polynomial (PCHIP) method was used, as it preserves the shape and monotonicity of the data while providing smooth predictions between existing points. The technique provides a smooth and continuous curve of the damage development, as shown in Fig. 3. For the gamma process modeling, the initial shape parameter value, \(a_0\), was assumed to be 6 and was kept constant throughout the analysis. Similarly, the initial rate parameter (\(b_0\)) was assigned a value of 1. Using these assumptions, the likelihood function was calculated to evaluate the fit of the gamma process to the given data.

Damage index represented by defect diameter (\({\upmu }m\)) as a function of the number of observations. The blue dots indicate the original data points, while the orange line represents the interpolated data using PCHIP. The interpolation provides a continuous representation of the defect diameter progression.

The damage index is represented by defect diameter (\({\upmu }m\)) as a function of the number of observations, as shown in Fig. 3. The deep blue dots indicate the original data points obtained from experimental measurements, which represent discrete instances of observed damage progression. The orange line shows the interpolated data generated using PCHIP, a method chosen for its ability to preserve the monotonicity and shape of the data. The interpolation technique offers a smooth and continuous representation of defect diameter progression. It effectively bridges gaps between sparse data points and enables a more detailed understanding of damage evolution over time.

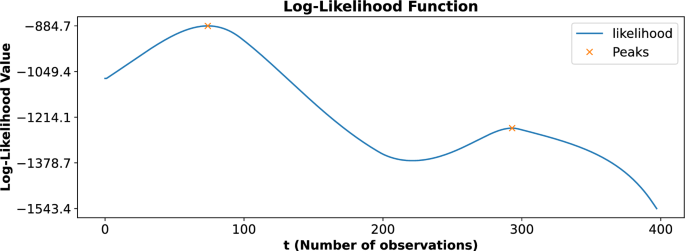

The likelihood function as a function of the number of observations (t). The blue curve represents the computed likelihood values, while the orange markers indicate the identified local maxima at time instants 74 and 293. These maxima correspond to critical change points in the damage progression, highlighting transitions in the structural degradation process and serving as the basis for the two-phase gamma process modeling.

Upon analyzing the complete dataset, the likelihood function revealed two prominent local maxima, as shown in Fig. 4. These peaks occurred at time instants 74 and 293, signifying critical points where the progression of damage exhibited noticeable changes. These time instants, referred to as change points, represent transitions in the behavior of the damage progression, indicating that the process is not uniform over time but instead evolves in distinct phases. To accurately capture these transitions and effectively model the damage progression, a two-phase gamma process modeling approach was employed. The first phase of the gamma process was applied to the time interval spanning from 0 to 293, with the first change point occurring at time instant 74. This phase represents the initial progression of damage, encompassing the behavior both before and after the first significant transition at time 74. The second phase of the gamma process was applied to the time interval from 74 to 400, with the second change point identified at time instant 293. This phase represents the progression of damage following the second major transition at time 293, indicating a distinct dynamic in the degradation process. By dividing the gamma process into two distinct phases, this modeling approach provided a more nuanced and accurate representation of the damage progression over time. The model effectively captured the evolving dynamics of the degradation process, with distinct transitions marked by identified change points. By differentiating between the pre- and post-transition phases, the two-phase modeling approach offers a clearer understanding of how damage accumulates over time. Detecting these behavioral shifts is crucial for enhancing the accuracy and reliability of structural health assessments.

The likelihood function, as illustrated in Fig. 4, represents the computed likelihood values as a function of the number of observations (t). The blue curve shows the variation of the likelihood values across the dataset, while the orange markers highlight two critical local maxima observed at time instants 74 and 293. These maxima are significant as they correspond to change points in the damage progression, indicating distinct transitions in the structural degradation process. The presence of these peaks in the likelihood function suggests that the damage evolution does not follow a uniform pattern but consists of two distinct phases separated by the change points. The first peak at \(t=74\) marks the transition into the initial phase of damage progression, while the second peak at \(t=293\) indicates the onset of a second degradation phase. These change points were instrumental in defining the two-phase gamma process model, which was employed to capture the temporal dynamics of the damage progression accurately. By identifying these critical points, the likelihood function provides a robust method for detecting transitions in structural behavior, enabling more detailed and precise modeling of the degradation process over time.

Real-time updation of the gamma process for prognosis

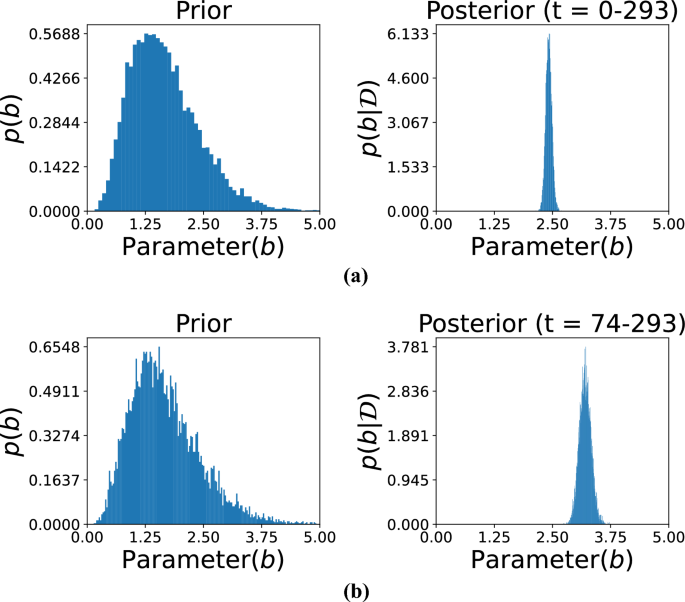

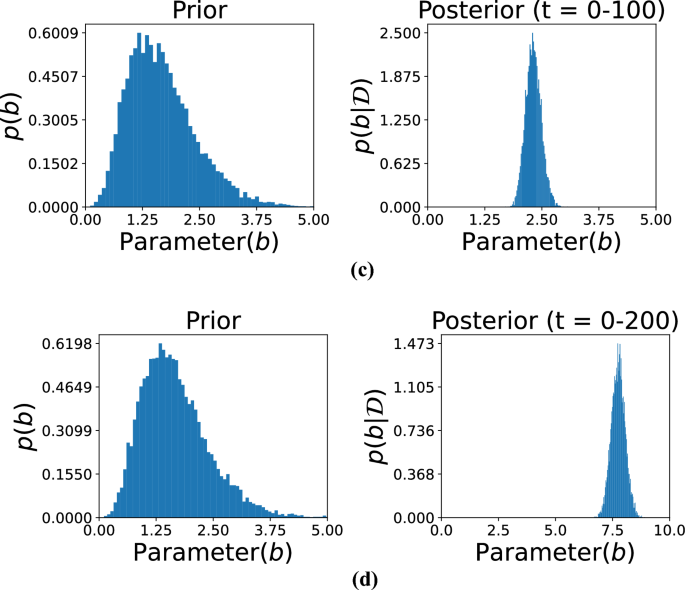

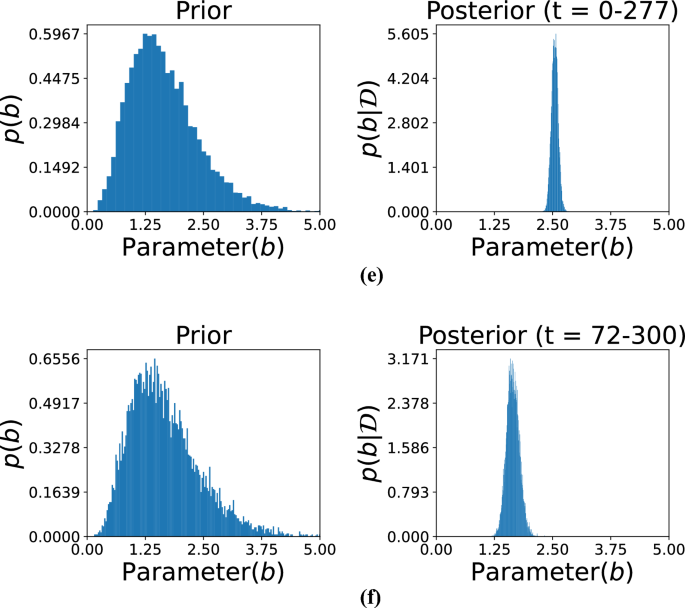

Figures 5, 6, and 7 illustrate the comparison between prior and posterior distributions for the model parameter b, demonstrating the application of Bayesian inference in refining parameter estimates based on observed data across different time intervals. Each figure displays the prior and posterior distributions of \(b\). The prior distribution reflects the initial assumptions, modeled as a gamma distribution with hyperparameters \(\alpha _0 = 5\) and \(\beta _0 = 3\). These parameters define the initial spread and central tendency of the distribution, establishing a prior baseline before incorporating any observed data. The posterior distribution of \(b\), obtained by updating the prior with data from specific time intervals, is also presented. This allows for a refined estimation that reflects both prior knowledge and evidence from actual observations.

The subfigures in these three figures represent different temporal segments that capture distinct phases of the damage evolution process. Specifically, Fig. 5 includes subfigures (a) for time t= 0–293 and (b) for t= 74–293, Fig. 6 includes (c) for t= 0–100 and (d) for t= 0–200, and Fig. 7 includes (e) for t= 0–277 and (f) for t=72–300. These intervals were selected to reflect the evolving nature of structural degradation and allow an assessment of how time-dependent data influences the estimation of the parameter b.

Comparison of the prior and posterior distributions of parameter b across different observation intervals. Subfigure (a) displays the distribution based on observations from 0 to 293, while subfigure (b) focuses on the updated distribution from observations 74 to 293. The comparison illustrates how incorporating new data refines the estimate of parameter b over time.

Shorter observation windows, such as those in subfigures (c) and (d) of Fig. 6, often result in broader posterior distributions. This indicates higher uncertainty due to limited data, which constrains the model’s ability to infer the true parameter values with confidence. In contrast, longer intervals, such as those in Fig. 5, allow for the inclusion of more data points, which sharpens the posterior distribution and narrows the uncertainty. This trend illustrates the principle that increasing the volume of data over time leads to more accurate and confident estimates. Together, these figures highlight the importance of Bayesian inference in damage modeling, where prior knowledge is continually refined through the integration of new data. The shifting and narrowing of the posterior distributions visually and quantitatively emphasize the model’s increasing confidence in the parameter b as more evidence becomes available. Furthermore, the variation in posterior behavior across different time intervals underlines the significance of temporal data selection in predictive modeling. The ability of this approach to adaptively incorporate new information makes it particularly well-suited for structural health monitoring applications, where the degradation process is progressive and dynamic.

Posterior distributions of the model parameter b over large observation time spans. Subfigure (c) presents results based on time steps 0–100, whereas subfigure (d) depicts distributions based on time steps 0–200. These comparisons demonstrate how including more temporal information improves posterior estimation and thus enhances confidence in inferring parameters.

Visualization of the posterior distributions of the parameter evolving b from data covering different periods. Subfigure (e) presents the distribution according to early-period data (0–277), and subfigure (f) presents those from a later period (72–300). The contrast indicates how additional data focuses the estimate of b, monitoring dynamic change in system behavior over time.

The hyperparameters \(\alpha _0\) and \(\beta _0\) for the prior distribution of b were set to 5 and 3, respectively. These hyperparameters determine the shape and scale of the prior distribution, thereby influencing its initial variance and central tendency. As shown in the figure, observational data from specific time ranges were then used to compute the posterior distributions. The subfigures correspond to the following time intervals: (a) t= 0–293, (b) t= 74–293, (c) t= 0–100, (d) t= 0–200, (e) t= 0–277, and (f) t= 72–300. Each time range reflects a different phase of the damage progression, providing insights into how parameter b evolves. The posterior distributions reveal the effect of the observed data in sharpening and shifting the initial assumptions represented by the priors. For instance, shorter time intervals, such as in subfigures (c) and (d), might yield less concentrated posterior distributions due to limited data, leading to broader uncertainty in the parameter estimates. Conversely, longer time intervals, as in subfigures (a) and (b), provide more precise posterior distributions with reduced uncertainty. This progression demonstrates how both the quantity and quality of data contribute to the refinement of parameter estimates. The figure illustrates the dynamic interaction between prior knowledge and observed data within the Bayesian framework. The prior distribution serves as the initial foundation for parameter estimation, while new observations iteratively update this foundation, leading to more accurate and confident results. Moreover, the selection of time intervals significantly influences the resulting posterior distributions, showing how the temporal resolution of data impacts the inference process. This approach is particularly effective for modeling time-dependent phenomena, such as damage progression in structures, where the parameter b captures critical characteristics of the underlying degradation behavior.

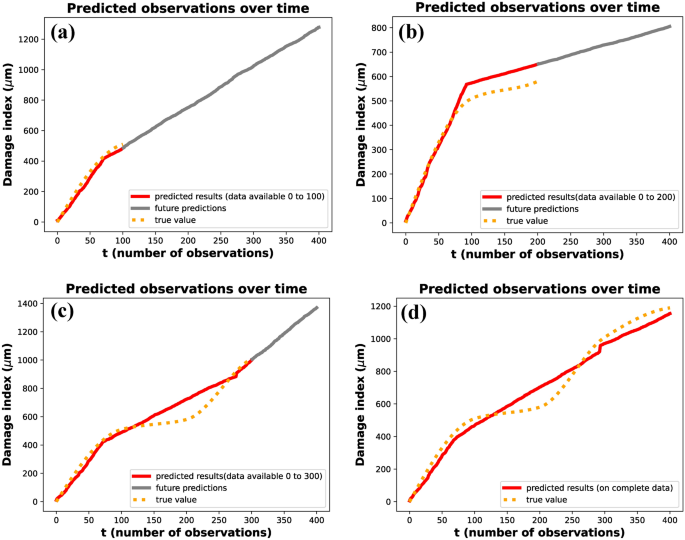

Predicted observations over time using gamma process modeling. The orange dotted curve represents the original data available up to a given time instant. The red curve illustrates the predicted results based on the available data, while the grey curve depicts the predicted future state of the system. Subfigures (a–d) show the predictions for data availability up to \(t= 100\), \(t= 200\), \(t= 300\), and the complete dataset, respectively. The figure demonstrates the accuracy of predictions as the dataset increases in size.

The damage index progression over time was analyzed to evaluate the effect of available data on the accuracy of predictions, as illustrated in Fig. 8. The figure presents four scenarios where the predicted damage index is compared to the true values (\({\upmu }m\)) for varying levels of data availability: (a) data up to \(t=100\), (b) data up to \(t=200\), (c) data up to \(t=300\), and (d) the complete dataset. In each subfigure, the red line represents the predicted results based on the available data, the orange dotted line indicates the future predictions, and the gray line shows the true values. The analysis demonstrates that as more data becomes available, the predictions become increasingly accurate and align more closely with the true damage progression. When the complete dataset was analyzed, two distinct change points were identified at \(t=72\) and \(t=277\). The estimated values of the parameter b from the posterior distributions were found to be 2.42 after the first change point and 3.20 after the second change point (Fig. 8c,d). This indicates that the damage progression exhibited two distinct phases, with a clear transition in the degradation dynamics after each change point. A two-phase gamma process was subsequently applied to model this behavior, effectively capturing the progression over time.

For partial datasets, different observations were made. When data from t = 0–100 was analyzed, a single change point was identified at \(t= 72\), and the parameter b was estimated from the posterior distribution (Fig. 5). Similarly, when data from t = 0–200 was analyzed, a single change point was observed at \(t=93\), with a corresponding parameter value of b obtained from the posterior distribution (Fig. 5f). However, in data from t = 0–300, two change points were identified at \(t= 72\) and \(t=277\), aligning with the results obtained from the complete dataset. These results highlight the impact of data availability on the ability to detect change points and estimate model parameters accurately. With smaller datasets, fewer change points were identified, and the predictions exhibited greater uncertainty, as reflected in the future predictions (orange dotted lines).

As additional data were incorporated, the posterior distributions became increasingly concentrated, resulting in improved estimates of parameter b and more accurate modeling of the damage progression. The value of b was computed as the mean of its posterior distribution. These estimated values were then used to model the system using a Gamma process, capturing the stochastic nature of cumulative damage over time. Figure 8 presents the predicted results and the predicted future state of the system based on this gamma process modeling at various time instants. Figure 8a–c represent the predicted and future damage index up to time instants 100, 200, and 300, respectively. Figure 8d shows the predicted results when complete data is available, closely following the true values. The application of a two-phase gamma process for prognosis provided a robust framework for capturing the non-linear progression of damage over time. This methodology demonstrates the importance of leveraging complete datasets whenever possible to improve the reliability and precision of damage modeling.

Thus, the damage index follows the following distribution on analyzing the complete data from 0–400 :

Figure 8 shows the predicted observations by this gamma process modeling across different time instants.

To quantitatively evaluate the accuracy of the damage prognosis model, a comparison between the predicted and actual damage indices was conducted using Root Mean Squared Error (RMSE) and Mean Absolute Percentage Error (MAPE). Table 2 presents the performance metrics across different partial observation intervals. For forecasts generated using data up to \(t=100\), the RMSE was 80.94 and MAPE was 11.09%, indicating reasonable accuracy during early-stage degradation. When the observation window was extended to \(t = 200\), the RMSE increased to 195.57 and the MAPE to 16.56%, reflecting a temporary drop in performance. This decline may be attributed to evolving damage dynamics that were not yet well captured by the model, possibly due to the use of interpolated data instead of original measurements and the inherent randomness of the gamma process. However, predictive accuracy improved markedly with further data: for \(t=300\), the RMSE decreased to 75.71 and MAPE to 10.60%, while for t=400, the RMSE was 61.59 with a MAPE of 9.99%. These results demonstrate the model’s capacity to generate reliable forecasts with limited initial data, while progressively refining its accuracy as more observations become available. The inclusion of these quantitative metrics strengthens the validation of the proposed unsupervised framework for robust and data-efficient structural health prognosis.

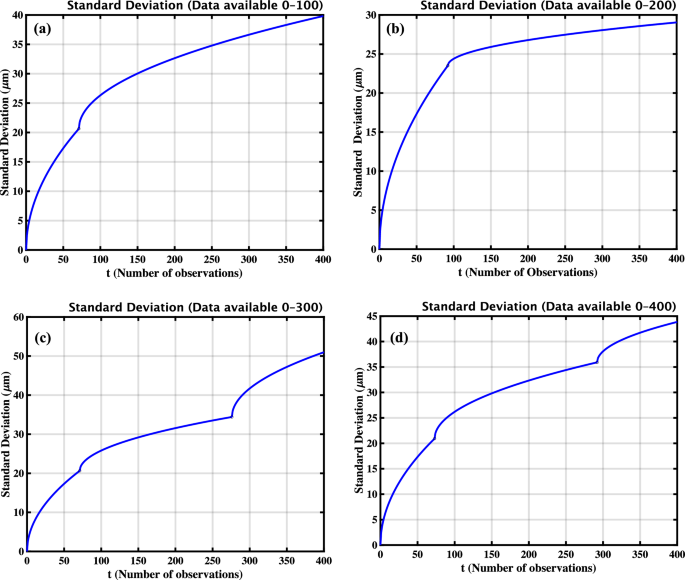

The uncertainty in measurements, or the prediction interval, can be quantified by tracking the standard deviation as more data becomes available. Given that the increments of the damage index follow a Gamma process and are treated as independent random variables, the variance of the damage index at a specific time instant is equal to the cumulative sum of the variances of all previous increments. For a Gamma distribution with shape parameter a and scale parameter b, the variance of each increment is \(a/b^2\). Therefore, the standard deviation of the damage index at time t, accounting for cumulative contributions, is given by60,65,66 :

The figures show how the standard deviation changes with time. Let \(\text {Standard Deviation}(\eta _{jt}) = \sigma (t)\)

For data available 0–100 :

For data available 0–200 :

For data available 0–300 :

For data available 0–400 :

The standard deviation of the damage index is computed over varying amounts of observed data to assess signal stability and damage sensitivity in the PZT sample. Subfigures (a–d) display standard deviation curves calculated using increasing data lengths: (a) 100 observations, (b) 200 observations, (c) 300 observations, and (d) the complete dataset of 400 observations. Each plot illustrates the evolution of standard deviation over time, allowing for a comparison of forecasting uncertainty under different levels of data availability. As additional data are incorporated, the standard deviation increases, reflecting the cumulative uncertainty associated with the progression of the damage index.

To investigate the variability introduced by the stochastic nature of the damage evolution model, the standard deviation of the damage index was analyzed over time. Increments in the damage index are modeled using a Gamma process. As a result, the variance of the damage index increases over time. This is because the variance at any given time is the cumulative sum of the variances of all previous increments. This holds under the assumption that the increments are independent Gamma-distributed variables. Figure 9 illustrates this behaviour by showing standard deviation curves computed using increasing amounts of available data: 100, 200, 300, and 400 observations, corresponding to subfigures (a) through (d), respectively. As expected, the standard deviation of the damage index increases with the number of observations. This reflects the accumulating uncertainty associated with progressive damage over time. The analysis validates the suitability of the gamma process in capturing both the mean trend and the growing variability of the damage index, which is essential for accurate prognosis in structural health monitoring applications.

Conclusion

This paper demonstrates the efficacy of a data-limited, unsupervised learning model that combines K-means clustering and a multi-stage gamma process for both defect detection and prognosis in aluminum structures. Clustering performances were successful in separating defective and healthy areas of the signal spatially for defects ranging from 500, 600, 900 \(\upmu m\), and 1 mm, with identifiable localization patterns being observed as damage worsened. The PCHIP-interpolated damage index accurately represented the trend of development, while the likelihood function identified important damage evolution milestones at observation times t = 74 and t = 293. These served as the basis for initiating a two-phase Gamma process modeling. Prognostic forecasts generated using partial data (i.e., up to t = 100, t = 200, and t = 300) showed strong agreement with actual measurements, thereby validating the reliability of early predictions. The proposed methodology avoided excessive use of large labeled datasets with a high accuracy rate. It also ensured interpretability through the use of a stochastic gamma-based damage index that was derived based on natural degradation behavior. Through the use of comprehensive feature extraction in the time, frequency, and time-frequency domains through STFT, the model achieved resolution limitations that are typical of standard signal processing. Due to its low computational cost and broad applicability, the proposed approach is well-suited for real-time SHM applications in aluminum structures and other fields such as aerospace, civil, and mechanical systems, where early detection of small-scale damage is essential for ensuring safety and maintaining performance.

Limitation and future direction

The proposed unsupervised framework effectively identifies and models single-stage deterioration in aluminum plates. However, it currently assumes piecewise-stationary damage progression. This limits its application to more general instances of multi-stage deterioration wherein damage behavior may exhibit sudden jumps, non-monotonicity, or shifts in underlying stochastic properties. Future work will extend the gamma process model to capture such multi-phase or hierarchical deterioration kinetics more realistically.

Enhancing predictive accuracy and interpretability can be achieved by integrating physical degradation models−such as fracture mechanics or fatigue crack growth laws−into the data-driven framework. Embedding such models within a Bayesian updating scheme would enable real-time updating of prognostic estimates while being consistent with known physics. The hybrid approach is particularly valuable in identifying damage at an early stage and in scenarios where data are sparse or uncertain. Future work will extend the framework to handle multiple damage sites and their interactions. This will make it more applicable to real-world structures. The method will also be adapted for complex materials, such as composites and anisotropic systems. In these materials, damage signals vary more due to directional properties and internal heterogeneity. From the signal analysis side, alternatives to the Short-Time Fourier Transform, such as wavelet transforms or learned signal embeddings, will be investigated to improve feature robustness against noise and low resolution. Experimental validation at a larger scale under varying operating and environmental conditions will also be required for real-world deployment. Incorporation of uncertainty quantification in both the clustering and prognosis stages will improve the reliability of the framework for real-time structural health monitoring applications.

Data availability

The data sets used and/or analyzed during the current study are available from the corresponding author upon reasonable request.

References

Ojha, S. & Shelke, A. Probabilistic deep learning approach for fatigue crack width estimation and prognosis in lap joint using acoustic waves. J. Nondestr. Eval. Diagn. Progn. Eng. Syst.8 (2025).

Farrar, C. R. & Worden, K. An introduction to structural health monitoring. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 365, 303–315 (2007).

Wang, J., Li, Y., Hu, G. & Yang, M. Lightweight research in engineering: a review. Appl. Sci. 9, 5322 (2019).

Hassan, H. Z. & Saeed, N. M. Advancements and applications of lightweight structures: a comprehensive review. Discov. Civ. Eng. 1, 47 (2024).

Landolfo, R. Lightweight steel framed systems in seismic areas: Current achievements and future challenges. Thin-Walled Struct. 140, 114–131 (2019).

Georgantzia, E., Gkantou, M. & Kamaris, G. S. Aluminium alloys as structural material: A review of research. Eng. Struct. 227, 111372 (2021).

Singh, T. & Sehgal, S. Damage identification using vibration monitoring techniques. Mater. Today Proc. 69, 133–141 (2022).

Almarwae, M. Structural failure of buildings: Issues and challenges. World Sci. News 97–108 (2017).

Tian, Z., Howden, S., Ma, Z., Xiao, W. & Yu, L. Pulsed laser-scanning laser doppler vibrometer (PL-SLDV) phased arrays for damage detection in aluminum plates. Mech. Syst. Signal Process. 121, 158–170 (2019).

Haworth, W., Hieber, A. & Mueller, R. Fatigue damage detection in 2024 aluminum alloy by optical correlation. Metall. Trans. A 8, 1597–1604 (1977).

Dia, A., Dieng, L., Gaillet, L. & Gning, P. B. Damage detection of a hybrid composite laminate aluminum/glass under quasi-static and fatigue loadings by acoustic emission technique. Heliyon.5 (2019).

Straka, L., Yagodzinskyy, Y., Landa, M. & Hänninen, H. Detection of structural damage of aluminum alloy 6082 using elastic wave modulation spectroscopy. Ndt & E Int. 41, 554–563 (2008).

Yang, Y. & Ma, F. Constrained Kalman filter for nonlinear structural identification. J. Vib. Control 9, 1343–1357 (2003).

Yang, J. N., Lin, S., Huang, H. & Zhou, L. An adaptive extended Kalman filter for structural damage identification. Struct. Control Health Monit. 13, 849–867 (2006).

Ojha, S., Kalimullah, N. M. & Shelke, A. Application of constrained unscented Kalman filter (CUKF) for system identification of coupled hysteresis under bidirectional excitation. Struct. Control. Health Monit. 29, e3115 (2022).

Rezaeianjouybari, B. & Shang, Y. Deep learning for prognostics and health management: State of the art, challenges, and opportunities. Measurement 163, 107929 (2020).

Lei, Y. et al. Applications of machine learning to machine fault diagnosis: A review and roadmap. Mech. Syst. Signal Process. 138, 106587 (2020).

Chen, J., Huang, R., Chen, Z., Mao, W. & Li, W. Transfer learning algorithms for bearing remaining useful life prediction: A comprehensive review from an industrial application perspective. Mech. Syst. Signal Process. 193, 110239 (2023).

Xu, Y., Kohtz, S., Boakye, J., Gardoni, P. & Wang, P. Physics-informed machine learning for reliability and systems safety applications: State of the art and challenges. Reliabil. Eng. Syst. Saf. 230, 108900 (2023).

Salehi, H. & Burgueño, R. Emerging artificial intelligence methods in structural engineering. Eng. Struct. 171, 170–189 (2018).

Ochella, S., Shafiee, M. & Dinmohammadi, F. Artificial intelligence in prognostics and health management of engineering systems. Eng. Appl. Artif. Intell. 108, 104552 (2022).

Prakash, G., Yuan, X.-X., Hazra, B. & Mizutani, D. Toward a big data-based approach: A review on degradation models for prognosis of critical infrastructure. J. Nondestr. Eval. Diagn. Progn. Eng. Syst. 4, 021005 (2021).

Pamwani, L. & Shelke, A. Damage detection using dissimilarity in phase space topology of dynamic response of structure subjected to shock wave loading. J. Nondestr. Eval. Diagn. Progn. Eng. Syst. 1, 041004–041004 (2018).

Rajeev, A., Pamwani, L., Ojha, S. & Shelke, A. Adaptive autoregressive modelling based structural health monitoring of RC beam-column joint subjected to shock loading. Struct. Health Monit. 22, 1049–1068 (2023).

Ojha, S., Pamwani, L. G. & Shelke, A. Damage detection in base-isolated steel structure using singular spectral analysis. In Indian Structural Steel Conference, 387–401 (Springer, 2020).

Sohn, H. & Farrar, C. R. Damage diagnosis using time series analysis of vibration signals. Smart Mater. Struct. 10, 446 (2001).

Nair, K. K., Kiremidjian, A. S. & Law, K. H. Time series-based damage detection and localization algorithm with application to the ASCE benchmark structure. J. Sound Vib. 291, 349–368 (2006).

Lynch, J. P. & Loh, K. J. A summary review of wireless sensors and sensor networks for structural health monitoring. Shock Vib. Digest. 38, 91–130 (2006).

Shen, N. et al. A review of global navigation satellite system (GNSS)-based dynamic monitoring technologies for structural health monitoring. Remote Sens. 11, 1001 (2019).

Colombani, I. A. & Andrawes, B. A study of multi-target image-based displacement measurement approach for field testing of bridges. J. Struct. Integr. Maintenance 7, 207–216 (2022).

Lee, Y., Lee, G., Moon, D. S. & Yoon, H. Vision-based displacement measurement using a camera mounted on a structure with stationary background targets outside the structure. Struct. Control. Health Monit. 29, e3095 (2022).

Lee, Y., Park, W.-J., Kang, Y.-J. & Kim, S. Response pattern analysis-based structural health monitoring of cable-stayed bridges. Struct. Control. Health Monit. 28, e2822 (2021).

Šedková, L., Vlk, V. & Šedek, J. Delamination/disbond propagation analysis in adhesively bonded composite joints using guided waves. J. Struct. Integr. Maintenance 7, 25–33 (2022).

Lee, Y., Kim, H., Min, S. & Yoon, H. Structural damage detection using deep learning and fe model updating techniques. Sci. Rep. 13, 18694 (2023).

Pandey, A. & Biswas, M. Damage detection in structures using changes in flexibility. J. Sound Vib. 169, 3–17 (1994).

Farrar, C. R. & James Iii, G. System identification from ambient vibration measurements on a bridge. J. Sound Vib. 205, 1–18 (1997).

Vestroni, F. & Capecchi, D. Damage detection in beam structures based on frequency measurements. J. Eng. Mech. 126, 761–768 (2000).

Soyoz, S. & Feng, M. Q. Long-term monitoring and identification of bridge structural parameters. Comput.-Aided Civ. Infrastr. Eng. 24, 82–92 (2009).

Staszewski, W. Intelligent signal processing for damage detection in composite materials. Compos. Sci. Technol. 62, 941–950 (2002).

Li, H., Deng, X. & Dai, H. Structural damage detection using the combination method of EMD and wavelet analysis. Mech. Syst. Signal Process. 21, 298–306 (2007).

Figueiredo, E., Park, G., Farrar, C. R., Worden, K. & Figueiras, J. Machine learning algorithms for damage detection under operational and environmental variability. Struct. Health Monit. 10, 559–572 (2011).

Ghiasi, R., Torkzadeh, P. & Noori, M. A machine-learning approach for structural damage detection using least square support vector machine based on a new combinational kernel function. Struct. Health Monit. 15, 302–316 (2016).

Goyal, D. & Pabla, B. Condition based maintenance of machine tools\(-\)a review. CIRP J. Manuf. Sci. Technol. 10, 24–35 (2015).

Tsialiamanis, G., Sbarufatti, C., Dervilis, N. & Worden, K. On a meta-learning population-based approach to damage prognosis. Mech. Syst. Signal Process. 209, 111119 (2024).

Zhang, J., Wang, P., Yan, R. & Gao, R. X. Long short-term memory for machine remaining life prediction. J. Manuf. Syst. 48, 78–86 (2018).

Zhao, S., Zhang, Y., Wang, S., Zhou, B. & Cheng, C. A recurrent neural network approach for remaining useful life prediction utilizing a novel trend features construction method. Measurement 146, 279–288 (2019).

Huang, G., Li, H., Ou, J., Zhang, Y. & Zhang, M. A reliable prognosis approach for degradation evaluation of rolling bearing using mclstm. Sensors 20, 1864 (2020).

Li, T., Chen, J., Yuan, S., Cadini, F. & Sbarufatti, C. Particle filter-based damage prognosis using online feature fusion and selection. Mech. Syst. Signal Process. 203, 110713 (2023).

Briggs, A. & Kolosov, O. Acoustic Microscopy, vol. 67 (Oxford University Press, 2010).

Hofmann, M. et al. Scanning acoustic microscopy\(-\)a novel noninvasive method to determine tumor interstitial fluid pressure in a xenograft tumor model. Transl. Oncol. 9, 179–183 (2016).

Habib, A. et al. Mechanical characterization of sintered piezo-electric ceramic material using scanning acoustic microscope. Ultrasonics 52, 989–995 (2012).

Kumar, P. et al. Numerical method for tilt compensation in scanning acoustic microscopy. Measurement 187, 110306 (2022).

Gupta, S. K., Habib, A., Kumar, P., Melandsø, F. & Ahmad, A. Automated tilt compensation in acoustic microscopy. J. Microsc. 292, 90–102 (2023).

Banerjee, P. et al. High-resolution imaging in acoustic microscopy using deep learning. Mach. Learn. Sci. Technol. 5, 015007 (2024).

Ojha, S., Agarwal, K., Shelke, A. & Habib, A. Quantification of impedance and mechanical properties of zeonor using scanning acoustic microscopy. Appl. Acoust. 221, 109981 (2024).

Khan, M. S. A. et al. Autosaft: Autofocusing for extended depth of imaging in scanning acoustic microscopy. J. Microsc. (2025).

Agarwal, K. et al. Uncertainty analysis of Altantic salmon fish scale’s acoustic impedance using 30 mhz c-scan measurements. Ultrasonics 142, 107360 (2024).

Gupta, S. K., Pal, R., Ahmad, A., Melandsø, F. & Habib, A. Image denoising in acoustic microscopy using block-matching and 4d filter. Sci. Rep. 13, 13212 (2023).

Ikotun, A. M., Ezugwu, A. E., Abualigah, L., Abuhaija, B. & Heming, J. K-means clustering algorithms: A comprehensive review, variants analysis, and advances in the era of big data. Inf. Sci. 622, 178–210 (2023).

Pandey, M., Yuan, X.-X. & Van Noortwijk, J. The influence of temporal uncertainty of deterioration on life-cycle management of structures. Struct. Infrastruct. Eng. 5, 145–156 (2009).

Abdel-Hameed, M. A gamma wear process. IEEE Trans. Reliab. 24, 152–153 (1975).

Prakash, G. & Narasimhan, S. Bayesian two-phase gamma process model for damage detection and prognosis. J. Eng. Mech. 144, 04017158 (2018).

Wang, H., Xu, T. & Yang, J. Lifetime prediction based on degradation data analysis with a change point. In Proceedings of the First Symposium on Aviation Maintenance and Management-Volume II, 527–534 (Springer, 2014).

Ester, M., Kriegel, H.-P., Sander, J. & Xu, X. Density-based spatial clustering of applications with noise. In Int. Conf. Knowledge Discovery and Data Mining, vol. 240 (1996).

van Noortwijk, J. M., van der Weide, J. A., Kallen, M.-J. & Pandey, M. D. Gamma processes and peaks-over-threshold distributions for time-dependent reliability. Reliabil. Eng. Syst. Saf. 92, 1651–1658 (2007).

Van Noortwijk, J. M. A survey of the application of gamma processes in maintenance. Reliabil. Eng. Syst. Saf. 94, 2–21 (2009).

Funding

Open access funding provided by UiT The Arctic University of Norway (incl University Hospital of North Norway)

Author information

Authors and Affiliations

Contributions

A.H. and S.O. have conceptualized the idea. M.J. and S.O. implemented the algorithm with initial help from A.S. and A.H. Formal analysis and experimental validation were performed by M.J., S.O., and A.H., who also wrote the original draft, and reviewed, and edited the manuscript with support from all co-authors.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jambulkar, M., Ojha, S., Shelke, A. et al. Autonomous defect estimation in aluminum plate and prognosis through stochastic process modeling. Sci Rep 15, 29549 (2025). https://doi.org/10.1038/s41598-025-13189-8

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-13189-8