Abstract

Accurate detection of blood in CCTV surveillance footage is critical for timely response to medical emergencies, violent incidents, and public safety threats. This study proposes a real-time deep learning framework that combines the InceptionV3 architecture with Convolutional Block Attention Modules to enhance spatial and channel-level feature discrimination. The model is further optimized through a proposed attention module that intensifies attention to small and minute blood-related patterns, even under challenging conditions such as occlusions, motion blur, and low visibility. A dedicated benchmark dataset comprising over 9500 manually annotated CCTV images captured under diverse lighting and environmental scenarios is developed for model training and evaluation. It achieves a detection accuracy of 94.5%, with precision, recall, and F1-scores all exceeding 94%, outperforming baseline methods. These results demonstrate the effectiveness in accurately identifying blood traces in real-world surveillance footage, offering a practical and scalable solution for enhancing public health and safety monitoring. All code and data are available at https://github.com/irshadkhalil23/bloodNet_model.

Similar content being viewed by others

Introduction

The rapid advancement of technology and the increasing demand for enhanced security measures have led to significant developments in smart surveillance systems1. These systems, equipped with the capability to analyze and interpret scenes in real time, play a crucial role in maintaining public safety and security2,3. Traditional surveillance techniques, often reliant on human observation, pose certain limitations due to the possibility of human errors, biases, and the demanding requirement of continuous monitoring. One particularly important aspect of surveillance, especially in remote settings such as crime scene inspection and medical diagnosis, is detecting the presence of bloods in a scene4. Blood presence can provide vital information about security issues or medical emergencies5. However, identifying blood, particularly in dynamic and crowded environments, can be challenging even for experienced human operators6.

Image processing techniques have shown promise in overcoming these challenges7. By employing sophisticated algorithms to analyze visual data, it is possible to detect specific features, such as the blood specific color and texture, more accurately and consistently than through human observation alone. These techniques can be implemented in an automated system, reducing the need for continuous human monitoring and improving the overall efficiency and reliability of the surveillance process8.

Modern Video surveillance system are design to enhance the safety of individual and properties9. These systems use CCTV cameras to monitor people’s activities or other real-time information. The smart surveillance system is now widely used in public and private places because it provides improved security and safety2,10. It protects and activates an alarm on different activities like robbery, theft, and criminal activities happenings. The surveillance system utilizes human motion to detect suspicious activity and activate an alarm to alert the security guards to stop the ongoing activity. Recent research articles are available in the open literature, which covers the most recent methods for improving security using CCTV surveillance systems11,12,13. However, this section reviews the most recent methods that potentially address different surveillance system applications.

Automated analysis of CCTV images and videos has been studied to detect any various situations14,15. For example, Marbach et al.16 proposed an automated fire detection algorithm in CCTV images by using temporal variation of fire intensity in the candidate region to determine the presence of fire and non-fire pixels. Similarly, Celik et al.17 and Gope et al.18 have developed color models to detect fire in video sequences or in still images. Benjamin et al.19, Liu et al.20, and Han et al.21 used different color spaces and developed models to detect fire and smoke in still images or in video sequences. Pedestrian detection is a difficult problem because people can show widely varying appearances in the scene. Malagon-Borja et al.22 detect pedestrians using PCA without knowing the prior knowledge of the scene. Cucchiara et al.23 developed a method to analyze human behaviors by calculating the frame wise projection histogram24. This method classifies the posture of the monitored person.

According to the best of our knowledge, there is no prior method that exists for CCTV surveillance systems, specifically designed to detect blood in real-world surveillance footage. However, many approaches have already been proposed for the detection of blood in endoscopic video. Most of the approaches are based on color-based features. For instance, Gopi et al.25 developed a bleeding detection algorithm specifically for capsule endoscopy by utilizing color texture features derived from orthogonal moments on HSV color images. The authors integrated the local binary pattern with color texture features and employed a multilayer perceptron neural network to detect bleeding within the vein. Pan et al.26 introduced a novel approach that used RGB variations and HSV saturation. Their method measures original color differences within the RGB space, and refines them using region-growing segmentation. Figueiredo et al.27 introduced a technique for detecting blood using the CIELab color space. Similarly, Abouelenien et al.28 implemented a method that leverages HSV histograms, dominant colors, and a co-occurrence matrix to identify blood. Furthermore, Ghosh et al.29 developed an approach that relies on the statistical descriptors from transformed RGB features. Yong et al.30 used the RGB color spaces and its conversion between the color spaces for the identification of bleeding and non-bleeding regions with endoscopic images. Pan et al.31, shows that the bleeding regions show dominant red, high saturation, and low brightness color configurations with in images and green and blue are relatively low as compared to the red color in the RGB color space. As deep learning (DL) models evolve, the enhanced learning capabilities of neural network architectures are increasingly replacing conventional image analysis techniques32. Recent studies have harnessed the power of the Convolutional Neural Network (CNN) framework to detect and classify blood regions in images33,34,35. For instance, Abouelenien et al.34 incorporated CNNs with HSV histograms, dominant colors, and a co-occurrence matrix to detect blood in images. Ghosh et al.35 proposed a method that combines statistical features of a transformed RGB color space with CNNs for bleeding detection36. Proposed an enhanced model by using attention-enhanced model for detecting blood vessels in the OCT images.

CNN based models have proven effective, while on the other hand, most CNN-based models not only learn relevant features but unfortunately also learn from irrelevant features like noise37 and background information, which reduces overall performance. Learning from irrelevant features can significantly reduce the overall performance of the model. Inspired by human visual perception, which focuses on important regions within a complex scene, many researchers have proposed various attention modules that have been investigated in different domains38,39. Based on this, important regions representing the abnormality within the images are considered more significant compared to other regions. Attention-based mechanisms have been used alongside various deep CNN models, showing great potential in further enhancing CNN performance. These attention networks suppress redundant information in the feature map and focus more on effective features. They have been widely used in different tasks and improve the representational power of CNN networks by focusing on the dominant features while suppressing redundant and unnecessary ones. The attention mechanism extracts better feature effects and improves predictive power. Recent research focused on the development of more powerful attention mechanisms40 to improve CNN model performance. The Convolutional Block Attention Module (CBAM) proposed by Woo et al.41 greatly improves CNN model performance by capturing important features in the feature maps. CBAM contains two different types of attention modules: The Channel Attention Module (CAM) and the Spatial Attention Module (SAM), which make the model focus more on the important regions within the image. Multi-scale convolutional operations allow CNN-based models to focus on more relevant and concise features and enable the module to better capture the spatial relationships between features, thus improving the performance of the module42,43.

While CNN models have shown strong capabilities in detecting blood-related conditions, several limitations and challenges remain. First, many existing studies have concentrated on identifying a single type of blood anomaly. As a result, these models are proficient at specific tasks but may struggle with more complex real-world scenarios. We argue that there is a critical need to develop a more comprehensive blood detection system that can identify various blood conditions concurrently. Secondly, there is a notable lack of datasets featuring authentic blood images annotated with multiple conditions. Studies have shown that there can be significant correlations between different blood samples from the same patient. We have addressed these issues by developing our own benchmark dataset for more effective evaluation and advancement in this area. In this study, we present the BloodNet system, an automated and accelerated multiclass deep learning classification tool specifically designed to detect blood in CCTV images. Our approach begins by extracting discriminative deep feature representations from blood images. This is achieved by feeding the images into multiple deep learning blocks, which act as feature descriptors, often referred to as the backbone. Next, these extracted features undergo refinement and are integrated into a unified feature representation using the attention block. Finally, a trained model, combined with a Softmax layer, generates a probability distribution for three distinct blood conditions, acting as a non-linear classifier. By following this method, BloodNet effectively addresses the challenges of blood detection in CCTV footage, making the process faster and more accurate.

Main contributions

We have offered the main contributions, which can be summarized as follows:

-

1.

The primary contribution of this work is the development of a new dataset named “BloodCCTV-9500”, curated from diverse media sources, which serves as the foundation for detecting multiple blood abnormalities in various scenarios. It includes a wide variety of complex blood scenes, designed to enhance the adaptability of the proposed systems for real-time scenario.

-

2.

This paper introduces a new model for classifying blood in complex CCTV footage. The proposed model utilizes InceptionV3 architecture due to its effective features extraction tailored specially for blood detection in complex scenario and a multi-scale attention module, enabling it to extract features from the most relevant regions in CCTV images. InceptionV3 captures global representations of blood regions. In our study, we assessed the performance of several CNN model backbones to identify the most effective one for feature extraction. After selecting a high-performance backbone, we integrated an attention block designed to capture additional high-level feature representations. This enhancement helps in distinguishing different classes of blood images by leveraging the backbone network’s output. Our proposed network block also incorporates channel-wise attention, which refines and fuses features by utilizing discriminative feature maps generated in the previous step.

-

3.

We demonstrate that the proposed BloodNet system outperforms all other tested backbone models. This evaluation is conducted using our proposed dataset, which comprises a diverse collection of challenging blood images representing three distinct blood scenarios.

-

4.

We performed extensive experiments and ablation studies to empirically demonstrate the effectiveness of the proposed multi-scale model in achieving high classification accuracy.

Paper organization

This paper is structured to guide readers through our research on optimizing CCTV blood detection using a multi-scale deep learning approach. Section 3 presents the dataset creation criteria and explains the architecture of the proposed network. This section also discusses the BloodCCTV-9500 dataset, shares our empirical results, and provides a comparative analysis. Finally, in Sect. 4, we summarize our findings and suggest potential directions for future research.

Proposed methodology

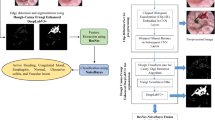

In this section, we present an overview of BloodCCTV-9500, the architecture of the blood detection network, and a feasibility study. Additionally, we discuss the modifications made to the InceptionV3 model to improve detection capabilities. Figure 3 shows a visual representation of the general workflow, encompassing the stages of training, testing, and applying the proposed model. Further details are provided in the following sub-sections.

Dataset generation and sample selection criteria

We have made a significant contribution to the field of blood detection by developing and annotating a novel medium-scale benchmark dataset. The dataset is curated with the help of domain field experts and validated by experts to visually verify the presence of blood spot in each and every individual image. From extensive review of recent literature, it can be concluded that BloodCCTV-9500 is the most challenging dataset compare of different types of challenging scenario. BloodCCTV-9500 includes visual samples for varying illumination, diverse and challenging environments, backgrounds, and a range of blood spot appearances in both indoor and outdoor environments. Our dataset is unique and focus on blood detection, which address the gaps by being the first of its kind specific real-world scenarios and application.

Dataset description

BloodCCTV-9500 includes a collection of images for both indoor and outdoor environments, which addresses the challenge across various backgrounds. It consists of wide ranges of lighting conditions, to ensure the model generalization in recognition under different illumination levels. This collection of different lighting condition represent the real-world conditions. Additionally, BloodCCTV-9500 presents specific challenges such as blood visibility at various backgrounds and degree of occlusion. The most challenging aspect of is the variations in the color and texture of blood spots at different background. These crucial factors are particularly important during creation of dataset to ensure its generalization to detect blood presence in complex scenarios. The collection of different images in a variety of backgrounds, similar colored objects, tests the model’s performance to distinguish the presence of blood in challenging ways. We also applied standard image augmentation techniques including rotation, horizontal flipping, zooming, and brightness adjustment to improve the model’s robustness and reduce overfitting. These techniques helped in simulating real-world variability in these images and were important in improving model generalization.

In order to ensure the transparency and reproducibility of the results, we plan to publicly release of BloodCCTV-9500 and code implementation, for future research by broader community. This will allow other researchers to test and improve upon our work, promoting further advancements in blood detection and related fields.

Comparison

To date, few datasets have focused specifically on blood detection in CCTV surveillance footage, making BloodCCTV-9500 a novel contribution. While there is existing dataset available specifically for medical imaging and endoscopic blood detection, these focus on controlled environments with high-resolution images. BloodCCTV-9500 consists of different images belonging to three scenarios including blood on floor in outdoor setting, blood in indoor setting and collection of blood on various items which pose threat to person security. BloodCCTV-9500 is tailored specially for real-world surveillance contexts, which are more challenging due to lower resolution, variable lighting, and occlusion.

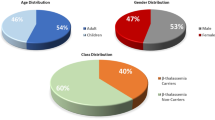

Comparing our dataset to public medical imaging dataset (e.g., those used for endoscopy) highlights how our dataset captures more realistic, dynamic scenarios. The images in our dataset simulate actual CCTV footage, featuring lower-resolution images, diverse appearances, and background noise typical of surveillance footage. This makes BloodCCTV-9500 especially well-suited for testing algorithms designed for real-time blood detection in challenging environments. Some sample images and the distribution of images in each class are shown in Figs. 1 and 2, respectively.

Ethical considerations

The use of CCTV footage for research purposes raises important ethical and privacy concerns. In this study, we ensured that all collected images in the BloodCCTV-9500 dataset were sourced from publicly available on social media platform repositories and included in a manner that avoids revealing any personally identifiable information. During dataset preparation, we have ensure that sensitive regions were manually reviewed and cropped to ensure individual privacy. This dataset was developed exclusively for academic research only and can be obtained for further research purposes upon request.

InceptionV3 architecture

Convolutional Neural Networks (CNNs) play a crucial role in deep learning, particularly for object classification using image data. Instead of relying on general matrix multiplication, CNNs utilize convolutional operations. Typically, a CNN consists of three main layers: the input layer, which prepares the data for processing; hidden layers, which connect the input and output layers; and the output layer, which produces the results.

In our research, we utilize InceptionV3 as the backbone model. InceptionV3 is an advanced version of the original Inception-V1 model, incorporates several optimization techniques to enhance its adaptability, and offers greater network complexity and improved recognition performance over its earlier versions. In this research, we have used transfer learning which allows the model to retain the weights of earlier layers while adapting the final layer to new categories. This enhances training efficiency by reusing low-level learned features. Moreover, we have optimized the InceptionV3 architecture framework to balance precision and efficiency. Our proposed solution is effective in real-time blood detection, offering high performance in challenging environments. It performs robustly across diverse, complex scenarios, demonstrating strong recognition capability.

Proposed network

We present two significant enhancements in the network architecture to achieve high performance on our proposed BloodCCTV-9500 blood detection dataset. First, we incorporated a proposed module at the beginning of the backbone to improve intermediate feature extraction from the images. Additionally, we have removed the final layer from this module and introduced an attention block module to further enhance the intermediate features before making the final recognition. By fine-tuning a pre-trained model, we used the existing learned weights and parameters, enabling the network to effectively capture domain-specific visual features. The feature extraction pipeline of a pre-trained network is inherently robust and diverse, making it an excellent starting point for any vision-based classification task. Our pipeline encompasses feature extraction, integration of attention mechanisms, and final classification to achieve optimal performance (Fig. 3).

Building on the success depicted in Fig. 4, which illustrates the InceptionV3 architecture for blood detection, we draw inspiration from successful feature extraction strategy across various computer vision domains. To optimize feature selection for blood classification tasks, particularly in extremely challenging scenarios, we utilize several backbone feature extractors. These include Xception, MobileNet, ResNet50, NASNetMobile, and InceptionV3. Our experimental results in Section IV demonstrate that InceptionV3 offers superior feature extraction for blood detection tasks. Theoretically, InceptionV3 surpasses its predecessors due to its enhanced Inception modules, which have been refined for better performance. One of the most remarkable aspects of Inception modules is their multi-scale processing capability, which yields superior results across numerous tasks. InceptionV3 incorporates three fundamental Inception modules: Inception (A), (B), and (C). Each module features multiple convolutional and pooling layers operating in parallel as shown in Fig. 4.

These layer leverage compact filter sizes such as 1 × 1, 3 × 3, 1 × 3, and 3 × 1 to effectively minimize the number of trainable parameters. InceptionV3 processes input images that are typically sized as 299 × 299 × 3. Initially, the image passed in five through 5 convolutional layers, each utilizing multiple 3 × 3 kernels. In our proposed framework, we have removed the final dense layers from the InceptionV3 model. This modification yields a feature vector with dimensions of 8 × 8 and 2048 channels, denoted by α. This transformation is mathematically expressed in Eq. 1:

Where ζ represents each Inception modules and µ represents the initial convolutional operation performed on the input χ. Because of the operation utilizing Eq. 1, we have obtained a feature vector presented by α which contains different information from the images including its structure and other details, etc. To improve classification accuracy and mitigate misclassification issues, we incorporated a modified attention module. This addition enhances the extraction of both channel-specific and spatial details. As illustrated in Fig. 5, this augmented approach significantly refines our detection capabilities.

These enhancements are designed to improve the model’s accuracy and computational efficiency. A comprehensive explanation of each modification is provided in the following subsections.

Proposed attention network

In our framework, we have removed the last layer from the InceptionV3 module and integrated a modified attention block to refine and enhance features before making final predictions. These architectural modifications are design to boost the model’s overall accuracy and efficiency. While Integrating a CA module with backbone model features can be effective in simpler scenarios, such as distinguishing between blood and non-blood objects. However, it falls short in more complex cases that require precise blood detection and localization. To address this, we incorporate both CA and modified SA modules into our proposed network. This integration enhances the model’s ability to focus on critical regions for blood detection. Our attention scheme effectively extracts the most significant blood regions, thereby improving the precision and accuracy of blood identification in various challenging contexts. The details of the proposed framework are further explained in the next subsection.

Channel Attention (CA): CA plays an important role by enabling the models to focus on the most informative channels within the feature map. It consists of enhance attention mechanism which involves several computational steps which are specially designed to enhance the model’s focus and recognition performance. Initially, it computes both global average and max pooling operations across each channel in the intermediate extracted feature map. These operations combined aggregate useful information on a channel-by-channel basis and capturing the overall importance features of each channel. The pooled features are then passed through a shared multi-layer perceptron layer, which includes fully connected layers with nonlinear activations. This layer is important for enhancing the discriminative power by inter channel dependencies. The output of this layer is further processed by a sigmoid activation function, producing an attention map that highlights the importance of each channel. This enhance feature map indicates and represent the most important across each channel. During inference process, the attention map is applied element-wise to the original feature map, thereby refining the feature representation. As a result, channels are scaled according to their attention values, effectively amplifying the informative channels while diminishing the impact of the less useful ones. This mechanism allows the model to focus computational resources on the most discriminative features. Consider α be the feature map, which is generated by the application of InceptionV3 model on each image. This feature map yields two distinct spatial background descriptions: αCavg and αCmax. Mathematically:

By these operations, the output of αCavg and αCmax is generated by two fully connected (FC) layers, f c1 and f c2, which utilizes the ReLU activation function to extract both Mmax and Mavg. By combining it, the weight coefficients Mc(α) are calculated as follows:

In this approach, f c1 and f c2 use 1 × 1 convolution operations to expand and compress the features to optimize each channel. The ⊖ symbol stands for the ReLU function, while ⊕ signifies the addition operation. By adding Mmax and Mavg, we derive the final, more concise features, Mc (α). To ensure a smooth feature flow, a skip connection ⊗ is incorporated, leading to the creation of the CA map Fc:

Modified Spatial attention

This mechanism improves a neural network’s ability to focus on important spatial region within feature maps. This process involves a few key steps. Initially, global max pooling and average pooling operations are applied across the channel dimension of the input feature maps. These pooling operations generate two separate 2D maps that summarize the spatial context by capturing the most and average activated features across all channels. This allows the model to highlight spatial regions of interest by emphasizing their relative importance.

The pooled outputs, αSavg and αSmax, are concatenated and then passed into a convolutional layer to generate a spatial attention map. This map assigns importance to different spatial locations, allowing the model to emphasize informative regions while suppressing irrelevant ones. The final refined feature map, denoted as MsFc, which is computed using the following method:

Here, f represents the filter size in each convolution operations. Additionally, global average pooling operation is applied to generate an enhanced feature descriptor:

The concatenated features are then batch normalized, and the final feature map FCS is generated by combining batch normalization with α:

In our approach, the extracted feature maps are first passed through a dense layer with 150 neurons, followed by a softmax layer. To improve recognition performance in complex scenarios, we integrate dual attention modules that generate enhanced feature representations. The resulting features are then passed through three fully connected layers with 64, 32, and 2 neurons, respectively.

Results and discussion

In this section, we present a comprehensive analysis of our experimental setup and results. We will study the experimental setup and results thorough out the model development process. Our setup includes a detailed description of the evaluation metrics, used to assess the effectiveness of our models. We have used standard evaluation matrices to provide valuable insights into different aspects of model performance. These metrics are used for overall evaluation and assessment of the results obtained from extensive experiments. Furthermore, we have examined the integration and effects of various attention modules within our experimental framework, and analyzed how these modules contribute to the overall recognition capability of our proposed framework. A key aspect of our analysis is the ablation study, where we systematically evaluate the performance effects of each module on overall model performance. This helps understand the contribution of each module to the model’s performance improvements. In addition to this, we present quantitative results, derived from extensive experimentation. These results are evaluated through statistical metrics, offering valuable insights into the model’s accuracy and recognition.

Experimental setting and hyper-parameter selection

In this section, we present the experimental setups which are used in our experimentation and model development. For training the model, we have utilized the Keras framework with TensorFlow44 at the back end. For optimizing the model, we have used Stochastic Gradient Descent45 with momentum with training and learning rate at 0.0001. We have trained our model for 30 epochs, with a batch size of 24. We have processed the entire image to the default input dimension of 299 × 299 × 3, which is the default input size for the Inception model. All the experiments were conducted on an Intel Core i9 CPU running at 3.60 GHz, with an NVIDIA GeForce RTX 3070. To mitigate overfitting, we incorporated early stopping based on validation loss and applied batch normalization to stabilize the training process. We have used standard approach to mitigate overfitting, we incorporated early stopping based on validation loss and applied batch normalization to stabilize the training process.to check the model performance. Table 2 summarizes the number of images used for training and testing. Along with these experimental settings, we have analyzed into a combination of parameter values through extensive experimentation to find out the most effective and details configuration. Table 1 presents the complete implementation details and hyperparameter for the development of the proposed model (Table 2).

Performance evaluation

To effectively analyze the robustness and generalization ability of the proposed network architecture, we employed standard performance metrics, including accuracy, precision, recall, and F1-score. These metrics were used to compare the performance of different attention mechanisms integrated into our framework. The definitions of accuracy, precision, recall, and F1-score are provided below. .

Where TP represents the number of positive samples that are correctly predicted; TN represents the number of positive samples that are incorrectly predicted as negative; FP represents the number of negative samples that are predicted as positive; FN represents the number of negative samples that are incorrectly predicted as positive. TP + FP represents the total number of samples predicted as positive, while TP + FN represents the actual number of positive samples in the ground truth.

Results analysis

In this section, we present a detailed evaluation of the experimental results obtained from the proposed network architecture. Using both quantitative analysis and ablation studies, we explore the impact of various backbone architectures to optimize performance for the specific task of blood detection in surveillance footage. The findings highlight the strengths and limitations of the model, providing a comprehensive assessment of its effectiveness and potential areas for enhancement.

Backbone feature extractor selection

We conducted an ablation study to assess the impact of different components in our proposed network. We utilized several baseline CNN models for backbone feature extraction and blood detection from CCTV images, including DenseNet, NasNetMobile, ResNet50, ResNet101, MobileNetV2, and InceptionV3. Table 3 shows that the dual attention network achieved the best overall performance, whereas Xception and NASNetMobile produced the weakest results, largely due to the limited adaptability of their features to the challenges posed by blood detection in CCTV footage. The features extracted by these models were less effective in distinguishing refined visual cues.

Table 3 presents the performance comparison of different backbone models without attention mechanisms on our blood dataset, with the corresponding graphical representation provided in Fig. 7. The backbones evaluated include DenseNet, MobileNet, NasNet, ResNet101, ResNet50, and InceptionV3. Among these models, InceptionV3 outperforms the others, achieving the highest accuracy of 93.90%, along with a balanced precision, recall, and F1 score of 93.33% across all metrics.

In contrast, other models such as DenseNet and MobileNet show slightly lower performance, with accuracy scores of 92.28% and 92.97%, respectively. ResNet101 and ResNet50 also fall short with accuracies of 92.47% and 92.41%, indicating their relatively lower performance in comparison to InceptionV3.

Based on these results, InceptionV3 was selected as the backbone for our model due to its superior performance on BloodCCTV-9500, providing the best balance across all key metrics.

Additionally, we integrated the dual attention module comprising both of CA and SA mechanisms along within our backbone model to improve the precision of blood detection and localization in complex scenes using our proposed dataset. The training and validation accuracies corresponding to each backbone models evaluated in our experiments are shown in Fig. 6.

As shown in Table 3, the baseline CNN models combined with the dual attention module outperformed approaches based solely on deep features, as blood detection presents more complicated challenges than standard image classification tasks. The attention modules amplify the extraction of discriminative features, leading to enhanced accuracy in blood scene classification. Among the baseline models, InceptionV3 with a soft max classifier delivered the best performance due to its superior feature extraction capabilities. Table 3 presents the experimental results of our ablation experimentation, exhibiting the efficiency measurements with modifications that incorporate certain modules. From experimentation and analysis, we have analyzed consistent improvement in performance as we include additional modules into the InceptionV3 networks. First, we have utilized different feature extractor as backbone to find out the optimal and enriched feature backbone. Then we have utilized modified SA modules by utilizing various kernel size and numbers as shown in Fig. 2 (Fig. 7).

The confusion matrix shown in Fig. 8 shows the classification performance across three classes BloodNet achieves high accuracy for each class, with 94.5%, 94.7%, and 95.6% respectively. Numerical results indicating that it effectively distinguishing between different blood scenarios in CCTV images in complex visual conditions.

To further validate BloodNet, we also conducted a comparative evaluation and evaluated three baseline techniques: (1) SVM with Histogram of Oriented Gradients (HOG) features, (2) SVM with color histogram features, and (3) K-Nearest Neighbors based classification. These approaches are used for image classification. The results showed that these classical models significantly low on the BloodCCTV-9500 dataset, achieving minimal accuracies of only 53.5%, 63.8%, and 74% respectively. These limitations are primarily due to their inadequate ability to extract robust features. To illustrate the inadequacy of color-based feature discrimination, Figs. 9, 10 and 11 shows the normalized average color histograms for each blood class which presents distinguishable peaks. Overall patterns show significant overlap and making it difficult for conventional classifiers to distinguish between classes based on color distribution alone. These results validates the application of deep learning-based feature extraction, as it demonstrate superior robustness under real-world surveillance CCTV images (Fig. 11).

Ablation study

Table 4 provides a detailed comparison of different configurations of the InceptionV3 backbone with various attention modules and kernel sizes. The configurations explore the effectiveness of CA, SA, and combinations of both (CA + SA) on different evaluation matrics. In simpler configurations where only CA or SA is applied, the recognition performance remains consistent with an accuracy of 92.28%, precision at 92.66%, recall at 92.20%, and an F1 score of 90.66%. When CA and SA are combined with standard kernel sizes, the model yields similar results.

Notably, when more complex kernel sizes are utilized (such as 2 × 2 and 3 × 3 for CA + SA), the accuracy significantly improves to 93.9%, with precision, recall, and F1 score all reaching 93.33, demonstrating the benefits of multiscale attention. Further experimentation with different kernel sizes, such as 1 × 1, 7 × 7, and 1 × 1, leads to marginal improvements in performance metrics, maintaining accuracy in the range of 92.41–92.47%, with precision and recall around 91.66% and 91.33%, respectively. This indicates that overly large kernels (e.g., 7 × 7) may introduce excessive smoothing and reduce spatial specificity, while overly small kernels may not capture sufficient contextual detail46,47. The proposed model, which uses the kernel configuration of 1 × 1, 3 × 3, and 1 × 1, archives the best over results for all matrices. This combination benefits from the 1 × 1 kernel’s channel-wise compression and projection, and the 3 × 3 kernel’s ability to extract rich spatial features from localized regions. The 1 × 1 layers help enhance representation learning without introducing high computational cost46. It achieves a remarkable accuracy of 94.9%, precision of 94.6%, recall of 94.63%, and an F1 score of 94.65%, respectively.

This demonstrates that our approach, incorporating a combination of CA and SA with optimized kernel sizes, leads to superior performance across all key metrics. Also numerical results which are obtained via extensive experimentation empirically validate that a multiscale attention structure motivated from46,47 and by using smaller receptive fields significantly enhances the model’s discriminative capability in complex CCTV scenes and results are presented in Table 4.

Quantitative analysis

The quantitative analysis presented in Tables 3 and 4 underscores the superior performance of InceptionV3 as the backbone model for our blood detection dataset. As shown in Table 3, InceptionV3 outperforms other backbone models such as DenseNet, MobileNet, NasNet, ResNet101, and ResNet50 in all key metrics, achieving the highest accuracy of 93.90% alongside precision, recall, and F1 scores of 93.33%. In contrast, the other backbones have recognition accuracy of around the 92.28%-92.97%, with marginally lower values for precision, recall, and F1 scores, indicating their relative low performance.

To further refine the performance of the InceptionV3 backbone, we experimented with various attention mechanisms and kernel configurations, as shown in Table 3. In simpler configurations where only CA or SA is applied, the performance is consistent with an accuracy of 92.28%, precision of 92.66%, and F1 score of 90.66%. However, when a multi scale approach is introduced with a combination of CA and SA attention modules, the performance improves significantly, particularly with larger and more complex kernel sizes such as 2 × 2, 3 × 3.

Our model, which incorporates both CA + modified SA attention with optimized kernel size of 1 × 1, 3 × 3, and followed by a 1 × 1 kernel configurations, and archived the best recognition performance for all evaluation metrics. Our model achieves an impressive classification accuracy of 94.9%, with precision, recall, and F1 scores more than 94.6%, making this model the optimal choice specifically tailored for our blood detection and recognition task.

This comprehensive evaluation shows that InceptionV3 with attention mechanisms and multi scale kernel utilization, not only outperforms other backbones models but also significantly improves detection performance in challenging scenarios.

Figure 12 represent the different configuration versions of kernel used and their details are explained in Table 4. Figure 13 shows the training accuracy and training loss of the proposed model over 30 epochs. The training accuracy increases and saturates near 96%, which show a strong indication that the BloodNet model learns effectively from the training data. The training loss curve also decreases and stabilizes at a very low value, reflecting convergence and minimized error. It show that the proposed model demonstrates efficient learning and optimization. Therefore, it can be concluded from Fig. 13 that the proposed model is well-trained without significant signs of under fitting or overfitting during the training phase.

Conclusion

In the field of computer vision and image analysis, the application of CNNs has greatly enhanced performance across a variety of tasks. In this research, we introduced a system specifically designed for blood detection in challenging and dynamic environments. We employed the InceptionV3 models as backbone feature extractor and a novel dual attention mechanism. Our model significantly improved blood detection recognition which has the enhance capability even in complex scenarios. The proposed model, integrates a proposed module in the backbone and an attention-over-attention mechanism before classification, which performed better than other state-of-the-art approaches. Our model shows high recognition performance, and a classification accuracy of 94.5%. These results show the effectiveness of combining CA and SA mechanisms with the InceptionV3 architecture, making it particularly best choice for real-time blood detection in CCTV surveillance systems. For future work, we plan to develop a more diverse, large scale dataset that includes more scenarios and additional classes with even more complex environments, such as extreme lighting conditions and additional types of occlusions. This expanded large scale dataset will further improve the model’s generalization to real-world surveillance scenarios. We also plan to investigate advanced attention mechanisms, such as Vision Transformers, in future iterations to potentially enhance the model’s ability to focus on complex visual cues. In addition, we aim to optimize our model architecture for real-time deployment by exploring lightweight variants that reduce computational load while maintaining strong classification performance. This will support integration into edge devices for practical surveillance applications.

Data availability

All code and data are available at https://github.com/irshadkhalil23/bloodNet_model.

References

Aliouat, A., Kouadria, N., Maimour, M., Harize, S. & Doghmane, N. Region-of-interest based video coding strategy for rate/energy-constrained smart surveillance systems using Wmsns. Ad Hoc Netw. 140, 103076 (2023).

Myagmar-Ochir, Y. & Kim, W. A survey of video surveillance systems in smart City. Electronics 12 (17), 3567 (2023).

Ortiz, P. A. P. & Manzano, V. P. C. Forensic techniques in blood macules: an updated review. Centro Sur 7(2) (2023).

Vajpayee, K. et al. A novel method for blood detection using fluorescent dye. Microchem. J., 108987 (2023).

Indalecio-C´espedes, C. R., Hern´andez-Romero, D., Legaz, I., Rodr´ıguez, M. F. S. & Osuna, E. Occult bloodstains detection in crime scene analysis. Forensic Chem. 26, 100368 (2021).

Nandhini, T. & Thinakaran, K. An improved crime scene detection system based on convolutional neural networks and video surveillance. In: 2023 Fifth International Conference on Electrical, Computer and Communication Technologies 21 (ICECCT), pp. 1–6 IEEE (2023).

Nakib, M., Khan, R. T., Hasan, M. S. & Uddin, J. Crime scene prediction by detecting threatening objects using convolutional neural network. In: 2018 International Conference on Computer, Communication, Chemical, Material and Electronic Engineering (IC4ME2), pp. 1–4 IEEE (2018).

Fujihara, J. et al. Blood identification and discrimination between human and nonhuman blood using portable Raman spectroscopy. Int. J. Legal Med. 131, 319–322 (2017).

Hashi, A. O., Abdirahman, A. A., Elmi, M. A. & Rodriguez, O. E. R. Deep learning models for crime intention detection using object detection. Int. J. Adv. Comput. Sci. Appl. 14(4) (2023).

Mahor, V. et al. Iot and artificial intelligence techniques for public safety and security. In: Smart Urban Computing Applications, 111–126. River, ??? (2023).

R¨aty, T. D. Survey on contemporary remote surveillance systems for public safety. IEEE Trans. Syst. Man. Cybernetics Part. C (Applications Reviews). 40 (5), 493–515 (2010).

Popoola, O. P. & Wang, K. Video-based abnormal human behavior recognition—a review. IEEE Trans. Syst. Man. Cybernetics (Applications Reviews). 42 (6), 865–878 (2012).

Gautama, S., Atzmueller, M., Kostakos, V., Gillis, D. & Hosio, S. Observing human activity through sensing. In: Participatory Sensing, Opinions and Collective Awareness, 47–68. Springer, (2017).

Mohtavipour, S. M., Saeidi, M. & Arabsorkhi, A. A multi-stream CNN for deep violence detection in video sequences using handcrafted features. Visual Comput. 38 (6), 2057–2072 (2022).

Wang, T. et al. Improving YOLOX network for multi-scale fire detection. Visual Comput. 40 (9), 6493–6505 (2024).

Marbach, G., Loepfe, M. & Brupbacher, T. An image processing technique for fire detection in video images. Fire Saf. J. 41 (4), 285–289 (2006).

Celik, T., Demirel, H., Ozkaramanli, H. & Uyguroglu, M. Fire detection in video sequences using statistical color model. In: 2006 IEEE International Conference on Acoustics Speech and Signal Processing Proceedings, vol. 2, p. IEEE (2006).

Gope, H. L., Uddin, M., Barman, S., Islam, D. & Islam, M. K. Fire detection in still image using color model. Indonesian J. Electr. Eng. Comput. Sci. 3 (3), 618–625 (2016).

Benjamin, S. G., Radhakrishnan, B., Nidhin, T. & Suresh, L. P. Extraction of fire region from forest fire images using color rules and texture analysis. In: Emerging Technological Trends (ICETT), International Conference On, pp. 1–7 IEEE (2016).

Liu, Z. G., Yang, Y. & Ji, X. H. Flame detection algorithm based on a saliency detection technique and the uniform local binary pattern in the Ycbcr color space. Signal. Image Video Process. 10 (2), 277–284 (2016).

Han, X. F. et al. Video fire detection based on Gaussian mixture model and multi-color features. Signal. Image Video Process., 1–7 (2017).

Malag´on-Borja, L. & Fuentes, O. Object detection using image reconstruction with Pca. Image Vis. Comput. 27 (1–2), 2–9 (2009).

Cucchiara, R., Grana, C., Prati, A. & Vezzani, R. Probabilistic posture classification for human-behavior analysis. IEEE Trans. Syst. Man. Cybernetics-Part A: Syst. Hum. 35 (1), 42–54 (2005).

Haritaoglu, I., Harwood, D. & Davis, L. S. Ghost: A human body part labeling system using silhouettes. In: Pattern Recognition, 1998. Proceedings. Fourteenth International Conference On, vol. 1, pp. 77–82 IEEE (1998).

Gopi, V. P. & Balaji, V. Bleeding detection in wireless capsule endoscopy videos using color feature and k-means clustering. ICT Express. 2 (3), 96–99 (2016).

Pan, G., Xu, F. & Chen, J. A novel algorithm for color similarity measurement and the application for bleeding detection in Wce. IJ Image Graphics Signal. Process. 5, 1–7 (2011).

Figueiredo, I. N., Kumar, S., Leal, C. & Figueiredo, P. N. Computer-assisted bleeding detection in wireless capsule endoscopy images. Comput. Methodsin Biomech. Biomedical Engineering: Imaging Visualization. 1 (4), 198–210 (2013).

Abouelenien, M., Yuan, X., Giritharan, B., Liu, J. & Tang, S. Cluster-based sampling and ensemble for bleeding detection in capsule endoscopy videos. Am. J. Sci. Eng. 2 (1), 24–32 (2013).

Ghosh, T., Bashar, S. K., Alam, M. S., Wahid, K. & Fattah, S. A. A statistical feature based novel method to detect bleeding in wireless capsule endoscopy images. In: Informatics, Electronics & Vision (ICIEV), 2014 International Conference On, pp. 1–4 IEEE (2014).

Lee, Y. G. & Yoon, G. Bleeding detection algorithm for capsule endoscopy. World Academy of Science, Engineering and Technology 81, 672–7 (2011).

Pan, G., Yan, G., Qiu, X. & Cui, J. Bleeding detection in wireless capsule endoscopy based on probabilistic neural network. J. Med. Syst. 35 (6), 1477–1484 (2011).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. Imagenet classification with deep convolutional neural networks. Commun. ACM. 60 (6), 84–90 (2017).

D´ıaz, M. et al. Automatic segmentation of the foveal avascular zone in ophthalmological oct-a images. PloS One. 14 (2), 0212364 (2019).

Abouelenien, M., Ott, J., Perez, L. & Suri, J. S. Blood detection in natural scenes using convolutional neural networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1–10 (2018).

Ghosh, S., Das, N., Das, I., Maulik, U. & Sarkar, R. Bleeding detection in wireless capsule endoscopy videos using Cnn and statistical features. IEEE J. Biomedical Health Inf. 24 (12), 3213–3222 (2020).

Upadhyay, K., Agrawal, M. & Vashist, P. Learning multi-scale deep fusion for retinal blood vessel extraction in fundus images. Visual Comput. 39 (10), 4445–4457 (2023).

Zhang, C., Bengio, S., Hardt, M., Recht, B. & Vinyals, O. Understanding deep learning requires rethinking generalization. ArXiv Preprint arXiv :161103530 (2016).

Hu, J., Shen, L. & Sun, G. Squeeze-and-excitation networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 7132–7141 (2018).

Vaswani, A. et al. Attention is all you need. In: Advances in Neural Information Processing Systems, pp. 5998–6008 (2017).

Li, H., Xiong, P., An, J. & Wang, L. Pyramid attention network for semantic segmentation. In: Proceedings of the British Machine Vision Conference (BMVC) (2018).

Woo, S., Park, J., Lee, J. Y. & Kweon, I. S. Cbam: Convolutional block attention module. In: Proceedings of the European Conference on Computer Vision (ECCV), pp. 3–19 (2018).

Szegedy, C. et al. Going deeper with convolutions. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1–9 (2015).

Yang, M., Yu, K., Zhang, C., Li, Z. & Yang, K. DenseASPP for semantic segmentation in street scenes. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3684–3692 (2018).

Paszke, A. et al. : Pytorch: An imperative style, high performance deep learning library. Advances in neural information processing 24 systems 32 (2019).

Kiefer, J. & Wolfowitz, J. Stochastic Estimation of the maximum of a regression function. Ann. Math. Stat., 462–466 (1952).

Yar, H. et al. Optimized dual fire attention network and medium-scale fire classification benchmark. IEEE Trans. Image Process. 31, 6331–6343 (2022).

Khalil, I., Mehmood, A., Kim, H. & Kim, J. OCTNet: A modified Multi-Scale attention feature fusion network with InceptionV3 for retinal OCT image classification. Mathematics 12 (19), 3003 (2024).

Funding

The authors did not receive support from any organization for the submitted work.

Author information

Authors and Affiliations

Contributions

A.K. and F.A. developed the concept and designed the study. I.K. implemented the model, conducted experiments, and curated the dataset. D.S. and S.A. contributed to the evaluation metrics and technical validation. M.T. wrote the main manuscript text and revised all sections critically for important intellectual content. All authors reviewed and approved the final manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Khalil, A., Alam, F., Shah, D. et al. Real time blood detection in CCTV surveillance using attention enhanced InceptionV3. Sci Rep 15, 28977 (2025). https://doi.org/10.1038/s41598-025-14941-w

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-14941-w