Abstract

Multimodal medical image fusion plays an important role in clinical applications. However, multimodal medical image fusion methods ignore the feature dependence among modals, and the feature fusion ability with different granularity is not strong. A Long-Range Correlation-Guided Dual-Encoder Fusion Network for Medical Images is proposed in this paper. The main innovations of this paper are as follows: Firstly, A Cross-dimension Multi-scale Feature Extraction Module (CMFEM) is designed in the encoder, by extracting multi-scale features and aggregating coarse-to-fine features, the model realizes fine-grained feature enhancement in different modalities. Secondly, a Long-range Correlation Fusion Module (LCFM) is designed, by calculating the long-range correlation coefficient between local features and global features, the same granularity features are fused by the long-range correlation fusion module. long-range dependencies between modalities are captured by the model, and different granularity features are aggregated. Finally, this paper is validated on clinical multimodal lung medical image dataset and brain medical data dataset. On the lung medical image dataset, IE, AG, \({\textbf {Q}}^{{\textbf {AB/F}}}\), and EI metrics are improved by 4.53%, 4.10%, 6.19%, and 6.62% respectively. On the brain medical image dataset, SF, VIF, and \({\textbf {Q}}^{{\textbf {AB/F}}}\) metrics are improved by 3.88%, 15.71%, and 7.99% respectively. This model realizes better fusion performance, which plays an important role in the fusion of multimodal medical images.

Similar content being viewed by others

Introduction

Multimodal medical image fusion is to fuse medical images of different modals into one image, which provides a more comprehensive technical support for disease diagnosis and treatment. Medical images play an important role in computer-aided detection and diagnosis of malignant tumors. However, due to the difference of medical imaging equipment, different modals medical images examine different characteristics of the human body, and a single modality of medical images does not provide sufficient information. For example, Computed Tomography (CT) clearly displays bones and high-density structures information, CT images provide limited information on organ metabolism. Positron emission tomography (PET) reflects biological metabolic processes and neurotransmitter activity, but its spatial resolution is low. Magnetic resonance imaging (MRI) has advantages in imaging human soft tissue, but it does not reflect metabolic activity. Multi-modal medical image fusion aims to provide reliable references for clinical diagnosis and scientific research by integrating complementary and redundant information from images of different modalities1, which assists doctors in accurately diagnosing lesions2.

In recent years, deep learning is a key technology in multimodal medical image fusion3. The fusion methods are generally classified into 3 categories: Convolutional Neural Network (CNN)-based fusion methods, Autoencoder (AE)-based fusion methods, and Generative Adversarial Network (GAN)-based fusion methods. CNN-based fusion methods are a technology that uses convolutional neural network to extract and fuse image features. It learns local features through the convolutional layer and reduces the feature dimension through the pooling operation, and finally realizes the fusion with different modal image. Tang4 et al. proposes the Residual Decoder-Encoder Detail-Preserving Cross Network (DPCN), which employs a dual-branch framework to extract structural details from the source image. However, because the model only uses the last layer results, it is easy to lose the information of the middle layer. Umirzakova5 et al. propose a spatial/channel dual attention CNN combined with deep learning reconstruction (DLR), which improves the feature extraction. VIF-Net6 adopts a hybrid loss function that combines a modified structural similarity metric and total variation, it adaptively fuses thermal radiation and texture details through unsupervised learning. Image fusion methods based on encoder–decoder networks obtain fused images by designing and training encoders and decoders. The encoder extracts features, and the decoder reconstructs them, effectively mitigating the network depth impact on performance. DenseFuse7 introduces a dense connection mechanism in the encoder, effectively resolving the intermediate layer information loss issue and achieving better fusion results. Res2Net8 integrates ResNet into the encoder, enhancing the network’s multi-scale feature extraction capacity. GAN-based fusion methods use adversarial learning between the generator and discriminator to estimate the target probability distribution, thereby implicitly performing feature extraction, feature fusion, and image reconstruction. DSAGAN9 uses a dual-stream structure and multi-scale convolutions to extract deep features, thus enhancing the fused features with an attention mechanism to generate the final fused image. UCP2-ACGAN10 presents an adaptive conditional GAN model that uses a context perceptual processor to obtain context perceptual feature maps, which better highlight the lesion regions in the fused image. Zhou et al.11 propose a GAN model with dual discriminators, which uses the source image’s semantic information as constraints to generate semantically consistent images. However, multimodal medical image fusion still faces several challenges: In the encoding phase, existing methods don’t achieve effective interaction among different modalities and different granular features. In the feature fusion, the internal dependencies between modality are ignored in some degree, and it is difficult to capture the long-range dependencies between local and global features effectively. To solve this problem, this paper proposes a Long-Range Correlation-Guided Dual-Encoder Fusion Network for Medical Images. The main contributions of this paper are as follows:

-

A Long-Range Correlation-Guided Dual-Encoder Fusion Network for Medical Images is proposed. In the encoder, a Cross-dimension Multi-scale Feature Extraction Module and a dense network architecture are used to strengthen the feature Transmitting-Reuse ability among different layers. In the fusion module, it uses correlation calculation and layer-by-layer aggregate strategy to capture the long-range dependencies between different modal images.

-

Aiming at the effective feature extraction problem at different dimension features. This paper designs Cross-dimension Multi-scale Feature Extraction Module (CMFEM). In the feature extraction stage, multi-scale features are extracted along the height and width dimensions, enhancing the network’s sensitivity for lesion size.

-

Aiming at the problem of feature dependence between modalities. In the fusion stage, this paper designs a Long-range Correlation Fusion Module (LCFM). which calculates the long-range correlation coefficient between local features and global features, the features of the same granularity are fused by the LCFM. Long-range dependencies between modalities are captured, and features of different granularity are aggregated, avoiding detail information being neglected.

Methodology

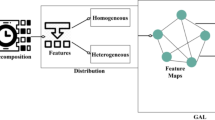

Existing multimodal medical image fusion methods generally focus on improving the individual modalities’ fine-grained feature extraction ability, but it neglects the inter-modal feature dependencies and the effective fusion about different granularity features. This paper designs a Long-Range Correlation-Guided Dual-Encoder Fusion Network for Medical Images, including the Cross-dimension Multi-scale Feature Extraction Module (CMFEM), Long-range Correlation Fusion Module (LCFM), and the loss function construction. The Long-Range Correlation-Guided Dual-Encoder Fusion Network for Medical Images adopts a dual-branch network structure, and it extracts coarse-to-fine grained features from the two modes through the dense connection structure, which enables efficient feature extraction and fusion. Each branch includes 5 feature extraction layers, where the 1 to 4 layers consist of 4 CMFEM blocks, and the last layer uses a 1\(\times\)1 convolution followed by Tanh as the nonlinear activation function. To reduce information loss, inspirated by DenseNet, this paper uses dense connections on each branch, which strengthen the feature transmitting-reuse ability among different layers. In order to improve the interaction ability of multi-scale features, the extracted image features of each layer are fused by LCFM module, and the fused features are concatenated and aggregated to generate global fused images. Finally, the model reconstructs the image using five 3\(\times\)3 convolution layers, generating a fusion image with sharp edges and clear lesion regions. The network structure of the Long-Range Correlation-Guided Dual-Encoder Fusion Network for Medical Images is shown in Fig. 1.

Cross-dimension multi-scale feature extraction module

Attention mechanism is a technique that simulates the ability of human visual attention and is used in deep learning to help models focus on important parts of input images. models are able to be more efficient and precise in handling complex tasks. A model weight is a parameter used to adjust the importance of input features. the weights determine how much each feature influences the final output. Channel attention deals with the relationship between channels in the image. Its core idea is to evaluate which channels are more important for the current task and dynamically adjust the activation intensity of each channel accordingly. Spatial attention mechanism focuses on the spatial dimension of the image, which enhances the model’s ability to focus on specific areas by applying weights to different positions of the input images. For multimodal medical images, spatial information is reflected as semantic features at the pixel level, where local spatial information is helpful to capture fine-grained low-level semantic features, and global spatial information supports the recognition and understanding of high-level semantic features. Due to the complexity of multimodal medical images, a single attention mechanism struggles to achieve extract critical features. Therefore, this paper designs a Cross-dimension Multi-scale Feature Extraction Module (CMFEM), as shown in Fig. 2, which extracts features of different scales through multi-scale convolution in width and height dimensions to obtain multi-scale feature Xs. Then, it computes the self-attention in space, enhances the features of the spatial information, and obtains the channel attention. This approach not only helps to reduce information redundancy and data complexity, but also improves model performance, making feature extraction of PET, CT and MRI images more accurate and efficient.

As shown in Fig. 2, the internal structure of Cross-dimension Multi-scale Feature Extraction Module (CMFEM), Firstly, the input \(X\in {\mathbb {R}}^{B\times C\times H\times W}\) is convolved and decomposed along the height and width dimensions. Two one-dimensional sequences are created by using global average pooling: \(X_H\in {\mathbb {R}}^{B\times C\times W}\) and \(X_W\in {\mathbb {R}}^{B\times C\times H}\). Secondly, in order to capture spatial information at different scales, the features are split into 4 sub-feature maps, \(X_H^i\) and \(X_w^i\), where i\(\in\) \(\{1,2,3,4\}\), each sub-feature map has C/4 channels, it efficiently capture the diverse spatial information within sub-feature maps. The module utilizes one-dimension convolutions with kernel sizes of 3,5,7,and 9 for the 4 sub-feature maps. In addition, in order to solve the issue of limiting receptive fields caused by using one-dimension convolutions, this paper utilizes lightweight sharing convolution. This method captures consistent features between the two dimensions indirectly modeling their dependencies, which expands the perceptive field and improves feature representation ability. Then, 4 groups of Group Normalization (GN) are applied for normalization, followed by a Sigmoid activation function to generate spatial attention, which activates specific spatial regions. Finally, the feature maps \(F_H\) and \(F_W\) from the H and W dimensions are multiplied with the input feature map X to obtain \(X_\textrm{s}.\) This process is represented by the following formula (1)–(5):

Where X represents the input feature map, and \(X_H^i\) and \(X_W^i\) represent the spatial structural information of the ith sub-feature along the H and W directions. \({\hat{i}}\) represents the ith sub-feature, \({\hat{i}} \in \{1, 2, 3, 4\}\).

Where \(\sigma (\cdot )\) represents the Sigmoid activation function, and \(GN_H^4(\cdot )\) and \(GN_W^4(\cdot )\) represent the 4 group normalization along the H and W directions, \(X_s\) represents the spatial information of X.

In order to retain and utilize the multi-scale spatial information extracted by multi-scale convolution, this paper uses a self-attention module to enhance the spatial prior information, which improves the performance of the model. Firstly, 3 different mapping functions \(F_j^Q,F_j^K\), \(F_j^V\)are used to project \(X_s\) into the query, key and value respectively, and Q, K, V are obtained. These features are used in subsequent attention calculations to obtain \(X_F\), then, \(X_F\) is compressed into one-dimension vector and activated by the Sigmoid function. Finally, the enhanced feature map F is obtained by multiplying Xs with the feature map that is calculated by Sigmoid and average pooling operation. The process is represented by the following formula (6)–(8):

Where \(F_{\text {proj}}(\cdot )\) represents the mapping functions for generating the query, key, and value. \(\sigma (\cdot )\) represents the Sigmoid activation function, and F represents the final output feature map.

Long-range correlation fusion module

To address the problem of feature dependence between modalities, this paper designs a Long-range Correlation Fusion Model (LCFM). This module captures the long-range dependencies between local and global features by calculating the correlation, and these dependencies are encoded into a correlation matrix. Then, 1\(\times\)1 convolution layer is used to reduce the dimension of the correlation matrix. After that, the two correlation feature maps are added, their size is compressed to 1\(\times\)1 by adaptive pooling, and then multiply with the input feature map to enhance the feature representation. In the last layer of the LCFM module, the two enhanced feature maps are concatenated with the input feature map along the channel dimension. The features of the same granularity are fused through the LCFM, which captures the long-range dependencies between the different modalities, and then the features of different granularities are aggregated. This paper presents the forward flow of LCFM in Algorithm 1.

The structure of the LCFM module is shown in Fig. 3, The LCFM fuses the feature maps extracted by CMFEM. In image fusion tasks, capturing the long-range dependencies of different modalities is the key to image fusion. However, when capturing long-range dependencies, overly relying on global information leads to the loss of fine details, and overly relying on local information fails to capture global semantic relationships. Therefore, balancing the relationship between the two in network design and ensuring that they can work together is a challenge. To solve this issue, in this paper, the long-range dependencies of local and global features are captured by calculating the correlation of different modalities. For example, the correlation between the features \(F_{i}\) and \(F_{j}\) is calculated by the following formula (9):

Where \(F_{i,j} \in {\mathbb {R}}^{C \times H \times W}\) (\(i, j \in \{1,\ldots ,N\}\)), and \(\Vert \cdot \Vert _2^2\) represents the L2 norm. Since the two modalities from the same scene are registered, the correlation distribution range remains consistent. The correlation \(Corr(F_i, F_j)\) between two different modality images ranges from [-1, 1]. long-range dependencies are captured by correlation calculation, but correlation calculation is complex and time-consuming. For example, when the feature map size is N=H\(\times\)W, the computational cost is \(N^2\). As the feature map size increases, the computational cost becomes extremely large. To overcome this problem, this paper introduces a pooling operation to simplify the calculation by the following formula (10):

Where \(AdapAvgPool(\cdot )\) is adaptive average pooling, which is used to compress the feature map and generate the feature map \({\hat{F}}_{temp} \in {\mathbb {R}}^{C \times T \times T}\). Then, the feature map \({\hat{F}}_{temp}\) is used to compute the correlation with the original image by the following formula (11):

Where \({\widehat{F}}_{temp}^k\in {\widehat{F}}_{temp},(k\in \{1,\ldots ,{\mathcal {T}}^2\}).\) In the fusion process, \(1\times 1\) convolution is used to reduce the dimension and linear transform input features, which enables reduce the redundancy between channels and highlights important features. 3\(\times\)3 convolution is used to improve the ability of capturing local features. The Sigmoid activation function is used to introduce nonlinearity and constrain the output values to the range of [0,1]. Finally, \(Corr_{F_1}\) and \(Corr_{F_2}\) are obtained by adaptive average pooling of the feature maps using the following formula (12) and (13).

Where \(Corr^{temp}_{F_1}\) and \(Corr^{temp}_{F_2}\) represent the long-range correlation matrices of features \(F_1\) and \(F_2\), respectively. \(Conv_{1\times 1}(\cdot )\) represents the 1\(\times\)1 convolution layer, \(Conv_{3\times 3}(\cdot )\) represents the 3\(\times\)3 convolution layer, \(\sigma (\cdot )\) represents the Sigmoid activation function, and \(AdapAvgPool(\cdot )\) represents the adaptive average pooling operation. Then, the obtained feature maps are multiplied with the original feature maps by the following formula (1415) and ():

Finally, the feature maps are concatenated using a concatenation strategy:

where \({\hat{F}}_f\) represents the fused feature, and \(concat(\cdot )\) represents the concatenation operation along the channel dimension.

Loss function

For the medical image fusion task, in this paper, the fusion network is trained in an unsupervised manner. The loss function of the Long-Range Correlation-Guided Dual-Encoder Fusion Network for Medical Images is designed, it is the combination of intensity loss and gradient loss by the following formula (17):

Where \(L_f\) represents the total loss, \(L_{int}\) represents the intensity loss, \(L_{grad}\) represents the gradient loss, \(\alpha\) is a hyperparameter.

Intensity Loss: The intensity loss ensures the global brightness consistency of the fused image by constraining the low-frequency components of the image. Therefore, the intensity loss is defined as formula (18):

Where \(L_{int}^{F1}\) and \(L_{int}^{F2}\) represent the intensity loss for the images, which are defined as formulas (19) and (20):

Where H and W represent the height and width of the image, and \(\Vert \cdot \Vert _1\) represents the \(L_I\)-norm.

Gradient Loss: The gradient loss is used to capture the high-frequency components of the image to ensure the accurate localization about the lesion and the clarity of the image texture information. Therefore, the gradient loss is defined as formula (21):

Where \(|\cdot |\) represents the absolute operation, \(\nabla\) represents the image gradient is computed using the Sobel operator, and max(\(\cdot\)) is the operation to obtain the maximum value.

Results

Dataset

The model is trained on two different datasets:

-

(1)

Lung tumor PET/CT images, The dataset uses clinical patients with lung tumors who underwent PET/CT general examination in a top-three hospital in Ningxia from January 2018 to June 2020. These images are high-quality, without artifacts, and clearly show tumor lesions. The patients did not undergo radiofrequency ablation or lung resection, and they have complete and detail pathological reports. The experiment includes 95 patients who met the specified criteria. Among them, There are 46 women (48%) aged between 30 and 80 years, with an average age of 54.32 years. There are 49 male (52%) aged between 27 and 74 years, with an average age of 50 years, and the height of the patients is not restricted. Patients need to do the following preparations before the PET/CT general examination: fast for 6 hours, which ensure that blood sugar is below 10, urinate, and remove metal ornaments. The patient is injected with 3.7mBq/kg deoxyglucose and waited for 1 hour. Subsequently, the patient lies flat in a dark room and waits for 45 to 60 minutes. Then, PET/CT images of the lungs and torso are collected, including cross-sectional, sagittal, and coronal images. To ensure the correct labeling of lesions and ensure the accuracy of the data, the dataset is evaluated and diagnosed by three expert physicians combined with clinical experience. The final result is decided according to the opinion of the majority experts. The three expert doctors include a thoracic surgeon with 8 years of clinical experience, a pulmonologist with 5 years of clinical experience, and a radiologist specializing in radiology. The final number of samples for the two image datasets of different modalities is 2430, respectively. In this paper, 1000 PET images and 1000 CT images are selected as the training set, and 400 are selected as the test set. The labels of the images are manually drawn by two clinicians. The data is transformed into JPG format by algorithms, and the image is adjusted to 356\(\times\)356 pixels. These pre-processing steps are designed to improve image quality and adapt to the training requirements of neural networks.

-

(2)

Brain MRI/PET images. This dataset comes from Harvard Medical dataset. 269 MRI images and 269 PET images are selected from this dataset. In order to expand the training dataset, this paper applies data augmentation to the MR-PET images, which generates 807 MRI images and 807 PET images. In this paper, 600 MRI images and 600 PET images are selected as the training set, and 200 MRI images and 200 PET images are selected as the test set. The size of the training image is 256 \(\times\) 256.

Experimental environment

Random seed: All experiments use a fixed random seed (seed = 42). Data split: Training/validation/test = 74%/10%/16%. Early stopping: Monitored metric = validation QAB/F; patience = 10, min_delta = 1e-4. During training, we use a batch size of 8 and the Adam optimizer. The initial learning rate is 0.01, and it is reduced by 10% every 5 epochs. Training runs for 80 epochs. Hardware Environment: The processor is Inetl(R) Xeon(R) Gold 5218 CPU @ 2.30GHz, Memory: 64GB, GPU: NVIDIA TITAN RTX. Software Environment: Windows Server 2019 Datacenter 64-bit operating system, Pytorch 1.12.1 deep learning framework, Python version 3.7.12, CUDA version 11.3.58.

Comparison experiment and evaluation metrics

Comparison experiment design

In order to verify the effectiveness of the Long-Range Correlation-Guided Dual-Encoder Fusion Network for Medical lmages, two sets of comparative experiments are conducted in the PET/CT image dataset of lung tumors. The first set of experiments is compared with decomposition transformation methods, including method 1: image fusion method based on NSCT12. Method 2: LatLRR13, the second set of experiments is compared with deep learning methods, including Method 3: Multi-modal image fusion method EMMA14. Method 4: Unsupervised DIF-Net15 based on encoder–decoder. Method 5: DATFuse16. Method 6: Fusion method based on dense Res2net and dual non-local attention model, Res2Fusion8. Method 7: U2Fusion17. Method 8: GAN-FM18. Method 9: CDDFuse19. In the brain MRI/PET image dataset, the fusion results of 6 deep learning-based methods are compared. Method 1: CDDFuse19; Method 2: DATFuse16; Method 3: EMMA14; Method 4: MATR20; Method 5: U2Fusion17; Method 6: PLAFusion21; Method 7: DDBFusion22; Method 8: MMIF23; Method 9: MURF24. To ensure the fairness of the comparison, all parameter values of the above methods are set to the default values specified by their authors.

In this paper, 8 evaluation metrics widely used in the field of image fusion are used, including Information Entropy (IE)25, Average Gradient (AG)26, Standard Deviation (SD)27, Spatial Frequency (SF)28, Sum of the Correlations of Differences (SCD)19, Visual Information Fidelity (VIF)29, Edge Preservation Values QAB/F30, and Edge Intensity (EI)31. Among them, IE is used to measure the randomness or variation of pixel values in an image. AG is used to represent image sharpness, reflecting the richness of texture details in the image. SD is used to measure the degree of variation in pixel values and the difference in brightness, reflecting the image’s contrast and details. SF describes the frequency and periodicity of brightness or color changes at different locations in the image, indicating texture and detail information. SCD evaluates the image fusion quality by comparing the structure, content, and distortion levels between the original images. VIF is used to assess the ability of the image to retain original information during transmission or processing. QAB/F reflects the visual information quality in the fused image. All these metrics are positively correlated with image fusion quality, meaning that the higher the value of the evaluation metric, the better the fusion quality.

Comparison experiment

In order to verify the validity of the Long-Range Correlation-Guided Dual-Encoder Fusion Network for Medical Images, 9 comparative experiments are carried out. In Section “CT lung window image and PET image group”, 10 methods are qualitatively evaluated for 200 pairs of CT lung window images and PET images, and 8 evaluation metrics are quantitatively evaluated for the fused images. In Section “CT mediastinal window image and PET image group”, 10 methods are qualitatively evaluated for 200 pairs of CT mediastinal window images and PET images, and 8 evaluation metrics are quantitatively evaluated for the fused images. In Section “MRI brain image and PET image group”, 10 methods are qualitatively evaluated for 100 pairs of MRI brain images and PET images, and 8 evaluation metrics are quantitatively evaluated for the fused images.In Section “Ablation experiment 4: ablation of pooling size”, ablation experiments are performed on the pooling size.

CT lung window image and PET image group

In this section, 200 pairs of CT lung window images and PET images are divided into 5 groups, with 40 pairs of CT lung window images and PET images in each group, and 10 comparison methods are used for comparison. 5 groups of visualization fusion results are selected, and the fusion results are shown in Fig. 4. In Fig. 4, columns 3 and 4 are results of the first set, and columns 5 through 12 are results of the second set. Table 1 shows the average results of evaluation metrics for each group of fused images. Among them, the best evaluation metric value is represented in red, and the second-best evaluation metrics are represented in blue. The histogram of the average evaluation metrics for the fused images is shown in Fig. 5.

As shown in Fig. 4, Methods 2, 3, 6, and 9 generate relatively clear fused images, but Methods 1 and 9 suffer from overly high brightness, weak lesion information, and unclear textures, making it difficult to accurately identify the detailed lung bronchial structures in the CT source images. Methods 3 and 7 accurately locate the lesion areas, but their contrast is low, resulting in the lesion is not prominent. Among them, Method 3 is severely exposed, which impairs the observation of details. Method 7 enables better retain the edge and texture information of CT source images, but its lesion information is not obvious. Method 1 generates fusion images with clear lesions but its ability to retain gradient information is poor, which makes it difficult to recognize edge and bone information in CT source images. The fusion images obtained by methods 4 and 8 are generally dark with poor visual effects. Moreover, the lesion information perception ability of method 8 is weak, which makes it difficult to locate the lesion area effectively. Methods 5 and 7 result in fusion images that are blurry with high brightness, resulting in the contrast between the region information and the background region information is not obvious. In contrast, the method proposed in this paper not only retains the edge and contour information from the CT source images effectively but also enhances the lesion information from the PET source images.

As shown in Table 1 and Fig. 5, there is little difference between the proposed method and NSCT in IE and SD. Compared with the highest value of the comparison method, the proposes method improves by an average increase of 4.55%, 5.64% and 4.49%, respectively, and compared with the lowest value of the comparison method, the proposed method improves by an average of 4.55%, 5.64%, and 4.49%, respectively. Therefore, the proposed method in this paper shows better performance in fusing CT lung window images and PET images.

CT mediastinal window image and PET image group

In this section, 200 pairs of CT lung window images and PET images are divided into 5 groups, with 40 pairs of CT mediastinal window images and PET images in each group, and 10 comparison methods are used for comparison. 5 groups of visualization fusion results are selected, and the fusion results are shown in Fig. 6. In Fig. 6, columns 3 and 4 are results of the first set, and columns 5 through 12 are results of the second set. Table 2 shows the average results of evaluation metrics for each group of fused images. Among them, the best evaluation metric value is represented in red, and the second-best evaluation metric is represented in blue. The histogram of the average evaluation metrics for the fused images is shown in Fig. 7.

As shown in Fig. 6, Methods 1, 4, and 10 are capable of generating clear fusion images and accurately locating the lesion area. However, the images from Methods 1 ,4 and 7 exhibit low overall contrast and blurred details, with a lack of clear edge information. Method 2 generates fused images with a clear lesion area and high contrast. However, the exposure is overly high, the edge and texture information cannot be clearly and accurately identified. Method 8 generates fused image that lacks prominent lesion information in the lesion area. The lesion information of fusion images generated by methods 3 and 6 is weak and unclear. The fusion images generated by method 5 and method 9 are blurred, the visual effect is poor, and the contrast between the lesion information and the background information is not obvious. Method 10 generates fused images that are clearer, and the contrast between the lesion area and the background area is obvious, which enables effectively highlights the lesion area. It not only retains the bone and edge contour information of the CT source images, but also highlights the lesion information of the PET source image.

As shown in Table 2 and Fig. 7, there is little difference between the proposed method and NSCT in SCD, and CDDFuse in EI. However, our method performs better in AG, QAB/F, and EI, with average increases of 4.47%, 6.74%, and 8.74% over the highest values of the comparison methods, and average increases of 112.41%, 168.75%, and 87.85% over the lowest values. Therefore, our method achieves clear edge textures and lesion regions, resulting in good visual effects in the fused images.

MRI brain image and PET image group

In this section, 100 pairs of MRI brain images and PET images are divided into 5 groups, with 20 pairs of MRI brain images and PET images in each group, and 10 comparison methods are used for comparison. 5 groups of visualization fusion results were selected, and the fusion results are shown in Fig. 8. Table 3 shows the average results of evaluation metrics for each group of fused images. Among them, the best evaluation metric value is represented in red, and the second-best evaluation metric is represented in blue. The histogram of the average evaluation metrics for the fused images is shown in Fig. 9.

As shown in Fig. 8, Methods 2, 3, 4, and 9 better retain the color features of the PET images, but the structural details of the MRI are insufficient, the tissue level of the brain is not obvious, and the contrast is low, which leads to the unclear distinction between the lesion area and the surrounding structure. Methods 1, 6 and Method 10 not only retain the color information of PET, but also well preserve the structural details of MRI. However, method 10 has higher contrast and clear edges, and has a clear sense of brain hierarchy and rich structural information.

As shown in Table 3 and Fig. 9, there is little difference between the proposes method and CDDF in AG, and DATFuse in IE and EI. However, our method performs better in SF, VIF, and QAB/F, with average increases of 3.88%, 15.71%, and 7.99% over the highest values of the comparison methods, and average increases of 123.08%, 89.25%, and 201.54% over the lowest values. Therefore, this paper gains a clear edge textures and lesion regions, resulting in good visual effects in the fused images.

Ablation experiment

In order to verify the effectiveness of each module of the Long-Range Correlation-Guided Dual-Encoder Fusion Network for Medical lmages, 3 CT lung window images and PET images, 3 CT mediastinal window images and PET images, and 3 MRI brain images and PET images are selected for ablation experiments to verify the effectiveness of the proposed method. Exp1: Remove all the modules design in this paper, and use the basic encoder–decoder network (Base) for feature extraction, and adopts the direct addition fusion strategy for fusion. Exp2: Dual-encoder Single-decoder network architecture is used to verify the effectiveness of enhancing fine-grained features from different modals. Exp3: Based on Exp2, CMFEM is added to validate its effectiveness. Exp4: Based on Exp3, only the last stage of CMFEM - LCFM is used for feature fusion. Exp5: Long-Range Correlation-Guided Dual-Encoder Fusion Network for Medical lmages. The details are shown in Table 4.

Ablation experiment 1: CT lung window image and PET image

In Fig. 10, the fusion image is generated by Exp1 retains some edge information from the CT source image and lesion information in the PET source image. However, because the dense aggregate encoder, CMFEM and LCFM modules are not included, the direct addition fusion strategy is adopted, which leads to the lesion area is not significant enough, and the contrast between the lesion and the background is low. In contrast, the fusion images from Exp2 show improve edge and texture information, indicating that the dense Aggregate dual-encoder is more effective at preserving the structural details. However, due to the lack of CMFEM and LCFM modules, the intensity distribution of the image is uneven, resulting in unclear lesion information. In the fusion image of Exp3, the lesion area is more prominent, and the contrast between the lesion and the background is significantly improved, which indicates the effectiveness of CMFEM module in capturing image intensity distribution. However, due to the lack of LCFM module, the edge and intensity information extracted by the fusion strategy of direct addition is insufficient, resulting in high overall image brightness. Compared with Exp3, Exp4 fusion image improves brightness, but there are still artifacts around the lesion, and the edge and detail information are poor. Exp5, the method proposed in this paper generates a fusion image that effectively preserves edge and texture information, with the lesion area clearly visible.

As shown in Table 5 and Fig. 11, The evaluation metrics value of Exp1 is the lowest. Compared with Exp1, the values of various evaluation metrics of Exp3 and Exp4 have little difference from the proposed method, but they are all lower than the proposed method. For example, the SCD of Exp4 is second only to the proposed method. The above results reflect that the fusion images obtained by the proposed method have certain advantages in both subjective and objective evaluation.

Ablation experiment 2: CT mediastinal window image and PET image

The results of CT mediastinal window images and PET images ablation experiments are consistent with the fusion results of CT lung window images and PET images ablation experiments. As shown in Fig. 12, the overall effect of Exp1 fusion image is poor, the lesion area is not prominent, and the contrast is low. Exp2 retains more edge and texture information, and CT structure information is clearer, but the brightness distribution is uneven, resulting in local brightness distortion. In the fusion image of Exp3, the lesion area is more prominent, and the contrast between the lesion and the background is enhanced, but the overall brightness of the image is high, and the detail performance is still insufficient. Exp4 not only makes the lesion information more prominent, but also improves the brightness distribution, and CT structure information is retained, but there are still artifacts and blurring phenomena in the detailed areas. Exp5 is the method proposed in this paper. The fusion images are clear in edges and details, the lesion area is prominent and the overall visual quality is better.

As shown in Table 6 and Fig. 13, The method in this paper is superior to the other 4 methods in 8 objective evaluation metrics, especially in AG, VIF, QAB/F, and EI, the method in this paper has obvious advantages over the other 4 methods. In addition, the evaluation metrics value of Exp1 is the lowest. Exp3 and Exp4 have little difference with this paper in each evaluation metric. For example, Exp4 is only secondary to this method on SD, SCD, and VIF. Therefore, the fusion image obtained by the method in this paper has certain advantages in both subjective and objective evaluation.

Ablation experiment 3: MRI brain image and PET image

As show in Fig. 14, the overall effect of Exp1 fusion images is poor and the contrast is low. The edge and texture information of the fusion image obtained by Exp2 are improved, but the brightness distribution is uneven. The fusion images obtained by Exp3 shows more prominent lesion areas, but blurred details. The fusion image obtained by Exp4 loses MRI structural information and has artifacts. The fusion image of Exp5 has the best performance in edge sharpness and contrast, and has rich details, and has a good visual effect.

As shown in Table 7 and Fig. 15, The method in this paper is superior to the other 4 methods in 8 objective evaluation metrics, especially in SD, VIF, QAB/F, and EI, the method in this paper has obvious advantages over the other 4 methods. In addition, the evaluation metrics value of Exp1 is the lowest. Exp3 has little difference with this paper in each evaluation metric. Therefore, the fusion image obtained by the method in this paper has certain advantages in both subjective and objective evaluation.

Ablation experiment 4: ablation of pooling size

When calculating the correlation among modalities, we perform pooling operations on the feature map to reduce parameters and computational load. We selected three Pooling sizes, namely 8\(\times\)8, 16\(\times\)16 (Our), and 32\(\times\)32, and provided a comparison between the FLOPs/ parameter and the fusion metrics (IE, AG, SD, SF, SCD, VIF, QAB/F, EI). The experimental results are listed in Tables 8 and 9. When T=16, FLOPS and Parameters are only 94.75G / 556.8K; Compared with T=32, FLOPs decreased by 29.9%, parameters decreased by 22.7%, while the indicators were better or remained the same. Compared with T=8, although FLOPs increased by 12%, the metrics have been comprehensively improved. In conclusion, T=16 strikes the best balance between computational cost and fusion quality, so we set it as the default setting in the paper.

Conclusion and future work

Conclusion

Aiming at the existing multimodal medical image fusion methods ignore the feature dependence among modals, and the feature fusion ability with different granularity is not strong. This paper proposes a Long-Range Correlation-Guided Dual-Encoder Fusion Network for Medical Images. Firstly, a Long-Range Correlation-Guided Dual-Encoder Fusion Network for Medical Images is designed, which aggregates multi-scale features layer by layer and captures feature dependencies between modals, it achieves an effective fusion of different granularity features. Secondly, a Cross-dimension Multi-scale Feature Extraction Module is designed in the feature extraction stage, which effectively retains the coarse-to-fine grain features by extracting different scale information. Finally, the long-range correlation coefficients of local and global features are calculated by the Long-range Correlation Fusion Module, and the long-range dependencies between local and global features is captured. In addition, The method presented in this paper is validated on clinical multimodal lung medical image dataset and brain medical image dataset. On the lung medical image dataset, the evaluation metrics such as IE, AG, QAB/F, and EI show average improvements of 4.53%, 4.10%, 6.19%, and 6.62%, respectively, compared to the optimal performance of the other 9 methods. On the brain medical image dataset, metrics like SF, VIF, and QAB/F show average improvements of 3.88%, 15.71%, and 7.99%, respectively, compared to the best performance of the other 6 methods. The experimental results show that the medical images fused by the model exhibit clear structures and rich texture details. This accomplishment provides valuable support for doctors’ diagnostic assistance and preoperative preparation.

Future work

Although encoder–decoder network is widely used in the medical image fusion field. However, there are still some problems that need further study: Firstly, due to differences in imaging principles and dynamic organ deformation, most medical multimodal datasets have spatial registration errors; Secondly, the traditional method only relies on image information, but it lacks multi-source data integration (Such as medical history, doctor’s advice). Thirdly, the evaluation metrics of image fusion effect are not uniform, which leads to the lack of algorithm comparability. Therefore, the future encoder–decoder network research for multi-modal medical image fusion are further explored from the following directions: Firstly, combined with the cross-modal self-supervised registration method, which improves the accuracy and robustness of image registration. Secondly, Multi-source clinical data (such as medical history and doctor’s advice) are fused to enhance the model’s performance. Thirdly, a unified evaluation system is important to improve the algorithms comparability.

Data Availability

1. The brain PET/MRI dataset used in this study is publicly available from the Harvard Brain Atlas: https://www.med.harvard.edu/AANLIB/home.html. 2. The clinical lung PET/CT dataset is not publicly available due to patient privacy restrictions but is available from the corresponding author upon reasonable request.

References

Zhang, R. et al. Utsrmorph: A unified transformer and superresolution network for unsupervised medical image registration. IEEE Trans. Med. Imaging 44, 891–902. https://doi.org/10.1109/TMI.2024.3467919 (2025).

Zhou, T. et al. Model-data co-driven u-net segmentation network for multimodal lung tumor images. Appl. Soft Comput. 180, 113410. https://doi.org/10.1016/j.asoc.2025.113410 (2025).

Zhou, T. et al. Deep learning methods for medical image fusion: A review. Comput. Biol. Med. 160, 106959 (2023).

Tang, W., Liu, Y., Cheng, J., Li, C. & Chen, X. Green fluorescent protein and phase contrast image fusion via detail preserving cross network. IEEE Trans. Comput. Imaging 7, 584–597 (2021).

Umirzakova, S., Shakhnoza, M., Sevara, M. & Whangbo, T. K. Deep learning for multiple sclerosis lesion classification and stratification using MRI. Comput. Biol. Med. 192, 110078. https://doi.org/10.1016/j.compbiomed.2025.110078 (2025).

Hou, R. et al. VIF-Net: An unsupervised framework for infrared and visible image fusion. IEEE Trans. Comput. Imaging 6, 640–651. https://doi.org/10.1109/TCI.2020.2965304 (2020).

Li, H. & Wu, X.-J. DenseFuse: A fusion approach to infrared and visible images. IEEE Trans. Image Process. 28, 2614–2623. https://doi.org/10.1109/TIP.2018.2887342 (2019).

Wang, Z., Wu, Y., Wang, J., Xu, J. & Shao, W. Res2Fusion: Infrared and visible image fusion based on dense res2net and double nonlocal attention models. IEEE Trans. Instrum. Meas. 71, 1–12. https://doi.org/10.1109/TIM.2021.3139654 (2022).

Fu, J., Li, W., Du, J. & Xu, L. DSAGAN: A generative adversarial network based on dual-stream attention mechanism for anatomical and functional image fusion. Inf. Sci. 576, 484–506. https://doi.org/10.1016/j.ins.2021.06.083 (2021).

Zhou, T., Li, Q., Lu, H., Liu, L. & Zhang, X. UCP2-ACGAN: An adaptive condition GAN guided by U-shaped context perceptual processor for PET/CT images fusion. Biomed. Signal Process. Control 96, 106571. https://doi.org/10.1016/j.bspc.2024.106571 (2024).

Zhou, H., Wu, W., Zhang, Y., Ma, J. & Ling, H. Semantic-supervised infrared and visible image fusion via a dual-discriminator generative adversarial network. IEEE Trans. Multimedia 25, 635–648. https://doi.org/10.1109/TMM.2021.3129609 (2023).

Thakur, S., Singh, A. K. & Ghrera, S. P. NSCT domain-based secure multiple-watermarking technique through lightweight encryption for medical images. Concurr. Comput. Practice Exp. 33, e5108 (2021).

Li, H. & Wu, X.-J. Infrared and visible image fusion using latent low-rank representation. arXiv preprint arXiv:1804.08992 (2018).

Zhao, Z. et al. Equivariant multi-modality image fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 25912–25921 (2024).

Jung, H., Kim, Y., Jang, H., Ha, N. & Sohn, K. Unsupervised deep image fusion with structure tensor representations. IEEE Trans. Image Process. 29, 3845–3858 (2020).

Tang, W., He, F., Liu, Y., Duan, Y. & Si, T. DATFuse: Infrared and visible image fusion via dual attention transformer. IEEE Trans. Circuits Syst. Video Technol. 33, 3159–3172 (2023).

Xu, H., Ma, J., Jiang, J., Guo, X. & Ling, H. U2Fusion: A unified unsupervised image fusion network. IEEE Trans. Pattern Anal. Mach. Intell. 44, 502–518 (2020).

Zhang, H., Yuan, J., Tian, X. & Ma, J. GAN-FM: Infrared and visible image fusion using GAN with full-scale skip connection and dual Markovian discriminators. IEEE Trans. Comput. Imaging 7, 1134–1147 (2021).

Zhao, Z. et al. CDDFuse: Correlation-driven dual-branch feature decomposition for multi-modality image fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 5906–5916 (2023).

Tang, W., He, F., Liu, Y. & Duan, Y. MATR: Multimodal medical image fusion via multiscale adaptive transformer. IEEE Trans. Image Process. 31, 5134–5149 (2022).

Tang, L., Yuan, J., Zhang, H., Jiang, X. & Ma, J. PIAFusion: A progressive infrared and visible image fusion network based on illumination aware. Inf. Fusion 83–84, 79–92. https://doi.org/10.1016/j.inffus.2022.03.007 (2022).

Zhang, Z., Li, H., Xu, T., Wu, X.-J. & Kittler, J. DDBFusion: An unified image decomposition and fusion framework based on dual decomposition and Bézier curves. Inf. Fusion 114, 102655. https://doi.org/10.1016/j.inffus.2024.102655 (2025).

He, D., Li, W., Wang, G., Huang, Y. & Liu, S. MMIF-INet: Multimodal medical image fusion by invertible network. Inf. Fusion 114, 102666. https://doi.org/10.1016/j.inffus.2024.102666 (2025).

Xu, H., Yuan, J. & Ma, J. Murf: Mutually reinforcing multi-modal image registration and fusion. IEEE Trans. Pattern Anal. Mach. Intell. 45, 12148–12166 (2023).

Roberts, J. W., Aardt, J. A. & Ahmed, F. B. Assessment of image fusion procedures using entropy, image quality, and multispectral classification. J. Appl. Remote Sens. 2, 023522 (2008).

Yu, S., Zhongdong, W., Xiaopeng, W., Yanan, D. & Na, J. Tetrolet transform images fusion algorithm based on fuzzy operator. J. Front. Comput. Sci. Technol. 9, 1132 (2015).

Zhang, X., Liu, G., Huang, L., Ren, Q. & Bavirisetti, D. P. IVOMFuse: An image fusion method based on infrared-to-visible object mapping. Digit. Signal Process. 137, 104032 (2023).

Wu, P., Yang, S., Wu, J. & Li, Q. Rif-Diff: Improving image fusion based on diffusion model via residual prediction. Image Vis. Comput. 157, 105494. https://doi.org/10.1016/j.imavis.2025.105494 (2025).

Han, Y., Cai, Y., Cao, Y. & Xu, X. A new image fusion performance metric based on visual information fidelity. Inf. Fusion 14, 127–135 (2013).

Ouyang, Y., Zhai, H., Hu, H., Li, X. & Zeng, Z. FusionGCN: Multi-focus image fusion using superpixel features generation GCN and pixel-level feature reconstruction CNN. Expert Syst. Appl. 262, 125665. https://doi.org/10.1016/j.eswa.2024.125665 (2025).

Song, W., Li, Q., Gao, M., Chehri, A. & Jeon, G. SFINet: A semantic feature interactive learning network for full-time infrared and visible image fusion. Expert Syst. Appl. 261, 125472. https://doi.org/10.1016/j.eswa.2024.125472 (2025).

Funding

This work was supported by the National Natural Science Foundation of China (Grant Nos. 62561002 and 62576009).

Author information

Authors and Affiliations

Contributions

Tao Zhou: Writing—original draft, visualization, validation, methodology, investigation. Zhe Zhang: Writing—review and editing, supervision. Huiling Lu: Writing—review and editing, supervision, methodology, investigation. Mingzhe Zhang: Methodology, investigation. Jiaqi Wang: Writing—review and editing, supervision. Qitao Liu: Supervision.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhou, T., Zhang, Z., Lu, H. et al. Long-range correlation-guided dual-encoder fusion network for medical images. Sci Rep 15, 38964 (2025). https://doi.org/10.1038/s41598-025-22834-1

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-22834-1