Abstract

Timely and correct identification of diseases in the apple leaf is also important in protecting crop production and sustaining agriculture. This paper introduces E-YOLOv8, a lightweight improved version of YOLOv8, that can be implemented in real-time and with a limited resource base. The model has three key contributions: (1) GhostConv and C3 fusion to reduce redundant feature extraction and computational cost, (2) CBAM attention and a specifically designed FPN to maximize multi-scale feature fusion and small-lesion detections, and (3) large-scale evaluation on datasets of apple leaf disease, as well as ablation experiments and operational testing on edge devices to verify the accuracy and viability of this model. In experiments, E-YOLOv8 reaches 93.9mAP0.5 using 5.3 GFLOPs and 1.8 M parameters, a 33.9x factor smaller than that of YOLOv8l. These results indicate that E-YOLOv8 has achieved better performance than recent state-of-the-art detectors and is still applicable to practical real-world agricultural tasks.

Similar content being viewed by others

Introduction

Apples are essential fruits worldwide. As per the statistics, India produces an average of 2400 tonnes of apples every year1. Also, India imports 70% of apples consumed during the year. Apple leaf diseases (ALD) have a significant effect on apple quality and yield2. A tree may be harmed by the diseases if its leaves dry up and fall off, inhibiting the development of fruit and the formation of flower buds. The effects of these illnesses reduce agricultural laborers’ pay and impede the expansion of the apple business. Early and effective detection of apple leaf diseases becomes essential to preserving the industry’s growth and raising farm worker compensation3.

Apple leaf diseases (ALDs) are found mostly through manual detection, which requires trained researchers or agricultural personnel to make visual assessments. This method works well for small-scale fruit tree management, but it is unfeasible for large-scale cultivation, which could lead to biased evaluations and insufficient data. Thus, it’s imperative to have a quick and precise way of identifying diseases and pests that affect apple leaves. Precision agricultural management, disease identification, and crop phenotyping are just a few of the fields where visual computing and powerful machine learning have greatly improved agriculture4.

The nation’s agricultural economic development depends on the apple industry, which provides the most income for rural agrarian producers. On the other hand, ALDs are a significant factor restricting Apple’s quality and productivity. Conventional approaches for detecting ALD mainly depend on the knowledge of manufacturers, which is labor-intensive and prone to prejudice and subjective variables. Therefore, enhancing the productivity and quality of the apple sector depends heavily on using information technology to aid in the quick diagnosis and precise identification of apple leaf diseases5.

Several researchers are using convolutional neural networks (CNNs) to detect apple leaf diseases and stop the spread of illness. These neural networks can identify the lesion’s location and classify the various illnesses classifications6. Within the object detection sector, there are two approaches for detecting apple leaf disease: one-stage and two-stage methods7. Two-stage approaches, such as Faster R-CNN, utilize the region proposal network (RPN) first to generate object proposals and then use the extracted regional features to recognize objects8. One-stage approaches do not require a region proposal extraction step; instead, they immediately forecast items in every position on the feature map. YOLO is a one-step method that combines detection with a regression process to forecast and classify target regions9. Therefore, YOLOv8l is studied and analyzed in this study to detect ALDs.

While deep learning-based techniques may accurately identify and detect traditional apple diseases, they are still not very accurate in quickly diagnosing apple diseases with different sizes and deformable geometries10. Since the present CNN models employ the same convolution technique for different feature maps and the gathered data pixel coordinates are fixed, it is difficult to adapt to different geometrical geometries of the disease11. Therefore, a novel E-YOLOv8 model is proposed in this study that extracts various apple leaf disease features. The main objective and contributions of this study are summarized as:

-

The YOLOv8l backbone network is altered with GhostNet blocks instead of CSPDarkNet blocks. This modification helps incorporate a lightweight ghost module. GhostNet is a neural network architecture designed to create more efficient models using fewer parameters and less computational power.

-

The C2f blocks are also replaced with C3 blocks in the backbone of YOLOv8. This helps reduce computation time while retaining features.

-

An attention mechanism called CBAM is implemented with the baseline YOLOv8l network to incorporate channel and spatial attention features that help focus on the what and where of essential features. This module enhances the model’s performance and helps in making the model lightweight.

-

An enhanced FPN is implemented with the baseline YOLOv8l network to detect patches on the leaves of apples and improve the prediction abilities of the proposed model.

When compared to the rest in its family, YOLOv8 is recognized as the most advanced, accurate and ready to be deployed. The unique features of this network mean it is especially useful for spotting apple leaf disease in crops. The reason researchers in apple leaf disease detection favor YOLOv8 over other YOLO variants is its improved design, stronger performance and greater ability to handle different models. The newer recognition head and framework in YOLOv8 make it better than before at spotting and detecting small objects like leaf lesions. It was found that both anchor-free detection and feature fusion give higher mAP scores on evaluation data. In contrast to prior versions, YOLOv8 no longer relies on anchor boxes and so requires less effort to train the network. It becomes especially handy for detecting disease spots on leaves, as these are often unevenly shaped and tiny. With C2f modules from YOLOv8, a lighter version of C3, the model is able to enhance feature extraction and remain compact. This framework improves gradient flow and feature representation over different scales, benefiting work seen in various farming areas. To reduce resources, I used GhostConv, CBAM helps with attention and Custom FPN handles multi-scale detection. In place of standard convolution, GhostConv is used to lower both the parameter count and latency. Identifying the diseased parts on the leaves improves how the model separates between classes. Refined FPN makes it easier to detect early or small lesions not noticed by shallower detectors. For several evaluation steps, YOLOv8 beats YOLOv5 and YOLOv7 by 3–7% when considering mAP@0.5, mainly on datasets with small or difficult objects. Because disease lesions on apple leaves can be hard to see and appear similar, this improvement in resolution is essential. Different versions of YOLOv8 (YOLOv8n, YOLOv8s, YOLOv8m, YOLOv8l and YOLOv8x) vary in their sizes and how fast they work. E-YOLOv8 benefits from YOLOv8l by getting good results without being overloaded in software or memory.

The rest of the paper is organized as follows: Section “Related literature survey” describes the literature survey, and section “Methodology” details the methods and materials used in this study. Section “Experimentation” describes experimental results, and secion “Conclusion” concludes the paper.

Related literature survey

The application of computer vision technologies to disease diagnosis is a significant field of research in modern agriculture. Disease detection is becoming more and more critical in the field of deep learning for crop protection and agricultural output. Zhao Kai et al.12 profounded a YOLOv5-Res model for apple leaf disease detection. The model utilizes Resblock and CSP blocks, achieving a mAP of 82.1%. Shixin Huo et al.13 suggested an improved YOLOv8 with an improved C2f-RFEM backbone architecture. The model achieved mAp of 58.5%. Zhengyan et al.14 proposed A-Net model based on YOLOv5 for apple leaf disease detection. The model achieved mAP of 92.7%. Fengmei et al.15 profounded a TPH-YOLOv5 model. The model combines the SimAM attention mechanism and the MobileNetV3 model. It achieved a mAP of 94.26%.

Rabia et al.16 utilized the VGG16 model for the early detection of apple leaf diseases. The model achieved good results. Vibhor Kumar et al.17 suggested a CNN model with fewer layers for detecting apple leaf diseases. The model achieved satisfactory results. Weishi Xu et al.18 presented an ALAD-YOLO model for apple leaf disease detection. The model is based on the YOLOv5s model and achieved an accuracy of 90.2%. Huishan Li et al.19 suggested BTC-YOLOv5s model for apple leaf disease detection. The model uses a Bi-directional feature pyramid network, transformer, and convolutional block attention mechanism; hence the name is BTC. The model achieved a mAP of 84.3%. Xulu Gong et al.20 introduced an improved Faster-RCNN model for apple leaf disease detection. The model achieved a 62.3% average precision. Mingyuan Zhong et al.21 altered the YOLOv5s model with the CBAM attention module for better apple leaf disease detection. The improved YOLOv5s model achieved mAP of 84.8%.

Zeng Wei et al.22 profounded YOLOv8-GSSW model for detecting apple leaf diseases. The model uses GSConv convolutional layers and CIoU loss function. The model achieved 87.3% of mAP. Songbo Cui et al.23 suggested the YOLOv7-Me model for detecting apple leaf diseases. The backbone and head of the YOLOv7 model are modified in this study. The model achieved a mAP of 85.36%. Imtiaz Ahmed et al.24 compared deep-learning models to detect apple plant diseases in orchards in the Kashmir Valley. The results showcased that the Faster-RCNN model achieved the highest mAP of 92%. Silu Zhang et al.25 proposed the YOLO-ACT model for apple leaf disease classification. YOLO-ACT is an adaptive cross-layer integration method based on the YOLOv8s model. The model achieved a mAP of 85.1%. Meng Lv et al.26 presented the YOLOv5-CBAM-C3TR model for apple leaf disease detection. The model is based on YOLOv5 and achieved an mAP of 73%. Although a lot of research has been done in apple leaf disease detection, there are still some loopholes and challenges left. Table 1 presents the research gaps in SOTA detection methods used for apple leaf disease.

Recent developments in image processing and image detection also reflect themes that are consistent with the goals of this research project. An example is prompt-based learning, which has been studied to be able to train model representations with weak supervision. Wangyu Wu et al.27 illustrates the potential of using prompts to enhance semantic consistency in pixel-level forecasting, and proposes more general ways to introduce generative priors into detection systems. Likewise, Wangyu Wu et al.28 presents a patch-based approach to contrastive learning that increases the discriminative power of feature maps, which in effect improves weakly supervised recognition of fine-grained structures. Though these studies deal with segmentation, their concepts of prompt-driven representation and patch-based supervision have echoes with the problems of spotting small lesions in agricultural images.

Meanwhile, lightweight detection schemes are being developed to support structural defect detection with limited resources. This trend is observed in the recent Chen et al.29, a crack detection model that incorporates attention mechanisms in a YOLO backbone to detect subtle and irregular crack patterns with good accuracy and without compromising on computational efficiency. The E-YOLOv8 offered corresponds to those trends by combining lightweight architectures (GhostConv, C3) and the use of targeted attention (CBAM) and finer fusion of features. In this way, this work contributes to and supports these new developments by showing that efficiency-based architectural design with attention refinement is capable of not only high accuracy but also real-time applicability in agricultural disease detection.

Consequently, this research presented a model for the ALD detection job, considering the shortcomings of the previous studies. The final experimental findings confirmed the efficacy of the suggested model, as verified using the AppleLeaf9 Dataset, which includes a variety of apple leaf diseases.

Methodology

This section discuss the architecture details of the proposed model and modules used to improve the performance.

YOLOv8 architecture

One of the most recent iterations of the YOLO family of object identification methods is YOLOv831. Five iterations of YOLOv8 have been released by Ultralytics: YOLOv8n, YOLOv8s, YOLOv8m, YOLOv8l, and YOLOv8x32. The head, neck, and backbone comprise the three primary parts of YOLOv8’s architecture. The neck receives input images from the backbone, which uses a sequence of convolution and pooling procedures to extract feature inputs33. The neck performs additional operations like fusing features, transforming scales, and processing the extracted backbone’s properties. The head merges the work done by the neck and backbone networks to form a final detection result. YOLOv8 consists of many crucial modules, each adding to the prediction procedure. Spatial pyramid pooling (SPPF), batch normalization (BN), convolutional layers (Conv), cross-stage partial network (C2f), and sigmoid linear unit (SiLU) are all part of the backbone network. The C2f module, the concatenation layer (Concat), and the up-sampling layer (Upsample) are all part of the neck portion. The Detect layer for detection, which produces the detection results, is installed in the head portion. Together, these components ensure that YOLOv8 balances detection accuracy and processing efficiency. In this study, the YOLOv8l model is enhanced to improve the accuracy and efficiency of detecting apple leaf disease. In Fig. 1, the basic architecture of YOLOv8 is shown.

A comparison between the backbone architecture of YOLOv8 and YOLOv5 is depicted in Fig. 2. The YOLOv8 backbone network’s general structure is similar to that of YOLOv5, but it does not make use of YOLOv5’s Conv3 modules (C3)34. Instead, YOLOv8 uses the ELAN (Efficient Layer Aggregation Network) concept from YOLOv735, combining C3 and ELAN to create the CSPDarknet53, the leading architecture for C2f module. Adding more convolutional layers makes feature map fields more extractable.

Proposed E-YOLOv8 model

The proposed E-YOLOv8 is based on YOLOv8l, with four specific improvements, namely (1) three types of GhostConv layers are used in place of the regular convolution layers to minimize redundancy and computational costs, (2) C3 blocks are used in place of C2f modules to aggregate features in a lightweight manner (3) CBAM attention is added to identify subtle lesions with high accuracy, and (4) a more refined FPN leads to better multi-scale fusion of small disease spots. All these changes make the models 36% simpler and 93.9% more accurate at detecting objects (mAP@0.5), compared to the baseline YOLOv8l and other lightweight detectors, as shown in Fig. 3. These improvements help in reducing the weight of the model and help in the fusion of multi-scale features. This leads to enhanced identification of irregular lesions and small apple targets. Table 4 describes the parameters used in this study to classify apple leaf diseases.

Optimization of backbone network

Several convolutional modules in traditional neural networks generate duplicate feature maps when processing more significant images. Higher computing needs and a greater number of parameters result from this redundancy. To solve this issue, Han et al. created GhostNet, a lightweight network model that generates feature maps equal to traditional convolutional layers using the Ghost module36. After that, it swaps them out for the initial convolutional layers and uses a nonlinear convolution process to compress the input feature maps. It generates another set of feature maps, applies a conventional convolutional operation to it, analyses the layers of the feature map, and compresses the input layer using a nonlinear convolutional operation. Then, these two feature maps are merged to create a new feature map. This technique preserves good performance while reducing the model’s parameters and computing complexity. Figure 4 depicts the GhostNet convolution process.

In a traditional CNN, given an input feature layer as \(\:A\in\:{R}^{x*y*z}\), where x is the input channel, y is the data height, and z is the data width. A conventional conv layer is depicted in Eq. 1 as;

where * = convolutional operation, b = bias, \(\:A\in\:{R}^{{x}^{{\prime\:}}*{y}^{{\prime\:}}*{z}^{{\prime\:}}}\) is the output of feature maps, \(\:k\in\:{R}^{xXsXsXn}\) is the kernel, \(\:{y}^{{\prime\:}}\) and \(\:{z}^{{\prime\:}}\) are the height and width of data, and sxs is the kernel’s size, defined by k. Based on this, Eq. 2 can be used to calculate the FLOPs of a typical CNN. From this, it is inferred that a mobile device’s memory and processing capacity will be exhausted by performing numerous FLOPs if the channels x and convolutional kernels n are enormous.

Many of these output feature maps are identical and frequently show severe redundancy. The Ghost module successfully resolves this problem, as Eqs. (3) and (4) demonstrate.

where \(\:{i}_{w}^{{\prime\:}}\) is the wth feature map within \(\:{\varnothing\:}_{w,z}\), \(\:{\varnothing\:}_{w,z}\) is the zth linear operation that produces zth ghost feature maps. \(\:{B}^{{\prime\:}}\in\:{R}^{{x}^{{\prime\:}}*{y}^{{\prime\:}}*{z}^{{\prime\:}}}\)is the output feature map evaluated by applying convolution operation on the input feature layer? \(\:A\in\:{R}^{x*y*z}\) with \(\:{k}^{{\prime\:}}\in\:{R}^{xXsXsXn}\). The final output B is calculated by concatenating \(\:{B}_{Ghost}\) and \(\:{B}^{{\prime\:}}\).

The standard convolution, batch normalization, activation and the residual connections that constitute the core of the YOLOv8 backbone are defined by Eq. (1) through (4). These terms are used to characterize the methods used to convert raw image pixel values to intermediate feature maps that describe hierarchical lesion representations.

Incorporating the GhostNet module into the basic framework of the original YOLOv8l model aims to address two common issues with ALD recognition: poor inference time and a considerable number of parameters. A lightweight neural network architecture is designed for efficient computation and reduced model size by leveraging Ghost modules, which use fewer parameters and computations than traditional convolutional layers. Furthermore, the C3 module has been incorporated into the C2f module to maximize efficiency and performance. The C2f module is typically a convolutional block that might include operations like convolutional layers, activation functions, pooling, and feature fusion. The C3 module is a specific type of convolutional block that often includes a series of convolutions, batch normalization, and activation layers, as well as efficient feature extraction and processing with a potential focus on lightweight operations. The Ghost Bottleneck idea inspired the C3 module, shown in Fig. 5. Ghost Bottleneck is a building block used in the GhostNet architecture, designed to be an efficient and lightweight alternative to traditional convolutional blocks in deep neural networks. The Ghost Bottleneck builds on the Ghost Module concept but is adapted for use in more profound and more complex architectures. The capacity to collect micro information within images, increasing the accuracy of apple leaf disease identification by sequentially utilizing numerous GhostNet modules, has been enhanced. Compared to traditional network models, this method decreases the number of parameters and enhances performance.

Convolutional block attention mechanism (CBAM)

In machine learning, the attention mechanism is an essential data analytic technique that excels in speech recognition, image interpreting, and natural language processing37. The Convolutional Block Attention Module (CBAM) is a type of attention mechanism that enhances the performance of convolutional neural networks (CNNs) by focusing on essential features in both spatial and channel dimensions. It was introduced in 2018, and it works by adaptively refining feature maps to highlight relevant information while suppressing irrelevant or noisy details. CBAM consists of two sequential sub-modules that apply attention mechanisms in two dimensions: The Channel Attention Module (CAM) focuses on “what” information is essential across different channels (filters) of the feature maps, and the Spatial Attention Module (SAM) focuses on “where” the critical information is located within the spatial dimensions of the feature maps.

After summarising the feature map using global average pooling and max pooling along the spatial dimensions, CAM runs the generated descriptors via a shared multi-layer perceptron (MLP) to determine the attention weights for each channel. The output is a weighted version of the input feature map where the more relevant channels are given higher weights. Mathematically, it is shown as:

Where f is the input feature map, \(\:\sigma\:\) is the sigmoid function, and \(\:{M}_{CAM}\) depicts the CAM map.

SAM module focuses on learning which spatial locations in the feature map are more important. Average pooling and max pooling along the channel axis are used to compute attention maps concatenated and fed through a convolutional layer to produce a spatial attention map. The output is a refined feature map where spatially significant regions are emphasized. Mathematically, it is shown as:

Where \(\:{f}^{7x7}\) is a convolutional operation with a 7 × 7 kernel size, \(\:\sigma\:\) is the sigmoid function, and \(\:{M}_{SAM}\) is the spatial attention map.

The input to CBAM is typically the output of a convolutional layer. The input has a shape of [X×Y×Z], where X is the height of the feature map, Y is the width of the feature map, and Z is the number of filters or channels. The channel attention module first refines the feature map, and the output of that is passed to the spatial attention module. The final output of the CBAM is a feature map with both channel-wise and spatial-wise attention applied. CBAM improves feature extraction through learning to concentrate on essential channels and geographical regions, which improves effectiveness on tasks like object detection, segmentation, and image classification. CBAM introduces only a small computational overhead while providing a significant boost in performance, making it suitable for use in various deep-learning models. As seen in Fig. 6, CBAM increases the network’s overall interactions, which improves the identification and distinction of small, simple-to-miss targets. CBAM guarantees accurate emphasis on pertinent areas by improving feature extraction and feature integration approaches, significantly increasing overall detection performance.

Integrating CBAM with YOLOv8 enhances the extraction of features by allowing the model to focus on critical spatial and channel-wise features. CBAM can improve the global dimension’s interaction properties by combining channel and spatial attention while minimizing information dispersion. With the addition of CBAM, the model can extract more meaningful information from the image and identify diseases more accurately in situations with complicated backgrounds and lighting.

MCAM structures the MLP in two successive layers, triggered by a sigmoid function, to derive the channel attention coefficients MCAM(f1) while maintaining the information in the input features. The original feature dimensions are arranged from z*y*x to y*x*z. Two 7 × 7 convolutions are employed to extract the spatial data of the spatial attention characteristics. The first produces spatial information fusion; the second uses the dimensions of channels for compression and removes the pooling layers. Finally, sigmoid function activation yields the MCAM (f2) spatial attention coefficient. The Eqs. (7) and (8) display the CBAM.

The provided input image is designated as f1, f2 is the intermediate feature map, f3 is the output image, the CAM is MCAM, and the SAM (Spatial Attention Mechanism) is MSAM. The multiplication operation by element is denoted by ⊗. The key steps are implementing CBAM, modifying the YOLOv8l backbone architecture to insert CBAM after specific convolutional layers or blocks, and training the model.

The GhostConv operation is as illustrated in Eqs. (5)–(8), where a smaller (reduced) set of intrinsic feature map candidates is produced by standard convolution and then extended into a ghost set of feature maps by cheap linear transformations. This reduces overlap, but still allows representational power, which reduces the computational cost when compared to standard convolutions.

Feature pyramid network (FPN)

The different sizes and forms of leaf surface lesions produce feature representations with variable resolutions when training models to identify apple leaf diseases. In the aggregated output, information from more noticeable leaf spots may receive disproportionate attention when these characteristics are combined within PANet using the conventional linear superposition technique. This disparity frequently causes larger lesions to take center stage, masking the finer characteristics of smaller lesions, which can result in these less noticeable targets going unnoticed. High-resolution, shallow features are vital to protect against loss and reduce this danger since they provide essential information to pinpoint micro irregularities on the leaf blade. Figure 7 depicts the architecture of FPN and PANet with the YOLOv8l model.

The Feature Pyramid Network (FPN) helps enhance YOLOv8’s ability to detect apple leaf diseases by improving multi-scale feature representation, which is crucial for detecting small or varied disease spots on leaves. FPN provides a robust architecture that allows YOLOv8 to better handle the complex patterns, textures, and shapes of diseased areas on apple leaves, improving accuracy and efficiency in detection tasks. FPN enhances YOLOv8 by integrating features from different network layers, combining low-level fine details (important for detecting small disease spots) and high-level semantic features (important for understanding the overall context of the leaf). It builds a pyramid of features and helps the model to focus on both small and large-scale patterns of diseases. FPN helps YOLOv8 detect small objects like these disease spots by using feature maps from lower layers (which have higher spatial resolution). These layers are rich in details necessary for detecting small disease patterns that might be missed at more profound network layers. This ensures that even subtle signs of disease, often critical for early diagnosis, can be detected accurately. FPN helps YOLOv8 localize diseased areas more accurately. In apple leaf disease detection, it’s essential to identify where the disease is present on the leaf, as this aids in proper treatment. FPN refines the bounding box predictions across scales, ensuring that the disease-affected areas, whether tiny spots or large patches, are correctly localized. This feature is critical in applications like precision agriculture, where accurate localization leads to targeted interventions. Apple leaves can suffer from various diseases, each with different visual characteristics. Some diseases cause small, round spots, while others lead to significant, irregular patches or yellowing of the leaf. FPN enables YOLOv8 to detect various disease types by making the model sensitive to large-scale features (e.g., widespread leaf discoloration) and small-scale features (e.g., fungal spots or blisters). Under real-world conditions, leaves may be captured under different lighting, at different angles, or partially occluded by other leaves or branches. These variations can affect disease detection. FPN provides robustness against these variations by combining multi-scale features, making YOLOv8 less sensitive to changes in viewing angles, lighting conditions, or partial occlusions. This enhances the model’s generalization ability, ensuring accurate detection of apple leaf diseases under varying environmental conditions.

The input feature influences the output features during the feature fusion process because of their disparate resolutions. Apple leaf spots typically come in a range of odd shapes. By employing a rapidly normalized fusion module to balance the weights of various features, FPN can better analyze the information in the target spots on apple leaf blades, minimizing the omission and misdetection problems. Equation 9 presents a relationship between input and output;

where \(\:{l}_{i}\) are learning weights of input feature \(\:{Inp}_{i}\). To guarantee that the value of \(\:{l}_{i}\) is nonnegative, ReLU is used to modify these weights.

In Eq. (9), the structure of the C3 module is given, but it is composed of residual paths and bottleneck layers. These are expressions that can be used to characterise the partitioning and recombining of the feature map to preserve gradient flow and to augment multi-scale feature extraction.

Experimentation

In this section, an overview of the dataset considered, system configuration, and evaluation metrics used is discussed.

Dataset used

The AppleLeaf9 dataset has been used to train and evaluate the model. This image dataset aims to explicitly explore identifying apple leaf diseases, encompassing many prevalent diseases. Each image is kept in JPG format. Figure 8 displays multiple images of the apple leaf disease dataset.

The dataset in this paper consists of six categories of apple leaf conditions, which are: Rust, Scab, Alternaria, Grey Spot, Mosaic, and Healthy leaves. Although the dataset is fairly balanced with the largest share of samples belonging to Healthy and Rust classes, other groups, including Mosaic and Grey Spot, are underrepresented. The source of this imbalance underscores the importance of a sound detection architecture capable of generalizing frequent and rare classes. Sabina Umirzakova et al.38 Recently, an alternative to multi-task plant imagery analysis was suggested by offering a single transformer structure to recognize cotton bolls, segment pest damage, and classify phenological stage, all based on UAV images. Their contribution states that there is an escalating requirement for coherent and effective systems in farm imaging. Figure 9 represents the dataset classification visually.

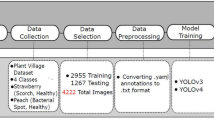

The dataset in this study is fused with the Plant Village Dataset39, apple tree leaf disease segmentation dataset40, PPCD202041, and PPCD2021 datasets41. This study used various enhancing techniques to simulate the impacts of environmental changes on images, such as noise and lighting, that are present in real-world circumstances. These techniques included random rotation, color modification, addition of noise, image sharpening, and Gaussian blurring. This helped in improving the model’s generalization ability. There are now 6986 images instead of the original 1569. Figure 10 displays the original data and example images that have been upgraded. Table 2 displays the number of ALD classes before and after the data augmentation techniques.

Data augmentation was used to enhance resistance to typical imaging variation of the field (rotation, brightness/contrast, cropping/translation, Gaussian blur, and additive noise). Examples of before/after are shown in Fig. 11. Augmentation scaled the initial AppleLeaf9 corpus (which was 1569 images) to 6986 images and enhanced generalization: precision rose to 93.7% (up from 89.6%), recall rose to 87.9% (up from 83.5%), and mAP@0.5 rose to 93.9% on the test set with augmentation. There was a significant increase in the performance of the model following augmentation. This extra diversity minimised overfitting and maximised sensitivity to small lesions in varying imaging conditions. The largest improvements were made in precision (4.1% points) and mAP@0.5 (2.6% points), which reduced the number of false positives and the overall confidence of detecting the participant in the augmented test split (Table 3).

Representative samples showing images before (left) and after (right) augmentation. Augmentation variants include rotation, brightness/contrast adjustments, cropping/translation, and mild blur/noise to simulate environmental variation. These transformations increase visual diversity and help the detector learn robustness to viewpoint, illumination, and minor occlusion.

In this study, the E-YOLOv8 model was trained for 300 rounds by conducting trials on the original and improved data with different parameters. Table 3 displays the outcomes of the experiment. The precision of the model rose from 89.6 to 93.7%, the recall rose from 83.5 to 87.9%, and the mAP@0.5 rose from 91.3 to 93.9% after data improvement, according to the results. These enhancements demonstrate how data enhancement raises the model’s capacity for generalization, increasing its accuracy and dependability when classifying apple leaf diseases.

Experiment settings

The experiment is conducted on the Windows 11 operating system, with NVIDIA GeForce RTX 3080 GPU, CUDA version 11.7. The deep learning neural networks are implemented in Pytorch 1.10.0 environment with Python 3.8. Stochastic gradient descent (SGD) was used for optimization over 300 training epochs with an initial LR (learning rate) of 0.01, which was changed during the pruning process to 0.0032. The batch size was set to 32. This study makes use of an extensive array of assessment criteria, including precision (Pre), recall (Rec), mean average precision (mAP), floating points per second (FLOPs), and parameters (Params), to improve model selection. Table 4 details all the parameters that were changed during the finetuning of the baseline YOLOv8l model.

Pre means precision, the proportion of all positive classifications to detect positive outcomes accurately. The number of ALDs that have been correctly identified is shown by TP, and FP is a false positive.

Recall, or Rec, is a measure of how many correctly identified positive results there were out of all genuine positive instances. FN stands for false pessimistic predictions made by the model for positive samples.

M indicates the number of disease classes the model identified (four ALDs were chosen for this investigation; therefore, M = 4). Average precision (AvgPre), which measures the model’s capacity to recognize a single category, is a crucial indicator for assessing how well a model performs in that class. Mean average precision, or mAP, is crucial for determining the trained network model’s overall effectiveness across all categories. In this study, mAP is used.

Greater values suggest that the neural network model needs more processing. Floating points per second, or FLOPs, measure the model’s execution speed or computational complexity. M is the number of input maps; X and Y are the height and breadth of maps, respectively; Ch is channels in maps; F is the kernels; C and R represent the height and width of the convolutional kernels, respectively.

The parameters in the model indicate the total number of learnable parameters, including weights and biases.

Results

This section presents the findings based on the conducted experiments. The results offer insights into the performance of the proposed approach, highlighting key trends, comparisons, and evaluations against baseline models.

Improving model detection efficiency

To evaluate how well the suggested E-YOLOV8 approach detects various ALDs (frogeye spot, rust spot, grey spot, and Alternaria leaf spot). An analysis was conducted in comparison to the initial YOLOv8l model. The particular outcomes are listed in Table 5.

The above table shows that the proposed approach significantly increased the ability to identify different diseases on apple leaves. In particular, there was a 2.4%, 3.1%, 6.2%, and 1.1% increment in the mAP@0.5 scores to recognize Alternaria spots, rust, grey spots, and frogeye spots, respectively. These scores were evaluated at a threshold of 0.5.

The confusion matrix is a tool used to evaluate how well categorization models work. It demonstrates how the expected and actual classes in the model agree. The confusion matrix indicates the number of predictions the model has made for each class, with each column denoting a class that it has made. Each row represents the original class of the data, and the total of each row indicates how many objects of the data there are for each class. Figure 12 compares the confusion matrix before and after the modification.

The classification of grey and frogeye spots was incremented by 2% and 6%, respectively. The Alternaria leaf spot was improved by 3%, and the rust spot was improved by 4%. These gains are attributable to the addition of the CBAM and the upgraded FPN, increasing the model’s precision in illness classification. The model’s performance is displayed in Fig. 13, which describes the enhancement before and after. Before the enhancement, the training and verification bounding box loss (box_loss) decreased to around 1.5; after enhancement, it decreased to approximately 1.3; the classification loss (cls_loss) decreased from 1.4 to 1.1; and the defocus loss (dfl_loss) decreased from 1.3 to 1.1. The enhanced model exhibits higher convergence and generalization skills with noticeable accuracy, recall, and average precision gains.

To demonstrate the advantages of the suggested approach, the detection accuracy on a test set is displayed in Fig. 14. The findings of testing for rust, grey spot, frogeye leaf spot, and Alternaria leaf spot are displayed in the 1st to 4th columns. Since the majority of apple leaf spots are tiny and thick in real-world settings, it’s critical to identify damaged leaves in these dense locations. During the studies, the model showed a reasonably high confidence in its predictions because of the apparent features of the Alternaria leaf spot. Rust, frogeye, and grey spots manifest as denser and smaller lesions. The lesion was still correctly diagnosed despite several low-confidence predicted boundary boxes. Based on the detection impact, the enhanced model successfully identified a variety of diseases, decreased missed detections, and enhanced apple leaf edge disease detection.

The qualitative analysis was performed to further assess E-YOLOv8’s robustness under difficult circumstances. Figure 15 shows typical detection results on low-light-illuminated, overlapping foliage, and small lesion-area images. Although traditional YOLOv8l either did not detect them or detected them with imprecision and a smaller bounding box, E-YOLOv8 did so with greater confidence and an improved bounding box. These results validate the claim that the addition of GhostConv, CBAM, and refined FPN improves the visual condition capacity of the model to react to complex visual conditions that may arise in field environments.

Qualitative analysis of E-YOLOv8 on challenging cases. Row 1: under low-light conditions, the model successfully detects lesions despite reduced contrast. Row 2: With overlapping leaves, E-YOLOv8 localizes disease regions even under partial occlusion. Row 3: for small lesions, the refined FPN and CBAM modules enable accurate detection of subtle symptoms. These examples demonstrate the robustness of the proposed method in real-world agricultural scenarios.

Ablation study

To assess the effectiveness of the E-YOLOv8 model in greater detail, ablation research using a modified version of YOLOv8l was carried out, as indicated in Table 6. The results show that the improvements made in this study have significantly improved the precision of the model while reducing the number of parameters. After introducing the GhostNet module in the backbone of the model, there was a decrease of 6.3% in FLOPs and a 13% decrement in the model’s parameters. On adding the C3 module in place of the C2f module, a further reduction in precision and recall was seen, and computational complexity was also reduced. The addition of the CBAM module increases precision accuracy from 81% to 86.5% with no change in computational complexity and parameters. The FPN module helped increase the precision from 86.5% to 90.1% and recall from 83.9% to 89.6%. The FLOPs and parameters were reduced by 17% and 29.5%, respectively.

According to the experimental results, the suggested E-YOLOv8 model significantly outperforms the baseline YOLOv8l in real-world apple leaf disease identification. YOLOv8l obtained a mean Average Precision at IoU 0.5 (mAP@0.5) of 84.5%, a precision of 83.2%, and a recall of 83.9%. Due to the 8.3 GFLOPs and 3.3 million parameters required for this performance, adoption on devices with constrained resources was difficult. GhostConv layers for effective feature extraction, C3 modules for lightweight aggregation, CBAM for improved lesion localization, and an enhanced FPN for superior multi-scale representation are all included in the upgraded E-YOLOv8 architecture. The precision, recall, and mAP@0.5 of the model described in the last row of Table 1 are 90.1%, 89.6%, and 93.9%, respectively. In comparison to the baseline YOLOv8l, E-YOLOv8 shows a relative improvement of 8.3% in precision, 6.8% in recall, and 11.1% in mAP@0.5. By reducing FLOPs by 36.1% (from 8.3G to 5.3G) and parameters by 45.5% (from 3.3 M to 1.8 M), the model shows a significant reduction in computational cost. The improvements show that E-YOLOv8 achieves high accuracy while maintaining a low-weight design suitable for deployment on edge devices such as embedded AI platforms, smartphones, and drones.

These enhancements make E-YOLOv8 a feasible choice for real-world use in precision agriculture by improving detection performance, particularly for tiny or mild lesions, and enhancing operating efficiency. This model has proven to be a successful solution for real-time plant disease diagnosis in smart farming situations, outperforming previous lightweight versions such as YOLOv7-Tiny, YOLOv6, and YOLOv5s. As illustrated in Fig. 16, four randomly selected ALD photos illustrate the main regional changes the model concentrates on throughout the identification phase, both before and after the improvement. Darker hues denote regions that are more critical to the model in the heat map. When YOLOv8l and E-YOLOv8 heat maps are compared, it becomes clear that the improved E-YOLOv8 model significantly improves detection skills. E-YOLOv8’s heat map shows more focused and accurate regions of interest, demonstrating improved lesion area identification capabilities. The YOLOv8l heat map also highlights the exact locations, although the E-YOLOv8 model’s markers are more prominent and less hazy. This suggests that our approach can correctly detect apple defects and reduce the model’s interference from complex background noise.

Performance evaluation of E-YOLOv8 with other object detection models

A range of performance criteria, including Pre, Rec, mAP at an IoU threshold of 0.5, FLOPs, and several parameters, are used in Table 7 to compare different target detection models. Comparing the proposed model against YOLOv7-Tiny, YOLOv6, YOLOv5s, and YOLOv8l shows a significant gain in Pre and Rec. The proposed model achieved the gains in performance by reducing the computational demands. Because of its efficiency, the suggested model is ideal for real-time applications and settings with limited resources. When compared to other models, the proposed model is substantially smaller. These findings demonstrate how, by integrating GhostNet and C3 into the backbone network, introducing CBAM, and adding enhanced FPN to maximize feature fusion, our model considerably lowers performance while keeping good accuracy. In hardware contexts with limited resources, it can be used to achieve practical real-time target detection tasks by lowering computing costs and model complexity. The proposed model is also compared with some SOTA models, and the results are shown in Table 8. The basis for comparison is the mAP metrics @ 0.5.

Discussion

E-YOLOv8 (Enhanced-YOLOv8l), a novel model that addresses the challenges involved in detecting apple leaf diseases in real time across diverse farming conditions is introduced in this study. Existing object detection methods based on YOLO and comparable models can perform well on various general objects. However, their performance in real-world agricultural applications, particularly on resource-limited devices, is often limited due to their higher computational load, insufficient accuracy at identifying minute lesions and weakness in detecting unique and unexpected disease patterns.

-

Changing basic convolutional layers to the GhostConv modules of GhostNet, the model cuts down excess feature maps and requires less processing power for prediction. Removing the information in this way makes the network slimmer so that fewer parameters are used and still offers high quality features. Instead of using standard convolutional fusion, the model employs C3 blocks that preserve both spatial and channel information and cut down on operations.

-

To resolve the challenge of locating hidden lesions on difficult leaf backgrounds, a CBAM module is used to highlight useful spatial and channel features. The model is now able to pay greater attention to where the disease occurs, helping it identify lesions well and reduce mistakes. By introducing these changes, the model becomes more compact and effectively gathers features linked to the presence of the disease.

-

A revised FPN system is created to support effective mixing of multi-scale features. Identifying tiny symptoms and minor differences in lesion appearance is key in the early days of apple diseases.

-

E-YOLOv8 doesn’t need as much computation as regular models and squeezes the model size, while achieving an impressive mAP@0.5 of 93.9%. Thus proposed model is ideal for use in edge computing, UAVs, mobile devices and agricultural equipment.

When used for apple leaf disease detection, the E-YOLOv8 model proves to be faster and more accurate than previous models. By combining new architecture and improved attention features, E-YOLOv8 tackles the main problems that exist in traditional YOLO frameworks. Examination of the results shows that E-YOLOv8 results in a mAP@0.5 of 93.9% which is a 9.4% improvement over the baseline YOLOv8l model. Of particular significance, the rise happened in more than one area and precision and recall also rose, ending for each at 90.1% and 89.6%. These results demonstrate that introducing these updates not only made the model better at detecting areas with disease but also gave it greater accuracy and reliability on a range of samples. Evaluating specific parts of the model showed how each modification affected the overall effectiveness of the model. Specifically, incorporating GhostConv blocks greatly decreased the model size and improved efficiency without adversely affecting its ability to extract meaningful representations. Background pooling in C3 blocks enhanced the retrieval of common patterns and increased the efficiency of model training in challenging leaf structures. The CBAM module especially enhanced the model’s ability to concentrate on skin lesions, leading to improvements in diagnostic outcomes for cancer detection. Implementing the improved FPN allowed the model to perform better multi-scale feature fusion, resulting in improved detection of delicate or minimally perceptible lesions that conventional FPNs often failed to recognize.

A remarkable feature of E-YOLOv8 is how it strikes an ideal balance between precision and efficiency. It has a reduced model size of only 1.8 M parameters and performs 5.3 GFLOPs, which are 45.5% less memory-intensive and 36.1% more computationally efficient than the original YOLOv8l. This allows the E-YOLOv8 model to be effectively deployed on edge devices like drones, smartphones, and embedded agricultural IoT systems, enabling real-time monitoring of plant diseases in the field. E-YOLOv8 consistently achieves higher detection accuracy and greater speed than contemporary lightweight detectors such as YOLOv7-Tiny, YOLOv6, and YOLOv5s. Previously developed lightweight detectors often had to trade off accuracy to meet certain size or speed requirements. E-YOLOv8, however, shows that performance and deployability can be achieved together by designing the model architecture thoughtfully.

While it has many benefits, the model also has certain limits. It may not work as accurately in places with very bright or low light, heavy blocks of code, or if it encounters diseases that were not included in the training data. Also, while E-YOLOv8 works well for apple leaf disease, it may need to be tuned or augmented with datasets from other crops. In the future, it would be worth studying methods such as domain adaptation, self-supervised learning, or few-shot learning to improve the research’s ability to handle different plant species and disease types.

E-YOLOv8 has two folds of contribution: (1) compression in architecture by GhostConv and C3 that minimizes redundant feature generation and (2) improving precision of small and irregular lesions via channel-spatial attention (CBAM) with a refined FPN to enhance more robust multi-scale fusion. These changes were specifically made to allow correct detection on resource-limited edge hardware and still allow performance on small dense lesions such as those found on apple leaves. E-YOLOv8 reached 93.9% mAP 0.5 with 5.3 GFLOPs and 1.8 M parameters, a 11.1% point higher than the baseline YOLOv8l and a significant decrease in FLOPs and parameters, on AppleLeaf9 and the augmented dataset.

E-YOLOv8 shows a clear tradeoff between efficiency and accuracy compared to recent optimized YOLO-based versions at apple leaf detection. A few of the current methods focus on placing a greater emphasis or transformer elements (e.g., YOLOv5-CBAM-C3TR and transformer-enhanced TPH-YOLOv5), which may achieve competitive or increased mAP scores at much higher computational costs because of larger model sizes and more complicated detection heads. Others, including YOLOv8-GSSW or lightweight YOLOv8n implementations, focus on compressing models extremely thin, but such methods are reported to achieve significantly worse scores on fine lesions. As an example, ALAD-YOLO has an accuracy of ≈ 90.2% and 250 FLOPs, whereas TPH-YOLOv5 variants have ≈ 94.3% mAP but with larger modules. In comparison, YOLOv8-GSSW can obtain ≈ 87.3% mAP with lowered parameters. E-YOLOv8 lies between these extremes by (a) using the Ghost modules to reduce unnecessary calculation, (b) retaining a small detection head, and (c) adding CBAM and a high-quality FPN only in specific areas where they are most likely to increase marginal benefit. Such a setup enables effective small-scale lesion detection and enables the models to be deployed on edge devices. In case the maximization of absolute mAP is the main goal, irrespective of the computational cost, then transformer-enabled or multi-stage improved YOLO versions (e.g., TPH-YOLO) can be used. When the goal is to only make the models smaller to run on a real-time device, lightweight YOLO variants are efficient but lack accuracy. E-YOLOv8, however, is a middle-ground solution, providing high accuracy on small and dense lesions whilst being efficient enough to run on a limited device. Table 9 discusses the comparison of these models with E-YOLOv8.

In addition to the general performance improvement reports, the evaluation can be enhanced with more data on how E-YOLOv8 behaves. Through failure case analysis, it was found that on some occasions, the model fails to classify the disease symptoms having very similar visual textures (e.g., rust versus leaf spot) or when the illumination is extreme different. These illusions indicate that it is difficult to discriminate fine-grained features in complex backgrounds and that combining complementary spectral or temporal features may make them even more robust. A series of ablation tests were also conducted on several datasets to test the generality of the suggested modules. It was always found that GhostConv and CBAM contributed to better performance of small object detection tasks in other regions, other than apple leaves, but this does not prove that the architecture is dataset-specific and can be easily applied in other areas of dense and subtle objects. Lastly, initial testing on an NVIDIA Jetson Nano and Raspberry Pi 4 showed that the proposed model can run at near real-time (at least 20 FPS on Nano, at least 15 FPS on Pi 4) without dropping the detection accuracy of the model. These results demonstrate the feasibility of E-YOLOv8 in the field, which helps close the gap between laboratory equivalence and field implementation on edge devices.

Though the proposed E-YOLOv8 is achieving high performance on apple leaf disease datasets, a weakness of the current study is that it is a crop-specific model. The overall validity of the model in other crops and types of disease has not been validated in detail since only apple leaves were used in the experiments. To resolve this, future studies will investigate cross-dataset validation of unseen datasets of apple and apply the model to other crops like tomato, maize and grapevine, where leaf disease detection is also a problem. The E-YOLOv8 can be trained on individual plant species with the assistance of transfer learning and domain adaptation strategies, and thus implemented in larger-scale precision agriculture. These extensions would not just enhance the power or robustness of the proposed framework but would also indicate the potential of the proposed framework as a universal lightweight plant disease monitoring detector in various agricultural environments.

Conclusion

The enhanced E-YOLOv8 lightweight algorithm that has been suggested replaces conventional structures with GhostNet and C3 modules for the identification of apple leaf disease. It also introduces an improved FPN structure and a CBAM attention mechanism. These improvements lead to a more performance-oriented, lighter product. On the test dataset, the modified model achieves a mAP@50% of 93.9%, a precision of 90.1%, and a recall of 89.6%. The upgraded E-YOLOv8 exhibits noticeable improvements over the previous models. Furthermore, the enhanced model’s weight of 3.5 MB indicates a 39.7% decrease from the original model. Compared to other popular techniques, these improvements result in reduced computing costs, smaller model weights, and improved detection accuracy. Hence, the improved algorithm shows excellent potential for agricultural production, especially on devices with limited computational resources. Although E-YOLOv8 demonstrated significant performance in apple leaf disease detection, it has certain limitations that will be worked upon in the future. Variability in leaf orientation, disease progression stages, or overlapping leaves affected detection accuracy. Real-time processing over vast agricultural fields may require additional optimization or integration with cloud-based solutions to handle large-scale data efficiently. In the future, the CBAM module will be integrated with transformer-based attention mechanisms for better feature localization. A lightweight version of edge devices for real-time inference will be deployed. The proposed model will be trained on multiple farms in the future without transferring sensitive data, ensuring scalability while maintaining privacy by using federated learning.

Data availability

The datasets used and/or analyzed during the current study available from the corresponding author on reasonable request.

References

India production of APPLE. (n.d.). accessed September 4 (2024). https://agriexchange.apeda.gov.in/IndiaProduction/India_Productions.aspx?cat=fruit&hscode=1040

Bonkra, A., Pathak, S., Kaur, A. & Shah, M. A. Exploring the trend of recognizing Apple leaf disease detection through machine learning: A comprehensive analysis using bibliometric techniques. Artif. Intell. Rev. 57, 1–26. https://doi.org/10.1007/s10462-023-10628-8 (2024).

Dhaka, V. S. et al. A survey of deep convolutional neural networks applied for prediction of plant leaf diseases. Sensors 21 https://doi.org/10.3390/s21144749 (2021).

Kaur, A., Kukreja, V., Aggarwal, P., Thapliyal, S. & Sharma, R. Amplifying apple mosaic illness detection: Combining CNN and random forest models, IEEE Int. Conf. Interdiscip. Approaches Technol. Manag. Soc. Innov. IATMSI 2024 (2024). (2024). https://doi.org/10.1109/IATMSI60426.2024.10503272

Xu, X., Zhou, B., Li, W. & Wang, F. A method for detecting persimmon leaf diseases using the lightweight YOLOv5 model. Expert Syst. Appl. 127567. https://doi.org/10.1016/J.ESWA.2025.127567 (2025).

Kalwad, P. D. et al. Apple leaf disease detection and analysis using deep learning technique, Lect Notes Networks Syst. 191 803–814. https://doi.org/10.1007/978-981-16-0739-4_76. (2022).

Zhu, S. et al. A novel approach for Apple leaf disease image segmentation in complex scenes based on two-stage DeepLabv3 + with adaptive loss. Comput. Electron. Agric. 204 https://doi.org/10.1016/j.compag.2022.107539 (2023).

Gupta, C., Gill, N. S., Gulia, P. & Chatterjee, J. M. A novel finetuned YOLOv6 transfer learning model for real-time object detection. J. Real-Time Image Process. 20 https://doi.org/10.1007/s11554-023-01299-3 (2023).

Redmon, J., Divvala, S., Girshick, R. & Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection (n.d.).

Zhang, Y. et al. A precise Apple leaf diseases detection using BCTNet under unconstrained environments. Comput. Electron. Agric. 212, 108132. https://doi.org/10.1016/j.compag.2023.108132 (2023).

Mahmoud, A., Aurangzeb, K., Alhussein, M. & Elbelkasy, M. S. A. Harnessing deep learning to analyze climate change impacts on crop production. Alexandria Eng. J. 125, 67–82. https://doi.org/10.1016/J.AEJ.2025.04.008 (2025).

Sun, Z., Feng, Z. & Chen, Z. Highly accurate and lightweight detection model of Apple leaf diseases based on YOLO. Agronomy 14 https://doi.org/10.3390/agronomy14061331 (2024).

Huo, S., Duan, N. & Xu, Z. An improved multi-scale YOLOv8 for Apple leaf dense lesion detection and recognition. IET Image Process. 1–15. https://doi.org/10.1049/ipr2.13223 (2024).

Liu, Z. & Li, X. An improved YOLOv5-based Apple leaf disease detection method. Sci. Rep. 14, 1–13. https://doi.org/10.1038/s41598-024-67924-8 (2024).

Li, F., Zheng, Y., Liu, S., Sun, F. & Bai, H. A multi-objective Apple leaf disease detection algorithm based on improved TPH-YOLOV5. Appl. Fruit Sci. 66, 399–415. https://doi.org/10.1007/S10341-024-01042-7/METRICS (2024).

Javed, R. et al. Early detection and classification of Apple leaf diseases using deep learning technique, 4 86–100. (2024).

Vishnoi, V. K., Kumar, K., Kumar, B., Mohan, S. & Khan, A. A. Detection of Apple plant diseases using leaf images through convolutional neural network. IEEE Access. 11, 6594–6609. https://doi.org/10.1109/ACCESS.2022.3232917 (2023).

Xu, W. & Wang, R. ALAD-YOLO: An lightweight and accurate detector for Apple leaf diseases. Front. Plant. Sci. 14, 1–15. https://doi.org/10.3389/fpls.2023.1204569 (2023).

Li, H., Shi, L., Fang, S. & Yin, F. Real-Time detection of Apple leaf diseases in natural scenes based on YOLOv5. Agric 13 https://doi.org/10.3390/agriculture13040878 (2023).

Gong, X., Zhang, S. & High-Precision, A. Detection method of Apple leaf diseases using improved faster R-CNN, Agric. 13 (2023). https://doi.org/10.3390/agriculture13020240

Zhong, M. & Detection and application of apple leaf diseases based on object detection. Front. Soc. Sci. Technol. 5 19–29. https://doi.org/10.25236/fsst.2023.051804. (2023).

Zeng, W., Pang, J., Ni, K., Peng, P. & Hu, R. Apple leaf disease detection based on lightweight YOLOV8-GSSW. 40 589–598. (2024).

Cui, S. et al. Research on apple leaf pathological detection system based on improved YOLOv7, 2024 IEEE Int. Conf. Intell. Tech. Control. Optim. Signal Process. INCOS 2024 - Proc. (2024). https://ieeexplore.ieee.org/abstract/document/10665709 (accessed October 17, 2024).

Ahmed, I. & Yadav, P. K. Predicting Apple plant diseases in orchards using machine learning and deep learning algorithms. SN Comput. Sci. 5, 1–17. https://doi.org/10.1007/S42979-024-02959-2/METRICS (2024).

Zhang, S., Wang, J., Yang, K. & Guan, M. YOLO-ACT: An adaptive cross- layer integration method for Apple leaf disease detection, 1–18. (2024). https://doi.org/10.3389/fpls.2024.1451078

Lv, M. & Su, W. H. YOLOV5-CBAM-C3TR: An optimized model based on transformer module and attention mechanism for Apple leaf disease detection, front. Plant. Sci. 14, 1–13. https://doi.org/10.3389/fpls.2023.1323301 (2023).

Wu, W. et al. Generative prompt controlled diffusion for weakly supervised semantic segmentation. Neurocomputing 638, 130103. https://doi.org/10.1016/J.NEUCOM.2025.130103 (2025).

Wu, W., Dai, T., Huang, X., Ma, F. & Xiao, J. Top-K pooling with patch contrastive learning for Weakly-Supervised semantic segmentation. Conf. Proc. - IEEE Int. Conf. Syst. Man. Cybern. 5270-5275 https://doi.org/10.1109/SMC54092.2024.10831685 (2024).

Chen, Y. et al. Adaptive attention-enhanced yolo for wall crack detection. Appl. Sci. 14, 7478 14 (2024) 7478. (2024). https://doi.org/10.3390/APP14177478

Wang, X., Tang, S. H., K.A.B.M. Ariffin, M., Ismail, M. I. S. B. & Zhao, R. LeafMamba: A novel IoT-integrated network for accurate and efficient plant leaf disease detection. Alexandria Eng. J. 123, 415–424. https://doi.org/10.1016/J.AEJ.2025.03.033 (2025).

Yue, X. et al. Improved YOLOv8-Seg network for instance segmentation of healthy and diseased tomato plants in the growth stage. Agric 13. https://doi.org/10.3390/agriculture13081643 (2023).

Terven, J., Córdova-Esparza, D. M. & Romero-González, J. A. Comprehensive review of YOLO architectures in computer vision: From YOLOv1 to YOLOv8 and YOLO-NAS, Mach. Learn. Knowl. Extr. 5, 1680–1716. https://doi.org/10.3390/make5040083 (2023).

Hussain, M. YOLO-v1 to YOLO-v8, the rise of YOLO and its complementary nature toward digital manufacturing and industrial defect detection. Machines 11 https://doi.org/10.3390/machines11070677 (2023).

Kim, J. H., Kim, N., Park, Y. W. & Won, C. S. Object detection and classification based on YOLO-V5 with improved maritime dataset. J. Mar. Sci. Eng. https://doi.org/10.3390/jmse10030377 (2022).

Jiang, K. et al. An attention Mechanism-Improved YOLOv7 object detection algorithm for hemp Duck count Estimation, agric. 12 (2022). https://doi.org/10.3390/agriculture12101659

Han, K. et al. GhostNet: More features from cheap operations. Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 1577-1586 https://doi.org/10.1109/CVPR42600.2020.00165 (2020).

Soydaner, D. Attention mechanism in neural networks: Where it comes and where it goes. Neural Comput. Appl. 34, 13371–13385. https://doi.org/10.1007/s00521-022-07366-3 (2022).

Umirzakova, S., Muksimova, S., Shavkatovich Buriboev, A., Primova, H. & Choi, A. J. A unified transformer model for simultaneous cotton boll Detection, pest damage Segmentation, and phenological stage classification from UAV Imagery, drones 2025, 9, Page 555 9 555. (2025). https://doi.org/10.3390/DRONES9080555

Hughes, D. P. & Salathe, M. An open access repository of images on plant health to enable the development of mobile disease diagnostics, (2015). http://arxiv.org/abs/1511.08060

Nikhila Nagajyothi, C., Oommen, L. & Chebrolu, S. Classification of imbalanced multi-label leaf diseases using carit: Class attention enabled regionvit. Multimed. Tools Appl. 83, 56127–56154. https://doi.org/10.1007/S11042-023-17678-8 (2024).

Thapa, R., Zhang, K., Snavely, N., Belongie, S. & Khan, A. The Plant Pathology Challenge 2020 data set to classify foliar disease of apples, Appl. Plant. Sci. 8 1–8. https://doi.org/10.1002/aps3.11390 (2020).

Zeng, W., Pang, J., Ni, K., Peng, P. & Hu, R. Apple leaf disease detection based on lightweight YOLOv8-GSSW. Appl. Eng. Agric. 40, 589–598. https://doi.org/10.13031/AEA.16022 (2024).

Zhang, S., Wang, J., Yang, K. & Guan, M. YOLO-ACT: An adaptive cross-layer integration method for Apple leaf disease detection. Front. Plant. Sci. 15, 1451078. https://doi.org/10.3389/FPLS.2024.1451078/BIBTEX (2024).

Acknowledgements

Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2025R817), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Funding

This research work has not received any funding.

Author information

Authors and Affiliations

Contributions

Chhaya Gupta: Conceptualization, methodology, original draft, Nasib Singh Gill: Conceptualization, methodology, Supervision, Preeti Gulia: Conceptualization, methodology, Supervision, Sangeeta Duhan: Conceptualization, methodology, Supervision, Hanen Karamti: analysis, software, Abhinav Kumar: plagiarism handling and editing, Denekew Alemayehu Alamneh: analysis, editing, Imen Safra: Conceptualization, methodology, review and editing.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Gupta, C., Gill, N.S., Gulia, P. et al. An enhanced deep learning-based framework for diagnosing apple leaf diseases. Sci Rep 15, 39699 (2025). https://doi.org/10.1038/s41598-025-23272-9

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-23272-9