Abstract

This paper addresses the fault-tolerant control of nonlinear heterogeneous multi-agent systems whose actuators and sensors are affected by faults under an inconsistent Markov switching topology. A double event-triggered strategy is introduced to reduce the communication burden in the network and to improve communication efficiency. Moreover, to avoid Zeno behavior, the considered event-triggered conditions depend on sampled data. Based on this, state observers and fault estimators are designed to estimate the system states and faults, and the estimation results are used to design the fault-tolerant controllers. A stability criterion for the estimation error system is obtained based on Lyapunov theory and inequality techniques. Finally, the effectiveness of the proposed method is verified by a numerical simulation.

Introduction

With the rapid development of computer and communication technologies, multi-agent systems (MASs) have received extensive interest. Their high efficiency and flexibility have facilitated wide application in many fields, such as unmanned aerial vehicles (UAVs)1,2, wireless sensor networks3, and smart grids4. However, in real systems, the parameters or structures of the agents usually differ. For example, UAVs in a UAV show may exhibit different nonlinearities due to their different ages or locations, and a system may consist of both UAVs and unmanned ground vehicles (UGVs)5,6. Therefore, in recent years, heterogeneous MASs (HMASs) with different characteristics have gradually received wide attention7,8,9. As practical tasks become more complex, the dynamic characteristics of the agents in a system tend to differ. Accordingly, the HMASs considered in this paper are composed of first-order (FO) and second-order (SO) agents.

In current industrial sectors, as scale and complexity increase, MASs are exposed to numerous unexpected failures, especially HMASs with more sensors and actuators. Therefore, studying the fault-tolerant control of HMASs to ensure that the system is sufficiently safe and reliable has significant theoretical and engineering value. In recent years, numerous works have addressed HMASs with faults: for example, system faults are considered in10,11, actuator faults in12,13, and sensor faults in14,15. These studies consider only single faults, whereas concurrent actuator and sensor faults (composite faults) are relatively common in actual HMASs. For example, damage to a drone motor (actuator fault) may occur simultaneously with the loss of GPS signals (sensor fault). Some results have also been obtained on related fault-tolerant control issues in homogeneous MASs16,17,18. Nevertheless, for HMASs with different dynamic characteristics, these methods may not be fully applicable because of the more complex system structure, and few existing studies address the related problems, which motivates this paper.

In the above literature, and indeed in most of the literature on the consensus problem, the communication topology between agents is assumed to be fixed. However, the operating environment of HMASs is often subject to internal or external uncertainties that change the topology. Markov processes have been introduced to characterize this phenomenon. In recent years, the consensus problem of HMASs under Markov switching topologies has attracted increasing attention, and many important results have been obtained19,20. However, assuming that the state transition probabilities are homogeneous is often unrealistic. For example, the evolution between operating modes of DC motor equipment is determined by state transition probabilities that vary over time. Furthermore, delays or packet losses in network control systems vary over time, leading to time-varying transition probabilities. For such cases, inconsistent Markov processes are more suitable for describing these real-world systems. Currently, inconsistent Markov processes have been considered in some systems, such as neural networks21,22 and Markov jump systems23,24. In HMASs, agents may be affected by different environments or communication delays. For example, the low mobility of FO agents results in slow topological changes, while the high mobility and complex aerodynamic characteristics of SO agents lead to frequent or discontinuous link changes, which also causes inconsistent communication topology switching between agents. Therefore, this paper introduces inconsistent Markov processes into HMASs.

In addition, since communication resources in a system are usually limited, it is important to improve network resource utilization while ensuring system security. The event-triggered strategy (ETS) is a common method for improving communication efficiency and saving computational resources, and has been applied in several works25,26,27. In traditional control systems, event triggers are typically needed only from sensors to observers/controllers and from controllers to actuators to reduce redundant data transmission in the network channel. In contrast, HMASs must consider information from both the agent itself and its neighbors, which greatly increases the complexity of the network structure and the communication burden. Appropriately designed ETS can reduce this unnecessary data transmission, and ETS have also been used in the literature on fault-tolerant control of HMASs to reduce the transmission of fault messages. Inspired by28,29, a double ETS is introduced to HMASs in this paper, meaning that ETS are designed on both the sensor-to-observer and observer-to-controller channels. Furthermore, to avoid Zeno behavior, the double ETS relies on sampled data, so that events occur only at sampling instants.

Motivated by the above discussion, the primary goal is to design event-triggered controllers that rely on sampled data to achieve consensus of nonlinear HMASs with actuator and sensor failures subject to inconsistent Markov processes. The main contributions are as follows:

(i) Unlike the results in30,31, where all agents are assumed to follow an identical Markov chain, this paper accounts for the fact that the different dynamic characteristics of agents in HMASs make their topological switching more likely to follow inconsistent Markov jumping rules. Therefore, inconsistent Markov processes are introduced into HMASs, namely, the FO and SO agent systems follow different Markov chains.

(ii) The existing results in32,33 consider only single faults in the system. When both actuators and sensors are affected by faults, composite faults simultaneously disturb the control inputs and the state measurements, and these methods are inadequate. Therefore, a fault-tolerant control method is designed for HMASs with actuator and sensor failures to ensure that the system maintains consensus.

(iii) HMASs consisting of agents with different dynamic characteristics have more sensors and actuators. To minimize the transmission of information, and combining the advantages of sampled data and ETS as inspired by28,29, a double ETS is introduced into HMASs, with one ETS designed between the sensor and the observer and another between the observer and the controller.

Moreover, to illustrate the contributions of this paper more clearly, Table 1 summarizes and compares the relevant literature, focusing on the system model type, whether the communication topology involves Markov switching, the types of faults present in the system, and the types of ETS. In Table 1, ’NO’ indicates that the item is not involved.

Preliminaries

Notations: \({\mathscr {I}}_m\) is the identity matrix of dimension m. \(\mathscr {P} > (\ge )\) 0 denotes that \(\mathscr {P}\) is a positive definite (positive semi-definite) matrix. \({\mathscr {Q}}^{-1}\) and \({\mathscr {Q}}^\textrm{T}\) denote the inverse and transpose of the matrix \({\mathscr {Q}}\), respectively. \(\otimes\) represents the Kronecker product. \(*\) denotes the terms induced by symmetry in a symmetric matrix. For simplicity, the time argument t is omitted in this paper, for example \(x_i = x_i (t)\). In addition, the following abbreviation is used: \(\hbar _\eta \buildrel \Delta \over = \hbar \left( {t - \eta } \right)\), where \(\hbar = x, v, {\delta _{xi}}, {\delta _{vi}}, \delta , \Delta , e\), which will be detailed later.

Assume that \({ {\mathscr {G}}^{{\sigma _1} \left( t \right) }} = \left( { {\mathscr {N}}, { {\mathscr {E}}^{{\sigma _1} \left( t \right) }}, {A^{{\sigma _1} \left( t \right) }}} \right)\) is a weighted directed graph, where \({\mathscr {N}} = \left\{ {1, 2,..., n} \right\}\) is the set of n nodes and \({{\mathscr {E}}^{{\sigma _1}\left( t \right) } \subseteq \mathscr {N} \times \mathscr {N}}\) is the edge set. An edge is characterized by \(e_{ij}^{\sigma _1 \left( t \right) } = \left( {j, i} \right)\). \({\mathscr {N}}_j = \{i | (i, j) \in {\mathscr {E}} \}\) is the neighbor set of agent j. \({A^{{\sigma _1} \left( t \right) }} = \left[ {a_{ij}^{{\sigma _1}\left( t \right) }} \right] \in { {\mathscr {R}}^{n \times n}}\) denotes the adjacency matrix, with \({a_{ij}} > 0\) if \(\left( {{v_j}, {v_i}} \right) \in {\mathscr {E}}^{{\sigma _1}\left( t \right) }\). The degree matrix is \({\mathscr {D}} = \textrm{diag}\{d_1, d_2,..., d_n \}\) with \(d_i = \sum \limits _{j \in {\mathscr {N}}_i} {a_{ij}^{{\sigma _1}\left( t \right) }}\). The Laplacian matrix \(L = \left( {l_{ij}^{{\sigma _1}\left( t \right) }} \right) \in {{\mathscr {R}}^{n \times n}}\) is defined as \(L = {\mathscr {D}} - A\), that is, \(l_{ii}^{{\sigma _1}\left( t \right) } = \sum \limits _{j \in {\mathscr {N}}_i} {a_{ij}^{{\sigma _1}\left( t \right) }}\) and \(l_{ij}^{{\sigma _1}\left( t \right) } = - a_{ij}^{{\sigma _1}\left( t \right) }\) for \(i \ne j\).

\({\sigma _1} \left( t \right)\) denotes a continuous-time Markov process, the definition of which will be given later.
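As a small illustration of the graph notation above, the following sketch builds the Laplacian \(L = {\mathscr {D}} - A\) for a fixed mode and checks its basic properties. The 4-node adjacency matrix is a hypothetical example, not a topology taken from this paper, and the helper name `laplacian` is introduced here only for illustration.

```python
import numpy as np

def laplacian(adjacency: np.ndarray) -> np.ndarray:
    """Return L = D - A, where D is the diagonal matrix of row sums of A."""
    degree = np.diag(adjacency.sum(axis=1))
    return degree - adjacency

# Hypothetical directed graph: a_ij > 0 means agent i receives from agent j.
A = np.array([
    [0.0, 1.0, 0.0, 0.0],
    [1.0, 0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0, 1.0],
    [1.0, 0.0, 0.0, 0.0],
])
L = laplacian(A)

# Every row of L sums to zero, so the all-ones vector lies in its null space.
row_sums = L.sum(axis=1)
```

The zero row sums are what make the consensus manifold (all agents equal) invariant under Laplacian-based coupling.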

Problem formulation

Assume that the considered nonlinear HMASs are described as:

where \({N_1}\mathrm{{ = }} \left\{ {1, 2,..., m} \right\}\) and \({N_2}\mathrm{{ = }} \left\{ {m+1, m+2,..., n} \right\}\), which implies that HMASs (1) involve m FO and \(n-m\) SO agents. \({x_i}\) and \({v_i}\) represent the position state and velocity of the ith agent, respectively. \(y_i\) is the measurement output. \(x_i^f\), \(v_i^f\), and \(u_i^f\) represent the positions, velocities, and inputs involving fault information. \(f (\cdot )\) is a nonlinear function that satisfies the Lipschitz condition. \({w_i}\) denotes the external disturbance. Furthermore, the adjacency matrix \({A^{\sigma \left( t \right) }}\) for HMASs (1) is given as:

According to (2), the matrix \(A^{(\sigma (t))}\) consists of four blocks. \(A_{ss}^{{\sigma _1}\left( t \right) } \in {R^{(n-m) \times (n-m)}}\) denotes the adjacency matrix of the SO agents, and \(A_{ff}^{{\sigma _2}\left( t \right) } \in {R^{m \times m}}\) is the adjacency matrix of the FO agents. \(A_{sf}^{{\sigma _2}\left( t \right) }\) and \(A_{fs}^{{\sigma _1}\left( t \right) }\) are the adjacency matrices from SO agents to FO agents and from FO agents to SO agents, respectively. Based on this, the Laplacian matrix \(L^{\sigma (t)}\) of the HMASs (1) can be partitioned as follows:

where \(L_{ss}^{{\sigma _1}\left( t \right) } = \left[ {l_{sij}^{{\sigma _1}\left( t \right) }} \right] \in {R^{(n-m) \times (n-m)}}\) is the Laplacian matrix of the SO agents, and \(L_{ff}^{{\sigma _2}\left( t \right) } = \left[ {l_{fij}^{{\sigma _2}\left( t \right) }} \right] \in {R^{m \times m}}\) is the Laplacian matrix of the FO agents. \(D_{sf}^{{\sigma _1}\left( t \right) } = diag\left\{ {\sum \limits _{j \in {N_{i,f}}} {a_{ij}^{{\sigma _1}\left( t \right) }}, i \in {N_1}} \right\}\) and \(D_{fs}^{{\sigma _2}\left( t \right) } = diag\left\{ {\sum \limits _{j \in {N_{i,s}}} {a_{ij}^{{\sigma _2}\left( t \right) }}, i \in {N_2}} \right\}\) denote the in-degree matrices between agents of different orders.

Furthermore, \({\sigma _1}\left( t \right) (t > 0)\) represents a continuous-time Markov process with right-continuous trajectories, taking values in a finite set \({S_1} = \left\{ {1, 2,..., {s_1}} \right\}\) with transition rate matrix \(\Pi = {\left( {{\pi _{ij}}} \right) _{s_1 \times s_1}}\) given by

where \(\Delta t > 0\), \({\lim _{\Delta t \rightarrow 0}}\left( {o\left( {\Delta t} \right) /\Delta t} \right) = 0\), \({\pi _{ij}} \ge 0\) \(\left( \textrm{for} {\hspace{3.0pt}} i \ne j \right)\) is the transition rate from mode i at time t to mode j at time \(t + \Delta t\), and \({\pi _{ii}} = - \sum \limits _{j = 1,j \ne i}^{s_1} {{\pi _{ij}}}\). \({\sigma _2}\left( t \right) (t > 0)\) stands for another Markov process that takes values in the set \({S_2} = \left\{ {1,2,...,{s_2}} \right\}\) and is related to \({\sigma _1}\left( t \right)\) as follows:

Recall system (1), in which both sensors and actuators are subject to partial loss of effectiveness (PLOE). Specifically, the position and velocity outputs are affected by sensor failures, which are described as:

where \({f_{xi}}\) and \({f_{vi}}\) are sensor efficiency faults, namely, \({f_{xi}} = p_{xi} x_i\), \({f_{vi}} = p_{vi} v_i\), \(-1< p_{xi}, p_{vi} <0\). \(t_{fxi}\) and \(t_{fvi}\) indicate the time of sensor failure.
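To make the sensor fault description concrete, the sketch below applies a partial loss of effectiveness to a measured state. It assumes the fault enters additively after the fault time, \(x_i^f = x_i + f_{xi}\) with \(f_{xi} = p_{xi} x_i\), which is consistent with the error definitions used later but is stated here as an assumption. The function name `faulty_measurement` and the numerical values are hypothetical.

```python
import numpy as np

def faulty_measurement(x, t, p, t_fault):
    """Return (x_f, f) for a partial-loss-of-effectiveness sensor fault.

    Assumption: x_f = x + f with f = p * x for t >= t_fault, and f = 0 before,
    where -1 < p < 0 is the loss coefficient.
    """
    if not -1.0 < p < 0.0:
        raise ValueError("p must satisfy -1 < p < 0")
    x = np.asarray(x, dtype=float)
    fault = p * x if t >= t_fault else np.zeros_like(x)
    return x + fault, fault

x_true = np.array([3.0, -4.0])            # hypothetical true position
before, f_before = faulty_measurement(x_true, t=0.5, p=-0.2, t_fault=1.0)
after, f_after = faulty_measurement(x_true, t=1.5, p=-0.2, t_fault=1.0)
```

With \(p_{xi} = -0.2\) the sensor reports 80% of the true value once the fault is active, which corresponds to a 20% loss of effectiveness.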

Similarly, the actuator fault is depicted as:

where \(u_i\) is control input, \(t_{fui}\) indicates the time of actuator failure.

Assumption 1

16 The disturbance and its derivative are bounded by known positive constants, that is, \(\left\| {{w_i}} \right\| \le {w_{i1}}\) and \(\left\| {{{\dot{w}}_i}} \right\| \le {w_{i2}}\).

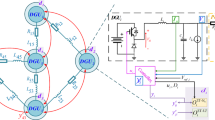

Because the system is subject to multiple actuator and sensor failures, the coupling between sensor and actuator failures further increases the complexity of the system dynamics. Furthermore, the inconsistent Markov processes complicate the changes in system topology. This coupling of inconsistent Markov switching and dual failures makes traditional fault-tolerant control methods difficult to apply directly and requires the design of new observers and event-triggered strategies. To alleviate the communication burden, two event triggers are employed, one on the sensor-to-observer channel and one on the observer-to-controller channel. The schematic diagram of the overall design scheme is depicted in Fig. 1.

First, the following errors and fault estimation errors are defined: \({e_{xi}} = x_i^f - {\hat{x}_i} - {\hat{f}_{xi}}\), \({e_{vi}} = v_i^f - {\hat{v}_i} - {\hat{f}_{vi}}\), \({e_{fxi}} = {f_{xi}} - {\hat{f}_{xi}}\), \({e_{fvi}} = {f_{vi}} - {\hat{f}_{vi}}\). Then, based on the scheme in Fig. 1, the state observer is designed as follows:

and the sensor fault estimators are devised:

where \({\hat{x}}_i\), \({\hat{v}}_i\), \({\hat{f}}_i\) and \(\hat{y}_i\) denote the estimates of the state, velocity, fault and measurement output, respectively. The parameters \(H_1\), \(H_2\), \(H_3\), \(F_1\), \(F_2\) and \(F_3\) are gains to be designed. \(e_{xi} ( t^i_{k}) = x_i^f ( t^i_{k1})- {\hat{x}_i} ( t^i_{k2}) - {\hat{f}_{xi}} ( t^i_{k2})\) and \(e_{vi} ( t^i_{k}) = v_i^f ( t^i_{k1})- {\hat{v}_i} ( t^i_{k2}) - {\hat{f}_{vi}} ( t^i_{k2})\), where \(t^i_{k1}\) and \(t^i_{k2}\) represent the last triggering instants of ETS-a and ETS-b, respectively, with \(t_0^i = 0\).

In this paper, the considered ETS-a and ETS-b rely on sampled-data to avoid the Zeno behavior. Let \(t_s\) be the sampling instants with \(0 = t_0< t_1<...< t_s <...\). Based on this, when \(t = t_s\), the event-triggered condition of ETS-a for the ith agent in HMASs (1) is expressed as:

where the errors \({\delta _{xi}} \left( {t_s} \right) = {x_i^f} \left( {t_s} \right) - {x_i^f} \left( {t_{k1}^i} \right)\) and \({\delta _{vi}} \left( {t_s} \right) = {v_i^f} \left( {t_s} \right) - {v_i^f} \left( {t_{k1}^i} \right)\) represent the differences between the position and velocity at the current sampling instant and at the last event-triggered instant, respectively. \({\sigma _{1}} > 0\), \({\sigma _{2}} > 0\) and \({\sigma _{3}} > 0\) denote the threshold parameters. \(\Omega _1\), \(\Omega _2\) and \(\Omega _3\) are weighting matrices to be designed.
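The mechanism of a sampled-data event trigger can be sketched as follows. The triggering rule used here, transmit when \(\delta ^\textrm{T} \Omega \delta > \sigma \, x(t_s)^\textrm{T} \Omega \, x(t_s)\), is a common quadratic form chosen as an assumption for illustration; it is not claimed to be the exact condition of ETS-a. The signal, threshold, and weighting matrix below are hypothetical.

```python
import numpy as np

def run_sampled_trigger(samples, sigma, omega):
    """Return indices of sampling instants at which a transmission occurs.

    Assumed illustrative rule: transmit at t_s when
        delta^T Omega delta > sigma * x(t_s)^T Omega x(t_s),
    where delta = x(t_s) - x(t_k) and x(t_k) is the last transmitted sample.
    """
    last_sent = samples[0]              # the sample at t_0 is always transmitted
    triggered = [0]
    for s in range(1, len(samples)):
        x_s = samples[s]
        delta = x_s - last_sent
        if delta @ omega @ delta > sigma * (x_s @ omega @ x_s):
            last_sent = x_s
            triggered.append(s)
    return triggered

h = 0.1                                               # sampling period
t = np.arange(0.0, 10.0, h)                           # sampling instants
x = np.exp(-0.5 * t)[:, None] * np.array([3.0, -4.0])  # hypothetical decaying signal
omega = np.array([[8.0, 0.6], [0.6, 8.0]])            # hypothetical weighting matrix
events = run_sampled_trigger(x, sigma=0.05, omega=omega)
gaps = np.diff(t[events])                             # inter-event times
```

Because the condition is evaluated only at sampling instants, every inter-event time in `gaps` is at least one sampling period, which is the reason a sampled-data trigger cannot exhibit Zeno behavior.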

Similarly, the event-triggered conditions of ETS-b are represented as:

where \({\theta _{{\hat{x}}_i}} \left( {t_s} \right) = \sum \limits _{j \in {{\mathscr {N}}_i}} {{a_{ij}} \left( {e_{{\hat{x}}_i} \left( {t_s} \right) - {e_{{{\hat{x}}_j}}} \left( {t_s} \right) } \right) }\), \({e_{{\hat{x}}_i}} \left( {t_s} \right) = {\hat{x}_i} \left( {{t_s}} \right) - {\hat{x}_i} \left( {t_{{k_2}}^i} \right)\), \({\varphi _{{\hat{x}}_i}} \left( {t_s} \right) = \sum \limits _{j \in {{\mathscr {N}}_i}} {{a_{ij}} \left( {{\hat{x}}_i \left( {t_s} \right) - {{\hat{x}}_j} \left( {t_s} \right) } \right) }\); \({\theta _{{\hat{v}}_i}} \left( {t_s} \right) = \sum \limits _{j \in {{\mathscr {N}}_i}} {{a_{ij}} \left( {e_{{\hat{v}}_i} \left( {t_s} \right) - {e_{{{\hat{v}}_j}}} \left( {t_s} \right) } \right) }\), \({e_{{\hat{v}}_i}} \left( {t_s} \right) = {\hat{v}_i} \left( {{t_s}} \right) - {\hat{v}_i} \left( {t_{{k_2}}^i} \right)\), \({\varphi _{{\hat{v}}_i}} \left( {t_s} \right) = \sum \limits _{j \in {{\mathscr {N}}_i}} {{a_{ij}} \left( {{\hat{v}}_i \left( {t_s} \right) - {{\hat{v}}_j} \left( {t_s} \right) } \right) }\). \({\sigma _{4}} > 0\), \({\sigma _{5}} > 0\) and \({\sigma _{6}} > 0\) denote the threshold parameters. \(\Omega _4\), \(\Omega _5\) and \(\Omega _6\) are weighting matrices to be designed.
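The neighbor-weighted sums \(\varphi _{{\hat{x}}_i}\) defined above are the rows of the Laplacian applied to the stacked estimates. The following sketch verifies this identity numerically; the adjacency matrix and the estimate values are hypothetical, and `neighbor_disagreement` is a helper name introduced only for this example.

```python
import numpy as np

def neighbor_disagreement(adjacency, estimates):
    """Compute phi_i = sum_j a_ij * (xhat_i - xhat_j) for every agent i.

    `estimates` has shape (n, p): one p-dimensional estimate per agent.
    """
    n = adjacency.shape[0]
    phi = np.zeros_like(estimates, dtype=float)
    for i in range(n):
        for j in range(n):
            phi[i] += adjacency[i, j] * (estimates[i] - estimates[j])
    return phi

A = np.array([
    [0.0, 1.0, 0.0, 0.0],
    [1.0, 0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0, 1.0],
    [1.0, 0.0, 0.0, 0.0],
])                                                   # hypothetical topology
x_hat = np.array([[3.0, -4.0], [2.0, -1.5], [-12.0, 1.0], [3.0, -1.0]])

phi = neighbor_disagreement(A, x_hat)
laplacian = np.diag(A.sum(axis=1)) - A
phi_via_laplacian = laplacian @ x_hat                # same quantity in matrix form
```

The matrix form is why Kronecker products of the Laplacian appear when the per-agent conditions are stacked into a single system.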

Remark 1

The double ETS (ETS-a and ETS-b) proposed in this paper relies on sampled data, which means that triggering occurs only at discrete sampling instants, so the inter-event time is never shorter than one sampling period and Zeno behavior is excluded. Compared with the general ETS in25,26,27 or the double ETS in28,29, the double ETS here is applied to HMASs with inconsistent Markov switching and composite failures, reducing communication overhead while ensuring consensus of the system.

Define \(0 \le \eta = t - {\tau _s}h \le {\eta _k} = {t_{k + 1}} - {\tau _s}h \le {\eta _M}\) with \(\dot{\eta }\left( t \right) = 1\). From \({\delta _{xi}} \left( {t_s} \right) = {x_i^f} \left( {t_s} \right) - {x_i^f} \left( {t_{k1}^i} \right)\), one has \(x_i^f \left( {t_{k1}^i} \right) = x_{i\eta }^f - \delta _{x_ {i\eta }}\). Likewise, \(v_i^f \left( {t_{k1}^i} \right) = v_{i\eta }^f - \delta _{v_ {i\eta }}\). By a similar transformation, define the transition variable \({\delta _{fi}}\left( {{\tau _s}h} \right) = {\hat{f}_{xi}}\left( {{\tau _s}h} \right) - {\hat{f}_{xi}}\left( {t_{{k_2}}^i} \right)\).

Let \(\tilde{e}_i = \mathrm{col} \{ e_1, e_2, e_3 \}\), \(e_1 = \mathrm{col} \{ e_{x_1}, e_{x_2}, \ldots , e_{x_m} \}\), \(e_2 = \mathrm{col} \{ e_{x_{m+1}}, e_{x_{m+2}}, \ldots , e_{x_n} \}\), \(e_3 = \mathrm{col} \{ e_{v_{m+1}}, e_{v_{m+2}}, \ldots , e_{v_n} \}\), \(\tilde{e}_{f_i} = \mathrm{col} \{ e_{f_1}, e_{f_2}, e_{f_3} \}\), \(e_{f_1} = \mathrm{col} \{ e_{f_{x_1}}, e_{f_{x_2}}, \ldots , e_{f_{x_m}} \}\), \(e_{f_2} = \mathrm{col} \{ e_{f_{x_{m+1}}}, e_{f_{x_{m+2}}}, \ldots , e_{f_{x_n}} \}\), \(e_{f_3} = \mathrm{col} \{ e_{f_{v_{m+1}}}, e_{f_{v_{m+2}}}, \ldots , e_{f_{v_n}} \}\), \(\tilde{\delta }_i = \mathrm{col} \{ \delta _1, \delta _2, \delta _3 \}\), \(\delta _1 = \mathrm{col} \{ \delta _{e_{x_1}}, \delta _{e_{x_2}}, \ldots , \delta _{e_{x_m}} \}\), \(\delta _2 = \mathrm{col} \{ \delta _{e_{x_{m+1}}}, \delta _{e_{x_{m+2}}}, \ldots , \delta _{e_{x_{n}}} \}\), \(\delta _3 = \mathrm{col} \{ \delta _{e_{v_{m+1}}}, \delta _{e_{v_{m+2}}}, \ldots , \delta _{e_{v_{n}}} \}\), \({\tilde{f}}_i = \mathrm{col} \left\{ f(x_{i}) - f(\hat{x}_{i}), f(x_{i}, v_{i}) - f\left( \hat{x}_{i}, \hat{v}_{i} \right) v_{i} - f\left( \hat{x}_{i}, \hat{v}_{i} \right) \right\}\), \(\varpi = \mathrm{col} \{ \omega , f_{xi}, f_{xj}, f_{vj}, f_{ui}, \dot{f}_{xi}, \dot{f}_{xj}, \dot{f}_{vj} \}\), \(i \in N_1\), \(j \in N_2\). From the definitions of the estimation error and the fault estimation error, one obtains:

where

with \(I_1= {\mathscr {I}}_m\), \(I_2={\mathscr {I}}_{n-m}\), \(I_3 = \textrm{diag} \{{\mathscr {I}}_m, 0_{n-m}\}\), \(I_4 = \textrm{diag} \{0_m, {\mathscr {I}}_{n-m}\}\).

Let \({\tilde{\varepsilon }}_i = \mathrm{{col}} \{ {\tilde{e}}_i, {\tilde{e}}_{fi} \}\), based on (9) and (10), the following augmented system can be derived:

where

In this step, the objective is to address the fault-tolerant estimation for HMASs with inconsistent Markov topology by designing the observer (5), adaptive fault tolerant laws (6) and ETS-a (7), so that the augmented system (11) can be stable and satisfy:

(1) for \(\varpi _i = 0\), the system (11) is stable;

(2) under the zero-initial condition, for all non-zero \(\varpi _i\), the system (11) satisfies the \({H_\infty }\) performance index requirement \({\left\| {\tilde{\varepsilon }_i} \right\| _2} \le \beta {\left\| {\varpi _i} \right\| _2}\).

Based on the above analysis, the fault-tolerant controller is designed next, using the estimation information as compensation so that the heterogeneous multi-agent system can achieve consensus.

Let \({X_1} = \mathrm{{col}} \{ \hat{x}_1, \hat{x}_2, \ldots , \hat{x}_m \}\), \({X_2} = \mathrm{{col}} \{ \hat{x}_{m + 1}, \hat{x}_{m + 2}, \ldots , \hat{x}_n \}\), \({X_3}= \mathrm{{col}} \{ \hat{v}_{m + 1}, \hat{v}_{m + 2}, \ldots , \hat{v}_n \}\). Define the consensus errors as \({e_{\hat{x}_i}} = {\hat{x}_1} - {\hat{x}_i}\), \(i \in N_1\), \({e_{\hat{x}_j}} = {\hat{x}_{m + 1}} - {\hat{x}_j}\), \(j \in N_2\), and \({e_{\hat{v}j}} = {\hat{v}_{m + 1}} - {\hat{v}_j}\), \(j \in N_2\). Then, for \(i \in N_1\), one has \({e_{\hat{x}i}} = \left( {{C_1} \otimes {I_p}} \right) {X_1}\), \({X_1}\left( t \right) = \left( {{D_1} \otimes {I_p}} \right) {e_{\hat{x}i}}\left( t \right) + \left( {{\mathbf{{1}}_{m - 1}} \otimes {I_p}} \right) {\hat{x}_1}\left( t \right)\), with \({C_1} = \left[ {1, - {I_{m - 1}}} \right]\), \({D_1} = \left[ {0, - {I_{m - 1}}} \right] ^T\). For \(i \in N_2\), a similar transformation holds. Based on this, the consensus error system can be described as:

where \({\bar{e}_i} = \mathrm{col} \{ e_{\hat{x}_i}, e_{\hat{x}_j}, e_{\hat{v}_j} \}\), \(\bar{\Delta }_i = \left( {C_1} \otimes {I_p} \right) {\bar{\delta }_i}\), \(\bar{\delta }_i = \mathrm{col} \{ \bar{\delta }_1, \bar{\delta }_2, \bar{\delta }_3 \}\), \(\bar{\delta }_1 = \mathrm{col} \{ \delta _{e_{\hat{x}_1}}, \delta _{e_{\hat{x}_2}}, \ldots , \delta _{e_{\hat{x}_m}} \}\), \(\bar{\delta }_2 = \mathrm{col} \{ \delta _{e_{\hat{x}_{m+1}}}, \delta _{e_{\hat{x}_{m+2}}}, \ldots , \delta _{e_{\hat{x}_{n}}} \}\), \(\bar{\delta }_3 = \mathrm{col} \{ \delta _{e_{\hat{v}_{m+1}}}, \delta _{e_{\hat{v}_{m+2}}}, \ldots , \delta _{e_{\hat{v}_{n}}} \}\), \(\bar{\varpi }_i = \{ {\mathscr {E}}_{i \eta }, \Delta _{e_{i \eta }} \}\), \({\mathscr {E}}_{i \eta } = \left( {C_1} \otimes {I_p} \right) \tilde{e}_i\), \(\Delta _{e_{i \eta }} = \left( {C_1} \otimes {I_p} \right) \tilde{\delta }_i\), \(D = \left[ { - \bar{L} \otimes H, \bar{L} \otimes H} \right]\).

The main goal is to solve the consensus problem for HMASs: the controller and the event-triggered scheme (8) are designed so that the consensus error system is stable and satisfies the condition \({\left\| {\bar{e}} \right\| _2} \le {\beta _2} {\left\| {\varpi _2} \right\| _2}\).
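The change of variables between the stacked estimates \(X_1\) and the consensus errors can be checked numerically. The sketch below makes three assumptions so that the dimensions agree: the errors are stacked for agents \(2, \ldots , m\) only, the first block of \(C_1\) is a column of ones of length \(m-1\), and the all-ones vector multiplying \(\hat{x}_1\) has length m. The values of m, p, and the random estimates are hypothetical.

```python
import numpy as np

m, p = 3, 2                                   # hypothetical: 3 FO agents, 2-D states
rng = np.random.default_rng(0)
X1 = rng.normal(size=m * p)                   # stacked estimates col{x_hat_1..x_hat_m}
x_hat_1 = X1[:p]                              # reference agent's estimate

# C1 = [1, -I_{m-1}] maps X1 to the errors e_i = x_hat_1 - x_hat_i, i = 2..m.
C1 = np.hstack([np.ones((m - 1, 1)), -np.eye(m - 1)])
# D1 = [0, -I_{m-1}]^T maps the errors back into the stacked coordinates.
D1 = np.vstack([np.zeros((1, m - 1)), -np.eye(m - 1)])
I_p = np.eye(p)

e = np.kron(C1, I_p) @ X1
# Assumption: all-ones vector of length m, so that the shapes are compatible.
X1_rebuilt = np.kron(D1, I_p) @ e + np.kron(np.ones((m, 1)), I_p) @ x_hat_1
```

Recovering `X1` from `e` and \(\hat{x}_1\) confirms that the error coordinates lose no information, so stability of the error system is equivalent to consensus of the estimates.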

Assumption 2

34 For the nonlinear function \(f \left( \cdot \right)\), there exist constants \({\eta _1}\), \({\eta _2} > 0\) such that, for any x, v, \(x'\), \(v'\),

Main results

In this part, sufficient conditions for addressing the fault estimation and consensus control problems of HMASs (1) will be obtained. For convenience, the symbols present in the paper are summarized in Table 2.

Theorem 1

Assume that the graph \({\mathscr {G}}\) contains a directed spanning tree. For given scalars \(\eta _1\), \(\eta _2\), \(\eta _3\), \(\beta\), \(\vartheta _1\), \(\eta _M\), the augmented system (11) is stable if there exist real matrices \(\tilde{P}\), \(\tilde{Q}_1\), \(\tilde{Q}_2\) > 0 and matrices \(\tilde{W}_1\), \(\tilde{W}_2\), \(\tilde{\Omega }\) of appropriate dimensions such that:

where

with

and satisfies the \({H_\infty }\) performance. Moreover, the observer parameters can be obtained: \(\tilde{H} = Y_1 \tilde{P}^{-1}\).

Proof

Choose the following Lyapunov function:

When \(\sigma \left( t \right) = l\), applying the weak infinitesimal operator \(\mathscr {L}\) yields

From Assumption 1, one obtains

Furthermore, according to Jensen’s inequality35 and Lemma 3 in36, it follows that

where

Combining the above formula, ETS-a and Kronecker product, one has

where \(\varsigma _1 = \mathrm{{col}} \left[ {\tilde{\varepsilon }, \tilde{\varepsilon }_\eta , \tilde{\varepsilon }( {t - {\eta _M}} ), \tilde{\delta }_\eta , F, \varpi } \right]\). By using the Schur complement37, \(\Upsilon\) can be obtained as follows:

where

with

Define \(\tilde{P} = P_l^{ - 1}\) and \({\tilde{Q}_1} = \tilde{P}{Q_1}\tilde{P}\). Then, pre- and post-multiplying both sides of (15) by \(\mathrm{{diag}}\left\{ {\tilde{P},\tilde{P},\tilde{P},\tilde{P},I,I,I} \right\}\) and its transpose, one has

with

Moreover, from Lemma 3 in38, one has the following:

Further, applying the Schur complement to \(\tilde{\Upsilon }\) yields \(\Sigma\) as given in Theorem 1.
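The Schur complement step used in the proof rests on a standard equivalence: for symmetric blocks, \(\left[ {A, B; B^\textrm{T}, C} \right] < 0\) holds if and only if \(C < 0\) and \(A - B C^{-1} B^\textrm{T} < 0\). The sketch below checks this on a small example; the matrices are hypothetical and unrelated to the matrices \(\Upsilon\) and \(\Sigma\) of this paper.

```python
import numpy as np

def is_negative_definite(M, tol=1e-10):
    """True if the symmetric matrix M has all eigenvalues below -tol."""
    return bool(np.all(np.linalg.eigvalsh(M) < -tol))

# Hypothetical symmetric blocks.
A = np.array([[-4.0, 1.0], [1.0, -3.0]])
B = np.array([[0.5], [1.0]])
C = np.array([[-2.0]])

block = np.block([[A, B], [B.T, C]])          # full block matrix
schur = A - B @ np.linalg.inv(C) @ B.T        # Schur complement of C in the block

full_test = is_negative_definite(block)
reduced_test = is_negative_definite(C) and is_negative_definite(schur)
```

In LMI-based design the equivalence is typically used in the other direction, to turn a condition that is nonlinear in the unknowns into a larger block condition that is linear in them.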

If \(\Sigma < 0\) holds, then one obtains

Under the zero initial condition \(V\left( {e\left( 0 \right) } \right) = 0\), it is easy to show that \(\mathrm{{E}}\left\{ {V\left( {e\left( { + \infty } \right) } \right) } \right\} = \mathrm{{E}}\left\{ {\int _0^{ + \infty } {\mathscr {L}V\left( {t,\sigma \left( t \right) } \right) dt} } \right\} \ge 0\). Furthermore, one can get \(\mathrm{{E}}\left\{ {\int _0^{ + \infty } {{{\tilde{e}}^\mathrm{{T}}}\tilde{e}dt} } \right\} \le {\gamma ^2}\mathrm{{E}}\left\{ {\int _0^{ + \infty } {{\varpi ^\mathrm{{T}}} \varpi dt} } \right\}\). This completes the proof. \(\square\)

Theorem 2

Assume that the graph \({\mathscr {G}}\) contains a directed spanning tree. For given scalars \(\eta _4\), \(\eta _5\), \(\eta _6\), \(\beta _2\), \(\vartheta _2\), \(\eta _M\), the consensus error system (12) is stable if there exist real matrices \(\bar{R}\), \(\bar{Q}_3\), \(\bar{Q}_4\) and matrices \(\bar{W}_3\), \(\bar{W}_4\), \(\bar{\Omega }\) of appropriate dimensions such that:

where

with

and satisfies the prespecified performance constraint. In addition, the controller parameters can be obtained as \(K = Y_2 \bar{R}^{-1}\).

Proof

Choose the following Lyapunov function:

The remaining steps of the proof are similar to those of Theorem 1 and are omitted for brevity. \(\square\)

Simulation study

The validity of the preceding results is illustrated in this section by a numerical example.

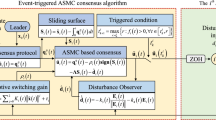

Assume that the considered HMASs (1) consist of four agents, labeled 1, 2, 3, and 4, where agents 1 and 2 are FO agents and agents 3 and 4 are SO agents. As shown in Fig. 2, the communication topology of the agents switches between two topologies according to an inconsistent Markov process. All edge weights of topology 1 are equal to 1, and the weights of topology 2 are shown in Fig. 2.

Set the Markov process parameters to \(\Pi = [-2, 2; 0.8, -0.8]\) for the FO agents and \(p_{11} = 0.8\), \(p_{12} = 0.2\), \(p_{21} = 0.4\), \(p_{22} = 0.6\) for the SO agents. With these parameters, the Markov processes of the HMASs are shown in Fig. 3, where \(\sigma _1 (t)\) denotes the Markov process of the SO agents and \(\sigma _2 (t)\) denotes the Markov process of the FO agents; in each case, the vertical coordinate gives the mode of the corresponding agents at the current moment.
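A mode trajectory of the kind plotted for a two-mode Markov process can be generated from the rate matrix \(\Pi = [-2, 2; 0.8, -0.8]\) by sampling exponential holding times. The sketch below is a generic continuous-time Markov chain sampler written for illustration, not the simulation code of this paper; modes are 0-based in the code, and the horizon and random seed are arbitrary choices.

```python
import numpy as np

def is_generator(Pi, tol=1e-12):
    """Check that off-diagonal rates are non-negative and every row sums to zero."""
    off_diag = Pi - np.diag(np.diag(Pi))
    return bool(np.all(off_diag >= 0) and np.allclose(Pi.sum(axis=1), 0.0, atol=tol))

def simulate_ctmc(Pi, t_end, mode0=0, seed=0):
    """Sample jump times and modes of a continuous-time Markov chain on [0, t_end)."""
    rng = np.random.default_rng(seed)
    times, modes = [0.0], [mode0]
    t, mode = 0.0, mode0
    while True:
        rate = -Pi[mode, mode]                # total exit rate of the current mode
        if rate <= 0:
            break                             # absorbing mode: no further jumps
        t += rng.exponential(1.0 / rate)      # exponential holding time
        if t >= t_end:
            break
        probs = Pi[mode].copy()
        probs[mode] = 0.0
        probs /= rate                         # jump probabilities to other modes
        mode = int(rng.choice(len(probs), p=probs))
        times.append(t)
        modes.append(mode)
    return np.array(times), np.array(modes)

Pi = np.array([[-2.0, 2.0], [0.8, -0.8]])
times, modes = simulate_ctmc(Pi, t_end=20.0)
stationary = np.array([0.8, 2.0]) / 2.8       # solves stationary @ Pi = 0
```

For this rate matrix the chain spends on average 0.5 time units in the first mode and 1.25 in the second before switching, so in the long run it occupies the second mode about 5/7 of the time.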

Furthermore, the parameters of ETS-a are set as \(\sigma _1=0.008\), \(\sigma _2=0.015\), \(\sigma _3=0.012\), \(\Omega _1=[8.41,0.61;0.63,8.12]\), \(\Omega _2=[5.38,0.45;0.42,5.21]\), \(\Omega _3=[7.41,0.53;0.56,7.42]\), and the parameters of ETS-b are set as \(\sigma _4=0.03\), \(\sigma _5=0.05\), \(\sigma _6=0.06\), \(\Omega _4=[4.14,0.18;0.18,4.77]\), \(\Omega _5=[5.64,0.16;0.16,5.56]\), \(\Omega _6=[4.85,0.32;0.32,4.69]\). The sampling interval is set to \(t_h = 0.1\) s. In addition, in the proposed state observer and fault estimator, the gain matrices are chosen as \(H_1=[0.69,0.06;0.04,0.62]\), \(H_2=[0.15,0.02;0.03,0.66]\), \(H_3=[0.58,0.18;0.17,0.56]\), \(F_1=-[3.94,-0.15;0.08,2.24]\), \(F_2=-[2.41,0.12;-0.25,4.12]\), \(F_3=-[3.39,0.16;0.19,2.26]\), and the controller gain matrices are \(k_1=[1.21,0.16;0.11,1.84]\), \(k_2=[2.52,0.11;0.16,2.23]\), \(k_3=[1.96,0.18;0.19,1.88]\).

Because external disturbances and nonlinearities are inevitable in real systems, it is assumed that the disturbance acting on each agent is \(w = 0.1 \sin (t)\), and the nonlinear functions are \(f (x_i) = 0.1 \sin (x_i)\), \(i = 1, 2\), and \(f (x_i, v_i) = -0.45 \sin (x_i) - 0.2 v_i\), \(i = 3, 4\). In addition, the states of different agents in the system are subject to partial loss of effectiveness faults at different times, with a loss rate of \(20\%\), as indicated below:

Similarly, the actuators of agents 1 to 4 are subject to partial loss of effectiveness faults at 1 s, 1.25 s, 1.0 s, and 1.25 s, respectively.
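The nonlinearities chosen for the simulation can be checked against a Lipschitz-type bound of the kind required by Assumption 2. The sketch below assumes the bound has the usual form \(\left| f(x, v) - f(x', v') \right| \le \eta _1 \left| x - x' \right| + \eta _2 \left| v - v' \right|\) and tests the candidate constants \(\eta _1 = 0.45\), \(\eta _2 = 0.2\) for the SO agents and 0.1 for the FO agents on random samples. These constants and the sampling range are assumptions made for this illustration, not values stated in the paper.

```python
import numpy as np

def f_fo(x):
    """Nonlinearity of the first-order agents used in the simulation."""
    return 0.1 * np.sin(x)

def f_so(x, v):
    """Nonlinearity of the second-order agents used in the simulation."""
    return -0.45 * np.sin(x) - 0.2 * v

rng = np.random.default_rng(1)
x, xp, v, vp = rng.uniform(-10.0, 10.0, size=(4, 10_000))   # random test points

# Left- and right-hand sides of the assumed Lipschitz-type inequality.
lhs_so = np.abs(f_so(x, v) - f_so(xp, vp))
rhs_so = 0.45 * np.abs(x - xp) + 0.2 * np.abs(v - vp)
lhs_fo = np.abs(f_fo(x) - f_fo(xp))
rhs_fo = 0.1 * np.abs(x - xp)

so_bound_holds = bool(np.all(lhs_so <= rhs_so + 1e-12))
fo_bound_holds = bool(np.all(lhs_fo <= rhs_fo + 1e-12))
```

The bound holds for all samples because \(\left| \sin a - \sin b \right| \le \left| a - b \right|\), so the candidate constants are simply the coefficients of the sine and velocity terms.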

Next, the initial state of each agent is set as \(x_1(0) = [3;-4]\), \(x_2(0) = [2;-1.5]\), \(x_3(0) = [-12;1]\), \(x_4(0) = [3;-1]\), \(v_3(0) = [-0.2;0.1]\), \(v_4(0) = [0.4;0.7]\). The simulation results are shown in Figs. 4, 5, 6, 7, 8, and 9. Figures 4 and 5 show the estimated values of the fault and state information, respectively, from which it can be seen that the designed fault estimator and state observer are valid. Figure 6 illustrates the triggering instants of the position and velocity states under ETS-a and ETS-b, which shows that data transmission is reduced. Table 3 compares the number of triggers for each agent under the double ETS and a single ETS.

Figures 7 and 8 show the trajectories of the position and velocity states in the system, from which it can be seen that the states reach consensus under the designed controller. Figure 9 shows the control inputs. Figure 10 shows the evolution curves when all agents follow the same Markov process. Comparing Figs. 7, 8, and 10b–d, it can be seen that when all agents follow the same Markov process, the state values are relatively large at the first peak.

To further validate the effectiveness of the proposed double ETS, a quantitative analysis of convergence time, number of triggers, and control cost was conducted and compared with the single ETS. As shown in Table 4, the double ETS significantly reduces communication overhead: the number of triggers decreases by 37.1%, the convergence time is shortened by 16.7%, and the control inputs are smoother, while consensus of the system is still ensured. These results demonstrate that the proposed strategy maintains robust control performance while conserving network resources.

Conclusions

In this paper, the fault-tolerant control problem is investigated for HMASs subject to inconsistent Markov switching, disturbances, nonlinearities, sensor failures, and actuator failures. Inconsistent Markov processes are introduced to characterize the different topological switching behaviors of FO and SO agents. To minimize the transmission of redundant information, a double ETS dependent on sampled data is introduced. Based on this, a fault-tolerant observer and a fault estimator are designed by utilizing the relative output estimation error. Then, based on the obtained estimation results, the relative output information is utilized to design fault-tolerant consensus controllers for each agent. Further, based on the Lyapunov function method, the Kronecker product, and inequality techniques, criteria are obtained under which the system achieves consensus. Finally, the validity of the proposed method is verified by a simulation. Numerical simulations show that after a 20% loss of effectiveness fault occurs in the sensor/actuator, the proposed method can restore consensus within 15 seconds, with the position tracking error stabilizing within ±5%. At the same time, under the proposed double ETS, the number of triggers is significantly reduced. In future work, deep reinforcement learning will be combined with the double ETS to enhance real-time identification accuracy during concurrent actuator-sensor failures.

Data availability

The complete experimental data are available from the corresponding author upon request.

References

Wang, D., Liu, Y., Yu, H. & Hou, Y. Three-dimensional trajectory and resource allocation optimization in multi-unmanned aerial vehicle multicast system: A multi-agent reinforcement learning method. Drones 7, 641 (2023).

Yan, Z. et al. Event-triggered formation control for time-delayed discrete-time multi-agent system applied to multi-UAV formation flying. J. Franklin Inst. 360, 3677–3699 (2023).

Wang, C. et al. Multi-agent reinforcement learning-based routing protocol for underwater wireless sensor networks with value of information. IEEE Sens. J. 24, 7042–7054 (2023).

Chreim, B., Esseghir, M. & Merghem-Boulahia, L. Energy management in residential communities with shared storage based on multi-agent systems: Application to smart grids. Eng. Appl. Artif. Intell. 126, 106886 (2023).

Shu, P., Hu, Y., Hua, Y., Dong, X. & Ren, Z. Output formation tracking control for heterogeneous multi-agent systems with application to unmanned aerial and ground vehicles. In 2022 41st Chinese Control Conference (CCC), 5038–5043 (IEEE, 2022).

Liu, H., Wang, X., Liu, R., Xie, Y. & Li, T. Air-ground multi-agent system cooperative navigation based on factor graph optimization SLAM. Meas. Sci. Technol. 35, 066303 (2024).

Xiong, H., Deng, H., Liu, C. & Wu, J. Distributed event-triggered formation control of UGV-UAV heterogeneous multi-agent systems for ground-air cooperation. Chinese J. Aeronautics 37, 458–483 (2024).

Li, H., Jia, X., Chi, X. & Li, B. Fully distributed prescribed-time leader-following output consensus of heterogeneous multi-agent systems with dynamic event-triggered mechanism. IEEE Trans. Autom. Sci. Eng. 22, 8341–8350 (2024).

Ren, Y. et al. Model-free adaptive consensus design for a class of unknown heterogeneous nonlinear multi-agent systems with packet dropouts. Sci. Rep. 14, 23093 (2024).

Yazdanpanah, N., Farsangi, M. M. & Seydnejad, S. R. A data-driven subspace distributed fault detection strategy for linear heterogeneous multi-agent systems. ISA Trans. 146, 186–194 (2024).

Chen, L. & Wang, Q. Prescribed performance-barrier Lyapunov function for the adaptive control of unknown pure-feedback systems with full-state constraints. Nonlinear Dyn. 95, 2443–2459 (2019).

Guo, X., Wang, C. & Liu, L. Adaptive fault-tolerant control for a class of nonlinear multi-agent systems with multiple unknown time-varying control directions. Automatica 167, 111802 (2024).

Dong, K., Yang, G.-H. & Wang, H. Estimator-based event-triggered output synchronization for heterogeneous multi-agent systems under denial-of-service attacks and actuator faults. Inf. Sci. 657, 119955 (2024).

Elsayed, A. M., Elshalakani, M., Hammad, S. A. & Maged, S. A. Decentralized fault-tolerant control of multi-mobile robot system addressing LiDAR sensor faults. Sci. Rep. 14, 25713 (2024).

Zhang, J., Ma, L., Zhao, J. & Zhu, Y. Fault detection and fault-tolerant control for discrete-time multi-agent systems with sensor faults: A data-driven method. IEEE Sens. J. 24, 22601–22609 (2024).

Liu, C., Zhao, J., Jiang, B. & Patton, R. J. Fault-tolerant consensus control of multi-agent systems under actuator/sensor faults and channel noises: A distributed anti-attack strategy. Inf. Sci. 623, 1–19 (2023).

Rotondo, D., Theilliol, D. & Ponsart, J.-C. Virtual actuator and sensor fault tolerant consensus for homogeneous linear multi-agent systems. IEEE Trans. Circ. Syst. I: Regular Papers 71, 1324–1334 (2023).

Yu, Y., Guo, J. & Xiang, Z. Distributed fuzzy consensus control of uncertain nonlinear multiagent systems with actuator and sensor failures. IEEE Syst. J. 16, 3480–3487 (2021).

Pu, X. & Zhang, L. The couple-group consensus of heterogeneous multi-agent systems with different leaders under Markov switching in cooperative-competitive networks. Neural Process. Lett. 55, 1799–1831 (2023).

Wen, G., Jiang, D., Peng, Z., Huang, T. & Rahmani, A. Fully distributed bipartite formation control for stochastic heterogeneous multi-agent systems under signed Markovian switching topology. IEEE Trans. Control Netw. Syst. https://doi.org/10.1109/TCNS.2024.3355039 (2024).

Tai, W., Li, X., Zhou, J. & Arik, S. Asynchronous dissipative stabilization for stochastic Markov-switching neural networks with completely- and incompletely-known transition rates. Neural Netw. 161, 55–64 (2023).

Song, X., Man, J., Song, S. & Ahn, C. K. Gain-scheduled finite-time synchronization for reaction-diffusion memristive neural networks subject to inconsistent Markov chains. IEEE Trans. Neural Netw. Learn. Syst. 32, 2952–2964 (2020).

Zhang, Y. & Wu, Z.-G. Event-based asynchronous \(H_{\infty}\) control for nonhomogeneous Markov jump systems with imperfect transition probabilities. IEEE Trans. Cyber. 54, 6269–6280 (2024).

Zhou, J., Dong, J. & Xu, S. Asynchronous dissipative control of discrete-time fuzzy Markov jump systems with dynamic state and input quantization. IEEE Trans. Fuzzy Syst. 31, 3906–3920 (2023).

Wang, Y., Chen, L. & Xu, H. Distributed event-triggered finite-time composite learning cooperative control for high-order MIMO nonlinear multi-agent systems. J. Franklin Inst. 362, 107856 (2025).

Cai, X., Zhu, X. & Yao, W. Distributed time-varying out formation-containment tracking of multi-UAV systems based on finite-time event-triggered control. Sci. Rep. 12, 20296 (2022).

Yang, J., Wang, J. & Shen, D. Observer-based sampled-data event-triggered tracking for nonlinear multi-agent systems with semi-Markovian switching topologies. Inf. Sci. 676, 120803 (2024).

Li, S., Chen, Y. & Liu, P. X. Double event-triggered leader-following consensus and fault detection for Lipschitz nonlinear multi-agent systems via periodic sampling strategy. Nonlinear Dyn. 111, 8293–8311 (2023).

Chen, M., Yan, H., Zhang, H., Fan, S. & Shen, H. Dual periodic event-triggered control for multi-agent systems with input saturation. ISA Trans. 136, 61–74 (2023).

Guo, H., Meng, M. & Feng, G. Mean square leader-following consensus of heterogeneous multi-agent systems with Markovian switching topologies and communication delays. Int. J. Robust Nonlinear Control 33, 355–371 (2023).

Wang, J., Wen, G. & Duan, Z. Distributed antiwindup consensus control of heterogeneous multiagent systems over Markovian randomly switching topologies. IEEE Trans. Autom. Control 67, 6310–6317 (2022).

Sader, M., Li, W., Liu, Z., Jiang, H. & Shang, C. Semi-global fault-tolerant cooperative output regulation of heterogeneous multi-agent systems with actuator saturation. Inf. Sci. 641, 119028 (2023).

Liu, Y. & Wang, Z. Data-based output synchronization of discrete-time heterogeneous multiagent systems with sensor faults. IEEE Trans. Cyber. 54, 265–272 (2022).

Yu, W., Ren, W., Zheng, W. X., Chen, G. & Lü, J. Distributed control gains design for consensus in multi-agent systems with second-order nonlinear dynamics. Automatica 49, 2107–2115 (2013).

Peng, C., Ma, S. & Xie, X. Observer-based non-PDC control for networked T-S fuzzy systems with an event-triggered communication. IEEE Trans. Cyber. 47, 2279–2287 (2017).

Wang, H., Xue, B. & Xue, A. Leader-following consensus control for semi-Markov jump multi-agent systems: An adaptive event-triggered scheme. J. Franklin Inst. 358, 428–447 (2021).

Su, H., Wang, Z., Song, Z. & Chen, X. Event-triggered consensus of non-linear multi-agent systems with sampling data and time delay. IET Control Theory Appl. 11, 1715–1725 (2017).

Yin, X., Yue, D. & Hu, S. Adaptive periodic event-triggered consensus for multi-agent systems subject to input saturation. Int. J. Control 89, 653–667 (2016).

Funding

This work was supported by the National Natural Science Foundation of China (No. 62073124).

Author information

Contributions

Dongxiao Hu was responsible for methodology, verification and writing the original draft. Jianwei Ma was responsible for supervision, review and editing. Shaofei Zang was responsible for conceptualization, methodology, guidance and editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Hu, D., Ma, J. & Zang, S. Double event-triggered fault tolerant consensus of nonlinear heterogeneous multiagent systems under inconsistent Markov. Sci Rep 16, 243 (2026). https://doi.org/10.1038/s41598-025-29570-6
