Abstract

This study compared the accuracy, consistency, and response behavior of ChatGPT-4o and DeepSeek in orthodontic health literacy tasks. A total of 50 multiple-choice, text-based questions covering five orthodontic domains were developed using the Delphi method by a panel of five experienced orthodontic professionals. Each question was posed to both models three times daily (morning, noon, evening) over three days, in both English and Chinese versions. Accuracy, consistency, and response time were analyzed using SPSS and R software. In the English version, the overall accuracy of ChatGPT-4o (90.4%) was slightly higher than that of DeepSeek (88.0%), but the difference was not statistically significant (p = 0.247); in the Chinese version, ChatGPT-4o was significantly more accurate (91.3% vs. 83.6%, p < 0.001). Accuracy also differed between models in several question groups, most markedly in basic orthodontic knowledge (Group A), where ChatGPT-4o outperformed DeepSeek in both languages (p < 0.001). The time of day and testing day had no effect on model performance (p > 0.05 for all comparisons). Both models demonstrated similar consistency in their responses (p = 1.000 for English; p = 0.412 for Chinese). ChatGPT-4o showed significantly faster response times than DeepSeek in both languages (p < 0.001). Overall, both models performed well and consistently in orthodontic health literacy tasks, but ChatGPT-4o’s higher accuracy in Chinese and significantly faster response time make it more suitable for clinical applications requiring efficient information delivery.

Introduction

Artificial intelligence (AI), a subfield of computer science, seeks to develop systems capable of performing cognitive functions traditionally associated with human intelligence, including reasoning, learning, and decision-making1. In healthcare, AI technologies have been increasingly employed to enhance diagnostic accuracy, optimize treatment protocols, reduce clinical workload, and support patient education2. One of the most transformative areas of AI application lies in education, where it offers the potential to deliver personalized learning experiences, simulate complex clinical scenarios, and reinforce domain-specific knowledge.

Among recent advances in AI, transformer-based language models have become particularly influential due to their ability to process and generate human-like language. These models are underpinned by self-attention mechanisms that allow them to evaluate relationships between all elements in a sequence, thereby producing coherent, context-aware outputs3. The transformer architecture enables efficient parallel processing and contextual representation, making it foundational to large language models (LLMs) such as ChatGPT and DeepSeek. ChatGPT-4o, developed by OpenAI and released in 2024, incorporates multimodal capabilities and improved response accuracy, especially in non-English contexts4. DeepSeek, a more recent open-source alternative, emphasizes transparency and domain customization, offering enhanced adaptability for specialized educational tasks5.

Orthodontic health literacy, defined as the ability to comprehend, evaluate, and apply information related to orthodontic conditions, appliances, and treatment protocols, is fundamental for the general public and patients alike6. It plays a critical role in fostering treatment compliance, obtaining truly informed consent, and sustaining appropriate self-care practices throughout orthodontic therapy7. Despite its established importance, effectively disseminating and translating professional knowledge to bridge the health literacy gap remains a practical challenge. Concurrently, while artificial intelligence (AI) has found applications in various dental domains, its integration as a tool to directly support and enhance orthodontic health literacy among the public remains insufficiently investigated.

To address this gap, this study aims to evaluate and compare the performance of two advanced large language models (LLMs), ChatGPT-4o and DeepSeek, in providing accurate and consistent responses to a standardized set of questions encompassing core dimensions of orthodontic health literacy. This study seeks to determine whether these widely accessible AI models can serve as reliable and effective adjuncts in public-facing orthodontic education, thereby potentially empowering individuals to make more informed decisions about their care.

Prior research in dental and health literacy has typically relied on single-language, single-session assessments focused primarily on accuracy8,9,10. To move beyond these limitations, this study employs a bilingual, expert-validated item set, implements repeated measurements across days and sessions to characterize stability, and incorporates response latency as a usability endpoint. Together, these design choices position the study to offer a more complete view of model behavior across accuracy, stability, and speed. Moreover, this study does not conceptualize large language models as learners, nor does it evaluate their direct impact on human learning outcomes. Instead, it investigates their potential role as accessible digital tools for supporting orthodontic health literacy among the general public. We hypothesize that if ChatGPT-4o and DeepSeek demonstrate adequate accuracy, linguistic consistency, and responsiveness when addressing standardized orthodontic questions, they may function as supportive resources for informal learning within public health education contexts.

Materials and methods

To align with the theoretical construct of public health literacy, the question set was designed to reflect key competencies: the ability to access, understand, and apply health information for informed decision-making6. These domains were contextualized to orthodontic care, covering basic knowledge, risk awareness, treatment decisions, oral health maintenance, and long-term follow-up. A panel of five orthodontic specialists developed a total of 50 multiple-choice, text-based questions using the Delphi method, with three iterative rounds of anonymous review and feedback to ensure content validity, clinical relevance, and linguistic clarity11. A bidirectional translation process (English ↔ Chinese) and expert verification were used to maintain semantic and conceptual equivalence across languages (Fig. 1)12. Bloom’s Taxonomy of thinking complexity was applied to categorize items by cognitive demand, from factual recall to higher-order decision-making, ensuring alignment with educational objectives in orthodontic health literacy (Supplementary Material; Table 1)13.

Delphi study

A modified Delphi study was conducted to develop a consensus-based assessment instrument for orthodontic health literacy. The expert panel comprised five senior orthodontic specialists from the Department of Orthodontics at a major tertiary care hospital in Hubei Province, China. Stringent inclusion criteria ensured all experts held an advanced post-graduate degree (PhD or Master’s) and possessed a minimum of 10 years of dedicated clinical and teaching experience, forming a highly homogeneous and authoritative panel suited for achieving focused consensus in this specialized domain, as supported by methodological literature14.

The process involved two structured rounds with a pre-defined consensus threshold of ≥ 80% agreement for item inclusion and wording15. In Round 1, the panel received a preliminary bilingual item set derived from a literature review. All five experts (100% response rate) anonymously rated each item on a 5-point Likert scale for clinical relevance and public clarity, while providing open-ended feedback on wording and scope. A revised questionnaire incorporating this feedback was then distributed in Round 2, where panelists gave a definitive “Approve” or “Disapprove” for each item’s final inclusion, again achieving a 100% response rate.

Following this process, a strong consensus was achieved, resulting in the development of a final set of 50 multiple-choice, text-based questions. Every item exceeded the consensus threshold, each receiving ≥ 80% approval in the final round, thereby robustly representing the collective judgement of the expert panel on the core dimensions of orthodontic health literacy.
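The consensus rule described above can be sketched in a few lines. This is only an illustration: the function names and the example votes are hypothetical, and the sketch assumes the ≥ 80% threshold is applied to the share of "Approve" votes among the five panelists.

```python
def min_approvals(panel_size: int, threshold_pct: int = 80) -> int:
    """Smallest number of approving panelists that reaches the threshold."""
    # Integer ceiling of panel_size * threshold_pct / 100.
    return (panel_size * threshold_pct + 99) // 100


def item_retained(votes: list[bool], threshold_pct: int = 80) -> bool:
    """True when the share of 'Approve' votes meets the consensus threshold."""
    return 100 * sum(votes) >= threshold_pct * len(votes)


# Hypothetical Round 2 votes for two items (True = Approve); not study data.
round2_votes = {
    "item_01": [True, True, True, True, False],   # 4 of 5 approve
    "item_02": [True, True, True, False, False],  # 3 of 5 approve
}
retained = {item: item_retained(v) for item, v in round2_votes.items()}
print(min_approvals(5), retained)
```

With a five-member panel, the threshold therefore corresponds to at least four approvals per item under this reading.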

ChatGPT-4o (OpenAI, San Francisco, CA, USA) and DeepSeek (DeepSeek Inc., Hangzhou, Zhejiang, China) were tested in this study. To ensure consistency and neutrality in model responses, all questions were submitted in newly initialized sessions using only the question stem and its four multiple-choice options, without any additional metadata or contextual information. When necessary, prompts were accompanied by the instruction “only short answers” or “Only answer True/False (e.g., True), without additional explanation” to avoid elaborated explanations and promote objective response comparison. This minimal-input protocol was intended to standardize response formatting and simulate typical user interactions in digital health education scenarios.

Data were collected in March 2025. Each model was prompted with the complete question set three times per day (morning, noon, evening) over three consecutive days. This yielded a total of 900 responses (50 questions × 2 models × 3 time points × 3 days). No prompts were repeated or followed up during the interaction, and no manual feedback was given to either system. Both models were used via their official public interfaces with default configurations and without any supplementary training. Ethical approval was not required for this study, as no human or animal participants were involved.
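The arithmetic of the sampling design can be checked by enumerating it. The sketch below is illustrative only. The text does not state explicitly whether the two language versions are counted inside the 900 responses, so language is treated here as a separate factor, which is an assumption; the session labels and variable names are likewise placeholders.

```python
from itertools import product

MODELS = ("ChatGPT-4o", "DeepSeek")
DAYS = (1, 2, 3)
SESSIONS = ("morning", "noon", "evening")
QUESTION_IDS = tuple(range(1, 51))
LANGUAGES = ("English", "Chinese")  # assumption: counted separately

# One row per prompt submitted for a single language version.
runs_one_language = list(product(MODELS, DAYS, SESSIONS, QUESTION_IDS))

# The same design crossed with both language versions.
runs_both_languages = list(
    product(LANGUAGES, MODELS, DAYS, SESSIONS, QUESTION_IDS)
)

# Repetitions of each question per model and language version.
repetitions_per_question = len(DAYS) * len(SESSIONS)

print(len(runs_one_language))    # 900
print(len(runs_both_languages))  # 1800
print(repetitions_per_question)  # 9
```

The nine repetitions per question match the "nine repetitions per question" used later for the accuracy calculation.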

Model response times were measured in a 3 × 3 design (three time points across three consecutive days) under standardized network conditions (1000 Mbps ± 50 Mbps bandwidth, < 10 ms latency). For each question at each time point, three replicates were collected and the median was reported to reduce transient fluctuations. Times were captured with a timestamped screen recorder; prompts were submitted via the official web interfaces of ChatGPT-4o and DeepSeek, with start/stop timing manually operated by a trained observer. The response time for each question was measured as the duration between pressing the “Enter” key to submit the query and the complete display of the model’s final response.
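The replicate-and-median step can be sketched as follows. The timings below are hypothetical stopwatch readings, not data from the study, and the cell keys (question, day, session) are an assumed way of organizing the records.

```python
from statistics import median

# Hypothetical readings in seconds: three replicates per
# (question, day, session) cell; these are not data from the study.
replicates = {
    ("Q01", 1, "morning"): [1.92, 2.41, 1.98],
    ("Q01", 1, "noon"): [2.05, 1.87, 1.90],
}


def cell_latency(times_s):
    """Median of the replicate timings recorded for one cell."""
    return median(times_s)


latency = {cell: cell_latency(t) for cell, t in replicates.items()}
print(latency)
```

Taking the median rather than the mean keeps a single slow replicate (for example, the 2.41 s reading) from shifting the reported value.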

All measurements were conducted on a single, standardized computer workstation to eliminate hardware-induced variability. The specifications were: 11th Gen Intel(R) Core(TM) i7-1165G7 @ 2.80 GHz CPU, 16 GB RAM, and the Windows 10 operating system. All interactions with the AI models (ChatGPT-4o and DeepSeek) were performed using the Google Chrome browser (version 141.0.7390.66).

Accuracy and consistency were evaluated following the method of Arılı Öztürk16. Accuracy for each model was calculated as the percentage of correct responses across nine repetitions per question, with a 95% confidence interval calculated using the binomial Wald method. Consistency was calculated as the ratio of the most frequently repeated answer to the total number of repetitions for each question. Group-level consistency was determined by calculating the mean and standard deviation of consistency values across all questions within each thematic group.
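A minimal sketch of these three quantities is given below, assuming answers are stored as one list of nine letters per question. The answer lists and answer key are hypothetical, the function names are placeholders, and the clipping of the Wald interval to the range 0 to 1 is a convenience of this sketch rather than something stated in the text.

```python
from collections import Counter
from math import sqrt
from statistics import mean, stdev


def accuracy_with_wald_ci(n_correct, n_total, z=1.96):
    """Proportion correct with a Wald interval: p +/- z * sqrt(p(1-p)/n)."""
    p = n_correct / n_total
    half_width = z * sqrt(p * (1 - p) / n_total)
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


def consistency(answers):
    """Share of repetitions that gave the most frequently repeated answer."""
    top_count = Counter(answers).most_common(1)[0][1]
    return top_count / len(answers)


def group_consistency(answers_by_question):
    """Mean and standard deviation of per-question consistency in a group."""
    values = [consistency(a) for a in answers_by_question.values()]
    return mean(values), stdev(values)


# Hypothetical answers (nine repetitions per question) and answer key.
answers_by_question = {
    "Q01": list("BBBBBBBBB"),
    "Q02": list("BBBBBBBCC"),
    "Q03": list("AAAAADDDD"),
}
answer_key = {"Q01": "B", "Q02": "B", "Q03": "D"}

n_correct = sum(
    answer == answer_key[q]
    for q, answers in answers_by_question.items()
    for answer in answers
)
n_total = sum(len(a) for a in answers_by_question.values())

print(accuracy_with_wald_ci(n_correct, n_total))
print(group_consistency(answers_by_question))
```

Note that consistency and accuracy are separate: in the hypothetical "Q03", the model repeats "A" most often (consistency 5/9) even though the keyed answer is "D".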

Statistical analysis

Data were analysed with IBM SPSS Statistics 22 (SPSS Inc., Chicago, IL, USA) and R statistical software (R Core Team, Vienna, Austria). The assumption of normality was evaluated with the Shapiro–Wilk test. The independent-samples t-test was used for group comparisons, with Cohen’s d calculated to estimate effect sizes. For variables not following a normal distribution, comparisons were performed with a three-way robust ANOVA and the Kruskal–Wallis test, with the Mann–Whitney U test used for pairwise comparisons. The intraclass correlation coefficient (ICC) was computed to evaluate measurement consistency. Categorical data were examined with Pearson’s chi-square test, Yates’ continuity correction, the Fisher–Freeman–Halton test, and Fisher’s exact test. In cases requiring multiple comparisons, the Bonferroni-adjusted Z-test was applied. Confidence intervals were determined by the binomial Wald method. Statistical significance was set at p < 0.05.
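For readers unfamiliar with the categorical tests listed above, the following is a minimal standard-library sketch of Pearson’s chi-square test for a 2 × 2 table (correct/incorrect by model), with optional Yates’ continuity correction. It is not the SPSS or R procedure used in the study, the function name is a placeholder, and the counts are hypothetical.

```python
from math import erfc, sqrt


def chi_square_2x2(a, b, c, d, yates=False):
    """Pearson chi-square for the table [[a, b], [c, d]] with 1 df.

    a, b: correct / incorrect counts for model 1
    c, d: correct / incorrect counts for model 2
    """
    n = a + b + c + d
    diff = abs(a * d - b * c)
    if yates:
        # Yates' continuity correction shrinks the difference by n / 2.
        diff = max(0.0, diff - n / 2)
    chi2 = n * diff ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # Upper-tail probability of a chi-square variable with 1 df.
    p_value = erfc(sqrt(chi2 / 2))
    return chi2, p_value


# Hypothetical counts, not data from the study.
print(chi_square_2x2(30, 10, 20, 20))
print(chi_square_2x2(30, 10, 20, 20, yates=True))
```

The corrected statistic is always the smaller of the two, so the Yates variant gives the more conservative p-value; with small expected cell counts, Fisher’s exact test would be the usual alternative.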

Results

The Shapiro–Wilk test indicated that the data in all groups followed a normal distribution (p > 0.05), satisfying the assumption of normality for parametric analysis. Regardless of the question groups and the times the questions were asked, the overall accuracy rate of ChatGPT-4o was 90.4% for English and 91.3% for Chinese, while that of DeepSeek was 88.0% for English and 83.6% for Chinese. In the English version, no statistically significant difference was observed between the two models (p = 0.247, Cohen’s d = 0.077), whereas in the Chinese version, ChatGPT-4o demonstrated a significantly higher overall accuracy rate compared to DeepSeek (p < 0.001), though with a small effect size (Cohen’s d = 0.234).
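The text does not state how Cohen’s d was computed for correct/incorrect outcomes. One plausible reading, offered here only as an assumption, is that each response is coded 0 or 1 and d is the difference in proportions divided by the pooled standard deviation for equal group sizes. The function name is hypothetical, and the inputs are the rounded percentages reported above.

```python
from math import sqrt


def cohens_d_binary(p1: float, p2: float) -> float:
    """Cohen's d for two equal-sized groups of 0/1 outcomes.

    Uses the variance p * (1 - p) of a 0/1 variable in each group
    and the square root of the average variance as the pooled SD.
    """
    pooled_sd = sqrt((p1 * (1 - p1) + p2 * (1 - p2)) / 2)
    return (p1 - p2) / pooled_sd


# Overall accuracy rates as reported (ChatGPT-4o vs. DeepSeek).
d_english = cohens_d_binary(0.904, 0.880)
d_chinese = cohens_d_binary(0.913, 0.836)
print(round(d_english, 3), round(d_chinese, 3))
```

Under this assumption the sketch returns values of about 0.08 for English and 0.23 for Chinese, in line with the small effect sizes reported; small deviations from published values can arise from rounding of the percentages or from a sample (n − 1) variance.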

Subgroup analyses revealed notable performance variations across different question groups. In Group A, ChatGPT-4o significantly outperformed DeepSeek in both English (82.2% vs. 50.0%, p < 0.001, Cohen’s d = 0.720) and Chinese versions (83.3% vs. 55.6%, p < 0.001, Cohen’s d = 0.629). In Group C, DeepSeek achieved perfect accuracy in English (100.0% vs. 90.0%, p = 0.002, Cohen’s d = 0.469), while maintaining superior performance in Chinese (98.9% vs. 93.3%, p = 0.055). Significant differences were also observed in Chinese versions of Group D (100.0% vs. 93.3%, p = 0.013, Cohen’s d = 0.373) and Group E (100.0% vs. 90.0%, p = 0.002, Cohen’s d = 0.465), where ChatGPT-4o demonstrated higher accuracy. No significant differences were found in Group B for either language version (p > 0.05).

Regardless of the question groups and the times when the questions were asked, the effect of language on accuracy rates was similar for ChatGPT-4o and DeepSeek overall (p = 0.643, Cohen’s d = 0.031 for ChatGPT-4o; p = 0.056, Cohen’s d = 0.127 for DeepSeek; Table 3). Comparing different question groups for both AIs, no significant differences in accuracy between English and Chinese versions were observed for ChatGPT-4o across all groups (p > 0.05; Table 3), whereas for DeepSeek, significant differences were identified in Group D (p = 0.013, Cohen’s d = 0.374) and Group E (p = 0.002, Cohen’s d = 0.466; Table 3).

The effect of different time points during the day (morning, noon, and evening) on accuracy was similar for both AIs, with no significant differences observed in either the English or Chinese versions (p > 0.05 for all comparisons). Similarly, accuracy across different days was stable for both models in both language versions, with no significant differences detected (p > 0.05 for all comparisons).

Regardless of the question groups and the times when the questions were asked, the consistency of ChatGPT-4o and DeepSeek was similar (p = 1.000 for English; p = 0.412 for Chinese; Table 4), with both models demonstrating excellent inter-rater reliability (ICC = 0.982 for ChatGPT-4o and 1.000 for DeepSeek in English; ICC = 0.984 for ChatGPT-4o and 0.978 for DeepSeek in Chinese). Comparing different question groups for both AIs, the consistency rates were also similar (ChatGPT-4o: p = 0.086 for English, p = 0.574 for Chinese; DeepSeek: p = 1.000 for English, p = 0.437 for Chinese; Table 4). Comparing the consistency between different question groups for the two AI models, the rates remained comparable (p > 0.05 for all comparisons; Table 4). The high ICC values across all groups indicate outstanding measurement consistency for both models in their response patterns. In addition, a heatmap was generated to visualize the response frequency distributions of ChatGPT-4o and DeepSeek across different thematic groups (Fig. 2). The visualization depicts the consistency with which each model selected the same answer choice for identical questions across all testing sessions. The color gradient represents the frequency of consistent responses, with blue indicating low consistency and red indicating high consistency. Analysis of the heatmap reveals distinct response patterns between the two models, with ChatGPT-4o demonstrating more uniform consistency across groups while DeepSeek showed greater variability, exhibiting high consistency in complex decision-making domains but lower consistency in basic knowledge areas.

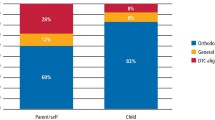

Furthermore, the response time for ChatGPT-4o was significantly shorter than that for DeepSeek in both English and Chinese versions of the questions (p < 0.001 for both languages; Fig. 3). Specifically, the mean response times for ChatGPT-4o were 1.974 ± 0.404 s (English) and 1.902 ± 0.396 s (Chinese), while those for DeepSeek were 5.149 ± 0.712 s (English) and 5.292 ± 1.476 s (Chinese).

Discussion

In dentistry, AI applications are increasingly being explored for clinical decision support, patient education, and dental student training17. Large language models such as ChatGPT can complement traditional educational methods by serving as virtual tutors and interactive learning tools16. This study evaluated the accuracy, consistency, and response time of ChatGPT-4o and DeepSeek when answering bilingual multiple-choice questions on orthodontic health literacy. Notably, the comparative analysis reveals marked disparities in the domain-specific performance of ChatGPT-4o and DeepSeek. Overall accuracy differed minimally in the English version (90.4% vs. 88.0%, p = 0.247, Cohen’s d = 0.077) and significantly favored ChatGPT-4o in the Chinese version (91.3% vs. 83.6%, p < 0.001, Cohen’s d = 0.234), but subgroup analyses uncovered more striking variations. A particularly notable finding is DeepSeek’s significant underperformance in Group A (Basic Orthodontic Knowledge), where its accuracy (50.0% in English, 55.6% in Chinese) substantially lagged behind that of ChatGPT-4o (82.2%, 83.3%) with medium-to-large effect sizes (English: p < 0.001, Cohen’s d = 0.720; Chinese: p < 0.001, Cohen’s d = 0.629). The performance dynamics reversed in Group C, where DeepSeek achieved perfect accuracy in English (100.0% vs. 90.0%, p = 0.002, Cohen’s d = 0.469) and maintained strong performance in Chinese (98.9% vs. 93.3%, p = 0.055). However, this superior performance was not consistent across all advanced domains. In the Chinese versions of Groups D and E, ChatGPT-4o significantly outperformed DeepSeek (Group D: 100.0% vs. 93.3%, p = 0.013, Cohen’s d = 0.373; Group E: 100.0% vs. 90.0%, p = 0.002, Cohen’s d = 0.465), suggesting potential limitations in DeepSeek’s consistency when handling complex Chinese medical content. This pattern of struggling with foundational knowledge while demonstrating competence in complex domains suggests potential knowledge fragmentation within DeepSeek’s architecture, aligning with the concept of “capability overhang” where emergent abilities on complex tasks do not guarantee robustness on simpler, foundational ones18.

The wide confidence intervals for DeepSeek in Group A (39.3–60.7% in English) compared with the narrower ranges for ChatGPT-4o (72.7–89.5%), coupled with the medium-to-large effect sizes, further underscore the substantial instability of DeepSeek’s performance on core concepts. This may reflect differences in how the model handles lexical recall versus contextual reasoning. Group A items relied on definition matching, which can be sensitive to prompt phrasing or vocabulary shifts, especially across languages19. In contrast, Groups B and C required reasoning and pattern recognition, which align better with DeepSeek’s architecture focused on multi-step understanding and dense attention20. These findings underscore the need to match task types with model strengths when applying LLMs in health education.

The performance profiles suggest the models may have undergone divergent optimization pathways. ChatGPT-4o demonstrates high-floor performance, maintaining accuracy above 80% across all groups, indicative of robust generalization essential for clinical decision-support tools21. In contrast, DeepSeek exhibits a high-ceiling, low-floor profile, achieving perfect or near-perfect accuracy in Groups C, D, and E (90–100%) while struggling profoundly in Group A. This “all-or-nothing” pattern resembles models trained with emphasis on mastering complex, reasoning-intensive tasks at the potential expense of uniformly consolidating foundational knowledge22. The near-perfect scores in Group C (Treatment Decision-Making Capacity) for both models suggest that structured, scenario-based reasoning represents a shared strength of contemporary LLMs, possibly due to abundant similar formats in their training data.

Language condition did not significantly affect accuracy for ChatGPT-4o across groups, suggesting strong bilingual generalization. In contrast, DeepSeek showed significantly lower accuracy in Group D and Group E in the Chinese version (Table 3), indicating potential limitations in cross-linguistic generalization for complex or context-dependent content. This discrepancy may stem from imbalances in training data distribution or less extensive pretraining in non-English medical corpora. While previous studies have highlighted the multilingual strengths of ChatGPT-based models23, evidence also suggests that non-English performance of newer domestic models such as DeepSeek may be more sensitive to domain complexity and language-specific semantics24. This pattern is consistent with prior cross-lingual healthcare evaluations reporting higher response quality in English than in non-English languages25, and with GPT-4o’s documentation that reports significant improvements on non-English text compared with earlier GPT-4-series variants, which may partially explain the smaller English–Chinese gap for GPT-4o. In contrast, DeepSeek-V2’s public documentation indicates its training data are primarily Chinese and English, with evaluations run in both languages. However, differences in data composition and alignment, alongside the “curse of multilinguality”, are identified as fundamental limitations26. These limitations are amplified when processing care- and follow-up-oriented content, which demands nuanced cultural-pragmatic adaptation, and may thereby explain the pronounced discrepancies in Groups D and E. Such findings highlight the importance of evaluating AI tools not only by overall performance, but also by their ability to maintain cross-linguistic reliability, an essential consideration when applying them to multicultural, multilingual health education environments.

LLMs have been reported to exhibit stable performance across different times of the day and days of testing when assessed in controlled environments27. In this study, no significant influence of either time of day or testing day on model accuracy was observed, supporting these earlier findings. Additionally, the consistency rates between ChatGPT-4o and DeepSeek remained comparable across different groups and languages, further reinforcing the notion of stable AI outputs across diverse contexts. These results align with recent literature on the reproducibility of AI performance in healthcare education28. Such temporal stability is particularly valuable for public health education, where learners often seek information asynchronously; consistent model performance across time enhances the reliability of LLMs as tools for supporting self-guided learning and informed patient engagement.

To ensure standardization and reduce interpretive bias, the models in this study were instructed to respond using only the letter corresponding to the selected answer, without explanation. This “choices-only” approach has been widely adopted in benchmark studies to enable reproducible, accuracy-focused comparisons across models, languages, and content domains16. While explanatory responses are important in real-world health education, evaluating them requires separate rubrics for coherence, clarity, and source reliability, which were beyond the scope of this initial assessment. Prior work has also shown that explanations may introduce stylistic variability or hallucinated logic, complicating direct performance comparisons in early-stage LLM evaluations29.

ChatGPT-4o demonstrated significantly faster response times compared to DeepSeek in both language versions, which is consistent with previous studies highlighting the superior efficiency of GPT-4-based models in interactive educational applications30. In addition, the response time differences between ChatGPT-4o (< 2 s) and DeepSeek (~5 s) reveal distinct architectural approaches (Fig. 3). ChatGPT-4o’s speed advantage stems from its optimized multimodal architecture19, particularly beneficial for time-sensitive applications such as real-time patient communication or mobile-based orthodontic education. DeepSeek’s design prioritizes different objectives: (1) hybrid sparse-dense attention mechanisms for computational efficiency, (2) thorough response generation emphasizing quality over speed, and (3) optimized processing for complex reasoning tasks20. This trade-off makes DeepSeek potentially better suited for learning scenarios that demand detailed explanation and analytical depth, such as answering complex orthodontic case questions or explaining long-term treatment options.

Although efforts were made to control environmental and network conditions during timing assessments, minor confounders such as browser rendering latency, client-side processing, or server workload may have contributed to slight variations. Prior studies have highlighted that latency performance is influenced not only by model inference speed, but also by network transmission delays and user interface overhead31. Future research may employ automated scripts or direct API-level access to improve timing accuracy and eliminate observer bias—an approach aligned with current best practices in LLM benchmarking32.

Prior evaluations in dental education and orthodontics generally report that ChatGPT-class models can perform competitively on curricular or licensing-style content, yet topic-level variability is common and conclusions are often based on single-language, single-session, accuracy-only assessments8. In contrast, the present study contributes a bilingual, expert-validated item set spanning five dimensions of orthodontic health literacy, repeated measurements across days/time windows to characterize stability (reported as percent agreement), and response latency as a usability endpoint, thereby offering a more complete view of model behavior across accuracy, stability, and speed. This design aligns with current reporting guidance that emphasizes transparency and evaluability for clinical AI systems (CONSORT-AI/SPIRIT-AI; DECIDE-AI; TRIPOD + AI/TRIPOD-LLM)21. Recent comparative studies within dentistry/oral medicine that include ChatGPT-4o and DeepSeek tend to be single-shot evaluations on specific domains (e.g., oral pathology cases, dental anatomy, or mixed MCQs) and typically do not examine bilingual performance, time-of-day/day-to-day stability, or latency33. Our stratified analysis therefore complements this literature by revealing model- and language-specific heterogeneity at the group level (A–E), while aggregate performance remained comparable. In practical terms for orthodontic health literacy, these findings map onto patient-facing needs: foundational knowledge (Group A) requires stronger human oversight, whereas structured scenario-based counseling (Group C) appears to be a shared strength, suitable for adjunctive use in education and reinforcement34. In the context of health literacy and patient education, the findings are highly relevant. Comprehension, recall, and application underpin informed consent, adherence, and self-care, making clear bilingual communication and timely responsiveness essential35. By documenting bilingual accuracy, stability across sessions, and latency, the study delivers evidence that is stronger methodologically than single-session work and more usable in real settings8.

Several limitations warrant consideration. First, the Delphi process relied on a panel of five experts from a single institution. Although the panel was composed of senior specialists with substantial clinical and academic experience, and the consensus process was rigorously structured, the relatively small and homogeneous panel may limit the generalizability of the developed item set. Second, the 50-question set, although designed via the Delphi method, may not comprehensively reflect the full spectrum of orthodontic health knowledge relevant to the public11. Third, this study assessed AI-generated responses only in a simulated digital context; no human participants or real-world behavioral metrics were involved36. Thus, the educational impact on actual learning outcomes or decision-making processes was not measured. Fourth, key elements such as explanatory adequacy, source verifiability, and cultural contextualization were not assessed, despite their critical role in ensuring the safe and meaningful application of AI tools in public health education37. Additionally, the lack of a human comparison group limits the contextual understanding of model performance. Future studies should include participants such as laypersons and orthodontic patients to establish benchmarks for accuracy and reliability38. Such comparisons are crucial for evaluating the practical role of AI in supporting oral health literacy among the general public.

While this study focused on the comparative performance of two LLMs in orthodontic health education tasks, its scope was intentionally limited to a pairwise evaluation using a structured but concise question set. This exploratory design was not intended as a comprehensive benchmark, but rather as a foundational assessment. Notably, in contrast to prior dental/health-literacy evaluations that emphasized single-language, single-session accuracy, the present design integrates a bilingual, expert-validated item set, repeated measurements across days/sessions, and latency as a usability endpoint. This combination broadens the evidentiary base from correctness alone to include stability and user-relevant speed, improving interpretability for clinical and educational settings. Building upon this methodological advancement, future research should expand to include larger model cohorts, broader question banks, and multi-dimensional evaluation frameworks encompassing explanation quality, source transparency, and user learning outcomes.

AI holds strong potential as a complementary educational resource in the field of orthodontic health literacy. The findings of this study support the feasibility of applying general-purpose LLMs, such as ChatGPT-4o and DeepSeek, to simulate patient education scenarios across languages. As the accuracy and linguistic adaptability of these systems continue to evolve, they may become increasingly useful in health communication and public dental education.

Conclusion

This study demonstrates that while both ChatGPT-4o and DeepSeek perform well in orthodontic health literacy tasks, ChatGPT-4o significantly outperforms DeepSeek in Chinese accuracy (91.3% vs. 83.6%) and response speed (1.9 s vs. 5.2 s). These findings indicate that while both models are generally competent, ChatGPT-4o’s combination of linguistic advantage and superior efficiency makes it more suitable for clinical applications requiring timely and accurate information delivery.

Data availability

All data generated or analyzed during this study are available from the corresponding author upon reasonable request.

References

Panteli, D. et al. Artificial intelligence in public health: Promises, challenges, and an agenda for policy makers and public health institutions. Lancet Public. Health. 10 (5), e428–e432. https://doi.org/10.1016/S2468-2667(25)00036-2 (2025). Epub 2025 Feb 28. PMID: 40031938; PMCID: PMC12040707.

Ghods, K., Azizi, A., Jafari, A. & Ghods, K. Application of artificial intelligence in clinical dentistry, a comprehensive review of literature. J. Dent. (Shiraz). 24 (4), 356–371 (2023). PMID: 38149231; PMCID: PMC10749440.

Chen, A. et al. Contextualized medication information extraction using transformer-based deep learning architectures. J. Biomed. Inf. 142, 104370. https://doi.org/10.1016/j.jbi.2023.104370 (2023). Epub 2023 Apr 24. PMID: 37100106; PMCID: PMC10980542.

Denecke, K., May, R. & Rivera-Romero, O. Transformer models in healthcare: A survey and thematic analysis of Potentials, shortcomings and risks. J. Med. Syst. 48 (1), 23. https://doi.org/10.1007/s10916-024-02043-5 (2024). PMID: 38367119; PMCID: PMC10874304.

Wu, J., Wang, Z. & Qin, Y. Performance of DeepSeek-R1 and ChatGPT-4o on the Chinese National Medical Licensing Examination: A Comparative Study. J. Med. Syst. 49(1), 74 (2025). https://doi.org/10.1007/s10916-025-02213-z. PMID: 40459679.

McCarlie, V. W. Jr et al. Orthodontic and oral health literacy in adults. PLoS One. 17 (8), e0273328. https://doi.org/10.1371/journal.pone.0273328 (2022). PMID: 35981083; PMCID: PMC9387824.

Baskaradoss, J. K. Relationship between oral health literacy and oral health status. BMC Oral Health. 18 (1), 172. https://doi.org/10.1186/s12903-018-0640-1 (2018). PMID: 30355347; PMCID: PMC6201552.

Kavadella, A., Dias da Silva, M. A., Kaklamanos, E. G., Stamatopoulos, V. & Giannakopoulos, K. Evaluation of chatgpt’s real-life implementation in undergraduate dental education: mixed methods study. JMIR Med. Educ. 10, e51344. https://doi.org/10.2196/51344 (2024). PMID: 38111256; PMCID: PMC10867750.

Hatia, A. et al. Accuracy and completeness of ChatGPT-generated information on interceptive orthodontics: A multicenter collaborative study. J. Clin. Med. 13 (3), 735. https://doi.org/10.3390/jcm13030735 (2024). PMID: 38337430; PMCID: PMC10856539.

Fukuda, H. et al. Evaluating the accuracy and performance of ChatGPT-4o in solving Japanese National Dental Technician Examination. Int. Dent. J. 75 (4), 100847. https://doi.org/10.1016/j.identj.2025.100847 (2025). Epub 2025 Jun 9. PMID: 40494209; PMCID: PMC12178709.

Hasson, F., Keeney, S. & McKenna, H. Research guidelines for the Delphi survey technique. J. Adv. Nurs. 32 (4), 1008–1015 (2000). PMID: 11095242.

Mahler, J. & Stahl, K. Early labour experience questionnaire: Translation and cultural adaptation into German. Women Birth. 36 (6), 511–519. https://doi.org/10.1016/j.wombi.2023.05.003 (2023). Epub 2023 May 12. PMID: 37183137.

Krathwohl, D. R. A revision of Bloom’s taxonomy: An overview. Theory Pract. 41 (4) (2002).

Boulkedid, R., Abdoul, H., Loustau, M., Sibony, O. & Alberti, C. Using and reporting the Delphi method for selecting healthcare quality indicators: A systematic review. PLoS One. 6 (6), e20476. https://doi.org/10.1371/journal.pone.0020476 (2011). Epub 2011 Jun 9. PMID: 21694759; PMCID: PMC3111406.

Muhl, C., Mulligan, K., Bayoumi, I., Ashcroft, R. & Godfrey, C. Establishing internationally accepted conceptual and operational definitions of social prescribing through expert consensus: A Delphi study protocol. Int. J. Integr. Care. 23 (1), 3. https://doi.org/10.5334/ijic.6984 (2023). PMID: 36741971; PMCID: PMC9881447.

Arılı Öztürk, E., Turan Gökduman, C. & Çanakçi, B. C. Evaluation of the performance of ChatGPT-4 and ChatGPT-4o as a learning tool in endodontics. Int. Endod J. 2. https://doi.org/10.1111/iej.14217 (2025 Mar). Epub ahead of print. PMID: 40025853.

Fatima, A. et al. Advancements in dentistry with artificial intelligence: Current clinical applications and future perspectives. Healthc. (Basel). 10 (11), 2188. https://doi.org/10.3390/healthcare10112188 (2022). PMID: 36360529; PMCID: PMC9690084.

Madrid-García, A. et al. Harnessing ChatGPT and GPT-4 for evaluating the rheumatology questions of the Spanish access exam to specialized medical training. Sci. Rep. 13 (1), 22129. https://doi.org/10.1038/s41598-023-49483-6 (2023). PMID: 38092821; PMCID: PMC10719375.

Levin, C., Orkaby, B., Kerner, E. & Saban, M. Can large language models assist with pediatric dosing accuracy? Pediatr Res. 8 (2025). https://doi.org/10.1038/s41390-025-03980-8. Epub ahead of print. PMID: 40057653.

Yadav, B. Generative AI in the era of transformers: Revolutionizing natural Language processing with LLMs. J. Image Process. Intell. Remote Sens. 4 (2), 54–61 (2024).

Liu, X. et al. Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: The CONSORT-AI extension. Nat. Med. 26 (9), 1364–1374. https://doi.org/10.1038/s41591-020-1034-x (2020). Epub 2020 Sep 9. PMID: 32908283; PMCID: PMC7598943.

Perkonigg, M. et al. Dynamic memory to alleviate catastrophic forgetting in continual learning with medical imaging. Nat. Commun. 12 (1), 5678. https://doi.org/10.1038/s41467-021-25858-z (2021). PMID: 34584080; PMCID: PMC8479083.

Qutieshat, A. et al. Comparative analysis of diagnostic accuracy in endodontic assessments: dental students vs. artificial intelligence. Diagnosis (Berl.) 11(3), 259–265 (2024). https://doi.org/10.1515/dx-2024-0034. PMID: 38696271.

Zhu, S., Hu, W., Yang, Z., Yan, J. & Zhang, F. Qwen-2.5 outperforms other large Language models in the Chinese National nursing licensing examination: retrospective Cross-Sectional comparative study. JMIR Med. Inf. 13, e63731. https://doi.org/10.2196/63731 (2025). PMID: 39793017; PMCID: PMC11759905.

Yelpaala, K. et al. The role of data in public health and health innovation: Perspectives on social determinants of health, Community-Based data Approaches, and AI. J. Med. Internet Res. 27, e78794. https://doi.org/10.2196/78794 (2025). PMID: 41060023; PMCID: PMC12505398.

Shaitarova, A., Zaghir, J., Lavelli, A., Krauthammer, M. & Rinaldi, F. Exploring the latest highlights in medical natural Language processing across multiple languages: A survey. Yearb Med. Inf. 32 (1), 230–243. https://doi.org/10.1055/s-0043-1768726 (2023). Epub 2023 Dec 26. PMID: 38147865; PMCID: PMC10751112.

Krishna, S., Bhambra, N., Bleakney, R. & Bhayana, R. Evaluation of reliability, repeatability, robustness, and confidence of GPT-3.5 and GPT-4 on a Radiology Board-style Examination. Radiology. 311(2), e232715 (2024). https://doi.org/10.1148/radiol.232715. PMID: 38771184.

Funk, P. F. et al. ChatGPT’s response consistency: A study on repeated queries of medical examination questions. Eur. J. Investig Health Psychol. Educ. 14 (3), 657–668. https://doi.org/10.3390/ejihpe14030043 (2024). PMID: 38534904; PMCID: PMC10969490.

Lee, J., Park, S., Shin, J. & Cho, B. Analyzing evaluation methods for large Language models in the medical field: A scoping review. BMC Med. Inf. Decis. Mak. 24 (1), 366. https://doi.org/10.1186/s12911-024-02709-7 (2024). Published 2024 Nov 29.

Huh, S. Are chatgpt’s knowledge and interpretation ability comparable to those of medical students in Korea for taking A parasitology examination? A descriptive study. J. Educ. Eval Health Prof. 20, 1. https://doi.org/10.3352/jeehp.2023.20.1 (2023). Epub 2023 Jan 11. PMID: 36627845; PMCID: PMC9905868.

Sun, L. et al. Taming unleashed large Language models with blockchain for massive personalized reliable healthcare. IEEE J. Biomed. Health Inf. 29 (6), 4498–4511. https://doi.org/10.1109/JBHI.2025.3528526 (2025).

Li, M., Sun, J. & Tan, X. Evaluating the effectiveness of large Language models in abstract screening: A comparative analysis. Syst. Rev. 13 (1), 219. https://doi.org/10.1186/s13643-024-02609-x (2024). Published 2024 Aug 21.

Kaygisiz, Ö. F. & Teke, M. T. Can deepseek and ChatGPT be used in the diagnosis of oral pathologies? BMC Oral Health. 25 (1), 638. https://doi.org/10.1186/s12903-025-06034-x (2025). PMID: 40281436; PMCID: PMC12023442.

Kang, E. Y., Fields, H. W., Kiyak, A., Beck, F. M. & Firestone, A. R. Informed consent recall and comprehension in orthodontics: traditional vs improved readability and processability methods. Am. J. Orthod. Dentofacial. Orthop. 136(4), 488.e1-13; discussion 488-9 (2009). https://doi.org/10.1016/j.ajodo.2009.02.018. PMID: 19815144.

Perrenoud, B., Velonaki, V. S., Bodenmann, P. & Ramelet, A. S. The effectiveness of health literacy interventions on the informed consent process of health care users: a systematic review protocol. JBI Datab. Syst. Rev. Implement Rep. 13(10), 82–94 (2015). https://doi.org/10.11124/jbisrir-2015-2304. PMID: 26571285.

Johri, S. et al. An evaluation framework for clinical use of large Language models in patient interaction tasks. Nat. Med. 31 (1), 77–86. https://doi.org/10.1038/s41591-024-03328-5 (2025). Epub 2025 Jan 2. PMID: 39747685.

Mohammad-Rahimi, H. et al. Validity and reliability of artificial intelligence chatbots as public sources of information on endodontics. Int. Endod J. 57 (3), 305–314. https://doi.org/10.1111/iej.14014 (2024). Epub 2023 Dec 20. PMID: 38117284.

Mortensen, M. G., Kiyak, H. A. & Omnell, L. Patient and parent understanding of informed consent in orthodontics. Am. J. Orthod. Dentofacial Orthop. 124(5), 541–550 (2003). https://doi.org/10.1016/s0889-5406(03)00639-5. PMID: 14614422.

Acknowledgements

The authors express their gratitude to the five orthodontic specialists from the Department of Orthodontics, School and Hospital of Stomatology, Wuhan University, for developing the questionnaire using the Delphi method. We also thank the professional bilingual experts for the forward translation (English–Chinese) and the independent expert for the backward translation (Chinese–English).

Funding

This work was supported by the National Natural Science Foundation of China (No. 82271011).

Author information

Contributions

Zhaoxiang Wen designed the study and wrote the main manuscript text. Jiaxin Huang and Keer Yu collected and analyzed the data. Yaqi Li and Zhenhui Wang prepared Tables 1, 2, 3 and 4. Xiaozhu Liao and Biao Li prepared Figs. 1, 2 and 3. Zhendong Tao contributed to the literature review. Hong He supervised the study and provided critical revisions. All authors reviewed and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval and consent to participate

Ethical approval was not required as this was not a study on humans or animals.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wen, Z., Huang, J., Yu, K. et al. Evaluation of ChatGPT-4o and DeepSeek as tools for orthodontic health literacy in public dental education. Sci Rep 16, 494 (2026). https://doi.org/10.1038/s41598-025-30050-0
