Abstract

Cross-dataset modeling of electrocardiogram (ECG) signals faces the dual challenges of domain shift and insufficient interpretability in clinical applications. To address these issues, this study proposes a Transformer-based cross-domain ECG modeling framework that incorporates a Domain-invariant Feature Enhancement Module (DFEM) and an Interpretability-driven Attention Constraint Mechanism (IACM), aiming to improve cross-domain generalization and clinical interpretability simultaneously. Experiments on four widely used arrhythmia databases (MIT-BIH Supraventricular, MIT-BIH Arrhythmia, INCART, and SCD-Holter) show that the proposed method achieves the best performance on all target domains, with accuracy on the MIT-BIH Arrhythmia dataset reaching 0.768 (an improvement of 8.4% over the baseline Transformer) and F1-score on the INCART dataset reaching 0.898 (a gain of 4.7% over the second-best method). Ablation studies further verify the complementary contributions of DFEM and IACM, while feature importance analysis and t-SNE visualization confirm that the model consistently attends to clinically relevant features. These results indicate that the proposed framework can mitigate domain shift without relying on target-domain samples while enhancing interpretability alongside discriminative performance.


Introduction

In recent years, with the rapid advancement of medical artificial intelligence, the electrocardiogram (ECG), the most widely used cardiac electrophysiological signal, has been extensively applied to the automated detection and auxiliary diagnosis of arrhythmias and various heart diseases1,2,3. Traditional approaches primarily rely on manually designed temporal, spectral, and morphological features combined with conventional classifiers such as Support Vector Machines (SVM) and Random Forests. While these methods achieve reasonable success on small-scale datasets, they generalize poorly to complex clinical scenarios. With the rise of deep learning, convolutional neural networks (CNNs), recurrent neural networks (RNNs), and, more recently, Transformer-based architectures have become mainstream, demonstrating clear advantages in large-scale ECG analysis4.

Unlike CNNs and RNNs, which rely on fixed receptive fields or sequential recurrence, Transformers capture global dependencies through self-attention, enabling them to model long-term temporal relationships and inter-lead correlations in ECG signals more effectively. Moreover, their parallel computation and scalability make them suitable for large-scale datasets and real-time clinical deployment. Table 1 provides a concise comparison of these architectures in the context of ECG signal analysis.

However, domain shift and the lack of interpretability still hinder the clinical deployment of intelligent ECG models, highlighting the need for approaches that ensure both cross-domain generalization and clinical interpretability5.

In cross-dataset ECG analysis, differences in acquisition devices, subject populations, and annotation standards often lead to significant distribution discrepancies between the source and target domains, resulting in severe performance degradation on unseen datasets, a problem commonly referred to as “domain shift”. Although existing transfer learning and domain adaptation methods alleviate distribution gaps to some extent, they usually require access to target-domain samples during training, which is often impractical in real-world clinical settings, where target-domain data may be unavailable in advance or unlabeled6,7. To address these challenges, this paper proposes a joint modeling framework for cross-dataset ECG domain generalization and feature interpretability. The framework adopts a Transformer backbone, incorporates a Domain-invariant Feature Enhancement Module (DFEM) to mitigate distribution discrepancies, and introduces an Interpretability-driven Attention Constraint Mechanism (IACM) to encourage the model to focus on clinically relevant ECG features during decision-making. This design enhances both generalization and interpretability.

The main contributions of this work can be summarized as follows:

(1) We propose a novel domain generalization framework tailored for cross-dataset ECG analysis. By leveraging multi-source statistical alignment and cross-domain contrastive learning, the framework effectively alleviates distribution discrepancies across different databases.

(2) We design an attention constraint mechanism guided by prior knowledge of feature importance, which enhances the interpretability of the model and keeps its focus consistent with clinical ECG characteristics.

(3) We conduct extensive experiments on four widely used arrhythmia datasets, and the results show that our method outperforms state-of-the-art domain generalization approaches in accuracy, recall, and F1-score, validating its effectiveness and robustness.

Related work

Cardiac signal analysis approaches

In the early stage of automated electrocardiogram (ECG) signal analysis, research efforts mainly focused on feature-engineering-based machine learning models. Time-domain, frequency-domain, and morphological descriptors were extracted from ECG signals and then fed into conventional classifiers such as Support Vector Machines (SVM) and Random Forests to recognize arrhythmias. For instance, Marzog et al. employed the wavelet scattering transform for feature extraction and combined it with machine learning algorithms to enhance ECG classification performance, significantly improving feature representation ability8. Similarly, Jahangir et al. proposed a hybrid approach integrating handcrafted features with stacked machine learning models, which effectively improved the robustness of arrhythmia classification9. Moreover, Pouriyeh et al. systematically compared multiple traditional algorithms for cardiac disease detection, confirming their effectiveness on small-scale datasets under controlled experimental conditions10.

With the advancement of deep learning, end-to-end ECG analysis based on neural networks has become mainstream. Convolutional Neural Networks (CNN) and Recurrent Neural Networks (RNN) have been widely applied in arrhythmia detection and have demonstrated strong capability in modeling complex temporal patterns11. Ebrahimi et al. reviewed the applications of deep learning methods in ECG classification, highlighting their significant advantages on large-scale data12. Meanwhile, Peimankar et al. proposed the DENS-ECG model, which achieved high-precision ECG segmentation through deep learning13. In recent years, Transformer architectures have also been introduced into ECG analysis. For example, Alghieth proposed DeepECG-Net, which combines the advantages of Transformer and deep neural networks to achieve real-time anomaly detection14, while Alamatsaz et al. designed a lightweight CNN-LSTM hybrid model that maintains low computational complexity without sacrificing detection performance15.

Beyond single-signal modeling, multimodal fusion approaches have increasingly attracted attention for improving the robustness and generalization of cardiac health monitoring. Warnecke et al. proposed a multimodal signal fusion-based in-vehicle heartbeat detection method, which demonstrated strong robustness in complex environments16. Żyliński et al. explored multimodal fusion methods using in-ear wearable devices, combining ECG with other physiological signals to improve heart rate estimation accuracy17. These studies suggest that multimodal fusion not only enhances the accuracy of ECG analysis but also lays the foundation for future real-time health monitoring and edge computing applications.

Domain generalization in biomedical signals

In medical signal analysis, the domain shift problem is one of the core challenges that constrain the generalization ability of models. Due to variations across different data sources, including acquisition devices, subject populations, sampling conditions, and annotation standards, the performance of a trained model often degrades when transferred to new datasets or clinical environments18. In recent years, transfer learning and feature transfer methods have been introduced into medical signal modeling to mitigate inter-database discrepancies and enhance cross-domain adaptability of models19. However, such approaches typically require labeled samples from the target domain, which limits their applicability in real-world clinical settings.

To address the dependency on labeled target-domain samples, researchers have proposed two categories of methods: Domain Adaptation (DA) and Domain Generalization (DG). DA reduces domain discrepancies by aligning the distributions of the source and target domains, but still requires access to target-domain data, often a small amount for fine-tuning20. In contrast, DG methods aim to learn domain-invariant representations that generalize directly to unseen target domains without using any target-domain samples21,22. Recent studies have systematically reviewed DG algorithmic paradigms, including meta-learning, data augmentation, and causal inference, and have highlighted their potential value in both medical imaging and medical signal analysis23.

In practical medical signal applications, cross-domain methods have already begun to demonstrate their advantages. Imtiaz et al. proposed a cross-database and cross-lead ECG classification approach that employed unsupervised domain adaptation to transfer knowledge across databases, although it remained limited by its need for alignment during adaptation24. By contrast, Feng et al. developed a method based on semantic alignment and label propagation, which improved the robustness of cross-domain arrhythmia classification25, while Lee and Shin introduced a cross-database learning framework leveraging two-dimensional heartbeat feature maps, significantly enhancing generalization across multi-source datasets26. These studies show that such methods not only alleviate the domain shift problem but also provide new perspectives for unified modeling of multi-source medical signals and for cross-platform applications.

Interpretability in biomedical signal models

In recent years, deep learning has achieved significant progress in medical signal modeling; however, its “black-box” nature poses a major challenge for clinical deployment. The lack of interpretability in the decision-making process often leads to insufficient trust from physicians and patients in the model’s predictions, thereby hindering its adoption in real-world clinical diagnosis27,28. Consequently, improving interpretability while maintaining high performance has become a critical issue in medical artificial intelligence. Previous studies have pointed out that interpretability not only helps reveal the features the model attends to but also assists in identifying potential biases and inconsistencies, thus enhancing model reliability in high-risk medical scenarios29.

To tackle this problem, researchers have proposed various interpretability approaches, including SHAP based on feature importance decomposition, Grad-CAM based on gradient information, and visualization methods derived from attention weights30,31,32. Applications of these techniques in cardiac signal analysis have also gradually emerged, such as using visualization methods to reveal key features in ECG classification models or employing multi-channel architectures to balance interpretability and predictive performance33,34. Although these attempts have alleviated the “black-box” problem to some extent, limitations remain in complex tasks such as arrhythmia classification, particularly regarding the stability of interpretability results and their generalization across databases. Therefore, further improving the clinical applicability of interpretability methods remains an important direction for future research.

Methods

Problem definition

The core challenge of cross-dataset cardiac signal modeling lies in distribution differences between domains. Let the source domain set be \(\mathscr {D}_s=\{(x_i^s,y_i^s)\}_{i=1}^{N_s}\) and the target domain set be \(\mathscr {D}_t=\{x_j^t\}_{j=1}^{N_t}\), where x represents the cardiac signal input and y the corresponding class label. In this study, the symbol s denotes the source domain and t the target domain. Specifically, the source domain \(\mathscr {D}_s\) refers to fully labeled training data collected from several existing measurement devices or datasets and used for model optimization, while the target domain \(\mathscr {D}_t\) represents new cardiac signal data obtained from other devices, hospitals, or patient populations that are not seen during training. These domains differ in acquisition equipment, sampling rate, sensor calibration, and population distribution, leading to a significant discrepancy between the input distributions \(P_s(x)\) and \(P_t(x)\), i.e., \(P_s(x) \ne P_t(x)\). Therefore, directly transferring the representation function \(f_\theta (x)\) learned on the source domain to the target domain typically degrades performance because of domain shift.

Formally, the domain generalization problem can be defined as: given access to multiple source domains, learn a robust representation mapping \(f_\theta :\mathscr {X}\rightarrow \mathscr {H}\) such that its prediction function \(h(x)=g(f_\theta (x))\) maintains high generalization performance for any unseen target domain distribution \(P_t(x)\). The proposed framework focuses on domain generalization rather than online adaptation, which means that it does not require target-domain data during training but can still generalize effectively to new signals in real-time deployment scenarios. Once the model has been trained on \(\mathscr {D}_s\), it can be directly applied to unseen \(\mathscr {D}_t\) for inference and continuous monitoring, thus supporting near real-time cardiac signal analysis when integrated with device-side streaming inputs.

On the other hand, to improve model interpretability, it is necessary to constrain the representation space and guide the attention mechanism to focus on physiologically meaningful features. Assume that the feature dimension of the cardiac signal is d and that the Transformer encodes the input sequence into a token representation \(Z=\{z_1,\dots ,z_d\}\). Under the standard scaled dot-product attention mechanism, the attention weight matrix is

\[ A = \textrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_h}}\right) \tag{1} \]

where Q and K are the query and key matrices, respectively, \(d_h\) is the dimension of each key vector, and the softmax is applied row-wise. However, if attention relies excessively on noisy or irrelevant features, model stability and interpretability may suffer. To address this issue, we introduce a feature importance constraint that uses a feature importance vector \(w \in \mathbb {R}^d\), obtained via permutation importance, as a regularization term. This encourages the attention distribution to align with the clinically important features indicated by w, formulated as:

where \(\Vert \cdot \Vert _F\) represents the Frobenius norm. This formulation not only defines the cross-domain generalization problem but also incorporates interpretability constraints to enhance the stability and transparency of model representations.
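To make the attention weights and the importance constraint concrete, the plain-Python sketch below computes scaled dot-product attention row by row and a squared Frobenius penalty between the attention matrix and a target built from w. The target matrix is an assumption of this sketch: every row repeats the normalized importance vector, i.e. the outer product of a ones vector with w. The function names are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(Q, K):
    """A = softmax(Q K^T / sqrt(d_h)), applied row-wise; Q and K are lists of rows."""
    d_h = len(K[0])
    scale = math.sqrt(d_h)
    return [softmax([sum(q * k for q, k in zip(q_row, k_row)) / scale for k_row in K])
            for q_row in Q]


def importance_penalty(A, w):
    """Squared Frobenius distance ||A - 1 w^T||_F^2.

    ASSUMPTION: the target matrix repeats the normalised importance vector w in
    every row; this is an illustrative choice, not a formula taken from the paper.
    """
    total = sum(w)
    w_norm = [v / total for v in w]  # importance scores -> distribution over tokens
    return sum((a - t) ** 2 for row in A for a, t in zip(row, w_norm))
```

The penalty is zero only when every query distributes its attention exactly in proportion to w; a weighting coefficient would control how strongly it competes with the task loss.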

Overall model architecture

The overall model architecture is shown in Fig. 1. First, cardiac signal inputs from different source domains are uniformly encoded and fed, via a linear layer and positional encoding, into a Transformer encoder that extracts deep features. Multi-scale convolutions and linear transformations further enhance the representations. To achieve domain-invariant feature modeling, the extracted features are constrained by a contrastive learning module so that same-class samples from different domains lie closer together in feature space. Subsequently, an interpretability-driven attention constraint mechanism uses the feature importance distribution to focus attention on key features, thereby suppressing noise and improving model robustness. Finally, all representations are aggregated and fed into a classifier for cross-dataset cardiac signal recognition and interpretation.

A schematic diagram of the overall model architecture, demonstrating cross-domain cardiac signal feature extraction, domain-invariant representation contrastive learning, and interpretability-driven attention constraint mechanisms. This design combines domain generalization with feature interpretability to achieve robust recognition and classification of cardiac signals across diverse datasets.

Domain-invariant feature enhancement module

To alleviate domain shift in cross-dataset cardiac signal tasks, we design a Domain-invariant Feature Enhancement Module (DFEM) based on the Transformer backbone. The core idea of this module is to combine multi-source feature alignment with a contrastive mechanism so that signals of the same class from different datasets lie closer together in the feature space, thereby improving the model’s generalization to unseen target domains. As illustrated in the overall pipeline, the input signal is first embedded into a high-dimensional representation and then progressively transformed through projection, domain alignment, and contrastive learning to obtain domain-invariant representations.

Specifically, given a sample sequence \(x^{(m)} \in \mathbb {R}^{T \times C}\) from the m-th source domain, where T denotes the temporal length and C the number of channels, we first perform linear embedding with positional encoding to obtain the initial token representation:

\[ Z^{(m)} = \textrm{Embed}\big(x^{(m)}\big) + \textrm{PE}(T) \]

where \(\textrm{Embed}(\cdot )\) denotes a linear mapping and \(\textrm{PE}(T)\) represents temporal positional encoding.
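A minimal sketch of the embedding step, assuming a sinusoidal form for PE(T) (the text only specifies a temporal positional encoding) and a plain linear map whose weights `W_e` and bias `b_e` are hypothetical names. It operates on one sample of shape T × C and returns the T × d token matrix.

```python
import math


def sinusoidal_pe(T, d):
    """T x d sinusoidal positional encoding.

    ASSUMPTION: the sinusoidal form is one common choice of temporal
    positional encoding, used here for illustration.
    """
    pe = []
    for t in range(T):
        row = []
        for i in range(d):
            angle = t / (10000 ** (2 * (i // 2) / d))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe


def embed(x, W_e, b_e):
    """Linear embedding: each time step x_t (length C) -> x_t W_e + b_e (length d)."""
    return [[sum(x_t[c] * W_e[c][j] for c in range(len(x_t))) + b_e[j]
             for j in range(len(b_e))]
            for x_t in x]


def initial_tokens(x, W_e, b_e):
    """Z = Embed(x) + PE(T) for one sample x of shape T x C."""
    E = embed(x, W_e, b_e)
    P = sinusoidal_pe(len(x), len(b_e))
    return [[e + p for e, p in zip(e_row, p_row)] for e_row, p_row in zip(E, P)]
```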

The tokens are then fed into a Transformer encoder to capture sequential dependencies via the self-attention mechanism. Specifically, the self-attention layer applies three parallel linear transformations to the token sequence to generate the query (Q), key (K), and value (V) matrices:

\[ Q = Z^{(m)}W_Q, \quad K = Z^{(m)}W_K, \quad V = Z^{(m)}W_V \]

where \(Z^{(m)} \in \mathbb {R}^{T\times d}\) denotes the token representation of the m-th source domain, and \(W_Q, W_K, W_V \in \mathbb {R}^{d\times d_h}\) are learnable projection matrices that map the input sequence into three latent subspaces of dimension \(d_h\). These matrices are optimized jointly with the rest of the network during training and serve to model pairwise dependencies between different temporal positions in the cardiac signal.

The attention weights are then computed by measuring the similarity between the query and key matrices through the standard scaled dot-product attention formulation (as defined in Eq. 1). In this operation, the similarity between Q and K determines how much each token attends to the others, while the scaling factor \(\sqrt{d_h}\) stabilizes gradients and prevents the softmax from saturating on extreme scores. The resulting attention map A represents the relative importance that each temporal token assigns to all others, thereby capturing long-range temporal dependencies in the ECG sequence. The context-enhanced representation is subsequently obtained as:

\[ H^{(m)} = A V \]

where \(H^{(m)}\) integrates information from all time steps, weighted by their learned attention relevance. In this design, the same parameterization (\(W_Q, W_K, W_V\)) is consistently used throughout both the domain-invariant feature enhancement module and the interpretability-driven attention mechanism, ensuring architectural coherence and shared feature semantics across modules.
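The following sketch strings the projections, the scaled dot-product attention, and the context aggregation together for a single head. It is written in dependency-free Python for readability rather than speed; in practice these steps would be batched tensor operations in the deep learning framework. Function names are illustrative.

```python
import math


def matmul(X, W):
    """Plain-Python matrix product X (n x k) @ W (k x m)."""
    return [[sum(x * W[r][c] for r, x in enumerate(row)) for c in range(len(W[0]))]
            for row in X]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(Z, W_Q, W_K, W_V):
    """Single-head self-attention over T tokens.

    Returns (A, H): A is the T x T attention map and H = A V is the
    context-enhanced representation.
    """
    Q, K, V = matmul(Z, W_Q), matmul(Z, W_K), matmul(Z, W_V)
    d_h = len(W_Q[0])
    A = [softmax([sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_h)
                  for k_row in K])
         for q_row in Q]
    H = matmul(A, V)  # each output row is an attention-weighted mix of value rows
    return A, H
```

With all-zero query weights, every score is equal, the attention becomes uniform, and each row of H is simply the mean of the value rows, which is a convenient sanity check.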

Importantly, the interpretability of the attention mechanism arises from the fact that the learned attention matrix A explicitly shows how strongly each ECG segment is weighted when the representation used for the final decision is formed. By visualizing the normalized attention weights, clinicians can identify which cardiac waveform components the model emphasizes. In addition, when combined with the feature importance constraint introduced in the interpretability-driven attention constraint mechanism, the attention distribution is further regularized to align with clinically relevant signal segments, making the model’s interpretation more transparent and physiologically meaningful. Figure 2 illustrates this process and its integration within the overall framework.

After obtaining intra-domain features, we project them into a shared contrastive space using a projection head:

\[ h^{(m)}_i = \sigma\big(W_p H^{(m)}_i + b_p\big) \]

where \(h^{(m)}_i\) is the projection vector of the i-th sample, and \(\sigma\) denotes a nonlinear activation. Here, \(W_p\) and \(b_p\) represent the learnable weight matrix and bias term of the projection head, respectively. They are trainable parameters that linearly transform the context-enhanced representation \(H^{(m)}_i\) into a lower-dimensional embedding space before contrastive learning. It should be noted that \(W_p\) and \(b_p\) are not inherited from \(H^{(m)}\) but are newly initialized parameters specifically designed to map the high-dimensional domain features into a shared latent space for distribution alignment and similarity computation.
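A minimal sketch of the projection head, assuming ReLU for σ and mean pooling over time to obtain the per-sample vector \(H^{(m)}_i\); neither choice is specified in the text, and the function names are hypothetical. `W_p` is stored as a d_out × d_in matrix so that the product matches the order \(W_p H^{(m)}_i\) in the formula.

```python
def mean_pool(H):
    """Average the T token vectors of one sample into a single vector.

    ASSUMPTION: mean pooling is an illustrative way to obtain H_i^(m).
    """
    T = len(H)
    return [sum(col) / T for col in zip(*H)]


def project(H_i, W_p, b_p):
    """h_i = sigma(W_p H_i + b_p) with sigma = ReLU (an assumed nonlinearity).

    W_p has shape d_out x d_in; b_p has length d_out.
    """
    return [max(0.0, sum(w * x for w, x in zip(w_row, H_i)) + b)
            for w_row, b in zip(W_p, b_p)]
```

For example, pooling the tokens `[[1.0, 3.0], [3.0, 5.0]]` gives `[2.0, 4.0]`, which the head then maps into the lower-dimensional contrastive space.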

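To ensure consistency of representations across domains, we introduce a distribution alignment mechanism. For the m-th domain, we compute the mean vector and covariance matrix of the projected features as:

\[ \mu_m = \frac{1}{N_m}\sum_{i=1}^{N_m} h^{(m)}_i, \qquad \Sigma_m = \frac{1}{N_m}\sum_{i=1}^{N_m}\big(h^{(m)}_i-\mu_m\big)\big(h^{(m)}_i-\mu_m\big)^{\top} \]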

where \(N_m\) is the number of samples in domain m.

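In a multi-source setting, we aim for statistical consistency across domains. For any two domains m and n, we compute their mean and covariance discrepancies as:

\[ \Delta_{\mu} = \big\Vert \mu_m - \mu_n \big\Vert_2^2, \qquad \Delta_{\Sigma} = \big\Vert \Sigma_m - \Sigma_n \big\Vert_F^2 \]

where \(\Vert \cdot \Vert _2\) denotes the Euclidean norm and \(\Vert \cdot \Vert _F\) the Frobenius norm. Minimizing \(\Delta _{\mu }\) and \(\Delta _{\Sigma }\) aligns the first- and second-order statistics of the feature distributions across domains.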
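The per-domain statistics and pairwise discrepancies can be sketched as follows. This sketch assumes the 1/N_m (biased) covariance estimator and squared norms for both discrepancies; function names are illustrative.

```python
def domain_stats(features):
    """Mean vector and biased (1/N_m) covariance matrix of one domain's features."""
    n, d = len(features), len(features[0])
    mu = [sum(f[k] for f in features) / n for k in range(d)]
    cov = [[sum((f[a] - mu[a]) * (f[b] - mu[b]) for f in features) / n
            for b in range(d)]
           for a in range(d)]
    return mu, cov


def discrepancies(feat_m, feat_n):
    """Return (Delta_mu, Delta_Sigma) between two domains.

    ASSUMPTION: squared Euclidean distance of the means and squared
    Frobenius distance of the covariances.
    """
    mu_m, cov_m = domain_stats(feat_m)
    mu_n, cov_n = domain_stats(feat_n)
    d_mu = sum((a - b) ** 2 for a, b in zip(mu_m, mu_n))
    d_sigma = sum((a - b) ** 2
                  for row_m, row_n in zip(cov_m, cov_n)
                  for a, b in zip(row_m, row_n))
    return d_mu, d_sigma
```

A pure translation of one domain changes only \(\Delta_{\mu}\), whereas a change in spread shows up in \(\Delta_{\Sigma}\), which is why both terms are needed.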

Furthermore, DFEM incorporates a contrastive learning mechanism. In this setting, samples from different domains but belonging to the same class are treated as positive pairs, while samples of different classes are treated as negative pairs. For a pair \(\{h_i, h_j\}\), if the two samples share the same label but originate from different domains, they are considered positive; otherwise, they are negative. We compute their similarity as:

\[ \textrm{sim}(h_i, h_j) = \frac{h_i^{\top} h_j}{\Vert h_i\Vert_2 \, \Vert h_j\Vert_2} \]
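The pair rule and the similarity measure above translate directly into code. The sketch follows the rule literally: a pair is positive only when the labels match and the domains differ, so same-class pairs from the same domain count as negatives. The domain strings in the usage are illustrative labels, and zero vectors are not handled.

```python
import math


def cosine_sim(h_i, h_j):
    """Cosine similarity between two projection vectors (assumed non-zero)."""
    dot = sum(a * b for a, b in zip(h_i, h_j))
    return dot / (math.sqrt(sum(a * a for a in h_i)) * math.sqrt(sum(b * b for b in h_j)))


def pair_type(label_i, domain_i, label_j, domain_j):
    """Positive only for same-class pairs drawn from different domains."""
    return "positive" if label_i == label_j and domain_i != domain_j else "negative"
```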

In summary, the Domain-invariant Feature Enhancement Module achieves global consistency through cross-domain statistical alignment, and further enforces intra-class compactness across domains via contrastive learning. Together, these mechanisms enable the model to learn highly generalizable representations using only source-domain data, thereby improving its robustness to domain shift in unseen target datasets.

Interpretability-driven attention constraint mechanism

In cross-dataset cardiac signal domain generalization scenarios, the model is required not only to exhibit robust discriminative ability but also to provide interpretability during the decision-making process, thereby clarifying which critical features the model relies on for classification. To this end, we introduce an Interpretability-driven Attention Constraint Mechanism (IACM) within the Transformer framework. By explicitly incorporating prior information and mask control into the attention distribution, the model is guided to focus on clinically or domain-relevant regions, while simultaneously improving cross-domain generalization. The module architecture is shown in Fig. 3.

Given the input sequence representation \(Z \in \mathbb {R}^{T \times d}\), where T denotes the temporal length and d the feature dimension, each attention head first projects the sequence into query, key, and value matrices:

\[ Q = ZW_Q, \quad K = ZW_K, \quad V = ZW_V \]

where \(W_Q, W_K, W_V \in \mathbb {R}^{d \times d_h}\) are the projection matrices, and \(d_h\) is the dimension of a single head.

The attention weight matrix is then obtained following the standard scaled dot-product attention formulation (as defined in Eq. 1), where \(A \in \mathbb {R}^{T \times T}\), and each element \(A_{i,j}\) indicates the dependency strength between the i-th and j-th tokens in the sequence.

To guide the attention distribution toward more meaningful focus, we incorporate a mask matrix \(M \in \{0,1\}^{T \times T}\), which is derived through a gradient-based interpretability method. Specifically, the contribution of each temporal position in the input sequence Z to the final prediction is quantified using gradient attribution, and highly influential positions are preserved to form the binary mask. The overall computation process is summarized in Algorithm 1.
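The sketch below is one plausible instantiation of the gradient-based mask, not a transcription of Algorithm 1. It assumes that gradient-times-input magnitudes summed over features serve as position scores, that the top-k positions are kept (k is a hypothetical hyperparameter), and that every query may attend only to those kept key positions. The gradients are passed in as an argument because computing them requires an autodiff framework.

```python
def gradient_mask(Z, grads, k):
    """Binary T x T mask from gradient attributions (illustrative assumptions).

    Z:     T x d token representation.
    grads: d(prediction)/dZ with the same T x d shape as Z.
    k:     number of positions to keep (hypothetical hyperparameter).
    """
    T = len(Z)
    # Per-position score: |gradient * input| summed over the feature axis.
    scores = [sum(abs(g * z) for g, z in zip(g_row, z_row))
              for g_row, z_row in zip(grads, Z)]
    keep = set(sorted(range(T), key=lambda t: scores[t], reverse=True)[:k])
    # Column mask: each query row keeps only the influential key positions.
    return [[1 if j in keep else 0 for j in range(T)] for _ in range(T)]
```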

The resulting mask matrix M highlights temporal segments with strong gradient responses, indicating the regions that contribute most to the model’s decision. The masked attention distribution is then obtained as:

\[ \tilde{A} = A \odot M \]

where \(\odot\) denotes element-wise multiplication, and \(\tilde{A}\) is the constrained attention matrix emphasizing clinically relevant tokens. Because masking breaks the row-wise normalization of A, the masked attention is renormalized:

\[ \hat{A}_{i,j} = \frac{\tilde{A}_{i,j}}{\sum_{k=1}^{T}\tilde{A}_{i,k}} \]

thereby producing a normalized attention distribution \(\hat{A}\) in which each row sums to one. Based on the normalized attention, the sequence features are updated as:

\[ H = \hat{A} V \]

where \(H \in \mathbb {R}^{T \times d_h}\) represents the context-enhanced representation guided by gradient-derived interpretability constraints.
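The masking, renormalization, and update steps can be sketched in a few lines. The small `eps` term is our addition to avoid division by zero when a mask removes every nonzero entry of a row; it is not part of the formulation described above.

```python
def masked_attention(A, M, V, eps=1e-12):
    """Return (A_hat, H) for A_tilde = A * M (element-wise), row-normalised.

    A: T x T attention weights, M: T x T binary mask, V: T x d_h values.
    eps (our addition) guards against rows whose kept entries are all zero.
    """
    A_tilde = [[a * m for a, m in zip(a_row, m_row)] for a_row, m_row in zip(A, M)]
    A_hat = [[a / (sum(row) + eps) for a in row] for row in A_tilde]
    H = [[sum(A_hat[i][t] * V[t][c] for t in range(len(V))) for c in range(len(V[0]))]
         for i in range(len(A_hat))]
    return A_hat, H
```

In the usage below, masking out the first key position moves its attention mass onto the remaining positions in proportion to their original weights.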

To further strengthen interpretability, we design an attention alignment strategy based on feature importance. Let \(w \in \mathbb {R}^T\) denote the feature importance vector obtained from external interpretability methods. We expect the mean attention distribution to align with w:

\[ \mathscr{L}_{\textrm{align}} = \textrm{Align}(\bar{a}, w), \qquad \bar{a} = \frac{1}{T}\sum_{i=1}^{T}\hat{A}_{i,:} \]

where \(\bar{a}\) is the average attention distribution, and \(\textrm{Align}(\cdot )\) measures the consistency between the learned attention and prior feature importance.
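Because Align(·) is left generic, the sketch below instantiates it as the Kullback–Leibler divergence KL(w ‖ ā) between the normalized importance vector and the mean attention. This choice, the `eps` smoothing term, and the function names are assumptions for illustration only.

```python
import math


def mean_attention(A_hat):
    """a_bar: average of the T rows of the normalised attention matrix."""
    T = len(A_hat)
    return [sum(col) / T for col in zip(*A_hat)]


def align_kl(a_bar, w, eps=1e-12):
    """Align(a_bar, w) instantiated as KL(w_norm || a_bar).

    ASSUMPTION: KL divergence is one possible consistency measure;
    positions with zero importance contribute nothing.
    """
    total = sum(w)
    w_norm = [v / total for v in w]
    return sum(p * math.log((p + eps) / (q + eps))
               for p, q in zip(w_norm, a_bar) if p > 0)
```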

On the other hand, we also encourage semantic sparsity in the attention distribution to emphasize the most critical signal segments. To achieve this, a sparsity operator is introduced:

which promotes concentration of attention weights on a limited number of key positions.
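As an assumed stand-in for the sparsity operator, the sketch below computes the mean row entropy of the normalized attention matrix. Entropy penalties are one common way to promote concentrated attention: the value is smaller when each row places its mass on fewer positions. It is not presented as the paper’s operator.

```python
import math


def attention_entropy(A_hat):
    """Mean Shannon entropy of the rows of an attention matrix.

    Lower values indicate sparser (more concentrated) attention;
    zero-probability entries contribute nothing to the entropy.
    """
    total = 0.0
    for row in A_hat:
        total -= sum(p * math.log(p) for p in row if p > 0)
    return total / len(A_hat)
```

A one-hot row has entropy 0, while a uniform row over n positions reaches the maximum log n.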

Finally, the outputs of the different heads are concatenated and projected:

\[ H' = \textrm{Concat}(H_1, \dots, H_h)\, W_O \]

where h is the number of attention heads, \(W_O\) is the output projection matrix, and \(H'\) represents the interpretability-constrained multi-head attention output.
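Concatenation followed by the output projection can be sketched as below. The head outputs and \(W_O\) in the usage are toy values, and the function names are illustrative.

```python
def concat_heads(heads):
    """Concatenate per-head outputs H_1..H_h (each T x d_h) along the feature axis."""
    return [sum((head[t] for head in heads), []) for t in range(len(heads[0]))]


def multi_head_output(heads, W_O):
    """H' = Concat(H_1, ..., H_h) W_O, with W_O of shape (h * d_h) x d."""
    C = concat_heads(heads)
    return [[sum(c * W_O[r][k] for r, c in enumerate(row)) for k in range(len(W_O[0]))]
            for row in C]
```

For two heads with outputs `[[1.0], [2.0]]` and `[[3.0], [4.0]]`, the concatenated matrix is `[[1.0, 3.0], [2.0, 4.0]]` before projection.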

Through this mechanism, the model not only captures temporal dependencies in cardiac signals but also actively focuses on clinically relevant key segments while suppressing irrelevant or noisy regions. In cross-domain scenarios, such interpretability-driven attention encourages consistent focus across datasets, supporting both generalization and interpretability. Moreover, the mechanism promotes feature alignment while exposing the model’s decision process through attention visualization, which provides support for subsequent medical applications.

Training objectives

To achieve robust modeling and domain generalization of cross-dataset cardiac signals, this study combines three loss functions during training: a task supervision loss, a domain-invariant feature alignment loss, and a contrastive learning loss. These components work together in the overall optimization, ensuring accurate performance on the source domains while enhancing cross-domain representational consistency and discriminative capability. Theoretically, the combination can be interpreted as a multi-objective optimization problem: the task supervision term performs empirical risk minimization on labeled data, the domain alignment term minimizes distributional divergence between heterogeneous domains, and the contrastive term maximizes a lower bound on the mutual information between semantically consistent representations. This joint formulation follows the principle of structural risk minimization, aiming at both discriminative accuracy and generalization stability under domain shift.

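To ensure that the model acquires basic classification (or regression) ability, we introduce a standard task supervision loss. For classification tasks, given the predicted probability distribution \(\hat{y}_i\) of sample \(x_i\) with ground-truth label \(y_i\), the supervision loss takes the form of cross-entropy, with \(y_{i,c}\) and \(\hat{y}_{i,c}\) denoting the c-th components of the one-hot label and of the predicted distribution:

\[ \mathscr{L}_{\textrm{task}} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{C} y_{i,c}\log \hat{y}_{i,c} \]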

where C denotes the number of classes and N is the total number of samples. This term ensures that the model learns basic discriminative capability on the source domains.
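With one-hot labels, the inner sum over classes keeps only the true-class term, so the loss reduces to the mean negative log-probability of the correct class. A minimal sketch, with labels given as integer class indices (an encoding choice of this sketch, e.g. 0 to 4 for N, S, V, F, Q):

```python
import math


def cross_entropy(probs, labels):
    """L_task = -(1/N) * sum_i log p_i[y_i] for one-hot targets.

    probs:  N rows of predicted class probabilities.
    labels: N integer class indices.
    """
    return -sum(math.log(p[y]) for p, y in zip(probs, labels)) / len(labels)
```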

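In cross-dataset scenarios, significant distribution shifts among source domains may degrade performance on the target domain. To mitigate this, we adopt the Maximum Mean Discrepancy (MMD) to align the feature distributions of different domains. Let the feature representations of the m-th source domain be \(\{h_i^{(m)}\}_{i=1}^{N_m}\) and those of the n-th domain be \(\{h_j^{(n)}\}_{j=1}^{N_n}\); the squared MMD between them is defined as:

\[ \textrm{MMD}^2\big(\mathscr{D}_m, \mathscr{D}_n\big) = \Big\Vert \frac{1}{N_m}\sum_{i=1}^{N_m}\phi\big(h_i^{(m)}\big) - \frac{1}{N_n}\sum_{j=1}^{N_n}\phi\big(h_j^{(n)}\big)\Big\Vert_{\mathscr{H}}^2 \]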

where \(\phi (\cdot )\) maps features into a Reproducing Kernel Hilbert Space (RKHS), and \(\Vert \cdot \Vert _{\mathscr {H}}\) is the norm in this space. Intuitively, MMD measures the distance between the mean embeddings of two domains, so minimizing it enforces alignment in the shared space. Summing over all pairs of source domains, the domain-invariant feature alignment loss is formulated as:

\[ \mathscr{L}_{\textrm{dom}} = \sum_{m<n} \textrm{MMD}^2\big(\mathscr{D}_m, \mathscr{D}_n\big) \]

This loss encourages the features learned by the model to remain consistent across domains, which is intended to improve generalization to the target domain. Theoretically, minimizing MMD between domains reduces the divergence term that appears in upper bounds on the target-domain error, which motivates this loss, although it does not by itself guarantee generalization to an arbitrary unseen target domain.
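The MMD can be estimated without evaluating φ explicitly by using the kernel trick, which expands the squared RKHS norm into averages of kernel values. The sketch below assumes a Gaussian (RBF) kernel with a hypothetical bandwidth parameter `gamma` and uses the biased empirical estimator; the kernel choice is an assumption, not something stated in the text.

```python
import math
from itertools import combinations


def rbf(x, y, gamma=1.0):
    """Gaussian kernel k(x, y) = exp(-gamma * ||x - y||^2); gamma is a hypothetical default."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))


def mmd2(X, Y, gamma=1.0):
    """Biased estimate of squared MMD via the kernel trick:
    ||mean phi(X) - mean phi(Y)||^2 = E k(x, x') + E k(y, y') - 2 E k(x, y).
    """
    kxx = sum(rbf(a, b, gamma) for a in X for b in X) / len(X) ** 2
    kyy = sum(rbf(a, b, gamma) for a in Y for b in Y) / len(Y) ** 2
    kxy = sum(rbf(a, b, gamma) for a in X for b in Y) / (len(X) * len(Y))
    return kxx + kyy - 2 * kxy


def domain_alignment_loss(domains, gamma=1.0):
    """L_dom: sum of squared MMD over all unordered pairs of source domains."""
    return sum(mmd2(X, Y, gamma) for X, Y in combinations(domains, 2))
```

With three source domains in the leave-one-dataset-out setting, `combinations` yields the three domain pairs that enter the sum.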

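To further enhance intra-class compactness and inter-class separability, we introduce a contrastive learning mechanism in the projection space. Assume a batch contains N samples, where \(h_i\) denotes the projection vector of the i-th sample and P(i) is its set of positive samples. The contrastive loss is defined as:

\[ \mathscr{L}_{\textrm{con}} = -\frac{1}{N}\sum_{i=1}^{N}\frac{1}{|P(i)|}\sum_{p\in P(i)}\log\frac{\exp\big(\textrm{sim}(h_i,h_p)/\tau\big)}{\sum_{a\neq i}\exp\big(\textrm{sim}(h_i,h_a)/\tau\big)} \]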

where \(\textrm{sim}(\cdot ,\cdot )\) represents cosine similarity and \(\tau\) is a temperature coefficient. From an information-theoretic perspective, this loss pulls the representations of positive pairs together and pushes negatives apart; minimizing it maximizes a lower bound on the mutual information between the representations of positive pairs.
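A plain-Python sketch of a supervised contrastive loss of this form, with positives supplied per anchor (for example by the cross-domain pair rule of the DFEM). The temperature default is hypothetical, and anchors without any positive are skipped, so the average runs over anchors that have at least one positive.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def contrastive_loss(H, positives, tau=0.1):
    """SupCon-style loss averaged over anchors that have positives.

    H:         list of projection vectors h_i.
    positives: positives[i] lists the indices P(i) of anchor i's positives.
    tau:       temperature (hypothetical default).
    """
    losses = []
    for i, h_i in enumerate(H):
        if not positives[i]:
            continue  # no positive pair for this anchor in the batch
        denom = sum(math.exp(cosine(h_i, h_a) / tau) for a, h_a in enumerate(H) if a != i)
        losses.append(-sum(math.log(math.exp(cosine(h_i, H[p]) / tau) / denom)
                           for p in positives[i]) / len(positives[i]))
    return sum(losses) / len(losses)
```

In the usage below, samples 0 and 1 share a class but come from different domains, as do samples 2 and 3; the loss is lower when each positive pair is close in the projection space.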

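In summary, the training objective of this study consists of three parts, corresponding to task supervision, domain-invariant alignment, and contrastive regularization. The overall optimization objective is:

\[ \mathscr{L} = \mathscr{L}_{\textrm{task}} + \lambda_1 \mathscr{L}_{\textrm{dom}} + \lambda_2 \mathscr{L}_{\textrm{con}} \]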

where \(\lambda _1\) and \(\lambda _2\) are balancing coefficients. This joint optimization provides a theoretically grounded integration of empirical risk minimization, distributional alignment, and information maximization. Through this unified learning paradigm, the model simultaneously achieves strong discriminative ability on source domains, cross-domain consistency, and enhanced inter-class separability, thereby realizing robust domain-generalized cardiac signal modeling.
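As a worked illustration, the overall objective is a weighted sum of the three terms. The λ defaults below are placeholders, since the balancing coefficients are not reported in this section.

```python
def total_loss(l_task, l_dom, l_con, lambda_1=0.1, lambda_2=0.1):
    """L = L_task + lambda_1 * L_dom + lambda_2 * L_con.

    The default lambda values are hypothetical placeholders.
    """
    return l_task + lambda_1 * l_dom + lambda_2 * l_con
```

For instance, with \(\lambda_1 = 0.5\) and \(\lambda_2 = 0.25\), component losses of 1.0, 2.0, and 4.0 contribute equally and sum to 3.0.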

Dataset and experimental setup

Dataset

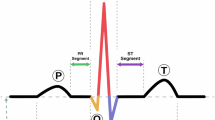

In this study, we employ four arrhythmia datasets from PhysioNet, namely the MIT-BIH Supraventricular Arrhythmia Database, the MIT-BIH Arrhythmia Database, the St Petersburg INCART 12-lead Arrhythmia Database, and the Sudden Cardiac Death Holter Database; for all of them we use features extracted from two leads (II and V5). Each dataset contains five heartbeat categories: Normal (N), Supraventricular Ectopic Beat (S), Ventricular Ectopic Beat (V), Fusion Beat (F), and Unknown Beat (Q). The record field denotes the subject identifier, the type field indicates the class label, and the remaining 34 columns contain the feature data (17 features per lead). These features include RR interval metrics (average RR, RR, and Post RR), cardiac cycle characteristics (PQ, QT, and ST intervals and QRS duration), ECG amplitude features (peak values of the P, T, R, S, and Q waves), and morphological descriptors (five-dimensional QRS complex morphology features). Importantly, all datasets share the same 34-dimensional feature layout, which ensures comparability for machine learning modeling and evaluation across data sources.
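The feature layout described above can be checked arithmetically: 3 RR metrics + 4 cycle intervals + 5 wave amplitudes + 5 QRS morphology descriptors give 17 features per lead, and 34 over the two leads. The sketch below encodes that grouping. All column names are hypothetical, and it assumes that the lead II features precede the V5 features in each row.

```python
# Hypothetical per-lead feature names; the actual column names may differ.
FEATURE_GROUPS = {
    "rr_interval": ["avg_rr", "rr", "post_rr"],
    "cycle_interval": ["pq_interval", "qt_interval", "st_interval", "qrs_duration"],
    "amplitude": ["p_peak", "t_peak", "r_peak", "s_peak", "q_peak"],
    "qrs_morphology": [f"qrs_morph{k}" for k in range(5)],
}
LEADS = ["II", "V5"]
CLASSES = ["N", "S", "V", "F", "Q"]
PER_LEAD = sum(len(group) for group in FEATURE_GROUPS.values())  # 17


def feature_columns():
    """Return the 34 feature column names (17 per lead x 2 leads)."""
    per_lead = [name for group in FEATURE_GROUPS.values() for name in group]
    return [f"{lead}_{name}" for lead in LEADS for name in per_lead]


def split_row(row):
    """Split a 34-value feature row into per-lead vectors (lead II first, assumed)."""
    if len(row) != 2 * PER_LEAD:
        raise ValueError(f"expected {2 * PER_LEAD} feature values, got {len(row)}")
    return row[:PER_LEAD], row[PER_LEAD:]
```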

Experimental setup

In the experimental design, we adopt a cross-dataset domain generalization strategy: one dataset is held out as the target domain and used only for final testing, while the remaining three datasets are pooled as the source domain for training and validation. This setting follows the standard leave-one-dataset-out (LoDO) protocol, which evaluates the model’s generalization to unseen target domains. The source-domain data are split into training and validation sets at an 8:2 ratio; the training set is used for parameter optimization and the validation set for model selection and hyperparameter tuning. To address class imbalance within the source domains, we apply random oversampling to balance the training distribution. The target-domain data remain completely unseen during training and are used only at the testing stage, which keeps the comparison fair and realistically simulates the domain generalization scenario.
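
The protocol above can be sketched in plain Python as follows. This is a sketch under assumptions, not the authors' pipeline. Each dataset is represented as a list of `(features, label)` pairs. The 8:2 split is a simple random split (the text does not say whether it is stratified or grouped by record). Oversampling is applied only to the training split, in line with the stated goal of a balanced training distribution.

```python
import random
from collections import Counter, defaultdict

DATASETS = ("MIT-BIH Supraventricular", "MIT-BIH Arrhythmia", "INCART", "SCD-Holter")


def lodo_split(samples_by_dataset, target, val_ratio=0.2, seed=0):
    """Leave-one-dataset-out split.

    samples_by_dataset maps a dataset name to a list of (features, label) pairs.
    The target dataset becomes the test set; the other datasets are pooled and
    randomly split 8:2 (val_ratio=0.2) into training and validation sets.
    """
    rng = random.Random(seed)
    source = [s for name, items in samples_by_dataset.items() if name != target for s in items]
    rng.shuffle(source)
    n_val = round(len(source) * val_ratio)
    val, train = source[:n_val], source[n_val:]
    test = list(samples_by_dataset[target])  # never seen during training
    return train, val, test


def random_oversample(samples, seed=0):
    """Duplicate randomly chosen samples of each minority class until every class
    matches the size of the largest class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for sample in samples:
        by_class[sample[1]].append(sample)
    n_max = max(len(items) for items in by_class.values())
    balanced = []
    for items in by_class.values():
        balanced.extend(items)
        balanced.extend(rng.choices(items, k=n_max - len(items)))
    rng.shuffle(balanced)
    return balanced


def class_counts(samples):
    """Number of samples per class label."""
    return Counter(label for _, label in samples)
```

A full LoDO evaluation loops `target` over `DATASETS`, calls `lodo_split`, balances only the returned training list with `random_oversample`, and reports metrics on the untouched test list.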

Regarding hardware and model configurations, all experiments are conducted on a server equipped with an NVIDIA RTX 3090 GPU, an Intel Xeon Gold 6226R CPU, and 128 GB of memory, running Ubuntu 20.04. The deep learning framework is PyTorch 2.0. We adopt a Transformer-based backbone as the representation model, and integrate the proposed Domain-invariant Feature Enhancement and Interpretability-driven Attention Constraint modules. During training, the AdamW optimizer is used with an initial learning rate of \(1 \times 10^{-4}\), batch size of 64, and a maximum of 50 epochs, with early stopping applied to prevent overfitting. Table 2 summarizes the key hardware and model hyperparameter configurations used in the experiments.
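
The reported training settings and the early-stopping rule can be captured in a small configuration sketch. Only the learning rate, batch size, and epoch limit come from the text. The patience value and the choice of validation accuracy as the monitored score are hypothetical. In PyTorch, these values would typically be passed to `torch.optim.AdamW(model.parameters(), lr=cfg.learning_rate)` and `torch.utils.data.DataLoader(dataset, batch_size=cfg.batch_size, shuffle=True)`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    # Values reported in the text.
    learning_rate: float = 1e-4   # AdamW initial learning rate
    batch_size: int = 64
    max_epochs: int = 50
    # Hypothetical: the text does not report the early-stopping patience.
    patience: int = 10


class EarlyStopping:
    """Stop when the monitored validation score has not improved for `patience` epochs."""

    def __init__(self, patience, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("-inf")
        self.bad_epochs = 0

    def step(self, score):
        """Record one epoch's validation score; return True if training should stop."""
        if score > self.best + self.min_delta:
            self.best = score
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def epochs_trained(val_scores, cfg):
    """Number of epochs actually run for a given sequence of validation scores."""
    stopper = EarlyStopping(cfg.patience)
    for epoch, score in enumerate(val_scores[: cfg.max_epochs], start=1):
        if stopper.step(score):
            return epoch
    return min(len(val_scores), cfg.max_epochs)
```

Under this rule, the 50-epoch limit acts only as an upper bound. Training usually ends earlier, once validation accuracy stops improving for `patience` consecutive epochs.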

Evaluation metric

To comprehensively evaluate model performance on the target domain, we use five common metrics: Accuracy, Precision, Recall, F1-score, and AUC. All metrics are computed on the held-out target-domain data.

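Accuracy measures the proportion of correctly predicted samples among all samples:

\[\textrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}\]

where \(TP\), \(TN\), \(FP\), and \(FN\) denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.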

Precision is the proportion of true positive samples among all samples predicted as positive by the model:

\[\textrm{Precision} = \frac{TP}{TP + FP}\]

Recall is the proportion of actual positive samples that the model correctly identifies:

\[\textrm{Recall} = \frac{TP}{TP + FN}\]

The F1-score is the harmonic mean of Precision and Recall and summarizes model performance under class-imbalanced conditions:

\[\textrm{F1} = \frac{2 \times \textrm{Precision} \times \textrm{Recall}}{\textrm{Precision} + \textrm{Recall}}\]

Finally, the Area Under the Receiver Operating Characteristic Curve (AUC) quantifies the model’s ability to distinguish positive from negative samples across all decision thresholds. It is the integral of the true positive rate (TPR) with respect to the false positive rate (FPR) along the ROC curve:

\[\textrm{AUC} = \int _{0}^{1} \textrm{TPR}\, d(\textrm{FPR})\]

where \(\textrm{TPR} = \frac{TP}{TP + FN}\) and \(\textrm{FPR} = \frac{FP}{FP + TN}\). A higher AUC value indicates better discriminative ability and more robust classification performance under varying decision thresholds.
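
The metric definitions can be checked with the following plain-Python sketch. It computes Accuracy, Precision, Recall, and F1 from confusion counts, and AUC by sweeping the decision threshold and applying the trapezoidal rule to the (FPR, TPR) points. It covers the binary case only. For the five beat classes, computing each metric one-vs-rest and macro-averaging is a common choice, but this section does not state which averaging was used. `sklearn.metrics.roc_auc_score` with `multi_class="ovr"` is an established library alternative for AUC.

```python
def binary_metrics(tp, fp, fn, tn):
    """Accuracy, Precision, Recall, and F1 from confusion counts (0.0 when undefined)."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def roc_auc(scores, labels):
    """Area under the ROC curve: trapezoidal integral of TPR over FPR.

    labels are 1 (positive) or 0 (negative); both classes must be present.
    Tied scores are processed together, so a tie contributes a diagonal segment.
    """
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    points = [(0.0, 0.0)]  # (FPR, TPR) pairs, starting from the strictest threshold
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Lower the threshold past every sample that shares this score.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    auc = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        auc += (x1 - x0) * (y0 + y1) / 2
    return auc
```

A classifier that ranks every positive above every negative reaches an AUC of 1.0. One that assigns all samples the same score reaches 0.5, the chance level.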

Experimental results and analysis

Comparison experimental results with other domain generalization algorithms

In the comparative experiments, we select several representative domain generalization methods as baselines. The plain Transformer serves as the most basic modeling framework and has been widely applied to cross-domain cardiac signal representation. MetaReg, FSDR, SelfReg, DLow, and DPStyler are built on a Transformer core architecture, which they extend with mechanisms such as regularization, perturbation generation, and style transfer; they therefore represent different perspectives on domain generalization. DGMamba is the only method that does not rely on a Transformer; instead, it adopts the Mamba sequence modeling structure to achieve feature alignment and cross-domain adaptation. The proposed model (Ours) retains the representational capacity of the Transformer and adds the Domain-invariant Feature Enhancement and Interpretability-driven Attention Constraint mechanisms, which are designed to improve domain generalization for cross-dataset cardiac signals. The experimental results are presented in Table 3.

The results show that the proposed method achieves the best performance on all metrics in all four target domains. On the MIT-BIH Arrhythmia and INCART datasets in particular, it clearly improves Accuracy (Acc), Precision, Recall, and F1-score over the other methods. This suggests that the Domain-invariant Feature Enhancement and Interpretability-driven Attention Constraint mechanisms effectively mitigate the distribution discrepancies between source and target domains, allowing the model to remain discriminative on unseen target domains. Furthermore, relative to the baseline Transformer, our approach improves accuracy by approximately 8 percentage points on challenging datasets such as MIT-BIH Supraventricular Arrhythmia, highlighting its stronger generalization capability.

In addition, compared with the Transformer-based domain generalization methods (MetaReg, FSDR, SelfReg, DLow, and DPStyler), the proposed method maintains high precision while achieving more balanced recall and F1-score. This indicates that the model detects more true abnormal heartbeats while also reducing false alarms. On the SCD-Holter dataset, which is characterized by more complex noise and larger distribution shifts, our approach still outperforms the other methods, further supporting the effectiveness and robustness of domain-invariant feature alignment and interpretability-driven attention constraints for cross-dataset ECG representation. Fig. 4 shows how accuracy evolves over training epochs for each of the four target datasets.

Experimental results of applying the proposed method to different backbones

We also evaluate the proposed method with other commonly used feature-extraction backbones, namely LSTM, MLP, and 1D-CNN, each tested both on its own and combined with the proposed modules for domain generalization. The corresponding results on the four target datasets are presented in Table 4.

The results show that traditional deep learning models generally perform worse than Transformer-based approaches in cross-dataset ECG domain generalization. This suggests that relying solely on shallow temporal modeling or convolutional features is insufficient to capture complex cross-domain distribution shifts, which limits robustness on unseen target domains. However, when these models are combined with the proposed Domain-invariant Feature Enhancement and Interpretability-driven Attention Constraint mechanisms, their performance improves to varying degrees in all four target domains, with the largest gains in Accuracy and F1-score. This indicates that the proposed modules provide feature alignment and discriminative enhancement largely independently of the backbone architecture.

Further analysis shows that the gains over the original baselines are more pronounced on datasets such as INCART and MIT-BIH Arrhythmia, which have large sample sizes and diverse feature distributions. This indicates that our approach generalizes well and remains stable under cross-domain distribution differences and noise perturbations. On datasets such as SCD-Holter, which contain complex noise and imbalanced class distributions, the proposed method still maintains a good balance between Precision and Recall. This suggests that the interpretability-driven attention mechanism helps the model focus on clinically relevant features, thereby reducing both false positives and false negatives. Together, these results support the effectiveness and broad applicability of the proposed method in cross-dataset ECG signal analysis.

Ablation experiment results

In this section, we present ablation experiments that isolate the contributions of the Domain-invariant Feature Enhancement Module (DFEM) and the Interpretability-driven Attention Constraint Mechanism (IACM). The results are shown in Table 5.

As Table 5 shows, the baseline Transformer performs relatively poorly across the four target domains. Adding either the Domain-invariant Feature Enhancement Module (+DFEM) or the Interpretability-driven Attention Constraint Mechanism (+IACM) yields consistent improvements, indicating that the two modules contribute positively to feature alignment and attention focusing, respectively. +IACM outperforms +DFEM in most scenarios, suggesting that the interpretability-driven mechanism makes the larger contribution to cross-domain ECG classification. The complete model (Ours), which integrates both modules, achieves the best results on all target domains, with particularly notable improvements on the INCART and MIT-BIH Arrhythmia datasets. These results support the effectiveness and robustness of the proposed method for cross-dataset ECG domain generalization. To assess whether these gains are statistically significant, we further conducted significance tests, reported in Table 6.

As shown in Table 6, every module configuration improves on the baseline Transformer in all target domains at a statistically significant level (\(p<0.05\)), indicating that the gains are unlikely to be due to random fluctuation. Both +DFEM and +IACM significantly improve generalization on all four datasets, and the full model attains the lowest p values across all metrics, further supporting the multi-module design for cross-domain ECG analysis. These results indicate that the proposed feature enhancement and interpretability constraint mechanisms significantly improve model performance and generalize across datasets.
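
This section does not state which test produced the p values in Table 6. Purely as an illustration of how a paired comparison against the baseline can be tested, the following sketch implements an exact sign-flip permutation test on per-run scores. It assumes that the baseline and the full model are evaluated over the same set of repeated runs or folds. That pairing, and the choice of test, are assumptions rather than the authors' procedure.

```python
from itertools import product


def paired_sign_flip_pvalue(baseline_scores, model_scores):
    """Exact two-sided paired permutation (sign-flip) test on the mean difference.

    Under the null hypothesis the sign of each paired difference is arbitrary,
    so every one of the 2**n sign patterns is equally likely.
    """
    diffs = [m - b for b, m in zip(baseline_scores, model_scores)]
    n = len(diffs)
    observed = abs(sum(diffs)) / n
    extreme = 0
    for signs in product((1, -1), repeat=n):
        stat = abs(sum(s * d for s, d in zip(signs, diffs))) / n
        if stat >= observed - 1e-12:  # tolerance guards against float round-off
            extreme += 1
    return extreme / 2 ** n
```

With \(n\) paired runs, the smallest attainable p value of this test is \(2/2^n\). Five runs therefore cannot reach \(p<0.05\) (the minimum is 0.0625), whereas six consistently positive runs give 0.03125.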

Feature importance analysis

To further verify the interpretability and reliability of the proposed method in cross-dataset ECG tasks, we analyze the model’s attention to different feature dimensions. Specifically, based on the attention weight distribution and external attribution methods, we rank the importance of the 34 features (including RR intervals, cardiac cycle characteristics, ECG amplitude features, and QRS complex morphological features), thereby revealing the key features the model relies on when distinguishing different heartbeat categories. Through this feature importance analysis, we not only explain the decision-making basis of the model but also validate whether the Domain-invariant Feature Enhancement and Attention Constraint mechanisms effectively guide the model to focus on clinically relevant physiological features rather than noise or irrelevant information.

As Fig. 5 shows, the feature importance rankings exhibit a consistent pattern across datasets, indicating that the model stably focuses on clinically meaningful ECG attributes during cross-domain learning. Feature importance was quantified by a permutation-based sensitivity analysis: each feature was randomly shuffled, and the resulting drop in accuracy was taken as its contribution to the model’s predictions. The clinical relevance of the features was judged against established cardiological standards and prior medical literature; all extracted features (e.g., RR interval, PQ interval, QT interval, and QRS duration) are physiologically interpretable and directly related to the characterization of the cardiac cycle. Specifically, abnormal RR interval variability is closely associated with arrhythmias such as atrial fibrillation and sinus irregularity, prolonged PQ intervals indicate atrioventricular conduction block, prolonged QT intervals are linked to ventricular tachyarrhythmias, and changes in QRS duration often signal bundle branch block or ventricular ectopic activity. Wave amplitude features (such as the R, Q, and T peaks) also consistently rank among the top features, further highlighting the model’s sensitivity to key ECG morphological patterns in line with clinical diagnostic reasoning.
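
The permutation-based sensitivity analysis described above can be sketched in NumPy as follows. `predict` is a hypothetical stand-in for the trained model's label-prediction function, and `n_repeats=5` is an arbitrary choice. For the 34 features, `np.argsort(-importances)` yields the ranking. For scikit-learn estimators, `sklearn.inspection.permutation_importance(estimator, X, y, n_repeats=..., random_state=...)` provides the same procedure.

```python
import numpy as np


def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Mean accuracy drop when each feature column is randomly shuffled.

    predict: callable mapping an (n_samples, n_features) array to class labels
    (hypothetical stand-in for the trained model).
    Returns one importance score per feature (higher = more important).
    """
    rng = np.random.default_rng(seed)
    baseline = np.mean(predict(X) == y)
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Shuffling one column breaks its link to the labels
            # while keeping its marginal distribution unchanged.
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            importances[j] += baseline - np.mean(predict(X_perm) == y)
    return importances / n_repeats
```

Because only one column is shuffled at a time, a feature the model ignores receives an importance of exactly zero. A feature the model depends on produces a large accuracy drop.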

Despite this general consistency, feature emphasis varies across datasets. For example, the INCART dataset shows a stronger dependence on RR interval variability, whereas in the MIT-BIH Arrhythmia dataset the ST and PQ intervals are more important, possibly reflecting differences in underlying pathologies and recording setups. The SCD-Holter dataset, in contrast, places greater weight on QRS complex morphology and its derivatives, implying that arrhythmia discrimination in this dataset relies more heavily on waveform deformation. These differences reveal dataset-specific physiological tendencies and suggest that the proposed interpretability-driven framework can adaptively capture clinically meaningful patterns while maintaining stable domain-invariant representations across heterogeneous ECG sources.

Feature attention visualization

Fig. 6 visualizes the feature attention weights for each of the four target domains.

As Fig. 6 shows, the feature attention distributions across the four target domains exhibit both consistency and domain adaptivity. The proposed Interpretability-driven Attention Constraint Mechanism (IACM) guides the Transformer backbone to allocate higher attention weights to clinically meaningful ECG attributes such as the RR interval, PQ interval, and QRS morphology, which are essential indicators in arrhythmia diagnosis. This suggests that the model not only captures domain-invariant temporal dependencies but also aligns its internal focus with medical priors, which enhances interpretability. Moreover, the slight variations in feature weighting across datasets, such as a stronger emphasis on RR intervals in INCART and on morphological patterns in SCD-Holter, highlight the framework’s ability to handle inter-dataset discrepancies while maintaining stable interpretive consistency.

Confusion matrix and t-SNE analysis

In this section, we further analyze the model’s classification performance and feature distribution using confusion matrices and t-SNE visualization. The confusion matrix shows, for each category, how often samples are predicted correctly and which categories they are confused with, while t-SNE reveals the clustering structure of high-dimensional features in a low-dimensional space. Together, the two methods give a more complete picture of the proposed method’s discriminative power and generalization in distinguishing cardiac signal categories. We first present the t-SNE results, shown in Fig. 7.

The t-SNE visualization in Fig. 7 shows that the proposed method effectively separates the different heartbeat categories in the low-dimensional space. In most datasets (such as INCART and MIT-BIH Supraventricular Arrhythmia), Normal beats (N class) and Ventricular Ectopic Beats (VEB class) form clearly bounded clusters, consistent with the Domain-invariant Feature Enhancement module reducing cross-domain discrepancies in the feature distribution. Moreover, the interpretability-driven attention mechanism helps the model capture the key features relevant to each category, improving overall discriminability.

Partial overlaps between certain categories can nevertheless be observed. For instance, in MIT-BIH Arrhythmia and SCD-Holter, some Q class and SVEB class samples remain intermingled, likely reflecting the inherently ambiguous class boundaries and class imbalance in the original ECG signals. Even in these complex scenarios, the proposed method maintains clear clustering for the major categories, demonstrating its robustness and adaptability in feature extraction and cross-domain generalization. The confusion matrices are shown in Fig. 8.

The confusion matrices in Fig. 8 show that the model recognizes Normal beats (N class) and Ventricular Ectopic Beats (VEB class) most stably across the four target domains, with high prediction accuracy in most cases. For example, on the MIT-BIH Arrhythmia and INCART datasets, the recognition accuracy of the VEB class exceeds 0.9, indicating that the proposed method effectively captures the distinct abnormal patterns in ECG signals. The model is also robust on the frequently occurring Normal beats, which provides reliable support for normal/abnormal rhythm classification in practical clinical applications.
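
Per-class recognition accuracies such as the VEB value above are usually read from a row-normalized confusion matrix, where the diagonal gives each class's recall. The following NumPy sketch shows that computation for the five classes. It assumes, without confirmation from the text, that Fig. 8 is normalized by true class. Class indices follow the order N, S (SVEB), V (VEB), F, Q.

```python
import numpy as np

CLASSES = ("N", "S", "V", "F", "Q")  # S = SVEB, V = VEB


def confusion_matrix(y_true, y_pred, n_classes=len(CLASSES)):
    """Counts cm[i, j] of samples with true class i predicted as class j."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def row_normalize(cm):
    """Divide each row by its total so the diagonal gives per-class recall.

    Rows of classes absent from y_true stay all-zero instead of dividing by zero.
    """
    totals = cm.sum(axis=1, keepdims=True)
    return np.divide(cm, totals, out=np.zeros(cm.shape, dtype=float), where=totals > 0)
```

Row normalization matters under class imbalance. In raw counts, the abundant N class dominates the matrix, whereas normalized rows make the weaker SVEB and F recognition rates directly comparable to the N and VEB rates.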

Some confusion remains, however, in recognizing Fusion beats (F class) and Supraventricular Ectopic Beats (SVEB class) in certain datasets. For instance, in the MIT-BIH Supraventricular Arrhythmia and SCD-Holter datasets, the prediction accuracy for SVEB is markedly lower than that for other categories. This may be attributed to the high morphological similarity of these beats to Normal or other abnormal classes. Moreover, the imbalanced sample distribution in the SCD-Holter dataset makes overall confusion more pronounced. Overall, the method recognizes the major classes reliably, while distinguishing categories with ambiguous boundaries remains an area for improvement.

Noise robustness analysis

In this experiment, Gaussian white noise was added to the input ECG signals to simulate measurement perturbations and evaluate model robustness. The experimental results are shown in Table 7. The noise level \(\sigma\) represents the standard deviation of the additive noise relative to the normalized signal amplitude. Specifically, \(\sigma \in \{0.10, 0.05, 0.01, 0\}\) corresponds to different noise intensities, where \(\sigma =0\) denotes the original clean signal without any disturbance.
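
The noise protocol can be sketched as follows. The \(\sigma\) values come from the text. The per-column z-score normalization is a stand-in for the unspecified amplitude normalization. Because the model consumes the 34 extracted features, this sketch perturbs the normalized feature matrix. The text does not say whether noise was added to raw waveforms before feature extraction or to the features themselves. `predict` is a hypothetical stand-in for the trained classifier.

```python
import numpy as np

NOISE_LEVELS = (0.10, 0.05, 0.01, 0.0)  # sigma values from the text; 0 = clean input


def zscore(x, eps=1e-8):
    """Per-column standardization (stand-in for the unspecified normalization)."""
    return (x - x.mean(axis=0)) / (x.std(axis=0) + eps)


def add_gaussian_noise(x_normalized, sigma, rng):
    """Additive white Gaussian noise with standard deviation sigma on normalized inputs."""
    if sigma == 0:
        return x_normalized.copy()
    return x_normalized + rng.normal(0.0, sigma, size=x_normalized.shape)


def accuracy_under_noise(predict, X, y, levels=NOISE_LEVELS, seed=0):
    """Accuracy of a trained classifier on target-domain data at each noise level."""
    rng = np.random.default_rng(seed)
    Xn = zscore(X)
    return {sigma: float(np.mean(predict(add_gaussian_noise(Xn, sigma, rng)) == y))
            for sigma in levels}
```

Fixing the random seed keeps the noise draws reproducible. Repeating the evaluation over several seeds would separate genuine degradation from sampling variation of the noise itself.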

As shown in Table 7, the proposed model is robust to different noise intensities. As the noise level increases from \(\sigma =0\) to \(\sigma =0.10\), all four target domains show only a slight, consistent decline in Accuracy, F1-score, and AUC, indicating that the model maintains stable discriminative ability under perturbation. We attribute this robustness to the joint optimization of domain-invariant feature alignment and contrastive learning, which encourages the model to learn stable cardiac signal representations under noise. Notably, the INCART domain shows the smallest performance drop, suggesting that the proposed feature alignment mechanism enhances noise tolerance across domains with diverse signal characteristics.

Discussion and analysis of computing resources and training time

Finally, we analyze the computing resources and training time required by each model; the results are shown in Table 8.

As shown in Table 8, all models were trained under identical hardware conditions on an NVIDIA RTX 3090 GPU to ensure a fair comparison. Compared with the baseline Transformer, the proposed model requires slightly more memory (12.4 GB) and total training time (4.8 h), mainly because the DFEM and IACM modules add feature alignment and interpretability computations. We consider this modest additional cost justified by the performance improvement, indicating a reasonable balance between model complexity and training efficiency.

Conclusion

This study addresses the challenges of domain generalization and feature interpretability in cross-dataset electrocardiogram (ECG) analysis and proposes a Transformer-based cross-domain modeling framework. The framework incorporates a Domain-invariant Feature Enhancement Module that performs distribution alignment and cross-domain contrastive learning across multiple sources, thereby alleviating the significant discrepancies among different databases. In addition, an Interpretability-driven Attention Constraint Mechanism is introduced, enabling the model to focus more effectively on clinically relevant ECG features during feature selection and decision-making. Experiments on several authoritative arrhythmia datasets, including MIT-BIH, INCART, and SCD-Holter, demonstrate that the proposed method consistently outperforms state-of-the-art domain generalization algorithms in terms of accuracy, recall, and F1-score. Furthermore, cross-backbone validation and ablation studies confirm stable and significant performance gains, highlighting the effectiveness and generalizability of the proposed framework in addressing domain shift, improving robustness, and enhancing clinical interpretability. Quantitatively, compared with the baseline Transformer, the proposed method achieves an average accuracy improvement of approximately 6.5%, a recall increase of 5.1%, and an F1-score gain of 5.3% across all target domains. These improvements, together with enhanced robustness under noisy conditions, underscore the framework’s capability to deliver reliable and interpretable ECG analysis in complex real-world clinical scenarios.

Despite these promising results, several issues remain open for further investigation. First, on datasets with blurred boundaries or class imbalance, the model still shows confusion in distinguishing classes such as Fusion beats and supraventricular ectopic beats (SVEB), suggesting that more fine-grained feature modeling and advanced resampling strategies could be incorporated. Second, this study mainly focuses on unimodal ECG signals, while in real clinical scenarios, multimodal physiological signals such as photoplethysmography and phonocardiography can provide complementary information; extending the proposed framework to multimodal contexts is therefore an important future direction. Finally, the interpretability of the current model primarily relies on attention weights and feature importance constraints, which could be further enhanced by integrating causal inference and graph neural network techniques to improve the stability and clinical credibility of explanations. In summary, this work not only makes progress in domain generalization and interpretability for cross-dataset ECG analysis, but also lays a foundation for the deployment of intelligent ECG models in real-world clinical practice.

Data availability

The datasets analyzed in this study are publicly available from the PhysioNet repository. Specifically, the following databases were used:

MIT-BIH Arrhythmia Database: https://www.physionet.org/content/mitdb/1.0.0/

MIT-BIH Supraventricular Arrhythmia Database: https://physionet.org/content/svdb/1.0.0/

St. Petersburg INCART 12-lead Arrhythmia Database: https://physionet.org/content/incartdb/1.0.0/

Sudden Cardiac Death Holter Database: https://physionet.org/content/sddb/1.0.0/

References

Ballas, A. & Diou, C. Towards domain generalization for ECG and EEG classification: Algorithms and benchmarks. IEEE Trans. Emerg. Top. Comput. Intell. 8, 44–54 (2023).

Gliner, V., Makarov, V., Avetisyan, A. I., Schuster, A. & Yaniv, Y. Using domain adaptation for classification of healthy and disease conditions from mobile-captured images of standard 12-lead electrocardiograms. Sci. Rep. 13, 14023 (2023).

Vavekanand, R., Sam, K., Kumar, S. & Kumar, T. Cardiacnet: A neural networks based heartbeat classifications using ECG signals. Stud. Med. Health Sci. 1, 1–17 (2024).

Imtiaz, M. N. & Khan, N. Cross-database and cross-channel ECG arrhythmia heartbeat classification based on unsupervised domain adaptation. arXiv preprint arXiv:2306.04433 (2023).

Sam, K., Nawaz, S. & Vavekanand, R. Cardiomix: A multimodal image-based classification pipeline for enhanced ECG diagnosis. Med. Data Min. 8, 6 (2025).

Soleimani, M., Toosi, M. H., Mohammadi, S. & Khalaj, B. H. Using test-time data augmentation for cross-domain atrial fibrillation detection from ECG signals. arXiv preprint arXiv:2503.13483 (2025).

Vavekanand, R., Kumar, G. & Kurbanova, S. A lightweight physics-conditioned diffusion multi-model for medical image reconstruction. Biomed. Eng. Commun. 5, 12 (2026).

Marzog, H. A. & Abd, H. J. Machine learning ECG classification using wavelet scattering of feature extraction. Appl. Comput. Intell. Soft Comput. 2022, 9884076 (2022).

Jahangir, R. et al. ECG-based heart arrhythmia classification using feature engineering and a hybrid stacked machine learning. BMC Cardiovasc. Disord. 25, 260 (2025).

Pouriyeh, S., Vahid, S., Sannino, G. et al. A comprehensive investigation and comparison of machine learning techniques in the domain of heart disease. In 2017 IEEE Symposium on Computers and Communications (ISCC), 204–207 (IEEE, 2017).

Jan, M. B., Rashid, M., Vavekanand, R. & Singh, V. Integrating explainable AI for skin lesion classifications: A systematic literature review. Stud. Med. Health Sci. 2, 1–14 (2025).

Ebrahimi, Z. et al. A review on deep learning methods for ECG arrhythmia classification. Expert Syst. Appl. X 7, 100033 (2020).

Peimankar, A. & Puthusserypady, S. Dens-ECG: A deep learning approach for ECG signal delineation. Expert Syst. Appl. 165, 113911 (2021).

Alghieth, M. DeepECG-Net: a hybrid transformer-based deep learning model for real-time ECG anomaly detection. Sci. Rep. 15, 20714 (2025).

Alamatsaz, N., Yazdchi, M., Payan, H. et al. A lightweight hybrid CNN-LSTM model for ECG-based arrhythmia detection. arXiv preprint arXiv:2209.00988 (2022).

Warnecke, J. M., Lasenby, J. & Deserno, T. M. Robust in-vehicle heartbeat detection using multimodal signal fusion. Sci. Rep. 13, 20864 (2023).

Żyliński, M. et al. Hearables: In-ear multimodal data fusion for robust heart rate estimation. BioMedInformatics 4, 911–920 (2024).

Tozzi, A. E. et al. A systematic review of data sources for artificial intelligence applications in pediatric brain tumors in Europe: implications for bias and generalizability. Front. Oncol. 13, 1285775 (2023).

Jafari, M. et al. Application of transfer learning for biomedical signals: a comprehensive review of the last decade (2014–2024). Inf. Fusion 118, 102982 (2025).

Guan, H. & Liu, M. Domain adaptation for medical image analysis: a survey. IEEE Trans. Biomed. Eng. 69, 1173–1185 (2021).

Zhou, K. et al. Domain generalization: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 45, 4396–4415 (2022).

Khoee, A. G., Yu, Y. & Feldt, R. Domain generalization through meta-learning: a survey. Artif. Intell. Rev. 57, 285 (2024).

Niu, Z., Ouyang, S., Xie, S. et al. A survey on domain generalization for medical image analysis. arXiv preprint arXiv:2402.05035 (2024).

Imtiaz, M. N. & Khan, N. Cross-database and cross-channel ECG arrhythmia heartbeat classification based on unsupervised domain adaptation. arXiv preprint arXiv:2306.04433 (2023).

Feng, P. et al. Semantic-aware alignment and label propagation for cross-domain arrhythmia classification. Knowl.-Based Syst. 264, 110323 (2023).

Lee, J. & Shin, M. Cross-database learning framework for electrocardiogram arrhythmia classification using two-dimensional beat-score-map representation. Appl. Sci. 15, 5535 (2025).

Hassija, V. et al. Interpreting black-box models: a review on explainable artificial intelligence. Cogn. Comput. 16, 45–74 (2024).

Marey, A. et al. Explainability, transparency and black box challenges of AI in radiology: impact on patient care in cardiovascular radiology. Egypt. J. Radiol. Nucl. Med. 55, 183 (2024).

Ayano, Y. M. et al. Interpretable machine learning techniques in ECG-based heart disease classification: a systematic review. Diagnostics 13, 111 (2022).

Ojha, J., Haugerud, H., Yazidi, A. et al. Exploring interpretable AI methods for ECG data classification. In Proceedings of the 5th ACM Workshop on Intelligent Cross-Data Analysis and Retrieval, 11–18 (ACM, 2024).

Baig, Z., Nasir, S., Khan, R. A. et al. ArrhythmiaVision: Resource-conscious deep learning models with visual explanations for ECG arrhythmia classification. arXiv preprint arXiv:2505.03787 (2025).

Kilic, M. E., Tufekcioglu, O. A., Yilancioglu, Y. R. et al. Explainable AI for specific arrhythmia detection: SHAP-based insights from multi-lead ECG data. J. Med. Biol. Eng. 1–11 (2025).

Goettling, M. et al. xECGArch: a trustworthy deep learning architecture for interpretable ECG analysis considering short-term and long-term features. Sci. Rep. 14, 13122 (2024).

Ayano, Y. M. et al. Interpretable hybrid multichannel deep learning model for heart disease classification using 12-lead ECG signal. IEEE Access 12, 94055–94080 (2024).

Vaswani, A. et al. Attention is all you need. Adv. Neural Inf. Process. Syst. 30 (2017).

Balaji, Y., Sankaranarayanan, S. & Chellappa, R. MetaReg: Towards domain generalization using meta-regularization. Adv. Neural Inf. Process. Syst. 31 (2018).

Huang, J., Guan, D., Xiao, A. & Lu, S. FSDR: Frequency space domain randomization for domain generalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 6891–6902 (2021).

Kim, D., Yoo, Y., Park, S., Kim, J. & Lee, J. SelfReg: Self-supervised contrastive regularization for domain generalization. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 9619–9628 (2021).

Yuan, Y. & Kitani, K. DLow: Diversifying latent flows for diverse human motion prediction. In European Conference on Computer Vision, 346–364 (Springer, 2020).

Tang, Y., Wan, Y., Qi, L. & Geng, X. DPStyler: dynamic PromptStyler for source-free domain generalization. IEEE Trans. Multimed. (2025).

Long, S. et al. DGMamba: Domain generalization via generalized state space model. In Proceedings of the 32nd ACM International Conference on Multimedia, 3607–3616 (2024).

Author information


Contributions

R.L. and Y.A. conceived the study and drafted the manuscript. Y.X. contributed to data collection and analysis. J.L. and Y.T. provided clinical insights and assisted with the interpretation of results. All authors reviewed and approved the final version of the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Li, R., Aierken, Y., Xu, Y. et al. Research on cross-dataset cardiac signal domain generalization and feature interpretability. Sci Rep 16, 3138 (2026). https://doi.org/10.1038/s41598-025-33057-9
