Abstract

The integration of technology into educational institutions has led to the generation of vast data, creating opportunities for Educational Data Mining (EDM) to improve learning outcomes. This study introduces a novel feature selection model, “Dynamic Feature Ensemble Evolution for Enhanced Feature Selection” (DE-FS), which combines traditional methods such as correlation matrix analysis, information gain, and Chi-square with heat maps to select the most relevant features for predicting student performance. The core innovation of DE-FS lies in its dynamic and adaptive thresholding mechanism, which adjusts thresholds based on evolving data patterns, addressing the limitations of static methods and mitigating issues like overfitting and underfitting. This research makes three key contributions: it introduces an advanced ensemble-based feature selection methodology, incorporates dynamic and adaptive thresholding to improve accuracy and flexibility, and demonstrates DE-FS’s superior predictive performance across diverse educational datasets. The results highlight DE-FS’s ability to adapt to fluctuating data patterns, enabling precise and reliable student performance predictions, supporting targeted interventions, and improving resource allocation to enhance personalized learning experiences.

Similar content being viewed by others

Introduction

The usage of technology in the classroom has resulted in a large amount of data that may be used to improve the modern educational system. EDM, which use data mining techniques to analyze large amount of academic and personal information, developed as a result of data growth. These include data about teachers, students, courses, syllabuses, test questions, and circulars. This large data set gives a significant opportunity to improve educational systems through machine learning and student performance prediction1. In this the environment, predicting student performance is crucial to educational interventions. Learning experiences also boost success2. Accurate student performance prediction helps schools provide individualized learning experiences and assist students to reach their academic potential2. Data’s dynamic nature makes algorithms inefficient1,2,3,4,5. Information gain, chi-square, and correlation matrix analysis with heat maps cannot adapt to changing data patterns2,3,4,5,6. Because of this constraint, education and prediction improvements are limited. We aim to correctly predict student success in these instances.

Standard feature selection algorithms cannot adapt to these changing data patterns. Our DE-FS algorithm combines classic feature selection with an ensemble approach that adjusts dynamically to changing data patterns to overcome these constraints6. This study helps three ways. DE-FS, an improved feature selection approach, combines established tactics to improve accuracy. We also provide dynamic and adaptive thresholding to effectively choose features across many datasets. To conclude, we evaluate DE-FS’s forecasting abilities for student accomplishment to help educators and data analysts make recommendations. We use student demographics, grades, and other information. Different educational contexts’ data is validated and tested7. We found that DE-FS outperforms the state-of-the-art in dynamic educational contexts and fluctuating data patterns. Greater flexibility improves precision and efficiency. The gains we found require dynamic thresholds and adaptive thresholding. De-FS ensures accurate and flexible student performance estimates in the ever-changing educational situation8.

The key contributions of our research are as follows:

-

We propose a novel feature selection model, “Dynamic Feature Ensemble Evolution for Enhanced Feature Selection” (DE-FS), which integrates traditional techniques like correlation matrix analysis, information gain, and Chi-square with heat maps to improve feature selection.

-

Our study introduces a dynamic and adaptive thresholding mechanism in DE-FS, allowing the model to adjust to evolving data patterns. This overcomes the limitations of static feature selection methods and prevents issues like overfitting and underfitting.

-

The DE-FS model demonstrates superior predictive accuracy for student performance, enabling more effective personalized learning interventions and better allocation of educational resources.

-

We validate the DE-FS model across diverse educational datasets, showcasing its adaptability to fluctuating data patterns and dynamic educational contexts.

Related works

Many studies on educational data mining have employed data-driven methods to predict and improve student performance. This section reviews existing publications to reveal the many ways and methods utilized to address this crucial educational topic. While previous works9,10,11,12,13,14,15,16,17 have made major contributions, DF-FS algorithm offers new perspectives and ways to better educational data mining.

Several studies have examined demographic, school, psychological, and socioeconomic factors that may affect children’ academic success11,18,19,20. Additionally, several models predict student achievement21,22. For instance10, improved student pass/fail prediction accuracy and success rates using KNN, decision trees, naive Bayes classification, and linear regression. A framework was developed to predict student performance by considering variables such as personal history, age, gender, educational background, mental well-being, and social activities. The model also identifies risk factors linked to critical values of key characteristics. In another study9, conducted an experiment aimed at early identification of high-risk students in a specific course using predictive techniques. By applying correlation analysis, they identified 14 key factors and achieved an 86.2% recognition rate for high-risk students using the Naive Bayes classifier.

The model also made datasets more accessible by precisely measuring true positives, true negatives, false positives, and false negatives11. described a decision tree method for estimating how well students will do in school, focusing on techniques for choosing features, like combining, cleaning, and normalizing data. The researchers carefully chose significant features and trained the system with filter-based approaches, resulting in a precision of 90.13%. However, their inability to access critical data, such as quiz questions, assignments, and midterm exams, delayed their capacity to develop the model even further12. Employed sequential minimum optimization (SMO)-based support vector machines (SVM) and logistic regression to predict students’ future behavior and classify their academic performance as outstanding, average, or substandard. The logistic regression model achieved 73% accuracy using the WEKA tool, but the SVM (SMO) model did better, at 79%. The models were assessed using criteria such as recall, precision, F1-score, and accuracy13. sought to improve student satisfaction by soliciting comments on academic performance, social activities, and interest in technical technologies using social networking sites. The study used a meta-heuristic wrapper technique and a stacked ensemble machine learning algorithm to identify key features and minimize data dimensionality, yielding an ensemble model with a Root Mean Square Error (RMSE) of 0.373, exceeding previous techniques14. Used data analysis and business intelligence approaches, such as decision trees and artificial neural networks (ANN), to estimate secondary school student performance in school. The study used grades from the second period of in-person instruction to forecast grades in courses such as mathematics and Portuguese. Although the decision tree and ANN algorithms achieved accuracies of 94% and 72%, respectively, incorporating internet data and additional attributes could improve real-time prediction capabilities15. proposed Support Vector Machines (SVM) and k-Nearest Neighbors (KNN) for predicting students’ exam results.

The study, which used various algorithms, achieved accuracies of 91% for SVM and 92% for KNN. KNN outperformed SVM with a correlation coefficient of 0.96 compared to 0.95. The proposed methodology combined decision-making, correlation analysis, and quantitative techniques, utilizing a data warehouse and tools like Python for diverse analyses and future predictions16,17. Developed a dual-phase machine learning approach combining supervised and unsupervised algorithms to improve recommendation accuracy compared to other methods. The author suggested that including more information from faculty members, such as evaluations of academic advisers, could enhance performance predictions21. used clustering and classification methods to predict students’ technical evaluation results. The dataset was split into training and testing groups, each with 50% of the instances. The decision tree method outperformed K-means clustering and naive Bayes, achieving the highest accuracy of 81%22. Established a student database and categorized instructors into low-risk, medium-risk, and high-risk groups based on in-class assignments, training participation, and final exam grades23. Recommended assessing student behavior during the early years after graduation to predict future achievements. Additionally24, developed a system to identify at-risk student’s early using classification methods. Table 1 gives the Summary of related works.

Prior research has highlighted several deficiencies, including limitations in classifier methods, feature selection techniques, ensemble methods, evaluation metrics, adaptability to changing data patterns, data source diversity, real-time prediction capabilities, and data comprehensiveness. Studies often used a narrow range of classifier methods and feature selection techniques, with limited exploration of ensemble techniques.

Evaluation measures were usually poor, focusing solely on accuracy rather than a broader range of metrics. Furthermore, there was no correction for shifting data patterns, which is critical for educational datasets that evolve over time. Many research23,25,26,27 only used historical campus data, ignoring the integration of internet data and the investigation of real-time prediction skills. The DF-FS algorithm solves these challenges by using a diverse set of classifiers, three feature selection strategies, a stacking ensemble method, and a thorough evaluation strategy.

Although previous research28,29,30,31,32 has made significant progress in predicting student success, our analysis fills several gaps in the existing works. These include a lack of variety in classifier algorithms and feature selection techniques, resulting in lower predicted accuracy. Additionally, there is an absence of research on ensemble methodologies and comprehensive evaluation metrics. Many present models are unable to adapt to changing data patterns in dynamic educational environments and rely on previous data, ignoring the possibility of adding data for real-time predictions. Previous research has also failed to address the timeliness of predictions and the need for additional data such as curriculum information33,34,35. By identifying these drawbacks, we establish the necessity of our proposed technique. The DE-FS algorithm developed in our research aims to address these gaps by offering more accurate and adaptive forecasts of student performance in ever-changing educational environments.

Sarker et al.36 employed Educational Data Mining (EDM) to examine Bangladeshi higher secondary students’ academic performance. Four major areas were studied: forecasting students’ final exam performance based on internal exam results, determining subjects’ effects on classifier performance, assessing students’ performance growth, and finding consistent performance trends from past exams. Internal examination scores predicted academic performance better than board GPA, according to the study. English, ICT, and Bangla were also identified as performance-affecting subjects. Sarker identified at-risk and high-performing subjects by tracking student performance. The study showed the ability to identify consistent performers, letting college officials adjust strategies for failing and outstanding students. Okoye et al.37 developed RG-DMML to predict student retention and graduation. The CRISP-DM model used Bagging and Wrapper feature selection with K-Nearest Neighbor (KNN) for supervised learning. Both retention and graduation had good precision, recall, accuracy, and F1-scores, with retention predicting more efficiently. The study also examined how these predictions could identify at-risk kids and enable timely interventions, counseling, and decision-making to improve educational results. Priyambada et al.38 suggested a two-layer ensemble learning system to predict student performance using learning behavior data and domain knowledge. SVM, KNN, and Random Forest algorithms are used in the ensemble prediction layer and the ensemble progressive prediction layer, which incorporates past and present predictions. A vertically coherent curriculum’s tight course-taking norms are considered, and students’ behavior and subject knowledge are used to predict whether they will graduate on time or late in eight semesters. Academic stakeholders can intervene sooner to assure on-time graduation due to enhanced student performance prediction. Lam et al.39 reviewed multiple machine learning models to predict student performance using data from 253 undergraduate students in five classrooms in three VnCodelab courses, an interactive learning management system. The study analyzed engagement patterns, exercise time, and progress tracking to predict student grades. This study examined five base classifiers (Random Forest, Logistic Regression, Support Vector Machine, Naïve Bayes, k-Nearest Neighbor) and a Stacking Classifier on a dataset with 13 characteristics. The Stacking Classifier predicted students’ final course scores better than individual models, demonstrating the importance of attendance and prior academic performance. The study identified attendance as an important predictor, proposing focused actions to boost student success. A predictive model to help instructors monitor and intervene with student progress is available, with plans to refine models and explore more data sources. Niyogisubizo al40 proposed a stacking ensemble method to predict university course dropout, a major education issue. Random Forest (RF), Extreme Gradient Boosting (XGBoost), Gradient Boosting (GB), and Feed-forward Neural Networks were used on a 2016–2020 Constantine the Philosopher University in Nitra dataset. The proposed model outperformed base models in accuracy and AUC. Early intervention can reduce dropout rates by identifying students at risk based on social welfare, learning conditions, and family status, according to research. Ensemble learning improved dropout predictions and suggested further research into deep learning models and other factors. Results showed that accurate prediction and early intervention can significantly reduce student dropout.

Bhuyan et al. addressed challenges in data privacy, feature selection, and cancer diagnosis. A novel privacy framework balanced computational cost and user-defined anonymity using multi-objective optimization. A robust feature selection algorithm enhanced classification accuracy by reducing irrelevant features. Additionally, a deep learning-based diagnostic framework integrated YOLO, FrCN, and CNN for precise cancer detection, outperforming conventional methods across datasets41,42,43.

Paper44 explores advanced ML and DL techniques for critical applications, including early cardiovascular disease (CVD) detection, intrusion detection systems (IDS), and Android malware detection. For CVD prediction, a voting ensemble model combined with chi-square feature selection achieved 92.11% accuracy. For IDS, feature selection using PCA and SVD improved classification accuracy, with Ridge Regression reaching 99.85% for binary classification. Lastly, the DL-AMDet deep learning framework effectively detected Android malware, achieving 99.935% accuracy by combining CNN-BiLSTM and Deep Autoencoders for static and dynamic analysis45,46.

Hussain et al.47 emphasized the significance of feature selection in predictive modeling in a foundational survey on data mining techniques. Punitha et al.48 introduced a high-ranking ensemble network with advanced feature selection strategies to enhance student performance prediction. Gaftandzhieva et al.49 explored student satisfaction in blended learning environments, emphasizing the need for adaptive models that consider evolving educational contexts. Hybrid optimization models have effectively enhanced decision-making processes in real-world scenarios50. AI-driven optimization frameworks have shown promising results in improving computational efficiency and predictive accuracy across various domains51. Evolutionary computation methods dynamically adapt to complex problem spaces, contributing to optimization strategies52. Machine learning-based student performance prediction has been extensively explored in education, providing data-driven insights for academic success and early intervention53,54.

The motivation for this study arises from the limitations and gaps identified in previous works on predicting student performance using educational data mining techniques. Existing studies have primarily focused on static feature selection methods, such as correlation analysis, information gain, and Chi-square, which fail to adapt to the dynamic nature of educational data. Many approaches lack diversity in classifier algorithms and ensemble methods, which hinders their ability to provide accurate and flexible predictions in fluctuating educational contexts. Additionally, most studies prioritize accuracy as the sole evaluation metric, neglecting other important measures such as precision, recall, and F1-score.

Another significant drawback is the reliance on historical campus data, with limited efforts to integrate diverse data sources, such as real-time internet-based information, which could enhance prediction capabilities. Previous methods often suffer from an inability to adapt to evolving data patterns, leading to overfitting or underfitting, and fail to incorporate dynamic thresholds for feature selection. The timeliness of predictions and the inclusion of critical data, such as curriculum content and continuous assessment, are also frequently overlooked. Furthermore, the absence of robust ensemble techniques and adaptive models has limited the scalability and effectiveness of prior methods in handling large and complex datasets.

These deficiencies motivated our development of the DE-FS algorithm, which integrates traditional and ensemble feature selection techniques with a dynamic and adaptive thresholding mechanism. By addressing these gaps, our study aims to provide a more accurate, adaptive, and comprehensive solution for predicting student performance, enabling real-time interventions and better decision-making in dynamic educational environments.

The manuscript is presented as follows: “Related works” section: discusses related works. “Problem statement” section discusses the problem statement, “Preliminaries” section discusses the fundamental concepts. “Proposed system” section explains the proposed methodology. “Experimental setup” section describes the hardware, software, datasets, evaluation metrics and methodologies used to conduct and validate the experiments and “Result and Discussion” section presents the experimental findings.

Problem statement

In the field of educational data mining, the precise prediction of student performance is essential for effective teaching strategies. Although traditional feature selection methods have demonstrated potential, they encounter difficulties in adjusting to the diverse educational data. With the constant evolution and diversification of educational data, there is an increasing need for a feature selection technique that can adapt dynamically and consistently enhance the accuracy of the model55. DF-FS tackles this issue by methodically and astutely choosing the most pertinent characteristics from datasets with diverse attributes. Choosing features is critical for enhancing ML models. DF-FS dynamically selects the best collection of features for a classification model based on data attributes.

The goal is to enhance accuracy, stability, and accessibility so educators and researchers can anticipate student outcomes. It solves feature dependency, data type differences, and model-specific feature selection.

Given a dataset D = {(x1, y1), (x2, y2)… (xn, yn)}, where xi denotes the feature vector for the ith student and yi is the corresponding performance label, the objective is to discover the optimal feature subset F* that maximizes a chosen evaluation metric E. This can be expressed as follows:

Subject to:

-

|F*| <= k (where k represents a predefined maximum number of features). This constraint ensures that the selected feature subset remains within practical limits.

-

F* exhibits dynamic adaptability to changing data patterns.

Preliminaries

Chi-square

Feature selection for categorical datasets was performed using the Chi-Square test, which assesses the relationship between features and the target variable56. This method helps identify the most relevant features by measuring statistical dependence. Features with higher Chi-Square values indicate a stronger association with the target variable, making them suitable for further analysis. Proper encoding of categorical variables is essential to ensure accurate results.

Information gain

Information Gain was employed to assess feature importance by measuring the reduction in uncertainty when splitting data, particularly in decision tree models57. Features with higher Information Gain were prioritized for improving prediction accuracy, ensuring the selection of the most relevant attributes while minimizing overfitting.

Correlation matrix

Pearson correlation was used in the Correlation Matrix with a Heat Map to identify features with significant linear relationships to student performance58. Along with Chi-Square and Information Gain, Correlation Analysis was incorporated into the DE-FS framework to enhance feature selection by addressing interdependencies, information gain, and linear correlations, ensuring an efficient and comprehensive approach to educational data analysis.

Dynamic thresholding

It is specifically the Mean and Standard Deviation-Based methodology, helps the DF-FS method uncover relevant features that adapt to changing data patterns7. The mean and standard deviation of feature values are used to calculate a dynamic threshold, a specified number of standard deviations from the mean. Data patterns change; thus, the mean and standard deviation are reassessed to alter the threshold. Features inside this threshold are kept; those outside may be removed. This dynamic technique was chosen for its ability to maintain feature selection precision while adapting to changing data patterns, making it ideal for educational data mining7,47.

Adaptive thresholding

For educational data mining, the DF-FS algorithm requires adaptive thresholding8. For feature value central tendency and dispersion, Mean and Standard Deviation-Based Thresholds is used. This adaptive threshold helps educational datasets that change over time by adapting dynamically to data distributions. Setting the threshold to a specified number of standard deviations from the mean matches the data distribution, retaining only important features for analysis. This versatility makes it a powerful and reliable tool for sustaining feature quality as data patterns change. Feature selection and prediction accuracy improve. Thus, this method is appropriate for data qualities that change often59.

Proposed system

Rationale

The DE-FS algorithm has several strong justifications. Firstly, it improves at feature selection by identifying the most important features, hence improving the prediction results. DE-FS is particularly useful in dynamic environments such as education, where data is always changing. The approach significantly improves prediction accuracy, which is crucial when predicting student progress. Furthermore, DE-FS lowers information overload by removing unnecessary features, which improves model efficiency and accessibility. Its adaptive thresholding function ensures that the parameters used to choose features are consistent with how the data develops, which is useful as educational data evolves. DE-FS contributes to the development of data-driven educational approaches and interventions, resulting in improved educational outcomes. DE-FS can be used to solve problems in educational data mining because it can work with different datasets and areas and can be used over and over again.

The use of chi-square, information gain, and correlation analysis in DE-FS is a calculated decision that builds on each method’s capabilities. The chi-square test detects statistical mutual dependence, information gain determines the relevance of features and correlation analysis investigates feature interaction. DE-FS combines these fundamental methods to create a robust ensemble capable of handling a wide range of patterns in educational data. Despite its potential difficulty, DE-FS’s adaptability overcomes this by tailoring feature selection algorithms to changing educational environments. This adaptability ensures that DE-FS remains successful in managing the challenges of combining multiple methods and accurately predicting student performance in changing educational conditions.

DE-FS offers many convincing reasons. First, it enhances feature selection by detecting key features, increasing prediction outcomes. DE-FS works well in dynamic situations like schooling where data changes frequently. It greatly improves forecast accuracy, which is critical for student development prediction. DE-FS also reduces information overload by reducing unneeded elements, improving model efficiency and accessibility. As educational data evolves, its adaptive thresholding algorithm guarantees that feature selection parameters match data development. DE-FS improves educational results by developing data-driven educational approaches and solutions. DE-FS can be reused and used across datasets and fields to solve educational data mining problems.

DE-FS uses chi-square, information gain, and correlation analysis to maximize their strengths. The chi-square test detects statistical mutual dependency, information gain determines feature relevance, and correlation analysis examines feature interaction. DE-FS uses these essential principles to produce a strong ensemble that can handle many educational data patterns. DE-FS adapts feature selection algorithms to changing educational contexts, overcoming its potential difficulty. DE-FS’s versatility helps it combine several methodologies and effectively forecast student performance under shifting educational situations.

Algorithm

DE-FS iteratively picks the best dataset characteristics. First, it selects a subset of features and evaluates performance using a dynamic threshold. Each feature’s relevance is assessed using several selection methods. Performance is measured using a metric and thresholds are modified accordingly. Repeat until a stopping point, such as iterations or performance, is achieved. The algorithm continuously examines the best-performing feature subset to find the best set depending on the criteria.

The complete description of the algorithm can be found in Algorithm 1.

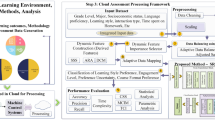

DE-FS for dynamic feature selection employs a systematic approach (Fig. 1). It uses traditional feature selection techniques such as Chi-square, Information Gain, and correlation analysis to perform dynamic selection. The main loop evaluates the relevance of each feature. Features that exceed the thresholds are included in the candidate subset. It controls execution by applying defined stopping criteria. DE-FS tracks the highest performing feature subset (F*) in each cycle. This subset is optimized and validated using tenfold cross-validation. DE-FS was evaluated to ensure its durability and effectiveness with educational datasets, with the boosting feature quality and prediction accuracy while addressing challenges posed by changing data patterns. The algorithm is distinguished by its use of adaptive and dynamic thresholding methods. They allow us to change the feature selection criterion in response to changing data patterns. It detects significant characteristics by responding to changing educational datasets. It ensures that the algorithm is successful and responsive to data variability. Our methodology is unusual in that it combines traditional feature selection approaches (Chi-square, Information Gain, and correlation analysis) with a dynamic threshold mechanism. This combination allows us to address the difficulties more effectively. The performance assessment reveals that the proposed strategy improves prediction accuracy in educational data mining.

Algorithm selection and synergy

Our research improves performance by combining feature selection methods with classification algorithm. Feature selection techniques are chosen based on their relevance to data features. We use Chi-Square to evaluate categorical variables like gender and school60. Information Gain evaluated the role of each attribute in generating pass/fail results60. The Correlation Matrix with Heat Map looks at the relationships between continuous and ordinal properties. Random Forest was chosen for classification because of its ability to handle complex, non-linear interactions and generate feature significance ratings. Decision trees are chosen because of their accessibility and ability to handle many attribute types. Neural Networks provided insights into attribute influence and linear correlations, whereas Support Vector Machines handled high-dimensional data and class imbalances.

Algorithmic combination and advantages

Our methodology for constructing algorithmic combinations61 aims to maximize the benefits of each feature selection and classification algorithm in order to develop a high-accuracy model62.

These algorithms are chosen for their specific advantages, resulting in an integrated effect that addresses the challenges experienced by current forecasting educational performance approaches. Algorithmic combinations are formed by combining feature selection and classification algorithms63. Our dataset has a different range of attributes with increasing importance. Feature selection strategies are chosen based on how well they match the properties of the features. We evaluate classification algorithms based on their ability to manage complicated interactions, nonlinear patterns, and class imbalances while maintaining dataset properties and aims62. For instance, we use Information Gain using Random Forest, a classifier known for its ensemble approach and complex relationship capturing. The suggested method improves prediction by identifying key traits and deleting irrelevant data. It decreases class gaps and gives a comprehensive overview of student success prediction by studying attribute-student success relationships. The method enhances model accuracy and usability. Using feature selection with classification algorithms improves individual benefits and addresses dimensionality reduction, nonlinear correlations, and class imbalances. It produces a model that outperforms its individual components.

Figure 2 depicts the use of feature selection approaches and classification algorithms in predictive modelling for student achievement in educational data analysis. It depicts how these aspects interact and collaborate to improve their efficiency and solve challenges.

Feature Selection Methods: Fig. 2 shows three feature selection strategies utilized in the study: Chi-Square, Information Gain, and Correlation Matrix. These methods find the most relevant attributes in a dataset. The numerical values (3, 1, 2) next to each technique denote the number of properties it assesses. Chi-Square, for example, analyzes three attributes, Information Gain one, and Correlation Matrix two.

Classification Algorithm: Fig. 2 shows many classifying methods that are necessary for generating predictions based on the selected attributes. Random Forest, Decision Trees, Neural Network, Support Vector Machine, J48, and Naive Bayes are some of the techniques used. The numerical values next to each algorithm indicate the number of attributes used for prediction.

Enhancing Strengths: Fig. 2 shows how combining feature selection approaches and classification algorithms strengthens each component. For example, Decision Trees with “2” attributes are combined with Information Gain, which concentrates on a particular attribute. This combination improves both feature significance and prediction accuracy. Different combinations provide specific benefits while improving overall performance.

Addressing issues: Fig. 2 also demonstrates how various combinations address different problems in the dataset and prediction purpose. Some combinations reduce dimensionality, deal with non-linear relationships, correct class imbalances, and manage variability, whereas others maximize hyperparameter settings.

Interaction and Synergy: Fig. 2 illustrates the interconnections between feature selection approaches and classification algorithms, demonstrating their collaborative function. The linkages show how each element complements and strengthens the others, indicating an integrated approach to enhancing predictive modeling.

Figure 2 emphasizes the significance of using the best combination of feature selection approaches and classification algorithms to improve forecast accuracy for student performance61.

It shows how effective feature selection reduces noise while identifying relevant qualities, hence improving classification algorithm performance62. The graph shows a number of classification methods, each of which is suitable for particular elements of the prediction task63. Overlapping areas in the diagram imply successful strategy integration and effectiveness. This method solves educational data analysis issues such complex relationships and unequal class distributions60. A mixed predictive modeling strategy for educational data is shown in the diagram. Features selection and categorization can be used to create a more accurate and useful predictive model62. In future work, we plan to incorporate advanced optimization algorithms64,65,66 to further enhance the feature selection process, ensuring even more precise identification of relevant features. This will allow for continuous refinement of the dynamic thresholds, improving the model’s accuracy and adaptability to large-scale, evolving educational datasets.

Experimental setup

Python and scikit-learn were used to construct the DF-FS algorithm2. The experiment used a system with an Intel Core i7 processor, NVIDIA GeForce GTX 1650 GPU, and 16 GB DDR4 RAM. The model was trained and tested using two public Portuguese high school datasets11. Several approaches were used for feature selection and classification, including Decision Trees, Random Forests, Support Vector Machines, Neural Networks, Naïve Bayes, and J4812,13,14,15,16,17. Humans and machines set hyperparameters. Performance was measured by accuracy, F1-score, and CV-score21.

Dataset used

The analysis predicted student progress using two public datasets. The records from two Portuguese schools included academic success, socio-demographic data, and school attributes. The data came from questionnaires and school reports. One dataset has 33 parameters for pupils’ math skills and the other for Portuguese language training. Student success trends may have been investigated with longitudinal data. This improved analysis and prediction. The extensive use of these datasets in earlier studies makes them relevant to our research goals. Because they are relevant to the research’s goals, these datasets allow for an in-depth investigation of the complexity of forecasting student performance by considering multiple aspects. The files explain research on how demographic, socioeconomic, and academic factors affect student achievement. Their widespread use in educational data mining shows their importance in solving student achievement prediction research issues. The dataset’s many features make it ideal for testing feature selection, classification, and model combining methods.

Data preprocessing

Missing values were imputed depending on the data, and categorical variables were encoded for numerical processing. Outlier management and data normalization were employed to reduce the impact of unexpected results. Data quality checks found duplicates and formatting mistakes. Feature engineering improved model prediction. To evaluate models properly, datasets were split into training and testing subsets.

Machine learning approaches

We applied various machine learning techniques to enhance feature selection and classification. Decision Trees and Random Forest simplified data patterns and improved prediction accuracy, while SVM handled high-dimensional, imbalanced data effectively. Neural Networks provided probabilistic insights, Naïve Bayes efficiently addressed class imbalances, and J48 excelled in large dataset classification.

Performance evaluation

-

1.

The performance is assessed using a confusion matrix. In the objective of the proposed work, the four alternative outcomes of a binary classification problem are specified as follows:

-

2.

True Positive (TP): The number of occurrences where the actual class and predicted class are both positive.

-

3.

True Negative (TN): The number of occurrences in which the actual and expected classes are both negative.

-

4.

False Positive (FP): The number of times in which the true category is negative while the predicted category is positive.

-

5.

False Negative (FN): The number of occurrences where the true category is positive while the predicted category is negative. This study evaluates the performance of the suggested models using accuracy, f-score, and CV-score metrics.

-

1.

Accuracy: This is the number of correctly predicted observations divided by the total number of observations. It measures how well the model works overall and is worked out like this67:

$$Accuracy=\frac{TP+TN}{(TP+TN+FP+FN)}$$(2)where TP = True Positive, TN = True Negative, FP = False Positive, FN = False Negative.

-

2.

The F1-score is the average of recall and precision. It is useful when the data is not balanced and we must find a balance between precision and recall. Here’s how to calculate your F1 score4,67:

$$Precision=\frac{TP}{TP+FP}$$(3)$$Recall=\frac{TP}{TP+FN}$$(4)$$F1-score=\frac{2*Precision*Recall}{Precision+Recall}$$(5) -

3.

The cross-validation score (CV-score) evaluates a machine learning model’s performance on untrained data. Cross-validation is a resampling strategy that involves separating data into training and validation sets and repeating the process several times. The cross-validation score is generated by averaging the performance across each validation set. Its objective is to examine if the model is overfitting or underfitting67.

$$CV-Score=\frac{\left(SumofvalidationScores\right)}{\left(Numberofiterations\right)}$$(6)The performance of the proposed model was evaluated using these metrics, and the results were compared with previous studies to determine the effectiveness of the proposed approach.

Result and discussion

Analyzing results: DF-FS for predicting student performance

Three experiments were carried out to predict student performance. In the first experiment, the chi-square feature selection method was applied to the student data subset from the UCI Machine Repository to identify the most important attributes. In the second experiment, the information gain method was used, and in the third, the correlation matrix with heat map was employed. Table 2 shows the features chosen by each feature selection technique, with G3 (the final grade) as the target feature.

Among these, the correlation matrix with heat map performed best, as it not only captured features highly correlated with student performance but also minimized redundancy. The distribution of attribute values is visualized in bar charts, as depicted in Figs. 3 and 4, showing the distribution for each attribute.

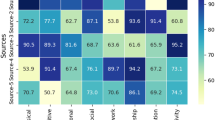

Table 3 presents the performance of six machine learning algorithms using three feature selection methods: Chi-Square, Information Gain, and Correlation Heat Map. The performance is evaluated using accuracy and F1-score. Neural Networks (NN) and Random Forest (RF) are the top performers in terms of accuracy, both achieving over 91% across all feature selection methods. Notably, NN achieves an accuracy of 92.43% with Correlation Heat Map.

RF exceeds all other feature selection approaches, with Information Gain being the most successful. In terms of F1-score, Neural Network and Naive Bayes outperform. It takes Correlation Heat Map to get an F1-score of 92.55% for NN and Information Gain to get the same score for Naive Bayes. Support Vector Machine (SVM) and RF both do well, but RF gets the best F1-score (92.91%) when Information Gain is used. In comparison, Decision Trees (DT) and J48 perform poorly. From 88.98 to 90.68%, DT’s accuracy and F1-score range, while J48’s varies from 87.29 to 91.53%. Naive Bayes performs well with Information Gain, scoring 92.91% in both accuracy and F1-score, but its performance suffers while utilizing Correlation Heat Map. When Information Gain is used, RF gets an accuracy of 92.43% and an F1-score of 92.55%, but when Correlation Heat Map is used, it drops to 86.44% accuracy and an F1-score of 86.89%.

Table 4 provides a comparison of different algorithms on the Portuguese dataset, using three feature selection methods: Chi-Square, Information Gain, and Correlation Heat Map. There are two measures used for the evaluation: accuracy and F1-score. Three algorithms—Neural Networks (NN), Random Forest (RF), and Naive Bayes (NB)—are the most accurate. All three get above 90% accuracy across all feature selection methods. NN works best, with a 92.82% success rate, which can be reached with both the Chi-Square and Correlation Heat Map feature selection methods. RF and NB perform well with all of the feature selection methods, with Chi-Square being the best for both of them. When examining F1-score, NB and RF take the lead, with NB achieving the greatest F1-score of 92.20% utilizing Correlation Heat Maps. The NN method also does well, with an F1-score of 89.23%, which is the second-highest of all the methods. Meanwhile, Support Vector Machine (SVM) and J48 work less well.

SVM achieves accuracy and F1-score values ranging from 90.23 to 92.30%, while J48 falls between 90.05% and 92.28%. The performance of each algorithm is influenced by the feature selection method. RF performs consistently well but achieves its highest accuracy and F1-score with Chi-Square. NB performs best with Correlation Heat Map, achieving 94.30% accuracy and a 92.20% F1-score. Decision Trees (DT) perform lowest with Chi-Square but improve when using Correlation Heat Map, where they reach their highest accuracy and F1-score.

Table 5 compares ensemble stacking performance of six machine learning algorithms using a Portuguese dataset. The algorithms’ accuracy and cross-validation (CV) scores are assessed. Chi-Square, Information Gain, and Correlation Heat Map stack. We found that Random Forest (RF) and Support Vector Machine (SVM) have the highest accuracy, ranging from 93.61 to 95.36%. The maximum accuracy for RF is 94.67% with Correlation Heat Map stacking, while SVM is 95.36% with Information Gain stacking.

Decision Trees (DT), Naive Bayes (NB), and Neural Networks (NN) all have good accuracy ranging from 92.93 to 94.39%. Information Gain stacking provides DT the good accuracy (96.03%), whereas Chi-Square stacking gives NB the highest accuracy (94.39%). In terms of cross-validation (CV) score, RF and NB perform good, with CV values ranging from 89.74 to 94.36%. The best Information Gain stacking score for RF is 93.33%, while for NB it is 94.36%.

Neural Networks (NN) and J48 perform relatively poorly in both accuracy and CV score, with accuracy values ranging from 86.17 to 94.05% and CV scores between 83.33% and 91.28%. The performance of each algorithm is influenced by the stacking method used. RF performs well across all stacking methods but achieves its best results with Correlation Heat Map stacking. DT and NB excel with Information Gain stacking, while SVM reaches its peak performance with Information Gain stacking for both accuracy and CV score.

The comparison of different algorithms on Mathematics dataset is given in Table 6. In the comparison we observed that Decision Trees (DT) perform consistently across all three stacking methods, with particularly strong results using Information Gain. Random Forest (RF) shows strong performance across all methods, especially with the Chi-Square method. Support Vector Machine (SVM) has lower performance across all methods, particularly with Correlation Heat Map. Neural Networks (NN) perform well with Chi-Square and Information Gain but show reduced performance with Correlation Heat Map. Naive Bayes (NB) performs best with Information Gain but poorly with Chi-Square and Correlation Heat Map. J48 performs well with Chi-Square and Information Gain but performs less effectively with Correlation Heat Map.

Table 7 compares the performance of different ensemble stacking methods on Mathematics and Portuguese datasets using Accuracy and F1-Score metrics. For the Mathematics dataset, the Chi-Square ensemble stacking approach achieved the accuracy of 95.80 and F1-Score of 96.91, while the Correlation Heat Map method achieved 95.36. The Chi-Square approach achieved the F1-Score of 91.80 for the Portuguese dataset. The ensemble stacking approaches performed well on both datasets, with accuracy ranging from 94.17 to 95.80 and F1-Scores ranging from 90.91 to 96.91.

Figure 5 illustrates the performance of different ensemble stacking methods (Chi-Square, Information Gain, and Correlation Heat Map) on the Mathematics dataset, where the model shows strong predictions for both “Pass” and “Fail” categories. Figure 6 shows the results for the Portuguese dataset, emphasizing the model’s ability to make accurate predictions, with 61 correct predictions and only 10 incorrect ones.

Figure 7 compares the accuracy of the proposed ensemble model against six base classifiers (DT, RF, SVM, NN, NB, and J48). The proposed model achieved the highest accuracy of 95.8% on the Mathematics dataset, significantly outperforming the base models, with Neural Networks (NN) coming second at 93.22%. Random Forest (RF) and Naive Bayes (NB) showed accuracies of 91.53% and 92.37%, respectively. These results underscore the strength of the ensemble approach, particularly for predicting student performance.

Figure 8 figure presents the mean computation time in seconds for different algorithms on the Mathematics and Portuguese datasets. The proposed “DF-FS” model demonstrates the fastest computation times (32.3 s for Mathematics and 41.5 s for Portuguese), indicating its efficiency compared to other base classifiers such as NN and NB, which take significantly longer. This suggests that the proposed model not only delivers superior performance but also operates more efficiently.

Enhancing predictive models with DE-FS

This section explores the results of our study in predicting student performance. Our analysis of 18 carefully chosen combinations reveals how predictive model performance is affected by data distribution, feature attributes, and class imbalances. The effectiveness of algorithms is notably influenced by the data distribution, with certain algorithms performing exceptionally well based on dataset attributes such as categorical, ordinal, or continuous features. Table 8 summarizes the effectiveness of different feature selection and classification algorithm combinations on the Mathematics and Portuguese datasets. The combinations are tailored to specific dataset characteristics, such as handling categorical, ordinal, or continuous features. Key insights include the ability of Chi-Square with Random Forest to effectively handle categorical attributes and class imbalances, while Information Gain with Decision Trees excels in identifying predictive attributes, leading to high performance in ensemble models.

Results on mathematics dataset

For the Mathematics dataset, “Chi-Square + Random Forest” pairing excels due to its ability to handle categorical attributes and class imbalances, making it effective for complex, non-linear data patterns as shown in Table 9. The “Information Gain + Decision Trees” combination improves in collecting important attributes, resulting in high recall and balanced performance. Meanwhile, “Correlation Heat Map + J48” uses its ability to detect linear correlations, resulting in good models with balanced precision and recall.

The differences in performance across the various combinations are determined by how effectively the feature selection methods correspond to the dataset’s features and are compatible with the classification algorithms. Combinations that are properly customized to the dataset’s features, distribution, and class imbalance management often produce better results. Choosing the right combination requires adapting it to the dataset’s particular features, ensuring a smooth interplay between feature selection and classification methodologies for successful predictive modeling in educational data analysis (Figs. 9, 10 and 11). Table 10 gives the performance Evaluation of Different Feature Selection and Classification Algorithm Combinations on Portuguese dataset.

Results on Portuguese dataset

The “Chi-Square” method is also effective, and the “Correlation Heat Map” method works well in some situations (Figs. 12, 13 and 14). Notable combinations include “Information Gain + Decision Tree” and “Information Gain + Random Forest,” both of which perform well as seen in Table 10. The “Information Gain + Decision Tree” combination performs better in terms of accuracy, precision, and recall. In contrast, “Information Gain + J48” demonstrates low accuracy and precision. The “Correlation Heat Map + SVM” combo provides good accuracy and precision. Several important features were used for evaluating combinations in both the mathematics and Portuguese datasets, including:

-

1.

Data Type: For datasets with mostly categorical features and strong connections, such as those addressed by “Chi-Square” approaches, these techniques can effectively capture and use these associations to improve performance. On the other hand, “Information Gain” is better suited to datasets with a large number of predictive attributes, allowing DT, RF, and Support Vector Machines (SVM) to focus on the most informative features.

-

2.

Interdependencies: Feature selection algorithms should take into account the interrelationships between variables. Techniques like the “Correlation Heat Map,” which highlight linear correlations, are useful for classifiers like SVMs.

-

3.

Classification Models: Decision trees are prized for their interpretability and ability to handle a wide range of data types, making them ideal for methods such as “Chi-Square” and “Information Gain.” SVMs, on the other hand, perform better when dealing with large amounts of data and complex decision boundaries.

Based on the data in Fig. 15, Combination 8 is the most effective for the Mathematics dataset. It uses “Information Gain” to choose the features and Random Forest (RF) as the classification method.

This combination is the best on all three criteria, as shown by its Pareto front position. Due to its accuracy and low false positives and negatives, Random Forest is best for mathematics datasets due to its adaptability and “Information Gain” impact. Figure 16 shows the Pareto front analysis for the Portuguese dataset, showing that “Information Gain” and SVM work well. This combination balances Accuracy, Precision, and Recall on the Pareto front, in the upper right corner. This indicates balanced assessment elements, making it a good choice for Portuguese dataset.

The study analyzes how datasets and feature selection and classification algorithms can predict student performance. The research uses 18 combinations of these strategies to show how data distribution, feature characteristics, and class imbalances affect model performance. The results show that feature selection approaches must match dataset attributes for optimal predictions. Since Random Forest can handle class imbalances, “Chi-Square + RF” works well with group qualities and strong relationships. Furthermore, “Information Gain” functions well in several contexts, making it a solid choice. It improves educational data prediction by explaining how feature selection and classification algorithms interact. It informs academics and practitioners on how to construct predictive models that match the dataset’s properties, improving student performance projections.

Misclassification rates for different feature selection and classification method combinations from Table 9 are shown in Fig. 17. The most effective model is Chi-Square + Random Forest (RF), which has the lowest error rate of 4.20%. Information Gain + Naïve Bayes (NB) has the greatest error rate (11.24%), 167.6% greater than the best-performing model. Figure 17 shows that Chi-Square-based feature selection, especially with RF, offers the best classification performance. However, Naïve Bayes models tend to have higher misclassification rates, independent of feature selection method. The histogram shows error rates, helping choose the best student performance prediction models.

Misclassification rates for different feature selection and classification method combinations from Table 10 are shown in Fig. 18. Information Gain + DT has the lowest error rate, 96.03% accuracy, 3.97% error rate. The highest error rate is 13.83% for Information Gain + J48, which has an accuracy of 86.17%. Feature selection approaches affect classification results, as seen by the 9.86% gap between the best and worst algorithms. Information Gain-based models outperform the other three feature selection approaches, with three out of six combinations achieving 94% accuracy. Chi-Square and Correlation Heat Map models are less accurate in some classifiers. Selecting the best feature selection and classification pair is important since several combinations around 92–95% accuracy reflect a concentration of models within a restricted performance range.

Figure 19 shows the average calculation time for classification models and feature selection approaches, with a red dashed line. This lets you compare model efficiency. The fastest model, “Chi-Square + DT,” computes 78% faster than the slowest, “Information Gain + RF,” which takes 75% longer. Computationally cheaper than Information Gain or Correlation Heat Map, Chi-Square feature selection models run faster. Complex classifiers like Random Forest (RF) and Neural Networks (NN) take longer to compute since they use many decision trees and matrix computations. Decision Tree (DT) and Naïve Bayes (NB) are the most efficient classifiers, with calculation speeds 40–60% faster than slower models. The J48 classifier, a decision tree variant, balances performance by keeping calculation time within 5–15% of the average. This analysis says “Chi-Square + DT” or “Chi-Square + NB” are optimal for speed.

The Fig. 20 compares classification models’ average calculation time with Chi-Square, Information Gain, and Correlation Heat Map feature selection methods. Model computation times vary, with more classifiers like Neural Networks (NN) and Random Forest (RF) taking longer than simpler models like Decision Trees (DT) and Naïve Bayes (NB). Information Gain + NN has the largest calculation time, 25% more than other models. Chi-Square + NB has the lowest computation time, 30% lower than average. The computing time trade-off shows model complexity versus efficiency. Neural Networks and Random Forests are accurate but need more processing resources, making them unsuitable for time-sensitive applications. However, Decision Trees and Naïve Bayes may have slightly lower classification performance but faster computations. The confusion matrices in Figs. 21 and 22 provide a visual representation of classification performance for different feature selection and classification algorithm combinations.

Comparative analysis

Table 11 presents the results of many machine learning studies that predicted student performance. Different studies employed different models. For 32 features, Random Forest (RF) and Support Vector Machine (SVM) classifiers with Recursive Feature Elimination (RFE) and Boruta algorithms performed 86.55% in Mathematics and 89.74% in Portuguese. In a combined filtering technique, Naïve Bayes (NB), Decision Tree (DT), and RF classifiers achieved 93% accuracy in Mathematics and 94% accuracy in Portuguese for 15 features. Using 10 features, XGBoost, Gradient Boosting (GB), and Light GBM models with ensemble modeling achieved 96.63% accuracy for Mathematics and 94.28% for Portuguese.

An SVM using a combined filter-wrapper technique had 92.91% mathematics accuracy and 93.91% Portuguese accuracy with 15 features. The suggested study used 12 features, NB, DT, SVM, KNN, and NN classifiers, chi-square, information gain, and correlation heat map approaches to achieve 95.8% mathematics precision and 95.3% Portuguese precision. Results reveal that the suggested strategy is more accurate than earlier research.

Discussion

DE-FS technology is a breakthrough in educational data mining since it combines standard feature selection methods with an adaptable ensemble approach to maintain accuracy as educational data changes. DE-FS outperformed “Chi-Square + Random Forest.” in a Mathematics dataset study. The 95.80% success percentage is much higher than previous methods. This shows how it can enhance student performance predictions and target interventions in real-world schooling. DE-FS is an innovative tool that helps schools improve learning, allocate resources, and forecast accurately. It could improve things, but further research is needed to make it more adaptable and evaluate its performance in other educational situations. More meaningful data and better student achievement will ensue.

The DF-FS method outperforms previous methods for altering datasets and data patterns. It does this by combining feature selection and classification. DF-FS employ adaptive thresholding to select the optimal group by continuously monitoring how significant each feature is, unlike older systems, which often encounter feature relevance issues and overfit or underfit. By showing how judgments are made, weighting the most essential variables enhances prediction accuracy and clarity. The DF-FS technique is good at resolving class imbalances and providing fair and accurate predictions since it focuses on minority classes. It can manage linear and nonlinear interactions with Random Forest and Neural Network methods, improving prediction accuracy. DF-FS makes prediction modeling more flexible, accurate, class imbalance-tolerant, and understandable for educational data analysis.

Significance and contribution

A major advancement in Educational Data Mining (EDM) and ICT is Dynamic Ensemble Feature Selection (DE-FS). Data-driven strategies boost student performance and resource allocation in the DE-FS, improving education. Its versatility allows it to quickly adapt to changing trends in modern educational environments, making it perfect for dynamic educational data. As ICT grows and provides more data, DE-FS must give students rapid, individualized aid to study better. Combining DE-FS with ICT shows how data and technology are becoming more significant in education, improving student performance and making teaching easier.

Limitations

DE-FS is improving despite its difficulties. Its performance depends on training data accuracy and quality because complete or skewed datasets lower its effectiveness. DE-FS requires a lot of computational power, which could be a concern for schools with poor tech. It is also important for the features to be well-defined, so datasets with poorly defined attributes may make the method less effective. Also, universities that don’t have data science tools find it hard to adopt DE-FS because they need people who know how to analyze and look at data. There needs to be work done to improve the quality of the data, make the best use of resources, and train teachers so that DE-FS can keep working well in school data mining.

Future work

Future research will focus on enhancing the adaptability and scalability of the DE-FS framework to accommodate larger and more diverse educational datasets. This includes exploring real-time implementation to enable instantaneous predictions and interventions, as well as incorporating additional data sources such as behavioral and social interactions to improve prediction accuracy further. Efforts will also be directed toward optimizing computational efficiency to make DE-FS more accessible for institutions with limited resources. Additionally, expanding the framework to evaluate its performance across various educational contexts and integrating advanced machine learning techniques, such as deep learning, will help address the evolving challenges in educational data mining.

Conclusion

This study shows that data-driven decision-making improves student performance prediction in dynamic educational environments. DE-FS, our feature selection model, combines Chi-square, information gain, correlation heat maps, ensemble stacking, and dynamic thresholding to achieve 95.8% accuracy while reducing the feature set to 12 attributes, a significant improvement over static feature selection methods. DE-FS improves predictive accuracy and computational efficiency by minimizing feature redundancy, making it ideal for dropout prediction, academic interventions, and personalized learning pathways. However, scalability for huge educational datasets and feature interpretability for educators and decision-makers remain issues. Further study will improve DE-FS to handle multi-modal learning analytics, integrate it with deep learning models for feature extraction, and optimize it for real-time adaptive learning applications. DE-FS improves Educational Data Mining by combining accuracy, flexibility, and reduced computational complexity, overcoming static approaches’ drawbacks and improving educational outcomes.

Data availability

The data that supports the findings of this study are available at https://archive.ics.uci.edu/dataset/320/student+performance.

References

Leena, H. et al. Predicting student academic performance using support vector machine and random forest. In 2020 3rd International Conference on Education Technology Management (ICETM 2020), December 17–19, 2020, London, United Kingdom8 (ACM, New York, NY, USA, 2020). https://doi.org/10.1145/3446590.3446607

Keser, S. B. & Aghalarova, S. H. E. L. A. A novel hybrid ensemble learning algorithm for predicting academic performance of students. Educ. Inf. Technol. 27, 4521–4552. https://doi.org/10.1007/s10639-021-10780-0 (2022).

Abid, A., Kallel, I., Blanco, I., & Benayed, M. Selecting relevant educational attributes for predicting students’ academic performance. https://doi.org/10.1007/978-3-319-76348-4_63 (2018).

Kongara, D. & Sathyanarayana, N. Relief-F and budget tree random forest based feature selection for student academic performance prediction. Int. J. Intell. Eng. Syst. 12, 30–39. https://doi.org/10.22266/ijies2019.0228.04 (2019).

Mason, C., Twomey, J., Wright, D. & Whitman, L. Predicting engineering student attrition risk using a probabilistic neural network and comparing results with a back propagation neural network and logistic regression. Res. High. Educt. 59(3), 382–400 (2018).

Zaffar, M. et al. A hybrid feature selection framework for predicting students performance. Comput. Mater. Contin. 70, 1893–1920. https://doi.org/10.32604/cmc.2022.018295 (2021).

Paddalwar, S., Mane, V. & Ragha, L. Predicting students’ academic grade using machine learning algorithms with hybrid feature selection approach. ITM Web of Conferences 44, 03036. https://doi.org/10.1051/itmconf/20224403036 (2022).

Evangelista, E. & Sy, B. An approach for improved students’ performance prediction using homogeneous and heterogeneous ensemble methods. Int. J. Electr. Comput. Eng. 12, 5226–5235. https://doi.org/10.11591/ijece.v12i5.pp5226-5235 (2022).

Marbouti, F., Diefes-Dux, H., Madhavan, K. & & Models for early prediction of at-risk students in a course using standards-based grading. Comput. Educ. 103 https://doi.org/10.1016/j.compedu.2016.09.005 (2016).

Malik, S. & Jothimani, K. Enhancing student success prediction with featurex: A fusion voting classifier algorithm with hybrid feature selection. Educ. Inf. Technol. https://doi.org/10.1007/s10639-023-12139-z (2023).

Malik, S., Jothimani, K. & Ujwal, U. J. A comparative analysis to measure scholastic success of students using data science methods. In: (eds Shetty, N. R., Patnaik, L. M. & Prasad, N. H.) Emerging Research in Computing, Information, Communication and Applications. Lecture Notes in Electrical Engineering, vol 928. Springer, Singapore. https://doi.org/10.1007/978-981-19-5482-5_3 (2023).

Zaffar, M., Hashmani, M. & Savita, K. S. Performance analysis of feature selection algorithm for educational data mining. 7–12. https://doi.org/10.1109/ICBDAA.2017.8284099 (2017).

Dabhade, P. et al. Educational data mining for predicting students’ academic performance using machine learning algorithms. Mater. Today: Proc. 47. https://doi.org/10.1016/j.matpr.2021.05.646 (2021).

Thaher, T. et al. An enhanced evolutionary student performance prediction model using Whale optimization algorithm boosted with sine-cosine mechanism. Appl. Sci. 11, 10237. https://doi.org/10.3390/app112110237 (2021).

Tsai, C. F., Sue, K. L. & Chiu, Y. H. H. A. Combining feature selection, instance selection, and ensemble classification techniques for improved financial distress prediction. J. Bus. Res. 130, 200–209. https://doi.org/10.1016/j.jbusres.2021.03.018 (2021).

Zaffar, M., Ahmed, M., Savita, K. S., Sajjad, S. & & & A study of feature selection algorithms for predicting students academic performance. Int. J. Adv. Comput. Sci. Appl. 9 https://doi.org/10.14569/IJACSA.2018.090569 (2018).

lrefaey, H., Gad, A., Abohany, A., Sorour, S. & & & An efficient data mining technique for assessing satisfaction level with online learning for higher education students during the COVID-19. IEEE Access. 10, 6286–6303. https://doi.org/10.1109/ACCESS.2022.3143035 (2022).

Malik, S. & Jothimani, K. Enhancing student success prediction with featurex: A fusion voting classifier algorithm with hybrid feature selection. Educ. Inf. Technol. 29, 8741–8791. https://doi.org/10.1007/s10639-023-12139-z (2024).

Ujwal, U. J., & Saleem, M. A hybrid weight based feature selection algorithm for predicting students. Academic Advancement by employing data science approaches. Int. J. Educ. Manage. Eng. (IJEME) 13, 1–22. https://doi.org/10.5815/ijeme.2023.05.01 (2023).

Malik, S. & Malik, S. Hybrid data science approaches to predict the academic performance of students. In Advances in Communication and Applications. ERCICA 2023. Lecture Notes in Electrical Engineering, Advances in Communication and Applications (eds Shetty, N. R., Prasad, N. H. & Nagaraj, H. C.) vol 1105. (Springer, Singapore, 2024). https://doi.org/10.1007/978-981-99-7633-1_39.

Kumar, M. et al. Analysis of feature selection and data mining techniques to predict student academic performance. (2022). https://doi.org/10.1109/DASA54658.2022.9765236

Wei, G., Zhao, J., Feng, Y., He, A. & Yu, J. A novel hybrid feature selection method based on dynamic feature importance. Appl. Soft Comput. 93, 1568–4946. https://doi.org/10.1016/j.asoc.2020.106337 (2020).

Rashid, A. N. M. B. et al. Cooperative co-evolution for feature selection in big data with random feature grouping. J. Big Data 7, 107. https://doi.org/10.1186/s40537-020-00381-y (2020).

Mohammed Faris, M. N., Mahmud, M. F. M. & Salleh, B. A differential evolution-based algorithm with maturity extension for feature selection in intrusion detection system. Alexandria Eng. J. 81, 178–192. https://doi.org/10.1016/j.aej.2023.09.032 (2023).

Youssef, M. et al. A predictive approach based on efficient feature selection and learning algorithms’ competition: Case of learners’ dropout in MOOCs. Educ. Inf. Technol. 24, 3591–3618. https://doi.org/10.1007/s10639-019-09934-y (2019).

Nayak, P. et al. Predicting students’ academic performance by mining the educational data through machine learning-based classification model. Educ. Inf. Technol. https://doi.org/10.1007/s10639-023-11706-8 (2023).

Badal, Y. T. & Sungkur, R. K. Predictive modelling and analytics of students’ grades using machine learning algorithms. Educ. Inf. Technol. 28, 3027–3057. https://doi.org/10.1007/s10639-022-11299-8 (2023).

Ezz, M. & Elshenawy, A. Adaptive recommendation system using machine learning algorithms for predicting student’s best academic program. Educ. Inf. Technol. 25, 2733–2746. https://doi.org/10.1007/s10639-019-10049-7 (2020).

Ali, A. M. et al. Explainable Machine Learning Approach for Hepatitis C Diagnosis Using SFS Feature Selection, (2023).

Sahana, P. et al. Software Defect Predictor and Classifier Tool Using Machine Learning Techniques. In 2023 International Conference on Network, Multimedia and Information Technology (NMITCON) (2023).

Rua Ismail, M., Omer, M., Tabir, N. & Mahadi, I. A. Sentiment Analysis for Arabic Dialect Using Supervised Learning. In International Conference on Computer, Control, Electrical, and Electronics Engineering (ICCCEEE), 2018 (2018).

Verma, A. K. Prediction of skin disease with three different feature selection techniques using stacking ensemble method. Appl. Biochem. Biotechnol. (2019).

Chung, J. Y., & Lee, S. Dropout early warning systems for high school students using machine learning. Child. Youth Serv Rev. 96, 346-353 (2018).

Alhassan, A., Zafar, B., & Mueen, A. Predict students’ academic performance based on their assessment grades and online activity data. Int. J. Adv. Comput. Sci. Appl. (2020).

Mahum Adil, F. & Tahir, S. M. Predictive Analysis for Student Retention by Using Neuro-Fuzzy Algorithm. In 10th Computer (Science and Electronic Engineering (CEEC) (2018).

Sarker, S. et al. Analyzing students’ academic performance using educational data mining. Comput. Educ.: Artif. Intell. 7, 100263 (2024).

Okoye, K. et al. Machine learning model (RG-DMML) and ensemble algorithm for prediction of students’ retention and graduation in education. Comput. Educ.: Artif. Intell. 6, 100205 (2024).

Priyambada, S., Adi, T., Usagawa & Mahendrawathi, E. R. Two-layer ensemble prediction of students’ performance using learning behavior and domain knowledge. Comput. Educ.: Artif. Intell. 5, 100149 (2023).

Lam, P. et al. Enhancing educational evaluation through predictive student assessment modeling. Comput. Educ.: Artif. Intell. 6, 100244 (2024).

Niyogisubizo, J. et al. Predicting student’s dropout in university classes using two-layer ensemble machine learning approach: A novel stacked generalization. Comput. Educ.: Artif. Intell. 3, 100066 (2022).

Bhuyan, H., Kumar, V., Ravi & Srikanth Yadav, M. Multi-objective optimization-based privacy in data mining. Cluster Comput. 25(6), 4275–4287 (2022).

Bhuyan, H. et al. Wide-ranging approach-based feature selection for classification. Multimed. Tools Appl. 82(15), 23277–23304 (2023).

Bhuyan, H., Kumar, A., Vijayaraj & Ravi, V. Diagnosis system for cancer disease using a single setting approach. Multimed. Tools Appl. 82(30), 46241–46267 (2023).

Korial, A. E., Gorial, I. I. & Amjad, J. An improved ensemble-based cardiovascular disease detection system with chi-square feature selection. Computers 13(6), 126 (2024).

Abed, R., Abdulhasan, E. K., Hamza, Amjad, J. & Humaidi A modified CNN-IDS model for enhancing the efficacy of intrusion detection system. Meas. Sens. 35, 101299 (2024).

Nasser, A. R., Ahmed, M., Hasan & Amjad, J. Humaidi. DL-AMDet: deep learning-based malware detector for android. Intell. Syst. Appl. 21, 200318 (2024).

Hussain, S. Survey on current trends and techniques of data mining research. Lond. J. Res. Comput. Sci. Technol. 17(1), 11 (2017).

Punitha, S. & Devaki, K. A high ranking-based ensemble network for student’s performance prediction using improved meta-heuristic-aided feature selection and adaptive GAN for recommender system. Kybernetes (2024).

Gaftandzhieva, S. et al. Student satisfaction with the quality of a blended learning course. Math. Inf.67(1) (2024).

El-Kenawy, E. S. M. et al. Greylag Goose optimization: nature-inspired optimization algorithm. Expert Syst. Appl. 238, 122147 (2024).

Ahmed, M., Gamal, M., Ismail, I. M., El-Din, E. & H An AI-Based system for predicting renewable energy power output using advanced optimization algorithms. J. Artif. Intell. Metaheuristics 1–08. https://doi.org/10.54216/JAIM.080101 (2024).

Abualigah, M. E. M. M. & L Machine learning in public health forecasting and monitoring the Zika virus. Metaheuristic Optim. Rev. 1–11. https://doi.org/10.54216/MOR.010201 (2024).

Eed, M. et al. Potato consumption forecasting based on a hybrid stacked deep learning model. Potato Res., 1–25. (2024).

Radwan, M., Alhussan, A. A., Ibrahim, A. & Tawfeek, S. M. Potato leaf disease classification using optimized machine learning models and feature selection techniques. Potato Res., 1–25. (2024).

Chen, R. C. et al. Selecting critical features for data classification based on machine learning methods. J. Big Data 7, 52. https://doi.org/10.1186/s40537-020-00327-4 (2020).

Alalawi, K. et al. Evaluating the student performance prediction and action framework through a learning analytics intervention study. Educ. Inf. Technol. https://doi.org/10.1007/s10639-024-12923-5 (2024).

Khairy, D. et al. Prediction of student exam performance using data mining classification algorithms. Educ. Inf. Technol. 29, 21621–21645. https://doi.org/10.1007/s10639-024-12619-w (2024).

Bhimavarapu, N. et al. Predicting Student Academic Performance Using Machine Learning: A Comparison of Classification Algorithms. In Advances in Machine Learning and Big Data Analytics I. ICMLBDA 2023 (eds Patel, A., Kesswani, N., Mishra, M., Meher, P.) Vol. 441 (Springer Proceedings in Mathematics & Statistics, Springer, Cham, 2025). https://doi.org/10.1007/978-3-031-51338-1_56

Ünal, F. Data mining for student performance prediction in education. https://doi.org/10.5772/intechopen.91449 (2020).

Shahzad, W., Ellahi, A. & Naseer, A. A hybrid approach for feature subset selection using ant colony optimization and multi-classifier ensemble. Int. J. Adv. Comput. Sci. Appl. 9(1), 306–313 (2018).

Alhamidi, M. R. & Jatmiko, W. Optimal feature aggregation and combination for two-dimensional ensemble feature selection. Information 11, 38. https://doi.org/10.3390/info11010038 (2020).

Cheng, X. et al. Optimal combination of feature selection and classification via local hyperplane based learning strategy. BMC Bioinform. 16, 219. https://doi.org/10.1186/s12859-015-0629-6 (2015).

Chuan, H., Ruifan, L. & Yixin, Z. Combining different classifiers in educational data mining. In Applied Informatics and Communication. ICAIC 2011 (ed Zhang, J.) Communications in Computer and Information Science, Vol. 228. (Springer, Berlin, Heidelberg, 2011). https://doi.org/10.1007/978-3-642-23223-7_60.

El-Kenawy, El-Sayed, M. et al. Greylag Goose optimization: nature-inspired optimization algorithm. Expert Syst. Appl. 238, 122147 (2024).

Abdollahzadeh, B. et al. Puma optimizer (PO): A novel metaheuristic optimization algorithm and its application in machine learning. Cluster Comput. 1–49. (2024).

El-Kenawy, El-Sayed, M. et al. Football optimization algorithm (FbOA): A novel metaheuristic inspired by team strategy dynamics. J. Artif. Intell. Metaheuristics 8(1), 21–38 (2024).

Basir, M. A., Hussin, M. S. & Yusof, Y. Ideal combination feature selection model for classification problem based on Bio-Inspired approach. In Computational Science and Technology (eds Alfred, R., Lim, Y., Haviluddin, H. & On, C.) . Lecture Notes in Electrical Engineering, Vol. 603 (Springer, Singapore, 2020). https://doi.org/10.1007/978-981-15-0058-9_56.

Acknowledgements

The authors extend their appreciation to the Deanship of Research and Graduate Studies at King Khalid University for funding this work through Large Research Project under grant number RGP2/723/46

Author information

Authors and Affiliations

Contributions

Conceptualization, S.M., S.G.K.P.; Writing—Review and Editing, C.M., R.H.; Methodology, Q.N.N., A.L.; Formal analysis, A.B., A.F.E.; Supervision, N.K.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions