Abstract

For robust localization in mixed line-of-sight (LOS) and non-line-of-sight (NLOS) indoor environments, we propose a max-min optimization estimator derived from a measurement model and introduce an adaptive loss function to optimize the estimation. This estimator is highly nonconvex and therefore difficult to solve directly, so we employ a neurodynamic approach to solve it and analyze the local equilibrium stability of the corresponding projection neural network model. Existing algorithms require either knowledge of the magnitude of the NLOS bias or an a priori distinction between LOS and NLOS measurements. The proposed algorithm requires no such prior information. We also propose an adaptive distance error upper bound method to improve the accuracy of the localization model. In representative numerical simulations and real-world experiments, the proposed robust adaptive positioning algorithm outperforms existing methods in localization accuracy and robustness, especially in severe NLOS environments.


Introduction

In today's increasingly digital and intelligent world, localization technology has become critical1,2. It plays a key role in a variety of areas, such as:

- helping people navigate large buildings;
- improving the efficiency of traffic management;
- enhancing personal safety;
- supporting the Internet of Things (IoT) and smart homes3,4,5.

Highly accurate and robust positioning has become one of the most important prerequisites for these applications.

Global navigation satellite systems are widely used outdoors. Indoors, however, they can hardly provide positioning in heavily occluded environments, because satellite navigation signals are too weak to penetrate buildings6. Various indoor positioning technologies, such as Wi-Fi and Bluetooth positioning, have made great strides in the last few decades7,8. However, requirements for positioning accuracy and reliability keep rising, and these traditional technologies, limited by their underlying principles, struggle to meet them.

Compared with traditional technologies, ultra-wideband (UWB) offers low power consumption, high ranging accuracy, and low cost9. It is widely used for high-precision positioning in indoor, urban, and industrial environments10.

UWB localization algorithms rely on relative distance measurements obtained from the timing and signal characteristics of radio signals, and the position of the node is computed from these distances. Compared with time-difference-of-arrival (TDOA), angle-of-arrival (AOA), and received signal strength indicator (RSSI) techniques, time-of-arrival (TOA) localization has clear advantages in accuracy and computational overhead. Studies of TOA-based techniques typically assume a LOS environment11. In indoor scenarios, however, UWB signals usually suffer from NLOS propagation, multipath, and other interference. These cause large deviations in the measured ranges, which sharply degrade positioning accuracy or even make positioning impossible.

Existing localization methods with NLOS mitigation can be broadly categorized into those based on weighted least squares, filtering, and machine learning, among others.

Weighted least squares methods use all available UWB measurements for positioning and assign each measurement its own weight. LOS measurements receive larger weights and NLOS measurements smaller ones, which reduces the impact of NLOS errors on positioning. Under an a priori assumption about the NLOS measurements, one study used a least squares algorithm to perform a global search and then obtained the optimal initial value through threshold screening and weight calculation. This improved localization accuracy by 63% compared with the least squares algorithm12. For mixed LOS/NLOS environments with unknown anomaly variance, a weighted least squares method based on Hampel and skipped filters was proposed to estimate the position and reduce the impact of NLOS errors13. However, these methods must choose the weights appropriately. Their performance therefore depends on correctly identifying measurement features, which limits their localization accuracy in real-world conditions.

Among filtering-based methods, Yang et al. proposed a particle-filter localization method that filters twice to reduce the effect of NLOS errors and improve localization accuracy14. The algorithm can handle nonlinear, non-Gaussian system models, requires fewer modeling assumptions, and can reduce NLOS errors by increasing the number of particles. However, its computational complexity is high because a large number of particles must be processed. In addition, particle filtering introduces randomness, so its results carry some uncertainty. This makes it difficult to guarantee reliable localization in real applications.

Localization methods based on filtering and weighted least squares must first determine the NLOS state of the TOA measurements. This step is computationally expensive and prone to misidentification; machine learning has been widely applied to this LOS/NLOS identification task15,16. Several machine learning-based approaches can also achieve robust estimation of the position directly, without a known NLOS state17. In another line of work, a maximum likelihood (ML) position estimator was derived from the measurement model, and the localization problem was then transformed into a generalized trust region subproblem (GTRS)18.

The localization problem has subsequently been reformulated as a convex optimization problem using semidefinite relaxation (SDR) or second-order cone relaxation (SOCR). Examples include the regularized total least squares semidefinite program (RTLS-SDP)11, the new robust semidefinite programming method (RDSP-New)19, and semidefinite programming (SDP)20. Another idea is to improve the position estimator by constructing a robust localization optimization problem based on the maximum correntropy criterion (MCC)21. This approach requires no a priori assumptions and achieves robust estimation under NLOS conditions22. However, none of these methods can eliminate the bias in TOA measurements or adapt the positioning model to the NLOS environment, which reduces localization accuracy.

The primary contribution of this paper is a robust adaptive localization algorithm for indoor UWB localization based on neurodynamic principles. The algorithm adapts robustly in two respects:

- in the process of solving for the position;
- in the localization model itself.

By incorporating an adaptive robust loss function into the existing localization model, we obtain a robust adaptive localization model. Unlike traditional criteria such as MCC, this loss adapts dynamically with the number of iterations, which gives greater flexibility and robustness across localization scenarios. We apply a neurodynamic approach to solve the resulting adaptive robust optimization problem and analyze the local stability of the localization solution. Unlike other methods, our approach also estimates the distance error bounds and adjusts the localization model accordingly to achieve higher accuracy.

UWB adaptive robust localization model

The measurement model

Consider a 2D indoor localization scenario containing \(N\) UWB anchor nodes and one unknown node. The position of the \(i\)-th anchor node is denoted by \({x}_{i}\in {\mathbb{R}}^{2}\), and the position of the unknown node by \(x\in {\mathbb{R}}^{2}\).

The position \(x\) is computed from its relative distances to the anchor nodes. Let \({d}_{i}\) denote the Euclidean distance between the unknown node and the \(i\)-th anchor node:

\[ {d}_{i}={\Vert x-{x}_{i}\Vert }_{2},\quad i=1,\dots ,N, \tag{1} \]

where \({\Vert \cdot \Vert }_{2}\) denotes the L2 norm. Accounting for indoor NLOS error and observation noise, the observation model of the relative distance can be expressed as:

\[ {r}_{i}={d}_{i}+{n}_{i}+{q}_{i},\quad i=1,\dots ,N, \tag{2} \]

where \({r}_{i}\) denotes the relative distance observation and \({n}_{i}\) is the UWB measurement noise. The noise follows the Gaussian distribution \(\mathcal{N}\left(0,{\sigma }_{i}^{2}\right)\) with standard deviation \({\sigma }_{i}\). \({q}_{i}\) is the NLOS measurement error, and \({q}_{i}=0\) corresponds to a LOS condition.
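The following is a minimal Python sketch of Eqs. (1) and (2). It computes the true distances \(d_i\) and the observations \(r_i = d_i + n_i + q_i\) from caller-supplied noise and NLOS-bias samples.

Two details are illustrative assumptions:

- the function name `ranging_model`;
- passing the samples in explicitly rather than drawing them inside.

Passing them in keeps the example deterministic and makes the total error \(r_i - d_i = n_i + q_i\) easy to check.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def ranging_model(
    x: Point,
    anchors: Sequence[Point],
    noise: Sequence[float],
    nlos_bias: Sequence[float],
) -> Tuple[List[float], List[float]]:
    """Return (d, r): true distances d_i = ||x - x_i||_2 and observations
    r_i = d_i + n_i + q_i. A zero bias q_i marks a LOS link."""
    if not (len(anchors) == len(noise) == len(nlos_bias)):
        raise ValueError("anchors, noise and nlos_bias must have equal length")
    d = [math.hypot(x[0] - ax, x[1] - ay) for ax, ay in anchors]
    r = [di + ni + qi for di, ni, qi in zip(d, noise, nlos_bias)]
    return d, r


# Two anchors 5 m from the node; the second link is NLOS with a 1.5 m bias.
d, r = ranging_model((3.0, 4.0), [(0.0, 0.0), (6.0, 8.0)], [0.1, -0.1], [0.0, 1.5])
eps = [ri - di for ri, di in zip(r, d)]  # total ranging error n_i + q_i per link
```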

Adaptive robust loss function

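The adaptive robust loss function is23:

\[ f\left(L,\alpha ,c\right)=\frac{\left|\alpha -2\right|}{\alpha }\left({\left(\frac{{\left(L/c\right)}^{2}}{\left|\alpha -2\right|}+1\right)}^{\alpha /2}-1\right), \tag{3} \]

where \(c>0\) is a scale parameter that determines the width of the quadratic region near \(L=0\), and \(L\) is the original loss function. \(\alpha\) is a shape hyperparameter that controls the robustness of the optimization problem. For particular values of \(\alpha\), the loss function takes the following forms:

\[ f\left(L,\alpha ,c\right)=\begin{cases}\frac{1}{2}{\left(L/c\right)}^{2}, & \alpha =2\\ \log\left(\frac{1}{2}{\left(L/c\right)}^{2}+1\right), & \alpha =0\\ 1-\exp\left(-\frac{1}{2}{\left(L/c\right)}^{2}\right), & \alpha \to -\infty \\ \frac{\left|\alpha -2\right|}{\alpha }\left({\left(\frac{{\left(L/c\right)}^{2}}{\left|\alpha -2\right|}+1\right)}^{\alpha /2}-1\right), & \text{otherwise}\end{cases} \tag{4} \]

Three values of \(\alpha\) are of particular interest:

- With \(\alpha =2\), the adaptive loss is equivalent to the L2 loss.
- With \(\alpha =1\), it is a smoothed form of the L1 loss, also known as the Charbonnier or L1–L2 loss.
- As \(\alpha \to -\infty\), minimizing the loss approaches local mode finding.

Thus \(\alpha\) can be viewed as smoothly interpolating between these three kinds of averages (mean, median, and mode) during estimation. Because \(\alpha\) is adapted during optimization, the robustness of the loss adjusts automatically throughout the localization process. This improves localization performance without manual parameter tuning.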
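The sketch below evaluates the adaptive loss of Eqs. (3) and (4). The special cases are handled separately for two reasons:

- the general expression divides by zero at \(\alpha = 0\) and \(\alpha = 2\);
- it reaches \(\alpha \to -\infty\) only as a limit.

The function name `adaptive_loss` is hypothetical, and \(\alpha = +\infty\) is deliberately left unsupported. Evaluating the loss at a large residual for decreasing \(\alpha\) shows how the penalty on outliers, such as NLOS-biased ranges, flattens.

```python
import math


def adaptive_loss(L: float, alpha: float, c: float) -> float:
    """Adaptive robust loss f(L, alpha, c) with the special cases of Eq. (4)."""
    if c <= 0:
        raise ValueError("scale c must be positive")
    z = (L / c) ** 2
    if alpha == 2.0:                        # L2 loss
        return 0.5 * z
    if alpha == 0.0:                        # log(z/2 + 1) limit
        return math.log1p(0.5 * z)
    if math.isinf(alpha) and alpha < 0:     # 1 - exp(-z/2) limit
        return -math.expm1(-0.5 * z)
    if math.isinf(alpha):
        raise ValueError("alpha = +inf is not handled in this sketch")
    b = abs(alpha - 2.0)
    return (b / alpha) * ((z / b + 1.0) ** (alpha / 2.0) - 1.0)


# Penalty on a 10c residual for decreasing alpha.
for a in (2.0, 1.0, 0.0, -2.0, float("-inf")):
    print(a, adaptive_loss(10.0, a, 1.0))
```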

Problem formulation

Assume indoor UWB localization without any a priori NLOS information. From Eq. (2), the L2 optimization problem for indoor UWB localization can be established as

where \(\epsilon ={\left[{\epsilon }_{1},\dots ,{\epsilon }_{N}\right]}^{T}\) and \(\delta ={\left[{\delta }_{1},\dots ,{\delta }_{N}\right]}^{T}\). \({0}_{N}\) denotes the N-dimensional zero column vector, and \(\epsilon \le \delta\) means \({\epsilon }_{i}\le {\delta }_{i}\) for \(i=1,2,\dots ,N\). \({\epsilon }_{i}={n}_{i}+{q}_{i}\) is the distance error between the unknown node and the \(i\)-th anchor node, and \({\delta }_{i}\) is the upper bound of the ranging error \({\epsilon }_{i}\). This bound is usually unknown in practice, and setting \({\delta }_{i}\) too large or too small degrades accuracy. Because the motion trajectory is continuous, the upper bound of the measurement error changes little over a short period. We therefore propose an adaptive robust (AR) method that approximates the error upper bound from an estimate of \({\epsilon }_{i}\) as

where \(\beta \ge 1\) is an amplification factor that prevents \({\delta }_{i,t}\) from becoming too small when the distance error becomes large. For simplicity, the time index \(t\) is omitted hereafter. To solve optimization problem (5), we transform it into the equivalent form
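The exact form of Eq. (6) is not given here, so the following is only a sketch under an explicit assumption. The error estimate \(\hat\epsilon_i\) is taken as the range residual at the previous position fix, \(\hat\epsilon_i = r_i - \Vert \hat x_{t-1} - x_i \Vert_2\), and the bound is \(\delta_i = \beta\,|\hat\epsilon_i|\). The function name `adaptive_error_bound` and this residual-based estimate are hypothetical illustrations of the AR idea. They are not the authors' Eq. (6).

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def adaptive_error_bound(
    ranges: Sequence[float],
    anchors: Sequence[Point],
    x_prev: Point,
    beta: float = 1.5,
) -> List[float]:
    """HYPOTHETICAL AR bound: delta_i = beta * |r_i - ||x_prev - x_i||_2|.

    The residual at the previous fix x_prev stands in for the estimate of
    eps_i; beta >= 1 inflates it so the bound is not too tight.
    """
    if beta < 1.0:
        raise ValueError("amplification factor beta must be >= 1")
    eps_hat = [r - math.hypot(x_prev[0] - ax, x_prev[1] - ay)
               for r, (ax, ay) in zip(ranges, anchors)]
    return [beta * abs(e) for e in eps_hat]


# Previous fix (3, 4) is 5 m from both anchors; measured ranges are 6 m and 7 m.
delta = adaptive_error_bound([6.0, 7.0], [(0.0, 0.0), (6.0, 8.0)], (3.0, 4.0), beta=1.5)
```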

NLOS propagation introduces large errors \({\epsilon }_{i}\) into UWB relative distance measurements, so the L2 loss function degrades the accuracy and reliability of indoor positioning. Drawing on the idea of robust learning in machine learning and combining it with the adaptive robust loss function in Eq. (3), we formulate the adaptive robust optimization function for indoor UWB positioning as

where \(L\) is defined as

The parameters \(\alpha\) and \(c\) affect the robustness and convergence speed of the loss function, and \(f\left(L,\alpha ,c\right)\) is monotonically increasing with respect to \(\alpha\). Gradually decreasing \(\alpha\) helps prevent the optimization process from getting stuck in local optima, which enhances the robustness of the position estimate. The parameter \(c\) determines the gradient of the loss function \(f\) in the neighborhood of the optimal solution. When \(\left|L\right|\) is small relative to \(c\), the gradient \(\frac{\partial f}{\partial L}\) is approximately proportional to \(L\) for any \(\alpha\), so the iterations converge to the optimal solution quickly and precisely. We therefore adopt the adaptive \(\alpha\) and \(c\) scheme of reference23 to establish robust adaptive factors for UWB indoor localization. \(\alpha\) and \(c\) are given by
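The near-origin behavior of \(\partial f/\partial L\) can be checked numerically. The closed-form derivative below is obtained by differentiating Eqs. (3) and (4) term by term. For the general case this gives \(\partial f/\partial L = (L/c^2)\,\bigl((L/c)^2/|\alpha-2| + 1\bigr)^{\alpha/2-1}\), which tends to \(L/c^2\) when \(|L| \ll c\). The function name is hypothetical.

```python
import math


def adaptive_loss_grad(L: float, alpha: float, c: float) -> float:
    """Derivative df/dL of the adaptive loss f(L, alpha, c)."""
    z = (L / c) ** 2
    if alpha == 2.0:
        return L / c ** 2
    if alpha == 0.0:
        return 2.0 * L / (L ** 2 + 2.0 * c ** 2)
    if math.isinf(alpha) and alpha < 0:
        return (L / c ** 2) * math.exp(-0.5 * z)
    if math.isinf(alpha):
        raise ValueError("alpha = +inf is not handled in this sketch")
    b = abs(alpha - 2.0)
    return (L / c ** 2) * (z / b + 1.0) ** (alpha / 2.0 - 1.0)


# Near the optimum (|L| << c) every alpha gives roughly L / c^2 ...
small = [adaptive_loss_grad(1e-3, a, 1.0) for a in (2.0, 1.0, 0.0, -2.0, float("-inf"))]
# ... while for a large residual, smaller alpha yields a much smaller gradient.
large = [adaptive_loss_grad(10.0, a, 1.0) for a in (2.0, 1.0, 0.0, -2.0, float("-inf"))]
```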

where \({\alpha }_{k}\) and \({c}_{k}\) denote the hyperparameters of the loss function \(f\) at the \(k\)-th iteration. \(S(\cdot )\) and \(\psi \left(\cdot \right)\) are the sigmoid and softplus functions, respectively, defined as

\[ S\left(x\right)=\frac{1}{1+{e}^{-x}},\qquad \psi \left(x\right)=\log\left(1+{e}^{x}\right). \tag{11} \]
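The sketch below implements \(S\) and \(\psi\) in overflow-safe form. It also maps unconstrained latent values to a bounded shape \(\alpha\) and a positive scale \(c\), in the style of the reparameterization used in reference23.

This mapping is an assumption standing in for Eq. (10), which is not reproduced here. The names `shape_and_scale`, `alpha_min`, `alpha_max`, and `c_min` and their default values are hypothetical.

```python
import math
from typing import Tuple


def sigmoid(x: float) -> float:
    """S(x) = 1 / (1 + e^{-x}), evaluated without overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def softplus(x: float) -> float:
    """psi(x) = log(1 + e^{x}) = max(x, 0) + log(1 + e^{-|x|})."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def shape_and_scale(a: float, b: float, alpha_min: float = 0.001,
                    alpha_max: float = 1.999, c_min: float = 1e-3) -> Tuple[float, float]:
    """ASSUMED mapping of latents (a, b) to alpha in (alpha_min, alpha_max), c > c_min."""
    alpha = alpha_min + (alpha_max - alpha_min) * sigmoid(a)
    c = c_min + softplus(b)
    return alpha, c


alpha0, c0 = shape_and_scale(0.0, 0.0)  # midpoint shape, scale c_min + log 2
```

Bounding \(\alpha\) through \(S\) keeps it away from the singular points of the general formula. Using \(\psi\) keeps \(c\) strictly positive.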

Neurodynamic robust localization algorithm

Consider the nonconvex nonlinear optimization problem with inequality constraints

\[ \min_{z}\; f\left(z\right)\quad \text{s.t.}\quad g\left(z\right)\le {0}_{M}, \tag{12} \]

where \(g\left(z\right)={\left[{g}_{1}\left(z\right),{g}_{2}\left(z\right),\dots ,{g}_{M}\left(z\right)\right]}^{T}\in {\mathbb{R}}^{M}\) is an M-dimensional vector function, and \(f,{g}_{1},{g}_{2},\dots ,{g}_{M}\) are twice continuously differentiable functions. \({0}_{M}\in {\mathbb{R}}^{M}\) is the M-dimensional zero vector. The Lagrangian function of optimization problem (12) is:

\[ \mathcal{L}\left(z,u\right)=f\left(z\right)+{u}^{T}g\left(z\right), \tag{13} \]

where \(u={\left[{u}_{1},{u}_{2},\dots ,{u}_{M}\right]}^{T}\in {\mathbb{R}}^{M}\) is the vector of Lagrange multipliers. If \({z}^{*}\) is a local optimum of problem (12) and a constraint qualification holds, then there exists \({u}^{*}\) satisfying the KKT conditions

\[ \nabla f\left({z}^{*}\right)+\sum_{i=1}^{M}{u}_{i}^{*}\nabla {g}_{i}\left({z}^{*}\right)=0,\quad g\left({z}^{*}\right)\le {0}_{M},\quad {u}^{*}\ge {0}_{M},\quad {{u}^{*}}^{T}g\left({z}^{*}\right)=0. \tag{14} \]

These conditions can be written in the projective form of the augmented Lagrangian function as

\[ {\nabla }_{z}{\mathcal{L}}_{\rho }\left(z,u\right)=0,\qquad {\left[u+g\left(z\right)\right]}^{+}=u, \tag{15} \]

where \({\mathcal{L}}_{\rho }\left(z,u\right)=\mathcal{L}\left(z,u\right)+\frac{\rho }{2}{\sum }_{i=1}^{M}{\left[{u}_{i}{g}_{i}\left(z\right)\right]}^{2}\) is the augmented Lagrangian function and \(\rho >0\) is the augmented Lagrange parameter. The operator \({\left[\cdot \right]}^{+}=\max(\cdot ,0)\), applied componentwise, expresses the primal feasibility, dual feasibility, and complementarity conditions of the inequality constraints equivalently in projective form.

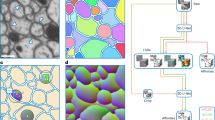

Based on Eq. (15), KKT points of optimization problem (12) can be computed by a projection neural network (PNN). The localization problem is therefore solved with a recurrent neural network model24,25, represented as:

where \(\omega ={\left[{z}^{T},{u}^{T}\right]}^{T}\). Figure 1 shows a hardware implementation of the recurrent neural network based on the neurodynamic model described by Eq. (16).
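The following sketch illustrates how such a network can be simulated in discrete time. It assumes the standard projection-neural-network dynamics \(\dot z = -\nabla_z \mathcal{L}_\rho(z,u)\), \(\dot u = [u + g(z)]^+ - u\), whose equilibria satisfy Eq. (15), discretized with forward Euler.

The function `pnn_solve`, the step size `h`, the iteration count, and the toy problem are illustrative assumptions. This is not the authors' AR-PNN implementation.

```python
from typing import Callable, List, Sequence, Tuple

Vec = List[float]


def pnn_solve(
    grad_f: Callable[[Vec], Vec],          # gradient of the objective f(z)
    g: Callable[[Vec], Vec],               # constraint values g_i(z), i = 1..M
    jac_g: Callable[[Vec], List[Vec]],     # jac_g(z)[i][j] = d g_i / d z_j
    z0: Sequence[float],
    u0: Sequence[float],
    rho: float = 1.0,                      # augmented Lagrange parameter rho > 0
    h: float = 0.05,                       # forward-Euler step (hypothetical)
    steps: int = 4000,
) -> Tuple[Vec, Vec]:
    """Forward-Euler simulation of the ASSUMED projection neural network
        dz/dt = -grad_z L_rho(z, u),   du/dt = [u + g(z)]^+ - u,
    with L_rho(z, u) = f(z) + u^T g(z) + (rho / 2) * sum_i (u_i g_i(z))^2."""
    z, u = list(z0), list(u0)
    for _ in range(steps):
        gz, J, grad = g(z), jac_g(z), list(grad_f(z))
        # grad_z L_rho = grad f + sum_i (u_i + rho * u_i^2 * g_i(z)) * grad g_i(z)
        for i, (ui, gi) in enumerate(zip(u, gz)):
            w = ui + rho * ui * ui * gi
            for j in range(len(z)):
                grad[j] += w * J[i][j]
        z_next = [zj - h * dj for zj, dj in zip(z, grad)]
        # Multiplier update uses g at the current z (explicit Euler); stays >= 0 for h <= 1.
        u = [ui + h * (max(ui + gi, 0.0) - ui) for ui, gi in zip(u, gz)]
        z = z_next
    return z, u


# Toy problem: min (z - 2)^2  s.t.  z - 1 <= 0, whose KKT point is (z*, u*) = (1, 2).
z_star, u_star = pnn_solve(
    grad_f=lambda z: [2.0 * (z[0] - 2.0)],
    g=lambda z: [z[0] - 1.0],
    jac_g=lambda z: [[1.0]],
    z0=[0.0],
    u0=[0.0],
)
```

A fixed point of this discrete update satisfies \(\nabla_z \mathcal{L}_\rho = 0\) and \([u+g(z)]^+ = u\), so it is exactly a point of the form in Eq. (15).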

Next, the recurrent neural network is used to solve optimization problem (8), which can be transformed into

Comparing Eq. (17) with optimization problem (12) gives

The augmented Lagrangian \({\mathcal{L}}_{\rho }\left(z,u\right)\) is

The more involved gradient terms in Eq. (16) are

Stability and convergence

In this section, we discuss two important properties of the neural network formulation in Eq. (16): stability and convergence.

Theorem 1

If \({\omega }^{*}={\left[{{z}^{*}}^{T},{{u}^{*}}^{T}\right]}^{T}\) is an equilibrium point of the neural network in Eq. (16), then \({\omega }^{*}\) is a KKT point of optimization problem (12).

Proof

If \({\omega }^{*}={\left[{{z}^{*}}^{T},{{u}^{*}}^{T}\right]}^{T}\) is an equilibrium point of neural network (16), then

It is equivalent to

The condition \({\left[{u}^{*}+g\left({z}^{*}\right)\right]}^{+}={u}^{*}\) holds if and only if \({g}_{i}\left({z}^{*}\right)\le 0\), \({u}_{i}^{*}\ge 0\), and \({u}_{i}^{*}{g}_{i}\left({z}^{*}\right)=0\) for \(i=1,\dots ,M\). Substituting these conditions into Eq. (22) yields \({\nabla }_{z}\mathcal{L}\left({\omega }^{*}\right)={\nabla }_{z}{\mathcal{L}}_{\rho }\left({\omega }^{*}\right)=0\). Hence the equilibrium point \({\omega }^{*}\) satisfies the KKT conditions, that is, it is a KKT point of problem (12).
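Theorem 1 suggests a simple numerical check: at a computed equilibrium, every KKT condition should hold up to round-off. The helper below reports the largest violation. It also checks the projection identity \([u+g(z)]^+ = u\), which is equivalent to the feasibility, dual-feasibility, and complementarity conditions combined. The name `kkt_residual` and the toy problem are hypothetical.

```python
from typing import Callable, List

Vec = List[float]


def kkt_residual(
    grad_f: Callable[[Vec], Vec],
    g: Callable[[Vec], Vec],
    jac_g: Callable[[Vec], List[Vec]],
    z: Vec,
    u: Vec,
) -> float:
    """Largest violation of the KKT conditions of min f(z) s.t. g(z) <= 0."""
    gz, J, gf = g(z), jac_g(z), grad_f(z)
    # Stationarity: grad f(z) + sum_i u_i grad g_i(z) = 0
    stat = max(abs(gf[j] + sum(u[i] * J[i][j] for i in range(len(u))))
               for j in range(len(z)))
    primal = max(max(gi, 0.0) for gi in gz)             # g_i(z) <= 0
    dual = max(max(-ui, 0.0) for ui in u)               # u_i >= 0
    comp = max(abs(ui * gi) for ui, gi in zip(u, gz))   # u_i g_i(z) = 0
    # [u + g(z)]^+ = u, equivalent to the primal, dual and complementarity checks
    proj = max(abs(max(ui + gi, 0.0) - ui) for ui, gi in zip(u, gz))
    return max(stat, primal, dual, comp, proj)


# Toy problem: min (z - 2)^2 s.t. z - 1 <= 0.
toy = dict(grad_f=lambda z: [2.0 * (z[0] - 2.0)],
           g=lambda z: [z[0] - 1.0],
           jac_g=lambda z: [[1.0]])
r_kkt = kkt_residual(**toy, z=[1.0], u=[2.0])  # the KKT point
r_bad = kkt_residual(**toy, z=[0.0], u=[0.0])  # violates stationarity
```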

Theorem 2

The neurodynamic model (16) is asymptotically stable at a KKT point \({\omega }^{*}\) for which \({z}^{*}\) is a strict local minimum of the optimization problem.

Proof

Consider the following Lyapunov function:

where

According to Theorem 2 in reference 26, if \(\frac{d\widehat{\omega }}{dt}=0\), then \(\frac{dV(\widehat{\omega })}{dt}=0\). Setting \(\frac{dV}{dt}=0\), we have

where \(\widehat{\omega }\) denotes a point in a neighborhood of \({\omega }^{*}\). From Eq. (25), we have

Therefore, we obtain

\(\frac{d{\omega }^{*}}{dt}=0\) means that \({\omega }^{*}\) is an equilibrium point of the neurodynamic model (16), and by Theorem 1, \({\omega }^{*}\) is also a KKT point. Thus the neurodynamic model is asymptotically stable at \({\omega }^{*}\), whose component \({z}^{*}\) is a strict local minimum.
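The following worked linearization is an illustration and is not taken from the paper. Consider the scalar toy problem \(\min (z-2)^2\) s.t. \(g(z) = z-1 \le 0\), whose KKT point is \((z^*, u^*) = (1, 2)\). Assume the projection-network dynamics \(\dot z = -\nabla_z \mathcal{L}_\rho\), \(\dot u = [u+g(z)]^+ - u\). Near \(\omega^*\) we have \(u + g(z) > 0\), so the projection is inactive and the Jacobian can be computed directly:

\[
\begin{aligned}
\dot z &= -\bigl(2(z-2) + u + \rho u^{2}(z-1)\bigr), \qquad \dot u = z-1,\\
J(\omega^{*}) &= \begin{bmatrix} -(2+4\rho) & -1 \\ 1 & 0 \end{bmatrix}, \qquad
\lambda^{2} + (2+4\rho)\lambda + 1 = 0,\\
\lambda_{1,2} &= -(1+2\rho) \pm \sqrt{(1+2\rho)^{2}-1} \;<\; 0 \quad \text{for all } \rho > 0 .
\end{aligned}
\]

Both eigenvalues are real and negative (for \(\rho = 1\) they are \(-3 \pm 2\sqrt{2}\)), so this toy equilibrium is locally asymptotically stable. This is consistent with the conclusion of Theorem 2.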

Complexity analysis

Using Horner’s scheme27, a polynomial of degree \(n\) with fixed-size coefficients can be evaluated in \(O(n)\) time. Treating polynomial evaluation as the operation that dominates each iteration of Eq. (16), the dominant complexity of the proposed method (AR-PNN) is \(O({N}_{PNN}\cdot {N}^{2})\). Table 1 summarizes the computational complexity of the algorithms considered: SR-MCC21, MCC-PNN22, RDSP-New19, RTLS-SDP11, and AR-PNN. Here, \({N}_{PNN}\) denotes the number of iterations used in the discrete-time realization of the PNN, \(K\) is the number of bisection-search steps, and \({N}_{HQ}\) is the number of half-quadratic (HQ) iterations21.
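For reference, Horner's scheme rewrites \(a_n x^n + \dots + a_1 x + a_0\) as \((\cdots(a_n x + a_{n-1})x + \cdots)x + a_0\). This uses exactly \(n\) multiplications and \(n\) additions, hence the \(O(n)\) cost cited above. The snippet is a generic textbook version, not code from the paper.

```python
from typing import Sequence


def horner(coeffs: Sequence[float], x: float) -> float:
    """Evaluate a_n x^n + ... + a_0 with coeffs = [a_n, ..., a_0].

    Uses n multiplications and n additions for a degree-n polynomial.
    """
    if not coeffs:
        raise ValueError("need at least one coefficient")
    acc = float(coeffs[0])
    for a in coeffs[1:]:
        acc = acc * x + a  # one multiply-add per remaining coefficient
    return acc


value = horner([1.0, 2.0, 3.0], 2.0)  # x^2 + 2x + 3 at x = 2
```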

Numerical results

We first validate the algorithm through numerical simulations and then verify it on real-world experimental data in the next section. The simulations evaluate the localization performance and robustness of AR-PNN.

Simulation setup

The simulations in this section are divided into three parts:

- verifying the adaptive ranging error bound of the proposed algorithm;
- evaluating the localization accuracy and robustness of AR-PNN in LOS environments;
- evaluating the same in NLOS environments.

The positions of 10 anchor nodes and the unknown node are generated randomly within a \(20\,m\times 20\,m\) area. The parameters differ across parts, specifically the number of NLOS paths, the ranging noise standard deviation \({\sigma }_{i}\), and the maximum NLOS error \({q}_{m}\). In each simulation, NLOS paths are assigned at random, and all algorithms use exactly the same measurements. Localization performance is evaluated by the root mean square error (RMSE) and the cumulative distribution function (CDF) of the error.
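One Monte Carlo trial of this setup can be sketched as a scenario generator. Anchors and the target are drawn uniformly in the square, and a random subset of \(L_{NLOS}\) links receives a bias \(q_i \sim U(0, q_m)\), the distribution assumed in the error-bound study. The noise is \(n_i \sim \mathcal{N}(0, \sigma^2)\), with \(\sigma\) treated as a standard deviation.

The function name and the default values (for example `n_nlos=3`) are hypothetical. Seeding a `random.Random` instance makes a trial reproducible, so every algorithm can be fed identical measurements, as the setup requires.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float]


def draw_scenario(
    rng: random.Random,
    n_anchors: int = 10,
    side: float = 20.0,      # side of the square area (m)
    n_nlos: int = 3,         # L_NLOS, number of NLOS links (hypothetical default)
    sigma: float = 0.1,      # ranging-noise standard deviation (m)
    q_max: float = 5.0,      # maximum NLOS error q_m (m)
) -> Tuple[List[Point], Point, List[float], List[float]]:
    """Draw one trial: anchors, target, ranges r_i and NLOS biases q_i."""
    anchors = [(rng.uniform(0.0, side), rng.uniform(0.0, side)) for _ in range(n_anchors)]
    target = (rng.uniform(0.0, side), rng.uniform(0.0, side))
    nlos = set(rng.sample(range(n_anchors), n_nlos))   # randomly chosen NLOS links
    ranges, biases = [], []
    for i, (ax, ay) in enumerate(anchors):
        d = math.hypot(target[0] - ax, target[1] - ay)
        q = rng.uniform(0.0, q_max) if i in nlos else 0.0   # q_i ~ U(0, q_m) or LOS
        ranges.append(d + rng.gauss(0.0, sigma) + q)        # r_i = d_i + n_i + q_i
        biases.append(q)
    return anchors, target, ranges, biases


anchors, target, ranges, biases = draw_scenario(random.Random(0), n_nlos=3)
```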

Distance error upper bound adaptation study

To verify the effectiveness of AR-PNN, we examined how the ranging error bounds adapt under LOS and NLOS conditions and evaluated the bound estimates by their average error. For visualization, only the last 100 results are plotted. The NLOS error \({q}_{i}\) is assumed to follow a uniform distribution \(U\left(0, {q}_{m}\right)\), and \(\max(\epsilon )\) denotes the maximum measurement error. \({L}_{NLOS}\) is the number of NLOS paths; \({L}_{NLOS}=0\) corresponds to a LOS environment. Six conditions were analyzed, each with 3000 Monte Carlo (MC) simulations in which the \({L}_{NLOS}\) NLOS paths are selected at random. Figure 2 shows the adaptive error upper bounds under the six conditions.

The estimated bounds are generally larger than the actual errors, which helps ensure the correctness of localization model (8). However, the estimated bounds must not deviate too far from the actual values; otherwise, large position estimation errors occur. Table 2 gives the average error between the actual and estimated error bounds. This error is generally below 0.35 m, which ensures good positioning performance.

Impact of the ranging noise \({\sigma }_{i}\) in LOS

We evaluate the localization performance of AR-PNN, RDSP-New, SR-MCC, RTLS-SDP, and MCC-PNN under different Gaussian noise levels \({\sigma }_{i}\) in a LOS environment. The positions of the unknown and anchor nodes are randomized in each MC run. The TOA measurement noise follows a Gaussian distribution with standard deviation \({\sigma }_{i}\) ranging from \(0.1\) m to \(5\) m in steps of 0.1 m, and the RMSE for each \({\sigma }_{i}\) is obtained from 3000 MC runs. Figure 3 shows the RMSE of the five algorithms for the different ranging error distributions. AR-PNN achieves a smaller RMSE than every other algorithm, and the gap widens as \({\sigma }_{i}\) grows.

Since there are no NLOS paths in Fig. 3, MCC-PNN and the proposed algorithm have similar localization optimization functions, and their performance difference arises mainly from the robust function. In this case, our algorithm can be viewed as MCC-PNN with a modified robust function. As Fig. 3 shows, AR-PNN improves performance significantly when the measurement noise is large, which highlights the impact of the robust function.

Impact of the localization performance in mild, moderate, and severe NLOS environments

With the ranging noise fixed at \({\sigma }_{i}=0.1\) m, Fig. 4 shows the relationship between localization performance and the maximum NLOS error \({q}_{m}\in \left[1,5\right]\) m for three representative environments: mild, moderate, and severe NLOS. 3000 MC runs were performed for each environment.

The localization performance of all algorithms deteriorates gradually as \({q}_{m}\) increases, but AR-PNN deteriorates least and is the most robust to increasing NLOS errors. There are two reasons. First, AR-PNN adaptively adjusts the distance error bounds, which yields a more accurate localization model than the other algorithms. Second, its adaptive robust loss function converges more reliably to the vicinity of the optimal solution, which provides better scene adaptation and localization performance.

The localization performance of all algorithms also degrades progressively as the number of NLOS paths increases. Nonetheless, AR-PNN retains better localization performance and robustness than the other methods, especially in severe NLOS environments. For the same NLOS error distribution, AR-PNN also shows the smallest performance degradation across environments in most cases. In summary, the performance and robustness of all localization methods are broadly comparable in mild NLOS conditions, whereas AR-PNN performs better in moderate and severe conditions.

Further analysis shows that the key advantage of the proposed method lies in its ability to adaptively adjust the upper bound of the distance error. This significantly improves localization accuracy under challenging conditions with many NLOS paths.

The robust function, by contrast, plays a crucial role in maintaining stability under sensor noise in LOS environments (Fig. 3). Its effect is less noticeable when the sensor noise is relatively low (\({\sigma }_{i}=0.1\) m), as the comparison with MCC-PNN, SR-MCC, and RTLS-SDP shows. In this case, the choice of robust function has minimal impact on performance.

As Fig. 4 shows, the performance improvement of the proposed method becomes more pronounced as the number of NLOS paths increases. This is because the method handles NLOS distortions better and thus provides a more accurate estimate of the unknown node's position. In contrast, the robust function mainly addresses the performance degradation caused by large observation noise in the sensors themselves.

Real world experiments

Dataset details

To validate the performance of AR-PNN in the real world, we use the UWB localization dataset established by Bregar28. The positioning system contains 8 UWB anchor nodes and 1 unknown node. Ranging is performed with DW1000 modules, and the distance measurements are collected on a laptop via MQTT over Wi-Fi.

The localization environment is an indoor apartment, 12.06 m long and 9.18 m wide. The external walls are made of brick, and all internal walls are made of gypsum and thermal insulation materials. The floor plan and movement trajectory are shown in Fig. 5, where the cross symbols denote the anchor nodes; their coordinates are listed in Table 3.

We first analyzed the real-world ranging errors; Fig. 6 shows the actual ranging errors at all test points. The maximum distance error does not exceed \(4\) m. The distribution of distance errors remains roughly constant over short periods but varies considerably over long periods.

We further analyzed all distance measurements for each anchor node using box plots. Figure 7 shows that the mean measurement errors do not differ much across anchor nodes, but the outliers differ considerably. This indicates that the distribution of NLOS errors varies strongly across anchor nodes: A1 and A8 suffer the most severe NLOS errors, whereas A3 is least affected.
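The per-anchor comparison of mean errors and outliers relies on standard box-plot statistics. The sketch below computes quartiles, the mean, and outliers using the conventional Tukey rule (points beyond 1.5 × IQR from the quartiles). The whisker rule actually used for Fig. 7 is not stated, so the 1.5 factor is an assumption. The function name and the toy values in the example are hypothetical. `statistics.quantiles` and `statistics.fmean` require Python 3.8 or later.

```python
import statistics
from typing import Dict, List, Sequence


def box_stats(errors: Sequence[float], whisker: float = 1.5) -> Dict[str, object]:
    """Quartiles, mean and Tukey outliers of one anchor's ranging errors."""
    q1, median, q3 = statistics.quantiles(errors, n=4, method="inclusive")
    iqr = q3 - q1
    lo, hi = q1 - whisker * iqr, q3 + whisker * iqr    # whisker limits
    outliers: List[float] = [e for e in errors if e < lo or e > hi]
    return {"q1": q1, "median": median, "q3": q3,
            "mean": statistics.fmean(errors), "outliers": outliers}


stats = box_stats([1.0, 2.0, 3.0, 4.0, 100.0])  # toy values, not dataset measurements
```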

Localization performance analysis

To evaluate localization performance in real scenarios, we first analyze the trajectory errors and then evaluate localization performance using the CDF and RMSE.
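The two metrics can be computed as follows:

- RMSE is the square root of the mean squared 2-D position error over all test points;
- the CDF is the empirical distribution of the per-point errors.

The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def position_errors(est: Sequence[Point], truth: Sequence[Point]) -> List[float]:
    """Euclidean 2-D error ||x_hat - x||_2 for each test point."""
    return [math.hypot(e[0] - t[0], e[1] - t[1]) for e, t in zip(est, truth)]


def rmse(est: Sequence[Point], truth: Sequence[Point]) -> float:
    """Root mean square of the 2-D position errors."""
    errs = position_errors(est, truth)
    return math.sqrt(sum(e * e for e in errs) / len(errs))


def empirical_cdf(errors: Sequence[float]) -> List[Tuple[float, float]]:
    """Step points (e_(k), k / n) of the empirical CDF, errors sorted ascending."""
    s = sorted(errors)
    n = len(s)
    return [(e, (k + 1) / n) for k, e in enumerate(s)]


est = [(0.0, 0.0), (3.0, 4.0)]
truth = [(0.0, 0.0), (0.0, 0.0)]
errs = position_errors(est, truth)
```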

Figure 8 shows that most trajectory points of AR-PNN are closer to the ground truth than those of the other algorithms, and Fig. 9 shows that AR-PNN achieves the best performance and robustness among all algorithms. Figure 10 further analyzes localization performance through the error CDF.

The localization accuracies of MCC-PNN and SR-MCC are close to each other in the real world. This may be because both methods use the MCC criterion, which leads to similar localization performance and robustness. Although both AR-PNN and MCC-PNN are based on a projection neural network, AR-PNN has a more accurate localization model and therefore better localization performance. This also shows that the localization loss function is crucial for improving localization performance and reliability.

Table 4 compares the RMSE of the different algorithms and shows that AR-PNN improves significantly on the others. The main reason is that AR-PNN, unlike the other algorithms, accounts for the two main characteristics of hybrid LOS/NLOS scenarios: small short-term variations and large long-term variations in the ranging errors. Adaptively adjusting the localization model improves its accuracy, which in turn improves localization performance and robustness.

Conclusion and future work

We proposed a robust adaptive localization method for UWB that requires no a priori information about NLOS conditions. UWB distance measurements lose accuracy because of environmental occlusion, and their error characteristics change dynamically with the environment. Because the TOA-based localization model is nonlinear and nonconvex, we proposed a neurodynamic approach and verified the local stability conditions for the equilibrium of the corresponding dynamical system.

The algorithm achieves adaptive tuning in both the solution process and the localization model. On the one hand, dynamically adjusting the loss function during optimization improves the stability and accuracy of the solution. On the other hand, measurement error upper bounds are estimated dynamically to adjust the localization model. Together, these two strategies capture the behavior of NLOS errors, which change little over short times or small areas but considerably over long times. As a result, they significantly enhance localization performance and robustness in the real world.

Our solution can also be applied to autonomous mobile robots. In the future, the algorithm could be extended to fuse sensors such as IMUs and encoders. These sensors provide high-precision relative localization over short periods and could further improve localization accuracy and robustness when NLOS errors change. In addition, the neurodynamic approach can be implemented on dedicated neural network hardware and integrated into a single sensor, which can reduce power consumption and broaden the range of applications.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Isaia, C. & Michaelides, M. P. A review of wireless positioning techniques and technologies: From smart sensors to 6G. Signals 4, 90–136. https://doi.org/10.3390/signals4010006 (2023).

Mayer, P., Magno, M. & Benini, L. Self-sustaining ultrawideband positioning system for event-driven indoor localization. IEEE Internet Things J. 11, 1272–1284. https://doi.org/10.1109/JIOT.2023.3289568 (2023).

Kapucu, N. & Bilim, M. Internet of Things for smart homes and smart cities. In Smart Grid 3.0: Computational and Communication Technologies 331–356 (Springer International Publishing, Cham, 2023).

Deepa, A., Manikandan, N. K., Latha, R., Preetha, J., Kumar, T. S. & Murugan, S. IoT-based wearable devices for personal safety and accident prevention systems. In 2023 Second International Conference on Smart Technologies for Smart Nation 1510–1514 (2023).

Nigam, N., Singh, D. P. & Choudhary, J. A review of different components of the intelligent traffic management system (ITMS). Symmetry 15, 583. https://doi.org/10.3390/sym15030583 (2023).

Mazhar, F., Khan, M. G. & Sällberg, B. Precise indoor positioning using UWB: A review of methods, algorithms and implementations. Wirel. Pers. Commun. 97, 4467–4491. https://doi.org/10.1007/s11277-017-4734-x (2017).

Qi, L., Liu, Y., Yu, Y., Chen, L. & Chen, R. Current status and future trends of meter-level indoor positioning technology: A review. Remote Sens. 16, 398. https://doi.org/10.3390/rs16020398 (2024).

Dai, J., Wang, M., Wu, B., Shen, J. & Wang, X. A survey of latest Wi-Fi assisted indoor positioning on different principles. Sensors 23, 7961. https://doi.org/10.3390/s23187961 (2023).

Flueratoru, L., Wehrli, S., Magno, M., Lohan, E. S. & Niculescu, D. High-accuracy ranging and localization with ultrawideband communications for energy-constrained devices. IEEE Internet Things J. 9, 7463–7480. https://doi.org/10.1109/JIOT.2021.3125256 (2021).

Mahfouz, M. R., Fathy, A. E., Kuhn, M. J. & Wang, Y. Recent trends and advances in UWB positioning. In 2009 IEEE MTT-S International Microwave Workshop on Wireless Sensing, Local Positioning, and RFID 1–4 (2009).

Wu, H., Liang, L., Mei, X. & Zhang, Y. A convex optimization approach for NLOS error mitigation in TOA-based localization. IEEE Signal Process. Lett. 29, 677–681. https://doi.org/10.1109/LSP.2022.3141938 (2022).

Huang, J. & Qian, S. Ultra-wideband indoor localization method based on Kalman filtering and Taylor algorithm. In 3rd International Conference on Internet of Things and Smart City (IoTSC 2023) 12708, 228–233 (2023).

Park, C. H. & Chang, J. H. WLS localization using skipped filter, Hampel filter, bootstrapping and Gaussian mixture EM in LOS/NLOS conditions. IEEE Access 7, 35919–35928. https://doi.org/10.1109/ACCESS.2019.2905367 (2019).

He, Y. P. et al. Indoor dynamic object positioning in NLOS environment based on UWB. Trans. Microsyst. Technol. 39, 46–49 (2020).

Miramá, V., Bahillo, A., Quintero, V. & Díez, L. E. NLOS detection generated by body shadowing in a 6.5 GHz UWB localization system using machine learning. IEEE Sens. J. 23, 20400–20411 (2023).

Singh, S., Trivedi, A. & Saxena, D. Unsupervised LoS/NLoS identification in mmWave communication using two-stage machine learning framework. Phys. Commun. 59, 102118 (2023).

Liu, Y. UWB ranging error analysis based on TOA mode. J. Phys.: Conf. Ser. 1939, 012124 (2021).

Tomic, S. & Beko, M. A bisection-based approach for exact target localization in NLOS environments. Signal Process. 143, 328–335. https://doi.org/10.1016/j.sigpro.2017.09.019 (2018).

Chen, H., Wang, G. & Ansari, N. Improved robust TOA-based localization via NLOS balancing parameter estimation. IEEE Trans. Veh. Technol. 68, 6177–6181 (2019).

Zheng, R., Wang, G. & Ho, K. C. Accurate semidefinite relaxation method for elliptic localization with unknown transmitter position. IEEE Trans. Wirel. Commun. 20, 2746–2760. https://doi.org/10.1109/TWC.2020.3044217 (2020).

Xiong, W., Schindelhauer, C., So, H. C. & Wang, Z. Maximum correntropy criterion for robust TOA-based localization in NLOS environments. Circuits Syst. Signal Process. 40, 6325–6339. https://doi.org/10.1007/s00034-021-01800-y (2021).

Xiong, W., Schindelhauer, C., So, H. C., Liang, J. & Wang, Z. Neurodynamic TDOA localization with NLOS mitigation via maximum correntropy criterion. arXiv preprint arXiv:2009.06281 (2020).

Barron, J. T. A general and adaptive robust loss function. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 4331–4339 (2019).

Singh, S., Trivedi, A. & Saxena, D. Channel estimation for intelligent reflecting surface aided communication via graph transformer. IEEE Trans. Green Commun. Netw. 8, 756–766 (2023).

Choi, J. S., Lee, W. H., Lee, J. H., Lee, J. H. & Kim, S. C. Deep learning based NLOS identification with commodity WLAN devices. IEEE Trans. Veh. Technol. 67, 3295–3303 (2017).

Che, H. & Wang, J. A collaborative neurodynamic approach to global and combinatorial optimization. Neural Netw. 114, 15–27. https://doi.org/10.1016/j.neunet.2019.02.002 (2019).

Hildebrand, F. B. Introduction to numerical analysis. (Courier Corporation, 1987).

Bregar, K. Indoor UWB positioning and position tracking data set. Sci. Data 10, 744. https://doi.org/10.1038/s41597-023-02639-5 (2023).

Acknowledgements

This research was supported by the National Key Research and Development Program of China (grant number 2022YFB3904702); Dezhou University Research Program (grant number 2022xjrc111); Fundamental Research Funds for the Central Universities (grant number 500422806); Civilian Aircraft Research (grant number MJG5-1N21); and the Young Innovation Team of Shandong Provincial Higher Education Institutions (grant number 2022KJ094).

Author information


Contributions

Methodology, Y.L.; formal analysis, Y.L.; resources, E.H. and Y.L.; software, Y.L. and E.H.; data curation, C.G. and E.H.; writing—original draft preparation, Y.L.; writing—review and editing, Y.C., C.G. and Y.L.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Liu, Y., Hu, E., Chen, Y. et al. Neurodynamic robust adaptive UWB localization algorithm with NLOS mitigation. Sci Rep 15, 14271 (2025). https://doi.org/10.1038/s41598-025-99150-1


DOI: https://doi.org/10.1038/s41598-025-99150-1