Abstract

Industry 4.0 profoundly impacts the insurance sector, as evidenced by the significant growth of insurtech. One such technology is chatbots, which enable policyholders to manage their active insurance policies seamlessly. This paper analyses policyholders’ attitude toward conversational bots in this context. To this end, we conducted a structured survey of policyholders. The survey aimed to determine the average degree of acceptance of chatbots for contacting the insurer to carry out procedures such as claim reporting. We also assessed the role of the technology acceptance model variables, perceived usefulness and perceived ease of use, as well as trust, in explaining attitude and behavioral intention. We observed low acceptance among insureds of carrying out insurance procedures with the assistance of a chatbot. The theoretical model proposed to explain chatbot acceptance shows good fit and predictive capability. Although all three assessed factors are relevant for explaining attitude toward interactions with conversational robots and behavioral intention to use them, trust exhibited the greatest impact. The findings of this paper have potential theoretical and practical implications. They highlight the special relevance of trust in explaining customers’ acceptance of chatbots, since this construct directly affects not only attitude but also perceived usefulness and perceived ease of use. Likewise, improvements in the usefulness and ease of use of chatbots are needed to prevent customers’ reluctance toward their services.


Introduction

Industry 4.0 (I4.0) is strongly impacting the economy, businesses, and society (Tamvada et al., 2022). Rooted in the extensive use of digital technologies that emerged in the early decades of the 21st century, I4.0 intertwines these advances with innovative, interconnected front-end devices and machinery, resulting in the evolution of smart industries and services (Tamvada et al., 2022; Marcon et al., 2022). I4.0 has expanded from the production level to the supply chain and to the way corporations interact with workers, consumers, and actual or potential customers (Liu and Zhao, 2022). I4.0 adds value to businesses because it enhances competitiveness. It allows the development of new products and services, makes it possible to add new digital features to existing ones (Liu and Zhao, 2022), and expands the channels used to interact with actual or potential customers and providers. It also enables processes to be rationalized and automated so that costs are reduced, productivity is improved (Dalenogare et al., 2018) and supply chain performance is enhanced (Qader et al., 2022). That is, I4.0 technologies allow competitive advantages to be attained while also reaching responsible and sustainable business objectives (Kazachenok et al., 2023).

Arner et al. (2015) define fintech as the application of a technological advance to satisfy customers’ demand and offer solutions to challenges in the financial industry. Although fintech currently seems to be a novel topic from the practitioner’s perspective and a structured empirical literature on fintech has emerged only recently (Bittini et al., 2022), the phenomenon itself is not new. The first stage of fintech began at the end of the 19th century, a period of financial globalization driven by novel technological infrastructure such as transatlantic transmission cables (Arner et al., 2015). Currently, the term “fintech” is commonly used for the new assets, channels for financial agreements, services, processes, and businesses that arise from applying information and communication technologies developed in the 21st century to financial markets (Kazachenok et al., 2023). By analogy with the fintech concept, insurtech can be defined as the integration of emerging technologies to enhance insurance services through targeted solutions addressing distinct problems within the insurance sector (Cao et al., 2020). As with fintech, academic interest in insurtech is very recent, dating back to the mid-2010s (Cao et al., 2020; Njegomir and Bojanić, 2021); since the beginning of that decade, the digitalization of insurance has experienced exponential growth (Bohnert et al., 2019; Sosa and Montes, 2022).

The application of I4.0 technologies to the insurance industry creates value for the insurance company, and heterogeneous transformational capabilities are sources of competitive advantage (Stoeckli et al., 2018). They may enhance internal processes (e.g., exploiting data to handle claims), create new products, and develop new channels to provide professional advisory services. Cao et al. (2020) identify artificial intelligence (AI), machine learning, robotic process automation, augmented reality/virtual reality, and blockchain as the principal technologies with an impact on insurance. These technologies are, of course, interconnected. For example, an insured may generate a large amount of data from smart devices. These data could be transferred to the insurance company using blockchain technology and then processed with AI algorithms, such as machine learning models, to price policies. This information may simplify underwriting because the insured no longer needs to declare the risk (Ostrowska, 2021).

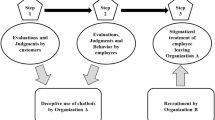

Figure 1 displays how I4.0 technologies impact insurance business innovation. These influences may occur at the product and process levels and, therefore, can be implemented in the market or internally (Sosa and Montes, 2022). According to these authors, the impact of I4.0 on the insurance industry may stem from five sources: (1) the implementation of digital capabilities, (2) the provision of data-driven solutions, (3) the integration of services and customer experience, (4) the creation and development of digital insurance and (5) the distribution of digital insurance. These sources imply the need to integrate several I4.0 technologies. We outline the following examples:

(1) The incorporation of digital capabilities for assessing claims can be achieved through the utilization of image-based AI and smart devices, eliminating the need to dispatch an expert for on-site evaluation of reported damage (Agarwal et al., 2022).

(2) The use of big data analysis tools based on deep learning and machine learning to evaluate all the data available to insurance companies may allow fraudulent claims to be predicted more accurately (Rawat et al., 2021). Agarwal et al. (2022) indicated that these tools identify 30% more irregular claims than conventional analytic tools.

(3) The efficient use of AI and machine learning on available data (structured and unstructured) can be leveraged to improve customer experience and services. This is the case for data from smart sensors (e.g., smart watches) that can be used to improve healthcare insurance (Kelley et al., 2018). In this vein, the new large language model-based AI systems that emerged in the early 2020s, such as ChatGPT, are also remarkable. Despite the fact that, currently, they do not guarantee reliable responses, their versatility and ability to provide plausible answers across a wide variety of issues have led many users to consider them assistants that can be useful in making decisions related to personal economy, including those linked to individual finance (Jangjarat et al., 2023).

(4) A typical example of digital insurance is smart contracts that rely on the IoT and blockchain (Christidis and Devetsikiotis, 2016).

(5) With regard to digital distribution, robotic technologies such as chatbots, which are supported by AI, give customers 24/7 access to a wide variety of products and allow them to manage existing policies (Sosa and Montes, 2022).

Amid the vast array of I4.0 technologies currently being implemented in the insurance sector, this paper focuses on the use of chatbots, whose adoption began around 2017. Voice assistants can be defined as conversational engines that engage in interactive dialog with individuals and are facilitated by artificial intelligence (AI) algorithms that simulate natural language (Rodríguez-Cardona et al., 2019). Within the range of methodologies currently used to study voice assistants (Minder et al., 2023), this study investigates the acceptance of voice assistants by policyholders when interacting with insurance providers regarding existing policies, for example, to declare claims.

Chatbots are revolutionizing the customer experience by replacing social network influences, such as those from user groups, with novel forms of digital guidance (Akram et al., 2022). In theory, using chatbots in customer service improves service quality through gains in agility, accessibility, predictability, and resoluteness (Baabdullah et al., 2022). Their use also reduces (in fact, practically eliminates) tedious call-center queues and frees human agents to focus on more complex issues (DeAndrade and Tumelero, 2022). Moreover, their implementation increases firms’ stock market value (Fotheringham and Wiles, 2023). Riikkinen et al. (2018) showed a great variety of applications of chatbots in the insurance industry: finding suitable products for potential insureds by asking smart questions, resolving policyholders’ doubts about processes linked to existing policies, providing insurance advice about the insured’s own portfolio, and making claims handling more agile and flexible. AI and machine learning algorithms have enabled chatbots to learn from past conversations with customers, creating added value for both customers and the company (Riikkinen et al., 2018).

Although many conversational bots have been able to pass the Turing test since the prototype Eugene Goostman did so in 2014 (Warwick and Shah, 2016), they can perform only some routine conversational tasks, such as the first contact with the customer (Rodríguez-Cardona et al., 2019). Moreover, conversational bots cannot discern the nuances of a conversation from users’ tone of voice; thus, they cannot display human competencies such as empathy and critical assessment and are unable to meet complex requirements. These abilities were not present in chatbots at the end of the 2010s (Eeuwen, 2017) or at the beginning of the 2020s (Vassilakopoulou et al., 2023). In this regard, Rodríguez-Cardona et al. (2019) reported that insurance experts consider bots far from able to complete any insurance process without human help and that a great number of customers are reluctant to interact with chatbots. This resistance has also been documented by Van Pinxteren et al. (2020) and PromTep et al. (2021).

The process of acquiring an insurance policy begins when individuals recognize a need for coverage due to a concrete circumstance (e.g., a car has been bought and needs third-party liability insurance to drive it). This entails the search for and evaluation of information about potential insurers capable of providing suitable protection. Traditionally, this task was undertaken by human brokers with a portfolio of insurers, while presently, it can be facilitated by robo-advisors (Marano and Li, 2023).

Once the customer selects an insurance provider, he or she must seek approval from the insurer, who will request details about the insured entity and the coverage sought. Following an analysis of this information, the insurer will then communicate whether the application is accepted, along with associated terms such as coverage extent and first-year premium (The National Alliance, 2023). Evidently, this process is amenable to automation, and communication with prospective customers can be facilitated through chatbots (Riikkinen et al., 2018).

In the third stage, upon purchasing the insurance and paying the premium, the customer becomes a policyholder. The customer’s legal status thus changes: whereas the first two stages involve no direct contractual link between the insurer and the customer, the policyholder becomes a creditor of the insurer, akin to a bank depositor’s relationship with the bank (Guiso, 2021). Therefore, the management of active policies may require interactions with the insurer for various purposes, including modifications to coverage, changes to the bank account designated for premium payments, renewal terms, etc. (Niittuinperä, 2018). The most important scenario arises when a claim is communicated, since the primary aim of an insurance contract is to shield the policyholder from the economic consequences of adverse events (Guiso, 2021).

To narrow the scope of our analysis, we focused on the acceptance of chatbots in the third stage described above; this stage is a critical domain within the insurance sector and one in which chatbots are used extensively (Koetter et al., 2019; Njegomir and Bojanić, 2021). The digitalization of claim management is expected to reduce the number of human operators involved in this process by 70%–80% by 2030 (Balasubramanian et al., 2018).

The analysis developed in this paper is grounded in the Technology Acceptance Model (TAM) of Davis (1989), which, often combined with the Unified Theory of Acceptance and Use of Technology (UTAUT) of Venkatesh et al. (2003), has been widely used to model the acceptance of new financial technologies and services (de Andrés-Sánchez et al., 2023; Firmansyah et al., 2023) and of chatbots and voice assistants (Balan, 2023). In addition to the constructs inherent to the TAM, a factor that proves particularly significant in analyzing the use of artificial intelligence technologies is trust (Mostafa and Kasamani, 2022). This holds for conversational chatbots (Gkinko and Elbanna, 2023) and for AI-powered fintech (de Andrés-Sánchez et al., 2023; Firmansyah et al., 2023) and insurtech (Zarifis and Cheng, 2022). The main arguments for its significance center on the relevance of its cognitive and relational dimensions, as defined in Glikson and Woolley (2020). In our context, the cognitive dimension of trust is manifested in the perceived effectiveness of chatbot technology for carrying out procedures linked with active policies. Relational trust is the confidence that policyholders have that the insurer implements chatbots with the intention of enhancing its ability to provide satisfactory service (Zarifis and Cheng, 2022).

Building upon this conceptual ground, this study aims to address the following two research questions:

RQ1 = What is customers’ average intention to use, and attitude toward using, chatbots in communications with the company to manage existing policies?

RQ2 = What are the drivers of intention to use and attitude toward the assistance of conversational robots in managing existing policies?

The structure of the paper is as follows. In the second section, we propose a TAM-based model to explain behavioral intention (BI) and attitude toward chatbots. The third section describes the materials and quantitative methods used in this article. The fourth section shows our results. Finally, we discuss our results and their implications for the insurance industry and outline the principal conclusions.

Modeling chatbot acceptance with a technology acceptance model

The empirical analysis in this paper is based on the structural equation model (SEM) displayed in Fig. 2. It is theoretically grounded in the TAM of Davis (1989) and Venkatesh and Davis (2000), which builds on the conceptual ground provided by the theory of reasoned action (TRA) (Fishbein and Ajzen, 1975). The TAM proposes a positive relation between the perceived usefulness (PU) and perceived ease of use (PEOU) of a given technology and users’ attitude (ATT) toward it, with ATT mediating the influence of those antecedents on the BI to use the technology.

Models of technology acceptance and use, such as the TRA, TAM, and UTAUT, have been extensively employed to investigate the acceptance of chatbots among both customers and employees within implementing companies (Balan, 2023). For example, while Brachten et al. (2021) anchor their study to the TRA, Eeuwen (2017), Moriuchi (2019), Kasilingam (2020), Pillai and Sivathanu (2020), McLean et al. (2021), Pitardi and Marriott (2021), de Cicco et al. (2022) and Pawlik (2022) utilize the TAM for their assessment. Similarly, UTAUT analysis underpins studies by Kuberkar and Singhal (2020), Gansser and Reich (2021), Joshi (2021), Balakrishnan et al. (2022), Pawlik (2022) and de Andrés-Sánchez and Gené-Albesa (2023a).

Figure 2 shows that our model also takes into account the impact of trust (TRUST) on acceptance, since trust is a key variable for explaining customers’ acceptance of chatbots (Glikson and Woolley, 2020) and the cornerstone of the insurance industry (Guiso, 2021). Therefore, trust should be a central factor in explaining insurtech adoption (Zarifis and Cheng, 2022). The factors and hypotheses that underpin the model depicted in Fig. 2 are described below.

Fishbein and Ajzen (1975) provide a commonly accepted definition of ATT as a “learned predisposition to respond in a consistently favorable or unfavorable manner with respect to a given object”. In the TAM, BI is the intention to use the assessed technology (Davis, 1989). In our context, ATT should be understood as the predisposition toward using chatbots in procedures linked to existing insurance contracts, such as reporting covered economic damage, whereas BI reflects whether individuals are willing to accept or refuse interaction with a chatbot to carry out such a procedure. It is commonly accepted that a positive attitude toward a given technology positively influences BI (Fishbein and Ajzen, 1975; Davis, 1989). The empirical literature shows this relation in the acceptance of digital banking channels (Bashir and Madhavaiah, 2015), self-service technologies (Yoon and Choi 2020), blockchain adoption for financial purposes (Albayati et al., 2020; Palos-Sánchez et al., 2021), I4.0 (Virmani et al., 2023) and bot and chatbot settings (Eeuwen, 2017; Han and Conti, 2020; Pillai and Sivathanu, 2020; Brachten et al., 2021; McLean et al., 2021; Pitardi and Marriott, 2021; Balakrishnan et al., 2022; de Cicco et al., 2022). Therefore, we state the following hypothesis.

Hypothesis 1 (H1) = A favorable attitude toward conversational robots positively influences policyholders’ intention to use them to communicate with the insurer.

PU can be defined as the degree to which a potential user believes that a new technology will improve his or her performance in carrying out an action of interest (Davis, 1989). In this paper, PU arises from policyholders’ perception that interacting with the chatbot improves communication with the insurer. Chatbots are available 24/7, and simple procedures become agile and are resolved quickly because there is no need to wait for a human agent (DeAndrade and Tumelero, 2022). Moreover, this technology does not imply abandoning other channels of communication with insurance companies. Thus, conversational robots can be regarded as a supplementary component of the insurance ecosystem through which the company interacts, conducts transactions, and delivers products to policyholders and potential consumers (Standaert and Muylle, 2022).

The purported potential of insurtech to confer a competitive advantage (Stoeckli et al., 2018) must translate into advantageous outcomes for customers, through reduced insurance costs, improved service to the policyholder, or both. Nonetheless, as Rodríguez-Cardona et al. (2019) noted, to realize tangible benefits from adopting a chatbot system, insurance companies need to enhance their information systems so that the back-end processing and transformation of data and information are seamlessly integrated, enabling the front-end system to deliver an appropriate response.

However, chatbots currently cannot meet complex requirements (Rodríguez-Cardona et al., 2019) and thus often need the support of a human operator (Vassilakopoulou et al., 2023). Clients who have experienced a major loss (for example, of their home or a loved one) may expect not only technical assistance but also active listening and empathy from their interlocutor (de Andrés-Sánchez and Gené-Albesa, 2023b). Yet chatbots cannot provide emotional support or human warmth (Vassilakopoulou et al., 2023). Moreover, the disappearance of many jobs due to digitalization may be perceived as a negative social consequence of I4.0 (Kovacs, 2018), a phenomenon that also affects insurance markets (Kelley et al., 2018).

The literature shows that PU typically exerts a positive influence on the evaluation of novel technologies. This has been confirmed in the blockchain setting (Albayati et al., 2020; Nuryyev et al., 2020; Sheel and Nath, 2020; Palos-Sánchez et al., 2021) and for attitude toward digital channels such as m-banking and digital services (Bashir and Madhavaiah, 2015; Veríssimo, 2016; Khan et al., 2017; Farah et al., 2018; Sánchez-Torres et al., 2018; Warsame and Ireri, 2018; Hussain et al., 2019). Among innovations affecting the insurance industry, examples include Legowo (2018), Huang et al. (2019), Oktariyana et al. (2019) and de Andrés-Sánchez and González-Vila Puchades (2023). Regarding the use of chatbots to provide services to clients, relevant studies include Eeuwen (2017), Moriuchi (2019), Kasilingam (2020), Kuberkar and Singhal (2020), Pillai and Sivathanu (2020), Brachten et al. (2021), Gansser and Reich (2021), Joshi (2021), McLean et al. (2021), Pitardi and Marriott (2021), Balakrishnan et al. (2022), de Cicco et al. (2022) and Pawlik (2022). Thus, we propose testing the following hypothesis:

Hypothesis 2 (H2) = Perceived usefulness positively influences attitude toward interacting with conversational robots in insurance procedures.

PEOU is often defined as the extent to which an individual believes that using a specific system would require minimal effort (Davis, 1989). In our context, PEOU refers to the sense of encountering no obstacles, such as susceptibility to errors, lack of error recovery, or confusion, when a procedure with the insurer is mediated by a chatbot. Compared with alternative channels for managing policies, chatbots offer greater availability than human agents and fewer barriers to use than conventional applications. They require neither installation nor learning a new user interface, because only a conventional phone is needed (Koetter et al., 2019).

Professionals tend to report that this technology is not mature enough to be easily used in insurance settings (Rodríguez-Cardona et al., 2019); in this regard, the quality of communication with the conversational bot is an essential factor in customer satisfaction (Baabdullah et al., 2022). Chatbots lack the ability to discern shifts in tone of voice or changes in conversational context (Vassilakopoulou et al., 2023), and their frequent failures often leave interactions incomplete (Xing et al., 2022). Many chatbots are confined to handling rudimentary interactions; beyond that, their responses tend to lack substance because they rely on scripted conversational trees and basic dialog datasets (Nuruzzaman and Hussain, 2020). Failed responses from conversational robots negatively affect users’ judgments regarding the adoption of this technology, leading to increased resistance toward its use (Jansom et al., 2022).

The literature shows that PEOU usually has a positive influence on the evaluation of a technology and thus on attitude and BI. Several studies on blockchain technology (Albayati et al., 2020; Nuryyev et al., 2020; Sheel and Nath, 2020; Palos-Sánchez et al., 2021) and fintech communication channels (Bashir and Madhavaiah, 2015; Veríssimo, 2016; Warsame and Ireri, 2018) support this. Regarding insurance industry innovations, see Legowo (2018) and Huang et al. (2019). With respect to the use of chatbots, we highlight Moriuchi (2019), Pillai and Sivathanu (2020), Pawlik (2022), Kasilingam (2020), Kuberkar and Singhal (2020), Brachten et al. (2021), Gansser and Reich (2021), McLean et al. (2021), Mostafa and Kasamani (2022), Pitardi and Marriott (2021), Balakrishnan et al. (2022) and de Andrés-Sánchez and Gené-Albesa (2023a). Therefore, the following hypothesis is proposed:

Hypothesis 3a (H3a) = Perceived ease of use of chatbots positively influences attitude toward their use in procedures with the insurer.

The TAM also postulates a positive indirect impact of PEOU on attitude through its influence on PU: a user-friendly service may have a positive impact on PU (Davis, 1989). Albayati et al. (2020), Nuryyev et al. (2020) and Palos-Sánchez et al. (2021) found a significant positive impact in a blockchain setting. Bashir and Madhavaiah (2015) report this in internet banking. Regarding the use of chatbots, we can refer to Joshi (2021) and de Cicco et al. (2022). In the field of insurance technology and services, see Huang et al. (2019) and de Andrés-Sánchez and González-Vila Puchades (2023).

Hypothesis 3b (H3b) = Perceived ease of use positively impacts the perceived usefulness of chatbots in communicating with the insurer.

According to Glikson and Woolley (2020), trust in the adoption of chatbots encompasses three dimensions: cognitive, relational, and emotional. The cognitive dimension is associated with the perception that this technology is appropriate for the intended objectives of its use. Relational trust relies on the user’s confidence in the organization promoting the use of chatbot technology. Finally, emotional trust resides in the perception of humanity in chatbot technology, such as through empathy or anthropomorphism (Pitardi and Marriott, 2021; Pawlik, 2022; Gkinko and Elbanna, 2023). In customers’ perception of insurtech, trust matters mainly through the first two dimensions: the specific nature of the insurance business calls for relational trust, and the fact that the relationship between the company and policyholders is mediated by technology calls for cognitive trust in chatbot technology (Zarifis and Cheng, 2022). In our view, the emotional dimension, although it has some influence in the setting covered by this study, is notably less relevant because interactions between policyholders and insurers are intermittent rather than continuous and are often prosaic (de Andrés-Sánchez and Gené-Albesa, 2023b).

Relational trust is the basis of any financial transaction, since one party (the fund lender) must believe that the counterparty (the fund borrower) will pay the promised cash flows on time. This explains why trust is a commonly assessed factor in fintech acceptance studies (de Andrés-Sánchez et al., 2023; Firmansyah et al., 2023). Trust is even more important in insurance markets, since both parties to the contract (company and customer) must trust each other. Indeed, trust forms the core of the insurance industry (Guiso, 2012), given its inherent problems of moral hazard and adverse selection. Policyholders’ trust in insurance companies is the perception that their services will enable fast and reliable compensation for losses and that interactions between them will be satisfactory (Guiso, 2021).

Likewise, trust is highly relevant for novel technologies such as chatbots, especially in B2C relations (Baabdullah et al., 2022). People are often skeptical of these tools because of the number of decisions they make and their limited transparency (Brachten et al., 2021). Bashir and Madhavaiah (2015) define trust in a new communication technology in the realm of mediated financial services as customers’ conviction that the financial provider can deliver a satisfactory level of service through this new technology. In services provided by conversational bots, two dimensions of trust can be identified: competence and comfort (Joshi, 2021).

The main objective of insurtech is to improve the value of the products offered to customers (Riikkinen et al., 2018) and the value of the insurer itself (Lanfranchi and Grassi, 2022). This may enhance trust in insurers’ main service, namely, satisfactorily covering honest claims (Guiso, 2021). Within the technology acceptance framework, trust is expected to affect attitude or BI directly, but also indirectly through PU and PEOU.

The impact of TRUST on BI, whether direct or mediated by ATT, has been shown to be significant in various contexts, such as blockchain technology acceptance (Albayati et al., 2020; Palos-Sánchez et al., 2021), customer evaluations of digital banking channels (Bashir and Madhavaiah, 2015; Sánchez-Torres et al., 2018), and the insurtech domain, as reported by Huang et al. (2019). Additionally, a positive impact of TRUST on the BI to use chatbots has been reported by Kasilingam (2020), Kuberkar and Singhal (2020), Joshi (2021), Gansser and Reich (2021), and Pitardi and Marriott (2021). Therefore, we propose testing the following hypothesis:

Hypothesis 4a (H4a) = Trust in conversational robots positively affects policyholders’ attitude toward communicating with the insurer.

Furthermore, trust can influence the motivation and extent to which individuals use artificial intelligence-based technologies, which can impact the perceived utility and ease of use of conversational robots (de Andrés-Sánchez and Gené-Albesa, 2023b). This argument elucidates why several studies have noted the statistical significance of trust as a precursor to both PU (Han and Conti, 2020; Brachten et al., 2021) and PEOU (Albayati et al., 2020; Palos-Sánchez et al., 2021). Thus, the following hypotheses are proposed:

Hypothesis 4b (H4b) = Trust in conversational robots positively affects policyholders’ perceived usefulness.

Hypothesis 4c (H4c) = Trust in conversational robots positively affects policyholders’ perceived ease of use.
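Taken together, H1-H4c define a recursive (acyclic) path model in which TRUST can reach BI through several mediated routes. In such a linear model, the total effect of one construct on another is the sum, over all directed paths, of the products of the path coefficients along each path. The sketch below enumerates those routes; the coefficient values and the helper names `all_paths` and `total_effect` are hypothetical placeholders for illustration, not estimates from this study.

```python
# Hypothesized structural paths (H1-H4c): (source, target) -> path coefficient.
# The coefficient values are HYPOTHETICAL placeholders, not results of this study.
PATHS = {
    ("ATT", "BI"): 0.8,      # H1
    ("PU", "ATT"): 0.3,      # H2
    ("PEOU", "ATT"): 0.2,    # H3a
    ("PEOU", "PU"): 0.4,     # H3b
    ("TRUST", "ATT"): 0.4,   # H4a
    ("TRUST", "PU"): 0.5,    # H4b
    ("TRUST", "PEOU"): 0.6,  # H4c
}


def all_paths(source, target, paths=PATHS):
    """Enumerate every directed path from source to target (the model is acyclic)."""
    if source == target:
        return [[target]]
    found = []
    for (src, dst) in paths:
        if src == source:
            for tail in all_paths(dst, target, paths):
                found.append([source] + tail)
    return found


def total_effect(source, target, paths=PATHS):
    """Sum, over all directed paths, of the product of coefficients along each path."""
    total = 0.0
    for route in all_paths(source, target, paths):
        product = 1.0
        for a, b in zip(route, route[1:]):
            product *= paths[(a, b)]
        total += product
    return total


if __name__ == "__main__":
    for route in all_paths("TRUST", "BI"):
        print(" -> ".join(route))
    print(f"Total effect of TRUST on BI: {total_effect('TRUST', 'BI'):.4f}")
```

Under the model, TRUST reaches BI through four routes (via ATT directly, via PU, via PEOU, and via PEOU then PU), which is why a construct with a modest direct path can still have a sizeable total effect.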

Materials and methods

Sampling protocol, respondent profile, and questionnaire

The data are derived from a structured questionnaire that was administered in Spanish. It underwent an initial testing phase with fifteen professionals from the Spanish insurance industry. Once their feedback was integrated, the questionnaire was administered to an additional twelve volunteers who were not affiliated with the financial or insurance sectors. Their input was carefully considered to further fine-tune the questionnaire to its final version. These eighteen responses were subsequently used to conduct an initial analysis to establish the validity of the scales.

We subsequently distributed the questionnaire through social networks (Facebook, LinkedIn, WhatsApp, and moderated e-mail lists). The questionnaire was completed entirely online from 20 December 2022 to 23 February 2023. To obtain well-founded opinions, we accepted only responses from policyholders who held more than two insurance policies. A total of 252 responses were received. However, we discarded questionnaires with unanswered items belonging to the constructs shown in Fig. 2, as well as those from respondents who did not meet the criterion regarding the number of policies. Therefore, the final number of responses was 226, which, following Conroy (2016) and given that our population is large (>5000), allows an error margin between ±5% and ±7.5%, which we judged adequate. The profile of the respondents is displayed in Table 1.
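As a rough check on the stated error margin, the sketch below applies the textbook margin of error for a sample proportion at 95% confidence under maximum variability (p = 0.5), with an optional finite population correction. The formula, the choice p = 0.5, and the illustrative N = 5000 are our assumptions; Conroy (2016) may present the figures differently.

```python
import math


def margin_of_error(n, p=0.5, z=1.96, population=None):
    """Margin of error for a sample proportion (95% confidence by default).

    p = 0.5 gives the most conservative (largest) margin. If a finite
    population size is given, the finite population correction is applied.
    """
    moe = z * math.sqrt(p * (1 - p) / n)
    if population is not None:
        moe *= math.sqrt((population - n) / (population - 1))
    return moe


if __name__ == "__main__":
    n_final = 226  # usable responses reported in the text
    print(f"Infinite population: +/-{margin_of_error(n_final):.1%}")
    print(f"N = 5000:            +/-{margin_of_error(n_final, population=5000):.1%}")
```

With n = 226 this gives roughly ±6.5% (about ±6.4% with the correction for N = 5000), inside the ±5% to ±7.5% band quoted above.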

The questionnaire began with the introductory paragraph: “We are asking for your opinion on carrying out procedures with your insurer (third-party car liability, home insurance, health policies…) through automated systems such as voice robots (telephone) and text robots (internet chats and WhatsApp) instead of using a human operator. A typical example is communicating a claim or modifying policy coverages”.

Table 2 shows the measurement scales that we used. All the scales are reflective constructs and were answered on an 11-point Likert scale. The BI items were developed from those proposed by Venkatesh et al. (2003) and Davis (1989). Attitude toward chatbots (ATT) was measured with the four items of Bhattacherjee and Premkumar (2004), which Eeuwen (2017) used in a chatbot setting. The PU items are adapted from Venkatesh et al. (2003), Venkatesh et al. (2012) and Hussain et al. (2019); some of these indicators were applied by Palos-Sánchez et al. (2021) to fintech and by Gansser and Reich (2021) to assess chatbot acceptance. The PEOU items were based on those proposed by Venkatesh et al. (2012). The TRUST scale, which is based on Morgan and Hunt (1994), was previously used by Farah et al. (2018) and Kim et al. (2008).

Data analysis

RQ1 was answered by comparing the mean and median values of the BI and ATT items with 5, the midpoint of the 11-point scale, which can be considered the neutral position. One-sample t tests were performed for the means, and Wilcoxon signed-rank tests were used for the medians.
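A minimal sketch of the two RQ1 tests against the neutral value 5, written with the Python standard library only. To avoid a SciPy dependency it uses the normal approximation for both p values, which is reasonable for samples in the hundreds; in practice one would more likely call `scipy.stats.ttest_1samp` and `scipy.stats.wilcoxon`. The function names and the response vector are hypothetical, not the survey data.

```python
import math
from statistics import NormalDist, mean, stdev


def _two_sided_p(z):
    """Two-sided p value from a standard normal statistic."""
    return 2 * (1 - NormalDist().cdf(abs(z)))


def t_test_vs_midpoint(x, mu0=5.0):
    """One-sample t statistic against mu0 (p value via normal approximation)."""
    t = (mean(x) - mu0) / (stdev(x) / math.sqrt(len(x)))
    return t, _two_sided_p(t)


def wilcoxon_vs_midpoint(x, mu0=5.0):
    """Wilcoxon signed-rank test of location against mu0.

    Zero differences are dropped, tied |differences| get average ranks, and the
    variance of W+ is tie-corrected; p value via normal approximation.
    """
    d = [xi - mu0 for xi in x if xi != mu0]
    n = len(d)
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    tie_term = 0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        size = j - i + 1
        tie_term += size ** 3 - size
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average of ranks i+1 .. j+1
        i = j + 1
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    mean_w = n * (n + 1) / 4
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean_w) / math.sqrt(var_w)
    return z, _two_sided_p(z)


# Hypothetical 0-10 responses to one item (not the survey data).
responses = [2, 3, 1, 4, 0, 5, 6, 2, 3, 1] * 20
print("t test:", t_test_vs_midpoint(responses))
print("Wilcoxon:", wilcoxon_vs_midpoint(responses))
```

A negative statistic with a small p value indicates a central value significantly below the neutral point, which is the pattern the Results section reports for BI and ATT.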

RQ2 was evaluated by fitting the structural equation model depicted in Fig. 2 with partial least squares (PLS-SEM). Following Hair et al. (2019), strong arguments for using PLS-SEM are that it can estimate complex models without explicit assumptions about the distribution of the data and that it can do so with small samples. In the model depicted in Fig. 2, no construct has more than four inner or outer links with other constructs. The so-called 10-times rule therefore suggests that a sample size N > 40 can be sufficient (Kock and Hadaya, 2018), so our sample of 226 responses is clearly above the minimum required size.
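The 10-times rule can be expressed directly: the minimum sample is ten times the largest number of structural paths pointing at any construct (or of formative indicators of a construct; there are none here, since all scales are reflective). The edge list below is our reconstruction of Fig. 2 from hypotheses H1 to H4c as they are discussed in the Results and Discussion; the mapping of each hypothesis label to a path is an assumption.

```python
from collections import Counter

# Presumed structural paths of Fig. 2, reconstructed from H1-H4c as discussed
# in this paper (the label-to-path mapping is an assumption): (source, target).
PATHS = [
    ("ATT", "BI"),      # H1
    ("PU", "ATT"),      # H2
    ("PEOU", "ATT"),    # H3a
    ("PEOU", "PU"),     # H3b
    ("TRUST", "ATT"),   # H4a
    ("TRUST", "PU"),    # H4b
    ("TRUST", "PEOU"),  # H4c
]


def ten_times_rule(paths, formative_indicators=None):
    """Minimum N = 10 x max(paths into any construct, formative indicators of any
    construct). Reflective indicators do not count."""
    incoming = Counter(target for _, target in paths)
    largest = max(incoming.values())
    if formative_indicators:
        largest = max(largest, max(formative_indicators.values()))
    return 10 * largest, incoming


min_n, incoming = ten_times_rule(PATHS)
print(incoming.most_common(1), "minimum N:", min_n, "sample 226 adequate:", 226 >= min_n)
```

Under this reconstruction ATT receives three structural paths, so the rule gives a minimum of 30. The bound of four links used in the text (N > 40) is therefore conservative, and both values are far below 226.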

Once the structural and measurement models had been specified and the final sample was available, we estimated the model in Fig. 2 with PLS-SEM in SmartPLS 4.0, following the standard steps. We first checked the consistency and reliability of the scales. Internal consistency was assessed with Cronbach’s alpha, composite reliability (CR), and Dijkstra and Henseler’s ρA, all of which must be above 0.7, and with the average variance extracted (AVE), which is expected to be higher than 0.5. Likewise, item loadings must be greater than 0.7. We analyzed the discriminant validity of the scales with the Fornell-Larcker criterion and the heterotrait-monotrait (HTMT) ratio.
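The reliability criteria above can be computed from item scores and standardized outer loadings, as sketched below. This illustrates the textbook formulas for Cronbach’s alpha, composite reliability (ρC) and AVE; it is not SmartPLS’s implementation, and ρA is omitted because it requires the PLS weights. Function names, scores and loadings are hypothetical.

```python
from statistics import pvariance


def cronbach_alpha(items):
    """items: k lists of scores, one per item, same respondents in the same order."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]
    sum_item_var = sum(pvariance(item) for item in items)
    return k / (k - 1) * (1 - sum_item_var / pvariance(totals))


def composite_reliability(loadings):
    """rho_C for a reflective construct from standardized outer loadings."""
    s = sum(loadings)
    error_var = sum(1 - lam ** 2 for lam in loadings)
    return s ** 2 / (s ** 2 + error_var)


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def reliability_report(items, loadings):
    """Apply the cutoffs stated in the text (alpha, CR > 0.7; AVE > 0.5; loadings > 0.7)."""
    return {
        "alpha": cronbach_alpha(items) > 0.7,
        "CR": composite_reliability(loadings) > 0.7,
        "AVE": average_variance_extracted(loadings) > 0.5,
        "loadings": all(lam > 0.7 for lam in loadings),
    }


# Hypothetical scores for a four-item scale (five respondents) and loadings.
items = [[2, 3, 1, 4, 6], [2, 4, 1, 3, 6], [1, 3, 2, 4, 7], [2, 3, 1, 5, 6]]
loadings = [0.85, 0.88, 0.90, 0.82]
print(reliability_report(items, loadings))
```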

The significance of the path coefficients (β) was assessed by bootstrapping with 5000 subsamples and the percentile method. The resulting p values allow us to test the hypotheses formulated in the literature review, and the same procedure allows us to assess the significance of indirect effects. The explanatory power of the model is gauged by the coefficient of determination R2, while its predictive capacity is evaluated using Stone–Geisser’s Q2 measure and the cross-validated predictive ability test (CVPAT) described in Liengaard et al. (2021).

Results

Descriptive statistics and scale validation

To answer RQ1, we compare the means and medians of the items of the BI and ATT scales with the neutral value of 5. Table 3 shows that, in all cases, these central values are clearly below 5, and the differences are significant at p < 0.01 under both Student’s t test on the mean and the Wilcoxon test on the median.

Table 3 also shows that all the measurement scales have internal consistency, since Cronbach’s alpha, CR, and ρA are always >0.7, the AVE is >0.5, and the loadings of all the items are >0.7. Table 4 allows evaluation of the discriminant validity of the latent variables. Both the Fornell-Larcker and HTMT criteria are met: the Pearson correlations between factors are always below the square root of their AVEs, and all HTMT ratios are less than 0.90.
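Both discriminant validity checks can be written compactly. HTMT is the mean correlation between items of different constructs divided by the geometric mean of the average within-construct item correlations. The sketch assumes an item correlation matrix stored as a dictionary keyed by item pairs and constructs with at least two items each; item names and values are hypothetical.

```python
import math
from itertools import combinations, product
from statistics import fmean


def htmt(corr, items_a, items_b):
    """Heterotrait-monotrait ratio of two constructs (each needs >= 2 items).

    corr maps frozenset({item_i, item_j}) -> Pearson correlation of the two items.
    """
    def r(i, j):
        return corr[frozenset((i, j))]

    hetero = fmean(r(i, j) for i, j in product(items_a, items_b))
    mono_a = fmean(r(i, j) for i, j in combinations(items_a, 2))
    mono_b = fmean(r(i, j) for i, j in combinations(items_b, 2))
    return hetero / math.sqrt(mono_a * mono_b)


def fornell_larcker_ok(ave_a, ave_b, latent_corr):
    """True if both sqrt(AVE)s exceed the absolute correlation between the constructs."""
    return math.sqrt(min(ave_a, ave_b)) > abs(latent_corr)


# Hypothetical two-item constructs A and B.
corr = {
    frozenset(("a1", "a2")): 0.60,
    frozenset(("b1", "b2")): 0.50,
    frozenset(("a1", "b1")): 0.30,
    frozenset(("a1", "b2")): 0.30,
    frozenset(("a2", "b1")): 0.30,
    frozenset(("a2", "b2")): 0.30,
}
print("HTMT below 0.90:", htmt(corr, ["a1", "a2"], ["b1", "b2"]) < 0.90)
print("Fornell-Larcker met:", fornell_larcker_ok(0.64, 0.70, 0.45))
```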

Structural model assessment and results evaluation

The fit of our model was measured with the coefficient of determination R2, shown in Fig. 3. For ATT, R2 = 0.717, which can be considered close to substantial. Similarly, for BI (R2 = 0.604), PU (R2 = 0.626), and PEOU (R2 = 0.659), the explanatory power lies between moderate and substantial (Hair et al., 2019).
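The labels used above follow the rule of thumb in Hair et al. (2019), under which R2 values of 0.75, 0.50 and 0.25 are considered substantial, moderate and weak. A small sketch with hypothetical helper names makes the mapping explicit for the four reported values.

```python
def r_squared(actual, fitted):
    """Coefficient of determination: 1 - SS_residual / SS_total."""
    mean_a = sum(actual) / len(actual)
    ss_res = sum((a - f) ** 2 for a, f in zip(actual, fitted))
    ss_tot = sum((a - mean_a) ** 2 for a in actual)
    return 1 - ss_res / ss_tot


def r2_label(r2):
    """Rule-of-thumb labels from Hair et al. (2019)."""
    if r2 >= 0.75:
        return "substantial"
    if r2 >= 0.50:
        return "moderate"
    if r2 >= 0.25:
        return "weak"
    return "below weak"


for construct, r2 in {"ATT": 0.717, "BI": 0.604, "PU": 0.626, "PEOU": 0.659}.items():
    print(construct, r2, r2_label(r2), f"gap to 0.75: {0.75 - r2:.3f}")
```

Under these cutoffs all four values fall in the moderate band, with ATT the closest to the substantial threshold.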

Figure 3 and Table 5 show the values of the path coefficients and their significance. These results allow us to test the hypotheses outlined in the second section and thus answer RQ2. Hypotheses H1, H3a, H4a, H4b, and H4c are accepted at p < 0.01. Regarding the direct impact of PU on ATT, H2 is accepted at p < 0.05 but not at p < 0.01. H3b, in contrast, is rejected. Table 6 shows the total impact of the antecedents TRUST, PEOU, and PU on the final outcome variable, BI. In the case of PU, this impact is transmitted through the mediation of ATT, whereas for the other two variables it can also be mediated by PU. In all cases, these effects are significant at p < 0.01. Interpreting these coefficients allows us to identify TRUST as the most influential variable in explaining the BI to use chatbots.
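The total effects in Table 6 combine the direct path with every indirect path, each indirect effect being the product of the coefficients along it. The sketch below enumerates these paths recursively for the presumed structure of Fig. 2. The coefficients are illustrative placeholders, not the estimates reported in Table 5, and the hypothesis-to-path mapping is our assumption.

```python
# Illustrative path coefficients only (NOT the estimates in Table 5).
# Keys are (source, target); the hypothesis labels are an assumed mapping.
BETA = {
    ("ATT", "BI"): 0.5,      # H1
    ("PU", "ATT"): 0.2,      # H2
    ("PEOU", "ATT"): 0.3,    # H3a
    ("PEOU", "PU"): 0.1,     # H3b
    ("TRUST", "ATT"): 0.4,   # H4a
    ("TRUST", "PU"): 0.6,    # H4b
    ("TRUST", "PEOU"): 0.7,  # H4c
}


def total_effect(beta, source, target):
    """Sum over all directed paths source -> target of the product of the path
    coefficients along each path (direct plus indirect). Assumes no cycles."""
    if source == target:
        return 1.0
    return sum(b * total_effect(beta, nxt, target)
               for (frm, nxt), b in beta.items() if frm == source)


def indirect_effect(beta, source, target):
    """Total effect minus the direct path (0 if there is no direct path)."""
    return total_effect(beta, source, target) - beta.get((source, target), 0.0)


for antecedent in ("TRUST", "PEOU", "PU"):
    print(antecedent, "-> BI total:", round(total_effect(BETA, antecedent, "BI"), 3))
```

Because the model has no direct path from TRUST, PEOU or PU to BI, their whole effect on BI is indirect and runs through ATT; the significance of such products is what the bootstrap step assesses.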

Figure 3 and Table 7 show that the structural equation model depicted in Figs. 2 and 3 has significant predictive power. For all the constructs with inner links, Q2 > 0; therefore, following Hair et al. (2019), the predictions made by the model are significant. The CVPAT results reinforce these statements, as they demonstrate that the proposed model provides good out-of-sample predictions (average loss difference = −3.179, p < 0.01).
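Both predictive checks compare the model’s out-of-sample predictions against a naive benchmark. The sketch below shows only the core arithmetic under simplifying assumptions: Q2 of the PLSpredict type, as one minus the ratio of the model’s squared prediction errors to those of a benchmark (here the training-sample indicator mean), and a CVPAT-style average loss difference with a simple t statistic. The cross-validation folds that generate the predictions are not shown, and the function names and numbers are hypothetical.

```python
import math
from statistics import fmean, stdev


def q2_predict(actual, predicted, benchmark):
    """1 - SSE(model) / SSE(benchmark); > 0 means the model beats the benchmark."""
    sse_model = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    sse_bench = sum((a - b) ** 2 for a, b in zip(actual, benchmark))
    return 1 - sse_model / sse_bench


def average_loss_difference(actual, predicted, benchmark):
    """CVPAT-style statistic: mean of (model squared error - benchmark squared error)
    per case, with a simple t statistic. Negative values favour the model."""
    diffs = [(a - p) ** 2 - (a - b) ** 2
             for a, p, b in zip(actual, predicted, benchmark)]
    avg = fmean(diffs)
    t = avg / (stdev(diffs) / math.sqrt(len(diffs)))
    return avg, t


# Illustrative holdout values for one indicator (not the survey data);
# the benchmark predicts the training-sample mean for every case.
actual = [1, 2, 3, 4, 6, 7]
predicted = [1.2, 1.8, 3.3, 4.1, 5.6, 6.9]
benchmark = [3.5] * len(actual)
print("Q2_predict:", round(q2_predict(actual, predicted, benchmark), 3))
print("avg loss diff, t:", average_loss_difference(actual, predicted, benchmark))
```

A negative average loss difference, as reported above, means the model’s prediction loss is lower than the benchmark’s.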

Discussion

Regarding RQ1 (what is customers’ average intention to use, and attitude toward using, chatbots in communications with the company to manage existing policies, e.g., to notify a claim?), we found that policyholders, on average, tend to reject interacting with them. This finding is in accordance with Van Pinxteren et al. (2020), who reported customers’ reluctance, and with Rodríguez-Cardona et al. (2019) and PromTep et al. (2021), who found this reluctance in the German and Canadian insurance industries, respectively.

Regarding RQ2 (what are the drivers of intention to use, and attitude toward, the assistance of conversational robots in managing existing policies?), we found that the TAM by Davis (1989) combined with trust explains more than half of the variability in attitude (ATT) and BI to use bots. All the assessed explanatory factors, trust (TRUST), PU, and PEOU, were significant in explaining BI through the mediation of ATT. Likewise, ATT consistently explains BI, which is in accordance with the conceptual ground of Davis (1989) and with the related literature reviewed (Bashir and Madhavaiah, 2015; Yoon and Choi, 2020; Albayati et al., 2020; Palos-Sánchez et al., 2021; Eeuwen, 2017; Han and Conti, 2020; Brachten et al., 2021; Pitardi and Marriott, 2021; de Cicco et al., 2022; Virmani et al., 2023).

The significance of PU in explaining attitude (directly) and BI (through mediation) is in accordance with the mainstream findings of the reviewed studies: Albayati et al. (2020), Nuryyev et al. (2020), Sheel and Nath (2020) and Palos-Sánchez et al. (2021) for blockchain technologies, and Bashir and Madhavaiah (2015), Khan et al. (2017), Farah et al. (2018), Sánchez-Torres et al. (2018), Warsame and Ireri (2018) and Hussain et al. (2019) for digital banking channels. These findings are also congruent with those on insurance innovation (Legowo, 2018; Huang et al., 2019; de Andrés-Sánchez and González-Vila Puchades, 2023) and on customers’ interactions with chatbots (Eeuwen, 2017; Moriuchi, 2019; Kasilingam, 2020; Kuberkar and Singhal, 2020; Pillai and Sivathanu, 2020; Brachten et al., 2021; Gansser and Reich, 2021; Joshi, 2021; McLean et al., 2021; Pitardi and Marriott, 2021; de Cicco et al., 2022; Pawlik, 2022).

We found that PEOU has a significant positive impact on ATT but not on PU. The positive effect of PEOU on ATT agrees with the reviewed literature on perceptions of blockchain applications for finance (Albayati et al., 2020), digital banking acceptance (Bashir and Madhavaiah, 2015; Veríssimo, 2016; Warsame and Ireri, 2018), technology acceptance in the insurance sector (Huang et al., 2019) and consumers’ perceptions of service chatbots (Moriuchi, 2019; Kasilingam, 2020; Kuberkar and Singhal, 2020; Pillai and Sivathanu, 2020; Brachten et al., 2021; Gansser and Reich, 2021; Mostafa and Kasamani, 2022; McLean et al., 2021; Pitardi and Marriott, 2021; Pawlik, 2022).

The nonsignificant impact of PEOU on PU contrasts with the positive link between PEOU and attitude and BI, mediated by PU, reported in the literature: see Albayati et al. (2020), Nuryyev et al. (2020) and Palos-Sánchez et al. (2021) in the blockchain setting; Bashir and Madhavaiah (2015) for internet banking; and Joshi (2021) and de Cicco et al. (2022) for BI toward the use of bots. In the field of new insurance technology products, Huang et al. (2019) and de Andrés-Sánchez and González-Vila Puchades (2023) also reported this link.

We found that TRUST is the main driver of policyholders’ chatbot acceptance. This is not surprising, since trust matters for this insurtech application in two ways. Insurance transactions rest on a high degree of mutual trust between insurers and insureds (Guiso, 2021), and trust is also a key factor in consumers’ acceptance of chatbots in B2C relationships (Brachten et al., 2021). We found that its influence operates not only directly on attitude but also through the mediation of PEOU and PU.

The significant impact of trust on attitude and BI is in accordance with mainstream reports. In the field of customer acceptance of chatbots, we can highlight Kasilingam (2020), Kuberkar and Singhal (2020), Joshi (2021), Gansser and Reich (2021) and Pitardi and Marriott (2021). This impact has also been reported for blockchain use (Palos-Sánchez et al., 2021), in the m-banking context (Bashir and Madhavaiah, 2015; Sánchez-Torres et al., 2018) and by Huang et al. (2019) within the insurtech field. Likewise, our finding on the significant influence of trust on PU is in accordance with Han and Conti (2020) and Brachten et al. (2021), and the relevance of its influence on PEOU coincides with Albayati et al. (2020) and Palos-Sánchez et al. (2021).

The findings indicate that the current state of chatbot development for handling established policies, particularly in areas such as claims management, is not sufficiently advanced. As a result, insurance consumers exhibit hesitancy toward embracing chatbot usage. Common responses reflect a diminished perception of usefulness, modest levels of user friendliness, and a restricted level of trust in this technology, leading to its rejection. This finding is consistent with that of Xing et al. (2022), who assert that robotic technology can often introduce more challenges than it resolves, and as observed, chatbots have garnered neither professional nor customer appreciation within different contexts (Rodríguez-Cardona et al., 2019; Van Pinxteren et al., 2020; PromTep et al., 2021; de Andrés-Sánchez and Gené-Albesa, 2023b).

At the time of writing, a significant portion of conversational robots were developed using basic conversational databases (Nuruzzaman and Hussain, 2020). Consequently, their communication capabilities are limited: they cannot address intricate demands and they lack emotional skills. Vassilakopoulou et al. (2023), who studied the relationships of Norwegian citizens with public administrations, similarly concluded that chatbots can assist citizens with simple requirements but need supervision by a human agent for issues that are even slightly more complex. Thus, conversational robot technology should currently be regarded as a supplementary channel in a company’s communication with its customers, one that can offer enhanced service in very specific circumstances.

This paper tests the ability of the TAM by Davis (1989) to explain policyholders’ attitude toward the mediation of chatbots in their interactions with insurers. Although the reliability of TAMs for explaining fintech acceptance has been extensively demonstrated (Firmansyah et al., 2023), empirical analyses in the insurtech sphere are not common, so this paper expands the empirical evidence in this novel field of the insurance industry. We have also shown that trust is the keystone to understanding chatbot acceptance. This reflects the peculiar features of the insurance business, which requires trust among all the agents involved for novel technologies to be successfully developed and used.

This work on the adoption of an insurtech application addresses a specific relationship between insurer and insured: the one arising from the mediation of chatbots in their interactions regarding active insurance contracts. Additionally, it is approached from the customer’s perspective. The proposed framework, although relatively straightforward, exhibits considerable explanatory and predictive capacity. An evident application of interest is therefore the analysis of the use of conversational robots in other phases of the insurance contract lifecycle, such as advisory services or pricing. Such an analysis can be conducted from the standpoint of potential policyholders as well as from that of insurance professionals involved in these processes, such as lawyers and actuaries.

Furthermore, the obtained results encourage us to speculate that the proposed model may be applicable in various areas of the insurance business impacted by insurtech, as depicted in Fig. 1. A few examples are as follows:

(1) Within the implementation of digital capabilities to streamline workflows, we could examine the assessors’ perceptions of new technologies that enable claims processing without physical contact or new AI tools that offer more precise detection of fraudulent claims.

(2) Concerning the provision of data-driven solutions, it may be of great interest to understand customers’ perceptions of the data collected by intelligent devices they use, e.g., health or travel habits, so that insurance companies can calculate premiums more accurately to provide coverage in these settings. The use of such data requires a high level of relational trust regarding the intentions and use of private and sensitive data.

(3) In the realm of digital insurance, a typical example is smart contracts, which are built on blockchain technology and the Internet of Things. We believe that the proposed framework, with minor modifications such as incorporating privacy concerns into the explanatory factors, can serve as a basis for analyzing the factors that explain judgment about such contracts and, consequently, the potential development of these types of products.

(4) Regarding the integration of services and the customer experience, which is manifested in the insurance industry through the convergence of activities such as advisory services, assessment and pricing, or claims processing, we believe that the proposed framework may be applicable for analyzing acceptance, both from potential policyholders and professionals within the insurance industry. The emergence of large language model-based AI systems in the early 2020s enhanced this suitability due to their versatility and capacity to offer credible responses across a diverse range of topics.

Conclusions and future research

This study used the TAM developed by Davis (1989) to elucidate the BI to use conversational bots to engage with insurers on matters concerning existing policies, such as providing information about claims. The results show that the low acceptance of chatbots can be explained by the TAM constructs, perceived usefulness and perceived ease of use, together with trust.

In this work, out of the three dimensions of trust (cognitive, relational, and emotional), we have considered only the first two. This choice is justified because interactions between the policyholder and the insurer are sporadic and, we assume, concern prosaic matters, such as reporting a minor claim. However, for certain significant events, such as the loss of a loved one or a substantial material loss, emotional trust in interactions with the insurance company could also be a relevant factor in the acceptance of conversational robots. Introducing this factor in future research could be of interest, especially in contexts related to personal matters such as life and health insurance coverage.

We are aware that this empirical study has additional limitations. It was conducted in a single country, Spain, and many responses came from social networks such as LinkedIn, whose users usually hold university degrees and have a professional status ranging from medium to very high. Educational level and economic position may well be relevant for explaining attitude toward chatbots. Therefore, our conclusions should be extrapolated with care to policyholders from countries with different cultures and/or to persons with dissimilar professional and educational profiles. More accurate conclusions would require extending the sample to other countries and socioeconomic profiles.

Moreover, we analyzed a cross-sectional survey, so our results cannot be extended to the long run. This is especially relevant for I4.0 technologies, a very dynamic field in rapid and continuous growth and improvement. A wider perspective on how policyholders’ acceptance of chatbots for carrying out insurance procedures evolves over time would require a longitudinal analysis covering different milestones in the development of artificial conversational technologies.

Data availability

The data are available on request from any of the authors by e-mail or at https://doi.org/10.7910/DVN/LK4LAT.

References

Agarwal S, Bhardwaj G, Saraswat E, Singh N, Aggarwal R, Bansal A (2022) Insurtech Fostering Automated Insurance Process using Deep Learning Approach. In 2nd International Conference on Innovative Practices in Technology and Management (ICIPTM) (Vol. 2, IEEE, pp. 386-392). https://doi.org/10.1109/ICIPTM54933.2022.9753891

Akram MS, Dwivedi YK, Shareef MA, Bhatti ZA (2022) Editorial introduction to the special issue: social customer journey–behavioural and social implications of a digitally disruptive environment. Technol Forecast Soc 185:122101. https://doi.org/10.1016/j.techfore.2022.122101

Albayati H, Kim SK, Rho JJ (2020) Accepting financial transactions using blockchain technology and cryptocurrency: a customer perspective approach. Technol Soc 62:101320. https://doi.org/10.1016/j.techsoc.2020.101320

Arner DW, Barberis J, Buckley RP (2015) The evolution of Fintech: A new postcrisis paradigm. Geo J Int’L L 47:1271

Baabdullah AM, Alalwan AA, Algharabat RS, Metri B, Rana NP (2022) Virtual agents and flow experience: an empirical examination of AI-powered chatbots. Technol Forecast Soc 181:121772. https://doi.org/10.1016/j.techfore.2022.121772

Balakrishnan J, Abed SS, Jones P (2022) The role of meta-UTAUT factors, perceived anthropomorphism, perceived intelligence, and social self-efficacy in chatbot-based services? Technol Forecast Soc 180:121692. https://doi.org/10.1016/j.techfore.2022.121692

Balan C (2023) Chatbots and voice assistants: digital transformers of the company–customer interface—a systematic review of the business research literature. J Theor Appl El Comm 18(2):995–1019. https://doi.org/10.3390/jtaer18020051

Balasubramanian R, Libarikian A, McElhaney D (2018) Insurance 2030: the impact of AI on the future of insurance. McKinsey & Company

Bashir I, Madhavaiah C (2015) Consumer attitude and behavioural intention towards Internet banking adoption in India. J Ind Bus Res 7(1):67–102. https://doi.org/10.1108/JIBR-02-2014-0013

Bhattacherjee A, Premkumar G (2004) Understanding changes in belief and attitude toward information technology usage: a theoretical model and longitudinal test. MIS Q Manag Inf Syst 28(3):229–254. https://doi.org/10.2307/25148634

Bittini JS, Rambaud SC, Pascual JL, Moro-Visconti R (2022) Business models and sustainability plans in the FinTech, InsurTech, and PropTech industry: evidence from Spain. Sustainability 14(19):12088. https://doi.org/10.3390/su141912088

Bohnert A, Fritzsche A, Gregor S (2019) Digital agendas in the insurance industry: the importance of comprehensive approaches. Geneva Pap Risk Insur Issues Pr 44:1–19. https://doi.org/10.1057/s41288-018-0109-0

Brachten F, Kissmer T, Stieglitz S (2021) The acceptance of chatbots in an enterprise context–a survey study. Int J Inf Manag 60:102375. https://doi.org/10.1016/j.ijinfomgt.2021.102375

Cao S, Lyu H, Xu X (2020) InsurTech development: evidence from Chinese media reports. Technol Forecast Soc 161:120277. https://doi.org/10.1016/j.techfore.2020.120277

Christidis K, Devetsikiotis M (2016) Blockchains and smart contracts for the internet of things. IEEE Access 4:2292–2303. https://doi.org/10.1109/ACCESS.2016.2566339

Conroy RM (2016) The RCSI sample size handbook: a rough guide. Technical report, pp 59–61

Dalenogare LS, Benitez GB, Ayala NF, Frank AG (2018) The expected contribution of Industry 4.0 technologies for industrial performance. Int J Prod Econ 204:383–394. https://doi.org/10.1016/j.ijpe.2018.08.019

Davis FD (1989) Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q 13(3):319–340. https://doi.org/10.2307/249008

DeAndrade IM, Tumelero C (2022) Increasing customer service efficiency through artificial intelligence chatbot. Rev de Gestão 29(3):238–251. https://doi.org/10.1108/REGE-07-2021-0120

de Andrés-Sánchez J, González-Vila Puchades L, Arias-Oliva M (2023) Factors influencing policyholders’ acceptance of life settlements: a technology acceptance model. Geneva Pap Risk Insur Issues Pr 48(4):941–967. https://doi.org/10.1057/s41288-021-00261-3

de Andrés-Sánchez J, González-Vila Puchades L (2023) Combining fsQCA and PLS-SEM to assess policyholders’ attitude towards life settlements. Eur Res Manag Bus Eco 29(2):100220. https://doi.org/10.1016/j.iedeen.2023.100220

de Andrés-Sánchez J, Gené-Albesa J (2023a) Explaining policyholders’ chatbot acceptance with an unified technology acceptance and use of technology-based model. J Theor Appl El Comm 18(3):1217–1237. https://doi.org/10.3390/jtaer18030062

de Andrés-Sánchez J, Gené-Albesa J (2023b) Assessing attitude and behavioral intention toward chatbots in an insurance setting: a mixed method approach. Int J Hum-Comput Int. https://doi.org/10.1080/10447318.2023.2227833

de Cicco R, Iacobucci S, Aquino A, Romana Alparone F, Palumbo R (2022) Understanding Users’ Acceptance of Chatbots: An Extended TAM Approach. In: Chatbot Research and Design. CONVERSATIONS 2021. Lecture Notes in Computer Science, vol 13171. Springer, Cham. https://doi.org/10.1007/978-3-030-94890-0_1

Eeuwen MV (2017) Mobile conversational commerce: messenger chatbots as the next interface between businesses and consumers (Master’s thesis, University of Twente)

Farah MF, Hasni MJS, Abbas AK (2018) Mobile-banking adoption: empirical evidence from the banking sector in Pakistan. Int J Bank Mark 36(7):1386–1413. https://doi.org/10.1108/IJBM-10-2017-0215

Firmansyah EA, Masairol Masri MA, Mohd Hairul AB (2023) Factors affecting fintech adoption: a systematic literature review. FinTech 2(1):21–33. https://doi.org/10.3390/fintech2010002

Fishbein M, Ajzen I (1975) Belief, attitude, intention and behavior: an introduction to theory and research. Reading: Addison-Wesley

Fotheringham D, Wiles MA (2023) The effect of implementing chatbot customer service on stock returns: an event study analysis. J Acad Market Sci 51:802–822. https://doi.org/10.1007/s11747-022-00841-2

Gansser OA, Reich CS (2021) A new acceptance model for artificial intelligence with extensions to UTAUT2: an empirical study in three segments of application. Technol Soc 65:101535. https://doi.org/10.1016/j.techsoc.2021.101535

Glikson E, Woolley AW (2020) Human trust in artificial intelligence: review of empirical research. Acad Manag Ann 14(2):627–660. https://doi.org/10.5465/annals.2018.0057

Gkinko L, Elbanna A (2023) Designing trust: the formation of employees’ trust in conversational AI in the digital workplace. J Bus Res 158:113707. https://doi.org/10.1016/j.jbusres.2023.113707

Guiso L (2012) Trust and insurance markets. Econ Notes 41(1‐2):1–26. https://doi.org/10.1111/j.1468-0300.2012.00239.x

Guiso L (2021) Trust and insurance. Geneva Pap Risk Insur Issues Pr 46:509–512. https://doi.org/10.1057/s41288-021-00241-7

Hair JF, Risher JJ, Sarstedt M, Ringle CM (2019) When to use and how to report the results of PLS-SEM. Eur Bus Rev 31(1):2–24. https://doi.org/10.1108/EBR-11-2018-0203

Han J, Conti D (2020) The use of UTAUT and post acceptance models to investigate the attitude towards a telepresence robot in an educational setting. Robotics 9(2):34. https://doi.org/10.3390/robotics9020034

Huang WS, Chang CT, Sia WY (2019) An empirical study on the consumers’ willingness to insure online. Pol J Manag Stud 20(1):202–212. https://doi.org/10.17512/pjms.2019.20.1.18

Hussain M, Mollik AT, Johns R, Rahman MS (2019) M-payment adoption for bottom of pyramid segment: an empirical investigation. Int J Bank Mark 37(1):362–381. https://doi.org/10.1108/IJBM-01-2018-0013

Jangjarat K, Kraiwanit T, Limna P, Sonsuphap R (2023) Public perceptions towards ChatGPT as the Robo-Assistant. Online J Commun Med Technol 13(3):e202338. https://doi.org/10.30935/ojcmt/13366

Jansom A, Srisangkhajorn T, Limarunothai W (2022) How chatbot e-services motivate communication credibility and lead to customer satisfaction: The perspective of Thai consumers in the apparel retailing context. Innov Mark 18(3):13. https://doi.org/10.21511/im.18(3)2022.02

Joshi H (2021) Perception and adoption of customer service chatbots among millennials: an empirical validation in the Indian context. In: WEBIST, pp 197–208. https://doi.org/10.5220/0010718400003058

Kasilingam DL (2020) Understanding the attitude and intention to use smartphone chatbots for shopping. Technol Soc 62:101280. https://doi.org/10.1016/j.techsoc.2020.101280

Kazachenok OP, Stankevich GV, Chubaeva NN et al. (2023) Economic and legal approaches to the humanization of FinTech in the economy of artificial intelligence through the integration of blockchain into ESG Finance. Humanit Soc Sci Commun 10:167. https://doi.org/10.1057/s41599-023-01652-8

Kelley KH, Fontanetta LM, Heintzman M, Pereira N (2018) Artificial intelligence: implications for social inflation and insurance. Risk Manag Ins Rev 21(3):373–387. https://doi.org/10.1111/rmir.12111

Khan IU, Hameed Z, Khan SU (2017) Understanding online banking adoption in a developing country: UTAUT2 with cultural moderators. J Glob Inf Manag 25(1):43–65. https://doi.org/10.4018/JGIM.2017010103

Kim DJ, Ferrin DL, Rao HR (2008) A trust-based consumer decision-making model in electronic commerce: the role of trust, perceived risk, and their antecedents. Decis Support Syst 44(2):544–564

Kock N, Hadaya P (2018) Minimum sample size estimation in PLS‐SEM: the inverse square root and gamma‐exponential methods. Inf Syst J 28(1):227–261. https://doi.org/10.1111/isj.12131

Koetter F, Blohm M, Drawehn J, Kochanowski M, Goetzer J, Graziotin D, Wagner S (2019) Conversational agents for insurance companies: from theory to practice. In Agents and Artificial Intelligence: 11th International Conference, ICAART 2019, Prague, Czech Republic, February 19–21, 2019, Revised Selected Papers 11, Springer International Publishing, pp 338–362

Kovacs O (2018) The dark corners of industry 4.0–Grounding economic governance 2.0. Technol Soc 55:140–145. https://doi.org/10.1016/j.techsoc.2018.07.009

Kuberkar S, Singhal TK (2020) Factors influencing adoption intention of AI powered chatbot for public transport services within a smart city. Int J Emerg Technol Learn 11(3):948–958

Lanfranchi D, Grassi L (2022) Examining insurance companies’ use of technology for innovation. Geneva Pap Risk Insur Issues Pr 47(3):520–537. https://doi.org/10.1057/s41288-021-00258-y

Legowo N (2018) Evaluation of Policy Processing System Using Extended UTAUT Method at General Insurance Company. In: 2018 International Conference on Information Management and Technology (ICIMTech), pp 172–177. https://doi.org/10.1109/ICIMTech.2018.8528113

Liengaard BD, Sharma PN, Hult GTM, Jensen MB, Sarstedt M, Hair JF, Ringle CM (2021) Prediction: coveted yet forsaken? Introducing a cross‐validated predictive ability test in partial least squares path modelling. Decis Sci 52(2):362–392. https://doi.org/10.1111/deci.12445

Liu H, Zhao H (2022) Upgrading models, evolutionary mechanisms and vertical cases of service-oriented manufacturing in SVC leading enterprises: product-development and service-innovation for industry 4.0. Humanit Soc Sci Commun 9:387. https://doi.org/10.1057/s41599-022-01409-9

Marano P, Li S (2023) Regulating Robo-advisors in insurance distribution: lessons from the insurance distribution directive and the AI act. Risks 11(1):12. https://doi.org/10.3390/risks11010012

Marcon É, Le Dain MA, Frank AG (2022) Designing business models for Industry 4.0 technologies provision: changes in business dimensions through digital transformation. Technol Forecast Soc 185:122078. https://doi.org/10.1016/j.techfore.2022.122078

McLean G, Osei-Frimpong K, Barhorst J (2021) Alexa, do voice assistants influence consumer brand engagement?–Examining the role of AI powered voice assistants in influencing consumer brand engagement. J Bus Res 124:312–328. https://doi.org/10.1016/j.jbusres.2020.11.045

Minder B, Wolf P, Baldauf M (2023) Voice assistants in private households: a conceptual framework for future research in an interdisciplinary field. Humanit Soc Sci Commun 10:173. https://doi.org/10.1057/s41599-023-01615-z

Morgan R, Hunt D (1994) The commitment-trust theory of relationship marketing. J Mark 58(3):20–38. https://doi.org/10.2307/1252308

Moriuchi E (2019) Okay, Google!: an empirical study on voice assistants on consumer engagement and loyalty. Psychol Mark 36(5):489–501. https://doi.org/10.1002/mar.21192

Mostafa RB, Kasamani T (2022) Antecedents and consequences of chatbot initial trust. Eur J Mark 56(6):1748–1771. https://doi.org/10.1108/EJM-02-2020-0084

Niittuinperä J (2018) Policyholder Behaviour and Management Actions. In: IAA Risk Book. https://www.actuaries.org/iaa/IAA/Publications/Overview/iaa_riskbook/IAA/Publications/iaa_risk_book.aspx?CCODE=RBEBhkey=1bb7bce0-2c43-41df-9956-98d68ca45ce4. Accessed 14 Aug 2023

Njegomir V, Bojanić T (2021) Disruptive technologies in the operation of insurance industry. Teh Vjesn 28(5):1797–1805. https://doi.org/10.17559/TV-20200922132555

Nuryyev G, Wang Y-P, Achyldurdyyeva J, Jaw B-S, Yeh Y-S, Lin H-T, Wu L-F (2020) Blockchain technology adoption behavior and sustainability of the business in tourism and hospitality SMEs: an empirical study. Sustainability 12(3):1256. https://doi.org/10.3390/su12031256

Nuruzzaman M, Hussain OK (2020) IntelliBot: a dialogue-based chatbot for the insurance industry. Knowl-Based Syst 196:105810. https://doi.org/10.1016/j.knosys.2020.105810

Oktariyana MD, Ariyanto D, Ratnadi NMD (2019) Implementation of UTAUT and DM models for success assessment of cashless system. Res J Fin Acc 10(12):127–137. https://doi.org/10.7176/RJFA/10-12-16

Ostrowska M (2021) Does new technology put an end to policyholder risk declaration? The impact of digitalization on insurance relationships. Geneva Pap Risk Insur Issues Pr 46:573–592. https://doi.org/10.1057/s41288-020-00191-6

Palos-Sánchez P, Saura JR, Ayestaran R (2021) An exploratory approach to the adoption process of bitcoin by business executives. Mathematics 9(4):355. https://doi.org/10.3390/math9040355

Pawlik VP (2022) Design Matters! How Visual Gendered Anthropomorphic Design Cues Moderate the Determinants of the Behavioral Intention Towards Using Chatbots. In: Følstad A, Araujo T, Papadopoulos S, Law EL-C, Luger E, Goodwin M, Brandtzaeg PB (eds) Chatbot Research and Design. CONVERSATIONS 2021. Lecture Notes in Computer Science, vol 13171. Springer, Cham, pp 192–208. https://doi.org/10.1007/978-3-030-94890-0_12

Pillai R, Sivathanu B (2020) Adoption of AI-based chatbots for hospitality and tourism. Int J Contemp Hospitality Manag 32(10):3199–3226. https://doi.org/10.1108/IJCHM-04-2020-0259

Pitardi V, Marriott HR (2021) Alexa, she’s not human but… Unveiling the drivers of consumers’ trust in voice‐based artificial intelligence. Psychol Mark 38(4):626–642. https://doi.org/10.1002/mar.21457

Prom Tep S, Arcand M, Rajaobelina L, Ricard L (2021) From what is promised to what is experienced with intelligent bots. In: Advances in Information and Communication: Proceedings of the 2021 Future of Information and Communication Conference (FICC), vol 1. Springer, Cham, pp 560–565

Qader G, Junaid M, Abbas Q, Mubarik MS (2022) Industry 4.0 enables supply chain resilience and supply chain performance. Technol Forecast Soc 185:122026. https://doi.org/10.1016/j.techfore.2022.122026

Rawat S, Rawat A, Kumar D, Sabitha AS (2021) Application of machine learning and data visualization techniques for decision support in the insurance sector. Int J Inf Manag Data Insights 1(2):100012. https://doi.org/10.1016/j.jjimei.2021.100012

Riikkinen M, Saarijärvi H, Sarlin P, Lähteenmäki I (2018) Using artificial intelligence to create value in insurance. Int J Bank Mark 36(6):1145–1168. https://doi.org/10.1108/IJBM-01-2017-0015

Rodríguez-Cardona D, Werth O, Schönborn S, Breitner MH (2019) A mixed methods analysis of the adoption and diffusion of chatbot technology in the German insurance sector. In: Twenty-fifth Americas Conference on Information Systems (AMCIS 2019), Cancún, Mexico

Sánchez-Torres JA, Canada F-JA, Sandoval AV, Alzate J-AS (2018) E-banking in Colombia: factors favouring its acceptance, online trust and government support. Int J Bank Mark 36(1):170–183. https://doi.org/10.1108/IJBM-10-2016-0145

Sheel A, Nath V (2020) Blockchain technology adoption in the supply chain (UTAUT2 with risk)–evidence from Indian supply chains. Int J Appl Manag Sci 12(4):324–346. https://doi.org/10.1504/IJAMS.2020.110344

Sosa I, Montes Ó (2022) Understanding the InsurTech dynamics in the transformation of the insurance sector. Risk Manag Ins Rev 25(1):35–68. https://doi.org/10.1111/rmir.12203

Standaert W, Muylle S (2022) Framework for open insurance strategy: insights from a European study. Geneva Pap Risk Insur Issues Pract 47(3):643–668. https://doi.org/10.1057/s41288-022-00264-8

Stoeckli E, Dremel C, Uebernickel F (2018) Exploring characteristics and transformational capabilities of InsurTech innovations to understand insurance value creation in a digital world. Electron Mark 28:287–305. https://doi.org/10.1007/s12525-018-0304-7

Tamvada JP, Narula S, Audretsch D, Puppala H, Kumar A (2022) Adopting new technology is a distant dream? The risks of implementing Industry 4.0 in emerging economy SMEs. Technol Forecast Soc 185:122088. https://doi.org/10.1016/j.techfore.2022.122088

The National Alliance (2023) Six steps of the underwriting process. https://www.scic.com/six-steps-of-the-underwriting-process. Accessed 14 Aug 2023

Van Pinxteren MME, Pluymaekers M, Lemmink JGAM (2020) Human-like communication in conversational agents: a literature review and research agenda. J Serv Manag 31:203–225. https://doi.org/10.1108/JOSM-06-2019-0175

Vassilakopoulou P, Haug A, Salvesen LMO, Pappas I (2023) Developing human/AI interactions for chat-based customer services: lessons learned from the Norwegian government. Eur J Inf Syst 32(1):10–22. 0960085X.2022.2096490

Venkatesh V, Davis FD (2000) A Theoretical Extension of the Technology Acceptance Model: Four Longitudinal Field Studies. Manage Sci 46:186–204. https://doi.org/10.1287/mnsc.46.2.186.11926

Venkatesh V, Morris MG, Davis GB, Davis FD (2003) User acceptance of information technology: toward a unified view. MIS Q 27(3):425–478. https://doi.org/10.2307/30036540

Venkatesh V, Thong JYL, Xu X (2012) Consumer acceptance and use of information technology: extending the unified theory of acceptance and use of technology. MIS Q 36(1):157–178. https://doi.org/10.2307/41410412

Veríssimo JMC (2016) Enablers and restrictors of mobile banking app use: a fuzzy set qualitative comparative analysis (fsQCA). J Bus Res 69(11):5456–5460. https://doi.org/10.1016/j.jbusres.2016.04.155

Virmani N, Sharma S, Kumar A, Luthra S (2023) Adoption of industry 4.0 evidence in emerging economy: behavioral reasoning theory perspective. Technol Forecast Soc 188:122317. https://doi.org/10.1016/j.techfore.2023.122317

Warsame MH, Ireri EM (2018) Moderation effect on mobile microfinance services in Kenya: an extended UTAUT model. J Behav Exp Finance 18:67–75. https://doi.org/10.1016/j.jbef.2018.01.008

Warwick K, Shah H (2016) Can machines think? A report on Turing test experiments at the Royal Society. J Exp Theor Artif Intell 28(6):989–1007. https://doi.org/10.1080/0952813X.2015.1055826

Xing X, Song M, Duan Y, Mou J (2022) Effects of different service failure types and recovery strategies on the consumer response mechanism of chatbots. Technol Soc 70:102049. https://doi.org/10.1016/j.techsoc.2022.102049

Yoon C, Choi B (2020) Role of situational dependence in the use of self-service technology. Sustainability 12(11):4653. https://doi.org/10.3390/su12114653

Zarifis A, Cheng X (2022) A model of trust in Fintech and trust in Insurtech: how artificial intelligence and the context influence it. J Behav Exp Finance 36:100739. https://doi.org/10.1016/j.jbef.2022.100739

Funding

This research has benefited from the Research Project of the Spanish Ministry of Science and Technology “Sostenibilidad, digitalización e innovación: nuevos retos en el derecho del seguro” (“Sustainability, digitalization and innovation: new challenges in insurance law”) (PID2020-117169GB-I00).

Author information

Contributions

Conceptualization: JdA-S and JG-A; methodology: JdA-S; validation: JG-A; formal analysis: JdA-S; investigation: JdA-S and JG-A; resources: JdA-S; data curation: JG-A; writing—original draft preparation: JdA-S; writing—review and editing: JG-A; visualization: JG-A; supervision: JG-A; project administration: JG-A; funding acquisition: JdA-S. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

The study adhered to the following principles: (1) all participants received comprehensive written information about the study and its procedure; (2) no data pertaining to the subjects’ health, either directly or indirectly, were gathered, hence the Declaration of Helsinki was not specifically mentioned during subject notification; (3) the confidentiality of the collected data was upheld at all stages; and (4) the research received a favorable evaluation from the Ethics Committee of the researchers’ institution (CEIPSA-2022-PR-0005).

Informed consent

Informed consent was obtained from all respondents.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

de Andrés-Sánchez, J., Gené-Albesa, J. Not with the bot! The relevance of trust to explain the acceptance of chatbots by insurance customers. Humanit Soc Sci Commun 11, 110 (2024). https://doi.org/10.1057/s41599-024-02621-5

DOI: https://doi.org/10.1057/s41599-024-02621-5