Abstract

Using generalizability (G-) theory and qualitative feedback analysis, this study evaluated the role of ChatGPT in enhancing English-as-a-foreign-language (EFL) writing assessments in classroom settings. The primary objectives were to assess the reliability of the holistic scores assigned to EFL essays by ChatGPT versions 3.5 and 4 compared to college English teachers and to evaluate the relevance of the qualitative feedback provided by these versions of ChatGPT. The study analyzed 30 College English Test Band 4 (CET-4) essays written by non-English majors at a university in Beijing, China. ChatGPT versions 3.5 and 4, along with four college English teachers, served as raters. They scored the essays holistically following the CET-4 scoring rubric and also provided qualitative feedback on the language, content, and organization of these essays. The G-theory analysis revealed that the scoring reliability of ChatGPT3.5 was consistently lower than that of the teacher raters; however, ChatGPT4 demonstrated consistently higher reliability coefficients than the teachers. The qualitative feedback analysis indicated that both ChatGPT3.5 and 4 consistently provided more relevant feedback on the EFL essays than the teacher raters. Furthermore, ChatGPT versions 3.5 and 4 were equally relevant across the language, content, and organization aspects of the essays, whereas the teacher raters generally focused more on language but provided less relevant feedback on content and organization. Consequently, ChatGPT versions 3.5 and 4 could be useful AI tools for enhancing EFL writing assessments in classroom settings. The implications of adopting ChatGPT for classroom writing assessments are discussed.

Introduction

The integration of artificial intelligence (AI) tools into educational settings has revolutionized teaching, learning, and assessment experiences (Guo & Wang, 2023; Link et al., 2022; Shermis & Hamner, 2013; Su et al., 2023). AI’s role in enhancing language education is increasingly recognized, especially in English-as-a-foreign-language (EFL) education where it introduces novel approaches to teaching, learning, and assessment (Ansari et al., 2023; Barrot, 2023; Farazouli et al., 2023; Guo et al., 2022). Among these AI tools, ChatGPT, a language model developed by OpenAI, has gained significant attention for its potential in the domain of EFL writing assessments (Kasneci et al., 2023; Lu et al., 2024; Praphan & Praphan, 2023; Song & Song, 2023; Su et al., 2023).

Assessing EFL students’ English writing tasks is inherently complex and time-consuming, demanding substantial expertise and resources (Huang, 2012; Huang & Foote, 2010; Liu & Huang, 2020; Zhao & Huang, 2020). EFL educators and researchers continually seek innovative solutions to streamline the assessment process while upholding the integrity and quality of educational outcomes (Lu et al., 2024; Song & Song, 2023; Zhang et al., 2023a, 2023b). Recent studies have highlighted the capabilities of AI tools like ChatGPT in EFL writing classroom assessments, showcasing their potential to automate assessment processes, provide instant feedback, and personalize learning experiences (Lu et al., 2024; Su et al., 2023; Yan, 2023; Zou & Huang, 2023a, 2023b). For example, Su et al. (2023) demonstrated ChatGPT’s ability to assist EFL students with outlining, revising, editing, and proofreading tasks in argumentative writing contexts. Similarly, Lu et al. (2024) reported that ChatGPT effectively complements teacher assessments of undergraduate EFL students’ academic writing tasks.

Despite these advancements, a significant gap remains in understanding the specific applications and effectiveness of ChatGPT in scoring EFL essays and providing qualitative feedback on the language, content, and organization aspects of EFL essays. As the capabilities of AI models evolve, it is essential to compare the performance of various iterations, such as ChatGPT3.5 and 4, to determine their role in real classroom settings. This comparison can reveal improvements and identify any persistent challenges, providing valuable insights for educators and developers. Moreover, the urgency to explore this area stemmed from the growing demand for effective and efficient assessment methods that can accommodate the diverse needs of EFL students and alleviate the increasing workload on EFL teachers (Gibbs & Simpson, 2004; Hu & Zhang, 2014; Wu et al., 2022).

Therefore, this study aimed to address this gap by investigating how ChatGPT versions 3.5 and 4 can assist classroom teachers in assessing EFL essays. By focusing on ChatGPT’s capabilities in reliably scoring EFL essays and providing relevant qualitative feedback, this study aimed to offer insights into leveraging ChatGPT to enhance assessment practices in EFL writing classrooms.

Literature review

The nature of EFL writing assessment

EFL writing assessments encompass both scoring and providing qualitative feedback on EFL essays (Baker, 2016; Lei, 2017; Li & Huang, 2022; Liu & Huang, 2020; Niu & Zhang, 2018; Wu et al., 2022; Yao et al., 2020; Yu & Hu, 2017; Zhao & Huang, 2020). The scoring of EFL essays typically involves one or more human raters, who are invited to score the essays either holistically or analytically (Huang, 2008, 2012; Lee et al., 2002; Li, 2012; Zhang, 2009). However, the scores that human raters assign to EFL essays may fluctuate due to various factors such as raters’ linguistic and assessment experience, their adherence to scoring criteria, and even their tolerance for errors (Barkaoui, 2010; Lee & Kantor, 2007; Guo, 2006; Roberts & Cimasko, 2008). Therefore, raters are a potential source of measurement error that can affect score reliability (Huang & Whipple, 2023).

Reliability is considered essential for sound EFL writing assessment (Barkaoui, 2010; Huang, 2008, 2012; Huang & Whipple, 2023; Lee et al., 2002; Li & Huang, 2022; Lin, 2014). The Standards for Educational and Psychological Testing (AERA et al., 2014) define reliability as the consistency of measurement outcomes across instances of the measurement procedure. Inter-rater reliability assesses the agreement among different raters scoring the same responses (AERA et al., 2014; Lee et al., 2002). Numerous studies have examined the inter-rater reliability of EFL writing assessments and have suggested implications for improving EFL writing assessment practices (e.g., Barkaoui, 2010; Lee et al., 2002).

Furthermore, EFL writing assessments require raters to provide qualitative feedback on the language, content, and organization of each essay (Lee et al., 2023; Guo & Wang, 2023). This feedback should be relevant and effective so that students can revise and edit their essays accordingly (Li & Huang, 2022; Lu et al., 2024; Wu et al., 2022). Relevant and effective feedback is defined as feedback that is perceived as clear, understandable, and supportive, enabling students to take specific, constructive actions toward improvement in their EFL writing (Koltovskaia, 2020; Link et al., 2022).

Advantages of using generalizability theory in EFL writing assessment

In the field of EFL writing assessment, ensuring the reliability and validity of test scores is paramount. Generalizability (G-) theory, developed by Cronbach et al. (1972), offers a robust framework that surpasses classical test theory (CTT) by providing a comprehensive approach to evaluating and improving the reliability of assessments. Using G-theory in EFL writing assessment has several advantages.

One of the primary advantages of G-theory is its capacity to disentangle the multiple sources of variance that affect test scores (Brennan, 2001; Li & Huang, 2022; Liu & Huang, 2020). Unlike CTT, which provides a single reliability coefficient, G-theory allows for the examination of different facets of measurement error, including rater variability, task variability, and the interaction between persons and tasks (Brennan, 2001; Shavelson & Webb, 1991). This detailed analysis is crucial in EFL writing assessment, where inconsistencies can arise from various factors, such as subjective rater judgments and the diverse nature of writing tasks.

Conducting a G-study within the framework of G-theory enables researchers to quantify the extent to which each source of variance contributes to the total score variance (Gao & Brennan, 2001; Shavelson & Webb, 1991). For instance, G-theory can determine how much of the variance is attributable to the examinee’s true ability, the inconsistencies in scoring among different raters, and the specific tasks chosen for the assessment.
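For the simplest case, in which persons (p) are fully crossed with raters (r), the decomposition described above can be sketched as follows. The notation follows common G-theory usage (e.g., Brennan, 2001) and is illustrative only; a design that also included a task facet would add further terms.

```latex
X_{pr} = \mu + \nu_p + \nu_r + \nu_{pr,e}
\qquad\Longrightarrow\qquad
\sigma^2(X_{pr}) = \sigma^2_p + \sigma^2_r + \sigma^2_{pr,e}
```

Here $\sigma^2_p$ reflects differences in examinees' ability, $\sigma^2_r$ reflects differences in rater leniency or stringency, and $\sigma^2_{pr,e}$ is the residual, in which the person-by-rater interaction is confounded with unmeasured error. The share of total variance attributed to a component is that component divided by $\sigma^2(X_{pr})$.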

Moreover, G-theory facilitates the optimization of assessment procedures through decision (D-) studies. These studies identify the most significant sources of measurement error and suggest practical ways to enhance score reliability (Brennan, 2001; Shavelson & Webb, 1991). For example, increasing the number of raters or tasks can reduce the impact of individual rater biases and task-specific difficulties, leading to more reliable and valid scores. This flexibility in optimizing assessment conditions is a significant advantage in the dynamic field of EFL writing assessment (Liu & Huang, 2020; Zhao & Huang, 2020).
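The effect of adding raters can be made concrete for a single-facet design in which persons are crossed with raters. The following is a standard textbook formulation (Brennan, 2001; Shavelson & Webb, 1991) rather than anything specific to this study: $\sigma^2_p$, $\sigma^2_r$, and $\sigma^2_{pr,e}$ denote the person, rater, and residual variance components, and $n'_r$ is the number of raters assumed in the D-study.

```latex
\sigma^2_\delta = \frac{\sigma^2_{pr,e}}{n'_r}, \qquad
\sigma^2_\Delta = \frac{\sigma^2_r + \sigma^2_{pr,e}}{n'_r}

E\rho^2 = \frac{\sigma^2_p}{\sigma^2_p + \sigma^2_\delta}, \qquad
\Phi = \frac{\sigma^2_p}{\sigma^2_p + \sigma^2_\Delta}
```

Because $n'_r$ appears only in the denominators of the two error variances, both the G-coefficient ($E\rho^2$, relative decisions) and the Phi-coefficient ($\Phi$, absolute decisions) rise as raters are added, and $\Phi$ never exceeds $E\rho^2$ because absolute error also includes the rater main effect.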

Finally, the use of G-theory also contributes to the fairness of EFL writing assessment. By systematically analyzing and addressing sources of measurement error, G-theory helps ensure that test scores are not unduly influenced by extraneous factors unrelated to the examinee’s true writing proficiency (Liu & Huang, 2020; Wu et al., 2022). This focus on fairness is particularly important in high-stakes testing environments, where the consequences of assessment outcomes can significantly impact learners’ educational and professional opportunities.

In conclusion, G-theory provides a detailed framework for analyzing and improving the reliability, validity, and fairness of test scores. Its ability to disentangle various sources of measurement error, coupled with the flexibility to optimize assessment conditions, makes G-theory a powerful tool for educators and test developers. Consequently, it was employed as the quantitative methodological framework for this study.

The benefits of ChatGPT in EFL writing classrooms

The integration of ChatGPT in EFL writing classrooms has received significant attention in recent educational research. This section critically examines the benefits of ChatGPT in enhancing writing abilities and assessment processes within EFL settings, drawing on findings from ten recent studies.

Research focusing on specific writing tasks suggests that ChatGPT can significantly enhance the writing process. Guo et al. (2024) examined the effectiveness of ChatGPT in assisting five Chinese EFL undergraduate students with an argumentative writing task. The results indicated that ChatGPT provided essential scaffolding for their argumentative writing process. Similarly, Su et al. (2023) explored the possibility of using ChatGPT to assist EFL students’ argumentative writing in classroom settings and found that ChatGPT can assist students with outlining, revising, editing, and proofreading tasks in writing classrooms.

A notable area of inquiry compares the performance of ChatGPT with that of human instructors. Guo and Wang (2023) compared the feedback that ChatGPT and five EFL teachers provided on 50 Chinese undergraduate students’ argumentative essays. Specifically, ChatGPT and the teacher participants gave feedback on the language, content, and organization aspects of these essays. A comparison of the amount and type of feedback indicated that ChatGPT performed significantly better than the human teachers. In a related comparison, Zhang et al. (2023a) examined the perceived effectiveness of ChatGPT in contrast to web-based learning in terms of developing 15 Chinese EFL students’ knowledge of logical fallacy and motivation in English writing. The results showed that ChatGPT was slightly less effective in developing students’ knowledge but more effective in improving their learning motivation than the website. Zhang et al. (2023b) further examined the impact of ChatGPT training on 15 Chinese EFL students’ logical fallacies in EFL writing and reported positive findings.

Lu et al. (2024) examined if ChatGPT can effectively complement classroom teachers’ assessment of 46 Chinese undergraduate students’ English academic writing tasks. Their findings suggested that ChatGPT can effectively augment traditional teacher assessments, providing a robust tool that supports and enhances evaluative processes.

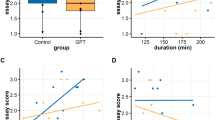

The impact of ChatGPT on student motivation and writing skills has also been a focus. Song and Song (2023) evaluated the impact of ChatGPT on 50 Chinese EFL students’ writing motivation and writing skills. An experimental group (ChatGPT-assisted) and a control group (with no-ChatGPT assistance) were compared in both writing motivation and skills. The findings suggested that the experimental group demonstrated higher writing motivation and better writing skills.

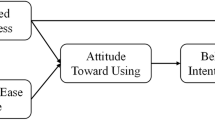

Studies exploring ChatGPT acceptance and utilization across different educational backgrounds offer insights into its broader applicability. Zou and Huang (2023a) examined the impact of ChatGPT on the English writing of 215 Chinese doctoral students from diverse educational backgrounds. The results revealed that ChatGPT can be a useful tool for their English writing at pre-, during-, and post-writing stages. Furthermore, Zou and Huang (2023b) investigated 242 Chinese doctoral students’ acceptance of ChatGPT in their EFL writing. Again, the results were positive, suggesting that ChatGPT can be an effective AI tool in EFL writing.

Despite the positive outcomes, there are concerns regarding the integration of ChatGPT in educational settings. Yan (2023) examined the impact of ChatGPT on Chinese EFL students in an English writing practicum. The findings suggested the potential application of using ChatGPT in EFL writing classrooms, although participants expressed their concerns about academic integrity. This suggests the need for careful consideration and guidelines when deploying ChatGPT in education.

To sum up, these studies suggest that ChatGPT can play a transformative role in EFL writing classrooms. It not only enhances the efficiency and effectiveness of writing assessments but also improves student motivation and engagement with writing tasks. However, the adoption of such technologies must be navigated with attention to ethical considerations and the potential need for new pedagogical strategies to optimize AI integration in educational contexts.

Research gaps and questions

While existing studies have highlighted the potential benefits of ChatGPT in enhancing EFL writing processes and complementing traditional EFL writing assessments, there is a lack of comprehensive research comparing the performance of different versions of ChatGPT in real classroom settings. Specifically, the relevance and reliability of ChatGPT versions 3.5 and 4 in scoring EFL essays and providing qualitative feedback have not been thoroughly investigated. Additionally, there is a need to understand how these AI tools measure up against human instructors in terms of consistency, depth, and relevance of feedback.

To address these gaps, this study examined the role of ChatGPT in enhancing EFL writing assessments in Chinese college English classrooms. Specifically, it addressed the following two research questions: (a) What is the reliability of the holistic scores assigned to EFL essays by ChatGPT versions 3.5 and 4 compared to college English teachers? (b) What is the relevance of the qualitative feedback provided on EFL essays by ChatGPT versions 3.5 and 4 compared to college English teachers?

Methods

The participants

The participants included 30 non-English major undergraduate students and four college English teachers from a university in Beijing, China. The 30 students differed slightly in their English writing proficiency as measured by their final examination in the previous semester. Among them, 21 were female and nine were male. They were invited to complete the writing task described below in a normal class session within the 30 minutes required by the CET-4 administration. The four English teachers had been teaching college English writing at that university for over ten years; further, all of them had served as national CET-4 writing raters.

Like the four college English teachers, ChatGPT versions 3.5 and 4 served as raters of the writing samples in this study. They were used as powerful tools in EFL writing assessments because the integration of ChatGPT versions in EFL writing assessments has revolutionized the approach to evaluating and enhancing student writing by offering comprehensive support across various stages of the writing process, such as outlining, drafting, revising, editing, and proofreading (e.g., Guo et al., 2024; Su et al., 2023; Zou & Huang, 2023a; Zou & Huang, 2023b). Studies have demonstrated that ChatGPT could provide detailed and relevant feedback, often surpassing traditional methods in depth and clarity, thereby aiding students in identifying and correcting errors more effectively (e.g., Guo et al., 2024; Su et al., 2023). Furthermore, its adaptability allows ChatGPT to assist students from diverse educational backgrounds, providing tailored assistance that addresses individual needs (Zou & Huang, 2023a; Zou & Huang, 2023b).

The writing task

The writing task for this study was an authentic one (see below) previously utilized in the national College English Test Band 4 (CET-4) writing examination in China.

Suppose you have two options upon graduation: One is to find a job somewhere and the other to go to a graduate school. You are to make a choice between the two. Write an essay to explain the reasons for your choice. You should write at least 120 words but no more than 180 words.

Data collection procedures

Since EFL writing assessments involve quantitative scoring as well as providing qualitative feedback, this study compared both aspects of EFL writing assessments between ChatGPT versions 3.5 and 4 and four college English teachers. Data collection was performed in two major steps.

In the first step, the four college English teachers were invited to assess the 30 essays written by the 30 non-English major undergraduate students. They first scored each essay holistically at their own pace following the CET-4 assessment criteria (see Supplementary Information) and then provided qualitative feedback on the language, content, and organization aspects of these essays. Since they had previously served as the national CET-4 writing raters, only a brief online training was provided to these teacher raters before they started to assess the 30 EFL essays. The training included (a) review of CET-4 scoring criteria, (b) assessment of three selected writing samples, and (c) discussion of assessment outcomes.

In the second step, training was provided to both ChatGPT versions. The training focused on refining their scoring of the same three writing samples in line with the CET-4 criteria to enhance their assessment algorithms and ensure accurate scoring. As with the human raters, each ChatGPT version received this training before commencing its assessment of the 30 EFL essays. Data collection with each ChatGPT version (3.5 and 4) was completed four times separately, at three-day intervals. Following the same procedures as the human raters, ChatGPT first scored the essays holistically following the CET-4 assessment criteria (see Supplementary Information) and then provided qualitative feedback on the language, content, and organization aspects of these essays.

Data analysis methods

Quantitative analysis was performed within the framework of G-theory using GENOVA (Crick & Brennan, 1983), a computer program designed for estimating variance components and their standard errors in balanced designs. Specifically, person-by-ChatGPT 3.5 rater (p x rG3.5), person-by-ChatGPT4 rater (p x rG4), and person-by-teacher rater (p x rt) random effects G-studies were executed, followed by person-by-ChatGPT 3.5 rater (P x RG3.5), person-by-ChatGPT4 rater (P x RG4), and person-by-teacher rater (P x Rt) random effects D-studies, with G- and Phi-coefficients calculated. Because the aim of this study was to compare the score variability and reliability among ChatGPT3.5, 4, and teacher raters, only the facet of rater was considered in the G-theory analyses.
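To illustrate what a person-by-rater random effects G-study estimates, the following is a minimal sketch of the usual ANOVA (expected mean squares) estimators for a balanced, fully crossed design. It is not GENOVA's code and makes no claim about GENOVA's internals; the function name `g_study_p_by_r` and the toy score matrix are hypothetical and are not data from this study. Setting negative estimates to zero is one common convention, assumed here.

```python
def g_study_p_by_r(scores):
    """Estimate variance components for a balanced p x r random effects design.

    scores: list of rows, one row per person (essay), one column per rater.
    Returns (var_p, var_r, var_res) using ANOVA expected mean squares.
    """
    n_p = len(scores)
    n_r = len(scores[0])
    grand = sum(sum(row) for row in scores) / (n_p * n_r)
    person_means = [sum(row) / n_r for row in scores]
    rater_means = [sum(row[j] for row in scores) / n_p for j in range(n_r)]

    # Sums of squares for persons, raters, and the residual (pr,e).
    ss_p = n_r * sum((m - grand) ** 2 for m in person_means)
    ss_r = n_p * sum((m - grand) ** 2 for m in rater_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_res = ss_total - ss_p - ss_r

    # Mean squares.
    ms_p = ss_p / (n_p - 1)
    ms_r = ss_r / (n_r - 1)
    ms_res = ss_res / ((n_p - 1) * (n_r - 1))

    # Solve the expected-mean-square equations; clamp negatives to zero
    # (an assumed convention, not something stated in the source).
    var_res = max(ms_res, 0.0)
    var_p = max((ms_p - ms_res) / n_r, 0.0)
    var_r = max((ms_r - ms_res) / n_p, 0.0)
    return var_p, var_r, var_res


# Hypothetical toy data: 4 essays scored by 3 raters (NOT study data).
toy_scores = [
    [8, 9, 8],
    [11, 12, 12],
    [5, 6, 5],
    [13, 13, 14],
]
var_p, var_r, var_res = g_study_p_by_r(toy_scores)
total = var_p + var_r + var_res
for label, value in (("person", var_p), ("rater", var_r), ("residual", var_res)):
    print(f"{label}: {value:.3f} ({100 * value / total:.1f}% of total)")
```

In the study's design, the score matrix for each rater type would have 30 rows (essays) and four columns (four teachers, or four scoring rounds of one ChatGPT version).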

Qualitative feedback provided by each type of rater (i.e., ChatGPT versions 3.5, 4, and teachers) was analyzed at two levels. First, the researchers independently color-coded, sorted, and organized the feedback, and the research team then collaboratively grouped it according to themes (Creswell & Creswell, 2023). A three-level coding scheme was used to code the qualitative feedback on language, content, and organization. Second, the grouped qualitative feedback was quantified in terms of the number of effective feedback statements for descriptive statistical analysis. Finally, major themes within the domains of language, content, and organization were identified.

Results

Holistic score reliability by ChatGPT versions 3.5, 4, and teacher raters

Within the framework of G-theory, G-studies are performed to examine the score variability, i.e., to obtain the percentage of total score variance each variance component could explain; the obtained variance components can be further analyzed through D-studies to examine the score reliability (Brennan, 2001). Therefore, results for both the G- and D-studies are presented in Tables 1 and 2, respectively.

Table 1 details the outcomes from the three G-studies conducted for ChatGPT versions 3.5, 4, and teacher raters, respectively. In all three cases, the object of measurement (i.e., person, p), representing the students’ writing abilities, emerged as the most significant source of variance. Specifically, for ChatGPT version 3.5 scoring, the variance component associated with person accounted for 64.91% of the total variance; for ChatGPT version 4 scoring, the same variance component explained 87.63% of the total variance; for teachers’ scoring, the person variance component explained 76.59% of the total variance. The person variance component is the desired variance because it is believed that students’ writing abilities vary from person to person (Brennan, 2001; Huang, 2008, 2012).

Furthermore, as shown in Table 1, for the ChatGPT version 3.5 scoring scenario, the residual variance component explained 32.88% of the total variance. This component includes variability due to the person-by-rater interaction and other unmeasured sources of error. A residual of over 32% suggests hidden facets, which were not considered in the design but might have affected the scoring of students’ essays (Brennan, 2001; Huang, 2008, 2012). For ChatGPT version 4 and teacher raters, the residual variance component explained 10.83% and 18.58% of the total variance, respectively.

Finally, as shown in Table 1, in all three cases, the rater variance component (i.e., rG3.5, rG4, and rt), reflecting the differences in scoring leniency or stringency among raters, was the least significant source of variance. It explained 2.21%, 1.54%, and 4.83% of the total variance for ChatGPT versions 3.5, 4, and teacher raters, respectively, suggesting that raters of all three types scored students’ writing tasks fairly consistently.

Results of the three D-studies show that the reliability of holistic scores increased from ChatGPT version 3.5 to teachers to ChatGPT version 4 (see Table 2). Table 2 details the reliability measures for the three types of raters, using G-coefficients (for norm-referenced interpretations) and Phi-coefficients (for criterion-referenced interpretations). These coefficients were calculated for conditions ranging from one to ten raters per essay.

For the EFL essays, when assessed by a single rater, the G-coefficients for ChatGPT versions 3.5, 4, and teacher raters were 0.66, 0.89, and 0.80, respectively, with corresponding Phi-coefficients of 0.65, 0.88, and 0.77. When the number of raters increased to two, there was a notable improvement in reliability for all three types of raters. Specifically, G-coefficients rose to 0.80, 0.94, and 0.89 for ChatGPT versions 3.5, 4, and teacher raters, respectively, while Phi-coefficients increased to 0.79, 0.93, and 0.87, respectively.
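As a check on how these coefficients follow from the G-study results, the sketch below recomputes them from the variance percentages reported for Table 1, using the standard single-facet D-study formulas: relative error is the residual divided by the number of raters, and absolute error is the rater plus residual variance divided by the number of raters. The function name `d_study` is hypothetical. Percentages are used in place of raw variance components, which is harmless here because both coefficients are ratios and therefore unaffected by a common scale factor.

```python
# Percent of total variance (person, rater, residual) as reported for Table 1.
TABLE_1 = {
    "ChatGPT3.5": (64.91, 2.21, 32.88),
    "ChatGPT4": (87.63, 1.54, 10.83),
    "Teachers": (76.59, 4.83, 18.58),
}


def d_study(var_p, var_r, var_res, n_raters):
    """Return (G-coefficient, Phi-coefficient) for a p x R design."""
    rel_error = var_res / n_raters            # relative error variance
    abs_error = (var_r + var_res) / n_raters  # absolute error variance
    g_coef = var_p / (var_p + rel_error)
    phi_coef = var_p / (var_p + abs_error)
    return g_coef, phi_coef


for name, components in TABLE_1.items():
    for n in (1, 2):
        g_coef, phi_coef = d_study(*components, n)
        print(f"{name}, {n} rater(s): G = {g_coef:.2f}, Phi = {phi_coef:.2f}")
```

Rounded to two decimals, the output matches the one-rater and two-rater coefficients reported above for all three rater types. Calling `d_study` with larger values of `n_raters` extends the projection to the other conditions in Table 2.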

In comparison, ChatGPT version 3.5 scoring exhibited lower reliability coefficients than teacher raters; however, ChatGPT version 4 scoring achieved higher reliability coefficients than the teachers. These findings suggest that ChatGPT version 3.5 could be a good AI tool to enhance the scoring of EFL essays; it could be an effective aid to teachers’ scoring of EFL writing. Yet, ChatGPT version 4 could be an even better AI tool that could possibly replace teacher raters in scoring EFL compositions in classroom settings.

Relevance of qualitative feedback across ChatGPT versions 3.5, 4, and teacher raters

The qualitative feedback on the 30 EFL essays by ChatGPT versions 3.5, 4, and teacher raters was analyzed both quantitatively (i.e., the mean and standard deviation) and qualitatively (i.e., themes) in terms of its relevance. The results are presented in Tables 3 and 4, respectively.

Table 3 displays the descriptive statistics for the number of effective feedback statements given by ChatGPT versions 3.5, 4, and teacher raters. ChatGPT version 4 gave the largest number of effective feedback statements on language (mean = 201.25, SD = 4.46), content (mean = 103.5, SD = 3.68), and organization (mean = 106.5, SD = 3.98); ChatGPT version 3.5 gave the second-largest number on language (mean = 179.5, SD = 9.25), content (mean = 89.5, SD = 7.62), and organization (mean = 94.25, SD = 9.59); and teacher raters gave the smallest number on language (mean = 54.5, SD = 12.56), content (mean = 20.75, SD = 8.72), and organization (mean = 36.25, SD = 12.79).

Overall, the descriptive statistical results suggest that the number of effective feedback statements on language, content, and organization varied substantially among ChatGPT versions 3.5, 4, and teacher feedback givers. ChatGPT version 4 gave by far the most effective feedback statements, followed by ChatGPT version 3.5 and then the teacher feedback givers.
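One way to gauge the size of these differences is to express each ChatGPT mean as a multiple of the corresponding teacher mean. The short sketch below does this with the means reported above; the dictionary layout and the helper name `ratio_to_teachers` are illustrative choices, not part of the study's analysis.

```python
# Mean number of effective feedback statements, as reported for Table 3.
MEANS = {
    "ChatGPT4": {"language": 201.25, "content": 103.5, "organization": 106.5},
    "ChatGPT3.5": {"language": 179.5, "content": 89.5, "organization": 94.25},
    "Teachers": {"language": 54.5, "content": 20.75, "organization": 36.25},
}


def ratio_to_teachers(rater, domain):
    """Mean for `rater` divided by the teacher mean in the same domain."""
    return MEANS[rater][domain] / MEANS["Teachers"][domain]


for rater in ("ChatGPT4", "ChatGPT3.5"):
    for domain in ("language", "content", "organization"):
        ratio = ratio_to_teachers(rater, domain)
        print(f"{rater} vs. teachers, {domain}: {ratio:.1f}x")
```

By this measure, both ChatGPT versions produced between roughly two and a half and five times as many effective feedback statements as the teachers, with the largest gap in the content domain.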

To further assess and categorize the effective feedback on essays given by ChatGPT versions 3.5, 4, and teacher raters, it is crucial to identify and consolidate major themes within the domains of language, content, and organization (see Table 4). This approach allows for a clearer understanding of the areas needing improvement, as well as how feedback from each rater group contributes to these themes. The major findings are presented in the next section.

Language

The feedback across all three groups emphasizes several critical issues related to language use in essays, albeit with varying specifics: (a) grammar and syntax errors, (b) sentence structure and complexity, (c) word choice and vocabulary, and (d) spelling and typos.

First, all three rater groups noted significant concerns with grammatical errors, including incorrect verb tenses and article usage. These errors affect the clarity and readability of the essays. Second, concerns about sentence structure are universal across the feedback. ChatGPT3.5 specifically mentioned a lack of complexity, while ChatGPT4 and teacher raters pointed out problematic structures and incorrect expressions, indicating a fundamental issue with forming coherent and sophisticated sentences. Third, each rater group highlighted issues with word choice, including inappropriate or awkward phrasing and a lack of vocabulary variety. Teacher raters also noted the influence of thinking in another language (Chinese) on expression, which suggests a deeper, cross-linguistic challenge. Finally, both ChatGPT4 and teacher raters identified spelling errors and typos as a concern, indicating a need for more careful proofreading and attention to detail.

The following is the specific feedback given by ChatGPT3.5, ChatGPT4, and a teacher rater, respectively, on the language use of essay #23 (see below).

Essay #23

I am a university student who will graduate of my university after two years. May be most students who like me will leave school and will choose to take examinations for a graduate programme, but I will not do it like this.

I was often talk about the thing with my friends after came back. Coincidentally, we have a same answer which is go to work after graduation directly. I think and we all think that experience after to go to walk by you is better than you take two years to get a postgraduate by getting by under false pretences. But we don’t consider it will be what, if we keep the learning way on school until doctor.

My family ask me to take examinations for a graduate programme, but I don’t want to do it. So my choice is indeterminate.

“The essay suffers from numerous language errors, including grammar, syntax, and vocabulary choices, making it difficult to understand; many sentences are unclear or fragmented, such as ‘May be most students who like me will leave school will choose to take examinations for a graduate programme,’ which could be revised for clarity; and there are also issues with word choice and phrasing throughout the essay.” (Feedback given by ChatGPT3.5)

ChatGPT4 provided the following feedback on language use for the same essay: “The essay contains numerous language errors that affect clarity and comprehension. Issues include grammatical errors (‘graduate of my university,’ ‘May be most students who like me,’ ‘I was often talk’), misuse of words (‘go to walk by you,’ ‘getting by under false pretences’), and awkward phrasing (‘we have a same answer,’ ‘concider it will be what’). These errors significantly impede the reader’s understanding of your arguments. To improve, focus on verb tense consistency, proper preposition use, and clearer expression of ideas. Additionally, using simpler, more direct language can help avoid confusion.” (Feedback given by ChatGPT4)

“There are numerous language errors, most of which are serious grammatical mistakes, leaving essentially no correct sentences. ‘May be most students who like me will leave school will choose to take examinations for a graduate program’ uses ‘May be’ incorrectly, the relative clause ‘who like me’ has a verb error, and the main clause improperly uses two sets of verbs, ‘will leave’ and ‘will choose,’ consecutively. Instances such as ‘I was often talk about’ and ‘is go to work’ feature multiple verb errors in a single sentence.” (Feedback given by one teacher rater)

Content

In terms of content, the feedback revealed common themes of inadequacy in argument development and support: (a) lack of depth and specificity, (b) argument clarity and support, and (c) engagement and persuasiveness.

First, all three rater groups found the essays lacking in depth and specificity. There was a consistent call for more specific examples and personal experiences to strengthen arguments. Second, teacher raters’ feedback suggested that essays were often off-topic or had a central idea that was not clearly articulated, paralleling concerns from all three groups about vague arguments and a lack of evidence and support. Finally, ChatGPT4 specifically mentioned the absence of potential counterarguments and the overall unconvincing nature of the essays, pointing towards a need for more engaging and thoughtfully constructed arguments.

The following is the specific feedback given by ChatGPT3.5, ChatGPT4, and a teacher rater, respectively, on the content aspect of essay #23 (see above).

“The content lacks coherence and depth, with unclear reasoning and incomplete thoughts. The author mentions discussions with friends and family but fails to provide clear reasons for their own choice or discuss potential consequences. The essay also ends abruptly, leaving the reader with an incomplete understanding of the author’s perspective.” (Feedback given by ChatGPT3.5)

“The essay’s content is somewhat pertinent to the topic, but it lacks depth and specificity. The reasons for choosing to work immediately after graduation are not clearly articulated beyond a general preference for gaining experience. The mention of discussions with friends and family introduces potentially interesting perspectives, yet these are not explored in detail. To enhance your essay, consider including specific examples of how working immediately can offer valuable experiences or how it aligns with your personal or career goals.” (Feedback given by ChatGPT4)

“The central theme of the article is unclear; although it is divided into three paragraphs, the content is fragmented and disorganized.” (Feedback given by one teacher rater)

Organization

The organization of the essays was another area where the raters expressed significant concerns: (a) cohesion and flow, and (b) structural concerns.

First, feedback across all three rater groups pointed to a lack of coherence, with essays described as disjointed or barely coherent. This included specific mentions of missing transitions and unclear topic sentences, indicating a broader issue with connecting ideas logically and smoothly. Second, the rater groups shared an observation about illogical flow and unclear organization, with ChatGPT4 explicitly noting the failure to achieve coherence and a meaningful conclusion. This suggests that the essays struggled not only with the order of ideas but also with effectively concluding and reinforcing arguments.

The following is the specific feedback given by ChatGPT3.5, ChatGPT4, and one teacher rater, respectively, on the organization aspect of essay #23 (see above).

“The essay lacks a clear organizational structure, with ideas presented in a disorganized manner. Transitions between ideas are weak, making it difficult to follow the author’s train of thought. The essay jumps between different topics without establishing a clear progression of ideas, further contributing to the lack of coherence.” (Feedback given by ChatGPT3.5)

“The organization of the essay is challenging to follow. Ideas are presented in a somewhat disjointed manner, with the transition from personal reflections to discussions with friends and family feeling abrupt. There is also a lack of a clear conclusion; the essay ends on an ambiguous note (‘So my choise is indeterminate’), which leaves the reader unsure of your final decision or reasoning. Organizing your essay with a clear introduction, body paragraphs that each focus on a specific reason or example supporting your choice, and a definitive conclusion summarizing your stance would greatly improve coherence.” (Feedback given by ChatGPT4)

“The organizational structure is chaotic and lacks substance, without a conclusion. Argumentative essays must have a clear viewpoint.” (Feedback given by one teacher rater)

In sum, the feedback themes highlight pervasive issues in language use, content development, and organizational structure across the essays assessed by ChatGPT versions 3.5 and 4 and the teacher raters. This consolidated feedback emphasizes the need for focused instruction and practice in grammatical accuracy, vocabulary expansion, argument development, and essay structuring to enhance both the clarity and persuasive power of student writing. Addressing these areas with targeted strategies could significantly improve essay quality and effectiveness.

Discussion

The findings from this study contribute to the ongoing research on the efficacy of ChatGPT in educational settings, particularly in the assessment of EFL writing. This study investigated the reliability of writing scores and the relevance of qualitative feedback provided by ChatGPT versions 3.5 and 4, aligning with and expanding upon existing literature on AI-driven educational assessments.

Consistent with the broader trend identified in studies such as Guo and Wang (2023) and Zhang et al. (2023b), our findings delineate a clear evolution in ChatGPT capabilities. While ChatGPT3.5 showed lower reliability coefficients similar to earlier implementations noted by Guo et al. (2024), ChatGPT4 demonstrated higher reliability, surpassing that of human raters. This suggests an enhancement in AI sophistication and reliability over time, reinforcing Lu et al.’s (2024) findings that ChatGPT can complement and enhance traditional assessment methods.

In line with Su et al. (2023) and Yan (2023), who highlighted ChatGPT’s capacity to assist significantly in the writing process, our study found that both versions of ChatGPT provided more effective and comprehensive feedback compared to human teachers. This supports the narrative from Zou and Huang (2023a, 2023b), who reported ChatGPT’s positive impact on student writing across multiple stages. Human raters tended to focus more on language aspects, while ChatGPT provided balanced feedback across language, content, and organization, echoing concerns raised by Song and Song (2023) about areas for improvement in human feedback.

Our findings underscore the potential for AI, particularly advanced iterations like ChatGPT4, to play a critical role in augmenting the assessment processes within EFL classrooms. This is particularly relevant given that Zhang et al. (2023a) highlighted the need for tools that can motivate and enhance learning outcomes. By automating initial assessments and routine feedback, AI tools can allow educators to focus on more personalized teaching strategies, a possibility supported by the broader application of AI in educational settings discussed by Lu et al. (2024).

Conclusion

This study provides valuable insights into the potential of ChatGPT to enhance EFL writing assessments, with a particular focus on the reliability and relevance of ChatGPT versions 3.5 and 4. The results highlight that ChatGPT4 exhibits higher reliability coefficients compared to human raters, suggesting that advanced AI technology can offer more consistent and objective assessment outcomes. Additionally, both versions of ChatGPT were found to provide more comprehensive feedback across multiple dimensions of writing than human raters.

The implications of these findings are significant for language education. Improved reliability in scoring can lead to fairer and more equitable evaluations of student work, while comprehensive feedback can help students identify and correct a broader range of issues in their writing. For educators, adopting ChatGPT can complement instructional strategies with AI insights, potentially enriching the learning experience and fostering deeper understanding.

However, this study has several limitations. The sample size was limited to 30 essays from non-English major students at a single university, restricting the generalizability of the findings. Additionally, the use of human raters from one institution could introduce bias, and focusing on only two versions of ChatGPT may not capture the variability present in other AI models or configurations.

Future research should consider broader demographic studies and explore the impacts of various AI models in educational settings. Despite these limitations, the study underscores the transformative potential of AI in educational assessments, suggesting that tools like ChatGPT could significantly enhance the efficiency and effectiveness of evaluating English writing tasks. By improving reliability, offering comprehensive feedback, reducing teacher workload, facilitating personalized learning, and preparing for future technologies, ChatGPT stands to play a crucial role in modernizing and advancing language education.

Data availability

The participants did not provide written informed consent to share their personal feedback data publicly. They are the owners of their feedback on students’ writing samples. However, they did provide written informed consent to share their feedback with individuals on reasonable request. Therefore, the datasets generated during the current study are available from the corresponding author ONLY on reasonable request.

References

American Educational Research Association (AERA), American Psychological Association (APA), and National Council on Measurement in Education (NCME) (2014) Standards for educational and psychological testing. American Psychological Association, Washington, DC

Ansari AN, Ahmad S, Bhutta SM (2023) Mapping the global evidence around the use of ChatGPT in higher education: A systematic scoping review. Educ Inf Technol. https://doi.org/10.1007/s10639-023-12223-4

Baker KM (2016) Peer review as a strategy for improving students’ writing process. Act Learn High Educ 17(3):170–192

Barkaoui K (2010) Variability in ESL essay rating processes: The role of the rating scale and rater experience. Lang Assess Q 7(1):54–74

Barrot JS (2023) Using ChatGPT for second language writing: Pitfalls and potentials. Assess Writ 57:100745. https://doi.org/10.1016/j.asw.2023.100745

Black P, Wiliam D (1998) Assessment and classroom learning. Assess Educ: Princ, Policy Pract 5(1):7–74

Brennan RL (2001) Statistics for social science and public policy: Generalizability theory. Springer-Verlag, New York

Carless D, Salter D, Yang M, Lam J (2011) Developing sustainable feedback practices. Stud High Educ 36(4):395–407

Creswell JW, Creswell JD (2023) Research design: Qualitative, quantitative, and mixed methods approaches (6th Ed.). Thousand Oaks, CA: SAGE Publications

Crick JE, Brennan RL (1983) GENOVA: A general purpose analysis of variance system. Version 2.1. Iowa City, IA: American College Testing Program

Cronbach LJ, Gleser GC, Nanda H, Rajaratnam N (1972) The dependability of behavioral measurements: Theory of generalizability for scores and profiles. Wiley, New York

Farazouli A, Cerratto-Pargman T, Bolander-Laksov K, McGrath C (2023) Hello GPT! Goodbye home examination? An exploratory study of AI chatbots impact on university teachers’ assessment practices. Assess Eval High Educ, 1–13. https://doi.org/10.1080/02602938.2023.2241676

Gao X, Brennan RL (2001) Variability of estimated variance components and related statistics in a performance assessment. Appl Meas Educ 14(2):191–203

Gibbs G, Simpson C (2004) Conditions under which assessment supports students’ learning. Learn Teach High Educ 1:18–19

Guo A (2006) The problems and the reform of college English test in China. Sino-US Engl Teach 3(9):14–16

Guo K, Li Y, Li Y, Chu SKW (2024) Understanding EFL students’ chatbot-assisted argumentative writing: An activity theory perspective. Educ Inf Technol 29(1):1–20. https://doi.org/10.1007/s10639-023-12230-5

Guo K, Wang D (2023) To resist it or to embrace it? Examining ChatGPT’s potential to support teacher feedback in EFL writing. Educ Inf Technol. https://doi.org/10.1007/s10639-023-12146-0

Guo K, Wang J, Chu SKW (2022) Using chatbots to scaffold EFL students’ argumentative writing. Assess Writ 54:100666. https://doi.org/10.1016/j.asw.2022.100666

Hattie J, Timperley H (2007) The power of feedback. Rev Educ Res 77(1):81–112

Hu C, Zhang Y (2014) A study of college English writing feedback system based on M-learning. Mod Educ Technol 7:71–78. https://doi.org/10.3969/j.issn.1009-8097.2014.07.010

Huang J (2008) How accurate are ESL students’ holistic writing scores on large-scale assessments? – A generalizability theory approach. Assess Writ 13(3):201–218

Huang J (2012) Using generalizability theory to examine the accuracy and validity of large-scale ESL writing. Assess Writ 17(3):123–139

Huang J, Foote C (2010) Grading between the lines: What really impacts professors’ holistic evaluation of ESL graduate student writing. Lang Assess Q 7(3):219–233

Huang J, Whipple BP (2023) Rater variability and reliability of constructed response questions in New York state high-stakes tests of English language arts and mathematics: Implications for educational assessment policy. Hum Soc Sci Commun, 1–9. https://doi.org/10.1057/s41599-023-02385-4

Kasneci E, Seßler K, Küchemann S, Bannert M, Dementieva D, Fischer F, Kasneci G (2023) ChatGPT for good? On opportunities and challenges of large language models for education. Learn Individ Differ 103:102274

Koltovskaia S (2020) Student engagement with automated written corrective feedback (AWCF) provided by Grammarly: A multiple case study. Assess Writ 44:100450. https://doi.org/10.1016/j.asw.2020.100450

Lee Y, Kantor R (2007) Evaluating prototype tasks and alternative rating schemes for a new ESL writing test through G-theory. Int J Test 7(4):353–385

Lee Y, Kantor R, Mollaun P (2002) Score dependability of the writing and speaking sections of new TOEFL. Paper presented at the Annual Meeting of National Council on Measurement in Education

Lee I, Zhang JH, Zhang LJ (2023) Teachers helping EFL students improve their writing through written feedback: The case of native and non-native English-speaking teachers’ beliefs. Front Educ. https://doi.org/10.3389/feduc.2021.633654

Lei Z (2017) Salience of student written feedback by peer-revision in EFL writing class. Engl Lang Teach 10(2):151–157

Li H (2012) Effects of rater-scale interaction on EFL essay rating outcomes and processes. Unpublished doctoral dissertation, Zhejiang, China: Zhejiang University

Li J, Huang J (2022) The impact of essay organization and overall quality on the holistic scoring of EFL writing: Perspectives from classroom English teachers and national writing raters. Assess Writ 51:100604

Lin CK (2014) Treating either ratings or raters as a random facet in performance-based language assessments: Does it matter? CaMLA Work Pap, 1:1–15

Link S, Mehrzad M, Rahimi M (2022) Impact of automated writing evaluation on teacher feedback, student revision, and writing improvement. Comput Assist Lang Learn 35(4):605–634. https://doi.org/10.1080/09588221.2020.1743323

Liu Y, Huang J (2020) The quality assurance of a national English writing assessment: Policy implications for quality improvement. Stud Educ Eval 67:100941

Lu Q, Yao Y, Xiao L, Yuan M, Wang J, Zhu X (2024) Can ChatGPT effectively complement teacher assessment of undergraduate students’ academic writing? Assess Eval High Educ, 1–18. https://doi.org/10.1080/02602938.2024.2301722

Niu R, Zhang R (2018) A case study of focus, strategy and efficacy of an L2 writing teacher’s written feedback. J PLA Univ Foreign Lang 41(3):91–99

Praphan PW, Praphan K (2023) AI technologies in the ESL/EFL writing classroom: The villain or the champion. J Second Lang Writ 62:101072. https://doi.org/10.1016/j.jslw.2023.101072

Roberts F, Cimasko T (2008) Evaluating ESL: Making sense of university professors’ responses to second language writing. J Second Lang Writ 17:125–143

Shavelson RJ, Webb NM (1991) Generalizability theory: A primer. Newbury Park, CA: Sage

Shermis MD, Hamner B (2013) Contrasting state-of-the-art automated scoring of essays: Analysis. Assess Educ: Princ, Policy Pract 20(1):131–148

Song C, Song Y (2023) Enhancing academic writing skills and motivation: Assessing the efficacy of ChatGPT in AI-assisted language learning for EFL students. Front Psychol 14:1260843. https://doi.org/10.3389/fpsyg.2023.1260843

Su Y, Lin Y, Lai C (2023) Collaborating with ChatGPT in argumentative writing classrooms. Assess Writ 57:100752. https://doi.org/10.1016/j.asw.2023.100752

Wu W, Huang J, Han C, Zhang J (2022) Evaluating peer feedback as a reliable and valid complementary aid to teacher feedback in EFL writing classrooms: A feedback giver perspective. Stud Educ Eval 73:101140

Yan D (2023) Impact of ChatGPT on learners in a L2 writing practicum: An exploratory investigation. Educ Inf Technol 28(11):13943–13967. https://doi.org/10.1007/s10639-023-11742-4

Yao Y, Guo NS, Li C, McCampbell D (2020) How university EFL writers’ beliefs in writing ability impact their perceptions of peer assessment: Perspectives from implicit theories of intelligence. Assess Eval High Educ, 1–17. https://doi.org/10.1080/02602938.2020.1750559

Yu S, Hu G (2017) Understanding university students’ peer feedback practices in EFL writing: Insights from a case study. Assess Writ 33:25–35

Zhang R, Zou D, Cheng G (2023a) Chatbot-based learning of logical fallacies in EFL writing: Perceived effectiveness in improving target knowledge and learner motivation. Interact Learn Environ, 1–18. https://doi.org/10.1080/10494820.2023.2220374

Zhang J (2009) Exploring rating process and rater belief: Seeking the internal account for rater variability. Unpublished doctoral dissertation, Guangdong, China: Guangdong University of Foreign Studies

Zhang R, Zou D, Cheng G (2023b) Chatbot-based training on logical fallacy in EFL argumentative writing. Innov Lang Learn Teach 17(5):932–945. https://doi.org/10.1080/17501229.2023.2197417

Zhao C, Huang J (2020) The impact of the scoring system of a large-scale standardized EFL writing assessment on its score variability and reliability: Implications for assessment policy makers. Stud Educ Eval 67:100911

Zou M, Huang L (2023a) The impact of ChatGPT on L2 writing and expected responses: Voice from doctoral students. Educ Inf Technol. https://doi.org/10.1007/s10639-023-12397-x

Zou M, Huang L (2023b) To use or not to use? Understanding doctoral students’ acceptance of ChatGPT in writing through technology acceptance model. Front Psychol 14:1259531. https://doi.org/10.3389/fpsyg.2023.1259531

Acknowledgements

This study was supported by the Shanghai Educational Sciences Research Program (C2024114) granted to Junfei Li.

Author information

Contributions

Junfei Li: conceptualization, literature, methodology, data acquisition, data analysis, and writing. Jinyan Huang: conceptualization, literature, methodology, data analysis, writing, and editing for submission. Wenyn Wu: conceptualization, literature, methodology, data acquisition, data analysis, and revision. Patrick Whipple: conceptualization, methodology, data acquisition, and proofreading.

Ethics declarations

Competing interests

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Ethical approval

This study involving human participants was reviewed and approved by the Evidence-based Research Center for Educational Assessment (ERCEA) Research Ethical Review Board at Jiangsu University (Ethical Approval Number: ERCEA2402).

Informed consent

The participants provided their written informed consent to participate in this study.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Li, J., Huang, J., Wu, W. et al. Evaluating the role of ChatGPT in enhancing EFL writing assessments in classroom settings: A preliminary investigation. Humanit Soc Sci Commun 11, 1268 (2024). https://doi.org/10.1057/s41599-024-03755-2

DOI: https://doi.org/10.1057/s41599-024-03755-2
