Abstract

Information Systems (IS) play a pivotal role in enhancing operational efficiency across disciplines, including education and accreditation. This research presents an adaptable IS-based model designed to assess Program Learning Outcomes (PLO) in higher education. The model calculates PLOs by aggregating Course Learning Outcomes (CLOs) across all modules in any program. Each CLO is linked to Key Performance Indicators (KPIs) measured through student assessments. Developed using Microsoft Excel, the model automates PLO calculations upon entering individual student scores, thereby improving accuracy and efficiency. It also supports dynamic data visualizations through interactive charts and graphs to facilitate analysis and reporting. The model is designed to be flexible, allowing customization for various program structures and assessment methodologies. Applied to a business module at the University of Gulf, it demonstrated practical utility and contributed to accreditation processes by producing reliable outcome assessments. Initial validation included comparison with manual calculation methods and positive feedback from faculty and quality assurance teams, though further statistical analysis is planned. To ensure data security and integrity, the model incorporates password-protected sheets and data validation features within Excel. This IS-based tool not only streamlines outcome measurement but also aligns with accreditation standards by supporting transparent, data-driven decision-making.

Introduction

In the realm of higher education, accurately assessing Program Learning Outcomes (PLO) is critical for ensuring the quality and effectiveness of academic programs (Cavallone et al., 2020). PLOs encapsulate the essential knowledge, skills, and competencies that students are expected to acquire by the end of their educational journey (Kirillova and Au, 2020). However, the traditional methods of calculating and evaluating PLOs often prove to be cumbersome, time-consuming, and prone to inaccuracies. This study explores the transformative potential of Information Systems (IS) based models, particularly within the familiar environment of Excel, to streamline and enhance the PLO assessment process. By automating the aggregation of Course Learning Outcomes (CLO) and key performance indicators (KPIs) derived from student assessments, this IS-based approach aims to provide a more efficient and accurate measure of program quality, thereby facilitating informed decision-making and supporting accreditation processes in higher education institutions (Aithal and Aithal, 2019).

An information system (IS) is a field that comprises four elements: people, functions, operations, and technology. It is a formal discipline that many other disciplines now use as a model for measuring outcomes. Several programs have also adopted IS methods of measuring PLOs, because the applications and processes adopted by IS programs proved successful in achieving accreditation from national and international bodies in an easy and effective manner (Bredow et al., 2021). The IS discipline has been used both as a model and as a benchmark by other disciplines in higher education (Bredow et al., 2021).

Amid the COVID-19 pandemic, the applications of IS became more comprehensive in higher education (H.E.) systems, and universities that did not have strong virtual learning systems started to develop and encourage digital learning platforms (Tsang et al., 2021). Over the three years from 2020 to 2023, H.E. witnessed breakthrough advances in learning patterns, wider use of IS-based learning systems, and more sophisticated virtual learning options (Wekerle et al., 2022). Microsoft Teams, Google Classroom, Google Meet, and many other virtual platforms are now prevalent for imparting knowledge in H.E. (Aithal and Aithal, 2019).

Most universities in developed nations have their own learning management systems (LMS) for teaching and assessment. Blackboard is the most common and popular LMS for learning and teaching (L&T) and assessment (Castro and Tumibay, 2021). These applications focus on teaching, research, and assessment, but their role in using assessment results in the accreditation process is still limited (Sailer et al., 2021). In this research, we have developed an IS-based model to measure Program Learning Outcomes (PLOs) and demonstrate their relevance to the accreditation process in H.E.

In general, PLOs are clear statements of what learners will achieve, and be able to explain, after completing all learning processes (Schoepp, 2019). PLOs specify what learners will know or be able to do as a result of a learning activity (Le et al., 2019). They are usually expressed as knowledge, skills, or attitudes, that is, statements of what a student should know, understand, and/or be able to demonstrate after completing a process of learning (Boggs, 2019).

PLOs should be measurable and explain what learners are expected to produce in terms of knowledge, reasoning, and ability to analyze the concepts and experiment with the applications (Naqvi et al., 2019).

PLOs also show clearly how the learning of a particular module is achieved. The definition and importance of PLOs, and the methods for meeting them, are set out in the National Qualification Framework (NQF) (Abdullah, 2017). This framework is followed by most higher education systems to achieve Quality Assurance through PLOs (Premalatha, 2019).

This study describes the process, and shares the experience, of how public universities in the Gulf strive for successful QA and how they apply Program Learning Outcomes (PLOs) to meet QA objectives. Other universities may follow the same process, but this study is a case-based approach showing the accomplishment of PLOs in a particular learning environment. Accreditation is evidence of the quality of learning and teaching practices in higher education (H.E.) (Timmermans and Meyer, 2019). All educational institutions need to achieve accreditation to demonstrate the standard of their learning and teaching (L&T) practices (Aithal and Aithal, 2019).

Accreditation success depends on Quality Assurance (QA) at all levels of L&T and on achieving PLOs (Haug, 2003). For this study, we refer to the Business College of a Public University in the Gulf (GPU) and demonstrate how this institution has applied PLOs in QA to achieve accreditation. This research uses both qualitative and quantitative analysis, and data evaluation is derived mainly from the calculation of Key Performance Indicators (KPIs). The Statistics and Management Systems module from Business Management was taken as a sample. The researchers took the sample course specification and studied the procedure for justifying the course's PLOs, learning activities, and assessments (Alnaami et al., 2023).

All course specifications are developed using traditional strategies, and the results are reported through the corresponding course reports, which show the alignment of PLOs with assessments. KPI scores are compared with the benchmark to provide clear evidence of the usefulness of PLOs for QA in H.E. and, eventually, for accreditation by the Accreditation Board for Engineering and Technology (ABET) and the National Commission for Academic Accreditation and Assessment (NCAAA) for the Business College, GPU (Alnaami et al., 2023).

The Business College (BSC) at the Public University in the Gulf (GPU) followed this process and successfully completed ABET and NCAAA accreditation. Most academic standards in H.E. worldwide are concerned with quality assurance; in response to this concern, the globalization of higher education has led institutions to apply measurable PLOs and to deliver educational provision effectively and efficiently (Budiman et al., 2021).

Quality Assurance (QA) is a diverse and multifaceted concept that incorporates various systems, modes, and structures. Measuring PLOs is a new mission and responsibility for all national educational systems (Naim et al., 2021). Nonetheless, regardless of the number of measures used, there is still considerable evidence that conventional quality assurance mechanisms such as evaluations, accreditations, and audits have had more organizational and structural effects than effects on teaching and learning activities (Wong and Chan, 2022). One may assume that if PLOs are viewed as part of, or even integrated with, existing QA methods, they may be seen as tools and measures tied more closely to the need for organizational and administrative control than to the improvement of teaching and learning. It is therefore important for researchers to examine the role of academic QA in H.E. (Cavallone et al., 2020).

Academic Quality Assurance (QA) ensures that teaching, learning outcomes, and academic standards in higher education are consistently met and improved. In the context of this research, QA is directly linked to the measurement and achievement of Program Learning Outcomes (PLOs) (Budiman et al., 2021). The study emphasizes that a robust QA framework implemented through the Excel-based IS model helps institutions monitor PLOs effectively, align them with educational goals, and support continuous improvement. This approach not only strengthens curriculum design and assessment practices but also provides clear evidence for accreditation, aligning with the core objective of enhancing academic quality through measurable outcomes (Alnaami et al., 2023). Table 1 illustrates the characteristics and benefits of learning outcomes (LOs) as developed through academic QA in higher education programs.

QA in learning and academics encompasses all functions related to the delivery of higher education across the various levels of an institution. According to Malik et al. (2024), the Business College (BSC) at GPU has successfully fulfilled these requirements. Table 2 outlines the alignment of Academic Quality Assurance requirements with BSC at GPU.

BSC at GPU has a committee, the Academic Development and Quality Committee (ADQC), which ensures the completion and maintenance of academic QA activities. This committee applied the IS-based model to measure PLOs automatically. The study presents this Excel-based Information System (IS) model, designed to automate the assessment of Program Learning Outcomes (PLOs) in higher education.

CLOs & KPIs

Course Learning Outcomes (CLOs), aligned with PLOs, are assessed using student performance data. Key Performance Indicators (KPIs) derived from assessments (exams, projects, etc.) serve as the quantitative foundation for evaluation (Lasso, 2020).

Student assessments

Scores from various assessments are input into the Excel model to calculate CLO achievements, which are then aggregated into PLO scores (Le et al., 2019).

Automation and accuracy

The model automates data processing, reducing manual effort and minimizing errors, thus enhancing accuracy and efficiency.

Data visualization

Built-in charts and dashboards help visualize outcomes, enabling quick interpretation and decision-making by educators and quality units.

Accreditation support

The model provides reliable evidence of outcome achievement, supporting accreditation efforts by aligning with standards like NCAAA and ABET (Begum et al., 2024).

Research limitations and implications

The first limitation is scalability: because the IS-based model is built in Excel, it may face challenges in handling very large datasets or complex calculations, which could limit its use in larger institutions or programs. The second is adaptability: the model may need customization to fit different educational contexts, curricula, and assessment methods. Lastly, data quality is a concern, as the accuracy of PLO calculations depends heavily on the quality of student assessment data; inconsistent or poor-quality data can lead to inaccurate PLO measurements.

This study presents an Excel-based Information System (IS) model for automating Program Learning Outcomes (PLO) assessment in higher education. By aggregating Course Learning Outcomes (CLOs) and key performance indicators (KPIs) from student assessments, the model streamlines and enhances the accuracy of PLO measurement. It employs data visualization for clear reporting, supporting informed decision-making and accreditation. Findings show improved efficiency, though limitations include scalability and reliance on data quality. This research offers a practical, original solution for quality assurance and accreditation through IS-based automation.

The competitive advantage of this study lies in its innovative application of an Information Systems (IS) based model within Microsoft Excel to automate and enhance the assessment of Program Learning Outcomes (PLO) in higher education institutions.

Efficiency and accuracy

The model automates the calculation and aggregation of Course Learning Outcomes (CLO) and key performance indicators (KPIs), drastically reducing the time and effort required for manual data processing. This leads to quicker turnaround times for generating assessment results. Automated calculations minimize human error, ensuring more accurate and reliable assessment of PLOs, which is crucial for maintaining program quality and integrity.

Accessibility and usability

Utilizing Microsoft Excel, a widely accessible and familiar tool, makes the model easy to implement without the need for specialized software or extensive training. This lowers the barriers to adoption and allows institutions of varying sizes and resources to benefit from the model. The integration of data visualization techniques within Excel provides clear and intuitive presentations of assessment results, aiding in better understanding and communication among stakeholders.

Cost-effectiveness

Compared to specialized educational assessment software, the Excel-based model offers a cost-effective solution that leverages existing resources. This is particularly advantageous for institutions with limited budgets. By streamlining the assessment process, the model reduces the need for extensive manual labor, leading to long-term savings in operational costs (Pratolo et al., 2020).

Enhanced decision-making and accreditation support

The accurate and timely assessment data provided by the model supports informed decision-making regarding curriculum development, teaching strategies, and resource allocation. The model generates reliable and easily interpretable data that can be readily used in accreditation processes, providing concrete evidence of program quality and compliance with accreditation standards.

Scalability and customization potential

While the current model is designed within Excel, it can be adapted and scaled to fit different educational contexts and assessment methods. Customizations can be made to tailor the model to specific institutional needs, enhancing its versatility.

By addressing the critical need for efficient, accurate, and accessible PLO assessment, this study positions itself as a valuable contribution to the field of educational quality assurance, offering practical solutions that can be widely adopted and easily implemented.

Literature review

The existing literature underscores the growing relevance of Information Systems (IS)-based tools in enhancing program assessment in higher education, emphasizing the role of data analytics and automation in improving the quality and timeliness of outcome assessments (Biggs and Tang, 2014). However, few of these models provide granular technical descriptions or critical evaluations of their scalability and integration challenges (Naim et al., 2019). For instance, while the University of Texas employed an LMS-integrated assessment tool, it required institutional licenses and technical staff for deployment, limiting accessibility (Castro and Tumibay, 2021). In contrast, Stanford's data analytics model was sophisticated but lacked ease of use for non-technical faculty (Alahmari et al., 2023).

Unlike these systems, the current study proposes a universally accessible Excel-based model that requires no coding or IT infrastructure. This is in line with Naim et al. (2024b), who argued for simpler, user-friendly QA tools to enable mass adoption. Moreover, unlike LMS-dependent systems, our model runs independently and offline, which addresses the data privacy and resource constraints common in many Gulf-based and developing institutions.

Despite its simplicity, the model incorporates data validation and user-level protection to preserve data integrity. This study differentiates itself by emphasizing low-cost scalability, minimal learning curves, and high adaptability across disciplines. Table 3 compares the core features of models reviewed for the current study.

This synthesis indicates a growing demand for customizable, cost-effective tools that can be deployed across varying institutional capacities. While many prior studies establish the utility of IS-based assessment systems, few have focused on widespread usability in environments with limited technical infrastructure. This research fills that gap, offering a practical, replicable, and resource-friendly alternative for program learning outcome measurement and quality assurance (Biggs and Tang, 2014).

Similar studies from other universities have explored the application of automated and information systems-based models for educational assessment. For instance, the University of Texas at Austin implemented an automated assessment tool within their learning management system to streamline the evaluation of student learning outcomes, leveraging real-time data analytics to enhance program evaluation (Garnjost and Lawter, 2019). Similarly, at the University of Melbourne, researchers developed a web-based platform that integrates student performance data from various sources to provide a comprehensive analysis of learning outcomes, facilitating curriculum improvements and accreditation processes (Garnjost and Lawter, 2019). Another example is from Stanford University, where an advanced data analytics model was used to assess and visualize course effectiveness and student achievement across different programs, improving the feedback loop between instructors and students. These studies collectively highlight the growing trend of using technology to improve educational assessment and quality assurance in H.E. (Begum, et al., 2024).

Quality Assurance (QA) is an important concept in this paper; it was introduced, as a business process and through experimentation, in the Western world in the 1950s and early 1960s. "Quality" is not a quantitative term; it is vague and can only be expressed in relation to some benchmark or standard. The concept of QA is not new, and its many definitions have evolved from different perspectives. For research purposes, QA is defined here within a higher education environment that includes QA processes at the course, faculty, institutional, and national levels (Torres-Gordillo et al., 2020). Achieving QA benefits the accreditation of an H.E. system, which may result from a review of an education program or institution against certain QA standards. QA can thus provide recognition that a program or institution fulfills those standards (Haug, 2003).

This research paper is primarily based on concepts such as PLOs and QA, and on why these are important in the accreditation process. BSC at GPU has been accredited by two bodies, ABET and NCAAA. Program learning outcomes have no specific historical origin or date, but they are generally traced to the 19th and 20th centuries, to the work of Ivan Pavlov (1849–1936) and then to the American "behavioral school" of psychological thought developed by J. B. Watson (1878–1958) and B. F. Skinner (1904–1990) (Alahmari et al., 2023). Figure 1 shows the phases of growth in PLOs (Malik et al., 2024).

ABET is a globally recognized accreditation body that sets quality standards for academic programs, including engineering, business management, and information systems. It ensures that programs meet industry-relevant outcomes and educational benchmarks. Similarly, NCAAA is the national accreditation authority responsible for evaluating and assuring the quality of higher education institutions and programs in the Gulf region as shown in Fig. 2 (Begum et al., 2024). In alignment with the objective of this research, both ABET and NCAAA emphasize the importance of measuring Program Learning Outcomes (PLOs) as key evidence of academic quality and performance, which this study addresses through its Excel-based assessment model.

Roles of NCAAA in the Gulf (Naim et al., 2024a).

The system for accreditation and QA in the Gulf is designed to achieve a quality of HE equivalent to the highest international standards approved by international academic and professional communities (Ryan, 2023). GPU has successfully achieved institutional QA accreditation from NCAAA.

Measuring PLOs is not a new concept or approach in QA and accreditation; each institution has its own methods and processes for carrying out assessment (Goss, 2022). We introduce a simple and easy method of measuring PLOs through an IS-based working model. This approach can be used by any institution or program without prior knowledge of, or expertise in, technology. It allows faculty members and quality units to complete the assessment process in a very short time, less than 30 minutes, whereas assessing, analyzing, and preparing a report usually takes many days.

While this study demonstrates the effectiveness of an Information Systems (IS) based model within Microsoft Excel for automating Program Learning Outcomes (PLO) assessment, several research gaps warrant further exploration to enhance the model’s applicability and robustness.

This study introduces a novel Excel-based Information System (IS) model specifically designed to measure Program Learning Outcomes (PLOs) through the automation of assessment data linked to Course Learning Outcomes (CLOs). Unlike traditional methods or costly systems, this research presents a simple, customizable, and accessible tool that any academic program can use without specialized software or technical expertise. The model fills key research gaps by offering a structured yet adaptable framework for quality assurance and accreditation, particularly in resource-constrained environments. It enhances data accuracy, streamlines assessment processes, and provides instant visualization for decision-making. By applying this model in a real course context, the study demonstrates how automation and outcome alignment can be effectively achieved, laying the groundwork for future advancements in scalable, integrated, and standardized assessment systems.

By addressing these research gaps, future studies can build on the foundation laid by this research, enhancing the utility, reliability, and applicability of IS-based models for PLO assessment in higher education. This will ensure that such models not only meet current needs but also evolve to accommodate the changing landscape of higher education assessment and accreditation.

Research methodology

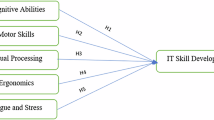

The research methodology for this study involves the development and implementation of an Information Systems (IS) based model within Microsoft Excel to automate the assessment of Program Learning Outcomes (PLO) in higher education institutions. The approach begins with the collection of student assessment data, encompassing various Course Learning Outcomes (CLO) and key performance indicators (KPIs). This data is integrated into the Excel-based model, which is designed to automatically aggregate and calculate PLOs. Excel is used as the primary tool to measure PLOs, and data visualization techniques are applied to present the results in a clear and interpretable manner. A practical, hands-on approach is used, in which the effectiveness of the model is tested through real-world application in an educational setting. The outcomes are then analyzed to evaluate the model's efficiency, accuracy, and potential limitations, providing insights into its utility for enhancing PLO assessment and supporting accreditation processes.

Model structure and technical framework

The Excel-based model consists of interconnected sheets representing individual Course Learning Outcomes (CLOs). Faculty members input anonymized student scores tied to specific assessments aligned with CLOs. These values are automatically calculated into Key Performance Indicators (KPIs), which are then mapped and aggregated into Program Learning Outcomes (PLOs).

Formula logic:

- Assessment scores are categorized as:
  - Satisfactory (≥ 80%) = 5
  - Developing (60–79%) = 3
  - Unsatisfactory (< 60%) = 1
- KPI calculation: (No. of Satisfactory × 5 + No. of Developing × 3 + No. of Unsatisfactory × 1) / Total students
- PLO calculation: average of the mapped CLO scores, computed with Excel's AVERAGEIFS() and logical functions.
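The scoring rules above can be re-expressed in Python to make the arithmetic explicit. This is a minimal sketch under stated assumptions, not the workbook's actual formulas: the CLO and PLO labels are hypothetical, and scores between 79% and 80% (which the published bands do not cover) are treated here as Developing. The `plo_scores` grouping plays the role that AVERAGEIFS() plays in the Excel sheets.

```python
from collections import Counter
from statistics import mean

# Points per performance level, as listed in the formula logic above.
LEVEL_POINTS = {"Satisfactory": 5, "Developing": 3, "Unsatisfactory": 1}


def categorize(percent: float) -> str:
    """Map an assessment percentage to a performance level.

    Bands: >= 80% Satisfactory, 60-79% Developing, < 60% Unsatisfactory.
    Assumption: fractional scores in [79, 80) count as Developing.
    """
    if percent >= 80:
        return "Satisfactory"
    if percent >= 60:
        return "Developing"
    return "Unsatisfactory"


def clo_kpi(percentages: list[float]) -> float:
    """KPI = (5*satisfactory + 3*developing + 1*unsatisfactory) / total students."""
    if not percentages:
        raise ValueError("no student scores entered")
    counts = Counter(categorize(p) for p in percentages)
    weighted = sum(LEVEL_POINTS[level] * n for level, n in counts.items())
    return weighted / len(percentages)


def plo_scores(clo_kpis: dict[str, float],
               clo_to_plo: dict[str, str]) -> dict[str, float]:
    """Average the KPIs of the CLOs mapped to each PLO (AVERAGEIFS-style grouping)."""
    grouped: dict[str, list[float]] = {}
    for clo, kpi in clo_kpis.items():
        grouped.setdefault(clo_to_plo[clo], []).append(kpi)
    return {plo: mean(values) for plo, values in grouped.items()}


# Hypothetical example: two CLOs mapped to one knowledge PLO.
kpis = {"CLO 1.1": clo_kpi([85, 72, 55, 90]), "CLO 1.2": clo_kpi([65, 81])}
print(plo_scores(kpis, {"CLO 1.1": "PLO K1", "CLO 1.2": "PLO K1"}))  # {'PLO K1': 3.75}
```

For instance, four hypothetical students scoring 85, 72, 55, and 90 on a CLO yield (5 + 3 + 1 + 5) / 4 = 3.5.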

Data security and validation:

- Each sheet is password-protected.
- Input fields have drop-down menus to prevent incorrect categorization.
- Conditional formatting flags incomplete or inconsistent data entries.
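The validation rules above can be mirrored outside Excel with a small checking function, for example to audit an exported sheet. The sketch is illustrative only: the row layout (`student_id`, `score`, `max_score`, optional `level`) and the specific checks are assumptions chosen to resemble what drop-down lists and conditional formatting would catch in the workbook.

```python
# Allowed entries, mirroring the drop-down categories (assumed labels).
ALLOWED_LEVELS = {"Satisfactory", "Developing", "Unsatisfactory"}


def validate_rows(rows: list[dict]) -> list[str]:
    """Return a list of problems, analogous to conditional-formatting flags."""
    problems = []
    seen_ids = set()
    for i, row in enumerate(rows, start=1):
        # Each student must have a unique, non-empty ID.
        sid = row.get("student_id")
        if not sid:
            problems.append(f"row {i}: missing student ID")
        elif sid in seen_ids:
            problems.append(f"row {i}: duplicate student ID {sid}")
        else:
            seen_ids.add(sid)

        # Scores must be present and within the assessment's range.
        score, max_score = row.get("score"), row.get("max_score")
        if score is None:
            problems.append(f"row {i}: missing score")
        elif max_score is not None and not 0 <= score <= max_score:
            problems.append(f"row {i}: score {score} outside 0-{max_score}")

        # A manually entered level must be one of the drop-down values.
        level = row.get("level")
        if level is not None and level not in ALLOWED_LEVELS:
            problems.append(f"row {i}: unknown level {level!r}")
    return problems


rows = [
    {"student_id": "S01", "score": 18, "max_score": 20},
    {"student_id": "S01", "score": 25, "max_score": 20},
    {"student_id": "", "score": None, "max_score": 20, "level": "Good"},
]
for problem in validate_rows(rows):
    print(problem)
```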

Justification for Excel

Microsoft Excel was selected for its:

- Accessibility: available to most faculty and institutions at no extra cost.
- Ease of use: requires no programming expertise.
- Customizability: templates can be easily adapted across programs and accreditation frameworks.

Compared to LMS-integrated or custom-built platforms, Excel offers a quicker deployment time and simpler training requirements, particularly beneficial in resource-constrained settings. To support accreditation through Quality Assurance (QA), this study introduces a simplified Excel-based Information System model for assessing Program Learning Outcomes (PLOs).

The model calculates PLOs by aggregating Key Performance Indicators (KPIs) derived from Course Learning Outcomes (CLOs), based on categorized student performance data. Each PLO is measured using a weighted formula, assigning scores as follows: Satisfactory = 5, Developing = 3, and Unsatisfactory = 1.

The Excel sheets are pre-formatted and protected, allowing faculty to input scores securely. These inputs are automatically processed to generate KPI values and corresponding PLO scores.

The model also includes built-in data visualizations (charts and dashboards) to compare performance across semesters or courses, aiding QA decisions. This process ensures both efficiency and consistency in PLO measurement, supporting evidence-based improvements in teaching and learning while aligning with accreditation requirements.
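A semester-to-semester comparison of PLO scores, of the kind the dashboards present, reduces to a per-PLO difference. The function name and PLO labels below are hypothetical, and rounding to two decimals is a presentation choice rather than part of the described model.

```python
def compare_semesters(first: dict[str, float],
                      second: dict[str, float]) -> dict[str, float]:
    """Change in each PLO score from the first to the second semester.

    Only PLOs present in both semesters are compared.
    """
    return {plo: round(second[plo] - first[plo], 2)
            for plo in first if plo in second}


# Hypothetical PLO scores for two semesters.
sem1 = {"PLO K1": 3.5, "PLO S1": 4.0}
sem2 = {"PLO K1": 3.9, "PLO S1": 3.8, "PLO C1": 4.2}
print(compare_semesters(sem1, sem2))  # {'PLO K1': 0.4, 'PLO S1': -0.2}
```

Positive values indicate improvement; a PLO assessed in only one semester (here `PLO C1`) is left out rather than compared against zero.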

The current research applies an Excel-based model as its Information System because of Excel's accessibility, low cost, and ease of use. Excel is widely available across institutions, including the Public University in the Gulf (GPU), and requires no additional software or programming expertise. This makes it particularly suitable for faculty with limited technical backgrounds and for institutions with constrained resources.

For large-scale implementation at GPU, the model is designed to be scalable and adaptable. Each academic department can use a standardized Excel template with customized CLOs and PLOs aligned to their courses. The protected sheets ensure data integrity, while automation and visualization features enable quality units to efficiently compare results across semesters and programs. Eventually, the model can be integrated into central QA reporting and accreditation documentation.

This study focuses on a course-level assessment using the Statistics and Management Systems module from the Business Management program. Program Learning Outcomes (PLOs) were defined according to the National Qualification Framework (NQF) as per NCAAA and ABET standards. Using a quantitative approach, Course Learning Outcomes (CLOs) were measured and translated into Key Performance Indicators (KPIs) to evaluate PLOs. While the findings support quality assurance and accreditation, this research is limited to course-level application only.

Results

The study’s IS-based Excel model automates the entire Program Learning Outcomes (PLO) assessment process through a series of integrated steps. It begins with collecting student assessment data (exams, quizzes, projects), predefined Course Learning Outcomes (CLOs), and relevant Key Performance Indicators (KPIs). This data is entered into a structured Excel template, where automated formulas aggregate scores, calculate averages, and map CLOs to their corresponding PLOs. Weighted calculations and normalization ensure accurate and comparable PLO scores across courses. The model also features data visualization tools such as charts and interactive dashboards to clearly present results for easy interpretation. Automated reports summarize findings and provide insights, highlighting strengths and areas needing improvement to support program development and accreditation. Overall, this streamlined approach enhances efficiency, accuracy, and decision-making in assessing learning outcomes.
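The text does not specify how normalization is performed. One plausible convention, assumed here, is to express each KPI as a percentage of the maximum attainable value of 5, so that courses can be compared on a common 0–100 scale. The function names and course labels are hypothetical.

```python
def normalize_kpi(kpi: float, scale_max: float = 5.0) -> float:
    """Express a KPI as a percentage of the maximum attainable value (assumed convention)."""
    if not 0 <= kpi <= scale_max:
        raise ValueError(f"KPI {kpi} outside 0-{scale_max}")
    return 100 * kpi / scale_max


def normalize_courses(kpis: dict[str, float]) -> dict[str, float]:
    """Normalize each course's KPI to a 0-100 scale, rounded to one decimal."""
    return {course: round(normalize_kpi(k), 1) for course, k in kpis.items()}


print(normalize_courses({"Course A": 3.74, "Course B": 4.5}))
```

A min–max alternative, (KPI − 1) / 4 × 100, would instead map the lowest attainable KPI of 1 to 0%; which convention suits a given QA report is a design choice.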

This research paper describes the PLOs of the sample course, Statistics and Management Systems; Table 4 is extracted from the NCAAA Course Specification (CS) form under the NQF. We calculated the PLOs for two semesters in the academic year 2022–2023 and compared the results to determine whether the PLOs for the course were successfully attained. Table 4 shows the three PLO domains: knowledge, competence, and skills. Course instructors identify the PLOs for the course and also provide information on the teaching methods and assessment methods used to measure them. If the KPI measurement achieves the target, QA is effectively achieved, and the result can be used as evidence towards NCAAA/ABET accreditation.

Table 4 illustrates constructive alignment (Biggs and Tang, 2014) for the current research, where PLOs are mapped to the domains defined by the National Qualification Framework (NQF) in the Middle East. In this pedagogical approach, Learning Outcomes (LOs) describe what students should be able to do in a course, Teaching and Learning Activities (TLAs) help students achieve those outcomes, and Assessment Tasks (ATs) measure how well the outcomes have been achieved. Constructive alignment ensures that all elements of the educational process work together in harmony for all modules in any program. Every course should have clearly defined LOs, which specify what students are expected to learn by the end of the course or program. These outcomes guide the design of teaching and learning activities, ensuring that students are taught and practice the skills and knowledge required for the course. Lastly, assessment methods such as exams, projects, and presentations are prepared to measure whether the intended LOs have been achieved. When all three elements (outcomes, teaching, and assessment) are aligned, students are more likely to achieve meaningful and measurable LOs.

Before measuring the KPI scores, we use the same PLOs elaborated under the NQF in the Course Specification and assign a percentage to each assessment for each PLO of the course across the three domains: knowledge, competence, and skills. This is termed the course articulation matrix for the course.
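The text does not state exactly how the articulation-matrix percentages combine per-assessment results; a weighted sum is one natural reading and is assumed in the sketch below. The function name, assessment names, and numbers are hypothetical.

```python
def plo_from_matrix(weights: dict[str, float],
                    assessment_kpis: dict[str, float]) -> float:
    """Weighted combination of per-assessment KPIs for one PLO.

    `weights` maps an assessment name to the percentage the articulation
    matrix allocates to it for this PLO; the percentages must total 100.
    """
    total = sum(weights.values())
    if abs(total - 100) > 1e-6:
        raise ValueError(f"assessment percentages sum to {total}, expected 100")
    return sum(assessment_kpis[name] * pct / 100 for name, pct in weights.items())


# Hypothetical matrix row: 60% midterm, 40% project for one PLO.
print(round(plo_from_matrix({"Midterm": 60, "Project": 40},
                            {"Midterm": 3.5, "Project": 4.5}), 2))  # 3.9
```

Checking that each PLO's percentages total 100 catches a common data-entry slip in the matrix before any KPI is computed.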

For all courses, the same method is used to calculate the KPI of each PLO. Students' marks for each assessment under a particular PLO are categorized into three levels: best scores as satisfactory, average scores as developing, and poor scores as unsatisfactory. The maximum value of the KPI is 5. The formula applied is:

KPI = (No. of Satisfactory × 5 + No. of Developing × 3 + No. of Unsatisfactory × 1) / Total students
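Writing n_S, n_D, and n_U for the numbers of satisfactory, developing, and unsatisfactory students and N for their total, the KPI formula and its range can be stated as follows. The bound explains why the maximum KPI is 5 (every student satisfactory) and the minimum is 1 (every student unsatisfactory).

```latex
\[
\mathrm{KPI} = \frac{5\,n_S + 3\,n_D + 1\,n_U}{N}, \qquad N = n_S + n_D + n_U
\]
\[
n_S + n_D + n_U \;\le\; 5\,n_S + 3\,n_D + n_U \;\le\; 5\,(n_S + n_D + n_U)
\;\Longrightarrow\; 1 \le \mathrm{KPI} \le 5
\]
```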

Table 5 shows a sample of the course articulation matrix for the Statistics and Management Systems course, in which the target and achieved PLO scores are measured. The KPI scores calculated here are later reported in the NCAAA course report form, which records the variation between the target (benchmark) KPI and the achieved KPI for each learning outcome as part of QA. The course articulation matrix is prepared in Excel sheets, where faculty members are given rights to enter the KPIs, teaching strategies, and assessment methods for each CLO. The CLOs are segmented into three learning domains: knowledge, skills, and competence. The last column shows the target KPI that the course is designed to achieve for each CLO in the specific assessment cycle. The faculty member responsible for the course sets this target based on the previous year's KPI score.
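One way to picture the course articulation matrix outside Excel is as a list of records, one per CLO. This Python sketch assumes a hypothetical layout (`MatrixRow` with domain, teaching strategy, assessment method, and target KPI on the 1 to 5 scale); the sample values are illustrative and are not the figures in Table 5.

```python
from collections import defaultdict
from typing import NamedTuple


class MatrixRow(NamedTuple):
    """One row of a course articulation matrix (hypothetical layout)."""
    clo: str
    domain: str
    teaching_strategy: str
    assessment_method: str
    target_kpi: float  # on the 1-5 KPI scale


def rows_by_domain(rows):
    """Group CLO codes by learning domain, preserving input order."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[row.domain].append(row.clo)
    return dict(grouped)


def targets_out_of_range(rows):
    """List CLOs whose target cannot be reached on a 1-5 KPI scale."""
    return [row.clo for row in rows if not 1 <= row.target_kpi <= 5]


# Illustrative values only; not the figures reported in Table 5.
matrix = [
    MatrixRow("1.1", "knowledge", "lecture", "midterm exam", 3.5),
    MatrixRow("2.2", "competence", "case study", "presentation", 3.5),
    MatrixRow("3.1", "skills", "lab work", "group project", 3.0),
]

print(rows_by_domain(matrix))
# {'knowledge': ['1.1'], 'competence': ['2.2'], 'skills': ['3.1']}
print(targets_out_of_range(matrix))  # []
```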

The Statistics and Management Systems course has 19 students. For CLO 1.1 (knowledge domain), the midterm exam was chosen as the assessment method. The faculty member enters this choice in the first Excel sheet, and it is automatically carried over to a separate sheet designed for CLO 1.1. The faculty member then enters the IDs of all 19 students, together with their marks in the chosen assessment method, in each of the separate sheets for the different CLOs. Each sheet is protected and contains formulas that calculate the CLO KPI and, eventually, the PLO.
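The per-CLO sheet described above reduces to counting how many students fall into each level. The sketch below assumes each sheet holds `(student_id, mark)` pairs and reuses the same hypothetical 80% and 60% cut-offs; `level_counts` and the coded IDs are illustrative, not the authors' formulas.

```python
LEVELS = ("Satisfactory", "Developing", "Unsatisfactory")


def level_counts(rows, max_mark, satisfactory_cut=0.80, developing_cut=0.60):
    """Count students per level for one CLO sheet.

    rows: iterable of (student_id, mark) pairs for the chosen assessment.
    The cut-off fractions are hypothetical; the paper does not state them.
    """
    counts = {level: 0 for level in LEVELS}
    for _student_id, mark in rows:
        share = mark / max_mark
        if share >= satisfactory_cut:
            counts["Satisfactory"] += 1
        elif share >= developing_cut:
            counts["Developing"] += 1
        else:
            counts["Unsatisfactory"] += 1
    return counts


# Hypothetical midterm marks out of 20 for five coded students.
clo_1_1 = [("S01", 18), ("S02", 15), ("S03", 12.5), ("S04", 9), ("S05", 17)]
print(level_counts(clo_1_1, max_mark=20))
# {'Satisfactory': 2, 'Developing': 2, 'Unsatisfactory': 1}
```

In a spreadsheet, each of these counts would typically come from a COUNTIFS over the marks column, which is what feeds cells such as I3 to I5 in the KPI formula.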

The formula inserted in the sheets is given below, and Fig. 3 shows a screenshot of how CLO 1.1 is calculated.

The values shown in Table 5 are automatically calculated when the faculty member fills the separate sheet for each CLO.

The working model also shows, through a chart, the number of students at the satisfactory, developing, and unsatisfactory levels. Figure 4 shows the PLO chart for the sample course, which is generated automatically after the values for each CLO are entered.
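The counts behind the level chart, and their percentages, can be derived as in this Python sketch. The counts (12, 5, and 2 out of 19) are invented for illustration, the text bar chart is only a stand-in for the Excel chart in Fig. 4, and the function names are hypothetical.

```python
def level_percentages(counts):
    """Share of students at each level, in percent (one decimal place)."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no students entered")
    return {level: round(100 * n / total, 1) for level, n in counts.items()}


def text_bar_chart(counts, width=20):
    """Very small text stand-in for the Excel bar chart."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no students entered")
    lines = []
    for level, n in counts.items():
        bar = "#" * round(width * n / total)  # bar length proportional to n
        lines.append(f"{level:<15} {bar} {n}")
    return "\n".join(lines)


# Hypothetical distribution for a 19-student class.
counts = {"Satisfactory": 12, "Developing": 5, "Unsatisfactory": 2}
print(level_percentages(counts))
# {'Satisfactory': 63.2, 'Developing': 26.3, 'Unsatisfactory': 10.5}
print(text_bar_chart(counts))
```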

The same Excel sheet compares the PLO scores for the two semesters and presents PLO achievement against the target value set by the faculty member. Figure 5 shows this chart for the sample course, generated after the scores of all 19 students for the different assessments are entered. CLO 2.2 did not meet its target value, so it is shown in red.
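The red highlighting amounts to a per-CLO comparison of the achieved KPI against its target. This Python sketch assumes that a KPI equal to its target counts as met (the paper does not say how ties are treated); the values and the function name `flag_against_target` are hypothetical.

```python
def flag_against_target(achieved, targets):
    """Mark each CLO 'red' if its achieved KPI is below target, else 'green'.

    CLOs that have a target but no achieved KPI are reported as 'missing'
    rather than silently treated as met.
    """
    flags = {}
    for clo, target in targets.items():
        if clo not in achieved:
            flags[clo] = "missing"
        elif achieved[clo] < target:
            flags[clo] = "red"
        else:
            flags[clo] = "green"
    return flags


# Illustrative values only, chosen so that CLO 2.2 misses its target.
targets = {"1.1": 3.5, "2.2": 3.5, "3.1": 3.0}
achieved = {"1.1": 4.05, "2.2": 3.2, "3.1": 3.4}
print(flag_against_target(achieved, targets))
# {'1.1': 'green', '2.2': 'red', '3.1': 'green'}
```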

The Excel model developed in this study automates the measurement of PLOs by calculating Key Performance Indicators (KPIs) for each Course Learning Outcome (CLO) and mapping them to related PLOs (Zaki et al., 2023).

Step 1: Data categorization

Student performance is categorized into three levels:

- Satisfactory = 5 points
- Developing = 3 points
- Unsatisfactory = 1 point
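Step 1 is a lookup from level to points. The Python sketch below, with hypothetical names and data, also illustrates a useful property: averaging the per-student points gives exactly the CLO KPI of Step 2, because summing the points is the same as multiplying each level's count by its point value.

```python
# Points per level, as listed in Step 1.
POINTS = {"Satisfactory": 5, "Developing": 3, "Unsatisfactory": 1}


def points_for(levels):
    """Convert each student's level into its point value."""
    return [POINTS[level] for level in levels]


# Hypothetical levels for four students on one CLO.
levels = ["Satisfactory", "Developing", "Satisfactory", "Unsatisfactory"]
pts = points_for(levels)
print(pts)                  # [5, 3, 5, 1]
print(sum(pts) / len(pts))  # 3.5
```

Here two satisfactory, one developing, and one unsatisfactory student give (2 × 5 + 1 × 3 + 1 × 1) / 4 = 14 / 4 = 3.5, the same value the count-based formula produces.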

Step 2: CLO KPI formula

For each CLO, the following formula is used:

KPI = (Number of Satisfactory × 5 + Number of Developing × 3 + Number of Unsatisfactory × 1) / Total Students

Example Excel formula, assuming cells I3, I4, and I5 hold the counts of students at each level:

=(I3*5 + I4*3 + I5*1) / (I3 + I4 + I5)

Where:

- I3 = number of students at the Satisfactory level
- I4 = number of students at the Developing level
- I5 = number of students at the Unsatisfactory level
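The Excel formula translates directly into a function. This is a minimal Python sketch (the name `clo_kpi` is hypothetical) that adds guards the spreadsheet leaves to the user: negative counts are rejected, and an empty sheet raises an error instead of producing `#DIV/0!`. The 12/5/2 split is an invented example for a 19-student class.

```python
def clo_kpi(satisfactory: int, developing: int, unsatisfactory: int) -> float:
    """Python equivalent of =(I3*5 + I4*3 + I5*1) / (I3 + I4 + I5)."""
    for n in (satisfactory, developing, unsatisfactory):
        if n < 0:
            raise ValueError("counts cannot be negative")
    total = satisfactory + developing + unsatisfactory
    if total == 0:
        # Excel would display #DIV/0! here; fail loudly instead.
        raise ValueError("no students entered")
    return (satisfactory * 5 + developing * 3 + unsatisfactory * 1) / total


# Hypothetical split: 12 satisfactory, 5 developing, 2 unsatisfactory.
print(round(clo_kpi(12, 5, 2), 2))  # 4.05  (77 / 19)
```

Because every student contributes between 1 and 5 points, the KPI always lies between 1 and 5, consistent with the stated maximum of 5.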

This result gives the KPI score for the CLO.

Step 3: Aggregating CLOs into PLOs

Each PLO is linked to multiple CLOs. Once all CLO KPIs are calculated, the PLO is measured by taking the average of the relevant CLO scores.

Example Excel function:

=AVERAGE(Sheet1!B5, Sheet2!B5, Sheet3!B5)

This function pulls the KPI results from multiple CLO sheets and computes the PLO score.
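Step 3 can be sketched the same way. In this Python version, `plo_scores` and the PLO-to-CLO mapping are hypothetical. One deliberate difference from Excel's `AVERAGE` is that a CLO with no KPI raises an error, whereas `AVERAGE` skips empty cells, which could silently change a PLO score.

```python
from statistics import mean


def plo_scores(clo_kpis, plo_map):
    """Average the KPIs of the CLOs linked to each PLO, like =AVERAGE(...)."""
    scores = {}
    for plo, clos in plo_map.items():
        missing = [c for c in clos if c not in clo_kpis]
        if missing:
            raise KeyError(f"{plo}: no KPI for CLO(s) {missing}")
        scores[plo] = mean(clo_kpis[c] for c in clos)
    return scores


# Hypothetical CLO KPIs and PLO-to-CLO links.
clo_kpis = {"1.1": 4.5, "1.2": 3.5, "2.2": 3.0}
plo_map = {"PLO-K": ["1.1", "1.2"], "PLO-C": ["2.2"]}
print(plo_scores(clo_kpis, plo_map))  # {'PLO-K': 4.0, 'PLO-C': 3.0}
```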

Step 4: Automation and visualization

Automation:

- Sheets are protected; faculty members only enter data.
- KPIs and PLOs are calculated automatically using Excel formulas.
- Red flags (conditional formatting) highlight unmet benchmarks.
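The protected, data-entry-only sheets rely on Excel's data validation. A comparable check in Python might look like the sketch below, which assumes two hypothetical rules: marks must be numbers between 0 and the assessment's maximum, and each coded student ID may appear only once per sheet. The function name `validate_entries` is illustrative.

```python
def validate_entries(rows, max_mark):
    """Return a list of problems found in (student_id, mark) rows.

    Hypothetical rules, in the spirit of spreadsheet data validation:
    marks must be numbers in 0..max_mark, and IDs must be unique.
    """
    problems = []
    seen = set()
    for row_no, (student_id, mark) in enumerate(rows, start=1):
        if student_id in seen:
            problems.append(f"row {row_no}: duplicate ID {student_id}")
        seen.add(student_id)
        # bool is a subclass of int, so exclude it explicitly.
        if isinstance(mark, bool) or not isinstance(mark, (int, float)):
            problems.append(f"row {row_no}: mark {mark!r} is not a number")
        elif not 0 <= mark <= max_mark:
            problems.append(f"row {row_no}: mark {mark} outside 0..{max_mark}")
    return problems


rows = [("S01", 18), ("S02", 25), ("S01", 12), ("S03", "abs")]
for problem in validate_entries(rows, max_mark=20):
    print(problem)
# row 2: mark 25 outside 0..20
# row 3: duplicate ID S01
# row 4: mark 'abs' is not a number
```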

Visualization:

- Bar charts and dashboards update automatically after data entry.
- PLO achievement is compared across semesters (e.g., Semester 1 vs. Semester 2).
- Variations are clearly visualized to assist QA units.
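The semester-to-semester comparison behind these charts can be expressed as a per-CLO difference. This Python sketch uses hypothetical KPI values and a hypothetical `compare_semesters` function; only CLOs present in both semesters are compared.

```python
def compare_semesters(first, second):
    """Per-CLO change in KPI from one semester to the next."""
    report = {}
    for clo in first.keys() & second.keys():  # CLOs measured in both
        change = round(second[clo] - first[clo], 2)
        if change > 0:
            trend = "improved"
        elif change < 0:
            trend = "declined"
        else:
            trend = "unchanged"
        report[clo] = (change, trend)
    return dict(sorted(report.items()))


# Illustrative KPIs only.
semester_1 = {"1.1": 4.0, "2.2": 3.5, "3.1": 3.0}
semester_2 = {"1.1": 4.25, "2.2": 3.25, "3.1": 3.5}
print(compare_semesters(semester_1, semester_2))
# {'1.1': (0.25, 'improved'), '2.2': (-0.25, 'declined'), '3.1': (0.5, 'improved')}
```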

Analysis and interpretation

- CLO 1.1 (Knowledge domain): The KPI consistently met the target benchmark, suggesting well-aligned assessments.
- CLO 2.2 (Competence domain): The KPI fell below the target, signaling a need to revise teaching strategies or assessment alignment.
- PLO comparison (Fig. 5): Highlights progress over two semesters; areas not meeting targets are flagged in red for immediate attention.

The automated model enables quick and accurate assessment cycles, reducing manual calculations and providing real-time visual feedback to support continuous improvement and accreditation reporting.

The in-depth analysis of the results reveals that the Excel-based Information System (IS) model is more than a procedural tool; it serves as a comprehensive decision-support system for academic quality assurance. Applied to the Statistics and Management Systems course over two semesters, the model captured nuanced performance trends across key Program Learning Outcomes (PLOs) in the knowledge, competence, and skills domains. PLO 1.1 (Knowledge) met and surpassed the benchmark in both semesters, indicating that the instructional strategies and assessment formats (likely lecture-based and exam-focused) are well aligned with the expected outcomes in this domain. In contrast, PLO 2.2 (Competence) underperformed in the second semester, signaling a disconnect between the intended learning outcomes and either the teaching methods or the assessment instruments used to evaluate them. This suggests a need for instructional redesign or for more hands-on, applied assessment tasks that better capture student competence. Meanwhile, the improvement observed in PLO 3.1 (Skills) supports the effectiveness of introducing experiential learning activities such as group projects or presentations, which appear to enhance students' practical abilities.

The strength of the model lies in its automation and visualization capabilities. As faculty members enter scores into protected worksheets, the model calculates Key Performance Indicators (KPIs) using weighted formulas and automatically aggregates them into PLO scores. This ensures accuracy and consistency while significantly reducing manual effort and error. Dashboards and visual tools such as bar charts and radar graphs enable quick interpretation of the data. For instance, the side-by-side comparison of semester-wise results highlights achievement gaps and makes underperforming areas immediately visible, especially where benchmarks are not met. Color-coded indicators (e.g., red for underperformance) make these insights accessible even to non-technical users. Overall, the model strengthens curriculum review and instructional planning by offering data-driven evidence, helping faculty and quality assurance units make targeted improvements and align academic practices with accreditation requirements. This benefit has motivated Gulf Public University to adopt the model for measuring PLOs and to extend it as a template for most of its programs.

This research paper examines the impact of Learning Outcomes (LOs) on Quality Assurance (QA) in Higher Education (H.E.), focusing on Program Learning Outcomes (PLOs) and their role as Key Performance Indicators (KPIs) within a Bachelor of Science program at a Gulf Public University (GPU). Although PLOs and QA both address quality, our findings show that PLOs serve as measurable KPIs directly linked to QA in H.E. institutions. Universities apply PLO assessments in different ways: GPU selects different courses each semester to measure PLO-related KPIs, whereas other institutions evaluate the same courses consistently. PLOs guide learning and teaching policies and assessment methods, and they ensure that QA is embedded from the course level to the program level, which facilitates accreditation. The sample course and all other BSC courses at GPU follow the National Qualifications Framework (NFQ) standards for writing PLOs and for designing learning activities, such as lectures, labs, and group discussions, that develop knowledge, competence, and skills, as outlined in the NFQ recommendations shown in Fig. 6.

In this study, the assessment of PLOs is based on three core learning domains aligned with the National Qualification Framework: knowledge, skills, and competence, as shown in Table 4. The knowledge domain (cognitive) focuses on students' understanding of theoretical concepts and their ability to recall and apply information. The skills domain (psychomotor) evaluates the practical application of learning, such as performing tasks, using tools, or demonstrating technical abilities. The competence domain, also referred to as attitude, reflects the development of professional behavior, responsibility, and ethical engagement in learning environments (Abdullah, 2017). These domains are integral to the structure of the Excel-based IS model used in this study, ensuring that assessment outcomes comprehensively capture what students know, what they can do, and how they behave, thereby supporting quality assurance and accreditation efforts.

The results show that the study aligns with the principles of constructive alignment (Biggs and Tang, 2014), wherein CLOs, teaching and learning strategies, and assessment methods are cohesively mapped to ensure student achievement of PLOs. Through the course articulation matrix, the model links each CLO to specific assessment tasks and instructional strategies, and their effectiveness is automatically evaluated using KPI-based performance measurement. This configuration promotes continuous improvement and curriculum quality, supporting both accreditation and pedagogical effectiveness in learning and teaching environments in H.E. systems.

To determine the impact of PLOs on QA, BSC in GPU measured Key Performance Indicators (KPIs) and compared them with the benchmarks set by GPU. As an example, we chose the Statistics and Management Systems module, a higher-level course, and showed how its PLOs are measured through KPIs and compared with the benchmarks to achieve quality assurance and support the ABET and NCAAA accreditation processes. BSC in GPU follows several principles to ensure the appropriateness of PLOs in QA, and measuring KPIs for PLOs is one of them. Fig. 7 shows the rules and methods practiced by the ADQC of BSC in GPU.

Theoretical Framework for the applications of QA practices in H.E. by BSC in GPU

SCCR

The ADQC of BSC in GPU systematically and regularly performs course report evaluation and QA analysis at all academic levels. The first level is evaluation at the course level. It comprises several tasks, such as course management, admission constraints, the credit hours of the module and the total credit hours of the program, the qualifications, experience, and designation of teaching staff, student scholarships, the PLOs of the course and program, and the KPI scores for those PLOs. This study, however, is restricted to the course level. The second level of QA is applied to the profile of academic staff, such as their research in distinguished journals, conference attendance and participation, faculty rewards, and other training programs. Finally, BSC in GPU applies policies for staff promotion, risk management, financial aid, and human resources at the same level.

SMMR

Monitoring management covers a wide range of actions, but this study is restricted to CS development, result analysis, and improvement based on KPI measurement and recommendations. BSC in GPU frequently checks the progress of learners and gathers their feedback. It also maintains academic standards and monitors the proportion of faculty to students, employment progression, and infrastructure. ADQC is responsible for preparing a report on these activities and submitting it to the BSC administration to achieve QA.

Benchmarking

Benchmarking is a standard or target set by an institution to measure its QA efficiency. BSC in GPU applies a systematic, collaborative learning process to evaluate, follow up on, and appraise the performance of LOs in order to identify overall strengths and weaknesses.

SCF

The success of any program depends on the success of its students; therefore, BSC in GPU keeps a record of PLOs, of how well students performed, and of how much they improved. This record includes student retention rates, results-analysis reports, and alumni reports.

PLOs measures to QA

ADQC executes a methodical, organized series of review, monitoring, and reporting processes covering course completions, student satisfaction data, staff with higher-level qualifications, and graduate achievement of PLOs.

Automation and efficiency

The IS-based model significantly streamlined the process of calculating PLOs by automating the aggregation of student performance data. This reduced manual effort and minimized the errors associated with manual calculations.

Accuracy in measurement

The model provided accurate measurements of PLOs by ensuring that all relevant data points from student assessments were considered. Each CLO's key performance indicators (KPIs) were effectively tracked and measured.

Enhanced data visualization

The model facilitated the creation of comprehensive data visualizations, including charts and graphs, which helped in presenting the outcomes of the PLO assessments clearly and effectively. This made it easier for educators and quality assurance units to interpret and analyze the results.

Application in a business module

The study applied the model to a business module at the University of Gulf, showcasing its practical utility. The model’s implementation demonstrated how it could be used to measure and report on the PLOs for the module, providing valuable insights into student learning and program effectiveness.

Support for accreditation

By providing a reliable method for assessing PLOs, the model supports the university’s efforts in maintaining and achieving accreditation from relevant accrediting bodies. The systematic approach to evaluating program quality through student performance aligns with accreditation requirements.

The IS-based Excel model effectively improved the assessment of Program Learning Outcomes (PLOs) in higher education, enhancing quality assurance and accreditation. It automated the aggregation of Course Learning Outcomes (CLOs) and KPIs from various student assessments, reducing manual effort and increasing accuracy. The model integrated multiple assessment types to provide precise PLO calculations, identifying student strengths and areas for improvement. Data visualizations like charts helped stakeholders easily interpret results, supporting informed decisions and accreditation evidence. Limitations included scalability issues with large datasets and the need for customization for different contexts. Overall, the model proved valuable for improving program quality and streamlining accreditation processes.

Conclusion

This study has demonstrated that a simple yet structured Information Systems (IS)-based model built in Microsoft Excel can significantly enhance the assessment of Program Learning Outcomes (PLOs) in higher education. By automating the aggregation of Course Learning Outcomes (CLOs) and Key Performance Indicators (KPIs), the model improves accuracy, reduces manual workload, and offers accessible data visualization tools for quick analysis.

Unlike complex or expensive educational software systems, the Excel-based model is practical, adaptable, and user-friendly. It is especially valuable for institutions operating under financial, technological, or infrastructure constraints, making high-quality assessment practices more inclusive.

Beyond operational efficiency, the model also empowers faculty to engage in continuous curriculum evaluation and quality assurance. The integration of automated calculations with visual feedback loops allows real-time insight into student performance and program effectiveness, promoting data-driven decision-making.

This model not only supports accreditation efforts from bodies like ABET and NCAAA but also contributes to a culture of transparency and continuous improvement in higher education. Looking forward, future research should focus on integrating this tool with institutional databases and extending its use for longitudinal studies, enabling a deeper understanding of learning outcome trends over time.

In conclusion, this IS-based Excel model presents a scalable and replicable approach to outcome-based education assessment, bridging the gap between academic quality assurance and everyday teaching practice.

Data availability

This research was conducted using anonymized student performance data measured from routine academic assessments, with no direct involvement or interaction with students. All student names and grades were removed and replaced with coded identifiers to maintain full confidentiality. The datasets generated and analysed during the current study are available from the corresponding author on reasonable request.

References

Abdullah RS (2017) Application of Saudi’s national qualifying framework in system analysis & design course. Int J Manag Excell 10(1):1208–1213

Aithal PS, Aithal S (2019) Analysis of higher education in Indian National Education Policy Proposal 2019 and its implementation challenges. Int J Appl Eng Manag Lett (IJAEML) 3(2):1–35

Alahmari F, Naim A, Alqahtani H (2023) E-learning modeling technique and convolution neural networks in online education. In IoT-enabled Convolutional Neural Networks: Techniques and Applications (pp. 261–295). River Publishers

Alnaami MY, Abdulghani HM, Elsobkey S, Yacoub H (2023) Assessment of Learning Outcomes. In Novel Health Interprofessional Education and Collaborative Practice Program: Strategy and Implementation (pp. 333–345). Singapore: Springer Nature Singapore

Begum A, Sabahath A, Naim A (2024) An iterative process of measuring learning outcomes and evaluation of academic programs as part of accreditation. In Evaluating Global Accreditation Standards for Higher Education (pp. 35–49). IGI Global

Biggs J, Tang C (2014) Constructive alignment: An outcomes-based approach to teaching anatomy. In Teaching Anatomy: A Practical Guide (pp. 31–38). Cham: Springer International Publishing

Boggs GR (2019) What is the learning paradigm? 13 ideas that are transforming the community college world, 33–51

Bredow CA, Roehling PV, Knorp AJ, Sweet AM (2021) To flip or not to flip? A meta-analysis of the efficacy of flipped learning in higher education. Rev Educ Res 91(6):878–918

Budiman A, Samani M, Setyawan WH (2021) The development of direct-contextual learning: a new model on higher education. Int J High Educ 10(2):15–26

Castro MDB, Tumibay GM (2021) A literature review: efficacy of online learning courses for higher education institution using meta-analysis. Educ Inf Technol 26:1367–1385

Cavallone M, Manna R, Palumbo R (2020) Filling in the gaps in higher education quality: An analysis of Italian students’ value expectations and perceptions. Int J Educ Manag 34(1):203–216

Garnjost P, Lawter L (2019) Undergraduates’ satisfaction and perceptions of learning outcomes across teacher-and learner-focused pedagogies. Int J Manag Educ 17(2):267–275

Goss H (2022) Student learning outcomes assessment in higher education and in academic libraries: a review of the literature. J Acad Librariansh 48(2):102485

Haug G (2003) Quality assurance/accreditation in the emerging European Higher Education Area: a possible scenario for the future. Eur J Educ 38(3):229–240

Kirillova K, Au WC (2020) How do tourism and hospitality students find the path to research? J Teach Travel Tour 20(4):284–307

Lasso R (2020) A blueprint for using assessments to achieve learning outcomes and improve students’ learning. Elon L Rev 12:1

Le TQ, Hoang DTN, Do TTA (2019) Learning outcomes for training program by CDIO approach applied to mechanical industry 4.0. J Mech Eng Res Dev 42(1):50–55

Malik PK, Naim A, Khan SA (2024) Enhancing higher education quality assurance through learning outcome impact. In Evaluating Global Accreditation Standards for Higher Education (pp. 114–128). IGI Global

Naim A (2025, January) Equity across the educational spectrum: innovations in educational access crosswise all levels. In Frontiers in Education (Vol. 9, p. 1499642). Frontiers Media SA

Naim A, Hussain MR, Naveed QN, Ahmad N, Qamar S, Khan N, Hweij TA (2019, April) Ensuring interoperability of e-learning and quality development in education. In 2019 IEEE Jordan International Joint Conference on Electrical Engineering and Information Technology (JEEIT) (pp. 736–741). IEEE

Naim A, Malik PK, Khan SA, Mohammed AB (2024a) Mechanism of direct and indirect assessments for continuous improvement in higher education. In Evaluating Global Accreditation Standards for Higher Education (pp. 200–216). IGI Global

Naim A, Saklani A, Khan SA, Malik PK (Eds) (2024b). Evaluating global accreditation standards for higher education. IGI Global

Naim A, Sattar RA, Al Ahmary N, Razwi MT (2021) Implementation of quality matters standards on blended courses: a case study. Financ India Indian Inst Financ XXXV(3):873–890

Naqvi SR, Akram T, Haider SA, Kamran M, Muhammad N, Nawaz Qadri N (2019) Learning outcomes and assessment methodology: case study of an undergraduate engineering project. Int J Electr Eng Educ 56(2):140–162

Pratolo S, Sofyani H, Anwar M (2020) Performance-based budgeting implementation in higher education institutions: determinants and impact on quality. Cogent Bus Manag 7(1):1786315

Premalatha K (2019) Course and program outcomes assessment methods in outcome-based education: a review. J Educ 199(3):111–127

Ryan M (2023) Higher education in Saudi Arabia: challenges, opportunities, and future directions. Res Higher Educat J 43

Sailer M, Schultz-Pernice F, Fischer F (2021) Contextual facilitators for learning activities involving technology in higher education: The C♭-model. Comput Hum Behav 121:106794

Schoepp K (2019) The state of course learning outcomes at leading universities. Stud High Educ 44(4):615–627

Timmermans JA, Meyer JH (2019) A framework for working with university teachers to create and embed ‘Integrated Threshold Concept Knowledge’ (ITCK) in their practice. Int J Acad Dev 24(4):354–368

Torres-Gordillo JJ, Melero-Aguilar N, García-Jiménez J (2020) Improving the university teaching-learning process with ECO methodology: Teachers’ perceptions. PloS One 15(8):e0237712

Tsang JT, So MK, Chong AC, Lam BS, Chu AM (2021) Higher education during the pandemic: the predictive factors of learning effectiveness in COVID-19 online learning. Educ Sci 11(8):446

Wekerle C, Daumiller M, Kollar I (2022) Using digital technology to promote higher education learning: the importance of different learning activities and their relations to learning outcomes. J Res Technol Educ 54(1):1–17

Wong HY, Chan CK (2022) A systematic review on the learning outcomes in entrepreneurship education within higher education settings. Assess Eval High Educ 47(8):1213–1230

Zaki N, Turaev S, Shuaib K, Krishnan A, Mohamed E (2023) Automating the mapping of course learning outcomes to program learning outcomes using natural language processing for accurate educational program evaluation. Educ Inf Technol 28(12):16723–16742

Acknowledgements

This research is supported and funded by the ongoing research funding program (ORF-2025-521), King Saud University, Riyadh, Saudi Arabia.

Author information


Contributions

AN led the formulation of the research objectives and methodology, authored the initial draft of the manuscript, performed the data analysis, and interpreted the results. AN also participated in critical revisions of the manuscript, finalized its content and structure, oversaw quality control throughout the writing process, and provided overall supervision and project coordination. In addition, AN made substantial contributions to the refinement of all manuscript sections, managed the submission process through the journal portal, facilitated communication and coordination among co-authors, ensured adherence to ethical research and publication standards, and oversaw the response to and integration of feedback from co-authors and reviewers. MA conducted an in-depth review of the relevant existing literature, contributed to the interpretation and contextualization of the findings, participated in finalizing the manuscript for submission, and ensured adherence to the ethical code of research. NSA supported the literature review and the identification of theoretical gaps, helped improve the coherence and quality of the final draft, and ensured adherence to the ethical code of research.


Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

This study is exempt from ethical approval. Institutional approval was not necessary because the research did not involve human participants or their data. The researchers did not collect data through any survey, and the names and grades of participants are not presented in this paper. The researchers coded the grades into three levels (satisfactory, developing, and unsatisfactory) to assess PLOs using an IS-based model.

Informed consent

For the current study, informed consent was not required because no human participants or their data were involved in the assessment of PLOs using the IS-based model. The data used in the measurement were converted into three unique level identifiers. No names, grades, or other personal information are presented in this paper. Therefore, informed consent is not applicable to the current study.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Naim, A., Alnfiai, M.M. & Almalki, N.S. Information systems based model for the assessment of program learning outcomes in measuring the quality in higher education. Humanit Soc Sci Commun 12, 1975 (2025). https://doi.org/10.1057/s41599-025-06259-9
