Abstract

Recent advances in memory technologies, devices, and materials have shown great potential for integration into neuromorphic electronic systems. However, a significant gap remains between the development of these materials and the realization of large-scale, fully functional systems. One key challenge is determining which devices and materials are best suited for specific functions and how they can be paired with complementary metal-oxide-semiconductor circuitry. To address this, we present a mixed-signal neuromorphic architecture designed to explore the integration of on-chip learning circuits and novel two- and three-terminal devices. The chip serves as a platform to bridge the gap between silicon-based neuromorphic computation and the latest advancements in emerging devices. In this paper, we demonstrate the readiness of the architecture for device integration through comprehensive measurements and simulations. The processor provides a practical system for testing bio-inspired learning algorithms alongside emerging devices, establishing a tangible link between brain-inspired computation and cutting-edge device research.

Similar content being viewed by others

Introduction

The energy requirements of current deep learning algorithms have promoted research into alternative computing architectures and technologies. Some of these efforts are aimed at emulating the computational principles of biological intelligence to enhance efficiency and processing capabilities. In this regard, the development of neuromorphic computing architectures has seen substantial growth1,2,3,4,5,6,7. In particular, neuromorphic systems using hybrid complementary metal-oxide-semiconductor (CMOS)-memristive circuits offer a promising direction for low-power, highly compact solutions where computation is performed in-memory8,9. Memristive technologies encompass a wide range of novel electronic materials and devices that possess inherent memory and reprogrammability through state-dependent, and usually non-volatile, resistance modulation10,11.

When integrated in CMOS Spiking Neural Network (SNN) chips, hybrid neuromorphic/memristive circuits can exploit the physics of the devices and their intrinsic dynamics to carry out low-power computations that extend the advantages of conventional In-Memory Computing (IMC) dense crossbar array architectures12. IMC-based artificial neural network accelerators, which typically use either Static Random Access Memory (SRAM) or memristive crossbars, aim to maximize peak throughput, area, and power efficiency by circumventing the von Neumann bottleneck13,14,15,16. In contrast, mixed-signal neuromorphic architectures seek to further reduce overall power consumption, especially in edge computing applications like bio-signal processing or environmental monitoring, which involve slowly varying signals17,18,19. Recent research has focused on brain-inspired neural mechanisms to implement efficient neural networks targeting such applications20,21,22. These types of architectures implement SNNs, where the spikes are digital events communicated via asynchronous digital logic. The analog circuits implementing neural and synaptic dynamics, together with the asynchronous digital circuits handling event-based routing and network programmability, enable ultra-low-power computation. Typically, the analog circuits used in these neuromorphic platforms rely on the subthreshold analog transistor regime23 to emulate neuron-like dynamics for a further reduction in energy cost6,24,25.

By exploiting the physics of the CMOS devices, this approach has led to the development of a diverse array of circuits that implement computational models of synaptic plasticity26. Synaptic plasticity is the ability of synapses to be potentiated or depressed in a volatile (short-term plasticity) or non-volatile manner (long-term plasticity)27. Although pure CMOS hardware implementations of local synaptic plasticity rules have been shown to express complex and versatile computational properties2,28,29, they require substantial silicon real-estate to store the synaptic weights. A common strategy for addressing this issue has been to drive the weight to a stable value for storage. The use of bistable plastic synapses originates from some of the first developments of full-scale neuromorphic systems29,30 mimicking biological synapses which inherently have limited bit precision31,32. Other works propose to update the weights directly within a digital memory2,33, thus facilitating long-term storage, but they often require a continuous power supply to maintain the memory. Combining the mixed-signal neuromorphic engineering approach with the integration of memristive devices, would simultaneously enable the exploration of additional computational strategies, such as intrinsic stochasticity and state-dependence, as well as provide a compact and non-volatile storage option for maintaining weight values during power-cycles.

Recent efforts have thus initiated the exploration of integrating memristive devices with CMOS neuromorphic systems, aiming to leverage the synergy of both technologies34,35,36,37,38,39,40. A majority of these efforts have focused on complementing memristive crossbar arrays with neuromorphic peripheral circuitry to handle the generation of output spikes and the computation of learning signals35,36,37. Synaptic weights in these systems are realized by the resistance states of memristive devices in a crossbar array. Such crossbars are effective for performing matrix-vector multiplications (MVMs) when the weights are programmed once and read multiple times during network inference. However, to perform real-time on-chip learning, high-frequency and temporally unstructured reprogramming of these devices is required while retaining weight reading functionality. Therefore, few works explore the possibility of implementing IMC-based synaptic plasticity, with learning directly occurring at each synaptic device. In38 the authors proposed a differential three-terminal device interface to achieve more flexible device access for online learning while39 and40 proposed the exploitation of memristive device dynamics to implement in-memory plasticity directly in the crossbar. Although these approaches have been explored, they have been limited to simulations of a few circuit elements with restricted learning flexibility.

In this work, we introduce TEXEL, a fabricated chip combining the operational efficiencies of memristive devices with the spike-based approach of neuromorphic systems. The chip exploits the analog subthreshold CMOS regime and event-based computation to implement ultra-low power spiking neurons and plastic synapses with tunable always-on trace-based local learning functionality. TEXEL incorporates a Back-End Of Line (BEOL) device-agnostic differential synaptic interface, enabling the integration of a wide range of two- and three-terminal memristive devices across ~10K plastic synapses atop the CMOS chip. This design makes TEXEL a versatile research platform for large-scale BEOL device integration in neuromorphic systems. The platform addresses the research challenge of evaluating different memristive technologies within the context of a functional SNN processor, complementing ongoing efforts to overcome challenges in large-scale memristor fabrication and integration. While memristive devices are yet to be integrated, the processor exploits the synergies of IMC and SNNs to present a concrete step towards the following key developments for such systems:

-

Exploiting the capabilities of memristive materials and devices to facilitate the implementation and consolidation of on-chip synaptic plasticity.

-

Providing a platform to explore the large-scale BEOL integration of memristive materials and devices with an SNN processor.

Here, we present the TEXEL chip’s architectural design and learning mechanisms within the context of neuromorphic computing and beyond-CMOS device integration. Through comparisons with other existing full-scale memristor-CMOS spiking neuromorphic processors, we describe TEXEL’s design features and discuss its role in developing brain-inspired computing.

Results

Silicon measurements that validate the functionality of the TEXEL chip (Fig. 1) are outlined in the following sections. Experiments using the on-chip learning circuits demonstrate the emergent Spike-Timing-Dependent Plasticity (STDP)41 and Spike-Rate-Dependent Plasticity (SRDP)42. The functionality of the memristive device read-write circuits is verified experimentally, and the operation of the interfacing circuits is demonstrated with post-layout simulations, which define the parameter range of memristive devices aiming for compatibility with TEXEL. We present simulations of the CMOS device interface circuits with a Valence Change Mechanism (VCM) compact model to verify synaptic state switching and robustness of reading operations. Power consumption measurements provide a detailed breakdown of the contribution of each circuit block, exemplifying the inherent power efficiency advantages of subthreshold analog circuitry and the event-driven paradigm. Additionally, we perform network-level experiments and implement an on-chip SNN that demonstrates TEXEL’s capabilities in neuromorphic computing applications utilizing Vector-Symbolic Architectures (VSAs). The chip builds upon previous work25,38, moving beyond test configurations towards an integrated neuromorphic architecture with improved scalability. The device interface circuit provides enhanced flexibility and compatibility with different memristive technologies, addressing integration challenges in hybrid CMOS-memristive systems.

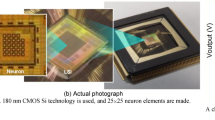

a Footprint of the chip, indicating the location of the architectural blocks. b The neuron block footprint, indicating the synaptic fan-in of the soma within the block. The location of the plastic and non-plastic synapses is shown with excitatory (exc) and inhibitory (inh) types. The plastic synapses contain the contacts and interface circuitry for BEOL integration of memristive devices. c A photograph of the 9 mm × 7.5 mm die, fabricated with the X-FAB 180 nm process.

Neural circuits

We measured the activation of the silicon neuron circuits to assess their transfer function and operating regimes. A Direct Current (DC) input was applied and systematically increased across all neurons while their spike rate was recorded (Fig. 2a). The resultant Frequency vs. Current (FI) curve shows both the aggregate mean response for each core as well as the individual activation profiles of all neurons. The discernible core-specific disparity is attributable to mismatch in the biasing circuitry. The dispersion in the FI curve of each neuron stems from inherent variations in individual neuron circuits. While device mismatch variability can be reduced by including calibration procedures for each element2, we chose to minimize it, through judicious analog circuit design techniques, and keep it, as it can be exploited for example in learning43.

We conducted validation measurements of the adaptive characteristics embedded in the circuitry of each neuron. The response of the membrane potential to a DC step input was measured, as well as the timing of output spikes (Fig. 2b). These observations reveal the expected temporal pattern in the neuron’s instantaneous spike rate, characterized by an initial peak followed by a gradual decay towards a stable state (Fig. 2c). Figure 2d shows an instance in which a neuron is stimulated by a Poisson spike train through its static excitatory synapses. The plastic synapses located within each neuron block are quantized to a binary value which is translated into an analog bias representing high and low synaptic efficacy. The on-chip weight matrix, encoding the state of all plastic synapses, can be read-out post learning and also programmed for inference (see Supplementary Fig. S5).

a The measured firing rates of all neurons on TEXEL in response to a constant DC input current. b Measurement of the membrane potential of a single neuron in response to a DC step input. The neuron’s adaptation characteristic is evident as its firing rate begins high and gradually diminishes to attain a steady state. c Measurements of the variations in the instantaneous firing rate and timing of output spikes in relation to the magnitude of DC injected into the soma. d The recorded membrane potential response of a neuron receiving presynaptic Poisson input at the static excitatory synapses. Below, in blue, is the presynaptic spike train, while above, in green, the postsynaptic spikes indicate the neuron’s spiking activity.

Learning circuits

The on-chip plasticity was implemented using mixed-signal circuitry embedded within each plastic synapse. This circuitry emulates the Bistable Calcium-based Local Learning (BiCaLL) rule44, which combines STDP for low activity with Hebbian changes45 at high activity. The combination of both learning rules allows for different learning mechanisms to be explored in the context of an on-chip SNN, with STDP being advantageous for temporal pattern recognition and more efficient learning with sparse spiking, while SRDP excels at rate-based learning and evidence accumulation. In this model, synaptic updates are driven by pre- and postsynaptic calcium traces representing neuronal activity. A secondary postsynaptic trace (Ca2+) with a slower time constant acts as a plasticity gating mechanism, ensuring weight updates occur only within specific firing rate ranges. The learning rule imposes a bistable analog internal weight (Vw) to help mitigate catastrophic forgetting in binary synapses42,46,47,48, stabilizing synaptic states using accumulated updates and bistability circuitry.

Figure 3a shows measurements of a plastic synapse undergoing short-term depression. Pre-trace integration of presynaptic spikes maintains a decaying record of presynaptic activity, but without postsynaptic activity, the synaptic weight remains unchanged. When postsynaptic spikes occur, plasticity becomes apparent, and if the post-trace crosses its lower threshold, depression is triggered. The synaptic weight experiences short-term depression but stabilizes to the high state due to bistability circuitry.

We conducted in-silico experiments to characterize STDP of the learning circuitry (Fig. 3b, c). The synaptic weight changes (Δw) were measured by systematically varying pre- and postsynaptic spike timings. Adjusting the biasing parameters allowed for on-chip configuration of STDP curves, enabling the introduction of depressive regions for positive pre-post pairings. Additionally, SRDP was measured by varying pre- and postsynaptic Poisson spike rates. A probability map (Fig. 3d) of synaptic weight changes demonstrates that under conditions of high presynaptic and postsynaptic activity, the likelihood of the synapse settling into a potentiated (high) state increases. In contrast, when activity levels are lower, the synapse is more likely to undergo depression, favoring the low-weight state. This data highlights the sensitivity of the learning circuitry to the frequency and timing of local spiking activity.

a A presynaptic spike train induces a current (blue) read by the spiking Analog-to-Digital Converter (sADC), while simultaneous stimulation with an Excitatory Post Synaptic Current (EPSC) triggers postsynaptic spiking (green). Shaded regions indicate when the post-trace exceeds the lower threshold, reflecting short-term memory. The Ca2+ trace (orange) accumulates postsynaptic activity, showing plasticity when above its threshold. b STDP measurements assess the impact of pre- and postsynaptic spike timing, \(\Delta t={{{\rm{pre}}}}-{{{\rm{post}}}}\), on the analog weight of the synapse (w). This is the most expressive STDP curve achievable on-chip where all potentiation and depression branches of the learning circuitry are active. c This STDP curve demonstrates modulation of potentiation and depression through analog biasing. In this experiment the effect of depression with positive Δt timings was switched off. d SRDP results show the probability of the synapse maintaining high or low weight based on pre- and postsynaptic firing rates (\({\nu }_{{{{\rm{pre}}}}}\) and νpost). e The plasticity circuit in each synapse uses three analog traces to govern weight updates. Two neuron-level traces, the postsynaptic trace (post-trace) and the Ca2+ trace, are transmitted to synapses and must meet threshold conditions for weight updates. If the post-trace exceeds a threshold, incoming presynaptic spikes reduce the synaptic weight by a fixed increment. The presynaptic activity (pre-trace) also determines whether the weight will increase or decrease, with updates occurring via charge deposition on a capacitor. The weight is then quantized into high or low states by a bistability circuit, which controls drift toward ground or supply voltage, with drift rates set by the slew up and slew down biases.

Memristive device interfacing circuits

Each plastic synapse on TEXEL (Fig. 1b) can be enabled to utilize a pair of memristive devices to store a binary weight using a differential device configuration49,50. When the chip is programmed to enable device operation, at the time of a presynaptic spike, the synaptic weight is read using a differential normalizer circuit38. To demonstrate the operation of the normalizer circuitry we performed extensive Spectre post-layout simulations over a range of memristive device parameters, namely: conductance, capacitance and on-off ratio. The memristive devices were modeled as parallel RC circuits. Figure 4a shows how the differential device setup, consisting of a “positive” and “negative” device, is able to store the binary synaptic weight. In the case where the resistance of the positive device is lower than that of the negative device, the current sourced through the positive device, Ipos, during a read pulse (presynaptic spike) is greater than the current sourced through the negative device, Ineg. In this scenario the normalizer circuit transmits a current, Inorm, proportional to the biasing of the normalizer circuit, norm_bias. For these simulations the current was normalized to 200 nA (norm_bias) and passed into a Differential Pair Integrator (DPI) synapse51 to elicit a postsynaptic current, Isyn. In the alternative case, when the differential synapse is programmed to represent a low weight, the positive device resistance is greater than the negative device resistance. Therefore Ineg > Ipos and the normalizer circuit does not convey a current. Figure 4b presents post-layout simulation results showing how Inorm varies with the ratio of the positive and negative device conductance. When the ratio is < 1, Inorm is zero, conversely when the ratio is >1, Inorm is large enough to elicit a postsynaptic current. For large ratios between the positive and negative devices, translating to a large on-off ratio, the differential synapse and normalizer circuit is able to source a current that is closer to norm_bias.

a A read pulse with a width of 500 μs activates the normalizer circuit, sourcing Ineg and Ipos. The circuit outputs a non-zero current, Inorm, if Ipos > Ineg, which is integrated by a DPI synapse, resulting in a current Isyn sent to the neuron. The left panel shows high weight storage (Rpos < Rneg), eliciting a response, while the right panel shows low weight storage (Rneg < Rpos), where no current is integrated. b With Rpos = 1GΩ, device capacitance of C = 100 fF, and a read pulse width of 500 μs, the relative resistances of both devices are varied by sweeping Rneg. The average output current of the normalizer circuit is measured as a % of norm_bias, showing non-zero current when the positive device’s conductance exceeds that of the negative device. c Simulations explore device characteristics’ impact on compatibility with TEXEL. The cross ( × ) represents a device with C = 100 fF, Gon/Goff = 100, Ron = 1 GΩ, and a read pulse width of 500 μs. Heatmaps indicate average current from the normalizer as a percentage of norm_bias. d A sweep of the device’s capacitance versus its on/off ratio is shown with Ron fixed at 1 GΩ.

The interfacing circuitry provides substantial protection against variations and drift in the integrated memristive devices. By employing a pair of memristive devices programmed in opposing states and connecting them to a normalizer circuit, we achieve a considerable reduction in output current variability. This configuration maintains robust performance as long as a sufficient difference between the High Resistance State (HRS) and Low Resistance State (LRS) is preserved. The impact of device drift can be assessed using Fig. 4c, where drift phenomena would manifest as horizontal movement on the graph, typically trending toward reduced Gon/Goff ratios. This representation allows for empirical evaluation of how resistance state drift affects the differential normalizer synapse performance over time. The circuit’s tolerance to such changes highlights its suitability for real-world applications where device stability remains challenging.

Memristive device requirements

To quantify the compatibility of TEXEL with co-integrated memristive devices we performed extensive Spectre post-layout simulations of the CMOS interface circuitry with realistic device characteristics, over several orders of magnitude. We parameterized all simulations using a fixed read voltage pulse width of 500 μs and a norm_bias of 200 nA, however these can be varied using the on-chip programming and biasing. Figure 4c shows a heat-map of a 2D logarithmic device characteristic sweep during which the on-off conductivity ratio of the device was varied with the on-resistance. This heat-map shows the percentage of the norm_bias of the normalizer circuit that was sourced during a read voltage pulse that was sent to the differential device synapse when storing a high weight. This is used as the metric determining whether a memristive device will operate as expected when integrated with the TEXEL chip and defines the compatibility. Similarly, we performed simulations varying the on-off conductivity ratio and capacitance of the device (Fig. 4d), here the same metric of compatibility is used. This is an additional memristive device constraint that must be satisfied to ensure successful integration with CMOS and one that is often overlooked. Table 1 presents the integration specifications derived from the aforementioned simulations, operating voltages and circuit footprints.

To more comprehensively evaluate the operation of the differential normalizer synapse, we conducted Spectre simulations using an open-source VCM compact memristive device model52. The simulation protocol involved repeatedly switching a single synapse between potentiated and depressed states, corresponding to high and low conductivity states, respectively (Fig. 5a). During each cycle, a presynaptic spike was sent to the synapse, and the resulting postsynaptic current was measured through the differential normalizer circuit. Crucially, the postsynaptic current was only significant when the synapse was in the potentiated state. The simulation was run over several minutes, encompassing numerous device cycles to mimic the weight dynamics of an SNN during learning and synaptic consolidation. The compact model incorporated cycle-to-cycle variability, which is evident in the histogram of charge sourced by the postsynaptic current (Fig. 5b). Despite the inherent variability in conductivity states between cycles, the proposed circuitry effectively discriminates between high and low weight states, with no overlap in the encoded states.

a Potentiation (POT) and depression (DEP) events arriving at the synapse execute the complementary set and reset of the two devices in the differential configuration. Presynaptic spikes arriving at the synapse read the state of the devices through the normalizer circuit and the rescaled current is integrated by a DPI circuit providing an EPSC, Isyn, to the associated neuron. Sequential POT and DEP events cycle the weight stored by the synapse, with presynaptic spikes occurring between cycles. b During the cycling of the state of the differential normalizer synapse, the current sourced through the DPI synapse circuit during a presynaptic spike was integrated to calculate the synaptic charge conveyed to the neuron. The distribution of this charge for high and low weight storage is shown, attributed to the cycle-to-cycle variability simulated in the compact model of the memristive device.

Power measurements

Extensive power measurements were conducted on the TEXEL chip using a femtoampere Source Measurement Unit (SMU) to assess its power distribution across operations for the analog and digital power sources. These measurements were performed on the CMOS chip and do not account for integrated devices. When considering a complete system with integrated memristive devices, the total power consumption would include additional contributions from the switching and reading operations of these devices. Memristive technologies that require low switching energy and can operate with low read voltages and currents would be optimal for maintaining energy efficiency in a complete system, particularly for learning operations that involve frequent weight updates. Figure 6a, c show how the dynamic power consumption varies with the global spike rate of the chip, this was modulated by increasing the DC input bias for all neurons. The total dynamic power consumption is divided into the contributions of the isolated digital, analog and padframe power supplies. The digital circuitry accounts for all programming and spike routing, while the analog circuitry encompasses neurons, synapses, learning circuits, and analog parameter generation. During these experiments, the learning circuitry was turned off. The energy per spike was also calculated for varying spiking rates (Fig. 6b, d). Figure 6e, f show the same power contribution breakdown for synaptic operations with the energy required per operation. This experiment was performed by increasing the input spike rate over the Address Event Representation (AER) bus, randomly addressing all synapses on the chip, over both cores. Figure 6g shows the breakdown of the static power consumption of the chip, measured at 27.4 μW.

a Dynamic power consumption versus postsynaptic event rate, measured for the three isolated power supplies. b Energy per spike for increasing mean firing rates across each power supply. c Dynamic power consumption of the analog power supply against postsynaptic event rate. d Energy consumed per spike versus mean firing rate per neuron, for the analog power supply. e Dynamic power consumption during random synaptic stimulation at increasing input event rates. f Energy consumption per synaptic operation against input event rate. g Breakdown of static power consumption while neurons are inactive and synapses are unstimulated. All error bars represent measurement uncertainty.

On-chip spiking neural network

We evaluated the network-level functionality and on-chip learning capabilities of the TEXEL processor by implementing an abstract set-membership task within an SNN, using the formalism of VSAs, also known as Hyperdimensional Computing53,54,55,56. VSAs represent symbolic data with high-dimensional random vectors, known as hypervectors, such that the information is distributed across many neurons. They are particularly well-suited for neuromorphic hardware implementation, in part due to their emergent robustness properties at high dimensionality, making them naturally compatible with the circuit-to-circuit variability in mixed-signal computing substrates57,58,59,60. Specifically, we generated High Dimensional (HD) vectors to represent semantic objects (vehicles and colors), then bundled them together by a superposition operation to generate HD vectors representing sets of objects (Fig. 7a). We used sparse binary block code vector representations61,62 such that vectors were partitioned into blocks of length L, with each block containing a single randomly positioned 1. Consequently, each atomic hypervector v ∈ {0, 1}N (N = 2000) maintained a sparsity of \(1-\frac{1}{L}\). The bundled set hypervectors are given by

where ⊕ is an element-wise add-and-threshold operation, implementing the bundling, and the vectors on the RHS are independently generated. These bundled representations can then be probed to verify whether an object exists within the sets they represent. Set membership is determined by measuring the overlap between a query vector and the bundled vector stored in memory, with significant overlap indicating membership.

a Sparse HD vectors representing semantic objects were bundled ( ⊕ ) to form vectors representing sets of objects. b The single-layer network configuration, HD vectors were converted to spike trains determined by the presence of 1s in the vector, where each 1 was translated to a single spike sent to a synapse based on the mapping between vector dimension and synapse/neuron id. c Spike patterns representing sets were presented to the SNN along with teaching signals, provided through static excitatory synapses. The plastic synapses receiving the input spike pattern and the teacher signals potentiated their state using the learning circuitry. d A readout of the weights matrix of the SNN before learning the input patterns, all synapses are in the low weight state. e A readout after learning, a subset of synapses have potentiated to the high weight state. f During inference, the SNN is sent spike patterns (g) representing classes stored within the learned sets of colors and vehicles. h The response of the network to the input patterns, the neuron populations corresponding to the set in which the object is stored respond with postsynaptic spikes. i A readout of the spike rate of the populations encoding for the sets demonstrate the correct response to the input spike vectors. An object not contained in either learned set is shown and network does not provide a response.

This operation can be efficiently realized by a single-layer neural network classifier. Due to the on-chip network size constraints, we realized the 2000-dimensional vector representations using 80 neurons randomly distributed across the chip, with two populations of 40 neurons (each utilizing 50 synapses) encoding each class (vehicles or colors) (Fig. 7b). Initially, all plastic synaptic weights were configured to the depressed state (Fig. 7d). The network was then presented with spiking versions of vvehicles or vcolours, with teaching signals provided through non-plastic synapses (Fig. 7c). The temporal relationship between presynaptic (pattern) spikes and postsynaptic spikes (induced by presynaptic teacher spikes) proved sufficient to potentiate a subset of synapses for pattern learning (Fig. 7e). Following this one-shot learning phase, plasticity was switched off to prevent further learning, and test patterns representing objects stored within the learned sets were presented (Fig. 7f, g). When subsequently presented with any of the atomic hypervectors (e.g., vcyan), the neurons designated to represent each class gave the correct responses (Fig. 7h, i). Importantly, when presented with an object not stored in any set, the network showed no response, demonstrating successful specification to target HD representations. The implementation of these VSA operations through Hebbian auto-associative memories in neuromorphic hardware represents a promising step toward more sophisticated semantic and analogical reasoning in ultra-low-power computing systems63.

Discussion

Recent advancements have produced only a few successfully co-integrated large-scale CMOS-memristor neuromorphic systems64,65,66,67,68, with most relying on foundry assistance65,66,67,68. This lack of co-integration is a key challenge in advancing such systems, emphasizing the importance of wafer-level integration platforms to establish compatibility with CMOS technology and to progress neuromorphic chip development. In this work, we introduced TEXEL, an SNN processor with on-chip learning circuits capable of interfacing with a large range of memristive devices and their operation requirements (Table 1). TEXEL functions in both full-CMOS and device-integrated modes, offering versatility to explore emerging memristive technologies within the context of a spiking neuromorphic system. The processor supports a wide range of device interfacing options, including read-write pulse widths from 10 ns to 100 ms, continuous read and pre-charge modes (see Supplementary Section A4), and high-voltage compatibility up to 5 V. The architecture further extends its compatibility to include both two-terminal devices and three-terminal technologies such as Ferroelectric Field-Effect Transistors (FeFET)69,70.

The chip prioritizes flexibility over efficiency by supporting multiple device debug modes and allowing operation without devices for CMOS circuit verification. This design approach enables thorough benchmarking and debugging of CMOS-memristor circuits through comprehensive signal monitoring capabilities (see Supplementary Table 1). However, while TEXEL’s broad compatibility with Non-Volatile Memory (NVM) devices provides versatility, it introduces significant area overhead (see Table 2 and Supplementary Fig. S4a). This overhead primarily stems from necessary components such as voltage level shifters and device interfacing circuitry like the differential normalizer circuit. Since these circuits scale with the number of synapses, which dominate the chip’s silicon real estate, their impact on area efficiency is substantial. Nevertheless, as NVM technology advances toward higher numbers of distinguishable states, a more favorable trade-off between area requirements and synaptic resolution is anticipated. Once a specific NVM technology is selected, future iterations should aim to optimize both density and performance by eliminating the redundant circuitry currently needed to accommodate multiple device types.

The integration of memristive devices and materials with CMOS neuromorphic systems extends beyond storing and reading synaptic weights. These technologies can be incorporated into neuron circuits71 and learning mechanisms72, enhancing characteristics such as time constants and dynamic behaviours. Additionally, emerging devices and materials have shown significant promise in sensory applications73,74, rendering them particularly appealing for integration into sensory front ends that can be interfaced with always-on neuromorphic chips. Research has also revealed how beyond-CMOS devices can enable advanced network features, including the realization of synaptic delays34 and small-world network topologies75, further enhancing functional capabilities of neuromorphic systems. Complementing these advances, ongoing development of monolithic 3D structures presents a promising direction to increase device density and reduce interconnect lengths, addressing fundamental challenges in scaling neuromorphic architectures76,77,78. Collectively, these capabilities position memristive device technology as a key component in the development of efficient and adaptable electronic architectures. Within this evolving landscape, TEXEL serves as a platform aiding the realization of CMOS-memristor neuromorphic systems that can scale effectively while leveraging the advantages of emerging device technologies.

Methods

Chip architecture

With 2 cores of 90 neuron blocks, TEXEL hosts 180 neurons each with 58 complex synapses (Fig. 1b). The chip’s digital periphery operates asynchronously, utilizing handshake protocols between functional blocks79. Robustness was tested through extensive testing for variable switching delays, eliminating the reliance on specific timing constraints. Spike I/O and register operations share an asynchronous pipeline tailored for AER. Demux circuits route incoming packets to either the spike decoder or register block. The decoder translates external AER spike packets, while the encoder processes on-chip neuron spikes for transmission off-chip. The register block comprises 64 23-bit asynchronous memory arrays (per core) used for biasing and programming, each capable of parallel read or write operations. All analog circuitry is biased using a 12-bit DAC (Digital-to-Analog Converter) (see Supplementary Section A3). To enable the integration of two- and three-terminal NVM devices there is interfacing circuitry including terminal contacts placed within each plastic synapse in every neuron block1,80,81 (see Supplementary Fig. S4a). Figure 1 shows the embedding of the neuron blocks and synapses within the chip architecture.

Neuron circuits

The Adaptive Exponential Leaky Integrate-and-Fire (AdExLIF) neuron circuit integrated on TEXEL is the latest iteration of a continuing design evolution that has undergone multiple enhancements to optimize performance1,6,24,82,83,84 (see Supplementary Fig. S2). The implementation of the neuron draws inspiration from the improvements detailed in25, focusing on minimizing power consumption and reducing mismatch. The neuron dynamics are driven by two inputs: a DC input and a somatic input current from the synaptic fan-in, enabling network-level experiments. The somatic input DPI models the neuron’s leak conductance, integrating synaptic currents into the membrane capacitance, producing a membrane current representing the neuron state variable. Between the somatic DPI and spike generation, three modules control membrane current dynamics: a threshold, exponential and refractory module. The threshold module, implemented with a low-power current comparator, triggers a spike at the moment the membrane current exceeds the spiking threshold. The exponential module, implemented with a current-based positive feedback, accelerates the membrane current increase when it is closer to the spiking threshold. Once the neuron generates a spike, the refractory module keeps the neuron silent for a certain time set by the refractory period bias. Furthermore, there is an adaptation module, implemented with a pulse extender and a negative-feedback low-pass filter circuit. This is activated with each output spike event, integrating the neuron’s recent spiking activity. All aforementioned modules can be controlled using seven tunable biases. The neuron circuit is designed to be compatible with AER circuits therefore an asynchronous digital handshaking block is incorporated to transmit spikes as address-events through the AER pipeline.

Synaptic circuits

Each neuron on the TEXEL chip has a synaptic fan-in of 58 synapses, 54 plastic and 4 non-plastic (static). Non-plastic synapses are realized through DPI circuits and activate in response to a presynaptic spike, producing a current with an amplitude that is tunable. Consequently, they can be deactivated by setting the weight bias current to zero. The nature of the non-plastic synapses is predetermined, with two per-neuron designated as excitatory and two as inhibitory (Fig. 1b). The weight of the plastic synapses, updated according to the on-chip local learning rule, is stored on a capacitor on a short-time scale and discretized into two stable states on a long-time scale. The weight update occurs in the analog domain, while the long-term storage takes place in the digital domain. The nature of the plastic synapses (excitatory or inhibitory) can be configured on-chip. Excitatory synapses inject a positive current into the soma, while inhibitory ones draw current away from it. The total synaptic activity, computed as the sum of weighted currents, is transmitted to four different DPIs, each independently tunable.

Learning circuits

Within each plastic synapse there exists a CMOS implementation of the BiCaLL rule44 that can be enabled, making use of signals local to each synapse to facilitate either Hebbian or anti-Hebbian Spike-Driven Synaptic Plasticity (SDSP) (Fig. 3e). A pre-trace, realized by a DPI circuit51, maintains a decaying memory of the presynaptic spike train. If this trace exists between an upper and lower threshold then with the co-occurrence of postsynaptic spikes the synaptic weight is depressed. In parallel, depression can also occur if the post-trace, a short term memory of postsynaptic activity, is above a low threshold and a presynaptic spike occurs. Potentiation occurs on a postsynaptic spike during which the value of the presynaptic trace is sampled from such that the magnitude of potentiation is proportional to the presynaptic trace at that time30. A smooth third trace, realized by a Second-order Differential Pair Integrator (SoDPI) circuit85, is used to track the neurons’ activity, representing the postsynaptic neuron’s Ca2+ concentration. The upper and lower thresholds of the Ca2+ trace establish a stop-learning region, restricting synaptic plasticity to occur only within this range. The weight is stored as a voltage as shown in Figure 3a and is discretized via a voltage threshold. Additionally, a bistability circuit is employed such that over the long time scale the weight drifts towards a binary value. The temporal dynamics of the aforementioned traces, strength of the potentiation/depression events, bistability slew rates and thresholds can all be varied through the biasing of the analog circuitry.

Memristive device integration

To support large-scale integration of plastic memristor-based synapses, the chip is designed with a device-agnostic architecture, ensuring high flexibility and offering multiple probing configurations for different memristive devices. This design accommodates both two- and three-terminal devices, supporting a broad range of operating voltages and currents (see Table 1). Device behaviour can be monitored either through on-chip read-outs of output currents during operation or via off-chip access to all device terminals through the interface circuit (see Supplementary Table 1). Full access to the device terminals enables external burn-in or programming of the memristive devices.

To facilitate BEOL integration, each terminal is accessible through a high-level metal contact with spacing and sizing depicted in Fig. 8a. Three branches in the interface circuitry employ n- and p-type transistors, along with transmission gates, to deliver voltage pulses for reading device states or for potentiating or depressing synaptic weights (Fig. 8b). The operation voltages are provided off-chip as inputs to the padframe with a maximum voltage of 5 V. Digital signals to the transistor gates are internally controlled by a synapse controller circuit which implements synaptic operations and weight updates. We note that an extra idle transistor and idle signal is used to facilitate the possibility of pre-charging the device between read pulses and allow a better distinction between their HRS and LRS currents (see Supplementary Section A4).

Often, device operation specifications are not immediately compatible with the technology node and cannot be compensated for by voltage scaling or pulse length modulation. This can occur when currents are too low or too high, device variability is significant, or the resulting output ranges are undefined. In these cases, scaling and normalizing circuits can be employed. Given this initial assumption about the properties of a device aiming for compatibility, the TEXEL chip uses the difference in state of two devices to store the synaptic weight of each plastic synapse. Therefore the canonical on-chip operation protocol for memristive devices is binary and complementary. As a result, while using the on-chip plasticity, devices are only switched in a binary operation between HRS and LRS, and always in a complementary fashion where if one is in its HRS, the other will be in its LRS. A differential normalizer circuit is used to compare the responses of two devices38 when the synaptic weight it being read. When the synapse is addressed for a read, at a presynaptic spike, the currents are sourced from the devices, Ipos and Ineg, and the normalizer circuit (Fig. 8c) rescales and rectifies the detected difference to the output current range required by the DPI synapse, Inorm. The rescaling factor of the output current can be modulated by the bias norm_bias.

a A diagram illustrating the physical dimensions and spatial arrangement of the source, drain, and gate contacts for two- or three-terminal devices. Each synapse deploys two devices configured differentially, serving as both positive and negative components. The diagram also provides information on the spacing between synaptic rows, depicting the distances between adjacent devices in each synapse. b Schematic of device interface circuitry. All voltages can be set in the range 0 V to 5 V in order to read or write both devices in the differential configuration. c The differential normalizer circuit functions to compare the currents generated by positive and negative devices during a device read, prompted by a presynaptic spike. It evaluates the disparity between these currents and generates an output current, denoted as Inorm, which is proportional to the normalized discrepancy between Ipos and Ineg. Moreover, Inorm is exclusively non-zero when Ipos surpasses Ineg and can be modulated by the bias norm_bias. Consequently, the output represents the binary state of the synapse, and the sourced current is directed towards a DPI circuit for further processing.

Since many memristive devices use the same terminals for both reading and writing, they require exclusive control to prevent conflicts. In other words, when a read and write instruction occur simultaneously, a decision must be made regarding which operation to execute first. To manage this, each plastic synapse has a dedicated control circuit that ensures mutual exclusivity between read and write pulses (see Supplementary Fig. S3). Read instructions are prioritized, therefore if both commands occur concurrently, the write pulse is applied only after the read operation is completed.

Memristive device simulations

Spectre simulations were performed using the circuitry illustrated in Fig. 8, employing a compact VCM device model implemented in Verilog-A52. The voltages applied to the transistors for potentiating, depressing, and reading the device were configured within the operating ranges of the chip (Table 1) and maintained compatibility with the device model. A norm_bias current of 200 nA was used during the simulation. The synapse was repeatedly cycled between high and low conductivity states by applying depression and potentiation signals, with complementary device switching occurring through the device controller logic. During each cycle, a presynaptic spike was delivered to the synapse to induce a postsynaptic current. This current was measured and compared to the known state of the synapse to verify that the correct current magnitude was sourced relative to the conductivity state of the device. The simulation was run for a total of 3 minutes, with the synapse being cycled at a rate of 1 cycle per second and read operations performed at 2 presynaptic spikes (reads) per second.

On-chip spiking neural network

VSA hypervectors were generated off-chip with a dimensionality of 2000, using sparse binary block codes. Each vector was partitioned into blocks of length L = 20, with each block containing a single randomly positioned 1, maintaining a sparsity of 95%. Object representations were bundled (⊕) off-chip using element-wise addition and thresholding at 1 to create set vectors for vehicles and colors. The 2000-dimensional hypervectors were mapped to spike trains using a one-hot single spike encoding scheme, where 0 in the vector corresponded to no spike and 1 corresponded to a single spike on the associated synapse. These inputs were distributed randomly across 80 neurons on a single core of the TEXEL processor, with synaptic fan-in connections spanning the chip. Two distinct populations of 40 neurons each were designated to encode the two sets (vehicles and colours). Prior to learning, the weights of all plastic synapses were initialized to the low weight state. During the learning phase, each set pattern was presented simultaneously to both neuron populations, with the target population receiving additional teacher signals via non-plastic synapses. These teacher signals induced postsynaptic spikes in the designated population. The temporal coincidence between presynaptic spikes (representing the input pattern) and the teacher-induced postsynaptic spikes triggered synaptic strengthening according to the STDP relationship shown in Fig. 7c. This one-shot learning procedure was performed sequentially for both populations to encode their respective set vectors (vehicles and colours). For inference, vectors representing individual items from each set were presented simultaneously to both populations, and neuronal activity was recorded. Membership was determined by the response of the respective neuron population, with significant activity indicating set membership. The synaptic weight matrix was read out before and after the learning phase to verify changes in connectivity strength.

Data availability

All data and methods needed to evaluate our conclusions are presented in the main text and Supplementary Material. No extensive datasets were generated as a result of this study.

Code availability

The code generated to interface with the chip presented in this study is available at https://github.com/async-ic/uC-chip-interface-arduino86.

References

Richter, O. et al. DYNAP-SE2: a scalable multi-core dynamic neuromorphic asynchronous spiking neural network processor. Neuromorphic Comput. Eng. 4, https://doi.org/10.1088/2634-4386/ad1cd7 (2024).

Pehle, C. et al. The BrainScales-2 accelerated neuromorphic system with hybrid plasticity. Front. Neurosci. 16, 795876 (2022).

Gonzalez, H. A. et al. SpiNNaker2: a large-scale neuromorphic system for event-based and asynchronous machine learning. ArXiv,2401.04491 (2024).

Orchard, G. et al. Efficient neuromorphic signal processing with Loihi 2. In IEEE Workshop on Signal Processing Systems (SiPS), 254–259 (2021).

Davies, M. et al. Loihi: A neuromorphic manycore processor with on-chip learning. IEEE Micro 38, 82–99 (2018).

Moradi, S., Qiao, N., Stefanini, F. & Indiveri, G. A scalable multicore architecture with heterogeneous memory structures for dynamic neuromorphic asynchronous processors (DYNAPs). IEEE Trans. Biomed. Circ. Syst. 12, 106–122 (2018).

Benjamin, B. V. et al. Neurogrid: a mixed-analog-digital multichip system for large-scale neural simulations. Proc. IEEE 102, 699–716 (2014).

Sebastian, A., Le Gallo, M., Khaddam-Aljameh, R. & Eleftheriou, E. Memory devices and applications for in-memory computing. Nat. Nanotechnol. 15, 529–544 (2020).

Christensen, D. V. et al. 2022 Roadmap on neuromorphic computing and engineering. Neuromorphic Comput. Eng. 2, 022501 (2022).

Yang, J. J., Strukov, D. B. & Stewart, D. R. Memristive devices for computing. Nat. Nanotechnol. 8, 13–24 (2013).

Lanza, M. et al. The growing memristor industry. Nature 640, 613–622 (2025).

Chicca, E. & Indiveri, G. A recipe for creating ideal hybrid memristive-CMOS neuromorphic processing systems. Appl. Phys. Lett 116, 120501 (2020).

Jia, H. et al. 15.1 a programmable neural-network inference accelerator based on scalable in-memory computing. In IEEE International Solid-State Circuits Conference (ISSCC), Vol. 64, 236–238 (2021).

Keller, B. et al. A 17-95.6 TOPS/W deep learning inference accelerator with per-vector scaled 4-bit quantization for transformers in 5nm. In IEEE Symposium on VLSI Technology and Circuits (VLSI Technology and Circuits), 16–17 (2022).

Khaddam-Aljameh, R. et al. HERMES-core-a 1.59-TOPS/mm2 PCM on 14-nm CMOS in-memory compute core using 300-ps/LSB linearized CCO-based ADCs. IEEE J. Solid-State Circ. 57, 1027–1038 (2022).

Le Gallo, M. et al. A 64-core mixed-signal in-memory compute chip based on phase-change memory for deep neural network inference. Nat. Electron. 6, 680–693 (2023).

Donati, E., Payvand, M., Risi, N., Krause, R. & Indiveri, G. Discrimination of EMG signals using a neuromorphic implementation of a spiking neural network. IEEE Trans. Biomed. Circuits Syst. 13, 795–803 (2019).

Bauer, F. C., Muir, D. R. & Indiveri, G. Real-time ultra-low power ECG anomaly detection using an event-driven neuromorphic processor. IEEE Trans. Biomed. Circ. Syst. 13, 1575–1582 (2019).

Sharifshazileh, M., Burelo, K., Sarnthein, J. & Indiveri, G. An electronic neuromorphic system for real-time detection of high frequency oscillations (HFO) in intracranial EEG. Nat. Commun. 12, 3095 (2021).

Fra, V. et al. Human activity recognition: suitability of a neuromorphic approach for on-edge AIoT applications. Neuromorphic Comput. Eng. 2, 014006 (2022).

Bartels, J. et al. Event driven neural network on a mixed signal neuromorphic processor for EEG based epileptic seizure detection. Sci. Rep. 15, 15965 (2025).

Frenkel, C., Bol, D. & Indiveri, G. Bottom-up and top-down approaches for the design of neuromorphic processing systems: Tradeoffs and synergies between natural and Artificial Intelligence. Proc. IEEE 111, 623–652 (2023).

Liu, S. C., Kramer, J., Indiveri, G., Delbruck, T. & Douglas, R. J. Analog VLSI: circuits and principles (MIT Press, 2002).

Chicca, E., Stefanini, F., Bartolozzi, C. & Indiveri, G. Neuromorphic electronic circuits for building autonomous cognitive systems. Proc. IEEE 102, 1367–1388 (2014).

Rubino, A., Livanelioglu, C., Qiao, N., Payvand, M. & Indiveri, G. Ultra-low-power FDSOI neural circuits for extreme-edge neuromorphic intelligence. IEEE Trans. Circuits Syst. I: Regul. Pap. 68, 45–56 (2021).

Khacef, L. et al. Spike-based local synaptic plasticity: a survey of computational models and neuromorphic circuits. Neuromorphic Comput. Eng. 3, 042001 (2023).

George, R. et al. Plasticity and adaptation in neuromorphic biohybrid systems. Iscience, 23, 101589 (2020).

Qiao, N. et al. A re-configurable on-line learning spiking neuromorphic processor comprising 256 neurons and 128K synapses. Front. Neurosci. 9, 141 (2015).

Chicca, E. et al. A VLSI recurrent network of integrate-and-fire neurons connected by plastic synapses with long-term memory. IEEE Trans. Neural Netw. 14, 1297–1307 (2003).

Indiveri, G., Chicca, E. & Douglas, R. J. A VLSI array of low-power spiking neurons and bistable synapses with spike-timing dependent plasticity. IEEE Trans. Neural Netw. 17, 211–221 (2006).

Bartol, J. et al. Nanoconnectomic upper bound on the variability of synaptic plasticity. eLife 4, e10778 (2015).

Amit, D. J. & Fusi, S. Learning in neural networks with material synapses. Neural Comput. 6, 957–982 (1994).

Cartiglia, M. et al. Stochastic dendrites enable online learning in mixed-signal neuromorphic processing systems. In IEEE International Symposium on Circuits and Systems (ISCAS), 476–480 (2022).

D’Agostino, S. et al. DenRAM: neuromorphic dendritic architecture with RRAM for efficient temporal processing with delays. Nat. Commun. 15, 3446 (2024).

Zhang, X. et al. Hybrid memristor-CMOS neurons for in-situ learning in fully hardware memristive spiking neural networks. Sci. Bull. 66, 1624–1633 (2021).

Payvand, M., Fouda, M. E., Kurdahi, F., Eltawil, A. M. & Neftci, E. O. On-chip error-triggered learning of multi-layer memristive spiking neural networks. IEEE J. Emerg. Sel. Top. Circ. Syst. 10, 522–535 (2020).

Camuñas-Mesa, L. A., Vianello, E., Reita, C., Serrano-Gotarredona, T. & Linares-Barranco, B. A CMOL-like memristor-CMOS neuromorphic chip-core demonstrating stochastic binary STDP. IEEE J. Emerg. Sel. Top. Circuits Syst. 12, 898–912 (2022).

Nair, M. V., Muller, L. K. & Indiveri, G. A differential memristive synapse circuit for on-line learning in neuromorphic computing systems. Nano Futures 1, 035003 (2017).

Zhao, Z. et al. A memristor-based spiking neural network with high scalability and learning efficiency. IEEE Trans. Circuits Syst. II: Express Briefs 67, 931–935 (2020).

Wang, Z. et al. Fully memristive neural networks for pattern classification with unsupervised learning. Nat. Electron. 1, 137–145 (2018).

Song, S., Miller, K. & Abbot, L. Competitive Hebbian learning through spike-timing-dependent plasticity. Nat. Neurosci. 3, 919–926 (2000).

Brader, J., Senn, W. & Fusi, S. Learning real world stimuli in a neural network with spike-driven synaptic dynamics. Neural Comput. 19, 2881–2912 (2007).

Perez-Nieves, N., Leung, V. C., Dragotti, P. L. & Goodman, D. F. Neural heterogeneity promotes robust learning. Nat. Commun. 12, 5791 (2021).

Girão, W. S., Risi, N. & Chicca, E. Learning in spiking neural networks with a Calcium-based Hebbian rule for spike-timing-dependent plasticity. ArXiv,2504.06796 (2025).

Hebb, D. O. The organization of behavior: a neuropsychological theory (Taylor & Francis, 1949).

Senn, W. & Fusi, S. Learning only when necessary: better memories of correlated patterns in networks with bounded synapses. Neural Comput. 17, 2106–2138 (2005).

Mitra, S., Fusi, S. & Indiveri, G. Real-time classification of complex patterns using spike-based learning in neuromorphic VLSI. IEEE Trans. Biomed. Circuits Syst. 3, 32–42 (2009).

Rubino, A., Cartiglia, M., Payvand, M. & Indiveri, G. Neuromorphic analog circuits for robust on-chip always-on learning in spiking neural networks. In IEEE 5th International Conference on Artificial Intelligence Circuits and Systems (AICAS), 1–5 (2023).

Bocquet, M. et al. In-memory and error-immune differential RRAM implementation of binarized deep neural networks. In IEEE International Electron Devices Meeting (IEDM), 20.6.1–20.6.4 (2018).

Hirtzlin, T. et al. Digital biologically plausible implementation of binarized neural networks with differential hafnium oxide resistive memory arrays. Front. Neurosci. 13, https://doi.org/10.3389/fnins.2019.01383 (2020).

Bartolozzi, C. & Indiveri, G. Synaptic dynamics in analog VLSI. Neural Comput. 19, 2581–2603 (2007).

Wiefels, S. et al. HRS instability in oxide-based bipolar resistive switching cells. IEEE Trans. Electron Devices 67, 4208–4215 (2020).

Kanerva, P. Hyperdimensional computing: an introduction to computing in distributed representation with high-dimensional random vectors. Cogn. Comput. 1, 139–159 (2009).

Plate, T. A. Holographic reduced representations. IEEE Trans. Neural Netw. 6, 623–641 (1995).

Gayler, R. W. Multiplicative binding, representation operators & analogy. Adv. Analogy Res. Integrat. Theory Data Cognit. Comput. Neural Sci. (1998).

Kleyko, D., Rachkovskij, D. A., Osipov, E. & Rahimi, A. A survey on hyperdimensional computing aka vector symbolic architectures, part I: models and data transformations. ACM Comput. Surv. 55, 130:1–130:40 (2022).

Cotteret, M. et al. Distributed representations enable robust multi-timescale symbolic computation in neuromorphic hardware. Neuromorphic Comput. Eng. 5, 014008 (2025).

Renner, A. et al. Neuromorphic visual scene understanding with resonator networks. Nat. Mach. Intell. 6, 641–652 (2024).

Frady, E. P. & Sommer, F. T. Robust computation with rhythmic spike patterns. Proc. Natlm Acad. Sci. USA 116, 18050–18059 (2019).

Kleyko, D. et al. Vector symbolic architectures as a computing framework for emerging hardware. Proc. IEEE 110, 1538–1571 (2022).

Laiho, M., Poikonen, J. H., Kanerva, P. & Lehtonen, E. High-dimensional computing with sparse vectors. In IEEE Biomedical Circuits and Systems Conference (BioCAS), 1–4. (2015).

Frady, E. P., Kleyko, D. & Sommer, F. T. Variable binding for sparse distributed representations: theory and applications. IEEE Trans. Neural Netw. Learn. Syst. 34, 2191–2204 (2023).

Kleyko, D., Rachkovskij, D., Osipov, E. & Rahimi, A. A survey on hyperdimensional computing aka vector symbolic architectures, part ii: applications, cognitive models, and challenges. ACM Comput. Surv. 55, 1–52 (2023).

Yan, B. et al. RRAM-based spiking nonvolatile computing-in-memory processing engine with precision-configurable in situ nonlinear activation. In IEEE Symposium on VLSI Technology, T86–T87 (2019).

Wan, W. et al. 33.1 A 74 TMACS/W CMOS-RRAM neurosynaptic core with dynamically reconfigurable dataflow and in-situ transposable weights for probabilistic graphical models. In IEEE International Solid-State Circuits Conference (ISSCC), 498–500 (2020).

Wan, W. et al. A compute-in-memory chip based on resistive random-access memory. Nature 608, 504–512 (2022).

De Los Ríos, I. D. et al. A multi-core memristor chip for Stochastic Binary STDP. In IEEE International Symposium on Circuits and Systems (ISCAS), 1–5 (2023).

Valentian, A. et al. Fully integrated spiking neural network with analog neurons and RRAM synapses. In IEEE International Electron Devices Meeting (IEDM), 14–3 (2019).

Falcone, D. F., Halter, M., Bégon-Lours, L. & Offrein, B. J. Back-end, CMOS-compatible ferroelectric FinFET for synaptic weights. Front. Electron. Mater. 2, 849879 (2022).

Dutta, S. et al. Logic compatible high-performance ferroelectric transistor memory. IEEE Electron Device Lett. 43, 382–385 (2022).

Choi, S., Yang, J. & Wang, G. Emerging memristive artificial synapses and neurons for energy-efficient neuromorphic computing. Adv. Mater. 32, 2004659 (2020).

Demirağ, Y. et al. PCM-trace: Scalable synaptic eligibility traces with resistivity drift of phase-change materials. In IEEE International Symposium on Circuits and Systems (ISCAS), 1–5 (2021).

Dai, S. et al. Emerging iontronic neural devices for neuromorphic sensory computing. Adv. Mater. 35, 2300329 (2023).

Lenk, C. et al. Neuromorphic acoustic sensing using an adaptive microelectromechanical cochlea with integrated feedback. Nat. Electron. 6, 370–380 (2023).

Dalgaty, T. et al. Mosaic: in-memory computing and routing for small-world spike-based neuromorphic systems. Nat. Commun. 15, 142 (2024).

Zhang, Y. et al. Monolithic 3D Integration of Multi-Layer CNT-CMOS/RRAM Macros for Mixed-Precision Analog-Digital Computing-in-Memory Architecture. In IEEE International Electron Devices Meeting (IEDM), 1–4 (2024).

Li, Y. et al. Monolithic three-dimensional integration of RRAM-based hybrid memory architecture for one-shot learning. Nat. Commun. 14, 7140 (2023).

Boahen, K. Dendrocentric learning for synthetic intelligence. Nature 612, 43–50 (2022).

Ataei, S. et al. An open-source eda flow for asynchronous logic. IEEE Des. Test. 38, 27–37 (2021).

Neckar, A. et al. Braindrop: A mixed-signal neuromorphic architecture with a dynamical systems-based programming model. Proc. IEEE 107, 144–164 (2019).

Park, J., Ha, S., Yu, T., Neftci, E. & Cauwenberghs, G. A 22-pJ/spike 73-Mspikes/s 130k-compartment neural array transceiver with conductance-based synaptic and membrane dynamics. Front. Neurosci. 17, 1198306 (2023).

Indiveri, G. Neuromorphic selective attention systems. In IEEE International Symposium on Circuits and Systems (ISCAS) III–III (2003).

Livi, P. & Indiveri, G. A current-mode conductance-based silicon neuron for address-event neuromorphic systems. In IEEE International Symposium on Circuits and Systems (ISCAS), 2898–2901 (2009).

Qiao, N., Bartolozzi, C. & Indiveri, G. Automatic gain control of ultra-low leakage synaptic scaling homeostatic plasticity circuits. In IEEE Biomedical circuits and systems conference (BioCAS), 156–159 (2016).

Richter, O. et al. A subthreshold second-order integration circuit for versatile synaptic alpha kernel and trace generation. In ACM Proceedings of the International Conference on Neuromorphic Systems, 1–4 (2023).

Richter, O. et al. A PC interface for small and simple async and neuromorphic IC test chips. https://zenodo.org/records/15607379 (2025).

Acknowledgements

The authors would like to thank Adrian Whatley, Vincent Jassies and Herman Adema for their technical support in developing PCBs, μC firmware and the TEXEL API. Additionally we would like to thank Nicoletta Risi and Matei Zainea for their investigations into algorithms and hardware. Thanks to Ton Juny Pina for his help soldering PCBs. Finally, we would like to thank Erika Covi, Luca Fehlings, Paolo Gibertini, Giuseppe Leo and Ton Juny Pina for their feedback on the manuscript. This work was supported by: the EU H2020 RIA project BeFerroSynaptic (871737) (A. R., E. C., G. I., J. C., M. F., P. K., and L. B. L.), the EU H2020 MSCA projects NeuTouch (813713) (E. C. and M. M.) and MANIC (861153) (E. C., W. S. G.), the ERC Synergy grant SWIMS (101119062) (E. C.), the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) projects MemTDE (441959088, part of the DFG priority program SPP 2262 MemrisTec, 422738993) (E. C., H. G.) and NMVAC (432009531) (E. C., M. C., and M. Z.). The authors would like to acknowledge the financial support of the CogniGron research center and the Ubbo Emmius Funds (University of Groningen) (E. C., H. G., M. C., M. F., M. M., O. R., P. K., and W. S. G.). The authors would like to thank IC Manage, Inc for providing us with their Global Design Platform XL for design data management.

Author information

Authors and Affiliations

Contributions

Conceptualization - A. R., E. C., G. I., H. G., J. C., M. C., M. F., M. M., O. R., P. K., and W. S. G.; Methodology - A. R., E. C., G. I., H. G., J. C., M. C., M. F., M. M., O. R., P. K., and W. S. G.; Software/Hardware - A. R., E. C., G. I., H. G., J. C., M. C., M. F., M. M., O. R., P. K., and W. S. G.; Investigation - A. R., E. C., G. I., H. G., M. M., and O. R.; Writing - A. R., E. C., G. I., H. G., M. C., M. F., L. B. L., M. M., M. Z., O. R., and P. K.; Visualization - E. C., G. I., H. G., M. C., M. M., and O. R.; Supervision - E. C. and G. I. The following authors contributed significantly to the CMOS design of the TEXEL chip - A. R., E. C., G. I., H. G., J. C., M. C., M. F., M. M., O. R., P. K., and W. S. G. Authors O. R. and M. M. conducted the work while affiliated with the BICS lab and CogniGron at the University of Groningen.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks the anonymous reviewers for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Greatorex, H., Richter, O., Mastella, M. et al. A neuromorphic processor with on-chip learning for beyond-CMOS device integration. Nat Commun 16, 6424 (2025). https://doi.org/10.1038/s41467-025-61576-6

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41467-025-61576-6