Abstract

The training and testing datasets for point cloud registration networks often fail to satisfy the independent and identically distributed assumption, which leads to significant performance degradation and poor generalization. To address this problem, point cloud data post-processing methods were proposed to construct a dataset with abundant domain discrepancy variables. Specifically, these variables were introduced into the training data by optimizing spatial sampling and temporal interval frame matching. A total of 63461 point cloud registration data pairs were produced. A 31.8% improvement in accuracy was demonstrated compared with the benchmark dataset. The network generalization, quantified by the one-step generalization ratio, reached 0.9832. The dataset significantly improves generalization and provides reference data for cross-domain research on point cloud registration.

Background & Summary

Point cloud registration (PCR) is the task of computing the transformation matrix between two frames of point clouds, and it plays an important role in robot navigation, autonomous driving, and augmented reality1. Cross domain generalization refers to the ability of a network to perform tasks in an unknown target domain. Insufficient generalization usually shows up during cross domain testing as a drop in accuracy relative to the original domain. Traditional neural network-based PCR models are typically trained under the assumption of independent and identically distributed training and testing data2. However, for LiDAR sensors, there are obvious cross domain factors such as sensor resolution, detection range, and acquisition environment3,4,5, which lead to a notable degradation of network generalization6. The mainstream approach to overcoming this issue is to try to cover all features of the testing data by enlarging the training datasets. However, in applications such as autonomous driving and robotics7, it is hard to acquire large-scale datasets8. Therefore, new approaches other than simply enlarging datasets are needed to solve this problem.

Three main approaches have been proposed to enhance network generalization. The first is multi-domain joint training, in which a variational attention technique is introduced into the network by modifying the attention distributions. This achieved gains of only 24% in MAE (Mean Absolute Error) on the NWPU dataset9. However, this kind of network focuses on only part of the high-level features and ignores the low-level domain features, resulting in lower generalization. The second approach focuses on optimizing the feature extractor. Peng et al. first proposed a two-stage matching and registration algorithm, in which ESF (Ensemble of Shape Functions) was applied to coarsely extract the features of point clouds, and ICP (Iterative Closest Point) was then used to finely extract the features. The proposed two-stage algorithm ran much faster than the single-stage ICP baseline but suffered from low accuracy10. In addition, generalization was enhanced by extracting local geometric details and global shape properties using relative scale estimation of multi-modal geometric data11. Generalization was also enhanced by paying more attention to global information than to local structural distortion12, where a Gaussian mixture model was used to estimate the transformation. Recently, a fast semi-supervised approach was proposed that minimizes a feature-metric projection error without correspondences, but it was validated only for a limited range of initial angles (less than 60 degrees)13. These networks often require a large amount of labeled data, which prolongs the training process and makes fine-tuning difficult14. The third approach is to augment the network training dataset. Randomizing synthetic images with the visual styles of real images, drawn from auxiliary datasets, was proposed to effectively learn domain-invariant representations. This technique improved the point cloud segmentation of state-of-the-art networks by only 5%-12% in different domains15.

The existing methods are usually trained and evaluated on single-domain data, which prevents the network from learning more diverse data distributions and thus limits its generalization. Building multi-domain datasets prevents the network from overfitting to a single domain, enabling it to learn universal cross domain features while avoiding complex network optimization and specific structural adjustments16. In addition, rich datasets can provide the network with diverse cross domain feature differences, thereby improving its generalization ability. However, the existing datasets still lack feature diversity, which limits further improvement of generalization. There is therefore a lack of cross domain point cloud datasets for studying and improving the cross domain performance of networks17. Very recently, a cross domain dataset was built from cross domain point cloud pairs to train the existing state-of-the-art registration network. However, this dataset contains only 165 point cloud pairs, obtained by manually aligning the ground-truth transformation and removing outliers, which resulted in low efficiency and large deviations18. Thus, there is an urgent need for a cross domain PCR dataset that includes rich cross domain factors.

To obtain a cross domain PCR dataset containing rich cross domain factors, we built a dataset from self-collected data using spatial consistency key point cloud extraction, random frame selection-based sparse registration, and variable spatial resolution, thereby introducing abundant domain discrepancy variables. The one-step generalization ratio of the network increased to 0.9832, and, compared with the KITTI19 dataset, the accuracy of the GeoTransformer network improved by 31.8%. Our work provides a high-quality dataset for the domain migration task.

Methods

Network generalization on the common datasets

To evaluate network generalization on common datasets, experiments were conducted with three different types of deep learning networks, PCRNet20, HRegNet21, and GeoTransformer22, each trained on four widely adopted benchmark datasets: ModelNet4023, WHU-TLS24, KITTI, and nuScenes25. The ModelNet40 dataset contains 40 common object categories. The WHU-TLS dataset is a ground-based laser scanning dataset containing complex urban environment point cloud data. The KITTI dataset contains diverse point cloud data encompassing vehicles, pedestrians, and buildings. The nuScenes dataset includes a wealth of traffic scenes and ambient point cloud data. By training the selected PCR networks on these diverse datasets, we aim to comprehensively analyze their generalization and identify any potential performance gaps or biases across the varying data distributions.

To compare the generalization ability, we use the original network configuration and hyperparameters of each selected PCR network. Each network is trained for 40 epochs on each dataset with CUDA acceleration on an NVIDIA Tesla A100 GPU. After training, we test the transferability of the network on unknown object categories and its generalization on unknown datasets. For each network, we use different datasets for training and testing. The relative rotation error (RRE) and relative translation error (RTE) of the test samples are obtained; samples with RRE ≤ 0.5° and RTE ≤ 20 cm are considered positive matches, and the proportion of positive matches among all test samples is called the accuracy. If the difference between the training accuracy and the test accuracy of the network is small, that is, the error bar is short, its generalization performance is good. The accuracy changes of the PCR networks are compared in the error bar chart in Fig. 1.
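The accuracy criterion above can be sketched as follows. This is a minimal illustration, not the authors' evaluation code: it assumes 4x4 homogeneous transformation matrices with translations in metres, and it uses the common geodesic-angle definition of RRE and the Euclidean definition of RTE, which the text does not spell out. All function names are hypothetical.

```python
import numpy as np

def relative_rotation_error_deg(R_est, R_gt):
    """Geodesic angle in degrees between two 3x3 rotation matrices (assumed RRE definition)."""
    cos_angle = (np.trace(R_est.T @ R_gt) - 1.0) / 2.0
    # Clip to guard against values slightly outside [-1, 1] due to rounding.
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))

def relative_translation_error_m(t_est, t_gt):
    """Euclidean distance in metres between two translation vectors (assumed RTE definition)."""
    return float(np.linalg.norm(np.asarray(t_est) - np.asarray(t_gt)))

def registration_accuracy(estimates, ground_truths, rre_max_deg=0.5, rte_max_m=0.20):
    """Fraction of pairs with RRE <= 0.5 degrees and RTE <= 20 cm (thresholds from the text)."""
    hits = 0
    for T_est, T_gt in zip(estimates, ground_truths):
        rre = relative_rotation_error_deg(T_est[:3, :3], T_gt[:3, :3])
        rte = relative_translation_error_m(T_est[:3, 3], T_gt[:3, 3])
        if rre <= rre_max_deg and rte <= rte_max_m:
            hits += 1
    return hits / len(estimates)
```

The gap between this accuracy on the training domain and on the test domain corresponds to the length of an error bar in Fig. 1.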

Fig. 1 (a,b) show the network transferability by testing the networks on unseen object categories after training on the ModelNet40, WHU-TLS, KITTI, and nuScenes datasets. (c,d) demonstrate the network generalization when tested on unseen datasets, after being trained on the ModelNet40, WHU-TLS, KITTI, and nuScenes datasets.

To compare the impact of different datasets on network transferability, we trained on the ModelNet40 (Fig. 1(a)) and WHU-TLS (Fig. 1(b)) datasets and tested on the unseen categories in the nuScenes and KITTI datasets, obtaining the difference between the training accuracy (blue bars) and the testing accuracy (orange bars) for each network. The error bars show that the accuracy of the three networks trained on the ModelNet40 dataset changed only slightly and was generally higher than that of the networks trained on the WHU-TLS dataset. To compare the impact of different datasets on network generalization, we trained on the nuScenes (Fig. 1(c)) and KITTI (Fig. 1(d)) datasets and tested on data of the same categories in the ModelNet40 and WHU-TLS datasets. For all three networks, training on the KITTI dataset yields higher accuracy and a smaller accuracy decline than training on the nuScenes dataset, and therefore better generalization. However, compared with the same-domain test results on KITTI, the accuracy of the networks still dropped significantly, indicating that their generalization is still insufficient. The accuracy changes of the networks trained on the four standard public datasets show clearly that the dataset has a significant impact on network generalization. In addition, when a network is trained on common standard datasets and tested on different domains, the accuracy decreases to varying degrees, indicating that existing datasets limit the generalization ability of the network to a certain extent. Therefore, a point cloud registration dataset that supports better generalization is urgently needed.

Dataset construction with domain discrepancy point cloud

In existing public datasets, domain discrepancy variables are often ignored during preprocessing, which makes it difficult to control the inherent domain discrepancy variables in the data. Therefore, we chose to build a custom dataset for this study. Through the generated point cloud data samples, we introduced a variety of domain discrepancy variables, including changes in data acquisition scenes, differences in sensor resolution, differences in spatial sampling rates, and changes in time frame sequences. In this way, we can systematically introduce and manipulate these domain discrepancy variables and comprehensively analyze their impact on the generalization performance.

Data collection

The parameters of the three LiDAR models used in this article are compared in Table 1. The data collection considers variations in the data acquisition scenes, sensor resolutions, spatial sampling rates, and temporal frame sequences. As shown in Fig. 2(a), we used a mobile robot equipped with LiDAR and IMU sensors as the point cloud data collection platform. First, for the data acquisition scenes, we selected multiple environments: a suburban traffic road as Scene 1, an urban traffic road as Scene 2, and a campus road as Scene 3. The point cloud from Scene 1 is primarily composed of a traffic road environment, including vehicles, pedestrians, and traffic infrastructure such as guardrails. Scene 2 encompasses not only the traffic road but also the surrounding buildings. The Scene 3 data mainly captures a campus environment, featuring both roads and buildings. All data were collected during the day and under the same weather conditions to ensure data consistency. Second, to introduce sensor resolution variations, we employed three different LiDAR sensors: the Hesai PandarXT with 32 beams, the Velodyne Ruby Plus with 128 beams, and the DJI MID360 with 32 beams. We set the scanning frequency to 10 Hz for all sensors to capture multi-resolution point cloud data. Finally, temporal and spatial variables are incorporated during the data post-processing.

In total, 16276 sets of point cloud data were captured in Scene 1, 45095 sets in Scene 2, and 2090 sets in Scene 3. The collected data is illustrated in Fig. 2. We use the Scene 1 and Scene 2 data for comparative training, while the Scene 3 data is primarily used for testing.

The beam count significantly affects the angular resolution in the vertical direction: the higher the number of beams, the higher the density of the point cloud and the better the ability to capture complex structures, which improves spatial diversity. Increasing the FOV spreads the fixed angular resolution of the LiDAR over a wider area and makes the point cloud of long-distance targets sparse, which weakens spatial diversity. Increasing the Maximum Detection Range decreases the frame rate and affects temporal diversity, while increasing the Effective Point Rate enhances the synergy between the frame rate and the density of the point cloud, optimizing the spatial and temporal properties of the point cloud.

Self-built dataset

We used the point cloud data and IMU data collected in our field experiments as input to the LiDAR Simultaneous Localization and Mapping algorithm Point-LIO to obtain the pose information and 3D point cloud map data. We then segmented the resulting integrated point cloud map into individual point cloud frames in the PCD format, following the temporal sequence. Using the estimated pose information, we computed the corresponding pose transformation matrices and paired them with the point cloud files to create the ground truth registration data for the training dataset. This yields one dataset per scene; we use the first 3/4 of each dataset as the training set, the next 1/8 as the testing set, and the rest as the validation set.
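The pairing and splitting steps can be illustrated with a short sketch. It assumes that each estimated pose is a 4x4 sensor-to-world matrix, which is a common convention but is not stated in the text, and both function names are hypothetical rather than taken from the released code.

```python
import numpy as np

def relative_transform(T_world_src, T_world_ref):
    """4x4 transform that maps points from the source frame into the reference frame.

    Assumes both inputs are sensor-to-world poses (an assumption, not confirmed by the text).
    """
    return np.linalg.inv(T_world_ref) @ T_world_src

def sequential_split(n_frames):
    """Index lists for the first 3/4 (train), the next 1/8 (test), and the rest (validation)."""
    n_train = (3 * n_frames) // 4
    n_test = n_frames // 8
    indices = list(range(n_frames))
    train = indices[:n_train]
    test = indices[n_train:n_train + n_test]
    val = indices[n_train + n_test:]
    return train, test, val
```

Because the split follows the temporal order, the three subsets cover different stretches of the trajectory rather than interleaved frames.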

Data post-processing

-

1.

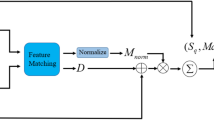

Spatial consistency key point cloud extraction

We divided the point clouds into a three-dimensional voxel grid, where each voxel represents a discrete volume element. For each voxel, we retained only a representative point, typically the centroid of the points within that voxel. The result of this extraction process is a new point cloud that contains only the selected voxel key points.

To ensure scale consistency across the datasets, we first cropped all the point cloud data to a maximum bounding cube with a side length of 70 m. We define the sampling rate as the ratio of the number of selected points to the number of original points, and applied voxel sampling rates of 0.4, 0.5, and 0.6 to the point clouds from Scene 1 and Scene 2.

Fig. 3 compares the original point cloud with the results at different sampling rates after spatial consistency processing. The processed data has consistent spatial attributes, and extracting the key points yields a sparse point cloud that retains the critical structure.
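The cropping and voxel key point extraction can be sketched with NumPy as below. This is an illustrative approximation only: it assumes the 70 m cube is centred on the sensor origin and that the representative point is always the voxel centroid, and the function names are hypothetical. How the authors choose the voxel size that yields a given sampling rate is not described, so the sketch only measures the rate that results from a chosen voxel size.

```python
import numpy as np

def crop_to_cube(points, side=70.0):
    """Keep points inside an axis-aligned cube of the given side length centred at the origin."""
    half = side / 2.0
    mask = np.all(np.abs(points[:, :3]) <= half, axis=1)
    return points[mask]

def voxel_centroids(points, voxel_size):
    """Replace all points that fall in the same voxel by their centroid."""
    keys = np.floor(points[:, :3] / voxel_size).astype(np.int64)
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)  # flatten for compatibility across NumPy versions
    sums = np.zeros((counts.size, 3))
    np.add.at(sums, inverse, points[:, :3])  # accumulate coordinates per voxel
    return sums / counts[:, None]

def sampling_rate(original, sampled):
    """Ratio of retained points to original points, as defined in the text."""
    return len(sampled) / len(original)
```

In practice the voxel size would be adjusted until `sampling_rate` reaches the target value (0.4, 0.5, or 0.6), for example by a simple search over candidate sizes.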

2. Random sparse interframe registration

Random frame selection is performed on the continuous time series data of Scene 1 and Scene 2. Specifically, we sparsely sample these consecutive frames, either at a fixed frame interval (e.g., every 4 frames) or by selecting any frame within every 10-frame interval, and use the resulting frames as ground truth pairs for PCR. In this way, a sparse frame dataset with random time sequences is obtained.

Fig. 4 compares the pose transformations for different frame intervals. As can be seen from the figure, different time intervals lead to pose transformations of different amplitudes. The smaller the amplitude, the higher the data overlap rate; the larger the amplitude, the lower the data overlap rate.

Fig. 4 (a) Data pose transformation at one frame interval, the four pictures from left to right are frame 1, frame 2, frame 3, frame 4. (b) Data pose transformation at four frame intervals, the four pictures from left to right are the pose diagrams of the 1st frame, the 5th frame, the 9th frame, and the 13th frame. (c) Data pose transformation at any frame interval, the four pictures from left to right are the pose diagrams of the 1st frame, the 7th frame, the 17th frame, and the 27th frame.
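The two selection schemes can be sketched as follows. This is one possible reading of the description, in which successive frames are chained into pairs and the random scheme draws each gap uniformly from 1 to 10 frames. Frame indices are zero-based here, and the function names and the `seed` parameter are hypothetical.

```python
import random

def fixed_interval_pairs(n_frames, interval=4):
    """Pairs (i, i + interval) stepping through the sequence, e.g. (0, 4), (4, 8), ..."""
    return [(i, i + interval) for i in range(0, n_frames - interval, interval)]

def random_interval_pairs(n_frames, max_interval=10, seed=None):
    """Chained pairs whose gap is drawn uniformly from 1..max_interval frames."""
    rng = random.Random(seed)
    pairs = []
    i = 0
    while True:
        j = i + rng.randint(1, max_interval)
        if j >= n_frames:
            break
        pairs.append((i, j))
        i = j
    return pairs
```

With `interval=4` and 13 frames, the fixed scheme reproduces the frame sequence of Fig. 4(b) (frames 1, 5, 9, and 13 in one-based numbering).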

3. Augmentation of LiDAR resolution diversity

To augment the resolution diversity of the dataset, we selected 16276 sets of 32-line LiDAR data (Scene 1) and 16276 sets of 128-line LiDAR data (Scene 2) and directly mixed them to introduce LiDAR resolution variables.

Data Records

The dataset, named cross-domain-dataset26, is available in the Science Data Bank repository and can be downloaded for free. The dataset has a clear organizational structure and contains folders covering both raw data and processed data, which makes it convenient for users with different needs.

Dataset directory

The dataset is compressed into a zip file. After decompression, it contains three folders, each corresponding to a scene. The data structure in each scene is as follows. Each scene has a raw data folder named keyframes, which contains frame data arranged in the order of data acquisition time. Each point cloud file records the point cloud information at one acquisition time and is the basis for subsequent analysis. The loop folder contains the transformation matrix files corresponding to the point cloud files in keyframes. These transformation matrices realize the coordinate transformation between point cloud data in different frames. The downsampled folder collects the results obtained after spatial consistency sampling of the original point cloud data. It contains three subfolders: 01, 02, and 03. The data stored in the 01 subfolder accounts for 3/4 of the original data and is used as the training set. The 02 and 03 subfolders store the test set and validation set data, respectively, and each accounts for 1/8 of the original data. Users can adjust the sampling rate according to their specific needs. The transform folder contains three subfolders: training set, test set, and validation set. The files in each subfolder contain the data sequence, the frame IDs of the two matching frames, the transformation matrix between the two frames, and the point clouds of the two frames. The metadata folder contains the metadata of the generated training set, test set, and validation set. The dataset folder directory is shown in Fig. 5.

Interpretations about data format

The point cloud files are stored in pcd format. Scenes 1, 2, and 3 contain data with resolutions of 32, 32, and 128 beams, respectively. The transformation matrices are stored in txt format. The data obtained after point cloud spatial consistency sampling is converted into npy format for storage. The transform ground truth pair files are in npy format; their content includes the data sequence, the frame IDs of the two matching frames, the transformation matrix between the corresponding frames, and the point clouds of the two frames. Metadata is in pkl format. The .pkl file storing motion information is generated by the pickle library in Python.
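A minimal loading sketch for the txt, npy, and pkl formats described above is given below. It relies only on standard NumPy and pickle calls; the exact layout inside each file (for example, whether a matrix is stored as 4x4 or flattened) is not specified in the text, so the returned objects should be inspected before use. The function names are hypothetical, and reading the pcd files would need a separate point cloud library that is not shown here.

```python
import pickle

import numpy as np

def load_transform_txt(path):
    """Read a whitespace-separated numeric matrix from a text file."""
    return np.loadtxt(path)

def load_points_npy(path):
    """Read a NumPy array saved in npy format."""
    return np.load(path)

def load_metadata_pkl(path):
    """Read a Python object written by the pickle library.

    Only unpickle files from a trusted source, since pickle can execute code on load.
    """
    with open(path, "rb") as fh:
        return pickle.load(fh)
```

If a transform npy file stores a Python object rather than a plain numeric array, `np.load` would additionally need `allow_pickle=True`; whether this applies to the released files is an assumption to verify.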

Technical Validation

To study the impact of each data processing method on generalization, the GeoTransformer network was selected as the baseline network and trained on our datasets with a batch size of 1 for 40 epochs on an NVIDIA Tesla A100 GPU with CUDA acceleration.

Evaluation index

In machine learning, Shannon entropy27 is commonly used to measure the distribution of features in a dataset. It is defined as the expected information content of each piece of data and is expressed by the following formula:

\(H\left(X\right)=-\sum _{i}p\left({x}_{i}\right)\log p\left({x}_{i}\right)\)

where \(p\left({x}_{i}\right)\) represents the probability of a random feature \({x}_{i}\).

The Shannon entropy allows us to compare the information gain of each data post-processing method, thereby exploring the correlation between data information complexity and network generalization. Data post-processing methods increase the Shannon entropy of the dataset by introducing sample diversity, increasing the complexity and uncertainty of the data, thereby promoting the network to learn a wider range of features during training and reducing its dependence on specific samples. This increase in entropy can help the network better cope with unseen data and improve generalization performance. However, if the post-processing methods are overly complex and the entropy value is too high, it may introduce too much noise, which may lead to overfitting of the model and weaken the generalization ability. Therefore, we calculate Shannon entropy to maintain data rationality while improving generalization.
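As a small illustration of the entropy measure, the sketch below estimates Shannon entropy from the empirical frequencies of discrete feature values. How the features of a point cloud dataset are extracted and discretized, and which logarithm base is used, are not specified in the text, so the `features` input and the `base` parameter are assumptions.

```python
import math
from collections import Counter

def shannon_entropy(features, base=2.0):
    """Entropy of the empirical distribution of a sequence of hashable feature values."""
    counts = Counter(features)
    total = sum(counts.values())
    entropy = 0.0
    for count in counts.values():
        p = count / total
        entropy -= p * math.log(p, base)
    return entropy
```

A dataset whose feature values are spread evenly over many bins yields a higher value than one concentrated in a few bins, which is the sense in which entropy is used as a diversity measure here.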

The accuracy gap (training accuracy minus test accuracy) mainly reflects the overall prediction performance of the network and is easy to interpret. The error gap (training error minus test error) describes the network's fitting ability more precisely and can capture subtle differences in network performance. An increase in the error gap is usually accompanied by an increase in the accuracy gap. To quantify and compare the impact of different datasets on the cross-domain generalization performance of the network, we characterize the generalization ability as follows. A training set of n samples is drawn from the source domain, Ds = {(xs1, ys1), …, (xsn, ysn)}, and a test set of n samples is drawn from the target domain, Dt = {(xt1, yt1), …, (xtn, ytn)}. Assuming that the training and test sets contain the same number of samples, we represent the training error and test error as:

\(L\left[{Ds}\right]=\frac{1}{n}\sum _{i=1}^{n}\ell \left(f\left({x}_{si}\right),{y}_{si}\right),\quad L\left[{Dt}\right]=\frac{1}{n}\sum _{i=1}^{n}\ell \left(f\left({x}_{ti}\right),{y}_{ti}\right)\)

where \(f\) denotes the network and \(\ell \) the per-sample loss.

Therefore, \(L\left[{Dt}\right]-L[{Ds}]\) is regarded as generalization gap.

During gradient descent, the training and test losses gradually decrease. We use \(\triangle L\left[{Ds}\right]\) and \(\triangle L\left[{Dt}\right]\) to denote the reduction of the training loss and of the test loss after one training step, respectively, and we define the ratio between their expectations, \(R\left(D,n\right)\), as the one-step generalization ratio (OSGR)28:

\(R\left(D,n\right)=\frac{E\left(\triangle L\left[{Dt}\right]\right)}{E\left(\triangle L\left[{Ds}\right]\right)}\)

There are three possibilities for the OSGR value:

1. OSGR = 0: This indicates that the test loss does not decrease, implying the network has no generalization.

2. OSGR = 1: This suggests that the test loss and training loss decrease at the same rate, indicating excellent generalization.

3. 0 < OSGR < 1: In this range, a larger OSGR value, closer to 1, represents a better generalization.
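The ratio can be estimated empirically from logged losses, as in the sketch below. It replaces the expectations in the definition by averages over the recorded training steps, which is a simplifying assumption rather than the authors' procedure, and the function name is hypothetical.

```python
def one_step_generalization_ratio(train_losses, test_losses):
    """Mean one-step test-loss reduction divided by mean one-step training-loss reduction.

    Both inputs are loss values recorded before and after successive training steps,
    so a list of K + 1 values describes K steps.
    """
    if len(train_losses) != len(test_losses) or len(train_losses) < 2:
        raise ValueError("need two loss sequences of equal length >= 2")
    d_train = [a - b for a, b in zip(train_losses, train_losses[1:])]
    d_test = [a - b for a, b in zip(test_losses, test_losses[1:])]
    mean_train = sum(d_train) / len(d_train)
    mean_test = sum(d_test) / len(d_test)
    if mean_train == 0:
        raise ValueError("training loss did not change; ratio is undefined")
    return mean_test / mean_train
```

A flat test-loss curve gives a ratio of 0, and a test loss that falls as fast as the training loss gives a ratio of 1, matching the first two cases in the list above.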

Effect of spatially consistent keypoints extraction on generalization

To assess the effect of spatially consistent keypoint extraction on generalization, different spatial sampling rates (0.4, 0.5, 0.6) were applied to the point cloud samples from Scene 1. The GeoTransformer network was trained on each of these spatially sampled datasets and evaluated on the 2090 samples from Scene 329. The training results are shown in Fig. 6:

It is evident that the point cloud sampling rate is a crucial parameter affecting generalization. The OSGR values in Fig. 6(a) show that an excessively high or low sampling rate can reduce generalization30. A small sampling rate is beneficial for preserving detailed information, which is important for point cloud registration. However, a sampling rate that is too small also causes problems. For example, as shown in Fig. 6(b), the Shannon entropy increases significantly as the sampling rate decreases, indicating an increase in data diversity. Although higher data diversity enriches the feature representation, it also increases network complexity and introduces more noise31.

On the other hand, a larger sampling rate can effectively reduce network complexity and improve generalization, but it may also lose some key detailed features, which hinders capturing fine-grained structural information. Therefore, the selection of the voxel size requires a trade-off between preserving detailed information and reducing model complexity. The experimental results show that when the sampling rate is below or above 0.5, the OSGR value decreases significantly, resulting in a decrease in generalization, as shown in Fig. 6(a). In contrast, the OSGR value peaks at 0.7299 when the sampling rate is 0.5, which indicates that the test loss decreases faster and that the network has the best generalization. Therefore, a sampling rate of 0.5 was used for our dataset.

Impact of random sparse interframe registration on generalization

We further investigated the impact of random sparse interframe registration on generalization performance. We used the 32-line LiDAR data of Scene 1 after the spatial consistency key point cloud extraction described above, with the optimal sampling rate of 0.5. On this basis, we created three training dataset variants:

1. Fixed frame sequence: The point cloud frames were sampled at a fixed interval of 1 frame.

2. Sparse interframe sequence: The original sequence was sparsified so that frames are spaced at a 4-frame interval.

3. Random frame sequence: The point cloud frames were sampled at random intervals (within a maximum of 10 frames).

We then trained the GeoTransformer network on the processed dataset from Scene 1 and evaluated it on the 2090 sets of Scene 3 data. The results of the network training with the three different frame intervals are shown in Fig. 7:

The OSGR values in Fig. 7(a) show that the network trained on the dataset with a 4-frame interval generalizes significantly better than the one trained on consecutive frames, whereas generalization drops again when frames are sampled at random intervals. This suggests that temporal continuity is important for generalization. Consecutive-frame data lacks diversity, so the network may overfit to a specific scene; the Shannon entropy values in Fig. 7(b) show that interval frame data increases the diversity of the data, allowing the network to learn more general features. It is therefore necessary to choose an appropriate frame interval while maintaining a certain degree of continuity. The experimental results show that the lowest error is obtained when the frame interval is 4. The test accuracy is 1 and the OSGR value reaches 0.9832, which is significantly better than for the other intervals. Therefore, an interval of 4 frames was adopted for our dataset.

Impact of LiDAR resolution diversity on generalization

To investigate the impact of resolution diversity on generalization, we conducted an experiment in which the training data from Scene 1 and Scene 2 were mixed and the network was tested on the 2,090 samples from Scene 3 (as shown in Fig. 8).

Figure 8(a) shows 16276 sets of data obtained by combining point cloud data of different resolutions from two different sensors, and compares them with 128-line point cloud data of a single resolution (also 16276 sets). Both datasets are processed by spatially consistent key point sampling and sparse frame processing with the optimal parameters (sampling rate 0.5 and frame interval 4 frames), and the two resulting datasets are input into the GeoTransformer network for training.

As can be seen from Fig. 8(b), the multi-resolution combined dataset expands the coverage of the data, but the comparison of Shannon entropy shows that although the mixed data increases data diversity, it also loses many key features, resulting in a decrease in generalization. In addition, the OSGR obtained by training on the 128-line dataset is 0.2371, which is significantly lower than the OSGR (0.9832) of the 32-line dataset obtained in the random sparse interframe registration experiment above. The Shannon entropy of the 128-line data is 75.613, which is much higher than the 38.646 of the 32-line data. This indicates that too many features may cause the network to overfit to specific features, which in turn limits the improvement of generalization ability. Therefore, excessive feature richness is not necessarily conducive to improving generalization.

Analysis of validation results

Our dataset contains 63,461 sets of samples covering 3 different scenarios. The dataset is spatially consistent while exhibiting diversity in the temporal dimension; it therefore supports strong generalization and can be effectively migrated to other related domains. However, its performance in fast-moving scenarios is limited because the dataset is processed using sparse frame intervals.

Comparative study

First, the GeoTransformer network was trained on the KITTI dataset and tested on the Scene 3 data collected in this paper. Then, the network was trained on the optimal dataset, which uses a spatial sampling rate of 0.5, a frame interval of 4 frames, and single-resolution 32-line scene data. Finally, a portion of the KITTI dataset was used for network performance testing. The difference between the test accuracy on data from the same domain as the training set and the test accuracy on cross-domain data is called the accuracy difference. The results in Table 2 show that the GeoTransformer network trained on the optimal dataset improved the accuracy gap by 0.318 in the cross domain generalization test, compared with the model trained on the KITTI dataset alone. The accuracy differences of the Waymo32 dataset and the ModelNet40 dataset are relatively small; however, their original training accuracy is lower than that of our dataset.

Fig. 9 shows the PCR results tested in cross domain scenes, using the GeoTransformer network trained on different datasets.

To visualize the PCR network, we selected key points within the point clouds and calculated the Euclidean distances between the transformed points and their ground-truth correspondences33. A distance threshold of 0.5 was set to generate a boolean mask array. If the Euclidean distance between a pair of points is less than the threshold, the corresponding mask value is set to “true”, indicating a successful registration; otherwise, it is set to “false”. The initial point clouds and the transformed point clouds were then connected according to their respective mask values for visualization. The green lines represent the point pairs that were successfully registered, while the red lines indicate the point pairs that failed to register.

Figure 9(a,b) show the test results of the GeoTransformer network after training on the self-built dataset and the KITTI dataset, respectively. In the figure, the red point cloud represents the initial state of the point cloud, and the blue point cloud represents the result after applying the transformation matrix predicted by the network. Comparing Fig. 9(a) and Fig. 9(b) shows that the network trained on the self-built optimized dataset achieves a significantly higher proportion of successful registrations and better overlap on the same test data. The number of correctly matched keypoints divided by the total number of matches is called the inlier ratio. In four different scenes, the inlier ratios of the network trained on the KITTI dataset were 19.14%, 28.91%, 32.81%, and 26.69%, respectively. In contrast, the inlier ratios of the network trained on our self-built dataset were 59.77%, 66.41%, 67.19%, and 59.77%, indicating that the dataset proposed in this article can significantly improve the PCR performance of the GeoTransformer network in cross domain scenes.
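The boolean mask and the inlier ratio used for this visualization can be sketched as below. It assumes the key points are given as N x 3 arrays, that the predicted transformation is a 4x4 homogeneous matrix, and that the threshold of 0.5 is in the same length unit as the point coordinates; the unit is not stated in the text. The function names are hypothetical.

```python
import numpy as np

def inlier_mask(src_keypoints, gt_correspondences, T_pred, threshold=0.5):
    """True where a transformed key point lies within the threshold of its ground-truth match."""
    ones = np.ones((len(src_keypoints), 1))
    src_h = np.hstack([src_keypoints, ones])       # homogeneous coordinates, N x 4
    transformed = (T_pred @ src_h.T).T[:, :3]      # apply the predicted transform
    distances = np.linalg.norm(transformed - gt_correspondences, axis=1)
    return distances < threshold

def inlier_ratio(mask):
    """Fraction of correspondences marked as successfully registered."""
    return float(np.mean(mask))
```

Pairs with a `True` mask value correspond to the green lines in Fig. 9, and pairs with `False` to the red lines.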

In addition, when the ModelNet40 dataset is used for network training, testing on same-domain data gives an RRE of 4.031° and an RTE of 6.035 cm. However, when testing on the WHU-TLS dataset, the experimental results were invalidated by excessive testing errors. This is because ModelNet40 is an indoor 3D modeling dataset, while WHU-TLS is an outdoor natural scene dataset; the domain discrepancy between the two is too large, so the trained network fails in the cross-domain scenario. This suggests that a dataset improves domain generalization only within a certain range of domain discrepancy, beyond which performance drops significantly.
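For readers who want to compute comparable error figures, the sketch below uses the definitions of relative rotation error (RRE) and relative translation error (RTE) that are common in the registration literature: the geodesic angle between the estimated and ground-truth rotations, and the Euclidean distance between the two translations. These definitions are an assumption here, since this section does not restate the paper's formulas, and the function names are hypothetical.

```python
import math


def relative_rotation_error_deg(r_est, r_gt):
    """Angle in degrees of the rotation taking r_gt to r_est (3x3 nested lists)."""
    # trace(r_gt^T @ r_est) equals the element-wise product summed over all entries.
    trace = sum(r_gt[i][j] * r_est[i][j] for i in range(3) for j in range(3))
    # Clamp to [-1, 1] to guard against rounding before acos.
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))


def relative_translation_error(t_est, t_gt):
    """Euclidean distance between translations, in the unit of the inputs."""
    return math.dist(t_est, t_gt)


def rotation_z(degrees):
    """Rotation about the z axis, used only to build a toy example."""
    c, s = math.cos(math.radians(degrees)), math.sin(math.radians(degrees))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# Toy example (hypothetical data): the estimate is off by 4 degrees and 0.06 units.
rre = relative_rotation_error_deg(rotation_z(4.0), identity)
rte = relative_translation_error((0.06, 0.0, 0.0), (0.0, 0.0, 0.0))
```

The RTE is returned in whatever unit the translation vectors use, so translations expressed in metres must be scaled by 100 to compare with values reported in centimetres.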

Usage Notes

In the field of autonomous driving, our dataset can help models adapt better to new driving environments under multiple weather and road conditions. In the field of medicine, models trained on datasets generated with our data generalization method can be deployed directly to new hospitals without retraining. The dataset provides raw NumPy files and PCD point cloud files, which researchers can convert to the desired format as needed and further adapt to the requirements of different domains by adjusting the spatial consistency parameter and the time frame interval parameter.
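As a rough illustration of how a sampling rate and a frame interval might be applied when regenerating registration pairs from the raw files, the sketch below pairs frames separated by a fixed interval and keeps a fraction of the points in one frame. It is a sketch under stated assumptions: `make_frame_pairs` and `random_subsample` are hypothetical helper names, the pairing rule is assumed, and uniform random subsampling is a stand-in for the spatial sampling procedure in the released code, which may differ.

```python
import random


def make_frame_pairs(num_frames, interval):
    """Pair frame i with frame i + interval (assumed pairing rule)."""
    if interval < 1:
        raise ValueError("interval must be a positive integer")
    return [(i, i + interval) for i in range(num_frames - interval)]


def random_subsample(points, rate, seed=0):
    """Keep roughly `rate` of the points, chosen uniformly at random.

    Stand-in for the dataset's spatial sampling step, which may differ.
    """
    if not 0.0 < rate <= 1.0:
        raise ValueError("rate must be in (0, 1]")
    if not points:
        return []
    keep = max(1, int(len(points) * rate))
    rng = random.Random(seed)
    indices = sorted(rng.sample(range(len(points)), keep))
    return [points[i] for i in indices]


# Toy frame of ten 3D points (hypothetical data).
frame = [(float(i), 0.0, 0.0) for i in range(10)]
pairs = make_frame_pairs(num_frames=10, interval=4)
half = random_subsample(frame, rate=0.5)
```

A larger interval generally means less overlap between the two frames of a pair, and a lower sampling rate gives sparser clouds, so both parameters change how hard the resulting registration problem is.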

Code availability

The Python code for the processing method used to build this dataset is available on GitHub (https://github.com/Vickywq123/cross-domain-dataset.git). Users can use the prepared dataset directly or change the domain discrepancy variables to create a dataset tailored to their own needs.

References

El Banani, M., Gao, L. & Johnson, J. UnsupervisedR&R: Unsupervised Point Cloud Registration via Differentiable Rendering, 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 7125–7135, https://doi.org/10.1109/CVPR46437.2021.00705 (2021).

Chatterjee, S. & Zielinski, P. On the generalization mystery in deep learning. Preprint at https://doi.org/10.48550/arXiv.2203.10036 (2022).

Tobin, J., Fong, R., Ray, A. et al. Domain randomization for transferring deep neural networks from simulation to the real world, 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 23–30, https://doi.org/10.1109/IROS.2017.8202133 (2017).

Qin, X., Wang, J., Chen, Y. et al. Domain Generalization for Activity Recognition via Adaptive Feature Fusion. ACM Transactions on Intelligent Systems and Technology 14(1), 1–21, https://doi.org/10.1145/3552434 (2022).

Xu, N., Qin, R. & Song, S. Point cloud registration for LiDAR and photogrammetric data: A critical synthesis and performance analysis on classic and deep learning algorithms. ISPRS Open Journal of Photogrammetry and Remote Sensing 8, 100032, https://doi.org/10.1016/j.ophoto.2023.100032 (2023).

Sharma, A., Horaud, R. & Mateus, D. 3D Shape Registration Using Spectral Graph Embedding and Probabilistic Matching, Image processing and analysing with graphs: theory and practice, 441–474, https://doi.org/10.48550/arXiv.2106.11166 (2012).

Xu, Z., Zhang, Y., Xie, E. et al. DriveGPT4: Interpretable End-to-End Autonomous Driving Via Large Language Model. IEEE Robotics and Automation Letters 9(10), 8186–8193, https://doi.org/10.1109/LRA.2024.3440097 (2024).

Zhang, H., Cissé, M., Dauphin, Y. & Lopez-Paz, D. mixup: Beyond Empirical Risk Minimization. Preprint at https://doi.org/10.48550/arXiv.1710.09412 (2017).

Chen, B., Yan, Z., Li, K. et al. Variational Attention: Propagating Domain-Specific Knowledge for Multi-Domain Learning in Crowd Counting, 2021 IEEE/CVF International Conference on Computer Vision (ICCV), 16045–16055, https://doi.org/10.1109/ICCV48922.2021.01576 (2021).

Peng, F., Wu, Q., Fan, L. et al. Street view cross-sourced point cloud matching and registration, 2014 IEEE International Conference on Image Processing (ICIP), 2026–2030, https://doi.org/10.1109/ICIP.2014.7025406 (2014).

Huang, X., Zhang, J., Fan, L. et al. A Systematic Approach for Cross-Source Point Cloud Registration by Preserving Macro and Micro Structures. IEEE Transactions on Image Processing 26(7), 3261–3276, https://doi.org/10.1109/TIP.2017.2695888 (2017).

Huang, X., Zhang, J., Fan, L. et al. A Coarse-to-Fine Algorithm for Registration in 3D Street-View Cross-Source Point Clouds, 2016 International Conference on Digital Image Computing: Techniques and Applications (DICTA), 1–6, https://doi.org/10.1109/DICTA.2016.7796986 (2016).

Huang, X., Mei, G. & Zhang, J. Feature-Metric Registration: A Fast Semi-Supervised Approach for Robust Point Cloud Registration Without Correspondences, 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 11363–11371, https://doi.org/10.1109/CVPR42600.2020.01138 (2020).

Chen, R., Peng, Y., Li, Z. et al. Floating object detection using double-labelled domain generalization. Engineering Applications of Artificial Intelligence 133, 108500, https://doi.org/10.1016/j.engappai.2024.108500 (2024).

Yue, X. et al. Domain Randomization and Pyramid Consistency: Simulation-to-Real Generalization Without Accessing Target Domain Data, 2019 IEEE/CVF International Conference on Computer Vision (ICCV), 2100–2110, https://doi.org/10.1109/ICCV.2019.00219 (2019).

Huang, W., Yi, M., Zhao, X. & Jiang, Z. Towards the Generalization of Contrastive Self-Supervised Learning. Preprint at https://doi.org/10.48550/arXiv.2111.00743 (2021).

Wang, Z., Li, W. & Xu, D. Domain Adaptive Sampling for Cross-Domain Point Cloud Recognition. IEEE Transactions on Circuits and Systems for Video Technology 33(12), 7604–7615, https://doi.org/10.1109/TCSVT.2023.3275950 (2023).

Huang, X., Mei, G., Zhang, J. & Abbas, R. A comprehensive survey on point cloud registration. Preprint at https://doi.org/10.48550/arXiv.2103.02690 (2021).

Geiger, A., Lenz, P. & Urtasun, R. KITTI Vision Benchmark Suite. http://www.cvlibs.net/datasets/kitti/ (2012).

Sarode, V. et al. PCRNet: Point Cloud Registration Network using PointNet Encoding. Preprint at https://doi.org/10.48550/arXiv.1908.07906 (2019).

Lu, F., Chen, G., Liu, Y. et al. HRegNet: A Hierarchical Network for Large-scale Outdoor LiDAR Point Cloud Registration, 2021 IEEE/CVF International Conference on Computer Vision (ICCV), 15994–16003, https://doi.org/10.1109/ICCV48922.2021.01571 (2021).

Qin, Z., Yu, H., Wang, C. et al. GeoTransformer: Fast and Robust Point Cloud Registration With Geometric Transformer. IEEE Transactions on Pattern Analysis and Machine Intelligence 45(8), 9806–9821, https://doi.org/10.1109/TPAMI.2023.3259038 (2023).

Wu, Z., Song, S., Khosla, A. et al. ModelNet40. https://modelnet.cs.princeton.edu/ (2015).

Yang, B., Han, X. & Dong, Z. WHU-TLS: A large-scale benchmark dataset for TLS point cloud registration. GitHub https://github.com/WHU-USI3DV (2021).

Caesar, H. NUSCENES by motional. https://www.nuscenes.org/ (2020).

Qian, W. cross-domain-dataset, Science Data Bank, https://doi.org/10.57760/sciencedb.21204 (2025).

Krisyesika, K., Buliali, J. & Saikhu, A. Optimized Feature Selection Approach Based on Entropy for Multi-Class Data Classification, 2023 10th International Conference on Electrical Engineering, Computer Science and Informatics (EECSI), 349–354, https://doi.org/10.1109/EECSI59885.2023.10295582 (2023).

Liu, J., Jiang, G., Bai, Y. et al. Understanding Why Neural Networks Generalize Well Through GSNR of Parameters. International Conference on Learning Representations (ICLR) https://doi.org/10.48550/arXiv.2001.07384 (2020).

Zhang, X., Jie, G., Baosheng, Y. et al. A Comprehensive Survey and Taxonomy on Point Cloud Registration Based on Deep Learning. Preprint at https://doi.org/10.48550/arXiv.2404.13830 (2024).

Liang, A., Zhang, H., Hua, H. et al. SPSNet: Boosting 3D point-based object detectors with stable point sampling. Engineering Applications of Artificial Intelligence 126, 106807, https://doi.org/10.1016/j.engappai.2023.106807 (2023).

Sun, P., Kretzschmar, H., Dotiwalla, X. et al. Waymo Open Dataset. https://waymo.com/open (2020).

Havrilla, A., Dai, A., O'Mahony, L. et al. Surveying the Effects of Quality, Diversity, and Complexity in Synthetic Data From Large Language Models. Preprint at https://doi.org/10.48550/arXiv.2412.02980 (2024).

Li, J., Zhang, C., Xu, Z. et al. Iterative Distance-Aware Similarity Matrix Convolution with Mutual-Supervised Point Elimination for Efficient Point Cloud Registration, European Conference on Computer Vision (ECCV), 378–394, https://doi.org/10.1007/978-3-030-58586-0_23 (2020).

Acknowledgements

Q. Wang thanks Great Wall Motor Company Limited for their help in this work.

Author information

Contributions

Overall design and supervision: Q. Wang, F. Liang, J. Xu; The hardware platform built: Q. Wang, B. Tu, P. Chen; Data post-processing: Q. Wang, A. Xing; Model building and training: Q. Wang, A. Xing, P. Chen; Technical validation: Q. Wang, T. Huang; First draft writing: Q. Wang, T. Huang; Reviewing and revising the manuscript: all authors.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, Q., Liang, H., Xu, J. et al. Self-built dataset for better generalization in point cloud registration. Sci Data 12, 557 (2025). https://doi.org/10.1038/s41597-025-04897-x

DOI: https://doi.org/10.1038/s41597-025-04897-x