Abstract

Effective treatment of brain tumors depends on accurate detection, and medical imaging plays a pivotal role in early tumor detection and diagnosis. This study presents two approaches to the tumor detection problem in the healthcare domain. The first approach combines image processing, a vision transformer (ViT), and machine learning algorithms to analyze medical images. The second approach is a parallel model integration technique, in which we first integrate two pre-trained deep learning models, ResNet101 and Xception, and then apply local interpretable model-agnostic explanations (LIME) to explain the model. The combination of vision transformer, random forest, and contrast-limited adaptive histogram equalization achieved an accuracy of 98.17%, and the parallel model integration (ResNet101 and Xception) achieved 99.67%. Based on these results, this paper proposes the deep learning approach, the parallel model integration technique, as the most effective method. Future work aims to extend the model to multi-class classification for tumor type detection and to improve model generalization for broader applicability.

Introduction

The identification and categorization of brain tumors, which may be malignant neoplasms, are crucial but fairly complicated medical tasks. Patient outcome and survival depend heavily on successful detection. The brain is also a common site of structural lesions within the cranial cavity1. Accurate identification of brain tumors is therefore essential for treatment and prognosis2. Even with sophisticated imaging methods such as magnetic resonance imaging (MRI) and the skilled work of radiologists, conventional diagnosis remains slow, error-prone, and limited in its response to different tumor types and sizes3. In particular, because of the complex structure of the skull, the brain is especially vulnerable to the harmful effects of a tumor, which may cause profound neurological dysfunction and even death4. Moreover, brain tumors are diagnosed in over 700,000 individuals every year in the United States alone, and the number keeps increasing, signifying the need for faster and more accurate diagnostic methods5. Soldiers are also diagnosed with brain tumors, and thousands of them die as a result, which adds to the already pressing need for better diagnostic tools6.

Brain tumor diagnosis and follow-up depend heavily on MRI and other medical imaging methods. However, given the large volume of medical imagery and the reliance of manual interpretation on human capabilities, computer-assisted diagnosis systems are necessary7,8. Machine learning and deep learning have also demonstrated tangible gains in diagnostic accuracy. For instance, convolutional neural networks (CNNs) have worked well as image classifiers for tumor detection in medical images. These methods, however, require large amounts of manually annotated data and computational resources, which limits their broader applicability in clinical settings9.

Recent innovations based on deep learning have made systems for the automated diagnosis of diverse medical conditions more accurate and efficient. Many researchers have sought to improve techniques that suffer from high computational requirements, limited feature extraction, and low accuracy. One model proposed for brain tumor detection improves backbone feature integration with additional layers and improves computational efficiency with separable convolutions, striking a balance between efficiency and accuracy. SKINC-NET10, a lightweight CNN architecture, was created for skin cancer classification and proved more accurate than previous methods relying on resource-demanding transfer learning. In infectious disease diagnosis, DNLR-NET11 combined DenseNet201 feature extraction with a logistic regression model, which gave impressive accuracy for Mpox disease11,12 and demonstrated the effectiveness of transfer learning.

An ensemble model that combines DenseNet169, DenseNet201, and Xception architectures with attention mechanisms and metaheuristic optimization13 also achieved strong performance across multiple datasets for diagnosing cervical cancer. Another study on brain tumor classification applied a manta-ray foraging optimizer14 with DenseNet-169 and enhanced residual blocks, showing powerful hierarchical feature learning across several datasets. A lightweight, truncated model based on the MobileNet12 architecture for multi-modal medical image analysis15 allowed accurate diagnosis of multiple diseases while maintaining low computational cost. All this work underscores the value of custom and optimized deep learning algorithms in directed and undirected graphs for addressing the identified gaps in medical diagnosis frameworks.

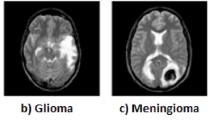

Primary brain tumors originate in the brain, whereas secondary tumors grow within the brain after spreading from other parts of the body; most secondary tumors are malignant. For noninvasive assessment, radiological imaging, particularly MRI, is used extensively because it avoids ionizing radiation. The complementary use of different imaging methods plays a significant role in the detection and description of brain tumors16.

In this paper, we present a new framework for classifying brain tumors that uses Parallel Model Integration of two deep learning models, ResNet101 and Xception. We extract both hierarchical and detailed features from MRI scans using both models and combine their feature representations for classification. This approach aims to increase accuracy, decrease the rate of false negatives, and improve consistency across different imaging conditions. To improve the trustworthiness of the model in clinical environments, explainable AI (XAI), particularly LIME, is used to generate visual rationales for the model’s predictions.

The main contribution of this research is a new strategy that applies deep learning through Parallel Model Integration of the ResNet101 and Xception models to improve feature representation and classification accuracy for brain tumor detection from MRI scans. To assess the method, we additionally applied two other deep learning integration approaches, self-attention and feature fusion, on the same backbone networks. We also applied a hybrid machine learning pipeline with the Vision Transformer (ViT)17 as a fixed feature extractor, whose features are then classified using SVM and Random Forest. Including these other approaches offers a strong comparative benchmark that highlights the strengths of the proposed parallel integration approach in accuracy, efficiency, and possible clinical use. It also illustrates different architectural strategies that can be used for real-world diagnostic applications. This paper employs local interpretable model-agnostic explanations (LIME)18 to enhance model transparency and explainability19, which supports the use of the technology in clinical practice.

Related work

Deep learning systems ease diagnostic classification by letting deep neural networks learn the features and patterns of the datasets on their own. The growing use of deep learning for classification problems is supported by powerful computing hardware, particularly GPUs and TPUs20. Open-source frameworks such as Keras with a TensorFlow backend and PyTorch also contribute to this process. In addition, a variety of deep neural network architectures, such as VGG, AlexNet, Inception, ResNet, and Xception, are available for use in computer vision applications21,22.

MRI images have supported the construction of advanced classifiers for recognizing brain tumors. Architectures such as VGG1623, ResNet50, DenseNet24, and VGG19 enabled the use of transfer learning to efficiently identify the most typical cases of brain tumors. The deep transfer learning models were trained and validated on 3,000 MRI scans available in the Figshare data repository. The approach achieved 99.02% accuracy, which compares favorably with the ResNet50 results25.

The transformer architecture described here consists of an encoder with multi-headed self-attention and MLP26 components. To meet the input requirements of transformers, images must first be tokenized. The input image of size \(1024\times 256\times 3\) is split into 256 patches of uniform size \(32\times 32\). These patches are transformed with a linear projection and then fed into the model as fixed-size 1-D embeddings. One-dimensional positional embeddings are added to the patch embeddings to maintain spatial context. The model also includes a class token that is prepended to the sequence of patch embeddings. Finally, the model output is taken from the MLP head and the dense layer, and an output layer whose weights correspond to the categories is included27,28.
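The tokenization step can be illustrated with a minimal NumPy sketch. It assumes non-overlapping patches; the embedding width of 64, the random projection, the zero class token, and the function names `patchify` and `embed` are hypothetical stand-ins for learned parameters and are not taken from the cited work.

```python
import numpy as np

def patchify(image, patch=32):
    """Split an H x W x C image into flattened, non-overlapping patches."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    rows, cols = h // patch, w // patch
    # (rows, patch, cols, patch, c) -> (rows, cols, patch, patch, c)
    tiles = image.reshape(rows, patch, cols, patch, c).transpose(0, 2, 1, 3, 4)
    return tiles.reshape(rows * cols, patch * patch * c)

def embed(image, dim=64, patch=32, seed=0):
    """Project patches to `dim`, prepend a class token, add positional embeddings."""
    rng = np.random.default_rng(seed)
    patches = patchify(image, patch)                        # (N, patch*patch*C)
    w_proj = rng.normal(0, 0.02, (patches.shape[1], dim))   # stand-in for learned projection
    tokens = patches @ w_proj                               # (N, dim)
    cls = np.zeros((1, dim))                                # stand-in for learned class token
    tokens = np.concatenate([cls, tokens], axis=0)          # (N + 1, dim)
    pos = rng.normal(0, 0.02, tokens.shape)                 # stand-in for learned 1-D positions
    return tokens + pos

image = np.zeros((1024, 256, 3), dtype=np.float32)
patches = patchify(image)
tokens = embed(image)
print(patches.shape, tokens.shape)  # (256, 3072) (257, 64)
```

A \(1024\times 256\) image tiles into \(32\times 8 = 256\) patches, and the class token brings the sequence length to 257.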

The growing application of deep learning algorithms has improved the accuracy and efficiency of MRI-based diagnosis of brain tumors and neurological disorders. Swin Transformer based models like Hybrid Shifted Window Multi-Head Self-Attention (HSW-MSA) and Residual MLP (ResMLP)29 have achieved very high accuracy and effectiveness in classifying brain tumors. EfficientNetv2 boosted with the Global Attention Mechanism (GAM)30 and Efficient Channel Attention (ECA)30 has also made great strides in interpreting tumor images, offering strong interpretability through Grad-CAM with considerable focus on the essential areas of the tumor images. In stroke segmentation tasks, Attention U-Net has surpassed the traditional U-Net and U-Net++ through better handling of lesion variability and input noise via attention-based focus31. A comprehensive review of 61 MRI-based studies underlines the prominence of both CNN and Vision Transformer models in medical diagnostics, with a sharp focus on the need for explainability, data variety, relevance, and ethics32. Collectively, these works make the case for the paradigm shift that deep learning introduces to neuroimaging and patient care.

The increasing adoption of deep learning (DL) approaches, most notably convolutional neural networks (CNNs), has contributed substantially to medical image interpretation. In contrast to traditional machine learning (ML) techniques, CNNs learn features directly from images in their raw form, with no manual feature design33. This ability has greatly enhanced the accuracy and efficiency of brain tumor detection systems. Most studies have concluded that deep learning algorithms outperform classical machine learning algorithms, particularly in image analysis involving complex spatial features and tumor shape deformation. Despite these achievements, the adoption of DL in real-world clinical applications is hampered by its requirements for large annotated datasets, high-performance computational resources, and model explainability, among others2.

Deep learning learns a hierarchy of features from a given input through a neural network with several layers. It is popular because, in contrast to traditional machine learning techniques where image features are hand-crafted, it learns the features on its own. A variety of deep learning algorithms have been used to meet different objectives34. Architectures such as ResNet101 and Xception are deep and good at capturing complex features, which makes them suitable for demanding work such as medical image processing. In one study, a deep wavelet autoencoder-based deep neural network (DNN)35 was proposed for extracting high-level features from brain tumor images. The images were segmented so that different regions could be processed through the deep wavelet autoencoder, and the processed images were further analyzed with a deep CNN. Several other classifiers were compared with the deep autoencoder classifier, which was shown to be the most reliable and efficient, with an accuracy of 96%36.

A DNN with an autoencoder module was used to classify brain tumors in a further study. The images were pre-segmented, and features were extracted as the images passed through the DNN layers37,38. Intensity-based features and textures were captured using the gray-level co-occurrence matrix (GLCM)39 and the discrete wavelet transform (DWT)40, respectively. Finally, two autoencoder modules were included in the DNN architecture, followed by a softmax layer for classification36. Recent advances in artificial intelligence and machine learning have led to breakthroughs in identifying medical emergencies, detecting disease, and treating patients41.

Studies that integrate deep learning feature extractors with classical machine learning classifiers have shown considerable promise across medical imaging domains, particularly in brain tumor diagnosis systems. For example, Maqsood et al.37 outperformed a standalone CNN with a brain tumor classification technique that integrated a multiclass SVM with CNN-based feature extraction. Gokulalakshmi and Karthik40 successfully detected brain tumors from MRI images using SVM classifiers with GLCM feature extraction. In another study, Saeedi et al.38 improved the interpretability and efficacy of their model by using deep convolutional layers for hierarchical feature extraction and traditional ML classifiers for the decisions. These studies support our approach of combining Vision Transformers as feature extractors with SVM and Random Forest classifiers, where the former contributes deep contextual information and the latter contribute robust decision boundaries.

In healthcare analytics, random forest classifiers and support vector machines (SVM)42 are important because they can analyze medical data to provide accurate predictions and valuable insights. Random Forest, a tree-based machine learning method, has performed well in modeling the relationships between patient safety outcomes and different elements of organizational safety culture43. A random forest model can use aggregate data from healthcare facilities to identify key factors that affect patient safety grades, including the quality of knowledge about healthcare services, organizational policies, and the objectives of top management. This gives physicians one way to understand safety culture in different healthcare systems and to improve patient safety measures. SVMs, in turn, have shown impressive performance in clinical prognosis evaluations, mainly for patients suffering from severe acute myocardial infarction. For example, SVM models applied to electronic medical records from large datasets such as the MIMIC III44 database perform automated risk prediction with high accuracy and good generalization. SVMs can also process high-dimensional medical data, and their model parameters are easily optimized45,46.

Deep learning has opened new dimensions not only in the classification of brain tumors but in other forms of cancer as well. For example, a recent skin cancer diagnosis study used a lightweight MetaFormer model with a focal self-attention mechanism and attained F1-scores of 0.8886 and 0.9334 on the ISIC 2019 and HAM10000 datasets, respectively, making it clinically deployable47. In urological cancers, a systematic review of 48 AI studies reported classification accuracy for prostate, bladder, and renal cancers in the range of 77% to 95%, with the highest accuracy from convolutional neural networks48. Moreover, in dental oncology, models based on ConvNeXt classified panoramic X-rays with an accuracy of 94.2% for different brands of dental implants, illustrating the power of deep architectures for clinical classification problems49. These results demonstrate the versatility of deep learning algorithms across medical disciplines, including the brain tumor problem addressed in this study.

Figure 1 illustrates the crucial steps of this research study for a better understanding of the methodology of the proposed technique.

Method

Deep learning and machine learning models are used to classify brain MRI images for tumor detection. This section describes the brain MRI image dataset, the deep learning and machine learning models, and the training and testing parameters.

Dataset description

The brain MRI image dataset is publicly available on Kaggle (https://www.kaggle.com/datasets/abhranta/brain-tumor-detection-mri).

The dataset used in this research contains MRI images arranged into two classes: ‘yes’ for tumor present and ‘no’ for tumor absent. Although this dataset is helpful for building and testing machine learning models for brain tumor detection, a few concerns stand out. First, the dataset is small, particularly for deep learning models such as Vision Transformers, which tend to perform best with large amounts of data. Second, the dataset lacks contextual information about the images, such as the hospitals where they were obtained, how they were imaged, and the procedures used to capture them. Without such context it is impossible to gauge the diversity and biases of the dataset, which is crucial for machine learning models intended for wide clinical use. Therefore, while the dataset provides a useful starting point for building models and verifying their performance, the outcomes should not be assumed to transfer to different clinical domains. Sample images from both classes are shown in Fig. 2. The dataset distribution is given in Table 1, which shows the split of the dataset into training and testing sets.

In addition to the dataset that was split into training and testing sets, a completely different dataset collected from a different source was used to evaluate the model’s generalizability. The model’s performance on this new dataset is discussed in the results section under the name testing on unseen data.

To address overfitting caused by the limited size of the brain tumor dataset, we applied real-time image augmentation using the ImageDataGenerator utility from Keras. The augmentations comprised horizontal flipping, zooming (up to 20%), width and height shifts (up to 10%), minor rotations of up to \(15^{\circ }\), and rescaling to the [0,1] interval. These transformations were limited to the training set; the validation and test sets were only rescaled, without augmentation, to avoid biasing the evaluation. In our experiments, models trained with augmentation showed a 2–3% improvement in validation accuracy over models trained without it, demonstrating the positive impact of augmentation on model robustness. These trends were consistent across the ResNet101+Xception hybrid, the Vision Transformer based experiments, and the augmentation-controlled experiments.
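As a rough illustration of the train-only augmentation policy, the NumPy sketch below applies a random horizontal flip and a width/height shift of up to 10%, then rescales to [0, 1]. It is a simplified stand-in and not the Keras ImageDataGenerator pipeline used in the study: rotation and zoom are omitted because they need interpolation, vacated pixels are filled with zeros by assumption, and the function names are hypothetical.

```python
import numpy as np

def rescale_only(image):
    """Validation/test path: rescale uint8 pixels to [0, 1], no augmentation."""
    return image.astype(np.float32) / 255.0

def augment(image, rng, max_shift=0.10, flip_prob=0.5):
    """Training path: random horizontal flip and random shift, then rescale."""
    out = rescale_only(image)
    if rng.random() < flip_prob:
        out = out[:, ::-1]                           # horizontal flip
    h, w = out.shape[:2]
    max_dy, max_dx = int(max_shift * h), int(max_shift * w)
    dy = int(rng.integers(-max_dy, max_dy + 1))      # vertical shift in pixels
    dx = int(rng.integers(-max_dx, max_dx + 1))      # horizontal shift in pixels
    shifted = np.zeros_like(out)                     # vacated pixels stay 0 (assumption)
    shifted[max(0, dy):min(h, h + dy), max(0, dx):min(w, w + dx)] = \
        out[max(0, -dy):min(h, h - dy), max(0, -dx):min(w, w - dx)]
    return shifted

rng = np.random.default_rng(0)
scan = rng.integers(0, 256, size=(100, 80), dtype=np.uint8)  # synthetic stand-in for an MRI slice
train_sample = augment(scan, rng)
eval_sample = rescale_only(scan)
```

Keeping the evaluation path free of random transforms means that reported validation and test scores reflect the unmodified images.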

Hybrid machine learning approach

Fig. 3 displays the workflow of the hybrid machine learning approach. The first step in classifying a brain tumor is gathering the medical images to be analyzed, such as CT or MRI scans, into one dataset. Preprocessing then improves the quality of these scans so that they are usable by the feature extraction algorithms. This ensures that the images are ready for analysis and model training, which is discussed further in the paper.

In the hybrid machine learning approach, ViT is used for feature extraction because its attention mechanism focuses on the important parts of the image. SVM or random forest classifiers are then used for final classification because of their strong predictive performance. The outcome of this stage is a trained model capable of distinguishing between tumor-positive and tumor-negative cases, which is further evaluated and refined in the subsequent phase.

The same preprocessing steps, namely contrast limited adaptive histogram equalization (CLAHE), histogram of oriented gradients (HOG), and contour mapping with region masking, are applied during the test phase, and the model is also tested and validated on a different dataset. After evaluation, the outcomes for the CLAHE, HOG, and masking variants are compared using accuracy, which indicates how well the model performs the task overall; recall, which reflects the model’s ability to detect tumors; and the F1 score, which combines precision and recall.
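For reference, the evaluation metrics named above can be computed from the confusion counts as in the following sketch. The labels are made-up illustrative values, not results from this study, and `binary_metrics` is a hypothetical helper name.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = tumor, 0 = no tumor)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0    # share of actual tumors that were detected
    f1 = (2 * precision * recall / (precision + recall)) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Illustrative labels only.
y_true = [1, 1, 1, 0, 0, 1, 0, 1]
y_pred = [1, 0, 1, 0, 1, 1, 0, 1]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

In tumor detection, recall deserves particular attention because a false negative corresponds to a missed tumor.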

Image pre-processing techniques

To study how feature-centric preprocessing affects input quality and classification accuracy, we implemented three image preprocessing techniques: CLAHE, HOG, and contour-region masking. CLAHE improves the local contrast of an image by redistributing intensities within small tiles, which helps the model distinguish tumor regions embedded in low-contrast areas. This method generalized well, as marked by high accuracy on the test data in Table 4, without distorting anatomical detail in the images. In addition, the tumor contours were masked while highly detailed anatomical structures were preserved, which supports generalization. HOG captures boundary and shape information and encodes it into structured feature maps in which contour directions are emphasized and gradient intensity is concentrated. Contour-based masking around tumor regions helps the model focus on the relevant areas and ignore unimportant regions, which also masks background information. Although this provides strong specialization, omitting the surrounding context leaves models vulnerable to overfitting. Each of these approaches alters how a model attends to and learns features, and each therefore performs differently on transfer learning tasks. This paper applies these image preprocessing techniques to brain MRI images before training the hybrid machine learning models; the results are displayed in Figs. 4, 5 and 6.

1. Contrast limited adaptive histogram equalization (CLAHE): CLAHE is an image preprocessing technique that improves contrast while limiting noise amplification. It is a variant of adaptive histogram equalization (AHE).

2. Contour and region masking: Contour masking is the process of identifying and isolating the boundaries (or contours) of objects within an image. Region masking involves selecting and highlighting specific areas in the image, often using thresholding or spatial selection methods.

3. Histogram of Oriented Gradients (HOG): HOG is a machine vision technique that describes the shape and appearance of an object in an image.
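The contrast-limiting idea behind CLAHE can be sketched for a single tile as follows. This is a simplified illustration, not the implementation used in the study: a full CLAHE also interpolates between the mappings of neighbouring tiles, and the clip-limit convention (a multiple of the mean bin height) and the name `clipped_equalize_tile` are assumptions.

```python
import numpy as np

def clipped_equalize_tile(tile, clip_limit=2.0, bins=256):
    """Equalize one uint8 tile using a clipped histogram (the core step of CLAHE)."""
    hist = np.bincount(tile.ravel(), minlength=bins).astype(np.float64)
    limit = clip_limit * tile.size / bins              # cap relative to the mean bin height
    excess = np.sum(np.maximum(hist - limit, 0.0))     # counts above the cap
    hist = np.minimum(hist, limit) + excess / bins     # redistribute the excess uniformly
    cdf = np.cumsum(hist) / tile.size                  # ends at 1.0 because counts are preserved
    lut = np.round(cdf * (bins - 1)).astype(np.uint8)  # intensity lookup table
    return lut[tile]

# Low-contrast synthetic tile with intensities confined to 100..120.
tile = (np.arange(64 * 64) % 21 + 100).reshape(64, 64).astype(np.uint8)
enhanced = clipped_equalize_tile(tile)
print(int(tile.max()) - int(tile.min()), int(enhanced.max()) - int(enhanced.min()))
```

Clipping bounds the slope of the mapping, so contrast in flat regions is stretched without amplifying noise as strongly as plain histogram equalization would.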

Integration of vision transformer and machine learning models

Recent advances in vision transformers (ViTs)50 have provided a new method for medical image processing. Unlike CNNs51, ViTs use self-attention, which captures global representations more efficiently and makes them well suited to sophisticated tasks such as detecting brain tumors. The architecture of ViT is shown in Fig. 7. Nevertheless, ViTs do not perform well on small or unbalanced datasets because of their high number of parameters. To address this, combining ViTs with classical machine learning models such as support vector machines (SVM) and random forests (RF) presents an interesting opportunity. In this combined approach, ViTs extract features from images, while SVMs and RFs classify well when data are scarce. The combination is expected to improve the performance of brain tumor diagnosis systems by integrating deep learning with traditional machine learning27.

In this work, we used the pre-trained ViT-B/16 model from torchvision, which was trained on the ImageNet dataset. It was converted into a fixed feature extractor by removing the classification layer and taking the hidden-state output of the transformer encoder as the feature vector. All input MRI images were resized to \(224\times 224\) pixels and normalized with the ImageNet statistics, mean = [0.485, 0.456, 0.406] and std = [0.229, 0.224, 0.225], to match the input expected by the ViT model. The ViT weights were not fine-tuned, in order to limit overfitting on the small dataset. The extracted features were then used to train standard SVM and Random Forest classifiers. This modular design limited overfitting while preserving the generalization ability of the pre-trained ViT, giving flexible and controllable classification. Using pre-trained models significantly boosted performance relative to methods using raw pixel data and also made accuracy more reliable across differently preprocessed inputs such as raw, HOG, and CLAHE images.
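The input normalization can be written out explicitly. The sketch below uses the mean and standard deviation quoted above and assumes the image has already been resized to \(224\times 224\) with three channels; the function name and the channel-first output layout are illustrative choices, and the ViT forward pass itself is not shown.

```python
import numpy as np

IMAGENET_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
IMAGENET_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)

def normalize_for_vit(image_uint8):
    """Scale a 224 x 224 x 3 uint8 image to [0, 1], normalize per channel, return C x H x W."""
    assert image_uint8.shape == (224, 224, 3), "resize to 224 x 224 x 3 first"
    x = image_uint8.astype(np.float32) / 255.0
    x = (x - IMAGENET_MEAN) / IMAGENET_STD      # broadcast over the channel axis
    return x.transpose(2, 0, 1)                 # channel-first layout, as PyTorch models expect

black = np.zeros((224, 224, 3), dtype=np.uint8)
normalized = normalize_for_vit(black)
print(normalized.shape)  # (3, 224, 224)
```

Grayscale MRI slices would need to be replicated across three channels before this step, which is an assumption about the pipeline rather than a detail stated in the text.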

1. Vision Transformer combined with Support Vector Machines: The combination of Vision Transformers (ViTs) and Support Vector Machines (SVMs) addresses some of the difficulties in brain tumor detection. As feature extractors, ViTs use self-attention to capture global context across medical images and thus represent complex patterns better than earlier convolutional designs. However, they are data-hungry, which is far from ideal in medical imaging, and their classification heads risk overfitting when data are scarce or heavily imbalanced. SVMs, by contrast, perform well on smaller datasets52. They seek to maximize the margin between classes in high-dimensional feature spaces and can use kernel functions to form non-linear decision boundaries, which suits sophisticated classification tasks. This motivates combining the two, with the ViT positioned as a deep feature extractor and the SVM providing the classification function. In this way, the overfitting risk of the ViT classification head under small-data conditions is avoided, while the SVM provides reliable classification. The architecture diagram of the model is given in Fig. 8.

2. Vision Transformer combined with Random Forest Classifier: The combination of ViTs and random forest classifiers pairs deep learning feature extraction with ensemble-based learning. Medical images have spatially related global dependencies, which the self-attention mechanism of ViTs captures effectively at the cost of producing high-dimensional features53. On the downside, ViTs are often equipped with dense classification layers, which makes generalization on small or imbalanced datasets a problem. Random forest classifiers, on the other hand, are ensemble methods that aggregate the predictions of multiple decision trees; they therefore tend to resist overfitting and are well suited to small datasets. They handle complex features without extensive computation and are easy to understand. When the two are combined, the ViT transforms medical images into globally contextualized features, while the RF classifier uses ensemble learning to enhance generalization and deal with noise or redundancy during classification. This integration reduces the reliance on large datasets. The architecture diagram of the model is given in Fig. 9.

To explain the classification gap between the standalone Vision Transformer (ViT), which was stuck at 50% accuracy, and the hybrid ViT classifiers with SVM and Random Forest, each of which exceeded 90% accuracy, we examined multiple potential causes. The ViT architecture captures global dependencies very well; however, it struggles with small-scale datasets because of its high data requirements and lack of strong inductive biases. Coupling ViT with traditional classifiers such as SVM and Random Forest reduces reliance on the ViT classification head and makes better use of the rich, high-dimensional features that ViT yields. In particular, SVM is effective at constructing separating hyperplanes in such high-dimensional spaces, where the risk of overfitting is controlled by regularization. As a result, the model generalizes better and its performance is more stable. To follow proper methodology, we checked our implementation and controlled for data leakage and overfitting using thorough cross-validation, pre-processing applied consistently across the train-test split, and reproducibility validation. Together, this evidence explains why the hybrid models perform strongly.

Several image processing techniques, including CLAHE and HOG, were applied to the dataset to strengthen the integrated models and improve their generalization. CLAHE improves the visibility of finer details in medical images and enhances local contrast, which is especially useful for emphasizing tumor regions. HOG captures structural information by extracting edge and gradient data, so it can also be used as a shape descriptor. These pre-processing techniques also make the feature representations extracted by the Vision Transformer more salient, guiding the models to attend to the regions of interest. The ViT-SVM and ViT-random forest integrations were then trained on these preprocessed datasets to test whether the additional pre-processing would improve the models’ ability to generalize across varying image conditions. The aim was to improve performance by reducing the challenges related to variation in lighting and image quality. These steps also help to mitigate overfitting by making the models focus on feature variation that can enhance classification performance, so the pre-processing itself adds an extra level of robustness.
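To make the shape-descriptor role of HOG concrete, the sketch below computes a magnitude-weighted orientation histogram for a single cell. It is a simplified illustration under stated assumptions: nine unsigned orientation bins, no vote interpolation, and no block normalization across cells, all of which a full HOG descriptor would add. The function name is hypothetical.

```python
import numpy as np

def cell_orientation_histogram(cell, bins=9):
    """Unsigned gradient-orientation histogram for one grayscale cell, weighted by magnitude."""
    cell = cell.astype(np.float64)
    gy, gx = np.gradient(cell)                        # gradients along rows (y) and columns (x)
    magnitude = np.hypot(gx, gy)
    angle = np.degrees(np.arctan2(gy, gx)) % 180.0    # unsigned orientation in [0, 180)
    idx = np.minimum((angle / (180.0 / bins)).astype(int), bins - 1)
    hist = np.bincount(idx.ravel(), weights=magnitude.ravel(), minlength=bins)
    norm = np.linalg.norm(hist)
    return hist / norm if norm > 0 else hist

horizontal_ramp = np.tile(np.arange(8), (8, 1))       # intensity increases left to right
vertical_ramp = horizontal_ramp.T                     # intensity increases top to bottom
h_hist = cell_orientation_histogram(horizontal_ramp)
v_hist = cell_orientation_histogram(vertical_ramp)
print(int(np.argmax(h_hist)), int(np.argmax(v_hist)))  # 0 4
```

The two ramps fall into different orientation bins, which is the property that lets the descriptor distinguish edge directions along a tumor boundary.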

The SVM classifier was set up with a linear kernel and C = 1.0, and no further adjustment to the kernel was made during tuning. Initial configurations used RBF and polynomial kernels, whereas the final configuration used the simpler linear kernel, which generalized better than the other options and did not overfit, particularly with features derived from the ViT model. Similarly, the Random Forest classifier was initialized with n_estimators equal to 100 and a fixed random seed to improve the reproducibility of the results. No extensive grid search was conducted at this iteration; these choices were based on existing domain knowledge and on prior trials with similar binary classification tasks. In later iterations, randomized hyperparameter search will be used to further improve performance.
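The role of C in a linear SVM can be illustrated with a small self-contained sketch. It minimizes the primal soft-margin objective \(\tfrac{1}{2}\lVert w\rVert^2 + C\sum_i \max(0, 1 - y_i(w^\top x_i + b))\) by batch subgradient descent on synthetic two-dimensional data. This is a teaching stand-in and not the classifier used in the study, which would normally come from a library; the learning rate, epoch count, and toy data are assumptions.

```python
import numpy as np

def train_linear_svm(X, y, C=1.0, lr=0.01, epochs=200):
    """Batch subgradient descent on 0.5*||w||^2 + C*sum(hinge). Labels must be -1 or +1."""
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1                          # samples inside or beyond the margin
        grad_w = w - C * (y[active, None] * X[active]).sum(axis=0)
        grad_b = -C * y[active].sum()
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def predict(X, w, b):
    return np.where(X @ w + b >= 0, 1, -1)

# Synthetic stand-in for extracted feature vectors: two well-separated clusters.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(2.0, 0.3, (40, 2)), rng.normal(-2.0, 0.3, (40, 2))])
y = np.concatenate([np.ones(40), -np.ones(40)])
w, b = train_linear_svm(X, y, C=1.0)
accuracy = float(np.mean(predict(X, w, b) == y))
print(accuracy)
```

A larger C penalizes margin violations more heavily, while a smaller C favours a wider margin; with high-dimensional ViT features and few samples, the latter acts as the regularization discussed above.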

Proposed methodology—deep learning approach

The workflow of the deep learning approach, shown in Fig. 10, begins with an exploration of multiple CNNs, including ResNet variants and Xception, leading to the selection of models for integration. To validate the model's predictions and enhance interpretability, this paper employs XAI techniques, specifically LIME, to provide model transparency. The performance of the ResNet variants, Xception, and the integrated hybrid models is reported in the results section for comparative analysis.

ResNet101 was designed to address the vanishing gradient problem that is common in deep networks. Its residual learning structure incorporates identity mappings through skip connections, allowing gradients to flow freely during backpropagation. The model is 101 layers deep, which lets it capture hierarchical features in considerable detail. This makes it useful for capturing nuances and outliers in tasks such as brain tumor segmentation. In addition, ResNet101 balances computational efficiency and representational power by using bottleneck structures to reduce complexity without impacting performance. Its pre-trained versions are trained on image datasets such as ImageNet, which provides general-purpose features that are important in many tasks. These traits of depth, residual learning, and transferability make ResNet101 well suited to medical imaging research where precision and scalability are required54.

The Xception model (short for Extreme Inception) is best known for using depthwise separable convolutions for feature extraction. This mechanism factorizes the processing of spatial and channel-wise information into separate operations, which considerably reduces compute requirements while preserving the depth of representation. Xception replaces standard convolutional layers with depthwise and pointwise convolutional layers, giving the model the opportunity to learn more complex patterns without a corresponding increase in parameters55. Its distinct advantage is that it can extract detailed information from high-dimensional datasets, making it well suited to brain tumor detection and other domains that require fine detail. Further, it lends itself to transfer learning because it is already trained on a large dataset such as ImageNet. Xception is a model of choice for complex image analysis problems, especially those involving pattern recognition, because it balances computational efficiency with model interpretability and performs well at discriminating features20.

Baseline experimentation

Before applying the integration techniques, a preliminary experiment was run to check the performance of the individual pre-trained models. Specifically, ResNet50 was trained with the Adam, RMSProp, and SGD optimizers, while ResNet101, ResNet152, and Xception were trained with Adam only. These early findings served as the baseline for determining the improvements expected from the integrated approaches. This section presents a comparative analysis of these preliminary experiments, in which default pre-trained models such as ResNet50, ResNet101, ResNet152, and Xception were trained without any enhancements or functional changes.

The results of the initial experiments, presented in Table 2, show notable performance differences among the pre-trained models with default settings on brain tumor MRI images, with no enhancement or additional technique applied in training or testing. Of these, ResNet50 with the Adam optimizer performed best, reaching 54% testing accuracy. This was better than the same model trained with SGD and RMSProp, which achieved 51% and 47% testing accuracy, respectively. Under the same conditions with the Adam optimizer, ResNet101, ResNet152, and Xception reached testing accuracies of 51%, 52%, and 49%, respectively.

None of the models performs well individually, yet Xception and ResNet101 were chosen for integration because they have complementary design features. ResNet101, as a deeper member of the ResNet family, uses residual connections to serve as a strong feature extractor that avoids the vanishing gradient problem of deep networks. Xception, by contrast, uses depthwise separable convolutions that are structurally efficient for establishing spatial relations among feature maps. Integrating the hierarchical feature extraction of ResNet101 with the spatial feature processing of Xception opens up synergistic opportunities to improve model performance. The differences between their architectures also help reduce overlap and redundancy while maximizing feature representation, so combining these models offers great potential to improve the accuracy of brain tumor detection.

Model integration

The integration draws on the architectural concepts of ResNet101 and Xception, and its synergy stems from harnessing the strengths of both models while offsetting their individual weaknesses. With its many layers and residual connections, ResNet101 is well suited to capturing hierarchical, wide-ranging features, while Xception efficiently captures channel-dependent and spatial features with its depthwise separable convolutions. Together they provide a more comprehensive view of the image, accounting for both global and key local features, so that the tumor is represented more clearly. Moreover, the combination lowers the probability of overfitting: the parameter efficiency of Xception compensates for the depth of ResNet101, allowing better performance on new data. This strategy improves the diagnostic accuracy and robustness of the model by taking advantage of the ensemble-like nature of the combination, which helps under the noise and distortions of medical images.

In addition, brain tumor identification requires detecting both large-scale features, such as the location of the tumor, and small-scale features, such as rough textures, which the integrated model does more efficiently than the individual models. This effectiveness is reflected in improved performance metrics such as accuracy and precision, which show the advantage of the integration approach in extracting and leveraging the multiple distinct features needed for correct tumor classification. As a result, the integrated model performs significantly better at brain tumor detection than ResNet101 or Xception alone.

We have implemented three different techniques to integrate ResNet101 and Xception based on the model’s architecture which are as follows:

1. Feature fusion technique

The feature fusion approach integrates more than one feature representation model to improve performance across different aspects of the input data. Each model views the image separately, so each can capture different features of the input image or target. Once the individual models have extracted their features independently, the features are fused, typically by concatenation, into a single, more robust representation. This fusion lets the combined model incorporate the most useful components of each model, yielding a feature set that captures both essential and intricate aspects of the image. Fusing multiple models in this way improves generalizability and precision and reduces errors that stem from feature extraction. For tasks like image recognition, this method is useful because it supports difficult decisions such as determining whether or not a brain scan shows a tumor. The adopted model uses the feature fusion technique with ResNet101 and Xception for binary brain tumor classification. The input images are first preprocessed: they are resized to 224 \(\times\) 224 and the pixel values are normalized. A common input layer ensures that identical images are provided to both pre-trained architectures, ResNet101 and Xception, both initialized with ImageNet weights. These models are loaded without their top layers so that new features can be learned, and they are frozen to protect their pre-trained information. Global average pooling is applied to the output of each model to reduce its spatial dimensions to a compact feature vector. These vectors are concatenated into a single representation, which allows ResNet101 to extract features at different levels while Xception captures spatial and channel-wise features more effectively. The integrated features are passed to a dense layer with a sigmoid activation to yield a score that predicts whether a tumor is present. The model was compiled with the Adam optimizer, binary cross-entropy loss, and accuracy as the performance measure. This architecture combines the advantages of ResNet101 and Xception, creating a strong framework for brain tumor detection.

2. Self-attention mechanism

The self-attention mechanism can improve a model by emphasizing the most salient parts of an input image through assigning importance to certain features. It allows the model to dynamically adjust the weight of each feature according to its relevance to all other features and to look for significant areas or patterns within the image. Self-attention first generates query, key, and value representations from the input feature map and uses them to compute attention scores. These scores indicate how strongly the model should focus on a feature relative to the other features. The scores are then used to reweight the feature map, so that predictive features are emphasized and irrelevant or non-predictive features are suppressed. This mechanism helps the model capture long-range interactions and contextual relationships among features within the image, which is important for recognizing both local and global structures, including small or faint elements in medical images. With self-attention, the model is expected to classify images better because it concentrates on the essential components of the image, yielding more accurate and robust predictions.

Fig. 11 visualizes the architecture of the self-attention mechanism technique, which uses the best features of ResNet101 and Xception for brain tumor identification. Using TensorFlow's image_dataset_from_directory utility, the images are loaded, resized to \(224 \times 224\), and split into training and validation sets. Both ResNet101 and Xception are loaded with ImageNet pre-trained weights, with their top layers removed to allow feature extraction. Both models are also frozen so that their weights are not updated during training. Global average pooling is applied to the feature maps from both models, and the pooled features are concatenated into a joint feature vector. Self-attention is then applied to these combined features so the model can focus on the important parts of the image. The output of the attention layer is flattened and passed to a final dense layer, where a sigmoid activation function gives the probability that the image contains a brain tumor. The model is trained with the Adam optimizer and binary cross-entropy loss on the given dataset for 20 epochs. The architecture uses the distinct advantages of both ResNet101 and Xception, while the self-attention mechanism augments the feature representation, enabling better performance on brain tumor classification.

3. Parallel model integration

The parallel model integration technique consists of a set of models that operate on the input data simultaneously, each responsible for a different characteristic of the image. The rationale is that different models can view the same data differently, so together they capture heterogeneity that a single model could miss. After all the models have transformed the input, their features are combined into a single representation, in most cases by concatenation. This set of features is then used in the final classification or regression task. Because of the parallel processing, the combined model benefits from the strengths of the individual models, leading to a better grasp of complexity and hence better generalization. Parallel systems that combine the models' outputs improve tolerance to data variations and reduce the risk of error, bias, or limitation that comes with a single model working on its own. Parallel model integration is especially beneficial in classification, where combining complementary models improves accuracy.

The architecture of parallel model integration in Fig. 12 integrates two models, ResNet101 and Xception: the input image is passed through both models, and their feature outputs are then merged by concatenation. Because the two models were pre-trained separately on ImageNet, they concentrate on different perspectives of the same data. ResNet101's deep structure efficiently captures hierarchical patterns, while Xception applies depthwise separable convolutions for fast, effective feature learning; the two feature sets are merged through the Concatenate layer. Since the combined features offer different perspectives, the model obtains a better and more robust representation of the input image by leveraging different architectures. The features are then passed through a final sigmoid layer that gives a probability score, which enables the model to classify brain tumors better than either model alone.
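The pooling and concatenation step shared by the three techniques can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: random arrays stand in for the backbone outputs, the 7 × 7 × 2048 feature-map shape assumed for both backbones at a 224 × 224 input is not stated in the text, and the function names are hypothetical.

```python
import numpy as np


def global_average_pool(feature_map: np.ndarray) -> np.ndarray:
    """Average an (H, W, C) feature map over its spatial axes, giving a (C,) vector."""
    return feature_map.mean(axis=(0, 1))


def fuse_features(resnet_map: np.ndarray, xception_map: np.ndarray) -> np.ndarray:
    """Pool each backbone's feature map and concatenate the two pooled vectors."""
    pooled_resnet = global_average_pool(resnet_map)
    pooled_xception = global_average_pool(xception_map)
    return np.concatenate([pooled_resnet, pooled_xception])


# Random stand-ins for the backbone outputs (assumed shape: 7 x 7 x 2048 each).
rng = np.random.default_rng(0)
resnet_map = rng.random((7, 7, 2048))
xception_map = rng.random((7, 7, 2048))

fused = fuse_features(resnet_map, xception_map)
print(fused.shape)  # (4096,)
```

The fused vector keeps the ResNet101 features in its first half and the Xception features in its second half, so a downstream dense layer can weight the two sources independently.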

Mathematical analysis of proposed methodology

To justify the proposed method, this section discusses the mathematical concepts behind its key steps, namely the fusion of complementary feature representations and the classification layer.

Feature extraction and fusion

The proposed Parallel Model Integration, combining ResNet101 and Xception architectures, is mathematically justified based on the fusion of complementary feature representations, enhanced generalization capability, and empirically validated superior performance.

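Given an input MRI image \(x \in {\mathbb {R}}^{H \times W \times C}\), feature vectors are extracted independently from the ResNet101 and Xception models:

$$\begin{aligned} F_{\text {ResNet}} = f_{\text {ResNet101}}(x) \in {\mathbb {R}}^{d_1}, \quad F_{\text {Xception}} = f_{\text {Xception}}(x) \in {\mathbb {R}}^{d_2} \end{aligned}$$(1)

The features extracted using (1) are concatenated to form a unified feature representation:

$$\begin{aligned} F_{\text {concat}} = \left[ F_{\text {ResNet}};\, F_{\text {Xception}} \right] \in {\mathbb {R}}^{d_1 + d_2} \end{aligned}$$(2)

This concatenated feature vector (2) captures both hierarchical (global) and localized (channel-specific) features critical for accurate brain tumor classification.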

Classification layer

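The combined feature vector \(F_{\text {concat}} \in {\mathbb {R}}^{d_1 + d_2}\) is fed into a dense layer with a sigmoid activation function to predict the probability \({\hat{y}}\) of tumor presence:

$$\begin{aligned} {\hat{y}} = \sigma \left( W^{\top } F_{\text {concat}} + b \right) \end{aligned}$$(3)

where \(W \in {\mathbb {R}}^{d_1 + d_2}\) and \(b \in {\mathbb {R}}\) are learnable parameters, and \(\sigma (\cdot )\) denotes the sigmoid function:

$$\begin{aligned} \sigma (z) = \frac{1}{1 + e^{-z}} \end{aligned}$$(4)

The model is optimized using the binary cross-entropy loss function:

$$\begin{aligned} {\mathcal {L}} = -\left[ y \log {\hat{y}} + (1 - y) \log (1 - {\hat{y}}) \right] \end{aligned}$$(5)

where \(y \in \{0,1\}\) represents the ground-truth label.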
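The forward pass and loss of the classification layer can be traced with a small numeric example. The sketch below uses only the Python standard library; the three-element feature vector, weights, and bias are made-up values chosen so that the pre-activation is approximately zero, and the function names are illustrative rather than taken from the authors' code.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_probability(features, weights, bias):
    """Dense layer with one output unit followed by a sigmoid activation."""
    z = sum(w * f for w, f in zip(weights, features)) + bias
    return sigmoid(z)


def binary_cross_entropy(y_true: int, y_prob: float, eps: float = 1e-12) -> float:
    """Binary cross-entropy for one sample; eps clipping avoids log(0)."""
    p = min(max(y_prob, eps), 1.0 - eps)
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1.0 - p))


# Hypothetical toy values (not from the paper): w . f + b is approximately 0.
features = [0.5, -1.0, 2.0]
weights = [0.2, 0.4, 0.1]
bias = 0.1

probability = predict_probability(features, weights, bias)
loss_if_tumor = binary_cross_entropy(1, probability)
print(probability, loss_if_tumor)  # approximately 0.5 and ln(2) = 0.693...
```

A prediction of 0.5 is maximally uncertain, which is why the loss equals ln 2 for either label; the loss falls towards zero as the predicted probability approaches the ground-truth label.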

Theoretical analysis of proposed model

Broader feature space

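The concatenation of orthogonal or weakly correlated feature vectors from ResNet101 and Xception expands the representational capacity. Writing \(F_{\text {ResNet}}\) and \(F_{\text {Xception}}\) for the two feature vectors and \(F_{\text {concat}}\) for their concatenation, and assuming orthogonality, the total feature energy is preserved and enhanced:

$$\begin{aligned} \Vert F_{\text {concat}} \Vert ^2 = \Vert F_{\text {ResNet}} \Vert ^2 + \Vert F_{\text {Xception}} \Vert ^2 \end{aligned}$$(6)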

This ensures richer and more diverse features critical for robust classification.

Orthogonal complementarity

ResNet101 captures deep, hierarchical spatial patterns, while Xception focuses on channel-wise and fine-grained spatial relationships through depthwise separable convolutions. Their combination enables the network to model complex tumor morphology effectively.

Generalization enhancement

Parallel integration reduces overfitting by allowing diverse model perspectives during learning. As the two networks process input images differently, the combined features act as a form of implicit regularization, leading to improved generalization on unseen datasets.

Overfitting mitigation strategies

To reduce overfitting, we adopted multiple approaches. First, we combined vision transformers with classical classifiers such as random forests and support vector machines, which are less prone to overfitting on limited datasets. In addition, enhancements such as CLAHE and HOG were applied to the dataset before training the hybrid of vision transformer and traditional machine learning algorithms, which helped reduce noise and highlight distinguishable features to improve generalization. Second, we implemented transfer learning by initializing ResNet101 and Xception with weights pre-trained on ImageNet and freezing some of their layers to preserve general features. The combined use of these two models provides implicit regularization by reinforcing complementary characteristics.

Evaluation metrics

To assess the performance of the proposed binary classification models (classes: Yes for tumor present and No for tumor absent), we employ the following evaluation metrics:

1. Accuracy: Measures the overall correctness of the model by calculating the ratio of correctly predicted tumor and non-tumor cases to the total number of predictions.

$$\begin{aligned} \text {Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \end{aligned}$$(7)

Relevance: Accuracy gives a general view of the model’s performance, but in medical applications where class imbalance or serious consequences exist (e.g., misdiagnosing a tumor), it alone may not be sufficient.

2. Precision: Indicates the proportion of correctly identified tumor cases among all cases predicted as tumors.

$$\begin{aligned} \text {Precision} = \frac{TP}{TP + FP} \end{aligned}$$(8)

Relevance: High precision reduces the risk of false alarms, helping to avoid unnecessary medical procedures or stress for patients incorrectly classified as having a tumor.

3. Recall (Sensitivity): Represents the proportion of actual tumor cases that were correctly identified.

$$\begin{aligned} \text {Recall} = \frac{TP}{TP + FN} \end{aligned}$$(9)

Relevance: Critical in clinical settings, high recall ensures that tumor cases are not missed, which is vital for timely diagnosis and treatment.

4. F1-Score: The harmonic mean of precision and recall, balancing both metrics.

$$\begin{aligned} \text {F1-Score} = 2 \times \frac{\text {Precision} \times \text {Recall}}{\text {Precision} + \text {Recall}} \end{aligned}$$(10)

Relevance: The F1-score provides a single performance measure when there is a trade-off between precision and recall, which is often the case in tumor detection where both types of errors are costly.
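The four metrics can be computed directly from confusion-matrix counts, as in the short sketch below. The counts are hypothetical and are not results from this study; returning 0.0 when a denominator is zero is a convention assumed here for the case where a class is never predicted.

```python
def safe_divide(numerator: float, denominator: float) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Accuracy, precision, recall, and F1-score for the positive (tumor) class."""
    accuracy = safe_divide(tp + tn, tp + tn + fp + fn)
    precision = safe_divide(tp, tp + fp)
    recall = safe_divide(tp, tp + fn)
    f1 = safe_divide(2 * precision * recall, precision + recall)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts for illustration only.
metrics = classification_metrics(tp=90, tn=85, fp=15, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")

# Degenerate case: a classifier that never predicts the tumor class.
never_positive = classification_metrics(tp=0, tn=50, fp=0, fn=50)
```

In the degenerate case, accuracy on a balanced set is still 0.5 while precision, recall, and F1-score for the tumor class are all 0, which illustrates why accuracy alone can be misleading.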

Computational environment

Table 3 lists the components and their specifications used for the implementation and experimentation in this study.

This configuration was sufficient for training all models on the 3000-image brain MRI dataset within reasonable time frames. Lightweight CNN models (e.g., DenseNet, EfficientNet) and hybrid classifiers (e.g., ViT + SVM) trained efficiently using the GTX 1650 GPU, while transformer-based models like Swin and DeiT required extended training durations and more memory management. Preprocessing and feature extraction steps were handled through a combination of CPU and GPU resources. Including these detailed specifications supports the reproducibility of our work and offers practical benchmarking insight for researchers aiming to replicate or adapt the methodology on mid-range hardware.

Results and discussions

Hybrid machine learning results

This research designs a brain tumor classification system by combining Vision Transformers (ViTs) with classical machine learning algorithms, namely support vector machines (SVM) and Random Forest. The developed model was tested on a standard dataset, which was partitioned into training and testing sets to establish a baseline accuracy. To further measure the transferability of the model, it was evaluated on self-collected data from other sources; the results are shown in Tables 4 and 5. This self-collected external data included images taken from views that were missing from the original training data, so that the model’s performance and generalization capability could be adequately assessed.

Tables 4 and 5 show a significant drop in accuracy for the ViT-based models when evaluated on unseen data, particularly for those trained on engineered image variants such as HOG and masked regions. Although some models achieved over 98% accuracy on the test data, accuracy on unseen images plummeted, often going below 50%. This discrepancy points to a severe lack of flexibility in certain pipelines built on ViT architectures. A key contributing factor is the models’ overfitting to particular feature-enhanced representations during training. Methods such as HOG and region masking emphasize certain spatial structures, causing the models trained with them to overfit to specific features rather than learn generalizable patterns. On the other hand, models trained with CLAHE-enhanced or normal images showed consistent performance on the unseen data, supporting the idea that preserving a broader distribution of texture and intensity features is beneficial for learning. These findings highlight the need to train ViT models on data with realistic augmentations to improve cross-distribution generalization in clinical settings.

To further evaluate the best-performing model, ViT integrated with RF and trained on CLAHE-preprocessed images, 5-fold cross-validation is applied: the dataset is split into 5 folds and the model is trained and validated on different folds so that every fold serves as the validation set exactly once. The cross-validation results are given in Table 6 and visualized as a confusion matrix in Fig. 13.

Deep learning experimentation results

Table 7 shows the results of evaluating all three integration techniques that merge the ResNet101 and Xception architectures: Parallel Model Integration, Self-Attention Mechanism, and Feature Fusion Technique. The models were trained and tested on partitions of the same dataset, where the testing data came from the initial split performed during dataset collection. This ensures that the evaluation reflects the model’s ability to generalize to data whose imaging distribution is similar to that of the training dataset. Of the three models, the Parallel Model Integration technique provided the best accuracy of 99.67%, and was also best on all other metrics (precision, recall, and F1-score) for both the “Tumor” and “No Tumor” classes. The Self-Attention Mechanism also performed well, achieving an accuracy of 98.16%, which shows its effectiveness in modeling dependencies between features at different spatial locations. On the other hand, the Feature Fusion Technique achieved a lower accuracy of 95.50%, which indicates that, while beneficial, merging features at the fusion level fails to exploit the full advantages of both backbone models. These results confirm that parallel integration offers the most robust and discriminative feature representation when tested on data drawn from the same distribution as the training set.

Table 8 presents the assessment of the three integration techniques (Parallel Model Integration, Self-Attention Mechanism, and Feature Fusion Technique) on a novel dataset of MRI scans of varying types, independent of the training set and never seen during training. This analysis measures the extensibility and practical versatility of each integration method. While the Parallel and Self-Attention methods attained an identical overall accuracy of 83.58%, they differed markedly in precision and recall. The Self-Attention Mechanism had high recall for tumor cases (98.73%) and strong precision for non-tumor cases (98.18%), indicating increased sensitivity but also likely more misclassification among non-tumor cases. The Parallel Model Integration obtained F1 scores of 0.8324 (tumor) and 0.8391 (no tumor), suggesting a moderate level of accuracy but more stable and balanced predictions across classes. The Feature Fusion Technique underperformed relative to the other models, achieving an overall accuracy of 79.79% with a marked discrepancy between tumor recall (91.41%) and no-tumor recall (68.18%).

Figures 14, 15 and 16 depict the learning progression of the three integration techniques over the epochs.

To assess the robustness and generalizability of the proposed model, 5-fold cross-validation was employed. This method balances reliable performance estimation against computational cost. The dataset was divided into 5 partitions (folds) of equal size. In each pass, one fold is set aside as the validation set and the remaining four folds serve as training data. The process is repeated 5 times so that each fold is used exactly once for validation. The results from all folds are then combined to form the overall metrics for the model. The choice of five folds provides a practical trade-off, reducing variance in performance estimation while keeping computational costs reasonable. The detailed comparison of the results of all three techniques is displayed in Tables 9, 10 and 11.
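The fold rotation described above can be sketched with the Python standard library alone. The function name, the seeded shuffle, and the handling of fold sizes when the sample count is not divisible by k are assumptions made for illustration; the 3000-sample count matches the dataset size reported for this study.

```python
import random


def k_fold_splits(n_samples: int, k: int = 5, seed: int = 0):
    """Return k (train_indices, val_indices) pairs that cover range(n_samples)."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffling is an assumption, not stated in the text

    # Distribute samples so that fold sizes differ by at most one.
    base, extra = divmod(n_samples, k)
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)
        folds.append(indices[start:start + size])
        start += size

    # Each fold is the validation set once; the other folds form the training set.
    splits = []
    for i, val in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, val))
    return splits


splits = k_fold_splits(3000, k=5)
for fold_number, (train, val) in enumerate(splits, start=1):
    print(f"fold {fold_number}: {len(train)} training, {len(val)} validation")
```

With 3000 images and five folds, every pass trains on 2400 images and validates on the remaining 600, and each image appears in a validation set exactly once.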

The confusion matrices from k-fold cross-validation in Figs. 17, 18, and 19 demonstrate the capacity of the integrated models to generalize across diverse data, with parallel model integration outperforming the other integration techniques. This supports its applicability as a reliable solution in real-world settings.

A notable gap in performance was observed between the internal test data and external datasets that the models had never encountered before. This gap illustrates the problem of generalization in medical imaging. DenseNet and the proposed ResNet101 + Xception model (parallel integration) achieved marked precision on both internal and external datasets, which points towards deep feature learning. On the other hand, the performance of transformer models such as DeiT and Swin Transformer dropped significantly on unseen datasets, which suggests dependence on large-scale training as well as sensitivity to domain shift. Similarly, among the hybrid ML models, the ViT models with SVM or RF trained on normal or CLAHE-enhanced images performed consistently well on external datasets, whereas models trained with HOG and masked-region inputs showed extremely low accuracy (dropping from over 90% to under 50%). This over-reliance on specific engineered representations left the models without diverse, generalizable features and hence without robustness. Such sharp differences in precision and accuracy severely undermine any clinically validated benchmarking. For these models to be usable in real-life situations, they must perform reliably across a wide range of imaging systems and patient demographics.

Benchmarking the proposed model against state-of-the-art architectures

In order to devise an exhaustive evaluation strategy and assess the accuracy of the suggested ResNet101-Xception parallel integration model, we performed benchmark tests using several advanced architectures including DenseNet, EfficientNet V1, EfficientNet V2, ConvNeXt, Swin Transformer, and DeiT. All models were trained on the same MRI dataset and evaluated under identical conditions to ensure a fair comparison. From the analysis presented, the relative advantages and disadvantages of each model with regard to classification accuracy and efficiency are apparent. Using both convolutional models and transformer-based architectures evidences the relevance and competitiveness of the proposed model in contemporary medical imaging frameworks, especially in automated brain tumor detection from MRI scans.

Table 12 compares the precision, recall, F1 score, and accuracy of the proposed model with those of several state-of-the-art deep learning models for both the “Tumor” and “No Tumor” classes. Of all the models, the proposed model performed best, with an overall accuracy of 99.67%, and was the only one to maintain precision, recall, and F1 score of 0.9967 for each class, showing balance across both classes. The benchmarked models did not do as well: DenseNet came next at 99.50% accuracy, followed by EfficientNet V2 at 98.83%, which maintained strong class-wise metrics. Some models, such as DeiT and EfficientNet V1, did not classify well, with DeiT scoring 50% accuracy and 0% precision for the tumor class. ConvNeXt and Swin Transformer performed moderately well but did not achieve balanced precision and recall. These results show that the proposed model is the most reliable and discriminative of those evaluated for classifying brain tumors, making it the most effective of the compared solutions for this medical imaging task.

Explainability analysis using LIME

To examine the classification model’s explainability, we applied the LIME method and manually assessed the MRI scans. The areas marked by LIME were compared with the anticipated tumor regions and, although LIME correctly highlighted areas of abnormal tissue to some degree, the highlighted regions extended beyond the anticipated tumor borders, which limits the precision of its localization. It should also be pointed out that LIME’s explanations are limited in scope and could differ with minor changes to an image56. This sensitivity to noise could, in certain conditions, undermine interpretation in a clinical setting if not carefully contextualized57. Additionally, because LIME is model-agnostic and lacks domain-specific features, it might overlook lesions that are obvious to radiologists58. Even with these challenges, LIME makes it easier to form intuitive interpretations of the models’ decisions alongside clinical insights where human scrutiny is needed to justify AI assertions59,60. The LIME explanations for all three techniques are provided in Figs. 20, 21 and 22.

Practical deployment workflow

A simple web prototype was built with the Gradio framework to demonstrate the practical use of the proposed brain tumor classification system. It lets users submit brain MRI scans and receive a classification result immediately. On the backend, the system loads a trained deep learning model, runs the image through the preprocessing pipeline, and then performs the prediction steps detailed in this paper.

While the application is not hosted online, users can run it locally by executing the code available in our public GitHub repository:

https://github.com/dvamsidhar2002/Brain-Tumor-Classification-using-Gradio

This interface is intended as a basis for future clinical or research-oriented implementations. It is simple and easy to use while leaving room for integration with hospital systems such as PACS or with mobile health systems.
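
The workflow described in this section (load a model, preprocess the uploaded scan, return a prediction) can be sketched as follows. This is a minimal illustration under stated assumptions rather than the code in the repository: the 224 × 224 input size, the class names, and `placeholder_model` are hypothetical, and the placeholder would be replaced by the trained network.

```python
import numpy as np

CLASS_NAMES = ["No Tumor", "Tumor"]
INPUT_SIZE = 224  # assumed input resolution, not confirmed by the paper


def preprocess(image):
    """Convert an H x W (x C) uint8 array into a normalized float batch."""
    img = np.asarray(image, dtype=np.float32) / 255.0
    if img.ndim == 2:  # grayscale -> 3 channels
        img = np.stack([img] * 3, axis=-1)
    # Nearest-neighbour resize with plain NumPy indexing (no extra dependencies).
    rows = np.arange(INPUT_SIZE) * img.shape[0] // INPUT_SIZE
    cols = np.arange(INPUT_SIZE) * img.shape[1] // INPUT_SIZE
    img = img[rows][:, cols, :3]
    return img[np.newaxis, ...]  # add the batch dimension


def placeholder_model(batch):
    """Hypothetical stand-in for the trained network; returns P(tumor)."""
    return float(batch.mean())


def classify(image):
    """Return a label -> probability dict, the format a Gradio Label accepts."""
    p_tumor = min(max(placeholder_model(preprocess(image)), 0.0), 1.0)
    return {CLASS_NAMES[0]: 1.0 - p_tumor, CLASS_NAMES[1]: p_tumor}


def build_demo():
    import gradio as gr  # imported lazily so the functions above run without Gradio

    return gr.Interface(
        fn=classify,
        inputs=gr.Image(type="numpy", label="Brain MRI scan"),
        outputs=gr.Label(num_top_classes=2),
        title="Brain tumor classification (sketch)",
    )

# To serve the app locally: build_demo().launch()
```

Keeping preprocessing and prediction in plain functions, separate from the interface, means the same code path could later be called from a hospital system integration without the web front end.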

Limitations

Despite its high predictive performance, the parallel model integration technique has several limitations. Running two deep networks on the same image at the same time incurs significant costs in computation, GPU memory, training time, and inference time compared with the less demanding feature fusion or self-attention modules. This can be problematic for smaller clinics and mobile health systems that have limited computational resources. Moreover, the rich feature diversity of the dual-model setup increases the risk of feature redundancy, overfitting, and high maintenance costs if proper regularization is not included. System complexity also grows because the two models must be trained in synchrony and require exhaustive hyperparameter optimization. In contrast, the feature fusion models run faster and at lower cost, and the self-attention mechanisms lag only slightly behind them in classification performance while providing a favorable balance between interpretability and computational cost. Future work on improving efficiency without losing accuracy could incorporate model compression techniques such as knowledge distillation or attention-based pruning.
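
As an illustration of one compression option mentioned above, the sketch below shows the commonly used soft-target form of a knowledge distillation loss, in which a small student network is trained to match the temperature-softened outputs of a larger teacher. It is a generic NumPy sketch, not part of this study: the `temperature` and `alpha` values and the example logits are assumptions chosen for illustration.

```python
import numpy as np


def softmax(logits, temperature=1.0):
    z = np.asarray(logits, dtype=float) / temperature
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def distillation_loss(student_logits, teacher_logits, labels,
                      temperature=4.0, alpha=0.7):
    """Weighted sum of a soft-target KL term and a hard-label cross-entropy term."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student_soft = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions, averaged over the batch.
    kl = np.sum(p_teacher * (np.log(p_teacher) - np.log(p_student_soft)),
                axis=-1).mean()
    # Standard cross-entropy against the ground-truth labels at temperature 1.
    p_student = softmax(student_logits)
    ce = -np.log(p_student[np.arange(len(labels)), labels]).mean()
    # The T^2 factor keeps the soft-target term on a comparable scale.
    return alpha * temperature ** 2 * kl + (1.0 - alpha) * ce


# Hypothetical two-class logits for two scans (columns: No Tumor, Tumor).
teacher = np.array([[4.0, -2.0], [-3.0, 5.0]])
labels = np.array([0, 1])

matching = distillation_loss(teacher, teacher, labels)      # student agrees
disagreeing = distillation_loss(-teacher, teacher, labels)  # student disagrees
print(matching < disagreeing)
```

In this setting the parallel ResNet101 and Xception model would play the role of the teacher, and a single lightweight network would be the student deployed on resource-limited hardware.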

A detailed comparison of the proposed method with other techniques is given in Table 13.

The misclassifications shown in Fig. 23 illustrate the limitations of the parallel ResNet101 + Xception model integration. In Fig. 23a, the tumor area may have low contrast, an atypical location, or irregular boundaries, all of which make it resemble normal brain tissue. Such features may not have been properly captured in the shared feature space constructed by the parallel deep models, so a false negative can occur. In contrast, Fig. 23b shows a false positive, where anatomical features or imaging structures that are not part of a tumor are mistaken for tumor features. The pre-trained models do not appear to generalize adequately to all variations, which could be due to the size of the dataset, noise, or the absence of outlier instances in the training data. In addition, the lack of localized attention mechanisms and the use of global average pooling may lead the model to ignore fine textural details that aid accurate classification.
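
The point about global average pooling can be illustrated with a small NumPy example. The feature maps below are hypothetical and are not taken from the trained model. They show that a strong, localized activation and a weak, diffuse activation can pool to the same vector, so the spatial detail that separates them is no longer available to the classification layer.

```python
import numpy as np


def global_average_pool(feature_map):
    """Collapse an (H, W, C) feature map to a length-C vector by spatial averaging."""
    return feature_map.mean(axis=(0, 1))


# Two single-channel 4x4 activation maps with the same total activation.
focal = np.zeros((4, 4, 1))
focal[1, 2, 0] = 1.6                 # one strong, localized response

diffuse = np.full((4, 4, 1), 0.1)    # weak response spread over every position

same_vector = np.allclose(global_average_pool(focal),
                          global_average_pool(diffuse))
print(same_vector)  # True: both maps pool to 0.1
```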

Ethical considerations

Before clinical implementation, automated brain tumor classification systems require careful ethical evaluation. The most important issue is the potential for soft tissue brain tumors to be misclassified as non-tumorous lesions (false negatives), which could delay necessary emergency treatment and have dire health implications. Conversely, these systems can also produce false positives, which may lead to unwarranted medical intervention and distress for patients. Such consequences underscore the importance of developing AI systems that assist in decision-making rather than acting autonomously as sole diagnosticians. Moreover, bias in the training data and opaque models may lead to inequity in the provision of healthcare services. Therefore, incorporating explainability methods such as LIME, along with clinician-controlled validation, is crucial to establishing trust, responsibility, and safety for wider clinical use.

Conclusion

This study proposes a classification system for brain tumors that combines ResNet101 and Xception using feature fusion, a self-attention mechanism, and parallel model integration. Of these methods, parallel model integration gave the best results because it draws on the strengths of both architectures for enhanced feature discrimination and classification robustness. The test results show that this method outperforms traditional approaches by effectively capturing the complex spatial and semantic patterns present in medical images. To address the limited transparency inherent in deep learning models, explainable AI, in the form of the LIME algorithm, was applied. LIME, like other XAI techniques, sheds light on the image regions that drive a prediction, which helps in checking that the model is free from bias and reliable in clinical use. Together, these methods demonstrate the potential of combining advanced medical image analysis with interpretability components in deep learning models.

Future directions

Looking ahead, we plan to extend this work to multi-class classification in order to differentiate distinct subtypes of brain tumors. Adding cross-modality MRI data (T1, T2, FLAIR) could increase the diagnostic utility further. Semi-supervised and self-supervised paradigms could alleviate the scarcity of labeled data, and robust domain adaptation techniques could be investigated to generalize across MRI scans from different sources. Another promising direction is to treat tumor segmentation and tumor classification as interdependent tasks, which could improve diagnostic accuracy.

Data availability

The datasets generated and/or analysed during the current study are available in the Kaggle repository, https://www.kaggle.com/datasets/abhranta/brain-tumor-detection-mri.

References

Siar, M. & Teshnehlab, M. Brain tumor detection using deep neural network and machine learning algorithm. In 9th international conference on computer and knowledge engineering (ICCKE). vol. 2019 363–368 (IEEE, 2019).

Rajput, G. S. et al. Brain tumour detection and multi-classification using advanced deep learning techniques. J. Electr. Syst. 20(3s), 2077–2088 (2024).

Sajid, S., Hussain, S. & Sarwar, A. Brain tumor detection and segmentation in MR images using deep learning. Arab. J. Sci. Eng. 44, 9249–9261 (2019).

Khan, A. H. et al. Intelligent model for brain tumor identification using deep learning. Appl. Comput. Intell. Soft Comput. 2022(1), 8104054 (2022).

Almadhoun, H. R. & Abu-Naser, S. S. Detection of Brain Tumor Using Deep Learning (Springer, 2024).

Dhakshnamurthy, V. K., Govindan, M., Sreerangan, K., Nagarajan, M. D. & Thomas, A. Brain tumor detection and classification using transfer learning models. Eng. Proc. 62(1), 1 (2024).

Brindha, P. G., Kavinraj, M., Manivasakam, P. & Prasanth, P. Brain tumor detection from MRI images using deep learning techniques. In IOP conference series: materials science and engineering. vol. 1055 012115 (IOP Publishing, 2021).

Methil, A. S. Brain tumor detection using deep learning and image processing. In 2021 International Conference on Artificial Intelligence and Smart Systems (ICAIS) 100–108 (IEEE, 2021).

Anantharajan, S., Gunasekaran, S., Subramanian, T. & Venkatesh, R. MRI brain tumor detection using deep learning and machine learning approaches. Meas. Sens. 31, 101026 (2024).

Asif, S., Khan, S. U. R., Amjad, K. & Awais, M. SKINC-NET: an efficient lightweight deep learning model for multiclass skin lesion classification in dermoscopic images. Multimedia Tools Appl. 2024, 1–27 (2024).

Khan, S. U. R., Asif, S., Bilal, O. & Ali, S. Deep hybrid model for Mpox disease diagnosis from skin lesion images. Int. J. Imaging Syst. Technol. 34(2), e23044 (2024).

Khan, S. U. R., Zhao, M. & Li, Y. Detection of MRI brain tumor using residual skip block based modified MobileNet model. Clust. Comput. 28(4), 248 (2025).

Bilal, O., Asif, S., Zhao, M., Khan, S. U. R. & Li, Y. An amalgamation of deep neural networks optimized with Salp swarm algorithm for cervical cancer detection. Comput. Electr. Eng. 123, 110106 (2025).

Khan, S. U. R., Asif, S., Zhao, M., Zou, W. & Li, Y. Optimize brain tumor multiclass classification with manta ray foraging and improved residual block techniques. Multimedia Syst. 31(1), 1–27 (2025).

Khan, S. U. R. et al. Optimized deep learning model for comprehensive medical image analysis across multiple modalities. Neurocomputing 619, 129182 (2025).

Cheng, J. et al. Enhanced performance of brain tumor classification via tumor region augmentation and partition. PLoS ONE 10(10), e0140381 (2015).

Jain, S. & Sachdeva, B. A systematic review on brain tumor detection using deep learning. In AIP Conference Proceedings. vol. 3168 (AIP Publishing, 2024).

Lin, L. P. & Seow, Z. H. Classifying brain tumours: a deep learning approach with explainable AI. In Proceedings of the 2024 14th International Conference on Biomedical Engineering and Technology 101–107 (2024).

Pasvantis, K. & Protopapadakis, E. Enhancing deep learning model Explainability in brain tumor datasets using post-heuristic approaches. J. Imaging 10(9), 232 (2024).

Joshi, S. A. et al. Enhanced pre-trained xception model transfer learned for breast cancer detection. Computation. 11(3), 59 (2023).

Paul, J. S., Plassard, A. J., Landman, B. A. & Fabbri, D. Deep learning for brain tumor classification. In Medical Imaging 2017: Biomedical Applications in Molecular, Structural, and Functional Imaging. vol. 10137 253–268 (SPIE, 2017).

Majib, M. S., Rahman, M. M., Sazzad, T. S., Khan, N. I. & Dey, S. K. Vgg-scnet: a vgg net-based deep learning framework for brain tumor detection on mri images. IEEE Access. 9, 116942–116952 (2021).

Srinivas, C. et al. Deep transfer learning approaches in performance analysis of brain tumor classification using MRI images. J. Healthcare Eng. 2022(1), 3264367 (2022).

Aziz, N. et al. Precision meets generalization: enhancing brain tumor classification via pretrained DenseNet with global average pooling and hyperparameter tuning. PLoS ONE 19(9), e0307825 (2024).

ZainEldin, H. et al. Brain tumor detection and classification using deep learning and sine-cosine fitness grey wolf optimization. Bioengineering 10(1), 18 (2022).

Latif, G., Butt, M. M., Khan, A. H., Butt, M. O. & Al-Asad, J. F. Automatic multimodal brain image classification using MLP and 3D glioma tumor reconstruction. In 9th IEEE-GCC Conference and Exhibition (GCCCE) 1–9 (IEEE, 2017).

Zhang, Y., Wang, J., Gorriz, J. M. & Wang, S. Deep learning and vision transformer for medical image analysis. MDPI (2024).

Zhang, P. et al. Multi-scale vision longformer: a new vision transformer for high-resolution image encoding. In Proceedings of the IEEE/CVF international conference on computer vision 2998–3008 (2021).

Pacal, I. A novel Swin transformer approach utilizing residual multi-layer perceptron for diagnosing brain tumors in MRI images. Int. J. Mach. Learn. Cybern. 15(9), 3579–3597 (2024).

Pacal, I., Celik, O., Bayram, B. & Cunha, A. Enhancing EfficientNetv2 with global and efficient channel attention mechanisms for accurate MRI-Based brain tumor classification. Clust. Comput. 27(8), 11187–11212 (2024).

İnce, S. et al. U-Net-based models for precise brain stroke segmentation. Chaos Theory Appl. 7(1), 50–60 (2024).

Bayram, B., Kunduracioglu, I., Ince, S. & Pacal, I. A systematic review of deep learning in MRI-based cerebral vascular occlusion-based brain diseases. Neuroscience (2025).

Martínez-Del-Río-Ortega, R., Civit-Masot, J., Luna-Perejón, F. & Domínguez-Morales, M. Brain tumor detection using magnetic resonance imaging and convolutional neural networks. Big Data Cogn. Comput. 8(9), 123 (2024).

Krishnapriya, S. & Karuna, Y. Pre-trained deep learning models for brain MRI image classification. Front. Hum. Neurosci. 17, 1150120 (2023).

Veeramuthu, A. et al. MRI brain tumor image classification using a combined feature and image-based classifier. Front. Psychol. 13, 848784 (2022).

Noreen, N. et al. Brain tumor classification based on fine-tuned models and the ensemble method. Comput. Mater. Continua 67, 3 (2021).

Maqsood, S., Damaševičius, R. & Maskeliūnas, R. Multi-modal brain tumor detection using deep neural network and multiclass SVM. Medicina 58(8), 1090 (2022).

Saeedi, S., Rezayi, S., Keshavarz, H. & Niakan Kalhori, S. MRI-based brain tumor detection using convolutional deep learning methods and chosen machine learning techniques. BMC Med. Inf. Decis. Mak. 23(1), 16 (2023).

Kadam, M. & Dhole, A. Brain tumor detection using GLCM with the help of KSVM. Int. J. Eng. Tech. Res. 7(2), 2454–4698 (2017).

Gokulalakshmi, A., Karthik, S., Karthikeyan, N. & Kavitha, M. S. ICM-BTD: improved classification model for brain tumor diagnosis using discrete wavelet transform-based feature extraction and SVM classifier. Soft. Comput. 24(24), 18599–18609 (2020).

Habehh, H. & Gohel, S. Machine learning in healthcare. Curr. Genom. 22(4), 291 (2021).

Sumathi, K. & Pandiaraja, P. E-health care patient information retrieval and monitoring system using SVM. In Smart Technologies in Data Science and Communication: Proceedings of SMART-DSC 2022 15–28 (Springer, 2023).