Abstract

In this study, we introduce the Channa Argus Optimizer (CAO), a novel swarm-based meta-heuristic algorithm inspired by the distinctive hunting and escaping behavior of Channa argus in the natural world. CAO emulates these two behaviors to balance exploration and exploitation in the solution space and to discourage premature convergence. The competitiveness and effectiveness of CAO are validated on 29 typical CEC2017 and 10 CEC2020 unconstrained benchmarks and on 5 real-world constrained mechanical engineering optimization problems. On the CEC2017 and CEC2020 functions, CAO is compared with 7 algorithms. In addition, CAO is tested on the CEC2017 benchmark functions at 10-D, 30-D, 50-D, and 100-D and evaluated against the other algorithms using the Wilcoxon rank-sum test and the Friedman mean rank. Finally, CAO is applied to five intricate engineering problems to show its robustness. The results demonstrate the effectiveness and potential of CAO, which ranks first among the compared algorithms.


Introduction

As society and technology have advanced, scientists now face a vast array of challenges1,2. These issues get more complicated with time. Problems in optimization can be roughly divided into a number of categories, including single-objective or multi-objective, continuous or discrete and static or dynamic3. These categories make it feasible to create resolution techniques that are as effective as possible while taking into account the inherent characteristics of the situations. In order to identify the optimal solution, resolution techniques scan the search space or solution space. They frequently call for iterative procedures that enhance one or more solutions simultaneously. As a result, the search space is explored and gradually advances in the direction of the best answer.

Numerous techniques have been developed throughout time to address optimization issues, which may be generally divided into two families: meta-heuristics and heuristics4,5,6. When obtaining an accurate solution in a finite amount of time is not feasible, heuristics are employed as an approach.

Although the result might not be the exact answer, heuristics yield a good approximation in a reasonable amount of time7,8, which makes them helpful for problems that call for approximate solutions. Meta-heuristics, in contrast, are higher-level heuristics employed to address optimization problems, especially those with incomplete information, such as those in machine learning and artificial intelligence9,10,11. Meta-heuristics can be used when the solution set is too large to be searched exhaustively. They may also be used in combinatorial and stochastic optimization, where they search a large, discrete set of feasible solutions. Meta-heuristics are a generic technique from which a broad range of optimization algorithms can be built, and they need less computing power than conventional approaches12. Meta-heuristic algorithms can be applied to almost any problem, although the result is not guaranteed to be the best or most precise.

Real-world optimization problems frequently have uncertain search spaces and stochastic behavior. As a result, derivative-free meta-heuristic algorithms that make no limiting assumptions have been developed. Because of their great flexibility, metaheuristic algorithms offer useful answers to a wide range of optimization problems, including complex and dynamic ones. Mathematical optimization techniques are of two types: deterministic and stochastic13. Deterministic methods, including linear and non-linear programming, explore the problem space and identify a solution by using gradient knowledge of the problem14. These methods work well for problems with linear search spaces, but when applied to non-linear search spaces, such as real-world non-convex problems, they are susceptible to entrapment in local optima. To solve such problems, these algorithms must be modified or hybridized15,16.

Metaheuristics are a well-known class of algorithms created especially to handle complex optimization problems. They are commonly grouped into human-based (HU), physics-based (PH), swarm intelligence (SI), and evolutionary algorithms (EA)17,18,19. One of the primary reasons for the growing acceptance of SI among these classes is the simplicity of its mathematical models. In optimization as well as many other fields, metaheuristics have become increasingly prominent20. This category includes stochastic optimization methods, which are useful in many sectors and scientific fields. Many new algorithms have emerged in recent years, such as the Builder Optimization Algorithm (BOA)21, Candle Flame Optimization (CFA)22, Greylag Goose Optimization (GGO)23, Makeup Artist Optimization Algorithm (MAO)24, Tailor Optimization Algorithm (TOA)25, Orangutan Optimization Algorithm (OOA)26, Paper Publishing Based Optimization (PPBO)27, Perfumer Optimization Algorithm (POA)28, Revolution Optimization Algorithm (ROA)29, Singer Optimization Algorithm (SOA)30, and Spider-Tailed Horned Viper Optimization (SHVO)31. However, the applications of these algorithms are not discussed in detail here because the primary focus of this study is theoretical. Researchers who wish to investigate real-world applications are referred to other pertinent sources32.

The primary objective of this paper is to introduce a novel metaheuristic algorithm named the Channa Argus Optimizer (CAO), which is specifically designed to address optimization problems with extensive search spaces. It is a nature-inspired algorithm that mimics the behavior of Channa argus. The proposed algorithm is evaluated against seven popular and recent metaheuristic algorithms using CEC2017, CEC2020, and industrial engineering problems. It is crucial to remember, nonetheless, that according to the No Free Lunch theorem for optimization, an algorithm’s performance on one optimization problem does not ensure that it will succeed on another problem with distinct features. Therefore, to maximize the efficiency and usefulness of metaheuristic algorithms, it is essential to carefully examine and adapt them to specific problem domains.

The main contributions of this paper are presented as follows.

1. An innovative optimization algorithm named Channa Argus Optimizer is introduced for global optimization and industrial engineering problems.

2. The performance of the Channa Argus Optimizer is evaluated on three challenging problem sets: CEC2017, CEC2020, and industrial engineering problems.

3. The performance of the Channa Argus Optimizer is compared with state-of-the-art swarm intelligence (SI) algorithms, physics-inspired algorithms, and biology-inspired algorithms.

The subsequent sections of the paper are structured as follows: Sect. "Literature review" presents an elaborate literature review, while Sect. "Channa argus optimizer(CAO)" provides a comprehensive explanation of the inspiration behind and the mathematical model of the newly proposed Channa Argus Optimizer. The outcomes derived from the experimentation are discussed in Sect. "Results on benchmark functions". The paper concludes by summarizing the findings and outlining potential future directions for research in Sect. "Engineering optimization test problems".

Literature review

Due to the increasing popularity of metaheuristic algorithms in solving different types of problems, various types of metaheuristic algorithms have been proposed. Each algorithm has its own characteristics and methods for solving optimization problems33,34. The inspiration for optimizing algorithms can include different types of natural phenomena, including animals and humans, physics, and evolution35,36. Various algorithms have been proposed based on the source of inspiration. In the context of mathematical modeling of natural behavior, these algorithms have led to the emergence of new methods and technologies in optimization methods37. Several optimization methods have been proposed in the literature, each with unique inspirations38,39. The inspiration for metaheuristic algorithms can be roughly divided into several natural sources. The general classification of meta-heuristic algorithms can be seen in Fig. 1.

The main inspiration for metaheuristic algorithms comes from animal life and behavior. Algorithms influenced by the collective behavior of social insects and animals have made significant contributions to the development of metaheuristics40,41. The Puma Optimizer (PO) is one such method42; it was proposed as a new optimization algorithm inspired by the intelligence and life of pumas. The Whale Optimization Algorithm (WOA) is a metaheuristic optimization algorithm43,44,45 whose bubble-net search models the social behavior of humpback whales. The Pied Kingfisher Optimizer is inspired by the unique hunting behavior and symbiosis of kingfishers in nature. The Mayfly Algorithm (MA) is inspired by the flight behavior and mating process of mayflies, and it combines the main benefits of evolutionary algorithms with those of swarm intelligence46,47,48. Ant Lion Optimization (ALO) is also inspired by nature: it mimics the five essential steps that antlions follow when hunting ants49,50,51,52. To handle optimization problems with various structures, another approach called the Pathfinder Algorithm (PFA) has been presented. Inspired by the collective movement of animal groups, it searches for the best food source or prey and establishes a hierarchical structure of group leadership.

The second source of inspiration is physical processes or mathematical models. For instance, the Arithmetic Optimization Algorithm (AOA), a novel metaheuristic technique, was proposed by Abualigah et al.53,54,55. This approach exploits the way the common arithmetic operations (multiplication, division, addition, and subtraction) behave in different search spaces, and the optimization is carried out using the mathematical description of AOA. Using mathematical models based on sine and cosine functions, Mirjalili created the Sine Cosine Algorithm (SCA)56,57,58,59, a population-based optimization method. To explore and exploit the search space at different stages of the optimization, SCA employs several random and adaptive parameters and fluctuates either outward from or toward the optimal solution. Furthermore, Faramarzi et al. presented the Equilibrium Optimizer (EO), based on a mass balance over a control volume, which treats each solution as a search agent whose position can reach an equilibrium state33.

Metaheuristic algorithms also draw inspiration from human populations and their behavior. The Driving Training Based Optimization (DTBO) algorithm is based on how people learn to drive60. It consists of three phases: practice, patterning on the instructor, and instruction by the driving instructor. To assess DTBO, its authors used 53 standard benchmark functions, including the CEC 2017 functions. The Group Teaching Optimization Algorithm (GTOA) was proposed to tackle a variety of optimization problems. It is influenced by the group teaching mechanism, and four fundamental rules were described for adapting group teaching into an optimization procedure. A novel human behavior-based optimization method modeled on the election and leader-selection process has also been presented. On this basis, the algorithm guides search agents in two stages: exploration and exploitation. Its authors tested the algorithm on 33 objective functions of different dimensions and complexities, and the results demonstrate that it handles a range of optimization problems effectively. To solve numerical and structural design optimization problems, Jahangiri et al. presented an efficient and reliable metaheuristic method called the ‘Interactive Autodidactic School’ (IAS). IAS is a group-based algorithm inspired by the experience of a self-study school where students enhance their knowledge through self-study, collaborative discourse, feedback, and competition.

As the explanations in this section show, each optimization algorithm has its own advantages and disadvantages. Significant drawbacks of existing optimizers include poor intensification and diversification, a lack of balance between the exploration and exploitation stages, entrapment in local optima, a lack of mechanisms adapted to discrete problems, and high execution time. An optimizer can be improved by adopting powerful mechanisms that increase the diversity of candidate solutions, so that the whole search space is explored, or that move quickly toward the best solution. However, to keep execution time at a reasonable level, these mechanisms should be as computationally simple as possible. In addition, intelligent mechanisms can be used to balance the exploration and exploitation stages, significantly improving the performance of algorithms61. These considerations motivate us to propose an algorithm with powerful exploration and exploitation mechanisms and minimal execution time, together with a new intelligent phase-transition mechanism that draws maximum performance from the algorithm. Finally, owing to these features, the proposed algorithm can be applied to various optimization problems in different search spaces. We study global optimization problems and engineering problems.

Channa argus optimizer(CAO)

This section first reviews the main inspiration for the Channa Argus algorithm, then gives a comprehensive description of the proposed algorithm and establishes its mathematical model.

Inspiration

Channa argus (see Fig. 2, photographed by the corresponding author Da Fang), also known as black fish, money fish, mullet, and snakehead fish, is a perciform fish of the genus Ophiocephalus. Adults are about 40 to 60 cm long, with a maximum length of 1 m. The usual weight is about 0.5 to 1 kg, and the maximum is 8 to 9 kg. The body is thick and elongated, cylindrical at the front and flattened at the rear. The head is large, long, and pointed, slightly flattened at the front and slightly raised at the back, and the top of the skull is covered with irregular scales. The snout is short and blunt, the mouth is large, the mouth cleft is slightly oblique, and the jaw is slightly prominent. The teeth are clustered, the upper jaw has a band of fine teeth, and the teeth on both sides of the lower jaw are sharp. The body is gray-black, darker on the back of the head and the back of the body, and light white on the abdomen; there are about 11 irregular large black spots on the side of the body and 1 small black spot along the middle line of the back. Channa argus is a large benthic freshwater fish. It is native to the river basins of the East Asian and Pacific river systems, and its distribution extends from the Korean Peninsula, the Heilongjiang River basin, and the Ussuri River basin on the border of China and Russia to Xingkai Lake and the Yangtze River basin in the south. In China, it is mainly distributed in Hunan, Hubei, Anhui, Henan, Shandong, Hebei, Liaoning, and other provinces. It was later widely introduced into Japan, Central Asian countries, and eastern North America. Channa argus is a ferocious carnivorous fish that feeds on other fish, frogs, crustaceans, and insects. Its mouthparts are specially adapted to predation, and it hunts mainly by ambush. Channa argus chooses different foods at different stages of growth.

Channa argus usually hide near grass or other cover, and when they spot an approaching fish or shrimp, they rush out and swallow the prey in one gulp. Their food intake is large: the maximum stomach capacity can reach 60% of body weight. After laying their eggs, Channa argus lurk beneath or near the nest to guard them. This protective behavior continues until the fry hatch and can swim freely and feed independently, which usually takes about 4 weeks, by which time the fry have reached 2 cm.

Inspired by the behaviors of Channa argus, we have developed a novel meta-heuristic algorithm named the Channa argus optimizer (CAO). In the subsequent subsection, we establish the mathematical model of CAO as follows.

Mathematical model

This section provides a detailed description of the mathematical model for CAO. We first present the mathematical expressions for the hunting and escaping strategies, followed by an analysis of the mathematical models for the hunting and escaping strategy.

Initialization

Like many other population-based techniques, CAO starts the search by creating a set of initial solutions at random from the search space. The initial population is created using the following equation:

\[{X}_{i,j}=LB+rand\times \left(UB-LB\right)\]

where UB and LB are the upper and lower boundaries of the search range, rand is a random value between 0 and 1, and \({X}_{i,j}\) is the location of the ith individual in the jth dimension.

After the initial population has been created, a fitness function is used to assess each individual’s fitness value, that is, how well it solves the problem.
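The initialization and first fitness evaluation described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function names, the population size, the bounds, and the sphere objective are all assumptions chosen for the example.

```python
import random

def initialize_population(n_agents, dim, lb, ub, rng):
    """Draw each coordinate as LB + rand * (UB - LB), with rand uniform in [0, 1)."""
    return [[lb + rng.random() * (ub - lb) for _ in range(dim)]
            for _ in range(n_agents)]

def sphere(x):
    """Hypothetical stand-in objective (not from the paper): sum of squares."""
    return sum(v * v for v in x)

rng = random.Random(0)  # fixed seed so the sketch is repeatable
population = initialize_population(n_agents=6, dim=3, lb=-100.0, ub=100.0, rng=rng)

# Evaluate every individual once after initialization (minimization assumed).
fitness = [sphere(x) for x in population]
best_index = min(range(len(population)), key=fitness.__getitem__)
print(population[best_index], fitness[best_index])
```

A seeded `random.Random` instance is used instead of the module-level generator so that repeated runs of the sketch produce the same initial swarm.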

Hunting strategies (exploration phase)

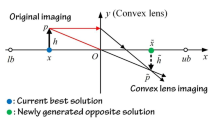

CAO’s exploratory phase was inspired by the predatory behavior of Channa Argus. The Channa Argus, known as the "tiger of the water", holds a top predatory position in the pond ecosystem with its powerful hunting ability and unique survival strategy. This carnivorous fish not only preys on various small fish and shrimp, but when it is large enough, it may even hunt frogs, young birds and small mammals. They prefer to inhabit still water environments with abundant aquatic plants and soft mud substrates, and their range of activities is relatively fixed. Channa Argus often lurk quietly in hidden spots at the bottom of the water, patiently waiting for fish, shrimp and other prey to pass by. Then they strike with lightning speed, catching the prey in one fell swoop without chasing. The Channa Argus will look around for a partner who has found prey, as shown in Fig. 3. The optimal solution, the suboptimal solution and the central position of the prey could all be the locations where Channa Argus ambushed, and the attack direction was in one direction between the optimal solution and the central position.

In CAO, the location of the search agent is determined by the location of the parent and the center, and the direction is determined by the optimal prey and Channa argus center. The center location of Channa arguses is updated according to the following equation:

where \({Z}_{i}(t)\) denotes the ith individual at the tth iteration, P(t) is a vector of Gaussian-distributed random numbers representing Brownian motion, the sign \(\otimes\) denotes entry-wise multiplication, and r is a number randomly chosen from [0,1]. Furthermore, G(t) is the current best solution, S(t) is an individual selected at random from a set of three elites in the swarm, and \(\overline{Z }(t)\) is the centroid position of the whole swarm. The corresponding mathematical expressions are as follows:

\[\overline{Z }(t)=\frac{1}{N}\sum_{i=1}^{N}{Z}_{i}(t)\]

\[S(t)\in \left[G(t),{Z}_{second}(t),{Z}_{c}(t)\right]\]

where N is the size of the swarm, \({Z}_{second}(t)\) is the second-best individual in the current population, and \({Z}_{c}(t)\) is the centroid position of the individuals whose fitness values rank in the top 50%. For simplicity, these individuals are called leaders in this study. \({Z}_{c}(t)\) is calculated using Eq. (5):

\[{Z}_{c}(t)=\frac{1}{{N}_{1}}\sum_{i=1}^{{N}_{1}}{Z}_{i}(t) \quad (5)\]

where \({N}_{1}\) is the number of leaders, that is, half the size of the whole swarm, and \({Z}_{i}(t)\) here is the ith best leader. Therefore, during each iteration, S(t) is randomly selected from a set consisting of the current best solution, the second-best individual, and the centroid position of the leaders.
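The quantities defined above (the swarm centroid, the leader centroid, the three-member elite set, and the Gaussian vector P(t)) can be illustrated with a short Python sketch. It covers only these building blocks and does not attempt the position-update equation itself. The function names are hypothetical, minimization is assumed, and drawing P(t) from a standard normal distribution is an assumption, since the text only says the numbers are Gaussian.

```python
import random

def centroid(points):
    """Component-wise mean of a list of equal-length position vectors."""
    n = len(points)
    return [sum(p[j] for p in points) / n for j in range(len(points[0]))]

def elite_pool(population, fitness):
    """Return [G, Z_second, Z_c]: best, second best, and centroid of the leaders."""
    order = sorted(range(len(population)), key=lambda i: fitness[i])
    n_leaders = max(1, len(population) // 2)   # N1: top 50% of the swarm
    leaders = [population[i] for i in order[:n_leaders]]
    return [population[order[0]], population[order[1]], centroid(leaders)]

def brownian_vector(dim, rng):
    """P(t): one Gaussian sample per dimension (standard normal is an assumption)."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]

# Tiny illustrative swarm in two dimensions, scored by the sum of squares.
population = [[1.0, 1.0], [2.0, 0.0], [0.0, 3.0], [4.0, 4.0]]
fitness = [sum(v * v for v in x) for x in population]

rng = random.Random(1)
pool = elite_pool(population, fitness)
s_t = rng.choice(pool)            # S(t): one elite picked at random
z_bar = centroid(population)      # centroid of the whole swarm
p_t = brownian_vector(2, rng)
print(pool, s_t, z_bar, p_t)
```

Because the leader centroid is a synthetic point rather than a member of the swarm, picking it as S(t) pulls an agent toward the middle of the good region instead of toward a single individual.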

Escaping strategy (exploitation phase)

Channa argus fry swim into the mouth of an adult Channa argus if danger arises while they are swimming about. This is not done to save the mother; the mother does not go blind after spawning. Rather, adult Channa argus are fierce and have strong attack ability, whereas the fry easily become the prey of other fish, so when the adult detects danger, it sucks the fry into its mouth, waits for the danger to pass, and then spits them out. The fry react in the same way and swim into the adult’s mouth when danger comes, so that they are safe. After becoming a mother, a Channa argus exhibits a completely different instinct: extreme protection of its young. This brood protection usually lasts for about a month, during which the fish typically show two behaviors. First, they act like dogs guarding their food: as soon as a strange object approaches the fry, they become particularly fierce and may attack at any time. Second, when they sense danger approaching, they take the fry into their mouths. This is a normal expression of the law of survival. Figure 4 shows the escape of Channa argus.

This part introduces the exploitative characteristic of CAO. When Channa argus encounter danger, search agents no longer spread widely across the solution space; instead, they are encouraged to exploit high-quality solutions around the current best solution. The escaping strategy is mathematically modeled in Eq. (6), and the parameter K(t) is calculated as follows:

where K(t) is the original position inertia, r is a random number chosen from [0,1], \({Z}_{i}(t)\) denotes the ith individual at the tth iteration, P(t) is a vector of Gaussian-distributed random numbers representing Brownian motion, the sign \(\otimes\) denotes entry-wise multiplication, t is the current iteration, and maxIter is the maximum number of iterations. Furthermore, G(t) is the current best solution, S(t) is an individual selected at random from a set of three elites in the swarm, and \(\overline{Z }(t)\) is the centroid position of the whole swarm.

Computational complexity analysis of CAO

This section explains the operational capabilities of the proposed CAO in terms of its time and space complexity.

Time complexity

To describe the computational complexity of an optimization algorithm, a function is typically used to relate the algorithm’s running time to the size of the input problem. This function is commonly expressed in Big-O notation. The time complexity of the algorithm is influenced by several factors, including the population size (N), the dimension of the problem (Dim), the number of iterations (T), and the cost of function evaluations (C). Thus, the time complexity of the CAO algorithm can be expressed as:

\[O(CAO)=O(\text{initialization})+O(\text{function evaluation})+O(\text{position update}) \quad (8)\]

where the components of Eq. (8) can be characterized by their time complexities as follows:

(1) The generation of the population initialization requires \(O(N\times Dim)\) time.

(2) The evaluation of the cost function requires \(O(T\times N)\) time.

(3) The position updating in the exploration or exploitation phase requires \(O(T\times N\times Dim)\) time.
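To make the relative size of these three terms concrete, the following Python sketch counts elementary steps for one illustrative setting. The function name and the values of N, Dim, and T are assumptions chosen for the example, not settings reported in the paper.

```python
def operation_counts(n_agents, dim, iterations):
    """Count elementary steps for the three components listed above."""
    init = n_agents * dim                    # population initialization: N x Dim
    evaluations = iterations * n_agents      # cost function calls: T x N
    updates = iterations * n_agents * dim    # position updates: T x N x Dim
    return {
        "init": init,
        "evaluations": evaluations,
        "updates": updates,
        "total": init + evaluations + updates,
    }

# Illustrative values only.
counts = operation_counts(n_agents=30, dim=100, iterations=1000)
update_share = counts["updates"] / counts["total"]
print(counts, round(update_share, 3))
```

For settings of this size, the position-update term accounts for almost all of the counted steps, which is why it dominates the overall bound.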

Therefore, the overall time complexity of CAO can be formulated as follows:

\[O(CAO)=O(N\times Dim)+O(T\times N)+O(T\times N\times Dim)\]

Space complexity

The space complexity of CAO is determined by two parameters, the number of Channa argus individuals and the dimension of the problem, which together set the memory required to store the population.

In short, CAO’s behavioral model involves constantly exploring and exploiting the water to find potential prey, moving toward the prey to capture it, and, when predators are encountered, swimming quickly toward the mother fish in search of safety. Mathematical models have been proposed for CAO to demonstrate its ability to solve optimization problems. It is important to note that the framework of CAO for addressing optimization problems resembles that of other meta-heuristic algorithms. The optimization process starts with a randomly generated population of potential solutions, which is improved iteratively until a predefined termination condition is met (i.e., the loop runs while \(t<T+1\)). The specific operators used to update the population are what distinguish one algorithm from another.
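The generic framework described in this paragraph (random initialization, then iterative improvement while t < T + 1, with an algorithm-specific update operator) can be sketched as follows. This is a skeleton only: `placeholder_update` is a hypothetical operator invented for the illustration and is not CAO's hunting or escaping rule, and clamping candidates to the bounds is an assumption that the text does not specify.

```python
import random

def optimize(objective, update, n_agents, dim, lb, ub, max_iter, seed=0):
    """Generic population-based loop; `update` is the algorithm-specific operator."""
    rng = random.Random(seed)
    population = [[lb + rng.random() * (ub - lb) for _ in range(dim)]
                  for _ in range(n_agents)]
    fitness = [objective(x) for x in population]
    best_fit = min(fitness)
    best = list(population[fitness.index(best_fit)])
    history = [best_fit]
    t = 1
    while t < max_iter + 1:                      # loop runs while t < T + 1
        for i in range(n_agents):
            candidate = update(population[i], best, rng)
            # Boundary handling by clamping is an assumption of this sketch.
            candidate = [min(max(v, lb), ub) for v in candidate]
            population[i] = candidate
            fitness[i] = objective(candidate)
            if fitness[i] < best_fit:
                best_fit, best = fitness[i], list(candidate)
        history.append(best_fit)
        t += 1
    return best, best_fit, history

def placeholder_update(x, best, rng):
    """Hypothetical operator (NOT CAO's): drift toward the best plus Gaussian noise."""
    return [xi + rng.random() * (bi - xi) + 0.1 * rng.gauss(0.0, 1.0)
            for xi, bi in zip(x, best)]

def sphere(x):
    """Stand-in objective for the demonstration."""
    return sum(v * v for v in x)

best, best_fit, history = optimize(sphere, placeholder_update,
                                   n_agents=10, dim=3, lb=-10.0, ub=10.0, max_iter=50)
print(best_fit, len(history))
```

Passing the operator in as a function mirrors the point made in the text: the loop is shared across meta-heuristics, and only the update rule changes.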

Pseudo-code and flowchart of CAO

This section presents the pseudo-code and flowchart of CAO. The mechanism is devised to maintain both exploitation and exploration. As presented in Algorithm 1, in the early stages of the iteration, individuals from the entire population are randomly assigned to exploitation or exploration.

The above procedure is shown in Fig. 5.

Results on benchmark functions

Assessment of CAO on CEC-2017 benchmark test functions

Description of the benchmark test functions

We employ the well-known CEC-2017 test suite to assess the CAO algorithm’s global exploration, local optimum avoidance, local exploitation, and other performance metrics. Due to space constraints, we concentrate on Dim = 10, 30, 50, and 100 in this experiment. The specifics of the CEC-2017 test functions, which are divided into four groups, are given in Table 1.

The algorithms’ capacity for exploration is examined using multimodal functions Fun4–Fun10, while their capacity for exploitation is assessed using unimodal functions Fun1 and Fun3. The more challenging test functions used to assess the algorithms’ local extremum avoidance are the composition functions Fun21–Fun30 and hybrid functions Fun11–Fun20. Note that because of its unpredictable nature, we did not utilize the Fun2 test function from the CEC-2017 benchmark.
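The difference between the unimodal and multimodal groups can be illustrated with two classical base functions of the kind such suites build on. The sketch below is an assumption-laden simplification: it implements the plain bent cigar and Rastrigin functions without the shift and rotation that benchmark suites apply, so its values are not comparable with the results reported in the tables.

```python
import math

def bent_cigar(x):
    """Unimodal base function: x1^2 + 1e6 * (sum of the remaining squares)."""
    return x[0] ** 2 + 1e6 * sum(v * v for v in x[1:])

def rastrigin(x):
    """Multimodal base function with a regular grid of local minima."""
    return sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) + 10.0 for v in x)

origin = [0.0, 0.0, 0.0]
integer_point = [1.0, 1.0, 1.0]   # lies close to one of Rastrigin's local minima

values = {
    "bent_cigar(origin)": bent_cigar(origin),
    "bent_cigar(integer_point)": bent_cigar(integer_point),
    "rastrigin(origin)": rastrigin(origin),
    "rastrigin(integer_point)": rastrigin(integer_point),
}
print(values)
```

On the bent cigar function every descent direction leads to the single optimum, which tests exploitation, whereas Rastrigin's many shallow basins can trap a search that does not explore enough.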

Experimental setup

We compared the proposed CAO algorithm with seven well-known algorithms, namely the Mayfly Algorithm (MA)46, Lion Swarm Optimization (LSO)58, Grey Wolf Optimizer (GWO)62,63, Harris Hawks Optimization (HHO)64,65,66, Whale Optimization Algorithm (WOA)43,44, Equilibrium Optimizer (EO)33, and Marine Predators Algorithm (MPA)50. Table 2 displays the parameter settings for all algorithms. In addition to the parameters shared by all algorithms, CAO uses r, a random number between 0 and 1, and the original position inertia, which is set to 0.2 here. The parameters of the comparison algorithms were set according to their respective literature. To ensure a fair comparison, each algorithm was run independently for 20 trials on each function. The statistical analysis uses the mean fitness value (Mean) and the standard deviation (Std) over the 20 trials. The experiments were implemented in MATLAB 2018b on a Windows 11 operating system, using an R7 CPU and 32 GB RAM.
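The summary statistics used in this comparison can be sketched in Python as follows. The code computes the mean and standard deviation of repeated trials and the Friedman-style mean rank of each algorithm across functions. All numbers are made up for the illustration, the function names are hypothetical, and the use of the sample standard deviation (normalized by n - 1) is an assumption, since the text does not say which normalization was used.

```python
from statistics import mean, stdev

def average_ranks(values):
    """Rank values ascending (1 = best for minimization); ties share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        shared = (pos + end) / 2.0 + 1.0      # average of the tied 1-based positions
        for k in range(pos, end + 1):
            ranks[order[k]] = shared
        pos = end + 1
    return ranks

def friedman_mean_ranks(mean_table):
    """mean_table[f][a] is the mean fitness of algorithm a on function f."""
    per_function = [average_ranks(row) for row in mean_table]
    n_algorithms = len(mean_table[0])
    return [mean(r[a] for r in per_function) for a in range(n_algorithms)]

# Hypothetical final fitness values from repeated trials on one function.
trials = [101.2, 100.8, 100.9, 101.5, 100.6]
summary = (mean(trials), stdev(trials))       # Mean and sample Std

# Hypothetical mean fitness: 3 functions (rows) x 3 algorithms (columns).
table = [[1.0, 2.0, 3.0],
         [5.0, 5.0, 9.0],
         [0.2, 0.1, 0.3]]
mean_ranks = friedman_mean_ranks(table)
print(summary, mean_ranks)
```

A lower mean rank indicates an algorithm that is more consistently near the top across functions, which is less sensitive to the scale of individual functions than comparing raw means.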

Qualitative analysis

To better understand how the CAO algorithm improves with each iteration, this section provides a qualitative assessment of CAO’s performance. Specifically, the convergence behavior of CAO is reflected by the search history, the convergence graph, the history of average fitness, and the trajectory in the first dimension. As depicted in Fig. 6, the first column shows the parametric space and reveals the smooth structure of unimodal problems such as \(C{2017}_{1}\) and \(C{2017}_{3}\). Meanwhile, a substantial number of local optima exist in the simple multimodal problems, and the complicated hybrid problems emulate real solution spaces well. The convergence graph in the second column is the most widely used metric for validating the performance of metaheuristic techniques. The convergence graphs attained by CAO suggest that the algorithm converges rapidly on all benchmarks. For unimodal problems, thanks to the interaction and learning between individuals, CAO shows good exploitative behavior in approaching the global optimum. When handling simple multimodal and hybrid problems, CAO sometimes falls temporarily into local optima, but it still achieves high precision under the guidance of the elites in the swarm. Meanwhile, in the last steps of the iteration, the dynamic step length generated by Brownian motion effectively discourages premature convergence. In the third column, a descending trend can be observed in the average fitness history. In the fifth column, the search history diagram shows the position history of all individuals during the iteration procedure. Individuals tend to discover promising areas in early iterations and finally cluster around the global optimal solution, which indicates that CAO realizes a good tradeoff between exploration and exploitation. In particular, for hybrid problem \(C{2017}_{24}\), CAO focuses on exploiting the left region of the solution space for a long time, whereas the best outcomes are attained in the right region. This shows the excellent exploration capability of the developed technique, which preserves the swarm’s diversity and facilitates local optima avoidance.

More investigation into the exploration and exploitation balance of the CAO algorithm is required to better understand why particular metaheuristics perform better for global optimization issues. Therefore, an empirical analysis was carried out to identify the factors influencing the CAO’s capacity for exploration and exploitation, and the findings were presented. Figures 7 and 8 display the CAO’s diversity and balance analyses, respectively. As observed in Fig. 7, CAO showed high exploration and low exploitation at the start of the iterations. It became increasingly exploitative as the iterations went on. As can be seen in Fig. 8, every graph has a declining trend, signifying a shift from early exploration to late exploitation.
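The text does not state how diversity and the exploration/exploitation balance were measured. As an assumption, the sketch below uses a dimension-wise, median-based diversity measure that is common in the metaheuristics literature and converts a diversity history into exploration and exploitation percentages. The function names and the sample data are hypothetical.

```python
from statistics import median

def swarm_diversity(population):
    """Mean absolute deviation from the per-dimension median, averaged over dimensions."""
    n, dim = len(population), len(population[0])
    total = 0.0
    for j in range(dim):
        column = [x[j] for x in population]
        med = median(column)
        total += sum(abs(med - v) for v in column) / n
    return total / dim

def exploration_exploitation(diversity_history):
    """Exploration% = Div / Div_max, exploitation% = |Div - Div_max| / Div_max."""
    div_max = max(diversity_history)
    if div_max == 0.0:                       # fully collapsed swarm in every iteration
        zeros = [0.0] * len(diversity_history)
        return zeros, list(zeros)
    xpl = [100.0 * d / div_max for d in diversity_history]
    xpt = [100.0 * abs(d - div_max) / div_max for d in diversity_history]
    return xpl, xpt

# One illustrative swarm snapshot and one illustrative diversity history.
snapshot = [[0.0, 0.0], [2.0, 4.0], [4.0, 8.0]]
snapshot_diversity = swarm_diversity(snapshot)

history = [4.0, 2.0, 1.0]                    # diversity shrinking over iterations
exploration, exploitation = exploration_exploitation(history)
print(snapshot_diversity, exploration, exploitation)
```

Under this measure, a swarm that starts spread out and then contracts produces exactly the pattern described above: a high exploration share early on that gives way to exploitation as the iterations proceed.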

In conclusion, Fig. 6's convergence and average fitness graphs show that CAO can accomplish consistent optimization for issues with varying degrees of complexity. The diversity, trajectory, and search history data show that CAO can appropriately strike a balance between exploitation and exploration. The capacity of CAO to identify the global optimum solution is due to this characteristic.

Statistical results

In this subsection, CAO and the compared algorithms are evaluated on a total of 29 standard functions. First, a scalability analysis was carried out by varying the dimension of the test functions. The functions were tested with dimensions of 10, 30, 50, and 100 in four separate experiments, and the corresponding results are given in Tables 3, 4, 5, and 6. This experiment shows whether the proposed algorithm can maintain its search capability when facing problems with large dimensions.

The statistics from this analysis can be seen in Table 3. CAO proved to be superior to the other algorithms on the CEC-2017 benchmark functions for 25 out of 58 indicators and for 13 out of 29 functions. The experimental results show that CAO is better than the other algorithms at solving unimodal functions. For multimodal functions, CAO was the most effective for two out of seven functions in terms of the mean indicator. For Fun6 and Fun9, CAO reaches the global optimal value, whereas the other algorithms (MA, LSO, GWO, HHO, WOA, EO, and MPA) only reach a lower precision. For Fun10, GWO ranked first according to the mean metric, and CAO ranked second. For standard deviation, HHO has the minimum value, but its mean is the maximum among the 8 algorithms. For Fun5, Fun6, and Fun7, the mean and standard deviation results of CAO were better than those of the other algorithms. Regarding hybrid functions, CAO demonstrated outstanding exploration capabilities. Compared to the other algorithms, CAO achieved superior results for Fun11, Fun12, and Fun18. MA obtains the optimal value in functions 15, 16, and 19, GWO obtains the optimal value in functions 17, 20, 22, 28, 29, and 30, EO obtains the optimal value in function 14, and EO obtains the optimal value in functions 13, 21, and 24. As can be seen from Table 3, CAO is second only to GWO in the complex hybrid functions, and the performance of EO is comparable to that of other functions. When the dimension is expanded to 30, 50, and 100, Tables 4, 5, and 6 lead to a conclusion similar to that for 10 dimensions. CAO is far better than the other algorithms on unimodal and multimodal functions, while on the complex hybrid functions only GWO is better than the other 6 algorithms, except EO.

Boxplot analysis

The distribution of the results over the independent runs is depicted in Fig. 9 using boxplots of the various approaches applied to the CEC-2017 benchmark functions. Outliers among the runs are indicated by the (+) symbols outside the boxplots’ edges. When the (+) signs lie outside the lower border, the algorithm successfully searched the search space. The CAO algorithm’s median results outperform those of the seven competing algorithms, as illustrated in Fig. 9. Moreover, across the whole set of functions, there is little variation between the upper and lower bounds of CAO, and CAO shows fewer (+) signs. This suggests that even for difficult problems, CAO continues to produce satisfactory optimization outcomes. Additionally, the boxplots confirm the preceding analysis by reaffirming the consistency and robustness of CAO.

Convergence analysis

We performed a convergence analysis in order to assess the exploration and exploitation of the CAO and seven other comparison algorithms. The convergence curves of these eight algorithms on the CEC-2017 benchmark functions at 10 dimensions are shown in Fig. 10 and include unimodal (Fun1, Fun3), multimodal (Fun4, Fun5, Fun6), hybrid (Fun10, Fun16, Fun17), and composition functions (Fun23), which represent the differences in the iterative optimization of the algorithms on different functions. Other optimizers tend to approach a point of stagnation as the number of iterations grows, as seen in Fig. 10. However, even after other optimizers have reached the point of convergence, CAO continues to look for newer optimum values. This suggests that CAO can assist the channa argus in jumping out of the local optimum value and is more reliable in terms of exploration abilities when compared to the competing algorithms.

The reason is that CAO avoids using existing optimal values from the beginning, diligently searching for new spaces, discovering a new potential optimal value, evaluating it against the original optimal value, and then greedily retaining the one with the best optimization result. The convergence curves of different algorithms exhibit different potentials in global optimization, especially in the case of multimodal functions. Most notably, this method skips local optima at the beginning and then converges precisely to the global optimum. Other algorithms, on the other hand, require more time to reach that point. For hybrid and composition functions, CAO not only converges quickly in the early stages, but also becomes proficient in re-exploring in the later stages.

Non-parametric statistical analysis

To determine if there was a statistically significant difference in accuracy between the optimization techniques used in the experiment, multiple statistical analyses were performed in this section, including the Wilcoxon Signed-Rank (WSR) test and Friedman’s test.

To obtain an accurate evaluation of CAO, we ran the WSR on this experiment. The WSR assesses whether there is a statistically significant difference between the results of the proposed approach and those of other methods67,68. The WSR was conducted at a 95% confidence level. The test results of the WSR with 29 functions and 20 runs are presented in Table 7. In this table, a (-) denotes that CAO exhibits a 95% significance level in comparison to the other optimizers, whereas a (+) indicates the opposite. An (=) signifies that there is no notable difference between CAO and the other optimizers. Based on the data in Table 7, CAO demonstrates a clear superiority over the other methods for the majority of functions.

To evaluate the effectiveness of our proposed method in comparison to its competitor algorithms, we employed the Friedman test in our study. This non-parametric test can detect significant differences in the performance of multiple optimizers, and we computed the ranks for a total of 29 functions across all techniques69,70. The radar chart in Fig. 11 presents the ranks of all the compared methods for each function, while Table 8 displays the average ranks. According to Table 8 and Fig. 11, CAO has the lowest average rank, indicating that it ranks first among all the algorithms and supporting the efficacy of our method in quickly finding the global optimum for numerous problems.
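The Friedman mean rank can be illustrated with a minimal sketch. The error values below are made-up placeholders, not values from the paper's tables, and the function names `rank_row` and `friedman_mean_ranks` are hypothetical. For each function the algorithms are ranked by error (1 is best, ties share the average rank), and the ranks are then averaged over all functions.

```python
def rank_row(values):
    """Rank values in ascending order (1 = smallest); ties get the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the group while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        avg = (pos + end) / 2 + 1  # average of the 1-based positions pos+1..end+1
        for k in range(pos, end + 1):
            ranks[order[k]] = avg
        pos = end + 1
    return ranks


def friedman_mean_ranks(scores):
    """scores[f][a] is the error of algorithm a on function f (lower is better)."""
    n_funcs = len(scores)
    totals = [0.0] * len(scores[0])
    for row in scores:
        for a, r in enumerate(rank_row(row)):
            totals[a] += r
    return [t / n_funcs for t in totals]


# Placeholder errors for three algorithms on four functions (illustrative only).
example = [
    [0.1, 0.5, 0.3],
    [0.2, 0.2, 0.9],
    [1.0, 3.0, 2.0],
    [0.0, 4.0, 5.0],
]
mean_ranks = friedman_mean_ranks(example)
```

The algorithm with the lowest mean rank is the one reported as ranking first.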

Scalability analysis

Many real-world optimization problems involve a large number of decision variables, which can make them computationally difficult to solve. Scalability analysis can be used to determine the algorithm’s limitations on large-scale problems and to understand how the number of variables (dimension) affects an optimization algorithm’s performance69,70. The performance of the CAO algorithm is assessed in this section by means of a scalability test on a number of functions with varying dimensions, including the composition functions (Fun21, Fun23, Fun24, Fun27), the unimodal function (Fun1), the multimodal functions (Fun5, Fun6, Fun8, Fun9), and the hybrid functions (Fun16, Fun17, Fun20). The test uses the CEC-2017 test suite with dimensions of 10, 30, 50, and 100. Each algorithm is allowed up to 50,000 function evaluations, and 30 independent runs are carried out. The average fitness values of the various algorithms for dimensions 10, 30, 50, and 100 are displayed in Fig. 12.

With the exception of the Fun1 function, the CAO algorithm produced the best search results for most of the functions that were studied. This experiment shows that the CAO algorithm can obtain higher convergence precision while managing the growth in optimization complexity brought on by an increase in the function’s dimension.

Computational time analysis

The computational time of the CAO algorithm is examined in this subsection in relation to other optimizers on the CEC-2017 benchmark functions. Table 9 presents the results of 20 executions of each optimization strategy for each assigned function.

From the table, it can be seen that the computation time of CAO is slightly longer than that of some other algorithms because it requires more computing power to execute its operators. Nevertheless, CAO still outperforms MA, EO, MPA, and HHO in a relatively short period of time. Although it may take more time to complete, CAO has many advantages over other optimizers.

Sensitivity analysis

In optimization, the values given to an algorithm’s parameters can have a significant impact on how effective the method is. It can be difficult to determine the ideal parameter settings, and doing so takes a lot of trial and error. Because parameter tuning entails running the algorithm several times with various parameter choices, it can be a time-consuming and computationally costly procedure. Additionally, different problems may require separate tuning runs because the ideal parameter settings can change with the particular problem being solved. Sensitivity analysis is used to determine which parameters have the greatest effect on the algorithm’s performance and to assess how changes to the algorithm’s parameters affect the quality of the solutions produced. In this study, we conducted a parameter sensitivity analysis to evaluate the effect of the original position inertia (OPI) on the performance of the algorithm. For four 10-dimensional functions from the CEC-2017 benchmark test, we examined the effects of different control parameters and the values associated with them. We conducted 20 separate runs, each including up to 500 function evaluations. By changing each parameter’s value separately while leaving the others constant, we were able to evaluate its impact. We report the findings of the sensitivity analysis in terms of mean fitness (Mean) and standard deviation (STD). We ran the CAO algorithm for the OPI values {0.1, 0.15, 0.2, 0.25, 0.3}. Table 10 shows the overall fitness values obtained by the algorithm for the different OPI values. We observed that the performance of the algorithm is best when OPI is set to 0.2. However, depending on the specific problem, practitioners may choose a different value for OPI.

Assessment of CAO on CEC-2020 benchmark test functions

To evaluate the performance of the CAO optimizer more precisely, we employed the CEC-2020 benchmark problems, which are widely used and complex. These test functions consist of composite, hybrid, multimodal, and rotated and shifted unimodal functions. The specific information of the CEC-2020 test suite is presented in Table 11, and Fig. 13 illustrates a two-dimensional format of some test functions. We analyzed the proposed CAO algorithm by comparing it with other well-known optimizers on benchmark functions. All tests were conducted using 10 and 20 dimensions, and each optimizer was given 500 iterations over 20 runs. The parameters for each algorithm remained consistent and are detailed in Table 2.

Tables 12 and 13 summarize the results of each algorithm, including the average and standard deviation of the 20 runs.

From the tables, we can observe that CAO ranks first on 6 functions in the 10-dimensional problems and on 9 functions in the 20-dimensional problems. In addition, CAO achieved the second-best result on the functions where it did not rank first. Figures 14, 15, 16, and 17 depict the convergence curves and boxplots of the eight algorithms for different CEC-2020 test suites. The figures show that the CAO algorithm converges rapidly to the optimal solution and achieves lower fitness values, with little spread across runs, as indicated by the small boxplot height. This demonstrates the robustness and stability of the CAO algorithm.

The Friedman test results for the eight algorithms are shown in Tables 14 and 15. According to these tables, CAO outperformed the seven other algorithms used for comparison and received the top rank. The Friedman rank tests therefore support the effectiveness and reliability of the proposed CAO. In addition, Tables 16 and 17 provide a comparison of the optimizers using the Wilcoxon rank test for 10 and 20 dimensions. A (-) sign indicates that the CAO algorithm is more efficient than the competitor, a (+) sign implies that the competitor is more efficient than CAO, and an (=) sign denotes equal performance between CAO and the competitor algorithm. Tables 16 and 17 present the statistical results of all 20 runs and reveal that the CAO algorithm performed markedly better than its competitors.

Engineering optimization test problems

In this section we assess the CAO algorithm’s performance on five real-world engineering optimization problems, encompassing both constrained and unconstrained problems. The multiple inequality constraints in the constrained problems call for a constraint-handling strategy, such as the death penalty approach71,72,73. We compared CAO against seven other optimization algorithms: MA, LSO, GWO, WOA, HHO, EO, and MPA. For every engineering problem, we ran CAO and the other optimization algorithms 20 times independently to guarantee unbiased assessments. A penalty function technique is used to deal with constraint violations; if any constraint is violated, the candidate solution is penalized heavily. The parameter configurations remain the same as those from earlier studies. The specifics of the constrained and unconstrained engineering benchmark problems are as follows.
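The penalty idea described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the helper name `penalized`, the penalty constant `1e10`, and the toy problem are all assumptions. Every constraint is written as g(x) <= 0, and any violation adds a large value to the objective so that infeasible candidates lose against feasible ones.

```python
def penalized(objective, constraints, penalty=1e10):
    """Wrap an objective so that violating any constraint g(x) <= 0 is penalized heavily.

    The penalty constant is an assumed value chosen to dominate the objective.
    """
    def wrapped(x):
        # Total amount by which the constraints are violated (0 if feasible).
        violation = sum(max(0.0, g(x)) for g in constraints)
        value = objective(x)
        if violation > 0.0:
            value += penalty * (1.0 + violation)
        return value
    return wrapped


# Toy problem (illustrative only): minimize x^2 subject to x >= 1, i.e. 1 - x <= 0.
def sphere(x):
    return sum(v * v for v in x)


def at_least_one(x):
    return 1.0 - x[0]


fitness = penalized(sphere, [at_least_one])
feasible_value = fitness([2.0])    # constraint satisfied, plain objective
infeasible_value = fitness([0.0])  # constraint violated, heavily penalized
```

Any of the compared optimizers can then minimize `fitness` as if the problem were unconstrained.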

Pressure vessel design problem

As shown in Fig. 18, the main goal of this problem is to reduce the overall cost of the raw materials needed for pressure vessel design. To do this, we seek the ideal values of the four decision variables: the thickness of the shell (Ts), the thickness of the head (Th), the inner radius (R), and the length of the cylindrical section excluding the head (L). The following is the mathematical formulation for this optimization problem:

Consider variable \(\mathbf{x}=[{x}_{1},{x}_{2},{x}_{3},{x}_{4}]=[{T}_{s},{T}_{h},R,L].\)

Minimize \(f(\mathbf{x})=0.6224{x}_{1}{x}_{3}{x}_{4}+1.7781{x}_{2}{x}_{3}^{2}+3.1661{x}_{1}^{2}{x}_{4}+19.84{x}_{1}^{2}{x}_{3}.\)

Subject to \({g}_{1}(\mathbf{x})=-{x}_{1}+0.0193{x}_{3}\le 0.\)

Variable range \(0\le {x}_{1},{x}_{2}\le 99.\)

We used the suggested CAO algorithm in conjunction with other competing methods to ascertain the ideal values for the pressure vessel design variables. Table 18, which displays the simulation results, demonstrates that the CAO method performs better than the other competing algorithms by reaching a lower value of the objective function. Furthermore, statistical data for each algorithm are shown in Table 19, emphasizing the CAO algorithm’s superiority in terms of the mean, worst, and standard deviation of the best solutions. A convergence curve is also provided, which shows the CAO algorithm’s gradual convergence to the ideal solution.
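As a rough sketch, the pressure vessel cost can be evaluated in a few lines. The objective and the first constraint follow the formulation above; the remaining three constraints are not given in this section and are assumed here from the form commonly used in the literature. The function names and the sample points are hypothetical and are not results from Table 18.

```python
import math


def pressure_vessel_cost(x):
    """Material cost for x = [Ts, Th, R, L], following the objective given above."""
    ts, th, r, length = x
    return (0.6224 * ts * r * length
            + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length
            + 19.84 * ts ** 2 * r)


def pressure_vessel_constraints(x):
    """Constraint values g_i(x); the design is feasible when every value is <= 0."""
    ts, th, r, length = x
    return [
        -ts + 0.0193 * r,                      # g1, as given in the text
        -th + 0.00954 * r,                     # g2, assumed (literature form)
        -math.pi * r ** 2 * length
        - (4.0 / 3.0) * math.pi * r ** 3
        + 1296000.0,                           # g3, assumed minimum-volume constraint
        length - 240.0,                        # g4, assumed length limit
    ]


# Hypothetical sample designs, chosen only to exercise the functions.
interior_design = [1.0, 0.5, 45.0, 150.0]
thin_shell_design = [0.5, 0.5, 45.0, 150.0]
is_feasible = all(g <= 0.0 for g in pressure_vessel_constraints(interior_design))
thin_is_feasible = all(g <= 0.0 for g in pressure_vessel_constraints(thin_shell_design))
```

Here the first design satisfies all four assumed constraints, while the second violates g1 because the shell is too thin for the chosen radius.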

Welded beam design

The welded beam design problem, a form of composite beam, is one of the most well-known engineering problems used to assess the algorithm’s performance. A weld is produced by welding multiple components together with molten metal in the way depicted in Fig. 19.

The goal is to lower the total cost of the beam by selecting the optimal values of four design parameters: bar thickness (b), bar length (l), weld thickness (t), and bar height (h). The optimization model can be expressed as follows.

Consider: \({\varvec{x}} = \left[ {{x}_{1}, {x}_{2}, {x}_{3}, {x}_{4} } \right] = \left[ {{h,l,t,b}} \right]\),

Minimize: \({f}\left( {\varvec{x}} \right) = 1.10471{x}_{1}^{2} {x}_{2} + 0.04811{x}_{3} {x}_{4} \left( {14.0 + {x}_{2} } \right)\),

Variables range: \(0.1 \le x_{1} \le 2,0.1 \le x_{2} \le 10,0.1 \le x_{3} \le 10,0.1 \le x_{4} \le 2,\)

Restriction condition: \(\left\{ {\begin{array}{*{20}l} {h_{1} \left( {\varvec{x}} \right) = \tau \left( {x} \right) - \tau_{\max } \le 0,h_{2} \left( {\varvec{x}} \right) = \sigma \left( x \right) - \sigma_{\max } \le 0,} \hfill \\ {h_{3} \left( {\varvec{x}} \right) = \delta \left( x \right) - \delta_{\max } \le 0,h_{4} \left( {\varvec{x}} \right) = x_{1} - x_{4} \le 0,} \hfill \\ {h_{5} \left( {\varvec{x}} \right) = P - P_{C} \left( x \right) \le 0,h_{6} \left( {\varvec{x}} \right) = 0.125 - x_{1} \le 0,} \hfill \\ {h_{7} \left( {\varvec{x}} \right) = 1.1047x_{1}^{2} x_{2} + 0.04811x_{3} x_{4} \left( {14.0 + x_{2} } \right) - 5.0 \le 0,} \hfill \\ \end{array} } \right.\)

where

\(P = 6000\,{\text{lb}}\), \(L = 14\;{\text{in}}\), \(\delta_{\max } = 0.25\;{\text{in}}\), \(E = 3 \times 10^{6} \,{\text{psi,}}\)

\(G = 12 \times 10^{6} \;{\text{psi}}\), \(\tau_{\max } = 13600\;{\text{psi,}}\) \(\sigma_{\max } = 30000\;{\text{psi}}{.}\)

After 20 runs, all results are gathered in Table 20. The results for CAO are the best across all four indicators. CAO is more stable, as seen by its superior average value and STD. The variable values for each algorithm’s optimal result are displayed in Table 21.

Three bar truss design

The three-bar truss design seeks to achieve the lowest weight feasible while building a truss with multiple limitations, including deflection, buckling, and stress. As shown in Fig. 20, this optimization problem has two design parameters, \(A_{1}\) and \(A_{2}\). It is expressed mathematically as follows.

Consider: \({\varvec{x}} = \left[ {x_{1} ,x_{2} } \right] = \left[ {A_{1} ,A_{2} } \right],\)

Minimize: \(f\left( {\varvec{x}} \right) = \left( {2\sqrt{2} x_{1} + x_{2} } \right) \cdot l,\)

Restriction condition: \(\left\{ {\begin{array}{*{20}c} {h_{1} \left( {\varvec{x}} \right) = \frac{{\sqrt{2} x_{1} + x_{2} }}{{\sqrt{2} x_{1}^{2} + 2x_{1} x_{2} }}P - \sigma \le 0,} \\ {h_{2} \left( {\varvec{x}} \right) = \frac{{x_{2} }}{{\sqrt{2} x_{1}^{2} + 2x_{1} x_{2} }}P - \sigma \le 0,} \\ {h_{3} \left( {\varvec{x}} \right) = \frac{1}{{\sqrt{2} x_{2} + x_{1} }}P - \sigma \le 0,} \\ \end{array} } \right.\)

Variables range: \(0 \le x_{1} ,x_{2} \le 1,\)

where \(l = 100\) cm, \(P = 2\) kN/cm\(^{2}\), \(\sigma = 2\) kN/cm\(^{2}\).

The CAO algorithm was employed to solve the three-bar truss design problem, and its effectiveness was compared with that of other optimization techniques. A comprehensive comparison of the capabilities of the CAO algorithm versus other methods is provided in Table 22, and the statistical results are shown in Table 23. The CAO algorithm outperforms other approaches, particularly in terms of the ’Std’, ’Worst’, ’Mean’, and ’Best’ metrics.
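The three-bar truss model is small enough to evaluate directly. The sketch below assumes the form of the problem commonly used in the literature, in which the square root applies to the constant 2 only; the function names and the sample design are hypothetical and are not taken from Table 22.

```python
import math

L_CM = 100.0   # bar length l in cm
P_LOAD = 2.0   # load P in kN/cm^2
SIGMA = 2.0    # allowable stress in kN/cm^2
SQRT2 = math.sqrt(2.0)


def truss_weight(x):
    """Weight objective (2*sqrt(2)*x1 + x2) * l for cross-sections x = [A1, A2]."""
    a1, a2 = x
    return (2.0 * SQRT2 * a1 + a2) * L_CM


def truss_constraints(x):
    """Stress constraints h_i(x); the design is feasible when every value is <= 0."""
    a1, a2 = x
    denom = SQRT2 * a1 ** 2 + 2.0 * a1 * a2
    return [
        (SQRT2 * a1 + a2) / denom * P_LOAD - SIGMA,
        a2 / denom * P_LOAD - SIGMA,
        1.0 / (SQRT2 * a2 + a1) * P_LOAD - SIGMA,
    ]


# Hypothetical interior design used only to exercise the functions.
sample = [0.9, 0.5]
sample_feasible = all(h <= 0.0 for h in truss_constraints(sample))
sample_weight = truss_weight(sample)
```

Wrapping `truss_weight` with a penalty on `truss_constraints` gives a single fitness function that any of the compared optimizers can minimize.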

Spring design

The design of a tension/compression spring, depicted in Fig. 21, is another standard engineering design problem used to assess the viability of the suggested CAO method in conventional engineering applications. The aim of this optimization task is to reduce the weight of the tension/compression spring. Shear stress, minimum deflection, and minimum surge frequency are the constraints of this engineering design problem. The diameter of the wire (d), the mean coil diameter (D), and the total number of active coils (N) are the design variables. The vector of optimization parameters for this design problem is x = [x1, x2, x3], where the variables x1, x2, and x3 stand for d, D, and N, respectively. The mathematical formulation of this optimization design problem is as follows:

Minimize: \(f\left( {\varvec{x}} \right) = \left( {x_{3} + 2} \right)x_{2} x_{1}^{2} ,\)

Restriction condition: \(\left\{ {\begin{array}{*{20}l} {h_{1} \left( {\varvec{x}} \right) = 1 - \frac{{x_{2}^{3} x_{3} }}{{71785x_{1}^{4} }} \le 0,} \hfill \\ {h_{2} \left( {\varvec{x}} \right) = \frac{{4x_{2}^{2} - x_{1} x_{2} }}{{12566\left( {x_{2} x_{1}^{3} - x_{1}^{4} } \right)}} + \frac{1}{{5108x_{1}^{2} }} - 1 \le 0,} \hfill \\ {h_{3} \left( {\varvec{x}} \right) = 1 - \frac{{140.45x_{1} }}{{x_{2}^{2} x_{3} }} \le 0,} \hfill \\ {h_{4} \left( {\varvec{x}} \right) = \frac{{x_{1} + x_{2} }}{1.5} - 1 \le 0,} \hfill \\ \end{array} } \right.\)

Variables range: \(0.05 \le x_{1} \le 2,0.25 \le x_{2} \le 1.30,2.00 \le x_{3} \le 15.\)

Regarding the values of design variables and objective cost values, the suggested CAO algorithm is contrasted with other prospective competing algorithms for the tension/compression spring design problem in Table 24. The results presented in Table 24 show that the suggested CAO algorithm can be used to optimally design the tension spring problem with an optimum cost of 0.0127. In many instances, this cost is marginally lower than that of competing optimization techniques.

An overview of the statistical results for this design problem, as obtained by the CAO algorithm and various competing meta-heuristics, is shown in Table 25. Once again, in terms of the best, average, worst, and standard deviation results, the CAO algorithm fared better than the other optimization methods. This indicates that CAO is more reliable and effective than many of its competitors at solving this design problem for the same number of iterations and search agents.
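The spring model can be checked numerically with a short sketch. It assumes the constraint set in the form commonly used in the literature, with the trailing "- 1" in the second constraint and the coefficient 140.45 in the third; the function names and the sample design are hypothetical and are not values from Table 24.

```python
def spring_weight(x):
    """Weight objective (N + 2) * D * d^2 for x = [d, D, N]."""
    wire_d, coil_d, n_coils = x
    return (n_coils + 2.0) * coil_d * wire_d ** 2


def spring_constraints(x):
    """Constraint values h_i(x); the design is feasible when every value is <= 0."""
    wire_d, coil_d, n_coils = x
    return [
        # Minimum deflection.
        1.0 - (coil_d ** 3 * n_coils) / (71785.0 * wire_d ** 4),
        # Shear stress (literature form, including the trailing -1).
        (4.0 * coil_d ** 2 - wire_d * coil_d)
        / (12566.0 * (coil_d * wire_d ** 3 - wire_d ** 4))
        + 1.0 / (5108.0 * wire_d ** 2) - 1.0,
        # Surge frequency (literature coefficient 140.45).
        1.0 - 140.45 * wire_d / (coil_d ** 2 * n_coils),
        # Outer diameter limit.
        (wire_d + coil_d) / 1.5 - 1.0,
    ]


# Hypothetical interior design used only to exercise the functions.
sample = [0.06, 0.5, 10.0]
sample_feasible = all(h <= 0.0 for h in spring_constraints(sample))
sample_weight = spring_weight(sample)
```

For comparison, a design near d = 0.0517, D = 0.357, N = 11.29, which is often quoted in the literature, gives a weight of about 0.0127, in line with the optimum cost reported above.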

Speed reducer design problem

The design of a speed reducer, whose structural schematic is shown in Fig. 22, is another real-world example that is frequently used as a reference benchmark for evaluating optimization techniques. There are seven decision parameters in this design problem, which makes it difficult. The weight to be minimized is subject to four kinds of constraints: bending stress of the gear teeth, surface stress, transverse shaft deflections, and shaft stresses. The seven design parameters are b, m, z, l1, l2, d1, and d2: the face width, the tooth module, the number of teeth in the pinion, the lengths of the first and second shafts between the bearings, and the diameters of the first and second shafts, respectively. These parameters are represented by the vector x = [x1 x2 x3 x4 x5 x6 x7] = [b m z l1 l2 d1 d2]. The following is a description of the mathematical formula for this problem:

The cost function that needs to be optimized can be described as follows:

The following limitations apply to this engineering design:

where, for the variables b, m, z, l1, l2, d1, and d2, the ranges of the design variables are 2.6 ≤ x1 ≤ 3.6, 0.7 ≤ x2 ≤ 0.8, 17 ≤ x3 ≤ 28, 7.3 ≤ x4 ≤ 8.3, 7.3 ≤ x5 ≤ 8.3, 2.9 ≤ x6 ≤ 3.9, and 5.0 ≤ x7 ≤ 5.5, respectively.

Table 26 shows a comparison between the designs and cost solutions for the speed reducer design challenge that CAO came up with and the other optimization methods mentioned above. Table 26 shows that the suggested CAO algorithm works better than other competing optimization methods because it has the best design cost for this problem, which is around 2994.471. This suggests that CAO can be used to determine the best design for this issue.

The statistical results of the CAO algorithm and other optimization strategies for the speed reducer design problem are tabulated in Table 27. These statistics show that the CAO algorithm is superior to the other meta-heuristic techniques and produced the best solutions among all the competing algorithms.
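The cost function for this problem is not reproduced in the text above. As a hedged illustration only, the sketch below uses the weight function commonly quoted for the speed reducer benchmark in the literature; it is an assumption and may differ from the exact expression used by the authors. The function name and the sample design are hypothetical, and the eleven constraints of the benchmark are omitted.

```python
def speed_reducer_weight(x):
    """Weight for x = [b, m, z, l1, l2, d1, d2], assumed literature form."""
    b, m, z, l1, l2, d1, d2 = x
    return (0.7854 * b * m ** 2 * (3.3333 * z ** 2 + 14.9334 * z - 43.0934)
            - 1.508 * b * (d1 ** 2 + d2 ** 2)
            + 7.4777 * (d1 ** 3 + d2 ** 3)
            + 0.7854 * (l1 * d1 ** 2 + l2 * d2 ** 2))


# A design often quoted in the literature for this benchmark (not from Table 26).
literature_design = [3.5, 0.7, 17.0, 7.3, 7.71532, 3.35021, 5.28665]
literature_weight = speed_reducer_weight(literature_design)
```

Under this assumed form, the quoted design evaluates to roughly 2994.47, which is consistent with the cost of around 2994.471 reported above.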

Cantilever beam design problem

Despite the similarities to the previous problem, the objective of this one is to lower the weight of a cantilever beam composed of five components, each of which has a hollow cross section that gradually thickens. As seen in Fig. 23, the beam is securely supported and the free end of the cantilever is subject to an external vertical force.

The goal of this design problem is to reduce the weight of a cantilever beam while placing a maximum limit on the vertical displacement of the free end. The design variables are the cross-sectional heights and widths of each part. Because the upper and lower bounds are very large and very small, respectively, the bound constraints do not become active in this problem. Finding workable combinations of the five structural design parameters is the first step in solving the cantilever beam design problem. These design parameters can be represented by the vector \(\overrightarrow{x}=[{x}_{1},{x}_{2},{x}_{3},{x}_{4},{x}_{5}]\). The objective cost function for this design problem can be written as follows:

f(x) = 0.0624(x1 + x2 + x3 + x4 + x5), where the optimization constraint that follows is applicable.

The variables were assumed to be in the range 1 ≤ xi ≤ 10 for this design problem, where i ∈ {1, 2, 3, 4, 5}.

The optimization results of the suggested CAO technique and the other competing meta-heuristics used to address this problem are shown in Table 28. Based on the cost values given in Table 28, the suggested CAO algorithm yielded the optimum solution for the cantilever design problem, with an ideal cost of around 263.896. Compared with the other competing algorithms, CAO was highly competitive and outperformed the majority of them.

The statistical optimization results of the CAO method and the other optimization strategies covered above are compiled in Table 29 with regard to the mean, standard deviation, best, and worst scores for the cantilever design problem across 20 different runs.

As demonstrated by the solutions in Table 29, the suggested CAO approach fared better statistically than many other competing algorithms for this design problem. This suggests that CAO is at least on par with the other meta-heuristics on this problem and better than most of them.

Gear train design problem

As shown in Fig. 24, the primary goal of this problem is to reduce the cost of the gear ratio in a gear train, which comprises four design variables, namely the numbers of teeth on the gears. This unconstrained discrete design problem can be formulated as follows.

Consider variable \(\mathbf{z}=[{z}_{1},{z}_{2},{z}_{3},{z}_{4}]=[{T}_{a},{T}_{b},{T}_{d},{T}_{f}].\)

Variable range: \(0.01\le {z}_{i}\le 60,\quad i=1,\ldots,4.\)

Several techniques, including the proposed CAO algorithm, were used to determine the optimal parameter values for the gear train design problem. The results are shown in Table 30 and show that, with the exception of the MFO algorithm, the CAO algorithm yields the same minimum cost as the other approaches. The statistical results for each method are shown in Table 31, which shows that the CAO algorithm is the most effective in terms of both the “Mean” and “Std” metrics.
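The objective function for the gear train problem is not shown in the formulation above. A minimal sketch, assuming the form commonly used in the literature (the squared deviation of the achieved gear ratio from the target 1/6.931), is given below. The function name, the pairing of the variables in the ratio, and the sample teeth counts are assumptions and are not taken from Table 30.

```python
def gear_train_cost(z):
    """Squared error between the target ratio 1/6.931 and (Tb * Td) / (Ta * Tf).

    z = [Ta, Tb, Td, Tf]; the variable pairing is assumed from the literature.
    """
    ta, tb, td, tf = z
    return (1.0 / 6.931 - (tb * td) / (ta * tf)) ** 2


# Teeth counts often quoted in the literature for this benchmark.
literature_design = (49, 16, 19, 43)
literature_cost = gear_train_cost(literature_design)

# A poor design for contrast: all gears equal gives a ratio of 1.
equal_gears_cost = gear_train_cost((12, 12, 12, 12))
```

Because the teeth counts are integers, an optimizer working in a continuous space would typically round each variable before calling `gear_train_cost`.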

Discussion

This part summarizes and discusses the experimental results, which are categorized into four groups to fully demonstrate the competitiveness and efficacy of the CAO algorithm suggested in this work. First, the qualitative analysis shows that CAO balances exploration and exploitation well, avoids local optima, shows good convergence, and demonstrates swarm intelligence traits. Second, the comparison results show that CAO exhibits better convergence accuracy across the majority of benchmark functions (CEC-2017 and CEC-2020) when compared to popular algorithms. Its growing advantage as the problem dimension increases is especially notable. Convergence curves further demonstrate CAO’s potential for optimization, indicating robustness against local optima and sustained convergence behavior in the late iterations. CAO’s result distribution is more concentrated, as shown by the box plots. Additionally, the statistical analysis confirms CAO’s outstanding performance on benchmarks of many dimensions, demonstrating thorough and efficient optimization capabilities.

Third, CAO demonstrates its advantage in handling complicated issues by achieving expected numerical optimization outcomes even when compared to two benchmark-winning techniques and six sophisticated algorithms.

Lastly, compared to several state-of-the-art methods, CAO ranks among the top optimizers and obtains the best solutions for eight well-known constrained industrial problems. These results highlight how the suggested CAO effectively handles local optima and premature convergence across many problem classes with its exploratory and exploitative methods, demonstrating a greater ability to avoid local optima stagnation.

The better performance of CAO over current optimization algorithms is firmly supported by our experimental data. This benefit is due to a number of important factors:

- CAO employs a hunting search strategy that allows it to solve optimization problems with different characteristics, including single-peak landscapes, numerous variables, and constraints.

- The escape strategy in CAO is designed for exploration and helps the algorithm to carry out global search.

- By successfully avoiding local extremes and premature convergence, the commensalism phase adds to the algorithm’s resilience throughout exploration and exploitation.

- CAO is distinguished by its simplicity and ease of use.

- CAO offers benefits in terms of computational cost and complexity while guaranteeing the best outcomes.

The analysis presented above leads to the following conclusions: the suggested algorithm performs exceptionally well in terms of optimization, particularly when dealing with multimodal and composite functions. Three main qualities are responsible for this effectiveness: its extraordinary ability to avoid local optima, its excellent exploration capabilities, and its skillful balance between exploration and exploitation.

These search features are closely related to the algorithm’s multi-strategy search mechanism, which guarantees solution variety, promotes thorough exploration, and reduces the possibility of becoming trapped in local optima. It is ideal for resolving industrial optimization issues because of these qualities.

Conclusion and future work

This study introduces a new biologically inspired optimizer based on the hunting and escape behavior of Channa Argus in their natural environment. The CAO’s performance is evaluated using a broad range of 39 benchmark functions, including unimodal, multimodal, hybrid, and composite functions. To underscore its optimization capabilities, CAO is compared against state-of-the-art meta-heuristics. Results from actual engineering problems show that CAO is particularly competitive when it comes to solving engineering tasks with uncertain and constrained search spaces.

Even with its exceptional efficiency, CAO has limitations when it comes to solving some discrete optimization problems, especially in high-dimensional binary spaces where many optimization algorithms are challenged by the exponential growth of the solution space. Furthermore, CAO may exhibit relative inefficiency on particular engineering problems, just like any optimizer. These shortcomings offer useful guidance for further study and create opportunities for advances in optimization algorithms for challenging, real-world problems. Combining CAO with other algorithms for synergistic enhancement to overcome its shortcomings is also a future development direction. Meanwhile, CAO also has a wide range of applications, such as bearing fault diagnosis74, defect identification75, complex machinery applications76, waste management techniques77, production forecasting78, and predictive analysis79.

Data availability

The code used to evaluate the CAO algorithm is available with the paper. The datasets used and analysed during the current study are available from the corresponding author on reasonable request.

References

Braik, M. et al. Tornado optimizer with Coriolis force: A novel bio-inspired meta-heuristic algorithm for solving engineering problems. Artif. Intell. Rev. 58, 123 (2025).

Ghasemi, M. et al. An efficient bio-inspired algorithm based on humpback whale migration for constrained engineering optimization. Results Eng. 25, 104215 (2025).

Kaur, S., Awasthi, L. K., Sangal, A. L. & Dhiman, G. Tunicate Swarm Algorithm: A new bio-inspired based metaheuristic paradigm for global optimization. Eng. Appl. Artif. Intell. 90, 103541 (2020).

Ge, Y., Chen, Z. & Liu, Y. An efficient keywords search in temporal social networks. Data Sci. Eng. 8, 368–384 (2023).

Zhong, C. Starfish optimization algorithm (SFOA): A bio-inspired metaheuristic algorithm for global optimization compared with 100 optimizers. Neural Comput. Appl.

Połap, D. & Woźniak, M. Red fox optimization algorithm. Expert Syst. Appl. 166, 114107 (2021).

Deng, L. & Liu, S. Snow ablation optimizer: A novel metaheuristic technique for numerical optimization and engineering design. Expert Syst. Appl. 225, 120069 (2023).

Li, K. et al. A multi-strategy enhanced northern goshawk optimization algorithm for global optimization and engineering design problems. Comput. Methods Appl. Mech. Eng. 415, 116199 (2023).

Hemeida, M. G., Alkhalaf, S., Mohamed, A.-A.A., Ibrahim, A. A. & Senjyu, T. Distributed generators optimization based on multi-objective functions using manta rays foraging optimization algorithm (MRFO). Energies 13, 3847 (2020).

Zhao, W., Zhang, Z. & Wang, L. Manta ray foraging optimization: An effective bio-inspired optimizer for engineering applications. Eng. Appl. Artif. Intell. 87, 103300 (2020).

Alghamdi, T. A. H., Anayi, F. & Packianather, M. Optimal design of passive power filters using the MRFO algorithm and a practical harmonic analysis approach including uncertainties in distribution networks. Energies 15, 2566 (2022).

Gao, Y., Zhang, J., Wang, Y., Wang, J. & Qin, L. Love evolution algorithm: A stimulus–value–role theory-inspired evolutionary algorithm for global optimization. J. Supercomput. https://doi.org/10.1007/s11227-024-05905-4 (2024).

Javed, S. T., Zafar, K. & Younas, I. Kids learning optimizer: Social evolution and cognitive learning-based optimization algorithm. Neural Comput. Appl. https://doi.org/10.1007/s00521-024-10009-4 (2024).

Gandomi, A. H. & Alavi, A. H. Krill herd: A new bio-inspired optimization algorithm. Commun. Nonlinear Sci. Numer. Simul. 17, 4831–4845 (2012).

Abdel-Basset, M., Mohamed, R., Azeem, S. A. A., Jameel, M. & Abouhawwash, M. Kepler optimization algorithm: A new metaheuristic algorithm inspired by Kepler’s laws of planetary motion. Knowl.-Based Syst. 268, 110454 (2023).

Amiri, M. H., Mehrabi Hashjin, N., Montazeri, M., Mirjalili, S. & Khodadadi, N. Hippopotamus optimization algorithm: A novel nature-inspired optimization algorithm. Sci. Rep. 14, 5032 (2024).

Peraza-Vázquez, H., Peña-Delgado, A., Merino-Treviño, M., Morales-Cepeda, A. B. & Sinha, N. A novel metaheuristic inspired by horned lizard defense tactics. Artif. Intell. Rev. 57, 59 (2024).

Joshi, S. K. Chaos embedded opposition based learning for gravitational search algorithm. Appl. Intell. https://doi.org/10.1007/s10489-022-03786-9 (2022).

Zolfi, K. Gold rush optimizer: A new population-based metaheuristic algorithm. Oper. Res. Decis. 33, 113–150 (2023).

Mohammadi-Balani, A., Dehghan Nayeri, M., Azar, A. & Taghizadeh-Yazdi, M. Golden eagle optimizer: A nature-inspired metaheuristic algorithm. Comput. Ind. Eng. 152, 107050 (2021).

Hamadneh, T. et al. Builder Optimization Algorithm: An effective human-inspired metaheuristic approach for solving optimization problems. IJIES 18, 928–937 (2025).

Hamadneh, T. et al. Candle Flame Optimization: A physics-based metaheuristic for global optimization. IJIES 18, 826–837 (2025).

El-kenawy, E.-S.M. et al. Greylag Goose Optimization: Nature-inspired optimization algorithm. Expert Syst. Appl. 238, 122147 (2024).

Hamadneh, T. et al. MAO Algorithm: A novel approach for engineering design challenges. IJIES 18, 484–493 (2025).

Hamadneh, T. et al. On the application of tailor optimization algorithm for solving real-world optimization application. IJIES 18, 1–12 (2025).

Hamadneh, T. et al. Orangutan Optimization Algorithm: An innovative bio-inspired metaheuristic approach for solving engineering optimization problems. IJIES 18, 47–57 (2025).

Hamadneh, T. et al. PPB optimization: A new human-based metaheuristic approach for solving optimization tasks. IJIES 18, 504–519 (2025).

Hamadneh, T. et al. Perfumer optimization algorithm: A novel human-inspired metaheuristic for solving optimization tasks. IJIES 18, 633–643 (2025).

Hamadneh, T. et al. Revolution optimization algorithm: A new human-based metaheuristic algorithm for solving optimization problems. IJIES 18, 520–531 (2025).

Hamadneh, T. et al. Singer optimization algorithm: An effective human-based approach for solving optimization tasks. IJIES 18, 114–126 (2025).

Hamadneh, T. et al. Spider-tailed horned viper optimization: An effective bio-inspired metaheuristic algorithm for solving engineering applications. IJIES 18, 25–35 (2025).

Tan, Y. & Zhu, Y. Fireworks Algorithm for Optimization.

Faramarzi, A., Heidarinejad, M., Stephens, B. & Mirjalili, S. Equilibrium optimizer: A novel optimization algorithm. Knowl.-Based Syst. 191, 105190 (2020).

Azizi, M., Aickelin, U., Khorshidi, A., Baghalzadeh, H. & Shishehgarkhaneh, M. Energy valley optimizer: A novel metaheuristic algorithm for global and engineering optimization. Sci. Rep. 13, 226 (2023).

Shamsaldin, A. S., Rashid, T. A., Al-Rashid Agha, R. A., Al-Salihi, N. K. & Mohammadi, M. Donkey and smuggler optimization algorithm: A collaborative working approach to path finding. J. Comput. Des. Eng. 6, 562–583 (2019).

Hasan, N. M. et al. An enhanced donkey and smuggler optimization algorithm for choosing the precise job applicant. Iran J. Comput. Sci. 6, 233–243 (2023).

Zhang, M. & Wen, G. Duck swarm algorithm: Theory, numerical optimization, and applications. Clust. Comput. 27, 6441–6469 (2024).

Marinaki, M., Taxidou, A. & Marinakis, Y. A hybrid Dragonfly algorithm for the vehicle routing problem with stochastic demands. Intell. Syst. Appl. 18, 200225 (2023).

Singh, H., Sawle, Y., Dixit, S., Malik, H. & García Márquez, F. P. Optimization of reactive power using dragonfly algorithm in DG integrated distribution system. Electr. Power Syst. Res. 220, 109351 (2023).

Lang, Y. & Gao, Y. Dream Optimization Algorithm (DOA): A novel metaheuristic optimization algorithm inspired by human dreams and its applications to real-world engineering problems. Comput. Methods Appl. Mech. Eng. 436, 117718 (2025).

Azizi, M., Talatahari, S. & Gandomi, A. H. Fire Hawk Optimizer: A novel metaheuristic algorithm. Artif. Intell. Rev. 56, 287–363 (2023).

Abdollahzadeh, B. et al. Puma optimizer (PO): A novel metaheuristic optimization algorithm and its application in machine learning. Clust. Comput. https://doi.org/10.1007/s10586-023-04221-5 (2024).

Dewi, S. K. & Utama, D. M. A new hybrid whale optimization algorithm for green vehicle routing problem. Syst. Sci. Control Eng. 9, 61–72 (2021).

Deng, H., Liu, L., Fang, J., Qu, B. & Huang, Q. A novel improved whale optimization algorithm for optimization problems with multi-strategy and hybrid algorithm. Math. Comput. Simul. 205, 794–817 (2023).

Weng, S., Liu, Z., Fan, Z. & Zhang, G. A whale optimization algorithm-based ensemble model for power consumption prediction. Electr. Eng. https://doi.org/10.1007/s00202-024-02611-5 (2024).

Zervoudakis, K. & Tsafarakis, S. A mayfly optimization algorithm. Comput. Ind. Eng. 145, 106559 (2020).

Yang, K. & Pan, D. An improved mayfly optimization algorithm for type-2 multi-objective integrated process planning and scheduling. Mathematics 11, 4384 (2023).

Zhao, Y., Huang, C., Zhang, M. & Lv, C. COLMA: A chaos-based mayfly algorithm with opposition-based learning and Levy flight for numerical optimization and engineering design. J. Supercomput. 79, 19699–19745 (2023).

Pham, V. H. S. & Nguyen, V. N. Cement transport vehicle routing with a hybrid sine cosine optimization algorithm. Adv. Civ. Eng. 2023, 1–15 (2023).

Hassan, M. H., Daqaq, F., Selim, A., Domínguez-García, J. L. & Kamel, S. MOIMPA: Multi-objective improved marine predators algorithm for solving multi-objective optimization problems. Soft Comput. https://doi.org/10.1007/s00500-023-08812-7 (2023).

Kuşoğlu, M. & Yüzgeç, U. Multi-Objective Harris Hawks Optimizer for Multiobjective Optimization Problems (2020).

Zulfiqar, R., Javed, T., Anwar Ali, Z. H., Alkhammash, E. & Hadjouni, M. Selection of metaheuristic algorithm to design wireless sensor network. Intell. Autom. Soft Comput. 37, 985–1000 (2023).

Dhal, K. G., Sasmal, B., Das, A., Ray, S. & Rai, R. A comprehensive survey on arithmetic optimization algorithm. Arch. Comput. Methods Eng. 30, 3379–3404 (2023).

Mahajan, S., Abualigah, L. & Pandit, A. K. Hybrid arithmetic optimization algorithm with hunger games search for global optimization. Multimed. Tools Appl. 81, 28755–28778 (2022).

Abualigah, L., Diabat, A., Mirjalili, S., Abd Elaziz, M. & Gandomi, A. H. The arithmetic optimization algorithm. Comput. Methods Appl. Mech. Eng. 376, 113609 (2021).

Jia, Y., Wang, S., Liang, L., Wei, Y. & Wu, Y. A flower pollination optimization algorithm based on cosine cross-generation differential evolution. Sensors 23, 606 (2023).

Liu, H., Duan, S. & Luo, H. A hybrid engineering algorithm of the seeker algorithm and particle swarm optimization. Mater. Test. 64, 1051–1089 (2022).

Shehadeh, H. A., Mustafa, H. M. J. & Tubishat, M. A hybrid genetic algorithm and sperm swarm optimization (HGASSO) for multimodal functions. Int. J. Appl. Metaheurist. Comput. 13, 1–33 (2022).

Tao, L., Yang, X., Zhou, Y. & Yang, L. A novel transformers fault diagnosis method based on probabilistic neural network and bio-inspired optimizer. Sensors 21, 3623 (2021).

Rehman, H. et al. Driving training-based optimization (DTBO) for global maximum power point tracking for a photovoltaic system under partial shading condition. IET Renew. Power Gen. 17, 2542–2562 (2023).

Mohamed, A.-A.A. et al. Parasitism – Predation algorithm (PPA): A novel approach for feature selection. Ain Shams Eng. J. 11, 293–308 (2020).

Nandi, A. & Kamboj, V. K. A Canis lupus inspired upgraded Harris hawks optimizer for nonlinear, constrained, continuous, and discrete engineering design problem. Int. J. Numer. Methods Eng. 122, 1051–1088 (2021).

Tu, B., Wang, F., Huo, Y. & Wang, X. A hybrid algorithm of grey wolf optimizer and Harris Hawks optimization for solving global optimization problems with improved convergence performance. Sci. Rep. 13, 22909 (2023).

Fathiamuthal Rajeena, P. P., Ismail, W. N. & Ali, M. A. S. A Metaheuristic Harris Hawks optimization algorithm for weed detection using drone images. Appl. Sci. 13, 7083 (2023).

Too, J., Abdullah, A. R. & Mohd Saad, N. A new quadratic binary harris hawk optimization for feature selection. Electronics 8, 1130 (2019).

Chong, G. & Yuan, Y. An improved Harris Hawk optimization algorithm. Mech. Eng. Sci. 6, 13224 (2024).

Deb, K., Pratap, A., Agarwal, S. & Meyarivan, T. A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 6, 182–197 (2002).

Shang, Q. et al. A multi-stage competitive swarm optimization algorithm for solving large-scale multi-objective optimization problems. Expert Syst. Appl. 260, 125411 (2025).

Yang, X.-S. & Deb, S. Multiobjective cuckoo search for design optimization. Comput. Oper. Res. 40, 1616–1624 (2013).

Price, K. V., Awad, N. H., Ali, M. Z. & Suganthan, P. N. Problem Definitions and Evaluation Criteria for the 100-Digit Challenge Special Session and Competition on Single Objective Numerical Optimization.

Kudela, J. A critical problem in benchmarking and analysis of evolutionary computation methods. Nat. Mach. Intell. 4, 1238–1245 (2022).

Huang, X., Xu, R., Yu, W. & Wu, S. Evaluation and analysis of heuristic intelligent optimization algorithms for PSO, WDO, GWO and OOBO. Mathematics 11, 4531 (2023).

Dhiman, G. & Kaur, A. STOA: A bio-inspired based optimization algorithm for industrial engineering problems. Eng. Appl. Artif. Intell. 82, 148–174 (2019).

Peraza-Vázquez, H. et al. A bio-inspired method for engineering design optimization inspired by dingoes hunting strategies. Math. Probl. Eng. 2021, 1–19 (2021).

Vashishtha, G., Chauhan, S., Singh, M. & Kumar, R. Bearing defect identification by swarm decomposition considering permutation entropy measure and opposition-based slime mould algorithm. Measurement 178, 109389 (2021).

Chauhan, S. & Vashishtha, G. A synergy of an evolutionary algorithm with slime mould algorithm through series and parallel construction for improving global optimization and conventional design problem. Eng. Appl. Artif. Intell. 118, 105650 (2023).

Mahmoud, M. A review on waste management techniques for sustainable energy production. MOR 3, 47–58 (2025).

Mishra, P. et al. Forecasting production of potato for a sustainable future: global market analysis. Potato Res. 67, 1671–1690 (2024).

Khaled, K. & Singla, M. K. Predictive analysis of groundwater resources using random forest regression. JAIM 09, 11–19 (2025).

Author information

Contributions

Da Fang performed the data analysis; Jun Yan performed the formal analysis; Quan Zhou performed the validation; Da Fang wrote the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

This material is the authors’ own original work, which has not been previously published elsewhere. The paper is not currently being considered for publication elsewhere. The paper reflects the authors’ own research and analysis in a truthful and complete manner.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.
