Abstract

Covariance estimation has widespread applications in fields such as logistic regression and portfolio optimization. However, in high-dimensional or small-sample scenarios, traditional covariance matrix estimation often yields non-invertible matrices, which severely restricts the performance of related models. This paper presents a novel regularized covariance estimation method that addresses the long-standing problem of non-invertible covariance matrices in traditional approaches. The proposed method ensures the invertibility of the estimated covariance matrix, thereby enhancing numerical stability and reliability. We integrate this method into the analytical solution framework of logistic regression, significantly improving the stability and accuracy of the analytical solution. We also apply the proposed method to portfolio return management and demonstrate its effectiveness at improving the quality of optimization solutions for financial applications. Experimental results show that our method outperforms traditional methods on both logistic regression prediction and portfolio optimization, highlighting its practical value and robustness.


Introduction

Over the past decade, the rapid advancement of technology and exponential growth of computational power have significantly simplified data acquisition and analysis across diverse fields, including finance, economics, social sciences, and health sciences1. In many statistical procedures, the population covariance matrix \(\Sigma\) or its inverse, the precision matrix \(\Sigma ^{-1}\), plays a critical role as an input for analyzing p-dimensional random vectors \(\textbf{x} = (x_1, \dots , x_p)\). Accurate covariance estimation is particularly crucial when the inverse of the covariance matrix is required, as in logistic regression and portfolio optimization. However, traditional covariance estimation methods often face significant challenges in high-dimensional settings or when the sample size is limited2, producing singular or ill-conditioned covariance matrices that hinder the computation of reliable inverses.

Recently, Zeng (2021)3 introduced an analytical solution for logistic regression that relies heavily on the inverse of the sample covariance matrix. Although this approach offers a computationally efficient alternative to iterative optimization methods, it is susceptible to failure when the covariance matrix is not invertible, which is a common issue in practical applications. This limitation not only restricts the applicability of Zeng’s method but also undermines the stability and accuracy of the resulting solutions.

To address these challenges, we propose a novel regularized covariance estimation method that ensures the invertibility of the estimated covariance matrix, even in high-dimensional or small-sample scenarios. Our method incorporates a regularization term that stabilizes the covariance matrix, thereby enabling the robust computation of its inverse. We demonstrate the effectiveness of our approach by integrating it into Zeng’s logistic regression framework, which significantly enhances the stability and reliability of the analytical solution.

Furthermore, we extend the application of our regularized covariance estimation method to portfolio return management, where the inverse of the covariance matrix is essential. By employing our method, we achieve substantial improvements in the quality of portfolio optimization solutions, particularly in cases where traditional methods fail as a result of the singularity of the covariance matrix.

The main contributions of this study can be summarized as follows. First, we propose a regularized covariance estimation method that guarantees the invertibility of the estimated covariance matrix, thereby addressing a critical limitation of existing approaches. We then integrate our method into Zeng’s logistic regression framework to demonstrate its ability to produce stable and accurate analytical solutions. Finally, we apply our method to portfolio optimization to demonstrate its effectiveness in improving the quality of optimization solutions in financial applications.

The remainder of this paper is organized as follows. The second section reviews the relevant background and existing work on covariance estimation and its applications. The third section describes our proposed methodology. The fourth section describes our experimental setup and evaluation metrics. The fifth section discusses the results and key findings. Finally, the sixth section concludes this paper and outlines potential directions for future research.

Related works

Covariance estimation methods

The estimation of covariance matrices is a fundamental problem in statistics and machine learning. The sample covariance matrix, while straightforward, often performs poorly in high-dimensional settings because of its susceptibility to ill-conditioning4. To address this issue, Ledoit and Wolf (2004)5 proposed a linear shrinkage estimator that shrinks the sample covariance matrix toward a target matrix and optimizes the shrinkage intensity to minimize the mean squared error. This method significantly improves the conditioning of the covariance matrix, ensuring its invertibility and stability, even in high-dimensional settings. Building on this work, Ledoit and Wolf (2015)6 introduced a nonlinear shrinkage estimator that adaptively adjusts the shrinkage intensity of each eigenvalue based on its position in the spectrum. This nonlinear method further enhances estimation accuracy and computational efficiency, particularly in applications such as portfolio optimization and principal component analysis. Empirical results demonstrate that the nonlinear shrinkage estimator outperforms both the sample covariance matrix and linear shrinkage methods, achieving superior performance in terms of out-of-sample portfolio returns and risk management.

Earlier nonlinear shrinkage methods such as QuEST and NERCOME rely on intensive numerical computation or sample splitting, which makes them inefficient and difficult to apply to ultra-high-dimensional data. Ledoit and Wolf (2020)7 developed an analytical nonlinear shrinkage method that combines efficiency, accuracy, and theoretical elegance, overcoming these dimensional limitations and improving computational speed. Its core innovation, the combination of the Hilbert transform with kernel estimation, provides a new paradigm for interdisciplinary research in random matrix theory, nonparametric estimation, and high-dimensional statistics.

In the context of sparse inverse covariance matrix estimation, Friedman et al. (2008)8 proposed the graphical lasso, which introduces an \(L_1\) regularization term (lasso penalty) into the estimation process. By promoting sparsity in the estimated inverse covariance matrix, the graphical lasso enhances both the interpretability and the computational efficiency of estimation. This method has become a cornerstone of applications such as Gaussian graphical models and network inference. Further advancing the field, Cai and Yuan (2012)9 proposed an adaptive block-thresholding estimator for high-dimensional covariance matrices. Their method partitions the sample covariance matrix into blocks and applies thresholding to each block individually, effectively reducing noise and enhancing sparsity while preserving the positive definiteness of the estimated matrix. The block-thresholding method is particularly suitable for applications in which the true covariance matrix is sparse or approximately sparse, such as portfolio optimization and high-dimensional hypothesis testing.

Covariance matrix estimation also involves modeling the relationships between variables within different clusters, so the estimation of finite population variance indirectly influences the accuracy of covariance estimation. Hameed Ali et al. (2024)10 comprehensively analyzed the impact of transformed auxiliary variables on the performance of finite population variance estimators under various transformations and defined dominance regions for each transformation, providing guidance for variable selection in practical applications. Hameed Ali et al. (2025)11 proposed a novel data-driven stratification algorithm based on K-Means clustering and Principal Component Analysis (PCA), offering new insights and methods for stratified random sampling (StRS). Their proposed estimators demonstrate superior performance under StRS, significantly enhancing the precision of variance estimation and providing a powerful tool for analyzing complex datasets.

Whether based on shrinkage estimation, block thresholding, modified empirical covariance matrices, or sparse matrix techniques, these methods share the same core goal: reducing estimation errors and enhancing estimation stability and accuracy. This paper introduces a regularized covariance matrix estimation method to enhance the practicality and adaptability of covariance matrix estimation. Regularization, a widely used technique in statistics and machine learning, introduces appropriate constraints or penalty terms to reduce model complexity while improving model generalization and stability. In covariance matrix estimation, regularization not only smooths extreme coefficients and reduces estimation errors but also effectively addresses the challenges posed by high-dimensional data, enhancing the reliability of estimation results.

Covariance estimation in logistic regression

Logistic regression is a fundamental technique for binary classification problems, where the goal is to model the probability of an event occurring based on input features. The traditional approach to fitting a logistic regression model involves maximizing the likelihood function, which measures how well the model explains the observed data. Because this maximization has no closed-form solution, iterative optimization methods such as gradient descent and the Newton–Raphson method are commonly employed8.

To address the computational limitations of these methods, Zeng (2021)3 proposed an analytical solution for logistic regression based on an estimate of the covariance matrix. This approach provides a computationally efficient alternative to iterative methods by directly estimating model parameters using the inverse of the covariance matrix. However, the performance of Zeng’s method3 is constrained by the instability of the sample covariance matrix, particularly in small-sample or high-dimensional scenarios, where the matrix may become singular or ill-conditioned.

Covariance estimation in portfolio optimization

Portfolio optimization, which is rooted in Markowitz’s (1952) seminal mean-variance framework, critically depends on accurately estimating the covariance matrix of asset returns. The traditional and arguably most intuitive estimator, known as the sample covariance matrix, is computed from historical monthly return data. However, as mentioned previously, this matrix is in most cases ill-conditioned (Michaud, 1989), and inverting it leads to suboptimal portfolio allocations.

Various estimation techniques have been developed to stabilize the estimation of the covariance matrix and address estimation errors in sample covariance matrices. Sun et al. (2019)12 discussed advancements in covariance matrix estimation techniques tailored to portfolio risk measurement. Bollhofer et al. (2019)13 proposed SQUIC, a sparse version of the QUIC algorithm that uses sparse matrix techniques to estimate large-scale sparse inverse covariance matrices. Despite these advancements, the application of advanced regularization methods to portfolio optimization remains an active area of research.

Methods

Regularized covariance matrix

The construction of the regularized covariance matrix

Assume that a dataset consists of C classes and that each class i (\(1 \le i \le C\)) has \(n_i\) samples, so the total number of samples is \(N = \sum _{i=1}^{C} n_i\). \(X_i\) denotes the set of samples of the i-th class and \(\textbf{u}_i\) their mean vector. The within-class covariance matrix of the i-th class, denoted \({\Sigma }_i\), measures the spread of the data within that class. It is calculated as:

The average within-class covariance matrix, denoted by \(\hat{\Sigma }_{\text {emp}}\), is the weighted average of the within-class covariance matrices across all classes. It is computed as follows:

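To improve numerical stability and generalization, a regularization term is introduced. The regularized covariance matrix is defined as

\(\hat{\Sigma }_\lambda = \hat{\Sigma }_{\text {emp}} + \lambda \textbf{I},\)

where \(\lambda > 0\) is the regularization parameter, a hyperparameter that controls the strength of regularization, and \(\textbf{I}\) is the identity matrix.

Because \(\hat{\Sigma }_{\text {emp}}\) is positive semidefinite, its eigenvalues are nonnegative; adding \(\lambda \textbf{I}\) shifts every eigenvalue up by \(\lambda\), so every eigenvalue of \(\hat{\Sigma }_\lambda\) is at least \(\lambda\). The regularized matrix is therefore positive definite, and hence invertible, even when \(\hat{\Sigma }_{\text {emp}}\) is singular, and its condition number is at most \((e_{\max } + \lambda )/\lambda\), where \(e_{\max }\) denotes the largest eigenvalue of \(\hat{\Sigma }_{\text {emp}}\). This is how the term \(\lambda \textbf{I}\) ensures numerical stability and improves generalization.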
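The construction above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: because the formulas for \(\Sigma_i\) and \(\hat{\Sigma}_{\text{emp}}\) are not reproduced in this text, the sketch assumes a 1/\(n_i\) normalization for each within-class covariance and class weights \(n_i/N\) for the average. The helper name `regularized_covariance` is hypothetical. With fewer samples than features, the empirical matrix is rank-deficient, while every eigenvalue of the regularized matrix stays at or above \(\lambda\).

```python
import numpy as np

def regularized_covariance(X, y, lam):
    """Return (Sigma_lambda, Sigma_emp) for data X (N x p) with class labels y.

    Assumptions (not fixed by the text): each within-class covariance uses the
    1/n_i normalization, and classes are weighted by n_i / N.
    """
    N, p = X.shape
    sigma_emp = np.zeros((p, p))
    for c in np.unique(y):
        Xc = X[y == c]                       # samples X_c of class c
        n_c = Xc.shape[0]
        centered = Xc - Xc.mean(axis=0)      # subtract the class mean u_c
        sigma_c = centered.T @ centered / n_c
        sigma_emp += (n_c / N) * sigma_c     # weighted average over classes
    return sigma_emp + lam * np.eye(p), sigma_emp

rng = np.random.default_rng(0)
X = rng.normal(size=(20, 50))                # N = 20 samples, p = 50 features
y = np.repeat([0, 1], 10)
sigma_lam, sigma_emp = regularized_covariance(X, y, lam=0.01)
print(np.linalg.matrix_rank(sigma_emp))      # at most N - C = 18, far below p = 50
print(np.linalg.eigvalsh(sigma_lam).min() >= 0.01 - 1e-10)  # True
```

Increasing `lam` raises the smallest eigenvalue one-for-one, which is what makes the matrix safe to use in `np.linalg.solve` or to invert.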

The generality of the regularized covariance matrix

The shrinkage estimator proposed by Ledoit and Wolf (2004)5 constructs the covariance matrix as a weighted average of the sample covariance matrix \(S\) and the single-index model matrix \(F\), i.e., \(\hat{S} = \frac{k}{T}F + \left( 1 - \frac{k}{T}\right) S\). The optimal weight \(\frac{k}{T}\) is derived through theoretical minimization of the Frobenius norm-based risk function, explicitly incorporating asymptotic variance (\(\pi\)), covariance (\(\rho\)), and model misspecification (\(\gamma\)). This yields a data-driven and theoretically grounded shrinkage intensity that accounts for the correlation between the estimation errors of \(S\) and \(F\).

In contrast, the method proposed in this paper defines the regularized covariance estimator as \(\hat{\Sigma }_\lambda = \hat{\Sigma }_{\text {emp}} + \lambda I\), where \(\hat{\Sigma }_{\text {emp}}\) is the empirical (average within-class) covariance matrix and \(\lambda I\) is a generic regularization term. The method proposed in this study is a general covariance estimation approach with broad applicability, whereas the shrinkage estimator proposed by Ledoit and Wolf (2004)5 is designed for portfolio selection and lacks such generality.

The bias-variance trade-off of the regularized covariance matrix

Bias-Variance Decomposition Detailed notation definitions are listed in Table 1. Consider the mean squared error of \(\hat{\Sigma }_\lambda\) measured in the Frobenius norm:

Adding and subtracting \(\mathbb {E}[\hat{\Sigma }_\lambda ]\) yields the decomposition:

Let \(A = \hat{\Sigma }_\lambda - \mathbb {E}[\hat{\Sigma }_\lambda ]\) and \(B = \mathbb {E}[\hat{\Sigma }_\lambda ] - \Sigma ^*\). Squaring and expanding the above equation, then taking the expectation, gives:

Since B is a constant matrix and \(\mathbb {E}[A] = 0\), the cross term is zero:

Thus, we obtain:

Using the relationship between the Frobenius norm and the trace, we obtain:

The variance of a random matrix is defined as:

Thus, we finally derive the bias-variance decomposition formula:
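Collected into a single display, with \(A\) and \(B\) as defined above, the steps read as follows. Here \(\langle A, B\rangle_F = \operatorname{tr}(A^\top B)\) is our notation for the Frobenius inner product, and the variance of a random matrix is read as \(\mathbb{E}\,\|\hat{\Sigma}_\lambda - \mathbb{E}[\hat{\Sigma}_\lambda]\|_F^2\), our interpretation of the definition above:

```latex
\begin{aligned}
\mathbb{E}\,\|\hat{\Sigma}_\lambda - \Sigma^*\|_F^2
  &= \mathbb{E}\,\|A + B\|_F^2
   = \mathbb{E}\,\|A\|_F^2 + 2\,\langle \mathbb{E}[A], B\rangle_F + \|B\|_F^2 \\
  &= \underbrace{\mathbb{E}\,\operatorname{tr}(A^\top A)}_{\text{variance}}
   + \underbrace{\|\mathbb{E}[\hat{\Sigma}_\lambda] - \Sigma^*\|_F^2}_{\text{squared bias}},
  \qquad \text{since } \mathbb{E}[A] = 0 .
\end{aligned}
```

Because \(B\) is deterministic, the expectation passes inside the inner product, which is why the cross term vanishes.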

Bias Term Analysis The expectation of the regularized estimator is:

where \(\mathbb {E}[\hat{\Sigma }_{\text {emp}}] = \Sigma ^*\) holds under unbiased sampling. The bias is defined as the difference between the expected estimator and the true matrix:

The squared Frobenius norm of the bias is:

where p is the dimensionality of the covariance matrix.
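As a worked instance of the bias term, using \(\mathbb{E}[\hat{\Sigma}_{\text{emp}}] = \Sigma^*\) as stated above:

```latex
\mathbb{E}[\hat{\Sigma}_\lambda] = \Sigma^* + \lambda I,
\qquad
\operatorname{Bias}(\hat{\Sigma}_\lambda) = \lambda I,
\qquad
\|\lambda I\|_F^2 = \lambda^2 \operatorname{tr}(I) = \lambda^2 p .
```

For example, with a hypothetical \(p = 50\) features and \(\lambda = 0.01\), the squared bias is \(0.0001 \times 50 = 0.005\), independent of the data.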

Variance Term Analysis For \(X_i \sim \mathcal {N}(0, \Sigma ^*)\), the variance of the sample covariance matrix is:

Because the constant matrix \(\lambda I\) added to the regularized covariance matrix is deterministic, it introduces no additional variance:

Optimal \(\lambda\) Tuning Minimizing the total expected error with respect to \(\lambda\) yields the optimal regularization parameter:

This additive structure enables a closed-form derivation of the bias and variance components of the estimator, providing clearer insight into the regularization effect. Because the squared bias grows as \(\lambda ^2 p\) while the variance does not depend on \(\lambda\), the bias-variance perspective favors a small \(\lambda\). In this study, \(\lambda\) is chosen between 0 and 1.
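The two results above, a squared bias of \(\lambda^2 p\) and a variance that does not depend on \(\lambda\), can be checked with a small Monte Carlo simulation. This is a sketch under stated assumptions: zero-mean Gaussian data, the known-mean estimator \(X^\top X / n\) (unbiased for \(\Sigma^*\)) standing in for \(\hat{\Sigma}_{\text{emp}}\), and a hypothetical equicorrelated \(\Sigma^*\). The function name `mc_bias_variance` is ours. Reusing the same random seed for every \(\lambda\) makes the variance estimates identical up to rounding, which isolates the effect of \(\lambda\) on the bias.

```python
import numpy as np

def mc_bias_variance(sigma_true, n, lam, trials=2000, seed=0):
    """Monte Carlo squared bias and variance of X^T X / n + lam * I.

    Assumptions: rows of X are drawn from N(0, sigma_true) and the mean is known
    to be zero, so X^T X / n is unbiased for sigma_true.
    """
    rng = np.random.default_rng(seed)            # same seed => same samples for every lam
    p = sigma_true.shape[0]
    chol = np.linalg.cholesky(sigma_true)
    estimates = np.empty((trials, p, p))
    for t in range(trials):
        X = rng.standard_normal((n, p)) @ chol.T  # rows ~ N(0, sigma_true)
        estimates[t] = X.T @ X / n + lam * np.eye(p)
    mean_est = estimates.mean(axis=0)
    bias2 = np.sum((mean_est - sigma_true) ** 2)  # ||E[est] - Sigma*||_F^2
    # E||est - E[est]||_F^2, averaged over the simulated estimates
    variance = np.mean(np.sum((estimates - mean_est) ** 2, axis=(1, 2)))
    return bias2, variance

p = 5
sigma_true = 0.5 * np.eye(p) + 0.5   # hypothetical: unit variances, correlation 0.5
for lam in (0.0, 0.1, 0.5):
    b2, v = mc_bias_variance(sigma_true, n=50, lam=lam)
    print(f"lam={lam:<4} bias^2={b2:.4f} (theory {lam ** 2 * p:.4f})  variance={v:.4f}")
```

The printed variance column stays constant while the squared bias tracks \(\lambda^2 p\), so under this model any \(\lambda > 0\) is paid for in bias and is justified by invertibility and conditioning rather than by a lower Frobenius error.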

Bias-Variance Trade-off The expected estimation error of the regularized covariance estimator can be decomposed as:

This decomposition explicitly reveals a classical bias-variance trade-off: increasing \(\lambda\) improves the conditioning of \(\hat{\Sigma }_\lambda\) and thereby stabilizes its inverse, yet introduces a squared bias of \(\lambda ^2 p\), while the variance of \(\hat{\Sigma }_\lambda\) itself is unaffected by \(\lambda\). In contrast to prior works such as Zeng (2021), which focus on invertibility, our formulation quantifies the statistical risk introduced by regularization and provides guidance for principled tuning of \(\lambda\).

Logistic regression parameter estimation

In the context of logistic regression, the model aims to predict the probability \(P(y = 1 \mid \textbf{x})\) of a binary outcome \(y \in \{0, 1\}\) based on an input feature vector \(\textbf{x} \in \mathbb {R}^p\). The logistic regression model can be expressed as

\(P(y = 1 \mid \textbf{x}) = \dfrac{1}{1 + \exp \left( -(\textbf{w}^\top \textbf{x} + b)\right) },\)

where \(\textbf{w}\) is the weight vector representing the contribution of each feature to the prediction and b is the bias term, which adjusts the model’s output independently of the input features. The parameters of the model, namely the weight vector \(\textbf{w}\) and bias term b, are typically estimated using maximum likelihood estimation: the likelihood function is maximized to determine the values of \(\textbf{w}\) and b that best fit the training data. Traditionally, this optimization problem has been solved using iterative methods such as gradient descent, which updates the parameters along the negative gradient of the negative log-likelihood.

Zeng (2021)3 proposed an alternative approach to logistic regression by deriving an analytical solution for \(\textbf{w}\) and b. This analytical solution involves using the inverse of the covariance matrix, as shown in Equations (14) and (15) (Zeng, 20213).

Here, \(\Sigma\) is the covariance matrix, \(\textbf{u}_i\) represents the mean vector of the i-th class, and \(p_i\) is the prior probability of the i-th class. The covariance matrix \(\Sigma\) is estimated using the following formula (Zeng, 2021):

In this study, we replace the covariance estimate in Equation (22) with the regularized estimator in Equation (3) and substitute it into Equations (20) and (21) to obtain the analytical solution. Because \(\hat{\Sigma }_\lambda\) is invertible for any \(\lambda > 0\), this removes the problem of a non-invertible covariance matrix and keeps the analytical solution computable even in high-dimensional and small-sample settings. This modification enhances the stability and applicability of the logistic regression model, particularly in scenarios in which traditional methods fail.
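The substitution can be sketched for the binary case. Equations (20) and (21) are not reproduced in this text, so the sketch assumes that Zeng's closed form coincides with the shared-covariance Gaussian solution \(\textbf{w} = \Sigma^{-1}(\textbf{u}_1 - \textbf{u}_0)\), \(b = -\tfrac{1}{2}(\textbf{u}_1 + \textbf{u}_0)^\top \textbf{w} + \ln(p_1/p_0)\), which is consistent with the oracle coefficient \(\beta^*\) used in the bias analysis below; treat it as an illustration rather than a transcription of the original equations. The function names are hypothetical. With 30 samples and 100 features the unregularized matrix is singular, yet the regularized linear system can still be solved.

```python
import numpy as np

def fit_analytical_logreg(X, y, lam=0.01):
    """Closed-form logistic regression with Sigma replaced by Sigma_emp + lam * I.

    Assumption: the closed form is the shared-covariance Gaussian (LDA) solution
    w = Sigma^{-1}(u1 - u0), b = -0.5 (u1 + u0)^T w + log(p1 / p0). Labels are 0/1.
    """
    N, p = X.shape
    sigma = np.zeros((p, p))
    means, priors = {}, {}
    for c in (0, 1):
        Xc = X[y == c]
        means[c] = Xc.mean(axis=0)               # class mean u_c
        priors[c] = len(Xc) / N                  # class prior p_c
        centered = Xc - means[c]
        sigma += centered.T @ centered / N       # accumulates sum_c (n_c / N) * Sigma_c
    sigma_lam = sigma + lam * np.eye(p)
    # Solve the linear system instead of forming the explicit inverse.
    w = np.linalg.solve(sigma_lam, means[1] - means[0])
    b = -0.5 * (means[1] + means[0]) @ w + np.log(priors[1] / priors[0])
    return w, b

def predict_proba(X, w, b):
    """Numerically stable logistic function 1 / (1 + exp(-(Xw + b)))."""
    z = X @ w + b
    return np.exp(-np.logaddexp(0.0, -z))

rng = np.random.default_rng(1)
p = 100
X = np.vstack([rng.normal(0.0, 1.0, (15, p)), rng.normal(0.5, 1.0, (15, p))])
y = np.repeat([0, 1], 15)
w, b = fit_analytical_logreg(X, y, lam=0.01)
train_acc = np.mean((predict_proba(X, w, b) > 0.5) == y)
print(f"training accuracy: {train_acc:.3f}")
```

Using `np.linalg.solve` rather than `np.linalg.inv` is a design choice of the sketch; it is cheaper and more accurate, and it only needs \(\hat{\Sigma}_\lambda\) to be nonsingular, which \(\lambda > 0\) guarantees.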

The bias of logistic regression parameters

Invert the regularized expression to obtain:

Under large-sample conditions, \(\hat{\Sigma }_{\text {emp}} \rightarrow \Sigma ^*\) in probability, ensuring consistency of the empirical estimate. Provided that \(\Sigma ^*\) is nonsingular, the continuous mapping theorem then gives \(\hat{\Sigma }_{\text {emp}}^{-1} \rightarrow \left( \Sigma ^* \right) ^{-1}\) and \(\hat{\Sigma }_{\text {emp}}^{-2} \rightarrow \left( \Sigma ^* \right) ^{-2}\).
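One way to carry out the inversion step, assuming \(\hat{\Sigma}_{\text{emp}}\) is invertible and \(\lambda\,\|\hat{\Sigma}_{\text{emp}}^{-1}\|_2 < 1\), is a first-order Neumann expansion. This is a sketch of the reasoning rather than the original equation, but the terms it produces match the \(\hat{\Sigma}_{\text{emp}}^{-1}\) and \(\hat{\Sigma}_{\text{emp}}^{-2}\) limits mentioned above:

```latex
\begin{aligned}
\hat{\Sigma}_\lambda^{-1}
  &= \left(\hat{\Sigma}_{\text{emp}} + \lambda I\right)^{-1}
   = \left(I + \lambda \hat{\Sigma}_{\text{emp}}^{-1}\right)^{-1} \hat{\Sigma}_{\text{emp}}^{-1}
   = \hat{\Sigma}_{\text{emp}}^{-1} - \lambda \hat{\Sigma}_{\text{emp}}^{-2} + O(\lambda^2), \\
\mathbb{E}\left[\hat{\Sigma}_\lambda^{-1}\right] - \left(\Sigma^*\right)^{-1}
  &\approx -\lambda \left(\Sigma^*\right)^{-2}
  \quad \text{(large-sample approximation).}
\end{aligned}
```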

Then, take the expectation on both sides of the inverted expression to obtain:

By linearity of expectation, we further obtain:

The above formula isolates the bias introduced by the shrinkage term. Thus, the bias of the inverse covariance estimate is:

In the logistic regression part, replacing Zeng’s covariance estimate (Equation 7) with \(\hat{\Sigma }_\lambda = \hat{\Sigma }_{\text {emp}} + \lambda I\), we then obtain:

where \(\hat{\mu }_1, \hat{\mu }_0\) are the empirical means of class 1 and class 0. We can then derive the bias of the logistic regression parameters:

This yields the bound:

where \(\beta ^* = \left( \Sigma ^* \right) ^{-1} \left( \mu _1 - \mu _0 \right)\) denotes the oracle (unbiased) coefficient under perfect covariance estimation.
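For the coefficient \(\beta_\lambda = (\Sigma^* + \lambda I)^{-1}(\mu_1 - \mu_0)\), the shift away from \(\beta^*\) can be checked numerically. For a symmetric positive definite \(\Sigma^*\), the identity \((\Sigma^* + \lambda I)^{-1} - (\Sigma^*)^{-1} = -\lambda (\Sigma^* + \lambda I)^{-1} (\Sigma^*)^{-1}\) gives the exact bound \(\|\beta_\lambda - \beta^*\|_2 \le \lambda \|(\Sigma^*)^{-1}\|_2^2 \, \|\mu_1 - \mu_0\|_2 = \lambda \|(\Sigma^*)^{-2}\|_2 \, \|\mu_1 - \mu_0\|_2\), and the first-order term \(-\lambda (\Sigma^*)^{-2}(\mu_1 - \mu_0)\) is accurate up to \(O(\lambda^2)\). The bound may differ in form from the one stated above, and the values of \(\Sigma^*\) and \(\mu_1 - \mu_0\) in the sketch are hypothetical random draws; `coefficient_shift` is our name.

```python
import numpy as np

def coefficient_shift(S, d, lam):
    """Return (exact shift, first-order shift, bound) for beta = S^{-1} d.

    S plays the role of Sigma* (symmetric positive definite), d of mu_1 - mu_0.
    """
    p = S.shape[0]
    beta = np.linalg.solve(S, d)                           # oracle coefficient
    beta_lam = np.linalg.solve(S + lam * np.eye(p), d)     # regularized coefficient
    exact = beta_lam - beta
    first_order = -lam * np.linalg.solve(S, beta)          # -lam * S^{-2} d
    bound = lam * np.linalg.norm(np.linalg.inv(S), 2) ** 2 * np.linalg.norm(d)
    return exact, first_order, bound

rng = np.random.default_rng(2)
M = rng.normal(size=(8, 8))
S = M @ M.T + 0.5 * np.eye(8)        # SPD matrix with smallest eigenvalue >= 0.5
d = rng.normal(size=8)
for lam in (1e-2, 1e-3):
    exact, approx, bound = coefficient_shift(S, d, lam)
    print(f"lam={lam:g}  |shift|={np.linalg.norm(exact):.2e}  bound={bound:.2e}  "
          f"first-order error={np.linalg.norm(exact - approx):.2e}")
```

Shrinking \(\lambda\) by a factor of 10 should shrink the shift (and the bound) by about 10 and the first-order error by about 100.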

Ledoit–Wolf shrinkage estimation

In the field of investment portfolios, covariance is used to construct portfolios with favorable risk–return characteristics, helping investors assess risk and optimize asset allocation. Traditional covariance estimation methods include the sample covariance matrix and the linear shrinkage method proposed by Ledoit and Wolf (2004)5. In recent years, nonlinear shrinkage has also provided a unified framework for high-dimensional covariance estimation, as in the nonlinear shrinkage method proposed by Ledoit and Wolf (2020)7.

The linear shrinkage method5 takes a weighted average of the sample covariance matrix and the single-index covariance matrix, combining the strengths of both to obtain a more accurate and robust covariance estimate.
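A minimal sketch of the linear shrinkage structure \(\delta F + (1 - \delta) S\), with \(\delta\) playing the role of \(k/T\) in the notation used earlier. Two simplifications are assumptions of this sketch, not part of the Ledoit–Wolf method: the market factor for the single-index target \(F\) is proxied by the equal-weighted average return, and \(\delta\) is passed in as a fixed value instead of being computed from the optimal-intensity formula in5. Function names and the simulated returns are hypothetical.

```python
import numpy as np

def single_index_target(R):
    """Single-index target F and sample covariance S from a T x N return matrix R."""
    m = R.mean(axis=1)                       # market proxy: equal-weighted return per period
    S = np.cov(R, rowvar=False)              # sample covariance of asset returns (ddof=1)
    var_m = np.var(m, ddof=1)
    # beta_j = cov(r_j, m) / var(m) for each asset j
    betas = np.array([np.cov(R[:, j], m)[0, 1] for j in range(R.shape[1])]) / var_m
    F = var_m * np.outer(betas, betas)       # systematic part: var(m) * beta beta^T
    np.fill_diagonal(F, np.diag(S))          # diagonal of F equals the sample variances
    return F, S

def linear_shrinkage(R, delta):
    """delta * F + (1 - delta) * S with a fixed shrinkage intensity delta in [0, 1]."""
    F, S = single_index_target(R)
    return delta * F + (1.0 - delta) * S

rng = np.random.default_rng(3)
R = rng.normal(0.0, 0.01, size=(250, 10))    # simulated returns: 250 periods, 10 assets
shrunk = linear_shrinkage(R, delta=0.3)
print(np.round(np.diag(shrunk)[:3], 6))
```

Because \(F\) keeps the sample variances on its diagonal, shrinkage only pulls the off-diagonal covariances toward their single-index values.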

The analytical nonlinear shrinkage method7 is based on the Hilbert transform: it estimates the sample spectral density nonparametrically and implements this estimation with kernel techniques.

To demonstrate the advantages of the regularized covariance method in portfolio applications, we compare it with four baseline estimators: the sample covariance, Ledoit–Wolf shrinkage estimation, an aggregated estimator combining the sample covariance and Ledoit–Wolf shrinkage estimation, and the nonlinear shrinkage covariance estimator proposed by Ledoit and Wolf (2020).

Experimental setup

This section presents the experimental setup designed to evaluate the proposed regularized covariance matrix from the perspectives of logistic regression and portfolio optimization. The first section describes the datasets used in the experiments, the second section covers the experimental preparation, and the third section introduces the evaluation metrics.

Data sources and descriptions

Description of logistic regression experiment data

We apply the regularized covariance matrix estimation method to classification tasks solved with the analytical logistic regression solution, under both full-sample and small-sample settings, using publicly available datasets. The datasets are described in Table 2.

Zeng’s method has been shown to perform well on small-sample datasets (Su, 202114). We therefore also use small samples for comparison with Zeng’s method. Under small-sample conditions, we compare the analytical solution method, the proposed regularized covariance matrix method, and the gradient descent algorithm on text classification tasks. The datasets considered are the last four listed in Table 2, namely SENTIMENT, IFLYTEK, TNEWS, and SHOPPING. Each small-sample subset consists of 1000 records with the same features and labels as the corresponding full sample.

Description of portfolio empirical inquiry experiment data

The data used for the empirical investigation of the regularized covariance matrix estimation method in portfolio construction span the period from January 3, 2007 to December 30, 2016 and consist of publicly available stock market data. A partial view of the dataset is presented in Table 3, where the first column contains dates and the remaining columns contain return data for different assets.

Experimental preparation

Experimental preparation for logistic regression

The last four datasets in Table 2 are text data that require special processing; RoFormer-Sim-FT is therefore used to preprocess them. In the logistic regression experiments, the baselines are a gradient descent algorithm with a fully connected layer and the analytical solution method proposed by Zeng. The gradient descent baseline requires hyperparameter settings, and the same settings are used in both the full-sample and small-sample experiments: the batch size is 32 and the number of training epochs is fixed at 10. In the small-sample experiments, the 1000 samples are drawn with the random seed set to 42, and the number of samples per label is chosen in proportion to the label distribution of the corresponding dataset, which ensures representativeness across classes. In the experimental results, the gradient descent algorithm is denoted as GD, the analytical solution method as ASM, and the proposed regularized covariance matrix method as ASRM.

Experimental preparation for portfolio empirical inquiry

Time series data are used in the portfolio experiment, and the experimental procedure is carried out in a continuously rolling manner over time with multiple iterations. This rolling-window design accumulates results across many periods, enabling a thorough assessment of the various covariance matrix estimators across different market cycles. For example, starting from time T, the first iteration designates the period \(\{T - 10, T\}\) as in-sample data, whereas \(\{T, T + 1\}\) represents out-of-sample data. In the second iteration, the in-sample period shifts to \(\{T - 9, T + 1\}\), while \(\{T + 1, T + 2\}\) becomes the out-of-sample period. This rolling process is repeated numerous times, allowing performance data to be collected under diverse market conditions. The performance of each estimator is plotted against the number of iterations, illustrating its relative strengths and weaknesses over time.
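The rolling scheme can be expressed as a small index generator. Reading \(\{T - 10, T\}\) and \(\{T, T + 1\}\) as half-open intervals is our interpretation, and the window lengths (10 periods in, 1 period out) are taken from the example rather than from a stated experimental setting; `rolling_windows` is a hypothetical helper.

```python
from typing import Iterator, Tuple

def rolling_windows(n_periods: int, in_len: int = 10, out_len: int = 1
                    ) -> Iterator[Tuple[range, range]]:
    """Yield (in_sample, out_of_sample) index ranges for a rolling backtest.

    Windows are half-open: in-sample [T - in_len, T), out-of-sample
    [T, T + out_len); each iteration advances T by out_len.
    """
    T = in_len
    while T + out_len <= n_periods:
        yield range(T - in_len, T), range(T, T + out_len)
        T += out_len

for i, (ins, oos) in enumerate(rolling_windows(13)):
    # prints "0 0 9 [10]", then "1 1 10 [11]", then "2 2 11 [12]"
    print(i, ins.start, ins.stop - 1, list(oos))
```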

Statistical and econometric techniques are used during each testing cycle, which consists of a specified in-sample phase followed by an out-of-sample phase. Within the in-sample window, the proposed technique is used to estimate the covariance matrix, which is then used to determine the optimal weight allocation for the minimum-variance portfolio (MVP). The resulting weights are applied to the out-of-sample data. At the end of the out-of-sample period, the total portfolio market value and its standard deviation (along with the standard deviations of individual assets) are computed and aggregated through simulations or actual trading activities.
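One testing cycle can be sketched as below, assuming the unconstrained MVP (short selling allowed, per the assumptions that follow) with weights \(w = \Sigma^{-1}\textbf{1} / (\textbf{1}^\top \Sigma^{-1}\textbf{1})\), the standard closed form for a fully invested minimum-variance portfolio. For the covariance, the sketch uses the single-class case of the regularized estimator, the 1/n sample covariance plus \(\lambda I\), with \(\lambda = 0.0001\) as reported for the portfolio experiments. The simulated returns and helper names are hypothetical.

```python
import numpy as np

def min_variance_weights(cov):
    """Unconstrained minimum-variance weights w = C^{-1} 1 / (1^T C^{-1} 1)."""
    ones = np.ones(cov.shape[0])
    x = np.linalg.solve(cov, ones)      # C^{-1} 1 without forming the inverse
    return x / x.sum()                  # normalize so the weights sum to 1

def backtest_step(R_in, R_out, lam=1e-4):
    """One cycle: estimate Sigma_lambda in-sample, apply MVP weights out-of-sample."""
    p = R_in.shape[1]
    # 1/n sample covariance (bias=True) plus lam * I: single-class regularized estimator
    cov = np.cov(R_in, rowvar=False, bias=True) + lam * np.eye(p)
    w = min_variance_weights(cov)
    return w, R_out @ w                 # weights and out-of-sample portfolio returns

rng = np.random.default_rng(4)
R = rng.normal(0.0005, 0.01, size=(60, 5))       # simulated returns: 60 periods, 5 assets
w, oos_returns = backtest_step(R[:50], R[50:])
end_value = np.prod(1.0 + oos_returns)           # end-of-period value per unit invested
print(w.round(3), round(float(end_value), 4))
```

Inside a rolling loop, `backtest_step` would be called once per window, and the out-of-sample returns concatenated to build the performance series.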

Several crucial assumptions are adopted in our numerical experiments to ensure validity and pertinence. First, we assume that the MVP has no constraints, such as restrictions on short selling. This assumption streamlines the analysis and enables a straightforward comparison of estimator performance. Second, we assume that the number of observations significantly exceeds the number of stocks; without this assumption, the sample covariance matrix estimator, which is the fundamental baseline method, is ineffective. Finally, the return data are assumed to be independently and identically distributed, which supports the statistical consistency of the results. These assumptions, combined with the rolling experimental design, provide a robust framework for evaluating the performance of different covariance matrix estimators. The outcomes of the benchmark tests offer insights into the performance of four estimation methods: sample covariance, Ledoit–Wolf shrinkage estimation, the aggregation of sample covariance and Ledoit–Wolf shrinkage, and the proposed ASRM method.

Performance measures

In our experimental assessment of the regularized covariance matrix applied to logistic regression, accuracy is the primary evaluation metric; the logistic regression experiments report training accuracy, validation accuracy, testing accuracy, and CPU running time. For the application of the regularized covariance matrix to portfolio management, total return, asset risk, Sharpe ratio, and maximum drawdown are used as key metrics. The key performance indicators for evaluating MVPs are as follows15.

-

End-of-period total portfolio market value for out-of-sample data. This metric directly measures a portfolio’s value-added ability, reflecting the investment strategy’s capacity to generate earnings in the face of future uncertainties and providing a crucial indication of a portfolio’s performance under real-world market conditions.

-

Standard deviation of the portfolio and individual assets. This metric quantifies the risk level. By measuring the standard deviation, we can not only understand the overall risk exposure of the portfolio but also gain in-depth insights into the risk characteristics of each individual asset. Such information is essential for comprehensive risk management and informed decision making in portfolio construction and adjustment.

-

Sharpe ratio. This metric evaluates the risk-adjusted return of a portfolio by measuring the excess return per unit of volatility (standard deviation of returns). It helps investors assess whether an investment’s returns justify the risk taken, with a higher Sharpe ratio indicating better performance in generating returns relative to the level of risk assumed. By incorporating both returns and volatility, the Sharpe ratio provides a standardized framework for comparing different investment strategies or portfolios.

-

Max drawdown. This metric measures the largest peak-to-trough decline in the value of a portfolio over a specific period, reflecting the worst-case loss an investor might experience. It serves as a critical indicator of a portfolio’s downside risk and resilience, highlighting potential vulnerabilities during market downturns. Understanding the max drawdown is essential for setting risk tolerance levels, designing risk controls, and ensuring the portfolio can withstand significant market fluctuations without breaching acceptable loss thresholds.
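The Sharpe ratio and maximum drawdown described above can be computed as follows. The annualization factor (252 trading periods per year) and the zero default risk-free rate are assumptions of this sketch, since the text specifies neither. Drawdown is measured relative to the running peak of the portfolio value series.

```python
import numpy as np

def sharpe_ratio(returns, risk_free=0.0, periods_per_year=252):
    """Annualized Sharpe ratio: mean excess return per unit of return volatility.

    Assumptions: constant per-period risk-free rate and 252 periods per year.
    """
    excess = np.asarray(returns, dtype=float) - risk_free
    return np.sqrt(periods_per_year) * excess.mean() / excess.std(ddof=1)

def max_drawdown(values):
    """Largest peak-to-trough decline, as a fraction of the running peak."""
    values = np.asarray(values, dtype=float)
    running_peak = np.maximum.accumulate(values)   # highest value seen so far
    drawdowns = (running_peak - values) / running_peak
    return drawdowns.max()

print(f"{max_drawdown([1.0, 1.2, 0.9, 1.1]):.2f}")  # 0.25: from the peak 1.2 down to 0.9
```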

Results

The proposed method is evaluated in two settings and compared with existing methods. The first and second sections describe the experiments with the ASRM method for logistic regression in the full-sample and limited-sample scenarios, respectively. The third section presents an experimental analysis of applying the ASRM method to portfolio case studies.

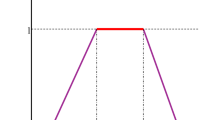

For hyperparameter selection, we found empirically that setting \(\lambda\) to 0.01 generally yields the best performance in the experiments of the first and second sections. Taking the full-sample results on the Fashion MNIST dataset as an example, each candidate value of \(\lambda\) is evaluated using five-fold cross-validation. The test accuracy, recall, and mean squared error (MSE) are shown in Figure 1. When \(\lambda = 0.01\), the test accuracy is the highest among all parameter settings, with equivalent test MSE. For the portfolio experiments in the third section, \(\lambda\) is set to 0.0001.
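The \(\lambda\) selection procedure can be sketched as a five-fold cross-validated grid search. This is an illustration under assumptions, not the authors' code: the classifier uses the shared-covariance Gaussian closed form \(\textbf{w} = \hat{\Sigma}_\lambda^{-1}(\textbf{u}_1 - \textbf{u}_0)\), \(b = -\tfrac{1}{2}(\textbf{u}_1 + \textbf{u}_0)^\top \textbf{w} + \ln(p_1/p_0)\) as a stand-in for the analytical solution, the grid of candidate values and the synthetic data are hypothetical, and only accuracy is scored (the text also reports recall and MSE).

```python
import numpy as np

def fit_predict(X_tr, y_tr, X_te, lam):
    """Fit the regularized closed-form classifier on 0/1 labels and predict X_te."""
    N, p = X_tr.shape
    mu0 = X_tr[y_tr == 0].mean(axis=0)
    mu1 = X_tr[y_tr == 1].mean(axis=0)
    # Center each sample by its own class mean, then pool: sum_c (n_c / N) Sigma_c.
    centered = np.where(y_tr[:, None] == 1, X_tr - mu1, X_tr - mu0)
    sigma_lam = centered.T @ centered / N + lam * np.eye(p)
    w = np.linalg.solve(sigma_lam, mu1 - mu0)
    prior1 = np.mean(y_tr == 1)
    b = -0.5 * (mu1 + mu0) @ w + np.log(prior1 / (1.0 - prior1))
    return (X_te @ w + b > 0).astype(int)   # P(y=1|x) > 0.5  <=>  w^T x + b > 0

def select_lambda(X, y, grid=(1e-4, 1e-3, 1e-2, 1e-1, 1.0), k=5, seed=42):
    """Return the grid value with the highest mean k-fold accuracy, plus all scores."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), k)
    scores = {}
    for lam in grid:
        accs = []
        for i in range(k):
            test_idx = folds[i]
            train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
            pred = fit_predict(X[train_idx], y[train_idx], X[test_idx], lam)
            accs.append(np.mean(pred == y[test_idx]))
        scores[lam] = float(np.mean(accs))
    best = max(scores, key=scores.get)
    return best, scores

rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (50, 20)), rng.normal(0.4, 1.0, (50, 20))])
y = np.repeat([0, 1], 50)
best, scores = select_lambda(X, y)
print("selected lambda:", best)
```

Using the same fold split for every candidate keeps the comparison between \(\lambda\) values paired, so differences in score reflect \(\lambda\) rather than the random split.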

Full sample

The results of the comparative analyses are presented in Table 4. The ASRM consistently outperforms or matches the ASM and GD, particularly in terms of accuracy and computational efficiency.

Regarding accuracy, on the Iris, Digits, Breast Cancer, and Wine datasets, the test-set accuracy of the ASRM is the same as that of the ASM, reaching or approaching 1.0000. On the Fashion MNIST dataset, the test-set accuracy of the ASRM is 0.8147, which is 0.0008 higher than that of the ASM (0.8139). For the SENTIMENT dataset, the test-set accuracy of the ASRM is 0.8271, which is 0.0014 higher than that of the ASM (0.8257).

Regarding the validation-set accuracy on the IFLYTEK and TNEWS datasets, the ASRM is higher than the GD: on IFLYTEK, the validation-set accuracy of the ASRM is 0.5068 versus 0.4475 for GD, and on TNEWS it is 0.4830 versus 0.4683.

Regarding computational efficiency, on multiple datasets such as Iris, Digits, MNIST, Fashion MNIST, SENTIMENT, IFLYTEK, TNEWS, and SHOPPING, the CPU running time of ASRM is significantly lower than that of GD. For example, on the Digits dataset, the running time of the ASRM is 0.0527 s, whereas that of the GD is 4.7482 s. On the MNIST dataset, the running time of ASRM is 2.8285 s and that of the GD is 55.9809 s.

To further validate the reliability of ASRM, we run five-fold cross-validation and compare the three algorithms on the test folds in terms of average accuracy, average MSE, average recall, and the total time taken by the five-fold procedure. The results are presented in Table 5.

The five-fold cross-validation results in Table 5 show that ASRM achieves a favorable trade-off between predictive performance and efficiency across diverse datasets. In terms of accuracy, ASRM matches ASM on 7 of the 10 datasets and improves on it slightly on Fashion MNIST (+0.0008) and SENTIMENT (+0.0039). It also matches ASM's high accuracy on the Breast Cancer (medical) and Wine benchmarks. Even on complex natural language tasks (TNEWS, IFLYTEK), ASRM maintains competitive accuracy (0.48–0.52) with substantially lower variance than GD.

The computational advantages are pronounced. ASRM's processing time is substantially lower than GD's across all datasets: five-fold validation takes 13.77 s on TNEWS versus 39.70 s for GD (about 65% less) and 6.69 s on IFLYTEK versus 15.80 s (about 58% less). The gap is also large on image data: on MNIST (784 features), ASRM requires 27.81 s compared with 101.29 s for GD (about 73% less), and on Fashion MNIST, ASRM's 39.53 s processing time constitutes a 61% reduction. These results are consistent with the expected advantage of ASRM's analytical solution over iterative gradient descent on high-dimensional data.

Crucially, ASRM achieves this efficiency without compromising predictive quality. On MNIST, it matches ASM's accuracy of 0.8655 while roughly halving the MSE (0.011 vs. 0.0218). On SENTIMENT, where GD shows a marginal accuracy advantage, ASRM attains a higher recall than GD (0.8180 vs. 0.8155), indicating better class balance; the reported MSE values on this dataset are 6.24 for ASRM and 0.1291 for GD. The Breast Cancer dataset further highlights this robustness: ASRM maintains 95.43% accuracy with recall identical to that of ASM, but takes only 0.02 s per fold compared with 2.27 s for GD.

The five-fold validation confirms that these findings hold across different data modalities, from low-dimensional tabular data (Iris, Wine) to image data (MNIST variants) and text data (SENTIMENT, TNEWS).

Overall, ASRM achieves good accuracy on most datasets and a clear advantage in computational efficiency. Although its accuracy is slightly lower than that of other methods on some datasets, its efficiency makes it a practical and well-balanced choice overall.

Small sample

The small-sample classification results (hyper-parameter \(\lambda = 0.01\)) are visualized in Figure 2, where blue represents the ASM algorithm, green the ASRM algorithm, and red the GD algorithm. The results show that ASRM outperforms the other algorithms on small-sample datasets in several respects.

In small-sample testing, ASRM combines the best accuracy with high efficiency. Across the four datasets, ASRM achieves the highest small-sample test accuracy: 66.50% on SENTIMENT (+3.5 percentage points vs. GD), 26.66% on IFLYTEK (+14.16 points vs. GD), 30.50% on TNEWS (+9.5 points vs. GD), and 49.50% on SHOPPING (+9.5 points vs. GD), improving consistently on both ASM and GD. Notably, ASRM reaches 26.66% accuracy on IFLYTEK, where ASM fails completely (0.00%), and 30.50% on TNEWS, compared with 4.00% for ASM.

This accuracy advantage comes with high computational efficiency. Compared with GD, ASRM reduces runtime by 89.7% on SENTIMENT (0.136 s vs. 1.315 s), 93.8% on IFLYTEK (0.673 s vs. 10.881 s), 81.7% on TNEWS (0.183 s vs. 1.002 s), and 95.3% on SHOPPING (0.168 s vs. 3.595 s). Even against ASM, ASRM has comparable or shorter running times while delivering 2–15 times higher accuracy. These results indicate that ASRM is both accurate and computationally feasible in resource-constrained small-sample regimes.
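The runtime reductions quoted above follow from the reported timings as \(100 \times (1 - t_{\text{ASRM}}/t_{\text{GD}})\). A short check, using only the timings given in this paragraph:

```python
def reduction_pct(asrm_s: float, gd_s: float) -> float:
    """Percentage reduction in runtime of ASRM relative to GD."""
    return 100.0 * (1.0 - asrm_s / gd_s)


# (ASRM seconds, GD seconds) as reported in the small-sample paragraph above
runtimes = {
    "SENTIMENT": (0.136, 1.315),
    "IFLYTEK": (0.673, 10.881),
    "TNEWS": (0.183, 1.002),
    "SHOPPING": (0.168, 3.595),
}

if __name__ == "__main__":
    for name, (asrm_s, gd_s) in runtimes.items():
        print(f"{name}: {reduction_pct(asrm_s, gd_s):.1f}% shorter than GD")
```

Each printed value rounds to the percentage stated in the text (89.7, 93.8, 81.7, and 95.3).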

In the small-sample experiments, paired t-tests indicate that most of ASRM's advantages are statistically significant (Table 6). Against ASM, ASRM shows highly significant improvements, with mean differences ranging from 0.1475 (TNEWS) to 0.8974 (SHOPPING), all with \(p < 0.001\). On SHOPPING, the t-value of 273.12 indicates that the gap between the two methods is very large relative to its run-to-run variability. Against GD, ASRM also shows significant differences, with mean differences of up to 0.7539 (SHOPPING) and reported t-values exceeding 31.27.
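For readers who want to reproduce this kind of comparison, a paired t-test works on the per-run differences \(d_i = a_i - b_i\), with \(t = \bar{d} / (s_d/\sqrt{n})\) and \(n - 1\) degrees of freedom. The sketch below computes only the statistic, on hypothetical per-run accuracies (not the data behind Table 6); `scipy.stats.ttest_rel` returns the same statistic together with its p-value.

```python
import math
import statistics


def paired_t(a, b):
    """Paired t-statistic and degrees of freedom for matched samples a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    d = [x - y for x, y in zip(a, b)]                 # per-run differences a_i - b_i
    se = statistics.stdev(d) / math.sqrt(len(d))     # standard error of the mean difference
    return statistics.mean(d) / se, len(d) - 1


if __name__ == "__main__":
    # Hypothetical per-run accuracies, NOT the values behind Table 6
    method_a = [0.70, 0.72, 0.71, 0.73, 0.69]
    method_b = [0.60, 0.61, 0.62, 0.60, 0.59]
    t, dof = paired_t(method_a, method_b)
    print(f"t = {t:.3f} with {dof} degrees of freedom")
```

A large t-value arises when the mean difference is large relative to how much that difference varies from run to run. The statistic is undefined (division by zero) if every difference is identical.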

Beyond statistical significance, most of these gaps are also practically meaningful, exceeding measurement error margins. The exception is TNEWS, where the mean difference against GD is negative (\(-0.0178\)) with \(p = 1.18 \times 10^{-3}\); in this paired comparison, GD therefore retains a small but statistically significant edge, although GD's standard deviation of 0.0266 suggests partially overlapping performance intervals. Overall, ASRM outperforms classical methods such as ASM by substantial margins and remains competitive with GD, while retaining the computational efficiency observed in the earlier experiments.

Regularized covariance matrix portfolio experiment

The first benchmark test evaluates the estimators with specific parameter settings in a single iteration, and the simulation results are presented in Table 7. This test uses daily data from March 1, 2007 to December 31, 2015 as the in-sample data and from December 31, 2015 to December 30, 2016 as the out-of-sample data. The results reveal substantial differences in the performance of the methods. As shown in Table 7, ASRM achieves a total return of \(7.5 \times 10^{-3}\), far higher than the \(-9.3 \times 10^{-8}\) of the sample covariance method and close to the slightly higher \(7.7 \times 10^{-3}\) of the Aggregation estimation method. Regarding portfolio risk measured as the standard deviation, ASRM exhibits a lower risk level (\(3.5 \times 10^{-4}\)) than the Ledoit–Wolf covariance method (\(3.9 \times 10^{-4}\)), suggesting more stable performance.
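Why invertibility matters in these portfolio experiments can be seen in a common optimizer that consumes the covariance estimate directly. As an assumption (the optimizer behind Tables 7 and 8 is not specified in this section), the sketch below uses global minimum-variance weights \(w = \Sigma^{-1}\mathbf{1} / (\mathbf{1}^\top \Sigma^{-1}\mathbf{1})\). A singular covariance estimate makes the linear solve fail, whereas adding a small multiple of the identity (an illustrative regularization, not necessarily ASRM) does not.

```python
import numpy as np


def gmv_weights(cov):
    """Global minimum-variance weights w = cov^{-1} 1 / (1^T cov^{-1} 1)."""
    ones = np.ones(cov.shape[0])
    x = np.linalg.solve(cov, ones)  # solves cov @ x = 1; raises LinAlgError if cov is singular
    return x / x.sum()


def portfolio_returns(weights, oos_returns):
    """Out-of-sample portfolio returns; rows are periods, columns are assets."""
    return np.asarray(oos_returns) @ weights


if __name__ == "__main__":
    singular = np.array([[1.0, 1.0], [1.0, 1.0]])  # two perfectly correlated assets
    try:
        gmv_weights(singular)
    except np.linalg.LinAlgError:
        print("unregularized estimate is singular")
    # Illustrative lam * I term; 1e-4 echoes the lambda reported for the
    # portfolio experiments, but the lam * I form itself is an assumption.
    lam = 1e-4
    print(gmv_weights(singular + lam * np.eye(2)))  # approximately [0.5, 0.5]
```

Solving the linear system rather than forming the explicit inverse is the usual numerically safer choice; the regularization term is what makes the system solvable at all.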

Among the other methods, the Nonlinear-shrinkage method behaves anomalously, with a total return of \(-0.2\) and a standard deviation of \(4.8 \times 10^{-9}\), which likely indicates numerical instability or an implementation anomaly. The Ledoit–Wolf method achieves strong risk-adjusted performance, with a Sharpe ratio of 21.321 despite moderate returns. The Aggregation method performs consistently across metrics and slightly exceeds ASRM in total return (\(7.7 \times 10^{-3}\) vs. \(7.5 \times 10^{-3}\)). The Sample Covariance method's Sharpe ratio of \(-12.317\) reflects very poor risk-adjusted performance.

The benchmark test is repeated using monthly return data, with the simulation results presented in Table 8. Here ASRM achieves the highest total return, \(8.2 \times 10^{-3}\), about 9% higher than Aggregation (\(7.5 \times 10^{-3}\)) and 22.1% higher than Ledoit–Wolf (\(6.8 \times 10^{-3}\)). The Nonlinear-shrinkage method's return of \(-0.11\) again lags the others, while its near-zero standard deviation (\(4.9 \times 10^{-8}\)) suggests extreme risk aversion. ASRM's Sharpe ratio of 2.291 is roughly twice that of Ledoit–Wolf (1.141) and 11.4% higher than that of Aggregation (2.057), indicating superior risk-adjusted returns. In terms of maximum drawdown, ASRM (\(-0.00531\)) fares much better than Nonlinear-shrinkage (\(-0.156\)) and has a drawdown about 57% smaller than Ledoit–Wolf (\(-0.0123\)), whereas the Sample Covariance portfolio shows a near-zero drawdown (\(-1.13 \times 10^{-7}\)). These results indicate that, on monthly data, ASRM delivers high returns while also managing risk effectively, which is a crucial aspect of portfolio management.
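The metrics compared in Tables 7 and 8 can be computed from a series of out-of-sample portfolio returns. The sketch below uses common conventions that are assumptions here, since this section does not define them: compounded total return, a Sharpe ratio with a zero risk-free rate by default and an optional annualization factor, and maximum drawdown reported as a non-positive fraction, matching the sign of the drawdowns quoted above.

```python
import math
import statistics

# Conventions below (compounding, zero default risk-free rate, non-positive
# drawdowns) are assumptions, not definitions taken from the paper.


def total_return(returns):
    """Compounded total return of a sequence of simple period returns."""
    wealth = 1.0
    for r in returns:
        wealth *= 1.0 + r
    return wealth - 1.0


def sharpe_ratio(returns, risk_free=0.0, periods_per_year=1):
    """Mean excess return over its sample standard deviation, scaled by sqrt(periods)."""
    excess = [r - risk_free for r in returns]  # needs at least two returns
    return statistics.mean(excess) / statistics.stdev(excess) * math.sqrt(periods_per_year)


def max_drawdown(returns):
    """Most negative peak-to-trough change in cumulative wealth (0.0 if none)."""
    wealth, peak, worst = 1.0, 1.0, 0.0
    for r in returns:
        wealth *= 1.0 + r
        peak = max(peak, wealth)
        worst = min(worst, wealth / peak - 1.0)
    return worst


if __name__ == "__main__":
    monthly = [0.10, -0.20, 0.05]  # toy returns for illustration only
    print(total_return(monthly), sharpe_ratio(monthly, periods_per_year=12), max_drawdown(monthly))
```

On the toy series, wealth rises to 1.1, falls to 0.88, then recovers to 0.924, so the total return is \(-0.076\) and the maximum drawdown is \(-0.2\).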

The second benchmark test uses monthly data in a rolling-window design. The first in-sample period runs from March 1, 2007 to December 31, 2013 (nearly seven years), and the out-of-sample period covers the following year. The in-sample window is then rolled forward to span February 1, 2008 to December 31, 2014 (again nearly seven years), and this process is repeated three times, ending in 2016. The results for the three out-of-sample years are presented in Figure 3, in which distinct colored markers represent the covariance estimators: blue for the sample covariance estimator, orange for the Ledoit–Wolf estimator, red for the regularized covariance estimator proposed in this study, green for the aggregate covariance estimator, and purple for the nonlinear shrinkage estimator. Figure 3 compares the total returns, Sharpe ratios, maximum drawdowns, and volatility of the methods across the three iterations. It shows that the regularized covariance estimator achieves the highest total return, the lowest risk, the highest Sharpe ratio, and the lowest drawdown over the three iterations, consistently outperforming the other methods in both total returns and volatility control.
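The rolling-window design above can be expressed as index ranges over the monthly observations. This is a minimal sketch with fixed-length windows, which is an assumption: the second in-sample window above starts in February rather than March 2008, so the windows used in the experiments are not exactly fixed-length.

```python
def rolling_windows(n_obs, in_sample_len, out_sample_len, step):
    """Yield (in_sample, out_of_sample) index ranges for a rolling-window backtest.

    Each window is fitted on `in_sample_len` observations and evaluated on the
    next `out_sample_len`; the window then moves forward by `step` observations.
    Fixed-length windows are an assumption of this sketch.
    """
    if min(in_sample_len, out_sample_len, step) <= 0:
        raise ValueError("window lengths and step must be positive")
    start = 0
    while start + in_sample_len + out_sample_len <= n_obs:
        split = start + in_sample_len
        yield range(start, split), range(split, split + out_sample_len)
        start += step


if __name__ == "__main__":
    # 118 monthly observations: March 2007 through December 2016.
    for in_idx, out_idx in rolling_windows(118, 82, 12, 12):
        print(in_idx, out_idx)
```

March 2007 to December 2016 spans 118 months. With an 82-month in-sample window (March 2007 to December 2013), a 12-month out-of-sample period, and a 12-month step, the generator yields exactly three windows, whose out-of-sample periods are the calendar years 2014, 2015, and 2016.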

In conclusion, ASRM's strong total returns, effective risk management, Sharpe ratios, and maximum drawdowns across both the daily and monthly benchmarks highlight its robustness and reliability. These properties make ASRM a viable and attractive option for covariance estimation in portfolio construction, especially in scenarios that demand accurate and stable estimates. Its ability to outperform traditional estimation techniques under various data conditions underscores its potential for practical applications in portfolio modeling and investment decision-making.

Discussion

This paper presents a novel regularized covariance matrix estimation method and applies it to two important problems: logistic regression and portfolio optimization. By introducing a regularization term, we address the non-invertibility of traditional covariance matrix estimates in high-dimensional or small-sample scenarios, thereby markedly enhancing the stability and computational efficiency of the model. The main contributions of this study are as follows. (1) A regularized estimation method is proposed that ensures the invertibility of the covariance matrix. (2) This method is integrated into Zeng's logistic regression framework, significantly improving the stability and applicability of its analytical solutions. (3) The regularized covariance matrix is applied to portfolio optimization, demonstrating its practical value for financial data analysis.
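Contribution (1) rests on a simple linear-algebra fact that a short sketch can make concrete. With fewer samples than variables, the sample covariance has rank at most \(n - 1\) and is singular; adding a ridge-type term \(\lambda I\) shifts every eigenvalue up by \(\lambda\), so the result is positive definite. The ridge form below is an assumption used for illustration and is not necessarily the exact ASRM estimator; \(\lambda = 0.01\) echoes the value chosen for the logistic regression experiments.

```python
import numpy as np


def ridge_covariance(X, lam):
    """Sample covariance plus lam * I (illustrative; not necessarily the ASRM estimator).

    X holds one observation per row. Every eigenvalue of the sample covariance
    is shifted up by lam, so the result is positive definite for lam > 0.
    """
    S = np.cov(X, rowvar=False)
    return S + lam * np.eye(S.shape[0])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(10, 30))  # n = 10 samples, p = 30 variables (n < p)
    S = np.cov(X, rowvar=False)
    print("rank of sample covariance:", np.linalg.matrix_rank(S))  # at most n - 1 = 9
    R = ridge_covariance(X, lam=0.01)
    print("smallest eigenvalue after regularization:", np.linalg.eigvalsh(R).min())
    print("finite inverse:", bool(np.all(np.isfinite(np.linalg.inv(R)))))
```

Larger \(\lambda\) improves conditioning at the cost of biasing the estimate toward a scaled identity, which is why the experiments tune it (0.01 for logistic regression, 0.0001 for portfolios).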

In the logistic regression experiments, the ASRM method performs well on multiple datasets. In particular, under small-sample conditions, ASRM significantly outperforms the traditional ASM in accuracy on both the training and validation sets. For example, on the SENTIMENT dataset, the training-set accuracy of ASRM is as high as 99.70%, whereas that of ASM is only 10.70%. ASRM also excels in computational efficiency, with a running time substantially lower than that of the GD algorithm: on the Digits dataset, for example, ASRM runs in only 0.0527 s, whereas GD takes 4.7482 s. These results demonstrate that ASRM not only maintains high accuracy under small-sample conditions but also achieves marked improvements in computational efficiency.

In the portfolio optimization experiments, ASRM performs strongly. In empirical analysis using the rolling-window technique, ASRM outperforms the traditional sample covariance matrix and the Ledoit–Wolf shrinkage estimator in terms of both total returns and risk control. For example, in the daily data test, the total return of ASRM is \(9.0 \times 10^{-3}\), significantly higher than the \(9.36 \times 10^{-8}\) of the sample covariance matrix, and its portfolio risk (standard deviation) is \(7.0 \times 10^{-4}\), indicating superior stability. In the monthly data test, the total return of ASRM reaches \(7.3 \times 10^{-3}\), further supporting its suitability for long-term investment. These results demonstrate the practical potential of ASRM in financial data analysis.

The regularized covariance matrix estimation method proposed in this study offers significant advantages in logistic regression and portfolio optimization. It overcomes the limitations of traditional methods in high-dimensional and small-sample settings and performs strongly in terms of computational efficiency and practical application. Future research could explore the application of this method to other machine learning tasks and financial data analyses, such as time series forecasting and high-dimensional data classification, to verify its broader applicability and robustness. Additionally, more sophisticated regularization techniques could be considered, or the method could be combined with deep learning models, to further enhance performance and expand its scope of application.

Data Availability

Iris dataset: UCI Machine Learning Repository, ID: iris. Link: https://archive.ics.uci.edu/ml/datasets/iris. Digits dataset: UCI Machine Learning Repository, ID: digits. Link: https://archive.ics.uci.edu/ml/datasets/Optical+Recognition+of+Handwritten+Digits. MNIST dataset: ID: mnist. Link: https://www.tensorflow.org/datasets/catalog/mnist . Breast Cancer dataset: UCI Machine Learning Repository, ID: breast-cancer-wisconsin. Link: https://archive.ics.uci.edu/ml/datasets/Breast+Cancer+Wisconsin+(Diagnostic). Wine dataset: UCI Machine Learning Repository, ID: wine. Link: https://archive.ics.uci.edu/ml/datasets/Wine. Fashion MNIST dataset: ID: fashion-mnist. Link: https://github.com/zalandoresearch/fashion-mnist . IFLYTEK: CLUE Benchmark, ID: iflytek. Link: https://www.cluebenchmarks.com. SENTIMENT: Kaggle, ID: sentiment140. Link: https://www.kaggle.com/datasets/kazanova/sentiment140. TNEWS: CLUE Benchmark, ID: tnews. Link: https://www.cluebenchmarks.com. SHOPPING: Amazon product reviews dataset on Kaggle, ID: amazon-fine-food-reviews. Link: https://www.kaggle.com/datasets/snap/amazon-fine-food-reviews. Dataset version notes: UCI datasets are versioned by upload date (latest as of 2023-03); CLUE benchmarks follow semantic versioning with annual updates; MNIST v6.0 contains an extended test set with 60,000 samples.

References

Lam, C. High-dimensional covariance matrix estimation. WIREs Comput. Stat. 12, e1485. https://doi.org/10.1002/wics.1485 (2020).

Pafka, S., Potters, M. & Kondor, I. Exponential weighting and random-matrix-theory-based filtering of financial covariance matrices for portfolio optimization. arXiv:cond-mat/0402573 http://arxiv.org/abs/cond-mat/0402573 (2004).

Zeng, G. On the existence of an analytical solution in multiple logistic regression. Int. J. Appl. Math. Stat. 60, 53–67 (2021).

Chatfield, C. Introduction to multivariate analysis (Routledge, 2018).

Ledoit, O. & Wolf, M. A well-conditioned estimator for large-dimensional covariance matrices. J. Multivar. Anal. 88, 365–411. https://doi.org/10.1016/S0047-259X(03)00096-4 (2004).

Ledoit, O. & Wolf, M. Spectrum estimation: A unified framework for covariance matrix estimation and PCA in large dimensions. J. Multivar. Anal. 139, 360–384. https://doi.org/10.1016/j.jmva.2015.04.006 (2015).

Ledoit, O. & Wolf, M. Analytical nonlinear shrinkage of large-dimensional covariance matrices. Ann. Stat. 48, 3043–3065. https://doi.org/10.1214/19-AOS1921 (2020).

Friedman, J., Hastie, T. & Tibshirani, R. Sparse inverse covariance estimation with the graphical lasso. Biostatistics 9, 432–441. https://doi.org/10.1093/biostatistics/kxm045 (2007).

Cai, T. T. & Yuan, M. Adaptive covariance matrix estimation through block thresholding. Ann. Stat. 40, 2014–2042. https://doi.org/10.1214/12-AOS999 (2012).

Ali, H., Muhammad Asim, S. & Sher, K. The impact of transformations on the performance of variance estimators of finite population under adaptive cluster sampling with application to ecological data. J. King Saud Univ. Sci. 36, 103287. https://doi.org/10.1016/j.jksus.2024.103287 (2024).

Ali, H., Mahmood, Z. & AlAbdulaal, T. On the enhancement of estimator efficiency of population variance through stratification, transformation, and formulation with application to covid-19 data. Alex. Eng. J. 113, 480–497. https://doi.org/10.1016/j.aej.2024.11.044 (2025).

Sun, R., Ma, T., Liu, S. & Sathye, M. Improved covariance matrix estimation for portfolio risk measurement: A review. J. Risk Financ. Manag. 12, 48. https://doi.org/10.3390/jrfm12010048 (2019).

Bollhöfer, M., Eftekhari, A., Scheidegger, S. et al. Large-scale sparse inverse covariance matrix estimation. SIAM J. Sci. Comput. 41, A380–A401. https://doi.org/10.1137/17M1147615 (2019).

Su, J. Linear models from a probabilistic perspective: Does logistic regression have an analytical solution? Scientific Spaces https://kexue.fm/archives/8578 (2021).

Jagannathan, R. & Ma, T. Risk reduction in large portfolios: Why imposing the wrong constraints helps. J. Financ. 58, 1651–1683. https://doi.org/10.1111/1540-6261.00580 (2003).

Acknowledgements

This work was funded by the Fundamental Research Funds for the Central Universities (3122024054).

Author information

Contributions

Fang Sun conceptualized and designed the study, developed the methodology, and supervised the overall research process. Xiaoqing Huang performed data collection, preprocessing, and exploratory data analysis.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Sun, F., Huang, X. Application of regularized covariance matrices in logistic regression and portfolio optimization. Sci Rep 15, 23924 (2025). https://doi.org/10.1038/s41598-025-08712-w

DOI: https://doi.org/10.1038/s41598-025-08712-w