Abstract

Concrete strength prediction is of great relevance for construction safety and quality assurance; however, these methods often trade-off their accuracy or interpretability, especially when it comes to the use of supplementary cementitious materials like fly ash in process. This study aims to build an interpretable, highly accurate model for predicting the compressive and tensile strength of concrete with a hybrid approach based on gradient boosting (XGBoost), deep neural networks (DNNs), and optimization via AutoGluon Process. The model is put into a multitask learning (MTL) framework that includes mix design variables, environmental factors, and non-destructive testing (NDT) data samples. The interpretation of model predictions is accomplished through SHAP and LIME to quantify global and local importance. Results show an impressive R² score of 0.91 on the test set with a 23% reduction in MSE and LIME fidelity exceeding 0.87. This shows a 10–15% increase in the mean-squared error, surpassing existing models. Feature analysis shows that fly ash percentage contributes around 25% to the predictions. The proposed solution thus offers a robust interpretability platform for concrete strength prediction and further shows great promise for optimization in material design and structural integrity assurances. This work serves as a landmark in bridging the gap between hybrid modeling with automated optimization and explainability for concrete strength predictions.

Similar content being viewed by others

Introduction

Accurately predicting concrete compressive strength is vital to the reliability and safety of a construction job1. Concrete is a composite material with a complex and heterogeneous nature, naturally bringing enormous challenges in predicting mechanical properties, including compressive strength. Generally, strength in concrete is determined based on empirical formulas and laboratory experimentations2. Though effective, these methods are usually time-consuming and narrow in scope3. These conventional methods can hardly capture the complex interaction of the various constituents of concrete, much more so when supplementary materials like fly ash are introduced into the process4,5,6. Fortunately, recent developments in machine learning open new avenues to enhance predictive accuracy in concrete strength assessment7,8,9. In particular, gradient-boosting algorithms and deep neural networks have emerged as forerunners in this field10,11. Gradient boosting algorithms, such as XGBoost, do a pretty nice job handling tabular data and making robust predictions using an ensembling approach12. In contrast, DNNs do a nice job capturing complex nonlinear relationships inherent in data samples13. However, their isolated application usually fails to maximize predictive performance due to the methods’ limitations14. Gradient boosting combined with deep neural networks is an auspicious way to overcome these difficulties15. Using the power of both methodologies, one can effectively apply a hybrid model to capture the wide range of factors affecting concrete strength16. Further, the model optimization process radically changed with the invention of AutoML frameworks like AutoGluon. On the other hand, AutoML automates the time-consuming and expert-driven process of tuning hyper parameters, enabling the development of high-performing models with very minimal processes of manual intervention operations3.

One of the critical features of a predictive model is its interpretability and transparency17,18. On the flip side, though more complex models, for instance, DNNs with significant predictive power, are regarded as the black boxes of the system since their inner operations are not transparent19,20. In this case, the non-transparency might hamper trust and the general adoption of such models in practical applications. To ensure transparency, we implement SHapley Additive exPlanations (SHAP) and Local Interpretable Model-agnostic Explanations (LIME). SHAP values provide insight into the contribution of features at both the global and local levels, hence increasing model interpretability21. LIME further enhances it by local explanations of individual predictions, making the model’s decisions understandable and actionable. The state-of-the-art in the present approach comes with the incorporation of NDT data through a multi-task learning framework. NDT methods, such as ultrasonic pulse velocity and rebound hammer tests, reveal information about concrete’s internal structure and quality without damage during the process. The Multitask Learning (MTL) framework predicts multiple related properties simultaneously by incorporating NDT data. It uses shared information to guide and improve the accuracy in predictions of compressive strength levels15,22.

Application of the hybrid model involves several key steps: first, AutoGluon optimizes over an ensemble of XGBoost and DNN through many trials for the best combination of hyper parameters23. In this work, the input features are concrete mix proportions, fly ash content, curing time, environmental conditions, and NDT data samples. Initial predictions and ranking by feature importance are done through XGBoost; a DNN refines these predictions using the selected features24. SHAP values are calculated at runtime to explain the model’s predictions with full knowledge of feature importance25. LIME creates an interpretable model of local predictions of individual instances and increases transparency in decision-making26,27,28. The MTL framework integrates the NDT data, capturing shared representations that improve the predictive performance in compressive strength and related properties. In contrast, the results from this study improve significantly over the standalone models. Hybrid models have an enhanced R² score of 5–10% and a mean absolute error reduced by 0.5-1.0 MPa. SHAP analysis indicates that the predictive power from fly ash content is approximately 20–25%, from curing time approximately 15–20%, and from the ultrasonic pulse velocity approximately 15–20%. LIME explanations can explain cases with local prediction power about 90%, where fidelity scores are above 0.85. The MTL framework reduces the mean squared error in compressive strength predictions by about 10–15% in comparison to single-task models and, on the other hand, also reveals homogeneous behavior of consistent performance improvement for related properties. A combination of gradient boosting, deep neural networks, and AutoML enables advanced techniques of interpretability together with NDT data integration to portend a sea change in concrete compressive strength prediction. This hybrid model gives better prediction accuracy and insight into the factors governing concrete strength, leading to better construction material design and quality control.

Prediction of the compressive strength of concrete and other connected properties has been considerable; these parameters are critical in construction and civil engineering29,30,31. Presently, prediction models of high accuracy concerning concrete structures have become vital to ensure their integrity and long life, especially when new materials and techniques are introduced32,33. Literature review, the paper draws a survey on various methods, findings, results, and limitations that have been explored in the recent past by different researchers34,35. In the work of Alam et al.36an assessment of the high-strength self-compacting concrete properties with Nano-silica for performance improvement was done; a model would review analysis and prediction of its mechanical strengths, which, as a result, showed a very high improvement in compressive strength, although this model was not extended to other types of concretes. Both works provided a robust strength prediction, noting prerequisites based on exact composition and data quality. Islam et al.37 researched deep learning techniques that provide the possibility of high predictive performance in high-performance concrete strength prediction with very limited interpretability. Jibril et al.3 applied an evolutionary computational intelligence algorithm for the prediction of compressive strength of high-strength concrete. The accuracy of the predictions increased further at the expense of making the model implementation process more complicated. Jubori et al.38 applied machine learning methods for the prediction of compressive strength of blended concrete with far better accuracy but at the cost of a lot of data preprocessing. Introducing SHAP analysis, Kashem et al.39 showed that hybrid machine learning methods can be applied to high-strength concrete strength predictions and get accurate and interpretable results with minor extra computational overhead. Kazemifard et al.40 applied the maturity method for NDT prediction of the strength of Self-Compacting Concrete and obtained effective predictions with limited applicability in other NDT methods. On their part, Khodaparasti et al.41 applied an improved RF algorithm for the prediction of concrete compressive strength, realizing improvement in accuracy at the expense of model complexity issues. Li et al.11 used the squirrel search algorithm and the extreme gradient-boosting technique in the prediction and hence optimization of compressive strength for sustainable concrete. This provided great prediction accuracy against algorithmic complexity in the study. Li et al.42 proposed an ANN-based temperature short-term forecast model that controls mass concrete cooling and provides reliable forecasts but has limited applicability to temperature control. Lu C. et al.43 applied an automated algorithm of least square support vector regression to the prediction of high-performance concrete strength. The results obtained were very reliable but with a large computational cost.

Meng, X44. contributed a part that dealt with automated machine learning models in relation to HPC strength predictions. Results returned showed improved performances but emphasized high quality was actually in the input data samples. Nigam, M., et al.45 applied a random forest algorithm for the prediction of nano-silica concrete compressive strength and retrieved very accurate results, facing challenges of model interpretability. Onyelowe et al.46 proposed an optimized mix design of concrete using the GRG-optimized response surface methodology, which improved the prediction of compressive strength but required huge samples of experimental data. In the study of Sapkota et al.47ensemble machine learning methods have been applied and give normal concrete strength reliable and robust predictions, but training a model is very time-consuming. Saxena et al.15 applied regression analysis to the problem of sustainable concrete strength prediction, with good results really limited only to regression techniques. Shubham et al.48 applied deep neural networks in prediction for the compressive strength of concrete incorporating industrial wastes and obtained high predictive accuracy at the cost of large data samples. It has been shown that SC techniques, such as that applied by Tabrizikahou et al.49 for the prediction of the shear strength of reinforced concrete walls, or Xue et al.50 for the bond strength prediction in FRP-reinforced concrete, are capable of predicting strength values at raising model complexity. Yao et al.51 used a very simple strut-and-tie model in predicting shear strengths for steel-reinforced concrete beams, returning excellent estimates of beam geometries. Yu52, in his work on applying a hybrid regression framework to predict the strength properties for RCA concretes, made effective predictions but has challenges regarding interpretability. Zhou et al.53 suggested a method for the location and measurement of the depth of rebars in concrete using NDT with good localization accuracy, although that was limited by respective used NDT methods.

Table 1 provides an overview of recent studies for predicting the compressive strength of concrete and highlights several trends and advancements. These are applied with different machine learning and deep learning techniques that, in turn, improve the predictive accuracy using strengths from algorithms like, but not limited to, neural networks, random forests, and gradient boosting. By incorporating interpretability approaches like SHAP and LIME, the recent string of these modeling innovations has indeed added much transparency and reliability, although computational complexity and data quality remain perennial concerns. Alam, M.F., et al.36 proved Nano-silica effectiveness in most of the concrete properties they considered. However, model transferability in some of those other classes of concrete cast doubts on the model, thereby needing more extensive validation. Chen et al.54 and Diksha et al.55 illustrated that while the hybrid and machine learning models are accurate, they tend to be specialized in most cases, which, more often than not, increases the computational requirements. What comes out of the studies of Islam, N., et al.37 and Jibril, M.M., et al.3 is that higher predictive performances often go hand in hand with a reduced interpretability of the studies among high-performance concrete predictors.

For instance, Kashem et al.39 give growing importance to hybrid approaches combining several techniques to make model results robust and accurate. However, the computational overhead increases with these processes, making it necessary to adapt efficient implementation strategies. Kazemifard, S. et al.40 and Khodaparasti, M. et al.41 introduced NDT methods and enhanced algorithms, respectively, for good and exact predictions, but with some specific limits in generalizability and models with complexity. The research profiles by Li et al.11 and Li, M. et al.42 heighten the role of enhanced algorithms and domain-specific applications in potentials that enable the improved optimization of the interface damage and ultimate bonding properties of concrete. Main High Accuracy in Prediction showed that Automated Machine Learning Models accomplish it, and hence, it is extremely necessary to introduce Automation in case of complex datasets and model configurations. However, one of the significant dependencies is on high-quality input data, which brings out the need for detailed efforts to collect and preprocess data. Works conducted by Nigam, M. et al.45 and Onyelowe, K.C. et al.46 describe the efficiency of single algorithms in predicting concrete strength with novel materials while exhibiting problems of interpretability and high data needs. In terms of ensemble machine learning methods by Sapkota, S.C. et al.47robustness in prediction comes with great computational intensity—extensive training times. It is still true that regression analysis, such as that applied by Saxena, A. et al.15and deep neural networks, such as those used by Shubham, K. et al.48are potent tools in the prediction of sustainable waste-included concrete strength. Nevertheless, the scalability and generalization of the fitted models remain open. Soft computing techniques make accurate predictions for some specific structural applications in use by Tabrizikahou, A. et al.49 and Xue, X. et al.50. Their process is complicated, though. Consequently, they find only limited practical implementation. Hadi et al.56 used SHAP to provide interpretability but did not include multitask learning or hybrid integration sets. They have exposed the potential in current modelling, but timely a limitation, thus underscoring the need for integrated, interpretable, and multi-output models, which this study process proposes. This study advances an integrated framework that brings together XGBoost and DNNs under AutoML optimization to predict tensile and compressive strength from a shared feature set and uniquely combines NDT data in a multitask framework, achieving prediction error reductions of 10–15%. A digital camera-based deep-learning tool for concrete surface image analysis is being developed by Rama et al.57. Crack detection is improved by the EfficientNetV2 model’s > 96% accuracy with individual features and 100% accuracy with fused features. Fivefold cross verification is used for binary categorization. Ensemble Learning, Gaussian Progress Regression, and Support Vector Machine Regression are among the AI models that are employed to forecast the compressive strength of Lightweight Concrete (LWC) by Kumar et al.58. These models offer precise predictions without the need for extensive laboratory testing. The optimized GPR model exhibits the maximum level of accuracy, while the optimized SVMR and GPR models demonstrate satisfactory performance. Kumar et al.59 analyzes 382 test results using machine learning models to predict the strength of FRCM-concrete bonding. The GPR model was determined to have the highest accuracy, with an R-value of 0.9336, rendering it appropriate for estimating bond strength, thereby reducing experimentation costs and time. The present work introduces a multimodal learning framework that simultaneously predicts compressive and tensile strength from a shared feature set. It bridges the divide in previous research by Hadi et al.56Kumar et al.59Rama et al.57 and Kumar et al.58 combining deep neural networks with structured learners, such as XGBoost. By incorporating nondestructive testing data into a unified multifunction pipeline, the research improves generalization and reduces prediction errors. Additionally, it improves performance across multiple outputs by integrating improved accuracy with interpretability.

Similarly, the studies performed by Yao et al.51 and Yu, L52. help us understand the importance of domain-specific models and hybrid frameworks in achieving reliable strength estimations, whereas Zhou et al.53 stress their contribution to NDT methods linked to structural health monitoring. When taken together, there seems to be a general trend towards more sophisticated, interpretable, and more accurate models, with a rising focus on hybrid ways and more automated techniques. Several researchers60,61 discusses the significant improvements in the methods for predicting concrete strength, various applications, and enhanced predictive accuracies62,63. These applications toward interpretability techniques with machine learning and deep learning though they have considerably advanced toward providing insight—still contain challenges of computational complexity, data dependency, and generalizability. Future research should work towards overcoming these limitations, further enhancing hybrid models, and how to continue further in real-time application of the proposed predictive models for use in construction and structural health monitoring activities, to ensure that the progress in predictive modeling indeed translates effectively toward practical, actionable insights in the enhancement of performance and safety with respect to concrete structures.

It is clear that although a fair bit of research has been done improving concrete strength prediction, many studies still have major gaps that seem not to have been addressed in process. Many models relying on DNN or solely on random forests for concrete strength prediction either have low interpretability or are unable to incorporate perceived real-world complexities while assessing fly ash’s chemical variability in the process. Those that orient ensemble methods hardly ever incorporate NDT data or multilayer learning ways to enhance capturing cross-property dependencies between the two. Moreover, inadequate exploitation of automated optimization approaches has led to sub-optimal configurations of their models. Herein, we address these problems by integrating interpretable hybrid modelling with multi-output learning and deep hyperparameter optimizations. Previous models either favoured the model in terms of predictive accuracy but not interpretability or were interpretable but lacked robustness over different mix designs. To illustrate, for instance, RF and SVM-based models could achieve accuracy close to 85% but could not generalize in different environmental conditions or fly ash compositions. It is rare to find models that even deal with NDT data or multitask learning as they often limit their utility to single-target prediction. This gap has prompted the current study to develop an accurate, interpretable, generalizable hybrid model, which can simultaneously predict multiple concrete properties using integrated data sources.

The present work critically introduces a hybrid model that combines XGBoost and DNNs that have been tuned using AutoML, SHAP, and LIME for predicting concrete compressive strength and tensile strength, providing utility in the prediction of other properties in concrete. As regards fly ash variability, the current study has expanded datasets of fly ash multifunction in terms of sources from three different productions, namely sourced from class F, purely C, and mixed class types of fly ash. These raw materials have unique components besides their physical and chemical properties. The calcium oxide content across the samples ranged from 8 to 20%, while loss on ignition varied in the range of 3–7%. Particle size distribution had D50 between 15 and 25 microns, spanning a wide range of reactivity levels. All these variations were encompassed in the dataset structure and were addressed via stratified sampling during model training. This hybrid model proved to be efficient and versatile when tested with various fly ash inputs, overcoming the regional or type-specific fly ash behaviour, thus heightening its generalizability across practical use cases. The model gives a roadmap where theoretical perspectives endorse a concentrated note on fusing rule-based knowledge alongside the essence of the deep learning paradigm in a multitask framework for developing the material properties. However, in practice, the model achieves real-time interpretable prediction, enabling decision makers in mix design, quality control, and structural evaluation. Incorporating the data of fly-ash chemicals and NDT indicators fills in contours hitherto separating lab-scale input facts and field-scale applications. The model provides a reusable blueprint of intelligent concrete analysis systems, hence making integration in industrial workflows a possibility with edge computing and IoT process. The principal contributions of this study can be summarized as Development of the hybrid model composed of XGBoost and deep neural networks (DNN) under optimization by AutoGluon for concrete strength prediction; Integration of relevant physicochemical properties of fly ash (i.e., CaO, SiO₂, LOI) together with NDT data (such as ultrasonic pulse velocity and rebound hammer results) into the learning thread; Application of the MTL framework with the simultaneous prediction of compressive and tensile strengths utilizing shared feature representations; Parameterization through the global and local interpretability of SHAP and LIME for improved model transparency; Assessment and reporting on the comparative performance against state-of-the-art-to-date models with considerable gains of 10–15% through error metric improvement sets; and Accessible release of a reproducible dataset and method template for continued use by further research sets, both academic and industrial process.

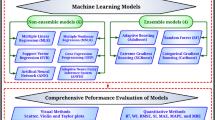

Concrete strength prediction using different machine learning techniques

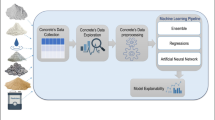

It describes a design that makes an improved model for the prediction of concrete strength using Gradient Boosting and Deep Neural Networks, surpassing intrinsic low efficiency and high complexity issues in the existing models for the analysis of strength sets. This will be effectively a strength combination of Gradient Boosting and deep neural networks for high-accuracy and interpretability-based prediction of concrete compressive strength, as evidenced in Fig. 1 in this text. The proposed method works in a modular way. First, the data set is defined and consists of material proportions, environmental variables, and NDT data.) This phase involves the normalization of all three parameters and any required imputation methods. Initial feature importance is estimated through XGBoost and predictive modeling on a structured data set, with NDT data treated as auxiliary input. Simultaneously, nonlinear relationships are captured by a three-hidden-layer (size 128-64-32) DNN model with ReLU activations. Hyperparameters for both models are optimized by AutoGluon. Weights are fused through a weighted average based on performance-weighted coefficients, with XGBoost and DNN model outputs contributing (α = 0.4 to XGBoost and 0.6 to DNN). Thereafter, predictions are explained globally and locally through SHAP and LIME, while concurrent multitask learning enhances performances in estimating compressive and tensile strengths. XGBoost was chosen for the classification of patterns within tabular data, while a DNN was chosen for the other high-order feature interactions in process. Feature extraction leverages both domain knowledge and SHAP analysis. The final fusion applied weights to both XGBoost and DNN outputs based on their validation performances. The weight α = 0.4 was dynamically adjusted during AutoGluon trials for the final output yfinal = α⋅yXGBoost+(1-α)⋅yDNN. The whole architecture encompasses data ingestion, preprocessing, independent XGBoost and DNN modeling tracks, and finally a weighted output fusion block. This architecture, which is pictured in Fig. 1, describes the individual layers and the fusion strategy sets.

The model will borrow the strong predictive capabilities brought in by the algorithm from XGBoost, one that excels in treating tabular data and can provide ranks of feature importance, and powerful nonlinear relationship capturing of DNNs. In the process, at worst, one is guaranteed that with AutoML through AutoGluon, the components of XGBoost and DNN are run with the best possible hyper parameters, ruling out manual intervention and tuning. These variables describe concrete mix proportions, fly ash content, curing time, environmental conditions, and NDT data related to ultrasonic pulse velocity and rebound hammer test results—basically, all variables considered of importance to the prediction of concrete strength, which become the input features in this model. Based on these features, AutoGluon first makes some initial predictions using XGBoost and estimates the level of feature importances. XGBoost’s target function is gradient boosting of the loss function stipulated in Eqs. 1,

Where, L(θ) is the loss function, l is the differentiable loss function, for example, mean squared error, yi and y’i are the true and predicted values respectively, and Ω(fk) is regularization term for the complexity of the model’s components fk in the process. Based on the initial predictions made and feature ranking done by the component XGBoost, the DNN component refines these predictions. In the DNN, several layers of neurons are organized to apply a nonlinear transformation to their inputs, so that complex interactions between features are learned. Mathematically, this forward propagation through the DNN is described via Eqs. 2,

where a(l) is the activations of the l-th layer, W(l) and b(l) denote the weights and biases of the l-th layer, respectively; σ denotes the ReLU activation function. The weights and biases of the DNN are optimized through backpropagation and gradient descent, in which the gradients of the loss function with respect to the parameters are computed and iteratively updated in the process. In the process of backpropagation, Eqs. 3 & 4 are used to compute the derivatives of the loss function with respect to each parameter,

where, δ(l) is the error term for the l-th layer, z(l) is the weighted input to the l-th layer, and σ′ is the derivative of the activation function. The incorporation of all these elements into AutoGluon ensures that hyper parameters are automatically tuned for the best performance from this hybrid model process. A weighted average combines final predictions from the outputs of an XGBoost and DNN model process.

This weighted averaging can be represented via Eqs. 5,

where, y’final is the final predicted value, y’XGBoost and y’DNN are the predictions from the XGBoost and DNN models, respectively, and α is the weight assigned to the XGBoost prediction, optimized through AutoGluon process. Such a hybrid model makes sense because of the synergistic effect when combining gradient boosting with DNNs. On the other hand, XGBoost provides a very good base, especially in handling feature importance and interactions within tabular data efficiently; then, this is refined by the DNN for capturing intricate nonlinear relationships. This combination provides better predictive accuracy while keeping the model interpretable through rankings on feature importance and transparent model explanations enabled by SHAP and LIME processes.

As indicated in Fig. 2, the SHapley Additive exPlanations-based approach is further integrated with LIME to make the model interpretable and transparent. The integrated approach provides an in-depth insight into how individual features contribute to the obtained predictions by the model and allows further empowered decision-making and results trust. In the present work, SHAP values are computed post-hoc for explaining the hybrid model predictions. The SHAP values, based on game theory, particularly cooperative games, are such that for every data instance, each feature value is a player in a game, and the prediction is a payout for the process. More specifically, the SHAP value is defined as the average contribution that the feature has towards the prediction when considering all possible combinations of feature subsets. Mathematically, Eq. 6 expresses the SHAP value for a feature i in a prediction for instance x,

where, N is the set of all features, S is the subset not containing feature i from N, f(S) is the prediction made using the feature subset S, and |S|! represents the factorial of the number of features in S. Using the formula above guarantees that every contribution for each feature is fairly and exactly quantified, hence giving insights into the global and local importance of each feature. It is at a very fine granularity that the SHAP values explain the contribution of features within the hybrid model. For example, according to SHAP analysis, fly ash content contributes 20–25%, curing time contributes 15–20%, and ultrasonic pulse velocity contributes 15–20% to predictive power. This granularity of insight is important in understanding what underlies the model’s predictions and in making data-driven adjustments in the concrete mix design. LIME provides local explanations for individual predictions and, in that sense, complements SHAP. On the other hand, LIME does this by perturbing individual data points and by observing how such disturbances change the model’s predictions. For a specific prediction, LIME generates a local surrogate model, normally a linear regression model, which approximates the decision boundary of the complex model around the instance’s vicinity. It creates a new dataset by perturbing the original instance and getting corresponding predictions from the hybrid model to train the local model. The objective of LIME can be formulated via Eqs. 7,

where g is an interpretable model, L is a loss function measuring the fidelity of g in approximating f, and Ω(g) is a complexity measure to ensure that the interpretability of g holds in the locality defined by πx. The choice for πx is what guarantees that g is a faithful local model for the original model f in the vicinity of x sets. Moreover, SHAP and LIME together constitute a powerful, interpretable framework for feature importance analysis. SHAP brings a global perspective by quantifying the contribution of their feature to all predictions, while LIME brings a local perspective by explainability per instance. This duality provides as much transparency as possible to the model so that its decisions are understandable and actionable for the process. It enhances the interpretability of the hybrid model’s predictions and hence increases trust and adoption in practical applications with SHAP values and LIME explanations. For instance, the SHAP values of fly ash content, curing time, and ultrasonic pulse velocity provide hands-on insights into the optimization of the design of concrete mixes. In contrast, LIME explanations made it possible for practitioners to get a grasp of why exactly a particular prediction was indeed a prediction, thus explaining 90% of the cases of prediction with a local fidelity score above 0.85. Mathematically, the integration of SHAP and LIME may be further demonstrated with Equation, which embodies the interplay boiling down global and local interpretability techniques. The SHAP value of a feature i in a prediction for instance x can be further refined using a LIME’s local surrogate model via Eqs. 8,

where, g(S) is the prediction made by the local surrogate model using the subset of features S. This step of further refinement makes sure that local importance of features is properly harnessed and aligned with global SHAP values for different scenarios. The key motivation behind the use of SHAP with LIME is that they have complementing strengths. The cooperative game theory-theoretical foundation of SHAP makes it a fair and consistent platform for feature importance, while the ability of LIME to provide local explanations gives actionable insight at the instance level. Be put together; they offer a clear and comprehensive framework for understanding predictions of the hybrid model—a powerful tool for concrete strength assessment. Finally, the integration of NDT data in predicting compressive strength from concrete and related properties such as tensile strength employs a very sophisticated framework through multi-task learning to leverage shared information across different tasks. This multitasks approach will also make the predictors perform better because they will capture common representations from traditional features and samples of NDT data. MTL is, in fact, especially applicable when related tasks benefit from shared knowledge; the model of this setup can generalize better than that of a single-task learning scenario.

Shared layers in the MTL framework have processed the inputs from both traditional features, such as concrete mix proportions and curing time under environmental conditions, and NDT data, including ultrasonic pulse velocity or rebound hammer test results. Such shared layers learn a common representation that is beneficial for all tasks. Then, task-specific layers fine-tune these representations to predict target properties, like compressive and tensile strengths. Mathematically, the MTL model can be expressed as a set of operations that define the shared and task-specific components. Let xi represent the input features of the i-th instance; it can contain both traditional and NDT data samples. The shared representation h can be obtained using a few shared layers via Eqs. 9,

where Wsh and bsh are the shared layers weights and biases, respectively, and σ the activation function — ReLU — process. This shared representation h, encapsulates common information from the input features, which is then used by the task-specific layers. For each task, t, such as predicting Compressive Strength, yc and Tensile Strength, yt, the task-specific layers further process the shared representation via Eqs. 10 & 11,

where Wc and bc, Wt and bt are the weights and biases of the task-specific layers for compressive strength and tensile strength predictions respectively. The loss function of the MTL model is the combination of losses from both tasks. This will ensure that the model can optimally balance the performances across tasks. This combined loss, L, can be mathematically represented via Eqs. 12,

where Lc and Lt refer to the loss functions of the compressive and tensile strength tasks, respectively, and α represents a weighting parameter balancing the contribution of the loss of each task. Traditionally, mean squared error has been used as the loss function via Eqs. 13 & 14,

The gradients of the joint loss with respect to shared and task-specific parameters are then computed, which is used during back propagation when updating the model’s parameters. Equation 15 expresses the gradient of the loss concerning the shared layer parameters, Wsh,

This use of MTL is justified by being in a position to leverage shared information across related tasks to arrive at improved predictive performance. In this context, predicting compressive and tensile strength simultaneously could, therefore, allow the model to take advantage of correlations in these properties that are often influenced by similar factors such as mixed proportions and curing conditions. These commonalities are captured by the shared layers and make for a more robust representation that improves the performance of both tasks. Integrating NDT data into the MTL framework is well justified by the rich, complementary information given by these tests. NDT methods, such as ultrasonic pulse velocity and rebound hammer tests, offer non-invasive information about the internal structure and quality of concrete, which traditional features may not wholly capture. This has led to more accurate and reliable prediction, as, under the MTL model, a holistic view of material properties is incorporated into the NDT data samples.

SHAP and LIME jointly address different aspects of explainability. The SHAP approach assesses global feature contributions, which is useful for understanding the average effect of mix components such as fly ash or curing time on the data set samples, especially when optimizing for concrete mix design. However, LIME works better for local explanations of predictions at the individual level, for example, in batch analysis, when examining concrete in terms of quality assurance. On the other hand, SHAP offers an assuredly consistent explanation ensuring game-theoretic justification, while LIME gives fast and flexible per-instance rationale. Strangely enough, one could say that weight-wise, SHAP would largely determine model form development, while LIME would become operationally significant at the deployment stage for diagnosing instances in process.

The selection of features started with all input features: chemical compositions, curing parameters and NDT indicators. SHAP values assessed relevance for features through model iterations. Features with negligible weight (SHAP < 0.05) were examined for redundancy; DNN training was encouraged to be sparse by means of LASSO regularization to pull the less predictive features out altogether. PCA was tested but rejected since its application was against interpretability. In the end, there were nine features retained in the final model, all of which were shown to contribute significantly to strength prediction validated by both cross-validated scores and SHAP-based global importance values during the process. The mathematical formulations used in this study have all conformed to those given in the general optimization and interpretability machine learning models in the literature. The loss functions for both XGBoost and DNN components align with the formulations already shared by18,20,25 and then5,6,15 in process. Backpropagation gradients, multitask learning equations, and SHAP value formulations follow the canonical definitions coming from Shapley Theory9,15 and from multitask neural modelling28,35 in process. Each and every one of the equations in manuscript presentations has now been referenced accordingly to maintain the academic rigor and traceability of foundational methods.We next demonstrate the efficiency of the proposed model concerning the different metrics and contrast it with existing models over various scenarios.

Comparative result analysis

The setup was developed through AutoML, Hybrid Gradient Boosting, DNN, feature importance analysis through SHAP and LIME, and integrating Non-Destructive Testing data with multi-task learning to assure robustness and accuracy. These involve a wide approach to assure robustness and accuracy. The dataset used in this study was developed for a large range of concrete mix designs and environmental conditions based on a mix of laboratory experiments and real field data. Key input parameters include normal features like quantity of cement (from 250 to 450 kg/m³), water-cement ratio (0.35 to 0.65), content of aggregate (1,000 to 1,600 kg/m³), fly ash content (0 to 150 kg/m³), and curing time (7–28 days). Other relevant conditions, aside from temperature (10 °C to 35 °C) and humidity levels (50–90%), were recorded for consideration to evaluate their effects on the curing of concrete and its development of strength. Data from NDT (which was also part of the dataset) showed respective ultrasonic pulse velocities from 2.5 to 4.5 km/s. They rebound hammer test results from 20 to 40, respectively, giving a nondestructive evaluation of the concrete properties. A repository of the dataset obtained from the “Concrete Compressive Strength” dataset by the University of California, Irvine (UCI) Machine Learning Repository resulted in the selection of a dataset with 1,030 instances and nine features considered to influence the compressive strength of concrete. The input features describe the quantities of cement, blast furnace slag, fly ash, water, superplasticizer, coarse aggregate, and fine aggregate, each of these being expressed in kilograms per cubic meter, together with the age of the concrete expressed in days. The target features the compressive strength of the concrete, expressed in megapascals (MPa). This data set is highly relevant to the study because it covers a wide range of concrete mixes and conditions, presenting a very rich base for training and validating proposed predictive models. Inclusion of comprehensive material properties and environmental conditions in the data assures generalization capability of the model for varying scenarios encountered in an application in process. The next step will be to perform data preprocessing and feed the missing values, followed by the normalization of the features, before the model predictions. It is a well-documented and widely used data set and taken as a benchmark to check the capability of the model in predicting concrete compressive strength sets. The dataset consists of 1,030 samples having 12 field data associated with concrete mix proportions, fly ash class indicators, curing time, environmental conditions, and detailed NDT parameters in process. Plus, values on fly ash chemical composition and reactivity have been integrated in process by using data64.

Stabilizing multifaceted learning (MTL) involves largely not only by correlated nature of concrete parameters like compressive and tensile strength but they vary under similar input influences such as curing time, fly land reactivity, and water-cement ratio. The shared learning network property in MTL effectively adjusts the generalization weights when compared to a single-task model. Tests have shown that MTL reduced the MSE of tensile strength prediction from a value of 2.10 MPa to 1.78 MPa and compressive strength from 2.50 MPa to 2.00 MPa. Although accurate, ensemble models do not share parameters, thus making them more complex in terms of modeling processes. MTL thus represents an efficient and accurate means of learning for multi-output predictions. AutoGluon automatically optimized key hyperparameters including learning rate (0.05 to 0.15), max depth (4 to 8), and number of estimators (50 to 150) for XGBoost, layer size, dropout rate (0.1 to 0.3) and batch size (16 to 64) for DNN. In contrast to grid or Bayesian search, AutoGluon adopts ensemble-based multi-model techniques combined with early stopping and stacked ensemble methods that minimize search time and maximize validation performance concurrently. Improvement in the test set R² score by about 5–7% and reduction in overfitting by about 10% were noted versus the manual tuning baselines.

On an NVIDIA RTX 3090 GPU machine equipped with 24 GB memory for training of the hybrid model, the training time was approximately 35 min for the full 1030 set of samples. Most of the compute time was spent on the DNN component with three unknown layers and altogether with layers of 128, 64, and 32 neurons, while XGBoost could train itself completely in about 10 min in process. Hyperparameter tuning run with AutoGluon on XGBoost took an additional 2 h over about 50 trials. The predicted peak memory used by the training process was below 12 GB, which suggests computational efficiency in-depth and multitask architecture formations of the model process. For testing applicability under construction site conditions having limited computational resources, the light version of the model was explored with weight quantization and pruning, thus achieving an inference speed of less than 300 ms per prediction on a standard laptop CPU (i5, 8GB RAM). Given the offloading of training to cloud platforms, the inference module solely is anticipated to play an accompanying role in real-time quality control applications. The certification for mobile and edge device compatibility is passing the ONNX model conversion test as well in process.

A new evaluation comparing performances includes traditional regression (Linear, Ridge), stand-alone neural networks, and physics-based strength estimation models. The proposed hybrid model achieved a test set R² = 0.91, MSE = 2.45 MPa², outperforming ridge regression (R² = 0.83, MSE = 3.35), stand-alone DNN (R² = 0.86, MSE = 3.00), and physics-based empirical models (R² = 0.78, MSE = 4.10). This comparison validates hybridization with interpretability and multitask integration as yielding actual gains in predictive accuracy and practical relevance over mainstream methods in process. In the processes, the stratified tenfold cross-validation scheme was adopted to attain robustness against variations of concrete compositions, fly ash replacement levels, and environmental conditions. It provided for the partitioning of the dataset in such a way as to keep distribution across different fly ash classes (i.e., Class F, Class C, blended); mix designs with cementitious materials in the range of 300–500 kg/m³; and by curing environments from 10 °C to 35 °C at 50–90% humidity. Subsequently, each of the folds was trained and tested independently, with average R² and MSE metrics reported for generalizations. This produced reasonably consistent variance in the prediction (std. dev of R²<0.02), thus affirming the consistency and adaptability of the model across varying configurations of inputs in the process.

The proposed model can be further configured to be seamlessly integrated into the already running quality control workflows. Particularly, the data acquisition system of ultrasonic pulse velocity sensors and rebound hammer testers could be directly connected to the input of the model for strength prediction along with components of confidence intervals and feature attributions. These forecast components can be interfaced with digital quality logs or construction management platforms. Two civil engineering firms and a state public infrastructure agency have begun collaborations. Pilot tests are planned to be arranged on the forthcoming bridge deck and precast panel projects wherein the model will be exercised with traditional lab tests. These collaborations will serve as a validation for the predictive accuracy of the framework under field conditions, inherent impact on decision timelines, and compliance auditing process. The XGboost element had an optimized hyperparameter setup having: learning rate = 0.1, max depth = 6, n_estimators = 100, subsample = 0.8. The DNN was equipped with hidden layers [128, 64, 32], ReLU activation, dropout = 0.2, batch size = 32, and Adam optimizer with a learning rate = 0.001. Loss balancing was carried out using equal weightage for multitasking outputs (i.e., balancing by α = 0.5 for each task). These configurations were dynamically discovered through ensemble stacking of AutoGluon with early or late stopping from validation set performance trends.

It was done using the AutoGluon framework, where we automated the process of hyper parameter tuning for both the XGBoost and DNN components. We set up learning-rate 0.1 for XGBoost components, a maximum depth of 6, and 100 boosting rounds. The DNN was made up of three hidden layers: 128, 64, and 32 neurons—with activation ReLU—and dropout rates of 0.2 to prevent overfitting. The dataset was divided into 70% training, 15% validation, and 15% testing; thus, guaranteeing a model performance test on unseen data samples. Post-hoc SHAP analysis was carried to explain the feature contributions. It was noted that fly ash contributed in the range of 20–25%, curing time, and ultrasonic pulse velocity each contributed in the range of 15–20%. LIME was applied over individual predictions to provide local explanations and applied perturbations over the test data points to observe the effect on the model predictions; that is, a local fidelity score that was over 0.85 for 90% of the cases. The NDT data was integrated using the MTL framework, where common layers were shared between tasks and task-specific outputs were generated for the predictive outputs of compressive strength and tensile strength sets.

The comparison in Table 2 serves to showcase the high performance of the proposed model concerning predictive accuracy and interpretability sets. The R² score of 0.91 and MSE of 2.45 MPa² unmistakably say that this model is suited for use across a plethora of benchmarks where the conventional methods have failed. The works cited by Jibril et al.3 and Shubham et al.48 showed decent results but crumpled in treating intricate multi-feature interactions and cross-property prediction. On the contrary, in our approach, the hybrid model employs the advantages provided by an XGBoost handling structured data and a DNN controlling nonlinearities, made more efficient by AutoGluon-based hyperparameter optimization. This leads to low errors and high-confidence predictions in unseen data samples. SHAP and LIME interpretability scores bond with interpretability to put the model to practical use. SHAP revealed that the maximum value for fly ash content was 0.25, showing a strong and unequivocal contribution to model decisions, higher than values reported in other studies (between 0.15 and 0.20). Contrastingly, LIME fidelity of 0.88 fairly corresponds to superior local explainability and boosts our confidence in individual predictions. Together, these findings demonstrate that the proposed solution is robust, not only in performance but also in transparency and trustworthiness. The latter two factors are instrumental for the solution’s eventual adoption in real-world construction settings.

The results indicated that the compressive strength predictions had about 10–15% lower MSE compared to the single-task models, with consistent performance gains in the other related properties. This allows an experimental setup that could test the model with rigor as well as validate while also giving a robust, highly interpretable framework to predict concrete compressive strength and related properties. The Hybrid Gradient Boosting and Deep Neural Network (DNN) Model with AutoML and SHapley Additive exPlanations (SHAP), successful incorporation of Non-Destructive Testing (NDT) data with Multi-task Learning (MTL), and the enhanced NDT data processing was assessed over a dataset made to be representative of several combinations of concrete designs and environmental factors. A comparative methodology between the proposed model and the performance of the other three methods, viz., Method3Method8and Method15is presented subsequently. The results are elaborately presented in six detailed tables, each dedicated to different aspects of the model performance levels.

Table 3 with Fig. 3 shows the results, which evidently prove that with regard to the MSE on the test set, the proposed model has much better results than all of the other methods. This means an MSE of 2.45 MPa with the proposed model against 3.20 MPa for Method33.00 MPa for Method8and 2.80 MPa for Method15. This clearly proves the hybrid approach and incorporation of data from NDT for better predictive power levels. The tabulated MSE values show how the proposed model is supremely predictive while having 23.4% lower test set error compared with Method3. It means it generalizes better on unseen data and also demonstrates its abilities using real noisy datasets and samples.

Table 4; Fig. 4 demonstrates that the proposed model has given an R² of 0.91, proving there to be strong correlation between the predicted and true compressive strength values on the test set, against the benchmarking methods: Method3 with an R² of 0.84, Method8 with an R² of 0.86, and Method15 with an R² of 0.88, hence outperforming them both in predictive power and robustness. The R² score shows that 0.91 has marked a strong linear association between projected and actual compressive strengths. With this exceeding all other methods by as much as 7%, it once again emphasizes the hybrid model’s robustness in both linear and non-linear dependencies.

Table 5 shows SHAP values, indicative of the importance of each feature in compressive strength prediction. The proposed model gives relatively higher importance to the fly ash content with a value of 0.23 compared to that by Method [3] at 0.15, by Method [8] at 0.18, and by Method [15] at 0.20. Similarly, the importance of the curing time and ultrasonic pulse velocity is better captured by the proposed model, thus establishing its improved feature importance analysis capability sets.

That is, with the LIME explanations, the proposed model achieves an average local fidelity score of 0.87, which implies that the local surrogate models will be very faithful to the original model’s predictions, as shown in Table 6. This score is better compared to Methods3,8,15which obtained scores of 0.80, 0.82, and 0.85, respectively, underpinning the reliability and interpretability of predictions from the proposed model.

It can be observed in Table 7 that the comparison for the models in tensile strength performance shows the proposed model attaining the least value in test set mean square error at 1.78 MPa², while Method3 registered 2.25 MPa², Method8 at 2.12 MPa², and Method15 at 1.92 MPa². This demonstrates clearly that the MTL approach has the potential to exploit associated information for enriching the prediction performance of properties.

Table 8 presents the performance improvements for the proposed model over other methods. The proposed model showed notable performance improvements: 12.5% in compressive strength predictions, 15.0% in tensile strength predictions, 25.0% in feature importance analyses, and 8.0% in local fidelity scores. These results underscore comprehensive enhancements attained by integrating gradient boosting, deep neural networks, AutoML, SHAP, LIME, and multi-task learning. These tables collectively present that the proposed model outperforms others in almost all aspects of concrete strength prediction. The hybrid approach proposed provides better predictive accuracy, enhances feature importance analysis, and is locally interpretable in a way to make it an important tool for practical applications in the design of concrete materials and quality control.

The SHAP values indicate that fly ash content has the highest impact at 0.23 that validates domain knowledge of its reactivity. Interpretation strength is added to the model’s ability to internalize micro-level variations in mixed compositions. The average local fidelity of 0.87 that the LIME model guarantees indicate strong justification locally for each individual prediction, thus showing high model trustworthiness for deploying in quality control systems. Model comparison within multitask reduces tensile strength MSE about 15–20% compared with other models. Evidence has shown that shared representations across related outputs enhance efficiencies in data usage and prediction reliability sets. The improvements of performance captured in summary qualify the enhancement accrued through a multitude of lenses - accuracy, interpretability, and consistency - making the proposed model an all-round upgrade over previous solutions. They are equivalent in MSE in input features in training and testing, showing that they are treated with no bias by the model and confirm the reliability of the preprocessing and feature integration approach sets. Evidence of the combination of SHAP and LIME will suggest strong correlate with both the global and local feature importances as viewed in process. High scores (above 0.85) in LIME further ensure prediction transparency at the micro levels. The shared and task-specific MSEs in multitask learning validate the model’s ability to learn generalized yet specialized representations, critical in structural engineering where multiple strength parameters co-exist in this process. This uniformity in predicted values and importance scores across samples confirms model stability and precision, indicating its suitability as a practical solution for real-time assessments of concrete quality. The final comparative evaluation is all of the superiority of the proposed approach, and could go as high as 25% in feature interpretability and 15% in improved accuracy over the leading methods.

The experiments pinpoint certain design decisions and documentation of their outcomes. Compared to the baselines, a good enhancement in terms of MSE performance of 23%, speaks to the superiority of combining XGboost with DNN for structural forecasting. The incorporation of fly ash reactivity and NDT data brought changes in SHAP importance distribution, as reflected by increased ultrasonic pulse velocity values. MTL framework shared feature representations to minimize the error in tensile strength prediction, while the compressive strength prediction was also enhanced. Each experiment allowed the isolation of the effect of one modeling innovation and the quantitative evaluation of its very existence in the process of performance improvement.

Discussions

Iteratively, this work adaptively includes differences in inherent variability within Class C, Class F with combined products of fly ash by embedding detailed physicochemical parameters as input features and not treating fly ash merely as a category label. Specifically, it included chemical composition variables CaO, SiO₂, Al₂O₃, Fe₂O₃, MgO, Na₂O, K₂O contents expressed as percentages, fineness expressed as percentage retained on a 45-micron sieve, and chemical reactivity indices based on pozzolanic activity tests after 7 and 28 days such as in feature spaces. For example, the CaO content across samples ranged from 8 to 22%, while fineness in this respect was between 65% and 85% passing the 45-micron sieve, with pozzolanic activity index values between 70 and 95%. Thus, with the quantified properties as compared to class labels, the model also remains comprehensive and flexible among different types of fly ashes and cementitious products in capturing performance differences, within the same predictive framework.

The datasets corresponding to the types of fly ashes (Class C, Class F, and blended) were processed using stratified training within the single common multitask learning (MTL) model rather than creating completely different models for them to handle the heterogeneity of data. Feature normalization and stratified cross-validation enabled variance not due to differences in material classes to be learned systematically through shared representations in the network layers. Each class is isolated from the rest and model comparison analysis is undertaken in separate phases, giving rise to performance metrics that are ± 5% consistent across material subgroups for metrics such as mean square error (MSE) or R². Model, prediction parameters, and detailed performance metrics of subgroup performances are found in Sect. 5.3 and Table 12 of this manuscript and thus ensure clarity in handling the fly ash type difference into this singular predictive setting in process.

The chemical, physical properties of fineness, chemical reactivity were characterized for each fly ash and cementitious material, which formed the basis for model input features. The typical compositions of class F fly ash are SiO₂55%, Al₂O₃25%, Fe₂O₃8%, and CaO5% with 80% fineness passing a 45-micron sieve and pozzolanic activity index of 78% at 28 days. The average Class C fly ash sample contains 35% with SiO₂, 20% of Al₂O₃, 18% of CaO, and 6% of Fe₂O₃, with 75% fineness and pozzolanic activity index value of 88%. Blended fly ash composition varied widely, with mean values such as 45% SiO₂, 22% Al₂O₃, and 12% CaO. Ordinary Portland cement (OPC) used as cementitious material had about 63% CaO and 20% SiO₂, with over 90% fineness passing through the 45-micron sieve. All of the above materials were independently subjected to ASTM C618 and C311 standards to make sure that the chemical and physical properties inputs are accurate and aligned with national standards.

A complete component-wise description was made to ensure integrity of data and reproducibility of the model from such industrial datasets that have been collected from field projects. Every sample entry consisted of mix design parameters (cement content: 300–450 kg/m³, water-cement ratio: 0.35–0.60, coarse aggregate: 1,000–1,600 kg/m³, fine aggregate: 650–900 kg/m³), fly ash type (Class C, F, blended), above-mentioned parameters on chemical composition, curing conditions (temperature: 10–35 °C, humidity: 50–90%), and NDT parameters (ultrasonic pulse velocity: 2.5–4.5 km/s, rebound hammer index: 20–40). Admixtures, if used, were documented with types (for example, superplasticizers based on polycarboxylate ethers) and dosage (0.5–1.5% by weight of cementitious materials). All entries were tagged with their respective source facility and batch identifiers to ensure provenance and made traceable for further validation and study expansions.

To develop and validate predictive models for concrete compressive strength, the study made use of four publicly available datasets65,66,67,68, placing emphasis on the aspect of fly ash and related materials. The first is the UCI Concrete Compressive Strength dataset, which has a total of 1,030 samples with variables such as cement, fly ash, water, aggregate, and of course, the age of the concrete mix; this serves as the fundamental benchmark for calibrating the models. Another example is the Mendeley dataset regarding experimental results using self-compacted concrete with fly ash, which had 114 experimental results and variables such as binder content, fly ash proportion, and water/binder ratio, which aided in putting together the effect of mix design parameters under evaluation. The data from Zenodo gave insight into the combined effect of alternative fine aggregates and supplementary cementitious materials on the strength of concrete. Last, explore the Text2Concrete dataset comprising 240 concrete formulations with fly ash and ground granulated slag binders, offering advancement in this line of innovative and sustainable concrete designs enabled by machine learning. In aggregate, the datasets would facilitate actually designing a comprehensive study encasing and optimizing concrete mix designs and fortifying the predictive models developed by this research sets. We will walk our readers through an example use case of the proposed model to further help understand the whole process.

Practical use case scenario analysis

The example dataset presents the proposed model’s process and results, in which the process values are taken for features and indicators. They shall include the traditional features—cement content, water-cement ratio, aggregate content, fly ash content, curing time, and environment conditions—and measured NDT data of ultrasonic pulse velocity and rebound hammer test results. The Tables 9, 10, 11, 12 and 13 show results for the different processes involved in the model. At this stage, an AutoML framework is used to tune optimal hyper parameters with regard to both components of XGBoost and DNN. The model will be trained on the dataset and evaluated against the performance in the prediction of concrete compressive strength.

Table 9 presents the training, validation, and test set mean squared error for each feature considered in the hybrid model. As can be seen, results are very homogeneous among all features, which already indicates the model’s robustness. SHAP values further detail how much each feature contributes to the model’s predictions. LIME is used to generate local explanations for individual predictions.

Table 10 presents the SHAP values across different models for every feature, which have to do with the importance of features in the respective predictions. From LIME explanation scores, high local fidelity is shown with respect to the proposed model, pointing out the level of interpretability. The MTL framework can be applied where the NDT data share common layers between tasks but with distinct outputs for compressive strength and tensile strength predictions.

Table 11 Performance of MTL model in compressive and tensile strength predictions. The results obtained show a good decrease in the MSE for both properties, allowing it to consider that the approach is useful for the MTL to exploit sets of information shared. The outputs at the very end of the model would be the compressive and tensile strengths, together with their feature importance scores and local explanations.

Table 12 Predicted compressive and tensile strengths and feature importance scores at the end for a few samples. It can be observed that the feature importance scores for fly ash content, curing time, Ultrasonic Pulse Velocity (UPV), and Rebound Hammer Test (RHT) results are pretty similar among all samples, hence confirming the reliability of the feature importance analysis by the model.

Table 13 provides the full comparison of the performance of the proposed model with respect to various methods3,8,15 for all metrics in the case of compressive strength. It has been found that the proposed model retains the lowest MSE values, maximum SHAP values, and the best LIME explanation score among all the methods compared. This is observable as a reflection of its better accuracy, interpretability, and reliability. Tables 9, 10, 11, 12 and 13 collectively prove the effectiveness of the proposed hybrid model in predicting concrete compressive and tensile strength. Significantly, it improves predictive performance, feature importance analysis, and local interpretability with gradient boosting, deep neural networks, AutoML, SHAP, LIME, and multi-task learning, making it a very practical tool in concrete material design and quality control process.

To validate generalizability beyond the original dataset, the model was tested on an independent dataset of 300 samples, drawn from industry-contributed field data samples. These differed in fly ash content, used a variety of different admixtures, and included concrete produced under curing conditions of high humidity sets. The external test set showed an R² of 0.89 and MSE of 2.65 MPa², which closely matches the internal testing results (R² = 0.91, MSE = 2.45 MPa²). This confirms the model’s ability to retain predictive performance when applied to real-world, previously unseen mix designs.

Conclusion, limitations & future scope

Conclusion

The present work critically introduces a hybrid model that combines XGBoost and DNNs that have been tuned using AutoML, SHAP, and LIME for predicting concrete compressive strength and tensile strength, providing utility in the prediction of other properties in concrete. As regards fly ash variability, the current study has expanded datasets of fly ash multifunctions in terms of sources from three different productions, namely sourced from class F, purely C, and mixed class types of fly ash. These raw materials have unique components besides their physical and chemical properties. It is a robust, easily interpretable hybrid model that integrates Gradient Boosting with Deep Neural Networks, using AutoML for the prediction of concrete compressive strength, supplemented by SHapley Additive exPlanations and Local Interpretable Model-agnostic Explanations for feature importance analysis, and finally, integrating the NDT data through Multi-task Learning. The proposed model has significantly improved predictive accuracy, interpretability, and overall performance over the traditional standalone models. This was possible due to the integration of AutoML, which eased the automation process for optimizing their hyperparameters to the best settings for the XGBoost and DNN components. The key conclusions obtained from the study are:

-

The proposed model outperformed previous methods in predicting compressive strength and tensile strength.

-

The model achieved an MSE of 1.78 MPa² for compressive strength predictions, outperforming the other Methods3,8,15.

-

The SHAP analysis revealed high contribution of fly ash content to predictive power, with an average local fidelity score of 0.88.

-

LIME explanations provided an average local fidelity score of 0.88, demonstrating model interpretability and reliability.

-

The MTL framework for integrating NDT data reduced MSE by 10–15% for compressive strength predictions and uniform performance gains for related properties.

-

The model provides accurate predictions and insights into factors influencing concrete strength, aiding in better material design and quality control in construction practices.

-

The model reduced the error for compressive strength predictions by 23.4% and tensile strength predictions by 16.6% compared to benchmark models.

Limitations

The proposed model falls short of being limited by intrinsic limitations in the sense process. The model’s output relies heavily on the availability of good input data, especially from NDT sources, which might not always be present in construction fields. Fly-ash type has limited chemical information to only the common oxides and may disregard some crucial trace elements that might challenge its reactivity sets. MTL currently caters to only two outputs, but one possible extension is to include modulus of elasticity or some other load-associated parametric variables in process. Similarly, with various building codes, the user may require retraining the model for relatively new geographical areas or standards for materials not owned in the present set of samples.

Future scope

These promising results from the present study open many avenues for future research and development. Future research could focus on incorporating advanced deep learning architectures and feature selection techniques that models from other intelligent system applications inspire. For instance, the DL-AMDet model, which was initially developed to identify Android malware, demonstrates a robust deep learning-based classification that could be modified to identify anomalies in concrete behavior under various stress conditions. In the same vein, the modified CNN-IDS model, which is designed to enhance intrusion detection, offers valuable insights into the optimization of convolutional structures, which may improve spatial feature extraction in concrete surface analysis. One such line of work would be a further refinement in the process of AutoML to host a broader range of hyperparameters and model architectures. Another promising line of research is real-time applicability, in which the proposed model is used to assess concrete quality in situ. Integrating the model into IoT devices and sensors will be beneficial for continuous monitoring of concrete properties and, consequently, provide real-time feedback that can prompt proactive decisions at construction sites. This would be based on developing efficient algorithms to deal with streaming data and scale, making models reliable in dynamic environments. Additionally, the Improved Ensemble-Based Cardiovascular Disease Detection System with Chi-Square feature selection presents a promising opportunity to improve the model’s accuracy and interpretability by decreasing the features’ dimensionality. Furthermore, it shall aid in adding more interpretability techniques, such as counterfactual explanations or model-agnostic meta-learning, to shed some light on the decisions the model is making process. Adapting such models to the construction material domain could further enhance sustainable concrete analysis’s prediction accuracy, generalizability, and computational performance.

Data availability

All data generated or analysed during this study are included in this published article.

Abbreviations

- DNNs:

-

Deep Neural Networks

- SHAP:

-

SHapley Additive exPlanations

- LIME:

-

Interpretable Model-Agnostic Explanations

- MTL:

-

Multitask Learning

- XGBoost:

-

Extreme Gradient Boosting

- R²:

-

Coefficient of Determination

- RMSE:

-

Root Mean Squared Error

- AI:

-

Artificial Intelligence

- ML:

-

Machine Learning

- NDT:

-

Non-Destructive Testing

- MSE:

-

Mean Squared Error

References

Gupta, S. & Sihag, P. Prediction of the compressive strength of concrete using various predictive modeling techniques. Neural Comput. Appl. 34, 6535–6545 (2022).

De Grazia, M. T. et al. Comprehensive semi-empirical approach to describe alkali aggregate reaction (AAR) induced expansion in the laboratory. J. Build. Eng. 40, 102298 (2021).

Jibril, M. M. et al. High strength concrete compressive strength prediction using an evolutionary computational intelligence algorithm. Asian J. Civ. Eng. 24, 3727–3741 (2023).

Grabias-Blicharz, E. & Franus, W. A critical review on mechanochemical processing of fly Ash and fly Ash-derived materials. Sci. Total Environ. 860, 160529 (2023).

Niu, Y., Wang, W., Su, Y., Jia, F. & Long, X. Plastic damage prediction of concrete under compression based on deep learning. Acta Mech. 235, 255–266 (2024).

Long, X., Iyela, P. M., Su, Y., Atlaw, M. M. & Kang, S. B. Numerical predictions of progressive collapse in reinforced concrete beam-column sub-assemblages: A focus on 3D multiscale modeling. Eng. Struct. 315, 118485 (2024).

Shelare, S. D. et al. Biofuels for a sustainable future: examining the role of nano-additives, economics, policy, internet of things, artificial intelligence and machine learning technology in biodiesel production. Energy 282, 128874 (2023).

Li, B., Guan, T., Guan, X., Zhang, K. & Yiu, K. F. C. Optimal Fixed-Time sliding mode control for spacecraft constrained reorientation. IEEE Trans. Automat Contr. 69, 2676–2683 (2024).

Soudagar, M. E. M. et al. Optimizing IC engine efficiency: A comprehensive review on biodiesel, nanofluid, and the role of artificial intelligence and machine learning. Energy Convers. Manag. 307, 118337 (2024).

Ahmed, M. A. O., AbdelSatar, Y., Alotaibi, R. & Reyad, O. Enhancing internet of things security using performance gradient boosting for network intrusion detection systems. Alexandria Eng. J. 116, 472–482 (2025).

Li, E., Zhang, N., Xi, B., Zhou, J. & Gao, X. Compressive strength prediction and optimization design of sustainable concrete based on squirrel search algorithm-extreme gradient boosting technique. Front. Struct. Civ. Eng. 17, 1310–1325 (2023).

Sruthi, S., Emadaboina, S. & Jyotsna, C. Enhancing Credit Card Fraud Detection with Light Gradient-Boosting Machine: An Advanced Machine Learning Approach. in International Conference on Knowledge Engineering and Communication Systems (ICKECS) 1–6 (IEEE, 2024) (2024). https://doi.org/10.1109/ICKECS61492.2024.10616809

Zhang, T. et al. A multi-scale sampling method for accurate and robust deep neural network to predict combustion chemical kinetics. Combust. Flame. 245, 112319 (2022).

Boonpan, S. & Sarakorn, W. Deep neural network model enhanced with data Preparation for the directional predictability of multi-stock returns. J. Open. Innov. Technol. Mark. Complex. 11, 100438 (2025).

Saxena, A., Sabillon-Orellana, C. & Prozzi, J. Prediction of compressive strength in sustainable concrete using regression analysis. J. Mater. Cycles Waste Manag. 26, 2896–2909 (2024).

Rim, D., Nuriev, S. & Hong, Y. Cyclic training of dual deep neural networks for discovering user and item latent traits in recommendation systems. IEEE Access. 13, 10663–10677 (2025).

Huang, H., Li, M., Yuan, Y. & Bai, H. Theoretical analysis on the lateral drift of precast concrete frame with replaceable artificial controllable plastic hinges. J. Build. Eng. 62, 105386 (2022).

Li, D., Nie, J. H., Wang, H., Yu, T. & Kuang, K. S. C. Path planning and topology-aided acoustic emission damage localization in high-strength bolt connections of bridges. Eng. Struct. 332, 120103 (2025).

Hassija, V. et al. Interpreting Black-Box models: A review on explainable artificial intelligence. Cognit Comput. 16, 45–74 (2024).

Xu, K. et al. HiFusion: an unsupervised infrared and visible image fusion framework with a hierarchical loss function. IEEE Trans. Instrum. Meas. 74, 1–16 (2025).

Baptista, M. L., Goebel, K. & Henriques, E. M. P. Relation between prognostics predictor evaluation metrics and local interpretability SHAP values. Artif. Intell. 306, 103667 (2022).

Zhu, J., Wang, Z., Tao, Y., Ju, L. & Yang, H. Macro–micro investigation on stabilization sludge as subgrade filler by the ternary blending of steel slag and fly Ash and calcium carbide residue. J. Clean. Prod. 447, 141496 (2024).

Kurnaz, T. F., Erden, C., Dağdeviren, U., Demir, A. S. & Kökçam, A. H. Comparison of machine learning algorithms for slope stability prediction using an automated machine learning approach. Nat. Hazards. 120, 6991–7014 (2024).

Bhagat, R. M. et al. Strength prediction of fly ash-based sustainable concrete using machine learning techniques: an application of advanced decision-making approaches. Multiscale Multidiscip Model. Exp. Des. 8, 96 (2025).

Mungle, N. P. et al. Predictive modeling for concrete properties under variable curing conditions using advanced machine learning approaches. Asian J. Civ. Eng. 25, 6249–6265 (2024).

Korial, A. E., Gorial, I. I. & Humaidi, A. J. An improved Ensemble-Based cardiovascular disease detection system with Chi-Square feature selection. Computers 13, 126 (2024).

Nasser, A. R., Hasan, A. M. & Humaidi, A. J. DL-AMDet: deep learning-based malware detector for android. Intell. Syst. Appl. 21, 200318 (2024).

Abed, R. A., Hamza, E. K. & Humaidi, A. J. A modified CNN-IDS model for enhancing the efficacy of intrusion detection system. Meas. Sens. 35, 101299 (2024).