Abstract

Chip-scale LiDARs hold promise for high volume, low cost, and compact footprint. A key candidate is based on the adoption of phased array beam-forming and beam-steering in the optical domain, hence the optical phased array (OPA). Piggybacking on the rapid development of photonic integrated circuits (PICs), integrated OPAs today can emit a diffraction-limited laser beam with sub-mrad divergence and steer the beam at a point-to-point rate of ~GHz. Nevertheless, key issues remain to be addressed for practical LiDAR applications. Here, we review the features and development of PIC-based OPAs from a LiDAR-oriented perspective, providing the necessary background and an analysis of challenges, recent breakthroughs, and long-term prospects.

Introduction

Light detection and ranging (LiDAR) uses laser light instead of radio waves or microwaves to measure the distance and reflectivity of a target surface. Thanks to the shorter wavelength of light, LiDAR can resolve targets that are orders of magnitude smaller than what radar can resolve at the same range. Additionally, since it operates at optical frequencies, its theoretical spatial resolution is similar to that of a camera, allowing more accessible sensor fusion for emerging applications such as autonomous vehicles1,2,3,4.

Nevertheless, though the ranging techniques5,6 have been comprehensively investigated and implemented with ever-improving laser sources and photodetectors7,8,9,10, scaling lasers and photodetectors up to the pixel density of cameras, especially at an affordable cost and footprint, proves a bridge too far due to the active nature of LiDAR. This necessitates laser beam-steering11, which temporally multiplexes the transmitter and receiver pairs without significantly degrading the ranging performance compared to scanless/FLASH solutions1,12. For decades, the industry has been reinventing LiDARs to solve the Spinning-Laser Problem13, i.e., miniaturizing long-range LiDARs under an ever-tightening budget regarding cost, size, and power consumption14. One of the defining aspects of these endeavors is the beam-steering technology used by the laser scanner, which largely determines the footprint and the appearance of the final product.

Encouraged by the market boom, solid-state LiDAR solutions based on nanophotonic devices15,16 are widely studied, especially on the silicon photonic platform17, where a closer integration with the electronic integrated circuits holds potential for further reduction in power consumption, manufacturing cost, and system footprint. One of the prominent candidates is the optical phased array, which boasts seamless and inertia-less beam-steering, scalable and zoomable spatial resolution, and reconfigurable and adaptive scanning. This translates to 10 to 100 times faster beam scanning compared to miniaturized mechanical scanners such as MEMS mirrors18,19, with almost no trade-off between aperture size and scanning speed, no concerns for resonance deformation20, and digitally controlled beam-steering, which is not dependent on the last scanned direction. Compared to scanless solutions such as FLASH and its derivatives9,10,21 using paired arrays of miniaturized lasers and photodetectors, with edge-emitting lasers (EELs) or vertical cavity surface emitting lasers (VCSELs) in the transmitter, and with single-photon avalanche diodes (SPADs) or silicon photomultipliers (SiPMs) in the receiver, OPAs have no blind spot and do not require external optics for beamforming. The elimination of external optics is a key advantage of PIC-based OPAs when compared to other solutions since it replaces active optical alignment of multiple discrete components with digitally driven on-chip calibration. This has the potential to greatly increase the manufacturing throughput and improve the field robustness of OPA-based LiDAR with adaptive calibration against the influence of shock, elements, or channel-wise failures.

For the last 15 years, PIC-based OPAs and LiDAR systems based on them have demonstrated a beam divergence smaller than 1 mrad22,23,24,25,26, a scanning speed larger than 1 GHz27, an array scale up to 49152 elements28, a self-contained transmitter with on-chip laser sources26,29,30,31,32, close integration with CMOS electronics23,24,33,34,35, and a monolithic integration of both transmitter and receiver28,36,37,38, with start-up companies poised to release production samples as early as 202539.

This review will focus on the PIC-based optical phased array from a LiDAR-oriented perspective. In “Operating principle and performance indicators”, the operating principle of OPAs will be introduced, based on which we will draw connections between key performance indicators of the device and LiDAR specifications. After establishing our perspective, we will identify challenges and key breakthroughs regarding the previously mentioned performance indicators in a case-study fashion. Afterward, discussions and prospects for emerging solutions and future development will be provided in “Discussions and prospects”, while the conclusions will be drawn in the last section.

Operating principle and performance indicators

In this section, we intend to cover the key features of the phased array as a beam scanner. An in-depth view is provided with design methodology rooted in the mathematical models of the array, thereby connecting design parameters and device performance. Advanced topics in the field of array synthesis are simplified into intuitions or basic procedures to provide the necessary background for the publications reviewed in later sections without delving into these topics themselves. At the end of this section, we will establish the general specifications of the automotive LiDAR and how they depend on the beam scanner’s performance and design.

Concepts and conditions for beam-forming and beam-steering

An optical phased array is an array of coherent laser sources with element-wise phase and amplitude control \({C}_{n}={a}_{n}\exp (j{\varphi }_{n})\) arranged according to a specific geometry \({{\bf{r}}}_{n}=({x}_{n},{y}_{n},{z}_{n})\). For example, the far-field coherent combining of N isotropic point sources in the direction of interest \({\bf{u}}=({u}_{x},{u}_{y},{u}_{z})=(\cos \,\alpha ,\,\cos \,\beta ,\,\cos \,\gamma )\) is expressed as,

where \({{\rm{k}}}_{0}=2{\rm{\pi }}/{{\rm{\lambda }}}_{0}\) is the free space wavenumber of the operating wavelength. This term is the array factor (\(\text{AF}\)), which contains the geometric and complex weight designation of the array elements without regard for the exact emission of individual elements. Also, note that the direction cosine can be expressed in terms of azimuth \({\theta }_{AZ}\) and elevation \({\theta }_{EL}\) or steered angle within the plane parallel \({\theta }_{Y}\) (ZOY) and perpendicular \({\theta }_{X}\) (ZOX) to the array. The coherent combining of a linear array along the Y-axis and corresponding coordinate systems is given in Fig. 1. As a special note, the coordinate system in this work is arranged with respect to the longitudinal (X) and lateral (Y) directions of the aperture, meaning that \({\theta }_{X}\) and \({\theta }_{Y}\) correspond to vertical and horizontal field of view in most cases, which may be counter-intuitive.
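The array factor defined above can be evaluated directly as a weighted sum over the element positions. The following is a minimal sketch, assuming the form \(\text{AF}({\bf{u}})=\sum_{n}{C}_{n}\exp (-j{{\rm{k}}}_{0}{{\bf{r}}}_{n}\cdot {\bf{u}})\); the sign of the exponent is an assumption chosen to agree with the beam-forming condition quoted later in this section. The function name `array_factor`, the 1.55 µm wavelength, and the 8-element half-wavelength array are illustrative choices only.

```python
import numpy as np

def array_factor(positions, weights, u, wavelength):
    """Coherent sum of N isotropic point sources in direction u.

    positions: (N, 3) element coordinates r_n in metres
    weights:   (N,) complex weights C_n = a_n * exp(j * phi_n)
    u:         (3,) direction cosines (u_x, u_y, u_z)
    The minus sign in the exponent is an assumed convention.
    """
    k0 = 2.0 * np.pi / wavelength          # free-space wavenumber
    phase = -k0 * (positions @ u)          # geometric phase of each element
    return np.sum(weights * np.exp(1j * phase))

wavelength = 1.55e-6                        # assumed operating wavelength
N = 8
pitch = 0.5 * wavelength                    # assumed half-wavelength pitch
positions = np.zeros((N, 3))
positions[:, 1] = np.arange(N) * pitch      # linear array along the Y axis
weights = np.ones(N, dtype=complex)         # uniform amplitude, zero phase

broadside = np.array([0.0, 0.0, 1.0])       # direction along +Z
af_broadside = array_factor(positions, weights, broadside, wavelength)
print(abs(af_broadside))                    # all N contributions add in phase
```

With zero element phases, every term has the same phase in the broadside direction, so the magnitude of the sum equals the element count.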

The free-space coherent combining of an OPA can be viewed as a discrete sampling and reconstruction of the wavefront of an artificial incident wave. The integrity of the reconstruction determines the quality of the emitted field pattern, be it a Gaussian beam or a field of arbitrary shape. As a LiDAR scanner, the OPA is responsible for coherent combining with high directional gain and low divergence, i.e., beam-forming, and subsequently addressing different directions with the steerable beam, i.e., beam-steering. This is equivalent to sampling and reconstructing a plane wave in the desired beam direction. In other words, the beam-forming condition in the target direction \({{\bf{u}}}_{i}=({{u}_{x}}^{i},{{u}_{y}}^{i})\) can be derived as,

In Eq. (2), a uniform amplitude \({a}_{0}\) is assumed across the array since it samples a plane wave of uniform amplitude. Nevertheless, since this is a finite sampling of an ideally infinite wavefront, amplitude windows over the array can reduce the Gibbs effect and improve the spatial signal-to-noise ratio of the beam. The beam-forming condition can be derived with Eq. (1), and the beam-forming of a 1-D array is shown in Fig. 2. By reconfiguring the element-wise phase and amplitude, the OPA changes the beam-forming direction, resulting in beam-steering.
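Beam-steering by phase reconfiguration can be illustrated numerically. The sketch below assumes a beam-forming condition of the form \({\varphi }_{n}={{\rm{k}}}_{0}{{\bf{u}}}_{i}\cdot {{\bf{r}}}_{n}\), paired with a negative geometric phase in the array factor; both sign choices are assumptions. The 32-element half-wavelength array, the 1.55 µm wavelength, and the 20° target are hypothetical values.

```python
import numpy as np

wavelength = 1.55e-6                         # assumed operating wavelength
k0 = 2.0 * np.pi / wavelength
N = 32
y = np.arange(N) * 0.5 * wavelength          # element positions along Y

theta_target = np.deg2rad(20.0)              # desired steering angle in the ZOY plane
phi = k0 * y * np.sin(theta_target)          # element phases sampled from the target plane wave

theta = np.deg2rad(np.linspace(-90.0, 90.0, 3601))
u_y = np.sin(theta)                          # direction cosine along Y

# Array factor on the angular grid: rows are directions, columns are elements.
af = (np.exp(1j * phi)[None, :] * np.exp(-1j * k0 * np.outer(u_y, y))).sum(axis=1)
intensity = np.abs(af) ** 2

theta_peak_deg = np.rad2deg(theta[np.argmax(intensity)])
print(theta_peak_deg)                        # location of the main lobe in degrees
```

Changing `theta_target` and recomputing `phi` moves the main lobe without any change to the array geometry, which is the digitally controlled steering described above.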

Beam-forming of a 1-D linear array in the ZOY plane, where an imaginary plane wave in the target direction \({{\rm{u}}}_{{\rm{i}}}=[0,\,\sin \,{{\rm{\theta }}}_{Y}]\) is sampled and reconstructed by array elements organized along the Y axis. By drawing a sinusoidal reference along the wave vector, it is notable that the required element phase for beamforming corresponds to the phase value sampled by the array geometry, as indicated by Eq. (2).

Due to fabrication variance and inter-element coupling, the exact emission profile of individual elements may vary across the array. For arrays with a large element count, the element factor is typically approximated with the average embedded/active element pattern40. Consequently, all elements across the array share an averaged emission profile \({\bar{E}}_{n}({\bf{r}}-{{\bf{r}}}_{n})\) centered at the position of the original point source and weighted with \({C}_{n}\). Based on the Fraunhofer approximation of the Fresnel–Kirchhoff diffraction formula and by omitting a common phase term, this updates Eq. (1) to,

In addition, since \({\bar{E}}_{n}({\bf{r}}-{{\bf{r}}}_{n})={\bar{E}}_{0}({\bf{r}})* \delta ({\bf{r}}-{{\bf{r}}}_{n})\), and by changing the order of summation and integration, which corresponds to letting the fields interfere in the near field first and then analyzing the corresponding far field, this can be written as,

The integration above resembles a 2-D Fourier transform of the convolution term for a planar array located on the XY plane. Moreover, since \({{u}_{x}}^{2}+{{u}_{y}}^{2}+{{u}_{z}}^{2}=1\) while \(z\equiv 0\) for all \({\bf{r}}\) to integrate, we omit the redundant \({u}_{z}\),

Using the convolution property of the Fourier Transform and the sampling property of the delta function, the far-field pattern is proportional to the product of the element far-field pattern and the array factor. The former is also known as the element factor.

Equation (6) permits separate analysis of the element factor and array factor. The former depends on the design of the array element, which requires precise electromagnetic simulation at a nanophotonic scale. The latter depends on array geometry \({{\bf{r}}}_{n}\) and configuration \({C}_{n}\), which can be solved on sparser grids. By separating the design of the element factor and array factor, the computation load is reduced significantly compared to the direct simulation of the entire array.

Periodic properties of the array factor

If we ignore the element factor for the moment and consider the array factor of a 2-D periodic array with \(L\times M=N\) elements organized uniformly at pitches of \({\Lambda }_{X}\) and \({\Lambda }_{Y}\), the array factor can be written as the 2-D discrete Fourier Transform of the complex weights on a 2-D grid,

Note that any grid displacement will transform into a common phase term, which can be omitted for the intensity pattern. Additionally, by assigning zero values outside the array grid, Eq. (7) can be extended to,

where \({f}_{X}={u}_{x}{\Lambda }_{X}/{\lambda }_{0}\), \({f}_{Y}={u}_{y}{\Lambda }_{Y}/{\lambda }_{0}\) are the spatial frequencies of the angular spectrum. Since both \(l\) and \(m\) are integers, this means that no matter what exact array factor Eq. (8) sums up to, the angular spectrum will have a period of 1 in both \({f}_{X}\) and \({f}_{Y}\). In other words, we have,

This means that the array factor is periodic with respect to \({u}_{x}\) and \({u}_{y}\), with angular periods equal to \({\lambda }_{0}/{\Lambda }_{X}\) and \({{\rm{\lambda }}}_{0}/{\Lambda }_{Y}\), respectively. This periodicity can be shown in a grating lobe diagram. As a quick reference, any real direction in free space satisfies \({{u}_{x}}^{2}+{{u}_{y}}^{2}\le 1\), which defines the visible region and is marked with a black circle. As shown in Fig. 3a, since \(-1\le {u}_{x},{u}_{y}\le 1\), avoiding overlap between the neighboring spatial spectra requires \({{\rm{\lambda }}}_{0}/{\Lambda }_{X}\ge 2\) and \({{\rm{\lambda }}}_{0}/{\Lambda }_{Y}\ge 2\), which is the aliasing-free condition for all directions within the visible region.
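The periodicity holds for any set of complex weights on the grid, which can be checked numerically. The sketch below is illustrative only: the grid size, the pitches (expressed in wavelengths), the random weights, and the test direction are all arbitrary assumptions, and the array factor uses the same assumed sign convention as a discrete Fourier transform.

```python
import numpy as np

rng = np.random.default_rng(0)
L, M = 4, 6                                   # elements along X and Y
pitch_x, pitch_y = 0.8, 1.3                   # pitches in units of the wavelength
C = rng.normal(size=(L, M)) + 1j * rng.normal(size=(L, M))   # arbitrary complex weights

def af(u_x, u_y):
    """2-D array factor of the weights C on a uniform rectangular grid."""
    l = np.arange(L)[:, None]
    m = np.arange(M)[None, :]
    return np.sum(C * np.exp(-2j * np.pi * (l * pitch_x * u_x + m * pitch_y * u_y)))

u_x, u_y = 0.12, -0.07
base = af(u_x, u_y)
# Shift by one angular period (lambda / pitch) in both direction cosines.
shifted = af(u_x + 1.0 / pitch_x, u_y + 1.0 / pitch_y)
print(abs(shifted - base))                    # difference is at floating-point level
```

The shifted direction may fall outside the visible region, but the mathematical periodicity is what produces grating lobes once the period becomes smaller than the width of the visible region.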

Grating lobe diagram generated with MATLAB sensor array toolbox: (a) Uniform rectangular array (URA) with \({\Lambda }_{X}={\Lambda }_{Y}=0.5{{\rm{\lambda }}}_{0}\) when beam is steered to [i] the broadside direction, [ii] the end-fire direction along X-axis, and [iii] the end-fire direction of the Y-axis; (b) URA with \({\Lambda }_{X}={\Lambda }_{Y}=0.75{{\boldsymbol{\lambda }}}_{{\boldsymbol{0}}}\) when beam is steered to [i] the broadside direction, and when the grating lobe enters the free-space due to beam-steering in the [ii] X and [iii] Y axis.

As the element pitch increases, the angular period decreases, causing an overlap of the angular spectrum and shrinking the grating-lobe-free area (colored in solid green). In Fig. 3b-ii and Fig. 3b-iii, when the beam is steered to the edge of the grating-lobe-free area (about 20° in \({\theta }_{X}\) or \({\theta }_{Y}\)), the grating lobe enters the visible region, resulting in an aliased pattern. To avoid aliasing between the main-lobe and the grating-lobe, we can further define an aliasing-free range that no grating-lobe will enter while the main-lobe is steered within it. In direction-cosine space, this range equals the angular period. If the main-lobe is steered around the broadside direction, this corresponds to \(2\text{asin}(0.5{\lambda }_{0}/{\Lambda }_{X})\) and \(2\text{asin}(0.5{\lambda }_{0}/{\Lambda }_{Y})\) in the ZOX and ZOY planes, respectively.
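Both angular limits discussed above follow from the angular period \({\lambda }_{0}/\Lambda\) and can be computed in a few lines. In this sketch the function names are hypothetical. The grating-lobe-free limit uses the standard condition that the nearest grating lobe, one period away from the main lobe, stays outside \(|u|\le 1\); the aliasing-free range uses the \(2\text{asin}(0.5{\lambda }_{0}/\Lambda )\) expression from the text.

```python
import numpy as np

def grating_lobe_free_half_angle_deg(pitch_over_lambda):
    """Largest steering angle from broadside with no grating lobe in the visible region."""
    period = 1.0 / pitch_over_lambda          # angular period in direction-cosine space
    if period >= 2.0:
        return 90.0                           # pitch <= lambda/2: never any grating lobe
    if period <= 1.0:
        return 0.0                            # pitch >= lambda: grating lobe already visible
    return float(np.rad2deg(np.arcsin(period - 1.0)))

def aliasing_free_range_deg(pitch_over_lambda):
    """Full steering range around broadside within which the main lobe is unambiguous."""
    s = min(1.0, 0.5 / pitch_over_lambda)
    return float(2.0 * np.rad2deg(np.arcsin(s)))

for p in (0.5, 0.75):
    print(p, grating_lobe_free_half_angle_deg(p), aliasing_free_range_deg(p))
```

For a pitch of \(0.75{\lambda }_{0}\), the first function gives roughly 19.5°, in line with the "about 20°" quoted for Fig. 3b, while the aliasing-free range is considerably wider because a grating lobe is allowed inside the visible region as long as it stays outside the scanned range.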

The term aliasing not only refers to the aliasing between beam patterns but also indicates that for the current beam-forming direction, the array is under-sampled since the spatial frequency (\({u}_{x}\),\({u}_{y}\)) now exceeds the sampling capability of the array. As we will see later, numerous parallels between digital signal processing and antenna array design can be drawn. Finally, though it may appear that Eq. (7) to Eq. (9) are derived based on a uniform rectangular grid, the conclusions can be extended to any array geometry as long as the geometry can be treated as a thinned array on a dense rectangular grid.

We have now covered the key beam-forming and beam-steering behaviors of the phased array. Additionally, since the underlying physics possesses the property of Fourier Transform, an array with a larger coherent aperture will form a tighter beam, similar to the fact that a longer time sequence will lead to a finer frequency resolution. For the exact property of the beam, which is typically referred to as the beam quality, we need to derive the intensity of the array factor,

And under the beam-forming condition given in Eq. (2), \({\varphi }_{n}-{\varphi }_{n\text{'}}={{\rm{k}}}_{0}{{\bf{u}}}_{i}\cdot ({{\bf{r}}}_{n}-{{\bf{r}}}_{n\text{'}})\), the intensity distribution can be simplified as,

where \({\hat{{\bf{u}}}}_{i}={\bf{u}}-{{\bf{u}}}_{i}\), \({\Delta }_{n,n\text{'}}={{\bf{r}}}_{n}-{{\bf{r}}}_{n\text{'}}\), indicating that the array factor is highly geometry-dependent, and by pairing terms according to \(\exp (\mathrm{jx})+\exp (-\mathrm{jx})=2\,\cos \,x\), the final intensity can be expressed as the sum of a group of DC terms and a group of cosine terms.

Here, we emphasize the following insights provided by Eq. (11) and Eq. (12). To begin with, all cosine terms in Eq. (12) reach their peaks at \({\hat{{\bf{u}}}}_{i}={\bf{u}}-{{\bf{u}}}_{i}=0\), and the angular period of the individual term is \({{\rm{\lambda }}}_{0}/{{\boldsymbol{\Delta }}}_{n,n\text{'}}\). On a uniform grid, the maximum periods are \({{\rm{\lambda }}}_{0}/{\Lambda }_{X}\) and \({{\rm{\lambda }}}_{0}/{\Lambda }_{Y}\), which are also the least common multiples of all periods. This again confirms the periodicity mentioned above. For an under-sampled array, optimizing array geometry to disperse this periodicity is a widespread practice. Nevertheless, by writing the cosine terms according to \(\cos (2x)=2{\cos }^{2}(x)-1\), we can identify that no matter how the periodicity is dispersed, the energy outside the main lobe cannot be recollected into the main lobe just by optimizing the array geometry and/or array amplitude distribution.

Equation (12) is used to conduct numerical simulations according to the configurations given in Fig. 3, and the results are shown in Fig. 4. Besides the matched positions of the grating lobes during beam-steering, the beam becomes tighter as the element pitch increases from half a wavelength to three-fourths of a wavelength, as predicted.

Far-field intensity patterns in the visible region of 2-D URAs of uniform amplitude distribution with identical geometries (a, b) and beam-steering configurations [i-iii] as given in Fig. 3.

Gain, divergence and spatial SNR of the array factor

Additionally, we can treat beam-forming in the direction of \({{\bf{u}}}_{i}\) as an invariant coordinate shift of the broadside beam pattern from \({\bf{u}}=({u}_{x},{u}_{y})\) to \({\hat{{\bf{u}}}}_{i}=({u}_{x}-{{u}_{x}}^{i},{u}_{y}-{{u}_{y}}^{i})\). This means that the beam quality of the array factor can be determined solely based on the beam quality in the broadside direction. Additionally, though side-lobes may arise wherever multiple cosine terms are in phase in the visible region, prominent side-lobes typically reside along the axis of \({u}_{x}-{{u}_{x}}^{i}=0\) or \({u}_{y}-{{u}_{y}}^{i}=0\) since this cancels out one of the phase components in the dot product \({\hat{{\bf{u}}}}_{i}\cdot {{\boldsymbol{\Delta }}}_{n,n\text{'}}\), and subsequently facilitates the constructive interference of multiple cosine terms. Fundamentally, the strict orthogonality of the \(({u}_{x},{u}_{y})\) coordinate system guarantees translational invariance and side-lobe concentration. Based on the properties above, we can base the beam quality analysis of a given array design on its broadside pattern dissected along \({u}_{y}=0\) or \({u}_{x}=0\), which coincides with the ZOX or ZOY plane respectively. Also note that within these planes, we have \({u}_{x}=\,\sin \,{\theta }_{X}\) or \({u}_{y}=\,\sin \,{\theta }_{Y}\) according to the relationship in Fig. 1. And, since we are studying the far-field where \({u}_{y}=0\) or \({u}_{x}=0\), the array is also collapsed into a one-dimensional array along the X or Y axis since the spatial translation in Y or X no longer accounts for any phase shift. For example, in the ZOY plane, Eq. (12) becomes,

The maximum is achieved when all cosine terms are in phase, which is,

If all elements share the same amplitude, an \({N}^{2}\) gain is achieved compared to the intensity of a single element. Meanwhile, if the input power is evenly divided among the array elements, the achieved directivity relative to the input optical power is equal to \(N\). Note that this is the highest possible directivity under the above beam-forming condition due to the mathematical property of Eq. (14).
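The two gain figures can be verified with a few lines of arithmetic. The sketch below assumes that, under the beam-forming condition, all element contributions add in phase at the peak, so the peak intensity is the squared sum of the amplitudes. The element count and the random amplitude draw are arbitrary illustrations.

```python
import numpy as np

N = 16
a0 = 1.0

# Case 1: every element carries the same amplitude a0.
peak_uniform = np.sum(np.full(N, a0)) ** 2    # in-phase sum, then squared
gain_vs_single = peak_uniform / a0**2         # compared to one element alone

# Case 2: unit input power split evenly, so each amplitude is 1/sqrt(N).
a_split = np.full(N, 1.0 / np.sqrt(N))
peak_split = np.sum(a_split) ** 2             # peak intensity relative to input power

# Case 3: same total power, but unevenly distributed (random amplitudes).
rng = np.random.default_rng(1)
a_rand = rng.uniform(0.1, 1.0, size=N)
a_rand /= np.sqrt(np.sum(a_rand**2))          # normalise total power to 1
peak_rand = np.sum(a_rand) ** 2

print(gain_vs_single, peak_split, peak_rand)
```

The uneven distribution never exceeds the evenly split case, which follows from the Cauchy-Schwarz inequality \((\sum {a}_{n})^{2}\le N\sum {{a}_{n}}^{2}\) and is consistent with the statement that \(N\) is the highest achievable value.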

We will consider a periodic array here as a special case. As we will review in the next section, an array with dispersed periodicity can be thinned analytically or through optimization from a periodic array. Also, note that when a 2-D periodic array is collapsed into a 1-D array under the beam-forming condition, we can add up the amplitudes of elements with the same location in Y and focus on elements with unique locations. In other words, we assume a uniform linear array (ULA) with \(N\) elements along the Y-axis for later derivation. Additionally, since the grating lobes are \({{\boldsymbol{\lambda }}}_{0}/{\Lambda }_{Y}\) away, side-lobe analysis is limited to the prominent region of the main-lobe, which is within \(0.5{{\boldsymbol{\lambda }}}_{0}/{\Lambda }_{Y}\) on either side of the main-lobe. In theory, all maxima and minima in Eq. (13) can be identified within the prominent region by solving the identities, that is,

In the case of \({{\boldsymbol{k}}}_{{\boldsymbol{0}}}(n-n\text{'}){\Lambda }_{Y}{u}_{y}\equiv 2m{\boldsymbol{\pi }}\) analytical solutions can be given for grating lobes, while \({{\boldsymbol{k}}}_{{\boldsymbol{0}}}(n-n\text{'}){\Lambda }_{Y}{u}_{y}\equiv (2m-1){\boldsymbol{\pi }}\) are the analytical solutions of the angular Nyquist point that defines the edge of the prominent region.

However, analytical solutions for nulls and side-lobes within the prominent region cannot be given since they depend on the amplitude distribution, as shown in Fig. 5. Therefore, we cannot give an analytical solution for the side-lobe suppression ratio (SLSR). But it can be solved numerically either through Eq. (15) or by finding peaks within the intensity pattern simulated based on Eq. (13). For an array of uniform amplitude distribution across the array, i.e., \({a}_{n}\equiv {a}_{0}\), the array factor becomes the classic multi-slit interference pattern since we can reorganize cosine terms according to their periods,

where \({\Psi }_{u}={{\boldsymbol{k}}}_{{\boldsymbol{0}}}{\Lambda }_{Y}{u}_{y}={{\boldsymbol{k}}}_{{\boldsymbol{0}}}{\Lambda }_{Y}\,\sin \,{\theta }_{Y}\). This can be further simplified by pairing terms according to the trigonometric identity \(\cos \,\alpha \cdot \,\cos \,\beta =0.5[\cos (\alpha +\beta )+\,\cos (\alpha -\beta )]\),

Far-field intensity patterns in ZOY plane of 1-D ULAs with (a) \({\Lambda }_{Y}=0.5{{\boldsymbol{\lambda }}}_{{\boldsymbol{0}}}\) and (b) \({\Lambda }_{Y}=0.57{{\boldsymbol{\lambda }}}_{{\boldsymbol{0}}}\); Both [i] graphs are the broadside pattern, while [ii] graphs are steered at \({\theta }_{Y}={30}^{\circ }\); Uniform and random amplitude distribution are assigned to individual elements across the array.

By subtracting Eq. (17) from Eq. (16), the array factor can be given as,

The nulls and side-lobe properties of the multi-slit interference pattern are well documented. The grating lobes are located at \(0.5{{\rm{\Psi }}}_{u}=m\pi\). The nulls within the prominent region of the mth-lobe are located periodically at \(0.5{\Psi }_{u}=(m+m{\text{'}}/N)\pi\), where \(-N/2\le m{\text{'}}\le N/2\). For beam width defined by the nearest neighboring nulls, the angular resolution is \(\Delta {u}_{y}=2{{\boldsymbol{\lambda }}}_{0}/N{\Lambda }_{Y}\). Note that \({u}_{y}=\,\sin \,{\theta }_{Y}\), \(d{u}_{y}=\,\cos \,{\theta }_{Y}d{\theta }_{Y}\). Therefore, for a large array with a tight beam, the null-defined beam width measured in rad is,

There is no analytical solution for the beam width defined by the 3-dB power level, also known as the Full width at Half Maximum (FWHM). However, for uniform arrays with >30 elements, this is typically approximated by,
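A commonly quoted form of this rule of thumb, assumed here rather than taken from the text, is \({\text{FWHM}}\approx 0.886{\lambda }_{0}/(N{\Lambda }_{Y}\cos {\theta }_{Y})\). The sketch below compares it, together with the null-defined width \(2{\lambda }_{0}/(N{\Lambda }_{Y})\), against a direct numerical evaluation of a uniform array at broadside. The element count, the pitch, and the helper `width_in_rad` are illustrative assumptions.

```python
import numpy as np

N = 64
pitch = 0.5                                    # element pitch in wavelengths (assumed)
n = np.arange(N)
u = np.linspace(-0.1, 0.1, 20001)              # direction cosine around the main lobe

af = np.exp(-2j * np.pi * pitch * np.outer(u, n)).sum(axis=1)
intensity = np.abs(af) ** 2 / N**2             # normalised to 1 at the peak

# Only the main lobe exceeds half of the peak for a uniform array.
main = u[intensity >= 0.5]
fwhm_numeric = main.max() - main.min()         # FWHM in direction-cosine units

fwhm_rule = 0.886 / (N * pitch)                # assumed rule-of-thumb coefficient
null_to_null = 2.0 / (N * pitch)               # null-defined width from the text

def width_in_rad(delta_u, theta_deg):
    """Convert a small width in u to radians at steering angle theta (du = cos(theta) dtheta)."""
    return delta_u / np.cos(np.deg2rad(theta_deg))

print(fwhm_numeric, fwhm_rule, null_to_null, width_in_rad(fwhm_rule, 30.0))
```

The conversion helper reflects the \(d{u}_{y}=\cos {\theta }_{Y}d{\theta }_{Y}\) relation, so the beam broadens in angle as it is steered away from broadside even though its width in \({u}_{y}\) is unchanged.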

For the side-lobe suppression ratio, the largest side-lobe near the main-lobe is typically approximated as lying at the midpoint between the first and the second nulls, i.e., at \(0.5{{\Psi }_{u}}^{SL}=1.5{\rm{\pi }}/N\). For a large element count, the side-lobe intensity can then be approximated as

The side-lobe suppression ratio \({R}_{SLSR}^{0}=10{\mathrm{log}}_{10}({I}_{\text{AFM}}/{I}_{\text{AFS}})\) of a ULA with uniform amplitude distribution is about 13 dB, which matches the side-lobe level of the rectangular window known from spectral leakage analysis. To increase the SLSR and thereby improve the spatial SNR, weighting of the amplitude distribution can be performed according to window functions or through more general array synthesis. The former, namely the weighting with a window function, is well documented41 and demonstrated with OPA chips42,43,44,45,46. This section will highlight an example of the Kaiser Window without diving into the classical window design problem. To achieve the desired side-lobe suppression ratio of \({R}_{SLSR}^{K}\), we first need to evaluate its relative gain with respect to the rectangular window,

where \({\text{asinhc}}(x)\) is the inverse function of \({\text{sinhc}}(x)={\rm{s}}{\rm{i}}{\rm{n}}{\rm{h}}({\boldsymbol{\pi }}x)/({\boldsymbol{\pi }}x)\). Then, the array weight can be given as,

Here, we compare the beam-forming and beam-steering results of 1-D arrays weighted by a rectangular window and that by a Kaiser Window in Fig. 6.
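The comparison can be reproduced in outline with NumPy's built-in Kaiser window. The sketch below assumes the usual Kaiser design relation \({\text{sinhc}}(\alpha )={10}^{({R}_{SLSR}^{K}-{R}_{SLSR}^{0})/20}\) with \(\beta ={\rm{\pi }}\alpha\) and \({R}_{SLSR}^{0}\approx 13.26\) dB, which appears to be what the two preceding expressions describe; this mapping, the helper names, the 64-element array, and the half-wavelength pitch are all assumptions.

```python
import numpy as np

def kaiser_beta_for_slsr(target_db, rect_db=13.26):
    """Solve sinh(beta)/beta = 10**((target - rect)/20) by bisection (assumed design rule)."""
    goal = 10.0 ** ((target_db - rect_db) / 20.0)
    lo, hi = 1e-6, 50.0
    for _ in range(80):
        mid = 0.5 * (lo + hi)
        if np.sinh(mid) / mid < goal:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def pattern_db(weights, pitch, u):
    """Normalised array-factor intensity in dB for a ULA with real weights."""
    n = np.arange(len(weights))
    af = np.exp(-2j * np.pi * pitch * np.outer(u, n)) @ weights
    inten = np.abs(af) ** 2
    return 10.0 * np.log10(inten / inten.max() + 1e-30)

def slsr_db(p_db):
    """Walk from the peak (index 0) to the first null, then take the highest side-lobe."""
    i = 0
    while i + 1 < len(p_db) and p_db[i + 1] < p_db[i]:
        i += 1
    return -float(p_db[i:].max())

N, pitch = 64, 0.5
u = np.linspace(0.0, 1.0, 10001)               # one side of the symmetric broadside pattern

rect = pattern_db(np.ones(N), pitch, u)
beta = kaiser_beta_for_slsr(30.0)
kais = pattern_db(np.kaiser(N, beta), pitch, u)

slsr_rect, slsr_kaiser = slsr_db(rect), slsr_db(kais)
hw_rect = u[rect >= -3.0103].max()             # half of the 3-dB width
hw_kaiser = u[kais >= -3.0103].max()
print(beta, slsr_rect, slsr_kaiser, hw_rect, hw_kaiser)
```

The Kaiser-weighted array trades a wider main lobe for lower side-lobes, which is the window trade-off described in this section.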

Far-field intensity patterns in the ZOY plane of 1-D ULAs with the same array geometries (a, b) and beam-steering configurations [i, ii] as Fig. 5. The designed SLSR of the Kaiser Window is 30 dB.

The real weighting based on window functions does not influence the periodicity of the beam-forming and beam-steering. However, since real weighting is equivalent to multiplying the near-field with a taper function, whose Fourier Transform will be convolved with the angular spectrum, it will broaden the angular spectrum, increasing beam-width and reducing the directivity. From another perspective, weighting reduces the effective aperture size of a phased array and decreases the contrast of the interference to compensate for the leakage of angular frequency components.

We have now based the design of array geometry and amplitude distribution on the characteristics of underlying physical and mathematical models. Key aspects determining the periodicity, beam width, and spatial SNR are highlighted with theory and proof of concept. The remaining performance indicator important to our discussion is the beam-forming loss (BFL), typically defined as the power in the main-lobe divided by the total emitted power. In this review, we assume that main-lobe power is integrated over the null-defined angular range of the main-lobe. This is more practical than the power within the FWHM range since, experimentally, main-beam power is usually measured with a photodetector in the main-beam direction, which typically collects more power than the FWHM range contains, especially for large-scale arrays. To the best of our knowledge, there are no analytical solutions to the beam-forming loss due to the complexity of array synthesis and the influence of the element factor, which we will review later.

Nevertheless, a guideline toward a low beam-forming loss can be identified intuitively based on the simulations we have performed. Consider the array factor with a weighted amplitude distribution, where the energy is concentrated within the main-lobe and grating lobe. We can approximate the beam-forming loss of the array factor with the number of grating lobes within the visible region. Since the angular period is \({{\boldsymbol{\lambda }}}_{0}/{\Lambda }_{Y}\), the maximum number of grating lobes within the visible region is \(2{\Lambda }_{Y}/{{\boldsymbol{\lambda }}}_{0}\), while the power concentration is proportional to \(0.5{{\rm{\lambda }}}_{0}/{\Lambda }_{Y}\). Although this may seem coarse, in practice, OPAs will steer close to the edge of the aliasing-free region where the neighboring grating lobe will resemble the main-lobe even considering the nonlinear mapping between directional cosine and degree together with the modulation of the element factor. Therefore, a main-lobe power concentration proportional to \(0.5{{\rm{\lambda }}}_{0}/{\Lambda }_{Y}\) could be seen as the worst-case scenario or the array-factor-limited loss penalty.
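The worst-case estimate above reduces to simple arithmetic. In this sketch, the function names are hypothetical, the proportionality is treated as an equality for illustration, and the fraction is clamped to 1 for pitches at or below half a wavelength, where no grating lobe exists; the clamp is an assumption, not a statement from the text.

```python
import math

def max_grating_lobes(pitch_over_lambda):
    """Upper bound on lobes sharing the visible region, 2 * pitch / lambda."""
    return 2.0 * pitch_over_lambda

def main_lobe_power_fraction(pitch_over_lambda):
    """Array-factor-limited power concentration, taken as 0.5 * lambda / pitch."""
    return min(1.0, 0.5 / pitch_over_lambda)

def array_factor_limited_loss_db(pitch_over_lambda):
    """The same fraction expressed as a loss penalty in dB."""
    return -10.0 * math.log10(main_lobe_power_fraction(pitch_over_lambda))

for p in (0.5, 1.0, 2.0):
    print(p, max_grating_lobes(p), main_lobe_power_fraction(p),
          round(array_factor_limited_loss_db(p), 2))
```

Under these assumptions, a pitch of two wavelengths gives four lobes sharing the power and a penalty of about 6 dB before any element-factor filtering is considered.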

Filtering properties of the element factor

Based on Eq. (6), the far-field intensity distribution is now the product of the array factor and the element factor. Although it is popular to consider a rectangular slit which will lead to the classical scenario of multi-slit diffraction, a more valid element factor is the Gaussian beam, which is a common free-space propagation mode of the laser beam. On the waist of the beam or the imaginary beam waist where we approximate the element, its general field distribution is,

where \({w}_{x}\) and \({w}_{y}\) denote the beam waist widths. Its element factor, obtained through the Fourier Transform, is still a Gaussian distribution,

Multiplying Eq. (25) with Eq. (12) modulates the far-field intensity distribution similarly to applying an angular filter, which suppresses aliasing and reduces beam-forming loss. We demonstrate this effect with a 2-D URA at \({\Lambda }_{X,Y}=2{{\rm{\lambda }}}_{0}\), which has multiple grating-lobes within the visible region. As the divergence of the Gaussian beam decreases, which is equivalent to a gradual increase in the beam waist radius, we observe an improved beam quality in terms of grating-lobe suppression and directivity in Fig. 7. From an element design perspective, by increasing the directivity of the element emitter, we can trade available field-of-view for beam-forming loss and main-beam directivity.
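The filtering effect can be sketched in one dimension. The example below assumes a Gaussian element field \(\exp (-{y}^{2}/{{w}_{y}}^{2})\), whose far-field intensity is proportional to \(\exp (-{({{\rm{k}}}_{0}{w}_{y}{u}_{y})}^{2}/2)\); the normalisation, the 16-element array, and the three waist values are illustrative assumptions rather than the configuration of Fig. 7.

```python
import numpy as np

N = 16
pitch = 2.0                                    # element pitch in wavelengths
u = np.linspace(-1.0, 1.0, 2001)               # direction cosine across the visible region
n = np.arange(N)

# Array factor of a uniform broadside ULA: grating lobes at u = +/-0.5 and +/-1.
af_intensity = np.abs(np.exp(-2j * np.pi * pitch * np.outer(u, n)).sum(axis=1)) ** 2

def gaussian_element_intensity(u, waist):
    """Far-field intensity of exp(-y^2 / w^2), normalised to 1 at broadside (waist in wavelengths)."""
    return np.exp(-0.5 * (2.0 * np.pi * waist * u) ** 2)

i_main = np.argmin(np.abs(u))                  # main lobe at u = 0
i_gl = np.argmin(np.abs(u - 0.5))              # nearest grating lobe at u = 0.5

levels = []
for waist in (0.3, 0.6, 0.9):
    total = gaussian_element_intensity(u, waist) * af_intensity   # pattern multiplication
    levels.append(total[i_gl] / total[i_main])

print(levels)                                  # grating-lobe level relative to the main lobe
```

As the waist grows, the element pattern narrows and the grating lobe is attenuated more strongly, at the cost of a smaller usable field of view.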

To provide a more quantitative relationship between the filtering and the beam-forming loss, we have established a numerical model of a 1-D ULA along the X-axis composed of silicon waveguide facets as the emitting elements. The element factor is simulated with the Finite Difference Eigenmode (FDE) method and tuned by changing the width of the waveguide \({w}_{Si}\), which is similar to changing the waist radius of a Gaussian beam. Inspired by the thinned array curse47, we choose the fill ratio of the array, defined as \((N{w}_{Si})/((N-1){\Lambda }_{X}+{w}_{Si})\), as the design parameter responsible for the beam-forming loss. For 2D implementations, this can be extended to the sum of the field area of each element defined by its \(1/e\) dimensions divided by the total area of the aperture. Ideally, when the fill ratio reaches 100%, the array merges into a single emitter with no beam-forming loss originating from the interference of multiple sources. As shown in Fig. 8, the simulation results support both claims: the power concentration is inversely correlated with the array pitch, and it is positively correlated with the fill ratio of the array. As a rule of thumb, the beam-forming loss is smaller with a denser array. This is the crucial reason for adopting a high-index-contrast platform such as silicon-on-insulator (SOI).
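The fill ratio defined above is straightforward to evaluate. The sketch below uses hypothetical dimensions (a 0.45 µm waveguide width and pitches between 0.45 µm and 2 µm) purely for illustration; they are not values from the simulations in Fig. 8.

```python
def fill_ratio(n_elements, element_width, pitch):
    """Fill ratio N*w / ((N-1)*pitch + w) of a 1-D ULA; width and pitch in the same unit."""
    aperture = (n_elements - 1) * pitch + element_width   # total aperture length
    return n_elements * element_width / aperture

n_elements = 64
width_um = 0.45                                # assumed waveguide width in micrometres
for pitch_um in (0.45, 0.775, 1.0, 2.0):
    print(pitch_um, round(fill_ratio(n_elements, width_um, pitch_um), 3))
```

When the pitch equals the element width, the ratio reaches exactly 1, which corresponds to the limiting case where the array merges into a single emitter.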

a, b Near and far-field intensity distributions of a 220-nm thick silicon waveguide, which is adopted as the element factor; (c) Power concentration in the main-lobe obtained through numerical integration of the far-field pattern similar to those simulated in Fig. 6.

LiDAR specifications and OPA requirements

As with consumer electronics, LiDAR specifications14 are updated yearly. The current consensus for automotive LiDAR, according to publications48,49,50, is summarized in Tables 1 and 2. It is assumed that the long-range LiDAR will be responsible for autonomous driving in the highway scenario with a speed up to 130 km/h. The corresponding stopping distance at the maximum speed can reach 170 m, considering the decision time and other necessary redundancy. Therefore, the maximum range to detect an object must exceed 170 m. Meanwhile, since autonomous driving vehicles negotiate through complex environments of different objects, they need to identify and classify detected objects accordingly. Data from multiple sensors are fused together at the pixel or feature level to achieve that. Due to the proximity of the operating wavelengths of LiDAR and the camera, it is preferable to match their resolution so that each image pixel can be assigned a depth measurement, subsequently realizing direct 3D reconstruction of the object whose surface feature and attitude can be discerned based on pixel-wise depth difference. That being said, except for AT51251 released by HESAI in early 2024 and prototypes demonstrated by academic institutions, few LiDARs have a resolution beyond 1 Mpixel, while most of them52 exhibit a point rate of ~Mpps at a framerate of 20 fps. Therefore, a nominally centimeter-scale spatial resolution is usually cited, translating to the ranging precisions and angular resolutions in Tables 1 and 2. Though some may claim that an angular resolution of 0.1° can discern a child (about 2 pixels at a 50-cm spot size) at 300 m, many more pixels would be necessary for practical perception according to vision-based models53, which translates to much finer angular resolutions54.

Though the highway scenario itself is relatively limited in field-of-view, long-range LiDAR is also the front-facing LiDAR, meaning it needs to cover road intersections where a large horizontal field of view is necessary to monitor traffic in the intersecting lanes. To that end, a FOV of at least 120° by 25° is needed. Finally, since multiple frames are required for a decision, a faster framerate saves decision time, consequently providing redundancy for the autonomous driving system to optimize strategy or respond to emergencies. Meanwhile, the framerate should approximate or match the framerate of the camera.

The short-range LiDAR will monitor the blind spots around the vehicle in an urban scenario where the speed is <60 km/h. The corresponding stopping distance is estimated to be 36 m with redundancy. Since these LiDARs are installed to cover the blind spot, multiple devices are required around the vehicle. Since automotive OEMs are sensitive to the cost and footprint of sensors, short-range LiDARs must reach even lower price, size, and power consumption to justify installing four or more of them on relatively exposed positions around the vehicle. Judging from the datasheet of competitive commercial products and market reports, the power consumption of main/long-range LiDAR has been reduced to 10 ~ 30 Watts. The price point is around 500 ~ 1000 USD. For the blind-spot-detection/ short-range LiDAR, the power consumption and the price are expected to be half to one-third of the main LiDAR. Except for shorter ranges and relatively coarser angular resolution, blind-spot-detection LiDARs require a larger vertical field of view to capture objects in the vehicle’s vicinity fully. It is worth noting that blind-spot-detection LiDARs have been proposed recently to handle corner cases such as cross-traffic alerts or door-open warnings. Their adoption and competitiveness are yet to be proven.

Apart from the ranging precision, the listed specifications in Tables 1 and 2 are highly dependent on the beam scanner. A coarse mapping can be established as follows:

1. The angular resolution is dominated by the beam width of the array, which is inversely proportional to the size of the emitting aperture. The coherent aperture size should be larger than \(1015{{\boldsymbol{\lambda }}}_{0}\) in both dimensions for the main LiDAR and \(203{{\boldsymbol{\lambda }}}_{0}\) for the blind-spot LiDAR.

2. Aliasing ultimately limits the field of view, which is determined by the element pitch or the integration density of photonic emitters. If we approximate this range with the aliasing-free range around the broadside direction, the given FOVs translate to element pitches of \(0.58{{\boldsymbol{\lambda }}}_{0}\) and \(0.87{{\boldsymbol{\lambda }}}_{0}\) for 120° and 70°, respectively.

3. The point-rate is determined by the speed of on-chip phase control and the corresponding drivers. To achieve a 1 Mpps point rate, all channels must be reconfigured within 1 μs, and the phase control mechanism should be able to relay this reconfiguration to the optical field within the same 1 μs.

4. The range is dependent on the loss of the entire device, including but not limited to the previously introduced beam-forming loss, the coupling loss from the laser to the chip, the loss of passive and active photonic integrated components, and the off-chip coupling loss at the emitting aperture.

For the typical near-infrared operating wavelength, combining requirements 1 & 2 will lead to a millimeter-scale aperture with seemingly millions of elements for a 2-D OPA. Nevertheless, as discussed in the next section, this challenge was resolved through architectural innovations.
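The coarse mapping above can be checked numerically. The sketch below is a minimal illustration, assuming a uniformly illuminated aperture whose full-width-at-half-maximum beam width is about \(0.886{\lambda }_{0}/L\), angular resolutions of 0.05° and 0.25° for the main and blind-spot LiDARs, and a 1.55 μm operating wavelength. These three values are assumptions chosen because they reproduce the figures quoted in the list; they are not taken from Tables 1 and 2. The function names are hypothetical.

```python
import math

def aperture_in_wavelengths(resolution_deg, k=0.886):
    """Aperture length (in units of lambda_0) whose FWHM beam width equals
    the target resolution, using FWHM ~ k * lambda_0 / L (assumed model)."""
    return k / math.radians(resolution_deg)

def alias_free_pitch_in_wavelengths(fov_deg):
    """Largest uniform pitch (in units of lambda_0) that keeps grating lobes
    outside +/- fov/2 while steering within it: d = lambda_0 / (2 sin(fov/2))."""
    return 1.0 / (2.0 * math.sin(math.radians(fov_deg / 2.0)))

def reconfiguration_time_us(point_rate_pps):
    """Time budget per point in microseconds."""
    return 1e6 / point_rate_pps

# Assumed angular resolutions: 0.05 deg (main) and 0.25 deg (blind-spot).
main_aperture = aperture_in_wavelengths(0.05)
blind_aperture = aperture_in_wavelengths(0.25)
main_pitch = alias_free_pitch_in_wavelengths(120.0)
blind_pitch = alias_free_pitch_in_wavelengths(70.0)

# Element count of a fully populated 2-D array for the main LiDAR.
elements_per_dim = main_aperture / main_pitch
total_elements = elements_per_dim ** 2

# Physical aperture at an assumed 1.55 um wavelength.
aperture_mm = main_aperture * 1.55 / 1000.0

print(f"main aperture  : {main_aperture:.0f} lambda_0 ({aperture_mm:.2f} mm)")
print(f"blind aperture : {blind_aperture:.0f} lambda_0")
print(f"pitches        : {main_pitch:.2f} / {blind_pitch:.2f} lambda_0")
print(f"2-D elements   : {total_elements:.2e}")
print(f"time per point : {reconfiguration_time_us(1e6):.1f} us")
```

Under these assumptions the fully populated main-LiDAR array lands at a few million elements over a millimeter-scale aperture, which is the scale the architectural innovations discussed next are meant to avoid.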

For the third requirement, since PICs are initially developed for optical communication applications where ~GHz modulation speed is widely available on main-stream platforms, the modulation mechanisms can satisfy the performance requirement in most cases. The side effects are an increased and phase-dependent modulation loss and, again, the need to drive a large number of phase and amplitude modulators fully.

Finally, we emphasize the loss of the device for two key reasons. To begin with, since the collected optical power is inversely proportional to \({R}^{2}\), a 3-dB device loss would translate to a ~30% decrease in the maximum detectable range. In the case of a solid-state LiDAR using OPAs as both transmitter (TX) and receiver (RX), the device loss will be deducted twice from the power budget. Therefore, a 3-dB device loss will directly halve the measurable range. Secondly, for coherent LiDARs that count photons over a given duration, we have to compensate for the increased loss with longer integration time to reach the same signal-to-noise ratio, which would further decrease the point rate of the ranging scheme. Though these margins are not outstanding academically, they will become increasingly crucial for the final product as the automotive LiDAR market matures. That being said, it is worth noting that the LiDAR market as a whole has many niche applications, each with different specifications since they compete with other alternatives. As a rule of thumb, automotive LiDARs can be adapted for drones, robotics, intelligent logistics, and astronautics, where awareness is prioritized over precision. In contrast, sub-mm ranging precision at a relatively lower point rate is generally considered acceptable for surveying or inspection.
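The range penalty quoted above follows directly from the \(1/{R}^{2}\) scaling. The following is a small worked sketch, assuming only that the received power scales as \(1/{R}^{2}\) and that the detection threshold is fixed; the helper name `range_factor` is hypothetical.

```python
def range_factor(device_loss_db, passes=1):
    """Relative maximum range after a device loss, assuming the collected
    power scales as 1/R^2 and the detection threshold is unchanged.

    passes=1: the OPA is used on the transmitter only.
    passes=2: the same loss is paid on both transmitter and receiver.
    """
    total_loss_db = device_loss_db * passes
    power_factor = 10 ** (-total_loss_db / 10)
    return power_factor ** 0.5

one_way = range_factor(3.0, passes=1)
two_way = range_factor(3.0, passes=2)

print(f"TX only  : range x {one_way:.3f} ({(1 - one_way) * 100:.0f}% shorter)")
print(f"TX and RX: range x {two_way:.3f} ({(1 - two_way) * 100:.0f}% shorter)")
```

A 3-dB loss paid once removes roughly 30% of the range, and the same loss paid on both paths removes roughly half of it.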

Challenges and breakthroughs

Motivated by the key merits and potentials mentioned in “Introduction”, vigorous investigations have been conducted to demonstrate and optimize photonic-integrated optical phased arrays that meet the above requirements. Several dedicated reviews have been published concerning the implementation and general application55, the architecture56, the building blocks57, the material platforms58, the improvements at the component and the device level59,60, and recent developments61 of the optical phased arrays. More general publications have approached the optical phased array as a nanophotonic beam scanner62,63 and an enabling component for LiDAR15,16. In addition, the feasibility and capability of an OPA-based free-space communication transceiver64 or a high-energy beam combiner65 have been surveyed for the corresponding applications. Readers are encouraged to reference these publications for a detailed view of the specific topic.

Briefly speaking, to build an integrated optical phased array, the laser will be split into multiple channels, each capable of at least channel-wise phase modulation, ideally with amplitude modulation and/or gain. The channels will then feed the modulated light into the array of coherent emitters discussed in “Operating principle and performance indicators”. The former is generalized as the feeding network, while the latter is the emitting aperture. For an ideal chip implementation of a solid-state LiDAR, photonic-integrated components of both categories are expected to be integrated with one or multiple layers of photonic integrated circuits through a self-contained semiconductor process flow, eliminating or minimizing the requirement for optical alignment of multiple discrete optics while improving the robustness of the LiDAR system.

However, to feed and form a millimeter-scale aperture at an affordable insertion loss, researchers are obliged to adopt low-loss dielectric platforms instead of plasmonic ones. This, in turn, means that we are limited to component size and spacing bounded by the diffraction-limit of the guided mode, which is at wavelength level. In other words, the on-chip laser source or the input spot size converter, the power distribution network, the phase shifters, the amplitude modulators, the emitter array, and the necessary routing waveguides between them will occupy a specific area for themselves and should be kept at a low-crosstalk spacing from each other. As we have evaluated in the last section, an increased element pitch results in an increased beam-forming loss and can cause aliasing for uniform arrays and subsequently limit field-of-view. Meanwhile, if a denser aperture is achieved, we must strike a delicate balance between complexity, speed, scale, and loss within the feed network containing at least thousands or tens of thousands of channels. In this section, we categorize key endeavors into four aspects: the reduction of control complexity, the improvement of the power budget, the elimination of aliasing, and the suppression of crosstalk. Due to the intertwining nature of the performance indicators, we arrange these overlapping topics in this order to clarify the necessary concept first and dive down into the component-level innovations afterward.

Reduction of control complexity

There are two approaches to reduce the control complexity. The first is optically or electronically removing redundancy from the array without undermining the beam-forming condition. The second is to delegate the control to electronic ICs (EICs) tightly integrated with the PIC and, ideally, multiplex the controllers for larger elements.

Redundancy removal

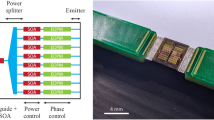

The former has been validated in the preliminary development, with the first demo66 introducing both wavelength-assisted beam-steering in the wavelength tuning axis (typically denoted as \(\theta\), which corresponds to \({\theta }_{X}\) in section 2) and thermo-optic phase shifters driven via a single control pad, reducing the control complexity from \(o({n}^{2})\) to 2, where \(n\) denotes the required number of elements in each dimension. Such drastic reduction is feasible because, for any uniform array with no phase error, the phase values assigned to the array elements are sampled from a tilted phase-front, as shown in Eq. (2). This means the phase difference between any two elements in the array maintains a linear relationship with their separation. Ideally, we can synthesize such a phase-front with only two variables: the operating wavelength of the grating and the inter-channel phase difference. This work laid the foundation for the de-facto standard architecture for most OPA demos as shown in Fig. 9, which we named the 1-D array of wavelength-assisted gratings (AWAG). This is the default architecture of all reviewed devices without further specification. To adapt this architecture for larger arrays where phase errors may arise due to fabrication variations, the complexity is relaxed to \(o(n)\), where each channel has separate phase control for phase error compensation.
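To make the tilted phase-front concrete, the sketch below generates the wrapped phases of a uniform 1-D array from a single inter-channel phase difference and checks where the resulting array factor peaks. It is an illustration under stated assumptions: ideal isotropic emitters, no phase error, a half-wavelength pitch at 1.55 μm, and a sign convention chosen here for convenience rather than taken from Eq. (2). All function names are hypothetical.

```python
import numpy as np

def linear_phases(n_elements, pitch_um, wavelength_um, steer_deg):
    """Wrapped per-element phases sampled from a tilted phase-front.

    The whole array is described by one number, the inter-channel step.
    """
    k0 = 2 * np.pi / wavelength_um
    step = -k0 * pitch_um * np.sin(np.radians(steer_deg))
    phases = np.mod(np.arange(n_elements) * step, 2 * np.pi)
    return phases, step

def array_factor_peak_deg(phases, pitch_um, wavelength_um):
    """Angle (deg) at which the far-field array factor is largest."""
    theta = np.radians(np.linspace(-90.0, 90.0, 18001))
    k0 = 2 * np.pi / wavelength_um
    n = np.arange(len(phases))
    # Coherent sum of all element contributions for every observation angle.
    field = np.exp(1j * (k0 * pitch_um * np.outer(np.sin(theta), n) + phases))
    magnitude = np.abs(field.sum(axis=1))
    return float(np.degrees(theta[np.argmax(magnitude)]))

# Assumed example: 64 channels at half-wavelength pitch, steered to 20 deg.
phases, step = linear_phases(64, 0.775, 1.55, 20.0)
peak_deg = array_factor_peak_deg(phases, 0.775, 1.55)
print(f"inter-channel step: {step:.3f} rad, beam peak at {peak_deg:.2f} deg")
```

Changing `steer_deg` changes only `step`, which is why a single electrical control can in principle steer this axis while the wavelength steers the other.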

Over the years, the electronic approach was revisited with phase shifters of linear length difference67, or by cascading phase shifters within the power splitting network68,69,70,71,72 as illustrated in Fig. 10. To address the issue of inter-element phase error, the phase shifters are grouped into three or more subgroups, where it is assumed that phase coherence can be maintained locally. It is worth noting that the demonstrated chips in Table 3 are relatively small, while the achieved beam qualities are relatively modest compared to the implementation with \(o(n)\) complexity. Still, given that the robustness of the integrated platform is improving and extending the phase coherence length of the PIC, an OPA beam-scanner driven by only the operating wavelength and a limited number of electric signals is technically feasible.

Additionally, some efforts adopted an entirely passive array with two-dimensional beam-steering enabled by dispersion only. This review refers to this architecture as the 2-D dispersive arrays, with early demonstrations dating back to refs. 73,74. As shown in Fig. 11, the inter-channel phase-shift is introduced with delay lines of linear optical path differences. This results in a control complexity of 1.
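A rough feel for how the wavelength alone steers such an array can be obtained from the delay-line phase. The sketch below is a first-order estimate, not a model of any demonstrated device: the 100 μm length increment and the group index of 4.2 are hypothetical values, and the function names are illustrative.

```python
import math

def inter_channel_phase(wavelength_nm, effective_index, delta_length_um):
    """Phase difference (rad) between adjacent channels whose delay lines
    differ in length by delta_length_um."""
    return 2 * math.pi * effective_index * delta_length_um * 1e3 / wavelength_nm

def steering_period_nm(wavelength_nm, group_index, delta_length_um):
    """Approximate wavelength change that advances the inter-channel phase
    by 2*pi, i.e. sweeps the beam across one full angular period."""
    return wavelength_nm ** 2 / (group_index * delta_length_um * 1e3)

# Hypothetical delay-line design at 1550 nm.
period_nm = steering_period_nm(1550.0, 4.2, 100.0)
print(f"one angular period per {period_nm:.2f} nm of tuning")
```

A longer length increment shortens the tuning range needed per scan line, at the cost of a longer path over which phase errors can accumulate.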

To combat the phase error accumulation within the chip, recent demonstrations in Fig. 12 resort to widened waveguides75,76 or an implementation on the silicon nitride (Si3N4) platform77 to improve the coherence length of the delay lines. Robustness has also been improved by adopting metamaterial structures78, and a switch-multiplexed multi-line aperture has been demonstrated79. The demonstrated devices are listed in Table 4.

Recent demonstrations of 2-D dispersive arrays: (a) a 128-channel OPA based on the silicon nitride platform77; (b) a 128-channel OPA on the SOI platform with widened multi-mode waveguide76; (c) a 112-channel OPA based on metamaterials/sub-wavelength structures78; (d) a switchable 8-by-39 channel OPA based on the silicon nitride platform79.

As a closing remark on 2-D dispersive arrays, though a passive device generally enjoys lower loss and processing complexity, generating, amplifying, modulating, and demodulating over a large tunable bandwidth with compact solutions is challenging. Dispersion engineering similar to a slow-light grating80 may be preferable to reduce the optical bandwidth.

Integration of electronic ICs

The underlying assumption of the redundancy-removal approach is that linear phase difference is sufficient for beam-forming and beam-steering. This limits array performance due to the accumulation of phase noise and prohibits their adoption in aperiodic arrays or arbitrary wavefront generation.

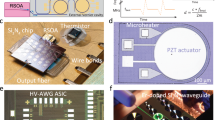

Tightly integrated electronic drivers connecting all channels are more desirable to avoid such a tradeoff while reducing the number of electronic interfaces required. To the best of our knowledge, the first OPA demonstration with electronic ICs was a monolithic implementation published in 201533. In 2018, the scale was extended to 1024 channels23 with cascaded driving techniques similar to ref. 68. Nevertheless, a monolithic solution constitutes a hidden tradeoff between the optimization and performance of the EIC and the PIC. Therefore, heterogeneous solutions with Through-Oxide Via (TOV)34,35 or copper bumps81,82 are being investigated for higher readiness. Another takeaway from these demonstrations is that the blocks of digital-to-analog converters will account for significant power consumption in the final implementation, and a μs-level refresh time across all channels remains hard to reduce82,83, even with multiple DAC blocks driving in parallel. Therefore, a tradeoff between redundancy removal and the integration of electronic ICs may be necessary, striking a balance between the granularity and the complexity of element control. In Fig. 13, we highlight examples of monolithic, 3D-integrated, and Cu-bump solutions.

As listed in Table 5, the tight integration of EICs with PICs can address tens of thousands of channels and operate the device at a power consumption of tens of watts with a point rate of up to 300 kpps. This is currently sufficient for OPAs belonging to the AWAG architecture. However, multi-beam operation is necessary to satisfy the ~ Mpps requirements of LiDAR applications, which can increase the number of drivers required.

Improvement of power budget

As discussed in “Operating principle and performance indicators”, the total loss consists of the coupling loss into and from the chip, the insertion-loss within the chip, and the beam-forming loss in the free-space. Though incremental efforts have been made to reduce the insertion loss of the photonic components and the beam-forming loss in the free space, researchers have also been merging the merits of multiple integration platforms to fundamentally alleviate the detrimental effect of optical loss. Since we will discuss the former part in later topics, we will introduce developments on the III/V-on-SOI platform and the multi-layered SiN-on-SOI platform concerning their potential to improve the power budget in 3.2.

III/V-on-SOI Platform

Due to the indirect bandgap of the crystalline silicon, it is challenging to obtain optical gain within the indigenous SOI platform. However, with on-chip amplifiers and lasers, we can eliminate the loss at the input spot-size converter, compensate for the loss of photonic components, and get around the nonlinearity-limited power bottleneck at the bus waveguide with channel-wise amplification and coherent combining. The main-stream methods for on-chip amplification and lasing can be divided into four categories84, namely the heterogeneous integration of III/V materials, the doping or straining of Ge/GeSn, the doping of rare-earth elements, and the nonlinearity-induced stimulated Raman scattering. Among these methods, the heterogeneous integration of III/V materials, though not CMOS-compatible, can be electrically pumped and flexibly and reliably deployed within the feeding network. As shown in Fig. 14, the active and passive properties of the III/V materials can be largely preserved with processing techniques such as transfer-printing85 or wafer bonding86. The light is evanescently coupled between the III/V waveguides and the silicon waveguides, enabling components including the external cavity laser, the semiconductor optical amplifiers, and the III/V-based phase shifters.

As an amplifier, a channel-wise gain of at least 10 dB is achievable in the C-band, while more than 30 dB can be provided in the O-band. Meanwhile, tunable lasers with sub-kHz linewidth are capable of output power of 10 ~ 40 mW. A general limitation is the ~10% wall-plug efficiency and the subsequent issues with thermal degradation, especially for arrayed applications. Recently, thermal degradation has been investigated on the indigenous III/V integration platform87, where an 8-channel OPA exhibits a maximum emitted power of 15.5 dBm (35.5 mW). The maximum power is achieved with an on-chip input power of 8.5 dBm, indicating a device gain of 7 dB, which is smaller than the maximum gain of 21.5 dB achieved at -20 dBm input, with at least 3.5 dB degradation attributed to thermal crosstalk. Thermal issues will be exacerbated for heterogeneous integration since thermal dissipation and circulation can stress bonding and affect evanescent coupling between III/V and silicon waveguides. Nevertheless, the output power reaches 35.5 mW with only 8 channels/amplifiers, which can be adequately spread out and isolated with air trenches given a larger design area. Therefore, this work demonstrated the immense potential for coherent beam combining through waveguide-integrated OPAs, given sufficient thermal management and adaptive phase compensation. In case high-power laser applications65 require channel-wise amplification and consequently result in demanding thermal dissipation requirements, advanced cooling techniques88,89 ranging from water-cooled chip-scale cold plates90,91 to microchannels/microfluidics92,93 can be incorporated for heat flux up to hundreds or thousands of W/cm2 to prevent thermal degradation or failure. Meanwhile, active phase alignment techniques similar to those used for coherent beam combining are necessary to compensate for the on-chip thermal gradient.
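The power figures quoted for the 8-channel device87 can be cross-checked with a few lines of decibel arithmetic. The snippet below only restates numbers from the paragraph above; the helper names are hypothetical.

```python
import math

def dbm_to_mw(power_dbm):
    """Convert a power level from dBm to milliwatts."""
    return 10 ** (power_dbm / 10)

def mw_to_dbm(power_mw):
    """Convert a power level from milliwatts to dBm."""
    return 10 * math.log10(power_mw)

emitted_dbm = 15.5       # maximum emitted power
input_dbm = 8.5          # on-chip input power at that operating point
small_signal_gain = 21.5 # maximum gain, measured at -20 dBm input

emitted_mw = dbm_to_mw(emitted_dbm)
device_gain_db = emitted_dbm - input_dbm
gain_drop_db = small_signal_gain - device_gain_db

print(f"emitted power: {emitted_mw:.1f} mW")
print(f"device gain at maximum output: {device_gain_db:.1f} dB")
print(f"gain drop relative to small-signal: {gain_drop_db:.1f} dB")
```

The gain at maximum output sits 14.5 dB below the small-signal value; per the source, at least 3.5 dB of that gap is attributed to thermal crosstalk.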

For reasons we will elaborate in “Discussions and prospects”, heterogeneous integration is preferable to indigenous/single-material integration. OPA chips based on the III/V-on-SOI platform were pioneered by UCSB in 201229, with scaling and improvements published in 201530 and 201927. Recently, Samsung has also published heterogeneously integrated OPAs and LiDAR prototypes based on a similar wafer-bonding process32,94. In the latter case, the output power in the main-beam is sufficient for pulsed/time-of-flight ranging with an avalanche photodetector as receiver over a range of 20 meters. Corresponding developments are sorted in Fig. 15 and Table 6. For detailed performance and yield of heterogeneously integrated photonic components, we suggest the reader refer to the following publications95,96,97,98,99.

Finally, a special note is highlighted for III/V-based electro-optical phase shifters, which exhibit ~0.15 dB modulation-dependent loss and 0.3 ~ 0.6 dB passive loss27. They are more desirable compared to indigenous silicon EO phase shifters100 based on free carrier dispersion, which introduces phase-dependent power variations up to 2.4 dB for the carrier-depletion/p-n type101, and 3 ~ 6 dB for the carrier-injection/p-i-n type102,103.

In summary, the III/V-on-SOI platform offers closely integrated optical amplification and high-speed electro-optical phase modulation with a smaller average insertion loss. Both developments can help to improve power efficiency.

Multi-layered SiN-on-SOI platform

As previously mentioned, due to the low wall-plug efficiency, thermal degradation will hinder the device’s performance, especially when amplifiers are used as distributed gain within the feeding network. Additionally, though it is preferable to use high-index-contrast materials such as silicon for the emitting aperture to increase the density of the array, silicon is also sensitive to thermal crosstalk, which can undermine the beam-forming condition if no attention is paid to thermal management. If we intend to concentrate the optical gain, preferably away from the OPA chip, to ensure higher yield and performance for both components, we must first address the nonlinearity-limited power throughput issue at the bus waveguide. Due to the strong mode confinement of high-index-contrast waveguides, nonlinear losses such as two-photon absorption (TPA) and consequential free-carrier absorption (FCA) dictate that a silicon bus waveguide with a cross-section for fundamental mode transmission can handle power throughput up to 18 ~ 20 dBm104,105, with a damage threshold around 2.15 W106. Though this can be partially alleviated by widening the waveguide or removing the free-carriers with reverse-biased p-i-n-junctions, implementing these measures along the power splitting network at a system scale complicates the design flow and the driving circuits. With the maturing of the multi-layered silicon-nitride-on-silicon platform, we can eliminate the power bottleneck thanks to the negligible nonlinear loss of the silicon-nitride waveguide107,108. Additionally, since silicon-nitride is CMOS-compatible, this approach enables complementary integration of silicon and silicon nitride without back-end processes.

As shown in Fig. 16, typical implementations involve power splitting with the silicon nitride waveguide and power splitters, modulation with the silicon-based thermo-optic or electro-optic phase shifters, and emission with either Silicon, silicon nitride, or hybrid grating antennas. Interlayer coupling is realized by evanescent couplers with high yield and tolerance109,110. It is measured that the silicon nitride waveguide with a cross-section for fundamental mode transmission can handle a continuous-wave (CW) power throughput up to ~watt-level26,111 with a damage threshold of up to 35 W106. Recently, a 3D integrated OPA was also demonstrated on the multi-layered Si3N4 platform, benchmarking the flexibility of Si3N4 as a waveguide material112. Furthermore, silicon nitride photonic components are less sensitive to fabrication deviation or thermal crosstalk thanks to their smaller refractive index and thermo-optic coefficient. When used as interconnecting waveguides, they possess lower insertion loss and longer phase coherence length, which was demonstrated in 2019113. Most recent developments belong to the AWAG architecture, focusing on developing high-performance grating antennas.

(a) a 256-channel OPA shared between TX & RX with on-chip balanced PD for self-calibration and frequency-modulated-continuous-wave (FMCW) frontend217; (b) a 128-channel aperiodic array with silicon-based carrier-depletion electro-optic phase shifters25; (c) a 256-channel OPA with thin film silicon nitride perturbation231; (d) a 256-channel aperiodic OPA with on-chip external cavity laser230; (e) a 256-channel aperiodic OPA with improved directivity and aliasing-free scanning up to 150°114; (f) a 64-channel OPA with fully-etched SiN grating assisted by silicon grating reflector124.

Thanks to its potential, OPAs on the SiN-on-SOI platform have received tremendous interest in recent years. Hence, Table 7 is not an exhaustive accounting for every variation and component optimization. Two aspects are highlighted here for discussion. The first is the growing proliferation of pure silicon-nitride-based grating antennas25,114,115,116,117, especially for large apertures, aiming to exploit the longer phase coherence length of the waveguide material. However, as noted by these authors, these gratings are prone to optical crosstalk due to the weaker mode confinement of the silicon nitride waveguide, necessitating the adoption of aperiodic geometries and leading to a beam-forming loss beyond 10 dB. As previously mentioned, LiDAR application is loss-sensitive, and a denser aperture is generally necessary for a lower beam-forming loss. In addition, due to the smaller group index of the silicon nitride waveguide, the wavelength scanning coefficient is half that of the silicon-based grating. In other words, by adopting a pure SiN grating, more than two times the optical bandwidth is necessary to cover the same field of view in \({\theta }_{X}\). Again, it is encouraged to conserve the optical bandwidth for the same reason described in 3.1.1. Consequently, it is preferable to adapt the design and processing techniques used for SiN grating antennas for SOI-based or Si-SiN hybrid grating antennas for long-term development. Secondly, SiN-based waveguides and components are 2–4 times larger than their Si-based counterparts, which occupy significant design areas in the OPAs mentioned above. Ideally, given more precise mask alignment between the Si and SiN layers, more SiN-SOI hybrid components can be investigated for footprint reduction without sacrificing power handling or robustness against fabrication variations.
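The link between group index and wavelength scanning coefficient can be made explicit with the first-order grating equation \(\sin \theta ={n}_{{\rm{eff}}}-\lambda /\Lambda\). Differentiating with respect to \(\lambda\) and evaluating near broadside, where \(\Lambda \approx \lambda /{n}_{{\rm{eff}}}\), gives \(|d\theta /d\lambda |\approx {n}_{g}/\lambda\). The sketch below evaluates this estimate; the group indices of 4.2 for a silicon waveguide and 2.1 for a silicon nitride waveguide are assumed typical values, not figures from the reviewed devices, and the function name is hypothetical.

```python
import math

def scan_coefficient_deg_per_nm(group_index, wavelength_nm):
    """Magnitude of d(theta)/d(lambda) near broadside for a first-order
    grating antenna emitting into air, estimated as n_g / lambda."""
    return math.degrees(group_index / wavelength_nm)

# Assumed typical group indices at 1550 nm (illustrative only).
si_coeff = scan_coefficient_deg_per_nm(4.2, 1550.0)
sin_coeff = scan_coefficient_deg_per_nm(2.1, 1550.0)
bandwidth_ratio = si_coeff / sin_coeff

print(f"Si  grating: {si_coeff:.3f} deg/nm")
print(f"SiN grating: {sin_coeff:.3f} deg/nm")
print(f"bandwidth needed by SiN relative to Si: {bandwidth_ratio:.1f}x")
```

With these assumed indices the SiN grating needs about twice the tuning range for the same angular coverage, in line with the factor stated above.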

Elimination of aliasing

As discussed in “Operating principle and performance indicators”, increasing or dispersing the angular period can eliminate aliasing in the visible region. Also, for a LiDAR system, aliasing can be eliminated at the system level, where a Vernier difference between the transmitter and the receiver can suppress the detrimental effect of grating-lobe aliasing and side-lobe crosstalk.

Aperiodic array design

As a quick recapitulation, aperiodic arrays disperse the angular period, undermining the beam-forming condition at grating-lobe directions while preserving the beam-forming condition at the main-lobe. The catch, as derived in Eq. (12), dictates that energy outside the main-lobe is dispersed into the noise floor and cannot be retrieved through geometry optimization or real weighting, which are the main methods employed in aperiodic array designs. Nevertheless, as a widely adopted technique to extend the field-of-view, progress has been made since 2008118, with different names including the irregular array, the unequally spaced array119,120, the sparse array121, or the non-uniform array122. At the same time, a vast number of numerical simulations have been conducted for 1-D and 2-D arrays. In this review, we will cover the design techniques used in demonstrated chips, focusing on the side-lobe suppression ratio and the beam-forming loss in the broadside of the device whenever possible.

Current methods can be roughly divided into two categories. Most demos implement optimization algorithms25,114,123,124,125 to search for the optimal geometry by evaluating the far-field pattern. As the array grows larger in dimension and scale, this optimization becomes computationally intensive due to the larger number of samples required to populate the search space of higher dimensions and the denser far-field grid required for sampling the finer beam pattern.

On the other hand, emerging methods126, such as the one shown in Fig. 17b, seek to optimize the array geometry based on the maximum dispersion of angular periods as indicated by Eq. (12). This approach transforms the array design into a mathematical problem, eliminating the need for array synthesis such as Eq. (6) on a densely sampled far-field. Additionally, the solution to the mathematical problem, though not analytically expressed in most cases, has been comprehensively solved by phased array designers, including the generation of non-redundant arrays127,128,129 based on the Golomb Ruler and the Costas Array, and thinning according to binary sequences generated with difference-sets (DSs) or almost difference-sets (ADSs)130,131,132.
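As a small illustration of the non-redundant arrays mentioned above, a Golomb ruler is a set of integer marks in which every pairwise difference occurs only once, so no inter-element spacing, and hence no angular period, is repeated. The sketch below checks that property; it is a generic illustration and not the design procedure of ref. 126, and the unit pitch used to turn marks into positions is a hypothetical value.

```python
from itertools import combinations

def pairwise_spacings(marks):
    """All positive differences between pairs of marks."""
    ordered = sorted(marks)
    return [b - a for a, b in combinations(ordered, 2)]

def is_golomb_ruler(marks):
    """True if no spacing between any two marks is repeated."""
    spacings = pairwise_spacings(marks)
    return len(spacings) == len(set(spacings))

golomb = [0, 1, 4, 9, 11]   # a known order-5 Golomb ruler
uniform = [0, 1, 2, 3, 4]   # uniform grid: spacings repeat

unit_pitch_um = 2.0         # hypothetical grid unit
positions_um = [m * unit_pitch_um for m in golomb]

print("Golomb ruler non-redundant :", is_golomb_ruler(golomb))
print("uniform grid non-redundant :", is_golomb_ruler(uniform))
print("element positions (um)     :", positions_um)
```

Because the check works on a fixed integer grid, it also shows the rigidity of such schemes compared with free-position optimization.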

Apart from the computation load, the former can choose almost arbitrary positions for array elements. The latter imposes a more rigid grid to generate the optimal or sub-optimal binary sequence based on evaluating its autocorrelation. Of course, optimization methods, including the Genetic Algorithm, have been used to thin an array from a denser fixed grid133, as shown in Fig. 17(c), which can be seen as a hybrid between the two extremes. Finally, more akin to the mathematically-inspired schemes, basic parametric geometries, including the linearly chirped grid26,121, the 2nd-order polynomial grid134, or the circular/multi-annular ring grid135,136 have been verified to be suitable arrangements of array elements. These geometries can position large element counts with a small number of defining parameters, shrinking the dimensions of the search space and consequently reducing the computation load.

As listed in Table 8, all demonstrated aperiodic arrays exhibit a beam-forming loss beyond 3 dB, with large-scale implementations ranging from 4.6 ~ 28.2 dB. Given that the total power efficiency consists of both the device loss and the beam-forming loss, current values are detrimental to LiDAR application, especially when OPAs are used for both TX and RX. Though a smaller average pitch may be the next direction for the design of aperiodic arrays, when the emitting aperture becomes denser, the remaining space to optimize the geometry shrinks drastically. More investigations are required to validate the tradeoff between geometry optimization and beam-forming loss.

In summary, aperiodic OPAs have demonstrated aliasing-free beam-steering with acceptable beam quality over a large field of view. In the future, more assessments directed toward reducing the beamforming loss and validating the detection-wise signal-to-noise ratio should be considered.

Bistatic vernier arrays

Implementing a pair of uniform OPAs with a Vernier difference between the angular periods of the TX and RX is mainly motivated by two reasons. To begin with, both Eq. (12) and simulations in refs. 26,137 indicate that an aperiodic array should have the same beam-forming loss as a uniform array of identical average pitch. In other words, though aperiodic arrays extend the field-of-view by undermining the beam-forming conditions at the grating lobe directions, the power initially concentrated within the grating lobes is now dispersed into the raised noise floor, which can never be used for other purposes. On the other hand, since there remains significant reflection from the PIC138, even the most mature implementation28 has bistatic arrangements of TX and RX OPAs to physically isolate the interference from reflection and subsequently improve the SNR of the return signal from the free space. Therefore, it is more beneficial to achieve aliasing-free detection by introducing a mismatch between the grating lobes of TX and RX rather than aliasing-free beam-forming on both TX and RX, especially given that a bistatic arrangement is necessary for the time being.

This idea, which we named the Bistatic Vernier Array (BVA), has been circulating since 2018139, with chip demonstrations in 202236,140 and LiDAR system demonstrations in 202437. Since this is an emerging development, we gathered results from all these publications for a more general discussion. The simulation in Fig. 18a was conducted for a pair of uniform arrays, each with 128 elements, at a 3- and 4.5-μm pitch, respectively. To differentiate it from the side-lobe suppression ratio in the beam-forming scenario, the relative directional gain difference of the detection angular spectrum is referred to as the side-mode suppression ratio (SMSR), which was reported139 to be larger than 30 dB in the broadside direction.
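The Vernier effect can be sketched with ideal array factors. The example below multiplies the TX and RX patterns of two uniform 128-element arrays at 3- and 4.5-μm pitch and inspects the two-way response around each grating-lobe direction. It is a simplified illustration and not a reproduction of Fig. 18a: isotropic emitters, error-free phases, a 1.55 μm wavelength, and broadside pointing are all assumptions, and the function names are hypothetical.

```python
import numpy as np

def array_factor(u, n_elements, pitch_um, wavelength_um):
    """Normalized |AF| of a uniform array versus u = sin(theta)."""
    n = np.arange(n_elements)
    psi = 2 * np.pi * pitch_um / wavelength_um * np.asarray(u)[:, None]
    return np.abs(np.exp(1j * psi * n).sum(axis=1)) / n_elements

def two_way_db(u, n_elements, d_tx, d_rx, wavelength_um):
    """Detection pattern: product of TX and RX power patterns, in dB."""
    tx = array_factor(u, n_elements, d_tx, wavelength_um)
    rx = array_factor(u, n_elements, d_rx, wavelength_um)
    return 10 * np.log10(np.maximum((tx * rx) ** 2, 1e-30))

lam, n_el = 1.55, 128        # assumed wavelength (um) and element count
d_tx, d_rx = 3.0, 4.5        # pitches quoted for the simulated pair (um)

# Grating-lobe directions with |u| < 1 for broadside pointing.
lobes = [lam / d_tx, lam / d_rx, 2 * lam / d_rx]

# Worst residual two-way response in a narrow window around each lobe.
worst_db = max(
    float(two_way_db(np.linspace(u0 - 0.003, u0 + 0.003, 2001),
                     n_el, d_tx, d_rx, lam).max())
    for u0 in lobes
)
peak_db = float(two_way_db(np.array([0.0]), n_el, d_tx, d_rx, lam)[0])

print(f"main-lobe two-way response : {peak_db:.1f} dB")
print(f"worst grating-lobe residual: {worst_db:.1f} dB")
```

In this idealized model each array's grating lobes fall on low side-lobes of the other, so the two-way response at those directions stays more than 30 dB below the main lobe. The check covers only the grating-lobe directions; side-lobes close to the main lobe and the element factor are not evaluated here.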

a Simulation results with 30 dB SMSR139; (b) Experimental Configuration for SMSR measurement proposed in140; (c–i) Configurations and die-shot for the pair of 2-D arrays and (c-ii) measured best case detection angular spectrum; (d) 3-D point cloud in a FOV of 3° by 135°, the inset is the die-shot and the zoom-up of the co-prime aperture.

The idea was revisited with a pair of 2-D dispersive arrays in 2022, with 32 and 31 antennas arranged at 16-μm and 16.516-μm pitch for TX and RX, respectively. The simulated SMSR is 8.6 dB and the measured SMSR is 6.4 dB, with 10 to 20 dB designs proposed numerically. The concept is further validated for 2-D active URAs in ref. 36, where two 8×8 arrays of 9.2-μm and 12.4-μm element pitch are paired into a co-prime aperture. The highest achieved SMSR is 11.3 dB, and 2-D co-prime scanning is achieved within a field of view of 23°×16.3°. Finally, Jilin University built a LiDAR transceiver (ref. 37) based on two 128-channel arrays of wavelength-assisted gratings at 3.8-μm and 4.2-μm pitch. The chosen Vernier yields a 10.4 to 25.3 dB SMSR up to ±80°, with measured signal-to-noise-pedestal ratios ranging from 7.3 to 20 dB for the FMCW beat signal. With careful optimization of individual photonic components, especially the dual-level grating antenna, 160° co-prime scanning in \({\theta }_{Y}\) is demonstrated, together with 3-D point clouds over 135° and 160° ranges. This work soundly validated the feasibility of the Bistatic Vernier Array. These developments are gathered in Fig. 18.

As these devices have different architectures and readiness levels, the comparison table is omitted for this topic. It is worth noting that all three devices suffer from significant loss, with total power efficiencies (main-beam power vs. total input power) corresponding to losses of ~20 dB (ref. 140), 8.33 dB (theoretical, ref. 36), and 14 dB (ref. 37). Nevertheless, the grating lobes of the BVAs are not dispersed into the noise floor, meaning that they can facilitate a novel scanning mode in which the grating lobes closest to the target direction are aligned, rather than the main lobes of both TX and RX being scanned to the target direction. Theoretically, this exploits the periodicity to reduce the required phase shift and potentially lowers the average power consumption for scanning over the entire FOV.

In short, bistatic Vernier arrays extend the FOV using spatial mode mismatch between TX and RX. The periodicity is preserved, yielding a lower noise floor and the potential for new scanning schemes. However, BVAs have higher beam-forming loss than tightly integrated AWAG OPAs.

Suppression of crosstalk

At the beginning of this section, we identified that the diffraction limit of the low-loss dielectric platforms dictates the size and spacing of the photonic components. To achieve higher integration density, and especially a lower beam-forming loss for the emitting aperture, researchers have been working to suppress or compensate for the inter-element optical crosstalk with techniques including the introduction of inter-element mode mismatch, the enhancement of mode confinement, and the pre-distortion of the input phase and amplitude distribution.

Mode mismatch

As predicted by coupled mode theory (CMT)37,141,142, the power coupled from a waveguide to its passive neighbor in a lossless system over a coupling length \(z\) is given as,

\[P(z)={P}_{0}\frac{{\kappa }^{2}}{{\gamma }^{2}}{\sin }^{2}(\gamma z)\]

where \({P}_{0}\) is the power launched into the source waveguide, \(\Delta \beta\) is the propagation constant difference between the two waveguides, \(\kappa\) is the coupling coefficient between the waveguides with \({\kappa }^{2}={\kappa }_{12}{\kappa }_{21}\), and \(\gamma =\sqrt{0.25\Delta {\beta }^{2}+{\kappa }^{2}}\). The maximum coupled power, \({P}_{0}{\kappa }^{2}/{\gamma }^{2}\), therefore decreases as the propagation constant difference increases, suppressing the evanescent coupling between closely adjacent waveguides. Because guided modes are characterized by their propagation constants, this technique is also referred to as the introduction of mode mismatch, which can be implemented by varying the width and/or the bend radius143 of individual waveguides. For a dense waveguide array, it has been shown that sufficient coupling suppression can be achieved with a waveguide superlattice144, in which several waveguides of different widths form a supercell that is periodically repeated across the array. Notably, most chip demonstrations are one-dimensional arrays122,145,146,147,148,149,150, since mode-mismatched grating antennas would introduce phase mismatch within the coherent aperture, and interleaved variations along the longitudinal direction, such as those proposed in ref. 151, may lengthen the effective grating period and reduce the phase coherence length. As for the slab grating152,153 implemented at the end of the waveguide array, since the refractive index of the interference region is 3.47 for room-temperature silicon at 1550 nm, the element pitch normalized to the in-slab wavelength becomes \({n}_{Si}{\Lambda }_{Y}/{\lambda }_{0}\), meaning that multiple grating lobes will exist within the slab plane, contributing to additional beam-forming loss. Nevertheless, as reported in ref. 153, in-plane grating lobes are largely confined within the slab and therefore do not produce aliasing in the far field.
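To make the role of \(\Delta \beta\) concrete, the short sketch below evaluates the standard two-waveguide CMT expression, with peak coupled fraction \({\kappa }^{2}/{\gamma }^{2}\), and solves for the mismatch needed to reach a given suppression. The coupling coefficient is a hypothetical placeholder rather than a value from any cited device, and the function names are illustrative only.

```python
import math

def coupled_power_fraction(kappa, delta_beta, z):
    """P(z)/P0 for two lossless coupled waveguides (standard two-mode CMT)."""
    gamma = math.sqrt(0.25 * delta_beta ** 2 + kappa ** 2)
    return (kappa / gamma) ** 2 * math.sin(gamma * z) ** 2

def max_coupled_fraction(kappa, delta_beta):
    """Peak of P(z)/P0 over z, i.e. kappa^2 / gamma^2."""
    return kappa ** 2 / (0.25 * delta_beta ** 2 + kappa ** 2)

def delta_beta_for_suppression(kappa, suppression_db):
    """Mismatch for which the peak coupled power sits suppression_db below P0."""
    ratio = 10.0 ** (suppression_db / 10.0)      # P0 / P_max
    return 2.0 * kappa * math.sqrt(ratio - 1.0)  # from kappa^2 / gamma^2 = 1 / ratio

KAPPA = 0.01  # rad/um, hypothetical coupling coefficient

# Matched waveguides: complete power transfer after z = pi / (2 kappa).
full_transfer = coupled_power_fraction(KAPPA, 0.0, math.pi / (2.0 * KAPPA))

# Mismatch needed to cap the coupled power 30 dB below the launched power.
delta_beta_30db = delta_beta_for_suppression(KAPPA, 30.0)
peak_at_30db = max_coupled_fraction(KAPPA, delta_beta_30db)

print(full_transfer, delta_beta_30db / KAPPA, peak_at_30db)
```

Under this two-waveguide model, 30 dB of suppression requires a mismatch of roughly 63 times the coupling coefficient, which illustrates why closely spaced waveguides with strong coupling need comparatively large width or radius variations.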

For OPAs collected in Fig. 19, the loss in Fig. 19b is measured from the reference device with two curved waveguide arrays connected in a mirrored fashion. Meanwhile, since the width variations will repeat periodically, an n-element supercell has a spatial period of \(n{\Lambda }_{Y}\). Therefore, superlattice lobes are analyzed and minimized in Fig. 19c. Another insight obtained through these demonstrations is that the beam-forming loss can be reduced to ~1.5 dB for arrays at half-wavelength pitch. This value can be seen as the minimum beam-forming loss without further fill ratio improvements.
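The positions of the superlattice lobes follow from the grating equation applied to the supercell period \(n{\Lambda }_{Y}\). The sketch below lists the lobe angles for a hypothetical five-element supercell at half-wavelength pitch. The supercell size, wavelength, and function name are assumptions chosen for illustration, and the lobe amplitudes, which depend on the residual phase and amplitude errors of the supercell, are not modeled.

```python
import math

def superlattice_lobe_angles_deg(pitch_um, supercell_size, wavelength_um, steer_deg=0.0):
    """Angles of the lobes created by a perturbation with spatial period n * pitch.

    Orders that are multiples of n coincide with the ordinary grating lobes of the
    element pitch; at half-wavelength pitch those fall outside the visible region.
    """
    period_um = supercell_size * pitch_um
    u0 = math.sin(math.radians(steer_deg))
    m_max = int(2.0 * period_um / wavelength_um) + 1
    angles = []
    for m in range(-m_max, m_max + 1):
        if m == 0:
            continue                      # m = 0 is the main lobe itself
        u = u0 + m * wavelength_um / period_um
        if abs(u) <= 1.0:                 # keep only lobes in the visible region
            angles.append(math.degrees(math.asin(u)))
    return sorted(angles)

WAVELENGTH_UM = 1.55   # assumed wavelength
PITCH_UM = 0.775       # half-wavelength pitch
SUPERCELL = 5          # hypothetical supercell size

lobes = superlattice_lobe_angles_deg(PITCH_UM, SUPERCELL, WAVELENGTH_UM)
print([round(a, 2) for a in lobes])
```

For these assumed values, four superlattice lobes fall within the visible region at broadside, which is why their suppression has to be considered alongside the crosstalk itself.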

Finally, as a side note, the works covered in 3.4.1 and 3.4.2 tend to call these 1-D arrays end-fire arrays. However, the end-fire direction is parallel to the array aperture, which is not where these arrays perform beam-forming or beam-scanning. It is therefore advisable to avoid this naming convention for clarity.

As listed in Table 9, crosstalk suppression as high as 30 dB has been demonstrated by introducing mode mismatch in the form of width or radius variations within dense waveguide arrays. The viability of these techniques has been validated with OPA chips with good SLSR performance, indicating negligible inter-element crosstalk.

Mode confinement enhancement

Another approach to controlling evanescent coupling is to enhance mode confinement in optical waveguides. Novel proposals based on metamaterial-cladded waveguides154, sometimes called e-skid waveguides155,156, have been simulated157,158,159 and demonstrated160,161,162,163 with closely spaced waveguides. Similar techniques based on the relaxed total internal reflection theory are implemented with leaky sub-wavelength gratings164,165. These efforts have verified crosstalk levels below -30 dB at half-wavelength pitch, with an affordable increase in waveguide propagation loss. More importantly, there is no mode/phase mismatch between the elements, simplifying the design of OPAs with the AWAG architecture. However, the ribbon-like or subwavelength grating structures typically have a critical dimension of ~60 nm or smaller, limiting their yield and their implementation within an OPA system. Besides, the grating antenna within the AWAG is a periodic leaky-wave structure, which differs from a strip waveguide. For practical adoption, these innovative approaches should therefore be re-evaluated for compatibility with grating antennas and for robustness against fabrication deviations.

Another straightforward way to enhance mode confinement is to increase the effective index of the guided mode, as demonstrated in ref. 166. This effort indicates that a thicker waveguide can extend the coupling length at sub-wavelength element pitch. Nevertheless, it is worth noting that although a higher index contrast contributes to stronger mode confinement, it also renders the guided mode more susceptible to fabrication variation and side-wall roughness, resulting in higher loss and lower robustness.

Again, this review only covers examples with demonstrated OPA chips, for simplicity and consistency. Since these preliminary demonstrations have widely different configurations and performances, a comparison chart is omitted here. Among the three demonstrations, the device in Fig. 20a used photonic crystal separation to compensate for the substantial crosstalk of corrugated waveguide gratings, which use width variation for refractive index perturbation. Though the radius of the photonic crystal hole is 263 nm and can thus be fabricated, this is implemented for an element pitch of 4 μm. The device in Fig. 20b used metamaterial-cladded grating antennas. However, the fabricated 32-channel OPA shows a high side-lobe level and significant irregularity within the FOV, which could indicate a higher level of mutual coupling or phase noise accumulation. The method used in Fig. 20c provides a good reference data point and offers the potential to implement sub-wavelength arrays on commercially available platforms. Still, whether this method can sustainably reduce the element pitch with even longer grating antennas remains to be seen.

a with photonic crystal separation, [i] aperture composition, [ii] crosstalk simulation, [iii] zoom-up of the fabricated aperture, [iv] far-field pattern; (b) with metamaterial cladding, [i, ii] layout and SEM of the grating aperture; (c) with thicker silicon layer, [i] Simulation and [ii, iii] measurement of waveguide crosstalk of different thickness.

To summarize, we can also suppress inter-channel crosstalk by enhancing mode confinement. Accordingly, 2D and 1D photonic crystal structures have been sandwiched between adjacent channels, while SOI wafers with thicker silicon have been employed. These techniques rely on structures of refined feature sizes or wafers that are less commonly processed, rendering them more sensitive to processing errors and less mature for integrating large on-chip systems. That being said, developments mentioned in 3.4.2 and Fig. 12c can benefit from the adoption of more advanced processing nodes167, where finer features and patterns will improve the uniformity and yield of power splitters and grating apertures based on subwavelength metamaterials78,168,169,170,171,172,173 and photonic crystals174,175.

Pre-distortion of input optical field

Finally, if the optical crosstalk within the emitting aperture can be modeled, it may be possible to pre-distort the input optical field so that the crosstalk is partially compensated over the aperture, as proposed in ref. 176. To achieve this, both amplitude and phase control are needed to emulate the complex field containing the optimal feed component at the input of the emitting aperture. The problem can be written as,

where \({\bf{C}}(0)\), the input complex weight of each element, is the optimization parameter. By optimizing \({\bf{C}}(0)\), and subsequently all complex weights along the grating aperture, according to beam quality indicators such as the side-lobe suppression ratio, the effect of the crosstalk matrix \({\bf{M}}\) can be mitigated. It should be noted that this model currently yields no mathematical insight, since the array synthesis involves the coherent combining of the entire aperture. Though an inverse matrix can theoretically be calculated for a specific \({\bf{C}}(x)\), as claimed in the authors' submission to OFC 2024, it can only compensate that specific \({\bf{C}}(x)\), with unspecified implications for the final beam quality. In practice, the authors implemented an optimization algorithm to solve Eq. (27) experimentally, as shown in Fig. 21.
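As a toy illustration of why pre-distortion requires both amplitude and phase control, consider two identical lossless waveguides whose crosstalk is lumped into a single 2×2 transfer matrix. This is an assumption made purely for illustration: it is not Eq. (27), it ignores the radiation along the grating, and, as cautioned above, inverting a lumped matrix only compensates one specific field. The coupling strength and all names are hypothetical.

```python
import cmath
import math

def coupler_transfer(kappa_times_length):
    """Transfer matrix of two identical lossless coupled waveguides (lumped toy model)."""
    c = math.cos(kappa_times_length)
    s = math.sin(kappa_times_length)
    return [[complex(c, 0.0), complex(0.0, -s)],
            [complex(0.0, -s), complex(c, 0.0)]]

def apply(matrix, vec):
    """Matrix-vector product for small complex matrices."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]

def conj_transpose(matrix):
    """Hermitian conjugate; equals the inverse because the toy coupler is unitary."""
    size = len(matrix)
    return [[matrix[j][i].conjugate() for j in range(size)] for i in range(size)]

KAPPA_L = 0.3                       # hypothetical accumulated coupling, in radians
T = coupler_transfer(KAPPA_L)
target = [1.0 + 0.0j, 0.0 + 0.0j]   # desired field: only element 1 excited

uncorrected = apply(T, target)                   # feed the target field directly
predistorted = apply(conj_transpose(T), target)  # pre-distorted feed
corrected = apply(T, predistorted)               # field after the crosstalk acts on it

leak_db = 20.0 * math.log10(abs(uncorrected[1]))
feed_amplitude = abs(predistorted[1])
feed_phase_deg = math.degrees(cmath.phase(predistorted[1]))
print(leak_db, feed_amplitude, feed_phase_deg, [abs(x) for x in corrected])
```

In this toy case the compensating feed on the second element has a non-zero amplitude and a 90° phase offset, so neither phase-only nor amplitude-only control would cancel the leakage.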

a \({\theta }_{Y}\) cut of the far-field beam pattern achieved with both amplitude and phase optimization; (b, c) 9 incidences and their performance indicators of steered beam, [i] the beam-width measured in FWHM, [ii] the side-lobe suppression ratio, [iii] beam-forming loss reported as main-lobe power concentration, [iv] additional loss due to amplitude modulation with integrated variable optical attenuators.

Currently, there is no analytical proof that the genetic algorithm employed fundamentally resolves the influence of the crosstalk matrix. The demonstrated beam-forming result for a 32-channel AWAG array with a 1-mm-long grating at half-wavelength pitch is ~13 dB in SLSR. That being said, this review takes the favorable view that this approach may be valid for the decomposition and deconvolution of optical crosstalk within densely packed arrays. At the least, it shows that amplitude and phase modulation can partially address the crosstalk issue.

As a closing remark on this topic, in-depth modeling of the crosstalk within the grating aperture would offer far more insight into the pre-distortion of the input electric field, and the field should welcome such a development, through which a new boom of advanced feeding networks and optimization procedures may be realized.

Discussions and prospects

Thanks to breakthroughs in complexity reduction, complementary integration, aliasing suppression, and crosstalk compensation, OPAs with larger and denser transceiving apertures are being demonstrated with higher power efficiency, larger field of view, and better beam quality. The readiness of the technology has prompted preliminary prototyping efforts. Though no field test reports are openly available for OPA-based LiDAR implemented in autonomous driving vehicles, comparing current progress against the performance requirements collected in Tables 1 and 2 shows, as summarized in Table 10, that PIC-based OPAs are en route to fulfilling practical needs in almost every aspect. The field is working vigorously to extend the maximum range and the point rate of OPA-based LiDAR prototypes. Additionally, the vertical FOV is still insufficient for blind-spot detection LiDAR.