Abstract

Unmanned rollers are typically equipped with satellite-based positioning systems for position monitoring. However, with satellite-based positioning alone, unmanned rollers may drive out of the specified compaction areas during asphalt road construction, which affects the compaction quality and poses safety hazards. Additionally, satellite-based positioning systems may suffer signal interference and then fail to locate unmanned rollers. To solve these problems, a lateral positioning method for unmanned rollers is proposed to position unmanned rollers relative to the asphalt road. First, we captured images from different perspectives and developed a dataset for asphalt road construction. Second, a method for boundary extraction of the asphalt road is proposed to accurately locate the pixels of the asphalt road boundary. Subsequently, the lateral distances are measured by the designed lateral positioning methods. Finally, field validation experiments are conducted to evaluate the effectiveness of the proposed lateral positioning method. The results indicate that the method excels in extracting the asphalt road boundary. Furthermore, the proposed lateral positioning method shows excellent performance, with a mean relative error of 3.40% and a frequency of 6.25 Hz. The proposed lateral positioning method meets the requirements for both accuracy and real-time performance in asphalt road construction with unmanned rollers.

Introduction

With the advancement of automation technology and artificial intelligence, rollers have gradually transitioned from manual operation to unmanned driving1,2,3. Unmanned rollers not only enhance construction efficiency and reduce labor costs but also significantly improve safety at construction sites. Accurate positioning is fundamental for unmanned rollers to achieve accurate and reliable compaction in complex construction environments and to enhance overall construction quality4,5.

Currently, the most commonly used positioning technologies for unmanned rollers are the global positioning system6 (GPS) and the real-time kinematic global navigation satellite system7,8 (RTK-GNSS). Although satellite-based positioning systems can achieve centimeter-level positioning accuracy, they have limitations in environments obstructed by buildings, trees, or mountains9,10. Signal interference and signal attenuation in such environments significantly affect their accuracy and reliability. In addition, because satellite-based positioning systems are limited in accuracy and cannot perceive the surrounding environment, unmanned rollers may drive out of the specified compaction areas during asphalt road construction, which affects the compaction quality and poses safety hazards. Satellite-based positioning systems are suitable for positioning unmanned rollers in open areas, such as dams11. Given these limitations, researchers have introduced visual perception-based positioning to locate unmanned rollers. Compared with satellite-based positioning, visual perception-based positioning can provide accurate position and orientation for unmanned rollers by analyzing the relevant images12. It offers a solution to the positioning challenges that arise when satellite-based positioning fails. Moreover, visual perception-based positioning can identify the surrounding environment within the compaction areas13,14, which facilitates monitoring of the construction site and enhances the safety of unmanned roller construction.

Few researchers have focused on visual perception-based positioning for unmanned rollers. Lu et al.15 introduced a thermal-based technology that tracks and maps roller paths. The technology estimates the current position from the previous position of the roller. However, after long periods of operation, the actual position of the roller must still be entered into the algorithm manually at regular intervals to eliminate the cumulative translation error. They subsequently optimized the estimation of the lateral position to reduce the error, achieving the same accuracy as RTK-GNSS9. Sun et al.16 proposed a lateral positioning method based on visual feature extraction for soil roads. Although the method achieves relative positioning, the ground markings must still be set manually. These visual perception-based positioning methods therefore still require manual intervention to ensure positioning accuracy. Additionally, existing visual perception-based positioning systems are used as visual odometers to position unmanned rollers relative to the starting point of construction. They do not address the lateral positioning between the roller and the asphalt road boundary, which makes them unsuitable for positioning unmanned rollers during asphalt road construction.

Building upon this context, this paper proposes a lateral positioning method for unmanned rollers. The method measures the distances between the roller steel wheels and the asphalt road boundary to position unmanned rollers relative to the asphalt road, which can effectively avoid possible collisions between the roller steel wheels and the curbstones. This goal is realized with a red-green-blue-depth (RGB-D) camera. The first step is to accurately extract the asphalt road boundary. The asphalt road boundary is typically extracted by edge detection combined with the Hough transform for line detection. However, it is difficult to tune the parameters to suit the complex construction environment, so a robust method for extracting the asphalt road boundary in complex environments is necessary. The second step is to measure the distances with the proposed lateral positioning method. Finally, the effectiveness of the proposed method is verified at construction sites.

The remainder of this paper is organized as follows. The proposed lateral positioning method is described in “Methodology”. In “Experiments and discussion”, the experiments are conducted to validate the accuracy of the proposed method. Then, the experimental results are discussed. Finally, conclusions and future work are provided in “Conclusions”.

Methodology

The proposed lateral positioning method aims to measure the distances between the roller steel wheels and the asphalt road boundary. The flowchart of the proposed method is shown in Fig. 1. Firstly, road images and the corresponding depth images are captured. Secondly, semantic segmentation is employed to segment the asphalt road. Edge detection is employed on the segmented results to obtain the semantic boundary. After eliminating the abnormal pixels of the semantic boundary, the asphalt road boundary can be obtained. Thirdly, the asphalt road boundary in the pixel coordinate system can be converted to the camera coordinate system by using the internal parameters of the camera. Subsequently, line fitting is performed on the asphalt road boundary in the camera coordinate system to obtain the direction vector \(\vec {n}\) of the asphalt road boundary and a point M on the asphalt road boundary. Finally, the lateral distances between the roller steel wheels and the asphalt road boundary are measured. The proposed roller lateral positioning method includes lateral positioning based on the camera installation height (LPCIH) and lateral positioning based on the roller steel wheels (LPRSW).

Data preprocessing

The extraction of the asphalt road boundary is a pivotal stage of the proposed lateral positioning method, and it directly affects the positioning accuracy. Traditionally, the asphalt road boundary is extracted with an edge detection operator combined with the Hough transform for line detection17,18. However, because of the intricate background surrounding the asphalt road boundary, it is difficult to extract the boundary pixels by adjusting the parameters of the edge detection operator19,20. This makes it challenging to use the Hough transform for line detection to obtain the asphalt road boundary21,22. Although the detection of lane lines23,24 is similar to the detection of the asphalt road boundary, these methods cannot be used directly because there are no lane lines on the road during asphalt road construction. Therefore, we propose a method (SSED) that combines semantic segmentation and edge detection to effectively extract the asphalt road boundary.

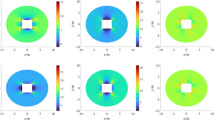

In this paper, DeepLabv3+25 with MobileNetV226 as the backbone is used to segment the asphalt road, because it stands out among semantic segmentation frameworks for its effectiveness and efficiency27. The structure of DeepLabv3+ used in this paper is shown in Fig. 2. The original images are input into DeepLabv3+ to extract backbone features and shallow features. Backbone features are taken from the output of MobileNetV2 and contain information about the overall structure and category of the objects in the image. Shallow features are taken from an intermediate layer of MobileNetV2 and contain more local information such as texture, edges, and color. Backbone features are used to distinguish the categories (background and asphalt road), while shallow features are used to determine the boundaries of each category. Combining backbone features and shallow features significantly improves the segmentation accuracy of the asphalt road. The backbone features are up-sampled by a factor of four and stacked with the shallow features to obtain the fused features. The fused features are then restored to the resolution of the original image through up-sampling to complete the image prediction.

The asphalt road boundary is typically surrounded by different objects, such as curbstones, guardrails, construction enclosures, greening facilities, and drainage ditches. These objects affect the accurate extraction of the asphalt road boundary. We captured images from different perspectives to develop a dataset for asphalt road construction. Sample images from the dataset are shown in Fig. 3. They are used as the training dataset for DeepLabv3+. In addition, data augmentation is performed on these images to improve the generalization ability of DeepLabv3+. Data augmentation includes image angle transformation and image brightness transformation. During asphalt road construction, the position of the roller on the asphalt road changes, so the position of the asphalt road in the images also changes. Angle transformation simulates images of the asphalt road captured from different positions and angles. Moreover, changes in lighting and weather conditions have a significant influence on images of the roller construction environment. Brightness transformation is used to simulate images captured under different lighting and weather conditions. To expedite the training process, we used pre-trained MobileNetV2 weights to initialize the backbone of DeepLabv3+.

Following the semantic segmentation of the asphalt road with DeepLabv3+, the Canny edge detection operator is applied to the segmentation results to extract the pixels of the asphalt road boundary, because it offers superior edge localization and a low false detection rate28,29. Because the segmentation ability of semantic segmentation is limited at the image boundary30, the asphalt road boundary extracted by edge detection always contains abnormal pixels. These abnormal pixels must be eliminated to obtain an accurate asphalt road boundary. Since wrong segmentation occurs only on one side of the image, the abnormal pixels are easy to remove by searching the pixels at that side of the image.
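The boundary extraction step can be illustrated with a minimal sketch. To keep the example dependent only on NumPy, a simple neighbour test stands in for the Canny operator, and the removal of abnormal pixels is approximated by discarding edge pixels within a hypothetical `margin` of the image border. The function names and the margin are assumptions made for illustration, not the implementation used in this paper.

```python
import numpy as np

def mask_boundary(mask):
    """Mark road pixels that have at least one non-road 4-neighbour.

    Stand-in for Canny on a binary segmentation mask (assumption).
    """
    m = np.asarray(mask, dtype=bool)
    padded = np.pad(m, 1, mode="edge")  # replicate border so it is not an edge by itself
    up = padded[:-2, 1:-1]
    down = padded[2:, 1:-1]
    left = padded[1:-1, :-2]
    right = padded[1:-1, 2:]
    all_neighbours_road = up & down & left & right
    return m & ~all_neighbours_road

def drop_border_pixels(edges, margin=1):
    """Discard edge pixels within `margin` pixels of the image border (assumed rule)."""
    out = np.array(edges, dtype=bool, copy=True)
    out[:margin, :] = False
    out[-margin:, :] = False
    out[:, :margin] = False
    out[:, -margin:] = False
    return out

# Toy 6x6 segmentation result: the left three columns are asphalt road.
mask = np.zeros((6, 6), dtype=bool)
mask[:, :3] = True
edges = mask_boundary(mask)
boundary = drop_border_pixels(edges, margin=1)
print(np.argwhere(boundary))
```

In this toy mask the retained boundary pixels all lie in the last road column, which is the transition between road and background.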

Subsequently, the asphalt road boundary must be converted from the pixel coordinate system to the camera coordinate system for lateral positioning. Figure 4 shows the process of converting pixel coordinates to camera coordinates. The camera coordinate system is O-xyz, where O is the optical center of the camera. A point N has coordinates (X, Y, Z) in the camera coordinate system, and its coordinates in the imaging plane are \(\hbox {N}^{\prime }\)(\(\hbox {X}^{\prime }\), \(\hbox {Y}^{\prime }\), \(\hbox {Z}^{\prime }\)). The focal length of the camera is f.

According to the triangle similarity principle, as shown in Fig. 4b, there is the relationship shown in equation (1).

where, Z represents the depth information, which is sourced from the depth image captured by the camera.

Suppose that a pixel plane is fixed on the imaging plane. The coordinates of N on the pixel plane are (u, v). So, there is the following relationship:

where \(f_{x}\) and \(f_{y}\) are the focal lengths of the camera in the x and y directions, respectively, and \(c_{x}\) and \(c_{y}\) are the principal point coordinates of the camera.

Convert Eq. (2) into a matrix, and it can be expressed in the form of homogeneous coordinates as follows:
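Under the standard pinhole camera model, which the description above appears to follow, the relations referred to as Eqs. (1) to (3) can be written in the form below. This is a reconstruction from the symbols defined in the text (f, Z, \(f_{x}\), \(f_{y}\), \(c_{x}\), \(c_{y}\)); the sign convention of the imaging plane and the exact notation are assumptions.

```latex
\begin{align}
\frac{Z}{f} &= \frac{X}{X'} = \frac{Y}{Y'} \tag{1}\\
u &= f_x \frac{X}{Z} + c_x, \qquad v = f_y \frac{Y}{Z} + c_y \tag{2}\\
Z \begin{pmatrix} u \\ v \\ 1 \end{pmatrix}
  &= \begin{pmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{pmatrix}
     \begin{pmatrix} X \\ Y \\ Z \end{pmatrix} \tag{3}
\end{align}
```

Solving Eq. (2) for the camera coordinates gives \(X = (u - c_{x}) Z / f_{x}\) and \(Y = (v - c_{y}) Z / f_{y}\), with Z read from the depth image.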

According to Eq. (3), the points in the pixel coordinate system can be converted to the camera coordinate system.
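A minimal sketch of this conversion is given below, assuming the standard pinhole back-projection \(X = (u - c_{x}) Z / f_{x}\), \(Y = (v - c_{y}) Z / f_{y}\). The function name `pixels_to_camera` and the intrinsic values are hypothetical and are not the calibrated parameters of the camera used in this paper.

```python
import numpy as np

def pixels_to_camera(uv, depth, fx, fy, cx, cy):
    """Back-project pixels (u, v) with depth Z into camera coordinates (X, Y, Z)."""
    uv = np.asarray(uv, dtype=float)
    z = np.asarray(depth, dtype=float)
    x = (uv[:, 0] - cx) * z / fx
    y = (uv[:, 1] - cy) * z / fy
    return np.column_stack([x, y, z])

# Hypothetical intrinsics for a 1280x720 image (illustrative values only).
fx, fy, cx, cy = 700.0, 700.0, 640.0, 360.0

# Two boundary pixels and their depths in metres, as read from a depth image.
boundary_uv = [(640, 360), (990, 360)]
boundary_depth = [2.0, 2.0]

points_cam = pixels_to_camera(boundary_uv, boundary_depth, fx, fy, cx, cy)
print(points_cam)
```

The pixel at the principal point maps onto the optical axis, and the pixel 350 columns to its right maps to a point 1 m to the side at the same depth.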

The turning radius of the asphalt road is typically large to ensure driving safety. Additionally, due to the limitation of the camera’s perspective, the asphalt road boundary within images can be approximated as a straight line. Therefore, the direction vector \(\vec {n}=(n_{x}, n_{y}, n_{z})\) of the asphalt road boundary and a point M(x, y, z) on the asphalt road boundary can be obtained by line fitting the asphalt road boundary in the camera coordinate system. The lateral distances can be measured from the contact points between the roller steel wheels and the road to the asphalt road boundary.
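The line fitting step can be sketched as follows. The fitting algorithm is not specified in the text; a least-squares fit through the centroid, with the direction taken from a singular value decomposition, is assumed here. The function name `fit_line_3d` and the sample points are hypothetical.

```python
import numpy as np

def fit_line_3d(points):
    """Least-squares 3D line fit: return a point M on the line and a unit direction n."""
    pts = np.asarray(points, dtype=float)
    m = pts.mean(axis=0)                 # the centroid lies on the best-fit line
    _, _, vt = np.linalg.svd(pts - m)    # first right singular vector = main direction
    n = vt[0]
    return m, n / np.linalg.norm(n)

# Hypothetical boundary points in the camera coordinate system (metres).
boundary_points = [(1.0, 1.2, 2.0), (1.0, 1.2, 3.0), (1.0, 1.2, 4.0)]
M, n = fit_line_3d(boundary_points)
print(M, n)
```

The sign of the direction vector is arbitrary, which does not matter for the distance computations because they depend only on the magnitude of a cross product.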

Estimation of roller lateral positioning

Lateral positioning based on the camera installation height

The schematic diagram of LPCIH is shown in Fig. 5. LPCIH includes both the lateral distance from the front roller steel wheel to the asphalt road boundary and the lateral distance from the rear roller steel wheel to the asphalt road boundary. When the camera is installed on the roller, the camera installation height h is determined. However, the measurement reference of the camera height h is not on the rear roller steel wheel, as shown in Fig. 5b. The lateral distances are measured through the relative relationship \(\triangle P\) between the camera and the roller steel wheels.

First, the distance \(d_{OP}\) between the camera and the positioning point D on the asphalt road boundary must be measured. \(d_{OP}\) can be calculated by equation (4).

where, P is the foot point of the roller steel wheel to asphalt road boundary. \(\vec {n}\) is the direction vector of the fitted asphalt road boundary AB, as shown in Fig. 5a. M is a point on the asphalt road boundary. O is the optical center of the camera. \(\vec {OM}\) represents the vector from the point O to the point M. \(\times\) represents the cross product between vectors. |\(\cdot\)| represents the modulus of a vector.

The lateral distance \(d_{PD}\) between the camera and the positioning point D can then be obtained from the Pythagorean theorem, as shown in equation (5).
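The two steps above can be sketched under stated assumptions: \(d_{OP}\) is taken as the perpendicular distance \(|\vec {OM} \times \vec {n}| / |\vec {n}|\) from the optical center O (the origin of the camera coordinate system) to the fitted boundary, and the lateral distance is taken as the horizontal leg of a right triangle whose vertical leg is the camera height h. The function names and numeric values are hypothetical, and the offset \(\triangle P\) is not included.

```python
import numpy as np

def point_to_line_distance(q, m, n):
    """Perpendicular distance from point q to the line through m with direction n."""
    q, m, n = (np.asarray(a, dtype=float) for a in (q, m, n))
    return np.linalg.norm(np.cross(m - q, n)) / np.linalg.norm(n)

def lpcih_lateral_distance(m, n, h):
    """Horizontal camera-to-boundary distance from d_OP and the camera height h."""
    d_op = point_to_line_distance(np.zeros(3), m, n)  # O is the camera-frame origin
    return float(np.sqrt(max(d_op**2 - h**2, 0.0)))   # guard against small negatives

# Hypothetical fitted boundary and camera height (metres).
M = (4.0, 3.0, 5.0)      # a point on the boundary, camera coordinates
n = (0.0, 0.0, 1.0)      # direction vector of the boundary
d_op = point_to_line_distance(np.zeros(3), M, n)
d_lateral = lpcih_lateral_distance(M, n, h=3.0)
print(d_op, d_lateral)
```

With these illustrative values the slant distance is 5 m and the camera height is 3 m, so the horizontal distance is 4 m.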

Finally, the distances between the roller steel wheels and the asphalt road boundary are measured by combining the relative position \(\triangle P\), as shown in Eq. (6).

Lateral positioning based on the roller steel wheels

The schematic diagram of LPRSW is shown in Fig. 6. Compared with LPCIH, LPRSW needs to ensure that there are roller steel wheels in the view of the camera. When the camera is installed on the roller, the two contact points between the roller steel wheels and the asphalt road are fixed in their positions relative to the camera. The coordinates of these two points, E and F, are manually identified from the captured images, and they are assumed to be unchanged. The distance \(d_{CE}\) between the front roller steel wheel and the positioning point on the asphalt road boundary, and the distance \(d_{DF}\) between the rear roller steel wheel and the positioning point on the asphalt road boundary are measured by equation (7).

where C and D are the foot points of the roller steel wheels on the asphalt road boundary, respectively. \(\vec {ME}\) and \(\vec {MF}\) represent the vectors from the point M to the contact points between the roller steel wheels and the asphalt road (E and F), respectively.
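A minimal sketch of the LPRSW computation follows, assuming that the distances take the point-to-line form \(|\vec {ME} \times \vec {n}| / |\vec {n}|\) and \(|\vec {MF} \times \vec {n}| / |\vec {n}|\) suggested by the definitions above. The function name and the coordinates of M, E, and F are hypothetical.

```python
import numpy as np

def wheel_to_boundary_distance(contact_point, m, n):
    """Perpendicular distance from a wheel contact point to the boundary line (M, n)."""
    me = np.asarray(contact_point, dtype=float) - np.asarray(m, dtype=float)
    n = np.asarray(n, dtype=float)
    return float(np.linalg.norm(np.cross(me, n)) / np.linalg.norm(n))

# Hypothetical fitted boundary in camera coordinates (metres).
M = (2.0, 1.5, 4.0)
n = (0.0, 0.0, 1.0)

# Hypothetical contact points of the front (E) and rear (F) steel wheels.
E = (0.5, 1.5, 3.0)
F = (0.5, 1.5, 1.0)

d_CE = wheel_to_boundary_distance(E, M, n)
d_DF = wheel_to_boundary_distance(F, M, n)
print(d_CE, d_DF)
```

Because E and F are fixed relative to the camera, only M and \(\vec {n}\) need to be re-estimated for each frame in this sketch.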

Experiments and discussion

The proposed lateral positioning method is implemented on a laptop equipped with a Core i7-10875H CPU and an NVIDIA RTX2060 GPU. The camera used in this paper is a ZED2i, a binocular camera that serves as an RGB-D camera. It captures images of the same scene through two side-by-side cameras and uses the parallax principle of binocular stereo vision to calculate the depth of each pixel and form a depth map. Its lens focal length is 2.1 mm, its field of view is \(110^{\circ }\) (Horizontal) \(\times 70^{\circ }\) (Vertical), and its resolution is set to 1280\(\times\)720. These settings ensure that both roller steel wheels and the asphalt road boundary are fully within the view of the camera. The frame rate of image acquisition is set to 30 FPS. The vibration of the roller causes motion blur in the captured images and degrades image quality. ZED2i camera can effectively reduce motion blur due to its global shutter technology. Additionally, a vibration-damping device is installed to further mitigate the impact of vibration on the camera. These measures mitigate the effect of vibration and support the reliability and accuracy of our lateral positioning method.

Asphalt road boundary extraction

Several models with strong semantic segmentation ability were compared with DeepLabv3+: HRNet31, PSPNet32, PP-LiteSeg33, U2Net34, and LR-ASPP35. Intersection over Union (IoU), Recall, Precision, Pixel Accuracy (PA), and inference time serve as metrics for evaluating the performance of the models in asphalt road segmentation. The experimental results are shown in Fig. 7. Except for inference time, the differences among the models on these metrics are small, which indicates that their accuracy in asphalt road segmentation is almost the same. DeepLabv3+ shows the best performance, with an inference time of 64.7 ms. Therefore, DeepLabv3+ is selected to segment the asphalt road to ensure the real-time performance of the lateral positioning method.
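For reference, the four accuracy metrics can be computed from a predicted mask and a ground-truth mask as sketched below. The standard binary definitions are assumed (road as the positive class), since the formulas are not given in the text; the function name and the toy masks are hypothetical, and the sketch assumes that road pixels are present in both masks.

```python
import numpy as np

def segmentation_metrics(pred, truth):
    """IoU, Recall, Precision and Pixel Accuracy for a binary road mask."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))     # road predicted as road
    fp = int(np.sum(pred & ~truth))    # background predicted as road
    fn = int(np.sum(~pred & truth))    # road predicted as background
    tn = int(np.sum(~pred & ~truth))   # background predicted as background
    return {
        "IoU": tp / (tp + fp + fn),
        "Recall": tp / (tp + fn),
        "Precision": tp / (tp + fp),
        "PA": (tp + tn) / (tp + fp + fn + tn),
    }

# Toy 2x4 masks: 1 = asphalt road, 0 = background.
truth = np.array([[1, 1, 0, 0],
                  [1, 1, 0, 0]])
pred = np.array([[1, 1, 1, 0],
                 [1, 0, 0, 0]])

metrics = segmentation_metrics(pred, truth)
print(metrics)
```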

We conducted experiments to validate the effectiveness of SSED. The experimental setup includes the usage of images, the lighting conditions, the presence of obstacles, and roller positions, as shown in Table 1. The results are shown in Fig. 8. The images labeled from 1 to 4 in Fig. 8a are used to validate the performance of DeepLabv3+ in segmenting asphalt road. These images with this perspective are used to develop a dataset for asphalt road construction and enhance the performance in segmenting asphalt road but not utilized for lateral positioning. The images labeled from 5 to 7 in Fig. 8a are captured from the perspective of the roller and utilized for lateral positioning. The image labeled 7 in Fig. 8a is used to test the robustness of SSED in extracting road boundaries in insufficient lighting conditions.

In the images labeled 1 and 5 in Fig. 8a, roadside facilities cast shadows on the asphalt road. However, as shown in Fig. 8b, the segmentation results are not affected by these shadows. In addition, for the image labeled 7 in Fig. 8a, the segmentation results are consistent with the actual asphalt road. This indicates that the image brightness transformation applied during training enhances the adaptability of DeepLabv3+ to changes in brightness. Although the images labeled 5 to 7 in Fig. 8a contain non-road areas such as the roller body, the segmented asphalt road is almost the same as the actual asphalt road. The non-road areas are not mistakenly classified as asphalt road, which indicates that DeepLabv3+ segments the asphalt road accurately. Fig. 8d shows the edge results obtained by performing edge detection and eliminating abnormal pixels on the semantic results in Fig. 8b. In the images labeled 5 to 7 in Fig. 8a, the roller body lies near the bottom boundary of the image, so its contour is detected when edge detection is performed directly on the semantic segmentation results. Because these non-road areas consistently occur near the bottom boundary of the images, the non-road boundary pixels are easy to remove by searching the pixels near the bottom boundary when edge detection is performed on the semantic segmentation results. This process ensures that only the asphalt road boundary, which is crucial for lateral positioning, is retained. The final asphalt road boundaries extracted by combining semantic segmentation and edge detection are shown in Fig. 8e, and they effectively represent the actual asphalt road boundary. This indicates that the proposed combination of semantic segmentation and edge detection is effective for extracting the asphalt road boundary.

Lateral positioning experiments

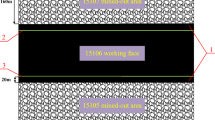

As far as we know, lateral positioning methods based on visual perception are rare. A comparative analysis with the lateral positioning method (LPVFE) in Ref.16 was conducted to validate the performance of the proposed lateral positioning method. LPVFE16 is summarized as follows. Firstly, the distortion of images with ground markings is corrected using the internal parameters of the camera. Secondly, the images are converted to grayscale, after which a Gaussian filter is applied to reduce noise and smooth the images. Subsequently, a method combining an edge gradient threshold and a color feature threshold is used to extract the features of interest. Then, a morphological operation is performed to highlight the features of interest. Additionally, the region of interest (ROI) is set to the part of the ground markings within the work area to avoid background interference. Following this, the Hough transform is applied to the pre-processed ROI to form a line set. Then, a slope threshold is applied to these lines to eliminate redundant and falsely detected lines. Finally, the lateral distances between the look-ahead point in the moving direction of the roller and the left and right ground markings are calculated from the camera imaging geometry. The scenes of the comparative experiment are shown in Fig. 9. To better reproduce the ground markings extraction of LPVFE, we arranged white ground markings along the asphalt road boundary, as shown in the blue box in Fig. 9a, b. There are no ground markings in Fig. 9c. To make the lateral positioning methods comparable, we set the contact point between the roller steel wheel and the asphalt road in our proposed method to the midpoint of the bottom boundary of the image when calculating the lateral distance.

The accuracy of the lateral positioning methods is evaluated by absolute error and relative error, as shown in Table 2. The lateral positioning accuracy of LPVFE is the worst due to its larger absolute errors and relative errors. When the roller is far away from the asphalt road boundary, the ground markings in the image do not intersect with the bottom boundary of the image, as shown in Fig. 9b. In this case, LPVFE cannot calculate the lateral distance, which is one of its limitations. In the experiment, when the lateral distance exceeds 1.392 m, LPVFE can no longer calculate the lateral distance. However, LPCIH and LPRSW are still able to calculate the lateral distances without being affected by the position of the asphalt road boundary in the image. This is one of the advantages of our proposed lateral positioning method. When the real distances are small, the absolute errors of LPCIH and LPRSW are around 0.01 m. As distances increase, the absolute errors of LPCIH and LPRSW are less than 0.10 m. Therefore, the proposed lateral positioning method exhibits superior accuracy and applicability compared with LPVFE.
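The two error measures can be computed as in the sketch below, assuming the usual definitions: the absolute error is the magnitude of the difference between the measured and real distances, and the relative error is the absolute error divided by the real distance. The function names and the sample distances are hypothetical and are not values from Table 2.

```python
def positioning_errors(real_m, measured_m):
    """Return (absolute error in metres, relative error in percent)."""
    abs_err = abs(measured_m - real_m)
    rel_err = abs_err / real_m * 100.0
    return abs_err, rel_err

def mean_relative_error(pairs):
    """Mean relative error in percent over (real, measured) pairs."""
    rel = [positioning_errors(r, m)[1] for r, m in pairs]
    return sum(rel) / len(rel)

# Hypothetical (real, measured) lateral distances in metres.
samples = [(2.0, 2.05), (4.0, 3.9)]
abs_err, rel_err = positioning_errors(*samples[0])
mre = mean_relative_error(samples)
print(abs_err, rel_err, mre)
```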

The reasons for the poor accuracy of LPVFE are explained in Fig. 10. Figure 10b shows the threshold segmentation results of the ROI at the ground markings obtained by the threshold segmentation method in Ref.16. As shown in Fig. 10b, using the edge gradient threshold and the color feature threshold to extract the ground markings in the ROI produces false detections. This is also a limitation of LPVFE. In Fig. 10c, the red line represents the ground markings extracted by LPVFE with the Hough transform for line detection and the slope threshold, and the green line represents the ideal ground markings. There is a visible difference between the extracted ground markings and the ideal ground markings. The inaccurate extraction of ground markings is the primary reason for the poor accuracy of LPVFE. In contrast, the proposed method does not rely on ground markings and uses a semantic segmentation model to segment the asphalt road, as shown in Fig. 10e. The proposed method improves the accuracy of asphalt road boundary extraction by combining semantic segmentation and edge detection, as shown in Fig. 10f. This provides a good foundation for the proposed lateral positioning.

In order to validate the accuracy of LPCIH and LPRSW, we conducted lateral positioning experiments on the front and rear roller steel wheels, respectively. The experimental results of lateral positioning for the front roller steel wheel are shown in Table 3. LPCIH measures the lateral distance through the relative position \(\triangle P\). The relative position \(\triangle P\) needs to be properly corrected with the change of the movement direction of the roller since the movement direction of the roller may not always be parallel to the asphalt road boundary and the installation position of the camera is close to the rear roller steel wheel. It is difficult to correct the relative position \(\triangle P\) in real time when the roller is working. Therefore, the error of lateral positioning for the front roller steel wheel obtained by LPCIH is larger, with a mean relative error of 8.64%. In contrast, LPRSW directly measures the lateral distance between the front roller steel wheel and the asphalt road boundary since the front roller steel wheel is in the view of the camera. Therefore, LPRSW obtains smaller error, with a mean relative error of 3.40%. When the roller is close to the asphalt road boundary, the absolute error of lateral positioning for LPRSW is only 0.012 m. When the roller is far away from the asphalt road boundary, the absolute error of LPRSW remains below 0.15 m. Therefore, the proposed lateral positioning method meets the requirement of the lateral positioning accuracy for the front roller steel wheel.

The experimental results of lateral positioning for the rear roller steel wheel are shown in Table 4. The errors of lateral positioning for the rear roller steel wheel obtained by LPCIH and LPRSW are similar. When the roller is close to the asphalt road boundary, the absolute errors of LPCIH and LPRSW are less than 0.01 m. When the roller is far away from the asphalt road boundary, the absolute errors of LPCIH and LPRSW remain below 0.15 m. This indicates that both LPCIH and LPRSW meet the requirements of the lateral positioning accuracy for the rear roller steel wheel. Compared with the error of lateral positioning for the front roller steel wheel obtained by LPCIH, the error of lateral positioning for the rear roller steel wheel obtained by LPCIH is smaller. This is because the installation position of the camera is close to the rear roller steel wheel, so that the relative position \(\triangle P\) does not need to be corrected excessively. The mean relative error of lateral positioning for LPCIH is 2.32%, which fully meets the needs of lateral positioning accuracy. LPRSW directly measures the lateral distance between the rear roller steel wheel and the asphalt road boundary since the rear roller steel wheel is in the view of the camera. Consequently, LPRSW demonstrates commendable accuracy in lateral positioning, with a mean relative error of 1.87%. Therefore, the proposed lateral positioning method can meet the requirement of the roller for positioning accuracy for the rear roller steel wheel.

Field experiments at construction sites were conducted to verify the effectiveness of the proposed lateral positioning method. The experimental scene and experimental results are shown in Fig. 11. The roller speed during compaction is about 2\(\sim\)3 km/h. The experimental results are expressed in the form of (real distance, measured distance). In Fig. 11a, the absolute errors of the lateral distances AB and CD are 0.123 m and 0.143 m, respectively, and the relative errors are 3.30% and 3.81%, respectively. In Fig. 11b, the absolute errors of the lateral distances EF and GH are 0.124 m and 0.117 m, respectively, and the relative errors are 3.04% and 2.85%, respectively. These results meet the requirements for the lateral positioning accuracy of the roller during construction.

In the lateral positioning experiments, the camera captures images at a resolution of 1280\(\times\)720, with an acquisition frame rate of 30 FPS. With these parameters, the proposed lateral positioning method achieves a frequency of 6.25 Hz. This frequency fully satisfies the requirements for real-time application in asphalt road construction for rollers whose working speeds are typically about 2\(\sim\)3 km/h.
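The real-time claim can be made concrete with a short calculation based only on the figures stated above (6.25 Hz, 30 FPS, 2 to 3 km/h). The function name `travel_per_update` is hypothetical.

```python
def travel_per_update(speed_kmh, rate_hz):
    """Distance in metres that the roller travels between two positioning updates."""
    speed_ms = speed_kmh / 3.6   # km/h -> m/s
    return speed_ms / rate_hz

rate_hz = 6.25
period_ms = 1000.0 / rate_hz           # time between two updates
frames_per_update = 30.0 / rate_hz     # captured frames per positioning result

travel_low = travel_per_update(2.0, rate_hz)
travel_high = travel_per_update(3.0, rate_hz)
print(period_ms, frames_per_update, travel_low, travel_high)
```

At the stated working speeds the roller therefore moves roughly 0.09 m to 0.13 m between two consecutive lateral distance measurements.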

Discussion

LPCIH uses the relative position \(\triangle P\) between the camera and the roller steel wheels to measure lateral distances, so \(\triangle P\) must be calibrated. Because the movement direction of the roller may not always be parallel to the asphalt road boundary, \(\triangle P\) must be adjusted as the movement direction changes; otherwise, errors arise in lateral positioning. LPRSW directly measures the lateral distances between the roller steel wheels and the asphalt road boundary, since the roller steel wheels are in the view of the camera. This gives LPRSW higher accuracy and better practicability in roller lateral positioning. On the other hand, the roller changes lanes while compacting the asphalt road. In this case, the front roller steel wheel may temporarily leave the camera’s field of view, which prevents LPRSW from completing the lateral positioning of the front roller steel wheel. LPCIH can then be used as a backup method to supply the missing lateral positioning of the front roller steel wheel, because its accuracy is only slightly lower than that of LPRSW.

The asphalt road typically has road cross slope, slope36,37,38, and so on. The lateral distances measured by LPCIH are in the horizontal plane, not in the plane parallel to the asphalt road. The lateral distances measured by LPCIH need to be properly corrected to approximate the real lateral distances. However, the slope angle of asphalt roads is not constant. It is necessary to continuously adjust the correction coefficient to accurately measure the lateral distances, which will make it difficult to accurately measure the lateral distances. LPRSW measures the lateral distances from the contact point of the roller steel wheels and the ground to the asphalt road boundary, and it is unaffected by the road cross slope, slope, and so on.

The proposed lateral positioning method focuses on the positioning of unmanned rollers relative to the asphalt road during asphalt road construction. By monitoring the lateral distances between the roller steel wheels and the asphalt road boundary, the method can effectively reduce potential collisions between the roller and objects on the asphalt road boundary. Such a collision damages both the roller and the asphalt road, which affects the asphalt road construction. Moreover, the proposed method enhances the accuracy of lateral positioning, which helps make the compaction of the asphalt road more uniform and durable. Additionally, the method can be used to monitor the compaction state of the asphalt road. This constant monitoring contributes to the longevity and reliability of the asphalt road.

Currently, the most commonly used positioning system for unmanned rollers in asphalt road construction is GPS. A robust, high-precision GPS with multiple antennas costs about CNY ¥20,000. GPS may also involve extra data service fees for regular updates and subscriptions; continuous subscription fees for high-precision GPS are about CNY ¥4,000 per year. In short, a robust GPS may cost about CNY ¥24,000 in its first year. In contrast, a robust, high-precision camera-based system costs about CNY ¥4,000 per unit and usually does not involve additional data connection fees. In this paper, a ZED2i camera is used for lateral positioning; it can accurately measure distances within a range of 5 m and costs about CNY ¥4,000 per unit. For higher precision requirements, a visual sensor with superior precision is recommended, at a correspondingly higher cost. Therefore, compared with the GPS commonly used for unmanned rollers, the camera-based roller lateral positioning used in this paper has a significant cost advantage.
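The cost comparison above can be written out as simple arithmetic. The sketch below uses only the approximate prices quoted in this section; the function name `cumulative_cost_cny` and the choice of 1, 3, and 5 years are illustrative assumptions, and maintenance or replacement costs are not modelled.

```python
def cumulative_cost_cny(upfront: int, annual_fee: int, years: int) -> int:
    """Total cost after `years` years: purchase price plus yearly fees."""
    return upfront + annual_fee * years


# Approximate figures quoted in the text (CNY).
GPS_UPFRONT = 20_000    # robust multi-antenna high-precision GPS
GPS_ANNUAL = 4_000      # subscription fees per year
CAMERA_UPFRONT = 4_000  # camera-based system, per unit
CAMERA_ANNUAL = 0       # no data connection fees assumed

for years in (1, 3, 5):
    gps = cumulative_cost_cny(GPS_UPFRONT, GPS_ANNUAL, years)
    camera = cumulative_cost_cny(CAMERA_UPFRONT, CAMERA_ANNUAL, years)
    print(f"{years} yr: GPS CNY {gps:,} vs camera CNY {camera:,}")
```

With these figures the gap widens every year, because only the GPS carries a recurring fee.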

Conclusions

An unmanned roller lateral positioning method for asphalt road construction is proposed in this paper to realize the positioning of unmanned rollers relative to the asphalt road. Compared with satellite-based positioning, this relative positioning can accurately determine whether unmanned rollers drive out of the specified compaction areas when they are working near the asphalt road boundary. The proposed method can also provide positioning for unmanned rollers when satellite-based positioning systems fail. Additionally, a method combining semantic segmentation and edge detection is proposed to extract the asphalt road boundary, and it significantly enhances the accuracy of the extraction. Compared with the traditional method of using edge detection and the Hough transform for line detection, the proposed method avoids the difficulty of adjusting the edge detection parameters caused by the intricate background surrounding the asphalt road boundary. The experimental results show that the extracted asphalt road boundary is almost consistent with the actual asphalt road boundary. Field validation demonstrates that the proposed lateral positioning method has high accuracy, with a mean relative error of 3.40%, and a positioning frequency of up to 6.25 Hz. It satisfies the performance requirements for lateral positioning in both accuracy and real-time operation during asphalt road construction for unmanned rollers. In addition, the proposed method can be implemented with a camera alone, which keeps the system simple and the hardware cost low.

The main contributions of this paper are summarized as follows:

(1) An unmanned roller lateral positioning method is proposed to realize the positioning of unmanned rollers relative to the asphalt road, which has received little attention before. The method solves the problem that unmanned rollers may drive out of the compaction areas and collide with objects around the construction area during asphalt road construction. Moreover, the proposed method can still locate unmanned rollers when satellite-based positioning fails.

(2) An image dataset for asphalt road construction is developed. Unlike current asphalt road datasets, our dataset focuses primarily on images of asphalt roads with different surrounding objects, such as curbstones, guardrails, construction enclosures, greening facilities, and drainage ditches. These images were captured during asphalt road construction, and there are no lane lines or other driving signs on the road, which is the most significant feature of our dataset.

(3) A method combining semantic segmentation and edge detection is proposed to accurately extract the asphalt road boundary. The proposed method effectively solves the challenge of adjusting the parameters of both edge detection and the Hough transform for accurate extraction of the asphalt road boundary in complex construction environments.
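The idea behind contribution (3), restricting edge extraction to the output of semantic segmentation, can be illustrated with a toy example. This is a simplified stand-in, not the pipeline used in this paper: the segmentation result is assumed to be a binary mask given as nested lists, and a plain 4-neighbour test replaces the edge detector. The name `boundary_pixels` is hypothetical.

```python
from typing import List, Tuple

Mask = List[List[int]]  # 1 = asphalt road, 0 = background


def boundary_pixels(mask: Mask) -> List[Tuple[int, int]]:
    """Return (row, col) of road pixels that touch a background pixel.

    Image borders are not treated as background, so a road region that
    runs off the frame does not create a false boundary at the frame edge.
    """
    rows, cols = len(mask), len(mask[0])
    result: List[Tuple[int, int]] = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1:
                continue
            # Check the four direct neighbours (up, down, left, right).
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and mask[nr][nc] == 0:
                    result.append((r, c))
                    break
    return result


# Toy mask: background in the left column, road in the other two.
toy_mask = [
    [0, 1, 1],
    [0, 1, 1],
    [0, 1, 1],
]
print(boundary_pixels(toy_mask))  # [(0, 1), (1, 1), (2, 1)]
```

Because the mask already separates road from background, the boundary step needs no thresholds to tune, which is the practical difficulty the text attributes to edge detection on the raw image.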

Although the proposed method is robust, some limitations remain to be addressed to further improve its performance. Accurate extraction of the asphalt road boundary is a crucial step for the proposed roller lateral positioning method. The complex background at the asphalt road boundary, the indistinct features at the boundary, and variations in weather conditions lead to significant differences among images of the asphalt road, thereby affecting the accuracy of boundary extraction. In future work, image contrast transformation can be employed to augment the dataset for semantic segmentation and improve the adaptability of the semantic segmentation model to such situations. In addition, the current lateral positioning is discrete along the movement direction of the roller. We intend to focus on longitudinal positioning to achieve more accurate roller positioning by combining lateral and longitudinal positioning.
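One common form of contrast transformation for dataset augmentation is a linear stretch around mid-gray. The sketch below is an assumption about how such augmentation might look, not a method evaluated in this paper; it works on a grayscale image stored as nested lists of 8-bit values, and the function name `adjust_contrast` and the factors 0.5 and 2.0 are illustrative.

```python
from typing import List

Image = List[List[int]]  # grayscale, values in 0..255


def adjust_contrast(pixels: Image, alpha: float) -> Image:
    """Scale each pixel's deviation from mid-gray (128) by alpha.

    alpha < 1 lowers contrast, alpha > 1 raises it; results are
    rounded and clamped to the valid 8-bit range [0, 255].
    """
    return [
        [min(255, max(0, round(128 + alpha * (p - 128)))) for p in row]
        for row in pixels
    ]


sample = [[100, 128, 200]]
low_contrast = adjust_contrast(sample, 0.5)   # [[114, 128, 164]]
high_contrast = adjust_contrast(sample, 2.0)  # [[72, 128, 255]]
print(low_contrast, high_contrast)
```

Training on several contrast variants of each image exposes the segmentation model to a wider range of lighting and weather appearances without collecting new field images.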

Data availability

The data used to support this study are available from the corresponding author upon request.

References

Zhang, Q. et al. Unmanned rolling compaction system for rockfill materials. Autom. Construct. 100, 103–117. https://doi.org/10.1016/j.autcon.2019.01.004 (2019).

Zhang, Q. et al. Intelligent rolling compaction system for earth-rock dams. Autom. Construct. 116, 103246. https://doi.org/10.1016/j.autcon.2020.103246 (2020).

Wang, N., Ma, T., Chen, F. & Ma, Y. Compaction quality assessment of cement stabilized gravel using intelligent compaction technology—A case study. Construct. Build. Mater. 345, 128100. https://doi.org/10.1016/j.conbuildmat.2022.128100 (2022).

Kassem, E., Liu, W. T., Scullion, T., Masad, E. & Chowdhury, A. Development of compaction monitoring system for asphalt pavements. Construct. Build. Mater. 96, 334–345. https://doi.org/10.1016/j.conbuildmat.2015.07.041 (2015).

Xu, G. H., Chang, G. K., Wang, D. S., Correia, A. G. & Nazarian, S. The pioneer of intelligent construction—An overview of the development of intelligent compaction. J. Road Eng. 2, 334–345. https://doi.org/10.1016/j.jreng.2022.12.001 (2022).

Zhan, Y. et al. Intelligent paving and compaction technologies for asphalt pavement. Autom. Construct. 156, 105081. https://doi.org/10.1016/j.autcon.2023.105081 (2023).

Huang, S. X. & Zhang, W. A fast calculation method of rolling times in the GNSS real-time compaction quality supervisory system. Adv. Eng. Softw. 128, 20–33. https://doi.org/10.1016/j.advengsoft.2018.11.008 (2019).

Han, T., Fang, Z., Zhang, Y. & Han, C. J. A BIM-IOT and intelligent compaction integrated framework for advanced road compaction quality monitoring and management. Comput. Electr. Eng. 100, 107981. https://doi.org/10.1016/j.compeleceng.2022.107981 (2022).

Lu, L. J., Dai, F. & Zaniewski, P. Automatic roller path tracking and mapping for pavement compaction using infrared thermography. Comput.-Aided Civ. Infrastruct. Eng. 36, 1416–1434. https://doi.org/10.1111/mice.12683 (2021).

Gao, H. J., Wang, J. J., Cui, B., Wang, X. L. & Lin, W. W. An innovation gain-adaptive Kalman filter for unmanned vibratory roller positioning. Measurement 203, 111900. https://doi.org/10.1016/j.measurement.2022.111900 (2022).

Shi, M. N., Wang, J. J., Cui, Q. H., Guan, S. W. & Zeng, T. C. Accelerated earth-rockfill dam compaction by collaborative operation of unmanned roller fleet. J. Construct. Eng. Manag. 148, 1083. https://doi.org/10.1061/(ASCE)CO.1943-7862.0002267 (2022).

Zhang, J. Q. et al. Automated guided vehicles and autonomous mobile robots for recognition and tracking in civil engineering. Autom. Construct. 146, 104699. https://doi.org/10.1016/j.autcon.2022.104699 (2023).

Kim, S. & Chi, J. Multi-camera vision-based productivity monitoring of earthmoving operations. Autom. Construct. 112, 103121. https://doi.org/10.1016/j.autcon.2020.103121 (2020).

Rao, A. S. et al. Real-time monitoring of construction sites: Sensors, methods, and applications. Autom. Construct. 136, 104099. https://doi.org/10.1016/j.autcon.2021.104099 (2022).

Lu, L. J. & Dai, F. A thermal-based technology for roller path tracking and mapping in pavement compaction operations. In 2020 Winter Simulation Conference (WSC). 10–15. https://doi.org/10.1109/WSC48552.2020.9383888 (IEEE, 2020).

Sun, Y. M. & Xie, H. Lateral positioning method for unmanned roller compactor based on visual feature extraction. In 2019 3rd Conference on Vehicle Control and Intelligence (CVCI). 1–6. https://doi.org/10.1109/CVCI47823.2019.8951726 (IEEE, 2019).

Yu, G. Q. & Qiu, D. Research on lane detection method of intelligent vehicle in multi-road condition. In 2021 China Automation Congress (CAC). 2779–2783. https://doi.org/10.1109/CAC53003.2021.9728269 (IEEE, 2021).

Alamsyah, S. A., Purwanto, D. & Attamimi, M. Lane detection using edge detection and spatio-temporal incremental clustering. In 2021 International Seminar on Intelligent Technology and Its Applications (ISITIA). 364–369. https://doi.org/10.1109/ISITIA52817.2021.9502232 (IEEE, 2021).

Suttiponpisarn, P., Charnsripinyo, C., Usanavasin, S. & Nakahara, H. An enhanced system for wrong-way driving vehicle detection with road boundary detection algorithm. Proc. Comput. Sci. 204, 164–171. https://doi.org/10.1016/j.procs.2022.08.020 (2022).

Gajjar, H., Sanyal, S. & Shah, M. A comprehensive study on lane detecting autonomous car using computer vision. Exp. Syst. Appl. 233, 120929. https://doi.org/10.1016/j.eswa.2023.120929 (2023).

Duan, J. M. & Viktor, M. Real time road edges detection and road signs recognition. In 2015 International Conference on Control, Automation and Information Sciences (ICCAIS). 107–112. https://doi.org/10.1109/ICCAIS.2015.7338642 (IEEE, 2015).

Nie, X., Gao, Y. Q., Gao, F., Li, Q. & Alam, Z. A novel vision based road detection algorithm for intelligent vehicle. In 2019 IEEE International Conference on Power, Intelligent Computing and Systems (ICPICS). 504–507. https://doi.org/10.1109/ICPICS47731.2019.8942460 (IEEE, 2019).

Tang, J. G., Li, S. B. & Liu, P. A review of lane detection methods based on deep learning. Pattern Recognit. 111, 107623. https://doi.org/10.1016/j.patcog.2020.107623 (2021).

Hao, W. Y. Review on lane detection and related methods. Cognit. Robot. 3, 135–141. https://doi.org/10.1016/j.cogr.2023.05.004 (2023).

Chen, L. C., Zhu, Y. K., Papandreou, G., Schroff, F. & Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In European Conference on Computer Vision (ECCV). 833–851. https://doi.org/10.1007/978-3-030-01234-2_49 (Springer, 2018).

Sandler, M., Howard, A., Zhu, M. L., Zhmoginov, A. & Chen, L. C. Mobilenetv2: Inverted residuals and linear bottlenecks. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. 4510–4520. https://doi.org/10.1109/CVPR.2018.00474 (IEEE, 2018).

Wang, Z. F., Zhang, Y. Y., Mosalam, K. M., Gao, Y. Q. & Huang, S. L. Deep semantic segmentation for visual understanding on construction sites. Comput.-Aided Civ. Infrastruct. Eng. 37, 145–162. https://doi.org/10.1111/mice.12701 (2022).

Jing, J. F., Liu, S. J., Wang, G., Zhang, W. C. & Sun, C. M. Recent advances on image edge detection: A comprehensive review. Neurocomputing 503, 259–271. https://doi.org/10.1016/j.neucom.2022.06.083 (2022).

Wang, L. T., Gu, X. Y., Liu, Z., Wu, W. X. & Wang, D. Y. Automatic detection of asphalt pavement thickness: A method combining GPR images and improved canny algorithm. Measurement 196, 111248. https://doi.org/10.1016/j.measurement.2022.111248 (2022).

Liu, Y. Y. et al. Image semantic segmentation approach based on deeplabv3 plus network with an attention mechanism. Eng. Appl. Artif. Intell. 127, Part A, 107260. https://doi.org/10.1016/j.engappai.2023.107260 (2024).

Wang, J. D. et al. Deep high-resolution representation learning for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 43, 3349–3364. https://doi.org/10.1109/TPAMI.2020.2983686 (2020).

Zhao, H. S., Shi, J. P., Qi, X. J., Wang, X. G. & Jia, J. Y. Pyramid scene parsing network. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 6230–6239. https://doi.org/10.1109/CVPR.2017.660 (IEEE, 2017).

Peng, J. C. et al. Pp-liteseg: A superior real-time semantic segmentation model. https://doi.org/10.48550/arXiv.2204.02681 (2022).

Qin, X. B. et al. U2-net: Going deeper with nested u-structure for salient object detection. Pattern Recognit. 106, 107404. https://doi.org/10.1016/j.patcog.2020.107404 (2020).

Howard, A. et al. Searching for mobilenetv3. In 2019 IEEE/CVF International Conference on Computer Vision (ICCV). 1314–1324. https://doi.org/10.1109/ICCV.2019.00140 (IEEE, 2019).

Feng, J. H., Qin, D. T., Liu, Y. G. & You, Y. Real-time estimation of road slope based on multiple models and multiple data fusion. Measurement 181, 109609. https://doi.org/10.1016/j.measurement.2021.109609 (2021).

Zhao, L. F., Zhang, M. L., Cai, B. X., Qu, Y. & Hu, J. F. One estimation method of road slope and vehicle distance. Measurement 181, 112481. https://doi.org/10.1016/j.measurement.2023.112481 (2023).

Wang, Y. C. et al. Automatic cross section extraction and cross slope measurement for curved ramps using light detection and ranging point clouds. Measurement 228, 114369. https://doi.org/10.1016/j.measurement.2024.114369 (2024).

Acknowledgements

This work was supported by National Natural Science Foundation of China [grant number 61901056], Shaanxi Province Qin Chuangyuan Program - Innovative and Entrepreneurial Talents Project [grant number QCYRCXM-2022-352], and Scientific Research Project of Department of Transport of Shaanxi Province [grant numbers 23-10X, 24-74K].

Author information

Contributions

Pengju Yue: Conceptualization, Methodology, Data curation, Formal analysis, Validation, Writing—original draft, Writing—review and editing. Xiaohua Xia: Funding acquisition, Writing—original draft, Writing—review and editing. Yongbiao Hu: Conceptualization, Supervision. Xuebin Wang: Writing—original draft, Writing—review and editing. Pengcheng He: Data curation, Writing—original draft. Xufang Qin: Writing—original draft.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yue, P., Xia, X., Hu, Y. et al. Unmanned roller lateral positioning method for asphalt road construction. Sci Rep 15, 418 (2025). https://doi.org/10.1038/s41598-024-84575-x

DOI: https://doi.org/10.1038/s41598-024-84575-x