Abstract

Optical Character Recognition (OCR) systems play a crucial role in converting printed Arabic text into digital formats, enabling various applications such as education and digital archiving. However, the complex characteristics of the Arabic script, including its cursive nature, diacritical marks, handwriting, and ligatures, present significant challenges for accurate character recognition. This study proposes a hybrid transformer encoder-based model for Arabic printed and handwritten character classification. The methodology integrates transfer learning techniques utilizing pre-trained VGG16 and ResNet50 models for feature extraction, followed by a feature ensemble process. The transformer encoder architecture leverages its self-attention mechanism and multilayer perceptron (MLP) components to capture global dependencies and refine feature representations. The training and evaluation were conducted on the Arabic OCR and Arabic Handwritten Character Recognition (AHCR) datasets, achieving exceptional results with an accuracy of 99.51% and 98.19%, respectively. The proposed model is evaluated in an extension ablation study using the Arabic Char 4k OCR dataset for training, while testing on the part of the AHCR dataset to evaluate performance on unseen data. The proposed model significantly outperforms individual CNN-based models and ensemble techniques, demonstrating its robustness and efficiency for Arabic character classification. This research establishes a foundation for improved OCR systems, offering a reliable solution for real-world Arabic text recognition tasks.

Similar content being viewed by others

Introduction

Optical Character Recognition (OCR) has emerged as a transformative technology in digitizing text-based materials, facilitating widespread applications such as digital archiving, automated documentation, and natural language processing1. The conversion of printed text into machine-readable formats through OCR systems has significantly advanced the accessibility and usability of information. However, the application of OCR to non-Latin scripts, particularly the Arabic language, poses distinct challenges due to the script’s unique characteristics2. Arabic is a Semitic language with a rich linguistic structure, comprising a cursive script, complex ligatures, diacritical marks, and context-dependent letterforms3. These features introduce text segmentation and recognition intricacies, demanding specialized solutions for accurate Arabic OCR systems.

Arabic, considered to be one of the oldest languages in the world, is the fifth most spoken language and serves as one of the six languages of the United Nations4. The Arabic content is used as a medium of communication for education, business, technology, the web, and social media. Arabic, also as a connected script, is written from right to left. The Arabic script has a relatively richer alphabet with 28 letters, and more precisely, there are a total of 250 Arabic graphemes formed from 28 Arabic letters 5,6.

Figure 1 illustrates samples of printed Arabic characters in different shapes and forms, representing the complex nature of Arabic script with equivalents in Latin letters. The figure showcases a variety of characters, such as "س", "ش", "ص", "ض", "ط", and "ظ", demonstrating how Arabic characters can appear in various contexts, including standalone, initial, medial, and final forms within words7. Each row corresponds to a specific character, and each column highlights its variation depending on its position in a word. Arabic script is inherently cursive, and the shape of a character changes based on its surrounding letters. This dynamic and context-dependent nature of Arabic characters introduces unique challenges in text recognition tasks8. For example, characters like "س" and "ش" exhibit subtle differences, such as diacritical marks, which must be accurately identified. Similarly, "ص" and "ض" share structural similarities, making their recognition more challenging. In addition, Fig. 2 illustrates samples of the Arabic handwritten characters in different shapes and forms.

Despite advancements in OCR technologies, the Arabic script continues to present formidable challenges. Its cursive nature requires sophisticated segmentation algorithms capable of distinguishing between initial, medial, final, and isolated forms of letters. Additionally, the presence of diacritical marks, which alter the phonetic meaning of characters, further complicates the recognition process. Traditional machine learning techniques often struggle to address these complexities effectively, resulting in suboptimal accuracy for Arabic text recognition9.

CNNs, a cornerstone of deep learning, have demonstrated remarkable success in extracting spatial features from images. Models like VGG16 and ResNet50 have been widely adopted for OCR applications due to their robustness in capturing intricate patterns10. Ensemble learning approaches have also gained traction in the OCR domain, leveraging multiple models to enhance prediction accuracy and generalization. By combining the strengths of individual models, ensemble techniques mitigate the limitations of single-model architectures11. The emergence of transformer-based architectures, particularly Vision Transformers (ViTs), has introduced a paradigm shift in OCR systems. Transformers leverage self-attention mechanisms to capture both local and global dependencies within data, making them highly effective for tasks requiring contextual understanding. The self-attention mechanism allows transformers to process entire sequences in parallel, enabling the model to learn intricate relationships between distant elements in the input data7. The attention-based fusion mechanism further optimizes the integration of features, making it adaptable for practical applications in resource-constrained environments, thus contributing significantly to real-world implementations in character classification12.

This study proposes a state-of-the-art hybrid transformer encoder-based model for Arabic printed character classification. The proposed methodology integrates transfer learning techniques with transformer-based architectures to address the unique challenges of Arabic OCR. This hybrid approach combines the strengths of CNNs in feature extraction with the global dependency modeling capabilities of transformers, resulting in a robust and accurate classification model.

The significance of this research lies in its contribution to advancing Arabic OCR systems through the integration of cutting-edge deep learning techniques. The following main contributions of this work:

-

Designed a cutting-edge model that integrates CNN-based feature extraction with transformer-based global context modeling, addressing the challenges of Arabic OCR.

-

Introduced a robust feature fusion process to combine complementary features from multiple CNN models before feeding them into the transformer encoder.

-

The study evaluated the proposed models under three distinct scenarios to comprehensively assess their performance. In the first scenario, the models were evaluated using the Arabic Char 4 k OCR dataset. The second scenario involved the evaluation of the models on the AHCR dataset. In the third scenario, the Arabic Char 4 k OCR dataset was utilized for training the models, while the AHCR dataset served as unseen data for testing, thereby examining the models’ effectiveness in handling previously unseen datasets.

-

The hybrid transformer model provides a stable and scalable solution, minimizing misclassifications and improving recognition for complex scripts like Arabic.

The following sections include a review of previous studies in the section "Related work". In the section "Methodology", there is an in-depth description of the proposed methodology. The section "Results and discussion" assesses the performance of the model about existing methods, while the discussion emphasizes the significance of the results. The section "Conclusions" provides the conclusion and future research.

Related work

There has been research in character recognition from different domains, with literature studying the techniques used and approaches such as optical character recognition, artificial intelligence, and machine learning.

Traditional and machine learning methods

Several methods have been presented to recognize characters using different scripts. In recent years, efforts to advance Arabic Handwritten Text Recognition (HTR) have included collaborations with PRImA Research Lab. One promising solution is Transkribus, a leading HTR tool that has demonstrated success in recognising historical documents, including Arabic scripts13. Additionally, the Defoe tool has been enhanced to integrate advanced natural language processing, enabling the storage of preprocessed text across multiple storage systems and supporting various query types14.

A different research study highlighted the increasing focus on recognizing handwritten Arabic characters among children, utilizing the Hijja dataset, which contains 47,434 letter samples, alongside the AHCD dataset comprising 16,800-character samples15. The study achieved a notable accuracy of 92.96% by applying the SVM classifier while training on both datasets.

This study employed various machine learning methodologies, including RF, KNN, and SVM, utilizing deep features derived from CNN architectures such as VGG16, VGG19, and SqueezeNet. The performance of the proposed model was assessed, achieving an optimal accuracy of 88.8% through the application of neural networks in conjunction with VGG1616.

Furthermore, the authors have proposed and conducted a comparative analysis of two methodologies for the classification of Arabic characters17. The first methodology uses a traditional machine learning approach with an SVM classifier and various feature sets.

The existing literature highlights significant progress in Arabic character recognition, leveraging both traditional machine learning and deep learning approaches. Traditional methods, such as SVM, RF, and KNN, have demonstrated effectiveness in feature-based classification, but their accuracy is often limited by the complexity and variability of handwritten Arabic characters. While studies utilizing datasets like Hijja and AHCD have achieved promising results, their reliance on handcrafted features may not generalize well across different handwriting styles.

A recent study has focused on improving AHR by combining CNNs with various machine learning classifiers. One such approach is the hybrid CNN + Kolmogorov Arnold Networks (KANs) model, which leverages CNNs for feature extraction and KANs for approximating complex functions18. The model achieved an accuracy of 97.71% on the AHCD.

Deep learning and transformer methods

Numerous researchers have focused on feature extraction methods to enhance the accuracy of classification19. Yuting Li et al. introduced a data-efficient ViT method that utilizes only the encoder of the standard transformer, addressing the challenge of limited labeled data in handwritten text recognition20. It incorporates a CNN for feature extraction, replacing the original patch embedding. In addition, the authors utilize a CNN, specifically VGG16, to automate the classification process, enabling the model to learn and extract valuable features directly from images. This was accomplished using a diverse collection of Arabic document images sourced from different origins. The testing on Arabic characters resulted in an average classification accuracy of 92.09%. In addition, the authors present ADOCRNet, a sophisticated deep learning framework developed for the recognition of Arabic documents3. Another model employs a hybrid approach that integrates CNNs with Bidirectional Long Short-Term Memory (Bi-LSTM) networks.

Furthermore, a recent investigation has been conducted focusing on the recognition of air-written Arabic characters through the application of machine learning models, CNN, and OCR techniques21. The framework presented in this study22, achieved a classification accuracy of 98.33% using the VGG 16 for recognizing Arabian handwriting.

The image processing techniques employed include contrast-limited adaptive histogram equalization (CLAHE) for image enhancement and the k-means algorithm for image segmentation, contributing to the overall effectiveness of the handwriting recognition system.

The study11 presents a stacking ensemble classifier combining CNN and BLSTM networks. A key innovation is using the CNN output probability vector as input for the BLSTM meta-classifier, applied to a dataset of 102,352 Arabic digits. This model achieved 99.39% accuracy on the test set, outperforming traditional CNN methods.

In addition, the study7 presented a hybrid deep learning approach combining CNNs with bidirectional recurrent neural Networks, specifically Bi-LSTM and Bi-GRU. By integrating CNNs for spatial feature extraction and Bi-GRU for modelling temporal dynamics, the models effectively address the complexities of Arabic handwriting. Experiments demonstrated state-of-the-art accuracy rates of 97.05% on the AHCR dataset.

Another study23 introduced a technique for the classification of printed Arabic characters through integrated feature extraction methodologies. This encompasses the analysis of black pixel densities, the application of Hu invariant moments, the utilization of Gabor features, and the implementation of CNN classifiers. These combined approaches significantly improve recognition accuracy on the printed Arabic text dataset, PAT-A01. Mohammed et al.24 proposed a “DeepAHR” model designed for the recognition of Arabic handwritten characters through the utilization of the CNN deep learning architecture. This model underwent training on two publicly available datasets, namely Hijaa and AHCD. The DeepAHR model accomplished accuracy rates of 98.66% on the AHCD dataset and 88.24% on the Hijaa dataset.

In contrast, the second employs deep learning through CNNs for self-characterization of Arabic features. A CNN architecture was introduced, and multiple transfer learning strategies assessed on OIHACDB and AIA9K datasets led to accuracies of 94.7%, 98.3%, and 95.2% for different test sets.

A. M. Mutawa et al.25 introduced a model for the recognition of Arabic handwritten text, utilizing advanced deep machine learning methodologies. The proposed model combines a residual network (ResNet) for the extraction of features with BiLSTM and connectionist temporal classification for effective sequence modeling. The system attained a character error rate of 13.2% and a word error rate of 27.31%.

Furthermore, the authors put forth an ensemble technique that employs deep learning to tackle the recognition of Arabic handwritten characters26. This approach integrates hierarchical feature extraction from high-resolution images utilizing pre-trained models, namely the ViT and Inception ResNet V2. When assessed on the HMBD dataset, which is organized according to writing positions, the ensemble model attained test accuracies between 89 and 98%. A different research effort integrated transfer learning and data augmentation techniques to improve the recognition of handwritten Arabic characters27. They utilized the VGG16 model, which was pre-trained and further refined, alongside data augmentation methods. Results from their experiments on the IFHCDB and HACDB datasets revealed recognition accuracies of 96.01% and 97.15%, respectively. Ahmed et al.28 developed a CNN model aimed at the recognition of Arabic characters inscribed by children, attaining an accuracy rate of 91% on the Hijja dataset, which is specifically tailored for children’s handwriting, and 97% on the Arabic Handwritten Character Recognition dataset (AHCR). By integrating the Hijja and AHCR datasets, the model achieved an overall average prediction accuracy of 96%.

The authors29 introduced a handwriting recognition model designed for the classification of handwritten images. This model integrates features derived from four pre-trained convolutional neural networks (CNNs), namely ResNet50 V2, MobileNet V2, ResNet101 V2, and Xception. Utilizing the CSVD dataset, the system implemented preprocessing techniques on concatenated features produced through a parallel deep feature extraction methodology. The model attains an outstanding average accuracy of 99.97%. The researchers conducted the ArCAR mode to identify Arabic text at the character level30. The ArCAR system underwent validation through fivefold cross-validation, addressing two specific applications: Arabic document classification and sentiment analysis. In the context of document classification, the model demonstrated exceptional performance on the Alarabiya-balance dataset, achieving an accuracy of 97.76%, a precision of 94.16%, a recall of 94.08%, and an F1-score of 94.09%.

The deep learning models have significantly improved recognition accuracy by automatically extracting relevant features. CNNs and hybrid architectures incorporating Bi-LSTM, Bi-GRU, and Transformer-based models have achieved state-of-the-art performance, particularly in handling the complexities of handwritten Arabic script. The ADOCRNet and DeepAHR models have pushed accuracy boundaries, with some models surpassing 99% accuracy on well-established datasets.

Furthermore, ensemble models and transfer learning techniques have emerged as effective strategies for improving recognition robustness. The use of ResNet, ViT, and Inception ResNet V2 in hierarchical feature extraction has led to high classification performance, particularly in printed Arabic text. However, some studies focus on specific datasets that may not fully represent broader Arabic script variations, making direct comparisons difficult.

While these advancements are noteworthy, future research should focus on handling low-resource Arabic scripts, reducing model complexity while maintaining accuracy, and improving generalizability across different handwriting styles. However, challenges remain in generalizing across diverse datasets and real-world handwriting variations.

Methodology

This method’s primary goal is to build Arabic-printed character classification models using an Arabic handwritten dataset. Figure 3 illustrates the workflow of the proposed hybrid feature fusion ensemble-based model combined with a transformer ViT architecture for Arabic printed character classification. The methodology encompasses several sequential steps: Initially, the input data is prepared using the Arabic-printed character dataset in conjunction with the AHCR dataset. Subsequently, the images undergo preprocessing, which includes resizing the inputs. The next step involves feature extraction, utilizing transfer learning models; specifically, pre-trained transfer learning models are employed to obtain features from the input images. These models draw upon their learned representations from extensive datasets to proficiently capture spatial and contextual information pertinent to Arabic-printed characters. Then, the top-level features of transfer learning models are extracted without classification layers and then fused to combine complementary ensemble models. The fusion process enhances the robustness and diversity of the extracted features. Then, patch embedding, where the fused features are further segmented into embedded patches to align with the input requirements of the transformer ViT model. Next, the self-attention mechanism within the ViT processes the embedded patches to capture global dependencies and relationships between patches. The output is passed through an MLP to refine the predictions and generate classification outputs. Finally, the processed features are used to classify Arabic-printed characters effectively. This hybrid approach leverages both transfer learning for robust feature extraction and the ViT model for its powerful self-attention mechanism, resulting in improved accuracy and performance for Arabic character recognition.

Datasets description

In this study, two datasets containing printed and handwritten characters are used. Both datasets have multiple diverse ranges of character variations per letter in multiple forms, which are usable and reusable character sets and contribute to the robustness of the model.

The Arabic char 4k OCR dataset

It is an extensive compilation of images of Arabic text, both printed and handwritten, created for training, assessing, and validating optical character recognition systems31. This dataset specifically tackles the distinctive challenges posed by the Arabic script, such as its cursive writing style, the presence of diacritical marks, and intricate ligatures. It generally features scanned or digitally produced images of printed Arabic materials, showcasing a variety of fonts with different styles, sizes, and weights. Additionally, the dataset encompasses a wide range of handwritten texts gathered from numerous writers to reflect individual variations. It includes isolated representations of Arabic characters (29 letters) in their initial, medial, final, and standalone forms with 127,027 images, as well as frequently occurring Arabic ligatures like "لا" (Lam-Alif) to enhance the overall robustness of the model.

Arabic handwritten character recognition dataset (AHCR)

AHCR is a collection of handwritten Arabic characters, typically used for developing and evaluating models for Arabic script recognition32. This dataset contains a wide range of handwritten characters, often including variations in style, size, and stroke patterns, reflecting the natural diversity in handwriting across different individuals. The AHCR dataset typically includes characters from the Arabic alphabet, which consists of 28 primary letters, and is used in this work as an ablation study to test the effectiveness of the proposed model.

Datasets splitting

Table 1 illustrates the division of the Arabic OCR dataset and the AHCR dataset into three distinct subsets: training, validation, and testing. This division for the first dataset is executed according to a specified ratio of 80% for training, 10% for validation, and 10% for testing. The training set has 101,610 images used for training the model. The validation set contains 12,716 images for monitoring performance and fine-tuning. The testing set includes 12,701 images used for an unbiased final evaluation of model performance. For the second dataset, 13,261 samples for training, 1668 samples for validation, and 1662 samples for testing.

This splitting strategy ensures a balanced distribution across all subsets, maintaining consistency in label representation while enabling robust model training, validation, and evaluation. For preprocessing, resizing the images to a fixed dimension of 224 × 224 pixels and normalizing the pixel values to a range of [0, 1].

Artificial intelligence models

This section outlines the various artificial intelligence models employed in the methodology for Arabic printed character classification, highlighting their unique architectures, roles, and contributions to the proposed system.

Convolutional neural network model

A CNN is a feed-forward neural network designed to extract hierarchical features from input data, such as images or sounds. Utilizing backpropagation for training, CNNs can learn complex nonlinear mappings and automatically detect prominent features, demonstrating robustness to variations and distortions in input data33. In this study, we developed two separate transfer learning models, which were trained individually before their features were fused, as described below:

VGG16 model

The VGG16 model, developed by Oxford University, is a pre-trained deep learning architecture often applied through transfer learning. By leveraging its training on the ImageNet dataset, this model extracts meaningful features for similar tasks34. In this study, layers below 17 were fine-tuned and set as untrainable, optimizing its feature extraction capability.

ResNet50 model

ResNet50, a deep CNN architecture introduced by Microsoft, employs residual connections to address the vanishing gradient problem in deep networks. These connections enable the network to skip layers, improving gradient flow35. For this work, layers below 123 are set as untrainable, ensuring efficient feature extraction without overfitting.

Feature extraction

Both VGG16 and ResNet50 are utilized for the extraction of significant features from the input images depicting Arabic characters. These models, which have undergone training on extensive datasets such as ImageNet, are employed for their proficiency in recognizing fundamental spatial features, including edges, textures, and elementary patterns. In the context of this task, the models are fine-tuned by freezing the lower layers while permitting only the upper layers to acquire features pertinent to the recognition of Arabic characters.

The proposed ensemble learning model

An ensemble model is a machine-learning technique that enhances prediction accuracy by combining multiple base models. This approach aims to improve the model’s overall stability and ability to generalize by leveraging the collective strengths of the integrated models, effectively reducing the risk of overfitting. Ensemble models often outperform individual models, providing more reliable and precise predictions. As a result, they are widely used in fields such as image recognition, natural language processing, and predictive analytics36. In this study, the proposed feature extraction method adopts an ensemble strategy that merges two pre-existing deep learning models while excluding their classification layers.

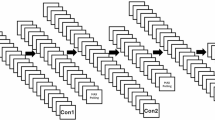

This study employed an ensemble learning approach by identifying an optimal combination of pre-trained deep learning models to serve as the primary framework for feature extraction. As illustrated in Fig. 4, the ensemble method proposed herein integrates multiple pre-trained deep learning models to facilitate the feature extraction process.

Feature fusion

After extracting features using both VGG16 and ResNet50, the next step involves fusion. The outputs from the two models are concatenated, combining their extracted features into a unified representation. This ensemble approach allows the model to benefit from the strengths of both architectures, enabling it to capture a broader range of information, from simple patterns (VGG16) to more complex representations (ResNet50). The fused features are then prepared for further processing in the subsequent stages.

The proposed hybrid transformer encoder-based model

The encoder is one of the two primary components in transformer models. Encoders are built up through several layers, with each layer composed of two parallel network channels: the Multi-Headed Self-Attention and the Feedforward Neural Network with output neurons. Encoders are the models of choice when dealing with input sequences and can function independently of one another. The input sequence is embedded from a 1-dimensional input space to a conceptual D-dimensional space. Each pair of encoders uses identical illustrations37. The Multi-Headed Self-Attention uses a scaled dot-product attention weighted sum function to associate within the input vectors and create a context matrix. The context matrix is the focal point of the encoder and is controlled by a separate layer normalization, then it is forwarded to the next part. Positional encoding can be applied pre- or post-the input sequence embedding unit. This is a vital part of the transformer model that determines the natural order of the input sequence and helps the model to understand context38.

There are two important roles played by the encoder. The first is that it is used in the training loop to minimize the discrepancy between generated probability distributions and oracle probability distributions. Second, it is used during the testing loop to decode given data. Each of the inputs is processed through four layers, from top to bottom, and then pooled to develop a joint tensor by transforming the learned representations. Since the transformer model uses self-attention, it allows the processing input vectors in parallel, thereby enabling it to compute the representations of any input sequence containing vectors in constant time.

In this paper, we propose a transformer encoder model for the task of Arabic printed characters classification. Our proposed model has a transformer encoder-based architecture that has unique features and modifications. The encoded architecture consists of a self-attention mechanism complemented by a multilayer perceptron (MLP) component. Within each block, there is an integration of a normalization layer in conjunction with residual connections. The multilayer perceptron represents a distinct variant of feed-forward neural networks characterized by the incorporation of dense layers and dropout layers, as detailed in the corresponding equations:

Where Q denotes the query vector, V is the value vector with its respective dimensions, and K indicates the key vector. The product exhibits a variance that has a mean of zero. Furthermore, the product is normalized by dividing it by the standard deviation. The SoftMax function then transforms this scaled dot-product into an attention score.

The multi-head attention linearly extends the queries, keys, and values h times using a variety of learned linear projections, and can be calculated by Eqs. (2, 3).

the projections are parameter matrices \({\text{W}}_{i}^{Q}\in {\text{R}}^{{d}_{model} x {d}_{k}}, {\text{W}}_{i}^{K}\in {\text{R}}^{{d}_{model} x {d}_{k}}\), \({\text{W}}_{i}^{V}\in {\text{R}}^{{d}_{model} x {d}_{v}}\) and \({W}^{o}\in {\text{R}}^{{hd}_{v} x {d}_{model}}\). Conversely, the MLP component comprised a non-linear layer utilizing the Gaussian Error Linear Unit (GELU) activation function, incorporating 1024 neurons and batch normalization, with a dropout rate of 50% applied uniformly across all dropout layers.

This mechanism is fundamental to the transformer model, enabling it to provide parallel attention for analyzing the complete content of the input image. Due to the multi-head attention feature, the model can simultaneously engage with input from multiple representation subspaces located in different areas.

Transformer ViT integration

Once the features are fused, they are segmented into embedded patches to align with the input requirements of the ViT. The transformer model processes these patches using its self-attention mechanism to capture both local and global dependencies across the entire image. This hybrid approach ensures that the model can learn both fine-grained details (from CNN features) and contextual information (from ViT’s global relationships), resulting in superior performance for Arabic character classification.

By combining the strengths of transfer learning models and transformer architectures, this methodology achieves improved accuracy and robust feature extraction for Arabic printed character recognition.

Final classification

The transformer encoder processes the embedded patches, and the final classification is carried out through an MLP, which refines the feature representations and generates the classification output.

Evaluation

To evaluate the performance of deep learning models, various metrics are calculated using the outcomes of predictions: True Positives (TP), False Positives (FP), True Negatives (TN), and False Negatives (FN). The equations for the most commonly used metrics are as follows:

Accuracy:

Accuracy measures the proportion of correctly predicted samples out of the total predictions.

Precision (Positive Predictive Value):

Precision indicates how many of the predicted positive instances are correct.

Recall (Sensitivity or True Positive Rate):

Recall measures the ability of the model to correctly identify all relevant instances.

F1 Score:

The F1 Score is the harmonic mean of precision and recall, providing a balanced measure when precision and recall are equally important.

These metrics collectively provide a comprehensive evaluation of a model’s performance, balancing accuracy, precision, recall, and error rates to ensure a thorough analysis of its strengths and weaknesses.

The multi-class confusion metrics are utilized to obtain the values of TP, TN, FP, and FN parameters.

In addition, the area under the receiver operating characteristic (ROC) curve (AUC) serves as a metric for assessing a classifier’s ability to differentiate between distinct classes. For the multi-classification scenario, we adopt a one-class-versus-others strategy to generate the ROC curves along with their corresponding AUC values39. Subsequently, we provide the computed mean AUC values for further interpretation.

Experimental setup

In this section, we describe the experimental setup used for training the model in our study in Table 2. We focus on the configuration of key hyperparameters and the setup for the model’s training process, such as image size, batch size, learning rate, optimizer, early stopping patience, epochs, clip value, and the number of heads in multi-head attention mechanisms. Head value determines how many separate attention mechanisms the model uses to capture different relationships within the input data.

For this training of the proposed AI hybrid model, it used a learning rate of 0.0001, the Adam optimizer with a clip value of 0.2, and an early stopping patience of 11. All models were trained for 25 epochs to fine-tune their hyperparameters. In the encoder section, the image size is set to 224, the patch size to 2, the input size to 20, the dropout rate for all layers to 0.01, and 8 attention heads. For handling multi-class classification tasks, the categorical cross-entropy loss function was applied.

The study utilized an MSI GS66 laptop featuring specific technical specifications, including an 11th-generation Intel Core i7 (11800H) processor, 32 GB of RAM, 2 TB of NVMe SSD storage, and an RTX 3080 graphics card equipped with 16 GB of memory. The analysis presented in this article was performed using Python 3.10 on a Windows 11 operating system, leveraging the Keras and TensorFlow backend libraries for computational tasks.

Results and discussion

This section provides a detailed analysis of the classification performance of the proposed AI models for Arabic printed character recognition. The evaluation focuses on various pre-trained deep learning models, including VGG16 and ResNet50, as well as the ensemble model and the proposed hybrid transformer model. The models were evaluated across three distinct scenarios: the first scenario utilized the Arabic Char 4k OCR dataset, the second employed the AHCR dataset, and the third scenario combined the 4k OCR dataset for training with the AHCR dataset for testing, serving as an ablation study to assess the models’ generalization and robustness across different datasets.

Scenario 1: evaluation of the proposed AI models using the Arabic char 4k OCR dataset

A comparative analysis of the classification performance of individual pre-trained deep learning models, namely VGG16 and ResNet50, alongside the ensemble model and the proposed hybrid transformer model, is evaluated on the testing set as shown in Table 3. The performance of each model is assessed using several key evaluation metrics, including ACC, SEN, PRE, F1 score, and AUC. These metrics offer a comprehensive assessment of each model’s effectiveness in recognizing Arabic-printed characters, providing insight into their strengths and limitations.

The results reveal that the VGG16 model achieves strong performance, with an accuracy of 98.41%, sensitivity of 98.40%, specificity of 98.81%, and an F1 score of 98.41. The model also demonstrates a high AUC value of 99.20, indicating a reliable classification capability. Similarly, the ResNet50 model achieves slightly lower results, with an accuracy of 97.94%, sensitivity of 97.90%, specificity of 98.60%, and an F1 score of 97.89. Its AUC value of 98.90% reflects its slightly lower ability to distinguish between classes compared to VGG16.

The ensemble model, which combines features from multiple models of VGG16 and resNet50, improves the overall performance. It achieves an accuracy of 98.72%, sensitivity of 98.72%, specificity of 98.98%, and an F1 score of 98.74%, with an AUC of 99.30%. These results confirm that ensemble learning enhances the model’s stability and generalization ability by leveraging the strengths of multiple models. The proposed hybrid transformer model significantly outperforms all other models. It achieves the highest accuracy of 99.51%, sensitivity of 99.55%, specificity of 99.49%, and an F1 score of 99.53%. The AUC value reaches 99.70%, demonstrating superior classification performance and the model’s ability to differentiate between classes with high precision.

The proposed hybrid transformer model’s exceptional results can be attributed to its advanced architecture, which integrates a self-attention mechanism and an MLP component. The self-attention mechanism enables the model to capture global dependencies within the data, while the MLP component refines the feature representations for improved classification accuracy.

The discussion of findings indicates that traditional CNN-based models, such as VGG16 and ResNet50, deliver strong performance but are limited in their ability to handle complex relationships in the data. The ensemble model improves upon these results by combining multiple feature extractors, highlighting the benefits of leveraging complementary information. However, the hybrid transformer model achieves the best results due to its ability to process global dependencies through self-attention, which enhances feature extraction and classification accuracy. The high AUC values across all models suggest that they perform well in distinguishing between classes. The proposed hybrid model’s superior metrics highlight its robustness and effectiveness for Arabic printed character classification, demonstrating its potential for real-world OCR applications.

The confusion matrix presented above evaluates the performance of the proposed hybrid transformer model on the Arabic OCR dataset consisting of 29 labels. The model achieved a remarkable 99.51% accuracy with only 62 misclassified cases out of a total of 12,701 test samples, as shown in Fig. 5. The confusion matrix demonstrates a strong diagonal dominance, indicating that the majority of the predictions align perfectly with the true labels. This reflects the model’s excellent performance in correctly classifying Arabic-printed characters.

A total of 62 instances were identified as misclassified, representing a notably low percentage relative to the overall size of the test dataset. The distribution of these misclassifications is limited to a select few labels, implying that the model may intermittently struggle to differentiate between visually analogous characters. The number of correct predictions stands at 12,639, which constitutes roughly 99.51% of the cases. Conversely, the misclassified cases account for about 0.49%. This remarkable performance underscores the resilience and dependability of the proposed hybrid transformer model for tasks related to Arabic optical character recognition. However, there are still occasional misclassifications, primarily involving visually similar Arabic characters, which can be challenging due to their structural similarities. This insight highlights the model’s limitations and suggests areas for improvement, particularly in distinguishing similar characters.

The training and validation accuracy, as well as the training and validation loss, are shown in Fig. 6 for the proposed hybrid transformer model during the OCR character classification task. Figure 6a shows the progression of training and validation accuracy across 25 epochs. The model achieves rapid convergence, with both training and validation accuracy exceeding 95% within the first few epochs and stabilizing close to 99.5% as training progresses. The minimal gap between the training and validation accuracy curves indicates that the model generalizes well to unseen data and does not overfit. Figure 6b depicts the training and validation loss across the same 25 epochs. The training loss and validation loss show a steep decline during the initial epochs, with both stabilizing near 0.01 as the training continues.

The rapid convergence of accuracy and the simultaneous decline in loss indicate that the hybrid transformer model efficiently learns to classify Arabic OCR characters. The close correlation between training and validation curves demonstrates the model’s robustness and generalization performance. Achieving such high accuracy and low loss confirms the effectiveness of the hybrid architecture, particularly the self-attention mechanism, in capturing relevant spatial and contextual features from the input images.

Figure 7 presents the evaluation results of the proposed hybrid transformer model compared to individual AI models VGG16, ResNet50, and the Ensemble model) for Arabic character classification for the evaluation metrics. The proposed hybrid transformer model outperforms all other models across all evaluation metrics, achieving the highest accuracy, sensitivity, specificity, F1 score, and AUC scores. The proposed hybrid transformer model’s superior performance highlights its robustness and ability to accurately classify Arabic characters by leveraging the self-attention mechanism and feature extraction capabilities.

Scenario 2: evaluation of the proposed AI models using the AHCR dataset

The classification performance results presented in Table 4 highlight the comparative effectiveness of the proposed models when evaluated on the AHCR dataset. The VGG16 model achieved an accuracy of 96.69%. The ResNet50 model exhibited marginally better results than VGG16, with an accuracy of 96.99% and a recall of 96.99%. This improvement suggests that ResNet50’s deeper architecture and residual learning capabilities may contribute to its enhanced classification performance. The ensemble model, combining predictions from both VGG16 and ResNet50, outperformed the individual models with an accuracy of 97.59% and an F1 score of 97.51%. The proposed hybrid transformer model demonstrated the best performance across all metrics, achieving an accuracy of 98.19%.

The ensemble model’s performance boost demonstrates the effectiveness of combining predictions to reduce model bias and variability. However, the hybrid transformer model outperformed all others, showcasing the advantage of transformer architectures in capturing contextual information and complex dependencies, which are crucial for accurate character recognition. The AUC values further validate the robustness of the proposed models, with the hybrid transformer achieving the highest score of 99.10%, signifying its reliability in distinguishing between classes.

Figure 8 demonstrates the superior performance of the proposed hybrid transformer model across all metrics. While VGG16 and ResNet50 exhibit solid baseline performances, the ensemble model shows an improvement by combining their outputs. However, the hybrid transformer model consistently achieves the highest values, particularly excelling in AUC 99.1% and precision 98.34%, showcasing its robust capability in handling complex classification tasks. This visual comparison highlights the effectiveness of leveraging transformer-based architectures for Arabic character recognition.

In comparison to both scenarios, the first scenario, the Arabic Char 4k OCR dataset, consists of printed characters, which are more uniform and easier for the model to recognize due to their consistent structure and form. Printed characters typically exhibit less variability compared to handwritten text. In contrast, the AHCR dataset contains handwritten characters, which introduce a higher degree of variability in terms of writing styles, strokes, and shapes. This increased variability makes handwritten character recognition inherently more challenging, resulting in lower performance compared to printed characters.

Capturing local and global dependencies

The model integrates a hybrid approach that utilizes both CNNs and ViT, capturing different aspects of data dependencies as follows:

Local dependencies

The convolutional layers of the VGG16 and ResNet50 models are responsible for extracting local features from the images, such as edges, textures, and basic shapes. These CNNs operate on local receptive fields, meaning they focus on specific small regions within the input data. For example, in the case of Arabic characters, these layers capture fine-grained features like individual strokes, shapes, and letter components. The VGG16 and ResNet50 models are pre-trained on large datasets like ImageNet, which enables them to recognize low-level features, and these extracted features are then used to capture local relationships in the characters.

Global dependencies

The ViT, which is a core part of the hybrid model, addresses the limitations of CNNs by capturing global dependencies across the entire image. Unlike CNNs, which focus on local patches, the ViT uses a self-attention mechanism to compute relationships between all parts of the image simultaneously. This allows the model to consider the global context and understand how individual features (local dependencies) interact across the image. For example, in recognizing Arabic script, the ViT can learn the context in which certain characters appear, thus understanding the relationships between characters, their positions in a word, and their variations due to diacritical marks and ligatures.

Feature fusion and global context modeling

In our hybrid model, feature fusion is applied to combine the local features extracted by the CNNs (VGG16 and ResNet50) with the global context provided by the ViT. This fusion allows the model to leverage both types of information: the local fine-grained features and the global contextual relationships. By passing the combined features through the transformer encoder, the model refines the feature representations, making it capable of handling complex dependencies present in Arabic handwriting and printed text.

The self-attention mechanism in the ViT model is particularly effective in capturing these global dependencies, as it enables the model to focus on the most relevant parts of the image, even when they are far apart. This is crucial for Arabic character recognition, where characters’ meanings are heavily dependent on their context within words and sentences.

Scenario 3: ablation study (Arabic char 4k OCR dataset for training and AHCR dataset for testing)

The ablation study was conducted to further evaluate and validate the effectiveness of the proposed models using an unseen AHCR dataset.

The complete Arabic Char 4k OCR dataset is utilized for training purposes, whereas a part of the AHCR dataset, containing 3699 samples, is employed to evaluate the proposed models.

This ablation study aimed to test the generalizability and robustness of the proposed models when exposed to a dataset different from the training set. Table 5 presents the classification performance of the models based on key evaluation metrics. The results provide a comparative analysis of the models, highlighting the strengths of each approach. The VGG16 model achieved an accuracy of 72.97% with a sensitivity of 72.97%. Similarly, the ResNet50 model showed slight improvements, achieving an accuracy of 74.45% and a sensitivity of 74.42%. The ensemble model, which combines predictions from multiple models, provided further improvements with an accuracy of 74.86%, a sensitivity of 74.83%, and a specificity of 74.62%. The proposed hybrid transformer model demonstrated the best performance among all tested approaches. It achieved the highest accuracy of 75.40%, a sensitivity of 75.40%, and a specificity of 74.87%. This indicates reasonable classification performance despite testing on unseen datasets.

The model maintained strong accuracy across these scenarios, demonstrating its ability to handle diverse handwriting styles. Additionally, the datasets used already encompass a wide range of character variations, fonts, and noise levels, which contribute to the model’s adaptability.

The classification report presented here evaluates the performance of the proposed hybrid transformer model on the unseen AHCR dataset as part of the ablation study in Fig. 9. The evaluation metrics include precision, recall, F1-score, and support for each of the 29 classes in the dataset, along with overall averages for these metrics. The model achieved an overall accuracy of 75%, indicating that it correctly classified 75% of the 3699 samples in the dataset.

Comparing the performance and computational costs of the proposed AI models

This section presents a detailed comparison of the performance and computational costs for the proposed AI models. The models are evaluated based on the number of fine-tuned layers, trainable parameters, total training time in milliseconds, and the average training time per epoch. These metrics are essential for understanding the computational efficiency and effectiveness of each model in terms of both training time and model complexity. The data presented in Table 6 highlights a clear trade-off between model complexity and training efficiency. While VGG16 is the most computationally efficient in terms of both training time and number of parameters, it is outperformed by deeper and more complex models like ResNet50, the Ensemble Model, and the Proposed Hybrid Transformer Model in terms of their ability to capture more intricate patterns within the data.

Overall, the results suggest that while more complex models, such as the hybrid transformer model, may lead to better performance, they come with significantly higher computational costs.

Evaluation of comparisons concerning recent research findings

The evaluation of the proposed model’s performance is compared with the most recent research in Arabic character recognition, as shown in Table 7. The results demonstrate that the hybrid approach presented in this study, utilizing ensemble learning and hybrid transformer ViT models, offers superior accuracy compared to previous methods. The results highlight the steady improvement in Arabic character recognition over time. While earlier approaches relying on traditional machine learning methods or simpler CNN models achieved respectable results, the use of ensemble learning and hybrid transformer models has driven the current state-of-the-art performance, as demonstrated by our proposed work. This progression underscores the importance of adopting more sophisticated techniques in achieving higher accuracy rates and expanding the capabilities of automated Arabic text recognition systems.

Limitations and future work

While the proposed hybrid transformer ViT model demonstrates exceptional accuracy and robustness for Arabic printed and handwritten character classification, some limitations need to be considered.

The model may face challenges when applied to noisy, distorted, or low-quality scans, as it currently performs best on clean images; future work will involve augmenting the dataset with such conditions to improve robustness. Additionally, while the hybrid transformer model is highly accurate, it requires significant computational resources for both training and inference, so optimization techniques like pruning and quantization will be explored to reduce its computational footprint. Furthermore, although the model performs well on Arabic OCR and AHCR datasets, further testing on diverse datasets with varying languages and handwriting styles is necessary to evaluate its generalization across broader OCR tasks with new techniques such as federated learning and explainable AI40.

Conclusions

This study proposed a hybrid transformer encoder-based model for Arabic printed character classification, effectively combining advanced transfer learning techniques with a self-attention mechanism. The methodology utilized pre-trained models such as VGG16 and ResNet50 to extract meaningful features, followed by feature fusion and processing through the transformer encoder for robust classification. The hybrid transformer model achieved a remarkable accuracy of 99.51% on the Arabic OCR dataset and 98.19% on the AHCR dataset, with superior performance metrics such as the sensitivity of 99.55% and 98.19%, respectively. These results highlight the robustness and efficiency of the proposed approach in accurately recognizing Arabic-printed characters.

These results significantly surpass traditional CNN models and ensemble approaches, as validated by the evaluation metrics and confusion matrix analysis. The proposed model’s superior performance can be attributed to its ability to capture local and global dependencies within the data using self-attention mechanisms, while the MLP component further refined the feature representations. Compared to individual models and existing methodologies, the hybrid model achieved higher accuracy and generalization, making it a robust solution for Arabic optical character recognition tasks. This study explored the classification of printed and handwritten Arabic letters; however, it still requires a more diverse multimodal Arabic dataset and a reduction in training time. Future work will explore additional improvements through multimodal data and augmentation, multilingual OCR, real-time processing, optimization of transformer layers, and testing the model’s adaptability to other languages and handwritten text. This research establishes a solid foundation for leveraging hybrid architectures in OCR applications and provides significant advancements for real-world Arabic character recognition systems.

Data availability

The datasets used in this paper are publicly available at: https://github.com/HusseinYoussef/Arabic-OCR/tree/master and https://github.com/HossamBalaha/HMBD-v1.

References

Kasem, M. S. E., Mahmoud, M., & Kang, H.-S. Advancements and challenges in Arabic optical character recognition: a comprehensive survey. arXiv Prepr. arXiv:2312 (2023).

Balaha, H. M., Ali, H. A. & Badawy, M. Automatic recognition of handwritten Arabic characters: a comprehensive review. Neural Comput. Appl. 33, 3011–3034 (2021).

Mosbah, L. et al. ADOCRNet: a deep learning OCR for Arabic documents recognition. IEEE Access 12, 55620–55631 (2024).

Chejne, A. G. The Arabic Language: Its Role in History (Minnesota Press, 1968).

Nemeth, T. Arabic Type-Making in the Machine Age: The Influence of Technology on the Form of Arabic Type, 1908–1993 (Brill, 2017).

El Rifai, H., Al Qadi, L. & Elnagar, A. Arabic text classification: the need for multi-labeling systems. Neural Comput. Appl. 34, 1135–1159 (2022).

Mahdi, M. G., Sleem, A., Elhenawy, I. M. & Safwat, S. Enhancing the recognition of handwritten Arabic characters through hybrid convolutional and bidirectional recurrent neural network models. Sustain. Mach. Intell. J. 9, 34–56 (2024).

AlifahRoslan, N., IzuraUdzir, N., Mahmod, R. & Gutub, A. Systematic literature review and analysis for Arabic text steganography method practically. Egypt. Inform. J. 23, 177–191 (2022).

El Khayati, M., Kich, I. & Taouil, Y. CNN-based methods for offline arabic handwriting recognition: a review. Neural Process. Lett. 56, 115 (2024).

Faizullah, S., Ayub, M. S., Hussain, S. & Khan, M. A. A survey of OCR in Arabic language: applications, techniques, and challenges. Appl. Sci. 13, 4584 (2023).

Haghighi, F. & Omranpour, H. Stacking ensemble model of deep learning and its application to Persian/Arabic handwritten digits recognition. Knowl.-Based Syst. 220, 106940 (2021).

Venkatasubramanian, K. et al. Unified deep learning framework integrating CNNs and vision transformers for efficient and scalable solutions. SSRN Electron. J. https://doi.org/10.2139/ssrn.5077827 (2025).

Keinan-Schoonbaert, A. Using Transkribus for Arabic handwritten text recognition. Br. Libr. Digit. Scholarsh. Blog (2020).

Filgueira, R. et al. Extending defoe for the Efficient Analysis of Historical Texts at Scale. in 2021 IEEE 17th International Conference on eScience (eScience) 21–29 (IEEE, 2021). https://doi.org/10.1109/eScience51609.2021.00012.

Alwagdani, M. S. & Jaha, E. S. Deep learning-based child handwritten Arabic character recognition and handwriting discrimination. Sensors 23, 6774 (2023).

Mohammed, A. & Kora, R. An effective ensemble deep learning framework for text classification. J. King Saud. Univ.-Comput. Inf. Sci. 34, 8825–8837 (2022).

Soumia, F., Djamel, G. & Haddad, M. Handwritten Arabic Character Recognition: Comparison of Conventional Machine Learning and Deep Learning Approaches, 1127–1138. https://doi.org/10.1007/978-3-030-70713-2_100 (2021).

Alsayed, A., Li, C., Abdalsalam, M. & Fat’hAlalim, A. A hybrid model for Arabic character recognition using CNN and Kolmogorov Arnold Networks (KANs). Multimed. Tools Appl. https://doi.org/10.1007/s11042-025-20934-8 (2025).

Alghamdi, T., Snoussi, S. & Hsairi, L. Arabic document classification by deep learning. Int. J. Adv. Comput. Sci. Appl. 12(10), 314–321 (2021).

Li, Y., Chen, D., Tang, T. & Shen, X. HTR-VT: Handwritten text recognition with vision transformer. Pattern Recognit. 158, 110967 (2025).

Nahar, K. M. O. et al. Recognition of Arabic air-written letters: machine learning, convolutional neural networks, and optical character recognition (OCR) techniques. Sensors 23, 9475 (2023).

Ritonga, M. et al. Optimized convolutional neural network deep learning for Arabian handwritten text recognition. Bull. Electr. Eng. Inform. 14, 1497–1506 (2025).

Bouchakour, L., Meziani, F., Latrache, H., Ghribi, K. & Yahiaoui, M. Printed Arabic Characters Recognition Using Combined Features and CNN classifier. in 2021 International Conference on Recent Advances in Mathematics and Informatics (ICRAMI) 1–5 (IEEE, 2021). https://doi.org/10.1109/ICRAMI52622.2021.9585941.

AlShehri, H. DeepAHR: a deep neural network approach for recognizing Arabic handwritten recognition. Neural Comput. Appl. 36, 12103–12115 (2024).

Mutawa, A. M., Allaho, M. Y. & Al-Hajeri, M. Machine learning approach for Arabic handwritten recognition. Appl. Sci. 14, 9020 (2024).

Rouabhi, S., Azerine, A., Tlemsani, R., Essaid, M. & Idoumghar, L. Conv-ViT fusion for improved handwritten Arabic character classification. Signal, Image Video Process 18, 355–372 (2024).

Amara, M., Smairi, N., Mnasri, S. & Zidouri, A. Revitalizing Arabic character classification: unleashing the power of deep learning with transfer learning and data augmentation techniques. Arab. J. Sci. Eng. 49, 12791–12815 (2024).

Alheraki, M., Al-Matham, R. & Al-Khalifa, H. Handwritten Arabic character recognition for children writing using convolutional neural network and stroke identification. Hum.-Centric Intell. Syst. 3, 147–159 (2023).

Hamida, S., El Gannour, O., Cherradi, B., Ouajji, H. & Raihani, A. Handwritten computer science words vocabulary recognition using concatenated convolutional neural networks. Multimed. Tools Appl. 82, 23091–23117 (2023).

Muaad, A. Y., Jayappa, H., Al-antari, M. A. & Lee, S. ArCAR: a novel deep learning computer-aided recognition for character-level Arabic text representation and recognition. Algorithms 14, 216 (2021).

Youssef, H. Arabic-OCR. Github https://github.com/HusseinYoussef/Arabic-OCR/tree/master (2020).

Balaha, H. M., Ali, H. A., Saraya, M. & Badawy, M. A new Arabic handwritten character recognition deep learning system (AHCR-DLS). Neural Comput. Appl. 33, 6325–6367 (2021).

Cong, S. & Zhou, Y. A review of convolutional neural network architectures and their optimizations. Artif. Intell. Rev. 56, 1905–1969 (2023).

Qassim, H., Verma, A. & Feinzimer, D. Compressed residual-VGG16 CNN model for big data places image recognition. in 2018 IEEE 8th Annual Computing and Communication Workshop and Conference (CCWC) 169–175 (IEEE, 2018). https://doi.org/10.1109/CCWC.2018.8301729.

Koonce, B. ResNet 50. In Convolutional Neural Networks with Swift for Tensorflow 63–72 (Apress, Berkeley, CA, 2021). https://doi.org/10.1007/978-1-4842-6168-2_6.

Shetty, A. & Sharma, S. Ensemble deep learning model for optical character recognition. Multimed. Tools Appl. 83, 11411–11431 (2024).

Chen, S. & Guo, W. Auto-encoders in deep learning: a review with new perspectives. Mathematics 11, 1777 (2023).

Al-Hejri, A. M. et al. ETECADx: ensemble self-attention transformer encoder for breast cancer diagnosis using full-field digital X-ray breast images. Diagnostics 13, 89 (2022).

Pedregosa, F. et al. Scikit-learn: machine learning in python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Al-Hejri, A. M. et al. A hybrid explainable federated-based vision transformer framework for breast cancer prediction via risk factors. Sci. Rep. 15, 18453 (2025).

Acknowledgements

The authors extend their appreciation to Taif University, Saudi Arabia, for supporting this work through project number (TU-DSPP-2024-52).

Author information

Authors and Affiliations

Contributions

Mohammed R. Al-Maamari: Conceptualization; Data curation; Formal analysis; Investigation; Methodology; Resources; Software; Visualization; Writing—original draft. Rakesh Ramteke: Conceptualization; Methodology; Investigation; Data Curation; Formal Analysis; Writing—Review & Editing; Resources; Validation; Supervision. Aymen M. Al-Hejri: Conceptualization; Methodology; Investigation; Data Curation; Formal Analysis; Writing—Review & Editing; Resources; Validation; Supervision. Sultan S. Alshamrani: Formal analysis; investigation, validation, visualization, writing (review & editing), revising key sections, and interpreting results.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Al-Maamari, M.R., Ramteke, R., Al-Hejri, A.M. et al. Integrating CNN and transformer architectures for superior Arabic printed and handwriting characters classification. Sci Rep 15, 29936 (2025). https://doi.org/10.1038/s41598-025-12045-z

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-12045-z

Keywords

This article is cited by

-

Predicting tourism growth in Saudi Arabia with machine learning models for vision 2030 perspective

Scientific Reports (2026)

-

A hybrid vision transformer with ensemble CNN framework for cervical cancer diagnosis

BMC Medical Informatics and Decision Making (2025)