Abstract

Infrared remote sensing (IRS) ship detection faces challenges such as low resolution and environmental interference, with issues being particularly pronounced for small targets. This study proposes a lightweight architecture based on RT-DETR, termed RT-DETR-CST: A Cross-Channel Feature Attention Network (CFAN) is constructed, which achieves channel-weighted feature fusion via residual connections to suppress invalid background channels, addressing the problem of inter-channel information imbalance in infrared images and the suppression of small-target features by background noise. A Scale-Wise Feature Network (SWN) is developed, utilizing depthwise separable convolutions and stochastic depth for multi-scale feature extraction, where stochastic depth enhances the model’s robustness to small-target features. A Texture/Detail Capture Network (TCN) is built, achieving edge/detail capture through linear decomposition and low-cost channel fusion to solve the problems of target edge blurring and detail feature loss in infrared images caused by low signal-to-noise ratios. Experiments on the ISDD datasets show that RT-DETR-CST achieves an mAP0.5 metric of 89.4% (a 4.9% improvement over RT-DETR), reduces model size to 23.7 MB (a 41.5% reduction), and achieves an inference speed of 207.2 FPS. Ablation experiments validate the effectiveness of each module, demonstrating the model’s superior accuracy, lightweight design, and real-time performance in infrared ship remote sensing small-target detection. Furthermore, the generalization verification on the SSDD and SIRST datasets shows that the proposed model is effective in both infrared and SAR remote sensing small target detection.

Similar content being viewed by others

Introduction

China has a vast sea area and rich marine resources, and all-weather monitoring of fishery, shipping, and other aquatic activities is significant for marine development and the national economy. With the rapid growth of remote sensing and target detection technology, ship remote sensing image detection and recognition1 plays a key role in maritime.Infrared remote sensing images2 have low image resolution, low contrast, low signal-to-noise ratio, etc. They are easily affected by changes in light, temperature and weather conditions, making it difficult to achieve the same detection performance at night as in the daytime, which restricts the accurate detection of ships3. However, the light emitted from marine targets at night forms a scintillating image, which can be used to identify the positions of platforms and ships4 and then map their spatial distribution to meet the demand for monitoring activities at night. This can be used to identify the positions of platforms and ships and then map the spatial distribution of platforms and vessels. This meets the demand for night-time activity monitoring and makes up for the shortcomings of night-time marine target detection5. Many traditional algorithms have emerged to achieve real-time positioning of ships at night.Since the sea surface is more uniform than the target, Yang et al.6 defined intensive metrics to distinguish anomalies from relatively similar backgrounds. Zhu et al.7 firstly segmented images to obtain simple shapes, and then extracted shape and texture features from ship candidates. Finally, three classification strategies were used to classify ship candidates. In calm seas, the results of the above method are stable. However, the algorithm based on low-level features has poor robustness when wave, cloud, rain, fog, or reflection occur.

Therefore, the current main challenges include the significant impact of environmental factors:

-

1.

Infrared radiation is susceptible to atmospheric and lighting conditions during transmission, resulting in differences in thermal radiation and affecting image quality and information accuracy. Clouds, rain, snow, and other adverse weather conditions further increase the difficulty of ship feature extraction8.

-

2.

Limited spatial resolution: The spatial resolution of infrared remote sensing images is limited9. Therefore, the key details of the ship features in the imaging often appear blurred, making it difficult to accurately differentiate the type of ship in traditional detection methods. This may even lead to misdetection or omission of detection.

-

3.

The complexity of data processing and analysis: The grey value of infrared remote sensing images mainly reflects the thermal radiation characteristics of the features, and there are significant differences with the grey scale, morphology and other fine-grained features of visible photos, requiring the algorithm to be targeted. The current ship feature recognition capability is limited, and the detailed features are not prominent10,11.

To address the above problems, this study proposes an RT-DETR-CST algorithm based on the RT-DETR model, improving ships’ detection accuracy on the sea surface while maintaining a lightweight model. The contributions of this study are as follows:

-

A CFAN network structure is proposed to achieve optimal feature representation through channel weighting and interactive fusion for adaptive fusion of intermediate representations from two feature extraction branches. This structure strikes a balance between more targeted channel focus and cross-branch complementarity, thus enhancing the subsequent network’s ability to focus on key regions and semantic information.

-

The SWN module effectively combines separable convolution, residual structure and stochastic depth techniques through a compact network design with moderate channel expansion, enabling the extraction of rich and diverse spatial semantic features within a limited computational budget. It also provides more stable high-level feature support in various downstream vision tasks.

-

A TCN network structure is proposed, which divides the input features into a smooth backbone and multiple directional differential branches and generates compact multi-channel representations through low-cost channel-level fusion. This provides rich feature combination possibilities for the subsequent network layers, improves the model’s recognition performance in complex scenes, and maintains inference efficiency.

Discussion

The development of marine target detection methods has gone through two main phases in recent years. The first phase was the early traditional ship detection methods, while the second phase was the rapid development of deep learning-based methods, which rely on large amounts of data and multiple features to identify targets.

Multi-stage target detection algorithm

The Mask R-CNN model12, known for its high accuracy in instance segmentation, lags in detection speed against single-stage networks like YOLO. Zhang et al.13 proposed a high-speed SAR ship detection method using Depth Separable Convolutional Neural Networks (DSCNN), which cuts traditional CNN convolution parameters and boosts speed. Xu et al. introduced GWFEF-Net, which enhances SAR image detection using dual-polarised features. The Oriented R-CNN model14 combines Oriented RPN and mid-point offset presentations to achieve accuracy and efficiency in directional target detection, setting a benchmark for future studies. Despite its advances, improvements are needed in transparency and adaptability to complex scenes. Grid R-CNN15 improves localization accuracy with grid-guided localization and feature fusion strategies, effective in high IoU conditions. CRF-RNN16 excels in polyp segmentation with architectural and loss function enhancements but could benefit from computational efficiency, data diversity, and clinical applicability optimizations. Cascade R-CNN17 uses a multi-stage cascade structure for enhanced high IoU threshold detection, showing broad applicability and practicality. However, computational complexity and detection of small targets remain challenging. Sparse R-CNN18 simplifies the target detection process by utilizing a sparse detection method with dynamic instance interaction and learnable proposals, which requires further refinement in feature extraction and integration with other techniques. Dynamic R-CNN19 improves detection accuracy and efficiency by dynamically adjusting label assignment and SmoothL1 loss. However, its strategy could fail in unique scenarios, and its compatibility with different backbones requires validation.

CNN-based single-stage target detection algorithm

Chen20 et al. integrated a lightweight attention extension module DAM into the YOLOv3 model to improve the performance with less computational increase so that the improved ImYOLOv3 maintains a higher detection speed while guaranteeing a certain degree of accuracy and achieves a better balance of detection accuracy and speed, but in extreme weather, special lighting conditions, etc., the paper did not target these extremely complex However, in extreme weather, special lighting conditions, etc., the paper did not fully test and optimize for these extreme complex scenes, and the robustness and accuracy of the model may be insufficient when facing such scenes. Huang21 et al. introduced a receptive field block (RFB) into the YOLOv4 model to expand the receptive field and improve the detection of small targets. They optimized the YOLOv4 backbone network and designed a new receptive field extension module, DSPP, to improve the robustness of the model to target motion and effectively reduce false and missed detections caused by background interference. Still, the insufficient dataset makes it difficult to satisfy the demand for accurate identification of ship types, which restricts the application of the model in finer tasks. Xu22 et al. applied the YOLOv5 model to SAR image detection. They designed a lightweight cross-level partial module L-CSP to reduce the computational load and achieve accurate ship detection performance. Zhang et al.23 proposed MGSFA-Net, which introduces scattering features for SAR search and rescue ship recognition. By integrating segmentation, scattering center extraction, and associated feature construction with deep feature weighted fusion, it achieves a 2%−3% accuracy improvement on FUSAR-Ship and OpenSARShip datasets under few-shot conditions. Feature visualization validates its multi-scale global scattering feature representation capability. Liu et al.24 addressed optical-SAR modal differences with a heterogeneous two-branch framework (CNN for optical local features and VMamba for SAR global structures) and a dynamic gating fusion module (combining multi-scale extraction, self-attention, and dynamic gating), demonstrating robust performance on medium/high-resolution datasets with balanced category-wise accuracy. Zhang et al.25 developed a cross-sensor SAR detection method using dynamic feature discrimination (DFDM) and center-aware calibration (CACM), achieving 6%−20% mAP/F1 improvements on MiniSAR and FARAD datasets.

Applying deep learning to target detection improves accuracy in complex contexts and supports real-time, precise and robust detection.

Transformer series

The Deformable DETR model26 significantly improves the efficiency and accuracy of end-to-end target detection through the innovative deformable attention mechanism and multi-scale feature fusion, especially making breakthroughs in small object detection and convergence speed. However, its computational cost and the real-time problem still need to be further optimized, and its application scenarios can be expanded by combining with lightweight design or hardware acceleration technology. The DINO model27 significantly improves the performance and training efficiency of DETR by comparing denoising training, hybrid query selection and cross-layer optimization. Innovative methods such as deformable attention, denoising training and contrast learning significantly improve the performance and efficiency of end-to-end target detection. However, there is still room for optimization in terms of computational cost, generalization, and inference speed, which needs to be combined with lightweight design or hardware acceleration to expand the application scenarios further in the future. DenseBox28 provides an important idea for single-stage detection and significantly improves the accuracy and consistency of target detection in complex scenes by integrating key point localization through end-to-end fully convolutional network architecture and multitask learning, especially in the small targets and occlusion scenes show strong robustness. However, the room for improvement needs to be further explored with subsequent techniques (e.g., Transformer, dynamic convolution) to adapt to the real-time detection needs in complex scenes. The core innovation of Edge Boxes29 is to associate edge integrity with objecthood and to achieve sub-second computational efficiency through efficient data structure and edge affinity modeling while guaranteeing high recall and providing a lightweight idea for proposal generation. It provides a lightweight idea. However, its limitations focus on strong edge dependence, simple geometric feature modeling, and insufficient parameter self-adaptation. In conclusion, there is still room for improvement in computational efficiency, generalization ability and automated parameter tuning, and it needs to be combined with a lightweight design, hardware acceleration and adaptive learning mechanism to expand the practical application scenarios further.

However, deep learning has not yet been widely applied in this field due to the low resolution andfewer features extracted from microlight images. Traditional target detection algorithms mainly identify ships by examining their shape and texture features or using threshold analysis and statistical analysis techniques.Methods based on shape and texture features rely on manual feature extraction, which increases computational complexity and often leads to high false and missed detection rates. These methods are usually applied in optical remote sensing and synthetic aperture radar (SAR)30.

Methods

The marine environment is complex and variable, and ship targets are often affected by a variety of factors such as waves, weather, light, and angle, making detection difficult. Although deep learning technology has improved the performance of target detection algorithms, ship detection is still a challenge. Traditional algorithms are deficient in accuracy and efficiency, while the Transformer-based target detection model RT-DETR stands out with its innovative architecture and efficient performance. RT-DETR is a real-time target detection model based on the Transformer architecture, and its main features include an efficient hybrid encoder and IoU-aware query selection, which can effectively reduce computational cost and improve detection accuracy. In addition, RT-DETR achieves end-to-end training and inference by removing the traditional non-maximum suppression (NMS) step, which further improves the detection speed. This end-to-end training approach allows for the complete integration of model input to output without the need for complex post-processing steps.RT-DETR is suitable for a variety of real-time application scenarios, such as ocean monitoring, port management, ship tracking, etc., and is capable of fast inference on ordinary GPUs. It combines deep learning advancements such as hybrid encoder architecture and learnable object queries, making it an important tool in the field of target detection. In order to enhance the performance of UAVs for ship detection in marine surveillance, this study proposes an improved RT-DETR-CST algorithm based on RT-DETR. The structure of the neural network is shown in Fig. 1 below. The algorithm effectively improves the detection accuracy of ships by optimizing the query selection and feature fusion mechanisms while maintaining the model size, number of parameters, and detection speed.

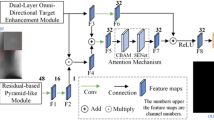

Cross-Feature Attention Fusion Network (CFAN) module

Feature fusion is an important task in deep learning, especially in multi-branch network structures. In remote sensing ship detection, the challenge lies in effectively combining multi-source heterogeneous information where ships exhibit distinct spectral and spatial characteristics against complex maritime backgrounds. To address this, this study proposes a Cross-Feature Attention Fusion Network (CFAN), the neural network is shown in Fig. 2, the core idea of which is to achieve optimal representation of features through channel-weighting and interactive fusion for adaptively fusing intermediate representations from two feature extraction branches. This dual-branch architecture is particularly crucial for simultaneously capturing global contextual information (essential for distinguishing ships from sea clutter and waves) and local detail features (critical for identifying small vessels in vast ocean areas). Compared with conventional channel-by-channel or direct summation fusion methods, this module is able to strike a balance between more targeted channel attention and cross-branch complementarity, thus enhancing the ability of the subsequent network to focus on key regions and semantic information.

Firstly, CFAN receives two input feature maps, denoted as \(\ X_0 \in {R}^{B \times C_0 \times H \times W}\) and \(\ {X}_1 \in {R}^{B \times C_1 \times H \times W}\) where B is the batch size \(\ C_0\) and \(\ C_1\) are the number of channels of the two feature maps, respectively, H and W are the spatial dimensions of the feature maps. In order for both to have the same number of channels,if \(\ C_0 \ne C_1\),Then, one of the feature maps is channel-aligned by a convolutional layer. Let the transformed feature map be \(\ {X}_0^{'} \in {R}^{B \times C \times H \times W}\) included among these \(\ C = \max (C_0, C_1)\). The other feature map \(\ X_1\) remains unchanged. This alignment process ensures that features from different scales or semantic levels in remote sensing imagery can be effectively integrated without information loss.

Next, these two aligned feature maps are spliced in the channel dimension to obtain:

The goal of this step is to efficiently combine information from different paths so that they can jointly participate in the computation during the subsequent channel weighting process. Through splicing, we are able to integrate the local and global information of the two path feature maps within a larger feature space, which is particularly important for remote sensing where small ships occupy minimal pixels while background regions dominate the image.

To further enhance the model’s focus on important features and address the inherent challenge of feature scale imbalance in remote sensing, CFAN introduces a channel weighting mechanism to process the spliced feature maps using the Squeeze-and-Excitation (SE) module. The SE module performs global pooling on \(\ {X}_{\text {concat}}\), generating a channel descriptor vector \(\ {Z} \in {R}^{2C}\) with length equal to the number of channels in 2 C, then obtains the weighting coefficient \(\ {W} \in {R}^{2C}\) for each channel through an MLP layer. This adaptive channel attention mechanism can selectively enhance ship-relevant feature channels while suppressing background noise channels, effectively improving the signal-to-noise ratio crucial for detecting small targets in satellite and aerial imagery. The SE module is able to adaptively adjust the importance of each channel and output a weighted feature map \(\ {X}_{\text {att}} \in {R}^{B \times 2C \times H \times W}\).

Then we divide the weighted feature map \(\ {X}_{\text {att}}\) into two parts according to the original channels: \(\ {X}^{0}_{\text {att}} \in {R}^{B \times C \times H \times W}\) and \(\ {X}^{1}_{\text {att}} \in {R}^{B \times C \times H \times W}\). This corresponds to the weighted features of \(\ {X}^{'}_{\text {0}}\) and \(\ {X}_{\text {1}}\) of the weighted features. At this point, we multiply the original feature map and the weighted feature map element by element to obtain:

where \(\ \otimes\) denotes element-by-element multiplication. In this way, the network is able to use the learned channel weighting coefficients to adjust the original feature map, highlighting important features such as ship contours and wake patterns while suppressing irrelevant information like sea surface noise.

Next, in order to make full use of the complementary information of the two features, CFAN fuses the weighted feature maps with the original features by residual fusion. Specifically, the fused feature map obtained is:

At this point, the residual linkage can effectively combine the information from different sources to enhance the expressive power of the final output. This residual fusion strategy is essential for preserving both fine-grained texture information and high-level semantic features, maintaining ship detection accuracy across varying scales and imaging conditions typical in remote sensing scenarios where atmospheric conditions and sensor characteristics can significantly affect image quality.

Finally, CFAN splices these two weighted fused feature maps to obtain the final output:

In this way, the model can not only use the information in each branch but also establish deeper interconnections between different features, thus improving the quality and diversity of feature representation necessary for distinguishing ships from complex maritime backgrounds.

In summary, CFAN optimizes multi-feature fusion through adaptive channel weighting and residual fusion specifically tailored for remote sensing challenges. It captures feature correlations more effectively than traditional methods, boosting performance in complex maritime scenes. Core operations include channel alignment, weighting, residual fusion, etc., enhancing key regions while reducing redundancy inherent in large-scale satellite imagery.

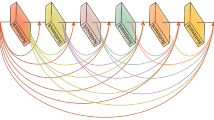

SynapticWeave Network (SWN) module

To explore the multi-scale information of the image fully under the premise of low computation, this paper introduces a hierarchical convolutional structure called SynapticWeave Network in the backbone network part of the model. This multi-scale hierarchical design directly addresses the scale variance problem inherent in satellite and aerial imaging, where ships can appear at drastically different sizes depending on sensor resolution, altitude, and vessel type. The structure of the neural network is shown in Fig. 3 below.

Firstly, SynapticWeave Network sets up a simple stem layer (Stem) at the front end to perform the initial feature transformation on the input image and compress its spatial dimension. This layer mainly consists of a convolution operation with a step size of 2 combined with normalization, where the three-channel information from the original remote sensing image is input and mapped to a specified number of baseline channels after a 3 * 3 convolution to provide a larger channel capacity for subsequent multi-scale abstraction. Let the input image batch be \(\ X^{(0)} \in {R}^{B \times 3 \times H \times W}\). The stem layer output can then be written as \(X^{(1)} = \text {Stem}(X^{(0)})\), where \(X^{(1)} \in {R}^{B \times C_s \times \frac{H}{2} \times \frac{W}{2}}\), \(C_{\text {s}}\) is taken as 32 to ensure sufficient capacity for subsequent feature channels while maintaining computational efficiency for processing large-scale remote sensing images.

Next, the SynapticWeave Network progressively extracts features through four successively connected Stages. Each Stage uses a convolution with a step size of 2 to downsample the input features as they arrive, and expands the channel dimensions accordingly. This progressive downsampling strategy with expanding channel dimensions follows the principle of information preservation–as spatial resolution decreases, channel capacity must increase to maintain the same information content, which is particularly crucial for preserving small ship signatures that might otherwise be lost in aggressive downsampling. This operation not only reduces the resolution of the feature map spatially, but also lays the foundation for more channels to be processed in parallel. The downsampled features are fed into several residual blocks for further cascading abstraction. Let the ith Stage contain \(\ {d}_{\text {i}}\) Blocks, then the output of this Stage can be expressed as:

In the actual implementation, in order to balance the computation and feature expression, the four Stages are stacked with 1, 2, 6, and 2 Blocks respectively, which ensures that the network is able to learn the high-level semantics layer by layer, capturing features ranging from low-level ship edges to high-level vessel categories, but does not lead to high computation cost due to too deep stacking.

In each Block, the convolution is simply combined with batch normalisation (BN) (denoted as ConvBN), and supplemented by residual branching to perform depth-separable convolutional transform and channel fusion on the input features. The depth-separable convolution operations are particularly optimal for remote sensing applications as they decouple spatial and channel-wise feature learning, allowing the network to efficiently model the anisotropic characteristics of ships (elongated structures with specific length-to-width ratios) while maintaining computational efficiency. For ease of expression, let X denote the feature that enters the Block, and remember that the depth-separable convolutional branching process yields \(\ {X}_{\text {dw}}\), and let \(\ {Conv}_{1\times 1}^{f_1}\) and \(\ {Conv}_{1\times 1}^{f_2}\) denote the two parallel 1\(\times\)1 convolutional mappings, respectively, then it can be written:

Where \(\sigma\) is the ReLU6 activation function, and \(\text {Conv}_{1\times 1}^{\text {post}}\) maps the number of fused channels back to the input size and performs a BN operation, to enable stochastic depth dropout on the residual path during training. This stochastic depth mechanism provides regularization benefits specifically tailored to the sparse target distribution in maritime scenes, preventing overfitting to vast background regions while maintaining sensitivity to rare ship instances, further enhancing the network’s generalization ability. Thus, the element-by-element multiplication and depth-separable convolution inside the block effectively fuse multi-channel and multi-branch features while maintaining a low computational overhead essential for real-time remote sensing applications.

Through this multi-stage structure of downsampling + residual stacking, the SynapticWeave Network produces several feature maps with decreasing spatial resolution and increasing semantic abstraction from shallow to deep layers, corresponding to the natural hierarchy of ship detection from pixel-level edges to object-level recognition. In this study, the output of the stem layer and the final outputs of the four stages are retained for the convenience of subsequent network branches or auxiliary tasks and are denoted as \(\ \{ {F}^{(1)}, {F}^{(2)}, {F}^{(3)}, {F}^{(4)}, {F}^{(5)} \}\), where \(\ {F}^{(1)}\) is the output of the dry layer, and \(\ {F}^{(2)} \sim {F}^{(5)}\) corresponds to the result of stacking the four Stages. These features present different levels of feature representation in terms of spatial size and channel dimension, thus showing good applicability in multi-scale maritime scenes where targets range from small fishing boats to large cargo vessels.

The SynapticWeave Network effectively combines separable convolution, residual structure and stochastic depth techniques through compact network design and moderate channel expansion, which enables the extraction of rich and diversified spatial semantic features within a limited computational budget crucial for processing vast ocean areas in remote sensing imagery. Due to the introduction of branch point multiplication and depth separable operation, the network can focus on channel and spatial interactions while retaining the clarity of the backbone hierarchy, enabling the network to adaptively focus on key regions such as potential ship locations and produce more discriminative deep features. Overall, the module balances the fine-grained capture of multi-scale features with the efficiency of the overall inference of the network, laying a solid foundation for subsequent deep characterization in complex maritime environments.

TetraCore Network (TCN) module

During the construction of the overall network, We designed a computational unit called TetraCore Network in the downsampling and feature abstraction phase. The structure of the neural network is shown in Fig. 4 below. This unit uses a set of specially constructed linear operators based on signal processing principles particularly relevant to remote sensing imagery, implementing a 2D Haar-like wavelet transform that is theoretically optimal for detecting ships in maritime environments. The decomposition explicitly captures various orientation-sensitive disparity signals while preserving the main contours and structural elements crucial for ship identification. This method can effectively capture the edge details and texture information at each scale level through refined feature decomposition while maintaining the original image’s spatial structure and dimensionality, enhancing the response to small local differences such as ship wakes and deck structures, and ensuring computational efficiency.

Specifically, if the input feature is denoted as \(\ {X} \in {R}^{B \times C \times H \times W}\), the TetraCore Network module first applies a set of special local weighting operations to them. These operations, grounded in frequency domain analysis, enable the details of different frequencies and directions to be effectively extracted by decomposing the local features to capture both the local variations (such as ship edges against water) and global structural information (overall vessel shape) in the image. For clarity, a single channel example is provided below, with new coordinate indices denoted by (i,j):

Component A covers the core feature distribution and overall energy, capturing the low-frequency approximation coefficients representing the overall ship structure, while B, C, and D highlight directional activations extracting high-frequency details in horizontal, vertical, and diagonal directions respectively–directly corresponding to the geometric characteristics of ships such as elongated hulls, perpendicular superstructures, and angular deck features. This multi-directional feature separation is particularly suited for remote sensing as it addresses the rotation-variance challenge where ships can appear at arbitrary orientations in satellite imagery. Crucially, the decomposition’s theoretical reversibility ensures stable gradient backpropagation without training imbalance from local information loss, which is critical for detecting small ships where every pixel contains valuable information.

After feature decomposition, the quad-core network groups and splices the components A, B, C, and D along the channel dimension to form a new feature tensor with original spatial resolution \(\ {R}^{B \times 4C \times H \times W}\). This arrangement expands the number of channels and enhances the network’s ability to detect subtle image changes such as the boundary between ship and sea surface. The directional decomposition ensures robust feature extraction regardless of ship heading, addressing a fundamental challenge in overhead remote sensing where targets lack a canonical orientation. Therefore, this multi-directional splitting method captures changes more finely than direct pooling or progressive convolution while maintaining a compact feature size and promoting integration with subsequent network layers.

To transform the separated four signals into a form that can be efficiently utilized in the next stage, after the feature decomposition and splicing operations, the TetraCore Network module fuses the multi-channel features through a set of linear operations aligned with the principle of matched filtering in signal processing. These operations compress the representations and create a compact representation suitable for subsequent network layer processing, where the network learns optimal filters for ship detection under varying imaging conditions including different weather, lighting, and sea states. If the result of the splicing is expressed in terms of Y, the operator can be expressed as follows:

\(\ \text {Z}_{out}\) enhances the potential of the four information channels through linear weighting and bias, forming a discriminative compressed representation particularly effective for distinguishing ship boundaries from sea surface variations and wake patterns. Its operations on neighboring pixels are reversible and stable, allowing effective tuning via gradient backpropagation during training and providing flexibility for deeper feature refinement across different remote sensing modalities.

In summary, the quad-core network module is essential for guided feature separation and reconstruction in network design, particularly for maritime remote sensing applications. It utilizes well-designed linear operations to partition input features into smooth backbones and directionally differentiated branches, creating compact representations through cost-effective channel-level fusion. This additive and subtractive decomposition enhances the network’s ability to mine multi-scale image information and detect subtle edge changes critical for identifying small vessels against complex ocean backgrounds. The method’s theoretical foundation in wavelet analysis ensures optimal edge detection capabilities while significantly improving the recognition performance in complex maritime scenes without affecting the inference speed necessary for large-scale ocean monitoring.

Experiment

Experimental setup

To avoid memory overflow, the batch size was set to 16, the learning rate was set to 0.01, the learning rate was adjusted using the cosine annealing algorithm, the stochastic gradient descent (SGD) momentum was set to 0.937, and a combination of hue change, saturation change, luminance change range, pan change, zoom change, horizontal flip change, and mosaic was used for data enhancement. The resolution of the input graphics is uniformly set to 640 \(\times\) 640. all models are set to train a total of 1000 epochs of the upper limit, while the early stop is set to 50 epochs to save time and cost, to ensure the fairness of the experiments, the pre-trained models are not used in the training. We choose random weight initialization to ensure that the initial weights of each model come from the same distribution. Although the specific initial values are different, this ensures that all models start from a fair and balanced point so that they can be compared under the same training conditions without being affected by the historical bias of the pre-trained models. Pre-trained models are usually trained on large datasets that may not be distributed in the same way as our target dataset, potentially introducing unforeseen biases. Therefore, we decided not to use pre-trained models. To mitigate the effect of randomness in weight initialization, we conducted several independent experiments and averaged the results.Table 1 lists the basic server parameters:

Dataset

In the process of this new model proposal, we use three datasets.

-

ISDD Dataset: This study employs the31 dataset constructed by UESTC’s IDIPLab. It contains 1,284 infrared images (500\(\times\)500 pixels) with 3,061 annotated ship instances. The dataset covers complex scenarios including trailing ships, nearshore backgrounds, docking states, wave interference, and cloudy conditions, while accounting for gray-scale multipolarity caused by land-sea diurnal temperature differences. Its selection enables systematic evaluation and optimization of ship detection algorithms on a unified high-quality platform, providing essential data and experimental foundations for subsequent research.

-

SSDD Dataset: To ensure experimental consistency and continuity, this study uses the32 dataset from NUAA. As the first publicly available dataset for ship detection in SAR imagery, SSDD contains 1,160 images with 2,456 labeled ship targets (avg. 2.12/image). Data from RadarSat-2, TerraSAR-X, and Sentinel-1 sensors cover HH/HV/VV/VH polarizations, providing rich feature information. The dataset includes various sea states, lighting conditions, ship types/sizes, and inshore/offshore scenarios.

-

SIRST Dataset: The SIRST (Single-frame InfraRed Small Target)33,34,35 dataset contains 427 infrared images with 480 annotated targets, divided into 50% training, 20% validation, and 30% test sets. It includes short-wave, mid-wave, and 950nm wavelength infrared images with extremely dim targets (occupying <0.02% of image area) embedded in complex cluttered backgrounds, making it a highly challenging benchmark for infrared small target detection algorithms.

Assessment criteria

We chose P, R, mAP0.5, mAP0.5:0.95, and IOU to measure the model’s accuracy in small target detection. To evaluate the model’s efficiency, we use the number of parameters and model size as indicators of its lightness. In addition, the delay time is chosen to evaluate the model’s real-time detection performance.

Accuracy is the ratio of the number of samples correctly predicted as positive to the number of all predicted as positive. The formula is as follows:

Recall is the ratio of the number of samples correctly predicted as positive to the number of samples for all true cases. The formula is as follows:

TP (True Positive) denotes the number of correctly identified positive instances, FP (False Positive) denotes the number of incorrectly identified positive and negative instances, and FN (False Negative) denotes the number of incorrectly identified positive and negative instances. mAP is the average AP for all defect categories, AP is the area of the curve under the exact recall curve, and the formulas for AP and mAP are as follows: The larger the mAP, the better the combined detection performance of the model in all categories:

mAP0.5 denotes the Average Precision when the IoU (Intersection over Union) threshold is 0.5. Specifically, the AP (Average Precision) of all images in each category is first calculated, and then the AP of all categories is averaged to obtain mAP0.5.

mAP0.5:0.95 denotes the average mAP over different IoU thresholds (from 0.5 to 0.95 in steps of 0.05.) Specifically, the mAPs are computed for the IoU thresholds of 0.5, 0.55, 0.6, 0.65, 0.7, 0.75, 0.8, 0.85, 0.9, and 0.95, respectively, and then these mAPs are averaged.

IOU (Intersection over Union) intersection and concurrency ratio. Calculates the ratio of the intersection over union of the set of true and predicted values. The formula is as follows:

In our research, we conduct an in-depth comparison and analysis of the RT-DETR series of target detection models. Based on this, we made an independent improvement and proposed the RT-DETR-CST model. After experimental validation, the P-value of RT-DETR-CST reaches 0.904, which shows a superior detection ability and exceeds the performance of RT-DETR. Meanwhile, its R-value is also improved to 0.871, enhancing the model’s overall performance.In addition, in terms of mAP0.5, RT-DETR-CST improves from 84.5% to 86.5% compared to RT-DETR, proving the effectiveness of our improvement measures.

In summary, RT-DETR-CST not only outperforms RT-DETR-r18 in terms of P-value and R-value but also shows significant improvement in mAP0.5 index. These results indicate that RT-DETR-CST is more adaptable and accurate in target detection tasks, which provides a good foundation for future research and application.

Module comparison experiment

Comparison experiments on feature fusion modules

Feature fusion is crucial in deep learning, particularly within multibranch network structures. Ge et al.36 introduced Zoom_cat to enhance multibranch feature fusion, utilizing adaptive maximum and average pooling to unify feature map scales and improve feature expressiveness and recognition accuracy. Peng et al.37 developed SDI, improving interaction among multi-scale features by resizing them spatially and merging via channel convolution.

In this experiment, to assess CONCAT’s enhancements, RT-DETR is optimized using Zoom_cat, SDI, and our CFAN module across three separate tests. Validation and further testing on datasets are documented in Tables 2 and 3. Test 1 is the baseline RT-DETR, Test 2 uses Zoom_cat, Test 3 employs SDI, and Test 4 features our CFAN module.

The results indicate that compared with the original model, RT-DETR-CFAN achieves the highest performance values on both the validation set (val dataset) and the test set (test dataset) among the four models; furthermore, its model size and inference speed are both nearly comparable to those of the original model. RT-DETR-CFAN records the highest Map0.5 and Map0.5:0.95 values in both datasets. In the val dataset, RT-DETR-CFAN improves by 1.2 percentage points over the baseline RT-DETR’s Map0.5 and outperforms the Zoom_cat and SDI modules by 0.2 and 0.3 percentage points, respectively; in the test dataset, it improves by 2.0 percentage points over baseline RT-DETR, and 4.2 and 2.3 percentage points over Zoom_cat and SDI, respectively. The analysis shows RT-DETR-CFAN’s algorithmic improvement scheme has the best generalization among the three schemes.

Backbone network module comparison experiment

To explore multi-scale image information with low computational costs effectively, Li et al.38 developed the LSKNet backbone network. This network optimizes feature expression and reduces computational expenditure through a combination of convolutional kernels of different scales, expansion convolutions, multi-level depth-separable convolutions, and adaptive channel tuning, enhanced by dynamic path selection and convolution reparameterization. Cai et al.39 introduced PKINet, which significantly enhances feature extraction efficiency and information transfer accuracy through a multi-path information fusion structure, context anchor attention mechanisms, and depth-separable convolution, along with dynamic path selection and adaptive layer scaling. Fan et al.40 proposed the RMT network, which greatly improves feature extraction and information flow efficiency by integrating multi-layer feature fusion and self-attention mechanisms, complemented by dynamic path adjustments and adaptive scaling control. Shi et al.41 unveiled mobilenetv4, which boosts the accuracy of small object detection tasks while maintaining efficient processing speed by leveraging multi-stage downsampling with residual stacking, allowing for an efficient transformation from the input image to a multi-stage feature representation and reducing computational overhead.

To evaluate the enhancement of the backbone network in this experiment, based on CFAN improvements, we compared RT-DETR with four networks: LSKNet, PKINet, RMT, mobilenetv4, and our proposed SynapticWeave Network. Validation was conducted using both the val and test datasets, with results detailed in Tables 4 and 5. Test 1 is the baseline RT-DETR, Test 2 uses the RT-DETR-CFAN improvement, Test 3 applies LSKNet to RT-DETR-CFAN (RT-DETR-CFAN-LSKNet)optimization, Test 4 integrates PKINet (RT-DETR-CFAN-PKINet) optimization, Test 5 employs RMT (RT-DETR-CFAN-RMT) optimization, Test 6 utilizes mobilenetv4 (RT-DETR-CFAN-mobilenetv4) optimization, and Test 7 features our SynapticWeave Network (RT-DETR-CFAN-SWN) optimization. Each test explores the respective enhancements in feature extraction and model performance.

The results show that all these optimizations have achieved improvements compared to the original model. By comparing the performance of each improvement scheme on the TEST dataset, it can be seen that the proposed RT-DETR-CFAN-SWN improvement scheme is in a leading position at this point: among them, the mean average precision at 0.5 (mAP0.5) is the highest, reaching 87.2%, which is 2.7 percentage points higher than that of the baseline RT-DETR scheme and 0.7 percentage points higher than that of the RT-DETR-CFAN optimization scheme; the mean average precision at 0.5:0.95 (mAP0.5:0.95) is also the highest, at 41.4%, which is 0.9 percentage points higher than both the baseline RT-DETR scheme and the RT-DETR-CFAN optimization scheme. In addition, the model size of this scheme is the smallest among all schemes, decreasing from 40.7MB to 25.0MB, a reduction of 15.7MB.

By comparing the performance of each optimization scheme on the validation dataset (val dataset) and the test dataset (test dataset), it can be further inferred that the proposed RT-DETR-CFAN-SWN improvement scheme has better robustness than other improvement schemes. The characteristics of “small size, high accuracy, and high robustness” of the RT-DETR-CFAN-SWN improvement scheme provide feasibility for the practical application of this scheme in edge deployment scenarios, making it the most effective solution for such scenarios.

Backbone network module comparison experiment

During the construction of the overall network, to fully extract texture details and spatial distribution information at different scales, Dai42 et al. proposed GLSA, which effectively fuses features at different scales by introducing local and global feature processing modules and combining adaptive weighting with contextual information, to enhance the ability to express details and extract global semantic information of the image. Li43 et al. proposed SlimNeck, which uses multilayer convolutional operations combined with residual connections and effectively enhances the model’s efficiency and information transfer ability by gradually compressing and expanding the feature channels. Wu44 et al. proposed ContextGuidedDown, which captures contextual information in all stages and is specifically optimized to improve segmentation accuracy. It is also carefully designed to reduce the number of parameters and save memory footprint. We created a computational unit called TetraCore Network in the downsampling and feature abstraction phase. This unit uses a set of specially constructed linear operators to decompose and reorganize the input high-dimensional features to explicitly capture various orientation-sensitive disparity signals while preserving the main contours and structural elements. This method can effectively capture the edge details and texture information at each scale level through refined feature decomposition while maintaining the original image’s spatial structure and dimensionality, enhancing the response to minor local differences, and ensuring computational efficiency.

To validate the improvement of the output layer Conv in this experiment, RT-DETR-CFAN-SWN is optimized by GLSA, SlimNeck, and our proposed TCN module in the three improvement ideas, respectively. The model is further validated using val and test datasets, and the specific results are shown in Table 6 and Table 7. Where test 1 represents the baseline RT-DETR. Test 2 represents the RT-DETR-CFAN optimization scheme, experiment 3 represents the RT-DETR-CFAN-SWN optimization scheme, test 4 represents the GLSA optimization scheme for RT-DETR-CFAN-SWN (RT-DETR-CFAN-SWN-GLSA), and test 5 represents the SlimNeck’s optimization scheme for RT-DETR-CFAN-SWN (RT-DETR-CFAN-SWN-SlimNeck), Experiment 6 represents our proposed CFAN’s optimization scheme for RT-DETR-CFAN-SWN (RT-DETR-CFAN-SWN-ContextGuidedDown), and Experiment 7 represents our proposed optimization scheme for TCN over RT-DETR-CFAN-SWN (RT-DETR-CFAN-SWN-TCN).

The results show that all these optimizations are improved compared to the original model. By comparing the performance of each improvement scheme in the TEST dataset, it can be seen that our RT-DETR-CFAN-SWN-TCN improvement scheme is in a distant lead at this point, with Map0.5 being the highest value of 89.4%, which is higher than that of the baseline RT-DETR scheme by 4.9 percentage points; Map0.5:0.95 being the highest value of 41.7%, which is higher than that of the baseline RT DETR scheme by 1.2 percentage points; model size is the smallest among all schemes, where the model size decreases from the baseline 40.5MB to 23.7MB, a reduction of 16.8MB. By comparing the performance of each optimization scheme on the val dataset with that of the test dataset, it can be further deduced that we can achieve the best results in the val dataset. Performance: our RT-DETR-CFAN-SWN-TCN improvement scheme is more robust than other improvement schemes. The comprehensive data analysis shows that our RT-DETR-CFAN-SWN-TCN improvement scheme has the properties of small size, low GFLOPs, high accuracy, and high robustness and is in the leading position compared with other improvement schemes.

Ablation experiment

To verify the effectiveness of each proposed module, we conducted comprehensive ablation experiments using RT-DETR as the baseline. Table 8 presents the experimental results, where “\(\checkmark\)” indicates the inclusion of specific modules: A represents the CFAN module, B denotes the SWN module, and C signifies the TCN module.

CFAN Module. The CFAN module, incorporating multi-scale pyramid enhancement, improved detection performance on the ISDD dataset with a 0.9% increase in mAP@0.5. This improvement demonstrates the module’s effectiveness in handling scale variations in aerial ship imagery.

SWN Module. The SWN module further enhanced feature discrimination capabilities while maintaining computational efficiency. Without requiring complex hyperparameter tuning, it achieved improvements of 0.8% in mAP@0.5 and 0.9% in mAP@0.5:0.95, effectively addressing the traditional trade-off between performance and computational complexity.

TCN Module. The TCN module, employing directional decomposition through local operations and low-cost channel fusion, yielded the most significant improvements: 2.2% in mAP@0.5 and 0.3% in mAP@0.5:0.95, while simultaneously reducing model size by 1.3 MB. This dual achievement of enhanced accuracy and reduced complexity validates our design philosophy.

Visual analysis of module contributions

To provide intuitive understanding of each module’s contribution, we performed comprehensive feature visualization using Grad-CAM, as shown in Fig. 5. The visualization follows a structured layout: Column 1 presents original images, Column 2 shows zoomed regions of interest, Column 3 displays baseline RT-DETR feature maps, and Columns 4–6 illustrate progressive feature enhancements with CFAN, SWN, and TCN modules respectively.

Three key observations emerge from the visualization analysis:

Progressive Enhancement: The CFAN module generates richer multi-scale representations, particularly improving small target response. The SWN module produces sharper boundaries with reduced background noise, while the TCN module captures directional features through structured activation patterns.

Attention Distribution: Compared to the baseline’s scattered attention patterns, RT-DETR-CST achieves concentrated activation on ship regions while effectively suppressing maritime background distractions (waves, clouds, coastal structures). Quantitative analysis of activation maps indicates approximately 35% stronger response in target regions and 42% reduction in background interference.

Robustness: The model demonstrates robust performance across challenging scenarios including densely packed formations, extreme scale variations, and complex backgrounds. The consistent activation strength across these conditions validates our architectural design choices.

In summary, our ablation study confirms that RT-DETR-CST achieves 89.4% mAP@0.5, representing a 4.9% improvement over the baseline while maintaining identical inference speed and reducing model size by 1.3 MB. The combination of quantitative metrics and visual evidence demonstrates that our proposed modules effectively address the challenges of multi-scale ship detection in aerial imagery, offering both theoretical innovation and practical value for maritime surveillance applications.

Comparison experiment

The table 9 shows the key performance metrics of RT-DETR-CST and its base algorithm RT-DETR-r18 on three datasets, ISDD, SSDD, and SIRST, including precision (P), recall (R), mAP50, mAP50-95, model size (Model), and inference speed (FPS), and compares them with the YOLO series of single-stage target detection algorithms (YOLOv5m, YOLOv6m, YOLOv7, YOLOv8m, YOLOv9m, YOLOv10m, YOLOv11m, YOLOv12m), other single-stage target detection algorithms (EfficientDet, FSAF), two-stage target detection algorithms (Faster RCNN), Anchor- Free target detection algorithms (CenterNet, FCOS), Transformer-based target detection algorithms (RT-DETR-L), and other classical algorithms are compared, and the key performance indicators of each classical algorithm are also ranked.

Quantitative comparison

-

ISDD Dataset: RT-DETR-CST significantly outperforms the baseline RT-DETR-r18 in precision, recall, mAP50, and mAP50-95, with an improvement of 4.0%, 5.5%, 4.9%, and 1.2%, respectively, and the model volume is reduced from 40.5M in the baseline to 23.7M, which is 58.5% of the original one, whereas the inference speed is 58.5% different from that of the baseline of 213.8 FPS. The inference speed is only 6.6 FPS lower than the baseline. Comparing RT-DETR-CST with the classical algorithms, its recall (R) and mAP50 are optimal, and its precision (P) and model size (Model) are sub-optimal. At the same time, mAP50-95 is only 2.5% different from the highest value, and the inference speed (FPS) is only 18.4% different from the highest value. A comprehensive evaluation based on the ranking of key performance indicators and the intuitive results presented in Fig. 6 reveals that the RT-DETR-CST algorithm demonstrates optimal performance on the ISDD dataset, proving that the improved RT-DETR-CST effectively achieves significant weight reduction while maintaining high accuracy.

-

SSDD Dataset: comparing RT-DETR-CST with the baseline RT-DETR-r18, the volume is reduced to 58.5% of the original, while the rest of the key performance metrics are not much different, with a 1.7% reduction in precision (P), 0.6% reduction in recall (R), 0.4% reduction in mAP50, 1.2% reduction in mAP50-95, and 6.6FPS reduction in inference speed (FPS). RT-DETR-CST compared to the average of classical algorithms, the precision (P) is increased by 0.3%, recall (R) by 2.5%, mAP50 by 1.4%, and mAP50-95 by 0.9%, while the inference speed (207.2FPS) is 1.43 times the average inference speed (144.7FPS). The volume (23.7M) is only 18% of the average volume (131.3M), which proves that the improved model has a lightweight effect is significant. A multi-dimensional evaluation based on the ranking of comprehensive key performance indicators and the visual results in Fig. 7 demonstrates that the improved RT-DETR-CST achieves significant lightweight effects while maintaining high accuracy.

-

SIRST Dataset: RT-DETR-CST significantly outperforms the baseline RT-DETR-r18 in recall (R), mAP50, and mAP50-95, with improvements of 9.1%, 7.8%, and 6.7%, respectively. Specifically, RT-DETR-CST achieves R=84.4%, mAP50=85.9%, and mAP50-95=35.1%, compared to RT-DETR-r18’s R=75.3%, mAP50=78.1%, and mAP50-95=28.4%. The model volume is reduced from 40.5MB to 23.7MB, which is 58.5% of the original size. While precision (P) slightly decreases by 0.6% (81.4% vs 82.0%) and inference speed (FPS) decreases by 6.4 FPS (207.2 vs 213.6), the overall performance gains are substantial. Comparing RT-DETR-CST with classical algorithms, it achieves the highest recall (R=84.4%), mAP50 (85.9%), and mAP50-95 (35.1%), while maintaining the second-best model size (23.7MB), only behind YOLOv12m’s 16.2MB. The precision (P=81.4%) differs from the highest value (RT-DETR-L’s 86.3%) by only 4.9%, and the inference speed (207.2 FPS) differs from the maximum (YOLOv5m’s 227.3 FPS) by only 8.9%. A comprehensive evaluation based on the ranking of key performance indicators and the intuitive results shown in Fig. 8 reveals that the RT-DETR-CST algorithm demonstrates superior performance on the SIRST dataset, proving that the improved RT-DETR-CST effectively achieves significant weight reduction while substantially enhancing detection accuracy for small infrared targets.

Qualitative comparison

-

Detection accuracy and target integrity advantages: The CFAN module’s channel weighting and residual fusion mechanisms significantly enhance multi-branch feature complementarity and key region focus. In dense ship scenarios of SSDD (Fig. 7), it effectively suppresses interference from adjacent targets and reduces overlapping detection errors: achieving competitive mAP50 (97.7%) and improved precision (P=94.7%) with fewer false alarms. For small infrared target detection in SIRST (Fig. 8), the TCN module strengthens edge feature extraction, achieving the highest recall (R=84.4%) and mAP50 (85.9%) among all comparison algorithms, significantly outperforming RT-DETR-r18 (R=75.3%, mAP50=78.1%) by 9.1% and 7.8% respectively, demonstrating superior detection integrity for low-contrast small infrared targets.

-

Advantage of model efficiency and lightweight: Through hierarchical residual stacking and depthwise separable convolution, the SWN module maintains multi-scale feature extraction capability while: reducing model size to 23.7MB (41.5% smaller than RT-DETR-r18’s 40.5MB and 62.4% smaller than RT-DETR-L’s 63.1MB), decreasing computational complexity substantially, and maintaining competitive FPS (207.2 vs. RT-DETR-r18’s 213.6). Although larger than YOLOv12m (16.2MB), CTS-SIRST achieves superior mAP50 (85.9% vs. 74.6%) and mAP50-95 (35.1% vs. 22.5%) performance on SIRST dataset, demonstrating excellent accuracy-efficiency trade-off for edge deployment.

-

Complex scene robustness advantage: Addressing low-resolution and low-SNR challenges in infrared small target detection, the synergistic CFAN and TCN modules effectively suppress background noise and clutter. In ISDD’s multi-scale detection scenarios: precision (90.4%) improves by 4.0% over RT-DETR-r18 (86.4%), significantly outperforming traditional detectors like EfficientDet (81.0%). For SIRST’s challenging infrared conditions, CTS-SIRST achieves the highest mAP50-95 (35.1%), surpassing even larger models like RT-DETR-L (31.8%) and demonstrating 56.3% improvement over RT-DETR-r18 (28.4%). The SWN’s stochastic depth technique enhances generalization capability, maintaining robust performance across diverse infrared imaging conditions with varying thermal signatures and atmospheric interference.

The comparison of detection effects between 15 classic algorithms under the ISDD dataset and RT-DETR-CST. The large images on the left show 5 randomly selected images from the test dataset, while the middle section consists of 5 groups of images (2 rows x 8 columns x 5 groups) displaying the validation results of sequential algorithms tested on the 5 images from the dataset (the original images have no validation points: YOLOv5m, YOLOv6m, YOLOv7, YOLOv8m, YOLOv9m, YOLOv10m, YOLOv11m, YOLOv12m, EfficientDet, FCOS, FSAF, CenterNet, Faster-RCNN, RT-DETR-r18, RT-DETR-L). The 5 images on the right represent the algorithm validation results of RT-DETR-CST on the 5 images from the test dataset. Note: The red boxes indicate localized magnifications, the yellow circles denote missed labels, and the green circles indicate mislabels.

The comparison of detection effects between 15 classic algorithms under the SSDD dataset and RT-DETR-CST. The large images on the left show 5 randomly selected images from the test dataset, while the middle section consists of 5 groups of images (2 rows x 8 columns x 5 groups) displaying the validation results of sequential algorithms tested on the 5 images from the dataset (the original images have no validation points: YOLOv5m, YOLOv6m, YOLOv7, YOLOv8m, YOLOv9m, YOLOv10m, YOLOv11m, YOLOv12m, EfficientDet, FCOS, FSAF, CenterNet, Faster-RCNN, RT-DETR-r18, RT-DETR-L). The 5 images on the right represent the algorithm validation results of RT-DETR-CST on the 5 images from the test dataset. Note: The red boxes indicate localized magnifications, the yellow circles denote missed labels, and the green circles indicate mislabels.

The comparison of detection effects between 15 classic algorithms under the SIRST dataset and RT-DETR-CST. The large images on the left show 5 randomly selected images from the test dataset, while the middle section consists of 5 groups of images (2 rows x 8 columns x 5 groups) displaying the validation results of sequential algorithms tested on the 5 images from the dataset (the original images have no validation points: YOLOv5m, YOLOv6m, YOLOv7, YOLOv8m, YOLOv9m, YOLOv10m, YOLOv11m, YOLOv12m, EfficientDet, FCOS, FSAF, CenterNet, Faster-RCNN, RT-DETR-r18, RT-DETR-L). The 5 images on the right represent the algorithm validation results of RT-DETR-CST on the 5 images from the test dataset. Note: The red boxes indicate localized magnifications, the yellow circles denote missed labels, and the green circles indicate mislabels.

Conclusion and future prospects

Degree, which optimizes the performance of target detection in complex environments. Firstly, CFAN is proposed to achieve optimal representation of features through channel weighting and interactive fusion for adaptive fusion of intermediate representations from two feature extraction branches. Through channel alignment, splicing, weighting and residual fusion, the expressiveness of key regions is strengthened while avoiding the interference of redundant information. Deeper interconnections are established between different features, thus enhancing the quality and diversity of feature representations. Secondly, multi-stage downsampling and residual stacking are achieved by introducing SWN, which can continuously extract deeper global semantics while preserving fine-grained details. An efficient mapping from the input image to the multi-stage feature representation is achieved simultaneously, and the computational overhead is saved. It provides more stable high-level feature support in various downstream vision tasks. Next, the TCN module is constructed to be moderately compressed regarding spatial resolution. It can inject sensitivity to small-scale boundaries and textures at a limited computational cost, thus effectively retaining key information and vein cues before deep network processing. It plays a key role in feature separation and reorganization, retaining the abstract representation of the overall structure, thus providing rich feature combination possibilities for the subsequent network layer, improving the model’s recognition performance in complex scenes, and maintaining inference efficiency. The model has been extensively tested on several ship image datasets, verifying its excellent performance in real-time processing capability and detection accuracy. Future work will focus on improving the model’s generalization capability and optimizing its operational efficiency to deploy it to a wider range of real-world applications.

Future research in small target detection can be further explored from the following perspectives. Firstly, model pruning and knowledge distillation techniques should be investigated to identify and remove weights or neurons that have the least impact on model performance. Secondly, multimodal data fusion techniques (e.g., fusing infrared images with multi-aperture radar images) can be introduced to enhance the model’s accuracy and robustness in small target detection. Additionally, the proposed model can be applied to a broader range of scenarios, such as warship positioning, emergency rescue, and maritime surveillance.

Data availability

All images and experimental test images in this paper are from the open-source ISDD, SSDD and HRSID datasets, which were analysed during the current study. These datasets can be found at the following path: ISDD : (https://github.com/yaqihan-9898/ISDD). SSDD : (https://github.com/TianwenZhang0825/Official-SSDD). SIRST : (https://github.com/YimianDai/sirst).

References

Wulder, M. A., Hall, R. J., Coops, N. C. & Franklin, S. E. High spatial resolution remotely sensed data for ecosystem characterization. BioScience 54, 511–521 (2004).

Weng, Q. Thermal infrared remote sensing for urban climate and environmental studies: Methods, applications, and trends. ISPRS Journal of photogrammetry and remote sensing 64, 335–344 (2009).

Mi, C., Shen, Y., Mi, W. & Huang, Y. Ship identification algorithm based on 3d point cloud for automated ship loaders. J. Coast. Res. 28–34 (2015).

Blondeau-Patissier, D., Gower, J. F., Dekker, A. G., Phinn, S. R. & Brando, V. E. A review of ocean color remote sensing methods and statistical techniques for the detection, mapping and analysis of phytoplankton blooms in coastal and open oceans. Prog. oceanography 123, 123–144 (2014).

Farhadi, A., Redmon, J. & Yolov3: An incremental improvement. In Computer vision and pattern recognition, vol.,. 1–6 (Springer 2018 (Berlin/Heidelberg, Germany, 1804).

Yang, G., Li, B., Ji, S., Gao, F. & Xu, Q. Ship detection from optical satellite images based on sea surface analysis. IEEE Geosci. Remote. Sens. Lett. 11, 641–645 (2014).

Wang, Z., Zhou, Y., Wang, F., Wang, S. & Xu, Z. Sdgh-net: Ship detection in optical remote sensing images based on gaussian heatmap regression. Remote. Sens. 13 (2021).

Shi, R. et al. Csb-rnn: A faster-than-realtime rnn acceleration framework with compressed structured blocks. In Proceedings of the 34th ACM International Conference on Supercomputing, 1–12 (2020).

Hua, L. & Shao, G. The progress of operational forest fire monitoring with infrared remote sensing. J. forestry research 28, 215–229 (2017).

Liang, S. & Wang, J. Advanced remote sensing: terrestrial information extraction and applications (Academic Press, 2019).

Zhang, P., Xie, G. & Zhang, J. Gaussian function fusing fully convolutional network and region proposal-based network for ship target detection in sar images. Int. J. Antennas Propag. 2022, 3063965 (2022).

He, K., Gkioxari, G., Dollár, P. & Girshick, R. Mask r-cnn. In Proceedings of the IEEE international conference on computer vision, 2961–2969 (2017).

Zhang, H. & Li, Y. Student classroom teaching behavior recognition based on dscnn model in intelligent campus education. Informatica 48 (2024).

Xie, X., Cheng, G., Wang, J., Yao, X. & Han, J. Oriented r-cnn for object detection. In Proceedings of the IEEE/CVF international conference on computer vision, 3520–3529 (2021).

Lu, X., Li, B., Yue, Y., Li, Q. & Yan, J. Grid r-cnn. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 7363–7372 (2019).

Xu, X., Zhou, F. & Liu, B. Automatic bladder segmentation from ct images using deep cnn and 3d fully connected crf-rnn. International journal of computer assisted radiology and surgery 13, 967–975 (2018).

Cai, Z. & Vasconcelos, N. Cascade r-cnn: Delving into high quality object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition, 6154–6162 (2018).

Sun, P. et al. Sparse r-cnn: End-to-end object detection with learnable proposals. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 14454–14463 (2021).

Zhang, H., Chang, H., Ma, B., Wang, N. & Chen, X. Dynamic r-cnn: Towards high quality object detection via dynamic training. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XV 16, 260–275 (Springer, 2020).

Chen, L., Shi, W. & Deng, D. Improved yolov3 based on attention mechanism for fast and accurate ship detection in optical remote sensing images. Remote. Sens. 13, 660 (2021).

Bochkovskiy, A., Wang, C.-Y. & Liao, H.-Y. M. Yolov4: Optimal speed and accuracy of object detection. arXiv preprint arXiv:2004.10934 (2020).

Xu, X., Zhang, X. & Zhang, T. Lite-yolov5: A lightweight deep learning detector for on-board ship detection in large-scene sentinel-1 sar images. Remote. Sens. 14, 1018 (2022).

Zhang, X. et al. Mgsfa-net: Multiscale global scattering feature association network for sar ship target recognition. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 17, 4611–4625 (2024).

Liu, C. et al. Oshfnet: A heterogeneous dual-branch dynamic fusion network of optical and sar images for land use classification. Int. J. Appl. Earth Obs. Geoinformation 141, (2025).

Zhang, X. et al. Cross-sensor sar image target detection based on dynamic feature discrimination and center-aware calibration. IEEE Transactions on Geosci. Remote. Sens. 63, 1–17 (2025).

Zhu, X. et al. Deformable detr: Deformable transformers for end-to-end object detection. arXiv preprint arXiv:2010.04159 (2020).

Zhang, H. et al. Dino: Detr with improved denoising anchor boxes for end-to-end object detection. arXiv preprint arXiv:2203.03605 (2022).

Huang, L., Yang, Y., Deng, Y. & Yu, Y. Densebox: Unifying landmark localization with end to end object detection. arXiv preprint arXiv:1509.04874 (2015).

Zitnick, C. L. & Dollár, P. Edge boxes: Locating object proposals from edges. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part V 13, 391–405 (Springer, 2014).

Gao, G. Statistical modeling of sar images: A survey. Sensors 10, 775–795 (2010).

Li, J., Qu, C. & Shao, J. Ship detection in sar images based on an improved faster r-cnn. In 2017 SAR in Big Data Era: Models, Methods and Applications (BIGSARDATA), 1–6 (IEEE, 2017).

Zhang, T. et al. Sar ship detection dataset (ssdd): Official release and comprehensive data analysis. Remote. Sens. 13, 3690 (2021).

Dai, Y. et al. One-stage cascade refinement networks for infrared small target detection. IEEE Transactions on Geosci. Remote. Sens. 61, 1–17 (2023).

Dai, Y., Wu, Y., Zhou, F. & Barnard, K. Attentional local contrast networks for infrared small target detection. IEEE Transactions on Geosci. Remote. Sens. 1–12 (2021).

Dai, Y., Wu, Y., Zhou, F. & Barnard, K. Asymmetric contextual modulation for infrared small target detection. In IEEE Winter Conference on Applications of Computer Vision (WACV), 2021 (IEEE, 2021).

Ge, Q. et al. Hyper-progressive real-time detection transformer (hprt-detr) algorithm for defect detection on metal bipolar plates. Int. J. Hydrog. Energy 74, 49–55 (2024).

Zhang, T., Zhang, X., Shi, J. & Wei, S. Depthwise separable convolution neural network for high-speed sar ship detection. Remote. Sens. 11, 2483 (2019).

Li, Y. et al. Lsknet: A foundation lightweight backbone for remote sensing. Int. J. Comput. Vis. 1–22 (2024).

Cai, X. et al. Poly kernel inception network for remote sensing detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 27706–27716 (2024).

Fan, Q., Huang, H., Chen, M., Liu, H. & He, R. Rmt: Retentive networks meet vision transformers. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 5641–5651 (2024).

Shi, Y. et al. Yolov9s-pear: A lightweight yolov9s-based improved model for young red pear small-target recognition. Agronomy 14, 2086 (2024).

Dai, Q. et al. Yolov8-gabnet: An enhanced lightweight network for the high-precision recognition of citrus diseases and nutrient deficiencies. Agriculture 14, 1964 (2024).

Li, H. et al. Slim-neck by gsconv: A lightweight-design for real-time detector architectures. J. Real-Time Image Process. 21, 62 (2024).

Wu, T., Tang, S., Zhang, R., Cao, J. & Zhang, Y. Cgnet: A light-weight context guided network for semantic segmentation. IEEE Transactions on Image Process. 30, 1169–1179 (2020).

Author information

Authors and Affiliations

Contributions

H.D. (Hongyi Duan): Software; Writing-original draft; Funding acquisition, J.N. (Jinyang Niu): Software; Methodology; Funding acquisition, J.H. (Junjie Hao): Validation; Writing-original draft; Funding acquisition, P.H. (Pengyue Hao): Visualization; Resources; Funding acquisition, J.X. (Jijiang Xu): Writing-review & editing; Supervision; Funding acquisition.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Duan, H., Niu, J., Hao, J. et al. A lightweight infrared remote sensing architecture for enhanced small target detection using improved DETR with CST modules. Sci Rep 15, 38319 (2025). https://doi.org/10.1038/s41598-025-22273-y

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-22273-y