Abstract

Osteoporosis weakens bone and leads to fractures, primarily in postmenopausal women and older adults. Early detection requires affordable methods because current diagnostic systems are expensive and difficult to access. Existing deep learning models for bone radiograph analysis face three major limitations: shallow architectures, insufficient adaptation to the dataset, and inadequate feature extraction. Traditional machine learning techniques rely heavily on hand-crafted features and fail to capture the subtle image patterns present in medical images. VGG-16 is a powerful model, but it requires advanced, expensive hardware and still struggles when data are limited. To address these issues, we propose detecting osteoporosis in knee X-rays with a deep learning approach based on the ResNet-50 model and transfer learning. We used a dataset of 372 X-ray images with verified medical information, labeled according to T-scores determined using a quantitative ultrasound system. After fine-tuning, our ResNet-50-based model achieved 90% accuracy, exceeding all alternative models tested: VGG-16 with fine-tuning (88%), VGG-16 without fine-tuning (80%), a 3-layer CNN (66%), ResNet-18 (79%), and non-fine-tuned ResNet-50 (83%). The clinical value of the ResNet-50 model stems from its high accuracy, sensitivity, and specificity. These findings support the reliability of the deep transfer learning CNN ResNet-50 for detecting osteoporosis and offer healthcare practitioners an effective diagnostic tool for early detection and fracture prevention. Our research indicates that deep learning with transfer learning can substantially improve medical imaging systems, leading to better patient outcomes.

Introduction

X-ray imaging is the oldest and most widely used imaging method in medicine for identifying bone diseases, and it can image every bone in the body, including the wrist, knee, elbow, shoulder, pelvis, and spine. It helps identify fractures, joint dislocations, bone damage, aberrant bone development, infections, and arthritis. Although most bone fractures are accidental, they can also be pathological: osteoporosis, cancer, and osteogenesis imperfecta can all weaken bones. Osteoporosis is the primary bone disease that causes millions of fractures globally1, with a higher incidence among women2. Although osteoporosis is associated with aging because it weakens bones over time, it can also occur at earlier ages3. It is often called a silent illness because its symptoms do not appear in the early stages and become noticeable only once the disease has progressed to the point where bones can break after a minor fall. The cost of osteoporosis treatment, including fracture repair, places a heavy burden on economic resources4,5. Early diagnosis is therefore necessary to reduce treatment costs.

Osteoporosis is diagnosed using dual-energy X-ray absorptiometry (DXA)6, which measures Bone Mineral Density (BMD) and reports it as the T-score and Z-score that the WHO accepts for classifying the stages of osteoporosis7. However, DXA has several drawbacks, such as high cost, limited availability, and restriction to areal measurements. Computed Tomography (CT)7,8, Magnetic Resonance Imaging (MRI)9,10, and the Quantitative Ultrasound System (QUS)11,12 are further imaging modalities used to identify osteoporosis. CT is a three-dimensional modality that provides volumetric measurements, but it involves a high radiation dose. MRI at 3T can image bone micro-architecture, but with a lower spatial resolution9,10. QUS is easy to use, non-invasive, portable, and affordable, and it employs sound waves to examine bones; however, it is site-specific and lacks solid empirical support11,12. In light of these constraints, there is a need for an osteoporosis detection system that is accurate, affordable, and easily accessible. This need has prompted researchers to use computer algorithms to evaluate medical images and to build Computer-Aided Diagnostic (CAD) systems that draw on current developments in imaging technology.

Deep learning-based Convolutional Neural Network (CNN) algorithms have become increasingly popular in CAD systems for medical image analysis in recent years13,14 because of their state-of-the-art results in identifying a variety of conditions from images, including brain tumor identification15, multiple sclerosis16, human activity identification17, breast cancer detection18, pneumonia detection19, and cancer detection19,20. In the classification of medical images, CNNs such as AlexNet, ResNet-50, VGG-16, VGG-19, and GoogleNet21,22,23,24 have demonstrated state-of-the-art performance. The biggest problem with CNN classifiers is that they require a large quantity of labeled data for training, which can be difficult to obtain in the medical field. Transfer learning was developed to address this problem25. In this approach, a CNN trained on a large dataset is retrained on a smaller dataset for a new problem. Using the knowledge gained from the large dataset, the CNN can quickly learn the characteristics of the new, smaller dataset and thereby classify its images more effectively.

Numerous CAD methods, including deep learning, have been suggested for diagnosing osteoporosis at different bone locations, such as the hip, spine, hand, and teeth; however, little research has been conducted to identify osteoporosis in the knee26,27. The knee is the most strained joint in the body, bearing the weight of the body and controlling movement. The prevalence of osteoporotic fractures around the knees increases with age, and women are more likely to sustain tibial and fibular fractures28. Approximately half of knee fractures are thought to occur in people over the age of 50 years, and a significant one-year mortality rate of 22% is observed in senior patients who sustain femoral fractures, leaving them with reduced function and a lower quality of life29. To avoid fractures and lower treatment expenses, an early detection method is required to identify the prevalence of osteoporosis in the knee bone30,31,32.

Although osteoporosis is a single disease caused by low bone mineral density, it can be divided into the following two types:

Primary osteoporosis

This is the most prevalent type of osteoporosis. It can be further categorized into two subtypes:

a. Postmenopausal osteoporosis: Due to decreased levels of the hormone estrogen, postmenopausal women are more likely to develop osteoporosis.

b. Senile osteoporosis: This form of osteoporosis is associated with aging and can affect both men and women.

Secondary osteoporosis

This type of osteoporosis is caused by medications, lifestyle choices, and other health issues.

In this study, we developed an osteoporosis detection system for knee bones using a CNN, a well-known deep learning technique. The proposed model uses a transfer learning approach based on ResNet-50, a CNN architecture.

Machine Learning (ML) and Artificial Intelligence (AI) have shown transformative potential in healthcare. They can be integrated into patient-focused applications that offer personalized health alerts, treatment summaries, and educational resources, improving patient engagement and collaboration in care. For example, a Self-Organizing Map (SOM) neural network with block processing improves the accuracy of cancer zone detection in MRI images, even in the presence of noise33. By improving segmentation accuracy and clearly delineating abnormal regions, this approach supports early detection and helps radiologists reduce diagnostic errors. Its high specificity and precision make it an effective clinical decision-support tool that streamlines workflows and ensures timely interpretation of scans. Similarly, ML- and AI-based approaches address the limitations of traditional diagnostic methods by enabling the precise analysis of complex blood-related data, including cell morphology, biomarkers, and prognostic indicators, and they have made significant contributions to hematology and hematopathology34,35. Models such as convolutional neural networks (CNNs), XGBoost, and artificial neural networks (ANNs) have demonstrated high performance, achieving diagnostic accuracies above 96%. In clinical applications, AI facilitates the early and accurate diagnosis of disorders such as leukemia, lymphoma, myelodysplastic syndromes, and chronic myeloid leukemia by automating the interpretation of flow cytometry, bone marrow morphology, and biopsy images. The study in36 presents a machine vision-based framework for diagnosing tuberculosis (TB) from computed tomography (CT) scan images. The authors developed an automated diagnostic pipeline that extracts radiological features from CT scans, applies advanced image processing and segmentation techniques, and employs machine learning classifiers to distinguish between TB cases and healthy controls. This study underscores the growing role of artificial intelligence in improving medical imaging-based disease diagnosis.

A hybrid deep-learning framework that combines EfficientNetB0 and ResNet50, enhanced with transformer-inspired training strategies, improved the early prediction of breast cancer37. EfficientNetB0 provides parameter-efficient feature extraction, whereas ResNet50 captures deeper and more hierarchical representations. This study underscores the potential of transformer-guided optimization in computer-aided diagnosis, offering a more reliable and interpretable method for early breast cancer detection from histopathology images. Similarly, voice biomarkers offer transformative possibilities for the early detection of Parkinson’s disease38. Early detection allows for timely interventions, such as medication, physiotherapy, and lifestyle modifications, which slow disease progression and improve quality of life. Unlike costly and invasive diagnostic methods such as MRI or PET scans, voice-based assessments are affordable, simple, and non-invasive, making them especially valuable in resource-limited settings. Overall, vocal measures combined with machine learning represent a reliable, scalable, and patient-friendly approach to diagnosing and managing Parkinson’s disease.

The main highlights of this research study are:

- Owing to the growing number of osteoporosis cases, researchers have developed various detection models, but these suffer from overfitting. This study addresses the problem by combining the ResNet-50 model with transfer learning, achieving 90% accuracy on a dataset of 372 images.

- Most research focuses on a particular age group or gender. In this study, we used X-ray images gathered from both genders and different age categories.

- The pretrained CNN (ResNet-50) classifies X-ray images entirely automatically. It does not require separate techniques for feature extraction, feature selection, or classification; these steps are performed within the network.

- Data augmentation and transfer learning were employed to address the limitations of a small dataset.

The rest of the paper is organized as follows. Section 2 evaluates the existing related works, and Sect. 3 details the materials and methods used in this research study. The results are analyzed in Sect. 4, and conclusions, possible challenges, and future recommendations are summarized in Sect. 5.

Related works

Machine learning and deep learning approaches have driven significant progress in osteoporosis detection in recent years. For this review, we group the existing literature by shared methodology. We first cover recent progress in osteoporosis recognition using machine learning and deep learning algorithms, then studies based on X-ray images, which are widely used in this field, and then models that combine different data sources. Conventional diagnostic models are covered in the last part of this section. This structure gives a complete picture of the strengths and weaknesses of past research in the field.

Recent advances in osteoporosis detection using machine learning and deep learning models

Research has shown that deep Convolutional Neural Networks (CNNs) achieve promising results in detecting osteoporosis39. Machine learning models analyze images from multiple modalities, such as X-ray, CT, and MRI, to identify osteoporosis more effectively and to automate diagnostic classification.

Osteoporosis detection using X-ray images

In 2023, left and right hip radiographs were used to segment and classify osteoporosis40. The study performed segmentation with U-Net and U-Net++ and classification with VGG-16, ResNet-50, and DenseNet-121. Both U-Net models achieved superior segmentation outcomes, and DenseNet-121 achieved the best classification accuracy, 74%, among all models. However, the study did not analyze fine-tuning strategies that could improve model performance on small or imbalanced datasets. Jang et al.41 evaluated hip radiographs of female patients over the age of 50 years using VGG-16, which delivered 81.2% accuracy with 91.1% sensitivity and 68.90% specificity. Because of this low specificity, the approach sometimes misclassifies healthy patients as having osteoporosis. The high false-positive rate can lead to avoidable anxiety, excessive medical testing, and financial burdens on the healthcare system. Moreover, the study was limited to a specific age group and gender, which reduces the generalizability of the model. Another study employed AlexNet, VGG-16, VGG-19, and ResNet to analyze knee X-ray images and classify them into normal, osteopenia, and osteoporosis categories9. AlexNet performed best, achieving 91.1% accuracy with a maximum validation loss of 0.54. However, that model suffers from overfitting because no countermeasure, such as data augmentation or regularization, was used. In addition, the study does not describe how the model would perform in real clinical settings. In 2024, researchers used DenseNet-121 with the Adam optimizer (learning rate = 0.001; batch size = 30) to identify osteoporosis in chest X-ray images42. To prevent overfitting, L2 regularization and an early stopping mechanism were used. Although these steps are good practice, the study did not provide a detailed performance comparison with other models, which makes it difficult to assess the practical relevance of the proposed method. Using chest radiographs instead of specific bone regions, such as the hip or knee, may also affect the diagnostic accuracy of the model.

Osteoporosis detection using Multi-Modal and machine learning approaches

Suh et al.43 developed a deep learning model trained on two national health datasets: the Korean National Health and Nutrition Examination Survey (KNHANES) from South Korea and the National Health and Nutrition Examination Survey (NHANES) from the United States. The researchers used cross-sectional data, which may suffer from selection bias because participants were selected based on specific criteria, such as age and the availability of certain measurements. These limitations suggest that further research is needed to develop more robust and interpretable models for osteoporosis detection. Fasihi et al.44 used eight different models, including Decision Trees (DT), Random Forests (RF), k-Nearest Neighbors (k-NN), Support Vector Machines (SVM), and Artificial Neural Networks (ANN), to assess osteoporosis risk. Among these techniques, random forest achieved the highest classification accuracy. This study was limited by its use of retrospective data without clinical validation. In addition, the lack of interpretability makes it difficult for medical practitioners to trust and apply the results in real medical practice. Another study conducted in 2024 improved the classification accuracy of osteoporosis by combining patient demographics with chest and hip CT images45. The researchers reported a success rate of 87% for the hip radiomics model and 78% for the chest radiomics model; with multimodal fusion, accuracy reached 91%. A further study examined CT-based osteoporosis classification using MVCTNet, a deep learning system developed for CT analysis46.

Osteoporosis detection using deep learning and traditional models

Tsai et al.39 developed a deep learning model that used chest X-ray images to detect osteoporosis with 75.3% accuracy, outperforming standard machine learning methods as well as the Osteoporosis Self-Assessment Tool (OSAT). The model also indicated a greater all-cause mortality risk for people with osteoporosis, suggesting potential for early detection of the condition. However, the study’s reliance on retrospective data and lack of model interpretability limit its clinical application. Additional studies are needed to show the model’s usefulness in different patient groups and to make its outputs interpretable for healthcare workers in real settings.

Femoral osteoporosis was diagnosed using radiomics features and an RF model in 2021, with 92.7% validation accuracy and 92.9% training accuracy47. A 2023 study identified XGBoost as the best-performing algorithm in a comparison with eight machine learning techniques (SVM, RF, k-NN, DT, LR, NB, NN, and GBM) for osteoporosis classification48. This approach improves diagnostic accuracy while reducing computational complexity. However, despite the promising results, the model relies only on retrospective data, and XGBoost offers limited interpretability. A Deep Convolutional Neural Network (DCNN) was used to examine lumbar spine X-rays for osteoporosis and osteopenia49. On the first dataset, it achieved an AUC of 0.767 with a sensitivity of 73.7% for osteoporosis and an AUC of 0.787 with a sensitivity of 81.8% for osteopenia. On the second dataset, the AUC was 0.726 for osteoporosis and 0.810 for osteopenia, with sensitivities of 68.4% and 85.3%, respectively. The model was not developed on a diverse dataset or tested on real-world X-ray images, which affects its performance.

Osteoporosis detection still faces difficulties due to restricted access to diagnostic datasets and imbalanced data samples, and models must be validated against clinical standards. Multimodal approaches that integrate CT scans, X-rays, and patient metadata make diagnosis more accurate, but additional research is needed before these models reach the performance required for clinical implementation. Recent research on osteoporosis classification has used advanced deep learning models such as the CNNs VGG-16 and DenseNet-121, as summarized in Table 1. Our study uses ResNet-50 because of its proven ability to extract features effectively, which improves the classification of osteoporosis. The proposed approach achieved 90% accuracy through transfer learning and handling of dataset imbalance, resulting in better osteoporosis diagnostic performance.

Materials and methods

Dataset description

In this research, we used knee X-ray images from a publicly available dataset54 for osteoporosis detection. The dataset contains annotated images divided into normal and osteoporosis groups. It comprises 372 RGB radiographs with variable native resolutions, equally distributed between 186 normal images and 186 osteoporotic images. We used these images for training, validation, and testing within a binary classification framework. Figure 1 (a), (b) shows normal knee X-rays, whereas Fig. 2 (a), (b) shows osteoporosis-affected knee X-rays.

Fig. 1 Normal knee X-ray. Adapted from publicly available dataset54.

Fig. 2 Osteoporotic knee X-ray. Adapted from publicly available dataset54.

Detailed statistics of the dataset used in this paper are given in Table 2, and a graphical representation is shown in Fig. 3.

Preprocessing

Images must be preprocessed before being fed into a model, which improves accuracy and performance. The preprocessing steps used in this study are:

Resizing

Because the images in the dataset vary in size, we resize them to 224 × 224 pixels, the input size expected by the ResNet-50 architecture. Loading all the data into memory at once might slow down the training phase, so we supplied the images to the model in batches.

Normalization

Normalization adjusts the scale of the input to improve the training of the deep learning model and to make the learning process more stable. Training on large raw pixel values slows the model down, so normalization is necessary. Pixel values are rescaled by a factor of 1/255 using a Keras preprocessing layer from tf.keras.layers.

Data augmentation

Data augmentation increases the amount of training data derived from the original dataset. Deep learning models need sufficient data for training, and augmentation creates modified copies of the original images by flipping, rotating, and similar transforms. We used data augmentation to enlarge the training dataset, with the aim of improving accuracy by exposing the model to more diverse data and thus improving generalization to unseen data. The augmentation is applied on-the-fly to the training split only (not to validation/test), using random horizontal flip (p = 0.5), rotation (± 10°), width/height shift (± 10%), zoom (± 10%), and brightness/contrast jitter (± 10%). Because augmentation is stochastic per epoch, the augmented set size is not a fixed number; effectively, each epoch presents ~ 260 training images (see split below) in transformed form.
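The transform ranges listed above can be expressed with Keras preprocessing layers. The following is a minimal sketch rather than the authors' code: the names `augment` and `augment_batch` and the mapping of each range onto a specific layer are assumptions, and it presumes TensorFlow 2.9 or later (for `RandomBrightness`) and pixel values in [0, 255] before rescaling.

```python
import tensorflow as tf

# Hypothetical on-the-fly augmentation pipeline. The layer choices are
# assumptions that mirror the ranges stated in the text.
augment = tf.keras.Sequential([
    tf.keras.layers.RandomFlip("horizontal"),       # horizontal flip, p = 0.5
    tf.keras.layers.RandomRotation(10 / 360),       # +/- 10 degrees (factor is a fraction of a full turn)
    tf.keras.layers.RandomTranslation(0.1, 0.1),    # +/- 10% height and width shift
    tf.keras.layers.RandomZoom(0.1),                # +/- 10% zoom
    tf.keras.layers.RandomBrightness(0.1, value_range=(0, 255)),  # +/- 10% brightness
    tf.keras.layers.RandomContrast(0.1),            # +/- 10% contrast
])


def augment_batch(images):
    """Apply the random transforms to a batch of training images.

    training=True activates the random layers; validation and test
    batches should bypass this function entirely.
    """
    return augment(images, training=True)
```

Because the transforms are resampled on every call, each epoch sees a different variant of the same underlying training images, which is why the augmented set has no fixed size.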

Proposed methodology

The block diagram of the proposed approach for identifying osteoporosis from knee X-rays is shown in Fig. 4. The knee X-rays were first gathered as described in Sect. 3.1 to create an image dataset, which was divided into training data (used to train the ResNet-50 CNN classifier) and test data (used to test the trained classifier). Because pretrained CNN models perform better with additional data, the training data were expanded with augmented images. The CNN model was then trained on the image data. Finally, the classifier’s ability to distinguish between normal and osteoporotic images was assessed by analyzing its predictions on the training and test data.

Transfer learning approach

This study used a pretrained CNN model because such models are highly effective for image classification. Instead of training a network from scratch, we used transfer learning: the model started with general image features learned from a large dataset and was then trained on our specific dataset. This technique is particularly advantageous when training data are limited. Transfer learning works through two complementary steps, feature extraction and fine-tuning. In feature extraction, the model reuses the patterns it has already learned; in fine-tuning, it adapts to the specifics of our dataset. This approach made training more efficient and delivered higher accuracy.
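The two steps, feature extraction followed by fine-tuning, can be sketched in Keras as below. This is an illustrative sketch under stated assumptions, not the configuration used in the study: the function names, the 128-unit dense head, the optimizer, and the learning rate are all hypothetical, and the 1/255 rescaling simply follows the normalization described in the Preprocessing section.

```python
import tensorflow as tf


def build_model(weights="imagenet", num_classes=2):
    """ResNet-50 backbone plus a small classification head (head size is an assumption)."""
    base = tf.keras.applications.ResNet50(
        include_top=False,           # drop the original ImageNet classifier
        weights=weights,
        input_shape=(224, 224, 3),
        pooling="avg",               # global average pooling -> feature vector
    )
    base.trainable = False           # step 1: feature extraction, backbone frozen

    inputs = tf.keras.Input(shape=(224, 224, 3))
    x = tf.keras.layers.Rescaling(1.0 / 255)(inputs)      # 1/255 scaling as in the text
    x = base(x, training=False)                            # keep batch-norm statistics fixed
    x = tf.keras.layers.Dense(128, activation="relu")(x)   # hypothetical custom dense layer
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)
    return tf.keras.Model(inputs, outputs), base


def unfreeze_for_fine_tuning(model, base, learning_rate=1e-5):
    """Step 2: make the backbone trainable and recompile with a small learning rate."""
    base.trainable = True
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate),
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

In use, one would call `build_model()`, compile and train the head with the backbone frozen, and then call `unfreeze_for_fine_tuning` before a second, shorter training run. Note that the ImageNet weights shipped with Keras were trained with the preprocessing in `tf.keras.applications.resnet50.preprocess_input`, so the 1/255 rescaling here is a choice made to match the text rather than a recommendation.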

Proposed CNN architecture

A Convolutional Neural Network (CNN) is a specialized deep learning model designed to process image data efficiently. Unlike traditional neural networks, CNNs use convolutional layers to detect spatial hierarchies and patterns in images. CNNs have transformed computer vision by improving performance in image classification, object detection, and medical image evaluation. Recent deep learning achievements have come from CNN architectures such as ResNet, VGG, and EfficientNet. Table 3 presents CNN features, while Table 4 shows the CNN layers.

We have used the popular pre-trained model ResNet-50 using transfer learning in this research.

ResNet-50 model

In 2015, the ResNet CNN architecture of He et al.21 won the ILSVRC competition with an error rate as low as 3.6%. With 152 layers, it was a very deep network. ResNets are built from multiple stacks of residual blocks. In a residual block, the activation of one layer is passed directly to a deeper layer through a skip connection, which helps the network train more quickly. Depending on the number of layers, ResNet comes in several versions, including ResNet-18, ResNet-34, ResNet-50, ResNet-101, and ResNet-152. Because of the small size of our dataset, we employed the ResNet-50 architecture in this work. Table 5 shows the layers, their descriptions, and the output shapes of the ResNet-50 model. ResNet-50 has demonstrated highly promising results in medical image classification, including the diagnosis of breast cancer51, protein & peptides52,53,54,55, thyroid ultrasound imaging56, and brain disease57.
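The skip connection can be written compactly using the standard formulation of residual learning. The notation is introduced here for illustration only: $x$ is the block input, $\mathcal{F}$ the stacked layers inside the block with weights $W_i$, and $I$ the identity matrix.

```latex
y = \mathcal{F}(x, \{W_i\}) + x,
\qquad
\frac{\partial y}{\partial x} = \frac{\partial \mathcal{F}}{\partial x} + I
```

The identity term gives gradients a direct path back to earlier layers, which is the usual explanation for why deep residual networks are easier to train than plain networks of the same depth.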

Results and analysis

Discussion on performance comparison

This study trained the ResNet-50 model on the knee X-ray dataset using transfer learning and reached an accuracy of 90%, as reported in Table 6. Transfer learning enabled the ResNet-50 model to adapt to knee X-ray image features, and the complete training process produced superior results compared with existing approaches. Medical image analysis benefits substantially from pretrained models. Comparisons between the fine-tuned ResNet-50 model and its counterparts, presented in Table 7, show that it achieved the best performance for detecting osteoporosis in knee X-rays. The study included participants of different age groups and both genders.

The findings indicate that CNNs trained only on knee X-ray data achieved acceptable classification accuracy, while pretrained CNNs fine-tuned on knee X-ray data achieved higher accuracy. This suggests that transfer learning enhances overall performance without requiring the addition or deletion of layers or the creation of a new CNN from scratch. Although they performed well, existing deep learning models for diagnosing osteoporosis from other body parts have several drawbacks. In addition to helping with the early detection of osteoporosis, a deep learning model trained on labeled X-ray images can be a readily available and reasonably priced tool in low-income, densely populated regions such as Africa, India, and Pakistan. CNN systems can operate automatically because they need no extra work for feature extraction, feature selection, or classification. Although each class contained relatively few images, the ResNet-50 model performed effectively. Adding more images to each class could further enhance the ability of ResNet-50 to distinguish normal from osteoporotic radiographs.

The parameters used to evaluate the proposed model’s performance are defined as follows:

Confusion Matrix.

A confusion matrix, shown in Fig. 10, is a table that aids in evaluating the model’s performance. It can be used for both binary and multiclass classification62. To assess the proposed model’s performance, we count the True Positive, True Negative, False Positive, and False Negative predictions in the confusion matrix63,64,65,66.

True Positive (TP) is the quantity of accurately categorized positive samples.

True Negative (TN) is the quantity of accurately identified negative samples.

False Positive (FP) is the number of negative samples that are mistakenly labeled as positive.

False Negative (FN) is the quantity of positive samples that are mistakenly labeled as negative.

Accuracy.

Accuracy is the proportion of all predictions that the model classifies correctly67,68,69,70,71. Mathematically,

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

By putting values in Eq. (1), the accuracy of the proposed model is evaluated;

Recall.

Recall is the proportion of actual positive examples that the model correctly identifies. It is also known as sensitivity and is calculated as follows.

$$\text{Recall} = \frac{TP}{TP + FN} \tag{2}$$

By putting values in Eq. (2),

Precision.

Precision indicates how many of the model’s positive predictions are, in fact, positive. Mathematically,

$$\text{Precision} = \frac{TP}{TP + FP} \tag{3}$$

Putting values in Eq. (3), the precision of the proposed model can be calculated as,

F1-Score.

F1-Score is a single figure that combines precision and recall. Mathematically, it is defined as:

$$\text{F1-Score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$

The F1-Score falls between 0 and 1. A value of 1 is the highest attainable score and indicates that the model has perfect recall and precision.

Putting values of precision and recall in Eq. (4), the F1-Score of the proposed model is calculated as;
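As an illustration of how accuracy, recall, precision, and F1-Score follow from the confusion-matrix counts, the minimal Python sketch below computes them for a binary problem. The function names and the example labels are hypothetical and are not the study's data or results; the sketch also assumes every denominator is non-zero.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP, FN for a binary classification problem."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1   # positive sample correctly labeled positive
            else:
                fp += 1   # negative sample mistakenly labeled positive
        else:
            if actual == positive:
                fn += 1   # positive sample mistakenly labeled negative
            else:
                tn += 1   # negative sample correctly labeled negative
    return tp, tn, fp, fn


def classification_metrics(tp, tn, fp, fn):
    """Return accuracy, recall, precision and F1-Score (denominators assumed non-zero)."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    recall = tp / (tp + fn)
    precision = tp / (tp + fp)
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "recall": recall, "precision": precision, "f1": f1}


# Hypothetical labels for illustration only (1 = osteoporosis, 0 = normal).
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
counts = confusion_counts(y_true, y_pred)
metrics = classification_metrics(*counts)
```

Treating osteoporosis as the positive class is a convention chosen for this sketch; swapping the positive label exchanges the roles of sensitivity and specificity.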

Evaluation of the proposed model performance compared with the VGG-16 model

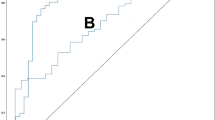

As shown in Fig. 5, the proposed model achieved an accuracy of 90% in osteoporosis classification, surpassing the VGG-16 results reported in58 both with and without fine-tuning. The proposed model performs better because of its deeper architecture, which allows it to detect complex features in the data. Its residual connections improve training efficiency, resulting in faster convergence and better generalization, and hence higher accuracy than the VGG-16 models reported in58,72.

Evaluation of the proposed model performance compared with the 3-Layered CNN model

As shown in Fig. 6, by applying transfer learning the proposed model reached an accuracy of 90%, which exceeds the 66% accuracy of the three-layer CNN model59. Transfer learning lets the proposed model retain pretrained knowledge, so it extracts features more effectively and generalizes better. The result is a robust model with better training results and better predictions on unseen data.

Evaluation of the proposed model performance compared with the ResNet-18 model

As shown in Fig. 7, the proposed model achieved 90% accuracy, exceeding the 79% achieved by the ResNet-18 model. The pre-trained features used in transfer learning speed up learning and improve the model’s generalization. The authors of ref. 60 used ResNet-18 without transfer learning, which resulted in lower accuracy than the other approaches.

Evaluation of the proposed model performance compared with the ResNet-50 model

As shown in Fig. 8, the proposed model, ResNet-50 with transfer learning, is more accurate than the ResNet-50 without transfer learning used in the referenced study (ref. 61). We applied fine-tuning to adapt the model to our dataset. Adding custom dense layers during the fine-tuning step brought the model’s performance to its best level and improved its precision.

Visualization

Figure 9 plots the training and validation accuracy of the ResNet-50 transfer learning model, together with its training and validation loss, across 45 epochs. These curves show the training dynamics and indicate how well the model generalizes (Fig. 10).

Test image analysis

The ResNet-50 model is evaluated by batch testing on pre-labeled knee X-ray test images. The TensorFlow function image_dataset_from_directory loads the test dataset from its class-based directory structure. Images are resized to 224 × 224 pixels, the input size that ResNet-50 expects. The model trained via transfer learning then predicts the category of each image (normal or osteoporotic). A final softmax operation in the output layer produces the class probabilities. For each image, the predicted label is the class with the highest probability, and that probability serves as the confidence score. A batch of test images can be displayed with Matplotlib, with each subplot showing the actual class, the predicted class, and the confidence value. Figure 11 shows this visual inspection, with actual labels, predicted labels, and confidence scores for the tested images.
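The last step of this pipeline, turning the output layer's raw scores into a predicted label and a confidence value, can be sketched without TensorFlow. The example below is a minimal illustration in plain Python; the class order in `CLASS_NAMES`, the helper names, and the raw scores are assumptions made for the example and are not taken from the authors' code.

```python
import math

# Assumed class order; the real order depends on the dataset directory names.
CLASS_NAMES = ["normal", "osteoporosis"]


def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(logits, class_names=CLASS_NAMES):
    """Return the most probable class name and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_names[best], probs[best]


# Hypothetical raw outputs for one test image.
label, confidence = predict_label([0.2, 1.9])
print(f"predicted: {label} (confidence {confidence:.1%})")
```

In a real evaluation the raw scores would come from the trained model's output for each image in the test batch, and the resulting label and confidence would be written into the subplot title.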

Adapted from a publicly available dataset (ref. 54).

Test Images.

Conclusion, Challenges, and future work

Conclusion

The main goal of this study was to evaluate the performance of the ResNet-50 architecture with transfer learning in diagnosing osteoporosis from knee X-ray images. The X-ray images came from a custom dataset of 372 knee scans and were categorized into normal, osteopenia, and osteoporosis groups according to T-scores obtained with a Quantitative Ultrasound System (QUS), a medically recognized bone mineral density (BMD) test. ResNet-50 achieved the best performance, with 90% accuracy, compared with the previous approaches. This result demonstrates the high diagnostic capability of transfer learning with CNNs on knee X-ray examinations. The model offers a low-cost and convenient diagnostic system.
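The mapping from a T-score to a diagnostic group can be sketched as follows. This is an illustration that assumes the widely used WHO cut-offs (normal at −1.0 or above, osteopenia between −1.0 and −2.5, osteoporosis at −2.5 or below), which were defined for DXA; the exact thresholds applied to the QUS readings in this study are not stated here, and the function name is hypothetical.

```python
def categorize_t_score(t_score: float) -> str:
    """Map a T-score to a diagnostic group using the assumed WHO cut-offs."""
    if t_score <= -2.5:
        return "osteoporosis"
    if t_score < -1.0:
        return "osteopenia"
    return "normal"


# Hypothetical T-scores for illustration only.
for score in (0.3, -1.0, -1.8, -2.5, -3.1):
    print(f"T-score {score:+.1f}: {categorize_t_score(score)}")
```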

Challenges

This study has several limitations, as follows.

(a) The small number of images in the dataset, particularly in the normal and osteoporosis classes, affected the CNNs’ performance. Adding more images to each class can overcome this problem and improve the effectiveness of the networks.

(b) The T-scores were obtained with the QUS system, which provides unstable bone characteristics and has a different validation database than DXA-based BMD measurement. The QUS system is a cost-effective method of evaluating fracture risk by examining bones such as the calcaneus. Measuring BMD with DXA would therefore further validate our dataset.

(c) The clinical and other data gathered from the participants can also be used to predict a patient’s bone health and thereby improve diagnosis.

In conclusion, routine knee X-rays, regardless of the reason for the scan, can be processed by the CNN system and classified as osteoporotic or normal without further screening or expense. Such deep learning techniques are not yet widely available in medical artificial intelligence systems, but they can be highly useful in modern health care by providing early warning of the likelihood of disease.

Future work

In future work, more data can be collected from osteoporotic and healthy individuals to develop a general osteoporosis diagnosis method that can determine how osteoporosis in the knee relates to osteoporosis in other parts of the body. It may also be possible to build a system that identifies osteoporosis from both clinical characteristics and images.

Data availability

The data and code used in this work are publicly available on GitHub at https://github.com/shahidawkum/Osteoporosis-Knee-Classification.

References

Johnell, O. & Kanis, J. An estimate of the worldwide prevalence and disability associated with osteoporotic fractures. Osteoporos. Int. 17 (12), 1726–1733 (2006).

Kanis, J. Assessment of osteoporosis at the primary health-care level. Techn Rep, (2008).

Cooper, C. & Melton, L. J. Epidemiology of osteoporosis. Trends Endocrinol. Metabolism. 3 (6), 224–229 (1992).

Becker, D. J., Kilgore, M. L. & Morrisey, M. A. The societal burden of osteoporosis. Curr. Rheumatol. Rep. 12 (3), 186–191 (2010).

Mithal, A., Bansal, B., Kyer, C. S. & Ebeling, P. The Asia-pacific regional audit-epidemiology, costs, and burden of osteoporosis in India 2013: a report of international osteoporosis foundation. Indian J. Endocrinol. Metabol. 18 (4), 449–454 (2014).

Dimai, H. P. Use of dual-energy X-ray absorptiometry (DXA) for diagnosis and fracture risk assessment; WHO-criteria, T-and Z-score, and reference databases. Bone 104, 39–43 (2017).

Brett, A. D. & Brown, J. K. Quantitative computed tomography and opportunistic bone density screening by dual use of computed tomography scans. J. Orthop. Translation. 3 (4), 178–184 (2015).

Jiang, H. et al. Peripheral quantitative computed tomography (pQCT) measures contribute to the Understanding of bone fragility in older patients with low-trauma fracture. J. Clin. Densitometry. 21 (1), 140–147 (2018).

Wani, I. M. & Arora, S. Osteoporosis diagnosis in knee X-rays by transfer learning based on Convolution neural network. Multimedia Tools Appl. 82 (9), 14193–14217 (2023).

Ferizi, U. et al. Artificial intelligence applied to osteoporosis: a performance comparison of machine learning algorithms in predicting fragility fractures from MRI data. J. Magn. Reson. Imaging. 49 (4), 1029–1038 (2019).

Gregg, E. W. et al. The epidemiology of quantitative ultrasound: a review of the relationships with bone mass, osteoporosis and fracture risk. Osteoporos. Int. 7 (2), 89–99 (1997).

Hans, D. & Baim, S. Quantitative ultrasound (QUS) in the management of osteoporosis and assessment of fracture risk. J. Clin. Densitometry. 20 (3), 322–333 (2017).

Li, Q. et al. Medical image classification with convolutional neural network, in 13th international conference on control automation robotics & vision (ICARCV), IEEE, pp. 844–848 (2014).

Yadav, S. S. & Jadhav, S. M. Deep convolutional neural network based medical image classification for disease diagnosis. J. Big Data. 6 (1), 1–18 (2019).

Lu, S., Wang, S. H. & Zhang, Y. D. Detecting pathological brain via ResNet and randomized neural networks, Heliyon, vol. 6, no. 12, (2020).

Ambati, L. S., El-Gayar, O. & Nawar, N. Design principles for multiple sclerosis mobile self-management applications: a patient-centric perspective, (2021).

Ambati, L. S. & El-Gayar, O. Human activity recognition: a comparison of machine learning approaches, Journal of the Midwest Association for Information Systems (JMWAIS), no. 1, p. 4 (2021).

Chen, Y. et al. Fine-tuning ResNet for breast cancer classification from mammography, in The international conference on healthcare science and engineering, Springer, pp. 83–96 (2018).

Rahman, T. et al. Transfer learning with deep convolutional neural network (CNN) for pneumonia detection using chest X-ray. Appl. Sci. 10 (9), 3233 (2020).

Salau, A. O. & Jain, S. Computational modeling and experimental analysis for the diagnosis of cell survival/death for Akt protein. J. Genetic Eng. Biotechnol. 18 (1), 11 (2020).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition, in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770–778. (2016).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. ImageNet classification with deep convolutional neural networks. Commun. ACM. 60 (6), 84–90 (2017).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition, arXiv preprint arXiv:1409.1556, (2014).

Lee, S., Choe, E. K., Kang, H. Y., Yoon, J. W. & Kim, H. S. The exploration of feature extraction and machine learning for predicting bone density from simple spine X-ray images in a Korean population. Skeletal Radiol. 49 (4), 613–618 (2020).

Torrey, L. & Shavlik, J. Transfer learning, in Handbook of Research on Machine Learning Applications and Trends: algorithms, methods, and Techniques: IGI Global Scientific Publishing, 242–264. (2010).

Wani, I. M. & Arora, S. Deep neural networks for diagnosis of osteoporosis: a review, Proceedings of ICRIC 2019: Recent Innovations in Computing, pp. 65–78, (2019).

Wani, I. M. & Arora, S. Computer-aided diagnosis systems for osteoporosis detection: a comprehensive survey. Med. Biol. Eng. Comput. 58 (9), 1873–1917 (2020).

Court-Brown, C. M. & Caesar, B. Epidemiology of adult fractures: a review, Injury, vol. 37, no. 8, pp. 691–697, (2006).

Stange, R. & Raschke, M. J. Osteoporotic distal femoral fractures: when to replace and how, in Surgical and Medical Treatment of Osteoporosis: CRC, 235–244. (2020).

Ali, A. M., El-Shafie, M. & Willett, K. Failure of fixation of tibial plateau fractures. J. Orthop. Trauma. 16 (5), 323–329 (2002).

Mallina, R., Kanakaris, N. K. & Giannoudis, P. V. Peri-articular fractures of the knee: an update on current issues. Knee 17 (3), 181–186 (2010).

Wang, S. P. et al. Association of osteoporosis and varus inclination of the tibial plateau in postmenopausal women with advanced osteoarthritis of the knee. BMC Musculoskelet. Disord. 22 (1), 223 (2021).

Porkar, P. et al. Enhancing cancer zone diagnosis in MRI images: A novel SOM neural network approach with block processing in the presence of noise. Iran. J. Blood Cancer. 17 (2), 34–45 (2025).

Chen, D. Y. et al. Predicting Cardiovascular Risk in Patients with Prostate Cancer Receiving Abiraterone or Enzalutamide by Using Machine Learning, Cancers, vol. 17, no. 15, p. 2414, (2025).

Tajvidi Asr, R. et al. Hematology and hematopathology insights powered by machine learning: shaping the future of blood disorder management. Iran. J. Blood Cancer. 16 (4), 9–19 (2024).

Haq, I. et al. Machine vision approach for diagnosing tuberculosis (TB) based on computerized tomography (CT) scan images. Symmetry 14 (10), 1997 (2022).

Shahzad, T., Mazhar, T., Saqib, S. M. & Ouahada, K. Transformer-inspired training principles based breast cancer prediction: combining EfficientNetB0 and ResNet50. Sci. Rep. 15 (1), 13501 (2025).

Naeem, I. et al. Voice biomarkers as prognostic indicators for parkinson’s disease using machine learning techniques. Sci. Rep. 15 (1), 12129 (2025).

Tsai, D. J. et al. Artificial intelligence-enabled chest X-ray classifies osteoporosis and identifies mortality risk. J. Med. Syst. 48 (1), 12 (2024).

Feng, S. W., Lin, S. Y., Chiang, Y. H., Lu, M. H. & Chao, Y. H. Deep learning-based hip x-ray image analysis for predicting osteoporosis. Appl. Sci. 14 (1), 133 (2023).

Tejaswini, E., Vaishnavi, P. & Sunitha, R. Detection and prediction of osteoporosis using impulse response technique and artificial neural network, in International Conference on Advances in Computing, Communications and Informatics (ICACCI), IEEE, pp. 1571–1575 (2016).

Jang, R. et al. Prediction of osteoporosis from simple hip radiography using deep learning algorithm. Sci. Rep. 11 (1), 19997 (2021).

Iliou, T., Anagnostopoulos, C. N. & Anastassopoulos, G. Osteoporosis detection using machine learning techniques and feature selection. Int. J. Artif. Intell. Tools. 23 (05), 1450014 (2014).

Gharib, H. et al. Medical guidelines for clinical practice for the diagnosis and management of thyroid nodules. Endocr. Pract. 12 (1), 63–102 (2006).

Suh, B. et al. Interpretable deep-learning approaches for osteoporosis risk screening and individualized feature analysis using large population-based data: model development and performance evaluation. J. Med. Internet. Res. 25, e40179 (2023).

Hussein, R. S. & Wahdan, M. M. Knowledge about symptoms and risk factors of osteoporosis among adult women in Cairo, Egypt. Egypt J. Commun. Med, 39 (2), 59-68 (2021).

Fasihi, L., Tartibian, B., Eslami, R. & Fasihi, H. Artificial intelligence used to diagnose osteoporosis from risk factors in clinical data and proposing sports protocols. Sci. Rep. 12 (1), 18330 (2022).

Mebarkia, M., Meraoumia, A., Houam, L. & Khemaissia, S. Improved osteoporosis detection process using lightweight deep features. AIJR Abstracts. 134–135 (2024).

Zhang, B. et al. Deep learning of lumbar spine X-ray for osteopenia and osteoporosis screening: A multicenter retrospective cohort study. Bone 140, 115561 (2020).

Dhanyavathi, A. & Veena, M. Osteoporosis detection and classification of femur X-ray images through spectral domain analysis using texture features. International J. Adv. Comput. Sci. Applications, 14, 9, (2023).

Yu, X. & Wang, S. H. Abnormality diagnosis in mammograms by transfer learning based on ResNet18. Fundamenta Informaticae. 168, 2–4 (2019).

Akbar, S. et al. Prediction of amyloid proteins using embedded evolutionary & ensemble feature selection based descriptors with eXtreme gradient boosting model. IEEE Access. 11, 39024–39036. https://doi.org/10.1109/ACCESS.2023.3268523 (2023).

Ullah, M., Akbar, S., Raza, A., Khan, K. A. & Zou, Q. TargetCLP: clathrin proteins prediction combining transformed and evolutionary scale modeling-based multi-view features via weighted feature integration approach. Brief. Bioinform. 26 (1). https://doi.org/10.1093/bib/bbaf026 (2025).

Raza, A. et al. Comprehensive analysis of computational methods for predicting Anti-inflammatory peptides. Biochem Pharmacol. 31 (6), 3211–3229. https://doi.org/10.1007/s11831-024-10078-7 (2024).

Hayat, M. et al. pACPs-DNN: predicting anticancer peptides using novel peptide transformation into evolutionary and structure matrix-based images with self-attention deep learning model. Comput. Biol. Chem. 117, 108441 (2025).

Guo, M. & Du, Y. Classification of thyroid ultrasound standard plane images using ResNet-18 networks, in IEEE 13th international conference on anti-counterfeiting, security, and identification (ASID), IEEE, pp. 324–328 (2019).

Liu, D., Liu, Y. & Dong, L. G-ResNet: Improved ResNet for brain tumor classification, in International Conference on Neural Information Processing, Springer, pp. 535–545 (2019).

Abubakar, U. B., Boukar, M. M. & Adeshina, S. Evaluation of parameter fine-tuning with transfer learning for osteoporosis classification in knee radiograph. Int. J. Adv. Comput. Sci. Appl. 13, 8 (2022).

Lee, K. S., Jung, S. K., Ryu, J. J., Shin, S. W. & Choi, J. Evaluation of transfer learning with deep convolutional neural networks for screening osteoporosis in dental panoramic radiographs. J. Clin. Med. 9 (2), 392 (2020).

Yamamoto, N. et al. Deep learning for osteoporosis classification using hip radiographs and patient clinical covariates, Biomolecules, vol. 10, no. 11, p. 1534, (2020).

Sukegawa, S. et al. Identification of osteoporosis using ensemble deep learning model with panoramic radiographs and clinical covariates. Sci. Rep. 12 (1), 6088 (2022).

Akbar, S., Ahmad, A. & Hayat, M. Iris detection by discrete sine transform based feature vector using random forest, JAEBS, vol. 4, pp. 19–23, (2014).

Akbar, S., Ullah, M., Raza, A., Zou, Q. & Alghamdi, W. DeepAIPs-Pred: predicting anti-inflammatory peptides using local evolutionary transformation images and structural embedding-based optimal descriptors with Self-Normalized BiTCNs. J. Chem. Inf. Model. 64 (24), 9609–9625 (2024).

Qureshi, M. S. et al. AI based predictive acceptability model for effective vaccine delivery in healthcare systems. Sci. Rep. 14 (1), 26657 (2024).

Akbar, S. et al. DeepAIPs-SFLA: deep convolutional model for prediction of Anti-Inflammatory peptides using binary pattern decomposition of novel multiview descriptors with an SFLA approach. ACS Omega, 10(32), 35747-35762 (2025).

Akbar, S. et al. Accelerating prediction of antiviral peptides using genetic Algorithm-Based weighted multiperspective descriptors with Self-Normalized deep networks. J. Chem. Inf. Model. https://doi.org/10.1021/acs.jcim.5c01777 (2025).

Raza, A. et al. AIPs-SnTCN: predicting anti-inflammatory peptides using fasttext and transformer encoder-based hybrid word embedding with self-normalized Temporal convolutional networks. J. Chem. Inf. Model. 63 (21), 6537–6554 (2023).

Akbar, S., Raza, A. & Zou, Q. Deepstacked-AVPs: predicting antiviral peptides using tri-segment evolutionary profile and word embedding based multi-perspective features with deep stacking model. BMC Bioinform. 25 (1), 102 (2024).

Akbar, S., Zou, Q., Raza, A. & Alarfaj, F. K. iAFPs-Mv-BiTCN: predicting antifungal peptides using self-attention transformer embedding and transform evolutionary based multi-view features with bidirectional Temporal convolutional networks. Artif. Intell. Med. 151, 102860 (2024).

Ullah, M., Akbar, S., Raza, A. & Zou, Q. DeepAVP-TPPred: identification of antiviral peptides using transformed image-based localized descriptors and binary tree growth algorithm, Bioinformatics, vol. 40, no. 5, p. btae305, (2024).

Raza, A. et al. AIPs-DeepEnC-GA: predicting anti-inflammatory peptides using embedded evolutionary and sequential feature integration with genetic algorithm based deep ensemble model. Chemometr. Intell. Lab. Syst. 254, 105239 (2024).

Shamas, M. et al. Classification of pulmonary diseases from chest radiographs using deep transfer learning. PloS One. 20 (3), e0316929 (2025).

Acknowledgements

This work was supported by university research grant PP-IVI-2025 and the National Natural Science Foundation of China (No. 62131004).

Funding

This research was supported by Universiti Kebangsaan Malaysia under code TAP-K020558 and by the National Natural Science Foundation of China (No. 62131004).

Author information

Contributions

MB.Q.: model creation, methodology, writing. M.S.: implementation, writing, validation. A.R.: interpretation, model creation, writing. MS.Q.: data curation, model visualization, model supervision. W.A.: writing, interpretation, implementation. S.A.: supervision, idea, proofreading. RA.K.: model validation, funding, formal analysis. MR.S.: visualization, writing, resources, formal analysis. All authors reviewed and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Qureshi, M.B., Sani, M., Raza, A. et al. Deep‑learning based osteoporosis classification in knee X‑rays using transfer‑learning approach. Sci Rep 15, 38448 (2025). https://doi.org/10.1038/s41598-025-24338-4

DOI: https://doi.org/10.1038/s41598-025-24338-4
