Abstract

Vision is a fundamental sense that profoundly affects daily life and independence. For visually impaired people (VIPs), the absence or impairment of this sense makes it difficult to navigate their environment and identify objects independently, which restricts their daily activities and routines. Various investigations have been conducted in the domain of real-time object detection (OD) using deep learning (DL), and DL-based techniques have been shown to attain strong performance in OD. In this manuscript, an Intelligent Object Detection-Based Assistive System Using Ivy Optimisation Algorithm (IODAS-IOA) model is proposed for VIPs. The aim is to develop an effective OD system to help visually impaired persons navigate their environment safely and independently. To achieve this, the image pre-processing stage first employs the Gaussian filtering (GF) method to eliminate noise. The YOLOv12 method is then employed for the OD process, and the DenseNet161 method is used for feature extraction. The bidirectional gated recurrent unit with attention mechanism (BiGRU-AM) method is implemented for classifying the extracted features. Finally, parameter tuning is performed using the Ivy optimization algorithm (IOA) to improve classification performance. Extensive experimentation was performed to validate the IODAS-IOA approach on the indoor OD dataset. The experimental results showed that the IODAS-IOA approach attains a superior accuracy of 99.74% over recent techniques.

Introduction

Human life relies on the five basic senses, among which vision is undoubtedly one of the most significant1. VIPs lack this sense, which makes accomplishing everyday activities very difficult for them2. Help from assisting staff can ease these problems only for a short time, and some situations can be deadly, not only for the impaired person but also for people in the surrounding area3. Object recognition is a vital capability that VIPs often rely on, yet they typically have difficulty recognizing objects and actions in their surroundings, particularly when walking on the road. Hence, object recognition methods help VIPs live self-reliantly4. Numerous applications, processes, devices, and systems have been established in the field of assistive technology to help individuals with disabilities perform activities that they were previously unable to do5. Such solutions typically incorporate electronic devices equipped with microprocessors, cameras, and sensors, which enable them to make decisions and provide auditory or tactile feedback to the user.

Many current object recognition and identification methods achieve high precision but cannot deliver the essential data and features needed to track VIPs and ensure their safe mobility6. Because blind people cannot see the objects in their environment, being made aware of them is beneficial. Additionally, there is a need for a tracking system that allows VIPs’ family members to monitor their activities7. Navigation systems also play a vital part in improving the mobility and independence of VIPs. First, these systems build confidence by providing practical details about the environment and guiding users along routes, allowing them to explore new areas with assurance and gain greater independence in everyday life8. Moreover, these systems enhance the safety of VIPs during travel by providing the necessary audio signals and guiding them to avoid obstacles, navigate complex public transportation networks, and cross streets safely, thereby reducing the risk of injuries and accidents and making both indoor and outdoor movement safer and more controllable9. Several studies are under way in the real-time object recognition domain using DL, and recent DL-driven models outperform conventional object recognition methods by large margins10.

In this manuscript, an Intelligent Object Detection-Based Assistive System Using Ivy Optimisation Algorithm (IODAS-IOA) model is proposed for VIPs. The aim is to develop an effective OD system to help visually impaired persons navigate their environment safely and independently. To achieve this, the image pre-processing stage first employs the Gaussian filtering (GF) method to eliminate noise. The YOLOv12 method is then employed for the OD process, and the DenseNet161 method is used for feature extraction. The bidirectional gated recurrent unit with attention mechanism (BiGRU-AM) method is implemented for classifying the extracted features. Finally, parameter tuning is performed using the Ivy optimization algorithm (IOA) to improve classification performance. Extensive experimentation was performed to validate the IODAS-IOA approach on the indoor OD dataset. The main contributions are listed below.

- The GF model is first applied to reduce noise and improve image clarity, resulting in more accurate OD, particularly in low-light and cluttered environments. Enhancing image quality early ensures better performance in subsequent stages, which directly supports reliable navigation assistance for VIPs.

- The YOLOv12 technique is used to achieve real-time, high-precision OD in indoor environments. The model detects multiple objects simultaneously with minimal latency, which facilitates safer and more informed navigation for VIPs and strengthens the responsiveness and adaptability of the system in dynamic indoor settings.

- The DenseNet161 approach is employed for deep and efficient feature extraction, capturing rich spatial and contextual details from detected objects. This strengthens the semantic comprehension of indoor scenes and improves classification accuracy. By preserving complex feature hierarchies, the system attains superior object representation and enhances the reliability of assistive feedback for VIPs.

- The BiGRU-AM methodology captures temporal dependencies and emphasizes salient features. This improves the classification of visually similar or partially occluded objects within indoor scenes and enables more context-aware recognition, enhancing decision-making accuracy and ensuring reliable and precise assistance for VIPs in dynamic environments.

- The IOA-based hyperparameter tuning yields enhanced classification accuracy and faster convergence. The optimization improves the overall robustness and effectiveness of the model compared to conventional methods and helps each component operate close to its best performance level, which enhances the reliability of the assistive system in providing real-time support to visually impaired users.

- The novel IODAS-IOA framework integrates YOLOv12 for detection, DenseNet161 for deep feature extraction, and BiGRU with attention for context-aware classification, with the IOA tuning performance across the stages. This method enables real-time indoor object recognition for VIPs, outperforming existing methods by improving accuracy, adaptability, and responsiveness in both static and dynamic indoor environments.

Literature review

Yu and Saniie11 introduced the Visual Impairment Spatial Awareness (VISA) device, designed to holistically assist individuals with visual impairments in indoor routines through an organized, multi-layered approach. The device integrates information from its component tools to assist in intricate navigational tasks, namely pathfinding and obstacle avoidance. Kan et al.12 presented a proof-of-concept wearable navigation device called eLabrador, intended to help VIPs with long-distance walking in unfamiliar outdoor settings. Specifically, eLabrador utilizes a head-mounted RGB-D camera to capture objects and the geometric terrain of the environment in outdoor city settings, and this hybrid model allows precise and safe navigation for VIPs in outdoor environments. Joshi et al.13 proposed an AI-enabled advanced wearable assistive system called SenseVision for analyzing sensory and visual information regarding objects and difficulties encountered while observing the surrounding area. This system integrates computer vision (CV) and sensor-based techniques, which generate audio information about recognized objects or acoustic warnings for identified hazards. In14, the Indoor-Outdoor YOLO Glass network (In-Out YOLO), a video-based OD technique, is presented to support VIPs by giving details about nearby objects and facilitating independent navigation. For VIPs, this technique enables the detection and avoidance of objects that affect their day-to-day actions and their ability to operate in both outdoor and indoor environments. Kuriakose et al.15 presented DeepNAVI, a smartphone-aided navigation assistant that uses DL capabilities. Besides presenting information about obstacles, this method also provides details about their posture, scene information, motion status, and distance from the user. All these details are presented to users as audio without compromising convenience and mobility. 
With a rapid inference time and a small model size, this navigation assistant can be deployed on a handheld device, such as a smartphone, and works effortlessly in practical settings. In16, a new saliency-induced moving OD (SMOD) method is introduced to remove feature outliers for RGB-D-enabled simultaneous localization and mapping (SLAM) in challenging adaptive workplaces, so that objects can be recognized efficiently under varying circumstances. In17, an effective approach is proposed to support the everyday routines of VIPs through indoor object recognition using a recently developed Honey Adam African Vultures Optimisation (HAAVO) method. Here, object recognition and detection are performed by DCNN and GAN, and the deep residual network (DRN) and DCNN classifier, optimally trained through the suggested HAAVO, are applied to estimate distances.

Htet et al.18 proposed a privacy-preserving elderly activity monitoring system that utilizes stereo depth cameras and DL techniques for real-time recognition of daily actions and transitions, with high accuracy and adaptability through TL. Lin et al.19 developed SLAM2, a semantic RGB-D Simultaneous Localisation and Mapping (SLAM) model, which accurately estimates camera and object 6 Degrees of Freedom (6DOF) poses in dynamic environments by incorporating geometric features and DL for robust 3D mapping. Jung and Nam20 proposed a technique for enhancing real-time fall detection by comparing the YOLOv11 and Real-Time Detection Transformer version 2 (RT-DETRv2) models. A YOLO-based system with feature map-based knowledge distillation is also proposed for improved accuracy and speed. Zhang et al.21 proposed a YOLOv5-based approach, which incorporates GhostNet as the backbone, a coordinate attention mechanism (CAM), and a Bidirectional Feature Pyramid Network (BiFPN), and integrates it with RealSense D435i depth sensing and a voice interface on a Raspberry Pi 4 B platform. Pratap, Kumar, and Chakravarty22 evaluated and compared YOLO and Single Shot Multibox Detector (SSD), as well as faster region-based CNN (R-CNN) and Mask R-CNN, for accurate and efficient indoor object detection to assist VIPs in real-time navigation. Afif et al.23 developed an efficient object detection system using an optimized version of the You Only Look Once version 3 (YOLOv3) technique. Sachin et al.24 developed an AI-based indoor navigation system for VIPs utilizing YOLO for OD, depth sensing for distance estimation, and real-time voice output for improved spatial awareness and room classification. 
Saida and Parthipan25 compared the efficiency of YOLO and Residual Network 50 (ResNet50) methods for improving motion detection accuracy using DL models. Han et al.26 presented a SimAM and BiFPN-enhanced YOLO version 7 (SBP-YOLOv7) model that integrates SimAM attention, Short-BiFPN, Partial Convolution (PConv), Group Shuffle Convolution (GSConv), and VoVGSCSP modules for optimized feature extraction and reduced complexity. Zhao et al.27 proposed a methodology by enhancing the YOLO version 8 nano (YOLOv8n) with ParNetAttention and CARAFE modules.

Although existing studies are effective in indoor OD for VIPs, they have various limitations. Some techniques face challenges in terms of real-time processing efficiency and high computational demands, which restrict their portability and practical usability. User trust may be compromised because privacy concerns are insufficiently addressed, and several techniques exhibit reduced accuracy and robustness in intricate, dynamic indoor environments with varying lighting and occlusions. Several models lack seamless integration of multimodal data, such as depth sensing and voice interaction, in lightweight, cost-effective devices. There is also insufficient emphasis on handling small object detection and adapting models to diverse indoor settings. The research gap therefore lies in developing efficient, privacy-aware, and adaptable frameworks that balance accuracy, speed, and hardware constraints to enhance real-world usability for VIP indoor navigation and assistance.

Methodological framework

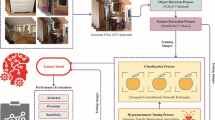

In this manuscript, the IODAS-IOA model is proposed for VIPs. The IODAS-IOA model aims to provide an effective OD system that helps visually impaired persons navigate their environment safely and independently. To attain this, the presented IODAS-IOA model comprises image pre-processing, OD, feature extraction, classification, and parameter tuning stages. Figure 1 indicates the complete workflow of the IODAS-IOA technique.

Image pre-processing: GF model

Initially, the image pre-processing stage employs the GF technique28. This model effectively mitigates noise while preserving crucial edge details, which is significant for accurate OD in indoor environments. The GF presents a smooth and consistent blur that improves image quality without introducing artefacts, unlike median or bilateral filters (BFs). Its computational simplicity facilitates real-time applications, crucial for assistive systems aimed at visually impaired users. Furthermore, this method is highly capable of handling Gaussian noise commonly seen in low-light and cluttered indoor scenes, making it superior to other filtering techniques. The balance between noise reduction and edge preservation improves the overall robustness and reliability of the OD process. Consequently, GF provides a robust foundation for downstream tasks, such as feature extraction and classification, ensuring higher accuracy and faster processing.

GF is an extensively applied image pre-processing model that smooths images by minimizing noise and detail, utilizing a Gaussian function. In OD for VIP, GF plays a crucial role in enhancing the input image quality before the detection methods process it. By using a Gaussian blur, this model suppresses higher-frequency noise, making object edges and contours more distinct for feature extraction. This results in enhanced precision in identifying related objects inside cluttered or complex environments. Additionally, GF enables steady detection performance under changing lighting conditions, ensuring the assistance system provides consistent and reliable guidance for visually impaired individuals in real-time settings.
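The smoothing step described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it assumes a single-channel image stored as a NumPy array, and the function names, the 3σ kernel radius, and the reflect padding are choices made here for the example.

```python
import numpy as np

def gaussian_kernel_1d(sigma: float, radius: int) -> np.ndarray:
    """Return a normalised 1-D Gaussian kernel of length 2 * radius + 1."""
    offsets = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-(offsets ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()

def gaussian_filter_2d(image: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Smooth a 2-D greyscale image with a separable Gaussian filter."""
    radius = max(1, int(round(3.0 * sigma)))  # assumed truncation at about 3 sigma
    kernel = gaussian_kernel_1d(sigma, radius)
    # Reflect-pad so that the output has the same size as the input.
    padded = np.pad(image.astype(np.float64), radius, mode="reflect")
    # A 2-D Gaussian is separable: filter along rows, then along columns.
    rows = np.apply_along_axis(
        lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(
        lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)

# Synthetic example: a flat grey image corrupted by Gaussian noise.
rng = np.random.default_rng(0)
clean = np.full((32, 32), 100.0)
noisy = clean + rng.normal(0.0, 10.0, size=clean.shape)
smoothed = gaussian_filter_2d(noisy, sigma=1.5)
print(noisy.std(), smoothed.std())  # the smoothed image varies less
```

A larger `sigma` removes more noise but also blurs edges more strongly, so in practice the value would be chosen with the downstream detector in mind.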

OD: YOLOv12 technique

Next, the YOLOv12 method is employed for the OD process. This method is chosen for its balance between speed and accuracy, which makes it well suited to real-time applications such as assistive systems for visually impaired users. Compared to prior YOLO versions or methods such as Faster R-CNN or SSD, this technique offers enhanced feature representation and improved handling of small and overlapping objects, which are common in indoor environments. Its single-stage architecture enables faster inference, and its scalability and robustness across diverse object categories enhance its versatility. Thus, the YOLOv12 model is considered well suited to indoor OD. Figure 2 indicates the structure of the YOLOv12 method.

YOLOv12 is designed to strike a balance between precision and speed, making it suitable for real-time applications. Its framework combines attention mechanisms (AMs) that allow it to focus on relevant aspects of an image while neglecting irrelevant details29. At the core of YOLOv12 lies an attention-centric design that sets it apart from purely CNN-based detectors. Although CNNs excel at extracting spatial features, they frequently struggle to capture longer‐range dependencies and intricate relations within an image. YOLOv12 addresses these limitations by incorporating AMs that dynamically weigh the significance of various image areas. YOLOv12 comprises three significant enhancements designed to improve efficiency and performance. First, area attention (AA) segments the image into vertical or horizontal regions, reducing computational complexity while maintaining a large receptive field. This facilitates the handling of varying object sizes and densities, as well as the processing of high-resolution images. Second, it integrates the Residual Efficient Layer Aggregation Networks (R-ELAN) model to address the vanishing gradient issue and improve training stability and feature aggregation through residual connections. Lastly, YOLOv12 features an optimized architecture that eliminates redundant layers, balances attention and convolutional operations, and integrates Flash Attention to improve memory access and inference speed.

The attention-centric design of YOLOv12 is motivated by the success of transformer methodologies in CV and natural language processing (NLP). Whereas conventional CNNs apply convolutional filters uniformly across an image, AMs enable the method to focus on specific areas adaptively.
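To make the idea of area attention concrete, the following is a simplified sketch rather than the actual YOLOv12 block. It assumes a feature map of shape (height, width, channels), splits it into horizontal strips, and runs plain scaled dot-product self-attention inside each strip. The learned query, key, and value projections and all other components of the real architecture are deliberately omitted, and the function names are hypothetical.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def area_attention(features: np.ndarray, num_areas: int = 4) -> np.ndarray:
    """Self-attention restricted to horizontal strips of a (H, W, C) map."""
    height, width, channels = features.shape
    if height % num_areas != 0:
        raise ValueError("height must be divisible by num_areas")
    # Row-major reshape groups consecutive rows into the same area:
    # (num_areas, tokens_per_area, channels).
    tokens = features.reshape(num_areas, (height // num_areas) * width, channels)
    # Simplification: queries, keys, and values are the tokens themselves.
    scores = tokens @ tokens.transpose(0, 2, 1) / np.sqrt(channels)
    weights = softmax(scores, axis=-1)
    attended = weights @ tokens
    return attended.reshape(height, width, channels)

rng = np.random.default_rng(0)
feature_map = rng.normal(size=(8, 8, 16))
out = area_attention(feature_map, num_areas=4)
print(out.shape)  # same shape as the input feature map
```

With N tokens in total, global attention forms an N × N score matrix, whereas the strip-wise version forms `num_areas` matrices of size (N / `num_areas`)², which is the source of the reduced cost mentioned above.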

Feature extraction: DenseNet161 approach

Following this, the IODAS-IOA model utilizes the DenseNet161 model for the feature extraction process30. This model is effective for training and feature representation due to its dense connectivity pattern, which promotes feature reuse and mitigates the vanishing gradient problem. Compared to other CNN methods such as ResNet or VGG, the DenseNet161 model requires fewer parameters while capturing complex contextual and spatial information, which is important for discriminating between similar indoor objects. Its deep layers enable the extraction of high-level semantic features, enhancing classification performance in intricate scenes. Moreover, the model’s strong generalization ability enhances its robustness across diverse indoor environments. Thus, this technique is considered appropriate for reliable and accurate feature extraction in assistive systems for visually impaired users.

DenseNet161 represents a shift in CNN structures, characterized by its dense connectivity pattern, which facilitates feature reuse and gradient flow throughout the network. Known for its strong performance in capturing complex visual representations, it has proven to be a foundation in tasks such as image classification, particularly in settings that require strong feature extraction. In real-world settings, this model has shown high efficiency in several fields, including remote sensing and medical image analysis. Its dense connectivity and hierarchical framework facilitate the transmission of low-level information, allowing the method to capture increasingly abstract representations as information passes deeper into the network. Structurally, it comprises a sequence of dense blocks, each consisting of densely linked layers that receive direct input from every previous layer within the block. This design enables effective training and improved model expressiveness. In addition, transition layers and global average pooling operations further enhance feature aggregation and classification performance, making this model a strong competitor among image classification architectures.
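The effect of dense connectivity on feature-map width can be illustrated with a short calculation. The sketch below is an illustration only: the configuration values (96 initial channels, growth rate 48, dense blocks of 6, 12, 36, and 24 layers, and halving of channels in each transition layer) are the commonly published DenseNet-161 settings and are not stated in this manuscript, and the function name is hypothetical.

```python
def densenet_channels(init_features: int = 96,
                      growth_rate: int = 48,
                      block_config: tuple = (6, 12, 36, 24),
                      compression: float = 0.5) -> list:
    """Return the channel count at the output of each dense block."""
    channels = init_features
    per_block = []
    for index, num_layers in enumerate(block_config):
        # Each layer concatenates growth_rate new feature maps onto
        # everything produced before it within the block.
        channels += num_layers * growth_rate
        per_block.append(channels)
        # A transition layer follows every block except the last one.
        if index != len(block_config) - 1:
            channels = int(channels * compression)
    return per_block

block_outputs = densenet_channels()
print(block_outputs)  # [384, 768, 2112, 2208]
```

Under these assumptions, the final dense block yields a 2208-dimensional descriptor after global average pooling, which is the kind of feature vector a downstream classifier would consume.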

Classification: BiGRU-AM method

Next, the BiGRU-AM technique is implemented for the classification process31. Through its bidirectional architecture, this technique captures both past and future contextual data, improving the understanding of sequential dependencies in visual data. The integration of the AM emphasizes the most relevant features, thereby improving the discrimination between similar or partially occluded objects. Compared to conventional RNNs or unidirectional GRUs, this method handles the intricate patterns and noise commonly seen in indoor environments more efficiently. Its ability to model temporal relationships and highlight crucial cues results in higher classification accuracy, which makes BiGRU-AM particularly appropriate for real-time, reliable classification in assistive systems for VIPs.

The GRU is built around two gates, the reset gate and the update gate. The update gate determines how much information from earlier time steps is retained and passed on to the future, while the reset gate controls how much of the previous information is discarded.

The reset gate \({r}_{t}\) and the update gate \({z}_{t}\) are computed as follows:

Here, \({h}_{t}\) denotes the current hidden state (memory) at time step \(t=1,2,\dots,n\), which is obtained from the candidate hidden state; \({h}_{t-1}\) represents the preceding memory; \({x}_{t}\) specifies the new input; \({b}_{z},{b}_{r},{b}_{h}\) refer to the bias vectors; and \({W}_{xz},{W}_{hz},{W}_{xr},{W}_{hr},{W}_{xh},{W}_{hh}\) indicate the weight matrices. \(\sigma\) signifies the sigmoid activation function, and \(*\) represents the Hadamard (element-wise) product.
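The gate equations themselves are not reproduced in this text. For reference, the standard GRU formulation that matches the symbols defined above would read as follows, where \(\tilde{h}_{t}\) is used here as a label for the candidate hidden state and the direction of the final interpolation is one of two common conventions, so it should be read as an assumption rather than as the authors' exact notation:

\[
\begin{aligned}
z_{t} &= \sigma\left(W_{xz}\,x_{t} + W_{hz}\,h_{t-1} + b_{z}\right),\\
r_{t} &= \sigma\left(W_{xr}\,x_{t} + W_{hr}\,h_{t-1} + b_{r}\right),\\
\tilde{h}_{t} &= \tanh\left(W_{xh}\,x_{t} + W_{hh}\left(r_{t} * h_{t-1}\right) + b_{h}\right),\\
h_{t} &= \left(1 - z_{t}\right) * h_{t-1} + z_{t} * \tilde{h}_{t}.
\end{aligned}
\]

When \(z_{t}\) is close to zero the previous memory is carried over almost unchanged, and when \(r_{t}\) is close to zero the candidate state is computed almost entirely from the new input.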

The bidirectional form of the GRU, termed Bi-GRU, processes data in two directions: forward and backward. These two passes enable the method to extract the relevant information from both the preceding and the following parts of the data sequence. This framework allows intricate temporal dependencies to be captured by considering the entire sequence during training.
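The two-direction processing can be sketched as follows. This is a minimal NumPy illustration with randomly initialised weights, not the trained classifier from this work; it assumes the standard GRU update with the convention h_t = (1 - z_t) * h_{t-1} + z_t * h_candidate, and all function and parameter names are hypothetical.

```python
import numpy as np

def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))

def init_gru_params(input_dim: int, hidden_dim: int, rng) -> dict:
    """Random weights for the update (z), reset (r), and candidate (h) parts."""
    params = {}
    for gate in ("z", "r", "h"):
        params["W_x" + gate] = rng.normal(0.0, 0.1, size=(hidden_dim, input_dim))
        params["W_h" + gate] = rng.normal(0.0, 0.1, size=(hidden_dim, hidden_dim))
        params["b_" + gate] = np.zeros(hidden_dim)
    return params

def gru_step(x_t: np.ndarray, h_prev: np.ndarray, p: dict) -> np.ndarray:
    """One GRU time step (assumed standard formulation)."""
    z = sigmoid(p["W_xz"] @ x_t + p["W_hz"] @ h_prev + p["b_z"])
    r = sigmoid(p["W_xr"] @ x_t + p["W_hr"] @ h_prev + p["b_r"])
    h_cand = np.tanh(p["W_xh"] @ x_t + p["W_hh"] @ (r * h_prev) + p["b_h"])
    return (1.0 - z) * h_prev + z * h_cand

def run_gru(sequence: np.ndarray, p: dict, hidden_dim: int) -> np.ndarray:
    """Run a GRU over a (T, input_dim) sequence and return all hidden states."""
    h = np.zeros(hidden_dim)
    states = []
    for x_t in sequence:
        h = gru_step(x_t, h, p)
        states.append(h)
    return np.stack(states)

def bigru(sequence: np.ndarray, p_fwd: dict, p_bwd: dict, hidden_dim: int) -> np.ndarray:
    """Concatenate forward states with re-aligned backward states."""
    forward = run_gru(sequence, p_fwd, hidden_dim)
    backward = run_gru(sequence[::-1], p_bwd, hidden_dim)[::-1]
    return np.concatenate([forward, backward], axis=1)

rng = np.random.default_rng(0)
steps, input_dim, hidden_dim = 4, 4, 3
sequence = rng.normal(size=(steps, input_dim))
p_fwd = init_gru_params(input_dim, hidden_dim, rng)
p_bwd = init_gru_params(input_dim, hidden_dim, rng)
states = bigru(sequence, p_fwd, p_bwd, hidden_dim)
print(states.shape)  # (4, 6): forward and backward halves per time step
```

At every time step the first half of the state summarises the inputs seen so far, while the second half summarises the inputs still to come, so each position has access to context from both ends of the sequence.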

The weighted feature \({\widehat{x}}_{t}\) replaces the original input \({x}_{t}\) as the neural network’s input for time-series data. In particular, the AM is computed as follows:

The attention score \({e}_{t}\) is calculated from the input \({x}_{t}\), the preceding state \({s}_{t-1}\), and the prior attention weight \({\alpha}_{t-1}\) for time \(t=1,\dots,T\) and observation \(j=1,\dots,n\).
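The general pattern of turning scores into weights and weighted features can be sketched as below. This is a generic softmax attention illustration and not the scoring function of this work: the score here depends only on the input, whereas the description above also conditions on the preceding state and the prior attention weight. The scoring vector and the function names are hypothetical.

```python
import numpy as np

def attention_weights(scores: np.ndarray) -> np.ndarray:
    """Normalise raw scores into weights that are positive and sum to one."""
    shifted = scores - scores.max()  # subtract the maximum for stability
    exp = np.exp(shifted)
    return exp / exp.sum()

def attend(features: np.ndarray, score_vector: np.ndarray):
    """Weight n feature vectors of size d by softmax-normalised scores."""
    scores = np.tanh(features @ score_vector)  # assumed toy scoring function
    alpha = attention_weights(scores)
    weighted = alpha[:, None] * features       # one weight per feature vector
    return alpha, weighted

rng = np.random.default_rng(0)
features = rng.normal(size=(5, 8))
score_vector = rng.normal(size=8)
alpha, weighted = attend(features, score_vector)
print(alpha.sum())  # weights form a probability distribution
```

Feature vectors with larger scores receive larger weights and therefore contribute more to whatever the subsequent layers compute.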

Parameter tuning: IOA process

Finally, the parameter tuning process is performed using the IOA model to enhance classification performance32. This model is chosen for its superior capability in exploring the hyperparameter space and avoiding local minima compared to conventional optimization methods, such as grid search or gradient-based techniques. This method effectively balances exploration and exploitation, leading to faster convergence and enhanced model performance. Its adaptive nature allows for the dynamic adjustment of parameters, thereby improving robustness and accuracy in complex models. Furthermore, IOA requires fewer iterations, mitigating computational cost while optimizing multiple parameters simultaneously. This makes IOA an ideal choice for fine-tuning DL models in real-time assistive systems for VIPs.

The IOA is inspired by the coordinated, orderly growth and the diffusion-like spreading of ivy plants. Its adaptive adjustment mechanism and strong global search ability enable the IOA to perform well on complex optimization problems. The IOA simulates the propagation and coordinated growth of an ivy population: each individual ivy represents one candidate solution in the search space. Every individual determines its growth direction based on information from adjacent ivy plants and improves it by choosing the closest and most important neighbours, thereby imitating the behaviour of ivy. During the iterations of the model, every individual dynamically modifies its location and growth direction depending on the current best solution and neighbourhood cooperation data. The growth and position vectors of every individual are updated utilizing Eqs. (8) and (9). This mechanism enables the model to explore the solution space effectively and gradually approach the global optimum solution.

Here, \({x}_{i}\) signifies the current location of the \(i\)th individual, \({x}_{i}^{\prime}\) depicts the updated location of the \(i\)th individual, \({g}_{i}\) indicates the growth vector of the \(i\)th individual, \({x}_{best}\) specifies the current global optimum solution, \({r}_{3}\) represents a random number drawn from a uniform distribution, \({r}_{1}\), \({r}_{2}\), and \({r}_{4}\) refer to random numbers drawn from the standard normal distribution, \(\beta\) specifies the control parameter, and \(f\left(x\right)\) depicts the fitness value of the \(i\)th individual.

Here, \({g}_{i}\) indicates the current growth vector of the \(i\)th individual, \({g}_{i}^{\prime}\) signifies the updated growth vector of the \(i\)th individual, and \({r}_{5}\) and \({r}_{6}\) represent random numbers drawn from a uniform distribution and a standard normal distribution, respectively.
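Because Eqs. (8) and (9) are not reproduced in this text, the following sketch should be read only as an ivy-inspired search loop that follows the verbal description (move toward a better neighbour, or regrow around the best solution, with a per-individual growth vector). The update rules, the default value of `beta`, and all names are assumptions made for the example and are not the authors' equations. The sphere function stands in for a real objective such as one minus validation accuracy, and the fitness is assumed to be non-negative and minimised.

```python
import numpy as np

def ivy_like_search(fitness, dim, bounds, pop_size=20, iters=100, beta=1.5, seed=0):
    """Minimise a non-negative fitness with an assumed ivy-style update."""
    rng = np.random.default_rng(seed)
    low, high = bounds
    pos = rng.uniform(low, high, size=(pop_size, dim))
    growth = rng.uniform(0.0, 1.0, size=(pop_size, dim))  # growth vectors
    fit = np.array([fitness(p) for p in pos])
    best_idx = int(np.argmin(fit))
    best, best_fit = pos[best_idx].copy(), float(fit[best_idx])
    history = [best_fit]
    for _ in range(iters):
        order = np.argsort(fit)                # best individual first
        rank = np.empty(pop_size, dtype=int)
        rank[order] = np.arange(pop_size)
        for i in range(pop_size):
            # Neighbour: the next-better individual in the current ranking.
            neighbour = pos[order[max(rank[i] - 1, 0)]]
            if fit[i] < beta * best_fit:
                # Reasonably good individual: climb toward its neighbour.
                cand = (pos[i]
                        + np.abs(rng.standard_normal(dim)) * (neighbour - pos[i])
                        + rng.standard_normal(dim) * growth[i])
            else:
                # Poor individual: regrow in the vicinity of the best solution.
                cand = best * (rng.uniform(size=dim)
                               + rng.standard_normal(dim) * growth[i])
            cand = np.clip(cand, low, high)
            # Growth vectors shrink stochastically, narrowing the search.
            growth[i] = growth[i] * rng.uniform(size=dim) ** 2 * rng.standard_normal(dim)
            cand_fit = float(fitness(cand))
            if cand_fit < fit[i]:              # greedy replacement
                pos[i], fit[i] = cand, cand_fit
            if cand_fit < best_fit:            # keep the best-so-far solution
                best, best_fit = cand.copy(), cand_fit
        history.append(best_fit)
    return best, best_fit, history

def sphere(x):
    """Toy objective standing in for a validation error."""
    return float(np.sum(x ** 2))

best, best_fit, history = ivy_like_search(sphere, dim=3, bounds=(-5.0, 5.0))
print(best_fit <= history[0])  # the best-so-far value never gets worse
```

For hyperparameter tuning, each coordinate of a position vector would encode one hyperparameter (for example a learning rate or a hidden size), and evaluating `fitness` would mean training and validating the classifier with those settings.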

The choice of fitness function is a significant factor influencing the performance of the IOA. The hyperparameter selection method incorporates a solution encoding technique to evaluate the effectiveness of the candidate solutions. The IOA treats accuracy as the primary criterion for designing the fitness function, which is defined as follows.

Here, \(TP\) and \(FP\) denote the true positive and false positive rates, respectively.

Experimental analysis

In this section, the experimental validation of the IODAS-IOA model is carried out on the indoor OD dataset33. The dataset contains 6642 instances across 10 object classes: Door (562), Cabinet Door (3890), Refrigerator Door (879), Window (482), Chair (223), Table (248), Cabinet (208), Couch (24), Opened Door (90), and Pole (36).

Table 1 presents the average outcomes achieved by the IODAS-IOA approach on the 80:20 split of the dataset. On the 80% training phase (TRPHE), the IODAS-IOA method achieves an average \(acc{u}_{y}\) of 99.65%, \(pre{c}_{n}\) of 95.56%, \(rec{a}_{l}\) of 88.64%, \(F{1}_{score}\) of 91.42%, and \({G}_{Measure}\) of 91.75%. Moreover, on the 20% testing phase (TSPHE), the IODAS-IOA technique achieves an average \(acc{u}_{y}\) of 99.74%, \(pre{c}_{n}\) of 98.75%, \(rec{a}_{l}\) of 94.33%, \(F{1}_{score}\) of 96.32%, and \({G}_{Measure}\) of 96.43%.
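For readers who want to reproduce this kind of table, the sketch below shows one common way such class-averaged metrics are derived from a multi-class confusion matrix. It assumes one-vs-rest counts per class, macro averaging, and the G-measure defined as the geometric mean of precision and recall; the manuscript does not state its exact definitions, and the small confusion matrix is made up purely for illustration.

```python
import numpy as np

def macro_metrics(confusion) -> dict:
    """Macro-averaged metrics; rows are true classes, columns are predictions."""
    conf = np.asarray(confusion, dtype=np.float64)
    total = conf.sum()
    tp = np.diag(conf)
    fp = conf.sum(axis=0) - tp      # predicted as the class, but wrong
    fn = conf.sum(axis=1) - tp      # belongs to the class, but missed
    tn = total - tp - fp - fn
    accuracy = (tp + tn) / total    # one-vs-rest accuracy per class
    precision = tp / (tp + fp)      # assumes every class is predicted at least once
    recall = tp / (tp + fn)
    f1 = 2.0 * precision * recall / (precision + recall)
    g_measure = np.sqrt(precision * recall)
    return {
        "accuracy": float(accuracy.mean()),
        "precision": float(precision.mean()),
        "recall": float(recall.mean()),
        "f1": float(f1.mean()),
        "g_measure": float(g_measure.mean()),
    }

# Illustrative three-class confusion matrix (not data from the paper).
example = [[50, 2, 0],
           [3, 40, 1],
           [0, 0, 10]]
metrics = macro_metrics(example)
print(metrics)
```

Two properties of these definitions are visible in the reported numbers: one-vs-rest accuracy counts the many true negatives of each class and so tends to be higher than recall on imbalanced data, and the F1 score (a harmonic mean) can never exceed the G-measure (a geometric mean) for the same precision and recall.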

Figure 3 shows the classifier outcomes of the IODAS-IOA technique on the 80:20 split. Figure 3a presents the accuracy analysis of the IODAS-IOA approach. The figure indicates that the IODAS-IOA approach achieves progressively higher values as the number of epochs increases. Moreover, the consistent improvement in validation accuracy relative to training accuracy suggests that the IODAS-IOA model learns effectively and generalizes to data not used for fitting. Lastly, Fig. 3b presents the loss analysis of the IODAS-IOA method. The IODAS-IOA model achieves closely aligned training and validation loss values, which again suggests effective learning without marked overfitting.

Figure 4 depicts the classifier outcomes of the IODAS-IOA approach on the 80:20 split. Figure 4a illustrates the precision-recall (PR) analysis of the IODAS-IOA technique, which reaches high PR values on all class labels. Figure 4b shows the ROC analysis of the IODAS-IOA technique, which likewise achieves high ROC values for all class labels.

Table 2 presents the average outcomes obtained using the IODAS-IOA technique on the 70:30 split. On the 70% training phase (TRPHE), the IODAS-IOA model presents an average \(acc{u}_{y}\) of 99.69%, \(pre{c}_{n}\) of 96.56%, \(rec{a}_{l}\) of 90.69%, \(F{1}_{score}\) of 93.13%, and \({G}_{Measure}\) of 93.37%. Moreover, on the 30% testing phase (TSPHE), the IODAS-IOA method achieves an average \(acc{u}_{y}\) of 99.73%, \(pre{c}_{n}\) of 97.76%, \(rec{a}_{l}\) of 93.15%, \(F{1}_{score}\) of 94.99%, and \({G}_{Measure}\) of 95.22%.

Figure 5 shows the classifier outcomes of the IODAS-IOA approach on the 70:30 split. Figure 5a displays the accuracy analysis of the IODAS-IOA technique. The figure reports that the IODAS-IOA technique achieves progressively higher values with increasing epochs. Moreover, the persistent improvement in validation accuracy relative to training accuracy suggests that the IODAS-IOA technique learns effectively and generalizes to data not used for fitting. Finally, Fig. 5b presents the loss evaluation of the IODAS-IOA technique. The IODAS-IOA technique achieves closely aligned training and validation loss values, which again suggests effective learning without marked overfitting.

Figure 6 presents the classifier outcomes of the IODAS-IOA method on the 70:30 split. Figure 6a portrays the PR analysis of the IODAS-IOA model, which achieves high PR values for each class. Figure 6b shows the ROC evaluation of the IODAS-IOA model, which likewise reaches high ROC values for each class.

Table 3 and Fig. 7 show the comparison results of the IODAS-IOA model with existing methods19,20,34,35,36. The results highlight that the SLAM2, YOLOv11, RT-DETRv2, PointNet++, RSNet, Faster-RCNN, YOLOv3, Sparse RCNN, YOLOXs, and YOLOv8s methodologies show lower performance, whereas the IODAS-IOA technique provides the best performance, with the greatest \(acc{u}_{y}\), \(pre{c}_{n}\), \(rec{a}_{l}\), and \({F1}_{score}\) of 99.74%, 98.75%, 94.33%, and 96.32%, respectively.

Table 4 and Fig. 8 illustrate the computational time (CT) of the IODAS-IOA methodology compared to existing techniques. The IODAS-IOA model attains the lowest CT of 5.34 s, whereas the SLAM2, YOLOv11, RT-DETRv2, PointNet++, RSNet, Faster-RCNN, YOLOv3, Sparse RCNN, YOLOXs, and YOLOv8s techniques attain greater CT of 16.45 s, 10.98 s, 14.08 s, 20.00 s, 10.24 s, 17.18 s, 8.07 s, 18.69 s, 9.29 s, and 13.63 s, respectively.

Table 5 and Fig. 9 depict the error analysis of the IODAS-IOA methodology against existing models. The reported values are error rates, that is, the complement of each metric, so lower values indicate better performance. The SLAM2, PointNet++, and RSNet techniques exhibit relatively higher error in \(acc{u}_{y}\) and \(pre{c}_{n}\) but lower error in \({F1}_{score}\), highlighting unbalanced detection capabilities. The YOLOv3 and YOLOv8s techniques show comparatively better \(rec{a}_{l}\) and \({F1}_{score}\) errors. Sparse RCNN shows the highest \(rec{a}_{l}\) error at 10.56%, with moderate \(pre{c}_{n}\) and \({F1}_{score}\) errors, suggesting that it misses more objects than the other models. In contrast, the IODAS-IOA model exhibits the lowest errors, 0.26% in \(acc{u}_{y}\) and 1.25% in \(pre{c}_{n}\), which are consistent with its \(acc{u}_{y}\) of 99.74% and \(pre{c}_{n}\) of 98.75% and indicate the most reliable detection among the compared models. Overall, these low error rates support the suitability of the IODAS-IOA model for real-time assistive systems for visually impaired individuals.
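The relationship between the error values and the performance metrics can be checked with simple arithmetic. The sketch below assumes that each error is 100% minus the corresponding metric, which the 0.26% and 1.25% figures suggest; the recall and F1 errors it prints are derived under that assumption and are not quoted from the paper's table.

```python
# Test-phase metrics reported for the IODAS-IOA model (80:20 split), in percent.
reported = {"accuracy": 99.74, "precision": 98.75, "recall": 94.33, "f1_score": 96.32}

# Assumed definition: error rate = 100 - metric.
errors = {name: round(100.0 - value, 2) for name, value in reported.items()}
print(errors)
```

Under this reading, a lower error value always corresponds to a higher metric, so the model with the smallest entries in the error table is the best-performing one.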

Table 6 depicts the ablation study results of the IODAS-IOA approach, demonstrating the impact of each component on system performance for indoor OD and assistance. With only YOLOv12, the model attained robust baseline results with an \(\:acc{u}_{y}\) of 96.53% and \(\:{F1}_{score}\) of 92.82%. Incorporating BiGRU without AM improved \(\:acc{u}_{y}\) to 97.19%, showing the benefit of temporal modeling. Adding the AM in BiGRU-AM further improved \(\:acc{u}_{y}\) and \(\:{F1}_{score}\) to 97.84% and 94.13%, respectively. Adding the DenseNet161 method for feature extraction enhanced the results again, raising the \(\:{F1}_{score}\) to 94.84%. Optimizing hyperparameters with IOA while excluding feature extraction resulted in even better performance, with an \(\:{F1}_{score}\) of 95.63%. Finally, the IODAS-IOA model, combining BiGRU-AM, feature extraction, and tuning, attained the highest performance across all metrics, with an \(\:acc{u}_{y}\) of 99.74% and an \(\:{F1}_{score}\) of 96.32%, confirming the efficiency of the fully integrated framework.
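The step-by-step F1 gains implied by these ablation figures can be tallied as in the sketch below. Only the configurations whose F1 score is quoted above are included (no F1 is given here for BiGRU without AM), and the configuration labels are paraphrases rather than the table's own row names.

```python
# (configuration label, F1 score in percent) in the order discussed above.
ablation_f1 = [
    ("YOLOv12 only", 92.82),
    ("+ BiGRU-AM", 94.13),
    ("+ DenseNet161 features", 94.84),
    ("+ IOA tuning (no feature extraction)", 95.63),
    ("Full IODAS-IOA", 96.32),
]

# Gain of each configuration over the one listed before it.
gains = [
    (later[0], round(later[1] - earlier[1], 2))
    for earlier, later in zip(ablation_f1, ablation_f1[1:])
]
total_gain = round(ablation_f1[-1][1] - ablation_f1[0][1], 2)

for label, gain in gains:
    print(f"{label:40s} +{gain:.2f} points")
print(f"Total gain over the YOLOv12 baseline: +{total_gain:.2f} points")
```

On these numbers the largest single step is the move from YOLOv12 alone to BiGRU-AM, with the remaining components each contributing under one point of F1.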

Table 7 indicates the performance comparison based on computational efficiency to highlight the advantages of the IODAS-IOA model37. With the lowest floating point operations (FLOPs) at 2.91 gigaflops and minimal graphics processing unit (GPU) memory usage of 1123 megabytes, the IODAS-IOA method shows high computational efficiency. It also attains the fastest inference time of 8.32 s, outperforming all other models. In contrast, models such as RT-DETR-L and CenterNet require significantly higher FLOPs of 108.00 and 70.20 gigaflops, respectively, resulting in longer inference times and greater GPU demand. Even YOLOv5sp6, with moderate FLOPs of 16.30 gigaflops, shows a much longer inference time of 35.43 s. These results indicate that the IODAS-IOA model offers a well-optimized balance between speed and resource usage, making it well suited for real-time applications in resource-constrained environments.
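As background on what a FLOPs figure measures, the following sketch counts the operations of a single convolutional layer. The layer dimensions are hypothetical, bias terms are ignored, and counting one multiply-accumulate as two FLOPs is a common convention but an assumption here, since the counting rule behind the reported values is not stated.

```python
def conv2d_flops(c_in, c_out, kernel, h_out, w_out, macs_as_two_flops=True):
    """Approximate FLOPs of one 2D convolution (bias ignored).

    Each output element needs c_in * kernel * kernel multiply-accumulates,
    and there are c_out * h_out * w_out output elements.
    """
    macs = c_in * kernel * kernel * c_out * h_out * w_out
    return 2 * macs if macs_as_two_flops else macs


# Hypothetical first layer: 3 input channels, 64 filters of size 3x3,
# producing a 320x320 feature map.
flops = conv2d_flops(c_in=3, c_out=64, kernel=3, h_out=320, w_out=320)
gflops = flops / 1e9
print(f"{gflops:.3f} GFLOPs for this layer")
```

Summing such per-layer counts over a whole network gives the model-level figure, so the totals depend on both the architecture and the input resolution used for the measurement.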

Conclusion

In this manuscript, the IODAS-IOA model is proposed for VIPs. The image pre-processing stage initially employed the GF technique to eliminate noise. Moreover, the YOLOv12 method was used for OD. Following this, the IODAS-IOA approach utilized DenseNet161 for feature extraction. Furthermore, the BiGRU-AM method was implemented for classification. Finally, the parameter tuning process was performed through the IOA to improve the classification performance. Extensive experiments on the indoor OD dataset demonstrated that the IODAS-IOA approach achieves a superior accuracy of 99.74%, outperforming recent methods. One limitation is that controlled indoor datasets may not fully capture the variability and complexity of real-world environments. The performance may also be affected by dynamic lighting conditions, occlusions, and diverse object appearances encountered outside the experimental setup. Furthermore, deployment on low-power or portable devices may be limited by the computational requirements. Future work should focus on improving adaptability to varying real-world scenarios, including outdoor and multi-room settings, and on enhancing efficiency for edge-device implementation. Integrating multimodal sensors and user feedback can also improve robustness and usability in practical applications.

Data availability

The data that support the findings of this study are openly available in the Kaggle repository at [https://www.kaggle.com/datasets/thepbordin/indoor-object-detection](https://www.kaggle.com/datasets/thepbordin/indoor-object-detection).

References

Pareek, P. K. Pixel level image fusion in moving objection detection and tracking with machine learning. J. Fusion: Pract. Appl. 2 (1), 42–60 (2020).

Arya, C. et al. Object detection using deep learning: a review. In Journal of Physics: Conference Series. 1854 (1), 012012 IOP Publishing (2021).

Wang, J., Zhang, T. & Cheng, Y. Deep learning for object detection: A survey. Computer Syst. Sci. & Engineering, 38(2), (2021).

Hoeser, T. & Kuenzer, C. Object detection and image segmentation with deep learning on earth observation data: A review-part i: Evolution and recent trends. Remote Sensing, 12 (10), 1667 (2020).

Cynthia, E. P. et al. Convolutional Neural Network and Deep Learning Approach for Image Detection and Identification. In Journal of Physics: Conference Series. 2394 (1), 012019 IOP Publishing (2022).

Jiao, L. et al. A survey of deep learning-based object detection. IEEE access. 7, 128837–128868 (2019).

Wang, W. et al. Salient object detection in the deep learning era: an in-depth survey. IEEE Trans. Pattern Anal. Mach. Intell. 44 (6), 3239–3259 (2021).

Zhang, Y., Song, C. & Zhang, D. Deep learning-based object detection improvement for tomato disease. IEEE access. 8, 56607–56614 (2020).

Nguyen, N. D., Do, T., Ngo, T. D. & Le, D. D. An evaluation of deep learning methods for small object detection. Journal of Electrical and Computer Engineering, 2020 (1), 3189691 (2020).

Gogineni, H. B., Bhuyan, H. K. & Lydia, L. Leveraging Marine Predators Algorithm with Deep Learning Object Detection for Accurate and Efficient Detection of Pedestrians. (2023).

Yu, X. & Saniie, J. Visual impairment Spatial awareness system for indoor navigation and daily activities. J. Imaging. 11 (1), 9 (2025).

Kan, M. et al. eLabrador: A Wearable Navigation System for Visually Impaired Individuals (IEEE Transactions on Automation Science and Engineering, 2025).

Joshi, R. C., Singh, N., Sharma, A. K., Burget, R. & Dutta, M. K. AI-SenseVision: a low-cost artificial-intelligence-based Robust and real-time Assistance for Visually Impaired People (IEEE Transactions on Human-Machine Systems, 2024).

Gladis, K. A., Madavarapu, J. B., Kumar, R. R. & Sugashini, T. In-out YOLO glass: Indoor-outdoor object detection using adaptive spatial pooling squeeze and attention YOLO network. Biomedical Signal Processing and Control, 91, 105925 (2024).

Kuriakose, B., Shrestha, R. & Sandnes, F. E. DeepNAVI: A deep learning based smartphone navigation assistant for people with visual impairments. Expert Systems with Applications 212, 118720 (2023).

Sun, C. et al. Saliency-induced moving object detection for robust RGB-D vision navigation under complex dynamic environments. IEEE Trans. Intell. Transp. Syst. 24 (10), 10716–10734 (2023).

Nagarajan, A. & Gopinath, M. P. Hybrid optimization-enabled deep learning for indoor object detection and distance estimation to assist visually impaired persons. Advances in Engineering Software, 176, 103362 (2023).

Htet, Y. et al. Smarter aging: developing a foundational elderly activity monitoring system with AI and GUI interface. IEEE Access. 12, 74499–74523 (2024).

Lin, Z. et al. Slam2: Simultaneous localization and multimode mapping for indoor dynamic environments. Pattern Recognition, 158, 111054 (2025).

Jung, E. & Nam, D. Lightweight YOLO-Based Real-Time Fall Detection Using Feature Map-Level Knowledge Distillation (ICT Ex, 2025).

Zhang, K. et al. Improved yolov5 algorithm combined with depth camera and embedded system for blind indoor visual assistance. Scientific Reports, 14(1), 23000 (2024).

Pratap, A., Kumar, S. & Chakravarty, S. Adaptive Object Detection for Indoor Navigation Assistance: A Performance Evaluation of Real-Time Algorithms. arXiv preprint arXiv:2501.18444 (2025).

Afif, M., Said, Y., Ayachi, R. & Hleili, M. An End-to-End Object Detection System in Indoor Environments Using Lightweight Neural Network. Traitement du Signal. 41 (5), 2711 (2024).

Sachin, A. et al. NAVISIGHT: A Deep Learning and Voice-Assisted System for Intelligent Indoor Navigation of the Visually Impaired. In 2025 3rd International Conference on Inventive Computing and Informatics (ICICI). 848–854. IEEE (2025).

Saida, S. K. & Parthipan, V. Detecting the accurate moving objects in indoor stadium using you only look once algorithm compared with ResNet50. In Applications of Mathematics in Science and Technology. 502–507 CRC (2025).

Han, H., Li, W., Han, X., Kuang, L. & Yang, X. Indoor object detection algorithm based on SBP-YOLOv7. Cluster Computing, 28(9), 558 (2025).

Zhao, Y. et al. Object detection in smart indoor shopping using an enhanced YOLOv8n algorithm. IET Image Proc. 18 (14), 4745–4759 (2024).

Obayya, M., Al-Wesabi, F. N., Alshammeri, M. & Iskandar, H. G. An intelligent optimized object detection system for disabled people using advanced deep learning models with optimization algorithm. Sci. Rep. 15 (1), 1–19 (2025).

Quevit, V. et al. CFUs detection in Petri Dish images using YOLOv12. In 7th International Conference on Advances in Signal Processing and Artificial Intelligence (ASPAI’2025) (2025).

Aksoy, S. Multi-Input Melanoma Classification Using MobileNet-V3-Large Architecture. Journal of Automation, Mobile Robotics and Intelligent Systems. 73–84 (2025).

Azman, S., Pathmanathan, D. & Balakrishnan, V. A two-stage forecasting model using random forest subset-based feature selection and BiGRU with attention mechanism: application to stock indices. PloS One. 20 (5), e0323015 (2025).

Zhao, X. et al. Optimization Design of Lazy-Wave Dynamic Cable Configuration Based on Machine Learning. Journal of Marine Science and Engineering. 13 (5), 873 (2025).

https://www.kaggle.com/datasets/thepbordin/indoor-object-detection

Luo, N., Wang, Q., Wei, Q. & Jing, C. Object-level segmentation of indoor point clouds by the convexity of adjacent object regions. IEEE Access. 7, 171934–171949 (2019).

Qin, P., Shen, W. & Zeng, J. DSCA-Net: indoor head detection network using Dual‐Stream information and channel attention. Chin. J. Electron. 29 (6), 1102–1109 (2020).

Fan, Z., Mei, W., Liu, W., Chen, M. & Qiu, Z. I-DINO: High-Quality Object Detection for Indoor Scenes. Electronics. 13 (22), 4419 (2024).

Xu, Y. & Fu, Y. Complex Indoor Human Detection with You Only Look Once: An Improved Network Designed for Human Detection in Complex Indoor Scenes. Applied Sciences. 14 (22), 10713 (2024).

Acknowledgements

The authors extend their appreciation to the King Salman Center for Disability Research for funding this work through Research Group no. KSRG-2024-396.

Funding

The authors extend their appreciation to the King Salman Center for Disability Research for funding this work through Research Group no. KSRG-2024-396.

Author information

Contributions

Marwa Obayya: conceptualisation, methodology, validation, investigation, writing (original draft preparation). Fahd N. Al-Wesabi: project administration, conceptualisation, methodology, writing (original draft preparation), writing (review and editing). Wafi Bedewi: methodology, validation, writing (original draft preparation). Menwa Alshammeri: software, visualization, validation, data curation, writing (review and editing).

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Obayya, M., Al-Wesabi, F.N., Bedewi, W. et al. An intelligent framework for visually impaired people through indoor object Detection-Based assistive system using YOLO with recurrent neural networks. Sci Rep 15, 43720 (2025). https://doi.org/10.1038/s41598-025-27603-8

DOI: https://doi.org/10.1038/s41598-025-27603-8