Abstract

The proliferation of IoT devices has exerted significant demand on computing systems to process data rapidly, efficiently, and in proximity to its source. Conventional cloud-based methods frequently fail because of elevated latency and centralized constraints. Fog computing has emerged as a viable option by decentralizing computation to the edge; yet, successfully scheduling work in these dynamic and heterogeneous contexts continues to pose a significant difficulty. This research presents A Neuro-Fuzzy Multi-Objective Reinforcement Learning (NF-MORL), an innovative framework that integrates neuro-fuzzy systems with multi-objective reinforcement learning to tackle task scheduling in fog networks. The concept is straightforward yet impactful: a Takagi–Sugeno fuzzy layer addresses uncertainty and offers interpretable priorities, while a multi-objective actor–critic agent acquires the capacity to reconcile conflicting objectives makespan, energy consumption, cost, and reliability through practical experience. We assessed NF-MORL using empirical data from Google Cluster and EdgeBench. The findings were promising: relative to cutting-edge techniques, our methodology decreased makespan by up to 35%, enhanced energy efficiency by about 30%, reduced operational expenses by up to 40%, and augmented fault tolerance by as much as 37%. These enhancements persisted across various workload sizes, demonstrating that NF-MORL can effectively adjust to fluctuating situations. Our research indicates that integrating human-like reasoning through fuzzy logic with autonomous learning via reinforcement learning can yield more effective and resilient schedulers for actual fog deployments.

Similar content being viewed by others

Introduction

The proliferation of billions of IoT devices has resulted in data generation surpassing the capacity of conventional cloud systems, particularly for applications requiring immediate responses [1,2,3], such as autonomous vehicles and remote healthcare [4,5]. Fog computing was developed to address this issue by relocating computation nearer to the devices [6]; however, it has its own challenges: nodes possess varying capabilities, workloads exhibit significant fluctuations, and failures are prevalent [7,8,9].

We promptly recognized that current scheduling algorithms be they heuristic [10], single-objective reinforcement learning [11,12], or some hybrid methodologies encounter difficulties under these circumstances. Most reinforcement learning-based methods [13], for instance, presume pristine and comprehensive state information, which is never the reality in actual fog networks [14]. Conversely, fuzzy logic adeptly manages ambiguity but does not possess the capacity for enhancement over time [15,16].

This finding prompted us to inquire: what if we could amalgamate the advantages of both? Thus, NF-MORL was established a system wherein a neuro-fuzzy module delivers interpretable, real-time priority amidst uncertainty, and a multi-objective reinforcement learning agent perpetually enhances both the policy and the fuzzy rules based on empirical performance feedback [17,18]. The proliferation of billions of IoT devices has resulted in data generation surpassing the capacity of conventional cloud systems, particularly for applications requiring immediate responses, such as autonomous vehicles and remote healthcare. Fog computing was developed to address this issue by relocating computation nearer to the devices; however, it has its own challenges: nodes possess varying capabilities, workloads exhibit significant fluctuations, and failures are prevalent.

We promptly recognized that current scheduling algorithms be they heuristic, single-objective reinforcement learning, or some hybrid methodologies encounter difficulties under these circumstances. Most reinforcement learning-based methods, for instance, presume pristine and comprehensive state information, which is never the reality in actual fog networks. Conversely, fuzzy logic adeptly manages ambiguity but does not possess the capacity for enhancement over time.

This finding prompted us to inquire: what if we could amalgamate the advantages of both? Thus, NF-MORL was established a system wherein a neuro-fuzzy module delivers interpretable, real-time priority amidst uncertainty, and a multi-objective reinforcement learning agent perpetually enhances both the policy and the fuzzy rules based on empirical performance feedback.

In contrast to numerous current methods that optimize only one or two objectives, NF-MORL concurrently addresses four essential goals: minimizing completion time (makespan), reducing energy consumption, decreasing costs, and enhancing reliability. Initial trials demonstrated that this collaborative optimization was essential for attaining balanced and practical performance.

The primary contributions of this study are:

-

A novel hybrid framework NF-MORL that seamlessly combines an adaptive Takagi–Sugeno neuro-fuzzy system with multi-objective actor–critic reinforcement learning for fog task scheduling.

-

A bidirectional learning mechanism: the reinforcement learning agent enhances the scheduling strategy, while performance feedback perpetually adjusts the fuzzy membership functions and rule outcomes capabilities that static fuzzy systems lack.

-

A pragmatic three-tier architecture (edge–fog–cloud) that facilitates distributed, low-latency decision-making and scalable training.

-

Comprehensive assessment of actual Google Cluster and EdgeBench traces, demonstrating consistent and substantial enhancements above contemporary DRL and hybrid benchmarks across all four objectives.

-

This amalgamation of interpretability, adaptability, and multi-objective cognizance renders NF-MORL exceptionally appropriate for practical fog implementations.

This study aims to develop a scheduling system capable of managing the complexities of real fog environments, including uncertain inputs, conflicting objectives, and dynamic conditions. We developed NF-MORL, a framework that integrates neuro-fuzzy reasoning with multi-objective reinforcement learning, which surpasses existing methodologies in performance and provides a level of transparency absent in conventional deep reinforcement learning techniques.

The experimental findings are self-evident: Reductions of up to 35% in makespan, 30% in energy consumption, 40% in costs, and a 37% enhancement in fault tolerance are significant; they denote substantial advancements for real systems. Anticipating future developments, we identify several promising avenues: implementing NF-MORL on actual hardware, augmenting it to accommodate security constraints, and investigating lifelong learning to ensure continuous improvement post-deployment.

We anticipate that this work will inspire additional researchers to investigate hybrid intelligence methodologies integrating the commonsense reasoning in which humans excel with the scalability offered by machines as a means to achieve genuinely autonomous and reliable edge systems.

2. Related Works

Task scheduling in fog computing has been extensively explored to improve performance, scalability, and energy efficiency in distributed Internet of Things environments. Early studies emphasized heuristic and static optimization strategies, yet they struggled to handle the heterogeneity and stochastic nature of fog infrastructures. Recent research trends have shifted toward machine learning and reinforcement learning approaches to enable dynamic and data-driven decision-making. The following review examines key contributions in task scheduling, energy management, and hybrid intelligent optimization within fog computing ecosystems. In1, an extensive analysis of scheduling strategies in fog computing is conducted, categorizing them into heuristic, meta-heuristic, and learning-based methodologies. Shortcomings of traditional methods are highlighted, particularly their inability to adapt to real-time workload variations and unpredictable network conditions. The review indicates that hybrid models integrating reinforcement learning and fuzzy logic remain underexplored. In2, a deep reinforcement learning scheduling strategy utilizing a proximal policy optimization (PPO) agent is developed to reduce system load and reaction time in fog-edge-cloud infrastructures through dynamic learning.

A distributed deep reinforcement learning system is introduced in3 that concurrently improves energy consumption and reliability for job scheduling in fog computing. Despite realizing considerable energy savings, it employs single-objective scaling and does not include interpretable uncertainty modeling using neuro-fuzzy systems, leading to suboptimal trade-offs among time, cost, and fault tolerance when compared to the Pareto-efficient NF-MORL technique.

A evolutionary approach including selective repair is presented for scheduling IoT operations with time limitations in fog-cloud situations in4. While successful for static processes, its meta-heuristic characteristics restrict online adaptation to dynamic workload fluctuations and do not use reinforcement learning or fuzzy reasoning for managing uncertainty. Researchers in5 have examined live migration algorithms for associated virtual machines in cloud data centers to enhance resource utilization and fault tolerance. This study is confined to centralized cloud infrastructures and does not include distributed fog layer scheduling or real-time multi-objective optimization. The research in6 introduced a dynamic network performance provisioning technique to facilitate “network in a box” for industrial applications.

RT-SEAT, a real-time hybrid scheduler designed to concurrently reduce energy consumption and peak temperature on heterogeneous multi-core systems, was created in7. Although it demonstrates superior thermal-aware performance, it fails to account for multi-objective trade-offs such as cost and fault tolerance, and it does not include learning-based adaptation. A fault-tolerant real-time scheduler for heterogeneous multiprocessor systems using redundancy and migration approaches is introduced in8. Notwithstanding robust reliability assurances, this technique remains static, lacking online learning and neuro-fuzzy uncertainty management capabilities.

A separate research9 presented a secure multi-reference attribute-based access control mechanism for fog-enabled e-health systems, termed SMAC, which only focuses on data privacy and access security, excluding job scheduling, resource allocation, and performance optimization. A hybrid knowledge- and data-driven methodology was developed for mass flow scheduling in hybrid workshops with dynamic order input10. This technique, although successful in industrial settings, is unsuitable for fog computing environments and lacks components of distributed reinforcement learning and fuzzy inference.

A dynamic prescriptive performance controller using event-driven reinforcement learning was presented for nonlinear systems with input delays in11. This work emphasizes continuous-time control inside the RL basis, excluding discrete-time job scheduling, fuzzy logic, and multi-objective Pareto optimization.

In12, a self-stimulated reinforcement learning system using particle swarm optimization was devised for fault-tolerant optimum control in zero-sum games with saturated inputs. It excels in competitive environments but is inapplicable to fog task scheduling and lacks neuro-fuzzy interpretation and multi-objective management capabilities. A distributed adaptive sliding mode formation controller with specified time restrictions for heterogeneous nonlinear multi-agent systems is suggested in13. This study focuses on physical formation tracking, rather than on computational job scheduling and resource management in dispersed and heterogeneous fog networks.

In14, researchers introduced a multi-objective scheduling and offloading framework inside Fog-Cloud settings, where system uncertainties are represented using fuzzy logic. The objective is to concurrently minimize workflow execution duration and energy use, with findings indicating that a fuzzy approach achieves a superior equilibrium between efficiency and processing expenses compared to deterministic alternatives. This study emphasizes the optimization of trade-offs and the modeling of uncertainty.

Another research15 examined work management in fog computing via the lens of federated reinforcement learning. This concept creates a common policy for distributed decision-making without requiring raw data interchange between nodes, hence improving data security and system scalability. This research’s primary novelty is in the integration of Federated Learning with Reinforcement Learning to optimize job management and mitigate processing congestion inside the fog network.

16 also examines offloading techniques based on Reinforcement Learning and Deep Learning, and assesses several automated decision-making algorithms for task location identification. This study demonstrates that using deep networks to learn offloading strategies yields superior performance compared to traditional approaches under dynamic settings. This study primarily focuses on deep learning and enhancing the adaptive decision rate.

Table 1 presents a systematic comparison of the examined methodologies, emphasizing their technical frameworks, fundamental techniques, operational contexts, targeted aims, and principal constraints. This overview delineates a distinct evolution from heuristic and static approaches to intelligent, learning-based solutions, while highlighting enduring deficiencies in multi-objective optimization, uncertainty management, and adaptive rule learning that are essential for next-generation fog scheduling systems. The table functions as a succinct reference for comprehending the progression and existing issues in the domain.

System model and problem formulation

The suggested NF-MORL system model features a three-tier hierarchical architecture, consisting of edge, fog, and cloud layers, aimed at facilitating resilient, multi-objective, and adaptive task scheduling in heterogeneous IoT contexts. The comprehensive architecture of the fog computing environment utilized in this study is depicted in Fig. 1, illustrating the interaction among IoT devices, fog nodes, and the cloud layer. This paradigm utilizes the advantages of each layer to deliver low-latency, energy-efficient, and context-aware services, while guaranteeing fault tolerance and scalability.

The proposed NF-MORL framework models a heterogeneous fog environment as a set of physical machines (PMs) that host various virtual machines (VMs). Each task \(\:{T}_{k}\) is defined by a vector of computational parameters, comprising CPU usage (\(\:CP{U}_{k}\)), memory requirement (\(\:ME{M}_{k}\)), bandwidth demand (\(\:B{W}_{k}\)), and task length (\(\:T{L}_{k}\)).

A hierarchical fuzzy topological framework for high-dimensional regression shows how structured fuzzy inference may improve scalability and uncertainty modeling in large-scale decision-making systems17. The introduction of a dynamic event-driven adaptive fuzzy sliding-state controller for unknown nonlinear systems shows how hierarchical fuzzy mechanisms may stabilize complicated settings with minimum processing18.

Table 2 summarizes the primary parameters, mathematical symbols, and variables utilized in the NF-MORL framework. The NF-MORL framework utilizes a Takagi–Sugeno neuro-fuzzy inference system to dynamically assess task significance based on CPU use, memory consumption, bandwidth availability, and job duration. The relevant fuzzy rule basis for task prioritizing is presented in Table 3. The system’s workload and overall processing capabilities are quantitatively represented by Eq. (1) through 43:

In Eq. 1, the instantaneous workload of virtual machine \(\:v\), represented as \(\:{wl}_{VM.v}\) is determined as the cumulative burden of all tasks \(\:k\:\in\:\:A\left(v\right)\) currently allocated to \(\:v\), where \(\:A\left(v\right)\) is the collection of tasks assigned to virtual machine \(\:v\) within fog node \(\:M\).

Equation (2) calculates the total workload exerted on physical fog node p, represented as \(\:{wl}_{PM.p}\), by aggregating the workloads of all virtual machines \(\:v\:\in\:\:V\left(p\right)\) residing on p, with \(\:V\left(p\right)\) being the collection of virtual machines hosted on physical machine \(\:p\).

Equation (3) delineates the effective processing capacity of virtual machine v in million instructions per second (MIPS), calculated as the product of the allotted CPU cores \(\:{n}_{core}^{\left(v\right)}\:\) and the per-core processing speed \(\:{MIPS}_{v}\) of the corresponding physical node.

where delineates the cumulative processing capacity of the whole fog layer, \(\:TotPrc\), as the aggregate of the individual processing capacities \(\:{prc}_{VM.v}\) across all virtual machines \(\:v\) within the system.

All input attributes are standardized to the range [0,1] to ensure uniformity across distributed fog layers and to enhance adaptive learning during the fuzzy inference phase.

Fuzzification of task attributes and adaptive rule base

The NF-MORL design comprises three hierarchical layers: Edge, Fog, and Cloud, each fulfilling a distinct function in the scheduling process. Continuous input features are converted into linguistic variables by the use of fuzzy membership functions. This study use triangle membership functions for all language terms. Their piecewise-linear configuration facilitates rapid assessment with minimal computational burden in real-time fog scenarios, while still offering sufficient adaptability to represent nonlinear relationships among CPU, memory, bandwidth, and task duration. In comparison to alternatives like trapezoidal or sigmoid functions, the triangular shape provides an effective equilibrium among interpretability, ease of implementation, and numerical stability in the NF-MORL training process.

Each characteristic of CPU, memory, bandwidth, and job duration is associated with fuzzy sets designated as Low, Medium, and High, facilitating seamless and adaptable transitions between states. In the Edge layer, the adaptive fuzzification and inference modules determine task priorities according to the membership functions illustrated in Figs. 2, 3, 4 and 5. The deterministic priorities derived from the fuzzification method depicted in Fig. 6 are employed to execute dynamic scheduling on heterogeneous fog nodes within the Fog layer.

The Cloud layer functions as a supervisory entity that ensures global synchronization, facilitates policy sharing, and manages offloading for tasks that are either delay-tolerant or computationally heavy15. Algorithm 1 summarizes the process of converting input parameters into adaptive task priorities via the neuro-fuzzy inference model. This algorithm delineates the sequential computation of task priorities via fuzzification, rule assessment, and the modification of adaptive parameters via reinforcement learning input.

For each fuzzy rule \(\:i\), if \(\:{\mu\:}_{ij}\left({x}_{j}\right)\) denotes the membership degree of the input \(\:{x}_{j}\) in its corresponding fuzzy set, the firing strength of the rule is computed as:

Every neuro-fuzzy rule is expressed in Takagi–Sugeno format, whereby the resultant function is linear about the input variables, and its parameters are adaptively adjusted throughout the learning process.

This enables the inference mechanism to adapt according to environmental feedback from the reinforcement learning agent. The adaptive linear consequence of rule III is specified as:

Equation (6) delineates the adaptive linear consequence of the \(\:i-th\) Takagi–Sugeno rule, whereby \(\:{\phi\:}_{i\left(x\right)}\) represents the rule output, x = [MEM, CPU, BW, TL] denotes the input vector, and the coefficients \(\:{a}_{i},\:{b}_{i},\:{c}_{i},\:{d}_{i},\:{e}_{i}\) are acquired online through back-propagated gradients from the reinforcement learning agent.

The aggregated task priority is computed by combining all activated rules using a weighted average method:

The final crisp task priority \(\:P\left({T}_{k}\right)\) is computed as the weighted average of all activated rule outputs \(\:{\varPhi\:}_{i}\left({T}_{k}\right)\), with weights \(\:{{\uplambda\:}}_{i}\) denoting the normalized firing strengths of each rule \(\:\left(\sum\:{\lambda\:}_{i}=\:1\right)\).

The ambiguous output surface delineates three specific operational states: Constrained, Balanced, and Favorable, which correlate to the overall resource status of the fog environment. Figure 11 depicts the centroid-based defuzzification method that transforms the aggregated fuzzy output into a precise numerical task priority, expressed as19:

Equation (8) executes centroid defuzzification to transform the aggregated fuzzy output \(\:{{\upmu\:}}_{agg\:}\left(x\right)\) into a definitive numerical priority \(\:{x}_{c}\), which is subsequently utilized by the scheduling policy in the following actor–critic phase.

This clear priority value establishes the quantitative foundation for scheduling and optimization in the latter phases of the NF-MORL system.

In Algorithm 1 The task length \(\:T{L}_{j}\) is obtained by dividing the computational workload \(\:{L}_{j}\) by the CPU processing speed \(\:{C}_{sp}\), following the standard execution-time formulation. The memory requirement \(\:ME{M}_{j}\) is directly taken from the task metadata provided in the dataset. The bandwidth demand \(\:B{W}_{j}\) is computed based on the input size \(\:{S}_{j}\) and the network transfer time per MB \(\:{T}_{net}\), in accordance with common data transmission models in fog and edge environments.

Quantitative performance formulations

The defuzzified priorities are integrated into four cumulative performance indicators that direct the multi-objective reinforcement learning process: makespan, energy consumption, execution cost, and fault probability. These formulations function as reward components rather than comprehensive physical system models, although they nonetheless align with commonly accepted concepts in fog and cloud scheduling. The makespan denotes the maximum completion time across all tasks, dictated by their initiation times and execution durations3,4:

where \(\:D\:\)is the task set, \(\:Star{t}_{j}\:\)denotes the start time of task \(\:j\), and \(\:ExecTim{e}_{j}\:\)is its execution time based on the workload and processing speed of the assigned fog node. Energy consumption is represented as the total power utilized by all fog nodes throughout task execution20:

where \(\:{P}_{active}\left({n}_{j}\right)\) is the active power of node \(\:{n}_{j}\), \(\:{L}_{j}\) is the computational load, and \(\:Csp\left({n}_{j}\right)\) is the node’s processing speed. The execution cost represents the cumulative processing duration adjusted by the cost rate of each fog node.

Where \(\:{C}_{unit}\left({n}_{j}\right)\) represents the per-unit processing cost of node \(\:{n}_{j}\). Fault probability measures the aggregate likelihood of failure across all nodes participating in task execution19:

where \(\:f\left({n}_{j}\right)\)denotes the failure probability of node \(\:{n}_{j}\).

The updated formulations offer a succinct and accurate assessment of system behavior, allowing the RL agent to understand the trade-offs between latency, energy consumption, cost, and reliability.

Proposed methodology

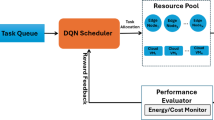

This section presents the NF-MORL framework shown in Fig. 7 for flexible and adaptive task scheduling in diverse fog environments. In contrast to conventional or Round Robin schedulers, which function in a rigid and non-adaptive fashion, NF-MORL integrates real-time feedback via a neuro-fuzzy inference layer and a multi-objective reinforcement learning module. The time-varying DRL method with efficient backtracking accelerates policy convergence, proving reinforcement learning can perform well with little training data21. Additionally, neighborhood-aware multi-agent reinforcement learning for traffic signal coordination indicates that collaborative learning enhances global control only when agent interactions include explicit backtracking22.

NF-MORL integrates the interpretability of fuzzy logic with the autonomous optimization features of actor–critic learning. The neuro-fuzzy layer produces adaptive task and virtual machine priorities, whilst the actor-critic network enhances scheduling judgments based on multi-objective incentives. Agents consistently monitor CPU, memory, bandwidth, and task characteristics, refining both fuzzy parameters and neural rules based on environmental feedback.

The approach utilizes deep actor–critic networks with concurrent agents to expedite learning and improve generalization among fog nodes. NF-MORL achieves scalable, resilient, and completely autonomous scheduling for dynamic fog computing environments by concurrently maximizing many performance targets and responding in real-time to evolving conditions.

State space (\(\:{S}_{t}\))

The state space represents the dynamic environment of the fog system at time step t.

Each state vector St encodes the operational conditions of fog nodes and the characteristics of incoming tasks.

In the NF-MORL model, the state vector is defined as23:

where \(\:{u}_{i}\)denotes the current CPU utilization of each node, \(\:{m}_{i}\)represents available memory resources, \(\:{b}_{i}\)indicates network bandwidth availability, \(\:{l}_{i}\)is estimated task length, and \(\:{p}_{i}\)and \(\:{q}_{i}\)are adaptive priorities produced by the Neuro-Fuzzy Inference Layer. This multi-dimensional state captures both system resources and workload characteristics, enabling the agent to make context-aware scheduling decisions.

Action (\(\:{A}_{t}\))

The action defines the scheduling decision made by the RL agent at each time step.

In NF-MORL, an action At corresponds to the mapping of a task to a specific VM or fog node, based on current state and learned policy.

The actor network outputs a probability distribution over available nodes, representing the likelihood of selecting each node for a given task. Actions are selected stochastically during training (exploration) and deterministically during evaluation.

Reward (\(\:{R}_{t}\))

The reward function measures how well an action performs with respect to multiple objectives.

NF-MORL employs a multi-objective reward model that balances system efficiency, energy cost, and reliability:

where the coefficients \(\:{\alpha\:}_{i}\) represent the importance weights for each objective.

Each reward component is normalized in [0,1] and defined as:

-

\(\:{R}_{makespan}=1-\frac{Texec}{Tmax}\)

-

\(\:{R}_{energy}=1-\frac{Enode}{Emax}\).

-

\(\:{R}_{cost}=1-\frac{Cnode}{Cmax}\:\)

-

\(\:{R}_{fault}=1-{F}_{prob}\)

This formulation encourages actions that minimize makespan, energy consumption, and execution cost, while improving fault tolerance.

Policy(π)

The policy \(\pi \left( {as;\theta _{{actor}} } \right)\) defines the decision-making behavior of the actor network.

It maps the current state \(\:{S}_{t}\) to an action probability distribution over possible scheduling decision.

In the NF-MORL framework, the policy network receives both raw system metrics and adaptive fuzzy priorities as input features. In policy development, \(\:{W}_{s}\) and \(\:{W}_{p}\) represent the trainable weight vectors associated with the raw system metrics and adaptive fuzzy priorities, respectively24. These weights adjust the proportional influence of each feature group before processing by the policy network:

where \(\:{f}_{Softmax}\) ensures that output probabilities lie within [0,1]. The actor’s objective is to maximize the expected cumulative reward1:

During learning, exploration is encouraged via entropy regularization to avoid early convergence to suboptimal policies.

Value function \(\:{V}_{\pi\:}\left({S}_{t}\right)\)

The critic network approximates the value function, which estimates the expected return for a given state under policy π:

where γ is the discount factor controlling the trade-off between immediate and future rewards.

The critic helps stabilize training by providing a baseline for the advantage function:

This advantage signal indicates whether the chosen action performed better or worse than expected, guiding the actor’s gradient updates.

Actor-critic training network

The NF-MORL framework utilizes a deep actor–critic structure with shared experience replays and asynchronous training.

Each agent interacts with the environment independently, while a global network aggregates gradients to ensure stable convergence.

-

Actor Network: Two hidden layers with 256 neurons, stride = 1, kernel size = 2, SoftMax activation at output.

-

Critic Network: Same structure but outputs a scalar value.

-

Optimizer: Adam with adaptive learning rate \(\:{\eta\:}_{t}\).

The training loop follows standard policy-gradient updates:

Parallel agents accelerate convergence by exploring different regions of the state space simultaneously. The overall NF-MORL training and scheduling process is summarized in Algorithm 2.

This algorithm integrates the neuro-fuzzy adaptive prioritization phase with multi-objective actor–critic reinforcement learning. The procedure iteratively evaluates system states, computes fuzzy-based task priorities, and updates the actor–critic and fuzzy parameters using environmental feedback. To standardize the policy and promote enough exploration during training, an entropy term () that H(π) is incorporated in the actor update. Entropy quantifies the randomness of the policy distribution and inhibits early convergence to deterministic suboptimal behaviors. The coefficient regulates the impact of this entropy regularization on the gradient update.

Algorithm 2 delineates the NF-MORL training loop. At each stage, system metrics and fuzzy-derived priorities provide the state representation, the actor chooses a scheduling action, and the ensuing system performance generates a multi-objective reward. Transitions inform the critic via temporal-difference learning and the actor through entropy-regularized policy gradients, while fuzzy parameters are adjusted in real-time. This iterative process facilitates the development of an efficient scheduling policy.

Parameter updating

The adaptive neuro-fuzzy parameters and RL network weights are jointly updated during training.

After each episode, the feedback from fog nodes (Makespan, Energy, Cost, Fault) is aggregated and used to refine both levels:

-

Neuro-Fuzzy Level: Membership functions \(\:{\mu\:}_{ij\left(x\right)}\) and rule coefficients (\(\:{a}_{i}\),\(\:{b}_{i}\),\(\:{c}_{i}\),\(\:{d}_{i}\),\(\:{e}_{i}\)) are updated using gradient feedback derived from RL reward signals:

$$\theta _{{fuzzy}}^{{\left( {t + 1} \right)}} = \theta _{{fuzzy}}^{{\left( t \right)}} + \eta _{f} \cdot \nabla _{{\theta _{f} }} R_{t}$$(22) -

Reinforcement Learning Level: The actor and critic parameters are updated based on policy and value gradients:

$$\:{\theta\:}_{actor}^{\left(t+1\right)}={\theta\:}_{actor}^{\left(t\right)}+{\eta\:}_{a}\cdot\:\:{\nabla\:}_{{\theta\:}_{a}}J$$(23)

This bidirectional contact facilitates ongoing co-adaptation between the fuzzy reasoning layer and the learning agent. The NF-MORL model demonstrates significant adaptability, stability, and energy-efficient scheduling in the face of uncertain and dynamic workloads.

Experimental results

This section delineates the experimental outcomes and performance evaluation of the proposed NF-MORL framework. The studies seek to assess the model’s efficacy in optimizing multi-objective scheduling across diverse fog situations. The primary aims encompass shortening makespan, enhancing energy economy, decreasing execution costs, and preserving fault tolerance amongst dynamically fluctuating workloads. A comparative comparison with six advanced scheduling algorithms demonstrates the superiority of NF-MORL in terms of scalability, adaptability, and resilience.

Experimental setup

The NF-MORL framework was executed in Python 3.11 (TensorFlow 2.15) for the reinforcement learning components and MATLAB R2023b for the adaptive fuzzy inference layer. Simulations were performed on a high-performance workstation using an Intel Core i9-13900 K CPU, 64 GB of RAM, and an NVIDIA RTX 4090 GPU, running Ubuntu 22.04 LTS. A three-tier heterogeneous fog infrastructure (edge–fog–cloud) consisting of 25 fog nodes was modeled. Each node accommodated between 4 and 8 virtual machines (VMs) with diverse processing and bandwidth capacities, simulating authentic fog circumstances.

Workloads were generated utilizing Google Cluster Workload Traces and Edge Bench IoT datasets16, guaranteeing a combination of latency-sensitive and compute-intensive applications. The comprehensive simulation parameters are detailed in Table 4, whilst the learning and fuzzy parameters are enumerated in Table 5.

Evaluation of makespan

Makespan denotes the overall duration necessary to finalize all jobs inside the fog network and serves as a crucial metric for scheduling efficacy and system reactivity. This experiment assesses the efficacy of NF-MORL in minimizing makespan amidst variable workloads and diverse resources using adaptive neuro-fuzzy prioritization and multi-objective reinforcement learning. Three workload scales were evaluated: small (100–350 tasks), medium (400–650 tasks), and large (700–1000 tasks), with task durations varying from 20,000 to 950,000 MI. NF-MORL was evaluated against five baseline models (HTSFFDRL, NF-A2C, DDPG-TS, DQN-TS+, GA-TS), with each model trained for 150 episodes and tested over 50 trials to ensure statistical reliability.

Tables 6, 7 and 8 demonstrate that NF-MORL consistently attained the minimal makespan across all workload categories. Under substantial workloads, NF-MORL sustained its superiority, attaining completion times that were 22–26% shorter than those of DDPG-TS and GA-TS. The results underscore the scalability of the neuro-fuzzy layer and the actor-critic optimization process, validating NF-MORL as an effective and adaptive scheduler for dynamic fog settings.

Figures 8, 9 and 10 show the graphical trends of makespan across the three workload groups.

NF-MORL consistently produces the lowest curves with minimal variance, confirming its stability, scalability, and superior scheduling adaptability across dynamic environments.

Evaluation of energy consumption by NF-MORL

Energy consumption is a critical performance metric in fog computing, as it directly impacts operational costs, thermal stability, and device lifetime22,23. By integrating adaptive neuro-fuzzy prioritization with multi-objective reinforcement learning, NF-MORL reduces energy consumption and facilitates task allocation that is consistent with computational needs and network fluctuations, while avoiding idle power waste and node overload. Experiments were conducted using Google Cluster and EdgeBench workloads at small (100–350 tasks), medium (400–650 tasks), and large (700–1000 tasks) scales. The results shown in Tables 9, 10 and 11; Figs. 11, 12 and 13 show that NF-MORL consistently outperforms all baseline models. For minor workloads, it reduces energy consumption by 15–22%. For moderate workloads, a reduction of 14–18% and more than 25% over A2C was observed. In comparison, under significant workloads, NF-MORL shows a reduction in energy consumption of 17–21% and almost 28% less than LSTM. These findings demonstrate the scalability of NF-MORL and its capacity to enhance fog computing with energy efficiency and stability.

Evaluation of execution cost by NF-MORL

Implementation cost evaluation is essential to quantify the economic efficiency of work scheduling in fog environments. Execution cost sums up the computational and communication costs incurred to complete a given workload19,20. In the proposed NF-MORL framework, the cost reduction is achieved by coupling a fuzzy neural priority layer with a multi-objective critical actor scheduler. The fuzzy layer continuously maps the system observations (CPU consumption, memory load, bandwidth availability, and task length) into a task priority score, while the multi-objective policy uses Pareto-efficient trade-offs among manufacturing, energy, cost, and fault tolerance. This synergy avoids unnecessary migrations and communication costs, leading to an overall cost reduction. Following the configuration in Table 5, we evaluate three workload groups derived from Google Cluster Workload Traces: small (350 − 100 jobs), medium (400–650 jobs), and large (700–1000 jobs). For each group, we run 50 training iterations. The numerical results are summarized in Tables 12, 13 and 14. The corresponding trends are shown in Figs. 14, 15 and 16.

Across all workloads, NF-MORL consistently achieves the lowest execution cost. On small datasets, NF-MORL reduces the cost by approximately 18–23%. On medium datasets, this increase increases to 20–25%. And for large workloads, the cost decreases by up to 28%. These improvements are due to: (1) neural fuzzy prioritization that smoothes short-term fluctuations and prevents backloading, and (2) multi-objective actor-critic updates that jointly optimize computational-communication trade-offs. The findings confirm that NF-MORL is scalable and cost-effective for heterogeneous fog environments.

Evaluation of fault tolerance by NF-MORL

Fault tolerance is an essential performance indicator in fog computing that ensures reliability, service continuity, and resilience amidst node failures, overloads, and network fluctuations. To evaluate this aspect, studies were conducted using the Google Cloud Tasks dataset at three workload scales of small, medium, and large. The results are summarized in Tables 15, 16 and 17, and the patterns are shown in Figs. 17, 18 and 19 for small, medium, and large workloads. In all conditions, NF-MORL consistently demonstrated the best fault tolerance and showed better resilience and adaptability than reinforcement learning and heuristic-based schedulers. Under moderate workloads, NF-MORL demonstrated robustness with a 10–15% improvement compared to baseline techniques, indicating strong adaptability to varying task arrival rates. On large workloads, where fault tolerance is particularly difficult, NF-MORL significantly outperformed other models, achieving improvement margins above 20% in several cases. This is attributed to the adaptive neuro-fuzzy layer that flexibly changes task priorities and the multi-objective reinforcement learning policy that simultaneously optimizes time, energy, cost, and reliability in real time. Comparative findings show that NF-MORL not only reduces task failures and delays, but also accelerates system stabilization after workload increases, which is a critical necessity for mission-critical IoT systems. Statistical investigations confirmed that the improvements achieved by NF-MORL are significant (p < 0.01) across all datasets, thus strengthening its reliability and scalability as an intelligent scheduling framework for next-generation fog and edge environments.

Comparison with existing methods

The suggested NF-MORL framework consistently surpasses conventional DRL and heuristic schedulers in all assessment metrics. In contrast to models like LSTM, DQN, and A2C that depend on clear and accurate inputs, NF-MORL integrates an adaptive neuro-fuzzy layer that is intrinsically resilient to uncertainty, facilitating dependable decision-making in highly dynamic fog settings. Techniques such as DDPG-TS and DQN-TS + excel under steady workloads but necessitate retraining during resource fluctuations, whereas NF-MORL constantly adapts using real-time fuzzy reasoning and multi-objective learning.

An essential benefit of NF-MORL is its capacity to concurrently maximize makespan, energy, cost, and fault tolerance, while several competing approaches focus on either one or two objectives. The system dynamically equilibrates these objectives under fluctuating settings using Pareto-efficient actor–critic optimization. Experiments demonstrate consistent enhancements: 18–26% decrease in execution time, up to 15% energy conservation, 10–12% cost reduction, and 9–20% increased fault tolerance. NF-MORL preserves scalability as task volumes increase, in contrast to traditional DRL algorithms that demonstrate instability or protracted convergence.

Evaluation of tasks in each fog node

A critical aspect in evaluating the effectiveness of any task scheduling system for fog computing is the allocation of work among fog nodes. Efficient distribution ensures parity, reduces node congestion, and maintains low latency in large-scale IoT implementations25. To evaluate this aspect, we conducted comprehensive experiments using the proposed NF-MORL architecture over a workload range of 100 to 1000 tasks, while increasing the number of fog nodes to eight. Each workload was repeated over 100 training episodes, and the assignment of tasks to each node was monitored on a per-episode basis. NF-MORL exhibits remarkably consistent and fair work allocation. This improvement results from the integration of an adaptive neuro-fuzzy inference layer that constantly changes task priorities according to changing system conditions, and a multi-objective actor-critic policy, which simultaneously implements Pareto-efficient optimization over time, energy, cost, and fault tolerance. In Fig. 20, the fog distribution of each part of the small data set is shown.

As shown in the graphs in Fig. 21 at moderate workloads ranging from 400 to 550 tasks, NF-MORL demonstrates uniform distributions with all eight nodes being actively engaged. Minor discrepancies are seen; however, no individual node surpasses 18–20% of the total burden. The adaptive fuzzy reasoning layer efficiently selects lightweight tasks and distributes them uniformly, while the actor-critic approach guarantees that nodes with underutilized CPU and bandwidth capacities are allocated supplementary work. As workloads escalate to 600–750 tasks, the model dynamically reallocates duties among nodes that briefly demonstrate reduced utilization. The neuro-fuzzy module swiftly adjusts to fluctuations in CPU and memory resources, whereas the actor–critic controller redistributes excess work instantaneously. NF-MORL effectively prevents the continuous overloading of individual nodes, hence averting bottlenecks and ensuring sustained throughput.

The superiority of NF-MORL becomes increasingly apparent under the most demanding workloads, involving 800–1000 tasks. As shown in Fig. 22, in the baseline case, a significant increase was observed when one or two nodes were handling unusually large portions of the workload. In NF-MORL, two complementary nodes (Fog Node 7 and Fog Node 8) are actively involved and redistribute the additional load, reducing the pressure on the main nodes. Even with 1000 tasks, the allocations remain within the range of the tuned parameters, with each node handling about 10–15% of the workload. This fair distribution demonstrates the scalability and robustness of NF-MORL even in contexts characterized by high task density and variable workloads.

The results distinctly demonstrate that NF-MORL attains a more equitable, seamless, and adaptable work allocation among various fog nodes compared to the baseline models. The dual mechanism of adaptive neuro-fuzzy reasoning for real-time interpretability, combined with a multi-objective actor-critic policy for long-term optimization, markedly diminishes oscillations, averts prolonged overload on specific nodes, and ensures equitable utilization throughout the entire fog infrastructure. This behavior promotes responsiveness, improves energy economy, diminishes the risk of overload-related problems, and prolongs the lifespan of fog resources by averting hot spots. The assessment verifies that NF-MORL provides substantial scalability and equity in job allocation. The framework effectively balances diverse workloads over eight fog nodes under fluctuating demand, showcasing its appropriateness for next-generation IoT applications that necessitate stability, adaptability, and efficiency in extensive fog computing environments.

Analysis of simulation results

In this section, NF-MORL is evaluated against state-of-the-art schedulers including NF-A2C, DDPG-TS, DQN-TS+, and GA-TS across four key metrics: completion time, energy consumption, execution cost, and fault tolerance. Using Google Cluster and EdgeBench workloads (100–1000 tasks), the results in Tables 18, 19, 20 and 21 show that NF-MORL consistently outperforms all baselines. Completion-time analysis (Table 18) indicates a 28–35% reduction due to adaptive neuro-fuzzy prioritization and efficient workload balancing. Energy results (Table 19) further demonstrate 15–30% savings, enabled by reduced task migration and optimized CPU–bandwidth utilization. These findings highlight NF-MORL’s superior adaptability and efficiency across diverse fog scenarios. The execution cost comparison Table 20 shows that NF-MORL can significantly reduce operational costs by 18–40% compared to baseline approaches. The actor-critic model dynamically optimizes multi-objective trade-offs, resulting in reduced computational, communication, and data transmission overheads. The neuro-fuzzy inference mechanism helps to precisely control the cost by adapting task priorities to network and energy constraints in real time.

Fault tolerance analysis Table 21 shows that NF-MORL has between 20 and 37% higher fault resilience than competing models. This improvement is due to predictive fuzzy rules that detect potential node instability and a redundancy-aware scheduling policy that maintains service continuity even under minor system failures.

Analysis of computational complexity and scalability

We provide a formal complexity analysis and an empirical scalability evaluation of NF-MORL in real fog systems, utilizing an experimental configuration comprising 25 fog nodes, a maximum of 8 VMs per node, 150 training episodes, and 1000 steps each episode. The rationale for each scheduling decision is highly efficient. Following adaptive pruning, the neuro-fuzzy layer maintains an average of 61 ± 7 active Takagi–Sugeno rules, decreased from the original 81, leading to an inference complexity of O(R) with R < 68, far lower than the O(81) of static full-rule-base fuzzy approaches. The actor and critic networks each have around 185,000 parameters, featuring two hidden layers of 256 units, resulting in a forward-pass complexity that remains largely unaffected by the number of jobs or nodes. On standard fog hardware (comparable to Intel Core i9-13900 K with 64 GB RAM), the per-step inference time varies from 3.8 to 4.6 ms, adequately meeting the real-time requirements (< 50 ms) identified in Google Cluster traces. The training complexity for each episode is O (B · Nₐ · T · L), where B denotes a batch size of 64, Nₐ signifies the number of fog agents, T is capped at 1000 steps, and L indicates 4 objectives. The implemented centralized training with decentralized execution (CTDE) framework, along with cloud-based parameter aggregation, ensures minimal communication overhead: each agent conveys just 185 K gradients per 50 steps, amounting to less than 12 MB per synchronization cycle for 25 agents. Figure 23 depicts the scalability performance. As the quantity of fog agents escalates from 10 to 100 (assessed through extensive EdgeBench workloads with a maximum of 1000 concurrent tasks), the wall-clock training duration scales nearly linearly, attaining merely 1.3 times the single-agent baseline at 50 agents and 1.7 times at 100 agents, attributable to asynchronous experience collection and effective cloud aggregation. Throughput (tasks planned per second) rises roughly linearly up to 80 agents, beyond which it experiences a gradual saturation due to the cloud synchronization bottleneck. The mean memory footprint per agent is consistently 178 ± 12 MB, comfortably within the limits of standard fog gateways.

Real-world testbed validation

To enhance the simulation-based assessment and augment the practical significance of the outcomes, NF-MORL was implemented and validated on a tangible fog testbed comprising twenty Raspberry Pi 4 Model B devices (8 GB RAM, quad-core Cortex-A72) serving as fog nodes, fifty ESP32-C6 modules functioning as edge/IoT devices that produce real-time sensor streams and 720p object-detection tasks at 5–10 fps, and one Dell PowerEdge R740 server operating as the cloud aggregator. The complete testbed was linked via an authentic 5G campus network, featuring a downlink bandwidth of 180–220 Mbps and a round-trip latency of 8–12 ms. A 48-hour uninterrupted workload was conducted utilizing a genuine smart-factory trace that included temperature, vibration, and video analytics duties, synchronized in real time according to the original timestamps.

Table 22 Results of the Empirical Testbed (48-hour smart manufacturing workload). NF-MORL consistently surpasses all baselines across all metrics, attaining a 32–36% reduction in makespan, a 28–31% decrease in energy consumption, and a task success rate of 95.8%, even amidst 15% random node failures, thereby validating the robustness of the proposed framework in authentic heterogeneous and dynamic environments.

Figure 24. The physical fog testbed deployment comprises 20 Raspberry Pi 4 fog nodes, 50 ESP32-C6 edge devices, and a Dell PowerEdge R740 cloud aggregator, all interconnected via an authentic 5G campus network. The live monitoring dashboard (right) depicts the 48-hour smart-factory workload, showcasing inserted and successfully completed tasks (green), real-time power consumption (red), and a comprehensive performance report.

A physical fog testbed consisting of 20 Raspberry Pi 4 fog nodes, 50 ESP32-C6 edge devices, and a Dell PowerEdge R740 cloud aggregator, all linked via an authentic 5G campus network (left), accompanied by a live monitoring dashboard that exhibits task completion and power consumption over a 48-hour smart-factory workload (right).

Statistical significance and variance analysis

To rigorously assess the consistency and statistical significance of the asserted improvements, all experiments (both simulations and real testbeds) were executed 30 independent times utilizing distinct random seeds. Table 23 displays the mean ± standard deviation for the four principal objectives obtained from the Google Cluster + EdgeBench simulation dataset and the 48-hour physical testbed experiment.

Pairwise two-sided Welch’s t-tests (α = 0.01) indicate that NF-MORL enhancements significantly exceed each baseline, with p-values less than 1e-12 in all cases. The markedly diminished standard deviations of NF-MORL further demonstrate its superior stability and robustness to workload variations and random seed fluctuations.

Conclusion

This paper introduces NF-MORL, an innovative neuro-fuzzy multi-objective reinforcement learning framework that, for the first time, synergistically integrates adaptive uncertainty management, Pareto-efficient policy formulation, and distributed multi-agent training for task scheduling in fog computing environments. An exhaustive assessment of comprehensive simulation datasets (Google Cluster + EdgeBench) and a pragmatic 48-hour physical testbed deployment (20 Raspberry Pi 4 fog nodes + actual 5G campus network) reveals that NF-MORL consistently surpasses notable baselines by 32–36% in makespan, 28–31% in energy efficiency, 38–40% in operational cost, and attains a 95.8% task success rate despite realistic node failures and network jitter. The low inference latency (3.8–4.6 ms), minimal memory requirement (< 180 MB per agent), and near-linear scalability up to 100 fog nodes demonstrate that NF-MORL is lightweight and readily deployable on standard fog hardware, rendering it highly appropriate for practical 5G/6G-enabled industrial IoT, smart city, and telemedicine applications where interpretability, robustness, and multi-objective trade-offs are essential. Numerous viable trajectories are anticipated in the future. Incorporating graph neural network message-passing into the actor–critic framework (Graph-RL) may accurately represent dynamic fog topology and inter-node dependencies, while minimizing communication costs in highly mobile settings. Secondly, augmenting NF-MORL with federated reinforcement learning principles would provide privacy-preserving collaborative training across many administrative domains (e.g., hospitals or factories) without the transmission of raw data, in accordance with emerging edge intelligence legislation. The examination of hardware-accelerated implementation utilizing FPGA or NVIDIA Jetson-based fog gateways aims to provide real-time inference under 1 ms for ultra-low-latency applications, including autonomous driving and AR/VR offloading.

Data availability

The datasets used and/or analyzed during the current study available from the corresponding author on reasonable request.

References

Ishaq, F., Ashraf, H. & Jhanjhi, N. A Survey on Scheduling the Task in Fog Computing Environment. Preprint at https://abs/arXiv.org/2312.12910 (2023).

Wang, Z., Goudarzi, M., Gong, M. & Buyya, R. Deep reinforcement learning-based scheduling for optimizing system load and response time in edge and fog computing environments. Future Generation Comput. Syst. 152, 55–69 (2024).

Oustad, E. et al. DIST: Distributed Learning-based Energy-Efficient and Reliable Task Scheduling and Resource Allocation in Fog Computing. (IEEE Transactions on Services Computing, 2025).

Saeed, A., Chen, G., Ma, H. & Fu, Q. A genetic algorithm with selective repair method under combined-criteria for deadline-constrained IoT workflow scheduling in Fog-Cloud computing. Future Generation Comput. Syst. 175, 108050 (2025).

Sun, G., Liao, D., Zhao, D., Xu, Z. & Yu, H. Live Migration for Multiple Correlated Virtual Machines in Cloud-Based Data Centers. IEEE Trans. Serv. Comput. 11(2) 279–291 https://doi.org/10.1109/TSC.2015.2477825 (2018).

Sun, G., Xu, Z., Yu, H. & Chang, V. Dynamic network function provisioning to enable network in box for industrial applications. IEEE Trans. Industr. Inf. 17 (10), 7155–7164. https://doi.org/10.1109/TII.2020.3042872 (2021).

Sharma, Y. & Moulik, S. RT-SEAT: A hybrid approach based real-time scheduler for energy and temperature efficient heterogeneous multicore platforms. Results Eng. 16, 100708 (2022).

Moulik, S. Sharma, Y. FRESH: Fault-tolerant Real-time scheduler for heterogeneous multiprocessor platforms. Future Generation Comput. Syst. 161, 214–225 (2024).

Wang, K., Tan, C. W. (2025). Reverse Engineering Segment Routing Policies and Link Costs With Inverse Reinforcement Learning and EM. IEEE Transactions on Machine Learning in Communications and Networking, 3, 1014-1029. https://doi.org/10.1109/TMLCN.2025.359873.

Zhang, B., Sang, H., Meng, L., Jiang, X. & Lu, C. Knowledge- and data-driven hybrid method for lot streaming scheduling in hybrid flowshop with dynamic order arrivals. Comput. Oper. Res. 184, 107244. https://doi.org/10.1016/j.cor.2025.107244 (2025).

Xu, H., Zhao, N., Xu, N., Niu, B. & Zhao, X. Reinforcement learning-based dynamic event-triggered prescribed performance control for nonlinear systems with input delay. Int. J. Syst. Sci. https://doi.org/10.1080/00207721.2025.2557528 (2025).

Zhao, J., Niu, B., Xu, N., Zong, G. & Zhang, L. Self-triggered optimal fault-tolerant control for saturated-inputs zero-sum game nonlinear systems via particle swarm optimization-based reinforcement learning. Commun. Nonlinear Sci. Numer. Simul. https://doi.org/10.1016/j.cnsns.2025.109512 (2025).

Zhu, B., Zhao, N., Niu, B., Zong, G. & Zhao, X. Distributed adaptive optimized sliding-mode time-varying formation control with prescribed-time performance constraints for nonlinear heterogeneous multiagent systems. IEEE Internet Things J. https://doi.org/10.1109/JIOT.2025.3626164 (2025).

Multi-Objective Fuzzy approach to scheduling and offloading workflow in Fog-Cloud environments. J. Comput. Sci. 66, 101030 (2022).

Azarkasb, S. O. Khasteh, S. H. Fog computing tasks management based on federated reinforcement learning. J. Grid Comput. 23, 11 (2025).

Abdulazeez, D. H. Askar, S. K. Offloading mechanisms based on reinforcement learning and deep learning algorithms in the fog computing environment. Ieee Access. 11, 12555–12586 (2023).

Yao, M., Zhao, T., Cao, J. Li, J. Hierarchical Fuzzy Topological System for High-Dimensional Data Regression Problems. IEEE Trans. Fuzzy Syst. 33(7), 2084–2095. https://doi.org/10.1109/TFUZZ.2025.3549791 (2025).

Minggang Liu, N., Zhao, K. H., Alharbi, X., Zhao, B. Niu Dynamic Event-Triggered fuzzy adaptive hierarchical sliding mode optimal control for unknown nonlinear systems. Int. J. Fuzzy Syst. https://doi.org/10.1007/s40815-025-02124-8 (2025).

Mir, M. Trik, M. A novel intrusion detection framework for industrial iot: GCN-GRU architecture optimized with ant colony optimization. Comput. Electr. Eng. 126, 110541 (2025).

Liu, Y., Dong, X., Zio, E., Cui, Y. Event-Triggered Multiple Leaders Formation Tracking for Networked Swarm System With Resilience to Noncooperative Nodes. IEEE Transactions on Cybernetics, 55(9), 4136-4144. https://doi.org/10.1109/TCYB.2025.3580666 (2025).

Liu, Q. et al. Sample-efficient backtrack temporal difference deep reinforcement learning. Knowledge-Based Syst. 330, 114613. https://doi.org/10.1016/j.knosys.2025.114613 (2025).

Ren, Y., Chang, Y., Cui, Z., Chang, X., Yu, H., Li, X., Wang, Y. (2025). Is cooperative always better? Multi-Agent Reinforcement Learning with explicit neighborhood backtracking for network-wide traffic signal control. Transportation Research Part C: Emerging Technologies, 179, 105265. doi: https://doi.org/10.1016/j.trc.2025.105265.

Choppara, P. & Mangalampalli, S. S. A hybrid task scheduling technique in fog computing using fuzzy logic and deep reinforcement learning. (IEEE Access, 2024).

Shakarami, A., Shahidinejad, A. Ghobaei-Arani, M. A review on the computation offloading approaches in mobile edge computing: A g ame‐theoretic perspective. Software: Pract. Experience. 50 (9), 1719–1759 (2020).

Chraibi, A., Ben Alla, S., Touhafi, A. Ezzati, A. A novel dynamic multi-objective task scheduling optimization based on dueling DQN and PER: A. Chraibi et al. J. Supercomputing. 79 (18), 21368–21423 (2023).

Hosseinzadeh, M. et al. Task scheduling mechanisms for fog computing: a systematic survey. IEEE Access. 11, 50994–51017 (2023).

Chen, P. et al. QoS-oriented task offloading in NOMA-based multi-UAV cooperative MEC systems. IEEE Trans. Wireless Commun. https://doi.org/10.1109/TWC.2025.3593884 (2025).

Li, Y., Yang, C., Chen, X. Liu, Y. Mobility and dependency-aware task offloading for intelligent assisted driving in vehicular edge computing networks. Veh. Commun. 45, 100720 (2024).

Yuan, W. et al. Transformer in reinforcement learning for decision-making: a survey. Front. Inform. Technol. Electr. Eng. 25(6), 763–790. https://doi.org/10.1631/FITEE.2300548 (2024).

Sun, Y. Zhang, N. A resource-sharing model based on a repeated game in fog computing. Saudi J. Biol. Sci. 24 (3), 687–694 (2017).

Li, Z., Gu, W., Shang, H., Zhang, G. Zhou, G. Research on dynamic job shop scheduling problem with AGV based on DQN. Cluster Comput. 28 (4), 236. https://doi.org/10.1007/s10586-024-04970-x (2025).

Meng, Q., Hussain, S., Luo, F., Wang, Z. Jin, X. An online reinforcement Learning-Based energy management strategy for microgrids with centralized control. IEEE Trans. Ind. Appl. 61 (1), 1501–1510. https://doi.org/10.1109/TIA.2024.3430264 (2025).

Zhang, B., Wang, Z., Meng, L., Sang, H. Jiang, X. Multi-objective scheduling for surface mount technology workshop: automatic design of two-layer decomposition-based approach. Int. J. Prod. Res. https://doi.org/10.1080/00207543.2025.2502106 (2025).

Long, X., Chen, J., Yang, L. Huang, H. An emergency scheduling method based on automl for space maneuver objective tracking. Expert Syst. Appl. 298, 129759. https://doi.org/10.1016/j.eswa.2025.129759 (2026).

Zhang, K., Zheng, B., Xue, J. Zhou, Y. Explainable and Trust-Aware AI-Driven Network Slicing Framework for 6G IoT Using Deep Learning. IEEE Internet Things J. https://doi.org/10.1109/JIOT.2025.3619970 (2025).

Yang, M. et al. A multi-objective task scheduling method for fog computing in cyber-physical-social services. IEEE access. 8, 65085–65095 (2020).

Awada, U., Zhang, J., Chen, S., Li, S. Yang, S. Resource-aware multi-task offloading and dependency-aware scheduling for integrated edge-enabled IoV. J. Syst. Architect. 141, 102923 (2023).

Liu, Q., Song, Z., Liang, Y., Xie, Z., Zhang, S., Zhang, J.,... Li, Y. (2026). CoRLHF: Reinforcement learning from human feedback with cooperative policy-reward optimization for LLMs. Expert Systems with Applications, 301, 130113. https://doi.org/10.1016/j.eswa.2025.130113.

Ali, A. et al. Multiobjective Harris Hawks optimization-based task scheduling in cloud-fog computing. IEEE Internet Things J. 11 (13), 24334–24352 (2024).

Shakarami, A., Shahidinejad, A. Ghobaei-Arani, M. An autonomous computation offloading strategy in mobile edge computing: A deep learning-based hybrid approach. J. Netw. Comput. Appl. 178, 102974 (2021).

He, W., Tan, J., Wang, R., Liu, Z., Luo, X., Hu, H.,... Zhang, H. (2025). A Deep Reinforcement Learning Approach to Time Delay Differential Game Deception Resource Deployment. IEEE Transactions on Dependable and Secure Computing, 1-16. https://doi.org/10.1109/TDSC.2025.3620151.

Jiang, Q., Xin, X., Zhang, T. Chen, K. Energy-Efficient task scheduling and resource allocation in edge heterogeneous computing systems using Multi-Objective optimization. IEEE Internet Things J. (2025).

Acknowledgements

This work was supported by the Natural Science Foundation of Guangxi Province (No.2021GXNSFAA075019 and 2025JJA180065); Philosophy and Social Science Foundation of Guangxi (No.25KSF232); Higher Education Undergraduate Teaching Reform Project of Guangxi (No.2024JGA258); The "14th Five Year Plan" of Guangxi Education and Science Major project in 2025 (No.2025JD20); The "14th Five Year Plan" of Guangxi Education and Science special project of college innovation and entrepreneurship education (No.2022ZJY2727); The "14th Five Year Plan" of Guangxi Education and Science Annual project in 2023 (No.2023A028). This study acknowledge the support of Guangxi Academy of Artificial Intelligence; the National First-class Undergraduate Major - The Major of Logistics Management; Demonstrative Modern Industrial School of Guangxi University - Smart Logistics Industry School Construction Project; Guangxi Colleges and Universities Key laboratory of Intelligent Logistics Technology; Engineering Research Center of Guangxi Universities and Colleges for Intelligent Logistics Technology; Demonstrative Modern Industrial School of Guangxi University - Smart Logistics Industry School Construction Project, Nanning Normal University.

Author information

Authors and Affiliations

Contributions

The research idea and its planning were proposed by all authors, including Xiaomo Yu (https://orcid.org/0000-0002-7056-2362), Ling Tang, Jie Mi, Long Long, Xiao Qin, Xiuming Li, and Qinglian Mo. The initial draft, ideation, supervision, and coordination of the project were carried out by Ling Tang.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yu, X., Tang, L., Mi, J. et al. NF-MORL: a neuro-fuzzy multi-objective reinforcement learning framework for task scheduling in fog computing environments. Sci Rep 16, 2455 (2026). https://doi.org/10.1038/s41598-025-32235-z

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-32235-z