Abstract

The sharing of patient-level data necessary for covariate-adjusted survival analysis between medical institutions is difficult due to privacy protection restrictions. We propose a privacy-preserving framework that estimates balanced Kaplan–Meier curves from distributed observational data without exchanging raw data. Each institution sends only the low-dimensional representation obtained through dimensionality reduction of the covariate matrix. Analysts reconstruct the aggregated dataset, perform propensity score matching, and estimate survival curves. Experiments using simulation datasets and five publicly available medical datasets showed that the proposed method consistently outperformed single-site analyses. This method can handle both horizontal and vertical data distribution scenarios and enables the collaborative acquisition of reliable survival curves with minimal communication and no disclosure of raw data.

Similar content being viewed by others

Introduction

In medical research, survival outcomes are one of the most important factors for evaluating treatment effects. When estimating treatment effects for survival outcomes from observational data such as electronic medical records, confounding bias is unavoidable because treatment assignment is not random1,2. To mitigate such biases and ensure the validity of results, it is essential to appropriately adjust for covariates related to both treatment assignment and outcomes. Specific adjustment methods include multivariate regression, propensity score matching, inverse probability weighting, and stratification, which are standardly used3,4, and these methods have been shown to reduce confounding bias in observational data5,6.

Such methods require enough cases and diverse covariates to effectively adjust for confounding, as demonstrated in simulation studies7,8,9. However, data from a single site may suffer from insufficient case numbers and biased patient characteristics, which reduce estimation accuracy and limit external validity. Therefore, integrating data distributed across multiple sites, countries, and regions to analyze them as a large cohort has become essential for improving the reliability of confounding adjustment10,11,12,13,14. On the other hand, sharing raw data is challenging due to privacy concerns and regulations. Therefore, establishing a framework that allows for the analysis of multi-site data while maintaining privacy is an urgent task.

Representative methods in survival time analysis include the Cox proportional hazards model15 and the Kaplan–Meier (KM) curve16. Since the Cox model is a multivariate regression framework, bias can be adjusted in a model-based manner by simultaneously incorporating covariates. In recent years, numerous frameworks have been proposed for estimating the Cox model from distributed data while maintaining privacy. First, few-shot algorithms have been proposed where each facility sends only minimal statistics such as event counts, partial likelihood gradients, and Hessian to the server, and parameters are estimated centrally17,18. Additionally, a one-shot algorithm has been proposed where each facility reduces the dimension of the covariate matrix and shares it, and the server integrates and redistributes the parameters, ultimately enabling each institution to calculate local parameters19. The advantage of these algorithms is that they can adjust the effects of covariates using only the model, without using external indicators such as propensity scores. In addition, a method has been proposed that uses information from each institution to estimate propensity scores, applies inverse probability weighting or matching, and then shares weighted or matched aggregate statistics to estimate hazard ratios20,21. These studies enhance the robustness of the model by combining propensity score-based confounding adjustment with Cox model estimation. Such research suggests that the requirement to simultaneously achieve covariate adjustment and Cox model estimation under privacy protection is being met to a certain extent.

While the Cox model performs regression analysis, the KM curve visually represents survival probability, making it an intuitive indicator for medical professionals to understand22,23. Major reporting guidelines also recommend presenting KM curves adjusted for covariate24. Under privacy protection, it is necessary to estimate KM curves without sharing raw data. Previously proposed methods have been limited to estimating unadjusted KM curves with differential privacy applied to distributed data25,26, leaving confounding bias unaddressed. As a result, their practicality is limited from the perspectives of clinical decision-making and guideline compliance. In other words, there is still no method available to estimate covariate-balanced KM curves, which are highly valued in clinical settings, while protecting privacy using distributed data.

To address this gap, this study focuses on data collaboration quasi-experiments (DC-QE)27, one of the distributed data analysis methods. DC-QE is attracting attention as a one-shot framework that enables covariate adjustment and causal effect estimation without sharing raw data. In this method, each institution shares privacy protected representations instead of raw data to perform propensity score analysis. While DC-QE has been shown to be effective for estimating the average treatment effect for binary and continuous outcomes, it is not designed for survival outcomes.

In this study, we propose a new method for estimating covariate-balanced KM curves under privacy protection. The proposed method extends the existing DC-QE framework for privacy preserving quasi-experiments to survival outcomes, enabling collaboration without directly sharing distributed samples or covariates. The main contributions of this study are as follows.

-

We proposed the first privacy-preserving framework for estimating covariate-balanced KM curves based on propensity scores in a distributed data environment.

-

Through collaboration across the distributed samples, the proposed method identified appropriately matched pairs between the treatment and control groups. Furthermore, through collaboration across the distributed covariates, bias can be effectively reduced, and more accurate survival curves can be estimated.

-

Numerical simulation results using an artificial dataset and five open medical datasets show that the proposed method outperforms the analysis performed on the local dataset alone.

Method

Data collaboration quasi-experiment for survival outcomes

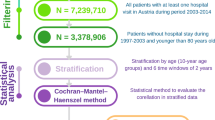

The algorithm proposed in this study consists of two stages: data aggregation and estimation of covariate-balanced survival curves. In the first phase, collaborative representations are created by analysts from dimension-reduced site-specific data and publicly shared anchor data. In the second stage, covariate-balanced survival curves are estimated using propensity score matching (PSM) and Kaplan-Meier estimation from these representations. Figure 1 shows an overview of the proposed algorithm.

Problem settings

In this paper, we define two roles participating in DC-QE: analysts and users. Users provide data, while analysts are responsible for processing and analyzing aggregated information. Each user \(\:k\:(k=1,\dots\:,c)\) has a private covariate matrix \(\:{X}_{k}\in\:{\mathbb{R}}^{{n}_{k}\times\:m}\), an observed time vector \(\:{\varvec{t}}_{k}\in\:{\mathbb{R}}^{{n}_{k}}\), an event indicator vector \(\:{\varvec{\delta\:}}_{k}\in\:{\left\{\text{0,1}\right\}}^{{n}_{k}}\), and a binary treatment variable \(\:{\varvec{Z}}_{\varvec{k}}\in\:{\left\{\text{0,1}\right\}}^{{n}_{k}}\) indicating the treatment group and control group. Here, \(\:n\) denotes the sample size and \(\:m\) denotes the number of covariates. Furthermore, we assume that the covariate dataset is managed by \(\:c\) institutions and divided horizontally, and is also divided vertically into \(\:d\) parties with different covariates due to physical or operational reasons. Therefore, the covariate matrix \(\:X\) is divided as follows:

The \(\:(k,l)\)-th party holds a dataset \(\:{X}_{k,l}\in\:{\mathbb{R}}^{{n}_{k}\times\:{m}_{l}}\) where \(\:n={\sum\:}_{k=1}^{c}{n}_{k}\) and \(\:m={\sum\:}_{l=1}^{d}{m}_{l}\).

The estimation targets are the covariate-balanced survival function \(\:{S}^{1}\left(t\right)\) and \(\:{S}^{0}\left(t\right)\) for each of the treatment and control group. Under such a distributed setting, it is expected that each party individually will face bias and error in estimation results due to a lack of covariates and insufficient sample size. Furthermore, although the most accurate analysis can be performed by aggregating data from all parties, this is difficult to implement due to restrictions and mechanisms related to privacy protection.

Phase 1: construction of collaboration representation

DC-QE adopts the data collaboration framework28 for data aggregation. In data collaboration, the collaboration representation used for analysis is constructed from dimensionally reduced intermediate representations.

First, each user generates and shares an anchor dataset \(\:{X}^{\text{a}\text{n}\text{c}}\in\:\:{\mathbb{R}}^{r\times\:m}\), where \(\:r\) is the number of samples in the anchor dataset. The anchor dataset is a publicly shareable dataset composed of either publicly available or randomly generated dummy data. This is used to compute the reconstruction function \(\:{g}_{k}\) (described below). Next, each user constructs an intermediate representation, as follows:

where \(\:{f}_{k,\:l}\) is a row-wise linear or nonlinear dimensionality reduction function applied to the local covariate block \(\:{X}_{k,l}\) at \(\:(k,l)\)-th party. In this study, as a concrete example, \(\:{f}_{k,l}\) is implemented by applying principal component analysis (PCA) to \(\:{X}_{k,l}\) and retaining the first \(\:{\tilde{m}}_{k,l}\) principal component scores for each individual; thus \(\:{\tilde{X}}_{k,l}\) consists of these PCA scores. Other dimensionality reduction methods, such as locality preserving projections or nonnegative matrix factorization, can also be used within the same framework. Here, \(\:{\tilde{m}}_{k,l}\) denotes the reduced dimensions. We use \(\:{X}_{:,l}\) to denote the collection of the \(\:l\)-th covariate block across all institutions. In other words, \(\:{X}_{:,l}\) corresponds to the same set of variables (for example, the same laboratory measurements) observed at multiple institutions and stored locally as \(\:{X}_{k,l}\) at each site. Function \(\:{f}_{k,\:l}\) is kept private for each user and differs across users. Through this process, each party generates an intermediate representation, enabling it to provide only low-dimensional representations to analysts without directly disclosing its private data. Subsequently, each user shares only the intermediate representations \(\:{\tilde{X}}_{k,l}\:\text{a}\text{n}\text{d}\:{\tilde{X}}_{k,l}^{\text{a}\text{n}\text{c}}\), with the analyst. To handle survival outcomes and treatment indicator, each party additionally shares\(\:\:{t}_{i}\), \(\:{\delta\:}_{i}\) and \(\:{Z}_{i}\). Importantly, the original covariate data \(\:{X}_{k,l}\) were not directly shared.

The analyst then constructs a collaboration representation from shared intermediate representations. Each party’s intermediate representation \(\:{\tilde{X}}_{k}\) is expressed in its own local coordinate system, so even the anchor representations \(\:{\tilde{X}}_{k}^{\text{a}\text{n}\text{c}}\)are not aligned across institutions. The remedy is to map all intermediate representations into a common coordinate system. Concretely, if we construct a shared coordinate matrix \(\:H\) and linear mapping functions \(\:{g}_{k}\) such that \(\:{g}_{k}\left({\tilde{X}}_{k}^{\text{a}\text{n}\text{c}}\right)\approx\:H\:\)for the anchor data, then each party’s space can be aligned to \(\:H\) and can be integrated. The singular value decomposition (SVD) is employed to derive \(\:H\), following Imakura et al. (2020)28. The details are described below.

The goal is to find linear mapping function \(\:{g}_{k}\) such that the projected anchor datasets satisfy \(\:{g}_{k}\left({\tilde{X}}_{k}^{\text{a}\text{n}\text{c}}\right)\approx\:{g}_{{k}^{{\prime\:}}}\left({\tilde{X}}_{{k}^{{\prime\:}}}^{\text{a}\text{n}\text{c}}\right)\) for \(\:k\ne\:\:k^{\prime\:}\). Assuming that the mapping function \(\:{g}_{k}\) from intermediate representations to collaboration representations is a linear transformation function, we write

where \(\:{G}_{k}\in\:{\mathbb{R}}^{{\tilde{m}}_{k}\times\:\check{m}}\) is the matrix representation of \(\:{g}_{k}\), and \(\:{\tilde{X}}_{k}=[{\tilde{X}}_{k,1},\:{\tilde{X}}_{k,2},\dots\:,{\tilde{X}}_{k,d}]\). These transformations can be determined by solving a least-squares problem:

This problem is difficult to solve directly. However, an approximate solution can be derived using SVD. We compute a low-rank SVD

where \(\:{{\Sigma}}_{1}\in\:{\mathbb{R}}^{\check{m}\times\tilde{m}}\) is a diagonal matrix containing the largest singular values, and \(\:{U}_{1}\) and \(\:{V}_{1}\) are column-orthogonal matrices corresponding to the left and right singular vectors, respectively. We set the shared coordinate matrix as \(\:H={U}_{1}\). Then each transformation matrix \(\:{G}_{k}\) is calculated as follows:

where † denotes the Moore–Penrose pseudoinverse. By construction, the transformed anchor data satisfy

which means that the anchor data from all organizations are aligned in the common collaboration space. Using these transformations, the final collaboration representation \(\:\check{X}\) is given by:

Phase 2: estimation of covariate-balanced survival curves

In the second phase, the analyst performs PSM and survival curve estimation from \(\:\check{X},\:t,\:\delta\:\:\text{a}\text{n}\text{d}\:\text{Z}\). The propensity score on the collaboration representation is defined as

where \(\:{\check{x}}_{i}\) denotes the value of the \(\:i\)-th patient in the collaboration representation \(\:\check{X}\). Using methods such as logistic regression, the analyst can estimate \(\:{\alpha\:}_{i}\) from the aggregated \(\:\check{X}\) and \(\:Z\) obtained during the first phase. This enables the estimation of individual propensity scores without sharing raw private data. Now that the propensity scores are calculated, we can make a dataset by matching samples with similar propensity scores in the treatment and control groups using PSM. From this dataset, we can estimate the survival curves for each group using the Kaplan–Meier estimator16.

Through these steps, our proposed method enables the integration of information from multiple parties and the estimation of treatment effects on survival outcomes while preserving privacy. Although the proposed method is based on the DC-QE algorithm, it differs because the outcome of interest is the survival curve. Consequently, survival time data were included among the shared information, and Kaplan–Meier estimation was employed for treatment effect estimation. The pseudocode for the proposed method is presented in Algorithm 1.

Privacy preservation of proposed method

In this section, we describe how privacy and confidentiality are preserved using the proposed method. Similar to prior works on data collaboration analysis19,29, our approach incorporates two layers of privacy protection to safeguard sensitive data, \(\:{X}_{k,l}\).

-

First Layer: Under this protocol, no participant other than the data owner has access to \(\:{X}_{k,l}\), and transformation function \(\:{f}_{k,l}\) remains private. Because neither the inputs nor the outputs of \(\:{f}_{k,l}\) are shared with the other participants, \(\:{f}_{k,l}\) remains confidential. Consequently, others cannot infer the original data \(\:{X}_{k,l}\) from the shared intermediate representation \(\:{\tilde{X}}_{k,l}\).

-

Second Layer: The second layer of protection stems from the fact that \(\:{f}_{k,l}\) is a dimensionality reduction function. Even if \(\:{f}_{k,l}\) were leaked, the original data \(\:{X}_{k,l}\) could not be reconstructed from \(\:{\tilde{X}}_{k,l}\) because of the dimensionality reduction properties. This privacy guarantee aligns with the concept of \(\:\epsilon\:\)-DR (Dimensionality Reduction) privacy30.

However, it should be noted that some aggregate statistical properties, such as the means or variances of the original data, could potentially leak through the anchor data. This is a potential risk inherent to the data collaboration framework.

Common settings and evaluation scheme

To validate the effectiveness of the proposed method, three benchmark methods are established for comparison.

-

a.

Central Analysis (CA):

In CA, the analyst has access to the entire dataset, including covariates \(\:X\), treatment assignments \(\:Z\), survival times \(\:t\), and event indicators \(\:\delta\:\), and estimates survival curves based on this complete information. As there are no data-sharing constraints, CA represents the ideal baseline.

-

b.

Local Analysis (LA):

In LA, each user independently analyzes their own data without sharing any information externally. Each user possesses only their own dataset—covariates \(\:{X}_{k}\), treatment \(\:{Z}_{k}\), survival times \(\:{t}_{k}\), and event indicators \(\:{\delta\:}_{k}\) —and estimates survival curves solely based on this limited information.

-

c.

Local Matching and Central Analysis (LMCA):

Since survival curve estimation requires \(\:t\), \(\:\delta\:\), and\(\:\:Z\), under the considered setting, it is possible to perform survival curve estimation by sharing only these variables after local matching. Specifically, each user conducts PSM independently using their own \(\:{X}_{k}\) and \(\:{Z}_{k}\), then shares only the matched survival times \(\:{t}_{k}^{\text{m}\text{a}\text{t}\text{c}\text{h}\text{e}\text{d}}\), event indicators \(\:{\delta\:}_{k}^{\text{m}\text{a}\text{t}\text{c}\text{h}\text{e}\text{d}}\), and treatment indicotors \(\:{Z}_{k}^{\text{m}\text{a}\text{t}\text{c}\text{h}\text{e}\text{d}}\) with the analyst, without sharing the original covariates \(\:{X}_{k}\). Thus, privacy is preserved using this method.

In this study, it is desirable that the results of the proposed method are closer to those obtained by CA than to those obtained by LA.

The experimental conditions were as follows: The anchor dataset \(\:{X}^{\text{a}\text{n}\text{c}}\) was generated using random numbers sampled uniformly within the range defined by the minimum and maximum values of the entire dataset. This type of anchor data was also employed in Imakura and Sakurai28. The number of samples in the anchor dataset \(\:r\) was set to be equal to the total number of samples \(\:n\). PCA was applied to each participant as a dimensionality reduction function. PCA is one of the most widely used techniques for reducing dimensionality.

The analyst estimates propensity scores using logistic regression, which is commonly adopted in the literature on propensity scores. Following the approach of Kawamata et al.27, the logistic regression model used in the experiments consisted of a linear combination of the collaboration representation and an intercept term for the proposed method, whereas for central and local analyses, the model consisted of a linear combination of the covariates and an intercept term. Caliper matching was employed for matching, where the caliper width was set to 0.2 times the standard deviation of the logit of the estimated propensity scores, as recommended by Austin31.

The performance of each method was evaluated from two perspectives: covariate balance and the accuracy of survival curve estimation. For covariate balance, we used three metrics: the sample size of the matched pairs created through PSM, the inconsistency of the estimated propensity scores, and the balance of covariates after matching. For survival curve estimation, we compared the accuracy of the estimated survival curves across different methods.

Inconsistency of propensity score with CA

To evaluate the accuracy of the estimated propensity scores, we used the following inconsistency measures:

where \(\:\widehat{\varvec{e}}={\left[{\widehat{e}}_{1},\dots\:,{\widehat{e}}_{n}\right]}^{T}\) denotes the estimated propensity scores, and \(\:{\widehat{\varvec{e}}}^{\text{C}\text{A}}={\left[{\widehat{e}}_{1}^{\text{C}\text{A}},\dots\:,{\widehat{e}}_{n}^{\text{C}\text{A}}\right]}^{T}\) denotes the propensity scores estimated by CA. A smaller value indicates that the estimated propensity scores were closer to those obtained by CA.

Covariate balance

To evaluate covariate balance improvement through PSM, we used the standardized mean difference (SMD), a commonly adopted metric32, defined as:

where \(\:{\bar{x}}_{T}^{j}\) and \(\:{s}_{T}^{j}\) are the mean and standard deviation of covariate \(\:{x}^{j}\) in the treatment group, and \(\:{\bar{x}}_{C}^{j}\) and \(\:{s}_{C}^{j}\)are those in the control group, respectively. For the overall covariate balance assessment, we employed the maximum absolute standardized mean difference (MASMD), defined as:

where \(\:\varvec{d}=[{d}^{1},\dots\:,{d}^{m}]\). Similar to the SMD, the MASMD measures the bias in covariate distributions between the treatment and control groups, with smaller values indicating better covariate balance.

Accuracy of estimated survival curve

To assess how faithfully each distributed or local method reproduces the survival curves obtained by central analysis (CA), we evaluated the closeness between the estimated survival curves and the CA-estimated curves. Here, CA is treated as a realistic benchmark corresponding to conventional centralized propensity score matching analysis. It should be emphasized that CA itself is an estimator, not the true survival function. Therefore, the “gap” metric described later specifically quantifies proximity to CA, while comparison to the true survival function is separately evaluated using metrics based on RMST. Let \(\:{\widehat{S}}_{m,z}\left(t\right)\) denote the estimated survival curve for treatment group \(\:z\in\:\left\{\text{0,1}\right\}\) obtained by method \(\:m\), and let \(\:{\widehat{S}}_{\text{C}\text{A},z}\left(t\right)\) be the corresponding curve from the central analysis.

The gap between the survival curves of method \(\:m\) and CA for group \(\:z\) was defined as:

where\(\:\:{\widehat{S}}_{m,z}\left(t\right)=[{\widehat{S}}_{m,z}\left({t}_{0}\right),\dots\:,{\widehat{S}}_{\text{C}\text{A},\:z}({t}_{T}\left)\right]\). A smaller Gap value indicates that the survival curve estimated by method m approximates the survival curve derived from the central analysis of the corresponding treatment group. In real-world data experiments, this CA-based Gap serves as a direct measure of how well each distributional method reproduces the covariate-balanced survival curve obtained under centralized PSM.

Metrics based on restricted mean survival time (RMST)

RMST provides not only a numerical summary of survival curves but also a clear definition of the effect we aim to estimate: the average survival time up to a clinically relevant time point \(\:\tau\:\) in each treatment group and their difference[34,35]. For a prespecified truncation time \(\:\tau\:>0\), the restricted mean survival time (RMST) for group \(\:z\) under method \(\:m\) is defined as

The corresponding RMST difference for method \(\:m\) is

We use this quantity to define two additional performance measures: (i) the agreement with the central analysis, and (ii) the bias with respect to the true survival functions in the simulation study.

-

1.

Difference of RMST difference relative to the central analysis:

Using the RMST difference obtained by the central propensity score matching analysis (CA) \(\:{\widehat{{\Delta\:}}}_{\text{C}\text{A}}\left(\tau\:\right)\), we quantify how closely each comparison method approximates the estimated results as follows:

$$\:\begin{array}{c}{D}_{m}^{\text{C}\text{A}}\left(\tau\:\right)=\left|{\widehat{{\Delta\:}}}_{m}\left(\tau\:\right)-{\widehat{{\Delta\:}}}_{\text{C}\text{A}}\left(\tau\:\right)\right|.\:\end{array}\:\:$$(17)A value of \(\:{D}_{m}^{\text{C}\text{A}}\left(\tau\:\right)\) close to zero indicates that method \(\:m\) yields RMST differences with covariate balance comparable to that of central analysis. In real world data experiments, since the true survival function is unknown, only comparisons based on this CA are available.

-

2.

Difference of RMST difference relative to the true survival function (simulation study only):

In synthetic data experiments, the true covariate-balanced survival function \(\:{S}_{z}^{\text{t}\text{r}\text{u}\text{e}}\left(t\right)\) can be defined from the data generation process for \(\:z\in\:\left\{\text{0,1}\right\}\) (see the Method Section). The corresponding true RMST and its difference are as follows:

$$\:\begin{array}{c}{\mu\:}_{z}^{\text{t}\text{r}\text{u}\text{e}}\left(\tau\:\right)={\int\:}_{0}^{\tau\:}{S}_{z}^{\text{t}\text{r}\text{u}\text{e}}\left(t\right)\:dt,\:\:{{\Delta\:}}^{\text{t}\text{r}\text{u}\text{e}}\left(\tau\:\right)={\mu\:}_{1}^{\text{t}\text{r}\text{u}\text{e}}\left(\tau\:\right)-{\mu\:}_{0}^{\text{t}\text{r}\text{u}\text{e}}\left(\tau\:\right).\end{array}$$(18)

For each method \(\:m\), we define the difference of RMST difference relative to the true effect as follows:

This value \(\:{D}_{m}^{\text{t}\text{r}\text{u}\text{e}}\left(\tau\:\right)\) quantity directly measures how accurately method \(\:m\) recovers the difference in true RMST suggested by the data generation model.

As with other metrics, we report the mean and standard deviation across repeated trials. A value closer to zero indicates smaller bias and less variability, suggesting the estimator is more stable across simulation datasets.

For all RMST-based metrics, the truncation time \(\:\tau\:\) was determined from the data in both synthetic and real data analyses. Specifically, for each scenario or dataset, the minimum value of the maximum observed time in the treatment and control groups was set as \(\:\tau\:\), using all samples prior to resampling or matching.

All numerical experiments were conducted on a Windows 11 machine equipped with an Intel(R) Core(TM) i7-1255U @ 1.70 GHz processor and 16 GB RAM, using Python 3.8.

Experiment I: synthetic data

Simulation settings

We conducted validation experiments using synthetic data. The synthetic dataset contained 1,000 samples, each with six covariates, simulated baseline covariates, treatment assignments, and event times. Covariates \(\:{\varvec{x}}_{i}=\left[{x}_{i}^{1},\:\dots\:,\:{x}_{i}^{6}\right]\) are generated from a multivariate normal distribution.

where \(\:\mathcal{N}\left(0,\:S\right)\) denotes a normal distribution with mean zero, and the covariance matrix \(\:S\) is given by

The probability that a patient \(\:i\) receives treatment (\(\:{z}_{i}=1\)) is given by:

Event times (survival outcomes) were simulated based on the method proposed in Bender et al.33, using a Weibull distribution. Specifically, survival times were generated according to

where \(\:{\lambda\:}\) is the scale parameter, \(\:v\) is the shape parameter of the Weibull distribution, and \(\:\gamma\:\) represents the marginal treatment effect. In the experiments, we set \(\:\lambda\:=2,\:v=2\) and\(\:\:\gamma\:=-1\). Thus, the survival times depend on both covariates \(\:X\) and the treatment \(\:Z\).

To introduce right censoring, the censoring time was independently generated from an Exponential distribution

In the experiments, we set \(\:{\lambda}_{C}=0.3\). The observed time and event indicator were then defined as

This setup ensures that the treatment assignment depends on covariate \(\:X\) and that the covariates also directly affect survival outcomes. Therefore, the covariates act as confounders when estimating the causal effect of treatment on survival. Without adjusting for these confounders, the estimated survival curves might have been biased.

In addition, for defining the “true” covariate-balanced survival curve and RMST used in the evaluation, we generated potential event time \(\:{T}_{i}^{\left(0\right)}\) and \(\:{T}_{i}^{\left(1\right)}\) by fixing \(\:{Z}_{i}=0\) and \(\:{Z}_{i}=1\) in the above Weibull distribution and computed the marginal survival functions

via Monte Carlo simulation (N = 100,000) over the covariate distribution. The corresponding true RMST and their difference \(\:{{\Delta}}^{\text{t}\text{r}\text{u}\text{e}}\left(\tau\right)\) were obtained by numerical integration of \(\:{S}_{z}^{\text{t}\text{r}\text{u}\text{e}}\left(t\right)\) on \(\:[0,\:\tau\:]\).

How to partition the data

For data distribution, we assumed horizontal and vertical partitioning with \(\:c=2\) institutions and \(\:d=2\) partitions.

The datasets were randomly divided such that each institution had an equal number of samples. Each experiment was repeated 100 times.

In this setting, we compare the results for the following cases: In this simulation dataset, the distribution of covariates does not depend on sample \(\:i\) and the samples are randomly split; to eliminate redundancy in the results, we represent the results of the (1,1)-th user in the individual analysis. Because the same results are expected from left-side and right-side collaboration, as well as from upper-side and lower-side collaboration, left-side collaboration (L-clb), upper-side collaboration (T-clb), and overall collaboration (W-clb) are considered as the three proposed methods. (L-clb, T-clb, and W-clb) are considered as the results of the proposed method (DC-QE), and the left collaboration (L-clb) is considered as the result of LMCA. Here, \(\:{\tilde{m}}_{k,l}=2\) and \(\:\check{m}=3\) for left-side collaboration, and \(\:\check{m}=6\) for upper and overall collaboration.

Experiment II: real-world medical data

We further evaluated the performance of the proposed method using five publicly available medical datasets, as listed in Table 1. The datasets included four survival analysis datasets from the survival package in R and a real-world Right Heart Catheterization (rhc) dataset.

We evaluated the performance of the proposed method by comparing it with LA, CA, and LMCA. Each dataset was horizontally partitioned into \(\:c=3\) users (\(\:d=1\)), and the samples were randomly divided such that each user held approximately the same number of samples. Each experiment was repeated \(\:20\) times.

Results

Experiment I: synthetic data

The results of the CA, LA, LMCA, and the three proposed methods (DC-QE (T-clb, L-clb, and W-clb)) are shown in Table 2. The inconsistency metric quantifies the closeness of the estimated propensity scores to those obtained through CA. Among all the methods, DC-QE (T-clb) achieved the smallest inconsistency at 0.0480, indicating that the estimated propensity scores were extremely close to those from the central analysis. This was followed by DC-QE (W-clb) at 0.0857 and DC-QE (L-clb) at 0.1033. In contrast, LA and LMCA exhibited inconsistency values of approximately 0.17, suggesting that without collaborative techniques, whether through simple local analysis or sharing only matched data, the estimated propensity scores deviated substantially from those obtained via central analysis. The particularly low inconsistencies observed for DC-QE (T-clb) and DC-QE (W-clb) indicate that the proposed method effectively approximates the central analysis by leveraging additional covariate information.

Regarding the covariate balance, as measured using the MASMD metric, CA achieved the lowest value of 0.1211, reflecting the best covariate balance. LA and LMCA exhibited larger MASMD values (0.6820 and 0.6720, respectively), implying that when propensity score estimation and matching were performed independently for each user, covariate balance was not sufficiently achieved. In contrast, the proposed method (DC-QE) consistently achieved smaller MASMD values than LA across all collaboration settings, demonstrating that even in a distributed environment, our method can achieve a covariate balance closer to that of centralized analysis.

The proximity of the estimated survival curve and the CA curve were evaluated for both the treatment group and the control group using the Gap metric. DC-QE (W-clb) achieved the smallest gap in both the treatment and control groups (0.0259 and 0.0275, respectively), followed by DC-QE (L-clb) and DC-QE (T-clb), both of which significantly outperformed LA and LMCA. This demonstrates that the collaborative approach can faithfully reproduce Kaplan-Meier curves obtained from centralized PSM. These results suggest that DC-QE (W-clb) benefits from both an increased number of samples through sample-level collaboration and enhanced covariate information through covariate-level collaboration, resulting in survival curve estimates that closely approximate those of a centralized analysis.

Furthermore, we evaluated how well each method reproduces the RMST difference estimated by CA using the difference of RMST difference relative to CA. Among the distributed methods, DC-QE (W-clb) showed the smallest deviation from the CA (mean 0.0425), while DC-QE (T-clb) and DC-QE (L-clb) achieved very similar values (approximately 0.05). In contrast, LA and LMCA exhibited much larger errors (approximately 0.10–0.11). These results confirm that the proposed DC-QE framework can recover the covariate-balanced estimand from CA with substantially smaller errors than other local methods.

In synthetic data environments, the data generation process defines the true covariate-adjusted RMST difference. Therefore, we calculated the difference of RMST difference relative to the true survival function. Since PSM was performed on finite samples, the estimated CA still exhibited bias (mean 0.0690) relative to the true value. However, all DC-QE variants reduced bias compared to LA and LMCA. Among distributed methods, DC-QE (W-clb) achieved the smallest bias (0.0854), followed closely by DC-QE (T-clb) (0.0886). In contrast, DC-QE (L-clb) exhibited slightly larger bias (0.0998). LA and LMCA exhibited even larger biases (approximately 0.16).

Experiment II: real-world medical data

We further evaluated the performance of the proposed method using five publicly available medical datasets. Table 3 summarizes the mean and standard deviation of the four evaluation metrics for each dataset (colon, lung, pbc, veteran, and rhc). Across all datasets, the proposed method (DC-QE) consistently demonstrated superior or at least comparable performance relative to LA and LMCA in terms of inconsistency metric, MASMD, and Gap metric. These results suggest that DC-QE can achieve an accuracy close to that of the CA even under distributed data settings.

Specifically, compared to LA, DC-QE achieved substantially smaller MASMD values and inconsistency levels that were close to those of CA. Moreover, for the Gap metric, which measures survival curve estimation accuracy, DC-QE consistently outperformed LA, particularly for the control group (Gap(C)), where the estimated survival curves were often much closer to those obtained by central analysis.

When compared with LMCA, DC-QE generally achieved smaller inconsistencies and Gap values. However, for MASMD, no consistent pattern was observed; in some datasets, LMCA exhibited smaller MASMD values than DC-QE. This indicates that while DC-QE outperforms LMCA in terms of treatment effect estimation accuracy and survival curve estimation, the advantage of covariate balance is dataset-dependent.

Since the true survival function is unknown in the real datasets, we focused on the difference of RMST difference relative to CA. \(\:{D}^{\text{C}\text{A}}\) varies across datasets, likely reflecting differences in sample size, observation period, and confounding strength specific to each real dataset. For multiple datasets (lung, veterans, rhc), DC-QE showed the smallest bias in \(\:{D}^{\text{C}\text{A}}\). In the other two data sets (colon and pbc), LMCA showed a slightly smaller deviation than DC-QE, but DC-QE still significantly improved LA.

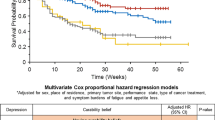

Figure 2 shows the survival curves estimated using each method. Overall, the proposed method (DC-QE) yields survival curves that are visually closer to those obtained by the CA than to those obtained by LA and LMCA. This tendency was particularly evident in datasets with relatively small sample sizes, such as the lung, pbc, and veteran datasets. In these datasets, although LA and LMCA show noticeable deviations from the survival curves obtained using CA, the proposed method can approximate the central analysis survival curves with higher accuracy.

Survival curves in Experiment II. (a) to (e) plot the results of survival curves for each dataset. The X-axis represents time, and the Y-axis represents survival probability. In the legend, solid lines represent the results of the treatment group, and dashed lines represent the results of the control group for each comparison method.

Discussion

In this study, we propose a novel method for estimating covariate-balanced survival curve in distributed data environments while preserving privacy by extending the DC-QE to survival analysis. In synthetic data experiments, the proposed method significantly improved covariate balance and inconsistency metrics compared to LA and LMCA. DC-QE (W-clb) estimated survival curves closer to the centralized analysis with larger sample sizes. The proposed method most faithfully reproduced both the RMST difference obtained under centralized PSM and the true RMST difference defined in the data generation process. In contrast, LA and LMCA exhibited much larger deviations. Furthermore, experiments using open datasets confirmed the effectiveness of the proposed method across multiple datasets with varying sample sizes and numbers of covariates. The proposed method generated survival curves most similar to the central analysis results. While the difference of RMST difference relative to CA fluctuated across datasets and was occasionally slightly smaller for LMCA, DC-QE simultaneously improved covariate balance and survival curve proximity, achieving better performance than LA.

Unlike simply applying parametric or semiparametric models, such as the Cox model, to distributed environments, the survival analysis approach proposed in this study uniquely combines PSM with the Kaplan–Meier estimator. Nonparametric methods have the advantage of flexibly estimating survival curves based on observed data without requiring prior assumptions about the form of survival time distribution. This flexibility allows them to mitigate bias more effectively when confounders have complex effects. Thus, even in observational studies in which confounding structures are complicated or the number of covariates is large, the proposed method offers the important advantage of accurately estimating survival curves without being constrained by strong model assumptions.

Because DC-QE estimates propensity scores through an integrated framework, even in distributed environments, it facilitates an improved covariate balance between the treatment and control groups. Moreover, although the range of covariate information available for matching may vary depending on the data distribution setting, the proposed method leverages the anchor data along with dimensionality reduction and reconstruction mechanisms, effectively enabling the use of a broader range of covariate information. Consequently, even when individual institutions cannot secure enough samples or covariates on their own, reliable estimation can be achieved by performing matching based on globally integrated information.

We adopted PSM as a covariate balance adjustment method. However, other adjustment techniques based on propensity scores are also available, such as inverse probability weighting and stratification. A major advantage of DC-QE is its flexibility; once the propensity scores are estimated, the framework can be extended to incorporate alternative adjustment methods, such as weighting or stratification, within the context of survival analysis. In practice, it may be beneficial to consider methods other than matching, depending on the characteristics of the survival data. Combining DC-QE with more robust covariate adjustment techniques can further enhance its performance and broaden its applicability.

Several limitations must also be acknowledged. First, even centralized PSM analysis (CA) in simulations retains non-negligible bias relative to the true RMST-based estimand, meaning that all comparative methods—including our distributed approach—are evaluated against the Kaplan–Meier estimator under finite sample size and right censoring, a “realistic but imperfect benchmark.” However, since CA, which aggregates all individual-level data to perform PSM, represents the most natural practical analysis procedure and can be considered the gold standard that distributed analysis should approximate, this study adopted CA as the primary comparison method. From this perspective, it is more important to assess how well DC-QE reproduces the same estimand as CA and suppresses additional errors arising from data partitioning; as a supplementary check, the simulation also reports the difference of RMST difference relative to the true survival function. Second, RMST-related metrics in real data are influenced by differences in observation design across datasets, such as follow-up duration and censoring rates. This reflects the fact that RMST captures the explicit quantity “mean survival time up to time \(\:\tau\:\),” and the reliability with which this quantity can be estimated naturally varies across datasets. Therefore, in this study we interpret \(\:{D}^{\text{C}\text{A}}\left(\tau\:\right)\) and \(\:{D}^{\text{t}\text{r}\text{u}\text{e}}\left(\tau\:\right)\) not as standalone performance metrics, but as one component within a multifaceted evaluation alongside MASMD, survival curve gap, and discrepancy from CA.

Future research directions include extending the framework to handle cases where the treatment variable \(\:Z\) takes multiple values or where time-dependent covariates are involved, and integrating the framework with existing techniques such as differential privacy to establish stronger privacy guarantees. In the current implementation, propensity scores are estimated using logistic regression. Incorporating more flexible machine learning-based propensity score estimation methods could further enhance the method’s ability to handle nonlinear confounding structures.

Conclusion

In conclusion, the proposed method, which extends DC-QE to survival analysis, is shown to significantly improve confounder adjustment compared to LA and achieve covariate-balanced KM curve estimates that closely approximate those obtained by central analysis. Our approach enables a more accurate estimation even in situations where data sharing between facilities or institutions is challenging, making it highly applicable to medical and public health research, which requires balancing privacy preservation with analytical accuracy. In future work, we plan to enhance the practical utility of this method by applying and extending it to more diverse environments and analytical objectives, such as real-world clinical settings and regional public health data.

Data availability

All data used in this study are either reproducible synthetic data or publicly available data. Synthetic data can be generated using the methods and parameter settings described in the Methods section. The real-world benchmark datasets—lung, veteran, and pbc from the survival package and rhc from the Hmisc package—are all open access and can be obtained from R packages. The datasets used and analysed during the current study are available from the corresponding author on reasonable request.

References

Uchida, S. et al. Targeting uric acid and the inhibition of progression to end-stage renal disease—A propensity score analysis. PLoS ONE. 10, e0145506 (2015).

Kawachi, K., Kataoka, H., Manabe, S., Mochizuki, T. & Nitta, K. Low HDL cholesterol as a predictor of chronic kidney disease progression: a cross-classification approach and matched cohort analysis. Heart Vessels. 34, 1440–1455 (2019).

Rosenbaum, P. R. & Rubin, D. B. The central role of the propensity score in observational studies for causal effects. Biometrika 70, 41–55 (1983).

Granger, E., Watkins, T., Sergeant, J. C. & Lunt, M. A review of the use of propensity score diagnostics in papers published in high-ranking medical journals. BMC Med. Res. Methodol. 20, 132 (2020).

Austin, P. C. & Schuster, T. The performance of different propensity score methods for estimating absolute effects of treatments on survival outcomes: A simulation study. Stat. Methods Med. Res. 25, 2214–2237 (2016).

Austin, P. C., Thomas, N. & Rubin, D. B. Covariate-adjusted survival analyses in propensity-score matched samples: imputing potential time-to-event outcomes. Stat. Methods Med. Res. 29, 728–751 (2020).

Cenzer, I., Boscardin, W. J. & Berger, K. Performance of matching methods in studies of rare diseases: a simulation study. Intractable Rare Dis. Res. 9, 79–88 (2020).

Pirracchio, R., Resche-Rigon, M. & Chevret, S. Evaluation of the propensity score methods for estimating marginal odds ratios in case of small sample size. BMC Med. Res. Methodol. 12, 70 (2012).

Austin, P. C. An introduction to propensity score methods for reducing the effects of confounding in observational studies. Multivar. Behav. Res. 46, 399–424 (2011).

Li, Z., Roberts, K., Jiang, X. & Long, Q. Distributed learning from multiple EHR databases: contextual embedding models for medical events. J. Biomed. Inf. 92, 103138 (2019).

Huang, Y., Jiang, X., Gabriel, R. A. & Ohno-Machado, L. Calibrating predictive model estimates in a distributed network of patient data. J. Biomed. Inf. 117, 103758 (2021).

Dayan, I. et al. Federated learning for predicting clinical outcomes in patients with COVID-19. Nat. Med. 27, 1735–1743 (2021).

Uchitachimoto, G. et al. Data collaboration analysis in predicting diabetes from a small amount of health checkup data. Sci. Rep. 13, 11820 (2023).

Nakayama, T. et al. Data collaboration for causal inference from limited medical testing and medication data. Sci. Rep. 15, 9827 (2025).

Cox, D. R. Regression models and life-tables. J. R Stat. Soc. Ser. B Methodol. 34, 187–202 (1972).

Kaplan, E. L. & Meier, P. Nonparametric estimation from incomplete observations. J. Am. Stat. Assoc. 53, 457–481 (1958).

Lu, C. L. et al. WebDISCO: a web service for distributed Cox model learning without patient-level data sharing. J. Am. Med. Inf. Assoc. 22, 1212–1219 (2015).

Späth, J. et al. Privacy-aware multi-institutional time-to-event studies. PLOS Digit. Health. 1, e0000101 (2022).

Imakura, A., Tsunoda, R., Kagawa, R., Yamagata, K. & Sakurai, T. DC-COX: data collaboration Cox proportional hazards model for privacy-preserving survival analysis on multiple parties. J. Biomed. Inf. 137, 104264 (2023).

Yoshida, K., Gruber, S., Fireman, B. H. & Toh, S. Comparison of privacy-protecting analytic and data‐sharing methods: A simulation study. Pharmacoepidemiol Drug Saf. 27, 1034–1041 (2018).

Huang, C., Wei, K., Wang, C., Yu, Y. & Qin, G. Covariate balance-related propensity score weighting in estimating overall hazard ratio with distributed survival data. BMC Med. Res. Methodol. 23, 233 (2023).

Denz, R., Klaaßen-Mielke, R. & Timmesfeld, N. A comparison of different methods to adjust survival curves for confounders. Stat. Med. 42, 1461–1479 (2023).

Armstrong, K., FitzGerald, G., Schwartz, J. S. & Ubel, P. A. Using survival curve comparisons to inform patient decision making: can a practice exercise improve understanding? J. Gen. Intern. Med. 16, 482–485 (2001).

Dey, T., Mukherjee, A. & Chakraborty, S. A. Practical overview and reporting strategies for statistical analysis of survival studies. Chest 158, S39–S48 (2020).

Bonomi, L., Jiang, X. & Ohno-Machado, L. Protecting patient privacy in survival analyses. J. Am. Med. Inf. Assoc. 27, 366–375 (2020).

Bonomi, L., Lionts, M. & Fan, L. Private continuous survival analysis with distributed multi-site data. In IEEE International Conference on Big Data (BigData) 5444–5453. https://doi.org/10.1109/BigData59044.2023.10386571 (IEEE, 2023).

Kawamata, Y., Motai, R., Okada, Y., Imakura, A. & Sakurai, T. Collaborative causal inference on distributed data. Expert Syst. Appl. 244, 123024 (2024).

Imakura, A. & Sakurai, T. Data collaboration analysis framework using centralization of individual intermediate representations for distributed data sets. ASCE-ASME J. Risk Uncertain. Eng. Syst. Part. Civ. Eng. 6, 04020018 (2020).

Imakura, A., Inaba, H., Okada, Y. & Sakurai, T. Interpretable collaborative data analysis on distributed data. Expert Syst. Appl. 177, 114891 (2021).

Nguyen, H., Zhuang, D., Wu, P. Y. & Chang, M. AutoGAN-based dimension reduction for privacy preservation. Neurocomputing 384, 94–103 (2020).

Austin, P. C. Optimal caliper widths for propensity-score matching when estimating differences in means and differences in proportions in observational studies. Pharm. Stat. 10, 150–161 (2011).

Austin, P. C. & Stuart, E. A. Moving towards best practice when using inverse probability of treatment weighting (IPTW) using the propensity score to estimate causal treatment effects in observational studies. Stat. Med. 34, 3661–3679 (2015).

Bender, R., Augustin, T. & Blettner, M. Generating survival times to simulate Cox proportional hazards models. Stat. Med. 24, 1713–1723 (2005).

Royston, P. & Parmar, M. K. Restricted mean survival time: an alternative to the hazard ratio for the design and analysis of randomized trials with a time-to-event outcome. BMC Med. Res. Methodol. 13, 152 (2013).

Uno, H. et al. Moving Beyond the Hazard Ratio in Quantifying the Between-Group Difference in Survival Analysis. J. Clin. Oncol. 32, 2380–2385 (2014).

Acknowledgements

The authors would like to thank Editage (http://www.editage.jp) for English-language editing.

Funding

This work was supported in part by the Japan Society for the Promotion of Science (JSPS), Japan Grants-in-Aid for Scientific Research (Nos. JP22K19767, JP23K22166, JP23K28192), Japan Science and Technology Agency (JST) (No. JPMJPF2017) and JST SPRING (No. JPMJSP2124).

Author information

Authors and Affiliations

Contributions

A. T., Y. K., T. N., and Y. O. designed the study. A. T. conducted simulation experiments and data analysis. A. T. and Y. K. wrote the manuscript. Y. K., A. I., and T. S. constructed a DC analysis model tailored to the current problem set and interpreted the results mathematically. All the authors have read and approved the final version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Toyoda, A., Kawamata, Y., Nakayama, T. et al. Estimating covariate-balanced survival curve in distributed data environment using data collaboration quasi-experiment. Sci Rep 16, 3368 (2026). https://doi.org/10.1038/s41598-025-33245-7

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-33245-7