Abstract

Anti-patterns are explicit structures in the design that represents a significant violation of software design principles and negatively impacts the software design quality. The presence of these Anti-patterns highly influences the maintainability and perception of software systems. Thus it becomes necessary to predict anti-patterns at the early stage and refactor them to improve the software quality in terms of execution cost, maintenance cost, and memory consumption. In the anti-pattern prediction domain, during research analysis, it was realized that there had been very little work instigated on addressing both class imbalance and feature redundancy problems jointly to enhance models’ performance and prediction accuracy. It has been perceived in the literature survey to study droughts with a comprehensive comparative analysis of different sampling and feature selection strategies. To achieve greater precision results and performance, this research constructs a web service anti-pattern prediction model over preprocessed software source code metrics using sampling and feature selection techniques to handle imbalanced data and feature redundancy to gain flawless web service anti-pattern prediction outcomes. Considering the above erudition, we have applied different variants of aggregation measures to find the metrics at the system level. These extracted metrics are used as input, so we have also applied different variants of feature selection techniques to remove irrelevant features and select the best combination of features. After finding important features, we have also applied different variants of data sampling techniques to overcome the problem of class imbalance. Finally, we have used thirty-three different classifiers to find import patterns that help identify anti-patterns. These all techniques are compared using Accuracy and Area Under the ROC (receiver operating characteristic curve) Curve (AUC). The experimental result of web service anti-pattern prediction models validated on 226 WSDL files illustrates that the least square support vector machine (LSSVM) with RBF kernel attains the best performance among the other 33 competing classifiers employed with the lowest Friedman mean rank value of 1.18. During comparative analysis over different feature subset selection techniques, the outcome indicates the mean accuracy value of 88.40% and mean AUC value of 0.88 for the models developed using significant features are higher in comparison to other techniques. The result shows the up-sampling methods (UPSAM) method secured the highest mean accuracy % and mean AUC with values of 86.14% and 0.87, respectively. The experimental result indicates the performance of the web service anti-pattern prediction models is adversely impacted by class imbalance and irrelevance of features. The outcome demonstrates that the performance of trained models improved with an AUC value between 0.805 to 0.99 post-application of sampling and feature selection strategies without using feature selection and sampling techniques. The outcome implies that USMAP achieves better performance. The result demonstrates that the models developed using significant features drive the desired effect compared to other implemented feature selection techniques.

Similar content being viewed by others

Introduction

System autonomy, heterogeneity, and context adaptability are critical in the software business, leading to the development of web services based on service-oriented architecture (SOA). For successful businesses and contemporary governments, SOA is the progression of distributed computing toward integrating expert departments and IT. Services may be accessed via the internet using the web service implementation of SOA, which is agnostic of the platform and programming language. SOA is generally regarded in IT systems as the technology that can improve the receptivity of both business and IT organizations since it is self-adaptable to context. Web services may be built in various languages and on various platforms, allowing them to be used on a wide range of devices.

Modeling Service-Based Systems (SBSs) like Paytm, DropBox and Amazon are made feasible by SOA, and the growth of these systems causes many challenges. As new devices and technologies are introduced, SBSs must evolve to keep up with the demands of their users. Like any other big and complicated system, SBSs are prone to ongoing modification to accommodate new user needs and modify the execution circumstances. It’s also possible that all of these modifications may decrease SBS’ Quality of Service (QoS) and result in a retro design, which has been given the name of “Anti-patterns”1. Structures like these imply a breach of fundamental design principles and a decrease in design quality. Because they make it challenging to improve and maintain a software system, anti-patterns are helpful for spotting issues with its design, source code, or overall project management. Therefore, it has become compulsory to develop prediction models that help to detect anti-patterns present in web services. Software quality researchers have used simple models to predict different types of anti-patterns based on source code metrics that help improve the software quality in terms of execution cost, maintenance cost, and memory consumption. Empirical experiments have been carried out in the past related to web service anti-pattern predictions (Travassos et al.2, Marinescu et al.3, Munro et al.4, Ciupke et al.5 Simon et al.6, Rao et al.7, Khomh et al.8, Moha et al.9). Though these research works have raised the need to develop perdition models, it was realized that there had been very little work instigated on addressing both class imbalance and feature redundancy problems jointly to enhance models’ performance and prediction accuracy. It has been perceived in the above work to study droughts with a comprehensive comparative analysis of different sampling and feature selection strategies.

In this work, we investigate the predictive power of different aggregation measures which are used for finding file-level metrics, feature selection techniques that are used for selecting significant features, data sampling techniques that are used for handling the class imbalance nature of datasets, and different variants of machine learning for finding the pattern. Here, our focus is on how accurately these techniques help to predict anti-patterns present in web services. Initially, we selected 226 different web-service as WSDL from various domains such as finance, tourism, health, education, etc. Then we applied the WSDL2Java tool to each WSDL file to extract the java files. After extracting the java files, we have used CKJM10 tool proposed by Chidamber and Kemerer to find metrics at the class level. Since our objective is to find the anti-pattern present in the WSDL file, so we have applied different variants of aggregation measures to find metrics at the system level. After computing metrics at the system level, we have also applied feature selection techniques to find the significant set of features, which are later used as input for the anti-pattern prediction models. We also observed that the considered data have imbalanced nature of classes. Henceforth, to handle the class imbalance problem and its impact on the prediction accuracy of the models, we have also used five data sampling techniques. We compare the performance of the models generated using this sampling technique with the model developed using the original data (ORGD).

Finally, we have applied different categories of machine learning techniques to find import patterns that help to identify anti-patterns present in unseen WSDL files. Initially, we have applied the most frequently used classifiers like different variants of Naive Bayes (Bernoulli, Gaussian, Multinomial), decision trees, logistic regression, support vector machines with different kernels, and artificial neural networks with different back-propagation algorithms. Different researcher mainly uses these types of classifiers to predict software quality parameters. Then, advanced levels of classifiers like least square support vector machines with multiple kernels and extreme and weighted extreme learning machines with multiple kernels have been used to find better sets of patterns for anti-pattern predictions. Finally, we have used ensemble learning and deep-learning approaches to find the best patterns for anti-pattern predictions. The predictive power of these techniques is evaluated in terms of accuracy and AUC values and validated with 5-fold cross-validation approaches on 226 different web-service. In order to find the significant impact of the techniques, we have used Wilcoxon Signed Rank Test (WSRT) with Friedman mean rank (FMR).

The major contributions of this research work are:

-

Proposed a framework to predict web service anti-patterns based on extracted java files of WSDL.

-

Proposed a framework using the aggregation measures concept to extract file-level metrics from class-level metrics.

-

Usage of different sampling approaches to counter the class imbalance problem.

-

Usage of different feature selection techniques to remove irrelevant features and set the right sets of features.

-

thirty-three different classifiers are considered to develop a model to identify the files with anti-patterns.

-

Various statistical tests were conducted to determine the effectiveness of the proposed anti-pattern detection model.

The paper is organized as follows: Section 2 provides the summary of related work in the field of software fault prediction. Section 3 explains the used methodologies in our experimentation. The research framework, result analysis, and model performance is presented in Sections 4 and 5. Section 6 covers the comparative analysis. The final results discussion and conclusion work are depicted in Sections 7 and 8 respectively.

Related work

There is a good number of existing methods proposed by various researchers to predict anti-patterns or code smells present in object-oriented software. A manual procedure to identify anti-pattern or design smells is proposed by Travassos et al.2. They have used manual reviews and reading techniques types of concepts to find the smells that do not meet the specification. A similar kind of work is also proposed by Marinescu et al.3 to predict the design smell present in software systems based on extracted metrics from the source code of the software system. They have executed their proposed work on the IPLASMA tool with the help of some detection techniques to find the pattern that helps to identify smells in a software system. They have applied ten detection techniques to predict anti-patterns or code smells. The major limitations of their approach are that extensive knowledge of metric-based rules is required to detect an anti-pattern successfully, and the varied threshold values lead to a varied outcome. Munro and his team4 also proposed one new method with the objective to overcome the limitations of text-based descriptions for predicting systematically characterized code smells. They have applied metric-based heuristics concepts to detect anti-patterns.

Ciupke et al.5 presented a method to study legacy code by specifying design problems as queries. Their approach is based on extracting the occurrences of the problems using models designed using extracted metrics from the source code of software systems. Simon and his team6 proposed methods based on visualization concepts to find the correlation between fully automated approaches, which are productive, systematic, and time-consuming. The major advantage of their strategies is there is no need for effective manual inspections.

Rao et al.7 introduced a method to propose anti-patterns based on the Design Propagation Probability concept to design the models that will treat like detection techniques. Based on the design Propagation Probability concept, they have focused on two anti-patterns, such as Divergent change and Shotgun surgery. Similarly, Khomh and his team8 presented the method with the help of anti-pattern definition, Goal Question Metric(GQM), and Bayesian Detection Expert(BDTEX) to develop Bayesian Belief Networks(BBN). The BBN method allows quality analysts to use their prior probability to predict anti-patterns.

Moha et al.9 proposed an automated method to predict different types of anti-patterns like Spaghetti Code, Functional Decomposition, Blob, and Swiss Army Knife. Their proposed methods also help to identify 15 underlying code smells. They gave the DECOR name of their proposed methods containing all the necessary steps used to specify and detect code and design smells. Their team also proposed another detection method called DETEX9 which helped to provide a platform to convert the rules extracted from the DECOR method into detection algorithms. They have clearly explained the correlation between the metrics extracted from code with different categories of anti-patterns.

Hemanta Kumar Bhuyan and Vinayakumar Ravi presented the importance of feature selection techniques in data mining applications11. They have proposed the optimization model using a Lagrangian multiplier to find and analyze a new class. They have used several classifiers with searching and statistical methods to validate the proposed subfeatures. Their finding confirms that their proposed methods benefit novel classes based on selected subfeature data. Hemanta Kumar Bhuyan and Narendra Kumar Kamila also provide the content related to the importnace of the feature selection techniques in data mining applications12. The have used fuzzy probabilities to proposed privacy preservation of individual data for both feature and sub-feature selection. They conclused that the fuzzy random variable approach confined the expected range on which the selection of sub-feature from feature database is made easy. Similar work is also done by Hemanta Kumar Bhuyan et al. to find the importance of feature selection during model development13. They proposed methods to choose the optimal feature for classification by utilizing mutual information (MI) and linear correlation coefficients (LCC). Their proposed methods offers the best selection on the same data set as compared to others.

Motivation

Based on the above survey, profound research has been conducted in the area of web service anti-pattern prediction models using machine learning approaches. However, further analysis indicates there is very little investment seen in converting file-level metrics using class-level, handling class imbalance of datasets, removing irrelevant features, and comparing wide varieties of machine learning techniques. As a result, there is a need for in-depth research to evaluate the performance of anti-pattern prediction models by combining aggregation, feature selection, and sampling techniques. This point is our primary motivation for our present work. It leads us to endow our focus on implementing the proposed model to address the substantial gap identified to extemporize the performance and predictability of the anti-pattern prediction model by engaging aggregation, sampling, and feature selection techniques jointly with a wide variety of machine learning techniques. This research work exploits the implication of sixteen aggregation measures, seven feature selection techniques, five sampling strategies, and thirty-three different classifiers to develop the best web service anti-pattern prediction models. The performance of these developed models is analyzed using AUC and Accuracy metrics. This leads to the contextual following research questions (RQ):

-

RQ 1:

Can web-service anti-patter prediction models be developed using source code metrics and machine learning?

-

RQ 2:

What is the significant impact of considering reduced sets of features as input on the performance of models?

-

RQ 3:

What is the significant impact of sampling techniques on the predictability of anti-pattern prediction models?

-

RQ 4:

What effect do different classifiers have on predicting anti-patterns using source code metrics?

Methodologies

This section enlightens on the components required for our study. We are providing information on datasets, feature selection techniques, sampling strategies, and classification approaches.

Data collection

We have prepared the datasets in this experiment to validate our proposed anti-pattern prediction model framework. Figure 1 shows the working procedure to prepare datasets. Initially, we applied the WSDL2Java tool on the WSDL file to extract the java files. These extracted java files are used as an input of CKJM10 tool to find object-oriented metrics as mentioned in Table 1 at the class level. CKJM takes java files as an input and computes metrics at the class level, but we need metrics at the system level because, in the experiment, we predict the anti-pattern at the WSDL level. To achieve this, we have applied aggregation measures to find metrics at the system level. Vasilescu et al.14 suggested using multiple aggregation measures to find metrics at the higher level without losing information. They have empirically proved that the use of a single aggregation measure creates a data loss problem. So, in this work, we have applied 16 aggregation measures as mentioned in Table 2 on class-level metrics to find metrics at the system level.

Experimental dataset

This experiment makes use of publicly available web-services datasets consisting of 226 WSDL files shared by Ouni et al. on GitHub https://github.com/ouniali/WSantipatterns. Table 3 shows the a detailed description of the considered datasets in terms of different types of anti-patterns. The first column of the table contains the name of anti-patterns like Fine-Grained anti-pattern (FGWS), Chatty anti-pattern (CSW), God Object anti-pattern (GOWS), Data ant-pattern (DWS), Ambiguous Anti-pattern (AWS). The second column contains the number web-service not having these patterns, the third column contains the number web-service having these patterns, and the last column contains the percentage of web-service having these patterns. From Table 3, we can say that the 13 web-service has FGWS anti-pattern with 5.75 %.

Data balancing techniques

The information in Table 3 confirms that the considered datasets have no equal distribution of anti-patterns, i.e., only 9.29% of WSDL files have CSW type of anti-pattern. This information confirms that the considered datasets have a class imbalance problem. So, we have applied five data sampling techniques as Adaptive Synthetic Sampling Technique (ADASYN), Synthetic Minority Oversampling Technique (SMOTE), SVMSMOTE, Borderline SMOTE (BLSMOTE), and UP sampling Technique (UPSAM), to generate balanced data. The predictive ability of these techniques is also compared using the model trained on original data to find the impact of using sampling techniques.

-

SMOTE15: The concept of SMOTE is based on nearest neighbors. It will generate minority class instances.

-

Borderline smote (BLSMOTE)16: BLSMOTE creates new instances of the minority class utilizing the closest neighbors of these cases in the border region between classes.

-

SVM-SMOTE (SVMSMOTE)17: SVMSMOTE generates new minority class samples over the border with SVM to establish a boundary line between the classes using SVM18.

-

Adaptive synthetic sampling technique (ADASYN)19: ADASYN is built on the notion of adaptively producing minority data samples depending on their distributions. More synthetic data is created for minority-class samples that are more difficult to learn than for minority-class samples that are simpler to understand. This strategy helps to lessen the learning bias imposed by the initial unbalanced data distribution. Still, it may also adaptively move the decision boundary to concentrate on samples that are harder to learn, which is very useful when dealing with large datasets. The most significant distinction between SMOTE and ADASYN is how synthetic sample points for minority data points are generated in each system20. In ADASYN, we consider a density distribution \(r_x\), which determines the number of synthetic samples to create for a given point, while in SMOTE, all minority points have the same weight.

-

Upsampling (UPSAM) technique: Upsampling is the technique in which the instances from the minority class are randomly duplicated21.

Selection of relevant metrics

In the process of Knowledge Data Discovery (KDD), Feature Selection (FS) is a vital part of the pre-processing step. Some of the numerous names given to Feature Selection Algorithms include Attribute Selection, Instance Selection, Data Selection, Feature Construction, Variable Selection, and Feature Extraction, to mention just a few. They are primarily used to remove unnecessary and redundant material. Feature selection methods22 enhance the quality of data and boost data mining algorithms’ accuracy by minimizing the data’s complexity in terms of space and time. Eliminating duplicate and irrelevant data is the primary goal of feature selection. Several feature selection methods have been released in the last decade; however, the vast majority of them do not perform well on high-dimensional datasets with a significant number of duplicated features. As a result, feature selection is more critical in eliminating irrelevant features2324. As a result, machine learning algorithms can concentrate on the features required to build a classification model. Two subclasses of feature selection techniques can be generally distinguished:

-

Metrics selection using feature ranking techniques: In this technique, each feature is ranked according to a few key criteria before some features that are appropriate for a particular project are chosen.

-

Metrics selection using feature subset selection techniques: In feature subset selection, our objective is to find a subset of features that have strong predictive power

Metrics selection using feature ranking techniques

-

Selection of significant features(SIGF) Initially, we applied hypothesis testing to each metric to find “whether the metric can differentiate the WSDL file having anti-pattern or not”25. So, In this experiment, we have applied the Wilcoxon signed-rank test at a 0.05 level to find the difference between the metric values for a file having an anti-pattern and not having an anti-pattern. This test is mainly used to find whether two dependent samples are significantly the same or different.

-

Features ranking using information gain (INFG) An attribute ranking approach that’s both simple and quick is widely employed in text classification applications when the sheer volume of data makes it impossible to utilize more complicated methods26. If P is an attribute and Q is a class, then eq. 1 and 2 provide the values for the entropy of the class before and after the attribute is observed:

$$\begin{aligned} H(Q)= & -\sum _{q\epsilon Q } p(q)\log _2p(q) \end{aligned}$$(1)$$\begin{aligned} H(Q|P)= & -\sum _{p\epsilon P } p(p)\sum _{q\epsilon Q} p(q|p)\log _2p(q|p) \end{aligned}$$(2)When the entropy of a class lowers by a certain level, it indicates how much new information about that class has been supplied by the attribute, which is referred to as information gain.

Based on the information gain value between the class and each \(P_i\), a score is awarded to each \(P_i\):

$$\begin{aligned} \begin{aligned} IG_i&=H(Q)-H(Q|P_i) =H(P_i)-H(P_i|Q) \\&=H(P_i)+H(Q)-H(P_i,Q) \end{aligned} \end{aligned}$$(3) -

Features ranking using gain ratio (GNR) The gain ratio is a modification of the information gain that decreases the bias of the information gain. When picking an attribute, the gain ratio considers the number and size of branches27. When the intrinsic information is taken into account, it corrects the information gained. Intrinsic information is the entropy of instance distribution into branches, i.e., how much information is required to determine which branch an instance belongs to. The value of an attribute decreases as the number of intrinsic information increases.

$$\begin{aligned} \text {GNR}=\frac{\text {Gain of attribute}}{\text {intrisinc information of attribute}} \end{aligned}$$(4) -

Features ranking using OneR attribute evaluation (OneR) OneR, short for “One Rule,” is a straightforward but accurate classification algorithm that generates one rule for each predictor in the data and then selects the rule; with the slightest total error as its “one rule.” A rule for a predictor is created by creating a frequency table for each predictor and comparing it to the objective (the target)28. Compared to state-of-the-art classification algorithms, it has been shown that OneR creates rules that are only marginally less accurate while also making straightforward rules for people to comprehend.

-

Features extraction using principal component analysis (PCA) Principle Component Analysis(PCA)29 is applied to find the new values of features with high variance. The concept is based on removing highly correlated features and finding new sets of feature values. Here, we have applied PCA with the varimax rotation technique on extracted sets of file-level source code metrics. In this work, we have considered all the Principle Components whose eigenvalue is greater than 1.

Metrics selection using feature subset selection techniques

-

Selection of features using correlation coefficient (CORR) Correlation Coefficient feature selection is used to remove the features having high co-relation with other features. In this paper, we have used the concept of Pearson’s correlation to find the pair of features having highly correlated or not, i.e., \(>=\)0.7 or \(<=\)-0.7 represent the high correlation30. After finding highly correlated features, we have to select one feature among the two based on certain conditions.

-

Selection of Features using CFS subset Evaluator (CFS): This technique assesses the effectiveness of the subset of features by taking into consideration the predictive ability of each feature. This technique selects the subset of features with low inter-correlation but is highly correlated with the target class31.

-

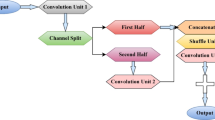

Selection of features using genetic algorithm (GA) Genetic algorithm32 helps to search for the best set of features that can improve the performance of the models. The advantage of this technique is that it permits the best solution to rise out of the best of earlier solutions. The core idea of this technique is to combine the various solutions from generation to extract the best features(genes) from each one to create new and more fitted individuals. Figure 2 shows the flowchart for GA to find the best sets of metrics. Initially, we generated 50 numbers of chromosomes with each gene of the chromosome containing the value 0 or 1, i.e., 0 for not considering features and 1 for considering features. Then, we computed the fitness value of each chromosome using Equation 5. Equation 5 is designed to maximize the accuracy and minimize the number of features. After finding the fitness value of all chromosomes, we selected the chromosome with a higher fitness value. The higher fitness value chromosome compared with the stopping condition. If satisfied, stop; otherwise, we will proceed with the next step. The next step is to apply crossover and mutation to all chromosomes and get half the number of the chromosomes i.e., two chromosomes combined using crossover to get one chromosome. The remaining half of the chromosomes are generated randomly. The above process will continue until we meet the stopping conditions.

$$\begin{aligned} Fitness=0.8*Accuracy+0.2*\frac{Total_{Features}-Selected_{Features}}{Total_{Features}} \end{aligned}$$(5)

Classification techniques

The primary objective of this research is to find the pattern based on source code metrics extracted from the WSDL file’s Java file that help to predict anti-patterns present in unseen WSDL files. These patterns are identified using thirty different variants of machine learning techniques as shown in Table 4. These machine learning are validated using 5-fold cross-validation, and their ability to predict anti-patterns is computed in terms of accuracy and AUC values.

Proposed framework

Figure 3 shows our framework consisting of several steps. The dataset contemplated has a set of WSDL files considered as the input. The detailed steps of the proposed framework are given below:

-

As shown in Fig. 3, we have calculated the Chidamber and Kemerer Java Metrics(CKJM) for each Java file generated from the WSDL file. Then, we applied different aggregation measures to the CKJM metrics computed from each Java file to generate file-level metrics.

-

After finding metrics at the system level using different aggregation measures, we have also applied feature selection techniques to find the relevant set of features and remove irrelevant features. This set of metrics is later used as input to generate models for detecting web service anti-patterns. The Min-max normalization approach is used for normalizing the values of all selected features in the range of 0 to 1.

-

While reviewing and inspecting the datasets, we observed that the considered data have an imbalanced nature of classes. Henceforth, to handle the class imbalance problem and its impact on the prediction accuracy of the models, we have also used five data sampling techniques. We compare the performance of the models generated using this sampling technique with the model developed using the original data (ORGD).

-

After finding the balanced data with relevant sets of features as shown in Fig. 3, we have used a wide variety of classifiers. These techniques comprise general ML classifiers (LOGR, DT, etc..), Advanced deep learning classifiers (ELM, WELM, etc..), DL with distinct hidden layers (DL1, DL2, etc..), and Ensemble classifiers (BAG, EXTRA, etc..) to train the anti-pattern models and find important patterns that help to identify anti-pattern on future data. These models are validated using a 5-fold cross-validation approach. Table 17 contains the hyper-parameters used for model development.

-

Finally, the impact and dependability of these techniques are measured using different performance parameters such as AUC and Accuracy. Table 5 shows the naming conventions used in this work.

Results and analysis

In this segment of the paper, we showcase the results & performance obtained from feature ranking and feature subset selection techniques over class-level metrics on the imbalanced and balanced dataset generated from sampling techniques. To get these balanced datasets, we first used the stated five different sampling techniques to overcome the class imbalance issue. Then we employed different variants of classifiers to detect the detect anti-patterns. The model’s effectiveness was computed using different performance parameters. Considering the space constraint, we have included the results of the randomly selected one-feature ranking technique and one-feature subset selection technique.

Feature selection results

Here, in this study, we would like to compare and contrast feature-subset selection and feature ranking techniques to examine if any of the techniques is superior to the others or if all the techniques perform equally well.

Relevant feature sets are generated after the application of the feature selection techniques, namely: Significant Features(SIGF) obtained by applying the Wilcoxon sign test, Information Gain(INFG), Gain Ratio(GNR), Correlation coefficient(CORR), Genetic Algorithm(GA), CFS subset evaluator(CFS), OneR, Principal Component Analysis(PCA) along with the 13 aggregation techniques namely: variance, arithmetic mean, skewness, median, quartile1, theli index, standard deviation, quartile3, generalized entropy, maximum, gini index, kurtosis and atkinson index are used as input for the generation of models for the detection of web service anti-patterns. Along with this, a model using the original dataset(OD) is also generated for detecting web service anti-patterns. The sets of features selected after applying each of the feature selection techniques considered are given in Tables 6, 7, 8, 9 and 10. Tables 6, 7, 8, 9 and 10 contains the results for anti-pattern type 1 to 5. The information present in Tables 6 suggested that the features like Q1(WMC), Mean(CBO), Gini index(CBO), Hoover index(CBO), Generalized entropy (RFC), skewness(LCOM), Q1(Ca), Max(MOA) are best set of features identified using information gain for AP1.

Accuracy and AUC values analysis

In this work, We used a wide range of classifier techniques to find the important pattern that helps identify different types of anti-patterns in web service. Initially, we have tried with most frequently used classifier techniques such as three variants of Naive Bayes, Support Vector Classifier with linear Kernel (SVC-LIN), SVC with the polynomial kernel (SVC-POLY), SVC with radial bias kernel (SVC-RBF), Logistic Regression Analysis (LOGR) to find an important pattern. After, we used the advanced level of machine learning like extreme learning machine(ELM), Least square SVM, weighted extreme learning machine (WELM) with different kernels, and Ensemble classifiers such as AdaBoost Classifier(AdaB), Random Forest Classifier(RF), Bagging Classifier(BAG), Extra Trees Classifier(EXTR), and Gradient Boosting Classifier(GraB). Further, the deep layer technique with a varying number of hidden layers has also been used to find the important pattern that helps to identify different types of anti-patterns present in web services. These techniques are validated using 5-fold cross-validation approaches and compared using Accuracy and AUC performance values on the testing data. In this work, we have also examined the benefit of using different variants of sampling techniques like SMOTE, UPSAMPLING, BLSMOTE, etc., to handle the class-imbalanced nature of data sets. To deal with the feature redundancy problem, we have used different variants of aggregation techniques to find system-level metrics using class-level metrics without losing important information. Further, different variants of feature selection techniques have also been used to remove irrelevant metrics and find the best combination of reverent metrics. Tables 11, 12, and 13 show the accuracy and AUC values of the models trained using the most frequently used classifiers, advanced level of classifiers, and ensemble learning. The rows of the tables are used to represent the input metrics for the models, and columns are used to represent the classifiers used to train the models, i.e., the trained anti-pattern prediction model using MNB by taking all features as an input achieved 84.96% of Accuracy and 0.86 value of AUC. The AUC value greater than 0.7 confirms that the trained models have the ability to predict anti-patterns using source code metrics. The high-value AUC in the case of advanced level of machine learning confirms that the models trained using the advanced level of machine learning, like LSSVM with different kernels, and WELM with different kernels, have better ability for anti-pattern prediction as compared to other techniques. Similarly, the models trained on sampled data have a better ability to predict as compared to the original data. Finally, the models developed by taking selected sets of features as input have a higher value of AUC. Accuracy confirms that the models trained on reduced sets of features have a better capability of anti-pattern prediction than all features.

RQ 1: | Can web-service anti-patter prediction models be developed using source code metrics and machine learning? | |||

ANS: | The high value of AUC, i.e., greater than 0.7, as shown in Table 11, 12, and 13 confirms that the developed models have the ability to predict anti-patterns based on source code metrics. The experimental findings confirmed that the models performed better after applying sampling and FS techniques. | |||

Comparative analysis

This research aims to evaluate the impact of feature selection techniques, data-sampling techniques, and a wide variety of machine learning on the performance of the web-service anti-pattern prediction models. Considering this, we have applied twenty-two different sets of features, five different data-sampling, and thirty-two different classifiers for anti-pattern prediction models. The predictive power of these techniques is computed using Accuracy & AUC and compared with the help of box-plot diagrams and hypothesis rank-sum techniques. The final intensive assessment and performance of these techniques individually are presented in subsequent subsections.

Aggregation measures and feature selection techniques

In our experiment, different aggregation measures were used to find the source code metrics at the system level from the class level without losing information. Further, eight feature selection techniques have also been used to remove irrelevant and redundant features. After applying aggregation measures and feature selection techniques along with the original features, all these feature sets are used as input for developing the models for detecting web service anti-patterns. Finally, Statistical and AUC studies were used to determine the significance and reliability of various feature selection strategies on five different types of anti-patterns.

Comparison of different aggregation measures and sets of features: Descriptive statistics and box-plot The Fig. 4a, b of Fig. 4 depict the box-plot for the Accuracy and AUC of different aggregation measures and sets of features. The descriptive statistics of all employed feature selection techniques are presented in Table 14. The following conclusions can be drawn from Fig. 4 and Table 14:

-

All the models give reasonable accuracies ranging between 75-95 % and AUC values between 0.8-0.95.

-

The models trained on all features achieves 83.35 mean accuracy and 0.80 as mean AUC.

-

Among the aggregation measures, AG3 shows the best performance, with a mean AUC value of 0.86. At the same time, the model developed using the feature set computed by using AG6 as input shows the worst performance, with a mean AUC value of 0.76.

-

Among all the feature sets which are considered as input for developing the models to detect web service anti-patterns, SIGF is the best model, with a mean AUC value of 0.88. In contrast, the model developed with features selected by PCA as input is the worst model, with a mean AUC value of 0.71. The model developed by AG3 has the second-best performance, with a mean accuracy of 0.86.

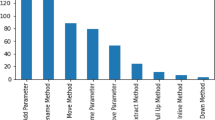

Comparison of different aggregation measures and sets of features: Wilcoxon Signed Rank Test (WSRT) with Friedman mean rank (FMR): In this experiment, we have also employed two statistical tests for hypothesis analysis: Wilcoxon Signed Rank Test and Friedman Test. Initially, we applied WSRT to find pair-wise significant differences between the predictive capability of the models trained by taking different sets of features as input. This test is used to test our considered null hypothesis “There is no significant impact on the performance of anti-patter models after applying feature selection techniques”. The considered hypothesis is only accepted if the calculated p-value using WSRT is less than 0.05. Figure 5 shows the result of WSRT on different pairs of feature sets, i.e., \(\times\) symbol indicates that the p-value\(\le\)0.05, and  symbol indicates that the p-value>0.05. According to Fig. 5, the predictive ability of the models is significantly impacted by using different sets of features. After finding the impact of feature selection techniques, we have also applied Friedman’s mean rank (FMR) to find the best sets of features for anti-pattern prediction. The last column of Table 14 shows the FMR for the aggregation measures and the various applied feature selection techniques. According to FRM, the SIGF has the lowest mean rank of 5.97. Hence, we conclude that the models trained by taking selected sets of features using SIGF have a significantly better ability of prediction as compared to other techniques. Similarly, PCA has the highest mean AUC rank, 17.90, indicating that the model developed with features selected by PCA will have the worst performance.

symbol indicates that the p-value>0.05. According to Fig. 5, the predictive ability of the models is significantly impacted by using different sets of features. After finding the impact of feature selection techniques, we have also applied Friedman’s mean rank (FMR) to find the best sets of features for anti-pattern prediction. The last column of Table 14 shows the FMR for the aggregation measures and the various applied feature selection techniques. According to FRM, the SIGF has the lowest mean rank of 5.97. Hence, we conclude that the models trained by taking selected sets of features using SIGF have a significantly better ability of prediction as compared to other techniques. Similarly, PCA has the highest mean AUC rank, 17.90, indicating that the model developed with features selected by PCA will have the worst performance.

RQ 2: | What is the significant impact of considering reduced sets of features as input on the performance of models? | |||

ANS: | The experimental findings based on Figs. 4a, b, 5 and Table 14 confirmed that the models trained by taking selected sets of features can predict significantly better than all features. | |||

Sampling techniques

In this experiment, we have also considered five types of data imbalance techniques such as SMOTE, BLSMOTE, SVMSOMTE, ADASYN, and UPSAM to tackle the class imbalance problem, and the resulting balanced datasets are used as training data for anti-pattern prediction models. The significance and reliability of these employed sampling strategies were determined using statistical and AUC analyses.

Comparison of sampling techniques using descriptive statistics and box-plots: Figure 6 shows the box-plot diagram for accuracy and the AUC of the models trained on sampled datasets. These sample datasets are generated using five different sampling techniques. The descriptive statistics for AUC and accuracy for sampling techniques considered are summarized in Table 15. According to Fig. 6 and Table 15, the models trained on sampled data using upsampling (UPSAM) with a mean AUC of 0.87 achieved better results. In contrast, the model developed with the original data with a mean AUC of 0.70 has the worst performance. ADASYN and SMOTE showed the worst performance among the data sampling techniques applied, with mean AUC values of 0.83 and 0.83, respectively.

Comparison of different sampling technique: Wilcoxon Signed Rank Test (WSRT) with Friedman mean rank (FMR):

In this experiment, we have also employed two statistical tests for hypothesis analysis: Wilcoxon Signed Rank Test and Friedman Test. Initially, we applied WSRT to verify the impact of sampling techniques on the performance of anti-pattern prediction models. This test is used to test our considered null hypothesis “There is no significant impact on the performance of anti-patter models after training on balanced data”. Figure 7 shows the result of WSRT on different pairs of sampling techniques, i.e., \(\times\) symbol indicates that the p-value\(\le\)0.05, and  symbol indicates that the p-value>0.05. The information present in Fig. 7 suggested that the null hypothesis was rejected for all comparable sampling technique pairs. Hence, we concluded that the predictive ability of the models is significantly impacted by using sampling techniques. After verifying the conclusion like “the performance of the models significantly improves after training on sampled data”, we have used the Friedman test to find the best sampling techniques. The lower rank of the Friedman test represents the best results. Table 15 shows the Friedman test results for various data sampling techniques. From Table 15, we infer that UPSAM has the best performance with a mean AUC rank of 2.13, whereas the model developed with the original dataset has the worst performance with a mean rank of 5.41.

symbol indicates that the p-value>0.05. The information present in Fig. 7 suggested that the null hypothesis was rejected for all comparable sampling technique pairs. Hence, we concluded that the predictive ability of the models is significantly impacted by using sampling techniques. After verifying the conclusion like “the performance of the models significantly improves after training on sampled data”, we have used the Friedman test to find the best sampling techniques. The lower rank of the Friedman test represents the best results. Table 15 shows the Friedman test results for various data sampling techniques. From Table 15, we infer that UPSAM has the best performance with a mean AUC rank of 2.13, whereas the model developed with the original dataset has the worst performance with a mean rank of 5.41.

RQ 3: | What is the significant impact of sampling techniques on the predictability of anti-pattern prediction models? | |||

ANS: | The experimental findings based on Figs. 6, 7 and Table 15 confirmed that the predictive ability of the models is significantly impacted by using sampling techniques. The performance of the models significantly improves after training on sampled data. | |||

Classification techniques

In this work, 33 classifiers varying from general machine learning classifiers to advance deep learning classifiers have been employed to train models for detecting web service anti-patterns. We computed the implications and dependabilities of these classifiers using box plots, descriptive statistics, and statistical test analyses on different anti-patterns.

Comparison of different classification techniques using descriptive statistics and box plots: Figure 8 shows the AUC and accuracy box plots for the different categories of classifier techniques. According to Fig. 8, we can conclude the following:

-

Among the general classifiers category, KNN shows the best performance with a mean AUC value of 0.92. In contrast, the Support Vector Machine with the linear kernel (SVC-LIN) offers the worst performance, with a mean AUC value of 0.62.

-

Among the ensemble classifiers employed for training the models for detection of anti-patterns, the extra tree classifier (EXTR) and Random Forest (RF) classifiers are showing the best performance with a mean AUC value of 0.94, and the Gradient Boosting classifier (GraB) is delivering the worst performance with a mean AUC of 0.88.

-

Of all the deep learning algorithms with varying hidden layers, DL2 shows the best performance with a mean AUC value of 0.84, and DL4 offers the worst performance with a mean AUC value of 0.81. DL3 performance is similar to that of the model developed using DL2.

-

Over the advanced ML classifiers used for developing models for detecting web service anti-patterns, LSSVM-RBF shows the best performance with a mean AUC value of 0.99. In contrast, the models trained using ELM-LIN show the worst performance, with a mean AUC value of 0.70.

Figure 9 shows the AUC and accuracy values for all the classifier techniques combinedly. The descriptive statistics for all the classifier techniques are depicted in Table 16. From Figs. 9 and Table 16, we infer that the models trained using LSSVM-RBF are showing the best performance with a mean AUC value of 0.99. LSSVM-Poly, RF, and EXTR perform better after LSSVM-RBF with a mean AUC value of 0.95, 0.94, and 0.94, respectively. SVC-LIN is delivering the worst performance with a mean AUC value of 0.63.

Comparison of different classification techniques: Wilcoxon Signed Rank Test (WSRT) with Friedman mean rank (FMR): Similar to the previous subsections, we also have the Wilcoxon Test and the Friedman test to compute statistically significant differences among various pairs of classifier techniques. Initially, we applied WSRT to verify the impact of different classifiers on the performance of anti-pattern prediction models. This test is used to test our considered null hypothesis “There is no significant impact on the performance of anti-patter models after changing classifiers”. Figure 10 shows the result of WSRT on different pairs of sampling techniques, i.e., \(\times\) symbol indicates that the p-value\(\le\)0.05, and  symbol indicates that the p-value>0.05. According to Fig. 10, the predictive ability of the models trained using different classifiers is not significantly the same. Table 16 shows the Friedman test results for various classifier techniques considered in this work. From Table 16, we infer that LSSVM-RBF has the best performance with a mean rank of 1.18, whereas the SVC-LIN classifier technique has the worst performance with a mean rank of 27.60.

symbol indicates that the p-value>0.05. According to Fig. 10, the predictive ability of the models trained using different classifiers is not significantly the same. Table 16 shows the Friedman test results for various classifier techniques considered in this work. From Table 16, we infer that LSSVM-RBF has the best performance with a mean rank of 1.18, whereas the SVC-LIN classifier technique has the worst performance with a mean rank of 27.60.

RQ 4: | What effect do different classifiers have on predicting anti-patterns using source code metrics? | |||

ANS: | The experimental findings based on Figs. 9, 10, and Table 16 confirmed that the predictive ability of the models trained using different classification techniques is significantly different. The performance of the models significantly improves after changing the classification techniques. | |||

Discussion of results

In this work, extensive experimentation by using different variants of aggregation measures, feature selections, data sampling, and classifiers has been made, and a solution for developing such models to predict the anti-pattern using object-oriented metrics with improved performance and predictability power is proposed. In general, it was observed that the prediction models with 0.7 AUC value have the ability to predict class on unseen patterns i.e., the models with 0.7 AUC are acceptably by the community. The experimental results obtained using the proposed framework confirm that the trained models delivered a greater than 0.7 AUC and have the ability to predict anti-patterns on an unseen WSDL file. We have already presented the AUC values of the models trained for anti-pattern 1 using sets of features with different classifiers on both original and balanced data. The highest possible AUC value for all classifiers with the application of different combinations of sampling and feature selection techniques attained greater than 0.9; this proves the greater predictability of developed anti-pattern prediction models. The classifier post-application of feature selection and sampling techniques has outperformed with an AUC value of 1.

Conclusion

The developed web service anti-pattern prediction models using object-oriented metrics help in building quality web-based applications by identifying anti-patterns at the initial stage of Software Development. This research represents a significant step forward in the development of effective anti-pattern prediction models by dealing with feature selection, aggregation measures, and the class imbalance problem efficiently. The developed anti-pattern prediction models use different variants of classifiers, and their performance has been measured against five different variants of anti-patterns. The proposed framework was validated using 226 WSDL files collected from various domains such as finance, tourism, health, education, etc. The focused insights of this research are:

-

For most anti-patterns, adopting sampling methods such as SMOTE, BLSMOTE, SVMSMOTE, and USAM improves the predictability of developed models.

-

Employing the different variants of aggregation measures with feature selection strategies over balanced datasets reduces computational effort and improves the overall performance of anti-pattern prediction models.

-

In comparison to other employed sampling techniques to handle the data imbalance, the UPSAM technique outperformed by gaining the highest mean Accuracy & AUC of 86.14% & 0.87, respectively

-

Experimental results suggested that the models trained by selected sets of features using SIGF performance best compared to other employed feature selection techniques by attaining 88.40% Accuracy & 0.88 AUC. This finding confirmed that there exist irrelevant features.

-

The LSSVM with RBF kernel classifier stands first among all other classifiers.

-

Post implication of feature selection techniques study indicates a reduction of 97% irrelevant features from the original dataset while pertaining improved performance of anti-pattern models trained against all metrics.

Data availability

The data used in this paper is available at https://github.com/ouniali/WSantipatterns. The processed data will be made available on request.

References

Kral, J. & Zemlicka, M. The most important service-oriented antipatterns. In International Conference on Software Engineering Advances (ICSEA 2007) pp. 29–29. IEEE (2007).

Travassos, G., Shull, F., Fredericks, M. & Basili, V. R. Detecting defects in object-oriented designs: using reading techniques to increase software quality. ACM Sigplan Notices 34(10), 47–56 (1999).

Marinescu, R. Detection strategies: Metrics-based rules for detecting design flaws. In 20th IEEE International Conference on Software Maintenance, 2004. Proceedings, pp. 350–359. IEEE (2004).

Munro, M. J. Product metrics for automatic identification of “bad smell” design problems in java source-code. In 11th IEEE International Software Metrics Symposium (METRICS’05), pp. 15–15. IEEE (2005).

Ciupke, O. Automatic detection of design problems in object-oriented reengineering. In Proceedings of Technology of Object-oriented Languages and Systems-TOOLS 30 (Cat. No. PR00278), pp. 18–32. IEEE (1999).

Simon, F., Steinbruckner, F. & Lewerentz, C. Metrics based refactoring. In Proceedings fifth European conference on software maintenance and reengineering, pp. 30–38. IEEE (2001).

Ananda Rao, A. & Reddy, K. N. Detecting bad smells in object oriented design using design change propagation probability matrix 1 (2007).

Khomh, F., Vaucher, S., Guéhéneuc, Y.-G. & Sahraoui, H. Bdtex: A gqm-based bayesian approach for the detection of antipatterns. J. Syst. Softw. 84(4), 559–572 (2011).

Moha, N., Guéhéneuc, Y.-G., Duchien, L. & Le Meur, A.-F. Decor: A method for the specification and detection of code and design smells. IEEE Trans. Softw. Eng. 36(1), 20–36 (2009).

Chidamber, S. R. & Kemerer, C. F. A metrics suite for object oriented design. IEEE Trans. Softw. Eng. 20(6), 476–493 (1994).

Hemanta Kumar Bhuyan and Vinayakumar Ravi. Analysis of subfeature for classification in data mining. IEEE Trans. Eng. Manag. 70(8), 2732–2746 (2021).

Hemanta Kumar Bhuyan and Narendra Kumar Kamila. Privacy preserving sub-feature selection based on fuzzy probabilities. Clust. Comput. 17(4), 1383–1399 (2014).

Bhuyan, H. K., Saikiran, M., Tripathy, M. & Ravi, V. Wide-ranging approach-based feature selection for classification. Multimedia Tools Appl. 82(15), 23277–23304 (2023).

Vasilescu, B., Serebrenik, A. & Van den Brand, M. By no means: A study on aggregating software metrics. In Proceedings of the 2nd International Workshop on Emerging Trends in Software Metrics, pp. 23–26 (2011).

Fernández, A., Garcia, S., Herrera, F. & Chawla, N. V. Smote for learning from imbalanced data: Progress and challenges, marking the 15-year anniversary. J. Artif. Intell. Res. 61, 863–905 (2018).

Xiaowei, G., Angelov, P. P. & Soares, E. A. A self-adaptive synthetic over-sampling technique for imbalanced classification. Int. J. Intell. Syst. 35(6), 923–943 (2020).

Tang, Y., Zhang, Y.-Q., Chawla, N. V. & Krasser, S. Svms modeling for highly imbalanced classification. IEEE Trans. Syst. Man Cybern. Part B (Cybernetics) 39(1), 281–288 (2008).

Ghorbani, R. & Ghousi, R. Comparing different resampling methods in predicting students’ performance using machine learning techniques. IEEE Access 8, 67899–67911 (2020).

Zhiquan, H., Wang, L., Qi, L., Li, Y. & Yang, W. A novel wireless network intrusion detection method based on adaptive synthetic sampling and an improved convolutional neural network. IEEE Access 8, 195741–195751 (2020).

He, H., Bai, Y., Garcia, E. A & Li, S. Adasyn: Adaptive synthetic sampling approach for imbalanced learning. In 2008 IEEE iNternational Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), pp. 1322–1328. IEEE (2008).

Utami, E., Oyong, I., Raharjo, S., Hartanto, A. D. & Adi, S.. Supervised learning and resampling techniques on disc personality classification using twitter information in Bahasa Indonesia. Appl. Comput. Inform. (2021).

Vandana, C. P. & Chikkamannur, A. A. Feature selection: An empirical study. Int. J. Eng. Trends Technol. 69(2), 165–170 (2021).

Di Mauro, M., Galatro, G., Fortino, G. & Liotta, A. Supervised feature selection techniques in network intrusion detection: A critical review. Eng. Appl. Artif. Intell. 101, 104216 (2021).

Dogra, V., Singh, A., Verma, S., Jhanjhi, N. Z & Talib, M. N. et al. Understanding of data preprocessing for dimensionality reduction using feature selection techniques in text classification. In Intelligent computing and innovation on data science, pp. 455–464. Springer (2021).

Zebari, R., Abdulazeez, A., Zeebaree, D., Zebari, D. & Saeed, J. A comprehensive review of dimensionality reduction techniques for feature selection and feature extraction. J. Appl. Sci. Technol. Trends 1(1), 56–70 (2020).

Stiawan, D. et al. Cicids-2017 dataset feature analysis with information gain for anomaly detection. IEEE Access 8, 132911–132921 (2020).

Hasdyna, N., Sianipar, B., & Zamzami, E. M. Improving the performance of k-nearest neighbor algorithm by reducing the attributes of dataset using gain ratio. J. Phys.: Conf. Ser., vol. 1566, p. 012090. IOP Publishing (2020).

Al Sayaydeha, Osama Nayel & Mohammad, Mohammad Falah. Diagnosis of the parkinson disease using enhanced fuzzy min-max neural network and oner attribute evaluation method. In 2019 International Conference on Advanced Science and Engineering (ICOASE), pp. 64–69. IEEE (2019).

Karamizadeh, S., Abdullah, S. M., Manaf, A. A., Zamani, M. & Hooman, A. An overview of principal component analysis. J. Signal Inf. Process., 4 (2020).

Meng, X.-L., Rosenthal, R. & Rubin, D. B. Comparing correlated correlation coefficients. Psychol. Bull. 111(1), 172 (1992).

Xu, S., Zhang, Z., Wang, D., Hu, J., Duan, X. & Zhu, T. Cardiovascular risk prediction method based on cfs subset evaluation and random forest classification framework. In 2017 IEEE 2nd International Conference on Big Data Analysis (ICBDA), pp. 228–232. IEEE (2017).

Too, J. and Abdullah, A. R. A new and fast rival genetic algorithm for feature selection. J. Supercomput. 77(3), 2844–2874 (2021).

Romano, M., Contu, G., Mola, F. & Conversano, C. Threshold-based naïve bayes classifier. Adv. Data Anal. Classification, pp. 1–37 (2023).

Prasanta Kumar Dey. Project risk management: A combined analytic hierarchy process and decision tree approach. Cost Eng. 44(3), 13–27 (2002).

Niu, L. A review of the application of logistic regression in educational research: Common issues, implications, and suggestions. Educ. Rev. 72(1), 41–67 (2020).

Alam, S., Sonbhadra, S. K., Agarwal, S. & Nagabhushan, P. One-class support vector classifiers: A survey. Knowl.-Based Syst. 196, 105754 (2020).

Leong, W. C., Bahadori, A., Zhang, J. & Ahmad, Z. Prediction of water quality index (wqi) using support vector machine (svm) and least square-support vector machine (ls-svm). Int. J. River Basin Manag. 19(2), 149–156 (2021).

Wang, J., Siyuan, L., Wang, S.-H. & Zhang, Y.-D. A review on extreme learning machine. Multimedia Tools Appl. 81(29), 41611–41660 (2022).

Liu, Z., Jin, W. & Ying, M. Variances-constrained weighted extreme learning machine for imbalanced classification. Neurocomputing 403, 45–52 (2020).

Heidari, A. A., Faris, H., Mirjalili, S., Aljarah, I. & Mafarja, M. Ant lion optimizer: theory, literature review, and application in multi-layer perceptron neural networks. Nature-Inspired Optimizers: Theories, Literature Reviews and Applications, pp. 23–46 (2020).

Boateng, E. Y., Otoo, J. & Abaye, D. A. Basic tenets of classification algorithms k-nearest-neighbor, support vector machine, random forest and neural network: a review. J. Data Anal. Inf. Process. 8(4), 341–357 (2020).

Khan, Z. et al. Optimal trees selection for classification via out-of-bag assessment and sub-bagging. IEEE Access 9, 28591–28607 (2021).

Ahangari, S., Jeihani, M., Anam Ardeshiri, Md., Rahman, M. & Dehzangi, A. Enhancing the performance of a model to predict driving distraction with the random forest classifier. Transp. Res. Rec. 2675(11), 612–622 (2021).

Abubaker, H., Ali, A., Shamsuddin, S. M. & Hassan, S. Exploring permissions in android applications using ensemble-based extra tree feature selection. Indonesian J. Electr. Eng. Comput. Sci. 19(1), 543–552 (2020).

Malhotra, R. & Jain, J. Handling imbalanced data using ensemble learning in software defect prediction. In 2020 10th International Conference on Cloud Computing, Data Science and Engineering (Confluence), pp. 300–304. IEEE (2020).

Nguyen, H. & Hoang, N.-D. Computer vision-based classification of concrete spall severity using metaheuristic-optimized extreme gradient boosting machine and deep convolutional neural network. Autom. Constr. 140, 104371 (2022).

Sarker, I. H. Deep learning: A comprehensive overview on techniques, taxonomy, applications and research directions. SN Comput. Sci. 2(6), 420 (2021).

Author information

Authors and Affiliations

Contributions

LK and ST conceptualize the topic. ST, LK, LBM, SM and AK are involved in Methodology, investigation, and validation. LK, ST and SM supervised the whole work. All authors reviewed the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical and informed consent for data used:

No ethical approval and consent are required based on the following. (a) This article does not contain any studies with animals performed by any of the authors. (b) This article does not contain any studies with human participants or animals performed by any of the authors. (c) This article does not use any figure or table from any source.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Kumar, L., Tummalapalli, S., Murthy, L.B. et al. An empirical analysis on webservice antipattern prediction in different variants of machine learning perspective. Sci Rep 15, 5183 (2025). https://doi.org/10.1038/s41598-025-86454-5

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-86454-5