Abstract

Artificial intelligence (AI) is increasingly being integrated into business practices, fundamentally altering workplace dynamics and employee experiences. While the adoption of AI brings numerous benefits, it also introduces negative aspects that may adversely affect employee well-being, including psychological distress and depression. Drawing upon a range of theoretical perspectives, this study examines the association between organizational AI adoption and employee depression, investigating how psychological safety mediates this relationship and how ethical leadership serves as a moderating factor. Utilizing an online survey platform, we conducted a three-wave time-lagged study involving 381 employees from South Korean companies. Data were analyzed using SPSS 28 for preliminary analyses and AMOS 28 for structural equation modeling with maximum likelihood estimation. Structural equation modeling analysis revealed that AI adoption significantly reduces psychological safety, and that lower psychological safety is in turn associated with higher levels of depression. Further analysis using bootstrapping methods confirmed that psychological safety mediates the relationship between AI adoption and employee depression. The study also found that ethical leadership can mitigate the adverse effects of AI adoption on psychological safety by moderating the relationship between these variables. These findings highlight the critical importance of fostering a psychologically safe work environment and promoting ethical leadership practices to protect employee well-being amid rapid technological advancements. Contributing to the growing body of literature on the psychological effects of AI adoption in the workplace, this research offers valuable insights for organizations seeking to address the human implications of AI integration. The paper discusses the practical and theoretical implications of the results and suggests potential directions for future research.

Introduction

The swift adoption of AI technologies is transforming organizations and employee experiences (Dwivedi et al. 2021; Tambe et al. 2019), necessitating investigation into its effects on employee perspectives and behaviors (Kellogg et al. 2020; Uren and Edwards, 2023). AI integration automates tasks, optimizes decisions, and enhances operational effectiveness (Budhwar et al. 2023; Chowdhury et al. 2023), driven by goals to secure competitive advantages and increase efficiency (Fountaine et al. 2019). Research on AI adoption examines its catalysts, obstacles, and impacts on organizational and employee dynamics (Borges et al. 2021; Chowdhury et al. 2023; Pereira et al. 2023; Ransbotham et al. 2017), with its implications remaining a critical area of inquiry (Fountaine et al. 2019; Oliveira and Martins, 2011). Recent multilevel reviews have further emphasized how AI is reshaping organizational dynamics across individual, team, and organizational levels, necessitating new approaches to understanding employee responses to these technologies (Bankins et al. 2024; Pereira et al. 2023). As organizations navigate various stages of AI readiness and adoption, the human dimensions of technological transitions have emerged as critical factors determining adoption success (Uren and Edwards, 2023). AI introduces both opportunities and challenges (Amankwah-Amoah, 2020; Shrestha et al. 2019), requiring analysis of its psychological, social, and administrative impacts (Barley et al. 2017; Glikson and Woolley, 2020).

In AI-centric environments (Brougham and Haar, 2018; Nam, 2019), AI reshapes jobs and workflows, affecting workers’ psychological health, satisfaction, commitment, and performance, as well as broader organizational outcomes (Budhwar et al. 2023; Chowdhury et al. 2023; Glikson and Woolley, 2020). This raises urgent questions about mental health impacts (Budhwar et al. 2019; Wang and Siau, 2019) and disrupts social dynamics, communication, and hierarchical structures, influencing employee autonomy and proficiency (Delanoeije and Verbruggen, 2020; Wilson and Daugherty, 2018). Understanding how AI shapes employee attitudes is vital for leveraging its benefits while minimizing downsides.

While the need to explore AI’s impact on employees is increasingly acknowledged, substantial gaps persist within scholarly research. First, numerous studies have theorized about AI’s effects on diverse organizational outcomes (Benbya et al. 2020; Borges et al. 2021; Brock and Von Wangenheim, 2019; Budhwar et al. 2023; Chowdhury et al. 2023; Fountaine et al. 2019; Glikson and Woolley, 2020; Ransbotham et al. 2017; Tambe et al. 2019; Uren and Edwards, 2023), yet empirical research specifically addressing outcomes like employee mental health remains relatively scarce (Budhwar et al. 2023; Uren and Edwards, 2023). Considering the important influence that mental health has on both individual well-being and organizational efficacy, it is critical to rigorously examine both the precursors and the consequences of employee depression within the context of AI integration (Delanoeije and Verbruggen, 2020; Wilson and Daugherty, 2018).

While AI adoption affects various mental health aspects, we focus on depression for three reasons. First, depression represents one of the most prevalent and costly mental health conditions in workplace settings (World Health Organization, 2023) and has been identified as particularly sensitive to technological changes that create uncertainty and threaten job security (Theorell et al. 2015; Joyce et al. 2016). Second, unlike more transient psychological states, depression often reflects prolonged exposure to stressors (Melchior et al. 2007), aligning with the gradual nature of AI adoption in organizations. Third, depression has been linked to significant organizational outcomes, including decreased productivity and increased absenteeism (Evans-Lacko and Knapp, 2016), and is more strongly associated with perceptions of technological displacement compared to other mental health outcomes (Brougham and Haar, 2018).

Second, while existing literature often discusses AI adoption’s direct organizational impacts, there has been less consideration of the deeper psychological processes (mediators) and contextual factors (moderators) that influence these effects (Budhwar et al. 2023; Uren and Edwards, 2023). Conducting empirical studies on mediating variables could shed light on the pathways through which AI adoption affects employee mental health (Brougham and Haar, 2018; Delanoeije and Verbruggen, 2020). Likewise, examining moderating variables could reveal environmental or contextual factors that amplify or mitigate the associations between AI adoption and employee outcomes. Empirical investigation of these mediators and moderators is therefore needed to develop a deeper understanding of the organizational implications of AI.

Third, existing literature on AI adoption within organizations has not adequately focused on the critical role of leadership in navigating the organizational changes brought about by AI integration (Budhwar et al. 2023; Chowdhury et al. 2023; Uren and Edwards, 2023). Organizational studies scholars have long acknowledged the profound impact that leadership has on shaping employee perceptions, attitudes, and behaviors (Dineen et al. 2006; Simons et al. 2015). In organizational environments, employees often look for cues from their surroundings to lessen uncertainty and enhance predictability (Wimbush, 1999). Consequently, they tend to rely heavily on insights gained from their leaders’ behaviors and styles of leadership (Shamir and Howell, 1999). This dependency underscores the crucial role of leadership in shaping organizational culture and individual employee actions (Epitropaki et al. 2017; Wimbush, 1999). Given leaders’ substantial impact on their followers, it is plausible that leadership behaviors act as an essential contextual factor in the adoption of AI technologies within organizations. Therefore, exploring the moderating effect of leadership is essential.

To bridge these identified gaps, this study focuses on three key theoretical perspectives: the job demands-resources (JD-R) model (Bakker and Demerouti, 2017), the conservation of resources (COR) theory (Hobfoll, 1989), and the uncertainty reduction theory (Berger and Calabrese, 1974). By synthesizing these complementary theories, we construct a focused yet comprehensive framework that elucidates the dynamics between AI adoption, employee depression, psychological safety, and ethical leadership. The JD-R model helps explain how AI adoption represents a job demand that can deplete resources and affect well-being, the COR theory illuminates how psychological safety functions as a critical resource that can be threatened by AI adoption, and the uncertainty reduction theory addresses how the ambiguity introduced by AI adoption affects psychological safety and how ethical leadership can mitigate these effects. Specifically, this study will explore how AI adoption impacts employee depression, the mediating role of psychological safety, and the moderating role of ethical leadership in these relationships.

Psychological safety, understood as the collective belief that a group or team environment is conducive to interpersonal risk-taking (Edmondson, 1999), plays a critical role in bolstering employee wellness, building learning, and stimulating innovation (Edmondson and Lei, 2014). We selected psychological safety as the mediating mechanism between AI adoption and depression for three reasons. First, psychological safety addresses uncertainty during technological transitions (Edmondson and Lei, 2014; Newman et al. 2017), relevant to AI contexts where work roles change significantly. Second, it functions as a critical resource in helping employees navigate demanding situations (Frazier et al. 2017), aligning with our Conservation of Resources theoretical foundation. Third, psychological safety mediates between organizational changes and well-being (Baer and Frese, 2003; Carmeli and Gittell, 2009), influencing whether employees voice concerns during technological adaptation (Edmondson, 1999). While other mechanisms exist, psychological safety best captures the social-psychological processes linking AI adoption to employee well-being.

Furthermore, the exploration of moderating variables such as ethical leadership offers insights into the conditions that influence how AI adoption impacts employee outcomes (Budhwar et al. 2023; Uren and Edwards, 2023). Ethical leadership, defined by actions that reflect normatively proper behavior and the encouragement of such behavior among members (Brown and Treviño, 2006), has proven to enhance trust, fairness, and employee welfare during organizational transitions (Bedi et al. 2016; Demirtas and Akdogan, 2015; Ko et al. 2018; Mayer et al. 2012; Ng and Feldman, 2015). While several leadership styles could potentially moderate the relationship between AI adoption and psychological safety, we selected ethical leadership for three key reasons. First, AI adoption raises unique ethical concerns including algorithmic bias, privacy issues, and job displacement (Bostrom and Yudkowsky, 2014; Jobin et al. 2019), which ethical leadership is particularly equipped to address through its focus on normative appropriateness and moral management (Brown and Treviño, 2006). Second, unlike transformational leadership (focused on vision) or authentic leadership (centered on self-awareness), ethical leadership specifically emphasizes fairness, transparency, and ethical decision-making—elements crucial for navigating the ethical complexities of AI adoption (Treviño et al. 2014). Third, ethical leadership has demonstrated stronger effects on reducing employee anxiety and uncertainty during organizational transitions compared to other leadership styles (Mo and Shi, 2017; Sharif and Scandura, 2014), making it particularly relevant for our study of psychological safety and depression.

The current research makes significant contributions to both theory and practice. First, it advances our knowledge of the psychological implications of artificial intelligence adoption in organizations by examining its impact on employee depression, providing HR professionals and leaders with insights to develop preventive mental health programs during AI transitions (Chowdhury et al. 2023). Secondly, the study elucidates the underlying mechanism through which AI adoption affects employee mental health by identifying psychological safety as a key mediating factor, offering organizations practical strategies to foster safe environments where employees can voice concerns about AI adoption and seek necessary support (Edmondson, 2018). Thirdly, it deepens the ethical leadership literature by demonstrating its moderating effect in reducing the harmful influences of AI adoption on psychological safety, suggesting that organizations should prioritize developing ethical leadership capabilities among managers overseeing technological changes to mitigate negative psychological impacts (Brown and Treviño, 2006). Lastly, by integrating these elements into a comprehensive moderated mediation model, the study provides a holistic framework that organizations can apply as a diagnostic tool to identify potential psychological risks in their AI adoption strategies and develop targeted interventions to protect employee well-being while successfully integrating AI technologies (Budhwar et al. 2023; Tambe et al. 2019).

Theory and hypotheses

AI adoption in an organization and employee depression

The current research suggests that AI adoption will increase employee depression. The adoption of AI in an organization unfolds through multiple phases: awareness, evaluation, decision-making, adoption, and post-adoption reflection (Brem et al. 2021; Oliveira and Martins, 2011). Recent empirical research has identified specific performance determinants of successful AI adoption, including technological infrastructure quality, leadership support, and employee readiness (Chen et al. 2023). Furthermore, studies examining the mental health implications of AI adoption have revealed that technological changes can significantly impact employee psychological well-being, with effects moderated by individual factors such as self-efficacy (Kim and Lee, 2024; Kim and Kim, 2024; Kim et al. 2024). Organizations need to meticulously evaluate their readiness to integrate AI by considering various critical factors such as the availability of data, the robustness of technological infrastructure, the competence of human capital, and the adaptability of organizational culture (Uren and Edwards, 2023). For AI adoption to be successful, it must be strategically orchestrated to align with the organization’s objectives, actively engage various stakeholders, and conscientiously address the ethical and societal repercussions (Benbya et al. 2020; Bughin et al. 2023).

Employee depression encompasses symptoms such as persistent sadness, a diminished interest in significant activities, and feelings of low self-worth (Evans-Lacko and Knapp, 2016; Theorell et al. 2015). As a prevalent mental health disorder, depression profoundly affects individuals’ emotional well-being, performance at work, and general functionality (World Health Organization, 2023). It is a significant workplace concern, with incidence rates varying from 5% to 20% across diverse professions and geographic regions (Modini et al. 2016; World Health Organization, 2023). Employee depression has been linked to several detrimental outcomes including diminished productivity, heightened absenteeism, increased healthcare expenditures, and deteriorated work relationships (Evans-Lacko and Knapp, 2016; Joyce et al. 2016; Martin et al. 2009; Modini et al. 2016). Work-related stressors including excessive job demands, limited control over job-related decisions, and insufficient social support are recognized as contributing factors to the development of depressive symptoms in employees (Harvey et al. 2017; Theorell et al. 2015).

Understanding the ramifications of AI adoption on employee well-being, especially depression, can be explored through the frameworks of the JD-R theory and the COR theory.

First, the JD-R theory offers an extensive model for assessing how various job characteristics impact employee health and performance (Bakker and Demerouti, 2017). In this model, job demands are the components of work that require sustained physical, cognitive, or emotional effort and are therefore associated with physical and mental strain. Examples include heavy workloads, tight deadlines, and complex decision-making requirements (Bakker and Demerouti, 2017). Job resources, by contrast, are the components of a job that help to accomplish work goals, reduce job pressures, or support personal and professional growth. Examples include autonomy, collegial support, and opportunities for skill development (Bakker and Demerouti, 2017).

The JD-R model asserts that an imbalance where job demands surpass available resources leads to employee strain and fatigue, potentially resulting in adverse effects like burnout and depression (Bakker and Demerouti, 2017). This model is especially applicable in scenarios of AI adoption within workplaces, which introduces substantial job demands (Braganza et al. 2021; Brougham and Haar, 2018; Budhwar et al. 2023). The demands from AI adoption require employees to acquire new skills, adjust to different processes, and handle an elevated level of complexity and uncertainty in their roles (Brougham and Haar, 2018; Chowdhury et al. 2023; Pereira et al. 2023). Employees who lack critical resources such as technological proficiency, supportive networks, or adaptability may find these demands particularly daunting (Budhwar et al. 2023; Chowdhury et al. 2023). The excessive demands brought about by AI, including the need for new skills, adaptation to novel processes, and navigation of increased work complexity, might overwhelm the available resources, causing significant stress and fatigue (Bakker and Demerouti, 2017). Such prolonged exposure to high demands and insufficient resources may lead to the emergence of depressive symptoms in employees (Bakker and Demerouti, 2017; Chowdhury et al. 2023).

Second, the COR theory elaborates on the potential detrimental effects of AI integration on worker well-being. This theory suggests that people are driven to maintain, safeguard, and amass resources, meaning anything they perceive as valuable, such as personal skills, job security, or professional autonomy. When these resources are perceived as threatened or are actually depleted, the result is significant psychological stress and adverse outcomes (Hobfoll et al. 2018). With AI adoption, there is a real risk that employees will perceive threats to crucial resources like job stability, independence in their work, and professional competencies (Bankins et al. 2024; Brougham and Haar, 2018; Chowdhury et al. 2023). For instance, the potential loss of employment due to automation, diminished decision-making autonomy, and the challenge of maintaining relevance amid technological advancements could significantly stress employees (Budhwar et al. 2023; Pereira et al. 2023). Such chronic stress from threatened resources can substantially heighten the likelihood of developing depressive states among workers (Hobfoll et al. 2018; Budhwar et al. 2023).

Based on the arguments, we suggest the following hypothesis:

Hypothesis 1: AI adoption in an organization will increase employee depression.

AI adoption in an organization and psychological safety

The current research posits that AI adoption in an organization will diminish the extent of psychological safety. Psychological safety means the collective perception among team members that it is secure to engage in interpersonal risks, such as openly asking questions, expressing concerns, or acknowledging errors, without facing adverse consequences (Edmondson, 1999; Frazier et al. 2017). This concept is essential for enhancing employee engagement, promoting learning, and improving performance (Edmondson, 1999; Frazier et al. 2017). Recent research has highlighted the heightened importance of psychological safety in human-AI collaborative environments, where perceptions of job insecurity can significantly affect employee behavior and well-being (Wu et al. 2022). Studies specifically examining AI contexts have found that psychological safety plays a critical role in facilitating productive human-machine collaboration and reducing resistance to technological change (Endsley, 2023). To explore how AI adoption affects psychological safety, this discussion incorporates insights from the uncertainty reduction theory (Berger and Calabrese, 1974).

The uncertainty reduction theory (Berger and Calabrese, 1974) offers a framework to comprehend the potential impacts of AI on psychological safety. This theory suggests that individuals attempt to minimize uncertainty by acquiring information to better understand their environment (Berger and Calabrese, 1974). With the introduction of AI, employees often face increased uncertainty due to significant changes in their work roles, processes, and the overall organizational structure (Dwivedi et al. 2021; Pereira et al. 2023). Such uncertainties might arise from the necessity to master new technological skills, adjust to revamped operational practices, and deal with altered organizational hierarchies (Bankins et al. 2024; Brougham and Haar, 2018; Budhwar et al. 2023).

As employees navigate these uncertainties related to AI integration, they may feel more exposed and less inclined to participate in interpersonal risk-taking activities (Frazier et al. 2017). This heightened vulnerability could manifest as hesitance to voice opinions, share innovative ideas, or acknowledge errors, leading to a more cautious behavior in the workplace (Edmondson, 1999). Thus, the pervasive uncertainty induced by AI could detrimentally affect psychological safety by discouraging employees from participating in actions that could potentially attract criticism or negative feedback (Edmondson and Lei, 2014; Frazier et al. 2017).

Based on the arguments, we suggest the following hypothesis:

Hypothesis 2: AI adoption in an organization will decrease psychological safety.

Psychological safety and employee depression

We posit that psychological safety will reduce employee depression. Psychological safety, understood as the collective belief that a workgroup or organization supports interpersonal risk-taking (Edmondson, 1999), is increasingly acknowledged as an essential element affecting employee well-being and mental health (Frazier et al. 2017; Newman et al. 2017). Its connection to employee depression can be examined through the frameworks of the COR theory and the JD-R theory.

First, the COR theory elucidates that psychological safety acts as a pivotal resource that can shield against the development of employee depression. The theory suggests that people endeavor to obtain, conserve, and cultivate resources that they cherish (Hobfoll et al. 2018). Within an organization, psychological safety is regarded as a crucial resource that employees aim to preserve (Frazier et al. 2017; Tu et al. 2019). When individuals perceive a high degree of psychological safety at work, they feel supported and secure, enabling them to voice their thoughts without fear of repercussions (Edmondson, 1999; Newman et al. 2017). This environment of support facilitates employees’ ability to manage job demands effectively, mitigates stress, and conserves vital resources like emotional and cognitive energy (Hobfoll et al. 2018; Tu et al. 2019). Preserving these resources allows employees to better navigate work challenges and sustain their psychological health, thus diminishing the likelihood of depressive symptoms arising (Frazier et al. 2017). Additionally, psychological safety encourages employees to seek assistance and support in confronting workplace stressors, offering a further layer of protection against depression (Newman et al. 2017).

Second, the JD-R theory also underscores the connection between psychological safety and employee depression. According to this theory, psychological safety is regarded as a key job resource that assists members in managing job demands and in guarding against depressive symptoms (Bakker and Demerouti, 2017; Tu et al. 2019). In environments marked by high psychological safety, employees benefit from an atmosphere of trust, support, and open communication (Edmondson, 1999; Newman et al. 2017). Such a supportive backdrop can buffer the adverse impacts of job demands, role ambiguity, or interpersonal conflicts, which are recognized contributors to employee depression (Bakker and Demerouti, 2017; Frazier et al. 2017).

Based on the arguments, we suggest the following hypothesis:

Hypothesis 3: Psychological safety will decrease employee depression.

The mediating role of psychological safety in the link between AI adoption in an organization and depression

The current paper examines the mediating role of psychological safety in the relationship between AI adoption and depression. The mediation hypothesis is based on both the COR theory and the JD-R theory, which provide a comprehensive framework for studying the interactions between organizational characteristics, human resources, and mental health. According to the COR theory, resources play a critical role in fostering employee well-being and resilience (Hobfoll et al. 2018). In the realm of AI adoption, psychological safety can be regarded as a valuable resource that enables members to manage the challenges and uncertainties that emerge with new technology integration (Hobfoll et al. 2018; Tu et al. 2019).

When organizations implement AI, members often confront stressors like job insecurity, role ambiguity, and heightened cognitive demands (Brougham and Haar, 2018). Such stressors may diminish psychological safety, making employees hesitant to take interpersonal risks, voice concerns, or acknowledge errors (Edmondson, 1999; Newman et al. 2017). A decline in psychological safety can intensify the harmful influences of AI on mental health, heightening the risk of depressive symptoms (Chowdhury et al. 2023; Hobfoll et al. 2018; Tu et al. 2019).

The JD-R theory also explains the role of psychological safety in mediating the link between AI adoption and employee depression. Within the realm of AI adoption, the introduction of new technologies presents a job demand that requires employees to adapt, acquire skills, and manage increased uncertainty (Budhwar et al. 2023; Chowdhury et al. 2023). However, the JD-R theory posits that the impacts of job demands on employee outcomes are modifiable, contingent on the availability of job resources (Bakker and Demerouti, 2017). Psychological safety, as a job resource, can mitigate the negative consequences of AI on mental health by creating a supportive and inclusive environment (Newman et al. 2017).

Organizations that cultivate psychological safety during AI transitions enable employees to feel more supported and valued, enhancing their capacity to voice concerns and needs (Edmondson, 1999; Newman et al. 2017). This secure and supportive atmosphere can help employees more effectively manage the demands associated with AI adoption, decreasing the likelihood of depressive symptoms (Tu et al. 2019). Thus, the presence of psychological safety as a job resource can diminish the impact of AI-induced changes on employee depression by equipping employees with the necessary resources to adjust and prosper amidst technological advancements.

Hypothesis 4: Psychological safety will mediate the link between AI adoption in an organization and employee depression.
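The mediation logic behind Hypotheses 1 to 4 can be illustrated with a minimal sketch of a percentile bootstrap for an indirect effect. This is not the study's analysis, which used structural equation modeling in AMOS. It is a simplified regression-based stand-in in which path a is the slope of psychological safety on AI adoption, path b is the slope of depression on psychological safety controlling for AI adoption, and the indirect effect is their product. All data below are synthetic, and the generating coefficients (-0.4, 0.1, -0.5) are arbitrary assumptions chosen only to match the hypothesized signs. The function and variable names are likewise illustrative.

```python
import numpy as np


def indirect_effect(x, m, y):
    """Return a*b from two OLS fits: m ~ x and y ~ x + m."""
    ones = np.ones_like(x)
    # Path a: slope of the mediator on the predictor.
    a = np.linalg.lstsq(np.column_stack([ones, x]), m, rcond=None)[0][1]
    # Path b: slope of the outcome on the mediator, controlling for the predictor.
    b = np.linalg.lstsq(np.column_stack([ones, x, m]), y, rcond=None)[0][2]
    return a * b


def bootstrap_ci(x, m, y, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the indirect effect."""
    rng = np.random.default_rng(seed)
    n = len(x)
    estimates = np.empty(n_boot)
    for i in range(n_boot):
        # Resample respondents (rows) with replacement.
        idx = rng.integers(0, n, size=n)
        estimates[i] = indirect_effect(x[idx], m[idx], y[idx])
    low, high = np.quantile(estimates, [alpha / 2, 1 - alpha / 2])
    return low, high


# SYNTHETIC data for illustration only; these are not the study's data.
rng = np.random.default_rng(42)
n = 381  # same size as the study sample, purely for scale
ai_adoption = rng.normal(size=n)
psych_safety = -0.4 * ai_adoption + rng.normal(size=n)  # assumed a < 0
depression = 0.1 * ai_adoption - 0.5 * psych_safety + rng.normal(size=n)  # assumed b < 0

point = indirect_effect(ai_adoption, psych_safety, depression)
ci_low, ci_high = bootstrap_ci(ai_adoption, psych_safety, depression)
print(f"indirect effect = {point:.3f}, 95% CI = [{ci_low:.3f}, {ci_high:.3f}]")
```

Under this sketch, a confidence interval that excludes zero is read as support for mediation. Because both assumed paths are negative, their product is positive, which corresponds to AI adoption raising depression through reduced psychological safety.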

The moderating influence of ethical leadership in the link between AI adoption in an organization and psychological safety

We propose that ethical leadership may function as a moderating variable, reducing the harmful impact of AI adoption on psychological safety within an enterprise. From the followers’ standpoint, their leader serves as a pivotal point of reference for shaping their comprehension and attitudes toward the regulations and standards that govern acceptable conduct in the workplace (Potipiroon and Ford, 2017; Wimbush, 1999). Essentially, leaders play a dual role: they are key information sources, guiding employees on their expected roles, and they are role models, setting the standards and expectations for appropriate behavior within the organization (Epitropaki et al. 2017; Wimbush, 1999). Through their behaviors, decisions, and communications, leaders influence employees’ perceptions of the AI adoption process, help create a supportive and inclusive work environment, and alleviate potential adverse influences on the well-being of members (Brown et al. 2005; Bedi et al. 2016).

Ethical leadership involves demonstrating morally upright behavior through personal actions and interpersonal relationships, as well as promoting such behavior among subordinates through interactive communication, reinforcement, and decision-making (Brown et al. 2005). Recent studies have expanded this concept to emphasize the crucial role of ethical leadership in creating responsible AI adoption contexts. This research highlights how ethical leadership enables transparent communication about AI capabilities and limitations, fair treatment during technological transitions, and the cultivation of trusting human-AI relationships (Baker and Xiang, 2023; Dennehy et al. 2023). Empirical evidence now demonstrates that ethical leadership can significantly mitigate the negative impacts of AI-induced job insecurity on employee behaviors and well-being (Kim et al. 2024; Kim and Lee, 2025). Ethical leaders possess distinctive qualities such as honesty, trustworthiness, fairness, and a genuine concern for others (Treviño et al. 2003). Previous works have demonstrated that ethical leadership is linked to various favorable results inside an organization, such as increased job satisfaction, organizational loyalty, creativity, and performance (Bedi et al. 2016; Byun et al. 2018; Ng and Feldman, 2015). It is also linked to decreased counterproductive work behaviors like employee theft and sabotage (Mayer et al. 2012), and promotes a positive ethical climate that encourages ethical behavior among employees (Demirtas and Akdogan, 2015; Mayer et al. 2010).

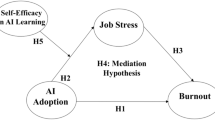

To understand how ethical leadership specifically moderates the relationship between AI adoption and psychological safety, we draw on our core theoretical frameworks. From the uncertainty reduction theory perspective (Berger and Calabrese, 1974), AI adoption inherently introduces significant uncertainty into the organizational environment through three primary mechanisms: (1) technological uncertainty about how AI will function and integrate with existing systems, (2) task uncertainty about how job roles and responsibilities will change, and (3) social uncertainty about how workplace relationships and power dynamics might be reconfigured. These inherent uncertainties in the AI technology itself and its adoption process directly threaten psychological safety by increasing ambiguity and reducing predictability (Fig. 1).

Ethical leadership moderates this relationship by specifically addressing and reducing these various forms of AI-related uncertainty. When ethical leadership is high, leaders proactively address the technological uncertainty by providing clear, honest information about the AI technology’s capabilities and limitations; they mitigate task uncertainty by transparently communicating how job roles will evolve and involving employees in adoption decisions; and they reduce social uncertainty by establishing fair processes for managing any workforce transitions (Brown and Treviño, 2006; Ng and Feldman, 2015). Through these specific mechanisms, ethical leadership directly attenuates the uncertainty inherent in AI adoption itself, thereby weakening the negative relationship between AI adoption and psychological safety.

From the conservation of resources (COR) perspective (Hobfoll, 1989), AI adoption threatens psychological safety by potentially depleting key employee resources in three ways: (1) by challenging existing expertise and skills, requiring new learning; (2) by potentially diminishing job autonomy and decision-making authority; and (3) by jeopardizing job security. These resource threats are direct consequences of the specific features of AI adoption – the technology’s capability to automate cognitive tasks, make predictions, and operate autonomously.

Ethical leadership specifically moderates this resource depletion process. When ethical leadership is high, leaders provide additional resources that directly counterbalance the specific threats posed by AI adoption: they offer skills development opportunities directly relevant to working alongside AI systems, ensure that AI adoption preserves meaningful human autonomy and decision rights, and provide resource security through clear communication about how AI will affect employment (Brown and Treviño, 2006; Ng and Feldman, 2015). These targeted resource provisions specifically buffer against the resource-depleting aspects of AI technology, thereby weakening the negative relationship between AI adoption and psychological safety.

Finally, from the job demands-resources (JD-R) perspective (Bakker and Demerouti, 2017), AI adoption increases job demands through: (1) learning requirements to work with new systems, (2) adaptation demands to new workflows, and (3) performance pressures to demonstrate value alongside AI. These increased demands are direct consequences of the specific nature of AI adoption, and they threaten psychological safety by increasing stress and reducing employees’ capacity for interpersonal risk-taking.

Ethical leadership specifically moderates this demands-resources ratio through the targeted provision of job resources that directly offset the demands created by AI. When ethical leadership is high, leaders provide learning resources specifically tailored to AI technologies, ensure realistic adaptation timelines that acknowledge the challenges of working with new AI systems, and implement fair performance evaluation systems that account for the transition period (Brown and Treviño, 2006; Al Halbusi et al. 2021). These focused responses to the specific demands created by AI weaken the negative relationship between AI adoption and psychological safety.

In contrast, when ethical leadership is low, leaders do not address the specific uncertainties, resource threats, and increased demands directly created by AI adoption, allowing these negative effects to more strongly impact psychological safety. In essence, ethical leadership moderates the AI adoption-psychological safety relationship by specifically targeting and counteracting the particular mechanisms through which AI adoption threatens psychological safety.

To illustrate this moderation effect, consider two contrasting scenarios of AI adoption. In a healthcare setting where AI diagnostic tools are being implemented, high ethical leadership would manifest as leaders who directly address the technological uncertainty by involving medical staff in testing phases and providing transparent information about the AI’s diagnostic capabilities and limitations. They would mitigate task uncertainty by clearly defining how physicians will complement and oversee AI diagnoses rather than be replaced by them. This targeted response to the specific uncertainties created by AI adoption weakens the negative relationship between AI adoption and psychological safety.

Conversely, in a similar healthcare setting with low ethical leadership, leaders might implement the same AI diagnostic technology without addressing its specific uncertainties and resource threats. They might provide minimal information about the technology’s limitations, exclude staff from adoption decisions, and be ambiguous about how AI will affect job roles and evaluation metrics. By failing to counteract the specific mechanisms through which AI threatens psychological safety, low ethical leadership allows the negative relationship between AI adoption and psychological safety to manifest more strongly.

These scenarios illustrate how ethical leadership specifically moderates the AI adoption-psychological safety relationship by targeting the particular mechanisms through which AI adoption affects psychological safety, rather than simply having a direct effect on psychological safety itself.

Taken together, these scenarios underscore that ethical leadership is pivotal in moderating the link between AI adoption and employee psychological safety. Ethical leaders shape employee perceptions of the change process by reducing uncertainty and providing crucial support, which weakens the harmful influence of AI adoption on psychological safety. Based on this reasoning, we propose the following hypothesis.

Hypothesis 5: Ethical leadership moderates the negative relationship between AI adoption and psychological safety, such that the relationship is weaker when ethical leadership is high.

Method

Subjects and Methodology

A cohort of individuals aged 20 and above, who were employed in various South Korean organizations, was involved in the research. The research followed a longitudinal three-wave approach, collecting data at three specific time intervals. A well-known online research firm, which oversees a database of around 5.84 million potential participants, recruited the individuals. During the enrollment process, participants were required to provide details about their employment status and verify their identity using either their mobile phone numbers or email addresses. Prior studies have shown that online surveys are a reliable and effective method for attracting diverse participants (Landers and Behrend, 2015).

This study aimed to collect data from currently employed individuals in South Korea at 3 specific time points to deal with the limitations of cross-sectional investigations. Through the digital platform, participant engagement was accurately tracked throughout the study to ensure consistent involvement in all stages of data collection. Surveys were carried out every 5 to 6 weeks and remained open for 2 to 3 days, allowing enough time for respondents to submit their answers. The platform employed advanced techniques like geo-IP restriction traps to prevent hastily submitted responses, thus upholding the integrity of the data. The survey company proactively contacted its members to ask them to take part in the research, emphasizing that their involvement was optional, and their responses would be kept private. Strict ethical guidelines were followed to ensure that participants provided informed consent. As a token of appreciation, respondents received a financial reward ranging from $10 to $11 for their participation.

To address potential sampling bias, the study utilized a method of selecting participants at random from different demographic and professional groups to reduce any associated biases. This method also allowed for precise tracking of participants across various digital platforms, ensuring that the same individuals were involved at every stage of the survey.

Measures

At Time 1, participants reported the degree of AI adoption in their organization and the ethical leadership of their leader. Psychological safety was measured at Time 2, and depression was measured at Time 3. All constructs were assessed with multi-item scales rated on a 5-point Likert scale.

AI Adoption in an organization (Point in Time 1, as reported by workers)

This study assessed the level of AI adoption with 5 items adapted from scales used in prior studies (Chen et al. 2023; Cheng et al. 2023; Jeong et al. 2024; Pan et al. 2022). The items were: “Our company uses artificial intelligence technology in its human resources management system”, “Our company uses artificial intelligence technology in its production and operations management systems”, “Our company uses artificial intelligence technology in its marketing and customer management systems”, “Artificial intelligence technology is used in our company’s strategic and planning systems”, and “Artificial intelligence technology is used in our company’s financial and accounting systems”. The scale exhibited a Cronbach’s α value of 0.943.
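For readers who want to see how a reliability coefficient of this kind is obtained, the following is a minimal sketch of the standard Cronbach's α formula. The response matrix is hypothetical and is not the study's data; the function name `cronbach_alpha` is our own.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    # Transpose so that each tuple holds all respondents' scores on one item.
    items = list(zip(*responses))
    item_variance_sum = sum(variance(col) for col in items)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)

# Hypothetical answers of five respondents to a five-item, 5-point scale.
hypothetical_responses = [
    [4, 4, 5, 4, 4],
    [2, 3, 2, 2, 3],
    [5, 5, 4, 5, 5],
    [1, 2, 1, 2, 1],
    [3, 3, 3, 4, 3],
]
alpha = cronbach_alpha(hypothetical_responses)
print(f"Cronbach's alpha = {alpha:.3f}")
```

The coefficient rises as the items covary more strongly relative to their individual variances, which is why highly consistent scales approach 1.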

Ethical leadership (Point in Time 1, as reported by workers)

The 10 items from the ethical leadership scale developed by Brown and his colleagues (2005) were used to assess ethical leadership. Item samples comprised: “My leader disciplines employees who violate ethical standards”, “My leader discusses business ethics or values with employees”, “My leader sets an example of how to do things the right way in terms of ethics”, “When making decisions, my leader asks what is the right thing to do”, “My leader listens to what employees have to say”, and “My supervisor can be trusted”. The scale exhibited a Cronbach’s α value of 0.867.

Psychological safety (Point in Time 2, as reported by workers)

Using seven items from a previously developed scale, this research determined the degree of psychological safety (Edmondson, 1999). It gauges how workers feel about their own psychological safety on the job. The items given as samples were: “It is safe to take a risk in this organization”, “I am able to bring up problems and tough issues in this organization”, “It is easy for me to ask other members of this organization for help”, and “No one in this organization would deliberately act in a way that undermines my efforts”. The items have been used in previous works conducted in the South Korean context (Kim et al. 2019, 2021). The scale exhibited a Cronbach’s α value of 0.783.

Depression (Point in Time 3, as reported by workers)

The CES-D-10, a measurement tool established by Andresen et al. (1994), was utilized to measure the extent of depression. It consists of 10 items. This measure assesses multiple dimensions of depression, encompassing feelings of hopelessness, dread, loneliness, unhappiness, and challenges related to focus and sleep. Item samples comprised: “I felt hopeful about the future,” “I felt lonely,” “I could not get going,” and “I felt depressed.” The scale exhibited a Cronbach’s α value of 0.948.
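Because the CES-D-10 contains positively worded items such as “I felt hopeful about the future”, such items are conventionally reverse-scored before aggregation. The sketch below illustrates this under several assumptions of ours: the item positions follow the standard CES-D-10 ordering, responses use the 5-point format described above, and the scale score is the item mean. The source does not report these scoring details, and the example responses are hypothetical.

```python
# Assumed 0-based positions of the two positively worded CES-D-10 items
# ("I felt hopeful about the future" and "I was happy") in the standard ordering.
POSITIVE_ITEMS = {4, 7}
SCALE_MIN, SCALE_MAX = 1, 5  # 5-point Likert format, as described in the text

def reverse_score(value):
    """Mirror a response around the scale midpoint (1 <-> 5, 2 <-> 4)."""
    return SCALE_MIN + SCALE_MAX - value

def depression_score(responses):
    """Mean of the 10 items after reverse-scoring the positively worded ones."""
    if len(responses) != 10:
        raise ValueError("CES-D-10 requires exactly 10 item responses")
    adjusted = [
        reverse_score(v) if i in POSITIVE_ITEMS else v
        for i, v in enumerate(responses)
    ]
    return sum(adjusted) / len(adjusted)

# Hypothetical respondent.
example = [2, 1, 3, 2, 4, 1, 2, 5, 1, 2]
print(depression_score(example))
```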

Control variables

Following the suggestions of prior studies (Lerner and Henke, 2008), this research included tenure, gender, position, and education level as control variables to account for their potential effects on depression. These control variables were collected at Time 1.

Statistical analysis

After data collection was complete, we used SPSS 28 to conduct a Pearson correlation analysis of the associations among the research variables. We then followed the two-step approach suggested by Anderson and Gerbing (1988), which comprises a measurement model and a structural model. The measurement model was validated using confirmatory factor analysis (CFA). The structural model was assessed with a moderated mediation analysis in AMOS 28, using structural equation modeling (SEM) with maximum likelihood (ML) estimation.

To address potential concerns about common method bias, we conducted Harman’s one-factor test as recommended by Podsakoff et al. (2003). This technique involves loading all items from all constructs into an exploratory factor analysis to determine whether a single factor emerges or whether one general factor accounts for the majority of the covariance among the measures. Our analysis revealed that the first factor explained 26.01% of the total variance, which is well below the threshold of 50% typically indicative of common method bias. Additionally, multiple factors emerged with eigenvalues greater than 1.0, collectively accounting for 78.23% of the total variance. These results suggest that common method bias is unlikely to be a significant concern in our study, though we acknowledge it cannot be entirely ruled out. The temporal separation of our independent, mediating, and dependent variables across three time points further helps mitigate potential common method variance (Podsakoff et al. 2012; Table 1).

The model’s goodness of fit with the empirical data was assessed using a variety of fit indices, such as the Comparative Fit Index (CFI), the Tucker-Lewis Index (TLI), and the Root Mean Square Error of Approximation (RMSEA). Following widely accepted conventions, CFI and TLI values greater than 0.90 and RMSEA values less than 0.06 were taken to indicate acceptable fit. A bootstrapping analysis with a 95% bias-corrected confidence interval (CI) was performed to provide further evidence for the mediation hypothesis. In accordance with Shrout and Bolger (2002), the indirect effect is statistically significant at the 0.05 level if the CI does not include zero.

Results

Descriptive statistics

The study found strong relationships between the factors of AI adoption, ethical leadership, psychological safety, and depression, as described in Table 2.

Measurement model

To evaluate the distinctiveness of the primary research variables (company-wide AI adoption, ethical leadership, psychological safety, and depression), we conducted a Confirmatory Factor Analysis (CFA) on all items and assessed the fit of the measurement model. The hypothesized four-factor model showed a good fit (χ2 = 320.824, CFI = 0.966, TLI = 0.961, RMSEA = 0.050). It was compared with three alternative models: a three-factor model (χ2 [df = 167] = 1020.988, CFI = 0.815, TLI = 0.789, RMSEA = 0.116), a two-factor model (χ2 = 1559.023, incremental fit index = 0.698, RMSEA = 0.147), and a one-factor model (χ2 = 3002.172, incremental fit index = 0.385, RMSEA = 0.209). Chi-square difference tests established that the four-factor model fit best among the models compared. These results indicate that the study variables have adequate discriminant validity.
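As a simple illustration, the fit criteria stated in the statistical analysis section (CFI and TLI above 0.90, RMSEA below 0.06) can be applied to the reported indices as follows. The helper `acceptable_fit` is a hypothetical name of ours, and the values passed to it reflect our reading of the figures reported for the four-factor and three-factor models.

```python
def acceptable_fit(cfi, tli, rmsea, min_incremental=0.90, max_rmsea=0.06):
    """Return True when all three indices meet the stated cutoffs."""
    return cfi > min_incremental and tli > min_incremental and rmsea < max_rmsea

# Indices as reported for the four-factor and three-factor measurement models.
four_factor_ok = acceptable_fit(cfi=0.966, tli=0.961, rmsea=0.050)
three_factor_ok = acceptable_fit(cfi=0.815, tli=0.789, rmsea=0.116)

print("four-factor acceptable:", four_factor_ok)
print("three-factor acceptable:", three_factor_ok)
```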

Structural model

A moderated mediation model, which combines mediation and moderation frameworks, was used to test the hypotheses. The mediation component examined whether psychological safety mediates the effect of AI adoption on depression. In the moderation component, ethical leadership was treated as a contextual factor that alters the effect of AI adoption on psychological safety.

To construct the interaction term for the moderation analysis, AI adoption and ethical leadership were each centered on their means and then multiplied. In addition to reducing multicollinearity, this approach avoids weakening the correlations among the variables, which improves the accuracy of the moderation analysis (Brace et al. 2003).
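The construction of the interaction term can be sketched as follows. The scores are hypothetical, and the function names are ours; the sketch only illustrates the mean-centering and multiplication steps described above.

```python
from statistics import mean

def mean_center(values):
    """Subtract the sample mean from every observation."""
    m = mean(values)
    return [v - m for v in values]

def interaction_term(x, w):
    """Product of the mean-centered predictor and the mean-centered moderator."""
    return [a * b for a, b in zip(mean_center(x), mean_center(w))]

# Hypothetical scale scores for five respondents.
ai_adoption = [1.0, 2.0, 3.0, 4.0, 5.0]
ethical_leadership = [2.0, 4.0, 3.0, 5.0, 1.0]

product = interaction_term(ai_adoption, ethical_leadership)
print(product)
```

After centering, each variable has a mean of zero, so the product term is less strongly correlated with its two components than a product of raw scores would typically be.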

Multicollinearity was examined using variance inflation factors (VIF) and tolerance values, following the procedures outlined by Brace et al. (2003). For both ethical leadership and AI adoption, the tolerance was 0.971 and the VIF was 1.030. VIF values below 10 and tolerance values above 0.2 indicate that multicollinearity is not a concern.
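The two diagnostics are reciprocals of each other (VIF = 1 / tolerance), so the reported values can be checked against one another and against the cutoffs. The function names in this short sketch are our own.

```python
def vif_from_tolerance(tolerance):
    """Variance inflation factor is the reciprocal of tolerance."""
    return 1.0 / tolerance

def multicollinearity_ok(tolerance, max_vif=10.0, min_tolerance=0.2):
    """Apply the cutoffs stated in the text (VIF < 10, tolerance > 0.2)."""
    return vif_from_tolerance(tolerance) < max_vif and tolerance > min_tolerance

reported_tolerance = 0.971
vif = vif_from_tolerance(reported_tolerance)
print(f"VIF = {vif:.3f}, acceptable = {multicollinearity_ok(reported_tolerance)}")
```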

Mediation analysis results

We identified the best mediation model by comparing a full mediation model and a partial mediation model with a chi-square difference test. Unlike the partial mediation model, the full mediation model omits the direct path from an organization’s AI adoption to depression. Both models showed good fit. The full mediation model yielded χ2 = 384.121 (df = 197), CFI = 0.954, TLI = 0.946, and RMSEA = 0.050. The partial mediation model yielded χ2 = 384.093 (df = 196), CFI = 0.954, TLI = 0.946, and RMSEA = 0.050.
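The reported RMSEA values can be reproduced from the chi-square statistics and degrees of freedom. The sketch below uses the common formula RMSEA = sqrt(max(χ2 − df, 0) / (df × (N − 1))) and assumes N = 381, the sample size stated in the abstract; some software divides by N rather than N − 1, which does not change the rounded results here.

```python
import math

def rmsea(chi2, df, n):
    """Root mean square error of approximation from chi-square, df, and sample size."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))

N = 381  # assumed analysis sample size, taken from the abstract

full_mediation = rmsea(384.121, 197, N)
partial_mediation = rmsea(384.093, 196, N)
three_factor_cfa = rmsea(1020.988, 167, N)  # three-factor measurement model

print(f"full = {full_mediation:.3f}, partial = {partial_mediation:.3f}, "
      f"three-factor = {three_factor_cfa:.3f}")
```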

The chi-square difference test showed no significant difference between the two models (Δ χ2 [1] = 0.028, p > 0.05), so the more parsimonious full mediation model was retained. This finding suggests that AI adoption affects depression indirectly through psychological safety rather than directly. The control variables (gender, education level, position, and tenure) were included in the model, but none had a significant effect on depression.
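The chi-square difference test for these nested models can be sketched as follows. For a difference with one degree of freedom, the upper-tail probability equals erfc(sqrt(x / 2)), so only the standard library is needed; the function name is ours, and it is valid for df = 1 only.

```python
import math

def chi2_upper_tail_df1(x):
    """Upper-tail p-value of a chi-square statistic with exactly 1 degree of freedom."""
    return math.erfc(math.sqrt(x / 2.0))

# Values reported for the full and partial mediation models.
delta_chi2 = 384.121 - 384.093
delta_df = 197 - 196
p_value = chi2_upper_tail_df1(delta_chi2)

print(f"delta chi-square = {delta_chi2:.3f}, df = {delta_df}, p = {p_value:.3f}")
```

A p-value far above 0.05 means that adding the direct path does not improve fit, which favors the model with fewer estimated paths.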

Hypothesis 1 was not supported: in the partial mediation model, the direct effect of AI adoption on depression was not statistically significant (β = 0.009, p > 0.05). Together with the nonsignificant chi-square difference test and the essentially identical fit indices of the two models, this result led us to select the more parsimonious full mediation model. The nonsignificant direct path and the model comparison together indicate that the effect of AI adoption on depression operates through psychological safety.

Furthermore, the study’s findings corroborate Hypothesis 2, which postulates that psychological safety is significantly negatively affected by the adoption of AI (β = −0.324, p < 0.001), and also support Hypothesis 3, which shows that psychological safety significantly negatively affects depression (β = −0.211, p < 0.001). Table 3 and Fig. 2 show the important findings and the relationships between the variables that were studied.

Bootstrapping analysis

Hypothesis 4, which proposes that psychological safety mediates the link between AI adoption in a company and depression, was tested using bootstrapping with 10,000 resamples. In line with the methodology put forth by Shrout and Bolger (2002), the indirect effect through psychological safety is deemed statistically significant if its 95% bias-corrected confidence interval (CI) does not contain zero (Fig. 3).
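To convey the logic of bootstrapping an indirect effect, the sketch below resamples synthetic data and forms a confidence interval for the product of the two paths. It departs from the study's procedure in several labeled ways: it uses a simple percentile interval rather than the bias-corrected interval, ordinary least squares rather than SEM, 1,000 rather than 10,000 resamples, and simulated rather than observed data.

```python
import random

def cov(u, v):
    """Sample covariance of two equally long sequences."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (len(u) - 1)

def indirect_effect(x, m, y):
    """a * b, where a is from m ~ x and b is the coefficient of m in y ~ m + x."""
    sxx, smm, sxm = cov(x, x), cov(m, m), cov(x, m)
    sxy, smy = cov(x, y), cov(m, y)
    a = sxm / sxx
    b = (smy * sxx - sxy * sxm) / (sxx * smm - sxm ** 2)
    return a * b

def percentile_bootstrap_ci(x, m, y, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the indirect effect (not bias-corrected)."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        estimates.append(indirect_effect(
            [x[i] for i in idx], [m[i] for i in idx], [y[i] for i in idx]))
    estimates.sort()
    lower = estimates[int(n_boot * alpha / 2)]
    upper = estimates[int(n_boot * (1 - alpha / 2)) - 1]
    return lower, upper

# Synthetic data in which x lowers m and m lowers y (true indirect effect 0.25).
data_rng = random.Random(42)
x = [data_rng.gauss(0, 1) for _ in range(200)]
m = [-0.5 * xi + data_rng.gauss(0, 1) for xi in x]
y = [-0.5 * mi + data_rng.gauss(0, 1) for mi in m]

low, high = percentile_bootstrap_ci(x, m, y)
print(f"95% percentile CI for the indirect effect: [{low:.3f}, {high:.3f}]")
```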

The 95% bias-corrected confidence interval (CI) for the indirect effect ranged from 0.025 to 0.127. Because this interval excludes zero, the mediating role of psychological safety is statistically significant. This result supports Hypothesis 4 and clarifies the mechanism linking AI adoption to depression. Table 4 reports the direct, indirect, and total effects of AI adoption on depression.
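As a rough consistency check, the product of the two standardized paths reported above (AI adoption to psychological safety, β = −0.324; psychological safety to depression, β = −0.211) can be compared with the reported interval. This product is our own back-of-the-envelope calculation, not the indirect effect estimate reported in Table 4.

```python
a_path = -0.324  # AI adoption -> psychological safety (reported)
b_path = -0.211  # psychological safety -> depression (reported)
ci_low, ci_high = 0.025, 0.127  # reported 95% bias-corrected CI

indirect = a_path * b_path  # product-of-coefficients point estimate

def excludes_zero(low, high):
    """A CI supports a nonzero effect when both bounds share the same sign."""
    return low > 0 or high < 0

print(f"implied indirect effect = {indirect:.3f}")
print("inside reported CI:", ci_low < indirect < ci_high)
print("CI excludes zero:", excludes_zero(ci_low, ci_high))
```

The positive sign reflects two negative paths: more AI adoption goes with lower psychological safety, and lower psychological safety goes with more depression.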

Moderation analysis results

This research also examined whether ethical leadership moderates the relationship between AI adoption and psychological safety. To test this, we mean-centered AI adoption and ethical leadership and multiplied them to create an interaction term. The interaction term was statistically significant (β = 0.211, p < 0.001), indicating that ethical leadership weakens the negative effect of AI adoption on psychological safety. This result supports Hypothesis 5.
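The direction of this moderation can be illustrated with conditional (simple) slopes, computed as the main-effect coefficient plus the interaction coefficient times the moderator value. The sketch below plugs in the reported standardized coefficients and, purely for illustration, treats moderator values of −1 and +1 as one standard deviation below and above the mean; these conditional slopes are our assumption-based illustration and are not values reported by the study.

```python
def conditional_slope(b_predictor, b_interaction, moderator_value):
    """Slope of the predictor at a given (centered) value of the moderator."""
    return b_predictor + b_interaction * moderator_value

B_AI_ADOPTION = -0.324  # reported effect of AI adoption on psychological safety
B_INTERACTION = 0.211   # reported interaction coefficient

# Illustrative moderator values, assumed to be in standard deviation units.
slope_low_el = conditional_slope(B_AI_ADOPTION, B_INTERACTION, -1.0)
slope_high_el = conditional_slope(B_AI_ADOPTION, B_INTERACTION, 1.0)

print(f"low ethical leadership:  {slope_low_el:.3f}")
print(f"high ethical leadership: {slope_high_el:.3f}")
```

Under these assumptions, the slope stays negative at both levels but is considerably closer to zero when ethical leadership is high, which matches the buffering pattern stated in Hypothesis 5.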

Discussion

This research explores the dynamics between AI adoption within organizations and its effects on employee depression, with psychological safety as a mediator and ethical leadership as a moderator. The results largely support the proposed theoretical model and shed light on the psychological impacts of integrating AI technologies into the workplace environment.

Although the JD-R model and relevant studies on technological advancements and worker well-being suggest that AI adoption should directly increase employee depression, our findings did not support a significant direct relationship (Hypothesis 1). Instead, AI adoption was related to depression indirectly, through reduced psychological safety. This pattern suggests that the psychological strains AI can impose on employees, by disrupting established job roles and increasing workplace stress and uncertainty (Chowdhury et al. 2023; Pereira et al. 2023), translate into depression mainly by eroding psychological safety. The results emphasize the necessity for organizations to acknowledge and mitigate the harmful effects of AI on mental health by implementing supportive measures during technological transitions (Budhwar et al. 2019; Delanoeije and Verbruggen, 2020).

Additionally, the research identifies a significant negative effect of AI integration on psychological safety within the workplace (Hypothesis 2), indicating that AI technologies may diminish the perceived support and inclusiveness of the work environment. This outcome is supported by socio-technical systems theory (Trist and Bamforth, 1951) and previous findings that link technological changes to reduced psychological safety (Edmondson and Lei, 2014; Kellogg et al. 2020). The disruption caused by AI can result in a work environment filled with ambiguity and fear, adversely affecting interpersonal trust and safety (Newman et al. 2017). Therefore, it’s crucial for organizations to cultivate a psychologically safe atmosphere that encourages open communication and risk-taking during periods of significant technological change (Carmeli and Gittell, 2009; Edmondson, 1999).

This research also demonstrates a substantial negative association between psychological safety and employee depression (Hypothesis 3), aligning with the COR theory and research on psychological safety’s protective role in employee well-being (Carmeli and Gittell, 2009; Idris et al. 2012). Psychological safety acts as a buffer, enabling employees to manage the demands and uncertainties of AI adoption more effectively, thus reducing the likelihood of depression. This protective mechanism is critical in helping employees adapt and remain resilient amidst technological advancements (Frazier et al. 2017). This underscores the critical function of psychological safety as a mediating mechanism that explains the AI adoption-depression link, as proposed in Hypothesis 4 and supported by the results.

Moreover, our results indicate that ethical leadership significantly moderates the impact of AI on psychological safety (Hypothesis 5), consistent with social exchange theory (Blau, 1964) and research emphasizing ethical leadership’s role during organizational transformations. Ethical leaders, by promoting fairness, transparency, and employee involvement, help to maintain a supportive work environment that lessens the adverse effects of AI on psychological safety. Their proactive engagement helps to establish a climate of trust and inclusivity, essential for navigating the uncertainties introduced by AI.

In summary, this study enriches the existing literature on AI, employee well-being, and leadership by detailing how AI adoption influences employee depression through the mechanisms of psychological safety and ethical leadership. The findings highlight the complex challenges posed by AI and the crucial roles of psychological safety and ethical leadership in mitigating these effects. They provide valuable insights and practical recommendations for organizations and leaders aiming to manage AI adoption effectively while promoting employee well-being and resilience.

Theoretical implications

This research makes substantial theoretical contributions to the understanding of AI adoption and employee well-being, applying and extending established psychological and organizational theories. Firstly, this study enriches the JD-R theory by applying it within the context of AI adoption and its influence on employee mental health. The present paper positions AI adoption as a significant job demand and psychological safety as a crucial job resource, illustrating how the JD-R framework can elucidate the intricate relationship among technological, psychological, and organizational factors affecting employee well-being in environments increasingly dominated by AI. The findings confirm that job demands associated with AI can deplete psychological resources, resulting in adverse mental health outcomes such as depression (Bakker and Demerouti, 2017; Pereira et al. 2023). Additionally, the current paper highlights the critical role of job resources, specifically psychological safety, in buffering the detrimental impacts of job demands on employee well-being (Bakker and Demerouti, 2017; Hu et al. 2018). By doing so, it enhances our understanding of the JD-R model’s relevance and utility in exploring AI adoption’s effects on employee mental health.

Secondly, the research extends the COR theory by spotlighting psychological safety as a crucial resource that alleviates the harmful impacts of AI adoption on employee depression. While the COR theory has traditionally been used to explain how various personal and organizational resources help cope with job stress and enhance well-being, the specific function of psychological safety in the context of AI transitions has received limited attention. By shedding light on the mediating role of psychological safety between AI adoption and employee depression, the study expands the COR theory’s framework and highlights the need for a psychologically safe workplace. In the face of rapid technological change, such a setting encourages workers to be resilient and adaptive (Newman et al. 2017). By extending COR theory to include psychological safety, this study opens new avenues for research into the impacts of AI on well-being and performance at work.

Thirdly, this study adds to our knowledge of social exchange theory by looking at the ways in which ethical leadership influences the relationships among AI adoption, psychological safety, and employee depression. For a long time, social exchange theory has helped us understand how leader-follower relationships impact employee attitudes and outcomes (Cropanzano et al. 2017; Gottfredson et al. 2020). But research into its potential applications in the context of AI adoption is still in its early stages, especially as it relates to the psychological well-being of workers. Expanding the theory’s applicability, this study shows that ethical leadership can lessen the harmful impact of AI adoption on psychological safety and depression. The importance of positive leader-follower interactions in promoting employee health and resilience in the face of technological change has been highlighted in recent research (Budhwar et al. 2022; Pereira et al. 2023). This theoretical development calls for additional research into the ways in which different types of leadership impact the social and psychological dynamics of AI-enhanced workplaces (Bankins et al. 2024).

The study brings together various theoretical perspectives, such as the JD-R model, COR theory, and social exchange theory, to develop a comprehensive and detailed framework that discusses the psychological impacts of AI adoption in organizations. This novel theoretical combination captures the complex interplay among technological, psychological, and social aspects that influence employee well-being and performance in AI-dominated environments. By amalgamating these theories, the research not only enhances its explanatory power but also sets the stage for future investigations into how these frameworks interact and define the circumstances under which they are applicable in the context of AI and mental health (Bankins et al. 2024; Budhwar et al. 2022). Furthermore, the study promotes a more interdisciplinary and multi-level approach to understanding the human impact of AI in the workplace by emphasizing the significance of considering various levels of analysis, ranging from individual psychological processes to interpersonal relationships and organizational practices.

Practical implications

The study’s findings have important practical implications for organizations implementing AI technologies.

First, organizations should implement structured psychological risk assessment protocols before and during AI adoption. Specifically, we recommend: (1) conducting pre-adoption psychological baseline assessments using validated depression and anxiety screening tools; (2) establishing quarterly pulse surveys focused on technology-related stress and psychological safety perceptions; and (3) developing AI-specific employee assistance programs with specialized counselors trained in technology-related workplace challenges.

Second, to foster psychological safety during AI transitions, organizations should implement targeted interventions at team and organizational levels: (1) create cross-functional AI learning communities where employees can openly discuss challenges without fear of judgment; (2) establish formal psychological safety metrics within team performance evaluations; (3) develop structured feedback protocols for AI-related concerns with guaranteed anonymity; and (4) implement “AI shadowing” programs where employees can safely practice alongside AI systems before full adoption.

Third, our findings on ethical leadership suggest specific leadership development initiatives: (1) create AI ethics training modules focused on transparent communication about AI limitations and realistic adoption timelines; (2) establish concrete decision-making frameworks that prioritize employee well-being in AI adoption decisions; (3) implement “AI fairness audits” led by managers to ensure equitable impact across different employee groups; and (4) develop specific feedback mechanisms for employees to report ethical concerns about AI adoption.

Fourth, organizations should adopt a comprehensive, human-centered approach to AI adoption by: (1) creating staged adoption plans with clear employee role evolution paths; (2) establishing cross-departmental AI governance committees with mandatory employee representation; (3) developing formal AI impact assessment protocols that measure both productivity and well-being outcomes; and (4) implementing “AI co-design” workshops where employees actively participate in customizing AI tools for their specific work contexts.

These concrete interventions address the specific psychological mechanisms identified in our research and provide organizations with actionable strategies to mitigate the negative effects of AI adoption on employee mental health while maximizing the benefits of these technologies.

Limitations and suggestions for future research

This study adds significantly to the ongoing conversation over AI adoption and its effects on worker welfare. However, the study’s limitations call for further investigation into the interplay between AI, psychological safety, the mental health of employees, and ethical leadership in the workplace.

First, although the study employs a three-wave time-lagged design, each variable was measured at only one time point, which makes it difficult to draw firm causal conclusions about the relationships among the variables (Spector, 2019). Despite being grounded in well-established theories such as the JD-R and COR theories, the study cannot rule out reverse causality or reciprocal relationships among the variables. To better establish the temporal ordering of these relationships and provide stronger causal evidence, future studies should use experimental designs or fully longitudinal designs in which all variables are measured at every wave (Spector, 2019).

Second, the study focuses on a limited set of factors that influence the connection between AI integration and employee welfare. While psychological safety and ethical leadership are important, other variables could also affect this relationship (Bankins et al. 2024; Dwivedi et al. 2021; Pereira et al. 2023). Future research could explore additional mediators, such as technostress, perceived organizational support, job insecurity, and job stress, as well as additional moderators, such as employee receptiveness to change, technological self-assurance, adaptability, levels of training and support during AI adoption, organizational culture, industry characteristics, and national context. Considering these factors could lead to a more comprehensive framework for understanding how AI adoption affects employee well-being.

Third, common method bias is possible because this study relies mostly on self-reported data to evaluate the variables (Podsakoff et al. 2012). To address this, future research could draw on multiple data sources, such as evaluations from supervisors or objective measures of employee happiness and organizational success (Podsakoff et al. 2012). In addition, qualitative methods such as focus groups or interviews could complement the quantitative results by offering deeper insights into employees’ personal experiences and perceptions regarding AI adoption, psychological safety, and mental health (Pereira et al. 2023; Bankins et al. 2024; Dwivedi et al. 2021).

Fourth, the research, although informative, has limited generalizability due to the specific organizational and cultural contexts in which it was carried out. The impact of AI on employee well-being is likely to vary across different industries, job roles, and cultural environments (Dwivedi et al. 2021; Pereira et al. 2023). To improve the understanding of how these findings can be applied, future research should investigate these dynamics in diverse organizational and cultural settings, thus defining the boundaries and broader significance of the established connections. Additionally, the utilization of multi-level research designs could yield deeper insights into how individual, team, and organizational factors interact to shape the experiences of employees in AI-driven environments (Newman et al. 2017).

Fifth, AI in the workplace may be associated with health problems other than depression, which this study did not address. To understand the psychological effects of AI more fully, future research should investigate a broader range of outcomes, including anxiety, burnout, work-life balance, and job satisfaction (Wang and Siau, 2019). Organizations could also gain valuable insights into the costs of AI adoption and ways to mitigate its negative effects by examining the downstream consequences of employee depression, such as absenteeism, turnover, and job performance (Delanoeije and Verbruggen, 2020; Kellogg et al. 2020).

Lastly, the present study focuses primarily on how AI can negatively affect employee well-being, with a particular emphasis on psychological safety and depression. However, AI adoption can also have a positive impact on employee well-being by improving job satisfaction, increasing work engagement, and creating more opportunities for personal growth. Future research should take a more balanced approach, exploring both the advantageous and detrimental impacts of AI on employee well-being. Such research should aim to identify the specific conditions under which AI helps employees not only cope but also thrive in today’s workplace (Budhwar et al. 2022; Luu, 2019). Taking this wider view could offer a more comprehensive understanding of the dual effects of AI, helping organizations advance both technological progress and employee well-being.

Conclusion

The rapid adoption and integration of AI at work is having a profound impact on the physical and mental health of employees. Given that AI is radically altering work processes and the overall employee experience, it is critical to examine the psychological risks and challenges that these technological advances entail. This research explores the link between AI adoption and employee depression, focusing on the mediating effect of psychological safety and the moderating role of ethical leadership.

The current paper draws on multiple theories to develop and support an integrated perspective on the intricate relationship between AI adoption, employee mental health, psychological safety, and ethical leadership. The results show that AI adoption is associated with higher levels of employee depression through reduced psychological safety. It is therefore important to acknowledge and address the harmful influence of technological advancement on workers’ mental health. Because psychological safety plays a key mediating role in explaining the impact of AI adoption on employee depression, organizations should create a culture that promotes trust, open communication, and interpersonal risk-taking.

In addition, the research underscores the significance of ethical leadership in mitigating the negative impacts of AI adoption on psychological well-being and employee morale. Leaders who demonstrate ethical conduct by establishing clear ethical standards, ensuring transparency, involving employees in decision-making, and prioritizing their welfare can encourage positive interactions with employees, thereby mitigating the psychological challenges associated with AI integration. These findings support a people-focused and ethically aware approach to AI adoption, which carefully weighs technological progress against considerations for employee welfare and ethical principles.

The findings of this study hold particular significance for the Korean market, which has recently experienced accelerated AI adoption across multiple sectors. South Korea’s position as a global technology leader with the highest robot density in manufacturing and rapid AI integration in service industries creates a distinctive context for our research. Korean companies face unique challenges in AI adoption due to their hierarchical organizational structures, high power distance cultural values, and a strong emphasis on social harmony that can impact psychological safety (Kim et al. 2021). Our results suggest that the negative psychological impacts of AI adoption may be especially pronounced in the Korean work environment, where job security is highly valued and technological changes can disrupt established social dynamics. The moderating effect of ethical leadership identified in our study is particularly relevant for Korean organizations, as it offers a culturally congruent approach that aligns with traditional Confucian values of benevolent leadership while addressing modern technological challenges. As the Korean government continues to promote its Digital New Deal policy and AI adoption accelerates across industries, our findings provide timely guidance for Korean organizations seeking to implement AI technologies while safeguarding employee well-being through enhanced psychological safety and ethical leadership practices (Kim and Lee, 2024).

This paper offers meaningful insights into the psychological effects of implementing AI in organizational settings and highlights the essential functions of psychological safety and ethical leadership in mitigating negative impacts on employee mental well-being. With the continuous advancement and integration of AI technology in our workplaces, it is imperative for organizations and leaders to prioritize the welfare of their employees, foster a supportive and inclusive working environment, and embrace an ethical approach that prioritizes people when incorporating AI. The findings of the current research offer fundamental knowledge and practical advice for present and future investigations into AI adoption and employee welfare, advocating for a more psychologically informed and socially responsible management of technological changes in the work environment.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author upon reasonable request.

References

Al Halbusi H, Williams KA, Ramayah T, Aldieri L, Vinci CP (2021) Linking ethical leadership and ethical climate to employees’ ethical behavior: the moderating role of person–organization fit. Personnel Review 50(1):159–185

Amankwah-Amoah J (2020) Stepping up and stepping out of COVID-19: New challenges for environmental sustainability policies in the global airline industry. Journal of Cleaner Production 271:123000

Anderson JC, Gerbing DW (1988) Structural equation modeling in practice: A review and recommended two-step approach. Psychological Bulletin 103(3):411–423

Andresen EM, Malmgren JA, Carter WB, Patrick DL (1994) Screening for depression in well older adults: Evaluation of a short form of the CES-D. American Journal of Preventive Medicine 10(2):77–84

Baer M, Frese M (2003) Innovation is not enough: Climates for initiative and psychological safety, process innovations, and firm performance. Journal of Organizational Behavior 24(1):45–68

Baker S, Xiang W (2023) Explainable AI is responsible AI: How explainability creates trustworthy and socially responsible artificial intelligence. arXiv preprint arXiv:2312.01555

Bakker AB, Demerouti E (2017) Job demands-resources theory: Taking stock and looking forward. Journal of Occupational Health Psychology 22(3):273–285

Bankins S, Ocampo AC, Marrone M, Restubog SLD, Woo SE (2024) A multilevel review of artificial intelligence in organizations: Implications for organizational behavior research and practice. Journal of Organizational Behavior 45(2):159–182

Barley SR, Bechky BA, Milliken FJ (2017) The changing nature of work: Careers, identities, and work lives in the 21st century. Academy of Management Discoveries 3(2):111–115

Bedi A, Alpaslan CM, Green S (2016) A meta-analytic review of ethical leadership outcomes and moderators. Journal of Business Ethics 139(3):517–536

Benbya H, Davenport TH, Pachidi S (2020) Artificial intelligence in organizations: Current state and future opportunities. MIS Quarterly Executive 19(4):1–13

Berger CR, Calabrese RJ (1974) Some explorations in initial interaction and beyond: Toward a developmental theory of interpersonal communication. Human Communication Research 1(2):99–112

Blau PM (1964) Exchange and power in social life. Wiley, New York

Borges AF, Laurindo FJ, Spínola MM, Gonçalves RF, Mattos CA (2021) The strategic use of artificial intelligence in the digital era: Systematic literature review and future research directions. International Journal of Information Management 57:102225

Bostrom N, Yudkowsky E (2014) The ethics of artificial intelligence. In K Frankish & WM Ramsey (Eds.), The Cambridge handbook of artificial intelligence (pp. 316–334). Cambridge University Press

Brace N, Kemp R, Snelgar R (2003) SPSS for psychologists: A guide to data analysis using SPSS for Windows (2nd ed.). Palgrave, London

Braganza A, Chen W, Canhoto A, Sap S (2021) Productive employment and decent work: The impact of AI adoption on psychological contracts, job engagement and employee trust. Journal of Business Research 131:485–494

Brem A, Giones F, Werle M (2021) The AI digital revolution in innovation: A conceptual framework of artificial intelligence technologies for the management of innovation. IEEE Transactions on Engineering Management 70(2):770–776

Brock JKU, Von Wangenheim F (2019) Demystifying AI: What digital transformation leaders can teach you about realistic artificial intelligence. California Management Review 61(4):110–134

Brougham D, Haar J (2018) Smart technology, artificial intelligence, robotics, and algorithms (STARA): Employees’ perceptions of our future workplace. Journal of Management & Organization 24(2):239–257

Brown ME, Treviño LK (2006) Ethical leadership: A review and future directions. The Leadership Quarterly 17(6):595–616

Brown ME, Treviño LK, Harrison DA (2005) Ethical leadership: A social learning perspective for construct development and testing. Organizational Behavior and Human Decision Processes 97(2):117–134

Budhwar P, Malik A, De Silva M, Thevisuthan P (2022) Examining the impact of artificial intelligence on employee wellbeing and performance: The moderating role of ethical leadership. Journal of Business Research 151:336–348. https://doi.org/10.1016/j.jbusres.2022.07.012

Budhwar P, Chowdhury S, Wood G, Aguinis H, Bamber GJ, Beltran JR, Varma A (2023) Human resource management in the age of generative artificial intelligence: Perspectives and research directions on ChatGPT. Human Resource Management Journal 33(3):606–659

Budhwar P, Pereira V, Mellahi K, Singh SK (2019) The state of HRM in the Middle East: Challenges and future research agenda. Asia Pacific Journal of Management 36:905–933

Bughin J, Hazan E, Ramaswamy S, Chui M, Allas T, Dahlström P (2023) Artificial intelligence: The next digital frontier? McKinsey Global Institute

Byun G, Karau SJ, Dai Y, Lee S (2018) A three-level examination of the cascading effects of ethical leadership on employee creativity: A moderated mediation analysis. Journal of Business Research 88:44–53

Carmeli A, Gittell JH (2009) High-quality relationships, psychological safety, and learning from failures in work organizations. Journal of Organizational Behavior 30(6):709–729

Chen Y, Hu Y, Zhou S, Yang S (2023) Investigating the determinants of performance of artificial intelligence adoption in hospitality industry during COVID-19. International Journal of Contemporary Hospitality Management 35(8):2868–2889

Cheng B, Lin H, Kong Y (2023) Challenge or hindrance? How and when organizational artificial intelligence adoption influences employee job crafting. Journal of Business Research 164:113987

Chowdhury S, Dey P, Joel-Edgar S, Bhattacharya S, Rodriguez-Espindola O, Abadie A, Truong L (2023) Unlocking the value of artificial intelligence in human resource management through AI capability framework. Human Resource Management Review 33(1):100899

Cropanzano R, Anthony EL, Daniels SR, Hall AV (2017) Social exchange theory: A critical review with theoretical remedies. Academy of Management Annals 11(1):479–516