Abstract

Addressing the challenges of time-consuming and labor-intensive traffic data collection and annotation, along with the limitations of current deep learning models in practical applications, this paper proposes a cross-domain object detection transfer method based on digital twins. A digital twin traffic scenario is constructed on a simulation platform to generate a virtual traffic dataset. To address the distributional discrepancies between virtual and real datasets, a multi-task object detection algorithm based on graph matching is introduced. The algorithm employs a graph matching module to align the feature distributions of the source and target domains, followed by a multi-task network for object detection. An attention mechanism is then applied for instance segmentation; because the two tasks exhibit different noise patterns, learning them jointly improves the robustness of the learned representations. Additionally, a multi-level discriminator is designed that performs adversarial training on both low- and high-level features, enabling the tasks to share useful information and improving the object detection performance of the proposed method. Comprehensive comparative experiments against various state-of-the-art methods demonstrate the practical value of the generated virtual dataset and validate the effectiveness of the proposed graph-matching-based transfer method. These findings highlight the dataset’s capacity to enhance task performance and underscore the robustness and adaptability of the proposed approach in diverse scenarios.


Introduction

With the rapid development of artificial intelligence and autonomous driving technologies, computer vision faces significant challenges in executing diverse perception tasks within complex environments1. Deep learning algorithms typically rely on large volumes of annotated data for training; however, this data-driven paradigm has several limitations in practical applications2,3: (1) Collecting and annotating large-scale, diverse datasets requires substantial resources, and human annotation errors can degrade data quality, limiting the generalizability of visual models; (2) Environmental factors, such as weather and lighting, are challenging to control in real-world scenarios, complicating efforts to study their precise effects on visual perception; (3) Data from unique or extreme situations, such as traffic accidents or satellite debris, is difficult to collect, further impacting the performance of visual models; (4) In real-world applications, datasets often exhibit long-tail distributions, where a few categories dominate, leaving most categories underrepresented and thereby reducing the overall generalization capability of the models.

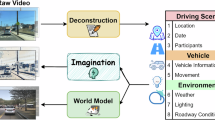

Expanding and enhancing dataset diversity is critical for addressing these challenges. Digital twin technology, which bridges the physical and virtual worlds through simulation, allows the creation of richly annotated traffic data within virtual environments3. By simulating elements such as object position, type, semantic segmentation, and movement trajectories, digital twins can augment real-world data, improving the generalization of deep learning models in perception tasks.

In this context, we propose a cross-domain object detection method leveraging virtual simulation data. Specifically, virtual datasets are integrated with existing real-world datasets to pre-train visual perception models, which are then transferred to target domains to mitigate data insufficiency in complex traffic scenarios. Traditional deep learning models operate under the assumption that training and testing data share the same distribution—a condition frequently violated in real-world applications. Directly applying models trained in one domain to another often results in significant performance degradation. Therefore, addressing cross-domain distributional discrepancies constitutes a primary objective of this study.

However, existing methods pay limited attention to multi-task domain-adaptive object detection. Single-domain adaptation frameworks typically address an individual task, such as object detection or image segmentation. When multiple datasets are involved, domain-adaptive object detection models may introduce additional noise while aligning the source and target domain distributions4. Because different tasks, such as object detection and instance segmentation, exhibit distinct noise patterns, learning them jointly enables the model to learn more robust representations, thereby improving generalization and reducing task-specific noise.

In summary, this study integrates virtual simulation platforms with digital twin technology to construct digital twin traffic scenarios. By controlling environmental variables such as weather, lighting, and visibility in the virtual environment, we generate image and sensor data under various conditions, producing a richly annotated virtual traffic dataset. Considering the distributional differences between virtual and real datasets, we propose a graph matching-based multi-task object detection algorithm consisting of three modules: a graph matching module, a multi-task network, and a multi-level discriminator. The graph matching module aligns the feature distributions of the source and target domains by constructing graph-based features and completing missing semantics. The multi-task network uses the image-level features \(F_{s/t}\) of the source and target domains for object detection and applies an attention mechanism for instance segmentation. The multi-level discriminator conducts adversarial training at both low- and high-level feature layers, exploiting the relationships between the detection and segmentation tasks to align the feature distributions of the source and target domains. The contributions of this study are summarized as follows:

- A novel transfer learning method combining virtual and real-world data is proposed to address the challenge of object detection in complex traffic scenarios, where training data are insufficient and inconsistent in their characteristics.

- Leveraging an advanced virtual simulation platform, this study constructs a digital twin traffic scenario and generates diverse virtual traffic datasets by controlling environmental variables such as weather, lighting, and visibility. The richness and precision of this dataset make it a strong supplement to real-world data for traffic perception tasks, greatly reducing the cost of data collection.

- A graph matching-based multi-task object detection algorithm is introduced. Because different tasks, such as object detection and instance segmentation, exhibit distinct noise patterns, the interplay between tasks enables the multi-task model to learn more robust feature representations.

Related works

Research on virtual traffic scene construction

With advancements in simulation technology, the construction of virtual traffic scenes has garnered significant attention, leading to the development of numerous novel virtual datasets. Ros et al. established a small, open virtual traffic environment and collected semantically annotated data through an embedded camera under various weather conditions and viewing angles. They generated the SYNTHIA virtual dataset5 to train DCNNs for semantic segmentation in driving scenes. Similarly, Gaidon et al. proposed a cloning approach from real to virtual worlds and introduced the VKITTI video dataset for multi-object tracking6. Li et al. developed a method using the Unity3D engine and CityEngine 3D city models to construct artificial scenes, resulting in the ParallelEye virtual dataset7. Building on this, Li et al. continued to create simulation environments for autonomous driving scenarios and generated the ParallelEye-CS dataset8. As simulation engines progressed, Cabon et al. introduced the VKITTI2 dataset9, and Dokania et al. presented a method for transforming road scene datasets into virtual datasets10. In response to the needs of V2X autonomous driving technology, Li et al. proposed the V2X-Sim virtual dataset for V2X-assisted driving applications11.

Research on cross-domain object detection methods

In computer vision, domain adaptation methods for object detection focus on transferring models trained in a source domain to a target domain. Chen et al. pioneered domain adaptation for object detection with the DAFasterRCNN algorithm12. Shan et al. incorporated the CycleGAN model12 at the image level and applied traditional loss functions at the feature level13. Saito et al. demonstrated that strong global feature alignment is not always optimal, proposing a method that strongly aligns local features while weakly aligning global features14. Kim et al. generated diverse labeled data and utilized multi-domain discriminators15. Zhu et al. approached the problem from a regional perspective, aligning region-level features to improve distribution alignment16. Khodabandeh et al. introduced domain adaptation training with noise perturbation17. Sindagi et al. designed a domain adaptation method with prior knowledge, which proved effective even under challenging conditions such as rainy and foggy environments18. Hsu et al. emphasized feature center alignment to enhance adaptation to foreground regions19. He et al. introduced the unsupervised domain adaptive Asymmetric Tri-training method to address distributional differences between the source and target domains20. Xu et al. connected the backbone of object detection models with image-level multi-label classifiers and trained them with supervision from the source domain21.

Research on graph matching methods

Graph matching is a crucial component of graph models, in which feature matching between images can be transformed into a vertex matching problem between graphs22,23. Graph matching establishes one-to-one correspondences between the nodes of two graphs, considering both node and structural affinities24. Because graph matching is a combinatorial quadratic assignment problem, Loiola et al. optimized the affinity matrix between images to encode node correspondences25. Recently, by modeling pairwise relationships in graph space, graph matching has been applied in various fields, such as object detection, multi-object tracking, point cloud matching, and transfer learning. Gao et al. modeled keypoint-based graph structures in images and applied graph matching to identical objects26. Fu et al. modeled graph structures in 3D point clouds and performed graph matching to achieve more robust point cloud alignment27. He et al. applied graph matching in trajectory and detection space for high-quality object tracking28. Differing from these scenarios, this study reformulates domain adaptive object detection as a graph matching problem and leverages the solution of the quadratic assignment problem to bridge the domain gap.
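To make the quadratic assignment view concrete, the following is a minimal Python sketch of Lawler-style graph matching. With a binary assignment vector x (the vectorized permutation matrix) and an affinity matrix K whose diagonal holds node affinities and whose off-diagonal entries hold edge affinities, matching maximizes \(x^{\top} K x\). The Gaussian affinity functions, the function names, and the exhaustive search are illustrative assumptions; exhaustive search is feasible only for a handful of nodes and is not intended to represent the solver used in this study.

```python
import itertools

import numpy as np


def affinity_matrix(feat1, feat2, adj1, adj2, sigma=1.0):
    """Build the n^2 x n^2 affinity matrix K (Lawler's QAP form).

    Entry K[i*n + a, j*n + b] scores matching i->a together with j->b.
    Diagonal entries hold node affinities; off-diagonal entries compare
    edge (i, j) in graph 1 with edge (a, b) in graph 2.
    """
    n = feat1.shape[0]
    K = np.zeros((n * n, n * n))
    for i, a, j, b in itertools.product(range(n), repeat=4):
        row, col = i * n + a, j * n + b
        if i == j and a == b:
            # Node affinity: Gaussian kernel on feature distance (assumed form).
            d = np.linalg.norm(feat1[i] - feat2[a])
            K[row, col] = np.exp(-d ** 2 / sigma)
        elif i != j and a != b:
            # Edge affinity: reward similar edge weights (assumed form).
            K[row, col] = np.exp(-(adj1[i, j] - adj2[a, b]) ** 2 / sigma)
    return K


def brute_force_match(K, n):
    """Return the permutation maximizing x^T K x (exhaustive, tiny n only)."""
    best_perm, best_score = None, -np.inf
    for perm in itertools.permutations(range(n)):
        x = np.zeros(n * n)
        for i, a in enumerate(perm):
            x[i * n + a] = 1.0  # row-major vectorized assignment matrix
        score = x @ K @ x
        if score > best_score:
            best_perm, best_score = perm, score
    return best_perm, best_score


# Graph 2 is a relabeled copy of graph 1: node i of graph 1 is node perm[i].
rng = np.random.default_rng(0)
feat1 = rng.normal(size=(4, 3))  # node features of graph 1
pts = rng.normal(size=(4, 2))    # node positions used to define edge weights
adj1 = np.linalg.norm(pts[:, None] - pts[None], axis=-1)
perm = [2, 0, 3, 1]
feat2 = np.empty_like(feat1)
feat2[perm] = feat1
adj2 = np.empty_like(adj1)
adj2[np.ix_(perm, perm)] = adj1

best, score = brute_force_match(affinity_matrix(feat1, feat2, adj1, adj2), 4)
print(best, round(float(score), 3))
```

Because graph 2 is a relabeled copy of graph 1, the maximizing permutation recovers the relabeling. Practical solvers replace the exhaustive search with relaxations such as spectral matching or Sinkhorn normalization.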

Digital twin scenario and virtual dataset generation

Virtual scenario simulation platform

The autonomous driving simulation platform is built on real physical models and is equipped with a comprehensive library of vehicle models and sensors to meet diverse needs. It integrates virtual scenario construction and simulation functions. In this study, Prescan, a technically mature autonomous driving simulation platform, is employed for virtual scenario construction and simulation, as presented in Fig. 1.

Prescan, built on the Unreal3D engine and physical models, is an autonomous driving simulation platform. The process is divided into four parts: building the virtual environment, configuring sensors, setting up the simulation module, and conducting simulation experiments. The platform boasts a rich model library, including a variety of building models, trees, traffic signs, and vehicles. Roads can be manually constructed or automatically imported using OpenStreetMap (OSM) or OpenDrive formats. In terms of sensors, Prescan offers a wide range, including monocular and stereo cameras, radar, and ground truth sensors like 2D bounding box, point cloud, semantic, and target sensors. For vehicle control systems, Prescan allows integration with compiled models in MATLAB’s Simulink or the use of external models (e.g., CarSim’s vehicle dynamics models) for co-simulation. The platform also provides visualization tools for analyzing outputs during simulation and enables the storage of sensor data.

Digital twin modeling

Prescan provides a variety of car, truck, and pedestrian models but lacks options for bicycles, motorcycles, and buses, which must be imported from external sources. Digital twin modeling is implemented in accordance with parallel intelligence simulation theory. The process begins by capturing real-world traffic scene elements such as vehicles, non-motorized vehicles, pedestrians, roadside trees, buildings, lane markings, and signs from street maps. Buildings are either manually modeled or imported from external 3D model libraries using tools like SketchUp. Scene modeling is further divided into two levels: coarse-grained geometric simulation and fine-grained instance-level simulation:

1. Geometric simulation: This step creates coarse-grained models of the primary entities in a traffic scene (e.g., roads, buildings) using geometric information, such as dimensions and positions, observed in real environments. This approach provides a visual representation of the major entities within the virtual environment. Owing to its low precision and high computational efficiency, it supports global traffic scene simulation, offering macro-level visualization of the entire environment. For example, Fig. 2 illustrates a modeled building entity.

2. Instance-level simulation: In a digital twin, fine-grained instance simulation is necessary to accurately replicate material and texture properties for comprehensive scenario simulation. Based on attention mechanisms and related theories, instance-level simulations of traffic vehicles and pedestrians are carried out by creating or importing detailed 3D models to meet task-specific requirements within the traffic scene.

Digital twin scenario generation

Following the digital twin modeling theory, geometric models of roadside buildings are first generated using real street maps. The buildings’ size, texture, and color are refined to closely match their real-world counterparts. After completing the geometric model, it is imported into Prescan’s static model library. Next, OSM-format road network files are imported into the virtual environment, visualizing the road models. Using the road network as a reference frame, 3D models of buildings, fences, and traffic signs are placed according to the relative positions observed in the map. Tree models are placed at 15-m intervals to replicate the roadside environment, completing the static elements of the virtual traffic scene as shown in Fig. 3.

After constructing the static scene, dynamic elements, including vehicles and pedestrians, are modeled at the instance level. Based on common real-world traffic scenes, seven categories of elements are simulated: persons, cars, trucks, buses, trains, motorcycles, and bicycles. To mitigate the long-tail distribution of traffic object categories, the density of bicycles, motorcycles, and buses is increased. The digital twin traffic scene generated by the simulation platform is shown in Figs. 4 and 5.

Using the platform’s weather, lighting, and visibility control modules, weather conditions such as fog, rain, and snow are simulated by adjusting meteorological parameters. Lighting conditions at any time of day are simulated by modifying the visibility and the sun’s angle and height, as shown in Fig. 6. These settings enable the creation of diverse environmental scenarios.

In addition to weather conditions, the platform provides diverse environments, including cityscapes, snowy landscapes, and deserts, while supporting the import of external landscape images, such as polar regions, tropical rainforests, and karst formations. Various ground textures—such as concrete, tiles, grass, and sand—are available, with the option to import external images as surface textures into the virtual environment. Traffic elements within the virtual environment, including traffic lights, road signs, vehicles, and pedestrians, are designed as highly controllable models. Traffic lights can be networked to share synchronized timing configurations or individually programmed for specific streets. Vehicle and pedestrian routes can be manually configured through designated trajectory points or automatically linked to roads for seamless operation. Additionally, randomized movement can be defined within specified areas. By integrating dynamic traffic elements with traffic lights, the virtual environment can realistically replicate real-world traffic scenarios. The digital twin traffic environment generated by the simulation platform enables the rapid creation of large-scale, highly controllable, all-weather virtual traffic scenes, combining both coarse-grained and fine-grained modeling techniques.

Virtual dataset generation

The generation of a virtual dataset requires the continuous collection of images throughout the simulation process. A proven approach is to equip a moving vehicle with camera sensors and bounding box detectors that continuously capture images and other data. In this study, additional curved roads are incorporated into the existing digital twin environment so that the frequent changes in camera perspective as the vehicle navigates the curves increase the complexity of the dataset. To further reduce excessive similarity between images, the sensor sampling frequency is lowered to 10 Hz, and the vehicle speed is increased from 10 m/s to 15 m/s (54 km/h). This adjustment significantly reduces the similarity between consecutive images.
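The effect of these settings can be checked with simple arithmetic: the distance travelled between consecutive frames is the vehicle speed divided by the sampling frequency. The sketch below assumes that the 10 Hz rate applies at both speeds; the function name is illustrative.

```python
def frame_spacing(speed_mps: float, sample_hz: float) -> float:
    """Distance (m) travelled by the ego vehicle between consecutive frames."""
    return speed_mps / sample_hz


def mps_to_kmh(speed_mps: float) -> float:
    """Convert metres per second to kilometres per hour."""
    return speed_mps * 3.6


before = frame_spacing(10.0, 10.0)  # spacing at 10 m/s and 10 Hz
after = frame_spacing(15.0, 10.0)   # spacing at 15 m/s and 10 Hz
print(f"{before:.1f} m -> {after:.1f} m per frame at {mps_to_kmh(15.0):.0f} km/h")
```

Raising the speed from 10 m/s to 15 m/s at 10 Hz increases the spacing between frames from 1.0 m to 1.5 m, which is what reduces the overlap between adjacent images.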

Once the detectability of the traffic elements, as well as the weather and lighting conditions, has been set, the simulation is initiated. By the end of the simulation, the sensors have recorded both image data and bounding box annotations. The post-simulation process involves matching these two data streams, an essential step in data handling. The bounding box data are converted to the standard PASCAL VOC format, and the corresponding annotation files are generated. Although a large-scale virtual dataset is initially created from the simulation, it cannot be applied directly to computer vision tasks because the annotation data are unfiltered. As shown in Fig. 7, unfiltered annotations often include noise, such as distant, blurry objects treated as valid targets.
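The conversion to PASCAL VOC can be sketched with Python's standard `xml.etree.ElementTree` module. The input tuple format `(label, xmin, ymin, xmax, ymax)`, the image size, and the file name are hypothetical placeholders; the export format of the simulation sensors is not described here, so parsing it is left out.

```python
import xml.etree.ElementTree as ET


def to_voc_xml(filename, width, height, boxes):
    """Build a PASCAL VOC-style annotation tree for one image.

    boxes: list of (label, xmin, ymin, xmax, ymax) in pixel coordinates.
    This input layout is a hypothetical intermediate format.
    """
    root = ET.Element("annotation")
    ET.SubElement(root, "filename").text = filename
    size = ET.SubElement(root, "size")
    ET.SubElement(size, "width").text = str(width)
    ET.SubElement(size, "height").text = str(height)
    ET.SubElement(size, "depth").text = "3"  # RGB images
    for label, xmin, ymin, xmax, ymax in boxes:
        obj = ET.SubElement(root, "object")
        ET.SubElement(obj, "name").text = label
        ET.SubElement(obj, "difficult").text = "0"
        bndbox = ET.SubElement(obj, "bndbox")
        for tag, value in zip(("xmin", "ymin", "xmax", "ymax"),
                              (xmin, ymin, xmax, ymax)):
            # VOC stores integer pixel coordinates.
            ET.SubElement(bndbox, tag).text = str(int(round(value)))
    return ET.ElementTree(root)


tree = to_voc_xml("frame_000001.png", 1280, 720, [("car", 100, 200, 300, 350)])
print(ET.tostring(tree.getroot(), encoding="unicode"))
```

Writing each tree with `tree.write(path)` yields one annotation file per image, matching the per-image layout of the VOC format.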

To address this, a visualization script overlays the annotation data onto the corresponding images, and OpenCV is used to generate a video for a quick review of annotation accuracy. Through visualization, two main types of noise are identified: (1) small, blurry objects at long distances, and (2) incomplete objects that are partially occluded or outside the sensor’s field of view. Two filtering rules are applied to mitigate these issues: area-based filtering and aspect ratio-based filtering. After extensive testing and adjustment in both straight and curved road environments, the best-performing filtering rules are established for each scenario. Only bounding boxes whose areas exceed a minimum threshold and whose aspect ratios fall within a defined range are considered valid; boxes that are too small or have invalid aspect ratios are excluded from the final dataset. These filtering rules, listed in Table 1, significantly improve annotation accuracy. As shown in Fig. 8, different colored boxes represent different target categories, with category labels omitted for clarity. After passing the visualization inspection, the large-scale virtual traffic dataset is deemed ready for further experimental research. This dataset is named the virtual dataset of city scapes (VDCS).
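The two filtering rules can be sketched as follows. The threshold values and the per-road-type dictionary are hypothetical placeholders standing in for the values in Table 1, which are not reproduced in this text; the area must strictly exceed the minimum, matching the wording above.

```python
# Hypothetical thresholds for illustration only (not the Table 1 values).
RULES = {
    "straight": {"min_area": 400.0, "min_ratio": 0.2, "max_ratio": 5.0},
    "curved": {"min_area": 600.0, "min_ratio": 0.25, "max_ratio": 4.0},
}


def filter_boxes(boxes, min_area, min_ratio, max_ratio):
    """Keep boxes whose area exceeds min_area and whose w/h ratio is in range.

    boxes: list of (label, xmin, ymin, xmax, ymax) in pixels.
    """
    kept = []
    for label, xmin, ymin, xmax, ymax in boxes:
        w, h = xmax - xmin, ymax - ymin
        if w <= 0 or h <= 0:
            continue  # degenerate box
        area, ratio = w * h, w / h
        # Area rule removes distant, blurry objects; ratio rule removes
        # slivers left by occlusion or truncation at the image border.
        if area > min_area and min_ratio <= ratio <= max_ratio:
            kept.append((label, xmin, ymin, xmax, ymax))
    return kept


boxes = [
    ("car", 0, 0, 40, 30),     # 1200 px^2, ratio 1.33 -> kept
    ("car", 0, 0, 8, 6),       # 48 px^2 -> too small
    ("person", 0, 0, 5, 120),  # ratio 0.04 -> truncated sliver
]
print(filter_boxes(boxes, **RULES["straight"]))
```

Keeping separate rule sets per road type reflects the observation that straight and curved roads produced different noise patterns.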

Multi-task object detection algorithm based on graph matching

Algorithm overview

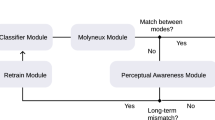

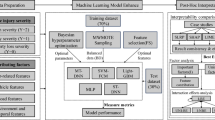

This chapter introduces a multi-task object detection method based on graph matching, building on the domain adaptive object detection algorithm that also leverages graph matching. Since instance segmentation is a structured output that contains spatial similarities across domains, the model applies graph-based feature distribution alignment and adversarial learning at the primary feature level. In addition, a multi-level adversarial mechanism is designed to strengthen the alignment of the different distributions. As illustrated in Fig. 9, the domain adaptation model contains three modules: a graph matching module, a multi-task network (denoted as G), and the adversarial discriminators \(D_{i}\), where i indexes the levels of the multi-level discriminator in the adversarial learning process.

Given a batch of labeled source domain images \(I_{s}^{B} = \{ (x_{s}^{i} ,y_{s}^{i} )\}_{i = 1}^{B}\) and unlabeled target domain images \(I_{t}^{B} = \{ (x_{t}^{i} )\}_{i = 1}^{B}\), a shared feature extractor \(\phi\) is employed to extract image-level features \(F_{s/t} = \left\{ {\phi (x_{s/t}^{i} )} \right\}_{i = 1}^{B}\). These features \(F_{s/t}\) are passed through the discriminator \(D_{1}\) to identify whether each pixel belongs to the source or target domain, thereby aligning features at the image level and reducing domain bias. Simultaneously, image-level features \(F_{s/t}\) are further aligned in the graph matching module.

Source and target features \(F_{s/t}\) are passed through the shared multi-task network to optimize the segmentation network G. The segmentation network utilizes the backbone-extracted features \(F_{s/t}\) and the fully convolutional one-stage object detection (FCOS) method29 to predict bounding boxes. The spatial attention guided mask (SAGM) module, integrated within the network, uses spatial attention to generate segmentation masks within the detected bounding boxes. This enables the model to focus on relevant pixels while suppressing noise.

The algorithm aims to make the segmentation predictions \(P_{s/t}\) for the source and target domains similar. To achieve this, the segmentation results \(P_{s/t}\) are input into the discriminator \(D_{2}\), which determines whether they originate from the source or the target domain. Through adversarial learning, gradients are propagated from \(D_{2}\) to G so that the predictions for target-domain images become as similar as possible to those for source-domain images.

Multi-task object detection network based on attention mechanism

The multi-task object detection network consists of three components: a backbone network, an FCOS-based detection head, and a mask head. As depicted in Fig. 10, FCOS, similar to fully convolutional networks (FCN)30, is an anchor-free, proposal-free object detection method that relies on pixel-level predictions. Unlike mainstream object detection methods such as Faster R-CNN31, YOLO32, and RetinaNet33, which utilize predefined anchor boxes, FCOS directly predicts a four-dimensional vector and a class label at each spatial location of the feature map, where the vector encodes the distances from that location to the four edges of the bounding box. In addition, FCOS incorporates a centerness branch that estimates how close each location is to the center of its bounding box. By eliminating anchor boxes, FCOS not only simplifies the detection process but also reduces memory usage and computational overhead, while achieving performance competitive with or superior to anchor-based approaches.
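As an illustration of the per-location targets described above, the sketch below computes the FCOS regression target (l, t, r, b), the distances from a location to the left, top, right, and bottom box edges, and the centerness target \(\sqrt{\frac{\min(l,r)}{\max(l,r)} \cdot \frac{\min(t,b)}{\max(t,b)}}\) defined in the FCOS paper. The function name is hypothetical, and FCOS's assignment of locations to pyramid levels by regression range is omitted.

```python
import math


def fcos_targets(x, y, box):
    """Regression target (l, t, r, b) and centerness for location (x, y).

    box = (x0, y0, x1, y1). Returns None when the location lies outside
    the box, in which case FCOS treats it as a negative sample.
    """
    x0, y0, x1, y1 = box
    l, t, r, b = x - x0, y - y0, x1 - x, y1 - y
    if min(l, t, r, b) <= 0:
        return None
    # Centerness is 1 at the box center and decays toward the edges.
    centerness = math.sqrt((min(l, r) / max(l, r)) * (min(t, b) / max(t, b)))
    return (l, t, r, b), centerness


box = (0, 0, 100, 50)
print(fcos_targets(50, 25, box))   # location at the box center
print(fcos_targets(25, 25, box))   # off-center location, lower centerness
print(fcos_targets(150, 25, box))  # outside the box -> negative sample
```

At inference time FCOS multiplies the predicted centerness by the classification score, which down-weights low-quality boxes predicted from locations far from object centers.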

Given its efficient detection speed and strong performance, FCOS is selected as the base for the multi-task object detection model, with segmentation tasks added on top of the FCOS detection architecture. Since the multi-task network is built upon FCOS, the hyperparameters mostly follow those of FCOS. However, FCOS struggles to generate positive RoI samples in the initial stage, so the positive score threshold is set to 0.03 instead of 0.05. During the detection phase, a feature pyramid network (FPN)34 with 256 channels is utilized, and the P3 to P7 feature layers are employed in the segmentation phase.

After the FCOS module predicts the objects, the multi-task network uses a mask head similar to that in Mask R-CNN to predict segmentation masks within the detected regions. Since RoIs are predicted at different feature pyramid levels, RoIAlign is applied at varying feature scales according to the RoI size. Specifically, larger RoIs are assigned to higher-level features, while smaller RoIs are assigned to lower-level ones. In two-stage detection methods like Mask R-CNN, Formula (1) is used to determine which feature map a given RoI is assigned to in the FPN.

where \(k_{0}\) is set to 4, w and h represent the width and height of each RoI, respectively, and \(A_{RoI}\) is the area of the RoI, with the standard ImageNet pretraining size set to 224. However, this equation is not applicable to the single-stage object detection method discussed in this chapter.
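Eq. (1) itself does not appear in this text. The definitions above match the standard FPN level-assignment rule \(k = \lfloor k_{0} + \log_{2}(\sqrt{wh}/224) \rfloor\), so the sketch below implements that rule under this assumption. Clamping to P2 through P5 follows common two-stage practice and is also an assumption.

```python
import math


def fpn_level(w, h, k0=4, canonical=224, k_min=2, k_max=5):
    """Assumed FPN RoI-to-level rule: floor(k0 + log2(sqrt(w*h) / 224))."""
    k = math.floor(k0 + math.log2(math.sqrt(w * h) / canonical))
    # Two-stage FPN detectors pool RoIs from P2..P5 only.
    return min(max(k, k_min), k_max)


for side in (32, 112, 224, 448):
    print(side, "->", f"P{fpn_level(side, side)}")
```

A 224 × 224 RoI always maps to P4 under this rule, independently of the input resolution, which is the behavior discussed below for a 1024 × 1024 input.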

Firstly, Eq. (1) is designed for two-stage object detection methods, which use different feature levels from single-stage methods. For the FPN layers, two-stage detection typically utilizes the P2 (stride \(2^{2}\)) to P5 (stride \(2^{5}\)) feature layers, while single-stage detection employs P3 (stride \(2^{3}\)) to P7 (stride \(2^{7}\)). This results in a larger receptive field for single-stage methods at lower resolutions. Furthermore, the fixed value of 4 in Eq. (1), based on the standard ImageNet pretraining size of 224, does not adapt well to varying feature scales. For instance, when the input dimension is \(1024 \times 1024\) and the RoI area is \(224^{2}\), the RoI is assigned to a relatively high feature level, P4, despite being small relative to the input dimension. This misallocation reduces the average precision for small objects.

To address this issue, this chapter proposes an adaptive RoI assignment function for single-stage object detection, as shown in Eq. (2):

where \(k_{\max }\) is the level of the final feature map from the backbone network, \(A_{input}\) is the area of the input image, and \(A_{RoI}\) is the RoI area.

Equation (2) adaptively allocates RoIs based on the ratio of the input image area to the RoI area. If the calculated level is lower than the minimum feature level P3, it is constrained to P3. While Eq. (1) assigns P4 to RoIs with an area of \(224^{2}\), Eq. (2) adjusts the level to \(k_{\max } - 5\), which corresponds to a feature level for RoIs approximately 20 times smaller than the input image area. This adaptive RoI assignment method improves detection accuracy for small objects compared to Eq. (1).
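Eq. (2) is likewise not reproduced in this text. The sketch below assumes the form \(k = \lceil k_{\max } - \log_{2}(A_{input}/A_{RoI}) \rceil\), as in the adaptive RoI assignment of CenterMask, reading \(k_{\max }\) as the last pyramid level (7 for P3 to P7), \(A_{input}\) as the input image area, and \(A_{RoI}\) as the RoI area, and clamps the result to P3 as described above. The function name is hypothetical.

```python
import math


def adaptive_level(input_area, roi_area, k_max=7, k_min=3):
    """Assumed adaptive rule: ceil(k_max - log2(A_input / A_RoI)), clamped."""
    k = math.ceil(k_max - math.log2(input_area / roi_area))
    # Single-stage FCOS pyramids span P3..P7.
    return min(max(k, k_min), k_max)


input_area = 1024 * 1024
for side in (224, 512, 1024):
    print(f"{side}^2 RoI -> P{adaptive_level(input_area, side * side)}")
```

For the 1024 × 1024 input and a \(224^{2}\) RoI, the sketch returns P3 rather than the P4 produced by the FPN rule, while an RoI covering a quarter of the image goes to P5. Because the rule depends on the area ratio, the same RoI would move to a higher level on a smaller input.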

Recently, attention mechanisms have been widely applied in object detection because they help focus on important features while suppressing irrelevant ones. Channel attention emphasizes the most informative feature channels, while spatial attention identifies the locations where significant information resides. A spatial attention guided mask (SAGM) is therefore employed in this paper to focus mask prediction on informative pixels.

After the RoI features are extracted by RoIAlign at a resolution of 14 × 14, they are sequentially processed by four convolutional layers and the spatial attention module (SA). The spatial attention map \(A_{sag} (X_{i} ) \in {\mathbb{R}}^{1 \times W \times H}\) serves as a feature descriptor for the input feature map \(X_{i} \in {\mathbb{R}}^{C \times W \times H}\). Specifically, SA first generates pooled features \(P_{avg} \in {\mathbb{R}}^{1 \times W \times H}\) and \(P_{\max } \in {\mathbb{R}}^{1 \times W \times H}\) by average and max pooling along the channel axis, respectively, and then aggregates them by concatenation. The concatenated map is passed through a 3 × 3 convolutional layer and normalized by a sigmoid function, as represented in Eq. (3):

where \(\sigma\) denotes the sigmoid function and \({\mathcal{F}}_{3 \times 3}\) represents the 3 × 3 convolutional layer applied to the concatenation of \(P_{\max }\) and \(P_{avg}\). The attention-guided feature map \(X_{sag} \in {\mathbb{R}}^{C \times W \times H}\) is then computed as shown in Eq. (4):

where \(\otimes\) signifies element-wise multiplication. Subsequently, a 2 × 2 deconvolution upsamples the spatially attended feature map to a resolution of 28 × 28. Finally, a 1 × 1 convolution is applied to predict class-specific masks.
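The spatial attention step in Eqs. (3) and (4) can be sketched in NumPy for a single RoI feature map of shape (C, H, W): channel-wise average and max pooling produce two 1 × H × W maps, which are concatenated, passed through a 3 × 3 convolution with zero padding, squashed by a sigmoid, and multiplied element-wise with the input. The random kernel stands in for learned weights, and the order of the pooled maps in the concatenation is an assumption.

```python
import numpy as np


def conv3x3(x, weight, bias=0.0):
    """Single-output 3x3 convolution (cross-correlation), stride 1, zero padding.

    x: (C_in, H, W); weight: (C_in, 3, 3). Returns an array of shape (1, H, W).
    """
    c, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.full((h, w), bias, dtype=float)
    for dy in range(3):
        for dx in range(3):
            # Accumulate one kernel tap, summed over all input channels.
            window = padded[:, dy:dy + h, dx:dx + w]
            out += np.einsum("c,chw->hw", weight[:, dy, dx], window)
    return out[None]


def spatial_attention(x, weight, bias=0.0):
    """Return (attended features, attention map) for x of shape (C, H, W)."""
    p_avg = x.mean(axis=0, keepdims=True)             # (1, H, W)
    p_max = x.max(axis=0, keepdims=True)              # (1, H, W)
    pooled = np.concatenate([p_max, p_avg], axis=0)   # (2, H, W)
    attn = 1.0 / (1.0 + np.exp(-conv3x3(pooled, weight, bias)))  # sigmoid
    return x * attn, attn  # broadcast over channels: (C, H, W)


rng = np.random.default_rng(0)
feat = rng.normal(size=(256, 14, 14))           # one 14 x 14 RoI feature map
kernel = rng.normal(scale=0.1, size=(2, 3, 3))  # stand-in for learned weights
out, attn = spatial_attention(feat, kernel)
print(out.shape, attn.shape)
```

Because the attention map has a single channel, every feature channel at a given location is scaled by the same factor in (0, 1), which suppresses background pixels before the deconvolution and mask prediction.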

Multi-layer adversarial learning

Given the softmax output \(P = G(I) \in {\mathbb{R}}^{C \times W \times H}\) of the segmentation network, P is passed to the discriminator D, which is trained with the cross-entropy loss \({\mathcal{L}}_{d}\) in Eq. (5):

where z = 0 and z = 1 indicate that the sample belongs to the source domain and the target domain, respectively. In practice, the segmentation loss is generally the cross-entropy loss on the source domain images, as outlined in Eq. (6):

where \(Y_{s}\) is the true annotation for source domain images and \(P_{s} = G(I_{s} )\) is the output from the segmentation network.

For target domain images, predictions are generated by passing the images through the segmentation network G, yielding the output \(P_{t} = G(I_{t} )\). To bring the distribution of \(P_{t}\) closer to that of \(P_{s}\), an adversarial loss \({\mathcal{L}}_{adv}\) is employed, as shown in Eq. (7):

This adversarial loss helps train the segmentation network to deceive the discriminator, increasing the likelihood that the predictions for target domain images are perceived as coming from the source domain.
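Eqs. (5) and (7) are not reproduced in this text. A common pixel-wise form, assumed here, treats the discriminator output d(h, w) as the probability that location (h, w) comes from the target domain (z = 1): the discriminator minimizes \(-\sum_{h,w} [z \log d + (1 - z)\log(1 - d)]\), and the segmentation network minimizes \(-\sum_{h,w} \log(1 - d(P_{t}))\) on target images so that its outputs are classified as source (z = 0). The NumPy sketch below evaluates both losses on precomputed discriminator maps; the function names are hypothetical.

```python
import numpy as np

EPS = 1e-7  # guard against log(0)


def discriminator_loss(d_map, z):
    """Assumed Eq. (5) form: per-pixel BCE; d_map = P(target), z=0 source, z=1 target."""
    d = np.clip(d_map, EPS, 1 - EPS)
    return float(-np.sum(z * np.log(d) + (1 - z) * np.log(1 - d)))


def adversarial_loss(d_map_target):
    """Assumed Eq. (7) form: G is rewarded when target outputs look like source."""
    d = np.clip(d_map_target, EPS, 1 - EPS)
    return float(-np.sum(np.log(1 - d)))


confident = np.full((4, 4), 0.9)  # D easily spots target predictions
fooled = np.full((4, 4), 0.1)     # D believes target predictions are source
print(adversarial_loss(confident), adversarial_loss(fooled))
print(discriminator_loss(np.full((4, 4), 0.5), 0))
```

A confident discriminator yields a large adversarial loss for G, and the loss shrinks as target predictions become indistinguishable from source predictions; D and G are updated alternately with these two objectives.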

Loss function

The model in this chapter employs a graph matching approach to align the feature distributions of the source and target domains, with the graph matching loss defined in Eq. (8):

where \({\mathcal{L}}_{mat}\) denotes the graph matching loss, \({\mathcal{L}}_{NA}\) indicates the node alignment loss, and \(\lambda\) is set to 0.1.

The algorithm utilizes the same mask target as Mask R-CNN, determined by the overlap between the Region of Interest (RoI) and the associated ground truth masks. The multi-task loss function applied to each RoI is represented by Eq. (9):

where \({\mathcal{L}}_{cls}\), \({\mathcal{L}}_{ctr}\), and \({\mathcal{L}}_{box}\) represent the classification loss, centerness loss, and bounding box regression loss, respectively, consistent with FCOS, and \({\mathcal{L}}_{mask}\) is the binary cross-entropy loss, as in Mask R-CNN.

The multi-layer adversarial learning section includes two losses: \({\mathcal{L}}_{GA}\) and \({\mathcal{L}}_{adv}\). The primary features are processed by a global discriminator \(D_{GA}\) to identify whether each pixel in the feature map originates from the source or target domain. For the position (u, v) in feature F, the loss function is expressed in Eq. (10):

For the loss \({\mathcal{L}}_{adv}\), an architecture similar to that proposed by Chen et al. is utilized, consisting entirely of convolutional layers to preserve spatial information. This network comprises five convolutional layers with a kernel size of 4 × 4 and a stride of 2, with channel counts of {64, 128, 256, 512, 1}. Each convolutional layer except the last is followed by a leaky ReLU activation.
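The spatial size of the discriminator output follows from the standard convolution arithmetic \(\lfloor (n + 2p - k)/s \rfloor + 1\). Padding is not stated above, so the sketch assumes p = 1, under which each 4 × 4, stride-2 layer halves an even spatial size; the 512 × 1024 input is a hypothetical example.

```python
def conv_out(n, kernel=4, stride=2, padding=1):
    """Output length of a convolution along one spatial axis."""
    return (n + 2 * padding - kernel) // stride + 1


def discriminator_shapes(h, w, channels=(64, 128, 256, 512, 1)):
    """(channels, height, width) after each of the five discriminator layers."""
    shapes = []
    for c in channels:
        h, w = conv_out(h), conv_out(w)
        shapes.append((c, h, w))
    return shapes


for shape in discriminator_shapes(512, 1024):
    print(shape)
```

Under these assumptions the five layers reduce a 512 × 1024 input to a 1 × 16 × 32 map, so the discriminator still outputs a spatial grid of domain scores rather than a single scalar.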

Consequently, the total loss of the algorithm framework in this chapter is represented in Eq. (11):

where \({\mathcal{L}}_{mat}\) is the graph matching loss, \({\mathcal{L}}_{NA}\) is the node alignment loss, \({\mathcal{L}}_{GA}\) is the global adversarial loss, \({\mathcal{L}}_{muti}\) is the detection loss, and \(\lambda\) is set to 0.1.

Evaluation of the virtual dataset

This section presents various experimental setups to evaluate the practical value of the VDCS virtual dataset from two perspectives: its advantages over other datasets and its applicability in real-world scenarios. Experiments assessing the advantages of VDCS are divided into two categories: precision comparison with other virtual datasets and long-tail distribution ratio analysis. Applicability experiments are further categorized into tests under normal and adverse weather conditions. Through comparative analyses, the strengths of the VDCS virtual dataset are thoroughly examined.

Experimental setup

Experimental environment

The experiments were conducted on a machine running Ubuntu 18.04, equipped with an Intel Xeon E5-2640 v4 processor, an NVIDIA Titan Xp GPU with 12 GB of VRAM, and CUDA version 11.0. The deep learning framework used was PyTorch 1.9.0, and all training and testing tasks were GPU-accelerated.

Dataset description

KITTI dataset35: A widely-used dataset in computer vision for tasks such as vehicle detection, lane detection, and 3D vision. It contains diverse sensor data, including RGB images, LiDAR, GPS, and inertial measurement unit (IMU) readings.

VKITTI virtual dataset6: A synthetic extension of the KITTI dataset, rendered to simulate KITTI scenes with greater diversity.

ViPER virtual dataset36: A high-fidelity synthetic dataset covering a broad range of driving scenarios and weather conditions.

RAND Cityscapes virtual dataset37: A synthetic subset of the SYNTHIA dataset that emulates the style and distribution of the Cityscapes dataset.

Cityscapes dataset38: A well-known urban scene segmentation dataset containing high-resolution street-level images from various cities, each with pixel-level annotations for objects and scene categories.

Foggy Cityscapes virtual dataset39: Generated by artificially adding fog effects to real images from the Cityscapes dataset.

Experimental description

Each virtual dataset was used as the source domain, with the KITTI dataset as the target domain. A Faster R-CNN cross-domain object detection model with an ImageNet-pretrained ResNet-101 backbone was employed. Training ran for 2000 iterations with a batch size of 512 and an initial learning rate of 0.001; the average iteration time was approximately 9 s, and the total training time was 1774.4 s.

To demonstrate the reliability and effectiveness of the VDCS dataset for object detection, two sets of experiments were designed:

Horizontal comparison with other virtual datasets: Object detection models were trained on the VDCS, VKITTI, SYNTHIA, and ViPER datasets and tested on the KITTI dataset, and the test results were compared to evaluate the performance of each virtual dataset.

Validation of VDCS as a data augmentation tool: The VDCS dataset, SYNTHIA’s RAND Cityscapes dataset, and a combination of VDCS and RAND Cityscapes were used as the source domain for model training. The trained models were then tested on the Cityscapes and Foggy Cityscapes datasets to assess the effectiveness of VDCS under both normal and adverse weather conditions. The results were compared with those obtained using individual datasets as the source domain.

Evaluation metrics

In object detection, evaluation metrics are crucial tools for measuring model performance. These metrics not only reflect the model’s detection accuracy but also serve as benchmarks for comparing different models. Among the most widely used metrics are Average Precision (AP) and Mean Average Precision (mAP).

AP measures the accuracy of a model’s object detection for a single category and is the average precision over varying recall rates. Essentially, AP is the area under the precision-recall curve, typically calculated using numerical integration. mAP, the mean of APs across all categories, is one of the most important metrics as it provides a comprehensive evaluation of the model’s performance across the entire dataset. The mAP calculation follows these steps:

1. For each category, sort the predicted bounding boxes by the model’s confidence scores.
2. Going down the ranked list, mark each prediction as a true or false positive by matching it to the ground truth (typically at an IoU threshold such as 0.5), and compute the cumulative precision and recall for each category.
3. Plot the precision-recall curve for each category.
4. Calculate the area under each curve, which gives the AP.
5. Average the APs across all categories to obtain the mAP.
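The five steps of the mAP calculation can be sketched in pure Python as follows. This is an illustrative sketch, not the evaluation code used in this study: it assumes detections have already been matched to the ground truth (the true/false-positive flags are given), and it uses all-point interpolation of the precision-recall curve as in PASCAL VOC 2010 and later; COCO-style 101-point interpolation gives slightly different values. The function names and the detections in the usage lines are hypothetical.

```python
from typing import Dict, List, Tuple


def average_precision(scores: List[float], is_tp: List[bool], num_gt: int) -> float:
    """All-point interpolated AP for one category.

    scores: confidence of each detection; is_tp: whether that detection was
    matched to a ground-truth box (IoU matching assumed already done);
    num_gt: number of ground-truth boxes of this category.
    """
    if num_gt == 0:
        return 0.0  # convention chosen here; some evaluators skip such classes
    # Step 1: rank detections by descending confidence.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    # Step 2: cumulative precision and recall down the ranked list.
    tp = fp = 0
    precision, recall = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        precision.append(tp / (tp + fp))
        recall.append(tp / num_gt)
    # Step 3: precision-recall curve with sentinels, made non-increasing.
    rec = [0.0] + recall + [1.0]
    prec = [0.0] + precision + [0.0]
    for k in range(len(prec) - 2, -1, -1):
        prec[k] = max(prec[k], prec[k + 1])
    # Step 4: area under the curve as a sum of rectangles.
    return sum((rec[k] - rec[k - 1]) * prec[k] for k in range(1, len(rec)))


def mean_average_precision(per_class: Dict[str, Tuple[List[float], List[bool], int]]) -> float:
    """Step 5: average the per-category APs."""
    aps = [average_precision(s, t, n) for s, t, n in per_class.values()]
    return sum(aps) / len(aps)


# Hypothetical detections: "car" has 2 ground-truth boxes, "bus" has 1.
detections = {
    "car": ([0.9, 0.8, 0.7], [True, False, True], 2),
    "bus": ([0.95], [True], 1),
}
print(average_precision(*detections["car"]))  # 0.8333... (= 5/6)
print(mean_average_precision(detections))     # 0.9166... (= 11/12)
```

Making precision non-increasing before integrating means that a false positive ranked between two true positives lowers AP only over the recall interval it actually affects.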

Experimental results and analysis

Experiment 1: validating the advantages of the VDCS dataset

To evaluate the advantages of the VDCS dataset, its performance was compared against publicly available virtual datasets such as VKITTI and SYNTHIA. The results of training the Faster R-CNN model on each dataset and testing it on the KITTI dataset are summarized in Table 2. Since the categories across datasets vary, using mAP as a metric was not considered meaningful. Instead, the AP values of key traffic-related objects were used as evaluation metrics. The results demonstrate that the VDCS dataset exhibits notable advantages in terms of accuracy and diversity in traffic object categories.

Table 3 compares the instance counts for key categories (cars, pedestrians, and buses) in public traffic datasets and in the VDCS dataset, and Fig. 11 shows the proportions of these counts as a histogram. The VDCS dataset substantially alleviates the long-tail distribution observed in the other datasets, achieving a more balanced ratio among the three categories. This indicates that VDCS has a more favorable class distribution.
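The degree of long-tail imbalance can be quantified with simple statistics, for example each category’s share of all instances and the imbalance ratio (largest category count divided by smallest). The sketch below computes both; the function names are illustrative, and the counts in the usage lines are hypothetical placeholders, not the values in Table 3.

```python
from typing import Dict


def class_proportions(counts: Dict[str, int]) -> Dict[str, float]:
    """Share of all instances belonging to each category."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


def imbalance_ratio(counts: Dict[str, int]) -> float:
    """Largest category count divided by smallest; 1.0 means perfectly balanced."""
    return max(counts.values()) / min(counts.values())


# Hypothetical instance counts for illustration only (not Table 3 values).
long_tailed = {"car": 8000, "person": 1500, "bus": 500}
more_balanced = {"car": 4000, "person": 3500, "bus": 2500}
print(imbalance_ratio(long_tailed))    # 16.0
print(imbalance_ratio(more_balanced))  # 1.6
print(class_proportions(long_tailed))  # {'car': 0.8, 'person': 0.15, 'bus': 0.05}
```

A lower imbalance ratio means that rare categories such as buses contribute a larger share of the training signal.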

Although hardware constraints limited the resolution of VDCS images to 600 × 500 pixels, which is lower than that of the VKITTI, RAND Cityscapes, and ViPER datasets (2048 × 1024 or 1024 × 720), the model trained on VDCS still achieved higher accuracy on the KITTI test set for the “Bus,” “Car,” and “Pedestrian” categories. Moreover, VDCS offers the most comprehensive object category coverage, including “car,” “bus,” “truck,” “motorbike,” “bicycle,” “rider” (cyclist), “person” (pedestrian), and “van.” This breadth further highlights the dataset’s value for diverse traffic scenarios.

Experiment 2: evaluating the practical significance of the VDCS dataset

While Experiment 1 demonstrated the advantages of the VDCS dataset over other virtual datasets, Experiment 2 aimed to validate its practical applicability in large-scale, complex traffic scenarios. Specifically, the experiment evaluated the effectiveness of using the VDCS dataset, combined with other datasets, as the source domain for training models that were subsequently tested on target domains. The results are summarized in Tables 4 and 5.

To make the results directly comparable, the same object categories were labeled consistently across the source and target datasets. The datasets selected for this experiment were VDCS, RAND Cityscapes (hereafter RAND), Cityscapes (CS), and Foggy Cityscapes (Foggy CS). These datasets share common object categories, such as “bicycle,” “bus,” “car,” “motorcycle,” and “person,” with identical labeling conventions. Among them, VDCS and RAND are virtual datasets, CS consists of real-world traffic scene data, and Foggy CS is derived from CS by adding artificial fog to simulate foggy conditions.

By comparing the performance of models trained on these datasets, the experiment assessed the feasibility of applying the VDCS dataset in practical training scenarios, providing critical insights into its effectiveness across diverse environmental conditions.

The results in Tables 4 and 5 indicate that using the VDCS dataset together with other datasets as the source domain substantially improves per-category performance in the target domain under both normal and adverse weather conditions, demonstrating the practical utility of VDCS as a data augmentation tool. In addition, comparing the five source-domain configurations in Table 5 (CS, VDCS, RAND, VDCS + CS, and VDCS + RAND + CS) shows that joint training on multiple related but distinct datasets yields substantially better performance on the same test set than training on any single dataset. Notably, although the VDCS dataset lacks the “rider” category, including it alongside RAND and CS during joint training markedly improves the precision of the “rider” category. This is likely because the “person” and “bicycle” instances in VDCS contain abundant features that closely resemble those of “rider,” which benefit model training.

Through Experiments 1 and 2, we evaluated the VDCS dataset against other publicly available virtual datasets. The results validate the superiority of VDCS within the current set of virtual datasets and its effectiveness and practicality for large-scale, complex traffic scenarios in object detection tasks.

Performance evaluation in mixed real and virtual scenarios

To evaluate the performance of the proposed MNBGM method for object detection, we conducted style transfer experiments using the VDCS virtual dataset images as the source domain and the Cityscapes public dataset as the target domain, as described in Section "Digital twin scenario and virtual dataset generation".

Experimental setup

The feature extractors in MNBGM are implemented with the PyTorch versions of VGG-16 and ResNet-50. Training runs for 40 epochs with an image buffer size of 50 and a batch size of 1, so images in the buffer are used directly for training. The Adam optimizer is employed with an initial learning rate of 0.0002. The learning rate is held constant for the first 20 epochs; thereafter, with a weight decay of 1 × 10⁻⁵, it is reduced to zero over the remaining 20 epochs. Each epoch takes approximately 2309 s, giving a total training time of 92,364.8 s.
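One reading of this schedule (constant for 20 epochs, then reduced to zero by the end of epoch 40) can be sketched as follows. The linear shape of the decay is an assumption, since the text does not specify it; it mirrors the schedule commonly paired with an image buffer of size 50 in CycleGAN-style training. The helper name `learning_rate` is hypothetical.

```python
BASE_LR = 2e-4     # initial Adam learning rate from the text
HOLD_EPOCHS = 20   # epochs at a constant learning rate
TOTAL_EPOCHS = 40  # total training epochs


def learning_rate(epoch: int) -> float:
    """Learning rate at the start of `epoch` (0-based); linear decay is assumed."""
    if epoch < HOLD_EPOCHS:
        return BASE_LR
    remaining = max(TOTAL_EPOCHS - epoch, 0)
    # Fraction of the decay phase still left, from 1.0 at epoch 20 to 0.0 at epoch 40.
    return BASE_LR * (remaining / (TOTAL_EPOCHS - HOLD_EPOCHS))


for epoch in (0, 19, 20, 30, 39, 40):
    print(epoch, learning_rate(epoch))
```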

Input images are resized such that the shorter side is 800 pixels, with the longer side limited to 1333 pixels. During training, up to 100 graph nodes are sampled from feature maps in each domain. To address potential failures in graph matching when no nodes appear in the target domain, the framework undergoes initial training following the method proposed by Hsu et al.40, prior to integrating the bipartite graph matching module. Other graph-matching-related configurations adhere strictly to methodologies outlined in related works40,41.
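The resizing rule (shorter side 800 pixels, longer side at most 1333 pixels) is usually implemented with a single scale factor: the smaller of the factor that brings the shorter side to 800 and the factor that brings the longer side to 1333. The sketch below assumes that convention, an unchanged aspect ratio, and rounding to the nearest pixel; the function name `resized_shape` is hypothetical.

```python
from typing import Tuple

SHORT_SIDE = 800  # target length of the shorter side (from the text)
MAX_SIDE = 1333   # cap on the longer side (from the text)


def resized_shape(height: int, width: int) -> Tuple[int, int]:
    """(height, width) after scaling by one factor that makes the shorter side
    800 px unless that would push the longer side past 1333 px."""
    scale = min(SHORT_SIDE / min(height, width), MAX_SIDE / max(height, width))
    return int(height * scale + 0.5), int(width * scale + 0.5)


print(resized_shape(500, 600))    # VDCS image (600 x 500): (800, 960)
print(resized_shape(1024, 2048))  # Cityscapes image (2048 x 1024): (667, 1333)
```

For a 2048 × 1024 Cityscapes image the 1333-pixel cap is the binding constraint, so the shorter side ends up at about 667 pixels rather than 800.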

The VDCS virtual dataset, consisting of 12,726 images, is used as the source domain, while the publicly available Cityscapes dataset38, containing 3,457 images, serves as the target domain for the cross-domain object detection task. Both datasets include annotations for eight object categories: person, rider, car, truck, bus, train, motor, and bike.

Cityscapes Dataset: As illustrated in Fig. 12, the Cityscapes dataset consists of street scene images captured using an onboard camera at 17 frames per second under dry weather conditions, spanning three seasons: spring, summer, and autumn. The dataset contains annotated bounding boxes for eight object categories.

Analysis of experimental results

Table 6 presents the object detection results of the proposed MNBGM model when using VGG-16 and ResNet-50 as the backbone networks, with VDCS as the source domain and Cityscapes as the target domain.

The results demonstrate the effectiveness of the proposed model. With VGG-16 and ResNet-50 as backbone networks, MNBGM achieved mAP scores of 44.8% and 51.9%, respectively, on the VDCS → Cityscapes task, outperforming the existing methods compared. Relative to category-level domain adaptation methods such as CFFA42 (38.6%), RPNPA43 (40.5%), MeGA-CDA44 (41.8%), KTNet45 (40.9%), GPA46 (39.5%), and TDD47 (43.1%), MNBGM with the VGG-16 backbone improved mAP by 6.2, 4.3, 3.0, 3.9, 5.3, and 1.7 percentage points, respectively, highlighting the advantage of our approach.
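Because these improvements are differences between mAP values, they are absolute gains in percentage points. A relative gain divides that difference by the baseline’s mAP. The short calculation below reproduces the reported differences from the mAP values listed in the text and shows the corresponding relative gains, which are larger (for example, 6.2 points over CFFA is roughly a 16.1% relative improvement). The helper name `gain` is illustrative.

```python
from typing import Dict, Tuple

# mAP (%) on VDCS -> Cityscapes, as reported in the text.
MNBGM_VGG16 = 44.8
BASELINES: Dict[str, float] = {
    "CFFA": 38.6, "RPNPA": 40.5, "MeGA-CDA": 41.8,
    "KTNet": 40.9, "GPA": 39.5, "TDD": 43.1,
}


def gain(new_map: float, old_map: float) -> Tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    points = new_map - old_map
    return points, 100.0 * points / old_map


for name, old in BASELINES.items():
    points, relative = gain(MNBGM_VGG16, old)
    print(f"{name:<9} +{points:.1f} pts ({relative:.1f}% relative)")
```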

Moreover, MNBGM improved on other FCOS-based object detection methods, surpassing EPM19, KTNet45, and SSAL48 by 8.8, 3.9, and 5.2 percentage points in mAP, respectively. With VGG-16 as the backbone, MNBGM achieved a slight improvement of 0.3 points over SCBGM (44.5% mAP); with ResNet-50, it outperformed SCBGM (51.7% mAP) by 0.2 points, supporting the effectiveness of the proposed multi-task learning approach.

Ablation study

To assess the effectiveness of each key module in the MNBGM model, ablation experiments are conducted on the VDCS → Cityscapes scenario.

The results in Table 7 show that the Graph Matching Head plays a crucial role, improving mAP by 9.0 percentage points compared with the GA module and thus substantially enhancing domain adaptation performance. Incorporating the SAGM attention mechanism further increased mAP by 2.3 points, and adding a high-level feature discriminator contributed an additional 3.8 points.

Moreover, removing the low-level discriminator from the GA network caused a 9.9-point drop in mAP, underscoring the indispensable role of adversarial training in feature distribution alignment. These findings indicate that each module contributes substantially to the effectiveness of domain adaptation.

Visualization of experimental results

Figure 13 presents a comparison between the performance of the proposed method and the model without the Graph Matching Head, as well as a comparison with the ground truth bounding boxes in the detection task.

It can be observed that in challenging visual conditions, where the comparison methods fail to effectively detect objects or produce false positives, the proposed method still accurately locates and identifies targets. For most easily detectable objects, the results from the proposed method are closer to the ground truth bounding boxes compared to the alternatives. This further verifies the importance of the Graph Matching Head strategy in improving detection accuracy. Figure 14 shows a heatmap comparison between the baseline network and the proposed method using Grad-CAM for the same image.

The heatmap comparisons reveal clear differences in focus between the two methods, with or without the Graph Matching Head strategy. The proposed method exhibits more concentrated attention on hard-to-detect objects within the image, particularly when environmental factors pose challenges to the detection task. This focused attention enables the model to more effectively identify and locate objects. In contrast, removing the Graph Matching Head leads to degraded performance in complex or adverse environments. The heatmap visualizations further confirm the effectiveness of the proposed method in improving cross-domain detection tasks.
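Grad-CAM, used for the heatmaps above, can be summarized with the sketch below. For one target score, it takes a convolutional layer’s activations A_k and the gradients of that score with respect to them. Each channel is weighted by its spatially averaged gradient, the weighted channels are summed, and a ReLU keeps only regions that raise the score. The final rescaling to [0, 1] is a display convention added here. The function name, target layer, target score, and the tiny 2 × 2 inputs are illustrative assumptions, not the settings used for Fig. 14.

```python
from typing import List

Grid = List[List[float]]


def grad_cam(activations: List[Grid], gradients: List[Grid]) -> Grid:
    """Grad-CAM map from K channel activations A_k and gradients dy/dA_k (all H x W)."""
    h, w = len(activations[0]), len(activations[0][0])
    cam = [[0.0] * w for _ in range(h)]
    for a_k, g_k in zip(activations, gradients):
        # Channel weight alpha_k: global-average-pooled gradient.
        alpha = sum(sum(row) for row in g_k) / (h * w)
        for i in range(h):
            for j in range(w):
                cam[i][j] += alpha * a_k[i][j]
    # ReLU keeps only regions with a positive influence on the target score.
    cam = [[max(0.0, v) for v in row] for row in cam]
    peak = max(max(row) for row in cam)
    # Rescale to [0, 1] for display as a heatmap (skipped if the map is all zero).
    return [[v / peak for v in row] for row in cam] if peak > 0 else cam


# Channel 1 fires top-left and raises the score; channel 2 fires bottom-right and lowers it.
A = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
G = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
print(grad_cam(A, G))  # [[1.0, 0.0], [0.0, 0.0]]
```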

Performance evaluation in real traffic scenarios

This experiment aims to explore the model’s performance across various visual conditions in urban road settings, with a particular focus on assessing its generalization capabilities. Initially, the model is trained using a dataset from clear daytime conditions and then validated on datasets from different environmental scenarios. This approach evaluates the baseline performance of the network under standard and challenging visual conditions. Further, by training the proposed method and validating it across five distinct scenarios, we demonstrate its capability to enhance generalization to adverse visual environments in road scenes. A comparative analysis with methods discussed in Section “Analysis of experimental results” highlights the advantages of the proposed method.

Dataset description

The datasets used in this section are constructed based on the work of Wu et al.42, integrating images from multiple existing datasets. They were selected to cover the visual conditions commonly encountered on urban roads, forming a comprehensive benchmark for object detection in urban scenes under various conditions. The five datasets represent clear daytime, nighttime, rainy daytime, rainy nighttime, and foggy scenes. Each dataset includes annotations for seven common object categories: pedestrians, cars, riders, trucks, motorcycles, bicycles, and buses.

Clear daytime scene: Selected from the BDD100K dataset, this subset comprises 27,708 images taken under clear daytime conditions, of which 19,395 are used for model training and 8,313 for validation. It evaluates the model’s performance under favorable lighting conditions.

Nighttime scene: Also drawn from the BDD100K dataset, this subset includes 26,158 images captured at night. These low-light conditions pose significant challenges for object detection and reveal the model’s nighttime performance.

Rainy daytime and rainy nighttime scenes: Likewise selected from the BDD100K dataset, these subsets contain 3,501 and 2,494 images, respectively, and test the model’s stability and accuracy under rainy conditions.

Foggy scene: This subset combines 3,775 images from the Foggy Cityscapes and AdverseWeather datasets and assesses the model’s capability in low-visibility conditions.

Figure 15 illustrates examples from each of the five scenarios, highlighting the diverse environmental conditions present in the datasets.

Experimental results and analysis

Table 8 presents the comparative results of model validation in clear daytime conditions, with ResNet-50 as the backbone network. In this scenario, MNBGM achieves an mAP of 61.6%, outperforming the existing methods compared. Relative to category-level domain adaptation methods such as GPA and TDD, MNBGM improves mAP by 12.3 and 5.5 percentage points, respectively. Among methods using the same FCOS detector, MNBGM outperforms EPM, DIDN, DSS, and SDA by 11.0, 11.9, 17.7, and 10.3 points in mAP, respectively. Compared with SCBGM, MNBGM achieves a 0.3-point improvement, consistent with the benefit of the multi-task learning approach. Figure 16 visually compares the models’ detections in the clear daytime scene.

The results shown in Table 9 demonstrate the performance of various models in a foggy weather scenario. As expected, when the model faces an unknown target domain with a distribution significantly different from the source domain used for training, the accuracy tends to decrease. However, MNBGM (the proposed method) shows the least accuracy degradation compared to other methods, highlighting its robustness in foggy conditions.

In particular, despite not being specifically trained for foggy weather, MNBGM raises the mAP to 46.1%, demonstrating effective performance under adverse weather conditions. This underscores the method’s effectiveness in challenging environments and its suitability for handling changing conditions in real-world applications. Figure 17 visualizes the detection performance of SCBGM and the proposed MNBGM method in foggy weather, together with the ground-truth bounding boxes for comparison. The results highlight the superior detection capability of MNBGM, which maintains robust performance despite the challenging visual conditions.

Table 10 presents the performance of various models tested in nighttime scenarios. The experimental results show that the proposed method improves performance in nighttime scenes: compared with SCBGM, it raises the model’s performance by 2.1 percentage points. This improvement demonstrates the effectiveness of the proposed method in low-light conditions and suggests its potential to generalize across lighting conditions, including low-light nighttime scenes where conventional models often struggle. Figure 18 visualizes the detection performance of SCBGM and the proposed method in a nighttime scene, together with the ground-truth bounding boxes. The comparison indicates that the proposed method provides more accurate and reliable detections than SCBGM under low-light conditions.

Table 11 presents the performance of various models tested in rainy conditions, and Fig. 19 visually compares their detections in the rainy scene. The proposed method achieves a significant performance improvement under rainy weather, with a larger margin over the other methods, raising the mAP to 48.9%. This improvement is likely attributable mainly to multi-task learning, which enhances the model’s ability to recognize objects under challenging conditions such as rain.

Table 12 presents the performance results of various models tested in rainy night conditions, a particularly challenging scenario in the validation set. In rainy night conditions, visual information is severely impaired by factors such as reduced lighting, interference from raindrops, and dynamic background noise, all of which significantly affect the performance of object detection models.

The other methods perform poorly in this challenging scenario, with mAP values below 20%. This highlights the difficulty of achieving satisfactory detection performance under such extreme conditions without extensive scene annotations and supervised learning. The proposed method addresses these challenges by introducing a graph matching module and leveraging multi-task network-assisted training, which substantially enhances the model’s generalization ability in extreme visual conditions such as rainy nights. Remarkably, the model is trained only on clear daytime scenes yet maintains strong detection performance on rainy nights, and it outperforms the previous methods compared. Figure 20 compares the detection performance of SCBGM and the proposed method in rainy night conditions, including the ground-truth bounding boxes. The results show that the proposed method maintains detection accuracy better than SCBGM in such challenging visual environments, further emphasizing the robustness and adaptability of the proposed approach in handling extreme weather scenarios with limited data from the source domain.

Figure 21 illustrates the heatmap visualizations for the four challenging environments (excluding daytime clear conditions) produced by SCBGM and the proposed method. By comparing the heatmaps generated by SCBGM and the proposed method, it is evident that SCBGM, influenced by various environmental factors, exhibits scattered attention across the targets. This results in many objects being missed, and those detected have relatively low confidence scores. In contrast, the proposed method effectively maintains its focus on the relevant targets even in road scenes with restricted visual conditions. Notably, this performance is achieved without using data from these specific scenes for supervised training, further confirming the robustness of the proposed method and its ability to generalize effectively across diverse road scenarios.

Conclusion

This study used an autonomous driving simulation platform to construct a digital twin traffic scenario rich in diverse traffic elements and building models. By flexibly controlling environmental variables such as weather, lighting, and visibility, a variety of digital twin traffic scenes were generated. Using the platform’s camera and target boundary sensors, multi-view images and location data of traffic targets, such as vehicles and pedestrians, were collected, resulting in a large-scale virtual traffic dataset, VDCS. Based on this dataset and real-world data, a multi-task object detection algorithm, MNBGM, was proposed. Through multi-task learning, MNBGM effectively mitigates the noise introduced during domain adaptation when aligning feature distributions. To further enhance domain adaptation, the model incorporates a multi-level discriminator that performs domain adaptation at different feature levels and improves the model’s generalization capability. Experimental results show that the generated VDCS dataset has significant practical value. In the virtual-to-real transfer experiments, the virtual dataset effectively enhanced object detection performance, further demonstrating the superiority of the proposed transfer method, and experiments on complex real-world datasets confirmed its effectiveness. These results indicate that the proposed approach transfers knowledge efficiently and performs well in complex real-world scenarios, demonstrating its adaptability and generalization ability.

Although progress has been made in constructing digital twin scenarios, creating virtual datasets, and designing domain adaptation models, some limitations remain. Future research will focus on the following aspects: (1) expanding the scope of existing scenes to construct more comprehensive and complex traffic scenarios that better simulate real-world traffic diversity; and (2) studying object detection with multimodal data (such as LiDAR, text, spatiotemporal information, and meteorological elements) to improve detection accuracy and robustness.

Data availability

The Cityscapes dataset analysed in the current study is available at https://www.cityscapes-dataset.com/. The VDCS virtual dataset analysed in the current study is available from the corresponding author upon reasonable request.

References

Zhai, C., Wu, W. & Xiao, Y. The jamming transition of multi-lane lattice hydrodynamic model with passing effect. Chaos Solitons Fractals 171, 113515 (2023).

Zhai, C. et al. Phase diagram in multi-phase heterogeneous traffic flow model integrating the perceptual range difference under human-driven and connected vehicles environment. Chaos Solitons Fractals 182, 114791 (2024).

Shuai, Y. et al. YoLite+: a lightweight multi-object detection approach in traffic scenarios. Proc. Comput. Sci. 199, 346–353 (2022).

Zhao, R. et al. Z-YOLOv8s-based approach for road object recognition in complex traffic scenarios. Alex. Eng. J. 106, 298–311 (2024).

Ros, G. et al. The SYNTHIA dataset: A large collection of synthetic images for semantic segmentation of urban scenes. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 3234–3243 (2016).

Gaidon, A. et al. Virtual worlds as proxy for multi-object tracking analysis. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 4340–4349 (2016).

Li, X. et al. The ParallelEye dataset: A large collection of virtual images for traffic vision research. IEEE Trans. Intell. Transp. Syst. 20(6), 2072–2084 (2018).

Li, X. et al. ParallelEye-CS: A new dataset of synthetic images for testing the visual intelligence of intelligent vehicles. IEEE Trans. Veh. Technol. 68(10), 9619–9631 (2019).

Cabon, Y., Murray, N. & Humenberger, M. Virtual KITTI 2. arXiv preprint arXiv:2001.10773 (2020).

Dokania, S. et al. TRoVE: Transforming road scene datasets into photorealistic virtual environments. In: European Conference on Computer Vision 592–608 (Springer Nature Switzerland, 2022).

Li, Y. et al. V2X-Sim: A virtual collaborative perception dataset for autonomous driving. arXiv preprint arXiv:2202.08449 (2022).

Chen, Y. et al. Domain adaptive Faster R-CNN for object detection in the wild. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 3339–3348 (2018).

Shan, Y., Lu, W. F. & Chew, C. M. Pixel and feature level based domain adaptation for object detection in autonomous driving. Neurocomputing 367, 31–38 (2019).

Saito, K. et al. Strong-weak distribution alignment for adaptive object detection. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 6956–6965 (2019).

Kim, T. et al. Diversify and match: A domain adaptive representation learning paradigm for object detection. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 12456–12465 (2019).

Zhu, X. et al. Adapting object detectors via selective cross-domain alignment. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 687–696 (2019).

Khodabandeh, M. et al. A robust learning approach to domain adaptive object detection. In: Proceedings of the IEEE/CVF International Conference on Computer Vision 480–490 (2019).

Sindagi, V. A. et al. Prior-based domain adaptive object detection for hazy and rainy conditions. In: Computer Vision – ECCV 2020, Part XIV 763–780 (Springer International Publishing, 2020).

Hsu, C. C. et al. Every pixel matters: Center-aware feature alignment for domain adaptive object detector. In: Computer Vision – ECCV 2020, Part IX 733–748 (Springer International Publishing, 2020).

He, Z. & Zhang, L. Multi-adversarial Faster-RCNN for unrestricted object detection. In: Proceedings of the IEEE/CVF International Conference on Computer Vision 6668–6677 (2019).

Xu, C. D. et al. Exploring categorical regularization for domain adaptive object detection. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 11724–11733 (2020).

Jia, Z. et al. An efficient diagnostic strategy for intermittent faults in electronic circuit systems by enhancing and locating local features of faults. Meas. Sci. Technol. 35(3), 036107 (2023).

Li, W., Liu, X. & Yuan, Y. Sigma++: Improved semantic-complete graph matching for domain adaptive object detection. IEEE Trans. Pattern Anal. Mach. Intell. 45(7), 9022–9040 (2023).

Cai, Y. et al. HTMatch: An efficient hybrid transformer based graph neural network for local feature matching. Signal Process. 204, 108859 (2023).

Loiola, E. M. et al. A survey for the quadratic assignment problem. Eur. J. Oper. Res. 176(2), 657–690 (2007).

Gao, Q., Wang, F., Xue, N., et al. Deep graph matching under quadratic constraint. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 5069–5078 (2021).

Fu, K., Liu, S., Luo, X., et al. Robust point cloud registration framework based on deep graph matching. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 8893–8902 (2021)

He, J., Huang, Z., Wang, N., et al. Learnable graph matching: Incorporating graph partitioning with deep feature learning for multiple object tracking. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 5299–5309 (2021)

Tian, Z., Shen, C., Chen, H., et al. FCOS: Fully convolutional one-stage object detection. arXiv 2019. arXiv preprint arXiv:1904.01355, 2019.

Long, J., Shelhamer, E., Darrell, T.: Fully convolutional networks for semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 3431–3440 (2015)

Wang, R., Yan, J., Yang, X.: Learning combinatorial embedding networks for deep graph matching. In: Proceedings of the IEEE/CVF International Conference on Computer Vision 3056–3065 (2019)

Redmon, J.: You only look once: Unified, real-time object detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2016)

Ross, T.Y., Dollár, G.: Focal loss for dense object detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2980–2988 (2017)

Lin, T.-Y., Dollár, P., Girshick, R., et al. Feature pyramid networks for object detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2117–2125 (2017).

Geiger, A. et al. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 32(11), 1231–1237 (2013).

Simon, K. & Lausen, G. ViPER: Augmenting automatic information extraction with visual perceptions. In: Proceedings of the 14th ACM International Conference on Information and Knowledge Management 381–388 (2005).

Ros, G., Sellart, L., Materzynska, J., Vazquez, D. & Lopez, A. M. The SYNTHIA dataset: A large collection of synthetic images for semantic segmentation of urban scenes. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 3234–3243 (2016).

Cordts, M., Omran, M., Ramos, S., et al. The Cityscapes dataset for semantic urban scene understanding. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 3213–3223 (2016).

Sakaridis, C., Dai, D. & Van Gool, L. Semantic foggy scene understanding with synthetic data. Int. J. Comput. Vis. 126, 973–992 (2018).

Hsu, C.-C., Tsai, Y.-H., Lin, Y.-Y., et al. Every pixel matters: Center-aware feature alignment for domain adaptive object detector. In: Proceedings of the European Conference on Computer Vision 733–748 (2020).

Tian, K., Zhang, C., Wang, Y., et al. Knowledge mining and transferring for domain adaptive object detection. In: Proceedings of the IEEE/CVF International Conference on Computer Vision 9133–9142 (2021).

Zheng, Y., Huang, D., Liu, S., et al. Cross-domain object detection through coarse-to-fine feature adaptation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 13766–13775 (2020).

Zhang, Y., Wang, Z. & Mao, Y. RPN prototype alignment for domain adaptive object detector. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 12425–12434 (2021).

Vs, V., Gupta, V., Oza, P., et al. MeGA-CDA: Memory guided attention for category-aware unsupervised domain adaptive object detection. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 4516–4526 (2021).

Tian, K., Zhang, C., Wang, Y., et al. Knowledge mining and transferring for domain adaptive object detection. In: Proceedings of the IEEE/CVF International Conference on Computer Vision 9133–9142 (2021).

Xu, M., Wang, H., Ni, B., et al. Cross-domain detection via graph-induced prototype alignment. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 12355–12364 (2020).

He, M., Wang, Y., Wu, J., et al. Cross domain object detection by target-perceived dual branch distillation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 9570–9580 (2022).

Munir, M. A. et al. SSAL: Synergizing between self-training and adversarial learning for domain adaptive object detection. Adv. Neural Inf. Process. Syst. 34, 22770–22782 (2021).

Author information

Contributions

Writing – original draft, Mi Li; software, Xiaolong Pan; validation and writing – review and editing, Chuhui Liu; conceptualization, Zirui Li. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Li, M., Liu, C., Pan, X. et al. Digital twin-assisted graph matching multi-task object detection method in complex traffic scenarios. Sci Rep 15, 10847 (2025). https://doi.org/10.1038/s41598-025-87914-8

DOI: https://doi.org/10.1038/s41598-025-87914-8