Abstract

It is estimated that 2% of all journal submissions across all disciplines originate from paper mills, both creating significant risk that the body of research that we rely on to progress becomes corrupted, and placing undue burden on the submission process to reject these articles. By understanding the business of paper mills, both the technological approaches that they adopt and the social structures that they require to operate, the research community can be empowered to develop strategies that make it harder, or ideally impossible, for them to operate. Most of the contemporary work in paper-mill detection has focused on identifying the signals that have been left behind inside the text or structure of fabricated papers that result from the technological approaches that paper mills employ. As technologies employed by paper mills advance, these signals will become harder to detect. However, fabricated papers do not just need text, images, and data; they also require a fabricated or partially fabricated network of authors. Most ‘authors’ on a fabricated paper have not been associated with the research, but rather are added through a transaction. This lack of deeper connection means that there is a low likelihood that co-authors on fabricated papers will ever appear together on the same paper more than once. This paper constructs a model that encodes some of the key characteristics of this activity in an ‘authorship-for-sale’ network with the aim of creating a robust method to detect this type of activity. A characteristic network fingerprint arises from this model that provides a robust statistical approach to the detection of paper-mill networks. The model suggested in this paper detects networks that have a statistically significant overlap with other approaches that principally rely on textual analysis for the detection of fraudulent papers. Researchers connected to networks identified using the methodology outlined in this paper are shown to be connected with 37% of papers identified through the tortured-phrase and clay-feet methods deployed in the Problematic Paper Screener website. Finally, methods to limit the expansion and propagation of these networks are discussed in both technological and social terms.

Introduction

The study of paper mills (the organised manufacture of falsified manuscripts that are submitted to a journal for a fee on behalf of researchers1) has become a research topic that is quickly establishing its importance in the safeguarding of the integrity of the research process. Indeed, with ongoing technological developments, research in this area is set to play a critical role in safeguarding the integrity of the scholarly record, and may even prove to be existentially important.

It is estimated that 2% of all journal submissions across all disciplines originate from paper mills1, both creating significant risk that the body of research that we rely on to progress becomes corrupted, and placing undue burden on the submission process to reject these articles. By understanding the business of paper mills, both the technological approaches that they adopt and the social structures that they require to operate, the research community can be empowered to develop strategies that make it harder, or ideally impossible, for them to operate.

Most of the contemporary work in paper-mill detection has focused on identifying the signals that have been left behind inside the text or structure of fabricated papers that result from the technological approaches that paper mills employ. Current efforts to automate the detection of unusual research include: the identification of ‘tortured phrases’ in text that are designed to mask plagiarism2; the identification of common phrases and templates that appear to be used by specific paper mills3; the detection of manipulated images4; the manipulation of data5,6; and, the creation of falsified data through statistical methods7,8. These efforts to detect fabricated papers are in constant tension with the technical capabilities of paper-mill operators, with the latest generative AI techniques being explored on both sides9.

To date there has been little research into whether the social structures that enable paper mills to flourish also leave an imprint on the fabricated papers that they create. Research into the social structures that foster scientific misconduct, of which paper mills are only a part, has focused on the systemic drivers within research culture10. It is argued11 that undesirable behaviours associated with paper mills are driven by the precise systems put in place to ensure that research is well-regulated. In recent years these systems have led to highly competitive, metricized and unhealthy approaches rewarding production frequency and volume. Other pressures include requirements to publish in order to be promoted within a clinical career, or meet the requirements of a doctoral program1. Whilst these insights shine a torch on the cultural changes that need to occur to reduce the need for paper mills, they do not in themselves lead to actionable insights that can be used to detect paper-mill output.

We assert that a closer inspection of the social drivers behind paper-mill output can be translated into insights that can be used directly to dismantle paper-mill enterprises. Specifically, we believe that practices fundamental to the business models of paper mills leave a collaboration fingerprint that can be identified using techniques familiar to the ‘Science of Science’12. These fingerprints are difficult to mask without significant social engineering or outlandish payments to bona fide academics, both of which may price paper mills out of the market, and hence should remain a high-quality mechanism for detection even as technological fabrication techniques evolve.

Our key insight is that paper mills do not just fabricate content: convincing paper-mill outputs also fabricate co-authorship networks of the researchers that they involve. Of the estimated 2% of all journal submissions across all disciplines made up by paper-mill submissions, most are submitted by authors who have not previously submitted to the journal or who have not previously published in an academic venue1. Papers with these two attributes already carry an increased risk profile compared with an average paper. Armed with this information, a journal could adapt its review process to apply a higher level of scrutiny to these submissions. Paper-mill papers that successfully make it through the submission and review processes are likely to require a more convincing fabricated author network.

By understanding and identifying the properties of these fabricated co-authorship networks, we propose effective strategies aimed at identifying paper-mill activity. By identifying authors that are very likely to be part of fabricated co-authorship networks, we provide techniques that can make it easier to detect paper-mill output during the submission process; highlight journals that appear to be compromised; and reduce the supply of willing participants by identifying ‘at risk’ researchers within universities.

This paper is arranged as follows. In the remainder of this introductory section we formulate a model of co-authorship activity that allows us to develop a set of conjectures for how these activities could manifest in a co-authorship network. We then discuss strategies for validating the appearance of these characteristics through comparison with established, known paper-mill networks. In the "Methodology" section we describe both the technical and mathematical approach that we have used to implement the model and explore the characteristics described in the current section. In the "Results and analysis" section we present an analysis of these methods and compare them with a set of validation steps proposed in the prior section. Finally, in the "Discussion" section we make recommendations on how to apply the results of this approach both at the institutional and publisher level.

Principal attributes of networking exhibiting paper-mill activity

Research collaborations typically arise from social situations such as shared research group membership, PhD mentoring relationships, or conference and seminar attendance. This is not how paper-mill co-authors form relationships, and the difference in the genesis of these networks leaves a fingerprint. In this section we review the fingerprints left by paper-mill activities in the co-authorship network and encapsulate these as network attributes that we can use to identify the specific strategies used by paper mills.

In the case of an ordinary, non-paper-mill-generated manuscript, the research network behind the paper has emerged organically: when we read such a multi-authored paper, we do not just encounter new ideas and experiments, we read the work as the product of a collaboration that has taken time to build—often representing researchers from many different career stages. New research arises out of existing research communities, connected through time, via a network of supervisions and collaborations. New PhD students are trained by supervisors, who likely become co-authors before branching off into new collaborations forged through projects and conferences.

Normal, organically-generated research networks typically build, connect, and spread slowly early in a career, accelerating in later years. It is not unreasonable to imagine that there exist characteristic levels of connectedness as research careers develop, constrained perhaps by a research network equivalent of a Dunbar number (the maximal number of friends that a person can reasonably call friends given the time and cognitive load of maintaining friendships)13. Co-author network shapes may differ by field of research or location, among other factors, but they have more similarities than differences. Outliers may exist, for example a younger researcher who has ridden on the coat-tails of a prolific senior researcher, but through co-author affiliations we can deduce certain types of relationship and hence explain certain categories of prolific or highly-connected work. Occasionally, true outliers exist (tremendously brilliant or lucky individuals who have happened to work on a new stream of research in atypical ways), and this is why the processes that we elucidate here should not be 100% automated: our methods are designed to highlight outliers, but they do not give the reason behind their outlier status.

Networks involving “ordinary” fraudulent research activity such as plagiarism, data/image fabrication and ghost authorship also develop organically ‘inside the tent’, or endogenously, within established research networks. These endogenous networks also tend to be localised and build over time, with the machinery and knowledge of misconduct retained within the team. While the impact of internal misconduct of this nature is undeniably damaging to our ability to trust science, its reach is limited, as the penalties for such actions often end careers14,15.

Such behaviour is naturally localised as, if it becomes known outside a fairly tight network of individuals, then the likelihood of a report to an institutional ethics board, funder oversight committee, editor, publisher or professional organisation becomes too high and the consequences noted above are much more likely to ensue. While these types of infractions are damaging to the scholarly record, they are perhaps more corrosive to trust both among academics and between the public and research as a publicly-funded enterprise. More directly and practically, funding is potentially diverted to the wrong places (both people and fields), inappropriately disadvantaging ethical researchers who may be passed over in favour of a more prolific but dishonest colleague.

However, paper-mill networks are different from both of the other types that we have discussed above. We can regard this type of network as being ‘outside-the-tent’, or exogenous to existing research networks. In these cases, individual researchers either willingly depart, or are enticed or entrapped into departing, from their usual local networks and purchase authorship-for-sale positions on publications from paper mills. In doing so, they become part of a global research misconduct network. Numerous examples of authorship-for-sale have been documented by Nick Wise via the @author_for_sale Twitter account, with datasets also hosted on Figshare16. As evidenced from these examples, an important part of the authorship-for-sale process is anonymity: you can buy a position on an authorship-for-sale paper, but you will not know who the other authors are.

This network does not have the same constraints as either natural, locally occurring networks or the misconduct networks described above—it is unconstrained by the number of publications it can produce, or by the number of researchers it can recruit. In addition to its structural ability to scale, we propose that global misconduct networks associated with authorship-for-sale paper-mill outputs can be distinguished from organic research networks based on the following attributes:

Network Attribute 1.1.1

(The majority of researchers within authorship-for-sale networks will tend to have a ‘young’ publication age) We define the quantity of “publication age” to be the elapsed time from an author’s first publication to their most recent publication. Most authors on paper-mill papers—at least the authors that have paid to be there—will tend to be early in their career (as measured by publication age). Researchers with established careers have reputations to protect.

The motivations identified by the Committee on Publication Ethics (COPE) for purchasing authorship on a paper are also associated with young researchers. These include1:

1. Doctoral students being unable to graduate unless they have published a paper

2. A clinician in a hospital required to publish before they can apply for or be eligible for a promotion

3. A researcher trying to boost their publication profile in order to appear more accomplished so that they can secure a research grant

The exceptions to this are ‘foundation authors’ (covered shortly in Attribute 1.1.3), who are involved in authorship-for-sale publications for other reasons.

Network Attribute 1.1.2

(High-volume researchers within the authorship-for-sale network should have an egocentric network with a low clustering coefficient) In contrast to the organic research network, the network of researchers associated with paper-mill papers will not be a product of an evolving collaboration. Most ‘authors’ on a fabricated paper will have been associated transactionally (i.e., through the chance purchasing of an author position on the same paper), with a low likelihood that they will ever appear together on the same paper again. Furthermore, if a person purchases an author position on a paper, and does this many times (perhaps in building a profile in order to obtain a grant), the network they form will not reflect an evolving research collaboration of a young researcher. Instead, the created network will look like a highly centralised research hub. Remove the hub, and the network will fall apart into the sub-clusters created by individual publications. This pattern of research collaboration for researchers with a ‘young’ publication age may not be uncommon for a few types of researchers (e.g., statisticians); however, it is unusual for most disciplines.

More formally, for a researcher who is heavily engaged with authorship-for-sale networks, their personal network (their egocentric network) of co-authors will have a low clustering coefficient17, with the only connection between the different papers being the researcher themselves. The clustering coefficient is defined to be the residual density of the researcher’s egocentric network after the removal of their (central) node. This measure is a good proxy for determining the centrality of a researcher to their local community. One caveat here is that this method works poorly in cases where publications include a large number of researchers such as high-energy particle physics.

Network Attribute 1.1.3

(An authorship-for-sale co-authorship network will have a limited number of senior ‘foundation’ authors) As a group of authors on a paper with little publication history is likely to invite extra scrutiny, an effort must be made to fabricate or co-opt researchers with more substantial publication records. It is reasonable to assume that most researchers with established research networks will not have anything to do with fabricated papers. The high degree of competition for tenured research positions and the public nature of research outputs ensure that academic careers cannot be based on high-volume, low-quality paper-mill output. To be discovered is to damage your career18.

Agents employ multiple means to fabricate co-authors with publication histories:

1. Authors can be added to papers without their knowledge (this carries a high risk of discovery)19;

2. Given that authors’ correspondence is handled by the paper mill for authorship-for-sale transactions1, we speculate that authors from previously accepted paper-mill papers could be reused (without permission or recourse for them to complain);

3. Authors with peripheral academic careers (with less to lose) could be co-opted into the process;

4. Authors could be co-opted for financial advantage in order to offset the risk of discovery20.

We can regard each of these attributes as resulting from a strategy (with an accompanying business model) of the paper mill in service of the profitable production of false papers. Each attribute or strategy can be used alone or in combination with others. However, as we have described, each strategy leaves a fingerprint in the academic co-author network.

With the exception of the (high-risk) strategy associated with the first attribute, each of these strategies takes effort to develop. Either profiles must be created based on previously submitted work, or relationships (coercive or otherwise) must be developed. As ‘foundation authors’ provide credibility to a paper, their presence will be required on most fabricated papers. This tension in effort in developing foundation authors and the need to include them on as many paper-mill papers as possible creates the likelihood that these assets will be overused. The result of overused foundation authors is that these researchers will end up with an artificially inflated per-year number of publications over a short space of time. The egocentric research networks that these authors create will also have a low clustering coefficient (Attribute 1.1.2).

Of the attributes described above, with their associated production strategies, we conjecture that an approach focused on building foundation authors from previously submitted authorship-for-sale papers is the most scalable, as it offers opportunities to continue creating profiles, even as the pool of willing participants from the organic research network becomes (potentially) exhausted. As these profiles are constructed from paper-mill output, they are also likely to be associated with researchers that have a reasonably young publication age.

Network Attribute 1.1.4

(High-volume participants in the authorship-for-sale cohort should form a network of low cluster coefficient egocentric networks)

Counterintuitively, although each high-volume participant in an authorship-for-sale network will have a low clustering coefficient for their egocentric network, all high-volume participants should be loosely connected to a number of other high-volume participants via random co-authorship ‘collisions’ on authorship-for-sale papers. The sum of these connections should form a connected graph that is largely separate from the organic research network.

A further property of authorship-for-sale graphs is that their development takes place over a significantly shorter timescale than would be natural for an organically developed graph. For paper-mill authors, it is not time that gradually connects researchers together, but rather the exogenous factor of the scale of the authorship-for-sale provider.

Network Attribute 1.1.5

(Researchers within the authorship-for-sale network will exhibit low levels of mentorship) Egocentric co-authorship networks that arise mainly from patronage to authorship-for-sale papers will not contain patterns of mentorship such as dominant relationships between PhD researchers and their advisors and the collaborative networks to which they are introduced through their community. Although it is not possible to identify advisors and supervisors from their publishing profiles, researchers can be identified by publication age. Within an authorship-for-sale co-authorship network then, we do not expect to find mentorship pairings.

Network Attribute 1.1.6

(Papers within the authorship-for-sale network will likely have a greater number of authors than the discipline norm) As paper-mill activity is a fundamentally commercial activity and hence driven by profit, it follows that efficiency is also a driver. This leads to a further artifact in the network: the more author “slots” that can be sold on a manuscript, the greater the profit margin for that manuscript, since the paper only needs to be produced and published once. Rebalancing the ratio of paid authors to real authors towards real authors is not in the short-term interest of profitability. This property has been observed empirically in an investigation of a Russian paper mill, where it was found that potential paper-mill papers exhibited an elevated average of 3.9 authors per paper, compared with an overall discipline average of 2.6 authors per paper20,21.

Secondary attributes of networking exhibiting paper-mill activity

In the last section, we restricted our attention to how the attributes of co-authorship graphs were affected by paper-mill strategies. In this section, we summarise two further effects that we see in the data, but which do not relate to the co-authorship network.

Paper-mill Attribute 1.2.1

(Papers within the authorship-for-sale network should form a citation cartel) As the nature of paper-mill papers is that they tend to contain either low quality or repetitious work, they are highly unlikely to attract citations from mainstream research. As a result, it is reasonable to expect that citations to paper-mill papers will come from other paper-mill papers.

Paper-mill Attribute 1.2.2

(Evidence of peer-review enablement) Authorship-for-sale networks also require peer review processes that allow the publication of papers of questionable quality. Like foundation authors, complicit peer reviewers are likely to be a relatively scarce resource, because it takes time for a peer reviewer to be inserted into a journal’s trusted network of peer reviewers. This means that where open peer review data are available, it is possible to track the behaviour of referees and evaluate their actions with respect to known paper-mill papers.

Brokered authorship-for-sale as a complicating factor

While we have tried to define robust attributes aligned with data fingerprints that identify the practices of paper mills, paper mills do not always generate their own content. For example, authorship-for-sale also occurs in the context of research papers involving researchers who have carried out their research in good faith. This is possible due to the information asymmetry22 that can exist in the research process when it comes to authorship. Not all authors on a paper may be aware of the exact contributions of other authors; perhaps only the lead author is. This asymmetry leaves the door open for an unscrupulous lead author to add non-contributing authors to a paper, facilitated by a paper mill, without other legitimate authors being aware. In this example, research that is carried out and written up in good faith by some of the authors on a paper can still end up being brokered by a paper mill20.

In these cases, although the contributing authors’ research networks will look ‘normal,’ the networks of the researchers who have purchased authorship spots are still likely to have a low cluster coefficient.

The presence of brokered authorship-for-sale articles means the authorship-for-sale research network will be connected to the organic research network. This in turn means that not all researchers that are connected to an authorship-for-sale network will have done something wrong. Use of any methodology that identifies unusual author practice in a way that would be detrimental to an author must also require individual investigation.

Methodology

Datasources

To analyse network patterns in the literature we used Dimensions from Digital Science23. Dimensions is well-positioned for the analysis that we perform here as:

(a) Dimensions’ inclusion criteria are based on an output having a unique identifier rather than on an editorial policy23 and hence do not implicitly limit the range of publication venues that can be analysed. This approach means that the full bad-actor network is available for analysis and, in particular, authorship-for-sale networks can be tracked across all publication venues;

(b) Dimensions is frequently used in paper-mill analyses, notably tortured-phrase analyses (see, for example2). As of October 2023, links to Dimensions have been included in 3952 posts on PubPeer24, the social network for public research review. At the time of publication, PubPeer can be searched for use of Dimensions via the URL https://pubpeer.com/search?q=*app.dimensions*;

(c) All of the Dimensions data is available as a dataset on Google Cloud Platform on BigQuery25, facilitating the global inspection of trends, and allowing the easy addition of other external datasets26, such as Retraction Watch27,28, ORCiD29, and tortured phrases2.

Detecting unusual collaboration signals in the scholarly record

To attempt to detect a ‘paper-mill co-authorship signal’ in the literature, we first establish network shapes associated with authorship-for-sale that can be identified. Based on our theorised shape of an ‘authorship-for-sale’ researcher (see "Principal attributes of networking exhibiting paper‑mill activity" section), these network shapes should exhibit a low clustering coefficient, where most of the connections between authors go through the researcher around which the egocentric network is constructed (Attribute 1.1.2). Importantly, in order to be positively identified in our analysis, at least a subset of these networks need to be rare enough to be distinguished from normal patterns of research collaboration.

One tool that we use extensively is the concept of “publication age”, which we defined in Attribute 1.1.1. Through basic calculations, we access this quantity by using the researcher disambiguation data in Dimensions. Each researcher in Dimensions is mapped to an identity, which is, wherever possible, linked to an ORCiD. As we have access to disambiguated profiles of researchers, we assess the date of the first paper that we know to be associated with that profile in Dimensions and calculate the separation between that date and the date of the most recent paper associated to the same researcher profile. It should be acknowledged that the researcher profiles in Dimensions are not completely accurate as the process for their creation is, in part, statistical in nature and relies on data availability. This means that publication age can only be used statistically in our work. However, the profiles, data and calculated ages are sufficiently robust as to allow meaningful analysis.
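As an illustration of the publication-age calculation described above, the following is a minimal sketch rather than the pipeline used in this paper. It assumes that the publication dates for one disambiguated researcher profile have already been retrieved; the function name `publication_age_years` and the use of 365.25 days per year are illustrative assumptions.

```python
from datetime import date


def publication_age_years(publication_dates):
    """Elapsed time, in years, between a profile's first and most recent paper.

    `publication_dates` is assumed to be a non-empty list of `datetime.date`
    values for a single disambiguated researcher profile.
    """
    if not publication_dates:
        raise ValueError("at least one publication date is required")
    first, last = min(publication_dates), max(publication_dates)
    # 365.25 days per year is an approximation chosen for this sketch.
    return (last - first).days / 365.25


# Hypothetical profile with three papers between 2018 and 2021.
example_dates = [date(2018, 3, 1), date(2021, 3, 1), date(2019, 7, 15)]
print(round(publication_age_years(example_dates), 2))
```

A profile with a single paper has a publication age of zero under this definition, which places it among the ‘youngest’ researchers.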

Describing network shapes

This methodology also relies on the researcher disambiguation described above. In creating the graphs under consideration, we use the fact that each individual researcher in Dimensions is identified by a unique researcher ID. We define the shape of a researcher’s immediate co-authorship network to be the depersonalised collection of edges and nodes for a given publication year. We call this the network “shape”, as it is unconcerned with who the collaborating researchers are, what institutions they are at, where papers have been published or any other identifying material. It is merely the underlying structure of the collaborative graph that is important. We then parameterise these collections of edges and nodes by the calculation of two quantities:

(a) The number of researchers (nodes) in their network;

(b) The clustering coefficient C of the network, calculated as the number of edges that remain in the network when the central researcher is removed, divided by the number of possible edges between the remaining N nodes:

$$\begin{aligned} C = \frac{2(E-N)}{N(N-1)}, \end{aligned}$$ (1)

where N is the total number of nodes minus one (that is, the number of co-authors), and E is the total number of edges in the egocentric network, so that E - N is the number of edges that remain once the central researcher and the N edges attached to them are removed.
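The residual-density calculation can be sketched in a few lines. This is an illustrative sketch only: it uses plain Python rather than the graph library used for the analysis, it assumes each paper is given as a list of researcher IDs, and it assumes that only papers including the central researcher contribute edges. The function name `clustering_coefficient` and the example IDs are hypothetical.

```python
from itertools import combinations


def clustering_coefficient(papers, ego):
    """Density of ego's co-author network after ego has been removed.

    `papers` is a list of author-ID lists. Only papers that include `ego`
    are considered (an assumption made for this sketch).
    """
    ego_papers = [set(p) for p in papers if ego in p]
    alters = set().union(*ego_papers) - {ego}
    n = len(alters)
    if n < 2:
        return 0.0  # no pair of co-authors exists, so no residual edge is possible
    residual_edges = set()
    for authors in ego_papers:
        # Every pair of co-authors on the same paper shares one edge.
        for a, b in combinations(sorted(authors - {ego}), 2):
            residual_edges.add((a, b))
    return 2 * len(residual_edges) / (n * (n - 1))


# Hub-like pattern: three papers, each with a fresh pair of co-authors.
hub = [["X", "a", "b"], ["X", "c", "d"], ["X", "e", "f"]]
# Stable team: the same co-authors appear on every paper.
team = [["X", "a", "b", "c"], ["X", "a", "b", "c"]]
print(clustering_coefficient(hub, "X"), clustering_coefficient(team, "X"))
```

In the hub-like example, the only edges left after removing the central researcher are the three within-paper pairs out of fifteen possible pairs, whereas the stable team remains fully connected.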

For each shape, we can then use the two quantities above to assign a uniqueness measure across the dataset. This means that every author-year combination was assigned the two quantities above, and the frequency of those quantities was compared across all such author-year pairings. To calculate the research network shape, only journal articles of type research article were used (e.g., no commentaries or reviews). Publications with more than twenty authors were also excluded to avoid the distortions that publications with high numbers of authors create in local co-authorship networks. If paper-mill activity were to be observed in papers with a high number of authors, this exclusion, and the methodology to establish research network shape, would need to be revisited.
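One way to picture the uniqueness measure is as a relative frequency of each (node count, clustering coefficient) pair across all author-year combinations. The sketch below is an assumption about how such a frequency could be computed, not a description of the implementation used here. In particular, rounding the clustering coefficient to two decimal places is a hypothetical binning choice, and `shape_frequencies` is an illustrative name.

```python
from collections import Counter


def shape_frequencies(shapes, precision=2):
    """Relative frequency of each author-year's network shape.

    `shapes` maps (researcher_id, year) to (node_count, clustering_coefficient).
    Coefficients are rounded to `precision` decimals so that near-identical
    shapes are counted together (an assumed binning choice).
    """
    binned = {key: (n, round(c, precision)) for key, (n, c) in shapes.items()}
    counts = Counter(binned.values())
    total = len(binned)
    return {key: counts[shape] / total for key, shape in binned.items()}


# Hypothetical author-year shapes: three common shapes and one rare one.
example = {
    ("r1", 2020): (6, 0.2),
    ("r2", 2020): (6, 0.2004),
    ("r3", 2020): (6, 0.2),
    ("r4", 2021): (40, 0.01),
}
for key, frequency in shape_frequencies(example).items():
    print(key, frequency)
```

Author-years whose shape has a low relative frequency are the ones that would be treated as rare in the sense used in this section.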

Researchers were also allocated approximate career stages based on their publication age in five-year increments (Table 1). Throughout this analysis, career stage labels are used for convenience, although the actual status of an individual researcher within a cohort may differ in reality.

The model we constructed through the attributes listed in the previous section suggests that rare network shapes should be associated with researchers with a ‘young’ publication age (Stages I and II) (Attribute 1.1.1). Further, our profile of ‘authorship-for-sale’ researchers suggests that these networks should not exhibit strong characteristics of mentorship (Attribute 1.1.5), so we impose a further restriction that the most frequent collaborator within these networks should also be a young researcher (Stages I–III). The age restriction on mentorship is relaxed slightly so that, for instance, we do not require postdocs at the top of the range to collaborate only with somebody younger than them.

To reduce the chances of creating false positives within our initial set of selected researchers, we further restrict the set to those researchers that have published more than 20 publications in the same calendar year. This profile best represents either high-frequency paper-mill patrons, or foundation authors with a young publication age. This restriction to publication year also imposes a limitation that should be noted, as it artificially excludes a part of the co-authorship graph that may contain fraudulent activity: some researchers that would otherwise be captured will be missed because their publication peak falls across two calendar years.
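The selection criteria described so far can be summarised as a simple filter over author-year records. The following is a sketch under stated assumptions: career stages are supplied as integers (1 for Stage I, 2 for Stage II, and so on) rather than derived here, the record type `AuthorYear` and function `is_candidate` are hypothetical names, and the rarity of the network shape is not included because no numerical threshold is stated in this section.

```python
from dataclasses import dataclass


@dataclass
class AuthorYear:
    researcher_id: str
    year: int
    stage: int                   # career stage of the researcher (1 = Stage I)
    top_collaborator_stage: int  # career stage of the most frequent collaborator
    publications: int            # research articles published in that calendar year


def is_candidate(record, min_publications=20):
    """Apply the young-author, low-mentorship and high-volume restrictions."""
    return (
        record.stage <= 2                          # Stages I and II only
        and record.top_collaborator_stage <= 3     # most frequent collaborator in Stages I to III
        and record.publications > min_publications # more than 20 papers in one year
    )


# Hypothetical records for illustration.
records = [
    AuthorYear("r1", 2020, stage=1, top_collaborator_stage=2, publications=35),
    AuthorYear("r2", 2020, stage=1, top_collaborator_stage=5, publications=40),
    AuthorYear("r3", 2020, stage=2, top_collaborator_stage=3, publications=20),
]
print([r.researcher_id for r in records if is_candidate(r)])
```

In this example, only the first record passes: the second has a senior most-frequent collaborator, and the third has exactly 20 publications rather than more than 20.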

Finally, our model asserts that authorship-for-sale authors should form a loosely connected network (Attribute 1.1.4). To implement this restriction, we take the largest connected graph of researchers for each publication year, based on the publications from that year and the following year. We have limited the graph to two publication years as we are not interested in measuring the growth of organic research collaboration, but rather the suggested random connections of an authorship-for-sale network. All graph calculations within the paper were undertaken using the Python NetworkX library30.
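The largest-connected-graph step can be illustrated with a small standard-library sketch. It is not the NetworkX code used for the analysis. It assumes that papers are supplied as (publication year, author IDs) pairs and that two selected researchers are linked only when they appear on the same paper; whether links through non-selected co-authors should also count is left open here. The function name `largest_connected_group` is hypothetical.

```python
from collections import defaultdict, deque
from itertools import combinations


def largest_connected_group(papers, candidates, year):
    """Largest connected set of candidate researchers over `year` and `year + 1`.

    `papers` is an iterable of (publication_year, author_ids) pairs and
    `candidates` is the set of researcher IDs selected for that year.
    """
    adjacency = defaultdict(set)
    for pub_year, authors in papers:
        if pub_year not in (year, year + 1):
            continue  # only the chosen year and the following year are used
        selected = sorted(set(authors) & candidates)
        for a, b in combinations(selected, 2):
            adjacency[a].add(b)
            adjacency[b].add(a)

    best, seen = set(), set()
    for start in adjacency:
        if start in seen:
            continue
        # Breadth-first search to collect one connected component.
        component, queue = {start}, deque([start])
        while queue:
            node = queue.popleft()
            for neighbour in adjacency[node] - component:
                component.add(neighbour)
                queue.append(neighbour)
        seen |= component
        if len(component) > len(best):
            best = component
    return best


# Hypothetical papers: a, b and c are chained together across 2020 and 2021.
papers = [
    (2020, ["a", "b", "x"]),
    (2021, ["b", "c"]),
    (2022, ["c", "d"]),
    (2020, ["e", "f"]),
]
print(sorted(largest_connected_group(papers, {"a", "b", "c", "d", "e", "f"}, 2020)))
```

The 2022 paper is ignored for the 2020 cohort, so researcher d is not joined to the group even though d later co-authors with c.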

Because the methodology above focuses on unique network shapes and other unusual attributes, we shall refer to the authors it identifies as ‘unusual’. It is important to stress that in every case, investigative work will still be required to ensure that unusual collaborations do not have another explanation.

Validation methods

Having identified a yearly cohort of researchers that meet our profile of an ‘authorship-for-sale’ researcher, we then seek to assess how well this correlates with other datasets with high signals of paper-mill activity, including the tortured-phrase dataset and the Retraction Watch database. Whilst neither of these sources will account for all paper-mill activity, they provide useful indicators to validate our work.

1. Tortured-phrase dataset. Tortured phrases2 are awkward English phrases substituted for common language terms (e.g., ‘flag to commotion’ instead of ‘signal to noise’). While a small portion of these terms may have been used legitimately by non-native English writers, their presence is generally accepted as an indicator of a fabricated publication. A list of papers containing problematic phrases is available from the Problematic Paper Screener at https://www.irit.fr/~Guillaume.Cabanac/problematic-paper-screener. Helpfully, each paper in the list is identified by its Dimensions publication id, allowing an easy comparison based on matching identifiers. More than just a dataset, the Problematic Paper Screener represents a new collaborative research-integrity investigation methodology. Issues detected via algorithms (such as tortured phrases) can then be investigated and verified by a volunteer research investigation community, with completed investigations documented on PubPeer. The net result of this workflow is that the tortured-phrase dataset receives significant ongoing review. As well as tortured phrases, the Problematic Paper Screener dataset contains information on papers that appear to have clay feet by citing other problematic papers. Based on our assertion that paper-mill papers are likely to cite other paper-mill papers (Attribute 1.2.1), we have also included clay-feet publications in our comparative analysis. To further assess the effectiveness of using this network signal as an additional method to identify paper-mill activity, we compare the percentage of implicated papers in the tortured-phrase dataset to that in randomly generated sets of research articles from the same time period. It is important to stress that the methods represented by the Problematic Paper Screener, including “clay feet” and “tortured phrases”, create indicators that can lead to allegations of paper-mill activity. Should allegations be raised for any given paper, formal investigations still need to be undertaken.

2. Retraction Watch data. Retraction Watch27, a blog series commencing in 2010, has the most comprehensive database of retracted scholarly publications. Within the Retraction Watch dataset in 2021, 71% of publications had been retracted due to questionable or fraudulent research or authorship practices. This percentage is also in broad agreement with previous partial studies31,32 from 2012 and 2016.

3. ORCiD data. Finally, we analyse the network for evidence of peer review enablement (Attribute 1.2.2) by identifying researchers within the network with unusually high peer review activity within the ORCiD public dataset29.

Results and analysis

In this section, we take the model that emerges from the attributes discussed in earlier sections of this paper and apply it to the graph generated from the data sources described in the "Methodology" section. We work through an example in detail and test these results against our validation criteria.

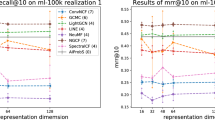

To establish whether unique network shapes (the specific collaboration patterns that emerge from the attributes that describe our model) provide a robust signal for the detection of authorship-for-sale paper-mill practices, we first establish whether unique network shapes can be identified successfully using the methods suggested (Methods "Detecting unusual collaboration signals in the scholarly record", "Describing network shapes" section). For publication year 2022, Table 2 breaks down the percentage of researchers by approximate age into unique-network-shape frequency bins. Each bin is an order of magnitude of frequency. Explicitly, the column labelled “\(> n=0\)” refers to network shapes that occur fewer than 10 times in the whole of the 2022 co-authorship graph. Thus, only 0.10% of Stage I researchers and 0.43% of Stage II researchers have network shapes that have been repeated fewer than 10 times.

Stage I and II researchers are more likely than not to have a network with a low clustering coefficient (Attribute 1.1.2); however, there remains a large tail of highly connected networks (see Figure 1). Figure 1 shows a breakdown of the population of Stage I and II researchers with highly unique collaboration patterns using further attributes. To be clear, this figure only depicts data relating to the 0.53% of researchers identified in the top-left cells of Table 2. The y-axis is frequency and the x-axis is binned by clustering coefficient. The light blue histogram shows the frequency of researchers overall whose clustering coefficient falls in each bin; the green histogram is more restrictive, showing the number of researchers associated with more than 20 publications in 2022; the dark blue histogram (hardly distinguishable from the red in shape) shows the frequency of researchers with more than 20 papers whose most frequent collaborator is another Stage I–III researcher; the red distribution shows the frequency of researchers associated with more than 20 papers in 2022 and where at least 50% of their immediate collaboration network consists of young (Stage I–II) researchers.

Histogram of Stage I and Stage II researchers with network shapes that have been repeated fewer than 10 times in 2022. Researchers are distributed across the x-axis by their clustering coefficient. The middle green histogram represents the subset of researchers that have produced more than 20 publications in the year. In the bottom red subset, the most frequent collaborator is a Stage I–III researcher, and greater than 50% of the network is made up of Stage I–II researchers.

If our hypothesis is correct and our model detects paper-mill activity, then we can regard Fig. 1 as defining bands of likelihood that a researcher is involved in such a network. The light blue area consists of authors in possession of a highly unique collaboration network. This may not in and of itself be sufficient to indicate that someone is involved in a paper mill; it is merely a statement that their collaboration pattern is irregular for someone of their age, for which there may be many reasons: discipline, geographical situation of university, connectedness of PhD advisor/supervisor, nature of their specific research project, and so on. However, once we enter the green region we are focusing on young researchers who have highly unique collaboration networks and who are producing more than 20 papers in a single year. (We remind the reader that high-energy physics papers and the like with hundreds or thousands of authors have been removed from this analysis.) To be productive at this level so early in a career and to have a highly unique network demonstrates a mode of research that requires further explanation.

With each additional restriction that we impose, the peak of the frequency distribution moves leftward toward the region of lower clustering cohesiveness (lower clustering coefficient), with a heavily suppressed tail at higher levels of connectedness/clustering. What this means in practical terms is that this is the region in which fewer and fewer authors in the network are tightly coupled or come from the same existing networks; put simply, they are less likely to actually know each other. If the full context of the situation is taken into account, this seems particularly unusual. The confluence of young researchers without a more senior connector (cf. Attribute 1.1.5), and access to extended disparate networks with high production levels, is certainly unusual if not impossible.
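To make the clustering coefficient concrete, the sketch below computes it for two toy researchers with NetworkX's `clustering` function. The graph and node names are invented for illustration; the coefficient is the fraction of a researcher's co-author pairs who have also co-authored with each other.

```python
import networkx as nx


def local_clustering(coauthor_graph, researcher):
    """Share of a researcher's co-author pairs that are themselves co-authors."""
    return nx.clustering(coauthor_graph, researcher)


g = nx.Graph()
# "hub" publishes with four people who never publish with one another.
g.add_edges_from([("hub", f"x{i}") for i in range(4)])
# "core" belongs to a tightly knit group in which everyone has co-authored.
g.add_edges_from([("core", "p"), ("core", "q"), ("p", "q")])

hub_cc = local_clustering(g, "hub")    # no co-author pair is connected
core_cc = local_clustering(g, "core")  # every co-author pair is connected
```

A researcher like `hub` sits at the far left of the histogram, which is the pattern the additional restrictions concentrate on.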

One explanation that the criteria applied in Fig. 1 are likely to rule out is that these signatures are associated with a new type of brilliant researcher. When we think of the rise of a talented researcher, we would expect them to be producing results that garner high levels of attention from serious established researchers, but this is not the behaviour that we’re seeing here. Rather, it is a different type of “brilliance”: one in which researchers are highly productive in volume but choose to publish in less recognised and less mainstream venues; one in which they choose by preference to work with younger researchers only; one in which they connect seemingly at random to many different networks and simultaneously make and manage, but then don’t maintain, a vast number of research relationships. At one level this is perhaps a little reminiscent of some social networking behaviour33, but this is not how research tends to work.

As Dunbar13 points out, there is a limit to the number of friendships that we can maintain at different levels of intensity. Research relationships are not like friendships in that they require significantly more work to maintain and hence, at any one time, one can retain relatively fewer research relationships that form a coherent core to the research on which one is working. We do not think it likely that this signature suggests a newly emergent class of brilliant researcher, whose characteristic is to be massive connectors of lower level research. Even if this were to be the case, it might be reasonable to question the value of this type of activity to the research enterprise. The green-shaded region of Fig. 1 is unusual due to volume of output but we hope that it is clear from the discussion above that the dark blue and red regions highlight behaviour that is highly likely to be worthy of further investigation.

We now turn to a further filter, as outlined in Section 2 and related to Attribute 1.1.4. This filter makes the assumption that most influential (and detectable) paper-mill networks need to be connected due to the mechanism behind their creation. As a result, we seek the largest connected component of that graph and filter out any small, disconnected components as these are much less likely to be associated with paper-mill activity.

By plotting the signal that derives from the application of these restrictions together in Fig. 2, we can observe the relative change in the unusual author population over time. Overall, the global population of unusual researchers (those researchers who meet all our criteria from the discussion of Fig. 1 above) remains relatively small compared with the overall number of Stage I and Stage II researchers (our overall normalisation factor). Prior to 2018, around 0.020% of the population could be considered to require further investigation. From 2018 there is a transition in behaviour that doubles the relative occurrence of unusual researchers over a 4-year period. This appears to be driven by the development of a large, connected network to which a significant proportion of unusual authors are linked. Indeed, the largest connected component of this group of authors goes from accounting for 0.002% of authors between 2010 and 2017 (or around 10% of the unusual author cohort in that period) to 0.034% of authors in 2022, meaning that around 75% of all unusual authors identified by our model were participants in the largest connected component of the network.

We believe the inflection point that takes place in this plot in 2017 demonstrates clear evidence that there is a coordinating influence at the centre of these activities that started at scale in 2017. From a technical perspective, we argue that the behaviour change in 2017 is what is termed a second-order phase transition in the language of physics, a behaviour often seen in random-graph dynamics where the number of nodes in the graph is growing with a fixed probability34.

Development of the unusual author cohort as a percentage of all Stage I and II researchers by year. The two areas are overlapping and not cumulative. The area between the top of the light blue region and the x-axis represents all unusual Stage I and II researchers as a percentage of all Stage I and Stage II researchers. The dark blue area shows just those that are part of the largest connected component of the unusual co-author network defined by our model.

Although random in nature, there are two important characteristics of the unusual author graph that make it distinguishable from random connections of non-paper-mill publications. To baseline our results, we studied the network properties of other random samples of the same number of researchers with similar properties to our sample of unusual researchers. When compared with other equivalent samples of researchers in 2021 (Fig. 3), with a clustering coefficient of greater than 0.4 and more than 20 publications, the graph created by the unusual author cohort had three times the density of the 190 randomly generated samples (0.004 vs 0.0012, SD 0.000047). The graph of unusual authors also differs from the randomly sampled set in that the largest connected component of researchers represents a smaller ratio of the total researchers: 0.66 compared with 0.80 (SD 0.019) for the sample graphs. A clustering coefficient of 0.4 was chosen as most of the sample of the unusual author set falls below that cutoff; however, the comparison remains unchanged if the cutoff is lowered to 0.1 (N = 50, density = 0.0012, largest component ratio = 0.91) or increased to 0.9 (N = 210, density = 0.001, largest component ratio = 0.76).
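The two statistics used in this comparison, graph density and the share of nodes in the largest connected component, can be computed with NetworkX along the following lines. This is a sketch under assumptions: the function names and the way comparison samples are passed in are hypothetical, and at least two sample graphs are needed for the standard deviation.

```python
import statistics

import networkx as nx


def graph_summary(graph):
    """Return (density, share of nodes in the largest connected component)."""
    if graph.number_of_nodes() == 0:
        return 0.0, 0.0
    largest = max(nx.connected_components(graph), key=len)
    return nx.density(graph), len(largest) / graph.number_of_nodes()


def baseline(sample_graphs):
    """Mean and standard deviation of both statistics across comparison samples."""
    densities, ratios = zip(*(graph_summary(g) for g in sample_graphs))
    return {
        "density": (statistics.mean(densities), statistics.stdev(densities)),
        "largest_component_ratio": (statistics.mean(ratios), statistics.stdev(ratios)),
    }


# Toy comparison samples: a four-node chain and four nodes with a single edge.
chain = nx.path_graph(4)
sparse = nx.Graph()
sparse.add_nodes_from(range(4))
sparse.add_edge(0, 1)

reference = baseline([chain, sparse])
```

The cohort graph's own `graph_summary` values can then be compared with the means and standard deviations in `reference`.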

These results are consistent with the authorship-for-sale model expressed above in that there are two different populations of publications that authors are randomly connecting within. In the authorship-for-sale set, the theory would suggest that authors are (mostly) randomly colliding over paper-mill papers. In the equivalent samples, authors are (mostly) colliding over publications within the organic research graph. As the set of paper-mill papers is (thankfully) smaller than the set of non-paper-mill papers, the ‘collision’ probability of two authors co-authoring on the same paper should be higher, and therefore the graph more dense. This probability is increased further if authorship-for-sale papers have a higher average number of authors (Attribute 1.1.6). If the probability of collision is higher, one would also expect the percentage of researchers in the largest connected graph to be higher. The fact that it is lower suggests that there are a number of false positives in the unfiltered unusual author set. Because these false positives are publishing within the organic research graph, they do not typically collide with researchers that are authors on paper-mill papers. For this reason, filtering on the largest connected component of the unusual author graph can be seen as an appropriate additional tactic for reducing the number of false positives in the sample. The effectiveness of this approach will be evaluated in the next section.

For the period between 2020 and 2022, 2056 researchers have been selected as having a profile that meets our unusual author criteria. It should be stressed that having a profile that matches the criteria of an authorship-for-sale profile does not in itself mean that an individual researcher has participated in research misconduct. How well these profiles align in aggregate with other measures and indicators that may imply research misconduct will be assessed in the next section.

Assessing the effectiveness of the ‘authorship-for-sale profile’ to identify authors that participate in questionable research

To assess the veracity of the analysis in Fig. 2 we would ideally turn to a completely different data source that relies on distinct properties of detection to the model that we have proposed. In this section we use such a dataset to verify our findings.

The Problematic Paper Screener (which we will refer to as the PPS method or simply ‘the PPS’ for brevity), described in our comments on the tortured-phrase dataset in the "Validation methods" section, provides an ideal external verification mechanism. The way in which the PPS identifies problematic papers is purely linguistic and makes use of full-text analysis, not relying at all on network structure. Thus, the two methods are complementary and may be used to independently verify each other. In addition, the PPS approach identifies papers rather than unusual authors directly, and this point of difference is another aspect of the two approaches that delineates them and strengthens their independence.

Proportion of papers exhibiting tortured phrases as a percentage of all journal articles produced in a year. The tortured-phrase dataset was extracted from the Problematic Paper Screener. The drop in papers in 2022 might be explained by the implementation of tortured-phrase detection strategies in submission processing workflows, or by a reaction to detection by paper mills.

As an initial test to assess the high-level correlation between the tortured-phrase methodology and the network methodology, we examined the number of research articles identified by the PPS method as a proportion of the total number of journal articles recorded in Dimensions each year (see Fig. 4). It is at once easy to see the shared features of this figure and our findings shown in Fig. 2. The overall shape of Fig. 4 is similar to that of Fig. 2, with an inflection point in the PPS method showing in 2016 rather than 2017. Of course, our data shows the percentage of researchers rather than of papers, as in Fig. 4. While these are two different quantities, they are clearly correlated up to 2021. As a percentage, the flat region prior to 2016 in the PPS data is in good agreement with the output reported in our model (the PPS shows between 0.005% and 0.01% of total output in this period, compared with 0.002% to 0.023% of researchers in our model). The broader range in our model compared with that of the PPS makes sense as one model is modelling people and the other is modelling papers. However, there is a fundamental coupling between the two. The co-authorship graph has papers as edges and people as nodes, but the PPS model effectively reveals the dual of this graph: the graph with papers as nodes and co-authors as edges. In 2022, the percentage of PPS papers that have tortured phrases or clay feet drops away. We assert that this is a real effect and not due to end-of-year effects or incomplete data. Rather, it is an example of the dynamic nature of the interplay between publishers and paper mills as each adopts and adapts to the deployment of new technologies.

The dual relationship between the graphs that underlie this model means that scaling effects should be correlated (as we observe) but the actual scales of the two graphs and their normalisation are not necessarily fixed. As a result, one can look at variations but one should not read too much into absolute values. It is also interesting to note that the PPS method more quickly identifies paper mills (2016 versus 2017 inflection point), but this too makes sense as our method requires connections to be made in the network (thus multiple papers need to be published), whereas the PPS method can detect a single paper as soon as the manuscript has been parsed. This speed advantage goes away as soon as the network is established, as any author who has been drawn into the orbit of an unusual author network can instantly be identified. Thus, we should expect the graphs to have a more similar shape as paper-mill practices develop.

To understand whether the similarity between Figs. 2 and 4 is more than superficial, we need to assess the direct overlap between the papers identified directly by the PPS method and the papers identified through affiliation with identified authors.

To create a tortured-phrase dataset for comparison, we focused on publications in the 2020–2022 year range with a document type of ‘research article’. Once a paper is identified in the Problematic Paper Screener, reviewers are invited to individually assess it and lodge a report in PubPeer35. In this analysis we have used both assessed and non-assessed publications. Although papers with fewer than five tortured phrases are not shown on the public part of the PPS (to avoid showing false positives), some false positives will remain. Additionally, publications were required to have at least one author that had been resolved to a researcher_id within Dimensions, as papers with no resolved authors would be invisible to our analysis. Of the 3739 publications identified, 10% (367) included a researcher identified in the ‘authorship-for-sale’ profile, and 37% (1371) included an author that had co-authored with a researcher in the ‘authorship-for-sale’ set. Overall, via this more inclusive measure, 72% of the researchers in the ‘authorship-for-sale’ cohort could be connected back to the tortured-phrase dataset, compared with 16% who were direct authors.
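The direct and one-degree overlap figures reported here amount to two set-intersection counts over the flagged papers. The sketch below shows one way to compute them; the data layout and function names are hypothetical, and it assumes the more inclusive share counts papers with either a cohort member or one of their co-authors.

```python
def one_degree_neighbours(coauthors, cohort):
    """Researchers who have co-authored with a cohort member but are not in the cohort.

    coauthors: dict mapping researcher id -> set of co-author ids (assumed layout)
    """
    linked = set()
    for researcher in cohort:
        linked |= coauthors.get(researcher, set())
    return linked - cohort


def overlap_rates(papers, cohort, coauthors):
    """Return (direct share, inclusive share) of papers touching the cohort.

    papers: dict mapping paper id -> set of resolved researcher ids (assumed layout)
    """
    if not papers:
        return 0.0, 0.0
    extended = cohort | one_degree_neighbours(coauthors, cohort)
    direct = sum(1 for authors in papers.values() if authors & cohort)
    inclusive = sum(1 for authors in papers.values() if authors & extended)
    return direct / len(papers), inclusive / len(papers)


cohort = {"u1"}
coauthors = {"u1": {"a", "b"}}
papers = {"p1": {"u1", "z"}, "p2": {"a", "y"}, "p3": {"y", "z"}, "p4": {"b"}}
direct_share, inclusive_share = overlap_rates(papers, cohort, coauthors)
```

Applied to the counts in the text, the same ratios are 367 of 3739 publications for the direct match and 1371 of 3739 for the co-author match.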

There was no difference in the proportion of tortured-phrase or clay-feet publications between the matched and unmatched sets; however, there were some differences in authorship patterns. Unsurprisingly, papers with fewer authors were less likely to be matched back to the ‘authorship-for-sale’ network. Articles in the unmatched set contained an average of 3.7 authors, compared with 5.0 authors for the matched set. This difference increases when only authors that can be matched back to a unique researcher ID are taken into account (2.6 compared with 4.4 authors). Publications within the matched set were also far more likely to have international co-authors (50%, vs 8% for the unmatched set). Of the unmatched set, 56% were from a single institution, vs 27% for the matched set. The bias towards a greater number of authors as well as a greater percentage of international co-authors aligns well with the expected behaviour of authorship-for-sale networks (Attribute 1.1.6).

A further test that we conducted aggregated unusual papers by a variety of different axes to search for implicit biases in the data (or, put another way, to see whether the datasets share similar findings). Upon examining geographical aggregation, we find that the profile of matched and unmatched articles also differed by country of author affiliation. For the largest two country cohorts in the top 10, only 25% of articles with Chinese authors were matched, with 46% for India. The highest rates were for Saudi Arabia and Pakistan (94% and 93%) (Table 3). This difference reflects the skew in the countries of authors identified by the authorship-for-sale profile; however, given the greater prevalence of domestic and single-institution papers in the unmatched set, it may also reflect a split in the way paper-mill papers are constructed, with one model focusing on selling entire papers, and another focusing on selling authorship places.

The data in Table 3 suggests that there is a good correlation between the tortured-phrase and unusual author datasets; however, a final step is required to show that these overlap percentages are significant. We must test against a null model; specifically, we want to see if the model is susceptible to detection of false positives. In other words, can this method discriminate between an unusual paper signal and background publication noise? To do this, we use a numerical Monte Carlo simulation in which we randomly selected groups of publications from Dimensions. These groups were designed to be of the same size and from the same time period as the tortured-phrase dataset. We then compared the incidence of model-identified authors in these random sets with their incidence in the tortured-phrase dataset. Authors from the ‘authorship-for-sale’ profile appeared 1.2% of the time (SD 0.15). Authors that had previously collaborated with one of the authors in the authorship-for-sale profile appeared on 16% of papers (SD 0.63). These rates are significantly lower than the unusual author overlap figures of 10% (direct) and 37% (collaborated) for the tortured-phrase dataset, indicating that our method for identifying papers belonging to unusual authors is effective at identifying paper-mill content without also identifying a high number of false positives.
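A null model of this kind can be sketched with the standard library alone. The example below repeatedly draws random groups of papers and records the share containing a flagged author; the function names, data layout, and fixed seed are assumptions for illustration, and the actual sampling from Dimensions is not reproduced here.

```python
import random
import statistics


def null_overlap(all_papers, flagged_authors, sample_size, n_samples, seed=0):
    """Mean and SD of the share of randomly sampled papers with a flagged author.

    all_papers: list of author-id sets from the same period (assumed layout)
    Requires n_samples >= 2 so that a standard deviation can be computed.
    """
    rng = random.Random(seed)
    rates = []
    for _ in range(n_samples):
        sample = rng.sample(all_papers, sample_size)
        hits = sum(1 for authors in sample if authors & flagged_authors)
        rates.append(hits / sample_size)
    return statistics.mean(rates), statistics.stdev(rates)


def z_score(observed, null_mean, null_sd):
    """Distance of an observed rate from the null mean, in null standard deviations."""
    return (observed - null_mean) / null_sd
```

If the reported standard deviations are read as percentage points (an assumption), `z_score(10, 1.2, 0.15)` and `z_score(37, 16, 0.63)` place the observed overlaps roughly 59 and 33 standard deviations above the random baseline.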

All of the analyses above pertained to the highly restricted dataset, where we filtered to the largest connected component. We extended our analysis further to examine the overlap between the PPS method and the set of papers identified by association with the less restricted group of unusual authors, including those who are not (yet) part of the most connected subgraph. When compared with the tortured-phrase dataset, 1% of the potential unusual authors outside of the largest connected graph matched to the tortured-phrase dataset, and 20% have co-authored with an author in the tortured-phrase dataset. These rates are significantly lower than the rates for the connected graph (16% and 72%). As foreshadowed, the much lower match rates justify removing researchers outside of the largest connected component of the network from the unusual author set.

A further sense check that was carried out (as described in Section 2) was to perform a comparison with the Retraction Watch publications similar to that performed with the tortured-phrase dataset described above. Articles within the Retraction Watch database were filtered to include only those with a retraction reason associated with a breach of research integrity36. Overall, authors identified in the unusual author set appeared on 7.43% of the 1858 research articles listed in the Retraction Watch database between 2020 and 2022, over six times the rate established above for randomly selected sets of publications. Although this percentage is lower than the 10% overlap with the tortured-phrase dataset, this is to be expected as retractions cover inside-the-tent issues of research integrity as well as those that arise from paper mills.

Proximity to high-volume peer reviewers

In order to facilitate publications ending up in reputable journals, paper mills must rely on complicit peer reviewers to push publishing decisions through. Although it is not possible to directly identify complicit peer reviewers, it is possible to use the public ORCiD data file to identify peer reviewers with an unusually high number of peer reviews. ORCiD records are matched back to Dimensions researcher records, allowing publication age, and connection to the unusual author co-authorship graph to be calculated.

Between 2020 and 2022 (in keeping with our previous analysis), we were able to identify 741 Stage I or II researchers that had recorded more than 250 peer reviews over three years. Of these, 8.2% of researchers were also part of the ‘authorship-for-sale’ cohort, and 51% had previously co-authored with someone in the unusual author cohort, representing 218,907 claimed peer reviews. Of the 49% of peer reviewers not matched to the co-authored cohort, 143,066 peer reviews were recorded. The high degree of connectivity between these high-volume peer reviewers and the ‘authorship-for-sale’ cohort provides a new perspective on the observation that peer reviewers are more likely to be favourable to authors within their network37. Some allowance for double counting must be made, as in rare cases reviews for the same journal have been reported in ORCiD from both Publons38 and the publisher directly. A breakdown of high-volume peer reviewers by country shows a similar distribution to the tortured-phrase dataset (Figure 4) although Saudi Arabia is less dominant.

Without direct access to publisher records, it is tempting to make a link between the 218,907 peer reviews and the 84,075 publications authored by individuals in the unusual authors cohort. However, the overlap is less apparent at the journal level. If we define a review ratio as the total number of reviews divided by the total number of publications, then for the 181 journals producing more than 50 publications per year, there is one review for every five publications on average, and only 11 journals have a review ratio greater than one review for every two publications. Nonetheless, the co-authorship proximity of a high-volume peer review network is notable, especially given that these reviews are just the ones that have been made public.

Applications

Based on the analyses above, we have shown that it is possible to identify young researchers having characteristics that are shared with authors appearing to have frequently participated in authorship-for-sale transactions, either as high-frequency customers or as foundation authors. These researchers are young by publication age (approximately students or postdocs), have egocentric networks with a low clustering coefficient, and yet are connected to each other. When taken together, this cohort aligns well, directly or via one degree of collaboration, with the tortured-phrase dataset. The network also has access to a large number of high-volume peer reviewers.

Further, we have shown that direct detection rates in random sets of papers are significantly lower than the detection rates in the tortured-phrase dataset. This means that detecting the presence of an elevated ‘unusual author’ signal in a collection of publications should be a cause for concern. In this section, we explore the application of this measure to publishers, journals, national systems, and institutions.

Identifying publisher risk profiles

The initial motivation for this paper was the observation that paper-mill papers with more convincing author profiles were more likely to pass through the submissions and review process. If the ability to flag authors as belonging to an unusual author network were implemented at the beginning of the submission process, how many papers would require additional review?

Figure 5 depicts the number of publications by publisher that involve an author from the unusual author cohort (2017–2022) and identifies the number of papers that require close review. By 2022, Elsevier had published more than 11,000 implicated publications, with MDPI just under 7000, and Springer Nature at over 5000 publications. If review is only handled at the publication level, all of these publishers would have a significant amount of work to review these publications. Given that there are only on the order of 1700 researchers associated with these papers, and further, that these authors would be common across publishers, a joint investigation strategy that focused on authors rather than individual publications is likely to be more efficient.

Aside from identifying the individual papers that need to be investigated, a comparative risk profile can be calculated for each publisher based on the overall network statistics associated with publishers via the papers that they accept. This can also be thought of as a capability profile to assess the abilities of publishers to detect fraud and to safeguard the scholarly record against paper-mill activity. As Figure 6 highlights, our method appears to suggest that Hindawi began to assume a significantly higher risk profile from 2020 onwards. By 2022, our model indicates that Hindawi had doubled its risk profile, expressed as the percentage of implicated publications, to 4%, with MDPI steadily climbing to just under 3%, and most other publishers sustaining a risk profile of somewhere between 1 and 2%. Whilst the recent struggles with paper mills at Hindawi have been well documented39,40, it should be sobering to note that Hindawi has only double the risk profile of the majority of other publishers, according to our model, and that risk profiles of implicated papers can change rapidly over a short time period. Moreover, whilst the absolute number of implicated papers is staggering, the percentage of implicated publications in the scientific record for most publishers is lower, but not much lower, than the reported minimum 2% paper-mill submission rates at the journal level1. For the highest-volume publishers, it could also be argued that a higher standard of probity should be required as they are able to have a disproportionate effect on the viability of the paper-mill business model.

Identification of journals of concern

As reported in the COPE and STM report on paper mills, paper mills target some journals more than others, especially when they have been successful in the past. At a more granular level, the unusual authors identified in this paper can be used to identify specific journals of concern. Figure 7 provides a log-scale histogram of journals and their associated risk percentages. Only journals with more than 50 publications in a year have been assessed. Of these, 12,434 journals (90%) have little association (< 2% of papers) with authors identified in the ‘authorship-for-sale’ profile. Just over 10,000 journals have no direct overlap at all with the researchers identified in this paper.

Noting that 4% is the overall rate for Hindawi, and that the overall rate for most publishers is between 1 and 2%, across the publishing industry there are 696 journals with concerning percentages of implicated papers between 2 and 4%, and 717 journals with highly concerning risk profiles at greater than 4%. Armed with this analysis, publishers should be able to target their investigations at specific high-risk journals.

Histogram of journal exposure to the unusual author cohort. The x-axis is the percentage of papers in any given journal that appear to be associated with the unusual author network (i.e. the number of papers found in a journal that appear to be authored by an unusual author). The y-axis, using a \(\log _{10}\)-scale, is the frequency with which journals fall into each 1% band of exposure. There are 12,434 journals (shown in green) that contain potentially unusual-author-affiliated publications at a rate between 0 and 2%; 696 journals (shown in orange) have an exposure of between 2% and 4% of their articles; 717 journals have an exposure to the unusual-author network of more than 4% of their articles. Note that the \(\log _{10}\) scale of the y-axis compresses and underplays the number of “safe” journals and tends to emphasize the size of the tail of this distribution.
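The journal-level banding described above can be sketched in a few lines. This is an illustrative sketch under stated assumptions: the function names, the input layout, and the treatment of values falling exactly on the 2% and 4% boundaries are choices made here, and the counts in the toy data are invented.

```python
from collections import Counter

MIN_PAPERS = 50  # only journals with more than 50 publications in the year are assessed


def exposure_band(pct):
    """Assign a journal to one of the three exposure bands used in the text."""
    if pct < 2.0:
        return "low (<2%)"
    if pct <= 4.0:  # boundary handling is an assumption of this sketch
        return "concerning (2-4%)"
    return "highly concerning (>4%)"


def band_journals(journal_counts):
    """journal_counts maps journal -> (implicated papers, total papers) for one year."""
    bands = Counter()
    for journal, (implicated, total) in journal_counts.items():
        if total <= MIN_PAPERS:
            continue  # too few papers for a meaningful percentage
        bands[exposure_band(100.0 * implicated / total)] += 1
    return bands


# Toy, made-up counts purely for illustration (not data from the study).
journal_counts = {
    "J1": (0, 200),
    "J2": (3, 100),
    "J3": (10, 100),
    "J4": (5, 40),   # skipped: 50 or fewer publications
    "J5": (1, 100),
}
bands = band_journals(journal_counts)
print(dict(bands))
```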

Profiling the selected researchers by country

Like publishers, the reputation of national research systems and institutions can also be harmed through the association of their affiliation with paper-mill content.

Table 5 provides a breakdown by country and institution. What is immediately apparent is that Saudi Arabia is over-represented when compared with the size of its research population. Of Saudi Arabia’s Stage I-II workforce, 1.2% have been identified as having an unusual author profile, corresponding to 22.6% of research articles and reviews. Whilst this does not necessarily mean that 22.6% of Saudi Arabia’s publications have been produced by paper mills, it does suggest that there is strong reason to ask why these numbers differ so significantly from those of other countries.

Institutional reputation protection

At an institutional level, being associated with a large number of paper-mill papers that have not been retracted would reflect poorly on institutional culture and research integrity training. On the other hand, evidence of proactive activity in retracting papers on behalf of institutional authors reflects well on an institution41.

In the publisher use cases above, we have focused on identifying papers where a member of the unusual author cohort is specifically an author on the paper. This is because the broader measure of connected authors flags too many papers (15% of papers in the random set) to be useful. At an institutional level, however, connected authors can be used to assess the exposure of institutional researchers to the unusual author network.

Where institutional researcher connections to the unusual author network are found, interventions need not be time consuming. For researchers where the exposure is through a single paper, the action required by the institution might just be to make the researcher aware of the connection, and to ensure that they were aware of the authors’ involvement in the paper. For senior researchers with a large amount of exposure, a more formal investigation may be warranted. For less senior researchers, who are often the targets of authorship-for-sale enterprises, the action might require engaging in a conversation with their supervisor to understand how they came to be involved in the paper, and supporting the researcher in initiating a request to retract the paper. Interventions such as these are invaluable as they are unlikely to be initiated by the junior researcher, and they remove the possibility that the researcher can be coerced later in their career into further participation in authorship-for-sale enterprises. Further, if institutions are able to identify and remove ‘foundation authors’ (Attribute 1.1.3) that are operating under their affiliation, then institutions can play a pivotal role in removing the ability of paper mills to construct convincing author networks in the first place, provided the work required of individual institutions is not overly onerous.

Table 6 provides a country-level view of the average number of researchers per institution that have some exposure to the ‘unusual author cohort.’ Only institutions with more than 3000 unique researchers in 2022, based on publication affiliation, have been considered here. For most countries, the average number of researchers, and therefore of interventions required, falls between 20 and 80. For countries that are more implicated in the unusual author network (such as India, with an average of 147 reviews per institution, and Iran, with 296), the average number of interventions is higher, but still manageable. Alongside the main figures, the number of exposed junior researchers is also provided, giving an indication of the number of interventions where extra effort is required.
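A country-level average of this kind can be sketched as below. The sketch assumes that a researcher is ‘exposed’ if they have co-authored at least one paper with a member of the unusual author cohort without being in the cohort themselves; that reading, along with the function names, the input layout and the toy data, is an assumption of the example rather than the definition used to build Table 6.

```python
from collections import defaultdict
from statistics import mean


def exposed_researchers(papers, unusual_authors):
    """Co-authors of unusual authors who are not themselves in the cohort."""
    exposed = set()
    for authors in papers:
        if unusual_authors.intersection(authors):
            exposed.update(a for a in authors if a not in unusual_authors)
    return exposed


def average_exposure_by_country(institutions, exposed, min_size=3000):
    """Average number of exposed researchers per institution, by country.

    `institutions` is a list of dicts with hypothetical keys
    'country' and 'researchers' (a set of researcher ids).
    Institutions with `min_size` or fewer researchers are ignored.
    """
    per_country = defaultdict(list)
    for inst in institutions:
        if len(inst["researchers"]) <= min_size:
            continue
        per_country[inst["country"]].append(len(inst["researchers"] & exposed))
    return {country: mean(counts) for country, counts in per_country.items()}


# Toy, made-up data purely for illustration (not data from the study).
papers = [["u1", "r1", "r2"], ["r3", "r4"], ["u1", "r5"]]
unusual_authors = {"u1"}
institutions = [
    {"country": "X", "researchers": {"r1", "r2", "r3"}},
    {"country": "X", "researchers": {"r4", "r5", "r6"}},
    {"country": "Y", "researchers": {"r3", "r4", "r7"}},
    {"country": "Y", "researchers": {"r1"}},  # below the size threshold
]

exposed = exposed_researchers(papers, unusual_authors)
# The threshold is lowered to 2 so that the toy institutions qualify.
averages = average_exposure_by_country(institutions, exposed, min_size=2)
print(averages)
```

Each exposed researcher corresponds to one potential intervention, so the per-institution count gives a rough upper bound on the workload an institution would face.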

A collaborative response to the problem of paper mills

Through the use of the authorship-for-sale co-authorship network, institutions and publishers have an opportunity to collaborate on removing paper-mill content. Institutions benefit from having a relatively low number of researchers to investigate, with established lines of communication to researchers who were most likely not the corresponding author. If pursued proactively, institutions can also play a pivotal role in cutting off the supply of convincing author profiles that can be included in paper-mill papers.

Within the limits of this approach (discussed further in the section below), publishers for their part have a new process available to assist in identifying and preventing paper-mill content from being published. It is hoped that over time, this could also lead to a decreased amount of investigative work required at the institutional level. It is of course important to stress that in every case, investigative work will still be required to ensure that unusual collaborations do not have another explanation.

Discussion

We have demonstrated an approach to identifying paper mills that complements existing methodologies. In our method, rather than focusing on analysis of the text of the manuscript as established methods do, we focus on identifying irregularities in the context of the article. We have shown that it is possible to develop a model that describes some facets of paper-mill activity and have shown this model to yield results that are comparable to those of the PPS method. We have also shown that this method discriminates effectively between background noise and a signal associated with paper-mill operation. It is noteworthy that the model described in this paper is only one of the models that could be developed to detect paper-mill signals in contextual data. The approach communicated here focuses on a specific collection of facets based on a particular set of assumptions about how paper mills operate; more research and a better understanding of paper-mill operation could lead to a set of models, or an extended model, that could become a more powerful tool.

Established technologies that detect unusual author behaviour, as pointed out above, use text mining and other computationally expensive approaches. Thus, while these are excellent methods to understand the structure of a manuscript, they do not necessarily scale well and, as technology improves, need to be continually redeveloped. Our model has different scalability characteristics, which is helpful as a complement to existing strategies. Once the network of unusual authors is calculated, those connecting to that network are instantly worthy of further investigation. Consequently, in our approach the network calculation needs to be carried out frequently, but it is done once for all papers that need to be checked rather than individually per paper. Calculations of the nature required for this purpose were not easy to access until the development of cloud compute capacity and its application in scientometrics and bibliometrics25. Thus, we believe that a strength of this method is that it may be both efficient and cheap to implement at scale, and less susceptible to adaptation than methods that focus on the technology of paper-mill production. However, like the PPS methods described above, our method only identifies outliers with a statistical probability and, as such, is no silver bullet. Rather, we believe that it serves as a useful method to enhance and extend methods such as the PPS, to improve confidence levels, and to help detect a wider class of papers that may be unusual.

Of course, our method is not without significant challenges and it is important to be aware of the weaknesses of the approach that we have described in this paper. In the remainder of this section we reflect on the limitations of our approach and identify several opportunities for the application of our approach. Finally, we make some suggestions for future avenues of exploration.

Limitations

(a) Author disambiguation. One limitation of analysing the authorship-for-sale network is that its focus on young researchers falls in the region where name disambiguation is hardest: there is the least publication information on which to base an identity decision. Author disambiguation is also more challenging for some countries with written character systems42. Even in countries where author disambiguation is easier, a number of factors mean that false positives are unavoidable. Even state-of-the-art algorithmic author disambiguation is fallible, and will sometimes either merge two (or more) distinct authors into the same profile or give a single author multiple profiles. Both of these issues with automated disambiguation reduce the confidence that one can have in our methodology. In the first case, an author may be flagged as having an unusual profile when they merely have a similar name to another author who actually does have an unusual profile. In the second case, an author may have multiple unique identifiers and be able to evade detection because only a subset of their work is associated with the unusual author network. Indeed, this latter case is the basis for a strategy to evade our method. For these reasons, it is important that the findings for all authors identified by the methodology outlined above are curated by humans before any action is taken. Further, there must be a process to disentangle researcher identities when disambiguation errors are detected. In this context, it is clear that ORCiD adoption is an important tool in combating paper mills. Requiring authors to register an ORCiD and to associate their ID with the paper at the time of submission decreases reliance on fully automated disambiguation methodologies and strengthens our approach. Relatively low ORCiD adoption rates43,44 are effectively keeping the bar low for paper-mill activity.
The call for system-wide change with regard to ORCiD is not new45, but it is certainly now more urgent. We also realise that this recommendation does not solve all problems: ORCiD identifiers are being misused or registered afresh for each new paper-mill paper46. However, the date of ORCiD registration is easily measured, and a set of new ORCiD records, alongside authors with little history, raises its own red flag. Having an ORCiD is not on its own a sign of upstanding research integrity, but a journal that enforces their use across all authors makes the investigation of research integrity issues far easier to manage. Beyond an investigation into paper-mill authorship, this study has also shown that the use of ORCiDs in recognising peer review is useful as a tool for identifying researchers who, by volume of peer reviews alone, should not be asked to review further papers. This signal is immensely valuable as it covers all publishers. Where acceptable, we strongly suggest that all publishers mandate the registration of peer reviews. Removing the network of peer reviewers that enables the publishing of authorship-for-sale papers is as important as dismantling the social network of authorship-for-sale authors itself.

(b) A limit to ‘outside-the-tent’ research misconduct. As identified in the introduction, a further limit on taking a research network approach to the identification of suspected paper-mill activity is that it is only effective in identifying behaviour where researchers have had to go ‘outside-the-tent’ of their local research network to participate in paper-mill networks. ‘Inside-the-tent’ misconduct such as image manipulation, plagiarism, data fabrication, or even the purchasing of entire research papers to populate with local authors will not be detected. For these papers, research misconduct detection techniques that focus on the content of the paper remain the most effective approach.
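The ORCiD registration-date signal mentioned under limitation (a) could be operationalised along the following lines. This is a hypothetical sketch only: the 90-day window, the prior-publication cut-off, the field names and the toy records are all assumptions introduced for illustration, and any real threshold would need to be calibrated and followed by human review.

```python
from datetime import date


def fresh_orcid_fraction(authors, submitted, max_age_days=90, max_prior_papers=1):
    """Fraction of a paper's authors with a recently registered ORCiD and little history.

    `authors` is a list of dicts with hypothetical keys
    'orcid_registered' (a date) and 'prior_papers' (an int).
    """
    fresh = [
        a for a in authors
        if (submitted - a["orcid_registered"]).days <= max_age_days
        and a["prior_papers"] <= max_prior_papers
    ]
    return len(fresh) / len(authors)


# Toy, made-up author records purely for illustration.
submitted = date(2022, 6, 1)
authors = [
    {"orcid_registered": date(2022, 5, 20), "prior_papers": 0},   # new ORCiD, no history
    {"orcid_registered": date(2022, 4, 15), "prior_papers": 1},   # new ORCiD, little history
    {"orcid_registered": date(2015, 3, 1), "prior_papers": 40},   # established researcher
    {"orcid_registered": date(2022, 5, 1), "prior_papers": 12},   # new ORCiD, real history
]

fraction = fresh_orcid_fraction(authors, submitted)
print(f"{fraction:.0%} of authors have a fresh ORCiD and little publication history")
```

A high fraction is not evidence of misconduct on its own; it is one more contextual signal that could prioritise a submission for closer inspection.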

Reflections on data driven research integrity collaboration between institutions and publishers

By basing the identification of issues concerning research integrity on externally accessible linked data sources, opportunities arise for collaboration across multiple publishers and institutions.