Abstract

The 1978 A/H1N1 influenza outbreak in a boarding school in northern England provides a widely used dataset for epidemiological modelling. However, its focus on recovery dynamics rather than transmission presents challenges for standard SIR (SEIR) modelling. Recent attempts to fit the data using a delay differential equation (DDE) model have demonstrated an excellent retrospective fit but raise concerns regarding complexity, the effects of overfitting, and an unusually high estimate of the basic reproduction number. This study assesses the robustness of the DDE model, revealing that its parameter estimates and outputs, particularly the basic reproduction number, are highly sensitive to reductions in dataset size, leading to poor predictive performance in the early epidemic stages. To address these limitations, we propose a simpler stochastic SIR model that, while yielding a slightly worse retrospective fit, provides more stable and reliable forward predictions. Our findings challenge the notion that classic SIR-type models cannot capture the dynamics of the 1978 outbreak and highlight the risks of over-parametrisation when employing complex models. More broadly, this study underscores the importance of balancing model complexity with predictive reliability in epidemiological modelling, in order to support robust public health decision-making when data availability is limited.

Introduction

An outbreak of influenza A/H1N1 occurred between January and February 1978 in northern England at a boarding school1. The epidemic began in late January with symptoms of headache, fever, and sore throat. Symptomatic students were confined to bed in the college infirmary, and symptoms typically subsided quickly thereafter. Confined-to-bed students were allowed to get up 1.5 days after their temperature had returned to normal, thus entering the convalescent stage, before returning to class a few days later. Partial data from the outbreak was published as an anonymous note in the British Medical Journal1, containing three key pieces of information: (1) the daily number of confined-to-bed students (B) over 14 time points; (2) the daily number of convalescent students (C) over 14 time points; and (3) the total number of students affected by the epidemic, or the total attack rate (AR), which was 512 out of 763.

This dataset has several notable characteristics. First, it was published as a brief anonymous note with minimal details on the epidemic’s overall course1. Second, the data is atypical for epidemiological studies, as it focuses only on removed students (confined-to-bed and convalescent), describing the recovery process rather than transmission dynamics. Third, the dataset is relatively small. Lastly, it is not well-defined, as daily prevalence values for the two removed student groups were reported only as points on a graph and thus required digitisation. As a result, different versions of the dataset exist in the current literature2.

Despite these limitations, this dataset is widely used in epidemiological research. It appears in numerous textbooks and lecture notes on mathematical biology2,3,4,5,6, is included in the R package “outbreaks” as part of a collection of disease outbreak data7, and has been analysed in various studies employing mathematical models. However, standard SIR (or SEIR)-based approaches have struggled to provide satisfactory fits2. This difficulty led Li (2023)8 to describe the 1978 influenza outbreak dataset as an “epidemic enigma”. Kalachev et al. (2023)9, however, pointed out that earlier misinterpretations of the data may have contributed to these difficulties, particularly when confined-to-bed students were incorrectly classified as infectious (rather than removed) and convalescent individuals were overlooked2,9.

Recently, Avilov et al.2 developed a model deliberately designed to overfit, thereby achieving a close correspondence with the 1978 influenza outbreak dataset. Models of this kind have attracted increasing interest within the machine learning community due to the so-called “double descent phenomenon”, whereby certain over-parameterised models can simultaneously achieve low variance and low bias (see, for example, Ref.10). The model consists of six compartments: susceptible (S), exposed (E), infectious (I), confined-to-bed (B), convalescent (C), and removed (R). It assumes that individuals spend a fixed duration in compartments E and I, leading to a system of delay differential equations (DDEs). The model also allows newly infected individuals to bypass the E stage, accounts for pre-existing immunity, and incorporates non-exponential residence time distributions (so-called Erlang distributions) in compartments B and C. Parameter estimates were obtained by minimising the weighted sum of squared Euclidean distances between the model output and the observed data for the trajectories of B and C, as well as the total number of affected individuals. Overall, the model demonstrates an excellent fit to the data for B, C, and the total number of affected individuals.

While the Avilov et al.2 model achieves an excellent fit, some challenges remain. First, the model is structurally complex, involving 24 equations (including those modelling the Erlang distributions) and eight estimable parameters. Additionally, there are predefined parameters, chosen heuristically or through small-scale simulations, that could also be estimated, such as the shape parameters of the Erlang distributions and the weights in the objective function. Given the dataset’s small size and potential noise, the large number of parameters and equations raises concerns about the adverse effects of overfitting.

Furthermore, the estimated basic reproduction number \(R_0\) in the DDE model is 8.14, which is substantially higher than the typical range of \(R_0 \in [1,4]\) for influenza epidemics2,11,12,13. Although \(R_0\) values can be higher in closed populations such as boarding schools, this discrepancy warrants further scrutiny. Additionally, the high \(R_0\) estimate in the DDE model implies that 249 students were initially immune, meaning that nearly all of the 251 students who were not infected during the outbreak were classified as immune. While these results could be explained by factors such as contact network effects or prior vaccination (an argument that is less convincing, since the available vaccines did not contain the H1N1 component)2, they could also stem from overfitting, which raises questions about their reliability.

Although the DDE model demonstrates excellent ex post (retrospective) fit, i.e., when the full epidemic dataset is included, its over-parametrised design raises concerns about the ability to generate reliable forward predictions during an ongoing outbreak. This issue is particularly important, as the primary goal of epidemiological modelling is to forecast epidemic trajectories and inform policy decisions.

In this study, we address this concern by demonstrating that the DDE model’s parameter estimates and outputs, including the basic reproduction number \(R_0\), are highly sensitive to reductions in dataset size. This sensitivity can lead to poor predictive performance, particularly in the early stages of an epidemic. To mitigate these limitations, we propose a simpler stochastic SIR model that, while yielding a slightly worse (but still satisfactory) ex post fit compared with the DDE model, provides more stable and reliable forward predictions. Our findings also challenge the notion that classic SIR (SEIR)-type models cannot fit the 1978 influenza outbreak data.

Overall, our findings extend beyond this specific dataset. We emphasise that stochastic SIR-type models are valuable and flexible tools for epidemiological modelling. Additionally, we underscore the risks associated with building overly complex epidemic models on small datasets, which may be prone to undesirable overfitting effects and may produce unreliable predictions, potentially undermining public health decision-making at a societal level.

Results

Analysis with the DDE model

First, we reproduce the results of the DDE model developed in Ref.2. The model consists of six compartments: susceptible (S), exposed (E), infectious (I), confined-to-bed (B), convalescent (C), and removed (R). It assumes that individuals spend fixed durations in the E and I compartments, allows newly infected individuals to bypass the E stage, accounts for pre-existing immunity, and incorporates Erlang-distributed residence times in compartments B and C. The latter is implemented by introducing ten consecutive sub-compartments, \(B_j\) and \(C_j\) (\(j = 1, \ldots , 10\)), connected by equal-rate linear transfer terms. The model is characterized by eight parameters: the initial numbers of immune (\(R_{t=0}\)) and exposed (\(E_{t=0}\)) individuals, the infectivity coefficient (\(\beta _I\)), the probability (\(\rho _{\textrm{noE}}\)) that infected individuals bypass the E stage, the residence times \(T_E\) and \(T_I\) in the exposed and infectious stages, respectively, and the average transition rates \(\delta\) (from B to C) and \(\epsilon\) (from C to R). It is defined by the following set of equations:

with the initial conditions

Following Avilov et al. (2024)2, the DDE model is fitted to the data by minimising the weighted sum of squared residuals for the variables B (the number of confined-to-bed students), C (the number of convalescent students), and AR (the total attack rate), with weights of 1, 0.4, and 10, respectively. Following the protocol in Ref.2, we employ a gradient-based method for numerical optimisation (using the fmincon function in Matlab). The fitting process is repeated 50 times from random starting points drawn from uniform distributions over the permitted parameter range (for more details, see section S1 of the Supplementary Material) to mitigate issues with local minima. Finally, the solution providing the best fit to the data is selected.
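The fitting criterion and the multistart procedure described above can be sketched as follows. This is a minimal Python illustration, not the Matlab code used in Ref.2 or in this study: the function names `weighted_sse` and `multistart` are hypothetical, and `fit_once` stands in for any local optimiser (fmincon in the original protocol) that maps a starting point to a pair of fitted parameters and loss.

```python
import random


def weighted_sse(model_B, model_C, model_AR, data_B, data_C, data_AR,
                 w_B=1.0, w_C=0.4, w_AR=10.0):
    """Weighted sum of squared residuals for B, C and the total attack rate."""
    sse_B = sum((m - d) ** 2 for m, d in zip(model_B, data_B))
    sse_C = sum((m - d) ** 2 for m, d in zip(model_C, data_C))
    sse_AR = (model_AR - data_AR) ** 2
    return w_B * sse_B + w_C * sse_C + w_AR * sse_AR


def multistart(fit_once, bounds, n_starts=50, seed=0):
    """Run a local optimiser from uniform random starts and keep the best fit.

    fit_once(start) -> (params, loss) is a placeholder for the local optimiser.
    bounds is a list of (low, high) pairs, one per parameter.
    """
    rng = random.Random(seed)
    best = None
    for _ in range(n_starts):
        start = [rng.uniform(lo, hi) for lo, hi in bounds]
        params, loss = fit_once(start)
        if best is None or loss < best[1]:
            best = (params, loss)
    return best
```

The default weights are the values 1, 0.4, and 10 quoted in the text; the forward-prediction experiments reported later drop the AR term.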

Note that the results of the DDE model presented here differ slightly from those reported in Ref.2. This is because we fit the DDE model to the 14 time points provided in the original paper1, whereas Avilov et al. (2024)2 used an extended dataset with 26 time points, assuming that the prevalence of confined-to-bed and convalescent students beyond the original range was zero.

Figure 1. Forward predictions of the DDE model based on the first \(\tau\) time points (training dataset). Shown are the results for the number of susceptible (S), infected (I), confined-to-bed (B), and convalescent (C) individuals, respectively. The model outcomes (non-black lines) are shown alongside the data (solid black lines). Solid non-black lines correspond to the average trajectories, while dashed lines indicate 95% credible intervals. (a) \(\tau =14\) (entire epidemic); (b) \(\tau =9\) (peaks in curves B and C reached); (c) \(\tau =6\) (only the peak in curve B reached); (d) \(\tau =5\) (neither B nor C peaks reached). In panels (b–d), the results are based on the top \(M=100\) (out of \(10^5\)) simulations with the best fit in the first \(\tau\) time steps, and RMSE values are calculated based on the testing dataset.

Next, we assess the predictive power of the DDE model by training it on only the first \(\tau\) time steps of the epidemic (training dataset), generating forward predictions, and evaluating the goodness-of-fit of these predictions against the remaining time steps (testing dataset). The fitting protocol is adjusted for the reduced dataset size. Specifically, since the total attack rate (AR) is unknown until the epidemic concludes, it is excluded from the DDE fitting procedure. Instead, the model is fitted by minimising the weighted sum of squared residuals for variables B and C using the weights specified in Ref.2.
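The train/test evaluation described above reduces to computing an error measure only on the time points that were withheld from fitting. The following is a minimal sketch, assuming that predictions and observations are stored as equal-length daily sequences; the function name `forward_rmse` is hypothetical.

```python
import math


def forward_rmse(predicted, observed, tau):
    """RMSE over the testing points only, i.e. everything after the first tau points."""
    test_pairs = list(zip(predicted[tau:], observed[tau:]))
    if not test_pairs:
        raise ValueError("no testing points left: tau covers the whole series")
    squared_error = sum((p - o) ** 2 for p, o in test_pairs)
    return math.sqrt(squared_error / len(test_pairs))
```

For example, with 14 daily values and `tau=5`, the error is computed over the last nine points.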

We consider three values of \(\tau\): \(\tau =9\), \(\tau =6\), and \(\tau =5\), corresponding to the peak in curve C, the peak in curve B, and one step before the peak in curve B, respectively. Notably, in all cases, the DDE model predicts that the peak infection prevalence has already occurred (see Figure 1).

For model fitting, we follow the protocol outlined in Ref.2. However, given the small sample size of the training dataset, where the number of model parameters approaches the number of data points, the objective function exhibits numerous local minima. To address this issue, we conduct \(10^5\) simulations. In each simulation, we apply the Avilov et al.2 protocol with 50 runs. We then select the top M simulations with the best fit, yielding M vectors of parameter estimates that approximate an optimal fit. This sample enables us to compute average parameter estimates and construct credible intervals. The parameter M represents the sample size tolerance level, which is set to \(M=100\) by default.
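The selection of the top M fits and the resulting summaries can be sketched as below. This is an illustration under assumptions: the names `percentile` and `top_m_summary` are hypothetical, and the interval is taken from empirical percentiles, which is a simplification rather than the procedure confirmed for the DDE analysis.

```python
def percentile(sorted_values, q):
    """Linear-interpolation percentile (q in [0, 100]) of an ascending list."""
    pos = (len(sorted_values) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(sorted_values) - 1)
    frac = pos - lo
    return sorted_values[lo] * (1.0 - frac) + sorted_values[hi] * frac


def top_m_summary(fits, m=100):
    """Summarise the m best fits.

    fits is a list of (loss, params) pairs, where params is a list of floats.
    Returns one (mean, lower, upper) triple per parameter, with lower and upper
    taken as the empirical 2.5% and 97.5% percentiles (an assumed choice).
    """
    best = sorted(fits, key=lambda fit: fit[0])[:m]
    n_params = len(best[0][1])
    summary = []
    for j in range(n_params):
        values = sorted(params[j] for _, params in best)
        mean = sum(values) / len(values)
        summary.append((mean, percentile(values, 2.5), percentile(values, 97.5)))
    return summary
```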

As shown in Figure 1, the predicted trajectories align well with the data when the peaks of both curves B and C have already been reached. However, further reducing \(\tau\) has a dramatic effect on predictive accuracy. For \(\tau =5\), the root mean square errors for compartments B and C (\(RMSE_B\) and \(RMSE_C\), respectively) increase twentyfold compared with the ex post estimation. Notably, when \(\tau\) is relatively small, the actual trajectories either fall outside the credible intervals produced by the DDE model or fluctuate near their boundaries (see Figures 1c and 1d). This result remains unaffected by the tolerance parameter M (see Figures S4–S6 in the Supplementary Material), indicating that the DDE model is unreliable for predicting epidemic progression in its early stages.

Finally, we assess the robustness of the parameter estimates under these data perturbations, i.e., changes in the number of data points \(\tau\) in the training dataset. The DDE model’s parameter estimates vary dramatically as the training set size decreases (see Table 1 and Figures S1–S3 in the Supplementary Material). In particular, the estimated basic reproduction number \(R_0\) initially increases roughly 2.5-fold, rising from 7.51 for \(\tau =14\) (full dataset) to 19.09 for \(\tau =6\), before dropping fivefold to 3.86 for \(\tau =5\). These fluctuations in \(R_0\) are accompanied by significant changes in the estimated number of immune individuals at \(t=0\), \(R_{t=0}\), which first doubles and then decreases sevenfold as \(\tau\) decreases, along with similar variations in the total attack rate (AR).

The key takeaway is that while the DDE model achieves an excellent ex post fit over the full course of the epidemic, it performs poorly in forward prediction, particularly in the early stages. Moreover, its parameter estimates are highly sensitive to the training set size; combined with biological concerns such as unrealistically high \(R_0\) values, this raises serious doubts about the reliability of the model’s estimates.

Analysis with the SIR model

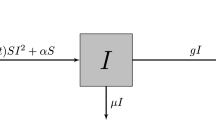

As an alternative to the DDE model, we introduce a stochastic SIR-based modelling approach in this section. Given the relatively small population size and the significant role of random events in disease outbreaks within such settings, we adopt a stochastic formulation of the standard SIR model, governed by the following reactions:

Here, S, I, B, C, and R represent the susceptible, infectious, confined-to-bed, convalescent, and removed compartments, respectively. We assume that the third reaction is a delayed process with a fixed time delay of 1.5 days. This reflects the fact that students confined to bed were transferred to the convalescent compartment 1.5 days after their temperature returned to normal. In other words, an individual spent a random time in compartment B until their fever subsided, followed by an additional 1.5 days before moving to compartment C (Ref. 1).
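A delayed stochastic simulation of this kind can be sketched as follows. The sketch rests on assumptions that the text does not confirm: the reaction set is taken to be infection (S + I to 2I), I to B, a delayed B to C, and C to R; the propensities are taken to be \(\beta S I/N\), \(\gamma I\), \(\delta B\), and \(\nu C\), with \(\delta\) applied only to bedridden students whose fever has not yet subsided; and model time t is identified with the reported day index. The function name `simulate_delayed_sir` and the parameter values in the usage note are illustrative only, and the authors' algorithm (section S3 of the Supplementary Material) may differ.

```python
import heapq
import math
import random


def simulate_delayed_sir(beta, gamma, delta, nu, n=763, i0=1,
                         delay=1.5, t_end=14, seed=None):
    """Delayed Gillespie sketch; returns [(day, S, I, B, C, R), ...] for days 1..t_end."""
    rng = random.Random(seed)
    S, I, C, R = n - i0, i0, 0, 0
    B_fever = 0   # in bed, fever not yet subsided
    B_wait = 0    # in bed, fever gone, waiting out the fixed delay
    pending = []  # min-heap of scheduled B -> C completion times
    daily = []
    t, next_day = 0.0, 1

    def record_until(time):
        # The state is constant between events, so it can be read off at each
        # integer day passed on the way to `time`.
        nonlocal next_day
        while next_day <= t_end and next_day <= time:
            daily.append((next_day, S, I, B_fever + B_wait, C, R))
            next_day += 1

    while True:
        # Assumed propensities: infection, I -> B, fever subsiding, C -> R.
        rates = [beta * S * I / n, gamma * I, delta * B_fever, nu * C]
        cumulative, total = [], 0.0
        for rate in rates:
            total += rate
            cumulative.append(total)
        dt = rng.expovariate(total) if total > 0 else math.inf
        t_reaction = t + dt
        t_delayed = pending[0] if pending else math.inf
        t_next = min(t_reaction, t_delayed)
        record_until(min(t_next, t_end))
        if t_next > t_end:
            return daily
        t = t_next
        if t_delayed <= t_reaction:
            # A scheduled move completes first; the drawn waiting time is
            # discarded and redrawn on the next pass (valid by memorylessness).
            heapq.heappop(pending)
            B_wait -= 1
            C += 1
            continue
        u = (1.0 - rng.random()) * total  # uniform on (0, total]
        k = next(i for i, c in enumerate(cumulative) if u <= c)
        if k == 0:
            S -= 1
            I += 1
        elif k == 1:
            I -= 1
            B_fever += 1
        elif k == 2:
            B_fever -= 1
            B_wait += 1
            heapq.heappush(pending, t + delay)  # completes 1.5 days later
        else:
            C -= 1
            R += 1
```

A call such as `simulate_delayed_sir(2.0, 1.0, 0.5, 0.5, seed=1)` returns 14 daily records. Students waiting out the delay remain counted in B, which mirrors the description of the 1.5-day rule above.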

To estimate the model parameter vector \(\varvec{\theta }=(\beta ,\gamma ,\delta ,\nu )\), we employ the Approximate Bayesian Computation algorithm (vanilla ABC)14. We first sample the proposal parameters \(\varvec{\theta ^{\#}}\) from the following priors:

where U[a, b] denotes the uniform distribution on the interval [a, b]. The sampled proposal parameters are then used to simulate the stochastic SIR model using a modification of the Gillespie algorithm that takes into account delayed reactions15,16,17,18 (see section S3 of the Supplementary Material for more details). For each simulation run, by discretising time, we obtain realisations of the stochastic process at time steps \(t \in \{1,\ldots ,14\}\): \(S_{t}\), \(I_{t}\), \(B_{t}\), \(C_{t}\), and \(R_{t}\). We then determine the summary statistics

where AR is the total attack rate (i.e., the total number of students affected by the epidemic, \(AR = 763 - S(\infty )\)). Comparing \(\varvec{x}\) with the actual number of confined-to-bed students, convalescent individuals, and the total number of affected individuals (the information stored in the vector \(\varvec{x'}\)), the vector of proposal parameters \(\varvec{\theta ^{\#}}\) is accepted if the distance between the model output and the data is relatively small, i.e., if

where \(\epsilon\) is the tolerance. We set \(\epsilon=0.03\) and run our simulations until the sample size reaches \(10^4\).
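The accept/reject logic of vanilla ABC can be sketched as follows. The distance used here, a relative Euclidean distance, is an assumption made for illustration, as are the names `relative_distance`, `abc_rejection`, and `toy_simulator`. The toy simulator infers the mean of a noisy measurement and merely stands in for the epidemic model.

```python
import math
import random


def relative_distance(x, x_obs):
    """Relative Euclidean distance ||x - x_obs|| / ||x_obs|| (an assumed choice)."""
    num = math.sqrt(sum((a - b) ** 2 for a, b in zip(x, x_obs)))
    den = math.sqrt(sum(b ** 2 for b in x_obs))
    return num / den


def abc_rejection(simulate, x_obs, priors, eps, n_accept, seed=0,
                  max_tries=1_000_000):
    """Vanilla ABC: keep prior draws whose simulated summary lies within eps of the data.

    simulate(theta, rng) -> summary vector; priors is a list of (low, high)
    bounds for independent uniform priors.
    """
    rng = random.Random(seed)
    accepted = []
    for _ in range(max_tries):
        if len(accepted) >= n_accept:
            break
        theta = [rng.uniform(lo, hi) for lo, hi in priors]
        x = simulate(theta, rng)
        if relative_distance(x, x_obs) <= eps:
            accepted.append(theta)
    return accepted


def toy_simulator(theta, rng):
    """Toy stand-in for the epidemic model: mean of 50 noisy draws around theta[0]."""
    return [sum(rng.gauss(theta[0], 1.0) for _ in range(50)) / 50]


posterior = abc_rejection(toy_simulator, x_obs=[3.0], priors=[(0.0, 10.0)],
                          eps=0.05, n_accept=50)
```

Lowering `eps` tightens the match to the data at the cost of more rejected proposals, which is the trade-off behind the remark that a smaller tolerance requires more extensive simulations.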

Figure 2. The results of the stochastic SIR model (retrospective fit) for the number of susceptible (S), infected (I), confined-to-bed (B), and convalescent (C) individuals. Shown are the model outputs (non-black lines) and fit to the data (solid black lines). (a) Solid non-black lines correspond to the average trajectories, while dashed lines show the \(95\%\) credible intervals. (b) The results of a simulation run with the smallest sum of the \(RMSE_B\) and \(RMSE_C\) values.

Figure 3. The results of the stochastic SIR model (retrospective fit). (a) Posterior distributions of the model parameters with 95% credible intervals. (b) Posterior distributions of the basic reproduction number \(R_0\) and the total attack rate AR. The posterior distributions are derived from a sample of \(10^4\) simulation runs of the stochastic SIR model, generated using the vanilla Approximate Bayesian Computation (ABC) algorithm with a tolerance parameter of \(\epsilon = 0.03\). Credible intervals were computed via kernel density estimation using Matlab’s mvksdensity function, with bandwidth selection determined according to Silverman’s rule of thumb.

Retrospective fit

As shown in Figure 2a, the stochastic SIR model fits the data quite well. As expected, it has somewhat larger root mean squared errors than the DDE model (16.8 vs 4.6 in the variable B and 17.3 vs 9.3 in the variable C). A better fit can be achieved in some individual SIR simulation runs. For example, the run with the smallest sum of the root mean squared errors in variables B and C has \(RMSE_B=13.5\) and \(RMSE_C=13.0\) (see Figure 2b). Furthermore, as shown in Figure S7 in the Supplementary Material, SIR runs with the best fit in the variables B and C, respectively, are characterised by RMSE values that are comparable with those of the DDE model (\(RMSE_B=9.3\) and \(RMSE_C=8.2\), respectively). This contradicts the claim that the fit of the SIR model, even in the B curve alone, is not satisfactory2. Note that decreasing the tolerance parameter \(\epsilon\) will further improve the goodness-of-fit of the stochastic SIR model (albeit requiring more extensive simulations).

The posterior distributions of the SIR model parameters, along with their 95% credible intervals, are shown in Figure 3a. These intervals are obtained using kernel density estimation19, implemented via the mvksdensity function in Matlab, with bandwidth selection determined by Silverman’s rule of thumb20. The corresponding credible intervals for the stochastic SIR model trajectories are presented in Figure 2a.
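For orientation, Silverman’s rule of thumb for a d-dimensional sample of size n is commonly stated as \(b_i = \sigma _i \left( 4/((d+2)n)\right) ^{1/(d+4)}\), where \(\sigma _i\) is the standard deviation of the i-th coordinate. The sketch below implements that form in Python; whether it matches the exact bandwidths used for Figure 3 is an assumption, and the function name `silverman_bandwidths` is hypothetical.

```python
import statistics


def silverman_bandwidths(samples):
    """Per-dimension bandwidths b_i = sigma_i * (4 / ((d + 2) * n)) ** (1 / (d + 4)).

    samples is a list of n points, each a list of d floats (n >= 2).
    """
    n = len(samples)
    d = len(samples[0])
    factor = (4.0 / ((d + 2) * n)) ** (1.0 / (d + 4))
    return [factor * statistics.stdev([point[j] for point in samples])
            for j in range(d)]
```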

The average estimated total attack rate is 540 individuals (see Figure 3b), which closely aligns with the actual value of \(AR=512\) students. Notably, our model estimates the basic reproduction number \(R_0\) at an average value of 1.76 (see Figure 3b), which falls well within the established range of \(R_0 \in [1,4]\) for various influenza outbreaks2,11,12,13. This stands in stark contrast with the DDE model, which produces an unrealistically high estimate of \(R_0 = 7.51\). Moreover, the DDE model’s large \(R_0\) estimate leads to an inferred initial number of immune individuals of \(R_{t=0} = 249\), implying that nearly all uninfected students were classified as immune. In contrast, the stochastic SIR model does not require an initial population of immune individuals, making it less restrictive in terms of underlying biological and social assumptions.
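The link between \(R_0\), prior immunity, and the attack rate can be illustrated with the classical deterministic final-size relation \(z = s_0\left( 1 - e^{-R_0 z}\right)\), where z is the fraction of the population eventually infected and \(s_0\) is the initially susceptible fraction. This relation is not part of either fitted model, and it neglects the initially exposed or infectious individuals; it is used here only as a rough consistency check under those assumptions. The function name `final_size_fraction` is hypothetical.

```python
import math


def final_size_fraction(r0, s0=1.0, tol=1e-12, max_iter=10_000):
    """Solve z = s0 * (1 - exp(-r0 * z)) for z > 0 by fixed-point iteration."""
    z = s0
    for _ in range(max_iter):
        z_new = s0 * (1.0 - math.exp(-r0 * z))
        if abs(z_new - z) < tol:
            return z_new
        z = z_new
    return z


n = 763
# Stochastic SIR estimate: R0 = 1.76, no prior immunity.
sir_cases = n * final_size_fraction(1.76)
# DDE estimate: R0 = 7.51 with 249 initially immune students.
dde_cases = n * final_size_fraction(7.51, s0=(n - 249) / n)
```

Under these simplifying assumptions, `sir_cases` is about 547 and `dde_cases` is about 511, both close to the observed 512. In this simplified picture, an \(R_0\) near 7.5 infects almost every susceptible student, so it is compatible with the observed attack rate only if roughly 250 students are assumed immune from the start.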

Overall, while the stochastic SIR model does not achieve as good a retrospective fit as the DDE model (an expected outcome given the DDE model’s greater number of parameters and equations), it produces more biologically plausible estimates of \(R_0\) and avoids restrictive assumptions about immunity. This balance between model complexity and interpretability highlights the advantages of the stochastic SIR framework in epidemic modelling (Table 2).

Figure 4. Forward predictions of the stochastic SIR model for the number of susceptible (S), infected (I), confined-to-bed (B), and convalescent (C) individuals based on the first \(\tau\) time points (training dataset). Shown are the model outcomes (non-black lines) and fit to the data (solid black lines). Solid non-black lines correspond to the average trajectories, while dashed lines show 95% credible intervals. (a) \(\tau =14\) (the entire epidemic); (b) \(\tau =9\) (the peaks in curves B and C are reached); (c) \(\tau =6\) (only the peak in curve B is reached); and (d) \(\tau =5\) (the peaks in curves B and C are not reached).

Forward predictions

Here, we evaluate the predictive performance of the stochastic SIR model by training it on only the first \(\tau\) time steps of the epidemic (training dataset), generating forward predictions, and assessing the goodness-of-fit of these predictions against the remaining time steps (testing dataset). To account for the reduced dataset size, we modify our fitting protocol: since the total attack rate (AR) is unknown until the epidemic concludes, it is excluded from the computation of the summary statistic x used in the Bayesian estimation of the stochastic SIR model.

As shown in Figure 4, decreasing \(\tau\) significantly affects prediction accuracy. For \(\tau =5\), the root mean square errors (RMSE) in compartments B and C increase fivefold and threefold, respectively, compared with the ex post estimation. However, for \(\tau =5\), the stochastic SIR model exhibits slightly lower RMSE in compartment B and four times lower RMSE in compartment C compared with the DDE model, suggesting that the stochastic SIR model produces better forward predictions in the early epidemic stages. Notably, unlike the DDE model, the stochastic SIR model generates credible intervals that consistently contain the observed trajectories.

Furthermore, most parameter estimates of the stochastic SIR model remain stable as the training set size decreases (see Table 1 and Figures S8–S10 in the Supplementary Material). The only exception is the total attack rate, which increases from 540 to 661 as \(\tau\) decreases from 14 to 5. The estimated basic reproduction number \(R_0\) remains relatively robust to data perturbations, rising from 1.76 for \(\tau =14\) (full dataset) to 2.72 for \(\tau =5\), staying within the range of \(R_0\) estimates reported in the literature for various influenza outbreaks.

Overall, our findings lead to two key conclusions. First, while the stochastic SIR model provides a worse ex post fit than the DDE model, it generates more reliable forward predictions, particularly in the early stages of the epidemic. Second, the stochastic SIR model’s parameter estimates are significantly less sensitive to the size of the training set compared with those of the DDE model, further supporting its robustness in predictive applications.

Discussion

We analysed the 1978 A/H1N1 influenza outbreak using two modelling approaches: a delay differential equation (DDE) model2 and a stochastic SIR model. While the DDE model achieves an excellent retrospective fit, it is highly sensitive to data availability, leading to unreliable forward predictions and biologically implausible parameter estimates, particularly for the basic reproduction number (\(R_0\)). Estimates of \(R_0\) from the DDE model range from 3.86 to an implausible 19.09, exceeding values reported in the literature, even for the 1918 influenza pandemic, where estimates rarely exceed 4 (Refs. 21, 22, 23). Although some argue that \(R_0\) should be higher in closed populations, historical data often do not support this claim; studies on influenza outbreaks in small military populations24 and the 2009 A/H1N1 outbreak in a residential school in India25 report values in line with general population estimates. In contrast, the stochastic SIR model, despite a slightly worse retrospective fit, provides more stable and biologically consistent parameter estimates (\(R_0 = 1.76\) to 2.72) and produces credible intervals that reliably capture observed epidemic trajectories.

The instability observed in the DDE model raises concerns not only about its practical application but also about the broader modelling strategy employed by Avilov et al.2. Their approach involves constructing a flexible compartmental discrete-time epidemiological model with tabulated residence time distributions, where daily progression rates in each compartment are treated as model parameters. This model is then fitted to the dataset by minimising an objective function that accounts for the sum of squared residuals between the model outputs and the data. In the next step, the inferred residence time distributions are used to construct a simplified model, which, in the case of the 1978 influenza outbreak, results in the DDE model. We focus on the DDE model because Avilov et al.2 treat it as the primary model for the 1978 influenza outbreak dataset. Furthermore, if overfitting critically compromises the predictive capability of the DDE model, its impact is likely even more pronounced in the more complex flexible discrete-time model, which consists of 28 equations and 29 parameters. Thus, our findings raise concerns not only about the reliability of the DDE model’s results but also about the broader approach of fine-tuning a model’s structure, such as its residence time distributions, to fit a specific dataset, as proposed by Avilov et al.2. In addition, we do not consider the simplified discrete-time model from Avilov et al.2, as it serves as an auxiliary model derived from the DDE model and is used primarily to assess the identifiability limits of the DDE model parameters. Finally, we fit the models to the 14 time points provided in the original paper1, while Avilov et al.2 extended the dataset to 26 time points through artificial data imputation, assuming that the prevalence of confined-to-bed and convalescent students beyond the original range was zero. We believe that this data imputation introduces redundancy, artificially improves fit to data, and potentially increases both data uncertainty and estimate errors. More importantly, it does not impact forward predictions, as most of the imputed data fall after the last reported day of the epidemic.

Another way to highlight the effects of overfitting in the DDE model would be to compare it with the stochastic SIR model using AIC and BIC scores. However, this approach presents two key problems. Firstly, the DDE model is not driven by a likelihood function. Although a likelihood can be constructed to mimic the least-squares minimisation employed for parameter estimation, overfitting causes the DDE model to achieve an almost perfect fit. This, in turn, leads to highly constrained variance estimates within the constructed Gaussian-based likelihood. Secondly, not all parameters in the DDE model are treated equally. For example, the shape parameters of the Erlang distributions and the weights in the objective function are prespecified rather than directly estimated. As a consequence, the DDE estimation protocol effectively forces the likelihood function to degenerate, placing unit mass on the observed data. This artificially lowers the AIC and BIC scores, thereby distorting model comparisons.
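The degeneracy described above can be made explicit with a short worked derivation. It assumes a Gaussian likelihood with independent errors of common variance \(\sigma ^2\), constructed to mimic least-squares fitting on n data points with residual sum of squares RSS and k estimated parameters; this construction is an illustration, not the likelihood of either model in this study.

\[
\hat{\sigma }^2 = \frac{\mathrm{RSS}}{n}, \qquad
\ln \hat{L} = -\frac{n}{2}\left( \ln \left( 2\pi \hat{\sigma }^2\right) + 1\right) , \qquad
\mathrm{AIC} = 2k - 2\ln \hat{L} = n \ln \frac{\mathrm{RSS}}{n} + 2k + n\left( \ln 2\pi + 1\right) .
\]

For fixed n and k, the term \(n \ln (\mathrm{RSS}/n)\) decreases without bound as \(\mathrm{RSS} \rightarrow 0\). A near-perfect fit therefore drives the AIC (and likewise the BIC, which replaces 2k by \(k \ln n\)) arbitrarily low, regardless of the complexity penalty.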

Recent work on the double descent phenomenon in over-parameterised models (see, for instance, Ref.26 and references therein) suggests that increasing the number of model parameters sometimes enhances flexibility and reduces structural constraints, guiding the model-fitting process towards solutions that generalise well. This behaviour, attributed to implicit regularisation arising from standard optimisation and model selection techniques, has motivated the machine learning community to explore new approaches for assessing parsimony in models that exceed traditional limits on parameter counts. However, the DDE model in its current form is unlikely to exhibit such favourable behaviour. Its large (yet still relatively modest by machine learning standards) set of estimated parameters, combined with numerous fixed or pre-specified components, limits its ability to enter the low-bias, low-variance regime characteristic of the double descent phenomenon. Furthermore, as our analysis demonstrates, the simplicity of the contagion process being modelled and the limited availability of data constrain the model’s capacity to generalise, not only to new datasets of comparable size but even to modestly altered versions of the training set.

Although our proposed model is stochastic, it is important to emphasise that the distinction between stochastic and deterministic formulations is often secondary to the question of model adequacy for prediction. Both stochastic and deterministic models can be informative for forward prediction during the early to mid-stages of epidemics. For instance, Ref. 27 illustrates this using a deterministic SEIV model fitted to Ebola data from the 2014 outbreak in Sierra Leone, which recorded over 10,000 cases across approximately 60 weeks. The model was calibrated using the first \(T = 10, 20, 30,\) and 40 weeks of data to forecast subsequent epidemic dynamics. The results showed reliable predictive performance when \(T \ge 20\) (roughly one-third of the outbreak duration), consistent with our findings for the stochastic SIR model applied to the 1978 influenza outbreak. In general, stochastic models are particularly valuable for epidemics in small populations, such as the 1978 influenza outbreak in a boarding school, where random individual-level events, especially in the early phase, can substantially influence epidemic trajectories. In contrast, for large populations, stochastic fluctuations tend to average out, and deterministic formulations, which represent the limiting case of their stochastic counterparts, produce comparable results. In such settings, deterministic models may be preferred, as they offer a practical balance between predictive accuracy and computational efficiency.

More broadly, the discussion of stochastic versus deterministic formulations points to a deeper question: how to assess whether a model is adequate for its intended purpose. The notion of model adequacy naturally extends to the broader challenge of how models are evaluated, refined, and deployed in real-time decision-making contexts. From this perspective, our findings align with broader views on the role of modelling in crisis management, such as those articulated by Getz et al. (see Fig. 3 in Ref. 28). In this framework, modelling is conceived as an iterative process in which a model’s adequacy is continually reassessed in light of its purpose and the available data. Decisions about refining model structure or collecting additional information depend on whether the model sufficiently addresses the questions at hand. The perceived adequacy of models and data is inherently context dependent, particularly under the time constraints of public-health emergencies. For instance, a moderately effective intervention applied early in an epidemic may avert more cases than a highly optimised intervention introduced later, underscoring the importance of timely, transparent, and interpretable modelling over maximal complexity or perfect retrospective fit. Historical cases, such as the 1978 influenza outbreak, illustrate that simpler stochastic formulations can often yield more stable and actionable insights than overly elaborate models. These observations highlight the importance of preparedness: systematically benchmarking models against past data, refining them iteratively, and establishing early-stage data-collection protocols can greatly enhance predictive reliability and decision readiness in future outbreaks.

To conclude, our study highlights the trade-off between model complexity and predictive stability in epidemiological modelling. While detailed frameworks such as the DDE model can capture outbreak dynamics, they are vulnerable to the adverse effects of over-parameterisation and limited generalisability. In contrast, simpler models, whether stochastic or deterministic, tend to offer greater robustness and reliability for real-time epidemic forecasting, reinforcing their value in public health decision-making, particularly in settings with limited to moderate data availability.

Data availability

The 1978 influenza outbreak dataset is available in the R package “outbreaks”. The code for analyzing the DDE model can be found in Avilov et al. (2024). The MATLAB code for producing the results of the stochastic SIR model is available in the online repository referenced in the paper.

References

Anonymous. Influenza in a boarding school. BMJ 1, 587 (1978).

Avilov, K. K. et al. The 1978 English boarding school influenza outbreak: Where the classic SEIR model fails. J. R. Soc. Interface 21, 20240394 (2024).

De Vries, G., Hillen, T., Lewis, M., Muller, J. & Schonfisch, B. A course in mathematical biology: quantitative modeling with mathematical and computational methods (SIAM, 2006).

Martcheva, M. An introduction to mathematical epidemiology, vol. 61 (Springer, 2015).

Raissi, M., Ramezani, N. & Seshaiyer, P. On parameter estimation approaches for predicting disease transmission through optimization, deep learning and statistical inference methods. Lett. Biomath. 6, 1–26 (2019).

Grinsztajn, L., Semenova, E., Margossian, C. C. & Riou, J. Bayesian workflow for disease transmission modeling in Stan. Stat. Med. 40, 6209–6234 (2021).

Jombart, T., Frost, S., Nouvellet, P., Campbell, F. & Sudre, B. Outbreaks: A collection of disease outbreak data. R package version 1 (2017).

Li, M. An epidemic enigma: challenges in modeling the influenza epidemic in a boarding school. In 2023 Canadian Mathematical Society Summer Meeting (Ottawa, Canada, 2023).

Kalachev, L., Landguth, E. L. & Graham, J. Revisiting classical SIR modelling in light of the COVID-19 pandemic. Infect. Dis. Model. 8, 72–83 (2023).

Curth, A., Jeffares, A. & van der Schaar, M. A U-turn on double descent: Rethinking parameter counting in statistical learning. Adv. Neural. Inf. Process. Syst. 36, 55932–55962 (2023).

He, D., Dushoff, J., Day, T., Ma, J. & Earn, D. J. Inferring the causes of the three waves of the 1918 influenza pandemic in England and Wales. Proc. R. Soc. B Biol. Sci. 280, 20131345 (2013).

Biggerstaff, M., Cauchemez, S., Reed, C., Gambhir, M. & Finelli, L. Estimates of the reproduction number for seasonal, pandemic, and zoonotic influenza: a systematic review of the literature. BMC Infect. Dis. 14, 1–20 (2014).

Nikbakht, R., Baneshi, M. R., Bahrampour, A. & Hosseinnataj, A. Comparison of methods to estimate basic reproduction number (R0) of influenza, using Canada 2009 and 2017–18 A (H1N1) data. J. Res. Med. Sci. 24, 67 (2019).

Kypraios, T., Neal, P. & Prangle, D. A tutorial introduction to Bayesian inference for stochastic epidemic models using approximate Bayesian computation. Math. Biosci. 287, 42–53 (2017).

Bratsun, D., Volfson, D., Tsimring, L. S. & Hasty, J. Delay-induced stochastic oscillations in gene regulation. Proc. Natl. Acad. Sci. 102, 14593–14598 (2005).

Barrio, M., Burrage, K., Leier, A. & Tian, T. Oscillatory regulation of Hes1: Discrete stochastic delay modelling and simulation. PLoS Comput. Biol. 2, e117 (2006).

Anderson, D. F. A modified next reaction method for simulating chemical systems with time dependent propensities and delays. J. Chem. Phys. 127 (2007).

Barbuti, R., Caravagna, G., Milazzo, P. & Maggiolo-Schettini, A. On the interpretation of delays in delay stochastic simulation of biological systems. arXiv preprint arXiv:0910.1219 (2009).

Hill, P. D. Kernel estimation of a distribution function. Commun. Stat. Theory Methods 14, 605–620 (1985).

Silverman, B. W. Density estimation for statistics and data analysis (Routledge, 2018).

Chowell, G., Nishiura, H. & Bettencourt, L. M. Comparative estimation of the reproduction number for pandemic influenza from daily case notification data. J. R. Soc. Interface 4, 155–166 (2007).

Chowell, G., Bettencourt, L. M., Johnson, N., Alonso, W. J. & Viboud, C. The 1918–1919 influenza pandemic in England and Wales: spatial patterns in transmissibility and mortality impact. Proc. R. Soc. B Biol. Sci. 275, 501–509 (2008).

Mills, C. E., Robins, J. M. & Lipsitch, M. Transmissibility of 1918 pandemic influenza. Nature 432, 904–906 (2004).

Riley, P. et al. Multiple estimates of transmissibility for the 2009 influenza pandemic based on influenza-like-illness data from small US military populations. PLoS Comput. Biol. 9, e1003064 (2013).

Shil, P., Gurav, Y. K., Chadha, M. S. & Mishra, A. C. Transmission dynamics of novel influenza A/H1N1 2009 outbreak in a residential school in India. Current Science 1177–1183 (2011).

Dubova, M. et al. Is Ockham’s razor losing its edge? New perspectives on the principle of model parsimony. Proc. Natl. Acad. Sci. 122, e2401230121. https://doi.org/10.1073/pnas.2401230121 (2025).

Getz, W. M. et al. Making ecological models adequate. Ecol. Lett. 21, 153–166 (2018).

Getz, W. M., Salter, R., Muellerklein, O., Yoon, H. S. & Tallam, K. Modeling epidemics: A primer and numerus model builder implementation. Epidemics 25, 9–19 (2018).

Jombart, T., Frost, S., Nouvellet, P., Campbell, F. & Sudre, B. Outbreaks: A collection of disease outbreak data. R package version 1 (2017).

Tverskoi, D. Model fit vs. predictive reliability: A case study of the 1978 influenza outbreak (2025). Data repository. https://github.com/dtverskoi/Model_Fit_vs._Predictive_Reliability_A_Case_Study_of_the_1978_Influenza_Outbreak; Accessed: 3 March 2025.

Acknowledgements

We thank Sean S. Downey, participants of the HEALMOD seminar and members of Grzegorz Rempala’s lab at The Ohio State University for valuable comments and suggestions.

Author information

Contributions

G.A.R. conceived the study. D.T. performed simulations and data analysis. D.T. and G.A.R. discussed the results, drew conclusions, and wrote the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Tverskoi, D., Rempala, G.A. Model fit vs. predictive reliability: a case study of the 1978 influenza outbreak. Sci Rep 15, 42110 (2025). https://doi.org/10.1038/s41598-025-26072-3

DOI: https://doi.org/10.1038/s41598-025-26072-3