Abstract

This study investigated the impact of generative AI tools on human resource management practices, organizational commitment, employee engagement, and employee performance. The authors also investigated the mediating role of trust in the relationship between user perception and organizational commitment. A structured questionnaire was used to collect data from employees in the information technology industry to measure nine reflective constructs: optimism, innovativeness, ease of use, usefulness, trust, organizational commitment, vigor, dedication, and employee performance. Ease of use and usefulness are modeled as a higher-order construct, User Perception, whereas vigor and dedication are modeled as another higher-order construct, Employee Engagement. Model-fit indices were assessed for both the lower-order and the higher-order models, and both models showed an excellent fit. Positive and statistically significant effects were observed among the study constructs. The effect of organizational commitment on employee engagement was positive and statistically significant, and in turn, the effect of employee engagement on employee performance was also positive and statistically significant. Trust partially mediated the relationship between user perception and organizational commitment, fostering enhanced employee engagement and performance. Technology readiness theory, the stimulus-organism-response framework, and the technology acceptance model were used to develop the study’s theoretical framework, which offers fresh perspectives on how user experience and trust shape employee engagement, organizational commitment, and performance in the context of generative AI.
Theoretically, generative AI opens up new applications, such as personalized education and services, digital art, and realistic virtual assistants, that were previously unfeasible or impractical to automate. In practical terms, generative AI will greatly affect interdisciplinary research as well as the information technology industry. To facilitate swift adoption, the principles of generative AI are conceptualized, explicated, and defined from model-, system-, and application-level perspectives, as well as from a sociotechnical perspective. Finally, this research provides future scholars with a substantial research agenda for studying generative AI from different theoretical perspectives while incorporating concepts from these theories.


Introduction

Technological advances in fields such as artificial intelligence, machine learning, and allied areas have significantly transformed organizations across domains, and the information technology sector is no exception. The integration of various technological tools and applications has shifted employees’ working styles from hard work to smart work. Academics in universities and the education sector, as well as employees in information technology (IT), IT-enabled services, banking, and business process outsourcing, regularly use generative artificial intelligence tools based on large language models, such as OpenAI ChatGPT, Google Gemini (formerly known as Bard), and Microsoft Copilot and Bing AI; these are the most commonly used generative AI tools across domains. Other generative AI tools, such as SlidesAI, Wepik, and Tome, are used for presentation development, whereas Jasper AI, Notion AI, and Writesonic are used for content creation. Further tools are available for image creation (OpenAI DALL-E, Adobe Firefly), video generation (RunwayML, Pictory, Fliki), and paraphrasing (QuillBot), alongside generative AI assistants such as Google Duet AI. Together, these AI tools and applications have made it possible to obtain solutions quickly (Southworth et al. 2023; Reddy et al. 2023). ChatGPT is routinely used in business schools in India and worldwide for article writing, text generation, literature reviews, and manuscript development (Ratten and Jones, 2023). As an AI-powered virtual assistant, ChatGPT can provide instant answers to queries, together with explanations and requested content, and it can increase accessibility, commitment, and engagement and enhance performance (Mijwil et al. 2023).

However, the effects of novel technological tools such as generative AI on employee engagement, employee performance, and user satisfaction are not yet well understood (Acemoglu, Johnson, and Viswanath, 2023). The release of ChatGPT in November 2022 exemplified this shift: generative AI spans a variety of applications and generates diverse content on request, including text, code, images, audio, and video (Nazaretsky et al. 2022; Chui et al. 2023). Generative AI technology is built on the transformer, an advanced neural network architecture that learns patterns from input data to generate predictions. In turn, these AI tools influence employee engagement, productivity, and commitment and enhance employee performance (Wijayati et al. 2022). These AI tools and chatbots influence several aspects of their users’ routine work wherever they can be applied (Gatzioufa and Saprikis, 2022). Furthermore, they have long-lasting effects on employee engagement, organizational commitment, and performance (Pillai et al. 2023). Generative AI tools offer several benefits, such as easy access to information from different sources, the ability to accomplish routine tasks with greater speed and accuracy, and the ability to automate some individual tasks (Chui et al. 2023; Gill et al. 2024; Hwang and Chang, 2023).

Review of the literature

Generative AI technologies have the potential to automate most of employees’ routine tasks while increasing performance and productivity. These technologies can offer tailored assistance by analyzing previous information and can provide timely feedback that resembles human communication (Kuhail et al. 2023). AI tools can reduce labor costs, enhance performance, and improve human-computer interaction in the workplace (Pereira et al. 2023). The positive effects of AI technologies on organizational performance, employee engagement, organizational commitment, market valuations, and growth are well documented (Babina et al. 2024; Czarnitzki et al. 2023). The unique qualities of chatbots help employees complete their work on time (Foroughi et al. 2023). However, many organizations still regard generative AI tools as relatively novel, and research on the adoption intentions for these new technologies is scarce. Very few studies have examined the use of generative AI tools in healthcare, engineering, and management (Rahimi and Abadi, 2023; Javaid et al. 2023; Nikolic et al. 2023; Onal and Kulavuz-Onal, 2024) and in the hospitality and tourism industry (Dwivedi et al. 2024; Rejeb et al. 2024; Ratten and Jones, 2023).

However, some people are concerned about possible threats posed by AI tools, such as ownership and privacy issues, potential job losses, and pressures related to productivity optimization and employee performance. These concerns can lead employees to develop negative adoption intentions and mindsets, which reduce their engagement and commitment and increase their turnover intentions (Bankins et al. 2024). Furthermore, the literature lacks a comprehensive and reliable model explaining the dimensions that influence the use and adoption intentions of generative AI with respect to employee engagement, organizational commitment, and employee performance. Several researchers have integrated the unified theory of acceptance and use of technology 3 (UTAUT 3), the TAM, and the stimulus-organism-response framework to understand how employees use generative AI.

The UTAUT 3 model is a robust framework that considers a variety of dimensions influencing the adoption and use of generative AI or similar technologies (Gupta et al. 2023). The Technology Acceptance Model has been employed in several domains to study adoption intentions for new technologies (Foroughi et al. 2023). The capacity of generative AI tools to revolutionize work culture has attracted many researchers (Reddy et al. 2023). Generative AI tools can understand and participate in discussions in human languages, creating content in response to user input. They can also enhance engagement and commitment, improve employee performance, and have a positive effect on industry (Kasneci et al. 2023).

On the basis of this background, the following issues need to be addressed:

- Does the intention to use generative AI tools positively affect their acceptance and use in the workplace?
- Is trust in these tools a vital factor?
- How are organizational commitment, employee engagement, and employee performance related in the context of generative AI?

The main objectives of this research are as follows:

- To determine and validate the user perceptions that influence the use of generative AI tools in the workplace
- To examine whether trust mediates the relationship between user perception and organizational commitment
- To assess how organizational commitment affects employee engagement and, in turn, how employee engagement affects employee performance in the context of generative AI tools

The ease of use and usefulness of generative AI tools, users’ perceptions, and individuals’ readiness to accept new technologies are prime factors in the adoption of new AI technologies (Parasuraman, 2000). Usefulness, particularly usefulness at work, and positive user perceptions are the main factors affecting individuals’ acceptance of new technologies (Parasuraman and Colby, 2015). Building on the theory of reasoned action, Davis’s (1989) TAM explains the relationship between users’ attitudes toward a new technology and their actual usage. Another important factor is an individual’s trust in the technology, which shapes its adoption and continued use in the workplace and influences organizational commitment and employee engagement. Before using generative AI tools, employees want assurance that the results will be reliable. If an employee perceives the results as useful and believes that the tools perform accurately, trust in these tools increases. Trust is therefore a crucial factor in the use and adoption of generative AI tools: employees trust these tools enough for continued use, positive perceptions, and a positive experience if they find them reliable and useful (Gkinko and Elbanna, 2023). The quality of the results and the experience of using them influence users’ trust in these technologies, which in turn builds loyalty to the AI tools (Chen et al. 2023). A further question is how the use of generative AI tools influences employees’ work engagement: when the tools are useful and trusted, their use positively influences employees’ work engagement.

Using ability-motivation-opportunity (AMO) and person-organization (P-O) fit theories, Jia and Hou (2024) investigated the relationships among AI-driven sustainable human resource management (HRM), employee engagement, and employee performance, with conscientiousness as a moderator. According to the authors, AI-driven sustainable HRM improves employee engagement, which in turn boosts performance, and conscientiousness moderated the association between AI-driven sustainable HRM and employee engagement.

Mishra et al. (2024) investigated the relationship between AI and employee engagement in the context of organizational performance. The authors reported that adopting AI-based software can significantly help management increase employees’ engagement in their work, which in turn improves employee performance. Wang et al. (2023) reported that AI can influence healthcare practitioners’ attitudes toward AI, satisfaction with AI, and intentions to use AI, with a positive effect on healthcare workers’ engagement in the workplace. Yu et al. (2023) examined the configurational effects of artificial intelligence (AI) on hiring decision transparency, consistency, human involvement, and organizational commitment and reported a positive influence of AI tools on human resource practices and organizational commitment. Baabdullah (2024) carried out an empirical study of how the adoption of AI in decision-making can enhance organizational efficiency and performance; the structural equation modeling results revealed a positive effect of AI tools on employee performance, indicating that adopting AI tools can enhance organizational and functional performance.

To conduct the present empirical study, the authors developed a set of reflective constructs following a comprehensive review of the literature. The first constructs relate to adoption intention and user perceptions, namely, ease of use and usefulness, and their relationships with organizational commitment and employee engagement. To examine the mediating role of trust in the relationship between user perception and organizational commitment, the authors carried out a mediation analysis. Finally, the study assessed the effect of organizational commitment on employee engagement and, in turn, the effect of employee engagement on employee performance.

Theoretical framework

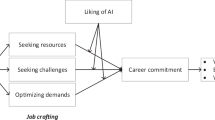

Generative AI refers to AI tools that allow users to create, enhance, and summarize unstructured data into meaningful and useful outputs. The theoretical framework is based on the TAM (Davis, 1989), whose two constructs, ease of use and usefulness, together capture user perception. The authors also used items from two dimensions of Parasuraman’s (2000) Technology Readiness Index (TRI): optimism, a positive attitude toward technology, and innovativeness, a willingness to try new functionalities that can be useful. The integration of the TRI and TAM has been conceptually developed and well documented (Lai and Lee, 2020) and tested by Flavián et al. (2022). Furthermore, stimulus-organism-response (SOR) theory (Mehrabian and Russell, 1973), which holds that external stimuli shape an individual’s internal states and that these internal processes in turn drive behavioral responses, was combined with the TAM. On the basis of these theories, the authors developed the theoretical framework presented in Fig. 1; the authors’ research model, in which trust mediates the relationship between user perception and organizational commitment, is presented in Fig. 2. The expectation that an optimistic and innovative attitude fosters a positive view of new technology led the authors to propose the following two hypotheses:

H1: Optimism is statistically significant and positively impacts User Perception.

H2: Innovativeness is statistically significant and positively impacts User Perception.

Trust is one of the important dimensions that shape interactions between generative AI and its users. It is defined as “the user’s judgment or expectations about how the AI system can help when the user is in a situation of uncertainty or vulnerability” (Cheng et al. 2022; Vereschak et al. 2021). Both distrust and blind trust affect users’ interactions with an AI tool and create risks in human-AI interaction (O’Connor et al. 2019; Pearce et al. 2022; Perry et al. 2022). Thus, how users of generative AI tools build trust in AI, and how to design tools that users can trust, have become important research topics. According to Cheng et al.’s (2023) analysis of online communities, developers work together to interpret how AI affects their communities, which helps researchers assess AI recommendations.

Despite several studies on generative AI, user trust in AI tools and its implications remain poorly understood and need to be explored critically. Trust is important in human-generative AI interactions because the adoption of AI tools in the workplace depends on the reliability and trustworthiness of the tools (McKnight et al. 2002; Korngiebel and Mooney, 2021). Trust is a dynamic concept that depends on previous successes and experiences, and trust in technology also depends on data security, privacy, and data protection (Yang and Wibowo, 2022). These authors further emphasize trust in the context of AI via the TAM. Therefore, the authors formulate the following hypothesis:

H3: User perception positively impacts Trust.

The authors aim to link generative AI use to organizational outcomes such as organizational commitment, work engagement, and employee performance. Researchers have suggested that the use of generative AI enhances work engagement (Picazo Rodríguez et al. 2023). Marikyan et al. (2022) examined the relationship between productivity and engagement in the context of AI-based digital assistants and reported that trust is an antecedent of satisfaction, commitment, and engagement. Rane (2023) investigated the integration of generative artificial intelligence systems with human resource practices for workforce and employee management and suggested maintaining a harmonious balance between generative AI tools and humans so that employees can engage with the tools with trust.

Employee engagement is an important factor in managing the health and productivity of any organization, and generative AI tools can support employee management through engagement. However, employees must be motivated to use new technologies, and whether such systems succeed in long-term employee management depends on several factors, including perceived risk, trust, usefulness, fairness, and ease of use (Hughes, 2019). Dutta et al. (2023) investigated the impact of generative AI tools on employee engagement, with trust as a mediator between generative AI and engagement. They reported implications of generative AI tools for employee engagement and performance and identified motivation and trust as the main factors in employee engagement with generative AI tools. Assefa (2022) investigated the deployment of AI tools to improve the effectiveness of employee communication and reported that access to and use of various AI tools can help foster innovation and that creativity can increase organizational commitment. The structural equation model results indicated that AI tools used to automate regular tasks increase productivity, commitment, employee engagement, and performance.

Feng-Hsiung Hou et al. (2021) investigated the relationships between demographic variables, such as gender, age, and education, and organizational commitment among artificial intelligence users, and reported statistically significant differences in organizational commitment across demographic groups of AI business employees. Yu et al. (2023) examined the configurational effects of AI on procedural justice perceptions and organizational commitment and found that AI tools positively influence hiring and organizational commitment. Organizations are also increasingly using AI tools to provide performance feedback to employees by analyzing employee behavior at work and recommending ways to enhance performance. Tong et al. (2021) reported negative effects of disclosing the use of AI for such feedback and urged organizations to mitigate this disclosure effect through proactive communication and explanations of the objectives of these AI tools to employees.

Wijayati et al. (2022) examined the impact of AI on work engagement and employee performance, with change leadership as a moderator. The SEM results indicated that AI has a statistically significant and positive effect on employee performance and employee engagement and that change leadership positively moderates these effects. Elegunde and Osagie (2020) examined the impact of deploying AI tools on employee performance in the Nigerian banking industry; the results indicated that AI can act as a catalyst that eases routine operations and enhances organizational commitment, employee engagement, and performance. On the basis of this critical review, the following hypotheses are formulated:

H4: Trust positively impacts organizational commitment in the context of generative AI tools.

H5: Organizational commitment positively impacts employee engagement in the context of generative AI tools.

H6: Employee engagement positively impacts employee performance in the context of generative AI tools.

H7: Trust mediates the relationship between user perception and organizational commitment.

Methodology

Data collection and sample

A carefully designed questionnaire was used to gather data to measure nine reflective constructs. The survey had two sections: the first covered the respondents’ demographic profile, and the second covered the constructs of the TAM-SOR-TRI models. A seven-point Likert-type scale was used, where one denoted strong disagreement and seven denoted strong agreement. The suitability of the questionnaire was determined via a pretest: four professionals with expertise in management and information technology examined the questionnaire content, and an English language consultant was also consulted. The pilot study was carried out with 100 information technology employees, including software engineers, testing specialists, team leaders, and project managers. Convenience sampling was used to reach respondents who fulfilled the study’s sample characteristics. The data were collected from January to March 2024 through a questionnaire published on Google Forms, and the link was shared with targeted participants who met the predetermined sample characteristic of using generative AI tools. A total of 552 responses were received; 52 were dropped because of inappropriate response behavior or because the respondents left more than 40% of the questions unanswered. Thus, 500 valid responses were retained. Table 1 presents the demographic characteristics of the participants.

Justification of sample size

According to Anderson and Gerbing (1984), the recommended sample size for maximum likelihood estimation with multivariate normal data is between 200 and 400, with a case-to-free-parameter ratio of 5:1, that is, at least five cases for each freely estimated parameter. Furthermore, the criterion of 50 + 5x provided by James Gaskin (2023) for SEM analysis, where x is the number of statements, was applied. The present empirical research has 33 questions, so the required sample size under this criterion is 215, and the 500 valid responses obtained exceed it. The sample size is also greater than that suggested for SEM analysis by Wolf et al. (2013), who assessed the sample sizes needed for widely used applied SEMs via Monte Carlo data simulation. The authors also performed a power analysis to support the sample size.
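The two rules of thumb above reduce to simple arithmetic. The sketch below encodes them as hypothetical helper functions (`rule_50_plus_5x` and `ratio_rule` are illustrative names, not part of any SEM package). Because the number of free parameters in the study’s model is not reported, the ratio rule is shown with a placeholder value only.

```python
def rule_50_plus_5x(n_items: int) -> int:
    """Minimum N under the 50 + 5x rule, where x is the number of items."""
    return 50 + 5 * n_items


def ratio_rule(n_free_params: int, cases_per_param: int = 5) -> int:
    """Minimum N under a cases-to-free-parameter ratio (5:1 by default)."""
    return cases_per_param * n_free_params


n_items = 33   # number of questionnaire statements reported in the text
n_valid = 500  # valid responses reported in the text

required = rule_50_plus_5x(n_items)
print(f"50 + 5x rule: need {required}, have {n_valid}, adequate: {n_valid >= required}")

# Hypothetical parameter count, used only to illustrate the 5:1 ratio rule.
print(f"5:1 ratio with 80 free parameters: need {ratio_rule(80)}")
```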

Power analysis

A power analysis was conducted via SPSS version 29 to assess the statistical power of the study sample (Faul, Erdfelder, Lang, and Buchner, 2007), with alpha set at 0.05. The standard deviation of the sample was 1.17. The results revealed an actual power of 0.955 for the sample size of 500 with an effect size of 0.82, indicating a strong and significant relationship among the variables (Fig. 3). Thus, the sample size of N = 500 is more than adequate for testing the study hypotheses (Kyriazos, 2018; Goulet-Pelletier and Cousineau, 2018).
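The text does not state which effect-size metric the value of 0.82 refers to, so the sketch below makes no attempt to reproduce the reported power of 0.955. Instead, assuming the effect of interest is a Pearson correlation tested two-sided against zero, it shows how power grows with N under the Fisher z approximation. The function name `correlation_power` is hypothetical.

```python
import math
from statistics import NormalDist


def correlation_power(r: float, n: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided test of H0: rho = 0 (Fisher z method)."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    # Under H1, atanh(r_hat) is roughly normal with mean atanh(r) and SD 1/sqrt(n - 3).
    shift = math.atanh(r) * math.sqrt(n - 3)
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)


for r in (0.1, 0.2, 0.3):
    print(f"r = {r}: power at N = 500 is {correlation_power(r, 500):.3f}")
```

Dedicated tools such as G*Power compute exact rather than approximate power; the approximation is used here only to keep the example dependency-free.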

Measures

A comprehensive review of the literature was carried out to develop a model of the constructs that influence IT sector employees’ inclination to use generative AI tools and their subsequent user perceptions. The review showed that few studies have examined generative AI tools and their relationships with human resource outcomes in the context of the TAM-SOR-TRI models.

To bridge this gap, this study proposes a theoretical framework that incorporates the TAM-SOR-TRI models (Fig. 1). The constructs of optimism and innovativeness were operationalized with measures from past research (Parasuraman, 2000; Parasuraman and Colby, 2015); optimism has 3 items, and innovativeness has 4 items. Following the TAM, the constructs of perceived usefulness and perceived ease of use were constructed with 3 items each (Davis et al. 1989), and these two constructs are modeled as the higher-order construct User Perception. The Trust construct has five items and was modeled following McKnight et al. (2002), Glikson and Woolley (2020), Frank et al. (2024), and Candrian and Scherer (2022). Organizational commitment, with five items, was developed following Allen and Meyer (1993) and Meyer et al. (1989). The two employee engagement constructs, vigor and dedication, were measured with the work engagement questionnaire developed by Schaufeli et al. (2006); each has 3 items, and they were modeled as the higher-order construct EMPENG to measure employee engagement. Employee performance has five items and was developed on the basis of the model of Pradhan and Jena (2017).

Data analysis and results

Because the size of the information technology population is not known, Cochran’s (1977) formula for an unknown population was used to determine the sample size; according to this formula, the required sample size is 385. An alternative rule of thumb sets the sample size at 50 + 5x, where x is the number of statements. The study has 9 reflective constructs with 33 items, so the sample size required for SEM analysis is 50 + 5 × 33 = 215. The 500 valid responses available far exceed both figures and the sample size suggested for SEM analysis by Wolf et al. (2013).
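Cochran’s formula for an unknown population is n0 = z²p(1 − p)/e². The text does not list its inputs; the sketch below assumes the conventional choices that yield 385: a 95% confidence level (z = 1.96), maximum variability (p = 0.5), and a 5% margin of error (e = 0.05). The function name `cochran_n` is illustrative.

```python
import math


def cochran_n(z: float = 1.96, p: float = 0.5, e: float = 0.05) -> int:
    """Cochran's sample size for an unknown (effectively infinite) population.

    n0 = z^2 * p * (1 - p) / e^2, rounded up to the next whole respondent.
    """
    return math.ceil(z ** 2 * p * (1 - p) / e ** 2)


# 1.96^2 * 0.25 / 0.0025 = 384.16, which rounds up to 385 respondents.
print(cochran_n())
```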

Exploratory factor analysis (EFA) was used to uncover the underlying structure of the observed variables. The factor analysis grouped the 33 study items into 9 components on the basis of their shared variance. The nine components explained a cumulative 81.961% of the variance, above the recommended minimum of 50%; therefore, further analysis was carried out.
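Assuming a principal-component extraction (the text speaks of “components”), the cumulative variance figure is the sum of the leading eigenvalues of the item correlation matrix divided by the number of items. The sketch below illustrates this, together with the Kaiser eigenvalue-greater-than-one count, on a hypothetical toy matrix rather than the study data.

```python
import numpy as np


def cumulative_variance(R, k):
    """Share of total variance captured by the first k principal components of R."""
    eigenvalues = np.sort(np.linalg.eigvalsh(np.asarray(R, dtype=float)))[::-1]
    # For a correlation matrix the eigenvalues sum to the number of items.
    return eigenvalues[:k].sum() / eigenvalues.sum()


def kaiser_count(R):
    """Number of components with eigenvalue > 1 (Kaiser criterion)."""
    return int((np.linalg.eigvalsh(np.asarray(R, dtype=float)) > 1).sum())


# Toy example: six items that all correlate 0.5 (hypothetical, not the study data).
R = np.full((6, 6), 0.5)
np.fill_diagonal(R, 1.0)
print(f"{100 * cumulative_variance(R, 1):.1f}% of variance, {kaiser_count(R)} component(s)")
```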

KMO and Bartlett’s test: The Kaiser–Meyer–Olkin (KMO) measure of sampling adequacy, which gauges the suitability of the data for factor analysis, was 0.929 (Table 2). Bartlett’s test of sphericity was significant (p < 0.001), indicating that the correlation matrix was not an identity matrix; thus, further analysis was carried out. Factor loadings are presented in Table 3.
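Both diagnostics can be computed directly from an item correlation matrix. The sketch below uses the standard textbook formulas: the overall KMO compares squared correlations with squared anti-image (partial) correlations, and Bartlett’s statistic is −(n − 1 − (2p + 5)/6)·ln|R| with p(p − 1)/2 degrees of freedom. It is not the SPSS implementation. The chi-square tail uses the closed-form series for integer degrees of freedom, and the toy matrix is hypothetical.

```python
import math

import numpy as np


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail chi-square probability for integer df (closed-form series)."""
    if x <= 0:
        return 1.0
    chi = math.sqrt(x)
    if df % 2 == 0:
        term = total = 1.0
        for r in range(1, df // 2):
            term *= x / (2 * r)
            total += term
        return math.exp(-x / 2) * total
    pdf = math.exp(-x / 2) / math.sqrt(2 * math.pi)
    term, total = chi, 0.0
    for r in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * r + 1)
    return math.erfc(chi / math.sqrt(2)) + 2 * pdf * total


def kmo(R):
    """Overall Kaiser-Meyer-Olkin measure from a correlation matrix R."""
    R = np.asarray(R, dtype=float)
    inv = np.linalg.inv(R)
    d = np.sqrt(np.diag(inv))
    partial = -inv / np.outer(d, d)  # anti-image (partial) correlations
    off = ~np.eye(len(R), dtype=bool)  # use off-diagonal entries only
    r2 = (R[off] ** 2).sum()
    p2 = (partial[off] ** 2).sum()
    return r2 / (r2 + p2)


def bartlett_sphericity(R, n):
    """Bartlett's test that R is an identity matrix; returns (chi2, df, p)."""
    R = np.asarray(R, dtype=float)
    p = R.shape[0]
    _, logdet = np.linalg.slogdet(R)
    stat = -(n - 1 - (2 * p + 5) / 6) * logdet
    df = p * (p - 1) // 2
    return stat, df, chi2_sf(stat, df)


# Hypothetical equicorrelated matrix (six items, r = 0.5) and n = 500.
R = np.full((6, 6), 0.5)
np.fill_diagonal(R, 1.0)
print(f"KMO = {kmo(R):.3f}")
print(bartlett_sphericity(R, n=500))
```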

This study used IBM SPSS for factor analysis and AMOS version 28 for structural equation modeling, which is well suited to revealing the amount of variability in the dependent variables (Hair et al. 2019). The SEM analysis comprised three steps. First, the measurement model was assessed to determine the relationships between indicators and constructs; second, the structural model was analyzed to verify the expected relationships between constructs (Ringle and Sarstedt, 2016); and third, a mediation analysis was carried out to determine the role of trust as a mediator in the relationship between user perception and organizational commitment.
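The study estimates trust’s mediating role with latent variables in AMOS. As an illustration of the underlying logic only, the sketch below uses ordinary least squares on simulated observed scores (not the study data) to compute the indirect effect a × b and a percentile bootstrap confidence interval. The helper names and the mapping of X, M, and Y to user perception, trust, and commitment are hypothetical. A nonzero indirect effect together with a nonzero direct effect c′ corresponds to partial mediation, the pattern reported for trust.

```python
import numpy as np


def slopes(y, *predictors):
    """OLS slopes (intercept dropped) of y on the given predictors."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta[1:]


def mediation(x, m, y):
    """Product-of-coefficients mediation: returns (indirect a*b, direct c')."""
    (a,) = slopes(m, x)           # path a: X -> M
    c_prime, b = slopes(y, x, m)  # path c' (X -> Y given M) and path b (M -> Y given X)
    return a * b, c_prime


def bootstrap_indirect_ci(x, m, y, n_boot=1000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for the indirect effect."""
    rng = np.random.default_rng(seed)
    n = len(x)
    draws = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample respondents with replacement
        draws[i], _ = mediation(x[idx], m[idx], y[idx])
    tail = (1 - level) / 2
    return tuple(np.quantile(draws, [tail, 1 - tail]))


# Simulated data (not the study data): X = user perception, M = trust, Y = commitment.
rng = np.random.default_rng(42)
n = 2000
x = rng.normal(size=n)
m = 0.5 * x + rng.normal(size=n)
y = 0.3 * x + 0.4 * m + rng.normal(size=n)

indirect, direct = mediation(x, m, y)
low, high = bootstrap_indirect_ci(x, m, y)
print(f"indirect = {indirect:.3f} (95% CI {low:.3f} to {high:.3f}), direct = {direct:.3f}")
```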

Measurement model assessment

The measurement model was assessed both for the nine constructs separately and for the model with the higher-order constructs User Perception and Employee Engagement. The results are presented in Table 3. Three criteria were assessed: internal consistency, convergent validity, and discriminant validity. All constructs exhibited good reliability, with Cronbach’s alpha values ranging from 0.897 to 0.948, exceeding the threshold of 0.70 (Hair, 2010). Every factor loading was greater than 0.6, and no construct showed excessive residual variance shared with other constructs. Additionally, the average variance extracted (AVE) of every construct exceeded 0.5, demonstrating adequate convergent validity (Table 3; Hair et al. 2019).
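The two reliability and convergent-validity statistics above follow standard formulas: Cronbach’s alpha compares the sum of item variances with the variance of the total score, and AVE is the mean of the squared standardized loadings. The sketch below implements both; the scores and loadings in the usage lines are hypothetical, not values from Table 3.

```python
import numpy as np


def cronbach_alpha(scores):
    """Cronbach's alpha for a (respondents x items) matrix of scores."""
    X = np.asarray(scores, dtype=float)
    k = X.shape[1]
    sum_item_var = X.var(axis=0, ddof=1).sum()
    total_var = X.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1 - sum_item_var / total_var)


def average_variance_extracted(loadings):
    """AVE: mean of squared standardized factor loadings."""
    lam = np.asarray(loadings, dtype=float)
    return float(np.mean(lam ** 2))


# Hypothetical 7-point Likert responses for a three-item construct.
scores = [[5, 6, 5], [3, 3, 4], [7, 6, 7], [2, 3, 2], [6, 6, 5]]
print(f"alpha = {cronbach_alpha(scores):.3f}")
print(f"AVE = {average_variance_extracted([0.82, 0.78, 0.85]):.3f}")
```

A construct meets the criteria used in the text when alpha exceeds 0.70 and AVE exceeds 0.5.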

Discriminant validity was assessed via the Fornell–Larcker (1981) criterion and the heterotrait–monotrait ratio (HTMT). For each construct, the square root of the AVE was greater than its correlations with the other constructs, indicating good discriminant validity. In addition, all HTMT ratios were below the cutoff of 0.85 (Henseler et al. 2015). Discriminant validity was therefore established (Tables 4 and 5).
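Both discriminant-validity checks can be expressed in a few lines. In the sketch below, HTMT is the mean heterotrait correlation divided by the geometric mean of the two constructs’ average monotrait (within-construct) correlations. The Fornell–Larcker check requires each construct’s √AVE to exceed its absolute correlations with every other construct. Items are assumed to be positively coded, and the toy matrix is hypothetical.

```python
from itertools import combinations

import numpy as np


def htmt(R, items_i, items_j):
    """Heterotrait-monotrait ratio for two constructs from an item correlation matrix."""
    R = np.asarray(R, dtype=float)
    hetero = R[np.ix_(items_i, items_j)].mean()

    def mono(items):
        return np.mean([R[a, b] for a, b in combinations(items, 2)])

    return hetero / np.sqrt(mono(items_i) * mono(items_j))


def fornell_larcker_holds(ave, latent_corr):
    """True if sqrt(AVE) of each construct exceeds its correlations with all others."""
    root = np.sqrt(np.asarray(ave, dtype=float))
    C = np.abs(np.asarray(latent_corr, dtype=float))
    k = len(root)
    return all(C[i, j] < root[i] for i in range(k) for j in range(k) if i != j)


# Toy item correlations: items 0-1 form construct A, items 2-3 form construct B.
R = np.array([
    [1.0, 0.6, 0.3, 0.3],
    [0.6, 1.0, 0.3, 0.3],
    [0.3, 0.3, 1.0, 0.6],
    [0.3, 0.3, 0.6, 1.0],
])
print(f"HTMT = {htmt(R, [0, 1], [2, 3]):.2f}")  # 0.3 / sqrt(0.6 * 0.6), below 0.85
```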

Model fit

CFA was performed via AMOS version 28. First, in the lower-order model CFA, the factor loadings were assessed for each item. Model fit measures (CMIN/df, GFI, CFI, TLI, SRMR, and RMSEA) were used to assess the model’s overall goodness of fit, and all values were acceptable, as recommended by Ullman (2001), Hu and Bentler (1998), and Bentler (1990). The nine-factor model (PERF: performance; OCT: organizational commitment; trust; innovativeness; dedication; optimism; vigor; ease of use; and usefulness) fit the data well: CMIN/df = 1.99, CFI = 0.967, GFI = 0.938, TLI = 0.925, IFI = 0.987, NFI = 0.958, SRMR = 0.033, RMSEA = 0.024, and PClose = 1.000. All factor loadings were positive and greater than 0.5 (Kline, 2015), with average loadings above 0.7 for all nine constructs, indicating an excellent measurement model (Byrne, 2013).
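AMOS reports these indices directly. The sketch below only shows the standard formulas behind RMSEA, CFI, and TLI, using hypothetical chi-square values because the baseline (independence) model’s chi-square is not reported in the text. Some programs use N rather than N − 1 in the RMSEA denominator.

```python
import math


def rmsea(chi2, df, n):
    """Point estimate of RMSEA for sample size n."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2, df, chi2_null, df_null):
    """Comparative fit index relative to the independence (null) model."""
    d_model = max(chi2 - df, 0.0)
    d_null = max(chi2_null - df_null, d_model)
    return 1.0 if d_null == 0 else 1 - d_model / d_null


def tli(chi2, df, chi2_null, df_null):
    """Tucker-Lewis index (non-normed fit index)."""
    null_ratio = chi2_null / df_null
    return (null_ratio - chi2 / df) / (null_ratio - 1)


# Hypothetical values: model chi2 = 200 on 100 df, null chi2 = 2000 on 120 df, N = 501.
print(f"CMIN/df = {200 / 100:.2f}")
print(f"RMSEA = {rmsea(200, 100, 501):.3f}")
print(f"CFI = {cfi(200, 100, 2000, 120):.3f}, TLI = {tli(200, 100, 2000, 120):.3f}")
```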

Furthermore, the model with the higher-order constructs of user perception and employee engagement was assessed for model fit. It also showed an excellent fit: CMIN/df = 2.905, CFI = 0.947, GFI = 0.928, TLI = 0.925, IFI = 0.957, NFI = 0.938, SRMR = 0.037, and RMSEA = 0.048. As both models exhibited excellent fit, the structural model (Fig. 4) was evaluated.

Structural model

The relationships among the constructs were tested by estimating a structural equation model in AMOS 28. According to Hair (2010), a well-fitting model is indicated by high values of the Tucker–Lewis index (TLI; Tucker and Lewis, 1973), the comparative fit index (CFI; Bentler, 1990), and the goodness-of-fit index (GFI; Hair, 2010), together with a CMIN/df below 5. Furthermore, according to Hair (2010), a model is deemed adequate if the standardized root mean square residual (SRMR) computed by AMOS is less than 0.05 and the root mean square error of approximation (RMSEA) is below 0.08.

The squared multiple correlations were 0.63 for user perception, 0.38 for trust, 0.30 for organizational commitment, 0.50 for employee engagement, and 0.54 for employee performance. Thus, optimism and innovativeness together explain 63% of the variance in user perception; user perception explains 38% of the variance in trust and 30% of the variance in organizational commitment; organizational commitment explains 50% of the variance in employee engagement; and employee engagement explains 54% of the variance in employee performance (Fig. 4).

Common method bias

Common method bias (CMB) is the artificial inflation or deflation of the true correlations among a study’s observed variables. Covariance can be artificially inflated because respondents usually answer questions on both the independent and the dependent variables at the same time. This study assessed common method bias via Harman’s single-factor test and a latent common method factor.

Harman’s single-factor test: All indicators were loaded onto a single factor, and the model was evaluated using confirmatory factor analysis. The single-factor model fit the data poorly, which suggests that one common factor does not account for the covariation among the items and that common method bias is unlikely to be a serious concern.
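Harman’s test is more often run as an exploratory analysis than in the CFA form used here: the share of total variance captured by the first unrotated factor is compared with a 50% threshold. The sketch below swaps in that exploratory variant, approximating the first factor by the first principal component of the item correlation matrix, and runs it on simulated data rather than the study’s data. All function names are hypothetical.

```python
import math
import random


def correlation_matrix(data):
    """Pearson correlations between columns; data is a list of rows of item scores."""
    n, p = len(data), len(data[0])
    cols = [[row[j] for row in data] for j in range(p)]
    means = [sum(c) / n for c in cols]
    sds = [math.sqrt(sum((v - mu) ** 2 for v in c) / (n - 1)) for c, mu in zip(cols, means)]
    r = [[0.0] * p for _ in range(p)]
    for i in range(p):
        for j in range(p):
            cov = sum((a - means[i]) * (b - means[j]) for a, b in zip(cols[i], cols[j])) / (n - 1)
            r[i][j] = cov / (sds[i] * sds[j])
    return r


def largest_eigenvalue(mat, iters=500):
    """Power iteration; adequate here because a correlation matrix is symmetric PSD."""
    p = len(mat)
    v = [1.0] * p
    lam = 0.0
    for _ in range(iters):
        w = [sum(mat[i][j] * v[j] for j in range(p)) for i in range(p)]
        lam = math.sqrt(sum(x * x for x in w))
        v = [x / lam for x in w]
    return lam


def harman_first_factor_share(data):
    """Share of total variance on the first unrotated component (trace = number of items)."""
    r = correlation_matrix(data)
    return largest_eigenvalue(r) / len(r)


def simulate_items(n, n_factors, items_per_factor, loading, seed=1):
    """Standardized items, each loading on one of n_factors independent factors."""
    rng = random.Random(seed)
    err_sd = math.sqrt(1 - loading ** 2)
    data = []
    for _ in range(n):
        factors = [rng.gauss(0, 1) for _ in range(n_factors)]
        data.append([loading * f + rng.gauss(0, err_sd)
                     for f in factors for _ in range(items_per_factor)])
    return data


multi_factor = simulate_items(n=500, n_factors=3, items_per_factor=4, loading=0.7)
one_factor = simulate_items(n=500, n_factors=1, items_per_factor=12, loading=0.8)
print(f"3-factor data: {harman_first_factor_share(multi_factor):.2f}")
print(f"1-factor data: {harman_first_factor_share(one_factor):.2f}")
```

With three distinct factors the first component captures well under half of the variance, whereas data generated by one common source exceed the 50% threshold, which is the pattern the test is meant to flag.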

Latent common method factor: The researchers added an unobserved latent construct, the common method factor, with a direct path to every indicator in the measurement model. To test whether a common influence affected all items, the paths from the method factor were constrained to be equal. The CFA model was then re-estimated with this factor, and its chi-square value was recorded.

For the original model without the latent construct, the chi-square value was 915.700 with 436 degrees of freedom; for the model with the latent method factor, it was 919.800 with 437 degrees of freedom. The chi-square difference of 4.10 for one degree of freedom exceeds the critical value of 3.84 at p = 0.05, indicating that some common method variance is present. However, because this difference is small, CMB has little bearing on the study’s findings and is not a significant problem in this work (Tables 6 and 7).
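The comparison of the two nested models is a chi-square difference test. The sketch below reproduces the arithmetic with the values reported above. Because the models differ by one degree of freedom, it uses the identity that the chi-square survival function with 1 df equals erfc(sqrt(x/2)), so no statistics library is needed; the function name is hypothetical, and the sketch deliberately rejects other df differences.

```python
import math


def chi_square_difference_1df(chi2_a, df_a, chi2_b, df_b):
    """Chi-square difference test for two nested models differing by one df."""
    d_chi2 = abs(chi2_b - chi2_a)
    d_df = abs(df_b - df_a)
    if d_df != 1:
        raise ValueError("this sketch only handles a 1-df difference")
    # For 1 df, P(chi2 > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(d_chi2 / 2))
    return d_chi2, d_df, p_value


d_chi2, d_df, p_value = chi_square_difference_1df(915.700, 436, 919.800, 437)
print(f"delta chi2 = {d_chi2:.2f}, delta df = {d_df}, p = {p_value:.3f}")
# prints: delta chi2 = 4.10, delta df = 1, p = 0.043
```

A p-value just below 0.05 is consistent with a statistically detectable but small method effect.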

Testing of hypotheses

The study assessed the impact of optimism and innovativeness on user perception; the impact of user perception on trust and organizational commitment; the impact of organizational commitment on employee engagement; and, in turn, the impact of employee engagement on employee performance in the context of employees’ adoption and use of generative AI tools in the organization (Table 8).

H1, “Optimism positively and significantly impacts user perception”, was supported (ß = 0.416, t = 9.176, p < 0.001), indicating that optimism has a positive and statistically significant effect on user perception. A positive user perception is a key element in adopting and using any new technology. H2, “Innovativeness positively and significantly impacts user perception”, was also supported (ß = 0.081, t = 3.964, p < 0.001). However, a comparison of the two regression weights shows that optimism has a considerably stronger influence on user perception than innovativeness does. These findings are consistent with those of Wang et al. (2023), who reported, in the context of the TAM, that AI can enhance employee engagement and improve performance.

H3, “User perception positively and significantly impacts trust”, was supported by the path analysis results (ß = 0.891, t = 8.906, p < 0.001). As posited in the fourth hypothesis, the influence of trust on organizational commitment is positive and statistically significant (ß = 0.101, t = 2.290, p < 0.05) in the context of generative AI tools.

The authors also examined the mediating role of trust in the relationship between user perception and organizational commitment. The direct effect is positive and statistically significant (ß = 0.758, t = 6.218, p < 0.001), and the indirect effect is also positive and statistically significant (ß = 0.090, t = 7.456, p < 0.001). Because both effects are significant, trust partially mediates the relationship between user perception and organizational commitment (Zhang and Zhang, 2015). This finding supports H4, “Trust positively impacts organizational commitment”, and H7, “Trust mediates the relationship between user perception and organizational commitment”, in the context of generative AI tools (Table 9).
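To make the partial-mediation decision rule concrete, the sketch below estimates the indirect effect as the product of the a path (user perception to trust) and the b path (trust to organizational commitment, controlling for user perception), with percentile bootstrap confidence intervals for both the indirect and direct effects. This is a simplified illustration under stated assumptions: it uses ordinary least squares on observed scores from simulated data, not the study’s latent-variable model or data, and all function names are hypothetical.

```python
import random
import statistics


def _cov(u, v):
    """Sample covariance of two equal-length sequences."""
    mu, mv = statistics.fmean(u), statistics.fmean(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (len(u) - 1)


def mediation_paths(x, m, y):
    """OLS estimates: a from M ~ X; direct effect c' and b from Y ~ X + M."""
    sxx, smm, sxm = _cov(x, x), _cov(m, m), _cov(x, m)
    sxy, smy = _cov(x, y), _cov(m, y)
    a = sxm / sxx
    det = sxx * smm - sxm ** 2  # solve the 2x2 normal equations for Y ~ X + M
    c_prime = (smm * sxy - sxm * smy) / det
    b = (sxx * smy - sxm * sxy) / det
    return a, b, c_prime


def bootstrap_cis(x, m, y, n_boot=1000, seed=42):
    """Percentile-bootstrap 95% CIs for the indirect (a*b) and direct (c') effects."""
    rng = random.Random(seed)
    n = len(x)
    indirect, direct = [], []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        a, b, c_prime = mediation_paths([x[i] for i in idx], [m[i] for i in idx], [y[i] for i in idx])
        indirect.append(a * b)
        direct.append(c_prime)

    def percentile_ci(values):
        values = sorted(values)
        return values[round(0.025 * n_boot)], values[round(0.975 * n_boot) - 1]

    return percentile_ci(indirect), percentile_ci(direct)


def classify(indirect_ci, direct_ci):
    """An effect whose CI excludes zero is treated as significant."""
    indirect_sig = indirect_ci[0] > 0 or indirect_ci[1] < 0
    direct_sig = direct_ci[0] > 0 or direct_ci[1] < 0
    if indirect_sig and direct_sig:
        return "partial mediation"
    if indirect_sig:
        return "full mediation"
    return "no mediation"


# Simulated data (not the study's): X = user perception, M = trust, Y = organizational commitment.
rng = random.Random(7)
x = [rng.gauss(0, 1) for _ in range(300)]
m = [0.6 * xi + rng.gauss(0, 1) for xi in x]
y = [0.5 * xi + 0.3 * mi + rng.gauss(0, 1) for xi, mi in zip(x, m)]

indirect_ci, direct_ci = bootstrap_cis(x, m, y)
print("indirect 95% CI:", indirect_ci, "direct 95% CI:", direct_ci)
print(classify(indirect_ci, direct_ci))
```

Bootstrapping the product a*b avoids assuming that the indirect effect is normally distributed, which is why percentile intervals are commonly preferred over a Sobel-type test for mediation.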

The impact of organizational commitment on employee engagement is positive and statistically significant (ß = 0.512, t = 7.994, p < 0.001), supporting H5: “Organizational commitment positively impacts employee engagement” in the context of generative AI tools. The impact of employee engagement on employee performance is also positive and statistically significant (ß = 0.750, t = 8.048, p < 0.001), supporting H6: “Employee engagement positively impacts employee performance” in the context of generative AI tools. The results of the path analysis are shown in Fig. 5.

Discussion

In the information technology sector, the effectiveness of AI-driven tools has received considerable attention because of their potential to improve employee engagement and performance and to automate routine tasks. This study investigated the factors that influence generative AI adoption and use among information technology employees and how such adoption, in turn, shapes actual usage patterns. Very few studies have examined the adoption and use of generative AI tools in relation to trust, organizational commitment, employee engagement, and employee performance. The outcomes of this study provide valuable insights into the adoption of AI tools in the workplace and can help advance further research on employees’ use of AI tools for routine and repetitive assignments. The authors investigated the effects of several constructs (user perception, trust, organizational commitment, employee engagement, and employee performance) in relation to generative AI tools and their influence in the workplace. All the hypotheses were supported, with trust partially mediating the relationship between user perception and organizational commitment. User perception, reflected in the ease of use and usefulness of new tools, and trust are paramount factors for the use and adoption of new AI tools. The results are consistent with past studies showing that the use of generative AI tools can enhance organizational commitment, employee engagement, and employee performance, and, with respect to organizational commitment, with the results of Assefa (2022) and Yu et al. (2023).

Ghazali et al. (2024) reported the importance of optimism and innovativeness for the intention to adopt AI tools. Continued post-adoption use of AI tools depends on the optimism, innovativeness, and ease of use of the new AI tools. Optimism and innovativeness are thus two major antecedents of the use and adoption of generative AI tools and other AI tools in the workplace. Shah et al. (2024) considered the technology readiness index dimensions of optimism, innovativeness, insecurity, and discomfort in relation to customer engagement.

Shah et al. (2024) also assessed the purchase intentions of online shoppers using AI voice assistants (AI VAs). Their structural equation modeling results reveal that the TRI dimensions of optimism and innovativeness are critical drivers of customer engagement, which in turn drives the purchase intentions of online shoppers using AI VAs. Our results are in line with these findings. Myin and Watchravesringkan (2024) examined consumers’ adoption of generative AI chatbots in the context of apparel shopping, integrating the TAM (Davis, 1987) with behavioral reasoning theory (Westaby, 2005) and testing the hypothesized relationships using structural equation modeling. Their findings show that optimism positively and significantly influences perceived ease of use, whereas innovativeness has a statistically significant positive effect on perceived usefulness and relative advantage. Our hypotheses and results are consistent with these findings.

Datta et al. (2023) investigated how AI-enabled chatbots affect employee engagement, with trust as a mediator. Trust fully mediated this relationship, and AI-enabled chatbots had a statistically significant impact on employee engagement and employee performance. Braganza et al. (2021) investigated the effects of psychological contracts, job engagement, and trust in the context of AI tool adoption and reported that adopting AI tools positively influenced job engagement and psychological contracts. Our results concur with these findings.

Jia and Hou (2024) examined the relationship between AI-enabled HRM and employee engagement and performance. The authors integrated ability-motivation-opportunity (AMO) and person-organization (P-O) fit theories to model the study. The results reveal that AI-driven sustainable HRM practices positively influence employee engagement and, in turn, enhance employee performance. Mishra et al. (2024) investigated AI tool usage in the context of employee engagement and employee performance and found that AI-based tools can significantly and positively impact employee engagement and organizational performance. Prentice et al. (2023) investigated the effect of AI on employees’ job engagement and job performance. The authors reported that the effective use of AI had a significant influence on job engagement and employee performance and recommended adopting and deploying AI-based tools to enhance both. Our results are consistent with these findings.

Theoretical discussion

We modeled our study by combining the Technology Acceptance Model, the Technology Readiness Index, Stimulus‒Organism‒Response theory, and Self-Determination Theory. The study adopted the optimism and innovativeness constructs from the Technology Readiness Index (Parasuraman, 2000), modeled user perception and trust following McKnight et al. (2002) and organizational commitment following Meyer et al. (1989), and adapted employee engagement (Hughes et al. 2019) and performance (Elegance and Osagie) to the context of generative AI. We briefly discuss other theories in the context of generative AI.

Delone and McLean IS success model

The Information System (IS) success model developed by Delone and McLean is a popular framework for assessing the effectiveness of ISs in businesses. According to the model, IS success is a multifaceted concept comprising six dimensions: system quality, information quality, use, user satisfaction, individual impact, and organizational impact. The model has been widely used in IS research to assess the performance of a variety of systems, such as e-commerce, healthcare, and enterprise systems, and research suggests that it is a useful tool for determining what makes an IS successful and where it needs improvement. The model’s use and user satisfaction constructs partly overlap with user perception in our model, and its individual and organizational impact dimensions can be mapped onto employee engagement and performance. Although some studies (Ojo, 2017) have validated this model in health informatics, it has several limitations for AI implementation: dependence on self-reported data, the lack of an unambiguous framework, insufficient attention to the dynamic nature of generative AI, neglect of culture and power dynamics, and limited generalizability.

Through the lens of information system success theory, Kulkarni et al. (2023) examined the success factors that affect the adoption of generative AI (GAI) in wealth management services. Data were collected using an online structured questionnaire and analyzed using structural equation modeling. The results show that information quality had no effect on GAI adoption, whereas system and service quality had a significant effect on net benefits. Perceived risk had a substantial moderating effect on the relationship between GAI adoption and net benefits, and the adoption of GAI had a major effect on the information system’s net benefits, in line with the net-benefits dimension of the updated IS success model (Delone and McLean, 2003).

Theory of planned behavior

The theory of planned behavior (Ajzen, 1991) states that human behavior is guided by behavioral beliefs (attitudes toward the behavior), normative beliefs (subjective norms), and control beliefs (perceived behavioral control). The more favorable the attitude and subjective norm and the greater the perceived control, the stronger the person’s intention to perform the behavior in question, here, the use of generative AI. In the proposed generative AI model, user perception shapes the attitude toward, and the intention to perform, the behavior (using generative AI), which in turn drives actual behavior. Given sufficient actual control over a behavior, people are expected to act on their intentions when the opportunity arises; intention is thus assumed to be the immediate antecedent of behavior (Ajzen, 2011). The theory offers general insights into the foundations of individual attitudes that apply to a variety of research contexts beyond generative AI. Ivanov et al. (2024) investigated how the perceived benefits, strengths, weaknesses, and risks of GenAI tools relate to the TPB elements. The study also examined the structural relationships between the TPB variables and the intention to use GenAI tools in higher education, as well as the effect of this intention on actual implementation. Data were collected from lecturers and students at higher education institutions. The findings show that although lecturers and students may hold different opinions about the benefits and drawbacks of GenAI tools, the tools’ perceived strengths and advantages significantly and favorably affect their attitudes, subjective norms, and perceived behavioral control.
The core TPB variables have a positive and significant effect on lecturers’ and students’ intentions to use GenAI tools, which in turn positively and significantly affect tool adoption. In a similar vein, our study advances theory by outlining the factors that influence the adoption of GenAI technologies in the IT sector, and it offers managers and policy makers options for formulating rules and regulations that maximize the advantages of these tools while reducing their disadvantages.

Diffusion innovation theory

According to Moore and Benbasat (1991), this theory focuses on innovation-specific factors that influence users’ behavior in adopting new technologies. An innovation spreads over time through particular communication channels. The rate of adoption depends on the adopters: when an adopter is influential within a social network, others develop a desire to adopt the innovation. Diffusion theory states that as successive groups of users adopt a new technology, its share eventually reaches a saturation level. Generative AI adoption, by contrast, began only recently, and it is not yet possible to predict when it will reach saturation. In the context of generative AI, adopters correspond to users. Ghimire and Edwards (2024) analyzed the fundamental elements affecting educators’ opinions and acceptance of large language models (LLMs) and GenAI. The authors surveyed educators and analyzed the results using the innovation diffusion theory (IDT) and technology acceptance model (TAM) frameworks. They reported a significant positive correlation between educators’ perceptions of the usefulness of GenAI tools and their acceptance, which emphasizes the importance of providing concrete advantages. Perceived ease of use also significantly influenced acceptance, although to a lesser degree. Their results further show differences in knowledge and acceptance of these tools across adopter categories, indicating that broader integration of AI requires targeted strategies that address the unique needs and concerns of each category.
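One common way to formalize the saturation idea (an illustrative assumption; the cited works do not commit to a functional form) is the logistic S-curve for cumulative adoption N(t), with saturation level K, adoption rate r, and inflection point t_0:

```latex
N(t) = \frac{K}{1 + e^{-r\,(t - t_0)}}, \qquad \lim_{t \to \infty} N(t) = K
```

Predicting saturation requires estimates of K and t_0, which the short adoption history of generative AI does not yet support.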

Theory of reasoned action

According to the theory of reasoned action (Ajzen and Fishbein, 1980), an individual’s attitude toward performing a behavior predicts that behavior through the intervening effect of behavioral intention. The theory has four constructs: beliefs, attitudes, subjective norms, and intentions. It postulates that a person’s intention to perform a behavior (in this case, using a new technology such as generative AI) is influenced by social pressures, similar to the social influence construct of UTAUT2. These pressures combine with the individual’s own perceptions, which resemble user perception in our generative AI model, and with beliefs about what others think of them performing the behavior. Hee-Young et al. (2023) investigated the associations between credibility, usability, and user innovativeness of ChatGPT through the lens of the theory of reasoned action and innovation diffusion theory, using data collected from ChatGPT users. First, the ChatGPT factors (credibility and usability) positively affected the TRA variables (subjective norm and attitude). Second, the innovativeness of ChatGPT users positively affected subjective norms and attitudes. Third, the subjective norms and attitudes of ChatGPT users positively affected their intention to switch. According to these findings, users’ innovativeness and the usability and credibility of generative AI strongly affect their intention to switch from other portal services (such as Daum, Naver, and Google) to ChatGPT. To deliver services that meet expectations, generative AI systems such as ChatGPT should develop a range of services, for example by making functions more convenient for creative users.

Task–Technology Fit

The TTF model states that the degree to which task requirements and technology characteristics align predicts an individual’s technology use and performance (Goodhue and Thompson, 1995). The theory was developed to investigate the post-adoption facets of technology use. Task-technology fit refers to the relationship between an individual (a user of generative AI), the technology (hardware, data, and software tools), and the task, that is, the action the individual performs to achieve specific outcomes. The theory posits that performance gains from using a technology are contingent upon its functional alignment with users’ task requirements. In our generative AI model, we likewise examined the use of generative AI tools in relation to employee engagement and performance. Huy et al. (2024) developed an integrated research model to investigate the factors influencing ChatGPT use and its consequences for users’ intention to continue using the app and to recommend it to others. Surveying 671 ChatGPT users, the authors also examined the primary uses of ChatGPT and the moderating effect of curiosity on the relationships between the influencing factors and ChatGPT use. First, contrary to the authors’ expectations, ChatGPT use was unaffected by effort expectancy, social influence, and trust. Second, ChatGPT use directly affected word-of-mouth (WOM) and the intention to continue using ChatGPT. Third, the intention to continue using ChatGPT had a large effect on word-of-mouth. Finally, curiosity moderated only three pathways: those from hedonic motivation, facilitating conditions, and performance expectancy. In our study, by contrast, user perception and trust positively affected the outcome variables of employee engagement and performance.

According to social cognitive theory, an individual may develop the intention to use generative AI by observing other users.

Unified theory of acceptance and use of technology (UTAUT)

Each of the models described earlier has one or more limitations, which creates a need for a model that fits reasonably well and is broadly acceptable. Past technological inventions, such as big data, artificial intelligence, robotics, and ICT, have dramatically changed the way organizations conduct business. Organizations continuously seek to raise employee engagement, which in turn enhances employee performance through the use of technologies. The consequences of technology adoption for organizational performance, and the gap between technology acceptance and utilization, have been major research areas in the recent past. Theories such as the TPB and TRA provide a psychological perspective on human behavior, whereas diffusion of innovation theory focuses on the innovation-specific elements that influence users’ adoption of new technologies. The theories’ varying points of view are reflected in the kinds of variables each model includes. Consequently, a cohesive approach is required that incorporates variables representing various viewpoints and academic fields and broadens the theory’s application to various situations (Venkatesh et al. 2003).

To provide a holistic understanding of technology acceptance, Venkatesh et al. (2003) integrated important constructs predicting behavioral intention and use into the unified theory of acceptance and use of technology. UTAUT examines how performance expectancy, effort expectancy, social influence, and facilitating conditions shape technology acceptance, and it extends the technology acceptance model. The model states that the actual use of technology is determined by behavioral intention. Technology adoption in general, including the adoption of generative AI, can therefore be expected to depend on these four constructs. Performance expectancy is “the degree to which an individual believes that using the new technology will help him/her attain gains in job performance”; this construct builds on the TAM and other models. Effort expectancy is “the degree of ease associated with the new technology” and is similar to perceived ease of use in the TAM. Social influence is “the degree to which an individual perceives that important others believe he/she should use the new technology”; this construct is similar to subjective norms in the TRA, TPB, and IDT. Facilitating conditions are “the degree to which an individual believes that an organizational and technical infrastructure exists to support the use of the system” (Venkatesh et al. 2003); this construct is drawn from the TPB and IDT.

Wang and Zhang (2023) investigated the characteristics of Generation Z that encourage its adoption of GenAI-assisted design. The study model combined trait curiosity, the technology readiness index, and the extended unified theory of acceptance and use of technology (UTAUT2). Structural equation modeling was applied to data from 326 participants in southeastern mainland China. The results showed that (1) price value, hedonic motivation, and effort expectancy from UTAUT2 all positively affect the intention to use GenAI, whereas performance expectancy has no statistically significant effect, and (2) optimism and creativity significantly affect price value, hedonic motivation, performance expectancy, and effort expectancy.

Grassini et al. (2024) investigated the factors that influence university students in Norway to adopt and use ChatGPT. The study’s theoretical approach builds on a previously validated model anchored in the extended Unified Theory of Acceptance and Use of Technology (UTAUT2). The proposed model combines six constructs to explain ChatGPT’s actual usage patterns and behavioral intentions in higher education. The structural equation modeling results showed that performance expectancy had the greatest influence on behavioral intention, followed by habit. The study adds to knowledge about the variables affecting college students’ use of generative AI technologies and contributes to a deeper understanding of how educational contexts can effectively integrate tools such as ChatGPT to support instructors’ teaching and students’ learning. As described earlier, the performance expectancy and effort expectancy constructs were modeled on the TAM and other theories. These results are similar to the outcomes of our model.

Conclusions

Generative AI tools hold great potential for transforming office settings by enhancing employee engagement and employee performance. However, trust and user perception play crucial roles in the adoption and use of these AI tools. This study aimed to identify the factors that influence the adoption intentions of information technology sector employees. Convenience sampling was used to target information technology employees who were tech-savvy and frequently used generative AI tools for their routine job activities. The study incorporated nine constructs: ease of use and usefulness were modeled as the higher-order construct user perception, and vigor and dedication as the higher-order construct employee engagement. The models with lower-order and higher-order constructs were both assessed for model fit, and both showed excellent fit; therefore, the structural model was assessed. Path analysis and hypothesis testing were carried out through SEM in IBM AMOS version 28. The results revealed a strong influence of user perception on trust and organizational commitment; organizational commitment significantly affected employee engagement, which in turn positively affected employee performance among information technology employees. The proposed model, which integrates TRI, SOR, and TAM, offers valuable insights for organizations and human resource management programs integrating generative AI tools. However, adoption intentions vary across employees, and user perceptions need to be considered for the effective implementation and adoption of generative AI tools.

Theoretical implications

This empirical research contributes to existing theories and research on the adoption and use of generative AI tools for good human resource practices. It adds to the extant literature on generative AI tools and their acceptance by employees and organizations, and it extends the scope of the TAM and TRI frameworks by applying them to human resource practices in the IT industry. Employee engagement and performance are positively and significantly influenced by user perception, trust, and organizational commitment. The model developed can be applied to HR practices such as talent acquisition, hiring, and training.

The study offers practical insights into several HR functions and for the stakeholders involved in deploying generative AI tools to automate repetitive assignments. Its outcomes can also help organizations understand and adopt generative AI tools. Management should provide clear and concise information on the benefits of adopting generative AI tools, such as quick responses and accuracy in employees’ routine activities. Employees should receive guidance on the optimal use of generative AI tools so that they become familiar with the tools and their features and can contribute more effectively. Additionally, this study provides insights that can help organizations maximize profits and successfully integrate generative AI tools in various contexts.

Ease of use and usefulness significantly influence the adoption and use of generative AI tools. Developers should prioritize task effectiveness and improve the tools’ ability to understand employee requirements and deliver accurate results. Integrating generative AI tools with other technologies in the office environment can ease routine and repetitive jobs and enhance engagement among tech-savvy employees in the IT sector.

Practical implications

Specifically, generative AI opens up new applications, such as personalized education and services, digital art, and realistic virtual assistants, that were previously infeasible or impractical to automate. In practical terms, generative AI will greatly affect interdisciplinary research as well as the information technology industry. To facilitate swift adoption, the principles of generative AI are conceptualized, explicated, and defined from model-, system-, and application-level perspectives as well as a socio-technical perspective. Finally, our research offers future scholars a significant research agenda for studying generative AI from different theoretical perspectives while incorporating the concepts of these theories. The results of our study should be interpreted in light of several limitations. Our research was geographically limited to Hyderabad, an Indian metropolitan city, which reduces the applicability of our findings to regions with different cultural and educational backgrounds.

Furthermore, our findings may quickly become outdated because of the rapid evolution of AI technology. They will need to be updated as generative AI tools release new versions that could make them even more useful for employees’ tasks. However, this restriction applies to all research in the rapidly developing field of artificial intelligence. It is therefore critical to understand how the field and its tools, including attitudes and applications, change over time.

Limitations and future directions

This study has certain limitations that future researchers can address. The sample consists of information technology employees from Hyderabad, an Indian metropolitan city, which may limit the applicability of the findings, although the sample size was large. Self-report questionnaires may not reveal respondents’ true perspectives, raising the potential for inaccuracies; the authors addressed this concern by interpreting the data cautiously. Future studies can use other theoretical frameworks, integrating the TAM with diffusion of innovation, information system service quality, and other technology adoption models, to further understand the factors that influence the adoption of generative AI tools. The study used convenience sampling to target a population with the required characteristics, namely employees who use generative AI tools in their organizational settings. This study paves the way for future research to explore the presented relationships in different cultural and organizational settings. The authors recommend longitudinal studies to assess how these relationships evolve over time. Finally, further studies are suggested to examine gender differences and to incorporate moderating variables such as organizational commitment and human resource practices into the current model. Because this field is still at a nascent stage, more exploratory studies are needed to assess how the constructs of our model, such as organizational commitment and employee engagement, influence closely related HRM outcomes such as psychological well-being and job satisfaction. It is important to remember, however, that by international standards the population of reference (i.e., information technology employees working in Hyderabad) is very large.
We purposely excluded other factors that might have an impact and concentrated only on the constructs in our model, leaving it to future researchers to integrate UTAUT2 with generative AI research. The authors suggest carrying out similar studies that integrate other theoretical models with generative AI.

Data availability

The datasets generated and analyzed during the current study are available from the Figshare online data repository at https://figshare.com/s/8b383f7c09dfa0c8ea80.

References

Acemoglu D, Johnson S, Viswanath K (2023) Why the power of technology rarely goes to the people. MIT Sloan Manag. Rev. 64(4):1–4

Ajzen I (1991) The theory of planned behavior. Organ. Behav. Hum. Decis. Process. 50(2):179–211

Ajzen I (2011) The theory of planned behaviour: Reactions and reflections. Psychol. Health 26(9):1113–1127

Ajzen I, Fishbein M (1980) Understanding attitudes and predicting social behavior. Prentice-Hall, Englewood Cliffs, NJ

Allen NJ, Meyer JP (1993) Organizational commitment: evidence of career stage effects? J. Bus. Res. 26(1):49–61

Anderson JC, Gerbing DW (1984) The effect of sampling error on convergence, improper solutions, and goodness-of-fit indices for maximum likelihood confirmatory factor analysis. Psychometrika 49:155–173

Assefa S (2022) Data Management Strategy for AI Deployment in Ethiopian Healthcare System. In Pan African Conference on Artificial Intelligence (pp. 50–66). Cham: Springer Nature Switzerland

Baabdullah AM (2024) The precursors of AI adoption in business: Toward an efficient decision-making and functional performance. Int. J. Inf. Manag. 75:102745

Babina T, Fedyk A, He A, Hodson J (2024) Artificial intelligence, firm growth, and product innovation. J. Financ. Econ. 151:103745

Bankins S, Ocampo AC, Marrone M, Restubog SLD, Woo SE (2024) A multilevel review of artificial intelligence in organizations: Implications for organizational behavior research and practice. J. Organ. Behav. 45(2):159–182

Bentler PM (1990) Comparative fit indices in structural models. Psychol. Bull. 107(2):238

Braganza A, Chen W, Canhoto A, Sap S (2021) Productive employment and decent work: The impact of AI adoption on psychological contracts, job engagement and employee trust. J. Bus. Res. 131:485–494

Byrne BM (2013) Structural equation modeling with Mplus: Basic concepts, applications, and programming. Routledge

Candrian C, Scherer A (2022) Rise of the machines: Delegating decisions to autonomous AI. Comput. Hum. Behav. 134:107308

Chen Q, Lu Y, Gong Y, Xiong J (2023) Can AI chatbots help retain customers? Impact of AI service quality on customer loyalty. Internet Res. 33(6):2205–2243

Cheng X, Zhang X, Cohen J, Mou J (2022) Human vs. AI: Understanding the impact of anthropomorphism on consumer response to chatbots from the perspective of trust and relationship norms. Inf. Process. Manag. 59(3):102940

Cheng X, Qiao L, Yang B, Han R (2023) To trust or not to trust: a qualitative study of older adults’ online communication during the COVID-19 pandemic. Electron. Commer. Res., 1–30

Chui M, Hazan E, Roberts R, Singla A, Smaje K (2023) The economic potential of generative AI: The next productivity frontier. McKinsey & Company. https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/the-economic-potential-of-Generative-ai-the-next-productivity-frontier#introduction

Cochran WG (1977) Sampling techniques. John Wiley & Sons

Czarnitzki D, Fernández GP, Rammer C (2023) Artificial intelligence and firm-level productivity. J. Econ. Behav. Organ. 211:188–205

Davis FD (1987) User acceptance of information systems: the technology acceptance model (TAM)

Davis FD (1989) Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 13(3):319–340

DeLone WH, McLean ER (2003) The DeLone and McLean model of information systems success: a ten-year update. J. Manag. Inf. Syst. 19(4):9–30

Dutta D, Mishra SK, Tyagi D (2023) Augmented employee voice and employee engagement using artificial intelligence-enabled chatbots: a field study. Int. J. Hum. Resour. Manag. 34(12):2451–2480

Dwivedi YK, Pandey N, Currie W, Micu A (2024) Leveraging ChatGPT and other Generative artificial intelligence (AI)-based applications in the hospitality and tourism industry: practices, challenges and research agenda. Int. J. Contemp. Hosp. Manag. 36(1):1–12

Elegunde AF, Osagie R (2020) Artificial intelligence adoption and employee performance in the Nigerian banking industry. Int. J. Manag. Adm. 4(8):189–205

Faul F, Erdfelder E, Lang AG, Buchner A (2007) G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39(2):175–191

Flavián C, Pérez-Rueda A, Belanche D, Casaló LV (2022) Intention to use analytical artificial intelligence (AI) in services–the effect of technology readiness and awareness. J. Serv. Manag. 33(2):293–320

Fornell C, Larcker DF (1981) Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 18(1):39–50

Foroughi B, Senali MG, Iranmanesh M, Khanfar A, Ghobakhloo M, Annamalai N, Naghmeh-Abbaspour B (2023) Determinants of intention to use ChatGPT for educational purposes: Findings from PLS-SEM and fsQCA. Int. J. Hum.–Comput. Interact., 1–20

Frank J, Herbert F, Ricker J, Schönherr L, Eisenhofer T, Fischer A, ..., Holz T (2024) A representative study on human detection of artificially generated media across countries. In 2024 IEEE Symposium on Security and Privacy (SP) (pp. 55–73). IEEE

Gatzioufa P, Saprikis V (2022) A literature review on users’ behavioral intention toward chatbots’ adoption. Appl. Comput. Inform., (ahead-of-print)

Ghazali E, Mutum DS, Lun NK (2024) Expectations and beyond: The nexus of AI instrumentality and brand credibility in voice assistant retention using extended expectation‐confirmation model. J. Consum. Behav. 23(2):655–675

Ghimire A, Edwards J (2024) Generative AI Adoption in Classroom in Context of Technology Acceptance Model (TAM) and the Innovation Diffusion Theory (IDT). https://doi.org/10.48550/arXiv.2406.15360

Gill SS, Xu M, Patros P, Wu H, Kaur R, Kaur K, Buyya R (2024) Transformative effects of ChatGPT on modern education: Emerging Era of AI Chatbots. Internet Things Cyber-Phys. Syst. 4:19–23

Gkinko L, Elbanna A (2023) The appropriation of conversational AI in the workplace: A taxonomy of AI chatbot users. Int. J. Inf. Manag. 69:102568

Glikson E, Woolley AW (2020) Human trust in artificial intelligence: Review of empirical research. Acad. Manag. Ann. 14(2):627–660

Goodhue DL, Thompson RL (1995) Task-technology fit and individual performance. MIS Q. 19(2):213–236

Goulet-Pelletier JC, Cousineau D (2018) A review of effect sizes and their confidence intervals, Part I: The Cohen’s d family. Quant. Methods Psychol. 14(4):242–265

Grassini S, Aasen ML, Møgelvang A (2024) Understanding University Students’ Acceptance of ChatGPT: Insights from the UTAUT2 Model. Appl. Artif. Intell. 38(1):2371168

Gupta S, Mathur N, Narang D (2023) E-leadership and virtual communication adoption by educators: An UTAUT3 model perspective. Glob. Knowl., Mem. Commun. 72(8/9):902–919

Hair JF, Black WC, Babin BJ, Anderson RE (2010) Multivariate data analysis, 7th edn. Pearson Prentice Hall, New York

Hair JF, Risher JJ, Sarstedt M, Ringle CM (2019) When to use and how to report the results of PLS-SEM. Eur. Bus. Rev. 31(1):2–24

Cho HY, Yang HC, Hwang BJ (2023) The Effect of ChatGPT Factors & Innovativeness on Switching Intention: Using Theory of Reasoned Action (TRA). J. Distrib. Sci. 21(8):83–96

Henseler J, Ringle CM, Sarstedt M (2015) A new criterion for assessing discriminant validity in variance-based structural equation modeling. J. Acad. Mark. Sci. 43:115–135

Hou FH, Wang CY, Shu JJ (2021) How Demographic Factors Effect Organizational Commitment for Artificial intelligence business Employees

Hu LT, Bentler PM (1998) Fit indices in covariance structure modeling: Sensitivity to underparameterized model misspecification. Psychol. Methods 3(4):424

Hughes C, Robert L, Frady K, Arroyos A (2019) Artificial intelligence, employee engagement, fairness, and job outcomes. In Managing technology and middle- and low-skilled employees (pp. 61–68). Emerald Publishing Limited

Hughes JM (2019) Technology Integration in Special Education: A Case Study of Teacher Attitudes and Perceptions (Doctoral dissertation, Grand Canyon University)

Huy LV, Nguyen HT, Vo-Thanh T, Thinh NHT, Thi Thu Dung T (2024) Generative AI, why, how, and outcomes: a user adoption study. AIS Trans. Hum.-Comput. Interact. 16(1):1–27

Hwang GJ, Chang CY (2023) A review of opportunities and challenges of chatbots in education. Interact. Learn. Environ. 31(7):4099–4112

Ivanov S, Soliman M, Tuomi A, Alkathiri NA, Al-Alawi AN (2024) Drivers of generative AI adoption in higher education through the lens of the Theory of Planned Behavior. Technol. Soc. 77:102521

Javaid M, Haleem A, Singh RP (2023) ChatGPT for healthcare services: An emerging stage for an innovative perspective. BenchCouncil Trans. Benchmarks, Stand. Eval. 3(1):100105

James G (2023) My Educator. SEM Online Course. https://statwiki.gaskination.com/index.php?title=Main_Page

Jia X, Hou Y (2024) Architecting the future: exploring the synergy of AI-driven sustainable HRM, conscientiousness, and employee engagement. Discov. Sustain. 5(1):1–17

Kasneci E, Seßler K, Küchemann S, Bannert M, Dementieva D, Fischer F, Kasneci G (2023) ChatGPT for good? On opportunities and challenges of large language models for education. Learn. Individ. Differ. 103:102274

Kline P (2015) A handbook of test construction (psychology revivals): introduction to psychometric design. Routledge

Korngiebel DM, Mooney SD (2021) Considering the possibilities and pitfalls of Generative Pretrained Transformer 3 (GPT-3) in healthcare delivery. NPJ Digit. Med. 4(1):93

Kuhail MA, Al Katheeri H, Negreiros J, Seffah A, Alfandi O (2023) Engaging students with a chatbot-based academic advising system. Int. J. Hum.–Comput. Interact. 39(10):2115–2141

Kulkarni MS, Pramod D, Patil KP (2023) Assessing the net benefits of generative artificial intelligence systems for wealth management service innovation: A validation of the DeLone and McLean model of information system success. In International Working Conference on Transfer and Diffusion of IT (pp. 56–67). Cham: Springer Nature Switzerland

Kyriazos TA (2018) Applied psychometrics: sample size and sample power considerations in factor analysis (EFA, CFA) and SEM in general. Psychology 9(08):2207

Lai YL, Lee J (2020) Integration of technology readiness index (TRI) into the technology acceptance model (TAM) for explaining behavior in the adoption of BIM. Asian Educ. Stud. 5(2):10

Marikyan D, Papagiannidis S, Rana OF, Ranjan R, Morgan G (2022) “Alexa, let’s talk about my productivity”: The impact of digital assistants on work productivity. J. Bus. Res. 142:572–584

McKnight DH, Choudhury V, Kacmar C (2002) The impact of initial consumer trust on intentions to transact with a web site: a trust building model. J. Strateg. Inf. Syst. 11(3-4):297–323

Mehrabian A, Russell JA (1973) A measure of arousal seeking tendency. Environ. Behav. 5(3):315

Meyer JP, Paunonen SV, Gellatly IR, Goffin RD, Jackson DN (1989) Organizational commitment and job performance: It is the nature of the commitment that counts. J. Appl. Psychol. 74(1):152

Mijwil M, Aljanabi M, Ali AH (2023) ChatGPT: Exploring the role of cybersecurity in the protection of medical information. Mesop. J. Cybersecur. 2023:18–21

Mishra GP, Mishra KL, Pasumarti SS, Mukherjee D, Pande A, Panda A (2024) Spurring organization performance through artificial intelligence and employee engagement: an empirical study. In The Role of HR in the Transforming Workplace. Productivity Press, 60–78

Moore GC, Benbasat I (1991) Development of an instrument to measure the perceptions of adopting an information technology innovation. Inf. Syst. Res. 2(3):192–222

Myin MT, Watchravesringkan K (2024) Investigating consumers’ adoption of AI chatbots for apparel shopping. J. Consum. Mark.

Nazaretsky T, Ariely M, Cukurova M, Alexandron G (2022) Teachers’ trust in AI‐powered educational technology and a professional development program to improve it. Br. J. Educ. Technol. 53(4):914–931

Nikolic S, Daniel S, Haque R, Belkina M, Hassan GM, Grundy S, Sandison C (2023) ChatGPT versus engineering education assessment: a multidisciplinary and multi-institutional benchmarking and analysis of this Generative artificial intelligence tool to investigate assessment integrity. Eur. J. Eng. Educ. 48(4):559–614

O’Connor AM, Tsafnat G, Thomas J, Glasziou P, Gilbert SB, Hutton B (2019) A question of trust: can we build an evidence base to gain trust in systematic review automation technologies? Syst. Rev. 8:1–8

Ojo AI (2017) Validation of the DeLone and McLean information systems success model. Healthc. Inform. Res. 23(1):60–66

Onal S, Kulavuz-Onal D (2024) A cross-disciplinary examination of the instructional uses of ChatGPT in higher education. J. Educ. Technol. Syst. 52(3):301–324

Parasuraman A (2000) Technology Readiness Index (TRI) a multiple-item scale to measure readiness to embrace new technologies. J. Serv. Res. 2(4):307–320

Parasuraman A, Colby CL (2015) An updated and streamlined technology readiness index: TRI 2.0. J. Serv. Res. 18(1):59–74

Pearce H, Yanamandra K, Gupta N, Karri R (2022) Flaw3d: A trojan-based cyber attack on the physical outcomes of additive manufacturing. IEEE/ASME Trans. Mechatron. 27(6):5361–5370

Pereira V, Hadjielias E, Christofi M, Vrontis D (2023) A systematic literature review on the impact of artificial intelligence on workplace outcomes: A multiprocess perspective. Hum. Resour. Manag. Rev. 33(1):100857

Perry N, Spang B, Eskandarian S, Boneh D (2022) Strong anonymity for mesh messaging. arXiv preprint arXiv:2207.04145. https://doi.org/10.48550/arXiv.2207.04145

Picazo Rodríguez B, Verdú-Jover AJ, Estrada-Cruz M, Gomez-Gras JM (2023) Does digital transformation increase firms’ productivity perception? The role of technostress and work engagement. Eur. J. Manag. Bus. Econ.

Pillai R, Sivathanu B, Metri B, Kaushik N (2023) Students’ adoption of AI-based teacher-bots (T-bots) for learning in higher education. Inf. Technol. People, (ahead-of-print)

Pradhan RK, Jena LK (2017) Employee performance at workplace: Conceptual model and empirical validation. Bus. Perspect. Res. 5(1):69–85

Prentice C, Wong IA, Lin ZC (2023) Artificial intelligence as a boundary-crossing object for employee engagement and performance. J. Retail. Consum. Serv. 73:103376