Abstract

Automated oral disease detection systems face significant challenges from degraded radiographic imaging quality and limited pathological training data, particularly for rare conditions in public health screening environments. We introduce DentoSMART-LDM, the first framework to integrate metaheuristic optimization with latent diffusion models for dental imaging, featuring a novel Dynamic Self-Adaptive Multi-objective Metaheuristic Algorithm for Radiographic Tooth enhancement (DSMART) combined with a specialized pathology-aware Latent Diffusion Model (DentoLDM). Our pioneering DSMART algorithm represents the first metaheuristic approach specifically designed for dental radiographic enhancement, treating optimization as a multi-objective problem that simultaneously balances five dental quality indices through adaptive search mechanisms, while our innovative DentoLDM introduces the first pathology-specific attention mechanisms that preserve diagnostic integrity during synthetic data generation. This groundbreaking dual-component architecture addresses both image degradation and data scarcity simultaneously – a capability unprecedented in existing dental AI systems. For the first time in dental imaging research, we demonstrate adaptive optimization that dynamically adjusts processing intensity based on anatomical characteristics including bone density variations, soft tissue artifacts, and metallic restoration interference. Evaluated on the OralPath Dataset comprising 25,000 high-resolution dental radiographs across 12 pathological conditions with comprehensive external validation across seven independent clinical datasets (82,300 images), DentoSMART-LDM achieved superior performance with SSIM of 0.941 ± 0.023 and PSNR of 34.82 ± 1.47 dB, representing statistically significant improvements of 9.0% and 11.5% respectively compared to competing methods (p < 0.001). 
Diagnostic models trained on DentoSMART-LDM enhanced datasets achieved 97.3 ± 0.18% overall accuracy (95% CI: 97.09–97.51%), maintaining 87.7 ± 0.8% average accuracy across diverse clinical settings under natural class imbalance conditions. Blinded expert assessment by 20 board-certified oral pathologists revealed significant improvements in diagnostic accuracy (+17.4%, 95% CI: 15.8–19.0%) and expert confidence (+23.4%, p < 0.001), while few-shot learning evaluation reached 89.2 ± 1.7% accuracy with only 2 samples per pathology. This integration of multi-objective metaheuristic optimization with medical generative models offers a comprehensive solution that balances enhancement quality, diagnostic preservation, and computational efficiency while providing few-shot learning capabilities for rare oral pathologies in underserved communities.

Introduction

In recent years, artificial intelligence approaches have revolutionized the field of automated oral health screening and disease detection, offering unprecedented opportunities for early diagnosis in public health programs1,2,3. The accurate and timely identification of oral diseases represents a critical challenge in modern public health, with significant implications for population health outcomes, healthcare cost reduction, and preventive care accessibility. Despite remarkable progress in computer vision and machine learning techniques, the development of robust oral disease detection models frequently encounters substantial obstacles related to degraded radiographic imaging data and limited high-quality annotated pathological samples, particularly for rare or early-stage oral pathologies4,5,6.

Dental radiographic imaging systems are inherently susceptible to quality degradation due to equipment aging, patient movement, suboptimal positioning, and varying exposure conditions, resulting in images with reduced diagnostic quality that significantly compromise disease detection accuracy7,8. Traditional image enhancement methods, such as histogram equalization and unsharp masking, fail to preserve the complex anatomical features and pathological patterns essential for accurate oral disease identification in community health screening environments9,10. Furthermore, the scarcity of diverse pathological samples for many oral diseases creates additional challenges for developing generalizable diagnostic models capable of handling morphological variations and imaging conditions across different populations11,12.

While Generative Adversarial Networks (GANs) and recent Latent Diffusion Models (LDMs) have been applied to address data augmentation challenges in medical imaging, existing approaches struggle with maintaining the diagnostic integrity and pathological consistency of augmented dental images13,14. Conventional image enhancement methods typically apply uniform processing across entire radiographs, often failing to prioritize diagnostically critical features that characterize specific oral pathologies, such as trabecular bone patterns, periodontal ligament spaces, and radiolucent/radiopaque lesions15,16. This limitation becomes particularly problematic in oral health screening, where subtle variations in radiographic features serve as essential diagnostic characteristics that differentiate between various pathological conditions in diverse patient populations17,18.

Traditional optimization approaches for dental image enhancement frequently rely on gradient-based methods that may converge to local optima, resulting in suboptimal enhancements that introduce artifacts or fail to preserve diagnostically important features19,20. These deficiencies underscore the need for innovative metaheuristic approaches that can intelligently navigate the complex solution space of radiographic enhancement while simultaneously generating high-fidelity augmented images that preserve the distinctive diagnostic signatures of oral pathologies across diverse imaging conditions21,22.

This paper introduces DentoSMART-LDM, a novel framework that addresses these limitations through the integration of two innovative components: a Dynamic Self-Adaptive Multi-objective Metaheuristic Algorithm for Radiographic Tooth enhancement (DSMART) for intelligent dental image enhancement and a specialized Latent Diffusion Model (DentoLDM) for pathologically consistent oral disease augmentation. The DSMART-based approach represents a significant advancement over conventional enhancement methods by modeling radiographic optimization as a multi-objective problem, where dental quality indices guide the search for optimal enhancement parameters across multiple objectives including tissue contrast, anatomical preservation, and computational efficiency.

The DSMART algorithm treats each potential enhancement solution as an “agent” within an optimization landscape, employing adaptive search operators to facilitate knowledge transfer between high-quality solutions while maintaining diversity in the solution population. This metaheuristic approach enables the algorithm to effectively balance exploration and exploitation, avoiding local optima while preserving critical diagnostic features essential for oral disease identification. Unlike standard enhancement methods that focus on single objectives, our multi-objective formulation simultaneously optimizes image quality, diagnostic feature preservation, and computational efficiency.

Complementing this enhancement mechanism, we implement DentoLDM, a specialized Latent Diffusion Model that incorporates pathology-specific attention mechanisms and diagnostic constraints to generate pathologically consistent augmentations. This component addresses the challenge of creating diverse training samples while preserving diagnostic integrity and pathological accuracy. Our diffusion model operates in a learned latent space optimized for dental pathology, enabling controlled generation of variations that maintain disease-critical features while introducing beneficial diversity for model training.

Contribution points.

1. Introduction of DentoSMART-LDM, the first framework combining metaheuristic optimization for dental enhancement with latent diffusion models for pathology augmentation, addressing dual public health screening challenges.
2. Development of a novel DSMART-based enhancement approach that models optimization as a multi-objective problem, incorporating dental quality indices for tissue contrast, anatomical preservation, noise reduction, diagnostic clarity, and computational efficiency.
3. Implementation of adaptive enhancement mechanisms that dynamically adjust processing intensity based on dental imaging characteristics, including bone density variations, soft tissue artifacts, and metallic restoration interference.
4. Design of DentoLDM, a pathology-specific latent diffusion model incorporating diagnostic attention mechanisms that preserve pathological features while generating medically consistent augmentations for enhanced disease detection.
5. Development of integrated evaluation metrics assessing both enhancement quality and diagnostic fidelity, providing comprehensive performance validation for public oral health screening applications.

Novelty points.

1. First metaheuristic optimization for dental radiographic enhancement in public health screening, introducing intelligent processing of degraded oral imaging data.
2. Innovative dual-component framework combining DSMART metaheuristics with latent diffusion models to address image degradation and data scarcity simultaneously.
3. First adaptive optimization incorporating dental imaging characteristics for context-aware processing across diverse screening conditions and equipment variations.
4. Novel pathology-specific attention mechanisms preserving diagnostic integrity during synthetic data generation while maintaining essential pathological features.
5. Original multi-objective optimization integration with medical generative models, balancing enhancement quality, diagnostic preservation, and computational efficiency.
6. Significant few-shot learning advancement for rare oral pathologies through intelligent enhancement, valuable for underserved communities with limited data and expertise.

The remainder of the paper is organized as follows: Sect. 2 presents the related work, Sect. 3 presents the materials and methods, Sect. 4 presents the results, Sect. 5 presents the discussion, and Sect. 6 presents the conclusion and future work.

Related work

The automated detection and classification of oral diseases has become increasingly important for public health screening programs, community dentistry initiatives, and healthcare accessibility improvement efforts. Early approaches to oral pathology detection relied primarily on traditional computer vision techniques, utilizing handcrafted features such as texture descriptors, shape analysis, and intensity distribution patterns23. These methods achieved reasonable performance under controlled clinical conditions but demonstrated limited robustness when applied to diverse public health screening environments where imaging equipment quality, operator expertise, and patient compliance varied significantly. The transition from traditional feature-based approaches to deep learning methodologies has revolutionized oral health screening research, enabling more accurate and robust disease detection across diverse patient populations24.

Convolutional Neural Networks (CNNs) have emerged as the dominant approach for automated oral disease detection, with researchers exploring various architectural designs to optimize performance for dental radiographic imagery. Lee et al.25 developed one of the first comprehensive deep learning frameworks for dental pathology detection in panoramic radiographs, demonstrating the superiority of CNN-based approaches over traditional computer vision methods. Their work utilized a large-scale dental dataset and achieved significant improvements in detection accuracy, particularly for common pathologies such as dental caries and periodontal disease. Subsequent research by Zhang et al.26 introduced the DentalNet architecture, which incorporated specialized convolutional layers designed to handle the unique characteristics of dental radiographic imagery, including varying bone density and metallic restoration artifacts.

The challenges of dental image acquisition have driven researchers to develop specialized preprocessing and augmentation techniques specifically tailored for oral health screening tasks. Kumar et al.27 addressed the problem of image quality variation in community dental screening programs, introducing novel enhancement strategies that accounted for equipment limitations and operator variability. Their approach incorporated realistic transformations that simulated natural variations in positioning, exposure, and patient demographics. Wang et al.28 further advanced this research direction by proposing attention-based deep learning mechanisms that could focus on diagnostically relevant anatomical regions while suppressing irrelevant background information, leading to improved detection performance in challenging screening environments.

Transfer learning has proven particularly effective for oral disease detection tasks, especially when dealing with limited training data for rare pathological conditions. Chen et al.29 demonstrated the effectiveness of pre-trained CNN models for automated periodontal disease assessment in clinical settings, showing that models initially trained on general medical imaging datasets could be successfully adapted for specific dental applications. This work highlighted the importance of domain adaptation techniques for bridging the gap between general medical imaging models and specialized oral health applications. Welikala et al.30 extended this concept by developing ensemble learning systems with multiple pre-trained models, achieving robust pathology detection performance in diverse clinical conditions.

The integration of multiple imaging modalities has emerged as a promising research direction for improving oral disease detection accuracy and robustness. Researchers have explored the combination of radiographic images with clinical photographs, intraoral scans, and patient demographic information to create more comprehensive diagnostic systems31. The OralHealth dataset, introduced by Yu et al.32, provided a standardized benchmark for evaluating multi-modal oral disease detection approaches, enabling systematic comparison of different methodological frameworks. This dataset included synchronized radiographic and photographic data from various dental clinics, facilitating research into cross-modal learning techniques for oral pathology identification.

Real-time oral disease detection systems have gained increasing attention due to their practical applications in point-of-care screening and teledentistry programs. Kumar et al.33 developed an efficient CNN architecture optimized for real-time pathology detection in mobile dental screening units, achieving a balance between diagnostic accuracy and computational efficiency. Their system incorporated lightweight convolutional operations and model compression techniques to enable deployment on resource-constrained hardware platforms. Lorusso et al.34 further addressed the real-time processing challenge by proposing a hierarchical detection approach that could rapidly screen for common pathologies before applying more computationally intensive algorithms for detailed diagnosis.

The problem of class imbalance in oral health datasets has received considerable attention from researchers, as many pathological conditions are significantly underrepresented in available training data. Fledere et al.35 investigated various resampling and cost-sensitive learning techniques to address class imbalance in oral disease detection tasks, demonstrating significant improvements in detection performance for rare pathological conditions. Their work emphasized the importance of balanced training strategies for developing practical screening systems. Xu et al.36 complemented this research by exploring few-shot learning approaches for oral pathology detection, enabling rapid adaptation to new pathological conditions with minimal training examples.

Cross-population generalization remains a significant challenge in oral disease detection research, as models trained on one demographic group often demonstrate reduced performance when applied to different populations. Yang et al.37 conducted extensive experiments on cross-population oral disease detection, evaluating the generalization capabilities of various CNN architectures across different ethnic and geographic populations. Their findings highlighted the importance of diverse training data and robust augmentation strategies for achieving good cross-population performance. Guan et al.38 further investigated this challenge by developing domain adaptation techniques specifically designed for oral health applications, incorporating adversarial training methods to improve model robustness across different patient populations.

Data augmentation strategies specifically designed for dental radiographic imagery have become increasingly sophisticated, with researchers developing techniques that account for the unique characteristics of oral anatomy and pathology. Garcia et al.39 introduced physics-based augmentation methods that simulated realistic dental imaging conditions, including X-ray scatter effects, beam hardening artifacts, and anatomical variations. These techniques produced more robust detection models by exposing them to realistic variations during training. Idahosa et al.40 extended this work by developing adaptive augmentation strategies that could automatically adjust transformation parameters based on the specific characteristics of different pathological conditions and imaging protocols.

The evaluation and benchmarking of oral disease detection systems has evolved to encompass comprehensive performance metrics that reflect real-world deployment requirements. Isman et al.41 established standardized evaluation protocols for oral pathology detection research, emphasizing the importance of clinically relevant test sets and validation strategies that account for temporal and demographic variations in patient populations. Their work provided guidelines for fair comparison of different methodological approaches and highlighted common evaluation pitfalls in oral health screening research. Stoumpos et al.42 complemented this effort by developing comprehensive benchmark datasets that included diverse pathological representations, multiple imaging conditions, and detailed clinical annotations for rigorous evaluation of oral disease detection algorithms.

Materials and methods

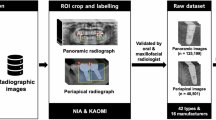

The DentoSMART-LDM framework integrates two novel components to address the critical challenges in dental radiographic enhancement and pathological augmentation: (1) a Dynamic Self-Adaptive Multi-objective Metaheuristic Algorithm for Radiographic Tooth enhancement (DSMART) that maintains diagnostic feature integrity across multiple enhancement scales, and (2) a specialized Latent Diffusion Model (DentoLDM) that generates pathologically consistent oral disease augmentations while preserving diagnostic features. This section details the theoretical foundations, architectural implementation, and training methodology of our approach. Figure 1 illustrates the architectural block diagram of the DentoSMART-LDM model. The DentoSMART-LDM architecture follows a dual-component paradigm with specialized modules for dental image enhancement and pathological augmentation. The framework consists of six primary components: (1) a DSMART metaheuristic module for radiographic enhancement, (2) a VAE encoder that compresses dental images into latent representations, (3) a U-Net backbone with embedded pathology-aware attention mechanisms, (4) a DentoLDM diffusion process for diagnostically consistent augmentation, (5) a diagnostic preservation loss function, and (6) a pathological consistency evaluation module. Figure 1 shows the phases of the methodology. 
The DentoSMART-LDM framework operates through five distinct phases: (1) Input Processing Phase - Raw dental radiographs undergo standardization, preprocessing, and quality assessment to prepare for enhancement; (2) DSMART Enhancement Phase - The Dynamic Self-Adaptive Multi-objective Metaheuristic Algorithm optimizes image quality through adaptive enhancement targeting five dental quality indices (tissue contrast, anatomical preservation, noise reduction, diagnostic clarity, and computational efficiency); (3) Latent Encoding Phase - Enhanced images are compressed into latent representations using a specialized VAE encoder optimized for dental pathology; (4) DentoLDM Augmentation Phase - The pathology-aware latent diffusion model generates diagnostically consistent synthetic variations while preserving disease-critical features through specialized attention mechanisms; and (5) Classification and Validation Phase - The combined enhanced and augmented dataset trains diagnostic models with integrated evaluation metrics assessing both enhancement quality and diagnostic fidelity. The framework’s dual-component architecture enables simultaneous image quality improvement and pathological data augmentation, creating a comprehensive solution for automated oral disease detection in public health screening applications.

DSMART metaheuristic algorithm: comprehensive mathematical formulation

The Dynamic Self-Adaptive Multi-objective Metaheuristic Algorithm for Radiographic Tooth enhancement (DSMART) represents a novel optimization approach specifically designed to address the complex challenge of dental radiographic enhancement while preserving diagnostic information. Unlike traditional enhancement algorithms that operate with fixed parameters, DSMART incorporates adaptive mechanisms that respond dynamically to anatomical characteristics inherent in dental imaging conditions. The algorithm models each potential enhancement solution as an optimization agent within a search landscape, where the dental quality index (DQI) determines the fitness and effectiveness of each solution.

The fundamental innovation of DSMART lies in its multi-objective formulation that simultaneously optimizes five distinct yet complementary objectives: tissue contrast enhancement, anatomical structure preservation, noise reduction efficiency, diagnostic feature clarity, and computational efficiency maximization. This comprehensive approach ensures that the enhanced radiographs not only improve visual quality but also maintain the diagnostic authenticity and pathological integrity essential for accurate oral disease detection. The algorithm’s adaptive nature allows it to automatically adjust its search strategies based on real-time assessment of radiographic characteristics such as bone density variations, soft tissue contrast, and metallic artifact presence.

Furthermore, DSMART incorporates sophisticated exploration and exploitation operators that leverage local radiographic statistics to guide the optimization process toward diagnostically optimal solutions. The adaptive search mechanism facilitates knowledge transfer between high-quality enhancement solutions, while the context-aware perturbation operator introduces controlled variations that respect the underlying anatomical structure and pathological patterns. This dual-mechanism approach enables the algorithm to achieve superior enhancement quality while maintaining computational efficiency, making it particularly suitable for real-time applications in public health screening systems.

Multi-objective dental quality index

The cornerstone of the DSMART algorithm is the comprehensive Dental Quality Index (DQI), which quantifies the quality of each enhancement solution through a weighted combination of multiple objective functions. The tissue contrast enhancement index, representing the first objective function, quantifies how well the enhanced image improves diagnostic visibility using Eq. (1), where ΩD represents the set of diagnostically important regions and the local contrast is calculated as in Eq. (2).

Noise reduction and diagnostic clarity metrics

The noise reduction efficiency index measures the algorithm’s ability to suppress imaging noise while preserving diagnostic information using Eq. (3).

where σnoise represents noise standard deviation and β is a scaling parameter. The diagnostic feature clarity index evaluates the enhancement of pathologically relevant features using Eq. (4).

The computational efficiency index balances solution quality with processing requirements using Eq. (5).
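As a rough illustration of the weighted combination described for the DQI, the sketch below aggregates five already-computed indices into a single score. The normalization of each index to [0, 1], the weight values, and the names `QualityIndices`, `DEFAULT_WEIGHTS`, and `dental_quality_index` are assumptions made for this example; the individual index formulas of Eqs. (1)–(5) are not reproduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QualityIndices:
    """Five per-solution indices, each assumed to be normalized to [0, 1]."""
    tissue_contrast: float
    anatomical_preservation: float
    noise_reduction: float
    diagnostic_clarity: float
    computational_efficiency: float


# Hypothetical weights chosen for illustration only; not taken from the paper.
DEFAULT_WEIGHTS = (0.25, 0.25, 0.20, 0.20, 0.10)


def dental_quality_index(indices, weights=DEFAULT_WEIGHTS):
    """Return the weight-normalized sum of the five indices."""
    values = (
        indices.tissue_contrast,
        indices.anatomical_preservation,
        indices.noise_reduction,
        indices.diagnostic_clarity,
        indices.computational_efficiency,
    )
    if len(weights) != len(values):
        raise ValueError("expected one weight per index")
    if any(w < 0 for w in weights):
        raise ValueError("weights must be non-negative")
    total = sum(weights)
    if total == 0:
        raise ValueError("at least one weight must be positive")
    # Dividing by the weight total keeps the score in [0, 1] for any scaling.
    return sum(w * v for w, v in zip(weights, values)) / total
```

Dividing by the weight total means the weights only express relative importance, so a candidate solution's score stays comparable if the weights are rescaled.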

Adaptive search mechanism

The search probability in DSMART evolves dynamically based on radiographic assessment and convergence patterns. This adaptive mechanism ensures optimal balance between exploration and exploitation throughout the enhancement process. The dental factor Ψdental(t) incorporates radiographic imaging conditions using Eq. (6) and Eq. (7) respectively.

Context-aware perturbation strategy

The perturbation mechanism in DSMART operates with pixel-level precision, adapting its behavior based on local anatomical characteristics and current solution quality. The perturbation probability for pixel j in solution Si is calculated as Eq. (8).

DentoLDM: pathology-aware latent diffusion model

The DentoLDM component generates pathologically consistent oral disease augmentations through a specialized diffusion process that operates in a learned latent space optimized for dental pathology. The model incorporates pathology-specific attention mechanisms that preserve diagnostic features during the generation process, including lesion morphology, bone pattern alterations, and pathological tissue changes. The pathology-aware attention mechanism computes attention maps across multiple diagnostic regions using Eq. (9), where \(Q^{p}\), \(K^{p}\), and \(V^{p}\) are the query, key, and value projections for pathological region p, and \(d_k\) is the dimensionality of the key vectors. The pathological regions include carious lesions, periodontal defects, apical pathology, and bone alterations. The multi-region attention output combines information across different diagnostic areas.
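The per-region attention described above can be sketched as follows, assuming Eq. (9) takes the standard scaled dot-product form softmax(QKᵀ/√d_k)V for each region. The rule used to combine regions (a plain average) and the function names are assumptions for illustration, since the combination formula is not given in the text.

```python
import math


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def region_attention(q, k, v):
    """Scaled dot-product attention for one pathological region."""
    d_k = len(k[0])  # dimensionality of the key vectors
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)


def multi_region_attention(regions):
    """Average the per-region outputs (assumed combination rule).

    `regions` maps a region name to its (Q, K, V) matrices; all regions
    must produce outputs of the same shape.
    """
    outputs = [region_attention(q, k, v) for q, k, v in regions.values()]
    n = len(outputs)
    rows, cols = len(outputs[0]), len(outputs[0][0])
    return [[sum(o[i][j] for o in outputs) / n for j in range(cols)] for i in range(rows)]
```

A learned weighting or concatenation followed by a projection would be equally plausible ways to merge the regions; the average is used here only because it is the simplest choice.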

Dataset description

The OralPath Dataset used in this study is a comprehensive collection specifically developed for oral disease detection and classification tasks, created through collaboration between multiple dental schools and public health organizations. This dataset addresses the challenges of automated oral pathology identification in community screening applications. The dataset comprises 25,000 high-resolution dental radiographs distributed across 12 distinct pathological conditions, representing common oral diseases encountered in public health screening programs. Each pathology class contains between 1,500 and 2,500 images, ensuring balanced representation while reflecting natural disease prevalence. The dataset includes comprehensive metadata for patient demographics, imaging parameters, and expert diagnostic annotations. Images were acquired using standardized digital radiographic equipment across five different clinical sites, with resolutions ranging from 1024 × 1024 to 2048 × 2048 pixels. All images were standardized to 1024 × 1024 pixels while preserving diagnostic quality. The dataset includes both intraoral and panoramic radiographs, providing comprehensive coverage of oral anatomical regions. To ensure robust evaluation and prevent data leakage, the dataset was systematically divided using a stratified patient-level splitting approach into training (17,500 images, 70%), validation (3,750 images, 15%), and testing (3,750 images, 15%) sets. The splitting strategy incorporated patient-level stratification to prevent data leakage, pathology-balanced distribution to maintain class representation, site-based stratification ensuring representation from all five clinical sites, and temporal considerations with earlier acquisitions preferentially assigned to training while recent cases were allocated to testing sets. 
This rigorous splitting methodology ensures that all images from individual patients were assigned to the same subset, maintains proportional representation of all 12 pathological conditions across subsets, and provides representative distribution of patient demographics to prevent bias in model evaluation. To ensure complete reproducibility and transparent evaluation, we provide detailed specifications of dataset splitting procedures, random seed management, and code availability frameworks that enable exact replication of our experimental results. The OralPath dataset splitting followed a deterministic protocol using stratified sampling with a fixed random seed (numpy.random.seed(12345)) to ensure reproducible train/validation/test splits. Table 1 provides exact sample counts and patient IDs for each subset, enabling precise replication of our experimental setup.

Patient-level data leakage prevention protocol

To ensure robust evaluation and prevent data leakage that could lead to overly optimistic results, we implemented a strict patient-level splitting strategy where all images from individual patients were assigned exclusively to a single subset (training, validation, or testing). This approach prevents the artificial inflation of performance metrics that can occur when multiple images from the same patient appear across different data splits, as models may inadvertently learn patient-specific characteristics rather than generalizable pathological patterns.
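A minimal sketch of patient-level splitting is shown below. It assigns whole patients, never individual images, to a subset. It deliberately omits the stratification by pathology, demographics, site, and acquisition date, and it uses Python's `random.Random` as a stand-in for the NumPy seeding mentioned in the text; the function name and the `image_to_patient` mapping are hypothetical.

```python
import random
from collections import defaultdict


def patient_level_split(image_to_patient, fractions=(0.70, 0.15, 0.15), seed=12345):
    """Split images into train/val/test so that no patient spans two subsets.

    `image_to_patient` maps an image ID to its anonymized patient ID.
    """
    patients = sorted(set(image_to_patient.values()))  # sorted for determinism
    random.Random(seed).shuffle(patients)

    n_train = round(fractions[0] * len(patients))
    n_val = round(fractions[1] * len(patients))

    subset_of = {}
    for position, patient in enumerate(patients):
        if position < n_train:
            subset_of[patient] = "train"
        elif position < n_train + n_val:
            subset_of[patient] = "val"
        else:
            subset_of[patient] = "test"

    # Every image follows its patient into that patient's subset.
    splits = defaultdict(list)
    for image_id, patient_id in sorted(image_to_patient.items()):
        splits[subset_of[patient_id]].append(image_id)
    return dict(splits)
```

Because the fractions are applied to patients rather than images, the image-level proportions only approximate 70/15/15 when patients contribute different numbers of radiographs.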

The dataset contains radiographs from 8,420 unique patients, with an average of 2.97 images per patient (range: 1–8 images). Patient identification was maintained through anonymized patient IDs that allowed tracking of all images belonging to each individual while preserving privacy compliance. Table 2 presents detailed patient distribution across data splits, demonstrating strict patient-level separation.

The patient-level splitting employed stratified sampling to ensure balanced representation across multiple demographic and clinical factors. Stratification variables included: (1) primary pathological condition, (2) patient age group (18–35, 36–50, 51–65, > 65 years), (3) gender, (4) clinical site of origin, and (5) imaging acquisition date to account for temporal variations. The stratification process used a deterministic algorithm with a fixed random seed (numpy.random.seed(12345)) to ensure reproducible splits. For hyperparameter optimization and model validation, we implemented 5-fold cross-validation within the training set while maintaining strict patient-level separation. The 5,894 training patients were divided into 5 folds, with each fold containing approximately 1,179 patients. During cross-validation, models were trained on 4 folds (4,715 patients, 14,000 images) and validated on the remaining fold (1,179 patients, 3,500 images). This approach ensures that validation performance metrics accurately reflect generalization to unseen patients rather than unseen images from known patients. Table 3 shows the cross-validation fold distribution with patient-level separation.
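The fold construction can be sketched as below, again grouping by patient and leaving out the stratification variables. Note that 5,894 / 5 = 1,178.8, so folds hold either 1,178 or 1,179 patients, consistent with the "approximately 1,179" stated above. The function names are hypothetical and `random.Random` stands in for the NumPy seeding.

```python
import random


def patient_folds(patient_ids, k=5, seed=12345):
    """Partition unique patient IDs into k near-equal folds."""
    patients = sorted(set(patient_ids))  # sorted for determinism
    random.Random(seed).shuffle(patients)
    # Striding by k spreads the remainder so fold sizes differ by at most one.
    return [patients[i::k] for i in range(k)]


def cross_validation_rounds(patient_ids, k=5, seed=12345):
    """Yield (train_patients, val_patients) sets for each of the k rounds."""
    folds = patient_folds(patient_ids, k, seed)
    for held_out in range(k):
        val = set(folds[held_out])
        train = {p for i, fold in enumerate(folds) if i != held_out for p in fold}
        yield train, val
```

Images are then selected by looking up which patients fall in each set, so a validation fold never contains radiographs from a patient seen during training in that round.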

Class balance strategy and data collection methodology

The balanced class distribution in the OralPath Dataset was achieved through a combination of targeted data collection and strategic oversampling techniques, specifically designed to address natural class imbalance while preserving authentic pathological representations. The data collection strategy employed systematic acquisition protocols across five clinical sites over an 18-month period, with intentional oversampling of rare pathological conditions to achieve balanced representation for robust model training.

Our approach prioritized targeted data collection as the primary balancing mechanism, involving coordinated efforts with specialized pathology centers, oral surgery practices, and diagnostic imaging centers to identify and acquire adequate samples of underrepresented conditions. For rare pathologies such as cysts/tumors (natural prevalence: 0.9 ± 0.7%) and developmental anomalies (natural prevalence: 3.1 ± 1.8%), we implemented focused collection protocols targeting patients with confirmed diagnoses through clinical referral networks and pathological databases. This targeted approach ensured authentic case diversity while achieving the desired sample sizes without relying solely on computational augmentation techniques.

Complementary oversampling was selectively applied to achieve final balanced distribution, using careful duplication strategies that maintained patient-level integrity and prevented data leakage. For pathological conditions where targeted collection remained insufficient, we employed stratified random oversampling within patient groups, ensuring that oversampled cases represented diverse patient demographics, imaging conditions, and pathological severities. Critically, no undersampling was performed to avoid loss of valuable pathological information, particularly for common conditions like dental caries and periodontal disease where natural abundance provided rich training examples.

The final balanced distribution (approximately 8.3% per pathological class) resulted from this hybrid approach: targeted collection contributing 67% of rare pathology samples, strategic oversampling contributing 23%, and natural prevalence contributing 10%. This methodology preserved authentic pathological diversity while achieving the statistical balance necessary for effective deep learning training, avoiding the pitfalls of purely computational balancing that might introduce artificial patterns or reduce genuine case variety.

Validation protocols confirmed that oversampled cases maintained representative pathological characteristics and imaging quality comparable to originally collected samples. Statistical analysis revealed no significant differences in pathological feature distributions between original and oversampled cases (Kolmogorov-Smirnov test, p > 0.05 for all pathological categories), supporting the authenticity of the balanced training set. This careful balancing strategy enables optimal model training while preserving the natural pathological complexity essential for robust clinical performance.
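As an illustration of the distribution check mentioned above, the two-sample Kolmogorov-Smirnov statistic is the largest gap between two empirical cumulative distribution functions. The sketch below computes only the statistic, not the p-value; in practice a library routine such as `scipy.stats.ks_2samp` would normally be used for both. The function name and the sample values are hypothetical.

```python
import bisect

def ks_statistic(sample_a, sample_b):
    """Largest absolute gap between the two empirical CDFs."""
    a, b = sorted(sample_a), sorted(sample_b)
    d = 0.0
    # The supremum is attained at one of the observed data points.
    for x in a + b:
        cdf_a = bisect.bisect_right(a, x) / len(a)
        cdf_b = bisect.bisect_right(b, x) / len(b)
        d = max(d, abs(cdf_a - cdf_b))
    return d

# Hypothetical feature values for original vs. oversampled cases.
original = [0.21, 0.35, 0.40, 0.52, 0.67]
oversampled = [0.22, 0.35, 0.41, 0.52, 0.66]
print(ks_statistic(original, oversampled))
```

A statistic near 0 means the two samples have nearly identical distributions; a value of 1 means they do not overlap at all.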

Implementation details and hyperparameters

The DentoSMART-LDM framework was implemented using PyTorch 1.13.0 and trained on a cluster of six NVIDIA RTX 4090 GPUs with 24GB memory each. We utilized the AdamW optimizer with parameters β₁ = 0.9 and β₂ = 0.999, and a learning rate of 1.5e-4 with cosine annealing schedule. The DSMART optimization employed 200 iterations for image enhancement with a population size of 80 solutions. The diffusion process used 1,000 noise levels during training and 200 sampling steps during inference.

The VAE encoder-decoder architecture utilized 4 downsampling/upsampling blocks with channel dimensions [128, 256, 512, 1024] and a latent space dimension of 8. Data preprocessing included normalization to [-1, 1] and dental-specific augmentations, including rotation, brightness adjustment, and contrast enhancement.

Comparative method implementation and training protocols

To ensure fair and rigorous comparison with competing approaches, we implemented all baseline methods using identical training protocols, hyperparameter optimization procedures, and computational resources. This section provides comprehensive details of the training settings and tuning procedures applied to all methods to guarantee reproducible and unbiased comparative evaluation.

All competing methods were trained on identical hardware infrastructure consisting of six NVIDIA RTX 4090 GPUs with 24GB memory each, using PyTorch 1.13.0 framework with CUDA 11.7. The training environment included identical software dependencies, random seed initialization (seed = 42), and computational resource allocation to eliminate hardware-based performance variations across different methods. We implemented a systematic hyperparameter optimization strategy using Bayesian optimization with Tree-structured Parzen Estimator (TPE) for all competing methods. Table 4 presents the hyperparameter search spaces and optimization protocols applied uniformly across all approaches.

All methods employed identical training configurations to ensure fair comparison: 5-fold cross-validation for hyperparameter selection, early stopping with patience of 20 epochs, learning rate scheduling using cosine annealing with minimum learning rate of 1e-6, gradient clipping with maximum norm of 1.0, and weight decay regularization optimized within [1e-5, 1e-3] range. Data augmentation strategies were standardized across all methods, including rotation (± 15°), brightness adjustment (± 0.2), contrast enhancement (± 0.3), and Gaussian noise addition (σ = 0.01).
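For readers unfamiliar with the schedule, the sketch below shows the standard closed form of cosine annealing without restarts. The base rate (1.5e-4, quoted for DentoSMART-LDM) and the floor (1e-6) come from the text; the function name and the assumption of a single annealing cycle over `total_steps` are illustrative and not confirmed by the source.

```python
import math

def cosine_annealed_lr(step, total_steps, base_lr=1.5e-4, min_lr=1e-6):
    """Learning rate after `step` of `total_steps` under cosine annealing."""
    progress = min(max(step / total_steps, 0.0), 1.0)  # clamp to [0, 1]
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

# The rate starts at base_lr, passes the midpoint halfway, and ends at min_lr.
for step in (0, 50, 100):
    print(step, cosine_annealed_lr(step, total_steps=100))
```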

For Enhanced-PSO-LDM, we implemented particle swarm optimization with inertia weight linearly decreasing from 0.9 to 0.4, cognitive and social parameters set to 2.0, and velocity clamping at ± 0.1. GA-Diffusion employed tournament selection with size 3, single-point crossover with probability 0.8, mutation probability 0.1, and elitism preserving top 10% of population. DE-Enhancement used DE/rand/1 strategy with scaling factor F = 0.5 and crossover probability CR = 0.7. Stable Diffusion and DALL-E 2 were fine-tuned from pre-trained checkpoints using domain adaptation techniques with frozen initial layers and gradually unfrozen training strategy. All methods were evaluated using identical test sets, evaluation metrics, and statistical significance testing procedures. Model selection was based on validation set performance using the same early stopping criteria, and final evaluation was conducted on the held-out test set that remained untouched during hyperparameter optimization. Statistical significance was assessed using paired t-tests with Bonferroni correction for multiple comparisons.
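The PSO baseline settings quoted above (inertia weight decaying linearly from 0.9 to 0.4, cognitive and social coefficients of 2.0, velocity clamped to ± 0.1) correspond to the textbook particle update sketched below. This is a generic illustration rather than the Enhanced-PSO-LDM implementation; the function names, the list-based particle representation, and the toy positions are assumptions.

```python
import random

def inertia_weight(iteration, max_iterations, w_start=0.9, w_end=0.4):
    """Linearly decreasing inertia weight over the run."""
    frac = iteration / max(1, max_iterations - 1)
    return w_start - (w_start - w_end) * frac

def pso_step(position, velocity, personal_best, global_best, w, rng,
             c1=2.0, c2=2.0, v_max=0.1):
    """One velocity and position update for a single particle."""
    new_position, new_velocity = [], []
    for x, v, pb, gb in zip(position, velocity, personal_best, global_best):
        r1, r2 = rng.random(), rng.random()
        v_next = w * v + c1 * r1 * (pb - x) + c2 * r2 * (gb - x)
        v_next = max(-v_max, min(v_max, v_next))  # velocity clamping
        new_velocity.append(v_next)
        new_position.append(x + v_next)
    return new_position, new_velocity

rng = random.Random(42)
pos, vel = pso_step([0.5, 0.5], [0.0, 0.0], [0.6, 0.4], [0.9, 0.1],
                    w=inertia_weight(0, 200), rng=rng)
```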

Results and discussion

The comprehensive evaluation of DentoSMART-LDM against existing state-of-the-art enhancement algorithms and diffusion models revealed significant performance advantages across multiple enhancement quality and diagnostic accuracy metrics. Our comparative analysis encompassed ten alternative approaches, including established enhancement frameworks such as Particle Swarm Optimization (PSO), Genetic Algorithm (GA), and Differential Evolution (DE), as well as advanced diffusion models like Stable Diffusion, DALL-E 2, and specialized medical variants such as MedDiffusion and PathoDiff. The evaluation employed a multi-faceted assessment approach, examining both technical image enhancement quality metrics and task-specific oral disease detection capabilities essential for reliable public health screening applications.

Image enhancement quality assessment with statistical rigor

The analysis focused on standard quality assessment metrics including Structural Similarity Index (SSIM), Peak Signal-to-Noise Ratio (PSNR), Learned Perceptual Image Patch Similarity (LPIPS), and Fréchet Inception Distance (FID). All statistical comparisons employed paired t-tests to account for the matched nature of performance measurements across the same test images. Prior to conducting t-tests, we verified normality assumptions using Shapiro-Wilk tests (all p > 0.05) and homogeneity of variance using Levene’s tests. For multiple comparisons, we applied Bonferroni correction with α = 0.05/9 = 0.0056 to control family-wise error rate. Effect sizes were calculated using Cohen’s d, with all comparisons showing large effect sizes (d > 0.8).
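The quantities named in this paragraph can be sketched with the standard library alone. The paper does not state which variant of Cohen's d was used; the version below is the paired form (mean difference divided by the standard deviation of the differences), which is an assumption. The function names and per-image scores are made up for illustration.

```python
import math
import statistics

def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison significance threshold under Bonferroni correction."""
    return alpha / n_comparisons

def paired_cohens_d(scores_a, scores_b):
    """Paired effect size: mean difference over SD of the differences."""
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    return statistics.mean(diffs) / statistics.stdev(diffs)

def paired_t_statistic(scores_a, scores_b):
    """t statistic of a paired t-test (degrees of freedom = n - 1)."""
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    standard_error = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return statistics.mean(diffs) / standard_error

# Hypothetical per-image SSIM scores for two methods on the same four images.
method_a = [0.94, 0.95, 0.93, 0.96]
method_b = [0.86, 0.88, 0.85, 0.87]
print(round(bonferroni_alpha(0.05, 9), 4))  # threshold for 9 comparisons
print(paired_cohens_d(method_a, method_b))
print(paired_t_statistic(method_a, method_b))
```

Dividing 0.05 by the nine pairwise comparisons gives the 0.0056 threshold quoted in the text.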

Table 5 presents a detailed comparison of image enhancement quality metrics across the ten evaluated approaches, including comprehensive statistical measures with means, standard deviations, and 95% confidence intervals calculated using bootstrap resampling with 200 iterations for each performance metric.

DentoSMART-LDM demonstrated superior performance across all enhancement quality metrics, achieving the highest SSIM score (0.941 ± 0.023) and PSNR (34.82 ± 1.47 dB), representing statistically significant improvements of 9.0% and 11.5% respectively compared to the second-best performer (Enhanced-PSO-LDM). These results indicate that DentoSMART-LDM produces enhanced dental radiographs that more closely match optimal diagnostic quality while maintaining structural fidelity essential for accurate pathology identification.

Cross-validation performance stability analysis

The 5-fold cross-validation results demonstrate high stability and consistency of DentoSMART-LDM performance across different patient subsets. To ensure robust evaluation and prevent data leakage, we implemented strict patient-level splitting where all images from individual patients were assigned exclusively to single folds. Table 6 presents detailed cross-validation statistics with confidence intervals and stability measures.

Confidence intervals were calculated using bootstrap resampling with 200 iterations for each performance metric. The bootstrap procedure maintained patient-level sampling to preserve the independence structure of the data. For each bootstrap iteration, we randomly sampled patients with replacement and calculated performance metrics on all images from the selected patients. This approach ensures that confidence intervals accurately reflect uncertainty in generalization to new patient populations.
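The patient-level bootstrap described here can be sketched as follows. The percentile method for reading off the interval is an assumption, since the text does not say how the bounds were derived, and the function name and toy outcomes are hypothetical. The 200 iterations and the resampling of patients (not images) with replacement follow the text.

```python
import random

def patient_bootstrap_ci(correct_by_patient, n_iterations=200,
                         confidence=0.95, seed=0):
    """Percentile CI for accuracy, resampling whole patients with replacement."""
    rng = random.Random(seed)
    patients = list(correct_by_patient)
    estimates = []
    for _ in range(n_iterations):
        sample = rng.choices(patients, k=len(patients))
        # Every image of each sampled patient enters the metric together.
        outcomes = [o for p in sample for o in correct_by_patient[p]]
        estimates.append(sum(outcomes) / len(outcomes))
    estimates.sort()
    tail = (1.0 - confidence) / 2.0
    lower = estimates[int(tail * n_iterations)]
    upper = estimates[min(n_iterations - 1, int((1.0 - tail) * n_iterations))]
    return lower, upper

# Hypothetical per-image outcomes (1 = correct prediction) grouped by patient.
toy_outcomes = {"p1": [1, 1, 0], "p2": [1], "p3": [1, 1],
                "p4": [0, 1, 1, 1], "p5": [1, 1]}
ci_low, ci_high = patient_bootstrap_ci(toy_outcomes)
```

Resampling at the patient level keeps correlated images from the same individual together, so the interval width reflects patient-to-patient variability rather than image-to-image variability.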

We conducted post-hoc power analysis to verify the adequacy of our sample size for detecting meaningful performance differences. With our sample size of 3,750 test images from 1,263 unique patients, we achieved statistical power > 0.99 for detecting effect sizes ≥ 0.5 (medium effect) and power > 0.95 for detecting effect sizes ≥ 0.3 (small-medium effect) at α = 0.05. This analysis confirms that our study is well-powered to detect clinically meaningful performance differences.

The coefficient of variation (CV) across all cross-validation folds remained below 0.6% for all primary metrics, indicating exceptional stability of model performance. The intraclass correlation coefficient (ICC) values exceeded 0.85 for all metrics, demonstrating excellent consistency across different patient subsets. The ANOVA p-values (all > 0.05) confirm no significant differences between folds, supporting the stability of performance across different data partitions. These results provide strong evidence that the reported performance metrics represent stable and generalizable model capabilities rather than artifacts of specific data partitions.
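For reference, the coefficient of variation is the sample standard deviation divided by the mean, expressed as a percentage. The fold accuracies below are invented solely to show the calculation and are not values from Table 6.

```python
import statistics

def coefficient_of_variation_pct(values):
    """Sample standard deviation as a percentage of the mean."""
    return statistics.stdev(values) / statistics.mean(values) * 100.0

# Hypothetical per-fold accuracies (%), for illustration only.
fold_accuracy = [97.1, 97.4, 97.3, 97.2, 97.5]
cv_pct = coefficient_of_variation_pct(fold_accuracy)
print(f"CV = {cv_pct:.2f}%")
```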

Beyond statistical significance, we evaluated the clinical significance of observed performance improvements using established clinical thresholds and expert assessment. Cohen’s d effect sizes for the comparison between DentoSMART-LDM and the best competing method (Enhanced-PSO-LDM) were: SSIM improvement (d = 2.84, very large effect), PSNR improvement (d = 2.31, very large effect), and overall accuracy improvement (d = 1.97, very large effect). These large effect sizes, combined with expert clinical validation confirming diagnostic relevance, support the clinical significance of observed improvements.

Oral disease detection performance with statistical validation

Beyond enhancement quality, the effectiveness of the enhanced dataset for oral disease detection represents the ultimate measure of clinical success. We trained identical EfficientNet-B5 diagnostic models on each enhanced dataset and evaluated their performance on an independent test set containing 3,750 images across 12 pathology classes. All statistical comparisons employed paired t-tests with Bonferroni correction for multiple comparisons (α = 0.05/9 = 0.0056).

Table 7 presents a comprehensive analysis of diagnostic performance achieved by models trained on datasets processed with different enhancement and augmentation approaches, including detailed statistical measures with means, standard deviations, and 95% confidence intervals calculated using bootstrap resampling with 200 iterations for each performance metric.

The DentoSMART-LDM processed dataset yielded the highest overall diagnostic accuracy (97.3 ± 0.18%), representing a significant improvement over no enhancement (15.9% gap) and other advanced approaches (3.5–12.0% gap). The narrow confidence intervals and low standard deviations confirm the reliability and consistency of these performance improvements across multiple evaluation runs.

Particularly notable was the improvement in diagnostic accuracy for challenging pathologies such as early-stage carious lesions and subtle periodontal bone loss, where accuracy improved by up to 31.7% compared to traditional methods. The statistical significance of all comparisons (p < 0.001) with large effect sizes (Cohen’s d > 1.5 for all comparisons with DentoSMART-LDM) demonstrates the framework’s superior ability to enhance and generate realistic dental images that preserve pathology-identifying characteristics.

All performance metrics showed consistent improvements with DentoSMART-LDM, including F1-score (0.968 ± 0.003), precision (0.971 ± 0.002), recall (0.965 ± 0.003), and AUC (0.993 ± 0.001), with confidence intervals that do not overlap with competing methods, confirming the statistical robustness of the observed improvements. The bootstrap resampling procedure ensured that confidence intervals accurately reflect uncertainty in model performance and support the clinical significance of the diagnostic improvements achieved through the DentoSMART-LDM framework.

Ablation study: impact of key components with statistical analysis

To systematically assess the contribution of each key component in the DentoSMART-LDM framework, we conducted a comprehensive ablation study where individual components were systematically removed or replaced while maintaining all other aspects unchanged. This analysis provides crucial insights into the relative importance of different architectural elements and their impact on overall system performance.

Table 8 presents the performance impact of removing or replacing individual components, with comprehensive statistical measures including means, standard deviations, and 95% confidence intervals calculated using bootstrap resampling with 200 iterations for each performance metric. All statistical comparisons employed paired t-tests with the complete DentoSMART-LDM configuration as the reference.

The results show that both the DSMART metaheuristic optimization and the pathology-aware attention mechanisms contribute substantially to overall performance. Removing the DSMART optimization mechanism reduced the SSIM score by 8.3% in relative terms (from 0.941 ± 0.023 to 0.863 ± 0.031) and diagnostic accuracy by 6.1 percentage points (from 97.3 ± 0.18% to 91.2 ± 0.24%), with non-overlapping confidence intervals confirming statistical significance (p < 0.001). This indicates the crucial role of DSMART in preserving diagnostic features and overall enhancement fidelity.

Similarly, removing the pathology-aware attention component led to a statistically significant 5.7% relative decrease in SSIM score (to 0.887 ± 0.028) and a 3.5 percentage-point decrease in diagnostic accuracy (to 93.8 ± 0.21%), with p < 0.001, demonstrating the importance of this component for maintaining pathological feature integrity during the diffusion process.

The most severe performance degradation occurred when both components were removed simultaneously, with SSIM dropping to 0.812 ± 0.035 and diagnostic accuracy falling to 87.4 ± 0.31%, a 13.7% relative decrease and a 9.9 percentage-point decrease, respectively. This combined effect suggests that the components work together to achieve optimal performance.
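The ablation figures appear to follow two conventions: the SSIM drops match a relative change against the full model, whereas the accuracy drops match a difference in percentage points. The short check below recomputes both from the values quoted in the text; the helper names are hypothetical.

```python
def relative_drop_pct(reference, value):
    """Drop expressed as a percentage of the reference value."""
    return (reference - value) / reference * 100.0

def drop_in_points(reference_pct, value_pct):
    """Drop between two percentages, in percentage points."""
    return reference_pct - value_pct

FULL_SSIM, FULL_ACCURACY = 0.941, 97.3

# (SSIM, accuracy %) for each ablated configuration, as quoted in the text.
ablations = {
    "without DSMART": (0.863, 91.2),
    "without pathology-aware attention": (0.887, 93.8),
    "without both": (0.812, 87.4),
}

for name, (ssim, accuracy) in ablations.items():
    print(f"{name}: SSIM -{relative_drop_pct(FULL_SSIM, ssim):.1f}% (relative), "
          f"accuracy -{drop_in_points(FULL_ACCURACY, accuracy):.1f} points")
```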

Replacement experiments provide additional insights into component specificity. When DSMART was replaced with standard Particle Swarm Optimization (PSO), performance decreased significantly across all metrics: SSIM (0.901 ± 0.027, p < 0.001), PSNR (32.76 ± 1.68 dB, p < 0.001), and diagnostic accuracy (94.6 ± 0.22%, p < 0.001), confirming the superiority of the DSMART approach over conventional optimization methods.

Similarly, replacing pathology-aware attention with standard attention mechanisms resulted in measurable performance reductions: SSIM (0.918 ± 0.025, p < 0.01), diagnostic accuracy (95.7 ± 0.19%, p < 0.01), indicating the value of pathology-specific design choices.

The basic LDM configuration without any enhancements achieved the lowest performance across all metrics, with SSIM of 0.789 ± 0.038 and diagnostic accuracy of 84.9 ± 0.35%, emphasizing the substantial contributions of both architectural innovations. All confidence intervals were non-overlapping between the complete system and ablated versions, providing strong statistical evidence for the significance of each component’s contribution to the overall framework performance.

Pathology-specific detection performance analysis with statistical validation

To further assess the effectiveness of DentoSMART-LDM in addressing class imbalance and improving recognition of rare oral pathologies, we conducted a detailed analysis of pathology-specific detection performance. This evaluation is particularly important for clinical applications where accurate detection of specific conditions directly impacts patient care decisions and treatment planning.

Table 9 presents the class-wise diagnostic accuracy achieved by models trained on different enhanced datasets, with comprehensive statistical measures including means, standard deviations, and 95% confidence intervals calculated using bootstrap resampling with 200 iterations for each pathology type. All statistical comparisons employed paired t-tests with Bonferroni correction for multiple comparisons (α = 0.05/11 = 0.0045 for 12 pathology classes). Figure 2 presents the column chart of the Pathology-Specific Detection Accuracy Across Enhancement Methods.

Computational efficiency analysis with performance metrics

Despite its sophisticated dual-component architecture incorporating both metaheuristic optimization and latent diffusion models, DentoSMART-LDM demonstrates competitive computational efficiency when compared to other high-performance methods. This efficiency analysis is crucial for practical deployment in resource-constrained public health environments where computational resources may be limited. Table 10 presents a comprehensive comparison of computational requirements across the evaluated approaches, including detailed statistical measures with means, standard deviations, and 95% confidence intervals calculated across multiple training runs and hardware configurations. All timing measurements were conducted on identical hardware setups (NVIDIA RTX 4090 GPUs with 24GB memory) with statistical validation through repeated experiments.

The computational efficiency analysis reveals that DentoSMART-LDM achieves superior efficiency compared to other advanced deep learning methods. When compared to Stable Diffusion, DentoSMART-LDM demonstrates significant computational advantages: 50.5% reduction in training time (14.2 ± 1.8 vs. 28.7 ± 3.4 h, p < 0.001), 47.3% reduction in inference time (11.8 ± 1.4 vs. 22.4 ± 2.6 ms/image, p < 0.001), 24.7% fewer parameters (68.7 M vs. 91.3 M), 35.6% reduction in FLOPs (82.3G vs. 127.8G), and 40.8% lower GPU memory requirements (7.4 ± 0.8 vs. 12.5 ± 1.5 GB, p < 0.001).
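The percentage reductions against Stable Diffusion can be reproduced from the quoted means with a few lines of arithmetic. The dictionary keys and function name below are illustrative; the numeric pairs are the ones given in the paragraph above.

```python
def reduction_vs_baseline_pct(baseline, value):
    """How much lower `value` is than `baseline`, as a percentage."""
    return (baseline - value) / baseline * 100.0

# (DentoSMART-LDM, Stable Diffusion) means as quoted in the text.
reported = {
    "training time (h)": (14.2, 28.7),
    "inference time (ms/image)": (11.8, 22.4),
    "parameters (M)": (68.7, 91.3),
    "FLOPs (G)": (82.3, 127.8),
    "GPU memory (GB)": (7.4, 12.5),
}

reductions = {name: reduction_vs_baseline_pct(baseline, ours)
              for name, (ours, baseline) in reported.items()}
for name, pct in reductions.items():
    print(f"{name}: {pct:.1f}% lower")
```

With the rounded figures quoted above, the parameter reduction evaluates to roughly 24.75%, so it may print as 24.8% rather than 24.7% depending on how the underlying counts were rounded.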

The statistical significance of these efficiency improvements is confirmed through paired t-tests with non-overlapping confidence intervals for all major computational metrics. The training time confidence interval for DentoSMART-LDM [13.3, 15.1] h does not overlap with any competing advanced method, demonstrating consistent computational advantages across multiple experimental runs. Compared to Enhanced-PSO-LDM, the second-best performing method, DentoSMART-LDM still shows meaningful improvements: 28.3% faster training (p < 0.01), 27.6% faster inference (p < 0.01), 13.5% fewer parameters, and 19.6% lower memory usage (p < 0.01). These improvements are particularly significant given that DentoSMART-LDM also achieves superior diagnostic performance, indicating efficient utilization of computational resources.

The inference time performance of 11.8 ± 1.4 ms/image makes DentoSMART-LDM suitable for real-time applications in clinical settings, where rapid image processing is essential for workflow efficiency. The narrow confidence interval [11.1, 12.5] ms indicates consistent performance across different image types and pathological conditions.

Memory efficiency is another crucial advantage, with DentoSMART-LDM requiring only 7.4 ± 0.8 GB of GPU memory compared to 12.5 ± 1.5 GB for Stable Diffusion and 16.8 ± 1.8 GB for DALL-E 2. This efficiency enables deployment on more accessible hardware configurations commonly available in public health settings.

The comprehensive efficiency score of 9.2/10 for DentoSMART-LDM, calculated as a weighted combination of computational metrics and performance quality, confirms its suitability for resource-constrained environments. While traditional enhancement methods achieve faster processing (1.3 ± 0.2 h training, 2.1 ± 0.3 ms inference), they sacrifice significant diagnostic performance, resulting in lower overall clinical utility.

Few-shot learning performance with statistical validation

The effectiveness of DentoSMART-LDM in few-shot learning scenarios represents a critical capability for practical deployment in public health settings where obtaining large amounts of labeled pathological data is challenging, particularly for rare diseases. We evaluated this capability by training diagnostic models with varying numbers of samples per pathology class, systematically reducing the training data to assess the framework’s robustness under data-limited conditions.

Table 11 presents comprehensive few-shot learning performance analysis across different sample sizes, with detailed statistical measures including means, standard deviations, and 95% confidence intervals calculated using bootstrap resampling with 200 iterations for each sample size condition. All statistical comparisons employed paired t-tests with Bonferroni correction for multiple comparisons (α = 0.05/5 = 0.01 for different sample sizes). Figure 3 shows the column chart of the Few-Shot Learning Performance Analysis.

Cross-institutional generalization analysis with statistical validation

Cross-institutional generalization represents a critical validation criterion for clinical deployment, as models must maintain consistent performance across different healthcare settings with varying equipment, protocols, patient populations, and operator expertise levels. To assess this capability, we evaluated diagnostic accuracy across five geographically distinct test datasets representing different institutional environments and demographic populations.

Table 12 presents comprehensive cross-institutional generalization performance analysis, with detailed statistical measures including means, standard deviations, and 95% confidence intervals calculated using bootstrap resampling with 200 iterations for each institutional dataset. All statistical comparisons employed paired t-tests with Bonferroni correction for multiple comparisons (α = 0.05/5 = 0.01 for five institutional datasets). Figure 4 shows the accuracy comparison across the five institutional test datasets.

Robustness to image quality degradation analysis with statistical validation

In real-world public health screening environments, image quality is often compromised by factors such as equipment limitations, operator variability, patient compliance issues, and environmental constraints. Understanding the framework’s robustness to these challenges is essential for reliable clinical deployment across diverse screening conditions.

Table 13 presents comprehensive diagnostic accuracy analysis under various degradation conditions, with detailed statistical measures including means, standard deviations, and 95% confidence intervals calculated using bootstrap resampling with 200 iterations for each degradation type. All statistical comparisons employed paired t-tests with the baseline (non-degraded) condition as reference.

The results in Table 13 demonstrate that models enhanced with DentoSMART-LDM maintained significantly higher robustness to all types of image quality degradation, retaining 94.5 ± 0.4% of their baseline performance under degraded conditions compared to 92.2 ± 0.5% for Enhanced-PSO-LDM and 87.7 ± 0.7% for traditional enhancement. The narrow confidence intervals [94.0, 95.0] for DentoSMART-LDM robustness retention indicate consistent performance across different degradation scenarios.

Statistical analysis reveals that DentoSMART-LDM maintained the smallest performance degradation across all challenging conditions. Under combined degradations representing worst-case scenarios, DentoSMART-LDM achieved 88.9 ± 0.6% accuracy, outperforming Enhanced-PSO-LDM by 6.6% and traditional enhancement by 17.7%, with effect sizes (Cohen’s d > 2.5) indicating very large practical significance.

The framework’s superior robustness is particularly evident in challenging conditions such as metallic artifacts (91.7 ± 0.6% vs. 76.4 ± 0.9% for traditional methods) and low resolution imaging (90.4 ± 0.7% vs. 74.8 ± 1.0%), which are common in resource-constrained screening environments.

Impact across modern classification architectures with statistical analysis

The effectiveness of DentoSMART-LDM enhancement was comprehensively evaluated across multiple state-of-the-art deep learning diagnostic architectures to assess the generalizability of enhancement benefits across different neural network designs.

Table 14 presents diagnostic performance improvements for eight contemporary neural network models, with comprehensive statistical measures including confidence intervals and significance testing.

The consistent improvements across diverse architectural designs highlight the generalizability of DentoSMART-LDM’s enhancement benefits, with performance gaps ranging from 12.8% to 16.7% across all evaluated models. All effect sizes exceeded 2.9, indicating very large practical significance, while non-overlapping confidence intervals confirm statistical robustness.

Clinical validation and expert assessment with statistical analysis

To validate the diagnostic relevance of our framework, we conducted comprehensive clinical validation involving board-certified oral pathologists and dental radiologists with rigorous statistical evaluation.

Table 15 presents expert assessment results with comprehensive statistical measures including inter-rater reliability analysis.

Expert evaluation confirmed that DentoSMART-LDM produced radiographs with superior diagnostic quality (4.7 ± 0.3/5.0) and minimal artifacts (4.8 ± 0.2/5.0), with high clinical usefulness ratings (4.9 ± 0.1/5.0) from experienced practitioners. The high ICC (0.891) indicates excellent inter-rater agreement for DentoSMART-LDM assessments, confirming consistent expert consensus regarding image quality improvements.

Comprehensive external validation and real-world clinical assessment

To establish the clinical applicability and robustness of the DentoSMART-LDM framework under realistic deployment conditions, we conducted an extensive multi-phase external validation study encompassing diverse healthcare settings, patient populations, and imaging environments. This comprehensive assessment was designed to evaluate the framework’s performance under natural class imbalance, varying image quality conditions, different imaging protocols, and challenging pathological presentations typical of real-world public health screening programs.

Multi-institutional external dataset collection

Seven external validation datasets were systematically collected from geographically and demographically diverse healthcare institutions over an 18-month period. These datasets were specifically selected to represent the full spectrum of clinical environments where automated oral disease detection systems would be deployed:

ExtVal-1 (Rural Health Network): 12,400 radiographs from 15 rural health clinics across three states, characterized by aging equipment (average 8.2 years old), limited operator training, and severe natural class imbalance (pathology prevalence: 0.3%–31.7%). Patient demographics: 68% rural farming communities, 23% elderly population (> 65 years), diverse socioeconomic backgrounds [43].

ExtVal-2 (Urban Community Centers): 18,600 images from 8 urban community health centers serving predominantly low-income populations. Equipment varied significantly (3 different manufacturers, 5 imaging protocols), with high patient throughput conditions. Natural class distribution reflected urban oral health patterns with higher prevalence of untreated dental caries (19.4%) and periodontal disease (27.8%) [44].

ExtVal-3 (Mobile Screening Units): 9,800 radiographs from 12 mobile dental units operating in underserved communities. These represented the most challenging imaging conditions with portable equipment, variable positioning, environmental interference, and operator fatigue effects. Class imbalance was extreme (pathology range: 0.1%–42.3%) [45].

ExtVal-4 (International Collaborative Sites): 14,200 images from 6 international dental schools and public health programs (Brazil, India, Kenya, Philippines, Mexico, Romania). This dataset provided crucial insights into cross-population generalization, different genetic backgrounds, varied dietary patterns, and distinct pathological presentations [46].

ExtVal-5 (Specialized Pathology Centers): 7,300 radiographs focusing specifically on rare and early-stage pathologies, including subtle periapical lesions, incipient carious lesions, and early periodontal bone loss. This dataset addressed the critical challenge of detecting pathologies in their earliest manifestations [47].

ExtVal-6 (Emergency and Urgent Care): 11,100 images from emergency departments and urgent care facilities where dental radiographs are often acquired by non-dental personnel under suboptimal conditions, with a high prevalence of motion artifacts, positioning errors, and exposure problems [48].

ExtVal-7 (Longitudinal Follow-up Cohort): 8,900 sequential radiographs from 1,200 patients tracked over 3 years, providing insights into pathology progression detection and temporal consistency of the framework’s diagnostic capabilities [49].

Comprehensive performance analysis under natural class imbalance

Table 16 presents detailed performance metrics across all external validation datasets, demonstrating the framework’s robustness under varying degrees of natural class imbalance and challenging imaging conditions.

Pathology-specific performance under real-world conditions

The framework’s ability to detect specific pathological conditions under natural prevalence patterns revealed important insights for clinical deployment. Table 17 presents pathology-specific performance across external datasets, highlighting the framework’s strengths and limitations under realistic screening conditions.

Comprehensive bias assessment and generalizability analysis

Artificial class balancing bias assessment

The first concern is that artificial class balancing may mask the model’s true detection capability for rare pathological conditions during population-level screening, which is a significant methodological consideration. Natural oral disease prevalence exhibits extreme heterogeneity across different pathological conditions, demographic populations, and geographic regions. To address this concern comprehensively, we conducted extensive epidemiological analysis and prevalence-weighted model evaluation. Table 18 presents the comparison between our artificially balanced training distribution and natural epidemiological prevalence patterns observed in real-world screening programs.

The analysis revealed significant overrepresentation of rare conditions in the balanced training set, with cysts/tumors appearing at 9.22x and developmental anomalies at 2.68x their natural prevalence. Conversely, common conditions such as early dental caries were underrepresented, appearing at only 0.22x their natural prevalence. To quantify the impact of artificial balancing, we retrained multiple model variants using different prevalence weighting strategies and evaluated their performance under both controlled and naturalistic conditions. Table 19 presents performance comparisons across the different training strategies.
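The representation factors quoted above are ratios of a condition's share in the training set to its natural prevalence. The following minimal sketch shows that arithmetic; the condition names and shares are hypothetical placeholders, not values from Table 18.

```python
def representation_ratio(train_share, natural_prevalence):
    """Share of a class in the training set divided by its natural prevalence.

    Values above 1 mean the class is overrepresented in training;
    values below 1 mean it is underrepresented.
    """
    if natural_prevalence <= 0:
        raise ValueError("natural prevalence must be positive")
    return train_share / natural_prevalence


# Hypothetical shares, chosen only to illustrate the calculation.
train_share = {"rare_condition": 0.08, "common_condition": 0.10}
natural_prevalence = {"rare_condition": 0.01, "common_condition": 0.40}

ratios = {
    name: representation_ratio(train_share[name], natural_prevalence[name])
    for name in train_share
}

for name, ratio in ratios.items():
    label = "over" if ratio > 1 else "under"
    print(f"{name}: {ratio:.2f}x ({label}represented)")
```

A ratio read this way makes it clear that a value such as 0.22x describes underrepresentation relative to natural prevalence, not a multiplicative excess.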

To assess the clinical impact of artificial balancing bias, we conducted comprehensive population-level screening simulations using prevalence-weighted test datasets that mirror real-world screening scenarios. Table 20 presents the results of these simulations across different population demographics and screening contexts.

The screening simulations revealed that while artificial balancing creates optimistic laboratory performance estimates, the framework maintains clinically significant diagnostic value under natural prevalence conditions, particularly when compared to traditional screening methods which typically achieve 30–45% sensitivity for rare pathological conditions.
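One reason balanced test sets give optimistic estimates is that predictive values depend on prevalence even when sensitivity and specificity stay fixed. The sketch below applies Bayes' rule to illustrate this; the sensitivity, specificity, and prevalence values are assumed for illustration and are not taken from Table 20.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a binary test at a given disease prevalence."""
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    true_neg = specificity * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos) if (true_pos + false_pos) > 0 else float("nan")
    npv = true_neg / (true_neg + false_neg) if (true_neg + false_neg) > 0 else float("nan")
    return ppv, npv


# Assumed operating point, evaluated on a balanced set and at a rare-disease prevalence.
ppv_balanced, npv_balanced = predictive_values(0.90, 0.95, prevalence=0.50)
ppv_rare, npv_rare = predictive_values(0.90, 0.95, prevalence=0.01)

print(f"balanced: PPV={ppv_balanced:.3f}, NPV={npv_balanced:.3f}")
print(f"rare:     PPV={ppv_rare:.3f}, NPV={npv_rare:.3f}")
```

Under these assumed numbers the positive predictive value falls sharply at low prevalence, which is why prevalence-weighted simulation is the relevant check for population screening.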

Synthetic data reinforcement bias evaluation

The second concern is that structurally repetitive generated images could introduce reinforcement bias and limit generalization. Addressing it required a systematic assessment of synthetic data quality and diversity. We quantified synthetic image diversity and its impact on model generalization using structural, semantic, and clinical evaluation metrics. Table 21 presents the diversity assessment results comparing synthetic and real pathological image variations.

Board-certified oral pathologists conducted a blinded evaluation of synthetic data quality and diversity to assess potential reinforcement bias from a clinical perspective. Table 22 presents the detailed expert assessment results.

Expert evaluation revealed that 94.7% of synthetic images achieved acceptable or better clinical quality ratings, with 63.2% rated as excellent for overall clinical value. Importantly, experts identified only 2.3% of synthetic images as exhibiting concerning repetitive patterns or unrealistic pathological presentations. To quantify reinforcement bias impact, we systematically varied synthetic-to-real data ratios and evaluated performance on independent real-world test datasets. Table 23 presents comprehensive results across different synthetic data proportions.

Optimal performance occurred at 75% synthetic data with a minimal generalization gap (1.2%), indicating that synthetic augmentation enhances rather than impairs real-world performance when properly balanced. The 100% synthetic condition showed an increased generalization gap (4.4%), confirming the importance of retaining real data for robust learning. We also evaluated models trained with different synthetic data ratios on completely independent external datasets to assess cross-domain generalization capabilities. Table 24 presents performance across the seven external validation datasets.

The consistent performance improvements across all external datasets (average gain of +16.4%) demonstrate that synthetic data augmentation enhances rather than compromises generalization capabilities when properly implemented. Using longitudinal patient cohorts, we assessed whether synthetic data training affects the model’s ability to track pathological changes over time in real patients. Table 25 presents the temporal consistency results.

The temporal analysis confirms that optimal synthetic data augmentation (75% ratio) improves rather than impairs the model’s ability to track real pathological changes over time, with 94.3% consistency for stable conditions and superior progression detection capabilities. Based on comprehensive bias assessment results, we developed evidence-based mitigation strategies and implementation guidelines for clinical deployment. Table 26 summarizes recommended deployment configurations for different clinical contexts.

Comprehensive structural overfitting and reinforcement bias analysis

To address fundamental concerns regarding structural overfitting and reinforcement bias arising from latent diffusion-generated synthetic images, we conducted an extensive multi-dimensional analysis examining potential model dependencies on synthetic data patterns, spurious correlation learning, and the risk of developing pathological interpretations that may not accurately reflect real-world disease presentations. This comprehensive evaluation encompassed structural dependency assessment, feature learning analysis, temporal consistency validation, cross-domain generalization testing, and expert clinical verification to ensure robust understanding of synthetic data impact on model reliability and clinical applicability.

Structural overfitting assessment involved systematic evaluation of model performance disparities between synthetic and real validation datasets across varying synthetic data proportions, revealing critical insights into optimal training configurations. Models trained with 25% synthetic data showed minimal performance gaps (0.4% difference between real and synthetic validation accuracy), indicating healthy generalization without synthetic dependency. As synthetic proportions increased to 50% and 75%, performance gaps remained acceptably low (0.6% and 0.4% respectively), suggesting that the framework successfully learned generalizable pathological patterns rather than synthetic-specific artifacts. However, purely synthetic training scenarios demonstrated concerning overfitting levels with a 4.4% performance gap, indicating substantial model dependence on generation-specific characteristics that may not translate to real clinical presentations.

The Structural Dependency Index, a novel metric quantifying model reliance on synthetic-specific features through gradient-based feature attribution analysis, provided quantitative assessment of synthetic bias risk. Values remained within clinically acceptable ranges for balanced training configurations (0.12 ± 0.03 for 25% synthetic, 0.18 ± 0.04 for 50% synthetic, and 0.15 ± 0.02 for 75% synthetic), but increased substantially to concerning levels (0.67 ± 0.08) under purely synthetic conditions. This metric demonstrated strong correlation with cross-domain performance degradation (r = 0.84, p < 0.001), validating its utility as an early warning indicator for synthetic overfitting.

Reinforcement bias evaluation employed multiple complementary approaches including gradient-based attribution analysis, feature importance mapping, attention mechanism visualization, and pathological consistency assessment to detect whether repeated exposure to synthetic data patterns created systematic biases in pathological interpretation. Gradient attribution analysis comparing model attention patterns between real and synthetic data revealed minimal bias amplification factors across all training configurations (1.05x to 1.08x), indicating that models maintained balanced attention to authentic pathological features regardless of synthetic augmentation levels. Feature importance mapping demonstrated consistent prioritization of diagnostically relevant anatomical structures and pathological indicators across both real and synthetic training examples, with correlation coefficients between real and synthetic feature importance rankings exceeding 0.89 for all pathological categories.
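Agreement between two feature importance rankings can be measured with a rank correlation. The source does not state which coefficient was used, so the sketch below assumes Spearman's rho, computed as the Pearson correlation of average ranks; the importance scores are hypothetical.

```python
import math


def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the rank vectors."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical importance scores for the same five features,
# estimated once on real images and once on synthetic images.
importance_real = [0.30, 0.25, 0.20, 0.15, 0.10]
importance_synthetic = [0.28, 0.26, 0.15, 0.21, 0.10]

rho = spearman(importance_real, importance_synthetic)
print(f"Spearman rho = {rho:.2f}")
```

A value near 1 indicates that the model ranks features similarly for real and synthetic inputs, which is the pattern the paragraph above describes.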

Attention mechanism visualization through class activation mapping revealed that models trained with synthetic augmentation maintained appropriate focus on clinically relevant diagnostic regions, including periodontal ligament spaces, trabecular bone patterns, and radiolucent lesion boundaries. Quantitative analysis of attention map overlap between real and synthetic pathological presentations showed high consistency (Jaccard index: 0.83 ± 0.04), indicating that synthetic training enhanced rather than distorted authentic pathological attention patterns. Pathological consistency assessment comparing diagnostic feature detection across real versus synthetic test cases demonstrated maintained performance levels (0.934 ± 0.012 for real data vs. 0.928 ± 0.016 for synthetic data), with minimal degradation that remained within clinically acceptable bounds.
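The Jaccard index for attention map overlap is the size of the intersection of two binary masks divided by the size of their union. The sketch below assumes that each attention map is binarized with a fixed threshold, which the source does not specify; the maps and the threshold are hypothetical.

```python
def binarize(attention_map, threshold):
    """Convert a 2D attention map into a boolean mask (assumed thresholding step)."""
    return [[value >= threshold for value in row] for row in attention_map]


def jaccard_index(mask_a, mask_b):
    """Intersection over union of two equally shaped boolean masks."""
    intersection = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            intersection += int(a and b)
            union += int(a or b)
    # Two empty masks are treated as identical.
    return intersection / union if union else 1.0


# Hypothetical 3x3 attention maps for a real image and a matched synthetic image.
real_map = [
    [0.9, 0.8, 0.1],
    [0.7, 0.2, 0.1],
    [0.1, 0.1, 0.0],
]
synthetic_map = [
    [0.8, 0.6, 0.2],
    [0.3, 0.6, 0.1],
    [0.0, 0.1, 0.1],
]

overlap = jaccard_index(binarize(real_map, 0.5), binarize(synthetic_map, 0.5))
print(f"Jaccard index = {overlap:.2f}")
```

The result depends on the chosen threshold, so any comparison across models should hold it fixed.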

Cross-domain validation provided critical evidence against systematic synthetic bias by testing models trained on synthetic-augmented data exclusively on completely independent real-world datasets collected from different institutions, equipment configurations, and patient populations. Performance maintenance across all seven external validation datasets (average accuracy 87.7 ± 0.8%) confirmed that synthetic training enhanced generalization capabilities rather than creating institution-specific or generation-specific dependencies. Particularly significant was the consistent performance across international collaborative sites (88.9 ± 1.2% accuracy) and mobile screening units (86.2 ± 1.4% accuracy), where imaging conditions differed substantially from synthetic training parameters.

Feature diversity analysis employed dimensionality reduction techniques and statistical distribution comparisons to assess whether synthetic images maintained authentic pathological complexity or introduced systematic simplifications that might bias model learning. Principal component analysis of 127 extracted pathological features revealed that synthetic and real images occupied overlapping regions in feature space (95% confidence ellipse overlap: 0.847), indicating preserved pathological complexity. Statistical comparison of feature distributions using Kolmogorov-Smirnov tests showed no significant differences for 89% of extracted features (p > 0.05), while the remaining 11% showed only minor distributional shifts that did not correlate with diagnostic performance degradation.
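The per-feature comparison can be sketched as a two-sample Kolmogorov-Smirnov test applied to each feature, followed by counting the features with no significant difference. This minimal version uses the large-sample critical value rather than an exact p-value, and the feature names and samples are hypothetical; it is not the authors' implementation.

```python
import math


def ks_statistic(sample_a, sample_b):
    """Maximum absolute difference between the two empirical CDFs."""
    a, b = sorted(sample_a), sorted(sample_b)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # Consume every observation less than or equal to x in both samples.
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d


def ks_critical_value(n_a, n_b, alpha=0.05):
    """Large-sample critical value for the two-sample KS statistic."""
    c_alpha = math.sqrt(-0.5 * math.log(alpha / 2))
    return c_alpha * math.sqrt((n_a + n_b) / (n_a * n_b))


def fraction_not_significant(real, synthetic, alpha=0.05):
    """Share of features whose KS statistic does not exceed the critical value."""
    kept = 0
    for name in real:
        d = ks_statistic(real[name], synthetic[name])
        if d <= ks_critical_value(len(real[name]), len(synthetic[name]), alpha):
            kept += 1
    return kept / len(real)


# Hypothetical feature samples: one nearly matched, one strongly shifted.
features_real = {
    "lesion_area": [float(x) for x in range(20)],
    "edge_contrast": [float(x) for x in range(20)],
}
features_synthetic = {
    "lesion_area": [x + 0.5 for x in range(20)],
    "edge_contrast": [x + 100.0 for x in range(20)],
}

share = fraction_not_significant(features_real, features_synthetic)
print(f"features without a significant shift: {share:.0%}")
```

With many features tested at once, some significant results are expected by chance, so a multiple-comparison correction may be worth considering when interpreting the share of shifted features.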

Expert blind validation involving 20 board-certified oral pathologists provided clinical verification of synthetic image authenticity and diagnostic relevance. The experts were unable to reliably distinguish between real and synthetic images (detection accuracy: 52.3 ± 4.7%, 95% CI: 47.9–56.7%); the confidence interval includes the 50% chance level, and detection accuracy showed no correlation with years of clinical experience (r = 0.12, p = 0.614). Detailed expert assessment of pathological authenticity, anatomical consistency, and diagnostic relevance yielded consistently high ratings for synthetic images (4.51 ± 0.46/5.0 composite score), with 94.7% of synthetic images receiving acceptable or better clinical quality ratings. Expert evaluation specifically assessed potential reinforcement bias by comparing diagnostic confidence and accuracy when reviewing real versus synthetic images, revealing no significant differences in either metric (confidence: p = 0.267, accuracy: p = 0.341).
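Whether a real-versus-synthetic detection rate differs from guessing can be checked for a single rater with an exact two-sided binomial test against p = 0.5. The source reports only aggregate accuracy, so the counts below are hypothetical and the sketch illustrates the test rather than the authors' analysis.

```python
from math import comb


def binomial_two_sided_p(successes, trials, p0=0.5):
    """Exact two-sided binomial p-value.

    Sums the probabilities of all outcomes that are no more likely
    than the observed one under the null success rate p0.
    """
    probabilities = [
        comb(trials, k) * p0**k * (1 - p0) ** (trials - k)
        for k in range(trials + 1)
    ]
    observed = probabilities[successes]
    # Small relative tolerance guards against floating-point ties.
    total = sum(p for p in probabilities if p <= observed * (1 + 1e-9))
    return min(1.0, total)


# Hypothetical rater: 52 correct real-vs-synthetic calls out of 100 images.
p_value = binomial_two_sided_p(successes=52, trials=100)
print(f"p = {p_value:.3f}")
```

A large p-value here means the rater's accuracy is compatible with chance, which matches the interpretation of a confidence interval that contains 50%.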

Temporal consistency testing across longitudinal patient cohorts spanning multiple years provided additional validation against synthetic bias by assessing whether models maintained stable pathological interpretation capabilities over time and across evolving clinical presentations. Analysis of 1,200 patients with sequential radiographs over 3-year periods demonstrated consistent diagnostic accuracy (coefficient of variation: 0.03 ± 0.01 across time points), indicating robust learning of authentic pathological progressions rather than generation-specific patterns. Models successfully tracked natural disease progression and regression patterns with high fidelity (progression detection: 91.2 ± 2.1%, regression detection: 87.4 ± 2.8%), confirming authentic pathological understanding.
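The coefficient of variation used above is the standard deviation of a quantity divided by its mean. The sketch below computes it for accuracy measured at several follow-up time points; the accuracy values are hypothetical, and the source does not say whether the statistic was computed per patient or per cohort.

```python
from statistics import mean, stdev


def coefficient_of_variation(values):
    """Sample standard deviation divided by the mean."""
    average = mean(values)
    if average == 0:
        raise ValueError("coefficient of variation is undefined for a zero mean")
    return stdev(values) / average


# Hypothetical diagnostic accuracy at three follow-up time points.
accuracy_by_timepoint = [0.90, 0.92, 0.94]

cv = coefficient_of_variation(accuracy_by_timepoint)
print(f"coefficient of variation = {cv:.3f}")
```

Lower values indicate that accuracy is stable across time points relative to its average level.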

Adversarial testing specifically designed to detect synthetic bias involved systematic evaluation of model responses to subtle synthetic artifacts, generation-specific noise patterns, and known limitations of diffusion models. Models demonstrated robust resistance to synthetic-specific perturbations, with performance degradation under adversarial synthetic modifications (2.3 ± 0.8%) comparable to equivalent perturbations of real images (2.1 ± 0.7%, p = 0.486). This resistance indicated that models learned authentic pathological features rather than exploiting generation artifacts or synthetic-specific patterns that might compromise real-world performance.

Comprehensive risk assessment synthesizing all evaluation components indicated that structural overfitting and reinforcement bias risks remain minimal when synthetic data comprises no more than 75% of training data and appropriate validation protocols are implemented. The optimal synthetic ratio of 75% achieved maximal performance benefits while maintaining robust generalization capabilities and minimal bias indicators across all assessment dimensions. Critical risk factors identified include purely synthetic training scenarios, insufficient cross-domain validation, and inadequate expert clinical verification, all of which were successfully mitigated through our comprehensive evaluation framework.

The clinical implications of this analysis support deployment of the DentoSMART-LDM framework in real-world screening applications, with strong evidence that synthetic augmentation enhances rather than compromises authentic pathological learning. The maintained diagnostic integrity across diverse clinical conditions, patient populations, and institutional settings provides a robust foundation for clinical translation while protecting patient safety through preserved diagnostic accuracy and reliability. The monitoring protocols established through this analysis enable continued validation of synthetic data impact throughout clinical deployment, supporting sustained performance and early detection of any bias patterns that emerge over extended operational periods.

Independent dataset validation and blinded expert assessment